| problem_id (string, 18–22 chars) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, 13–58 chars) | prompt (string, 1.1k–10.2k chars) | golden_diff (string, 151–4.94k chars) | verification_info (string, 582–21k chars) | num_tokens (int64, 271–2.05k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_57793 | rasdani/github-patches | git_diff | catalyst-team__catalyst-855 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

EarlyStoppingCallback considers first epoch as bad

## 🐛 Bug Report

EarlyStoppingCallback considers first epoch as bad. This can lead for example to always stopping after first epoch if patience=1.

### How To Reproduce

You can train a model with early stopping and patience=1 and see that it always stops after first epoch. Or you can use the unit test below that I added to pull request.

#### Code sample

```python
from unittest.mock import MagicMock, PropertyMock

from catalyst.core import EarlyStoppingCallback


def test_patience1():
    """@TODO: Docs. Contribution is welcome."""
    early_stop = EarlyStoppingCallback(1)
    runner = MagicMock()
    type(runner).stage_name = PropertyMock(return_value="training")
    type(runner).valid_metrics = PropertyMock(return_value={"loss": 0.001})
    stop_mock = PropertyMock(return_value=False)
    type(runner).need_early_stop = stop_mock

    early_stop.on_epoch_end(runner)

    assert stop_mock.mock_calls == []
```

### Expected behavior

Training doesn't stop after first epoch. And the unit test passes.

### Environment

```bash
Catalyst version: 20.06
PyTorch version: 1.5.1
Is debug build: No
CUDA used to build PyTorch: None
TensorFlow version: N/A
TensorBoard version: 2.2.2

OS: Mac OSX 10.15.5
GCC version: Could not collect
CMake version: version 3.8.0

Python version: 3.7
Is CUDA available: No
CUDA runtime version: No CUDA
GPU models and configuration: No CUDA
Nvidia driver version: No CUDA
cuDNN version: No CUDA

Versions of relevant libraries:
[pip3] catalyst-codestyle==20.4
[pip3] catalyst-sphinx-theme==1.1.1
[pip3] efficientnet-pytorch==0.6.3
[pip3] numpy==1.18.5
[pip3] segmentation-models-pytorch==0.1.0
[pip3] tensorboard==2.2.2
[pip3] tensorboard-plugin-wit==1.6.0.post3
[pip3] tensorboardX==2.0
[pip3] torch==1.5.1
[pip3] torchvision==0.6.1
[conda] catalyst-codestyle 20.4 <pip>
[conda] catalyst-sphinx-theme 1.1.1 <pip>
[conda] efficientnet-pytorch 0.6.3 <pip>
[conda] numpy 1.18.5 <pip>
[conda] segmentation-models-pytorch 0.1.0 <pip>
[conda] tensorboard 2.2.2 <pip>
[conda] tensorboard-plugin-wit 1.6.0.post3 <pip>
[conda] tensorboardX 2.0 <pip>
[conda] torch 1.5.1 <pip>
[conda] torchvision 0.6.1 <pip>
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `catalyst/core/callbacks/early_stop.py`

Content:

```
1 from catalyst.core.callback import Callback, CallbackNode, CallbackOrder
2 from catalyst.core.runner import IRunner
3
4
5 class CheckRunCallback(Callback):
6     """@TODO: Docs. Contribution is welcome."""
7
8     def __init__(self, num_batch_steps: int = 3, num_epoch_steps: int = 2):
9         """@TODO: Docs. Contribution is welcome."""
10         super().__init__(order=CallbackOrder.external, node=CallbackNode.all)
11         self.num_batch_steps = num_batch_steps
12         self.num_epoch_steps = num_epoch_steps
13
14     def on_epoch_end(self, runner: IRunner):
15         """@TODO: Docs. Contribution is welcome."""
16         if runner.epoch >= self.num_epoch_steps:
17             runner.need_early_stop = True
18
19     def on_batch_end(self, runner: IRunner):
20         """@TODO: Docs. Contribution is welcome."""
21         if runner.loader_batch_step >= self.num_batch_steps:
22             runner.need_early_stop = True
23
24
25 class EarlyStoppingCallback(Callback):
26     """@TODO: Docs. Contribution is welcome."""
27
28     def __init__(
29         self,
30         patience: int,
31         metric: str = "loss",
32         minimize: bool = True,
33         min_delta: float = 1e-6,
34     ):
35         """@TODO: Docs. Contribution is welcome."""
36         super().__init__(order=CallbackOrder.external, node=CallbackNode.all)
37         self.best_score = None
38         self.metric = metric
39         self.patience = patience
40         self.num_bad_epochs = 0
41         self.is_better = None
42
43         if minimize:
44             self.is_better = lambda score, best: score <= (best - min_delta)
45         else:
46             self.is_better = lambda score, best: score >= (best + min_delta)
47
48     def on_epoch_end(self, runner: IRunner) -> None:
49         """@TODO: Docs. Contribution is welcome."""
50         if runner.stage_name.startswith("infer"):
51             return
52
53         score = runner.valid_metrics[self.metric]
54         if self.best_score is None:
55             self.best_score = score
56         if self.is_better(score, self.best_score):
57             self.num_bad_epochs = 0
58             self.best_score = score
59         else:
60             self.num_bad_epochs += 1
61
62         if self.num_bad_epochs >= self.patience:
63             print(f"Early stop at {runner.epoch} epoch")
64             runner.need_early_stop = True
65
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

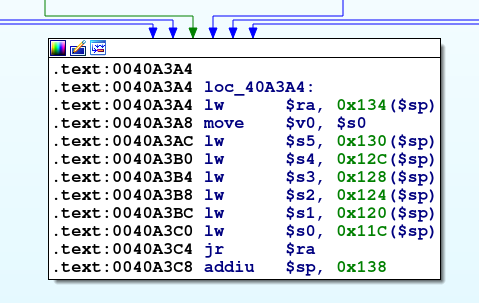

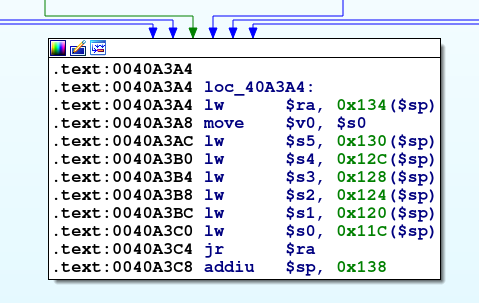

|

```diff
diff --git a/catalyst/core/callbacks/early_stop.py b/catalyst/core/callbacks/early_stop.py
--- a/catalyst/core/callbacks/early_stop.py
+++ b/catalyst/core/callbacks/early_stop.py
@@ -51,9 +51,7 @@
             return
 
         score = runner.valid_metrics[self.metric]
-        if self.best_score is None:
-            self.best_score = score
-        if self.is_better(score, self.best_score):
+        if self.best_score is None or self.is_better(score, self.best_score):
             self.num_bad_epochs = 0
             self.best_score = score
         else:
```

| {"golden_diff": "diff --git a/catalyst/core/callbacks/early_stop.py b/catalyst/core/callbacks/early_stop.py\n--- a/catalyst/core/callbacks/early_stop.py\n+++ b/catalyst/core/callbacks/early_stop.py\n@@ -51,9 +51,7 @@\n return\n \n score = runner.valid_metrics[self.metric]\n- if self.best_score is None:\n- self.best_score = score\n- if self.is_better(score, self.best_score):\n+ if self.best_score is None or self.is_better(score, self.best_score):\n self.num_bad_epochs = 0\n self.best_score = score\n else:\n", "issue": "EarlyStoppingCallback considers first epoch as bad\n## \ud83d\udc1b Bug Report\r\nEarlyStoppingCallback considers first epoch as bad. This can lead for example to always stopping after first epoch if patience=1.\r\n\r\n\r\n### How To Reproduce\r\nYou can train a model with early stopping and patience=1 and see that it always stops after first epoch. Or you can use the unit test below that I added to pull request.\r\n\r\n#### Code sample\r\n```python\r\nfrom unittest.mock import MagicMock, PropertyMock\r\n\r\nfrom catalyst.core import EarlyStoppingCallback\r\n\r\n\r\ndef test_patience1():\r\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\r\n early_stop = EarlyStoppingCallback(1)\r\n runner = MagicMock()\r\n type(runner).stage_name = PropertyMock(return_value=\"training\")\r\n type(runner).valid_metrics = PropertyMock(return_value={\"loss\": 0.001})\r\n stop_mock = PropertyMock(return_value=False)\r\n type(runner).need_early_stop = stop_mock\r\n\r\n early_stop.on_epoch_end(runner)\r\n\r\n assert stop_mock.mock_calls == []\r\n```\r\n\r\n### Expected behavior\r\nTraining doesn't stop after first epoch. 
And the unit test passes.\r\n\r\n\r\n### Environment\r\n```bash\r\nCatalyst version: 20.06\r\nPyTorch version: 1.5.1\r\nIs debug build: No\r\nCUDA used to build PyTorch: None\r\nTensorFlow version: N/A\r\nTensorBoard version: 2.2.2\r\n\r\nOS: Mac OSX 10.15.5\r\nGCC version: Could not collect\r\nCMake version: version 3.8.0\r\n\r\nPython version: 3.7\r\nIs CUDA available: No\r\nCUDA runtime version: No CUDA\r\nGPU models and configuration: No CUDA\r\nNvidia driver version: No CUDA\r\ncuDNN version: No CUDA\r\n\r\nVersions of relevant libraries:\r\n[pip3] catalyst-codestyle==20.4\r\n[pip3] catalyst-sphinx-theme==1.1.1\r\n[pip3] efficientnet-pytorch==0.6.3\r\n[pip3] numpy==1.18.5\r\n[pip3] segmentation-models-pytorch==0.1.0\r\n[pip3] tensorboard==2.2.2\r\n[pip3] tensorboard-plugin-wit==1.6.0.post3\r\n[pip3] tensorboardX==2.0\r\n[pip3] torch==1.5.1\r\n[pip3] torchvision==0.6.1\r\n[conda] catalyst-codestyle 20.4 <pip>\r\n[conda] catalyst-sphinx-theme 1.1.1 <pip>\r\n[conda] efficientnet-pytorch 0.6.3 <pip>\r\n[conda] numpy 1.18.5 <pip>\r\n[conda] segmentation-models-pytorch 0.1.0 <pip>\r\n[conda] tensorboard 2.2.2 <pip>\r\n[conda] tensorboard-plugin-wit 1.6.0.post3 <pip>\r\n[conda] tensorboardX 2.0 <pip>\r\n[conda] torch 1.5.1 <pip>\r\n[conda] torchvision 0.6.1 <pip>\r\n```\r\n\n", "before_files": [{"content": "from catalyst.core.callback import Callback, CallbackNode, CallbackOrder\nfrom catalyst.core.runner import IRunner\n\n\nclass CheckRunCallback(Callback):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n\n def __init__(self, num_batch_steps: int = 3, num_epoch_steps: int = 2):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n super().__init__(order=CallbackOrder.external, node=CallbackNode.all)\n self.num_batch_steps = num_batch_steps\n self.num_epoch_steps = num_epoch_steps\n\n def on_epoch_end(self, runner: IRunner):\n \"\"\"@TODO: Docs. 
Contribution is welcome.\"\"\"\n if runner.epoch >= self.num_epoch_steps:\n runner.need_early_stop = True\n\n def on_batch_end(self, runner: IRunner):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n if runner.loader_batch_step >= self.num_batch_steps:\n runner.need_early_stop = True\n\n\nclass EarlyStoppingCallback(Callback):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n\n def __init__(\n self,\n patience: int,\n metric: str = \"loss\",\n minimize: bool = True,\n min_delta: float = 1e-6,\n ):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n super().__init__(order=CallbackOrder.external, node=CallbackNode.all)\n self.best_score = None\n self.metric = metric\n self.patience = patience\n self.num_bad_epochs = 0\n self.is_better = None\n\n if minimize:\n self.is_better = lambda score, best: score <= (best - min_delta)\n else:\n self.is_better = lambda score, best: score >= (best + min_delta)\n\n def on_epoch_end(self, runner: IRunner) -> None:\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n if runner.stage_name.startswith(\"infer\"):\n return\n\n score = runner.valid_metrics[self.metric]\n if self.best_score is None:\n self.best_score = score\n if self.is_better(score, self.best_score):\n self.num_bad_epochs = 0\n self.best_score = score\n else:\n self.num_bad_epochs += 1\n\n if self.num_bad_epochs >= self.patience:\n print(f\"Early stop at {runner.epoch} epoch\")\n runner.need_early_stop = True\n", "path": "catalyst/core/callbacks/early_stop.py"}], "after_files": [{"content": "from catalyst.core.callback import Callback, CallbackNode, CallbackOrder\nfrom catalyst.core.runner import IRunner\n\n\nclass CheckRunCallback(Callback):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n\n def __init__(self, num_batch_steps: int = 3, num_epoch_steps: int = 2):\n \"\"\"@TODO: Docs. 
Contribution is welcome.\"\"\"\n super().__init__(order=CallbackOrder.external, node=CallbackNode.all)\n self.num_batch_steps = num_batch_steps\n self.num_epoch_steps = num_epoch_steps\n\n def on_epoch_end(self, runner: IRunner):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n if runner.epoch >= self.num_epoch_steps:\n runner.need_early_stop = True\n\n def on_batch_end(self, runner: IRunner):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n if runner.loader_batch_step >= self.num_batch_steps:\n runner.need_early_stop = True\n\n\nclass EarlyStoppingCallback(Callback):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n\n def __init__(\n self,\n patience: int,\n metric: str = \"loss\",\n minimize: bool = True,\n min_delta: float = 1e-6,\n ):\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n super().__init__(order=CallbackOrder.external, node=CallbackNode.all)\n self.best_score = None\n self.metric = metric\n self.patience = patience\n self.num_bad_epochs = 0\n self.is_better = None\n\n if minimize:\n self.is_better = lambda score, best: score <= (best - min_delta)\n else:\n self.is_better = lambda score, best: score >= (best + min_delta)\n\n def on_epoch_end(self, runner: IRunner) -> None:\n \"\"\"@TODO: Docs. Contribution is welcome.\"\"\"\n if runner.stage_name.startswith(\"infer\"):\n return\n\n score = runner.valid_metrics[self.metric]\n if self.best_score is None or self.is_better(score, self.best_score):\n self.num_bad_epochs = 0\n self.best_score = score\n else:\n self.num_bad_epochs += 1\n\n if self.num_bad_epochs >= self.patience:\n print(f\"Early stop at {runner.epoch} epoch\")\n runner.need_early_stop = True\n", "path": "catalyst/core/callbacks/early_stop.py"}]} | 1,605 | 144 |
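The catalyst bug can be reproduced without Catalyst or a runner. The sketch below is a stand-alone Python re-implementation of the `on_epoch_end` bookkeeping from the file above. `EarlyStoppingSketch`, its `step` method and its `legacy` flag are hypothetical names introduced here and are not part of Catalyst's API.

- `legacy=True` replays the pre-patch branch. On the first epoch that branch seeds `best_score` and then compares the score against itself, so the `min_delta` margin fails and epoch 1 is counted as bad.
- The default path follows the patched condition.

```python
from typing import Optional


class EarlyStoppingSketch:
    """Hypothetical stand-in for EarlyStoppingCallback's stopping logic (not Catalyst API)."""

    def __init__(
        self,
        patience: int,
        minimize: bool = True,
        min_delta: float = 1e-6,
        legacy: bool = False,
    ) -> None:
        self.patience = patience
        self.legacy = legacy  # True replays the pre-patch behaviour
        self.best_score: Optional[float] = None
        self.num_bad_epochs = 0
        if minimize:
            self.is_better = lambda score, best: score <= (best - min_delta)
        else:
            self.is_better = lambda score, best: score >= (best + min_delta)

    def step(self, score: float) -> bool:
        """Record one epoch's validation score; return True if training should stop."""
        if self.legacy:
            # Pre-patch: seed best_score, then compare the score with itself,
            # which can never beat the min_delta margin.
            if self.best_score is None:
                self.best_score = score
            improved = self.is_better(score, self.best_score)
        else:
            # Patched: the first score is always accepted as the baseline.
            improved = self.best_score is None or self.is_better(score, self.best_score)

        if improved:
            self.num_bad_epochs = 0
            self.best_score = score
        else:
            self.num_bad_epochs += 1
        return self.num_bad_epochs >= self.patience


if __name__ == "__main__":
    fixed = EarlyStoppingSketch(patience=1)
    legacy = EarlyStoppingSketch(patience=1, legacy=True)
    print(fixed.step(0.001))   # False: the first epoch only sets the baseline
    print(legacy.step(0.001))  # True: the old logic stops right after epoch 1
```

With `patience=1`, the patched logic stops only after a genuinely non-improving epoch. This matches the expected behaviour described in the issue.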

gh_patches_debug_840 | rasdani/github-patches | git_diff | nilearn__nilearn-507 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Add test for compatibility of old version of six

For the moment, we are compatible with the latest version of six. Recently, somebody pointed out that we did not support six 1.5.2. We should investigate, decide which version we should be compatible with and then add this to Travis.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `continuous_integration/show-python-packages-versions.py`

Content:

```
1 import sys
2
3 DEPENDENCIES = ['numpy', 'scipy', 'sklearn', 'matplotlib', 'nibabel']
4
5
6 def print_package_version(package_name, indent=' '):
7     try:
8         package = __import__(package_name)
9         version = getattr(package, '__version__', None)
10         package_file = getattr(package, '__file__', )
11         provenance_info = '{0} from {1}'.format(version, package_file)
12     except ImportError:
13         provenance_info = 'not installed'
14
15     print('{0}{1}: {2}'.format(indent, package_name, provenance_info))
16
17 if __name__ == '__main__':
18     print('=' * 120)
19     print('Python %s' % str(sys.version))
20     print('from: %s\n' % sys.executable)
21
22     print('Dependencies versions')
23     for package_name in DEPENDENCIES:
24         print_package_version(package_name)
25     print('=' * 120)
26
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

```diff
diff --git a/continuous_integration/show-python-packages-versions.py b/continuous_integration/show-python-packages-versions.py
--- a/continuous_integration/show-python-packages-versions.py
+++ b/continuous_integration/show-python-packages-versions.py
@@ -1,6 +1,6 @@
 import sys
 
-DEPENDENCIES = ['numpy', 'scipy', 'sklearn', 'matplotlib', 'nibabel']
+DEPENDENCIES = ['six', 'numpy', 'scipy', 'sklearn', 'matplotlib', 'nibabel']
 
 
 def print_package_version(package_name, indent=' '):
```

| {"golden_diff": "diff --git a/continuous_integration/show-python-packages-versions.py b/continuous_integration/show-python-packages-versions.py\n--- a/continuous_integration/show-python-packages-versions.py\n+++ b/continuous_integration/show-python-packages-versions.py\n@@ -1,6 +1,6 @@\n import sys\n \n-DEPENDENCIES = ['numpy', 'scipy', 'sklearn', 'matplotlib', 'nibabel']\n+DEPENDENCIES = ['six', 'numpy', 'scipy', 'sklearn', 'matplotlib', 'nibabel']\n \n \n def print_package_version(package_name, indent=' '):\n", "issue": "Add test for compatibility of old version of six\nFor the moment, we are compatible with the latest version of six. Recently, somebody pointed out that we did not support six 1.5.2. We should investigate, decide which version we should be compatible with and then add this to Travis.\n\n", "before_files": [{"content": "import sys\n\nDEPENDENCIES = ['numpy', 'scipy', 'sklearn', 'matplotlib', 'nibabel']\n\n\ndef print_package_version(package_name, indent=' '):\n try:\n package = __import__(package_name)\n version = getattr(package, '__version__', None)\n package_file = getattr(package, '__file__', )\n provenance_info = '{0} from {1}'.format(version, package_file)\n except ImportError:\n provenance_info = 'not installed'\n\n print('{0}{1}: {2}'.format(indent, package_name, provenance_info))\n\nif __name__ == '__main__':\n print('=' * 120)\n print('Python %s' % str(sys.version))\n print('from: %s\\n' % sys.executable)\n\n print('Dependencies versions')\n for package_name in DEPENDENCIES:\n print_package_version(package_name)\n print('=' * 120)\n", "path": "continuous_integration/show-python-packages-versions.py"}], "after_files": [{"content": "import sys\n\nDEPENDENCIES = ['six', 'numpy', 'scipy', 'sklearn', 'matplotlib', 'nibabel']\n\n\ndef print_package_version(package_name, indent=' '):\n try:\n package = __import__(package_name)\n version = getattr(package, '__version__', None)\n package_file = getattr(package, '__file__', )\n provenance_info = 
'{0} from {1}'.format(version, package_file)\n except ImportError:\n provenance_info = 'not installed'\n\n print('{0}{1}: {2}'.format(indent, package_name, provenance_info))\n\nif __name__ == '__main__':\n print('=' * 120)\n print('Python %s' % str(sys.version))\n print('from: %s\\n' % sys.executable)\n\n print('Dependencies versions')\n for package_name in DEPENDENCIES:\n print_package_version(package_name)\n print('=' * 120)\n", "path": "continuous_integration/show-python-packages-versions.py"}]} | 569 | 123 |
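A small variant of `print_package_version` returns the provenance string instead of printing it, which makes the CI report easy to check. `package_provenance` is a hypothetical helper, not part of nilearn. It also passes an explicit `None` default to `getattr(package, '__file__', ...)`. The script above calls `getattr(package, '__file__', )` with no default. For a module without `__file__` (for example the built-in `sys`), that call raises `AttributeError`, which the `except ImportError` clause does not catch.

```python
import importlib
from typing import List

# 'six' first, matching the patched DEPENDENCIES list above.
DEPENDENCIES: List[str] = ['six', 'numpy', 'scipy', 'sklearn', 'matplotlib', 'nibabel']


def package_provenance(package_name: str) -> str:
    """Return '<version> from <file>' for an importable package, else 'not installed'."""
    try:
        package = importlib.import_module(package_name)
    except ImportError:
        return 'not installed'
    version = getattr(package, '__version__', None)
    # Explicit default: built-in modules such as sys have no __file__.
    package_file = getattr(package, '__file__', None)
    return '{0} from {1}'.format(version, package_file)


if __name__ == '__main__':
    for name in DEPENDENCIES:
        print('  {0}: {1}'.format(name, package_provenance(name)))
```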

gh_patches_debug_11637 | rasdani/github-patches | git_diff | getsentry__sentry-59857 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Jira deprecation of glance panels

Notice from Atlassian Support team about glance panel deprecation.

AC:

- Review the deprecation plan

- Build a recommendation based on how we're impacted. If minor development work is required, complete that with this ticket. If significant work is required, notify EM/PM to share impact and come up with next steps together.

Email from Atlassian:

```
Hope you are having a good day!
As part of this deprecation notice (https://developer.atlassian.com/cloud/jira/platform/changelog/#CHANGE-897), we are reaching out because we have identified that your app, “Sentry,” will be affected by the deprecation of glance panels.
This was initially scheduled for the 6th of October, but we have delayed it until the 30th of November.
The jiraIssueGlances and jira:issueGlance modules in Forge (https://developer.atlassian.com/platform/forge/manifest-reference/modules/jira-issue-glance/) and Connect (https://developer.atlassian.com/cloud/jira/platform/modules/issue-glance/) are being deprecated and replaced with the issueContext module.
We recommend transitioning from the glance panel to the new issue context module before the 30th of November.
Please note, we will not be extending this deprecation date as we announced it on the 30th of March.
Let me know if you need any further assistance,
Ahmud
Product Manager-Jira Cloud
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/sentry/integrations/jira/endpoints/descriptor.py`

Content:

```
1 from django.conf import settings
2 from django.urls import reverse
3 from rest_framework.request import Request
4 from rest_framework.response import Response
5
6 from sentry.api.api_publish_status import ApiPublishStatus
7 from sentry.api.base import Endpoint, control_silo_endpoint
8 from sentry.utils.assets import get_frontend_app_asset_url
9 from sentry.utils.http import absolute_uri
10
11 from .. import JIRA_KEY
12
13 scopes = ["read", "write", "act_as_user"]
14 # For Jira, only approved apps can use the access_email_addresses scope
15 # This scope allows Sentry to use the email endpoint (https://developer.atlassian.com/cloud/jira/platform/rest/v3/#api-rest-api-3-user-email-get)
16 # We use the email with Jira 2-way sync in order to match the user
17 if settings.JIRA_USE_EMAIL_SCOPE:
18     scopes.append("access_email_addresses")
19
20
21 @control_silo_endpoint
22 class JiraDescriptorEndpoint(Endpoint):
23     publish_status = {
24         "GET": ApiPublishStatus.UNKNOWN,
25     }
26     """
27     Provides the metadata needed by Jira to setup an instance of the Sentry integration within Jira.
28     Only used by on-prem orgs and devs setting up local instances of the integration. (Sentry SAAS
29     already has an established, official instance of the Sentry integration registered with Jira.)
30     """
31
32     authentication_classes = ()
33     permission_classes = ()
34
35     def get(self, request: Request) -> Response:
36         sentry_logo = absolute_uri(
37             get_frontend_app_asset_url("sentry", "entrypoints/logo-sentry.svg")
38         )
39         return self.respond(
40             {
41                 "name": "Sentry",
42                 "description": "Connect your Sentry organization to one or more of your Jira cloud instances. Get started streamlining your bug-squashing workflow by allowing your Sentry and Jira instances to work together.",
43                 "key": JIRA_KEY,
44                 "baseUrl": absolute_uri(),
45                 "vendor": {"name": "Sentry", "url": "https://sentry.io"},
46                 "authentication": {"type": "jwt"},
47                 "lifecycle": {
48                     "installed": "/extensions/jira/installed/",
49                     "uninstalled": "/extensions/jira/uninstalled/",
50                 },
51                 "apiVersion": 1,
52                 "modules": {
53                     "postInstallPage": {
54                         "url": "/extensions/jira/ui-hook/",
55                         "name": {"value": "Configure Sentry Add-on"},
56                         "key": "post-install-sentry",
57                     },
58                     "configurePage": {
59                         "url": "/extensions/jira/ui-hook/",
60                         "name": {"value": "Configure Sentry Add-on"},
61                         "key": "configure-sentry",
62                     },
63                     "jiraIssueGlances": [
64                         {
65                             "icon": {"width": 24, "height": 24, "url": sentry_logo},
66                             "content": {"type": "label", "label": {"value": "Linked Issues"}},
67                             "target": {
68                                 "type": "web_panel",
69                                 "url": "/extensions/jira/issue/{issue.key}/",
70                             },
71                             "name": {"value": "Sentry "},
72                             "key": "sentry-issues-glance",
73                         }
74                     ],
75                     "webhooks": [
76                         {
77                             "event": "jira:issue_updated",
78                             "url": reverse("sentry-extensions-jira-issue-updated"),
79                             "excludeBody": False,
80                         }
81                     ],
82                 },
83                 "apiMigrations": {"gdpr": True, "context-qsh": True, "signed-install": True},
84                 "scopes": scopes,
85             }
86         )
87
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

```diff
diff --git a/src/sentry/integrations/jira/endpoints/descriptor.py b/src/sentry/integrations/jira/endpoints/descriptor.py
--- a/src/sentry/integrations/jira/endpoints/descriptor.py
+++ b/src/sentry/integrations/jira/endpoints/descriptor.py
@@ -60,7 +60,7 @@
                         "name": {"value": "Configure Sentry Add-on"},
                         "key": "configure-sentry",
                     },
-                    "jiraIssueGlances": [
+                    "jiraIssueContexts": [
                         {
                             "icon": {"width": 24, "height": 24, "url": sentry_logo},
                             "content": {"type": "label", "label": {"value": "Linked Issues"}},
```

| {"golden_diff": "diff --git a/src/sentry/integrations/jira/endpoints/descriptor.py b/src/sentry/integrations/jira/endpoints/descriptor.py\n--- a/src/sentry/integrations/jira/endpoints/descriptor.py\n+++ b/src/sentry/integrations/jira/endpoints/descriptor.py\n@@ -60,7 +60,7 @@\n \"name\": {\"value\": \"Configure Sentry Add-on\"},\n \"key\": \"configure-sentry\",\n },\n- \"jiraIssueGlances\": [\n+ \"jiraIssueContexts\": [\n {\n \"icon\": {\"width\": 24, \"height\": 24, \"url\": sentry_logo},\n \"content\": {\"type\": \"label\", \"label\": {\"value\": \"Linked Issues\"}},\n", "issue": "Jira deprecation of glance panels\nNotice from Atlassian Support team about glance panel deprecation. \r\n\r\nAC:\r\n- Review the deprecation plan\r\n- Build a recommendation based on how we're impacted. If minor development work is required, complete that with this ticket. If significant work is required, notify EM/PM to share impact and come up with next steps together.\r\n\r\nEmail from Atlassian:\r\n```\r\nHope you are having a good day!\r\nAs part of this deprecation notice (https://developer.atlassian.com/cloud/jira/platform/changelog/#CHANGE-897), we are reaching out because we have identified that your app, \u201cSentry,\u201d will be affected by the deprecation of glance panels. \r\nThis was initially scheduled for the 6th of October, but we have delayed it until the 30th of November.\r\nThe jiraIssueGlances and jira:issueGlance modules in Forge (https://developer.atlassian.com/platform/forge/manifest-reference/modules/jira-issue-glance/) and Connect (https://developer.atlassian.com/cloud/jira/platform/modules/issue-glance/) are being deprecated and replaced with the issueContext module. \r\nWe recommend transitioning from the glance panel to the new issue context module before the 30th of November. 
\r\nPlease note, we will not be extending this deprecation date as we announced it on the 30th of March.\r\nLet me know if you need any further assistance,\r\nAhmud\r\nProduct Manager-Jira Cloud\r\n```\n", "before_files": [{"content": "from django.conf import settings\nfrom django.urls import reverse\nfrom rest_framework.request import Request\nfrom rest_framework.response import Response\n\nfrom sentry.api.api_publish_status import ApiPublishStatus\nfrom sentry.api.base import Endpoint, control_silo_endpoint\nfrom sentry.utils.assets import get_frontend_app_asset_url\nfrom sentry.utils.http import absolute_uri\n\nfrom .. import JIRA_KEY\n\nscopes = [\"read\", \"write\", \"act_as_user\"]\n# For Jira, only approved apps can use the access_email_addresses scope\n# This scope allows Sentry to use the email endpoint (https://developer.atlassian.com/cloud/jira/platform/rest/v3/#api-rest-api-3-user-email-get)\n# We use the email with Jira 2-way sync in order to match the user\nif settings.JIRA_USE_EMAIL_SCOPE:\n scopes.append(\"access_email_addresses\")\n\n\n@control_silo_endpoint\nclass JiraDescriptorEndpoint(Endpoint):\n publish_status = {\n \"GET\": ApiPublishStatus.UNKNOWN,\n }\n \"\"\"\n Provides the metadata needed by Jira to setup an instance of the Sentry integration within Jira.\n Only used by on-prem orgs and devs setting up local instances of the integration. (Sentry SAAS\n already has an established, official instance of the Sentry integration registered with Jira.)\n \"\"\"\n\n authentication_classes = ()\n permission_classes = ()\n\n def get(self, request: Request) -> Response:\n sentry_logo = absolute_uri(\n get_frontend_app_asset_url(\"sentry\", \"entrypoints/logo-sentry.svg\")\n )\n return self.respond(\n {\n \"name\": \"Sentry\",\n \"description\": \"Connect your Sentry organization to one or more of your Jira cloud instances. 
Get started streamlining your bug-squashing workflow by allowing your Sentry and Jira instances to work together.\",\n \"key\": JIRA_KEY,\n \"baseUrl\": absolute_uri(),\n \"vendor\": {\"name\": \"Sentry\", \"url\": \"https://sentry.io\"},\n \"authentication\": {\"type\": \"jwt\"},\n \"lifecycle\": {\n \"installed\": \"/extensions/jira/installed/\",\n \"uninstalled\": \"/extensions/jira/uninstalled/\",\n },\n \"apiVersion\": 1,\n \"modules\": {\n \"postInstallPage\": {\n \"url\": \"/extensions/jira/ui-hook/\",\n \"name\": {\"value\": \"Configure Sentry Add-on\"},\n \"key\": \"post-install-sentry\",\n },\n \"configurePage\": {\n \"url\": \"/extensions/jira/ui-hook/\",\n \"name\": {\"value\": \"Configure Sentry Add-on\"},\n \"key\": \"configure-sentry\",\n },\n \"jiraIssueGlances\": [\n {\n \"icon\": {\"width\": 24, \"height\": 24, \"url\": sentry_logo},\n \"content\": {\"type\": \"label\", \"label\": {\"value\": \"Linked Issues\"}},\n \"target\": {\n \"type\": \"web_panel\",\n \"url\": \"/extensions/jira/issue/{issue.key}/\",\n },\n \"name\": {\"value\": \"Sentry \"},\n \"key\": \"sentry-issues-glance\",\n }\n ],\n \"webhooks\": [\n {\n \"event\": \"jira:issue_updated\",\n \"url\": reverse(\"sentry-extensions-jira-issue-updated\"),\n \"excludeBody\": False,\n }\n ],\n },\n \"apiMigrations\": {\"gdpr\": True, \"context-qsh\": True, \"signed-install\": True},\n \"scopes\": scopes,\n }\n )\n", "path": "src/sentry/integrations/jira/endpoints/descriptor.py"}], "after_files": [{"content": "from django.conf import settings\nfrom django.urls import reverse\nfrom rest_framework.request import Request\nfrom rest_framework.response import Response\n\nfrom sentry.api.api_publish_status import ApiPublishStatus\nfrom sentry.api.base import Endpoint, control_silo_endpoint\nfrom sentry.utils.assets import get_frontend_app_asset_url\nfrom sentry.utils.http import absolute_uri\n\nfrom .. 
import JIRA_KEY\n\nscopes = [\"read\", \"write\", \"act_as_user\"]\n# For Jira, only approved apps can use the access_email_addresses scope\n# This scope allows Sentry to use the email endpoint (https://developer.atlassian.com/cloud/jira/platform/rest/v3/#api-rest-api-3-user-email-get)\n# We use the email with Jira 2-way sync in order to match the user\nif settings.JIRA_USE_EMAIL_SCOPE:\n scopes.append(\"access_email_addresses\")\n\n\n@control_silo_endpoint\nclass JiraDescriptorEndpoint(Endpoint):\n publish_status = {\n \"GET\": ApiPublishStatus.UNKNOWN,\n }\n \"\"\"\n Provides the metadata needed by Jira to setup an instance of the Sentry integration within Jira.\n Only used by on-prem orgs and devs setting up local instances of the integration. (Sentry SAAS\n already has an established, official instance of the Sentry integration registered with Jira.)\n \"\"\"\n\n authentication_classes = ()\n permission_classes = ()\n\n def get(self, request: Request) -> Response:\n sentry_logo = absolute_uri(\n get_frontend_app_asset_url(\"sentry\", \"entrypoints/logo-sentry.svg\")\n )\n return self.respond(\n {\n \"name\": \"Sentry\",\n \"description\": \"Connect your Sentry organization to one or more of your Jira cloud instances. 
Get started streamlining your bug-squashing workflow by allowing your Sentry and Jira instances to work together.\",\n \"key\": JIRA_KEY,\n \"baseUrl\": absolute_uri(),\n \"vendor\": {\"name\": \"Sentry\", \"url\": \"https://sentry.io\"},\n \"authentication\": {\"type\": \"jwt\"},\n \"lifecycle\": {\n \"installed\": \"/extensions/jira/installed/\",\n \"uninstalled\": \"/extensions/jira/uninstalled/\",\n },\n \"apiVersion\": 1,\n \"modules\": {\n \"postInstallPage\": {\n \"url\": \"/extensions/jira/ui-hook/\",\n \"name\": {\"value\": \"Configure Sentry Add-on\"},\n \"key\": \"post-install-sentry\",\n },\n \"configurePage\": {\n \"url\": \"/extensions/jira/ui-hook/\",\n \"name\": {\"value\": \"Configure Sentry Add-on\"},\n \"key\": \"configure-sentry\",\n },\n \"jiraIssueContexts\": [\n {\n \"icon\": {\"width\": 24, \"height\": 24, \"url\": sentry_logo},\n \"content\": {\"type\": \"label\", \"label\": {\"value\": \"Linked Issues\"}},\n \"target\": {\n \"type\": \"web_panel\",\n \"url\": \"/extensions/jira/issue/{issue.key}/\",\n },\n \"name\": {\"value\": \"Sentry \"},\n \"key\": \"sentry-issues-glance\",\n }\n ],\n \"webhooks\": [\n {\n \"event\": \"jira:issue_updated\",\n \"url\": reverse(\"sentry-extensions-jira-issue-updated\"),\n \"excludeBody\": False,\n }\n ],\n },\n \"apiMigrations\": {\"gdpr\": True, \"context-qsh\": True, \"signed-install\": True},\n \"scopes\": scopes,\n }\n )\n", "path": "src/sentry/integrations/jira/endpoints/descriptor.py"}]} | 1,499 | 171 |

gh_patches_debug_21950 | rasdani/github-patches | git_diff | cornellius-gp__gpytorch-1670 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[Bug] The Added Loss term for InducingKernel seems flipped in sign?

# 🐛 Bug

<!-- A clear and concise description of what the bug is. -->

```
def loss(self, *params):
    prior_covar = self.prior_dist.lazy_covariance_matrix
    variational_covar = self.variational_dist.lazy_covariance_matrix
    diag = prior_covar.diag() - variational_covar.diag()
    shape = prior_covar.shape[:-1]
    noise_diag = self.likelihood._shaped_noise_covar(shape, *params).diag()
    return 0.5 * (diag / noise_diag).sum()
```

This is the current code for InducingPointKernelAddedLossTerm.loss

From what I see, this "loss term" is added into the mll that is returned by the `ExactMarginalLogLikelihood` class. This in itself is misleading as the loss is usually the negative of the mll.

Moreover, the variational negative loss used to evaluate inducing points is given below

As can be seen, the above is the expression for the pseudo-mll that is maximized when optimizing the inducing points. In this expression, the `InducingPointKernelAddedLossTerm` component has the opposite sign to the value that is being added to the loss.

This is quite likely a significant bug. Please fix (just invert the sign of `diag` above)

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `gpytorch/mlls/inducing_point_kernel_added_loss_term.py`

Content:

```
1 #!/usr/bin/env python3
2 
3 from .added_loss_term import AddedLossTerm
4 
5 
6 class InducingPointKernelAddedLossTerm(AddedLossTerm):
7     def __init__(self, variational_dist, prior_dist, likelihood):
8         self.prior_dist = prior_dist
9         self.variational_dist = variational_dist
10         self.likelihood = likelihood
11 
12     def loss(self, *params):
13         prior_covar = self.prior_dist.lazy_covariance_matrix
14         variational_covar = self.variational_dist.lazy_covariance_matrix
15         diag = prior_covar.diag() - variational_covar.diag()
16         shape = prior_covar.shape[:-1]
17         noise_diag = self.likelihood._shaped_noise_covar(shape, *params).diag()
18         return 0.5 * (diag / noise_diag).sum()
19 
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/gpytorch/mlls/inducing_point_kernel_added_loss_term.py b/gpytorch/mlls/inducing_point_kernel_added_loss_term.py
--- a/gpytorch/mlls/inducing_point_kernel_added_loss_term.py
+++ b/gpytorch/mlls/inducing_point_kernel_added_loss_term.py
@@ -4,7 +4,7 @@
 
 
 class InducingPointKernelAddedLossTerm(AddedLossTerm):
-    def __init__(self, variational_dist, prior_dist, likelihood):
+    def __init__(self, prior_dist, variational_dist, likelihood):
         self.prior_dist = prior_dist
         self.variational_dist = variational_dist
         self.likelihood = likelihood
@@ -12,7 +12,7 @@
     def loss(self, *params):
         prior_covar = self.prior_dist.lazy_covariance_matrix
         variational_covar = self.variational_dist.lazy_covariance_matrix
-        diag = prior_covar.diag() - variational_covar.diag()
+        diag = variational_covar.diag() - prior_covar.diag()
         shape = prior_covar.shape[:-1]
         noise_diag = self.likelihood._shaped_noise_covar(shape, *params).diag()
         return 0.5 * (diag / noise_diag).sum()

| {"golden_diff": "diff --git a/gpytorch/mlls/inducing_point_kernel_added_loss_term.py b/gpytorch/mlls/inducing_point_kernel_added_loss_term.py\n--- a/gpytorch/mlls/inducing_point_kernel_added_loss_term.py\n+++ b/gpytorch/mlls/inducing_point_kernel_added_loss_term.py\n@@ -4,7 +4,7 @@\n \n \n class InducingPointKernelAddedLossTerm(AddedLossTerm):\n- def __init__(self, variational_dist, prior_dist, likelihood):\n+ def __init__(self, prior_dist, variational_dist, likelihood):\n self.prior_dist = prior_dist\n self.variational_dist = variational_dist\n self.likelihood = likelihood\n@@ -12,7 +12,7 @@\n def loss(self, *params):\n prior_covar = self.prior_dist.lazy_covariance_matrix\n variational_covar = self.variational_dist.lazy_covariance_matrix\n- diag = prior_covar.diag() - variational_covar.diag()\n+ diag = variational_covar.diag() - prior_covar.diag()\n shape = prior_covar.shape[:-1]\n noise_diag = self.likelihood._shaped_noise_covar(shape, *params).diag()\n return 0.5 * (diag / noise_diag).sum()\n", "issue": "[Bug] The Added Loss term for InducingKernel seems flipped in sign?\n# \ud83d\udc1b Bug\r\n\r\n<!-- A clear and concise description of what the bug is. -->\r\n```\r\n def loss(self, *params):\r\n prior_covar = self.prior_dist.lazy_covariance_matrix\r\n variational_covar = self.variational_dist.lazy_covariance_matrix\r\n diag = prior_covar.diag() - variational_covar.diag()\r\n shape = prior_covar.shape[:-1]\r\n noise_diag = self.likelihood._shaped_noise_covar(shape, *params).diag()\r\n return 0.5 * (diag / noise_diag).sum()\r\n```\r\nThis is the current code for InducingPointKernelAddedLossTerm.loss\r\n\r\nFrom what I see, this \"loss term\" is added into the mll that is returned by the `ExactMarginalLogLikelihood` class. 
This in itself is misleading as the loss is usually the negative of the mll.\r\n\r\nMoreover, the variational negative loss used to evaluate inducing points is given below\r\n\r\n\r\n\r\nAs can be seen, the above is the expression for the pseudo-mll that is maximized when optimizing the inducing points. in this, the component of `InducingPointKernelAddedLossTerm` is negative to the value that is being added into the loss.\r\n\r\nThis is quite likely a significant bug. Please fix (just invert the sign of `diag` above)\r\n\n", "before_files": [{"content": "#!/usr/bin/env python3\n\nfrom .added_loss_term import AddedLossTerm\n\n\nclass InducingPointKernelAddedLossTerm(AddedLossTerm):\n def __init__(self, variational_dist, prior_dist, likelihood):\n self.prior_dist = prior_dist\n self.variational_dist = variational_dist\n self.likelihood = likelihood\n\n def loss(self, *params):\n prior_covar = self.prior_dist.lazy_covariance_matrix\n variational_covar = self.variational_dist.lazy_covariance_matrix\n diag = prior_covar.diag() - variational_covar.diag()\n shape = prior_covar.shape[:-1]\n noise_diag = self.likelihood._shaped_noise_covar(shape, *params).diag()\n return 0.5 * (diag / noise_diag).sum()\n", "path": "gpytorch/mlls/inducing_point_kernel_added_loss_term.py"}], "after_files": [{"content": "#!/usr/bin/env python3\n\nfrom .added_loss_term import AddedLossTerm\n\n\nclass InducingPointKernelAddedLossTerm(AddedLossTerm):\n def __init__(self, prior_dist, variational_dist, likelihood):\n self.prior_dist = prior_dist\n self.variational_dist = variational_dist\n self.likelihood = likelihood\n\n def loss(self, *params):\n prior_covar = self.prior_dist.lazy_covariance_matrix\n variational_covar = self.variational_dist.lazy_covariance_matrix\n diag = variational_covar.diag() - prior_covar.diag()\n shape = prior_covar.shape[:-1]\n noise_diag = self.likelihood._shaped_noise_covar(shape, *params).diag()\n return 0.5 * (diag / noise_diag).sum()\n", "path": 
"gpytorch/mlls/inducing_point_kernel_added_loss_term.py"}]} | 824 | 284 |
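The sign argument in the gpytorch issue can be checked numerically. Below is a minimal pure-Python sketch. It assumes an RBF kernel with unit outputscale, a single inducing point `z`, a scalar Gaussian noise variance, and that `prior_dist` carries the exact prior variances K while `variational_dist` carries the Nyström approximation Q. The helpers `rbf` and `added_loss_term` are hypothetical and are not gpytorch APIs. The Nyström variance Q_ii = k(x_i, z)^2 / k(z, z) never exceeds K_ii. A correction *added* to the marginal log likelihood should therefore be 0.5 * sum((Q_ii - K_ii) / noise) <= 0, which is the sign of the patched line `diag = variational_covar.diag() - prior_covar.diag()`.

```python
import math


def rbf(a, b, lengthscale=1.0):
    # Squared-exponential kernel with unit outputscale (assumed for illustration).
    return math.exp(-0.5 * ((a - b) / lengthscale) ** 2)


def added_loss_term(xs, z, noise):
    """Hypothetical helper: trace correction of the collapsed sparse-GP bound
    for one inducing point z, i.e. 0.5 * sum((Q_ii - K_ii) / noise) with
    Q = K_xz K_zz^{-1} K_zx. This value is added to the MLL, so it is <= 0."""
    kzz = rbf(z, z)
    total = 0.0
    for x in xs:
        k_ii = rbf(x, x)             # exact prior variance K_ii
        q_ii = rbf(x, z) ** 2 / kzz  # Nystrom variance Q_ii, never above K_ii
        total += (q_ii - k_ii) / noise
    return 0.5 * total


print(added_loss_term([-1.0, 0.5, 2.0], z=0.0, noise=0.1))
```

Placing `z` exactly on a data point makes that point's contribution zero. This is why the bound rewards inducing points that cover the inputs rather than penalizing them.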

gh_patches_debug_29548 | rasdani/github-patches | git_diff | translate__pootle-3719 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Running migrate twice gives an error about changed models

If you run `migrate` a second time directly after an initial migration you will get the following error.

```
Running migrations:
  No migrations to apply.
  Your models have changes that are not yet reflected in a migration, and so won't be applied.
  Run 'manage.py makemigrations' to make new migrations, and then re-run 'manage.py migrate' to apply them.
```

`makemigrations` produces this file:

``` py
# -*- coding: utf-8 -*-
from __future__ import unicode_literals

from django.db import models, migrations
import pootle.core.markup.fields


class Migration(migrations.Migration):

    dependencies = [
        ('virtualfolder', '0001_initial'),
    ]

    operations = [
        migrations.AlterField(
            model_name='virtualfolder',
            name='description',
            field=pootle.core.markup.fields.MarkupField(help_text='Use this to provide more information or instructions. Allowed markup: HTML', verbose_name='Description', blank=True),
            preserve_default=True,
        ),
    ]
```

@unho Why are virtualfolders doing this?

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pootle/apps/virtualfolder/migrations/0001_initial.py`

Content:

```
1 # -*- coding: utf-8 -*-
2 from __future__ import unicode_literals
3 
4 from django.db import models, migrations
5 import pootle.core.markup.fields
6 
7 
8 class Migration(migrations.Migration):
9 
10     dependencies = [
11         ('pootle_store', '0001_initial'),
12     ]
13 
14     operations = [
15         migrations.CreateModel(
16             name='VirtualFolder',
17             fields=[
18                 ('id', models.AutoField(verbose_name='ID', serialize=False, auto_created=True, primary_key=True)),
19                 ('name', models.CharField(max_length=70, verbose_name='Name')),
20                 ('location', models.CharField(help_text='Root path where this virtual folder is applied.', max_length=255, verbose_name='Location')),
21                 ('filter_rules', models.TextField(help_text='Filtering rules that tell which stores this virtual folder comprises.', verbose_name='Filter')),
22                 ('priority', models.FloatField(default=1, help_text='Number specifying importance. Greater priority means it is more important.', verbose_name='Priority')),
23                 ('is_browsable', models.BooleanField(default=True, help_text='Whether this virtual folder is active or not.', verbose_name='Is browsable?')),
24                 ('description', pootle.core.markup.fields.MarkupField(help_text='Use this to provide more information or instructions. Allowed markup: HTML', verbose_name='Description', blank=True)),
25                 ('units', models.ManyToManyField(related_name='vfolders', to='pootle_store.Unit', db_index=True)),
26             ],
27             options={
28                 'ordering': ['-priority', 'name'],
29             },
30             bases=(models.Model,),
31         ),
32         migrations.AlterUniqueTogether(
33             name='virtualfolder',
34             unique_together=set([('name', 'location')]),
35         ),
36     ]
37 
```

Path: `pootle/core/markup/fields.py`

Content:

```
1 #!/usr/bin/env python
2 # -*- coding: utf-8 -*-
3 #
4 # Copyright (C) Pootle contributors.
5 #
6 # This file is a part of the Pootle project. It is distributed under the GPL3
7 # or later license. See the LICENSE file for a copy of the license and the
8 # AUTHORS file for copyright and authorship information.
9 
10 import logging
11 
12 from django.conf import settings
13 from django.core.cache import cache
14 from django.db import models
15 from django.utils.safestring import mark_safe
16 
17 from .filters import apply_markup_filter
18 from .widgets import MarkupTextarea
19 
20 
21 __all__ = ('Markup', 'MarkupField',)
22 
23 
24 logger = logging.getLogger('pootle.markup')
25 
26 
27 _rendered_cache_key = lambda obj, pk, field: '_%s_%s_%s_rendered' % \
28                                     (obj, pk, field)
29 
30 
31 class Markup(object):
32 
33     def __init__(self, instance, field_name, rendered_cache_key):
34         self.instance = instance
35         self.field_name = field_name
36         self.cache_key = rendered_cache_key
37 
38     @property
39     def raw(self):
40         return self.instance.__dict__[self.field_name]
41 
42     @raw.setter
43     def raw(self, value):
44         setattr(self.instance, self.field_name, value)
45 
46     @property
47     def rendered(self):
48         rendered = cache.get(self.cache_key)
49 
50         if not rendered:
51             logger.debug(u'Caching rendered output of %r', self.cache_key)
52             rendered = apply_markup_filter(self.raw)
53             cache.set(self.cache_key, rendered,
54                       settings.OBJECT_CACHE_TIMEOUT)
55 
56         return rendered
57 
58     def __unicode__(self):
59         return mark_safe(self.rendered)
60 
61     def __nonzero__(self):
62         return self.raw.strip() != '' and self.raw is not None
63 
64 
65 class MarkupDescriptor(object):
66 
67     def __init__(self, field):
68         self.field = field
69 
70     def __get__(self, obj, owner):
71         if obj is None:
72             raise AttributeError('Can only be accessed via an instance.')
73 
74         markup = obj.__dict__[self.field.name]
75         if markup is None:
76             return None
77 
78         cache_key = _rendered_cache_key(obj.__class__.__name__,
79                                         obj.pk,
80                                         self.field.name)
81         return Markup(obj, self.field.name, cache_key)
82 
83     def __set__(self, obj, value):
84         if isinstance(value, Markup):
85             obj.__dict__[self.field.name] = value.raw
86         else:
87             obj.__dict__[self.field.name] = value
88 
89 
90 class MarkupField(models.TextField):
91 
92     description = 'Text field supporting different markup formats.'
93 
94     def contribute_to_class(self, cls, name):
95         super(MarkupField, self).contribute_to_class(cls, name)
96         setattr(cls, self.name, MarkupDescriptor(self))
97 
98     def pre_save(self, model_instance, add):
99         value = super(MarkupField, self).pre_save(model_instance, add)
100 
101         if not add:
102             # Invalidate cache to force rendering upon next retrieval
103             cache_key = _rendered_cache_key(model_instance.__class__.__name__,
104                                             model_instance.pk,
105                                             self.name)
106             logger.debug('Invalidating cache for %r', cache_key)
107             cache.delete(cache_key)
108 
109         return value.raw
110 
111     def get_prep_value(self, value):
112         if isinstance(value, Markup):
113             return value.raw
114 
115         return value
116 
117     def value_to_string(self, obj):
118         value = self._get_val_from_obj(obj)
119         return self.get_prep_value(value)
120 
121     def formfield(self, **kwargs):
122         defaults = {'widget': MarkupTextarea}
123         defaults.update(kwargs)
124         return super(MarkupField, self).formfield(**defaults)
125 
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/pootle/apps/virtualfolder/migrations/0001_initial.py b/pootle/apps/virtualfolder/migrations/0001_initial.py
--- a/pootle/apps/virtualfolder/migrations/0001_initial.py
+++ b/pootle/apps/virtualfolder/migrations/0001_initial.py
@@ -21,7 +21,7 @@
                 ('filter_rules', models.TextField(help_text='Filtering rules that tell which stores this virtual folder comprises.', verbose_name='Filter')),
                 ('priority', models.FloatField(default=1, help_text='Number specifying importance. Greater priority means it is more important.', verbose_name='Priority')),
                 ('is_browsable', models.BooleanField(default=True, help_text='Whether this virtual folder is active or not.', verbose_name='Is browsable?')),
-                ('description', pootle.core.markup.fields.MarkupField(help_text='Use this to provide more information or instructions. Allowed markup: HTML', verbose_name='Description', blank=True)),
+                ('description', pootle.core.markup.fields.MarkupField(verbose_name='Description', blank=True)),
                 ('units', models.ManyToManyField(related_name='vfolders', to='pootle_store.Unit', db_index=True)),
             ],
             options={
diff --git a/pootle/core/markup/fields.py b/pootle/core/markup/fields.py
--- a/pootle/core/markup/fields.py
+++ b/pootle/core/markup/fields.py
@@ -122,3 +122,8 @@
         defaults = {'widget': MarkupTextarea}
         defaults.update(kwargs)
         return super(MarkupField, self).formfield(**defaults)
+
+    def deconstruct(self):
+        name, path, args, kwargs = super(MarkupField, self).deconstruct()
+        kwargs.pop('help_text', None)
+        return name, path, args, kwargs

| {"golden_diff": "diff --git a/pootle/apps/virtualfolder/migrations/0001_initial.py b/pootle/apps/virtualfolder/migrations/0001_initial.py\n--- a/pootle/apps/virtualfolder/migrations/0001_initial.py\n+++ b/pootle/apps/virtualfolder/migrations/0001_initial.py\n@@ -21,7 +21,7 @@\n ('filter_rules', models.TextField(help_text='Filtering rules that tell which stores this virtual folder comprises.', verbose_name='Filter')),\n ('priority', models.FloatField(default=1, help_text='Number specifying importance. Greater priority means it is more important.', verbose_name='Priority')),\n ('is_browsable', models.BooleanField(default=True, help_text='Whether this virtual folder is active or not.', verbose_name='Is browsable?')),\n- ('description', pootle.core.markup.fields.MarkupField(help_text='Use this to provide more information or instructions. Allowed markup: HTML', verbose_name='Description', blank=True)),\n+ ('description', pootle.core.markup.fields.MarkupField(verbose_name='Description', blank=True)),\n ('units', models.ManyToManyField(related_name='vfolders', to='pootle_store.Unit', db_index=True)),\n ],\n options={\ndiff --git a/pootle/core/markup/fields.py b/pootle/core/markup/fields.py\n--- a/pootle/core/markup/fields.py\n+++ b/pootle/core/markup/fields.py\n@@ -122,3 +122,8 @@\n defaults = {'widget': MarkupTextarea}\n defaults.update(kwargs)\n return super(MarkupField, self).formfield(**defaults)\n+\n+ def deconstruct(self):\n+ name, path, args, kwargs = super(MarkupField, self).deconstruct()\n+ kwargs.pop('help_text', None)\n+ return name, path, args, kwargs\n", "issue": "Running migrate twice gives an error about changed models\nIf you run `migrate` a second time directly after an initial migration you will get the following error.\n\n```\nRunning migrations:\n No migrations to apply.\n Your models have changes that are not yet reflected in a migration, and so won't be applied.\n Run 'manage.py makemigrations' to make new migrations, and then re-run 'manage.py 
migrate' to apply them.\n```\n\n`makemigrations` produces this file:\n\n``` py\n# -*- coding: utf-8 -*-\nfrom __future__ import unicode_literals\n\nfrom django.db import models, migrations\nimport pootle.core.markup.fields\n\n\nclass Migration(migrations.Migration):\n\n dependencies = [\n ('virtualfolder', '0001_initial'),\n ]\n\n operations = [\n migrations.AlterField(\n model_name='virtualfolder',\n name='description',\n field=pootle.core.markup.fields.MarkupField(help_text='Use this to provide more information or instructions. Allowed markup: HTML', verbose_name='Description', blank=True),\n preserve_default=True,\n ),\n ]\n```\n\n@unho Why are virtualfolders doing this?\n\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\nfrom __future__ import unicode_literals\n\nfrom django.db import models, migrations\nimport pootle.core.markup.fields\n\n\nclass Migration(migrations.Migration):\n\n dependencies = [\n ('pootle_store', '0001_initial'),\n ]\n\n operations = [\n migrations.CreateModel(\n name='VirtualFolder',\n fields=[\n ('id', models.AutoField(verbose_name='ID', serialize=False, auto_created=True, primary_key=True)),\n ('name', models.CharField(max_length=70, verbose_name='Name')),\n ('location', models.CharField(help_text='Root path where this virtual folder is applied.', max_length=255, verbose_name='Location')),\n ('filter_rules', models.TextField(help_text='Filtering rules that tell which stores this virtual folder comprises.', verbose_name='Filter')),\n ('priority', models.FloatField(default=1, help_text='Number specifying importance. Greater priority means it is more important.', verbose_name='Priority')),\n ('is_browsable', models.BooleanField(default=True, help_text='Whether this virtual folder is active or not.', verbose_name='Is browsable?')),\n ('description', pootle.core.markup.fields.MarkupField(help_text='Use this to provide more information or instructions. 
Allowed markup: HTML', verbose_name='Description', blank=True)),\n ('units', models.ManyToManyField(related_name='vfolders', to='pootle_store.Unit', db_index=True)),\n ],\n options={\n 'ordering': ['-priority', 'name'],\n },\n bases=(models.Model,),\n ),\n migrations.AlterUniqueTogether(\n name='virtualfolder',\n unique_together=set([('name', 'location')]),\n ),\n ]\n", "path": "pootle/apps/virtualfolder/migrations/0001_initial.py"}, {"content": "#!/usr/bin/env python\n# -*- coding: utf-8 -*-\n#\n# Copyright (C) Pootle contributors.\n#\n# This file is a part of the Pootle project. It is distributed under the GPL3\n# or later license. See the LICENSE file for a copy of the license and the\n# AUTHORS file for copyright and authorship information.\n\nimport logging\n\nfrom django.conf import settings\nfrom django.core.cache import cache\nfrom django.db import models\nfrom django.utils.safestring import mark_safe\n\nfrom .filters import apply_markup_filter\nfrom .widgets import MarkupTextarea\n\n\n__all__ = ('Markup', 'MarkupField',)\n\n\nlogger = logging.getLogger('pootle.markup')\n\n\n_rendered_cache_key = lambda obj, pk, field: '_%s_%s_%s_rendered' % \\\n (obj, pk, field)\n\n\nclass Markup(object):\n\n def __init__(self, instance, field_name, rendered_cache_key):\n self.instance = instance\n self.field_name = field_name\n self.cache_key = rendered_cache_key\n\n @property\n def raw(self):\n return self.instance.__dict__[self.field_name]\n\n @raw.setter\n def raw(self, value):\n setattr(self.instance, self.field_name, value)\n\n @property\n def rendered(self):\n rendered = cache.get(self.cache_key)\n\n if not rendered:\n logger.debug(u'Caching rendered output of %r', self.cache_key)\n rendered = apply_markup_filter(self.raw)\n cache.set(self.cache_key, rendered,\n settings.OBJECT_CACHE_TIMEOUT)\n\n return rendered\n\n def __unicode__(self):\n return mark_safe(self.rendered)\n\n def __nonzero__(self):\n return self.raw.strip() != '' and self.raw is not None\n\n\nclass 
MarkupDescriptor(object):\n\n def __init__(self, field):\n self.field = field\n\n def __get__(self, obj, owner):\n if obj is None:\n raise AttributeError('Can only be accessed via an instance.')\n\n markup = obj.__dict__[self.field.name]\n if markup is None:\n return None\n\n cache_key = _rendered_cache_key(obj.__class__.__name__,\n obj.pk,\n self.field.name)\n return Markup(obj, self.field.name, cache_key)\n\n def __set__(self, obj, value):\n if isinstance(value, Markup):\n obj.__dict__[self.field.name] = value.raw\n else:\n obj.__dict__[self.field.name] = value\n\n\nclass MarkupField(models.TextField):\n\n description = 'Text field supporting different markup formats.'\n\n def contribute_to_class(self, cls, name):\n super(MarkupField, self).contribute_to_class(cls, name)\n setattr(cls, self.name, MarkupDescriptor(self))\n\n def pre_save(self, model_instance, add):\n value = super(MarkupField, self).pre_save(model_instance, add)\n\n if not add:\n # Invalidate cache to force rendering upon next retrieval\n cache_key = _rendered_cache_key(model_instance.__class__.__name__,\n model_instance.pk,\n self.name)\n logger.debug('Invalidating cache for %r', cache_key)\n cache.delete(cache_key)\n\n return value.raw\n\n def get_prep_value(self, value):\n if isinstance(value, Markup):\n return value.raw\n\n return value\n\n def value_to_string(self, obj):\n value = self._get_val_from_obj(obj)\n return self.get_prep_value(value)\n\n def formfield(self, **kwargs):\n defaults = {'widget': MarkupTextarea}\n defaults.update(kwargs)\n return super(MarkupField, self).formfield(**defaults)\n", "path": "pootle/core/markup/fields.py"}], "after_files": [{"content": "# -*- coding: utf-8 -*-\nfrom __future__ import unicode_literals\n\nfrom django.db import models, migrations\nimport pootle.core.markup.fields\n\n\nclass Migration(migrations.Migration):\n\n dependencies = [\n ('pootle_store', '0001_initial'),\n ]\n\n operations = [\n migrations.CreateModel(\n name='VirtualFolder',\n 
fields=[\n ('id', models.AutoField(verbose_name='ID', serialize=False, auto_created=True, primary_key=True)),\n ('name', models.CharField(max_length=70, verbose_name='Name')),\n ('location', models.CharField(help_text='Root path where this virtual folder is applied.', max_length=255, verbose_name='Location')),\n ('filter_rules', models.TextField(help_text='Filtering rules that tell which stores this virtual folder comprises.', verbose_name='Filter')),\n ('priority', models.FloatField(default=1, help_text='Number specifying importance. Greater priority means it is more important.', verbose_name='Priority')),\n ('is_browsable', models.BooleanField(default=True, help_text='Whether this virtual folder is active or not.', verbose_name='Is browsable?')),\n ('description', pootle.core.markup.fields.MarkupField(verbose_name='Description', blank=True)),\n ('units', models.ManyToManyField(related_name='vfolders', to='pootle_store.Unit', db_index=True)),\n ],\n options={\n 'ordering': ['-priority', 'name'],\n },\n bases=(models.Model,),\n ),\n migrations.AlterUniqueTogether(\n name='virtualfolder',\n unique_together=set([('name', 'location')]),\n ),\n ]\n", "path": "pootle/apps/virtualfolder/migrations/0001_initial.py"}, {"content": "#!/usr/bin/env python\n# -*- coding: utf-8 -*-\n#\n# Copyright (C) Pootle contributors.\n#\n# This file is a part of the Pootle project. It is distributed under the GPL3\n# or later license. 
See the LICENSE file for a copy of the license and the\n# AUTHORS file for copyright and authorship information.\n\nimport logging\n\nfrom django.conf import settings\nfrom django.core.cache import cache\nfrom django.db import models\nfrom django.utils.safestring import mark_safe\n\nfrom .filters import apply_markup_filter\nfrom .widgets import MarkupTextarea\n\n\n__all__ = ('Markup', 'MarkupField',)\n\n\nlogger = logging.getLogger('pootle.markup')\n\n\n_rendered_cache_key = lambda obj, pk, field: '_%s_%s_%s_rendered' % \\\n (obj, pk, field)\n\n\nclass Markup(object):\n\n def __init__(self, instance, field_name, rendered_cache_key):\n self.instance = instance\n self.field_name = field_name\n self.cache_key = rendered_cache_key\n\n @property\n def raw(self):\n return self.instance.__dict__[self.field_name]\n\n @raw.setter\n def raw(self, value):\n setattr(self.instance, self.field_name, value)\n\n @property\n def rendered(self):\n rendered = cache.get(self.cache_key)\n\n if not rendered:\n logger.debug(u'Caching rendered output of %r', self.cache_key)\n rendered = apply_markup_filter(self.raw)\n cache.set(self.cache_key, rendered,\n settings.OBJECT_CACHE_TIMEOUT)\n\n return rendered\n\n def __unicode__(self):\n return mark_safe(self.rendered)\n\n def __nonzero__(self):\n return self.raw.strip() != '' and self.raw is not None\n\n\nclass MarkupDescriptor(object):\n\n def __init__(self, field):\n self.field = field\n\n def __get__(self, obj, owner):\n if obj is None:\n raise AttributeError('Can only be accessed via an instance.')\n\n markup = obj.__dict__[self.field.name]\n if markup is None:\n return None\n\n cache_key = _rendered_cache_key(obj.__class__.__name__,\n obj.pk,\n self.field.name)\n return Markup(obj, self.field.name, cache_key)\n\n def __set__(self, obj, value):\n if isinstance(value, Markup):\n obj.__dict__[self.field.name] = value.raw\n else:\n obj.__dict__[self.field.name] = value\n\n\nclass MarkupField(models.TextField):\n\n description = 'Text field 
supporting different markup formats.'\n\n def contribute_to_class(self, cls, name):\n super(MarkupField, self).contribute_to_class(cls, name)\n setattr(cls, self.name, MarkupDescriptor(self))\n\n def pre_save(self, model_instance, add):\n value = super(MarkupField, self).pre_save(model_instance, add)\n\n if not add:\n # Invalidate cache to force rendering upon next retrieval\n cache_key = _rendered_cache_key(model_instance.__class__.__name__,\n model_instance.pk,\n self.name)\n logger.debug('Invalidating cache for %r', cache_key)\n cache.delete(cache_key)\n\n return value.raw\n\n def get_prep_value(self, value):\n if isinstance(value, Markup):\n return value.raw\n\n return value\n\n def value_to_string(self, obj):\n value = self._get_val_from_obj(obj)\n return self.get_prep_value(value)\n\n def formfield(self, **kwargs):\n defaults = {'widget': MarkupTextarea}\n defaults.update(kwargs)\n return super(MarkupField, self).formfield(**defaults)\n\n def deconstruct(self):\n name, path, args, kwargs = super(MarkupField, self).deconstruct()\n kwargs.pop('help_text', None)\n return name, path, args, kwargs\n", "path": "pootle/core/markup/fields.py"}]} | 2,022 | 410 |

gh_patches_debug_4294 | rasdani/github-patches | git_diff | open-mmlab__mmpretrain-286 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[Feature Request] CPU Testing

Since CPU training is already supported in PR #219, what about also adding CPU testing?

Besides, it seems there are still some problems with the CPU training feature @wangruohui :

When we set `--device CPU`, the expected behavior is to train on the CPU regardless of whether the machine has GPUs. However, mmcls trains on the GPU whenever one exists, even if we set `--device CPU`.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mmcls/apis/train.py`

Content:

```
1 import random
2 import warnings
3
4 import numpy as np
5 import torch
6 from mmcv.parallel import MMDataParallel, MMDistributedDataParallel
7 from mmcv.runner import DistSamplerSeedHook, build_optimizer, build_runner
8
9 from mmcls.core import DistOptimizerHook
10 from mmcls.datasets import build_dataloader, build_dataset
11 from mmcls.utils import get_root_logger
12
13 # TODO import eval hooks from mmcv and delete them from mmcls
14 try:
15     from mmcv.runner.hooks import EvalHook, DistEvalHook
16 except ImportError:
17     warnings.warn('DeprecationWarning: EvalHook and DistEvalHook from mmcls '
18                   'will be deprecated.'
19                   'Please install mmcv through master branch.')
20     from mmcls.core import EvalHook, DistEvalHook
21
22 # TODO import optimizer hook from mmcv and delete them from mmcls
23 try:
24     from mmcv.runner import Fp16OptimizerHook
25 except ImportError:
26     warnings.warn('DeprecationWarning: FP16OptimizerHook from mmcls will be '
27                   'deprecated. Please install mmcv>=1.1.4.')
28     from mmcls.core import Fp16OptimizerHook
29
30
31 def set_random_seed(seed, deterministic=False):
32     """Set random seed.
33
34     Args:
35         seed (int): Seed to be used.
36         deterministic (bool): Whether to set the deterministic option for
37             CUDNN backend, i.e., set `torch.backends.cudnn.deterministic`
38             to True and `torch.backends.cudnn.benchmark` to False.
39             Default: False.
40     """
41     random.seed(seed)
42     np.random.seed(seed)
43     torch.manual_seed(seed)
44     torch.cuda.manual_seed_all(seed)
45     if deterministic:
46         torch.backends.cudnn.deterministic = True
47         torch.backends.cudnn.benchmark = False
48
49
50 def train_model(model,
51                 dataset,
52                 cfg,
53                 distributed=False,
54                 validate=False,
55                 timestamp=None,
56                 device='cuda',
57                 meta=None):
58     logger = get_root_logger(cfg.log_level)
59
60     # prepare data loaders
61     dataset = dataset if isinstance(dataset, (list, tuple)) else [dataset]
62
63     data_loaders = [
64         build_dataloader(
65             ds,
66             cfg.data.samples_per_gpu,
67             cfg.data.workers_per_gpu,
68             # cfg.gpus will be ignored if distributed
69             num_gpus=len(cfg.gpu_ids),
70             dist=distributed,
71             round_up=True,
72             seed=cfg.seed) for ds in dataset
73     ]
74
75     # put model on gpus
76     if distributed:
77         find_unused_parameters = cfg.get('find_unused_parameters', False)
78         # Sets the `find_unused_parameters` parameter in
79         # torch.nn.parallel.DistributedDataParallel
80         model = MMDistributedDataParallel(
81             model.cuda(),
82             device_ids=[torch.cuda.current_device()],
83             broadcast_buffers=False,
84             find_unused_parameters=find_unused_parameters)
85     else:
86         if device == 'cuda':
87             model = MMDataParallel(
88                 model.cuda(cfg.gpu_ids[0]), device_ids=cfg.gpu_ids)
89         elif device == 'cpu':
90             model = MMDataParallel(model.cpu())
91         else:
92             raise ValueError(F'unsupported device name {device}.')
93
94     # build runner
95     optimizer = build_optimizer(model, cfg.optimizer)
96
97     if cfg.get('runner') is None:
98         cfg.runner = {
99             'type': 'EpochBasedRunner',
100             'max_epochs': cfg.total_epochs
101         }
102         warnings.warn(
103             'config is now expected to have a `runner` section, '
104             'please set `runner` in your config.', UserWarning)
105
106     runner = build_runner(
107         cfg.runner,
108         default_args=dict(
109             model=model,
110             batch_processor=None,
111             optimizer=optimizer,
112             work_dir=cfg.work_dir,
113             logger=logger,
114             meta=meta))
115
116     # an ugly walkaround to make the .log and .log.json filenames the same
117     runner.timestamp = timestamp
118
119     # fp16 setting
120     fp16_cfg = cfg.get('fp16', None)
121     if fp16_cfg is not None:
122         optimizer_config = Fp16OptimizerHook(
123             **cfg.optimizer_config, **fp16_cfg, distributed=distributed)
124     elif distributed and 'type' not in cfg.optimizer_config:
125         optimizer_config = DistOptimizerHook(**cfg.optimizer_config)
126     else:
127         optimizer_config = cfg.optimizer_config
128
129     # register hooks
130     runner.register_training_hooks(cfg.lr_config, optimizer_config,
131                                    cfg.checkpoint_config, cfg.log_config,
132                                    cfg.get('momentum_config', None))
133     if distributed:
134         runner.register_hook(DistSamplerSeedHook())
135
136     # register eval hooks
137     if validate:
138         val_dataset = build_dataset(cfg.data.val, dict(test_mode=True))
139         val_dataloader = build_dataloader(
140             val_dataset,
141             samples_per_gpu=cfg.data.samples_per_gpu,
142             workers_per_gpu=cfg.data.workers_per_gpu,
143             dist=distributed,
144             shuffle=False,
145             round_up=True)
146         eval_cfg = cfg.get('evaluation', {})
147         eval_cfg['by_epoch'] = cfg.runner['type'] != 'IterBasedRunner'
148         eval_hook = DistEvalHook if distributed else EvalHook
149         runner.register_hook(eval_hook(val_dataloader, **eval_cfg))
150
151     if cfg.resume_from:
152         runner.resume(cfg.resume_from)
153     elif cfg.load_from:
154         runner.load_checkpoint(cfg.load_from)
155     runner.run(data_loaders, cfg.workflow)
156
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/mmcls/apis/train.py b/mmcls/apis/train.py
--- a/mmcls/apis/train.py
+++ b/mmcls/apis/train.py
@@ -87,7 +87,7 @@
             model = MMDataParallel(
                 model.cuda(cfg.gpu_ids[0]), device_ids=cfg.gpu_ids)
         elif device == 'cpu':
-            model = MMDataParallel(model.cpu())
+            model = model.cpu()
         else:
             raise ValueError(F'unsupported device name {device}.')