| problem_id (string, length 18-22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13-58) | prompt (string, length 1.1k-10.2k) | golden_diff (string, length 151-4.94k) | verification_info (string, length 582-21k) | num_tokens (int64, 271-2.05k) | num_tokens_diff (int64, 47-1.02k) |
|---|---|---|---|---|---|---|---|---|

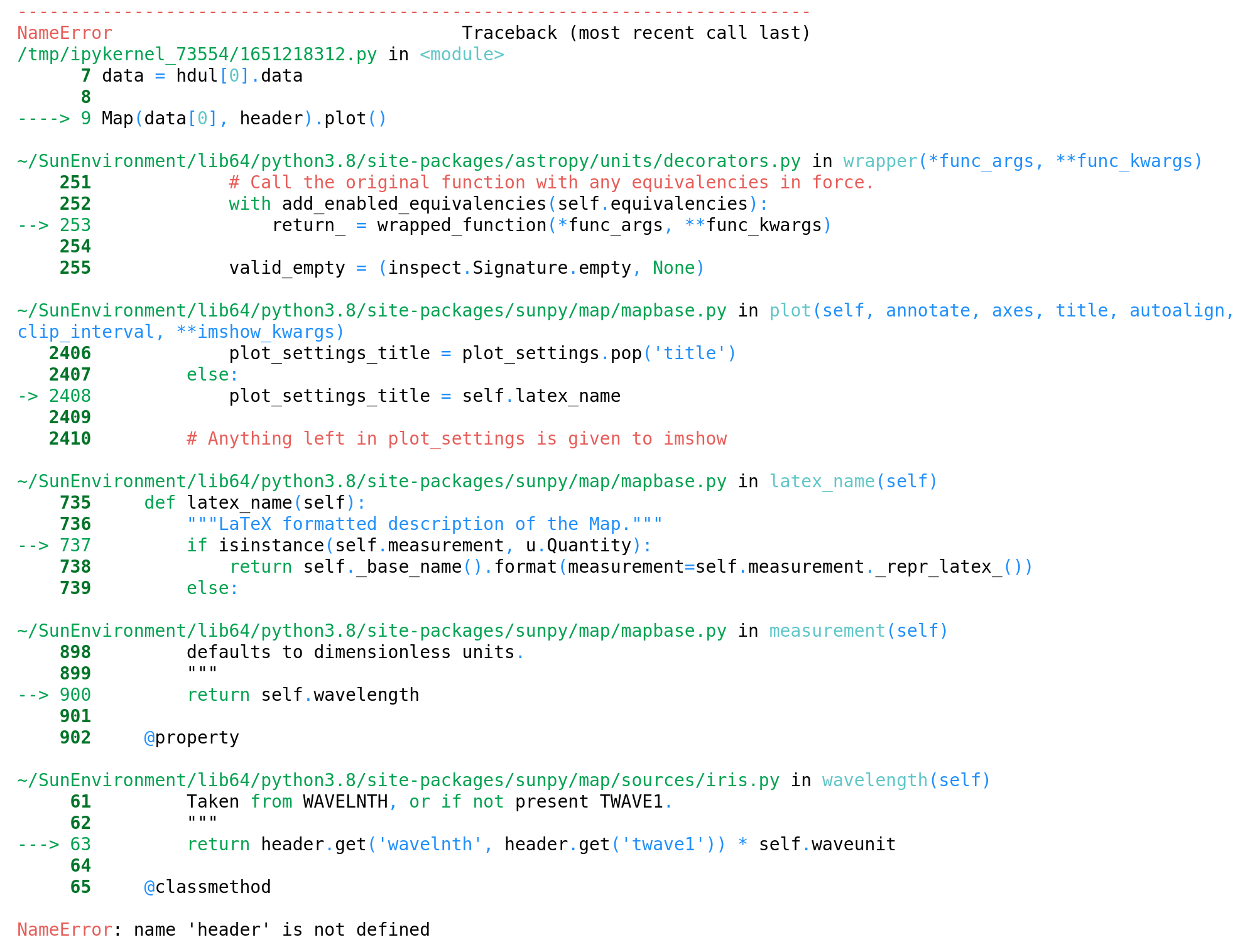

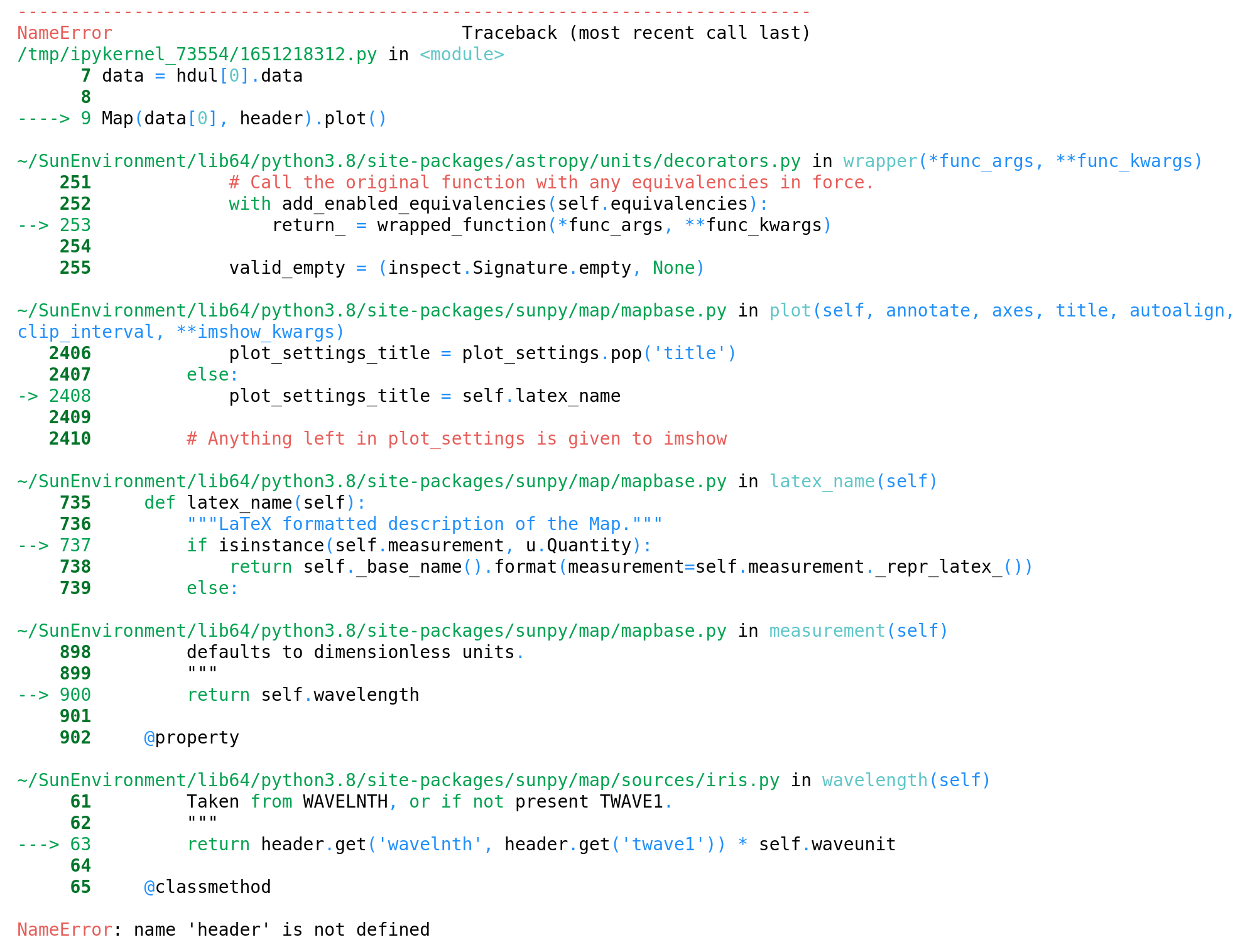

gh_patches_debug_7175 | rasdani/github-patches | git_diff | RedHatInsights__insights-core-2743 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Remove defunct entry_points

These scripts no longer exist. We should remove the entry_points.

* [insights.tools.generate_api_config](https://github.com/RedHatInsights/insights-core/blob/master/setup.py#L23)

* [insights.tools.perf](https://github.com/RedHatInsights/insights-core/blob/master/setup.py#L24)

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `setup.py`

Content:

```

1 import os

2 import sys

3 from setuptools import setup, find_packages

4

5 __here__ = os.path.dirname(os.path.abspath(__file__))

6

7 package_info = dict.fromkeys(["RELEASE", "COMMIT", "VERSION", "NAME"])

8

9 for name in package_info:

10 with open(os.path.join(__here__, "insights", name)) as f:

11 package_info[name] = f.read().strip()

12

13 entry_points = {

14 'console_scripts': [

15 'insights-collect = insights.collect:main',

16 'insights-run = insights:main',

17 'insights = insights.command_parser:main',

18 'insights-cat = insights.tools.cat:main',

19 'insights-dupkeycheck = insights.tools.dupkeycheck:main',

20 'insights-inspect = insights.tools.insights_inspect:main',

21 'insights-info = insights.tools.query:main',

22 'insights-ocpshell= insights.ocpshell:main',

23 'gen_api = insights.tools.generate_api_config:main',

24 'insights-perf = insights.tools.perf:main',

25 'client = insights.client:run',

26 'mangle = insights.util.mangle:main'

27 ]

28 }

29

30 runtime = set([

31 'six',

32 'requests',

33 'redis',

34 'cachecontrol',

35 'cachecontrol[redis]',

36 'cachecontrol[filecache]',

37 'defusedxml',

38 'lockfile',

39 'jinja2',

40 ])

41

42 if (sys.version_info < (2, 7)):

43 runtime.add('pyyaml>=3.10,<=3.13')

44 else:

45 runtime.add('pyyaml')

46

47

48 def maybe_require(pkg):

49 try:

50 __import__(pkg)

51 except ImportError:

52 runtime.add(pkg)

53

54

55 maybe_require("importlib")

56 maybe_require("argparse")

57

58

59 client = set([

60 'requests'

61 ])

62

63 develop = set([

64 'futures==3.0.5',

65 'wheel',

66 ])

67

68 docs = set([

69 'Sphinx<=3.0.2',

70 'nbsphinx',

71 'sphinx_rtd_theme',

72 'ipython',

73 'colorama',

74 'jinja2',

75 'Pygments'

76 ])

77

78 testing = set([

79 'coverage==4.3.4',

80 'pytest==3.0.6',

81 'pytest-cov==2.4.0',

82 'mock==2.0.0',

83 ])

84

85 cluster = set([

86 'ansible',

87 'pandas',

88 'colorama',

89 ])

90

91 openshift = set([

92 'openshift'

93 ])

94

95 linting = set([

96 'flake8==2.6.2',

97 ])

98

99 optional = set([

100 'python-cjson',

101 'python-logstash',

102 'python-statsd',

103 'watchdog',

104 ])

105

106 if __name__ == "__main__":

107 # allows for runtime modification of rpm name

108 name = os.environ.get("INSIGHTS_CORE_NAME", package_info["NAME"])

109

110 setup(

111 name=name,

112 version=package_info["VERSION"],

113 description="Insights Core is a data collection and analysis framework",

114 long_description=open("README.rst").read(),

115 url="https://github.com/redhatinsights/insights-core",

116 author="Red Hat, Inc.",

117 author_email="[email protected]",

118 packages=find_packages(),

119 install_requires=list(runtime),

120 package_data={'': ['LICENSE']},

121 license='Apache 2.0',

122 extras_require={

123 'develop': list(runtime | develop | client | docs | linting | testing | cluster),

124 'develop26': list(runtime | develop | client | linting | testing | cluster),

125 'client': list(runtime | client),

126 'client-develop': list(runtime | develop | client | linting | testing),

127 'cluster': list(runtime | cluster),

128 'openshift': list(runtime | openshift),

129 'optional': list(optional),

130 'docs': list(docs),

131 'linting': list(linting | client),

132 'testing': list(testing | client)

133 },

134 classifiers=[

135 'Development Status :: 5 - Production/Stable',

136 'Intended Audience :: Developers',

137 'Natural Language :: English',

138 'License :: OSI Approved :: Apache Software License',

139 'Programming Language :: Python',

140 'Programming Language :: Python :: 2.6',

141 'Programming Language :: Python :: 2.7',

142 'Programming Language :: Python :: 3.3',

143 'Programming Language :: Python :: 3.4',

144 'Programming Language :: Python :: 3.5',

145 'Programming Language :: Python :: 3.6'

146 ],

147 entry_points=entry_points,

148 include_package_data=True

149 )

150

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -20,8 +20,6 @@

'insights-inspect = insights.tools.insights_inspect:main',

'insights-info = insights.tools.query:main',

'insights-ocpshell= insights.ocpshell:main',

- 'gen_api = insights.tools.generate_api_config:main',

- 'insights-perf = insights.tools.perf:main',

'client = insights.client:run',

'mangle = insights.util.mangle:main'

]

| {"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -20,8 +20,6 @@\n 'insights-inspect = insights.tools.insights_inspect:main',\n 'insights-info = insights.tools.query:main',\n 'insights-ocpshell= insights.ocpshell:main',\n- 'gen_api = insights.tools.generate_api_config:main',\n- 'insights-perf = insights.tools.perf:main',\n 'client = insights.client:run',\n 'mangle = insights.util.mangle:main'\n ]\n", "issue": "Remove defunct entry_points\nThese scripts no longer exist. We should remove the entry_points.\r\n\r\n* [insights.tools.generate_api_config](https://github.com/RedHatInsights/insights-core/blob/master/setup.py#L23)\r\n* [insights.tools.perf](https://github.com/RedHatInsights/insights-core/blob/master/setup.py#L24)\n", "before_files": [{"content": "import os\nimport sys\nfrom setuptools import setup, find_packages\n\n__here__ = os.path.dirname(os.path.abspath(__file__))\n\npackage_info = dict.fromkeys([\"RELEASE\", \"COMMIT\", \"VERSION\", \"NAME\"])\n\nfor name in package_info:\n with open(os.path.join(__here__, \"insights\", name)) as f:\n package_info[name] = f.read().strip()\n\nentry_points = {\n 'console_scripts': [\n 'insights-collect = insights.collect:main',\n 'insights-run = insights:main',\n 'insights = insights.command_parser:main',\n 'insights-cat = insights.tools.cat:main',\n 'insights-dupkeycheck = insights.tools.dupkeycheck:main',\n 'insights-inspect = insights.tools.insights_inspect:main',\n 'insights-info = insights.tools.query:main',\n 'insights-ocpshell= insights.ocpshell:main',\n 'gen_api = insights.tools.generate_api_config:main',\n 'insights-perf = insights.tools.perf:main',\n 'client = insights.client:run',\n 'mangle = insights.util.mangle:main'\n ]\n}\n\nruntime = set([\n 'six',\n 'requests',\n 'redis',\n 'cachecontrol',\n 'cachecontrol[redis]',\n 'cachecontrol[filecache]',\n 'defusedxml',\n 'lockfile',\n 'jinja2',\n])\n\nif (sys.version_info < (2, 7)):\n runtime.add('pyyaml>=3.10,<=3.13')\nelse:\n 
runtime.add('pyyaml')\n\n\ndef maybe_require(pkg):\n try:\n __import__(pkg)\n except ImportError:\n runtime.add(pkg)\n\n\nmaybe_require(\"importlib\")\nmaybe_require(\"argparse\")\n\n\nclient = set([\n 'requests'\n])\n\ndevelop = set([\n 'futures==3.0.5',\n 'wheel',\n])\n\ndocs = set([\n 'Sphinx<=3.0.2',\n 'nbsphinx',\n 'sphinx_rtd_theme',\n 'ipython',\n 'colorama',\n 'jinja2',\n 'Pygments'\n])\n\ntesting = set([\n 'coverage==4.3.4',\n 'pytest==3.0.6',\n 'pytest-cov==2.4.0',\n 'mock==2.0.0',\n])\n\ncluster = set([\n 'ansible',\n 'pandas',\n 'colorama',\n])\n\nopenshift = set([\n 'openshift'\n])\n\nlinting = set([\n 'flake8==2.6.2',\n])\n\noptional = set([\n 'python-cjson',\n 'python-logstash',\n 'python-statsd',\n 'watchdog',\n])\n\nif __name__ == \"__main__\":\n # allows for runtime modification of rpm name\n name = os.environ.get(\"INSIGHTS_CORE_NAME\", package_info[\"NAME\"])\n\n setup(\n name=name,\n version=package_info[\"VERSION\"],\n description=\"Insights Core is a data collection and analysis framework\",\n long_description=open(\"README.rst\").read(),\n url=\"https://github.com/redhatinsights/insights-core\",\n author=\"Red Hat, Inc.\",\n author_email=\"[email protected]\",\n packages=find_packages(),\n install_requires=list(runtime),\n package_data={'': ['LICENSE']},\n license='Apache 2.0',\n extras_require={\n 'develop': list(runtime | develop | client | docs | linting | testing | cluster),\n 'develop26': list(runtime | develop | client | linting | testing | cluster),\n 'client': list(runtime | client),\n 'client-develop': list(runtime | develop | client | linting | testing),\n 'cluster': list(runtime | cluster),\n 'openshift': list(runtime | openshift),\n 'optional': list(optional),\n 'docs': list(docs),\n 'linting': list(linting | client),\n 'testing': list(testing | client)\n },\n classifiers=[\n 'Development Status :: 5 - Production/Stable',\n 'Intended Audience :: Developers',\n 'Natural Language :: English',\n 'License :: OSI Approved :: Apache 
Software License',\n 'Programming Language :: Python',\n 'Programming Language :: Python :: 2.6',\n 'Programming Language :: Python :: 2.7',\n 'Programming Language :: Python :: 3.3',\n 'Programming Language :: Python :: 3.4',\n 'Programming Language :: Python :: 3.5',\n 'Programming Language :: Python :: 3.6'\n ],\n entry_points=entry_points,\n include_package_data=True\n )\n", "path": "setup.py"}], "after_files": [{"content": "import os\nimport sys\nfrom setuptools import setup, find_packages\n\n__here__ = os.path.dirname(os.path.abspath(__file__))\n\npackage_info = dict.fromkeys([\"RELEASE\", \"COMMIT\", \"VERSION\", \"NAME\"])\n\nfor name in package_info:\n with open(os.path.join(__here__, \"insights\", name)) as f:\n package_info[name] = f.read().strip()\n\nentry_points = {\n 'console_scripts': [\n 'insights-collect = insights.collect:main',\n 'insights-run = insights:main',\n 'insights = insights.command_parser:main',\n 'insights-cat = insights.tools.cat:main',\n 'insights-dupkeycheck = insights.tools.dupkeycheck:main',\n 'insights-inspect = insights.tools.insights_inspect:main',\n 'insights-info = insights.tools.query:main',\n 'insights-ocpshell= insights.ocpshell:main',\n 'client = insights.client:run',\n 'mangle = insights.util.mangle:main'\n ]\n}\n\nruntime = set([\n 'six',\n 'requests',\n 'redis',\n 'cachecontrol',\n 'cachecontrol[redis]',\n 'cachecontrol[filecache]',\n 'defusedxml',\n 'lockfile',\n 'jinja2',\n])\n\nif (sys.version_info < (2, 7)):\n runtime.add('pyyaml>=3.10,<=3.13')\nelse:\n runtime.add('pyyaml')\n\n\ndef maybe_require(pkg):\n try:\n __import__(pkg)\n except ImportError:\n runtime.add(pkg)\n\n\nmaybe_require(\"importlib\")\nmaybe_require(\"argparse\")\n\n\nclient = set([\n 'requests'\n])\n\ndevelop = set([\n 'futures==3.0.5',\n 'wheel',\n])\n\ndocs = set([\n 'Sphinx<=3.0.2',\n 'nbsphinx',\n 'sphinx_rtd_theme',\n 'ipython',\n 'colorama',\n 'jinja2',\n 'Pygments'\n])\n\ntesting = set([\n 'coverage==4.3.4',\n 'pytest==3.0.6',\n 
'pytest-cov==2.4.0',\n 'mock==2.0.0',\n])\n\ncluster = set([\n 'ansible',\n 'pandas',\n 'colorama',\n])\n\nopenshift = set([\n 'openshift'\n])\n\nlinting = set([\n 'flake8==2.6.2',\n])\n\noptional = set([\n 'python-cjson',\n 'python-logstash',\n 'python-statsd',\n 'watchdog',\n])\n\nif __name__ == \"__main__\":\n # allows for runtime modification of rpm name\n name = os.environ.get(\"INSIGHTS_CORE_NAME\", package_info[\"NAME\"])\n\n setup(\n name=name,\n version=package_info[\"VERSION\"],\n description=\"Insights Core is a data collection and analysis framework\",\n long_description=open(\"README.rst\").read(),\n url=\"https://github.com/redhatinsights/insights-core\",\n author=\"Red Hat, Inc.\",\n author_email=\"[email protected]\",\n packages=find_packages(),\n install_requires=list(runtime),\n package_data={'': ['LICENSE']},\n license='Apache 2.0',\n extras_require={\n 'develop': list(runtime | develop | client | docs | linting | testing | cluster),\n 'develop26': list(runtime | develop | client | linting | testing | cluster),\n 'client': list(runtime | client),\n 'client-develop': list(runtime | develop | client | linting | testing),\n 'cluster': list(runtime | cluster),\n 'openshift': list(runtime | openshift),\n 'optional': list(optional),\n 'docs': list(docs),\n 'linting': list(linting | client),\n 'testing': list(testing | client)\n },\n classifiers=[\n 'Development Status :: 5 - Production/Stable',\n 'Intended Audience :: Developers',\n 'Natural Language :: English',\n 'License :: OSI Approved :: Apache Software License',\n 'Programming Language :: Python',\n 'Programming Language :: Python :: 2.6',\n 'Programming Language :: Python :: 2.7',\n 'Programming Language :: Python :: 3.3',\n 'Programming Language :: Python :: 3.4',\n 'Programming Language :: Python :: 3.5',\n 'Programming Language :: Python :: 3.6'\n ],\n entry_points=entry_points,\n include_package_data=True\n )\n", "path": "setup.py"}]} | 1,680 | 128 |

gh_patches_debug_26911 | rasdani/github-patches | git_diff | pyca__cryptography-1424 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Need zope.interface.verify.verifyObject for ABCs.

Every object that claims to provide some interface should then use it in a unit test.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `cryptography/utils.py`

Content:

```

1 # Licensed under the Apache License, Version 2.0 (the "License");

2 # you may not use this file except in compliance with the License.

3 # You may obtain a copy of the License at

4 #

5 # http://www.apache.org/licenses/LICENSE-2.0

6 #

7 # Unless required by applicable law or agreed to in writing, software

8 # distributed under the License is distributed on an "AS IS" BASIS,

9 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

10 # implied.

11 # See the License for the specific language governing permissions and

12 # limitations under the License.

13

14 from __future__ import absolute_import, division, print_function

15

16 import sys

17

18

19 DeprecatedIn06 = DeprecationWarning

20

21

22 def register_interface(iface):

23 def register_decorator(klass):

24 iface.register(klass)

25 return klass

26 return register_decorator

27

28

29 def read_only_property(name):

30 return property(lambda self: getattr(self, name))

31

32

33 def bit_length(x):

34 if sys.version_info >= (2, 7):

35 return x.bit_length()

36 else:

37 return len(bin(x)) - (2 + (x <= 0))

38

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/cryptography/utils.py b/cryptography/utils.py

--- a/cryptography/utils.py

+++ b/cryptography/utils.py

@@ -13,6 +13,8 @@

from __future__ import absolute_import, division, print_function

+import abc

+import inspect

import sys

@@ -21,6 +23,7 @@

def register_interface(iface):

def register_decorator(klass):

+ verify_interface(iface, klass)

iface.register(klass)

return klass

return register_decorator

@@ -30,6 +33,30 @@

return property(lambda self: getattr(self, name))

+class InterfaceNotImplemented(Exception):

+ pass

+

+

+def verify_interface(iface, klass):

+ for method in iface.__abstractmethods__:

+ if not hasattr(klass, method):

+ raise InterfaceNotImplemented(

+ "{0} is missing a {1!r} method".format(klass, method)

+ )

+ if isinstance(getattr(iface, method), abc.abstractproperty):

+ # Can't properly verify these yet.

+ continue

+ spec = inspect.getargspec(getattr(iface, method))

+ actual = inspect.getargspec(getattr(klass, method))

+ if spec != actual:

+ raise InterfaceNotImplemented(

+ "{0}.{1}'s signature differs from the expected. Expected: "

+ "{2!r}. Received: {3!r}".format(

+ klass, method, spec, actual

+ )

+ )

+

+

def bit_length(x):

if sys.version_info >= (2, 7):

return x.bit_length()

| {"golden_diff": "diff --git a/cryptography/utils.py b/cryptography/utils.py\n--- a/cryptography/utils.py\n+++ b/cryptography/utils.py\n@@ -13,6 +13,8 @@\n \n from __future__ import absolute_import, division, print_function\n \n+import abc\n+import inspect\n import sys\n \n \n@@ -21,6 +23,7 @@\n \n def register_interface(iface):\n def register_decorator(klass):\n+ verify_interface(iface, klass)\n iface.register(klass)\n return klass\n return register_decorator\n@@ -30,6 +33,30 @@\n return property(lambda self: getattr(self, name))\n \n \n+class InterfaceNotImplemented(Exception):\n+ pass\n+\n+\n+def verify_interface(iface, klass):\n+ for method in iface.__abstractmethods__:\n+ if not hasattr(klass, method):\n+ raise InterfaceNotImplemented(\n+ \"{0} is missing a {1!r} method\".format(klass, method)\n+ )\n+ if isinstance(getattr(iface, method), abc.abstractproperty):\n+ # Can't properly verify these yet.\n+ continue\n+ spec = inspect.getargspec(getattr(iface, method))\n+ actual = inspect.getargspec(getattr(klass, method))\n+ if spec != actual:\n+ raise InterfaceNotImplemented(\n+ \"{0}.{1}'s signature differs from the expected. Expected: \"\n+ \"{2!r}. 
Received: {3!r}\".format(\n+ klass, method, spec, actual\n+ )\n+ )\n+\n+\n def bit_length(x):\n if sys.version_info >= (2, 7):\n return x.bit_length()\n", "issue": "Need zope.interface.verify.verifyObject for ABCs.\nEvery object that claims to provide some interface should then use it in a unit test.\n\n", "before_files": [{"content": "# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or\n# implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nfrom __future__ import absolute_import, division, print_function\n\nimport sys\n\n\nDeprecatedIn06 = DeprecationWarning\n\n\ndef register_interface(iface):\n def register_decorator(klass):\n iface.register(klass)\n return klass\n return register_decorator\n\n\ndef read_only_property(name):\n return property(lambda self: getattr(self, name))\n\n\ndef bit_length(x):\n if sys.version_info >= (2, 7):\n return x.bit_length()\n else:\n return len(bin(x)) - (2 + (x <= 0))\n", "path": "cryptography/utils.py"}], "after_files": [{"content": "# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or\n# implied.\n# See the License for the specific language governing permissions and\n# limitations under the 
License.\n\nfrom __future__ import absolute_import, division, print_function\n\nimport abc\nimport inspect\nimport sys\n\n\nDeprecatedIn06 = DeprecationWarning\n\n\ndef register_interface(iface):\n def register_decorator(klass):\n verify_interface(iface, klass)\n iface.register(klass)\n return klass\n return register_decorator\n\n\ndef read_only_property(name):\n return property(lambda self: getattr(self, name))\n\n\nclass InterfaceNotImplemented(Exception):\n pass\n\n\ndef verify_interface(iface, klass):\n for method in iface.__abstractmethods__:\n if not hasattr(klass, method):\n raise InterfaceNotImplemented(\n \"{0} is missing a {1!r} method\".format(klass, method)\n )\n if isinstance(getattr(iface, method), abc.abstractproperty):\n # Can't properly verify these yet.\n continue\n spec = inspect.getargspec(getattr(iface, method))\n actual = inspect.getargspec(getattr(klass, method))\n if spec != actual:\n raise InterfaceNotImplemented(\n \"{0}.{1}'s signature differs from the expected. Expected: \"\n \"{2!r}. Received: {3!r}\".format(\n klass, method, spec, actual\n )\n )\n\n\ndef bit_length(x):\n if sys.version_info >= (2, 7):\n return x.bit_length()\n else:\n return len(bin(x)) - (2 + (x <= 0))\n", "path": "cryptography/utils.py"}]} | 595 | 357 |

gh_patches_debug_3588 | rasdani/github-patches | git_diff | akvo__akvo-rsr-3753 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Show only relevant updates in typeahead on Akvo pages

Currently, all updates can be searched for on partner site updates typeahead.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `akvo/rest/views/typeahead.py`

Content:

```

1 # -*- coding: utf-8 -*-

2

3 """Akvo RSR is covered by the GNU Affero General Public License.

4 See more details in the license.txt file located at the root folder of the

5 Akvo RSR module. For additional details on the GNU license please

6 see < http://www.gnu.org/licenses/agpl.html >.

7 """

8

9 from django.conf import settings

10 from rest_framework.decorators import api_view

11 from rest_framework.response import Response

12

13 from akvo.codelists.models import Country, Version

14 from akvo.rest.serializers import (TypeaheadCountrySerializer,

15 TypeaheadOrganisationSerializer,

16 TypeaheadProjectSerializer,

17 TypeaheadProjectUpdateSerializer,

18 TypeaheadKeywordSerializer,)

19 from akvo.rsr.models import Organisation, Project, ProjectUpdate

20 from akvo.rsr.views.project import _project_directory_coll

21

22

23 def rejig(queryset, serializer):

24 """Rearrange & add queryset count to the response data."""

25 return {

26 'count': queryset.count(),

27 'results': serializer.data

28 }

29

30

31 @api_view(['GET'])

32 def typeahead_country(request):

33 iati_version = Version.objects.get(code=settings.IATI_VERSION)

34 countries = Country.objects.filter(version=iati_version)

35 return Response(

36 rejig(countries, TypeaheadCountrySerializer(countries, many=True))

37 )

38

39

40 @api_view(['GET'])

41 def typeahead_organisation(request):

42 page = request.rsr_page

43 if request.GET.get('partners', '0') == '1' and page:

44 organisations = page.partners()

45 else:

46 # Project editor - all organizations

47 organisations = Organisation.objects.all()

48

49 organisations = organisations.values('id', 'name', 'long_name')

50

51 return Response(

52 rejig(organisations, TypeaheadOrganisationSerializer(organisations,

53 many=True))

54 )

55

56

57 @api_view(['GET'])

58 def typeahead_user_organisations(request):

59 user = request.user

60 is_admin = user.is_active and (user.is_superuser or user.is_admin)

61 organisations = user.approved_organisations() if not is_admin else Organisation.objects.all()

62 return Response(

63 rejig(organisations, TypeaheadOrganisationSerializer(organisations,

64 many=True))

65 )

66

67

68 @api_view(['GET'])

69 def typeahead_keyword(request):

70 page = request.rsr_page

71 keywords = page.keywords.all() if page else None

72 if keywords:

73 return Response(

74 rejig(keywords, TypeaheadKeywordSerializer(keywords, many=True))

75 )

76 # No keywords on rsr.akvo.org

77 return Response({})

78

79

80 @api_view(['GET'])

81 def typeahead_project(request):

82 """Return the typeaheads for projects.

83

84 Without any query parameters, it returns the info for all the projects in

85 the current context -- changes depending on whether we are on a partner

86 site, or the RSR site.

87

88 If a published query parameter is passed, only projects that have been

89 published are returned.

90

91 NOTE: The unauthenticated user gets information about all the projects when

92 using this API endpoint. More permission checking will need to be added,

93 if the amount of data being returned is changed.

94

95 """

96 if request.GET.get('published', '0') == '0':

97 # Project editor - organization projects, all

98 page = request.rsr_page

99 projects = page.all_projects() if page else Project.objects.all()

100 else:

101 # Search bar - organization projects, published

102 projects = _project_directory_coll(request)

103

104 projects = projects.exclude(title='')

105 return Response(

106 rejig(projects, TypeaheadProjectSerializer(projects, many=True))

107 )

108

109

110 @api_view(['GET'])

111 def typeahead_user_projects(request):

112 user = request.user

113 is_admin = user.is_active and (user.is_superuser or user.is_admin)

114 if is_admin:

115 projects = Project.objects.all()

116 else:

117 projects = user.approved_organisations().all_projects()

118 projects = projects.exclude(title='')

119 return Response(

120 rejig(projects, TypeaheadProjectSerializer(projects, many=True))

121 )

122

123

124 @api_view(['GET'])

125 def typeahead_impact_projects(request):

126 user = request.user

127 projects = Project.objects.all() if user.is_admin or user.is_superuser else user.my_projects()

128 projects = projects.published().filter(is_impact_project=True).order_by('title')

129

130 return Response(

131 rejig(projects, TypeaheadProjectSerializer(projects, many=True))

132 )

133

134

135 @api_view(['GET'])

136 def typeahead_projectupdate(request):

137 updates = ProjectUpdate.objects.all()

138 return Response(

139 rejig(updates, TypeaheadProjectUpdateSerializer(updates, many=True))

140 )

141

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/akvo/rest/views/typeahead.py b/akvo/rest/views/typeahead.py

--- a/akvo/rest/views/typeahead.py

+++ b/akvo/rest/views/typeahead.py

@@ -134,7 +134,8 @@

@api_view(['GET'])

def typeahead_projectupdate(request):

- updates = ProjectUpdate.objects.all()

+ page = request.rsr_page

+ updates = page.updates() if page else ProjectUpdate.objects.all()

return Response(

rejig(updates, TypeaheadProjectUpdateSerializer(updates, many=True))

)

| {"golden_diff": "diff --git a/akvo/rest/views/typeahead.py b/akvo/rest/views/typeahead.py\n--- a/akvo/rest/views/typeahead.py\n+++ b/akvo/rest/views/typeahead.py\n@@ -134,7 +134,8 @@\n \n @api_view(['GET'])\n def typeahead_projectupdate(request):\n- updates = ProjectUpdate.objects.all()\n+ page = request.rsr_page\n+ updates = page.updates() if page else ProjectUpdate.objects.all()\n return Response(\n rejig(updates, TypeaheadProjectUpdateSerializer(updates, many=True))\n )\n", "issue": "Show only relevant updates in typeahead on Akvo pages\nCurrently, all updates can be searched for on partner site updates typeahead. \n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n\n\"\"\"Akvo RSR is covered by the GNU Affero General Public License.\nSee more details in the license.txt file located at the root folder of the\nAkvo RSR module. For additional details on the GNU license please\nsee < http://www.gnu.org/licenses/agpl.html >.\n\"\"\"\n\nfrom django.conf import settings\nfrom rest_framework.decorators import api_view\nfrom rest_framework.response import Response\n\nfrom akvo.codelists.models import Country, Version\nfrom akvo.rest.serializers import (TypeaheadCountrySerializer,\n TypeaheadOrganisationSerializer,\n TypeaheadProjectSerializer,\n TypeaheadProjectUpdateSerializer,\n TypeaheadKeywordSerializer,)\nfrom akvo.rsr.models import Organisation, Project, ProjectUpdate\nfrom akvo.rsr.views.project import _project_directory_coll\n\n\ndef rejig(queryset, serializer):\n \"\"\"Rearrange & add queryset count to the response data.\"\"\"\n return {\n 'count': queryset.count(),\n 'results': serializer.data\n }\n\n\n@api_view(['GET'])\ndef typeahead_country(request):\n iati_version = Version.objects.get(code=settings.IATI_VERSION)\n countries = Country.objects.filter(version=iati_version)\n return Response(\n rejig(countries, TypeaheadCountrySerializer(countries, many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_organisation(request):\n page = 
request.rsr_page\n if request.GET.get('partners', '0') == '1' and page:\n organisations = page.partners()\n else:\n # Project editor - all organizations\n organisations = Organisation.objects.all()\n\n organisations = organisations.values('id', 'name', 'long_name')\n\n return Response(\n rejig(organisations, TypeaheadOrganisationSerializer(organisations,\n many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_user_organisations(request):\n user = request.user\n is_admin = user.is_active and (user.is_superuser or user.is_admin)\n organisations = user.approved_organisations() if not is_admin else Organisation.objects.all()\n return Response(\n rejig(organisations, TypeaheadOrganisationSerializer(organisations,\n many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_keyword(request):\n page = request.rsr_page\n keywords = page.keywords.all() if page else None\n if keywords:\n return Response(\n rejig(keywords, TypeaheadKeywordSerializer(keywords, many=True))\n )\n # No keywords on rsr.akvo.org\n return Response({})\n\n\n@api_view(['GET'])\ndef typeahead_project(request):\n \"\"\"Return the typeaheads for projects.\n\n Without any query parameters, it returns the info for all the projects in\n the current context -- changes depending on whether we are on a partner\n site, or the RSR site.\n\n If a published query parameter is passed, only projects that have been\n published are returned.\n\n NOTE: The unauthenticated user gets information about all the projects when\n using this API endpoint. 
More permission checking will need to be added,\n if the amount of data being returned is changed.\n\n \"\"\"\n if request.GET.get('published', '0') == '0':\n # Project editor - organization projects, all\n page = request.rsr_page\n projects = page.all_projects() if page else Project.objects.all()\n else:\n # Search bar - organization projects, published\n projects = _project_directory_coll(request)\n\n projects = projects.exclude(title='')\n return Response(\n rejig(projects, TypeaheadProjectSerializer(projects, many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_user_projects(request):\n user = request.user\n is_admin = user.is_active and (user.is_superuser or user.is_admin)\n if is_admin:\n projects = Project.objects.all()\n else:\n projects = user.approved_organisations().all_projects()\n projects = projects.exclude(title='')\n return Response(\n rejig(projects, TypeaheadProjectSerializer(projects, many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_impact_projects(request):\n user = request.user\n projects = Project.objects.all() if user.is_admin or user.is_superuser else user.my_projects()\n projects = projects.published().filter(is_impact_project=True).order_by('title')\n\n return Response(\n rejig(projects, TypeaheadProjectSerializer(projects, many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_projectupdate(request):\n updates = ProjectUpdate.objects.all()\n return Response(\n rejig(updates, TypeaheadProjectUpdateSerializer(updates, many=True))\n )\n", "path": "akvo/rest/views/typeahead.py"}], "after_files": [{"content": "# -*- coding: utf-8 -*-\n\n\"\"\"Akvo RSR is covered by the GNU Affero General Public License.\nSee more details in the license.txt file located at the root folder of the\nAkvo RSR module. 
For additional details on the GNU license please\nsee < http://www.gnu.org/licenses/agpl.html >.\n\"\"\"\n\nfrom django.conf import settings\nfrom rest_framework.decorators import api_view\nfrom rest_framework.response import Response\n\nfrom akvo.codelists.models import Country, Version\nfrom akvo.rest.serializers import (TypeaheadCountrySerializer,\n TypeaheadOrganisationSerializer,\n TypeaheadProjectSerializer,\n TypeaheadProjectUpdateSerializer,\n TypeaheadKeywordSerializer,)\nfrom akvo.rsr.models import Organisation, Project, ProjectUpdate\nfrom akvo.rsr.views.project import _project_directory_coll\n\n\ndef rejig(queryset, serializer):\n \"\"\"Rearrange & add queryset count to the response data.\"\"\"\n return {\n 'count': queryset.count(),\n 'results': serializer.data\n }\n\n\n@api_view(['GET'])\ndef typeahead_country(request):\n iati_version = Version.objects.get(code=settings.IATI_VERSION)\n countries = Country.objects.filter(version=iati_version)\n return Response(\n rejig(countries, TypeaheadCountrySerializer(countries, many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_organisation(request):\n page = request.rsr_page\n if request.GET.get('partners', '0') == '1' and page:\n organisations = page.partners()\n else:\n # Project editor - all organizations\n organisations = Organisation.objects.all()\n\n organisations = organisations.values('id', 'name', 'long_name')\n\n return Response(\n rejig(organisations, TypeaheadOrganisationSerializer(organisations,\n many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_user_organisations(request):\n user = request.user\n is_admin = user.is_active and (user.is_superuser or user.is_admin)\n organisations = user.approved_organisations() if not is_admin else Organisation.objects.all()\n return Response(\n rejig(organisations, TypeaheadOrganisationSerializer(organisations,\n many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_keyword(request):\n page = request.rsr_page\n keywords = page.keywords.all() if page else 
None\n if keywords:\n return Response(\n rejig(keywords, TypeaheadKeywordSerializer(keywords, many=True))\n )\n # No keywords on rsr.akvo.org\n return Response({})\n\n\n@api_view(['GET'])\ndef typeahead_project(request):\n \"\"\"Return the typeaheads for projects.\n\n Without any query parameters, it returns the info for all the projects in\n the current context -- changes depending on whether we are on a partner\n site, or the RSR site.\n\n If a published query parameter is passed, only projects that have been\n published are returned.\n\n NOTE: The unauthenticated user gets information about all the projects when\n using this API endpoint. More permission checking will need to be added,\n if the amount of data being returned is changed.\n\n \"\"\"\n if request.GET.get('published', '0') == '0':\n # Project editor - organization projects, all\n page = request.rsr_page\n projects = page.all_projects() if page else Project.objects.all()\n else:\n # Search bar - organization projects, published\n projects = _project_directory_coll(request)\n\n projects = projects.exclude(title='')\n return Response(\n rejig(projects, TypeaheadProjectSerializer(projects, many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_user_projects(request):\n user = request.user\n is_admin = user.is_active and (user.is_superuser or user.is_admin)\n if is_admin:\n projects = Project.objects.all()\n else:\n projects = user.approved_organisations().all_projects()\n projects = projects.exclude(title='')\n return Response(\n rejig(projects, TypeaheadProjectSerializer(projects, many=True))\n )\n\n\n@api_view(['GET'])\ndef typeahead_impact_projects(request):\n user = request.user\n projects = Project.objects.all() if user.is_admin or user.is_superuser else user.my_projects()\n projects = projects.published().filter(is_impact_project=True).order_by('title')\n\n return Response(\n rejig(projects, TypeaheadProjectSerializer(projects, many=True))\n )\n\n\n@api_view(['GET'])\ndef 
typeahead_projectupdate(request):\n page = request.rsr_page\n updates = page.updates() if page else ProjectUpdate.objects.all()\n return Response(\n rejig(updates, TypeaheadProjectUpdateSerializer(updates, many=True))\n )\n", "path": "akvo/rest/views/typeahead.py"}]} | 1,605 | 129 |

gh_patches_debug_31114 | rasdani/github-patches | git_diff | bridgecrewio__checkov-2154 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

CKV_AWS_174 incorrect reporting

CKV_AWS_174 is being triggered in our Terraform code even though we have the viewer certificate set to use TLS v1.2. A snippet of our code:

viewer_certificate {
  acm_certificate_arn = aws_acm_certificate.cert.arn
  ssl_support_method = "sni-only"
  minimum_protocol_version = "TLSv1.2_2019"
}

Steps to reproduce the behavior:

Running checkov on our terraform code

**Expected behavior**

This check should be passed

**Additional context**

It looks to me like the issue is in the code between lines 17 and 19. Based on the Terraform documentation and the if statements, I don't think the check would ever pass when using an ACM certificate.

https://github.com/bridgecrewio/checkov/blob/master/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `checkov/terraform/checks/resource/aws/CloudfrontTLS12.py`

Content:

```

1 from checkov.common.models.enums import CheckCategories, CheckResult
2 from checkov.terraform.checks.resource.base_resource_value_check import BaseResourceValueCheck
3
4
5 class CloudFrontTLS12(BaseResourceValueCheck):
6     def __init__(self):
7         name = "Verify CloudFront Distribution Viewer Certificate is using TLS v1.2"
8         id = "CKV_AWS_174"
9         supported_resources = ["aws_cloudfront_distribution"]
10         categories = [CheckCategories.ENCRYPTION]
11         super().__init__(name=name, id=id, categories=categories, supported_resources=supported_resources)
12
13     def scan_resource_conf(self, conf):
14         if "viewer_certificate" in conf.keys():
15             # check if cloudfront_default_certificate is true then this could use less than tls 1.2
16             viewer_certificate = conf["viewer_certificate"][0]
17             if 'cloudfront_default_certificate' in viewer_certificate:
18                 #is not using the default certificate
19                 if viewer_certificate["cloudfront_default_certificate"] is not True:
20                     #these protocol versions
21                     if "minimum_protocol_version" in viewer_certificate:
22                         protocol=viewer_certificate["minimum_protocol_version"][0]
23                         if protocol in ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']:
24                             return CheckResult.PASSED
25
26         #No cert specified so using default which can be less that tls 1.2
27         return CheckResult.FAILED
28
29     def get_inspected_key(self):
30
31         return "viewer_certificate/[0]/minimum_protocol_version"
32
33     def get_expected_values(self):
34         return ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']
35
36
37 check = CloudFrontTLS12()

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@


 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@


 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

| diff --git a/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py b/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py

--- a/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py
+++ b/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py
@@ -12,18 +12,18 @@

     def scan_resource_conf(self, conf):
         if "viewer_certificate" in conf.keys():
-            # check if cloudfront_default_certificate is true then this could use less than tls 1.2
             viewer_certificate = conf["viewer_certificate"][0]
-            if 'cloudfront_default_certificate' in viewer_certificate:
-                #is not using the default certificate
-                if viewer_certificate["cloudfront_default_certificate"] is not True:
-                    #these protocol versions
-                    if "minimum_protocol_version" in viewer_certificate:
-                        protocol=viewer_certificate["minimum_protocol_version"][0]
-                        if protocol in ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']:
-                            return CheckResult.PASSED
-
-        #No cert specified so using default which can be less that tls 1.2
+            # check if cloudfront_default_certificate is true then this could use less than tls 1.2
+            if ("cloudfront_default_certificate" in viewer_certificate and viewer_certificate
+                ["cloudfront_default_certificate"][0] is not True) or (
+                    'minimum_protocol_version' in viewer_certificate):
+                # is not using the default certificate
+                if 'minimum_protocol_version' in viewer_certificate:
+                    protocol = viewer_certificate["minimum_protocol_version"][0]
+                    # these protocol versions
+                    if protocol in ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']:
+                        return CheckResult.PASSED
+        # No cert specified so using default which can be less that tls 1.2
         return CheckResult.FAILED

     def get_inspected_key(self):
@@ -34,4 +34,4 @@
         return ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']


-check = CloudFrontTLS12()
\ No newline at end of file
+check = CloudFrontTLS12()

| {"golden_diff": "diff --git a/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py b/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py\n--- a/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py\n+++ b/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py\n@@ -12,18 +12,18 @@\n \n def scan_resource_conf(self, conf):\n if \"viewer_certificate\" in conf.keys():\n- # check if cloudfront_default_certificate is true then this could use less than tls 1.2\n viewer_certificate = conf[\"viewer_certificate\"][0]\n- if 'cloudfront_default_certificate' in viewer_certificate:\n- #is not using the default certificate\n- if viewer_certificate[\"cloudfront_default_certificate\"] is not True:\n- #these protocol versions\n- if \"minimum_protocol_version\" in viewer_certificate:\n- protocol=viewer_certificate[\"minimum_protocol_version\"][0]\n- if protocol in ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']:\n- return CheckResult.PASSED\n-\n- #No cert specified so using default which can be less that tls 1.2\n+ # check if cloudfront_default_certificate is true then this could use less than tls 1.2\n+ if (\"cloudfront_default_certificate\" in viewer_certificate and viewer_certificate\n+ [\"cloudfront_default_certificate\"][0] is not True) or (\n+ 'minimum_protocol_version' in viewer_certificate):\n+ # is not using the default certificate\n+ if 'minimum_protocol_version' in viewer_certificate:\n+ protocol = viewer_certificate[\"minimum_protocol_version\"][0]\n+ # these protocol versions\n+ if protocol in ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']:\n+ return CheckResult.PASSED\n+ # No cert specified so using default which can be less that tls 1.2\n return CheckResult.FAILED\n \n def get_inspected_key(self):\n@@ -34,4 +34,4 @@\n return ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']\n \n \n-check = CloudFrontTLS12()\n\\ No newline at end of file\n+check = CloudFrontTLS12()\n", "issue": "CKV_AWS_174 incorrect reporting\nCKV_AWS_174 is being triggered in our 
terraform code even though we have the viewer certificate set to use TLSv.1.2. Snippet of our code here:\r\n\r\nviewer_certificate {\r\n acm_certificate_arn = aws_acm_certificate.cert.arn\r\n ssl_support_method = \"sni-only\"\r\n minimum_protocol_version = \"TLSv1.2_2019\" \r\n}\r\n\r\n\r\nSteps to reproduce the behavior:\r\nRunning checkov on our terraform code\r\n\r\n**Expected behavior**\r\nThis check should be passed\r\n\r\n\r\n\r\n**Additional context**\r\nIt looks to me like the issue is in the code between lines 17 and 19. I dont think based on the terraform documentation and the if statements that it would ever pass if using an acm certificate\r\n\r\nhttps://github.com/bridgecrewio/checkov/blob/master/checkov/terraform/checks/resource/aws/CloudfrontTLS12.py\r\n\n", "before_files": [{"content": "from checkov.common.models.enums import CheckCategories, CheckResult\nfrom checkov.terraform.checks.resource.base_resource_value_check import BaseResourceValueCheck\n\n\nclass CloudFrontTLS12(BaseResourceValueCheck):\n def __init__(self):\n name = \"Verify CloudFront Distribution Viewer Certificate is using TLS v1.2\"\n id = \"CKV_AWS_174\"\n supported_resources = [\"aws_cloudfront_distribution\"]\n categories = [CheckCategories.ENCRYPTION]\n super().__init__(name=name, id=id, categories=categories, supported_resources=supported_resources)\n\n def scan_resource_conf(self, conf):\n if \"viewer_certificate\" in conf.keys():\n # check if cloudfront_default_certificate is true then this could use less than tls 1.2\n viewer_certificate = conf[\"viewer_certificate\"][0]\n if 'cloudfront_default_certificate' in viewer_certificate:\n #is not using the default certificate\n if viewer_certificate[\"cloudfront_default_certificate\"] is not True:\n #these protocol versions\n if \"minimum_protocol_version\" in viewer_certificate:\n protocol=viewer_certificate[\"minimum_protocol_version\"][0]\n if protocol in ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']:\n return 
CheckResult.PASSED\n\n #No cert specified so using default which can be less that tls 1.2\n return CheckResult.FAILED\n\n def get_inspected_key(self):\n\n return \"viewer_certificate/[0]/minimum_protocol_version\"\n\n def get_expected_values(self):\n return ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']\n\n\ncheck = CloudFrontTLS12()", "path": "checkov/terraform/checks/resource/aws/CloudfrontTLS12.py"}], "after_files": [{"content": "from checkov.common.models.enums import CheckCategories, CheckResult\nfrom checkov.terraform.checks.resource.base_resource_value_check import BaseResourceValueCheck\n\n\nclass CloudFrontTLS12(BaseResourceValueCheck):\n def __init__(self):\n name = \"Verify CloudFront Distribution Viewer Certificate is using TLS v1.2\"\n id = \"CKV_AWS_174\"\n supported_resources = [\"aws_cloudfront_distribution\"]\n categories = [CheckCategories.ENCRYPTION]\n super().__init__(name=name, id=id, categories=categories, supported_resources=supported_resources)\n\n def scan_resource_conf(self, conf):\n if \"viewer_certificate\" in conf.keys():\n viewer_certificate = conf[\"viewer_certificate\"][0]\n # check if cloudfront_default_certificate is true then this could use less than tls 1.2\n if (\"cloudfront_default_certificate\" in viewer_certificate and viewer_certificate\n [\"cloudfront_default_certificate\"][0] is not True) or (\n 'minimum_protocol_version' in viewer_certificate):\n # is not using the default certificate\n if 'minimum_protocol_version' in viewer_certificate:\n protocol = viewer_certificate[\"minimum_protocol_version\"][0]\n # these protocol versions\n if protocol in ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']:\n return CheckResult.PASSED\n # No cert specified so using default which can be less that tls 1.2\n return CheckResult.FAILED\n\n def get_inspected_key(self):\n\n return \"viewer_certificate/[0]/minimum_protocol_version\"\n\n def get_expected_values(self):\n return ['TLSv1.2_2018', 'TLSv1.2_2019', 'TLSv1.2_2021']\n\n\ncheck = 
CloudFrontTLS12()\n", "path": "checkov/terraform/checks/resource/aws/CloudfrontTLS12.py"}]} | 930 | 551 |
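
The branch structure of the patched CKV_AWS_174 check in the row above can be sketched as a standalone function. This is only an illustration, not checkov's code: `scan_viewer_certificate` and `ALLOWED_PROTOCOLS` are hypothetical names, the function returns plain strings instead of `CheckResult` members, and the input is assumed to follow the shape the check indexes into (each attribute value wrapped in a one-element list).

```python
# Hypothetical stand-in for the patched scan_resource_conf; not part of checkov.
ALLOWED_PROTOCOLS = ("TLSv1.2_2018", "TLSv1.2_2019", "TLSv1.2_2021")


def scan_viewer_certificate(conf):
    """Return "PASSED" or "FAILED", following the branches of the patched check."""
    if "viewer_certificate" in conf:
        viewer_certificate = conf["viewer_certificate"][0]
        # Assumption: parsed attribute values arrive wrapped in lists, e.g. [True].
        not_default = (
            "cloudfront_default_certificate" in viewer_certificate
            and viewer_certificate["cloudfront_default_certificate"][0] is not True
        )
        if not_default or "minimum_protocol_version" in viewer_certificate:
            if "minimum_protocol_version" in viewer_certificate:
                protocol = viewer_certificate["minimum_protocol_version"][0]
                if protocol in ALLOWED_PROTOCOLS:
                    return "PASSED"
    # No acceptable minimum protocol version was found.
    return "FAILED"


# The ACM-certificate block from the issue, in the assumed parsed shape.
acm_conf = {
    "viewer_certificate": [
        {
            "acm_certificate_arn": ["aws_acm_certificate.cert.arn"],
            "ssl_support_method": ["sni-only"],
            "minimum_protocol_version": ["TLSv1.2_2019"],
        }
    ]
}
print(scan_viewer_certificate(acm_conf))
```

With this structure, a block that omits `cloudfront_default_certificate` no longer fails automatically, which is the case the issue reports.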

gh_patches_debug_5802 | rasdani/github-patches | git_diff | akvo__akvo-rsr-4094 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Investigate creation of duplicate user accounts with differently cased emails

- [ ] Verify that lookups using email are using `__iexact` or something like that.

- [ ] Figure out a plan for existing duplicates

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `akvo/rest/views/utils.py`

Content:

```

1 # -*- coding: utf-8 -*-
2
3 # Akvo RSR is covered by the GNU Affero General Public License.
4
5 # See more details in the license.txt file located at the root folder of the Akvo RSR module.
6 # For additional details on the GNU license please see < http://www.gnu.org/licenses/agpl.html >.
7
8 from django.conf import settings
9 from django.contrib.auth import get_user_model
10 from django.core.cache import cache
11 from django.utils.cache import get_cache_key, _generate_cache_header_key
12 from django.db import IntegrityError
13
14
15 def get_cached_data(request, key_prefix, data, serializer):
16     """Function to get serialized data from the cache based on the request."""
17     cache_header_key = _generate_cache_header_key(key_prefix, request)
18     if cache.get(cache_header_key) is None:
19         cache.set(cache_header_key, [], None)
20
21     cache_key = get_cache_key(request, key_prefix)
22     cached_data = cache.get(cache_key, None)
23     cache_used = True
24     if not cached_data and data is not None:
25         cache_used = False
26         cached_data = serializer(data, many=True).data
27         cache.set(cache_key, cached_data)
28
29     return cached_data, cache_used
30
31
32 def set_cached_data(request, key_prefix, data):
33     """Function to save data to the cache based on the request."""
34
35     cache_header_key = _generate_cache_header_key(key_prefix, request)
36     if cache.get(cache_header_key) is None:
37         cache.set(cache_header_key, [], None)
38
39     cache_key = get_cache_key(request, key_prefix)
40     cache.set(cache_key, data)
41
42
43 def get_qs_elements_for_page(qs, request, count):
44     """Return queryset elements to be shown on the current page"""
45     limit = int_or_none(request.GET.get('limit')) or settings.PROJECT_DIRECTORY_PAGE_SIZES[0]
46     limit = min(limit, settings.PROJECT_DIRECTORY_PAGE_SIZES[-1])
47     max_page_number = 1 + int(count / limit)
48     page_number = min(max_page_number, int_or_none(request.GET.get('page')) or 1)
49     start = (page_number - 1) * limit
50     end = page_number * limit
51     return qs[start:end]
52
53
54 def int_or_none(value):
55     """Return int or None given a value."""
56     try:
57         return int(value)
58     except Exception:
59         return None
60
61
62 def create_invited_user(email):
63     User = get_user_model()
64     # Check if the user already exists, based on the email address
65     try:
66         invited_user = User.objects.get(email=email)
67     except User.DoesNotExist:
68         try:
69             invited_user = User.objects.create_user(username=email, email=email)
70         except IntegrityError:
71             return None
72     return invited_user
73

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@


 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@


 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

| diff --git a/akvo/rest/views/utils.py b/akvo/rest/views/utils.py

--- a/akvo/rest/views/utils.py
+++ b/akvo/rest/views/utils.py
@@ -63,7 +63,7 @@
     User = get_user_model()
     # Check if the user already exists, based on the email address
     try:
-        invited_user = User.objects.get(email=email)
+        invited_user = User.objects.get(email__iexact=email)
     except User.DoesNotExist:
         try:
             invited_user = User.objects.create_user(username=email, email=email)

| {"golden_diff": "diff --git a/akvo/rest/views/utils.py b/akvo/rest/views/utils.py\n--- a/akvo/rest/views/utils.py\n+++ b/akvo/rest/views/utils.py\n@@ -63,7 +63,7 @@\n User = get_user_model()\n # Check if the user already exists, based on the email address\n try:\n- invited_user = User.objects.get(email=email)\n+ invited_user = User.objects.get(email__iexact=email)\n except User.DoesNotExist:\n try:\n invited_user = User.objects.create_user(username=email, email=email)\n", "issue": "Investigate creation of duplicate user accounts with differently cased emails\n- [ ] Verify that lookups using email are using `__iexact` or something like that. \n- [ ] Figure out a plan for existing duplicates\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n\n# Akvo RSR is covered by the GNU Affero General Public License.\n\n# See more details in the license.txt file located at the root folder of the Akvo RSR module.\n# For additional details on the GNU license please see < http://www.gnu.org/licenses/agpl.html >.\n\nfrom django.conf import settings\nfrom django.contrib.auth import get_user_model\nfrom django.core.cache import cache\nfrom django.utils.cache import get_cache_key, _generate_cache_header_key\nfrom django.db import IntegrityError\n\n\ndef get_cached_data(request, key_prefix, data, serializer):\n \"\"\"Function to get serialized data from the cache based on the request.\"\"\"\n cache_header_key = _generate_cache_header_key(key_prefix, request)\n if cache.get(cache_header_key) is None:\n cache.set(cache_header_key, [], None)\n\n cache_key = get_cache_key(request, key_prefix)\n cached_data = cache.get(cache_key, None)\n cache_used = True\n if not cached_data and data is not None:\n cache_used = False\n cached_data = serializer(data, many=True).data\n cache.set(cache_key, cached_data)\n\n return cached_data, cache_used\n\n\ndef set_cached_data(request, key_prefix, data):\n \"\"\"Function to save data to the cache based on the request.\"\"\"\n\n cache_header_key = 
_generate_cache_header_key(key_prefix, request)\n if cache.get(cache_header_key) is None:\n cache.set(cache_header_key, [], None)\n\n cache_key = get_cache_key(request, key_prefix)\n cache.set(cache_key, data)\n\n\ndef get_qs_elements_for_page(qs, request, count):\n \"\"\"Return queryset elements to be shown on the current page\"\"\"\n limit = int_or_none(request.GET.get('limit')) or settings.PROJECT_DIRECTORY_PAGE_SIZES[0]\n limit = min(limit, settings.PROJECT_DIRECTORY_PAGE_SIZES[-1])\n max_page_number = 1 + int(count / limit)\n page_number = min(max_page_number, int_or_none(request.GET.get('page')) or 1)\n start = (page_number - 1) * limit\n end = page_number * limit\n return qs[start:end]\n\n\ndef int_or_none(value):\n \"\"\"Return int or None given a value.\"\"\"\n try:\n return int(value)\n except Exception:\n return None\n\n\ndef create_invited_user(email):\n User = get_user_model()\n # Check if the user already exists, based on the email address\n try:\n invited_user = User.objects.get(email=email)\n except User.DoesNotExist:\n try:\n invited_user = User.objects.create_user(username=email, email=email)\n except IntegrityError:\n return None\n return invited_user\n", "path": "akvo/rest/views/utils.py"}], "after_files": [{"content": "# -*- coding: utf-8 -*-\n\n# Akvo RSR is covered by the GNU Affero General Public License.\n\n# See more details in the license.txt file located at the root folder of the Akvo RSR module.\n# For additional details on the GNU license please see < http://www.gnu.org/licenses/agpl.html >.\n\nfrom django.conf import settings\nfrom django.contrib.auth import get_user_model\nfrom django.core.cache import cache\nfrom django.utils.cache import get_cache_key, _generate_cache_header_key\nfrom django.db import IntegrityError\n\n\ndef get_cached_data(request, key_prefix, data, serializer):\n \"\"\"Function to get serialized data from the cache based on the request.\"\"\"\n cache_header_key = _generate_cache_header_key(key_prefix, request)\n 
if cache.get(cache_header_key) is None:\n cache.set(cache_header_key, [], None)\n\n cache_key = get_cache_key(request, key_prefix)\n cached_data = cache.get(cache_key, None)\n cache_used = True\n if not cached_data and data is not None:\n cache_used = False\n cached_data = serializer(data, many=True).data\n cache.set(cache_key, cached_data)\n\n return cached_data, cache_used\n\n\ndef set_cached_data(request, key_prefix, data):\n \"\"\"Function to save data to the cache based on the request.\"\"\"\n\n cache_header_key = _generate_cache_header_key(key_prefix, request)\n if cache.get(cache_header_key) is None:\n cache.set(cache_header_key, [], None)\n\n cache_key = get_cache_key(request, key_prefix)\n cache.set(cache_key, data)\n\n\ndef get_qs_elements_for_page(qs, request, count):\n \"\"\"Return queryset elements to be shown on the current page\"\"\"\n limit = int_or_none(request.GET.get('limit')) or settings.PROJECT_DIRECTORY_PAGE_SIZES[0]\n limit = min(limit, settings.PROJECT_DIRECTORY_PAGE_SIZES[-1])\n max_page_number = 1 + int(count / limit)\n page_number = min(max_page_number, int_or_none(request.GET.get('page')) or 1)\n start = (page_number - 1) * limit\n end = page_number * limit\n return qs[start:end]\n\n\ndef int_or_none(value):\n \"\"\"Return int or None given a value.\"\"\"\n try:\n return int(value)\n except Exception:\n return None\n\n\ndef create_invited_user(email):\n User = get_user_model()\n # Check if the user already exists, based on the email address\n try:\n invited_user = User.objects.get(email__iexact=email)\n except User.DoesNotExist:\n try:\n invited_user = User.objects.create_user(username=email, email=email)\n except IntegrityError:\n return None\n return invited_user\n", "path": "akvo/rest/views/utils.py"}]} | 1,028 | 124 |
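
The effect of switching the lookup in the row above from `email=email` to `email__iexact=email` can be illustrated without Django. This is a rough sketch under stated assumptions: users are plain dictionaries in a list, `find_user_iexact` and `get_or_create_invited_user` are hypothetical helpers, and `str.casefold()` only approximates the case-insensitive comparison that the database performs for `__iexact`.

```python
# Hypothetical in-memory stand-in for the user table; not Django code.
def find_user_iexact(users, email):
    """Return the first user whose email matches, ignoring case, or None."""
    wanted = email.casefold()
    for user in users:
        if user["email"].casefold() == wanted:
            return user
    return None


def get_or_create_invited_user(users, email):
    """Reuse an existing user when only the email casing differs."""
    user = find_user_iexact(users, email)
    if user is None:
        user = {"username": email, "email": email}
        users.append(user)
    return user


users = []
first = get_or_create_invited_user(users, "Jane.Doe@Example.org")
second = get_or_create_invited_user(users, "jane.doe@example.org")
print(len(users), first is second)
```

An exact-match lookup would have missed the differently cased address and created a second account, which is the duplication the issue describes.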

gh_patches_debug_7429 | rasdani/github-patches | git_diff | cloudtools__troposphere-457 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Elasticsearch Domain DomainName shouldn't be required

According to the CF documentation, `DomainName` isn't required: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-resource-elasticsearch-domain.html

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `troposphere/elasticsearch.py`

Content:

```

1 # Copyright (c) 2012-2015, Mark Peek <[email protected]>
2 # All rights reserved.
3 #
4 # See LICENSE file for full license.
5
6 from . import AWSProperty, AWSObject
7 from .validators import boolean, integer, integer_range, positive_integer
8
9 VALID_VOLUME_TYPES = ('standard', 'gp2', 'io1')
10
11 try:
12     from awacs.aws import Policy
13     policytypes = (dict, Policy)
14 except ImportError:
15     policytypes = dict,
16
17
18 def validate_volume_type(volume_type):
19     """Validate VolumeType for ElasticsearchDomain"""
20     if volume_type not in VALID_VOLUME_TYPES:
21         raise ValueError("Elasticsearch Domain VolumeType must be one of: %s" %
22                          ", ".join(VALID_VOLUME_TYPES))
23     return volume_type
24
25
26 class EBSOptions(AWSProperty):
27     props = {
28         'EBSEnabled': (boolean, False),
29         'Iops': (positive_integer, False),
30         'VolumeSize': (integer, False),
31         'VolumeType': (validate_volume_type, False)
32     }
33
34     def validate(self):
35         volume_type = self.properties.get('VolumeType')
36         iops = self.properties.get('Iops')
37         if volume_type == 'io1' and not iops:
38             raise ValueError("Must specify Iops if VolumeType is 'io1'.")
39
40
41 class ElasticsearchClusterConfig(AWSProperty):
42     props = {
43         'DedicatedMasterCount': (integer, False),
44         'DedicatedMasterEnabled': (boolean, False),
45         'DedicatedMasterType': (basestring, False),
46         'InstanceCount': (integer, False),
47         'InstanceType': (basestring, False),
48         'ZoneAwarenessEnabled': (boolean, False)
49     }
50
51
52 class SnapshotOptions(AWSProperty):
53     props = {
54         'AutomatedSnapshotStartHour': (integer_range(0, 23), False)
55     }
56
57
58 class ElasticsearchDomain(AWSObject):
59     resource_type = "AWS::Elasticsearch::Domain"
60
61     props = {
62         'AccessPolicies': (policytypes, False),
63         'AdvancedOptions': (dict, False),
64         'DomainName': (basestring, True),
65         'EBSOptions': (EBSOptions, False),
66         'ElasticsearchClusterConfig': (ElasticsearchClusterConfig, False),
67         'SnapshotOptions': (SnapshotOptions, False),
68         'Tags': (list, False)
69     }
70

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@


 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@


 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

| diff --git a/troposphere/elasticsearch.py b/troposphere/elasticsearch.py

--- a/troposphere/elasticsearch.py
+++ b/troposphere/elasticsearch.py
@@ -61,7 +61,7 @@
     props = {
         'AccessPolicies': (policytypes, False),
         'AdvancedOptions': (dict, False),
-        'DomainName': (basestring, True),
+        'DomainName': (basestring, False),
         'EBSOptions': (EBSOptions, False),
         'ElasticsearchClusterConfig': (ElasticsearchClusterConfig, False),
         'SnapshotOptions': (SnapshotOptions, False),

| {"golden_diff": "diff --git a/troposphere/elasticsearch.py b/troposphere/elasticsearch.py\n--- a/troposphere/elasticsearch.py\n+++ b/troposphere/elasticsearch.py\n@@ -61,7 +61,7 @@\n props = {\n 'AccessPolicies': (policytypes, False),\n 'AdvancedOptions': (dict, False),\n- 'DomainName': (basestring, True),\n+ 'DomainName': (basestring, False),\n 'EBSOptions': (EBSOptions, False),\n 'ElasticsearchClusterConfig': (ElasticsearchClusterConfig, False),\n 'SnapshotOptions': (SnapshotOptions, False),\n", "issue": "Elasticsearch Domain DomainName shouldn't be required\nAccording to the CF documentation, `DomainName` isn't required: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-resource-elasticsearch-domain.html\n\n", "before_files": [{"content": "# Copyright (c) 2012-2015, Mark Peek <[email protected]>\n# All rights reserved.\n#\n# See LICENSE file for full license.\n\nfrom . import AWSProperty, AWSObject\nfrom .validators import boolean, integer, integer_range, positive_integer\n\nVALID_VOLUME_TYPES = ('standard', 'gp2', 'io1')\n\ntry:\n from awacs.aws import Policy\n policytypes = (dict, Policy)\nexcept ImportError:\n policytypes = dict,\n\n\ndef validate_volume_type(volume_type):\n \"\"\"Validate VolumeType for ElasticsearchDomain\"\"\"\n if volume_type not in VALID_VOLUME_TYPES:\n raise ValueError(\"Elasticsearch Domain VolumeType must be one of: %s\" %\n \", \".join(VALID_VOLUME_TYPES))\n return volume_type\n\n\nclass EBSOptions(AWSProperty):\n props = {\n 'EBSEnabled': (boolean, False),\n 'Iops': (positive_integer, False),\n 'VolumeSize': (integer, False),\n 'VolumeType': (validate_volume_type, False)\n }\n\n def validate(self):\n volume_type = self.properties.get('VolumeType')\n iops = self.properties.get('Iops')\n if volume_type == 'io1' and not iops:\n raise ValueError(\"Must specify Iops if VolumeType is 'io1'.\")\n\n\nclass ElasticsearchClusterConfig(AWSProperty):\n props = {\n 'DedicatedMasterCount': (integer, False),\n 
'DedicatedMasterEnabled': (boolean, False),\n 'DedicatedMasterType': (basestring, False),\n 'InstanceCount': (integer, False),\n 'InstanceType': (basestring, False),\n 'ZoneAwarenessEnabled': (boolean, False)\n }\n\n\nclass SnapshotOptions(AWSProperty):\n props = {\n 'AutomatedSnapshotStartHour': (integer_range(0, 23), False)\n }\n\n\nclass ElasticsearchDomain(AWSObject):\n resource_type = \"AWS::Elasticsearch::Domain\"\n\n props = {\n 'AccessPolicies': (policytypes, False),\n 'AdvancedOptions': (dict, False),\n 'DomainName': (basestring, True),\n 'EBSOptions': (EBSOptions, False),\n 'ElasticsearchClusterConfig': (ElasticsearchClusterConfig, False),\n 'SnapshotOptions': (SnapshotOptions, False),\n 'Tags': (list, False)\n }\n", "path": "troposphere/elasticsearch.py"}], "after_files": [{"content": "# Copyright (c) 2012-2015, Mark Peek <[email protected]>\n# All rights reserved.\n#\n# See LICENSE file for full license.\n\nfrom . import AWSProperty, AWSObject\nfrom .validators import boolean, integer, integer_range, positive_integer\n\nVALID_VOLUME_TYPES = ('standard', 'gp2', 'io1')\n\ntry:\n from awacs.aws import Policy\n policytypes = (dict, Policy)\nexcept ImportError:\n policytypes = dict,\n\n\ndef validate_volume_type(volume_type):\n \"\"\"Validate VolumeType for ElasticsearchDomain\"\"\"\n if volume_type not in VALID_VOLUME_TYPES:\n raise ValueError(\"Elasticsearch Domain VolumeType must be one of: %s\" %\n \", \".join(VALID_VOLUME_TYPES))\n return volume_type\n\n\nclass EBSOptions(AWSProperty):\n props = {\n 'EBSEnabled': (boolean, False),\n 'Iops': (positive_integer, False),\n 'VolumeSize': (integer, False),\n 'VolumeType': (validate_volume_type, False)\n }\n\n def validate(self):\n volume_type = self.properties.get('VolumeType')\n iops = self.properties.get('Iops')\n if volume_type == 'io1' and not iops:\n raise ValueError(\"Must specify Iops if VolumeType is 'io1'.\")\n\n\nclass ElasticsearchClusterConfig(AWSProperty):\n props = {\n 'DedicatedMasterCount': 
(integer, False),\n 'DedicatedMasterEnabled': (boolean, False),\n 'DedicatedMasterType': (basestring, False),\n 'InstanceCount': (integer, False),\n 'InstanceType': (basestring, False),\n 'ZoneAwarenessEnabled': (boolean, False)\n }\n\n\nclass SnapshotOptions(AWSProperty):\n props = {\n 'AutomatedSnapshotStartHour': (integer_range(0, 23), False)\n }\n\n\nclass ElasticsearchDomain(AWSObject):\n resource_type = \"AWS::Elasticsearch::Domain\"\n\n props = {\n 'AccessPolicies': (policytypes, False),\n 'AdvancedOptions': (dict, False),\n 'DomainName': (basestring, False),\n 'EBSOptions': (EBSOptions, False),\n 'ElasticsearchClusterConfig': (ElasticsearchClusterConfig, False),\n 'SnapshotOptions': (SnapshotOptions, False),\n 'Tags': (list, False)\n }\n", "path": "troposphere/elasticsearch.py"}]} | 960 | 139 |

gh_patches_debug_6167 | rasdani/github-patches | git_diff | mesonbuild__meson-2462 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

MSI installed meson fails to rerun in visual studio

Initially, I ran `meson build` from the source code directory `xxx` to create the build directory.

Later, if any `meson.build` files are modified, Visual studio fails to rerun Meson with the backtrace below. Meson is installed with MSI. It works with ninja as backend. It also works if meson isn't installed with MSI.

It seems like `mesonscript` in `regen_checker` is invalid when meson is installed with MSI.

```
>meson.exe : error : unrecognized arguments: --internal regenerate C:\Users\niklas\Documents\git\xxx C:\Users\niklas\Documents\git\xxx
1>  Traceback (most recent call last):
1>    File "C:\Users\niklas\AppData\Local\Programs\Python\Python36-32\lib\site-packages\cx_Freeze\initscripts\__startup__.py", line 14, in run
1>      module.run()
1>    File "C:\Users\niklas\AppData\Local\Programs\Python\Python36-32\lib\site-packages\cx_Freeze\initscripts\Console.py", line 26, in run
1>      exec(code, m.__dict__)
1>    File "meson.py", line 37, in <module>
1>    File "meson.py", line 34, in main
1>    File "mesonbuild\mesonmain.py", line 311, in run
1>    File "mesonbuild\mesonmain.py", line 278, in run_script_command
1>    File "mesonbuild\scripts\regen_checker.py", line 56, in run
1>    File "mesonbuild\scripts\regen_checker.py", line 42, in regen
1>    File "C:\Users\niklas\AppData\Local\Programs\Python\Python36-32\lib\subprocess.py", line 291, in check_call
1>      raise CalledProcessError(retcode, cmd)
1>  subprocess.CalledProcessError: Command '['C:\\Program Files\\Meson\\meson.exe', 'C:\\Users\\niklas\\Documents\\git\\xxx\\meson', '--internal', 'regenerate', 'C:\\Users\\niklas\\Documents\\git\\xxx\\build', 'C:\\Users\\niklas\\Documents\\git\\xxx', '--backend=vs2015']' returned non-zero exit status 2.
```

--- END ISSUE ---
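
To make the failing command in the traceback concrete, here is a minimal sketch of how such a command line is composed. It assumes, as the traceback's command suggests, that the MSI-installed `meson.exe` is itself the running executable; `regen_command` and the shortened example paths are hypothetical names used only for illustration and are not part of Meson.

```python
def regen_command(executable, mesonscript, build_dir, source_dir, backend):
    # Mirrors the argument order seen in the failing command: the running
    # executable, the launcher script, then the internal "regenerate" arguments.
    return [executable, mesonscript,
            '--internal', 'regenerate',
            build_dir, source_dir,
            '--backend=' + backend]


# Hypothetical paths modelled on the traceback (shortened for readability).
cmd = regen_command(r'C:\Program Files\Meson\meson.exe',
                    r'C:\src\xxx\meson',
                    r'C:\src\xxx\build',
                    r'C:\src\xxx',
                    'vs2015')

# With a frozen executable, the element after the executable is a script
# path rather than '--internal'.
print(cmd[1])
```

When `executable` is an ordinary Python interpreter, the second element is the script to run. When it is a frozen `meson.exe`, the same element arrives as an extra positional argument, which appears consistent with the "unrecognized arguments" error reported above.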

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mesonbuild/scripts/regen_checker.py`

Content:

```
1 # Copyright 2015-2016 The Meson development team
2 
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 
7 # http://www.apache.org/licenses/LICENSE-2.0
8 
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14 
15 import sys, os
16 import pickle, subprocess
17 
18 # This could also be used for XCode.
19 
20 def need_regen(regeninfo, regen_timestamp):
21     for i in regeninfo.depfiles:
22         curfile = os.path.join(regeninfo.build_dir, i)
23         curtime = os.stat(curfile).st_mtime
24         if curtime > regen_timestamp:
25             return True
26     # The timestamp file gets automatically deleted by MSBuild during a 'Clean' build.
27     # We must make sure to recreate it, even if we do not regenerate the solution.
28     # Otherwise, Visual Studio will always consider the REGEN project out of date.
29     print("Everything is up-to-date, regeneration of build files is not needed.")
30     from ..backend.vs2010backend import Vs2010Backend
31     Vs2010Backend.touch_regen_timestamp(regeninfo.build_dir)
32     return False
33 
34 def regen(regeninfo, mesonscript, backend):
35     cmd = [sys.executable,
36            mesonscript,
37            '--internal',
38            'regenerate',
39            regeninfo.build_dir,
40            regeninfo.source_dir,
41            '--backend=' + backend]
42     subprocess.check_call(cmd)
43 
44 def run(args):
45     private_dir = args[0]
46     dumpfile = os.path.join(private_dir, 'regeninfo.dump')
47     coredata = os.path.join(private_dir, 'coredata.dat')
48     with open(dumpfile, 'rb') as f:
49         regeninfo = pickle.load(f)
50     with open(coredata, 'rb') as f:
51         coredata = pickle.load(f)
52     mesonscript = coredata.meson_script_launcher
53     backend = coredata.get_builtin_option('backend')
54     regen_timestamp = os.stat(dumpfile).st_mtime
55     if need_regen(regeninfo, regen_timestamp):
56         regen(regeninfo, mesonscript, backend)
57     sys.exit(0)
58 
59 if __name__ == '__main__':
60     run(sys.argv[1:])
61 
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/mesonbuild/scripts/regen_checker.py b/mesonbuild/scripts/regen_checker.py

--- a/mesonbuild/scripts/regen_checker.py

+++ b/mesonbuild/scripts/regen_checker.py

@@ -32,9 +32,11 @@

return False

def regen(regeninfo, mesonscript, backend):

- cmd = [sys.executable,

- mesonscript,

- '--internal',

+ if sys.executable.lower().endswith('meson.exe'):

+ cmd_exe = [sys.executable]

+ else:

+ cmd_exe = [sys.executable, mesonscript]

+ cmd = cmd_exe + ['--internal',

'regenerate',

regeninfo.build_dir,

regeninfo.source_dir,