| problem_id (string, length 18-22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13-58) | prompt (string, length 1.1k-10.2k) | golden_diff (string, length 151-4.94k) | verification_info (string, length 582-21k) | num_tokens (int64, 271-2.05k) | num_tokens_diff (int64, 47-1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_10906 | rasdani/github-patches | git_diff | plone__Products.CMFPlone-973 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Group administration: AttributeError: 'NoneType' object has no attribute 'getGroupTitleOrName'

/Plone2/@@usergroup-groupmembership?groupname=None

gives me

Here is the full error message:

Display traceback as text

Traceback (innermost last):

Module ZPublisher.Publish, line 138, in publish

Module ZPublisher.mapply, line 77, in mapply

Module ZPublisher.Publish, line 48, in call_object

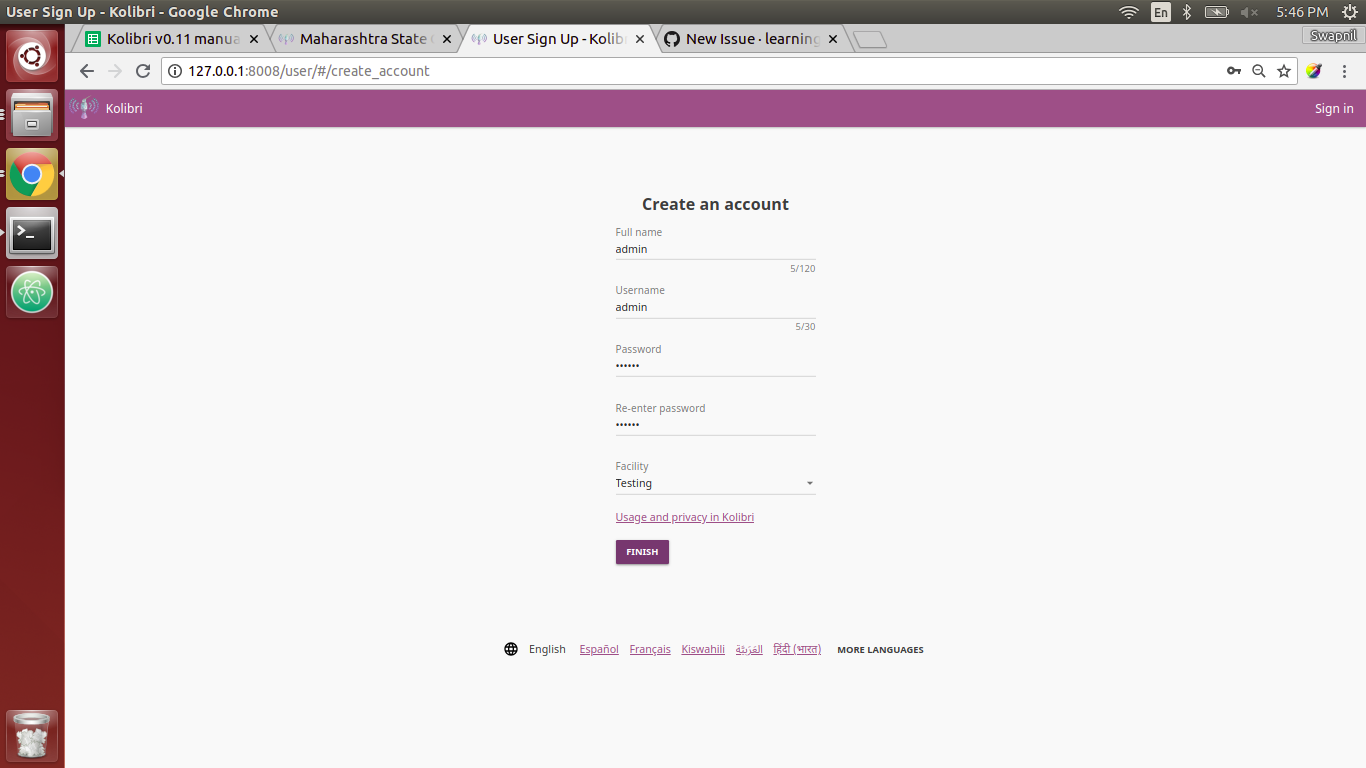

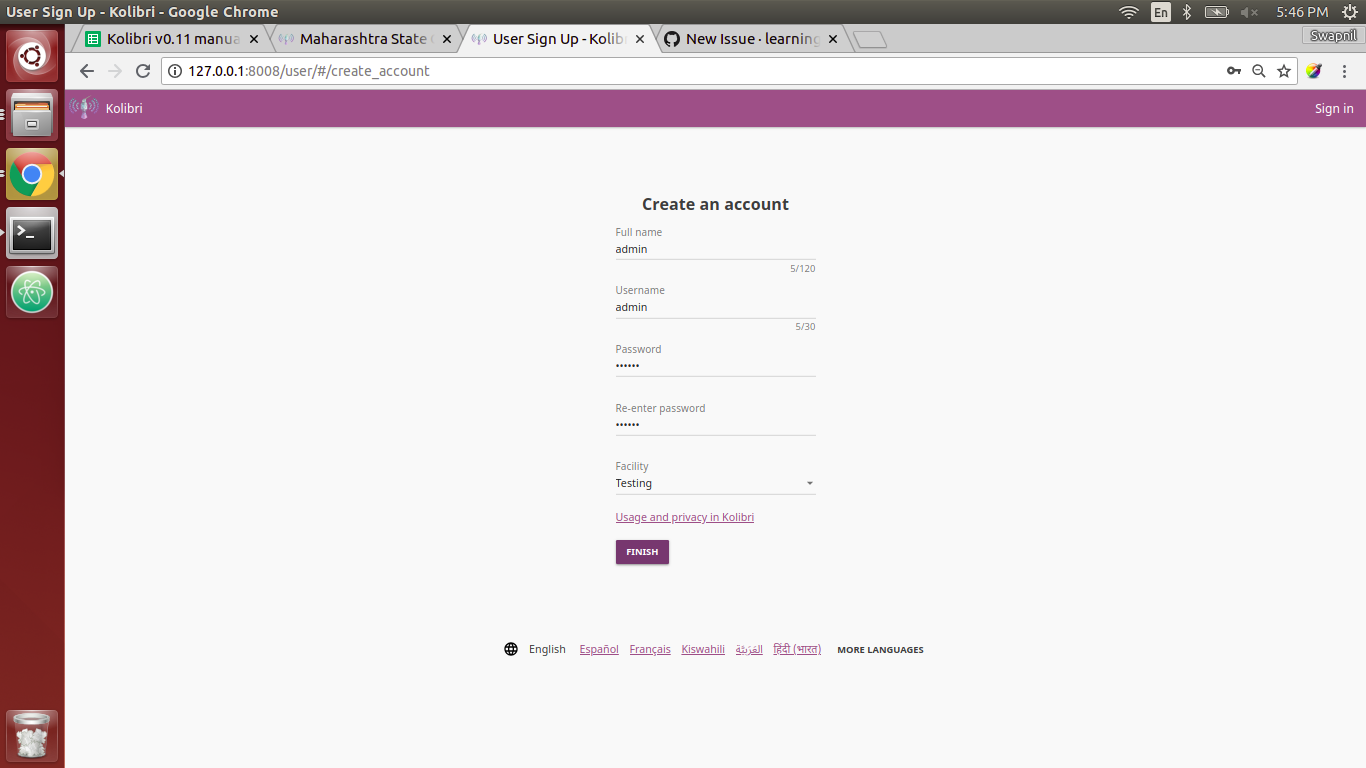

Module Products.CMFPlone.controlpanel.browser.usergroups_groupmembership, line 69, in __call__

Module Products.CMFPlone.controlpanel.browser.usergroups_groupmembership, line 16, in update

AttributeError: 'NoneType' object has no attribute 'getGroupTitleOrName'

This happens when you click on "new group" and then on the "group members" tab.

--- END ISSUE ---
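
The failure described in the issue can be illustrated outside Plone with stand-in objects. This is a minimal sketch, not the repository's code: `FakeGroup`, `FakeGroupTool`, and `resolve_group_title` are hypothetical names, and the only behavior taken from the traceback is the assumption that `getGroupById` returns `None` for an unknown group id.

```python
class FakeGroup:
    """Hypothetical stand-in for a Plone group object."""

    def __init__(self, title):
        self._title = title

    def getGroupTitleOrName(self):
        return self._title


class FakeGroupTool:
    """Hypothetical stand-in for the portal_groups tool."""

    def __init__(self, groups):
        self._groups = groups

    def getGroupById(self, group_id):
        # Assumption: an unknown id yields None, as the traceback implies.
        return self._groups.get(group_id)


def resolve_group_title(gtool, groupname):
    """Return the group's title, or None when the group does not exist."""
    group = gtool.getGroupById(groupname)
    if group is None:
        # Without this check, the next line raises AttributeError on None.
        return None
    return group.getGroupTitleOrName() or groupname


gtool = FakeGroupTool({"editors": FakeGroup("Editors")})
print(resolve_group_title(gtool, "editors"))
print(resolve_group_title(gtool, "None"))
```

Calling a method on the lookup result without the `None` check is what produces the `AttributeError` quoted in the issue when the URL carries `groupname=None`.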

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py`

Content:

```

1 from Products.CMFPlone import PloneMessageFactory as _

2 from zExceptions import Forbidden

3 from Products.CMFCore.utils import getToolByName

4 from Products.CMFPlone.controlpanel.browser.usergroups import \

5 UsersGroupsControlPanelView

6 from Products.CMFPlone.utils import normalizeString

7

8

9 class GroupMembershipControlPanel(UsersGroupsControlPanelView):

10

11 def update(self):

12 self.groupname = getattr(self.request, 'groupname')

13 self.gtool = getToolByName(self, 'portal_groups')

14 self.mtool = getToolByName(self, 'portal_membership')

15 self.group = self.gtool.getGroupById(self.groupname)

16 self.grouptitle = self.group.getGroupTitleOrName() or self.groupname

17

18 self.request.set('grouproles', self.group.getRoles() if self.group else [])

19 self.canAddUsers = True

20 if 'Manager' in self.request.get('grouproles') and not self.is_zope_manager:

21 self.canAddUsers = False

22

23 self.groupquery = self.makeQuery(groupname=self.groupname)

24 self.groupkeyquery = self.makeQuery(key=self.groupname)

25

26 form = self.request.form

27 submitted = form.get('form.submitted', False)

28

29 self.searchResults = []

30 self.searchString = ''

31 self.newSearch = False

32

33 if submitted:

34 # add/delete before we search so we don't show stale results

35 toAdd = form.get('add', [])

36 if toAdd:

37 if not self.canAddUsers:

38 raise Forbidden

39

40 for u in toAdd:

41 self.gtool.addPrincipalToGroup(u, self.groupname, self.request)

42 self.context.plone_utils.addPortalMessage(_(u'Changes made.'))

43

44 toDelete = form.get('delete', [])

45 if toDelete:

46 for u in toDelete:

47 self.gtool.removePrincipalFromGroup(u, self.groupname, self.request)

48 self.context.plone_utils.addPortalMessage(_(u'Changes made.'))

49

50 search = form.get('form.button.Search', None) is not None

51 edit = form.get('form.button.Edit', None) is not None and toDelete

52 add = form.get('form.button.Add', None) is not None and toAdd

53 findAll = form.get('form.button.FindAll', None) is not None and \

54 not self.many_users

55 # The search string should be cleared when one of the

56 # non-search buttons has been clicked.

57 if findAll or edit or add:

58 form['searchstring'] = ''

59 self.searchString = form.get('searchstring', '')

60 if findAll or bool(self.searchString):

61 self.searchResults = self.getPotentialMembers(self.searchString)

62

63 if search or findAll:

64 self.newSearch = True

65

66 self.groupMembers = self.getMembers()

67

68 def __call__(self):

69 self.update()

70 return self.index()

71

72 def isGroup(self, itemName):

73 return self.gtool.isGroup(itemName)

74

75 def getMembers(self):

76 searchResults = self.gtool.getGroupMembers(self.groupname)

77

78 groupResults = [self.gtool.getGroupById(m) for m in searchResults]

79 groupResults.sort(key=lambda x: x is not None and normalizeString(x.getGroupTitleOrName()))

80

81 userResults = [self.mtool.getMemberById(m) for m in searchResults]

82 userResults.sort(key=lambda x: x is not None and x.getProperty('fullname') is not None and normalizeString(x.getProperty('fullname')) or '')

83

84 mergedResults = groupResults + userResults

85 return filter(None, mergedResults)

86

87 def getPotentialMembers(self, searchString):

88 ignoredUsersGroups = [x.id for x in self.getMembers() + [self.group,] if x is not None]

89 return self.membershipSearch(searchString, ignore=ignoredUsersGroups)

90

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py b/Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py

--- a/Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py

+++ b/Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py

@@ -13,6 +13,9 @@

self.gtool = getToolByName(self, 'portal_groups')

self.mtool = getToolByName(self, 'portal_membership')

self.group = self.gtool.getGroupById(self.groupname)

+ if self.group is None:

+ return

+

self.grouptitle = self.group.getGroupTitleOrName() or self.groupname

self.request.set('grouproles', self.group.getRoles() if self.group else [])

| {"golden_diff": "diff --git a/Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py b/Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py\n--- a/Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py\n+++ b/Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py\n@@ -13,6 +13,9 @@\n self.gtool = getToolByName(self, 'portal_groups')\n self.mtool = getToolByName(self, 'portal_membership')\n self.group = self.gtool.getGroupById(self.groupname)\n+ if self.group is None:\n+ return\n+\n self.grouptitle = self.group.getGroupTitleOrName() or self.groupname\n \n self.request.set('grouproles', self.group.getRoles() if self.group else [])\n", "issue": "Group administration: AttributeError: 'NoneType' object has no attribute 'getGroupTitleOrName'\n/Plone2/@@usergroup-groupmembership?groupname=None\n\ngives me\n\nHere is the full error message:\n\nDisplay traceback as text\n\nTraceback (innermost last):\n\nModule ZPublisher.Publish, line 138, in publish\nModule ZPublisher.mapply, line 77, in mapply\nModule ZPublisher.Publish, line 48, in call_object\nModule Products.CMFPlone.controlpanel.browser.usergroups_groupmembership, line 69, in **call**\nModule Products.CMFPlone.controlpanel.browser.usergroups_groupmembership, line 16, in update\nAttributeError: 'NoneType' object has no attribute 'getGroupTitleOrName'\n\nThis happens when you click on \"new group\" and then on the \"group members\" tab.\n\n", "before_files": [{"content": "from Products.CMFPlone import PloneMessageFactory as _\nfrom zExceptions import Forbidden\nfrom Products.CMFCore.utils import getToolByName\nfrom Products.CMFPlone.controlpanel.browser.usergroups import \\\n UsersGroupsControlPanelView\nfrom Products.CMFPlone.utils import normalizeString\n\n\nclass GroupMembershipControlPanel(UsersGroupsControlPanelView):\n\n def update(self):\n self.groupname = getattr(self.request, 'groupname')\n self.gtool = getToolByName(self, 'portal_groups')\n self.mtool 
= getToolByName(self, 'portal_membership')\n self.group = self.gtool.getGroupById(self.groupname)\n self.grouptitle = self.group.getGroupTitleOrName() or self.groupname\n\n self.request.set('grouproles', self.group.getRoles() if self.group else [])\n self.canAddUsers = True\n if 'Manager' in self.request.get('grouproles') and not self.is_zope_manager:\n self.canAddUsers = False\n\n self.groupquery = self.makeQuery(groupname=self.groupname)\n self.groupkeyquery = self.makeQuery(key=self.groupname)\n\n form = self.request.form\n submitted = form.get('form.submitted', False)\n\n self.searchResults = []\n self.searchString = ''\n self.newSearch = False\n\n if submitted:\n # add/delete before we search so we don't show stale results\n toAdd = form.get('add', [])\n if toAdd:\n if not self.canAddUsers:\n raise Forbidden\n\n for u in toAdd:\n self.gtool.addPrincipalToGroup(u, self.groupname, self.request)\n self.context.plone_utils.addPortalMessage(_(u'Changes made.'))\n\n toDelete = form.get('delete', [])\n if toDelete:\n for u in toDelete:\n self.gtool.removePrincipalFromGroup(u, self.groupname, self.request)\n self.context.plone_utils.addPortalMessage(_(u'Changes made.'))\n\n search = form.get('form.button.Search', None) is not None\n edit = form.get('form.button.Edit', None) is not None and toDelete\n add = form.get('form.button.Add', None) is not None and toAdd\n findAll = form.get('form.button.FindAll', None) is not None and \\\n not self.many_users\n # The search string should be cleared when one of the\n # non-search buttons has been clicked.\n if findAll or edit or add:\n form['searchstring'] = ''\n self.searchString = form.get('searchstring', '')\n if findAll or bool(self.searchString):\n self.searchResults = self.getPotentialMembers(self.searchString)\n\n if search or findAll:\n self.newSearch = True\n\n self.groupMembers = self.getMembers()\n\n def __call__(self):\n self.update()\n return self.index()\n\n def isGroup(self, itemName):\n return 
self.gtool.isGroup(itemName)\n\n def getMembers(self):\n searchResults = self.gtool.getGroupMembers(self.groupname)\n\n groupResults = [self.gtool.getGroupById(m) for m in searchResults]\n groupResults.sort(key=lambda x: x is not None and normalizeString(x.getGroupTitleOrName()))\n\n userResults = [self.mtool.getMemberById(m) for m in searchResults]\n userResults.sort(key=lambda x: x is not None and x.getProperty('fullname') is not None and normalizeString(x.getProperty('fullname')) or '')\n\n mergedResults = groupResults + userResults\n return filter(None, mergedResults)\n\n def getPotentialMembers(self, searchString):\n ignoredUsersGroups = [x.id for x in self.getMembers() + [self.group,] if x is not None]\n return self.membershipSearch(searchString, ignore=ignoredUsersGroups)\n", "path": "Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py"}], "after_files": [{"content": "from Products.CMFPlone import PloneMessageFactory as _\nfrom zExceptions import Forbidden\nfrom Products.CMFCore.utils import getToolByName\nfrom Products.CMFPlone.controlpanel.browser.usergroups import \\\n UsersGroupsControlPanelView\nfrom Products.CMFPlone.utils import normalizeString\n\n\nclass GroupMembershipControlPanel(UsersGroupsControlPanelView):\n\n def update(self):\n self.groupname = getattr(self.request, 'groupname')\n self.gtool = getToolByName(self, 'portal_groups')\n self.mtool = getToolByName(self, 'portal_membership')\n self.group = self.gtool.getGroupById(self.groupname)\n if self.group is None:\n return\n\n self.grouptitle = self.group.getGroupTitleOrName() or self.groupname\n\n self.request.set('grouproles', self.group.getRoles() if self.group else [])\n self.canAddUsers = True\n if 'Manager' in self.request.get('grouproles') and not self.is_zope_manager:\n self.canAddUsers = False\n\n self.groupquery = self.makeQuery(groupname=self.groupname)\n self.groupkeyquery = self.makeQuery(key=self.groupname)\n\n form = self.request.form\n submitted = 
form.get('form.submitted', False)\n\n self.searchResults = []\n self.searchString = ''\n self.newSearch = False\n\n if submitted:\n # add/delete before we search so we don't show stale results\n toAdd = form.get('add', [])\n if toAdd:\n if not self.canAddUsers:\n raise Forbidden\n\n for u in toAdd:\n self.gtool.addPrincipalToGroup(u, self.groupname, self.request)\n self.context.plone_utils.addPortalMessage(_(u'Changes made.'))\n\n toDelete = form.get('delete', [])\n if toDelete:\n for u in toDelete:\n self.gtool.removePrincipalFromGroup(u, self.groupname, self.request)\n self.context.plone_utils.addPortalMessage(_(u'Changes made.'))\n\n search = form.get('form.button.Search', None) is not None\n edit = form.get('form.button.Edit', None) is not None and toDelete\n add = form.get('form.button.Add', None) is not None and toAdd\n findAll = form.get('form.button.FindAll', None) is not None and \\\n not self.many_users\n # The search string should be cleared when one of the\n # non-search buttons has been clicked.\n if findAll or edit or add:\n form['searchstring'] = ''\n self.searchString = form.get('searchstring', '')\n if findAll or bool(self.searchString):\n self.searchResults = self.getPotentialMembers(self.searchString)\n\n if search or findAll:\n self.newSearch = True\n\n self.groupMembers = self.getMembers()\n\n def __call__(self):\n self.update()\n return self.index()\n\n def isGroup(self, itemName):\n return self.gtool.isGroup(itemName)\n\n def getMembers(self):\n searchResults = self.gtool.getGroupMembers(self.groupname)\n\n groupResults = [self.gtool.getGroupById(m) for m in searchResults]\n groupResults.sort(key=lambda x: x is not None and normalizeString(x.getGroupTitleOrName()))\n\n userResults = [self.mtool.getMemberById(m) for m in searchResults]\n userResults.sort(key=lambda x: x is not None and x.getProperty('fullname') is not None and normalizeString(x.getProperty('fullname')) or '')\n\n mergedResults = groupResults + userResults\n return filter(None, 
mergedResults)\n\n def getPotentialMembers(self, searchString):\n ignoredUsersGroups = [x.id for x in self.getMembers() + [self.group,] if x is not None]\n return self.membershipSearch(searchString, ignore=ignoredUsersGroups)\n", "path": "Products/CMFPlone/controlpanel/browser/usergroups_groupmembership.py"}]} | 1,451 | 179 |

gh_patches_debug_17529 | rasdani/github-patches | git_diff | talonhub__community-324 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Vocabulary functions do not strip whitespace from CSV

I had the misfortune the last few days to believe that the vocabulary csv files were not operating correctly.

Apparently whitespace is not stripped around fields. So "test, test2" leads to a row which is parsed, but not turned into a functioning command.

--- END ISSUE ---
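
The whitespace behavior reported in the issue can be seen with the standard `csv` module alone. The sketch below is independent of Talon and of the repository's loader; the variable names are illustrative, and it shows two possible ways of handling the stray space without claiming either is the project's chosen fix.

```python
import csv
import io

raw = "test, test2\n"

# By default csv.reader keeps the space that follows the delimiter.
rows = list(csv.reader(io.StringIO(raw)))

# Option 1: strip each field after parsing.
stripped = [[field.strip() for field in row] for row in rows]

# Option 2: let the reader drop spaces that immediately follow a delimiter.
lenient = list(csv.reader(io.StringIO(raw), skipinitialspace=True))

print(rows)
print(stripped)
print(lenient)
```

Note that `skipinitialspace` only affects spaces directly after a delimiter, so trailing whitespace in a field would still need an explicit `strip()`.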

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `code/user_settings.py`

Content:

```

1 from talon import Module, fs, Context

2 import os

3 import csv

4 from pathlib import Path

5 from typing import Dict, List, Tuple

6 import threading

7

8

9 # NOTE: This method requires this module to be one folder below the top-level

10 # knausj folder.

11 SETTINGS_DIR = Path(__file__).parents[1] / "settings"

12

13 if not SETTINGS_DIR.is_dir():

14 os.mkdir(SETTINGS_DIR)

15

16 mod = Module()

17 ctx = Context()

18

19

20 def _load_csv_dict(

21 file_name: str, headers=Tuple[str, str], default: Dict[str, str] = {}

22 ) -> Dict[str, str]:

23 """Load a word mapping from a CSV file. If it doesn't exist, create it."""

24 assert file_name.endswith(".csv")

25 path = SETTINGS_DIR / file_name

26

27 # Create the file if it doesn't exist

28 if not SETTINGS_DIR.is_dir():

29 os.mkdir(SETTINGS_DIR)

30 if not path.is_file():

31 with open(path, "w", encoding="utf-8") as file:

32 writer = csv.writer(file)

33 writer.writerow(headers)

34 for key, value in default.items():

35 writer.writerow([key] if key == value else [value, key])

36

37 # Now read from disk

38 with open(path, "r", encoding="utf-8") as file:

39 rows = list(csv.reader(file))

40

41 mapping = {}

42 if len(rows) >= 2:

43 actual_headers = rows[0]

44 if not actual_headers == list(headers):

45 print(

46 f'"{file_name}": Malformed headers - {actual_headers}.'

47 + f" Should be {list(headers)}. Ignoring row."

48 )

49 for row in rows[1:]:

50 if len(row) == 0:

51 # Windows newlines are sometimes read as empty rows. :champagne:

52 continue

53 if len(row) == 1:

54 mapping[row[0]] = row[0]

55 else:

56 mapping[row[1]] = row[0]

57 if len(row) > 2:

58 print(

59 f'"{file_name}": More than two values in row: {row}.'

60 + " Ignoring the extras."

61 )

62 return mapping

63

64

65 _mapped_lists = {}

66 _settings_lock = threading.Lock()

67 _word_map_params = None

68

69

70 def _update_list(list_name: str, *csv_params):

71 """Update list with `list_name` from a csv on disk.

72

73 `csv_params` will be passed to `_load_csv_dict`.

74

75 """

76 global ctx

77 ctx.lists[list_name] = _load_csv_dict(*csv_params)

78

79

80 def _update_word_map(*csv_params):

81 """Update `dictate.word_map` from disk.

82

83 `csv_params` will be passed to `_load_csv_dict`.

84

85 """

86 global ctx

87 ctx.settings["dictate.word_map"] = _load_csv_dict(*csv_params)

88

89

90 def _update_lists(*_):

91 """Update all CSV lists from disk."""

92 print("Updating CSV lists...")

93 with _settings_lock:

94 for list_name, csv_params in _mapped_lists.items():

95 try:

96 _update_list(list_name, *csv_params)

97 except Exception as e:

98 print(f'Error loading list "{list_name}": {e}')

99 # Special case - `dictate.word_map` isn't a list.

100 if _word_map_params:

101 try:

102 _update_word_map(*_word_map_params)

103 except Exception as e:

104 print(f'Error updating "dictate.word_map": {e}')

105

106

107 def bind_list_to_csv(

108 list_name: str,

109 csv_name: str,

110 csv_headers: Tuple[str, str],

111 default_values: Dict[str, str] = {},

112 ) -> None:

113 """Register a Talon list that should be updated from a CSV on disk.

114

115 The CSV file will be created automatically in the "settings" dir if it

116 doesn't exist. This directory can be tracked independently to

117 `knausj_talon`, allowing the user to specify things like private vocab

118 separately.

119

120 Note the list must be declared separately.

121

122 """

123 global _mapped_lists

124 with _settings_lock:

125 _update_list(list_name, csv_name, csv_headers, default_values)

126 # If there were no errors, we can register it permanently.

127 _mapped_lists[list_name] = (csv_name, csv_headers, default_values)

128

129

130 def bind_word_map_to_csv(

131 csv_name: str, csv_headers: Tuple[str, str], default_values: Dict[str, str] = {}

132 ) -> None:

133 """Like `bind_list_to_csv`, but for the `dictate.word_map` setting.

134

135 Since it is a setting, not a list, it has to be handled separately.

136

137 """

138 global _word_map_params

139 # TODO: Maybe a generic system for binding the dicts to settings? Only

140 # implement if it's needed.

141 with _settings_lock:

142 _update_word_map(csv_name, csv_headers, default_values)

143 # If there were no errors, we can register it permanently.

144 _word_map_params = (csv_name, csv_headers, default_values)

145

146

147 fs.watch(str(SETTINGS_DIR), _update_lists)

148

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/code/user_settings.py b/code/user_settings.py

--- a/code/user_settings.py

+++ b/code/user_settings.py

@@ -51,14 +51,17 @@

# Windows newlines are sometimes read as empty rows. :champagne:

continue

if len(row) == 1:

- mapping[row[0]] = row[0]

+ output = spoken_form = row[0]

else:

- mapping[row[1]] = row[0]

+ output, spoken_form = row[:2]

if len(row) > 2:

print(

f'"{file_name}": More than two values in row: {row}.'

+ " Ignoring the extras."

)

+ # Leading/trailing whitespace in spoken form can prevent recognition.

+ spoken_form = spoken_form.strip()

+ mapping[spoken_form] = output

return mapping

| {"golden_diff": "diff --git a/code/user_settings.py b/code/user_settings.py\n--- a/code/user_settings.py\n+++ b/code/user_settings.py\n@@ -51,14 +51,17 @@\n # Windows newlines are sometimes read as empty rows. :champagne:\n continue\n if len(row) == 1:\n- mapping[row[0]] = row[0]\n+ output = spoken_form = row[0]\n else:\n- mapping[row[1]] = row[0]\n+ output, spoken_form = row[:2]\n if len(row) > 2:\n print(\n f'\"{file_name}\": More than two values in row: {row}.'\n + \" Ignoring the extras.\"\n )\n+ # Leading/trailing whitespace in spoken form can prevent recognition.\n+ spoken_form = spoken_form.strip()\n+ mapping[spoken_form] = output\n return mapping\n", "issue": "Vocabulary functions do not strip whitespace from CSV\nI had the misfortune the last few days to believe that the vocabulary csv files were not operating correctly.\r\n\r\nApparently whitespace is not stripped around fields. So \"test, test2\" leads a row which is parsed, but not turned into a functioning command.\n", "before_files": [{"content": "from talon import Module, fs, Context\nimport os\nimport csv\nfrom pathlib import Path\nfrom typing import Dict, List, Tuple\nimport threading\n\n\n# NOTE: This method requires this module to be one folder below the top-level\n# knausj folder.\nSETTINGS_DIR = Path(__file__).parents[1] / \"settings\"\n\nif not SETTINGS_DIR.is_dir():\n os.mkdir(SETTINGS_DIR)\n\nmod = Module()\nctx = Context()\n\n\ndef _load_csv_dict(\n file_name: str, headers=Tuple[str, str], default: Dict[str, str] = {}\n) -> Dict[str, str]:\n \"\"\"Load a word mapping from a CSV file. 
If it doesn't exist, create it.\"\"\"\n assert file_name.endswith(\".csv\")\n path = SETTINGS_DIR / file_name\n\n # Create the file if it doesn't exist\n if not SETTINGS_DIR.is_dir():\n os.mkdir(SETTINGS_DIR)\n if not path.is_file():\n with open(path, \"w\", encoding=\"utf-8\") as file:\n writer = csv.writer(file)\n writer.writerow(headers)\n for key, value in default.items():\n writer.writerow([key] if key == value else [value, key])\n\n # Now read from disk\n with open(path, \"r\", encoding=\"utf-8\") as file:\n rows = list(csv.reader(file))\n\n mapping = {}\n if len(rows) >= 2:\n actual_headers = rows[0]\n if not actual_headers == list(headers):\n print(\n f'\"{file_name}\": Malformed headers - {actual_headers}.'\n + f\" Should be {list(headers)}. Ignoring row.\"\n )\n for row in rows[1:]:\n if len(row) == 0:\n # Windows newlines are sometimes read as empty rows. :champagne:\n continue\n if len(row) == 1:\n mapping[row[0]] = row[0]\n else:\n mapping[row[1]] = row[0]\n if len(row) > 2:\n print(\n f'\"{file_name}\": More than two values in row: {row}.'\n + \" Ignoring the extras.\"\n )\n return mapping\n\n\n_mapped_lists = {}\n_settings_lock = threading.Lock()\n_word_map_params = None\n\n\ndef _update_list(list_name: str, *csv_params):\n \"\"\"Update list with `list_name` from a csv on disk.\n\n `csv_params` will be passed to `_load_csv_dict`.\n\n \"\"\"\n global ctx\n ctx.lists[list_name] = _load_csv_dict(*csv_params)\n\n\ndef _update_word_map(*csv_params):\n \"\"\"Update `dictate.word_map` from disk.\n\n `csv_params` will be passed to `_load_csv_dict`.\n\n \"\"\"\n global ctx\n ctx.settings[\"dictate.word_map\"] = _load_csv_dict(*csv_params)\n\n\ndef _update_lists(*_):\n \"\"\"Update all CSV lists from disk.\"\"\"\n print(\"Updating CSV lists...\")\n with _settings_lock:\n for list_name, csv_params in _mapped_lists.items():\n try:\n _update_list(list_name, *csv_params)\n except Exception as e:\n print(f'Error loading list \"{list_name}\": {e}')\n # Special case 
- `dictate.word_map` isn't a list.\n if _word_map_params:\n try:\n _update_word_map(*_word_map_params)\n except Exception as e:\n print(f'Error updating \"dictate.word_map\": {e}')\n\n\ndef bind_list_to_csv(\n list_name: str,\n csv_name: str,\n csv_headers: Tuple[str, str],\n default_values: Dict[str, str] = {},\n) -> None:\n \"\"\"Register a Talon list that should be updated from a CSV on disk.\n\n The CSV file will be created automatically in the \"settings\" dir if it\n doesn't exist. This directory can be tracked independently to\n `knausj_talon`, allowing the user to specify things like private vocab\n separately.\n\n Note the list must be declared separately.\n\n \"\"\"\n global _mapped_lists\n with _settings_lock:\n _update_list(list_name, csv_name, csv_headers, default_values)\n # If there were no errors, we can register it permanently.\n _mapped_lists[list_name] = (csv_name, csv_headers, default_values)\n\n\ndef bind_word_map_to_csv(\n csv_name: str, csv_headers: Tuple[str, str], default_values: Dict[str, str] = {}\n) -> None:\n \"\"\"Like `bind_list_to_csv`, but for the `dictate.word_map` setting.\n\n Since it is a setting, not a list, it has to be handled separately.\n\n \"\"\"\n global _word_map_params\n # TODO: Maybe a generic system for binding the dicts to settings? 
Only\n # implement if it's needed.\n with _settings_lock:\n _update_word_map(csv_name, csv_headers, default_values)\n # If there were no errors, we can register it permanently.\n _word_map_params = (csv_name, csv_headers, default_values)\n\n\nfs.watch(str(SETTINGS_DIR), _update_lists)\n", "path": "code/user_settings.py"}], "after_files": [{"content": "from talon import Module, fs, Context\nimport os\nimport csv\nfrom pathlib import Path\nfrom typing import Dict, List, Tuple\nimport threading\n\n\n# NOTE: This method requires this module to be one folder below the top-level\n# knausj folder.\nSETTINGS_DIR = Path(__file__).parents[1] / \"settings\"\n\nif not SETTINGS_DIR.is_dir():\n os.mkdir(SETTINGS_DIR)\n\nmod = Module()\nctx = Context()\n\n\ndef _load_csv_dict(\n file_name: str, headers=Tuple[str, str], default: Dict[str, str] = {}\n) -> Dict[str, str]:\n \"\"\"Load a word mapping from a CSV file. If it doesn't exist, create it.\"\"\"\n assert file_name.endswith(\".csv\")\n path = SETTINGS_DIR / file_name\n\n # Create the file if it doesn't exist\n if not SETTINGS_DIR.is_dir():\n os.mkdir(SETTINGS_DIR)\n if not path.is_file():\n with open(path, \"w\", encoding=\"utf-8\") as file:\n writer = csv.writer(file)\n writer.writerow(headers)\n for key, value in default.items():\n writer.writerow([key] if key == value else [value, key])\n\n # Now read from disk\n with open(path, \"r\", encoding=\"utf-8\") as file:\n rows = list(csv.reader(file))\n\n mapping = {}\n if len(rows) >= 2:\n actual_headers = rows[0]\n if not actual_headers == list(headers):\n print(\n f'\"{file_name}\": Malformed headers - {actual_headers}.'\n + f\" Should be {list(headers)}. Ignoring row.\"\n )\n for row in rows[1:]:\n if len(row) == 0:\n # Windows newlines are sometimes read as empty rows. 
:champagne:\n continue\n if len(row) == 1:\n output = spoken_form = row[0]\n else:\n output, spoken_form = row[:2]\n if len(row) > 2:\n print(\n f'\"{file_name}\": More than two values in row: {row}.'\n + \" Ignoring the extras.\"\n )\n # Leading/trailing whitespace in spoken form can prevent recognition.\n spoken_form = spoken_form.strip()\n mapping[spoken_form] = output\n return mapping\n\n\n_mapped_lists = {}\n_settings_lock = threading.Lock()\n_word_map_params = None\n\n\ndef _update_list(list_name: str, *csv_params):\n \"\"\"Update list with `list_name` from a csv on disk.\n\n `csv_params` will be passed to `_load_csv_dict`.\n\n \"\"\"\n global ctx\n ctx.lists[list_name] = _load_csv_dict(*csv_params)\n\n\ndef _update_word_map(*csv_params):\n \"\"\"Update `dictate.word_map` from disk.\n\n `csv_params` will be passed to `_load_csv_dict`.\n\n \"\"\"\n global ctx\n ctx.settings[\"dictate.word_map\"] = _load_csv_dict(*csv_params)\n\n\ndef _update_lists(*_):\n \"\"\"Update all CSV lists from disk.\"\"\"\n print(\"Updating CSV lists...\")\n with _settings_lock:\n for list_name, csv_params in _mapped_lists.items():\n try:\n _update_list(list_name, *csv_params)\n except Exception as e:\n print(f'Error loading list \"{list_name}\": {e}')\n # Special case - `dictate.word_map` isn't a list.\n if _word_map_params:\n try:\n _update_word_map(*_word_map_params)\n except Exception as e:\n print(f'Error updating \"dictate.word_map\": {e}')\n\n\ndef bind_list_to_csv(\n list_name: str,\n csv_name: str,\n csv_headers: Tuple[str, str],\n default_values: Dict[str, str] = {},\n) -> None:\n \"\"\"Register a Talon list that should be updated from a CSV on disk.\n\n The CSV file will be created automatically in the \"settings\" dir if it\n doesn't exist. 
This directory can be tracked independently to\n `knausj_talon`, allowing the user to specify things like private vocab\n separately.\n\n Note the list must be declared separately.\n\n \"\"\"\n global _mapped_lists\n with _settings_lock:\n _update_list(list_name, csv_name, csv_headers, default_values)\n # If there were no errors, we can register it permanently.\n _mapped_lists[list_name] = (csv_name, csv_headers, default_values)\n\n\ndef bind_word_map_to_csv(\n csv_name: str, csv_headers: Tuple[str, str], default_values: Dict[str, str] = {}\n) -> None:\n \"\"\"Like `bind_list_to_csv`, but for the `dictate.word_map` setting.\n\n Since it is a setting, not a list, it has to be handled separately.\n\n \"\"\"\n global _word_map_params\n # TODO: Maybe a generic system for binding the dicts to settings? Only\n # implement if it's needed.\n with _settings_lock:\n _update_word_map(csv_name, csv_headers, default_values)\n # If there were no errors, we can register it permanently.\n _word_map_params = (csv_name, csv_headers, default_values)\n\n\nfs.watch(str(SETTINGS_DIR), _update_lists)\n", "path": "code/user_settings.py"}]} | 1,781 | 198 |

gh_patches_debug_40475 | rasdani/github-patches | git_diff | aio-libs-abandoned__aioredis-py-846 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

`HashCommandsMixin.hset()` doesn't support multiple field=value pairs

> As of Redis 4.0.0, HSET is variadic and allows for multiple field/value pairs.

https://redis.io/commands/hset

And also info about HMSET usage:

> As per Redis 4.0.0, HMSET is considered deprecated. Please use HSET in new code.

https://redis.io/commands/hmset

--- END ISSUE ---
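
To make the request concrete, here is a minimal sketch of how the arguments for a variadic `HSET` call could be assembled. `build_hset_command` is a hypothetical helper written for illustration; it is not part of aioredis, and the `mapping` keyword is an assumption rather than the library's confirmed signature.

```python
def build_hset_command(key, *pairs, mapping=None):
    """Assemble the argument tuple for a variadic HSET (hypothetical helper).

    `pairs` is a flat sequence field1, value1, field2, value2, ...
    `mapping` is an optional dict of additional field/value pairs.
    """
    if len(pairs) % 2 != 0:
        raise TypeError("length of pairs must be even number")
    args = list(pairs)
    if mapping:
        for field, value in mapping.items():
            args.extend((field, value))
    if not args:
        raise TypeError("at least one field/value pair is required")
    return (b"HSET", key, *args)


print(build_hset_command("user:1", "name", "Ada", "lang", "en"))
print(build_hset_command("user:1", mapping={"name": "Ada"}))
```

A real implementation would pass the assembled arguments to the client's `execute` method; that step is left out here because it depends on the connection object.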

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `aioredis/commands/hash.py`

Content:

```

1 from itertools import chain

2

3 from aioredis.util import (

4 wait_ok,

5 wait_convert,

6 wait_make_dict,

7 _NOTSET,

8 _ScanIter,

9 )

10

11

12 class HashCommandsMixin:

13 """Hash commands mixin.

14

15 For commands details see: http://redis.io/commands#hash

16 """

17

18 def hdel(self, key, field, *fields):

19 """Delete one or more hash fields."""

20 return self.execute(b"HDEL", key, field, *fields)

21

22 def hexists(self, key, field):

23 """Determine if hash field exists."""

24 fut = self.execute(b"HEXISTS", key, field)

25 return wait_convert(fut, bool)

26

27 def hget(self, key, field, *, encoding=_NOTSET):

28 """Get the value of a hash field."""

29 return self.execute(b"HGET", key, field, encoding=encoding)

30

31 def hgetall(self, key, *, encoding=_NOTSET):

32 """Get all the fields and values in a hash."""

33 fut = self.execute(b"HGETALL", key, encoding=encoding)

34 return wait_make_dict(fut)

35

36 def hincrby(self, key, field, increment=1):

37 """Increment the integer value of a hash field by the given number."""

38 return self.execute(b"HINCRBY", key, field, increment)

39

40 def hincrbyfloat(self, key, field, increment=1.0):

41 """Increment the float value of a hash field by the given number."""

42 fut = self.execute(b"HINCRBYFLOAT", key, field, increment)

43 return wait_convert(fut, float)

44

45 def hkeys(self, key, *, encoding=_NOTSET):

46 """Get all the fields in a hash."""

47 return self.execute(b"HKEYS", key, encoding=encoding)

48

49 def hlen(self, key):

50 """Get the number of fields in a hash."""

51 return self.execute(b"HLEN", key)

52

53 def hmget(self, key, field, *fields, encoding=_NOTSET):

54 """Get the values of all the given fields."""

55 return self.execute(b"HMGET", key, field, *fields, encoding=encoding)

56

57 def hmset(self, key, field, value, *pairs):

58 """Set multiple hash fields to multiple values."""

59 if len(pairs) % 2 != 0:

60 raise TypeError("length of pairs must be even number")

61 return wait_ok(self.execute(b"HMSET", key, field, value, *pairs))

62

63 def hmset_dict(self, key, *args, **kwargs):

64 """Set multiple hash fields to multiple values.

65

66 dict can be passed as first positional argument:

67

68 >>> await redis.hmset_dict(

69 ... 'key', {'field1': 'value1', 'field2': 'value2'})

70

71 or keyword arguments can be used:

72

73 >>> await redis.hmset_dict(

74 ... 'key', field1='value1', field2='value2')

75

76 or dict argument can be mixed with kwargs:

77

78 >>> await redis.hmset_dict(

79 ... 'key', {'field1': 'value1'}, field2='value2')

80

81 .. note:: ``dict`` and ``kwargs`` not get mixed into single dictionary,

82 if both specified and both have same key(s) -- ``kwargs`` will win:

83

84 >>> await redis.hmset_dict('key', {'foo': 'bar'}, foo='baz')

85 >>> await redis.hget('key', 'foo', encoding='utf-8')

86 'baz'

87

88 """

89 if not args and not kwargs:

90 raise TypeError("args or kwargs must be specified")

91 pairs = ()

92 if len(args) > 1:

93 raise TypeError("single positional argument allowed")

94 elif len(args) == 1:

95 if not isinstance(args[0], dict):

96 raise TypeError("args[0] must be dict")

97 elif not args[0] and not kwargs:

98 raise ValueError("args[0] is empty dict")

99 pairs = chain.from_iterable(args[0].items())

100 kwargs_pairs = chain.from_iterable(kwargs.items())

101 return wait_ok(self.execute(b"HMSET", key, *chain(pairs, kwargs_pairs)))

102

103 def hset(self, key, field, value):

104 """Set the string value of a hash field."""

105 return self.execute(b"HSET", key, field, value)

106

107 def hsetnx(self, key, field, value):

108 """Set the value of a hash field, only if the field does not exist."""

109 return self.execute(b"HSETNX", key, field, value)

110

111 def hvals(self, key, *, encoding=_NOTSET):

112 """Get all the values in a hash."""

113 return self.execute(b"HVALS", key, encoding=encoding)

114

115 def hscan(self, key, cursor=0, match=None, count=None):

116 """Incrementally iterate hash fields and associated values."""

117 args = [key, cursor]

118 match is not None and args.extend([b"MATCH", match])

119 count is not None and args.extend([b"COUNT", count])

120 fut = self.execute(b"HSCAN", *args)

121 return wait_convert(fut, _make_pairs)

122

123 def ihscan(self, key, *, match=None, count=None):

124 """Incrementally iterate sorted set items using async for.

125

126 Usage example:

127

128 >>> async for name, val in redis.ihscan(key, match='something*'):

129 ... print('Matched:', name, '->', val)

130

131 """

132 return _ScanIter(lambda cur: self.hscan(key, cur, match=match, count=count))

133

134 def hstrlen(self, key, field):

135 """Get the length of the value of a hash field."""

136 return self.execute(b"HSTRLEN", key, field)

137

138

139 def _make_pairs(obj):

140 it = iter(obj[1])

141 return (int(obj[0]), list(zip(it, it)))

142

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/aioredis/commands/hash.py b/aioredis/commands/hash.py

--- a/aioredis/commands/hash.py

+++ b/aioredis/commands/hash.py

@@ -1,3 +1,4 @@

+import warnings

from itertools import chain

from aioredis.util import (

@@ -55,7 +56,17 @@

return self.execute(b"HMGET", key, field, *fields, encoding=encoding)

def hmset(self, key, field, value, *pairs):

- """Set multiple hash fields to multiple values."""

+ """Set multiple hash fields to multiple values.

+

+ .. deprecated::

+ HMSET is deprecated since redis 4.0.0, use HSET instead.

+

+ """

+ warnings.warn(

+ "%s.hmset() is deprecated since redis 4.0.0, use %s.hset() instead"

+ % (self.__class__.__name__, self.__class__.__name__),

+ DeprecationWarning

+ )

if len(pairs) % 2 != 0:

raise TypeError("length of pairs must be even number")

return wait_ok(self.execute(b"HMSET", key, field, value, *pairs))

@@ -63,6 +74,9 @@

def hmset_dict(self, key, *args, **kwargs):

"""Set multiple hash fields to multiple values.

+ .. deprecated::

+ HMSET is deprecated since redis 4.0.0, use HSET instead.

+

dict can be passed as first positional argument:

>>> await redis.hmset_dict(

@@ -86,6 +100,12 @@

'baz'

"""

+ warnings.warn(

+ "%s.hmset() is deprecated since redis 4.0.0, use %s.hset() instead"

+ % (self.__class__.__name__, self.__class__.__name__),

+ DeprecationWarning

+ )

+

if not args and not kwargs:

raise TypeError("args or kwargs must be specified")

pairs = ()

@@ -100,9 +120,31 @@

kwargs_pairs = chain.from_iterable(kwargs.items())

return wait_ok(self.execute(b"HMSET", key, *chain(pairs, kwargs_pairs)))

- def hset(self, key, field, value):

- """Set the string value of a hash field."""

- return self.execute(b"HSET", key, field, value)

+ def hset(self, key, field=None, value=None, mapping=None):

+ """Set multiple hash fields to multiple values.

+

+ Setting a single hash field to a value:

+ >>> await redis.hset('key', 'some_field', 'some_value')

+

+ Setting values for multipe has fields at once:

+ >>> await redis.hset('key', mapping={'field1': 'abc', 'field2': 'def'})

+

+ .. note:: Using both the field/value pair and mapping at the same time

+ will also work.

+

+ """

+ if not field and not mapping:

+ raise ValueError("hset needs either a field/value pair or mapping")

+ if mapping and not isinstance(mapping, dict):

+ raise TypeError("'mapping' should be dict")

+

+ items = []

+ if field:

+ items.extend((field, value))

+ if mapping:

+ for item in mapping.items():

+ items.extend(item)

+ return self.execute(b"HSET", key, *items)

def hsetnx(self, key, field, value):

"""Set the value of a hash field, only if the field does not exist."""

| {"golden_diff": "diff --git a/aioredis/commands/hash.py b/aioredis/commands/hash.py\n--- a/aioredis/commands/hash.py\n+++ b/aioredis/commands/hash.py\n@@ -1,3 +1,4 @@\n+import warnings\n from itertools import chain\n \n from aioredis.util import (\n@@ -55,7 +56,17 @@\n return self.execute(b\"HMGET\", key, field, *fields, encoding=encoding)\n \n def hmset(self, key, field, value, *pairs):\n- \"\"\"Set multiple hash fields to multiple values.\"\"\"\n+ \"\"\"Set multiple hash fields to multiple values.\n+\n+ .. deprecated::\n+ HMSET is deprecated since redis 4.0.0, use HSET instead.\n+\n+ \"\"\"\n+ warnings.warn(\n+ \"%s.hmset() is deprecated since redis 4.0.0, use %s.hset() instead\"\n+ % (self.__class__.__name__, self.__class__.__name__),\n+ DeprecationWarning\n+ )\n if len(pairs) % 2 != 0:\n raise TypeError(\"length of pairs must be even number\")\n return wait_ok(self.execute(b\"HMSET\", key, field, value, *pairs))\n@@ -63,6 +74,9 @@\n def hmset_dict(self, key, *args, **kwargs):\n \"\"\"Set multiple hash fields to multiple values.\n \n+ .. 
deprecated::\n+ HMSET is deprecated since redis 4.0.0, use HSET instead.\n+\n dict can be passed as first positional argument:\n \n >>> await redis.hmset_dict(\n@@ -86,6 +100,12 @@\n 'baz'\n \n \"\"\"\n+ warnings.warn(\n+ \"%s.hmset() is deprecated since redis 4.0.0, use %s.hset() instead\"\n+ % (self.__class__.__name__, self.__class__.__name__),\n+ DeprecationWarning\n+ )\n+\n if not args and not kwargs:\n raise TypeError(\"args or kwargs must be specified\")\n pairs = ()\n@@ -100,9 +120,31 @@\n kwargs_pairs = chain.from_iterable(kwargs.items())\n return wait_ok(self.execute(b\"HMSET\", key, *chain(pairs, kwargs_pairs)))\n \n- def hset(self, key, field, value):\n- \"\"\"Set the string value of a hash field.\"\"\"\n- return self.execute(b\"HSET\", key, field, value)\n+ def hset(self, key, field=None, value=None, mapping=None):\n+ \"\"\"Set multiple hash fields to multiple values.\n+\n+ Setting a single hash field to a value:\n+ >>> await redis.hset('key', 'some_field', 'some_value')\n+\n+ Setting values for multipe has fields at once:\n+ >>> await redis.hset('key', mapping={'field1': 'abc', 'field2': 'def'})\n+\n+ .. 
note:: Using both the field/value pair and mapping at the same time\n+ will also work.\n+\n+ \"\"\"\n+ if not field and not mapping:\n+ raise ValueError(\"hset needs either a field/value pair or mapping\")\n+ if mapping and not isinstance(mapping, dict):\n+ raise TypeError(\"'mapping' should be dict\")\n+\n+ items = []\n+ if field:\n+ items.extend((field, value))\n+ if mapping:\n+ for item in mapping.items():\n+ items.extend(item)\n+ return self.execute(b\"HSET\", key, *items)\n \n def hsetnx(self, key, field, value):\n \"\"\"Set the value of a hash field, only if the field does not exist.\"\"\"\n", "issue": "`HashCommandsMixin.hset()` doesn't support multiple field=value pairs\n> As of Redis 4.0.0, HSET is variadic and allows for multiple field/value pairs.\r\n\r\nhttps://redis.io/commands/hset\r\n\r\nAnd also info about HMSET usage:\r\n> As per Redis 4.0.0, HMSET is considered deprecated. Please use HSET in new code.\r\n\r\nhttps://redis.io/commands/hmset\n", "before_files": [{"content": "from itertools import chain\n\nfrom aioredis.util import (\n wait_ok,\n wait_convert,\n wait_make_dict,\n _NOTSET,\n _ScanIter,\n)\n\n\nclass HashCommandsMixin:\n \"\"\"Hash commands mixin.\n\n For commands details see: http://redis.io/commands#hash\n \"\"\"\n\n def hdel(self, key, field, *fields):\n \"\"\"Delete one or more hash fields.\"\"\"\n return self.execute(b\"HDEL\", key, field, *fields)\n\n def hexists(self, key, field):\n \"\"\"Determine if hash field exists.\"\"\"\n fut = self.execute(b\"HEXISTS\", key, field)\n return wait_convert(fut, bool)\n\n def hget(self, key, field, *, encoding=_NOTSET):\n \"\"\"Get the value of a hash field.\"\"\"\n return self.execute(b\"HGET\", key, field, encoding=encoding)\n\n def hgetall(self, key, *, encoding=_NOTSET):\n \"\"\"Get all the fields and values in a hash.\"\"\"\n fut = self.execute(b\"HGETALL\", key, encoding=encoding)\n return wait_make_dict(fut)\n\n def hincrby(self, key, field, increment=1):\n \"\"\"Increment the integer 
value of a hash field by the given number.\"\"\"\n return self.execute(b\"HINCRBY\", key, field, increment)\n\n def hincrbyfloat(self, key, field, increment=1.0):\n \"\"\"Increment the float value of a hash field by the given number.\"\"\"\n fut = self.execute(b\"HINCRBYFLOAT\", key, field, increment)\n return wait_convert(fut, float)\n\n def hkeys(self, key, *, encoding=_NOTSET):\n \"\"\"Get all the fields in a hash.\"\"\"\n return self.execute(b\"HKEYS\", key, encoding=encoding)\n\n def hlen(self, key):\n \"\"\"Get the number of fields in a hash.\"\"\"\n return self.execute(b\"HLEN\", key)\n\n def hmget(self, key, field, *fields, encoding=_NOTSET):\n \"\"\"Get the values of all the given fields.\"\"\"\n return self.execute(b\"HMGET\", key, field, *fields, encoding=encoding)\n\n def hmset(self, key, field, value, *pairs):\n \"\"\"Set multiple hash fields to multiple values.\"\"\"\n if len(pairs) % 2 != 0:\n raise TypeError(\"length of pairs must be even number\")\n return wait_ok(self.execute(b\"HMSET\", key, field, value, *pairs))\n\n def hmset_dict(self, key, *args, **kwargs):\n \"\"\"Set multiple hash fields to multiple values.\n\n dict can be passed as first positional argument:\n\n >>> await redis.hmset_dict(\n ... 'key', {'field1': 'value1', 'field2': 'value2'})\n\n or keyword arguments can be used:\n\n >>> await redis.hmset_dict(\n ... 'key', field1='value1', field2='value2')\n\n or dict argument can be mixed with kwargs:\n\n >>> await redis.hmset_dict(\n ... 'key', {'field1': 'value1'}, field2='value2')\n\n .. 
note:: ``dict`` and ``kwargs`` not get mixed into single dictionary,\n if both specified and both have same key(s) -- ``kwargs`` will win:\n\n >>> await redis.hmset_dict('key', {'foo': 'bar'}, foo='baz')\n >>> await redis.hget('key', 'foo', encoding='utf-8')\n 'baz'\n\n \"\"\"\n if not args and not kwargs:\n raise TypeError(\"args or kwargs must be specified\")\n pairs = ()\n if len(args) > 1:\n raise TypeError(\"single positional argument allowed\")\n elif len(args) == 1:\n if not isinstance(args[0], dict):\n raise TypeError(\"args[0] must be dict\")\n elif not args[0] and not kwargs:\n raise ValueError(\"args[0] is empty dict\")\n pairs = chain.from_iterable(args[0].items())\n kwargs_pairs = chain.from_iterable(kwargs.items())\n return wait_ok(self.execute(b\"HMSET\", key, *chain(pairs, kwargs_pairs)))\n\n def hset(self, key, field, value):\n \"\"\"Set the string value of a hash field.\"\"\"\n return self.execute(b\"HSET\", key, field, value)\n\n def hsetnx(self, key, field, value):\n \"\"\"Set the value of a hash field, only if the field does not exist.\"\"\"\n return self.execute(b\"HSETNX\", key, field, value)\n\n def hvals(self, key, *, encoding=_NOTSET):\n \"\"\"Get all the values in a hash.\"\"\"\n return self.execute(b\"HVALS\", key, encoding=encoding)\n\n def hscan(self, key, cursor=0, match=None, count=None):\n \"\"\"Incrementally iterate hash fields and associated values.\"\"\"\n args = [key, cursor]\n match is not None and args.extend([b\"MATCH\", match])\n count is not None and args.extend([b\"COUNT\", count])\n fut = self.execute(b\"HSCAN\", *args)\n return wait_convert(fut, _make_pairs)\n\n def ihscan(self, key, *, match=None, count=None):\n \"\"\"Incrementally iterate sorted set items using async for.\n\n Usage example:\n\n >>> async for name, val in redis.ihscan(key, match='something*'):\n ... 
print('Matched:', name, '->', val)\n\n \"\"\"\n return _ScanIter(lambda cur: self.hscan(key, cur, match=match, count=count))\n\n def hstrlen(self, key, field):\n \"\"\"Get the length of the value of a hash field.\"\"\"\n return self.execute(b\"HSTRLEN\", key, field)\n\n\ndef _make_pairs(obj):\n it = iter(obj[1])\n return (int(obj[0]), list(zip(it, it)))\n", "path": "aioredis/commands/hash.py"}], "after_files": [{"content": "import warnings\nfrom itertools import chain\n\nfrom aioredis.util import (\n wait_ok,\n wait_convert,\n wait_make_dict,\n _NOTSET,\n _ScanIter,\n)\n\n\nclass HashCommandsMixin:\n \"\"\"Hash commands mixin.\n\n For commands details see: http://redis.io/commands#hash\n \"\"\"\n\n def hdel(self, key, field, *fields):\n \"\"\"Delete one or more hash fields.\"\"\"\n return self.execute(b\"HDEL\", key, field, *fields)\n\n def hexists(self, key, field):\n \"\"\"Determine if hash field exists.\"\"\"\n fut = self.execute(b\"HEXISTS\", key, field)\n return wait_convert(fut, bool)\n\n def hget(self, key, field, *, encoding=_NOTSET):\n \"\"\"Get the value of a hash field.\"\"\"\n return self.execute(b\"HGET\", key, field, encoding=encoding)\n\n def hgetall(self, key, *, encoding=_NOTSET):\n \"\"\"Get all the fields and values in a hash.\"\"\"\n fut = self.execute(b\"HGETALL\", key, encoding=encoding)\n return wait_make_dict(fut)\n\n def hincrby(self, key, field, increment=1):\n \"\"\"Increment the integer value of a hash field by the given number.\"\"\"\n return self.execute(b\"HINCRBY\", key, field, increment)\n\n def hincrbyfloat(self, key, field, increment=1.0):\n \"\"\"Increment the float value of a hash field by the given number.\"\"\"\n fut = self.execute(b\"HINCRBYFLOAT\", key, field, increment)\n return wait_convert(fut, float)\n\n def hkeys(self, key, *, encoding=_NOTSET):\n \"\"\"Get all the fields in a hash.\"\"\"\n return self.execute(b\"HKEYS\", key, encoding=encoding)\n\n def hlen(self, key):\n \"\"\"Get the number of fields in a 
hash.\"\"\"\n return self.execute(b\"HLEN\", key)\n\n def hmget(self, key, field, *fields, encoding=_NOTSET):\n \"\"\"Get the values of all the given fields.\"\"\"\n return self.execute(b\"HMGET\", key, field, *fields, encoding=encoding)\n\n def hmset(self, key, field, value, *pairs):\n \"\"\"Set multiple hash fields to multiple values.\n\n .. deprecated::\n HMSET is deprecated since redis 4.0.0, use HSET instead.\n\n \"\"\"\n warnings.warn(\n \"%s.hmset() is deprecated since redis 4.0.0, use %s.hset() instead\"\n % (self.__class__.__name__, self.__class__.__name__),\n DeprecationWarning\n )\n if len(pairs) % 2 != 0:\n raise TypeError(\"length of pairs must be even number\")\n return wait_ok(self.execute(b\"HMSET\", key, field, value, *pairs))\n\n def hmset_dict(self, key, *args, **kwargs):\n \"\"\"Set multiple hash fields to multiple values.\n\n .. deprecated::\n HMSET is deprecated since redis 4.0.0, use HSET instead.\n\n dict can be passed as first positional argument:\n\n >>> await redis.hmset_dict(\n ... 'key', {'field1': 'value1', 'field2': 'value2'})\n\n or keyword arguments can be used:\n\n >>> await redis.hmset_dict(\n ... 'key', field1='value1', field2='value2')\n\n or dict argument can be mixed with kwargs:\n\n >>> await redis.hmset_dict(\n ... 'key', {'field1': 'value1'}, field2='value2')\n\n .. 
note:: ``dict`` and ``kwargs`` not get mixed into single dictionary,\n if both specified and both have same key(s) -- ``kwargs`` will win:\n\n >>> await redis.hmset_dict('key', {'foo': 'bar'}, foo='baz')\n >>> await redis.hget('key', 'foo', encoding='utf-8')\n 'baz'\n\n \"\"\"\n warnings.warn(\n \"%s.hmset() is deprecated since redis 4.0.0, use %s.hset() instead\"\n % (self.__class__.__name__, self.__class__.__name__),\n DeprecationWarning\n )\n\n if not args and not kwargs:\n raise TypeError(\"args or kwargs must be specified\")\n pairs = ()\n if len(args) > 1:\n raise TypeError(\"single positional argument allowed\")\n elif len(args) == 1:\n if not isinstance(args[0], dict):\n raise TypeError(\"args[0] must be dict\")\n elif not args[0] and not kwargs:\n raise ValueError(\"args[0] is empty dict\")\n pairs = chain.from_iterable(args[0].items())\n kwargs_pairs = chain.from_iterable(kwargs.items())\n return wait_ok(self.execute(b\"HMSET\", key, *chain(pairs, kwargs_pairs)))\n\n def hset(self, key, field=None, value=None, mapping=None):\n \"\"\"Set multiple hash fields to multiple values.\n\n Setting a single hash field to a value:\n >>> await redis.hset('key', 'some_field', 'some_value')\n\n Setting values for multipe has fields at once:\n >>> await redis.hset('key', mapping={'field1': 'abc', 'field2': 'def'})\n\n .. 
note:: Using both the field/value pair and mapping at the same time\n will also work.\n\n \"\"\"\n if not field and not mapping:\n raise ValueError(\"hset needs either a field/value pair or mapping\")\n if mapping and not isinstance(mapping, dict):\n raise TypeError(\"'mapping' should be dict\")\n\n items = []\n if field:\n items.extend((field, value))\n if mapping:\n for item in mapping.items():\n items.extend(item)\n return self.execute(b\"HSET\", key, *items)\n\n def hsetnx(self, key, field, value):\n \"\"\"Set the value of a hash field, only if the field does not exist.\"\"\"\n return self.execute(b\"HSETNX\", key, field, value)\n\n def hvals(self, key, *, encoding=_NOTSET):\n \"\"\"Get all the values in a hash.\"\"\"\n return self.execute(b\"HVALS\", key, encoding=encoding)\n\n def hscan(self, key, cursor=0, match=None, count=None):\n \"\"\"Incrementally iterate hash fields and associated values.\"\"\"\n args = [key, cursor]\n match is not None and args.extend([b\"MATCH\", match])\n count is not None and args.extend([b\"COUNT\", count])\n fut = self.execute(b\"HSCAN\", *args)\n return wait_convert(fut, _make_pairs)\n\n def ihscan(self, key, *, match=None, count=None):\n \"\"\"Incrementally iterate sorted set items using async for.\n\n Usage example:\n\n >>> async for name, val in redis.ihscan(key, match='something*'):\n ... print('Matched:', name, '->', val)\n\n \"\"\"\n return _ScanIter(lambda cur: self.hscan(key, cur, match=match, count=count))\n\n def hstrlen(self, key, field):\n \"\"\"Get the length of the value of a hash field.\"\"\"\n return self.execute(b\"HSTRLEN\", key, field)\n\n\ndef _make_pairs(obj):\n it = iter(obj[1])\n return (int(obj[0]), list(zip(it, it)))\n", "path": "aioredis/commands/hash.py"}]} | 1,957 | 806 |

gh_patches_debug_16347 | rasdani/github-patches | git_diff | liqd__a4-meinberlin-506 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Organisations listed in filter on project overview unsorted

The list of organisations listed in filter on the project overview page is unsorted and determined by the order of creating the organisations. I think it would be best to sort them alphabetically.

--- END ISSUE ---
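
As a rough illustration of the behaviour the issue asks for, the sketch below sorts a list of organisations alphabetically instead of leaving them in creation order. The `Organisation` dataclass and its `name` attribute are stand-ins assumed for this example; in a Django project the same effect would more likely come from ordering the queryset on whichever field holds the display name.

```python
from dataclasses import dataclass


@dataclass
class Organisation:
    # Stand-in for the real model; only the display name matters here.
    name: str


def sort_organisations(organisations):
    """Return the organisations ordered alphabetically, ignoring case."""
    return sorted(organisations, key=lambda org: org.name.casefold())


# Creation order, as the filter currently shows it.
created = [
    Organisation("Zebra e.V."),
    Organisation("berlin Mitte"),
    Organisation("Adhocracy"),
]
sorted_names = [org.name for org in sort_organisations(created)]
```

Using `casefold()` as the sort key keeps lowercase names from being pushed behind all uppercase ones, which a plain string comparison would do.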

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `apps/projects/views.py`

Content:

```

1 from datetime import datetime

2 import django_filters

3 from django.apps import apps

4 from django.conf import settings

5 from django.utils.translation import ugettext_lazy as _

6

7 from adhocracy4.filters import views as filter_views

8 from adhocracy4.filters.filters import DefaultsFilterSet

9 from adhocracy4.projects import models as project_models

10

11 from apps.contrib.widgets import DropdownLinkWidget

12 from apps.dashboard import blueprints

13

14

15 class OrderingWidget(DropdownLinkWidget):

16 label = _('Ordering')

17 right = True

18

19

20 class OrganisationWidget(DropdownLinkWidget):

21 label = _('Organisation')

22

23

24 class ArchivedWidget(DropdownLinkWidget):

25 label = _('Archived')

26

27 def __init__(self, attrs=None):

28 choices = (

29 ('', _('All')),

30 ('false', _('No')),

31 ('true', _('Yes')),

32 )

33 super().__init__(attrs, choices)

34

35

36 class YearWidget(DropdownLinkWidget):

37 label = _('Year')

38

39 def __init__(self, attrs=None):

40 choices = (('', _('Any')),)

41 now = datetime.now().year

42 try:

43 first_year = project_models.Project.objects.earliest('created').\

44 created.year

45 except project_models.Project.DoesNotExist:

46 first_year = now

47 for year in range(now, first_year - 1, -1):

48 choices += (year, year),

49 super().__init__(attrs, choices)

50

51

52 class TypeWidget(DropdownLinkWidget):

53 label = _('Project Type')

54

55 def __init__(self, attrs=None):

56 choices = (('', _('Any')),)

57 for blueprint_key, blueprint in blueprints.blueprints:

58 choices += (blueprint_key, blueprint.title),

59 super().__init__(attrs, choices)

60

61

62 class ProjectFilterSet(DefaultsFilterSet):

63

64 defaults = {

65 'is_archived': 'false'

66 }

67

68 ordering = django_filters.OrderingFilter(

69 choices=(

70 ('-created', _('Most recent')),

71 ),

72 empty_label=None,

73 widget=OrderingWidget,

74 )

75

76 organisation = django_filters.ModelChoiceFilter(

77 queryset=apps.get_model(settings.A4_ORGANISATIONS_MODEL).objects.all(),

78 widget=OrganisationWidget,

79 )

80

81 is_archived = django_filters.BooleanFilter(

82 widget=ArchivedWidget

83 )

84

85 created = django_filters.NumberFilter(

86 name='created',

87 lookup_expr='year',

88 widget=YearWidget,

89 )

90

91 typ = django_filters.CharFilter(

92 widget=TypeWidget,

93 )

94

95 class Meta:

96 model = project_models.Project

97 fields = ['organisation', 'is_archived', 'created', 'typ']

98

99

100 class ProjectListView(filter_views.FilteredListView):

101 model = project_models.Project

102 paginate_by = 16

103 filter_set = ProjectFilterSet

104

105 def get_queryset(self):

106 return super().get_queryset().filter(is_draft=False)

107

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/apps/projects/views.py b/apps/projects/views.py

--- a/apps/projects/views.py

+++ b/apps/projects/views.py

@@ -54,7 +54,8 @@

def __init__(self, attrs=None):

choices = (('', _('Any')),)

- for blueprint_key, blueprint in blueprints.blueprints:

+ sorted_blueprints = sorted(blueprints.blueprints, key=lambda a: a[1])

+ for blueprint_key, blueprint in sorted_blueprints:

choices += (blueprint_key, blueprint.title),

super().__init__(attrs, choices)

@@ -74,7 +75,8 @@

)

organisation = django_filters.ModelChoiceFilter(

- queryset=apps.get_model(settings.A4_ORGANISATIONS_MODEL).objects.all(),

+ queryset=apps.get_model(settings.A4_ORGANISATIONS_MODEL).objects

+ .order_by('name'),

widget=OrganisationWidget,

)

| {"golden_diff": "diff --git a/apps/projects/views.py b/apps/projects/views.py\n--- a/apps/projects/views.py\n+++ b/apps/projects/views.py\n@@ -54,7 +54,8 @@\n \n def __init__(self, attrs=None):\n choices = (('', _('Any')),)\n- for blueprint_key, blueprint in blueprints.blueprints:\n+ sorted_blueprints = sorted(blueprints.blueprints, key=lambda a: a[1])\n+ for blueprint_key, blueprint in sorted_blueprints:\n choices += (blueprint_key, blueprint.title),\n super().__init__(attrs, choices)\n \n@@ -74,7 +75,8 @@\n )\n \n organisation = django_filters.ModelChoiceFilter(\n- queryset=apps.get_model(settings.A4_ORGANISATIONS_MODEL).objects.all(),\n+ queryset=apps.get_model(settings.A4_ORGANISATIONS_MODEL).objects\n+ .order_by('name'),\n widget=OrganisationWidget,\n )\n", "issue": "Organisations listed in filter on project overview unsorted\nThe list of organisations listed in filter on the project overview page is unsorted and determined by the order of creating the organisations. I think it would be best to sort them alphabetically.\n", "before_files": [{"content": "from datetime import datetime\nimport django_filters\nfrom django.apps import apps\nfrom django.conf import settings\nfrom django.utils.translation import ugettext_lazy as _\n\nfrom adhocracy4.filters import views as filter_views\nfrom adhocracy4.filters.filters import DefaultsFilterSet\nfrom adhocracy4.projects import models as project_models\n\nfrom apps.contrib.widgets import DropdownLinkWidget\nfrom apps.dashboard import blueprints\n\n\nclass OrderingWidget(DropdownLinkWidget):\n label = _('Ordering')\n right = True\n\n\nclass OrganisationWidget(DropdownLinkWidget):\n label = _('Organisation')\n\n\nclass ArchivedWidget(DropdownLinkWidget):\n label = _('Archived')\n\n def __init__(self, attrs=None):\n choices = (\n ('', _('All')),\n ('false', _('No')),\n ('true', _('Yes')),\n )\n super().__init__(attrs, choices)\n\n\nclass YearWidget(DropdownLinkWidget):\n label = _('Year')\n\n def __init__(self, 
attrs=None):\n choices = (('', _('Any')),)\n now = datetime.now().year\n try:\n first_year = project_models.Project.objects.earliest('created').\\\n created.year\n except project_models.Project.DoesNotExist:\n first_year = now\n for year in range(now, first_year - 1, -1):\n choices += (year, year),\n super().__init__(attrs, choices)\n\n\nclass TypeWidget(DropdownLinkWidget):\n label = _('Project Type')\n\n def __init__(self, attrs=None):\n choices = (('', _('Any')),)\n for blueprint_key, blueprint in blueprints.blueprints:\n choices += (blueprint_key, blueprint.title),\n super().__init__(attrs, choices)\n\n\nclass ProjectFilterSet(DefaultsFilterSet):\n\n defaults = {\n 'is_archived': 'false'\n }\n\n ordering = django_filters.OrderingFilter(\n choices=(\n ('-created', _('Most recent')),\n ),\n empty_label=None,\n widget=OrderingWidget,\n )\n\n organisation = django_filters.ModelChoiceFilter(\n queryset=apps.get_model(settings.A4_ORGANISATIONS_MODEL).objects.all(),\n widget=OrganisationWidget,\n )\n\n is_archived = django_filters.BooleanFilter(\n widget=ArchivedWidget\n )\n\n created = django_filters.NumberFilter(\n name='created',\n lookup_expr='year',\n widget=YearWidget,\n )\n\n typ = django_filters.CharFilter(\n widget=TypeWidget,\n )\n\n class Meta:\n model = project_models.Project\n fields = ['organisation', 'is_archived', 'created', 'typ']\n\n\nclass ProjectListView(filter_views.FilteredListView):\n model = project_models.Project\n paginate_by = 16\n filter_set = ProjectFilterSet\n\n def get_queryset(self):\n return super().get_queryset().filter(is_draft=False)\n", "path": "apps/projects/views.py"}], "after_files": [{"content": "from datetime import datetime\nimport django_filters\nfrom django.apps import apps\nfrom django.conf import settings\nfrom django.utils.translation import ugettext_lazy as _\n\nfrom adhocracy4.filters import views as filter_views\nfrom adhocracy4.filters.filters import DefaultsFilterSet\nfrom adhocracy4.projects import models as 
project_models\n\nfrom apps.contrib.widgets import DropdownLinkWidget\nfrom apps.dashboard import blueprints\n\n\nclass OrderingWidget(DropdownLinkWidget):\n label = _('Ordering')\n right = True\n\n\nclass OrganisationWidget(DropdownLinkWidget):\n label = _('Organisation')\n\n\nclass ArchivedWidget(DropdownLinkWidget):\n label = _('Archived')\n\n def __init__(self, attrs=None):\n choices = (\n ('', _('All')),\n ('false', _('No')),\n ('true', _('Yes')),\n )\n super().__init__(attrs, choices)\n\n\nclass YearWidget(DropdownLinkWidget):\n label = _('Year')\n\n def __init__(self, attrs=None):\n choices = (('', _('Any')),)\n now = datetime.now().year\n try:\n first_year = project_models.Project.objects.earliest('created').\\\n created.year\n except project_models.Project.DoesNotExist:\n first_year = now\n for year in range(now, first_year - 1, -1):\n choices += (year, year),\n super().__init__(attrs, choices)\n\n\nclass TypeWidget(DropdownLinkWidget):\n label = _('Project Type')\n\n def __init__(self, attrs=None):\n choices = (('', _('Any')),)\n sorted_blueprints = sorted(blueprints.blueprints, key=lambda a: a[1])\n for blueprint_key, blueprint in sorted_blueprints:\n choices += (blueprint_key, blueprint.title),\n super().__init__(attrs, choices)\n\n\nclass ProjectFilterSet(DefaultsFilterSet):\n\n defaults = {\n 'is_archived': 'false'\n }\n\n ordering = django_filters.OrderingFilter(\n choices=(\n ('-created', _('Most recent')),\n ),\n empty_label=None,\n widget=OrderingWidget,\n )\n\n organisation = django_filters.ModelChoiceFilter(\n queryset=apps.get_model(settings.A4_ORGANISATIONS_MODEL).objects\n .order_by('name'),\n widget=OrganisationWidget,\n )\n\n is_archived = django_filters.BooleanFilter(\n widget=ArchivedWidget\n )\n\n created = django_filters.NumberFilter(\n name='created',\n lookup_expr='year',\n widget=YearWidget,\n )\n\n typ = django_filters.CharFilter(\n widget=TypeWidget,\n )\n\n class Meta:\n model = project_models.Project\n fields = ['organisation', 
'is_archived', 'created', 'typ']\n\n\nclass ProjectListView(filter_views.FilteredListView):\n model = project_models.Project\n paginate_by = 16\n filter_set = ProjectFilterSet\n\n def get_queryset(self):\n return super().get_queryset().filter(is_draft=False)\n", "path": "apps/projects/views.py"}]} | 1,136 | 205 |

gh_patches_debug_42454 | rasdani/github-patches | git_diff | alltheplaces__alltheplaces-2641 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Spider holiday_stationstores is broken

During the global build at 2021-08-18-14-42-26, spider **holiday_stationstores** failed with **552 features** and **10 errors**.

Here's [the log](https://data.alltheplaces.xyz/runs/2021-08-18-14-42-26/logs/holiday_stationstores.txt) and [the output](https://data.alltheplaces.xyz/runs/2021-08-18-14-42-26/output/holiday_stationstores.geojson) ([on a map](https://data.alltheplaces.xyz/map.html?show=https://data.alltheplaces.xyz/runs/2021-08-18-14-42-26/output/holiday_stationstores.geojson))

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `locations/spiders/holiday_stationstores.py`

Content:

```
1 # -*- coding: utf-8 -*-
2 import scrapy
3 import json
4 import re
5
6 from locations.items import GeojsonPointItem
7
8
9 class HolidayStationstoreSpider(scrapy.Spider):
10     name = "holiday_stationstores"
11     item_attributes = {'brand': 'Holiday Stationstores',
12                        'brand_wikidata': 'Q5880490'}
13     allowed_domains = ["www.holidaystationstores.com"]
14     download_delay = 0.2
15
16     def start_requests(self):
17         yield scrapy.Request('https://www.holidaystationstores.com/Locations/GetAllStores',
18                              method='POST',
19                              callback=self.parse_all_stores)
20
21     def parse_all_stores(self, response):
22         all_stores = json.loads(response.body_as_unicode())
23
24         for store_id, store in all_stores.items():
25             # GET requests get blocked by their CDN, but POST works fine
26             yield scrapy.Request(f"https://www.holidaystationstores.com/Locations/Detail?storeNumber={store_id}",
27                                  method='POST',
28                                  meta={'store': store})
29
30     def parse(self, response):
31         store = response.meta['store']
32
33         address = response.css(
34             '.row.HolidayBackgroundColorBlue div::text').extract_first().strip()
35         phone = response.css(
36             '.body-content .col-lg-4 .HolidayFontColorRed::text').extract_first().strip()
37         services = '|'.join(response.css(
38             '.body-content .col-lg-4 ul li::text').extract()).lower()
39         open_24_hours = '24 hours' in response.css(
40             '.body-content .col-lg-4').get().lower()
41
42         properties = {
43             'name': f"Holiday #{store['Name']}",
44             'lon': store['Lng'],
45             'lat': store['Lat'],
46             'addr_full': address,
47             'phone': phone,
48             'ref': store['ID'],
49             'opening_hours': '24/7' if open_24_hours else self.opening_hours(response),
50             'extras': {
51                 'amenity:fuel': True,
52                 'fuel:diesel': 'diesel' in services or None,
53                 'atm': 'atm' in services or None,
54                 'fuel:e85': 'e85' in services or None,
55                 'hgv': 'truck' in services or None,
56                 'fuel:propane': 'propane' in services or None,
57                 'car_wash': 'car wash' in services or None,
58                 'fuel:cng': 'cng' in services or None
59             }
60         }
61
62         yield GeojsonPointItem(**properties)
63
64     def opening_hours(self, response):
65         hour_part_elems = response.css(
66             '.body-content .col-lg-4 .row div::text').extract()
67         day_groups = []
68         this_day_group = None
69
70         if hour_part_elems:
71             def slice(source, step):
72                 return [source[i:i+step] for i in range(0, len(source), step)]
73
74             for day, hours in slice(hour_part_elems, 2):
75                 day = day[:2]
76                 match = re.search(
77                     r'^(\d{1,2}):(\d{2})\s*(a|p)m - (\d{1,2}):(\d{2})\s*(a|p)m?$', hours.lower())
78                 (f_hr, f_min, f_ampm, t_hr, t_min, t_ampm) = match.groups()
79
80                 f_hr = int(f_hr)
81                 if f_ampm == 'p':
82                     f_hr += 12
83                 elif f_ampm == 'a' and f_hr == 12:
84                     f_hr = 0
85                 t_hr = int(t_hr)
86                 if t_ampm == 'p':
87                     t_hr += 12
88                 elif t_ampm == 'a' and t_hr == 12:
89                     t_hr = 0
90
91                 hours = '{:02d}:{}-{:02d}:{}'.format(
92                     f_hr,
93                     f_min,
94                     t_hr,
95                     t_min,
96                 )
97
98                 if not this_day_group:
99                     this_day_group = {
100                         'from_day': day,
101                         'to_day': day,
102                         'hours': hours
103                     }
104                 elif this_day_group['hours'] != hours:
105                     day_groups.append(this_day_group)
106                     this_day_group = {
107                         'from_day': day,
108                         'to_day': day,
109                         'hours': hours
110                     }
111                 elif this_day_group['hours'] == hours:
112                     this_day_group['to_day'] = day
113
114             day_groups.append(this_day_group)
115
116         hour_part_elems = response.xpath(
117             '//span[@style="font-size:90%"]/text()').extract()
118         if hour_part_elems:
119             day_groups.append(
120                 {'from_day': 'Mo', 'to_day': 'Su', 'hours': '00:00-23:59'})
121
122         opening_hours = ""
123         if len(day_groups) == 1 and day_groups[0]['hours'] in ('00:00-23:59', '00:00-00:00'):
124             opening_hours = '24/7'
125         else:
126             for day_group in day_groups:
127                 if day_group['from_day'] == day_group['to_day']:
128                     opening_hours += '{from_day} {hours}; '.format(**day_group)
129                 elif day_group['from_day'] == 'Su' and day_group['to_day'] == 'Sa':
130                     opening_hours += '{hours}; '.format(**day_group)
131                 else:
132                     opening_hours += '{from_day}-{to_day} {hours}; '.format(
133                         **day_group)
134             opening_hours = opening_hours[:-2]
135
136         return opening_hours
137
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@


 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@


 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/locations/spiders/holiday_stationstores.py b/locations/spiders/holiday_stationstores.py
--- a/locations/spiders/holiday_stationstores.py
+++ b/locations/spiders/holiday_stationstores.py
@@ -19,10 +19,10 @@
                              callback=self.parse_all_stores)

     def parse_all_stores(self, response):
-        all_stores = json.loads(response.body_as_unicode())
+        all_stores = json.loads(response.text)

         for store_id, store in all_stores.items():
-            # GET requests get blocked by their CDN, but POST works fine
+            # GET requests get blocked by their Incapsula bot protection, but POST works fine
             yield scrapy.Request(f"https://www.holidaystationstores.com/Locations/Detail?storeNumber={store_id}",
                                  method='POST',
                                  meta={'store': store})
@@ -30,12 +30,9 @@
     def parse(self, response):
         store = response.meta['store']

-        address = response.css(
-            '.row.HolidayBackgroundColorBlue div::text').extract_first().strip()
-        phone = response.css(
-            '.body-content .col-lg-4 .HolidayFontColorRed::text').extract_first().strip()
-        services = '|'.join(response.css(
-            '.body-content .col-lg-4 ul li::text').extract()).lower()
+        address = response.xpath('//div[@class="col-lg-4 col-sm-12"]/text()')[1].extract().strip()
+        phone = response.xpath('//div[@class="HolidayFontColorRed"]/text()').extract_first().strip()
+        services = '|'.join(response.xpath('//ul[@style="list-style-type: none; padding-left: 1.0em; font-size: 12px;"]/li/text()').extract()).lower()
         open_24_hours = '24 hours' in response.css(
             '.body-content .col-lg-4').get().lower()

@@ -62,16 +59,18 @@
         yield GeojsonPointItem(**properties)

     def opening_hours(self, response):
-        hour_part_elems = response.css(
-            '.body-content .col-lg-4 .row div::text').extract()
+        hour_part_elems = response.xpath('//div[@class="row"][@style="font-size: 12px;"]')
         day_groups = []
         this_day_group = None

         if hour_part_elems:
-            def slice(source, step):
-                return [source[i:i+step] for i in range(0, len(source), step)]
+            for hour_part_elem in hour_part_elems:
+                day = hour_part_elem.xpath('.//div[@class="col-3"]/text()').extract_first()
+                hours = hour_part_elem.xpath('.//div[@class="col-9"]/text()').extract_first()
+
+                if not hours:
+                    continue

-            for day, hours in slice(hour_part_elems, 2):
                 day = day[:2]
                 match = re.search(
                     r'^(\d{1,2}):(\d{2})\s*(a|p)m - (\d{1,2}):(\d{2})\s*(a|p)m?$', hours.lower())
@@ -111,13 +110,12 @@
                 elif this_day_group['hours'] == hours:
                     this_day_group['to_day'] = day

-            day_groups.append(this_day_group)
+            if this_day_group:
+                day_groups.append(this_day_group)

-        hour_part_elems = response.xpath(
-            '//span[@style="font-size:90%"]/text()').extract()
+        hour_part_elems = response.xpath('//span[@style="font-size:90%"]/text()').extract()
         if hour_part_elems:
-            day_groups.append(
-                {'from_day': 'Mo', 'to_day': 'Su', 'hours': '00:00-23:59'})
+            day_groups.append({'from_day': 'Mo', 'to_day': 'Su', 'hours': '00:00-23:59'})

         opening_hours = ""
         if len(day_groups) == 1 and day_groups[0]['hours'] in ('00:00-23:59', '00:00-00:00'):