| problem_id (string, length 18-22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13-58) | prompt (string, length 1.1k-10.2k) | golden_diff (string, length 151-4.94k) | verification_info (string, length 582-21k) | num_tokens (int64, 271-2.05k) | num_tokens_diff (int64, 47-1.02k) |
|---|---|---|---|---|---|---|---|---|

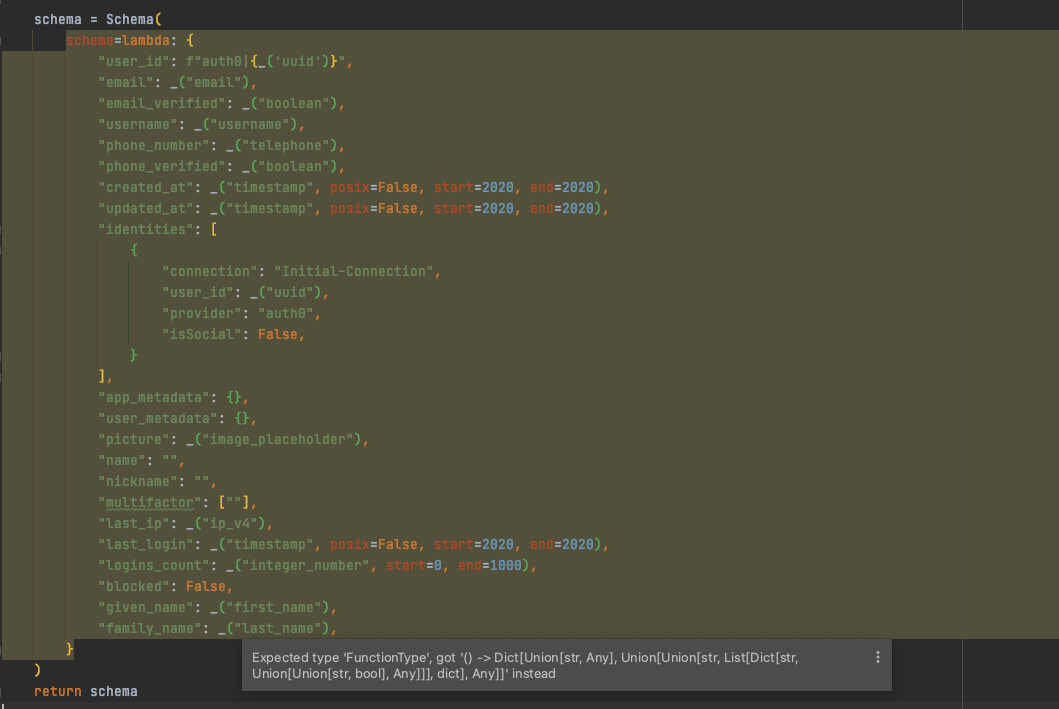

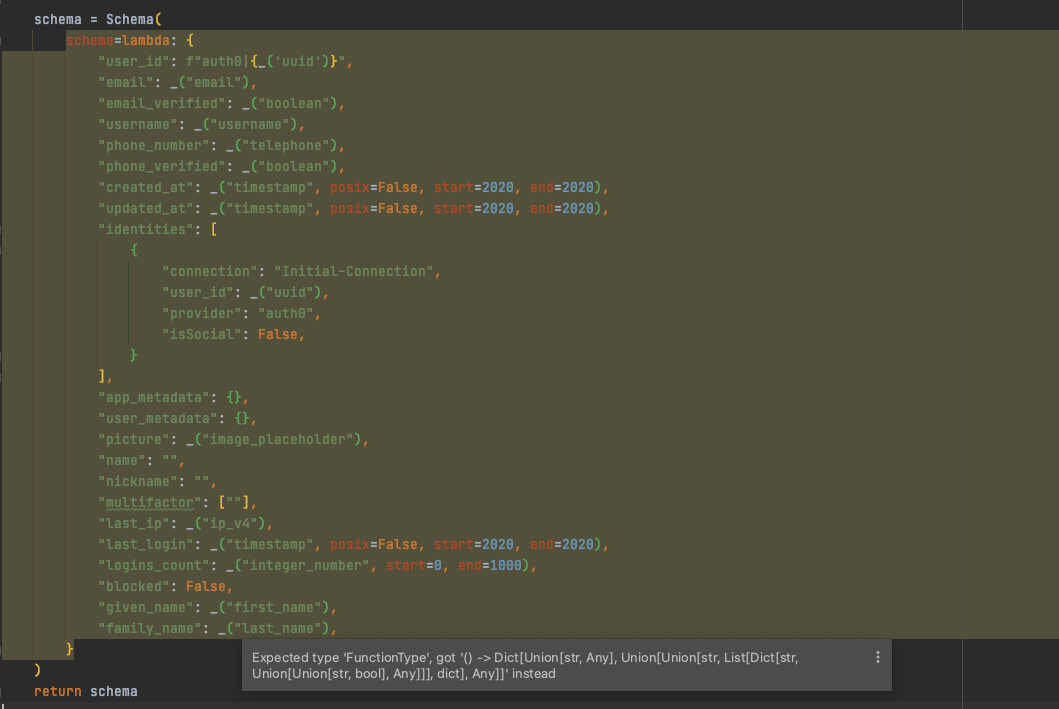

gh_patches_debug_35130 | rasdani/github-patches | git_diff | mlflow__mlflow-6206 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Timeout value too small: when downloading large model files, timeout is reached

https://github.com/mlflow/mlflow/blob/d40780be361f4bd2741c2e8fcbd428c1d693edcf/mlflow/store/artifact/http_artifact_repo.py#L63

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mlflow/store/artifact/http_artifact_repo.py`

Content:

```

1 import os

2 import posixpath

3

4 from mlflow.entities import FileInfo

5 from mlflow.store.artifact.artifact_repo import ArtifactRepository, verify_artifact_path

6 from mlflow.tracking._tracking_service.utils import _get_default_host_creds

7 from mlflow.utils.file_utils import relative_path_to_artifact_path

8 from mlflow.utils.rest_utils import augmented_raise_for_status, http_request

9

10

11 class HttpArtifactRepository(ArtifactRepository):

12 """Stores artifacts in a remote artifact storage using HTTP requests"""

13

14 @property

15 def _host_creds(self):

16 return _get_default_host_creds(self.artifact_uri)

17

18 def log_artifact(self, local_file, artifact_path=None):

19 verify_artifact_path(artifact_path)

20

21 file_name = os.path.basename(local_file)

22 paths = (artifact_path, file_name) if artifact_path else (file_name,)

23 endpoint = posixpath.join("/", *paths)

24 with open(local_file, "rb") as f:

25 resp = http_request(self._host_creds, endpoint, "PUT", data=f, timeout=600)

26 augmented_raise_for_status(resp)

27

28 def log_artifacts(self, local_dir, artifact_path=None):

29 local_dir = os.path.abspath(local_dir)

30 for root, _, filenames in os.walk(local_dir):

31 if root == local_dir:

32 artifact_dir = artifact_path

33 else:

34 rel_path = os.path.relpath(root, local_dir)

35 rel_path = relative_path_to_artifact_path(rel_path)

36 artifact_dir = (

37 posixpath.join(artifact_path, rel_path) if artifact_path else rel_path

38 )

39 for f in filenames:

40 self.log_artifact(os.path.join(root, f), artifact_dir)

41

42 def list_artifacts(self, path=None):

43 endpoint = "/mlflow-artifacts/artifacts"

44 url, tail = self.artifact_uri.split(endpoint, maxsplit=1)

45 root = tail.lstrip("/")

46 params = {"path": posixpath.join(root, path) if path else root}

47 host_creds = _get_default_host_creds(url)

48 resp = http_request(host_creds, endpoint, "GET", params=params, timeout=10)

49 augmented_raise_for_status(resp)

50 file_infos = []

51 for f in resp.json().get("files", []):

52 file_info = FileInfo(

53 posixpath.join(path, f["path"]) if path else f["path"],

54 f["is_dir"],

55 int(f["file_size"]) if ("file_size" in f) else None,

56 )

57 file_infos.append(file_info)

58

59 return sorted(file_infos, key=lambda f: f.path)

60

61 def _download_file(self, remote_file_path, local_path):

62 endpoint = posixpath.join("/", remote_file_path)

63 resp = http_request(self._host_creds, endpoint, "GET", stream=True, timeout=10)

64 augmented_raise_for_status(resp)

65 with open(local_path, "wb") as f:

66 chunk_size = 1024 * 1024 # 1 MB

67 for chunk in resp.iter_content(chunk_size=chunk_size):

68 f.write(chunk)

69

70 def delete_artifacts(self, artifact_path=None):

71 endpoint = posixpath.join("/", artifact_path) if artifact_path else "/"

72 resp = http_request(self._host_creds, endpoint, "DELETE", stream=True, timeout=10)

73 augmented_raise_for_status(resp)

74

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/mlflow/store/artifact/http_artifact_repo.py b/mlflow/store/artifact/http_artifact_repo.py

--- a/mlflow/store/artifact/http_artifact_repo.py

+++ b/mlflow/store/artifact/http_artifact_repo.py

@@ -22,7 +22,7 @@

paths = (artifact_path, file_name) if artifact_path else (file_name,)

endpoint = posixpath.join("/", *paths)

with open(local_file, "rb") as f:

- resp = http_request(self._host_creds, endpoint, "PUT", data=f, timeout=600)

+ resp = http_request(self._host_creds, endpoint, "PUT", data=f)

augmented_raise_for_status(resp)

def log_artifacts(self, local_dir, artifact_path=None):

@@ -45,7 +45,7 @@

root = tail.lstrip("/")

params = {"path": posixpath.join(root, path) if path else root}

host_creds = _get_default_host_creds(url)

- resp = http_request(host_creds, endpoint, "GET", params=params, timeout=10)

+ resp = http_request(host_creds, endpoint, "GET", params=params)

augmented_raise_for_status(resp)

file_infos = []

for f in resp.json().get("files", []):

@@ -60,7 +60,7 @@

def _download_file(self, remote_file_path, local_path):

endpoint = posixpath.join("/", remote_file_path)

- resp = http_request(self._host_creds, endpoint, "GET", stream=True, timeout=10)

+ resp = http_request(self._host_creds, endpoint, "GET", stream=True)

augmented_raise_for_status(resp)

with open(local_path, "wb") as f:

chunk_size = 1024 * 1024 # 1 MB

@@ -69,5 +69,5 @@

def delete_artifacts(self, artifact_path=None):

endpoint = posixpath.join("/", artifact_path) if artifact_path else "/"

- resp = http_request(self._host_creds, endpoint, "DELETE", stream=True, timeout=10)

+ resp = http_request(self._host_creds, endpoint, "DELETE", stream=True)

augmented_raise_for_status(resp)

| {"golden_diff": "diff --git a/mlflow/store/artifact/http_artifact_repo.py b/mlflow/store/artifact/http_artifact_repo.py\n--- a/mlflow/store/artifact/http_artifact_repo.py\n+++ b/mlflow/store/artifact/http_artifact_repo.py\n@@ -22,7 +22,7 @@\n paths = (artifact_path, file_name) if artifact_path else (file_name,)\n endpoint = posixpath.join(\"/\", *paths)\n with open(local_file, \"rb\") as f:\n- resp = http_request(self._host_creds, endpoint, \"PUT\", data=f, timeout=600)\n+ resp = http_request(self._host_creds, endpoint, \"PUT\", data=f)\n augmented_raise_for_status(resp)\n \n def log_artifacts(self, local_dir, artifact_path=None):\n@@ -45,7 +45,7 @@\n root = tail.lstrip(\"/\")\n params = {\"path\": posixpath.join(root, path) if path else root}\n host_creds = _get_default_host_creds(url)\n- resp = http_request(host_creds, endpoint, \"GET\", params=params, timeout=10)\n+ resp = http_request(host_creds, endpoint, \"GET\", params=params)\n augmented_raise_for_status(resp)\n file_infos = []\n for f in resp.json().get(\"files\", []):\n@@ -60,7 +60,7 @@\n \n def _download_file(self, remote_file_path, local_path):\n endpoint = posixpath.join(\"/\", remote_file_path)\n- resp = http_request(self._host_creds, endpoint, \"GET\", stream=True, timeout=10)\n+ resp = http_request(self._host_creds, endpoint, \"GET\", stream=True)\n augmented_raise_for_status(resp)\n with open(local_path, \"wb\") as f:\n chunk_size = 1024 * 1024 # 1 MB\n@@ -69,5 +69,5 @@\n \n def delete_artifacts(self, artifact_path=None):\n endpoint = posixpath.join(\"/\", artifact_path) if artifact_path else \"/\"\n- resp = http_request(self._host_creds, endpoint, \"DELETE\", stream=True, timeout=10)\n+ resp = http_request(self._host_creds, endpoint, \"DELETE\", stream=True)\n augmented_raise_for_status(resp)\n", "issue": "Timeout value too small: when downloading large model files, timeout is 
reached\nhttps://github.com/mlflow/mlflow/blob/d40780be361f4bd2741c2e8fcbd428c1d693edcf/mlflow/store/artifact/http_artifact_repo.py#L63\n", "before_files": [{"content": "import os\nimport posixpath\n\nfrom mlflow.entities import FileInfo\nfrom mlflow.store.artifact.artifact_repo import ArtifactRepository, verify_artifact_path\nfrom mlflow.tracking._tracking_service.utils import _get_default_host_creds\nfrom mlflow.utils.file_utils import relative_path_to_artifact_path\nfrom mlflow.utils.rest_utils import augmented_raise_for_status, http_request\n\n\nclass HttpArtifactRepository(ArtifactRepository):\n \"\"\"Stores artifacts in a remote artifact storage using HTTP requests\"\"\"\n\n @property\n def _host_creds(self):\n return _get_default_host_creds(self.artifact_uri)\n\n def log_artifact(self, local_file, artifact_path=None):\n verify_artifact_path(artifact_path)\n\n file_name = os.path.basename(local_file)\n paths = (artifact_path, file_name) if artifact_path else (file_name,)\n endpoint = posixpath.join(\"/\", *paths)\n with open(local_file, \"rb\") as f:\n resp = http_request(self._host_creds, endpoint, \"PUT\", data=f, timeout=600)\n augmented_raise_for_status(resp)\n\n def log_artifacts(self, local_dir, artifact_path=None):\n local_dir = os.path.abspath(local_dir)\n for root, _, filenames in os.walk(local_dir):\n if root == local_dir:\n artifact_dir = artifact_path\n else:\n rel_path = os.path.relpath(root, local_dir)\n rel_path = relative_path_to_artifact_path(rel_path)\n artifact_dir = (\n posixpath.join(artifact_path, rel_path) if artifact_path else rel_path\n )\n for f in filenames:\n self.log_artifact(os.path.join(root, f), artifact_dir)\n\n def list_artifacts(self, path=None):\n endpoint = \"/mlflow-artifacts/artifacts\"\n url, tail = self.artifact_uri.split(endpoint, maxsplit=1)\n root = tail.lstrip(\"/\")\n params = {\"path\": posixpath.join(root, path) if path else root}\n host_creds = _get_default_host_creds(url)\n resp = http_request(host_creds, 
endpoint, \"GET\", params=params, timeout=10)\n augmented_raise_for_status(resp)\n file_infos = []\n for f in resp.json().get(\"files\", []):\n file_info = FileInfo(\n posixpath.join(path, f[\"path\"]) if path else f[\"path\"],\n f[\"is_dir\"],\n int(f[\"file_size\"]) if (\"file_size\" in f) else None,\n )\n file_infos.append(file_info)\n\n return sorted(file_infos, key=lambda f: f.path)\n\n def _download_file(self, remote_file_path, local_path):\n endpoint = posixpath.join(\"/\", remote_file_path)\n resp = http_request(self._host_creds, endpoint, \"GET\", stream=True, timeout=10)\n augmented_raise_for_status(resp)\n with open(local_path, \"wb\") as f:\n chunk_size = 1024 * 1024 # 1 MB\n for chunk in resp.iter_content(chunk_size=chunk_size):\n f.write(chunk)\n\n def delete_artifacts(self, artifact_path=None):\n endpoint = posixpath.join(\"/\", artifact_path) if artifact_path else \"/\"\n resp = http_request(self._host_creds, endpoint, \"DELETE\", stream=True, timeout=10)\n augmented_raise_for_status(resp)\n", "path": "mlflow/store/artifact/http_artifact_repo.py"}], "after_files": [{"content": "import os\nimport posixpath\n\nfrom mlflow.entities import FileInfo\nfrom mlflow.store.artifact.artifact_repo import ArtifactRepository, verify_artifact_path\nfrom mlflow.tracking._tracking_service.utils import _get_default_host_creds\nfrom mlflow.utils.file_utils import relative_path_to_artifact_path\nfrom mlflow.utils.rest_utils import augmented_raise_for_status, http_request\n\n\nclass HttpArtifactRepository(ArtifactRepository):\n \"\"\"Stores artifacts in a remote artifact storage using HTTP requests\"\"\"\n\n @property\n def _host_creds(self):\n return _get_default_host_creds(self.artifact_uri)\n\n def log_artifact(self, local_file, artifact_path=None):\n verify_artifact_path(artifact_path)\n\n file_name = os.path.basename(local_file)\n paths = (artifact_path, file_name) if artifact_path else (file_name,)\n endpoint = posixpath.join(\"/\", *paths)\n with open(local_file, 
\"rb\") as f:\n resp = http_request(self._host_creds, endpoint, \"PUT\", data=f)\n augmented_raise_for_status(resp)\n\n def log_artifacts(self, local_dir, artifact_path=None):\n local_dir = os.path.abspath(local_dir)\n for root, _, filenames in os.walk(local_dir):\n if root == local_dir:\n artifact_dir = artifact_path\n else:\n rel_path = os.path.relpath(root, local_dir)\n rel_path = relative_path_to_artifact_path(rel_path)\n artifact_dir = (\n posixpath.join(artifact_path, rel_path) if artifact_path else rel_path\n )\n for f in filenames:\n self.log_artifact(os.path.join(root, f), artifact_dir)\n\n def list_artifacts(self, path=None):\n endpoint = \"/mlflow-artifacts/artifacts\"\n url, tail = self.artifact_uri.split(endpoint, maxsplit=1)\n root = tail.lstrip(\"/\")\n params = {\"path\": posixpath.join(root, path) if path else root}\n host_creds = _get_default_host_creds(url)\n resp = http_request(host_creds, endpoint, \"GET\", params=params)\n augmented_raise_for_status(resp)\n file_infos = []\n for f in resp.json().get(\"files\", []):\n file_info = FileInfo(\n posixpath.join(path, f[\"path\"]) if path else f[\"path\"],\n f[\"is_dir\"],\n int(f[\"file_size\"]) if (\"file_size\" in f) else None,\n )\n file_infos.append(file_info)\n\n return sorted(file_infos, key=lambda f: f.path)\n\n def _download_file(self, remote_file_path, local_path):\n endpoint = posixpath.join(\"/\", remote_file_path)\n resp = http_request(self._host_creds, endpoint, \"GET\", stream=True)\n augmented_raise_for_status(resp)\n with open(local_path, \"wb\") as f:\n chunk_size = 1024 * 1024 # 1 MB\n for chunk in resp.iter_content(chunk_size=chunk_size):\n f.write(chunk)\n\n def delete_artifacts(self, artifact_path=None):\n endpoint = posixpath.join(\"/\", artifact_path) if artifact_path else \"/\"\n resp = http_request(self._host_creds, endpoint, \"DELETE\", stream=True)\n augmented_raise_for_status(resp)\n", "path": "mlflow/store/artifact/http_artifact_repo.py"}]} | 1,197 | 502 |

gh_patches_debug_15814 | rasdani/github-patches | git_diff | svthalia__concrexit-1977 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Error in pizza admin related to title_en

Sentry Issue: [CONCREXIT-9W](https://sentry.io/organizations/thalia/issues/2692657224/?referrer=github_integration)

```

FieldError: Related Field got invalid lookup: title_en

(16 additional frame(s) were not displayed)

...

File "django/db/models/sql/query.py", line 1393, in add_q

clause, _ = self._add_q(q_object, self.used_aliases)

File "django/db/models/sql/query.py", line 1412, in _add_q

child_clause, needed_inner = self.build_filter(

File "django/db/models/sql/query.py", line 1265, in build_filter

return self._add_q(

File "django/db/models/sql/query.py", line 1412, in _add_q

child_clause, needed_inner = self.build_filter(

File "django/db/models/sql/query.py", line 1339, in build_filter

raise FieldError('Related Field got invalid lookup: {}'.format(lookups[0]))

```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `website/pizzas/admin.py`

Content:

```

1 """Registers admin interfaces for the pizzas module."""

2 from django.conf import settings

3 from django.contrib import admin

4 from django.core.exceptions import PermissionDenied

5 from django.forms import Field

6 from django.urls import reverse, path

7 from django.utils.html import format_html

8 from django.utils.translation import gettext_lazy as _

9

10 from events import services

11 from events.services import is_organiser

12 from payments.widgets import PaymentWidget

13 from pizzas import admin_views

14 from utils.admin import DoNextModelAdmin

15 from .models import FoodOrder, FoodEvent, Product

16

17

18 @admin.register(Product)

19 class ProductAdmin(admin.ModelAdmin):

20 """Manage the products."""

21

22 list_display = ("name", "price", "available")

23 list_filter = ("available", "restricted")

24 search_fields = ("name",)

25

26

27 @admin.register(FoodEvent)

28 class FoodEventAdmin(admin.ModelAdmin):

29 """Manage the pizza events."""

30

31 list_display = ("title", "start", "end", "notification_enabled", "orders")

32 date_hierarchy = "start"

33 exclude = ("end_reminder",)

34 search_fields = [f"event__title_{l[0]}" for l in settings.LANGUAGES]

35 autocomplete_fields = ("event",)

36

37 def notification_enabled(self, obj):

38 return obj.send_notification

39

40 notification_enabled.short_description = _("reminder")

41 notification_enabled.admin_order_field = "send_notification"

42 notification_enabled.boolean = True

43

44 def has_change_permission(self, request, obj=None):

45 """Only allow access to the change form if the user is an organiser."""

46 if obj is not None and not services.is_organiser(request.member, obj.event):

47 return False

48 return super().has_change_permission(request, obj)

49

50 def has_delete_permission(self, request, obj=None):

51 """Only allow access to delete if the user is an organiser."""

52 if obj is not None and not services.is_organiser(request.member, obj.event):

53 return False

54 return super().has_delete_permission(request, obj)

55

56 def orders(self, obj):

57 url = reverse("admin:pizzas_foodevent_details", kwargs={"pk": obj.pk})

58 return format_html('<a href="{url}">{text}</a>', url=url, text=_("Orders"))

59

60 def get_urls(self):

61 urls = super().get_urls()

62 custom_urls = [

63 path(

64 "<int:pk>/details/",

65 self.admin_site.admin_view(

66 admin_views.PizzaOrderDetails.as_view(admin=self)

67 ),

68 name="pizzas_foodevent_details",

69 ),

70 path(

71 "<int:pk>/overview/",

72 self.admin_site.admin_view(

73 admin_views.PizzaOrderSummary.as_view(admin=self)

74 ),

75 name="pizzas_foodevent_overview",

76 ),

77 ]

78 return custom_urls + urls

79

80

81 @admin.register(FoodOrder)

82 class FoodOrderAdmin(DoNextModelAdmin):

83 """Manage the orders."""

84

85 list_display = (

86 "food_event",

87 "member_first_name",

88 "member_last_name",

89 "product",

90 "payment",

91 )

92

93 fields = (

94 "food_event",

95 "member",

96 "name",

97 "product",

98 "payment",

99 )

100

101 def formfield_for_dbfield(self, db_field, request, obj=None, **kwargs):

102 """Payment field widget."""

103 field = super().formfield_for_dbfield(db_field, request, **kwargs)

104 if db_field.name == "payment":

105 return Field(

106 widget=PaymentWidget(obj=obj), initial=field.initial, required=False,

107 )

108 return field

109

110 def save_model(self, request, obj, form, change):

111 """You can only save the orders if you have permission."""

112 if not is_organiser(request.member, obj.food_event.event):

113 raise PermissionDenied

114 return super().save_model(request, obj, form, change)

115

116 def has_view_permission(self, request, order=None):

117 """Only give view permission if the user is an organiser."""

118 if order is not None and not is_organiser(

119 request.member, order.food_event.event

120 ):

121 return False

122 return super().has_view_permission(request, order)

123

124 def has_change_permission(self, request, order=None):

125 """Only give change permission if the user is an organiser."""

126 if order is not None and not is_organiser(

127 request.member, order.food_event.event

128 ):

129 return False

130 return super().has_change_permission(request, order)

131

132 def has_delete_permission(self, request, order=None):

133 """Only give delete permission if the user is an organiser."""

134 if order is not None and not is_organiser(

135 request.member, order.food_event.event

136 ):

137 return False

138 return super().has_delete_permission(request, order)

139

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/website/pizzas/admin.py b/website/pizzas/admin.py

--- a/website/pizzas/admin.py

+++ b/website/pizzas/admin.py

@@ -1,5 +1,4 @@

"""Registers admin interfaces for the pizzas module."""

-from django.conf import settings

from django.contrib import admin

from django.core.exceptions import PermissionDenied

from django.forms import Field

@@ -31,7 +30,7 @@

list_display = ("title", "start", "end", "notification_enabled", "orders")

date_hierarchy = "start"

exclude = ("end_reminder",)

- search_fields = [f"event__title_{l[0]}" for l in settings.LANGUAGES]

+ search_fields = ("event__title",)

autocomplete_fields = ("event",)

def notification_enabled(self, obj):

| {"golden_diff": "diff --git a/website/pizzas/admin.py b/website/pizzas/admin.py\n--- a/website/pizzas/admin.py\n+++ b/website/pizzas/admin.py\n@@ -1,5 +1,4 @@\n \"\"\"Registers admin interfaces for the pizzas module.\"\"\"\n-from django.conf import settings\n from django.contrib import admin\n from django.core.exceptions import PermissionDenied\n from django.forms import Field\n@@ -31,7 +30,7 @@\n list_display = (\"title\", \"start\", \"end\", \"notification_enabled\", \"orders\")\n date_hierarchy = \"start\"\n exclude = (\"end_reminder\",)\n- search_fields = [f\"event__title_{l[0]}\" for l in settings.LANGUAGES]\n+ search_fields = (\"event__title\",)\n autocomplete_fields = (\"event\",)\n \n def notification_enabled(self, obj):\n", "issue": "Error in pizza admin related to title_en\nSentry Issue: [CONCREXIT-9W](https://sentry.io/organizations/thalia/issues/2692657224/?referrer=github_integration)\n\n```\nFieldError: Related Field got invalid lookup: title_en\n(16 additional frame(s) were not displayed)\n...\n File \"django/db/models/sql/query.py\", line 1393, in add_q\n clause, _ = self._add_q(q_object, self.used_aliases)\n File \"django/db/models/sql/query.py\", line 1412, in _add_q\n child_clause, needed_inner = self.build_filter(\n File \"django/db/models/sql/query.py\", line 1265, in build_filter\n return self._add_q(\n File \"django/db/models/sql/query.py\", line 1412, in _add_q\n child_clause, needed_inner = self.build_filter(\n File \"django/db/models/sql/query.py\", line 1339, in build_filter\n raise FieldError('Related Field got invalid lookup: {}'.format(lookups[0]))\n```\n", "before_files": [{"content": "\"\"\"Registers admin interfaces for the pizzas module.\"\"\"\nfrom django.conf import settings\nfrom django.contrib import admin\nfrom django.core.exceptions import PermissionDenied\nfrom django.forms import Field\nfrom django.urls import reverse, path\nfrom django.utils.html import format_html\nfrom django.utils.translation import gettext_lazy as 
_\n\nfrom events import services\nfrom events.services import is_organiser\nfrom payments.widgets import PaymentWidget\nfrom pizzas import admin_views\nfrom utils.admin import DoNextModelAdmin\nfrom .models import FoodOrder, FoodEvent, Product\n\n\n@admin.register(Product)\nclass ProductAdmin(admin.ModelAdmin):\n \"\"\"Manage the products.\"\"\"\n\n list_display = (\"name\", \"price\", \"available\")\n list_filter = (\"available\", \"restricted\")\n search_fields = (\"name\",)\n\n\n@admin.register(FoodEvent)\nclass FoodEventAdmin(admin.ModelAdmin):\n \"\"\"Manage the pizza events.\"\"\"\n\n list_display = (\"title\", \"start\", \"end\", \"notification_enabled\", \"orders\")\n date_hierarchy = \"start\"\n exclude = (\"end_reminder\",)\n search_fields = [f\"event__title_{l[0]}\" for l in settings.LANGUAGES]\n autocomplete_fields = (\"event\",)\n\n def notification_enabled(self, obj):\n return obj.send_notification\n\n notification_enabled.short_description = _(\"reminder\")\n notification_enabled.admin_order_field = \"send_notification\"\n notification_enabled.boolean = True\n\n def has_change_permission(self, request, obj=None):\n \"\"\"Only allow access to the change form if the user is an organiser.\"\"\"\n if obj is not None and not services.is_organiser(request.member, obj.event):\n return False\n return super().has_change_permission(request, obj)\n\n def has_delete_permission(self, request, obj=None):\n \"\"\"Only allow access to delete if the user is an organiser.\"\"\"\n if obj is not None and not services.is_organiser(request.member, obj.event):\n return False\n return super().has_delete_permission(request, obj)\n\n def orders(self, obj):\n url = reverse(\"admin:pizzas_foodevent_details\", kwargs={\"pk\": obj.pk})\n return format_html('<a href=\"{url}\">{text}</a>', url=url, text=_(\"Orders\"))\n\n def get_urls(self):\n urls = super().get_urls()\n custom_urls = [\n path(\n \"<int:pk>/details/\",\n self.admin_site.admin_view(\n 
admin_views.PizzaOrderDetails.as_view(admin=self)\n ),\n name=\"pizzas_foodevent_details\",\n ),\n path(\n \"<int:pk>/overview/\",\n self.admin_site.admin_view(\n admin_views.PizzaOrderSummary.as_view(admin=self)\n ),\n name=\"pizzas_foodevent_overview\",\n ),\n ]\n return custom_urls + urls\n\n\n@admin.register(FoodOrder)\nclass FoodOrderAdmin(DoNextModelAdmin):\n \"\"\"Manage the orders.\"\"\"\n\n list_display = (\n \"food_event\",\n \"member_first_name\",\n \"member_last_name\",\n \"product\",\n \"payment\",\n )\n\n fields = (\n \"food_event\",\n \"member\",\n \"name\",\n \"product\",\n \"payment\",\n )\n\n def formfield_for_dbfield(self, db_field, request, obj=None, **kwargs):\n \"\"\"Payment field widget.\"\"\"\n field = super().formfield_for_dbfield(db_field, request, **kwargs)\n if db_field.name == \"payment\":\n return Field(\n widget=PaymentWidget(obj=obj), initial=field.initial, required=False,\n )\n return field\n\n def save_model(self, request, obj, form, change):\n \"\"\"You can only save the orders if you have permission.\"\"\"\n if not is_organiser(request.member, obj.food_event.event):\n raise PermissionDenied\n return super().save_model(request, obj, form, change)\n\n def has_view_permission(self, request, order=None):\n \"\"\"Only give view permission if the user is an organiser.\"\"\"\n if order is not None and not is_organiser(\n request.member, order.food_event.event\n ):\n return False\n return super().has_view_permission(request, order)\n\n def has_change_permission(self, request, order=None):\n \"\"\"Only give change permission if the user is an organiser.\"\"\"\n if order is not None and not is_organiser(\n request.member, order.food_event.event\n ):\n return False\n return super().has_change_permission(request, order)\n\n def has_delete_permission(self, request, order=None):\n \"\"\"Only give delete permission if the user is an organiser.\"\"\"\n if order is not None and not is_organiser(\n request.member, order.food_event.event\n ):\n 
return False\n return super().has_delete_permission(request, order)\n", "path": "website/pizzas/admin.py"}], "after_files": [{"content": "\"\"\"Registers admin interfaces for the pizzas module.\"\"\"\nfrom django.contrib import admin\nfrom django.core.exceptions import PermissionDenied\nfrom django.forms import Field\nfrom django.urls import reverse, path\nfrom django.utils.html import format_html\nfrom django.utils.translation import gettext_lazy as _\n\nfrom events import services\nfrom events.services import is_organiser\nfrom payments.widgets import PaymentWidget\nfrom pizzas import admin_views\nfrom utils.admin import DoNextModelAdmin\nfrom .models import FoodOrder, FoodEvent, Product\n\n\n@admin.register(Product)\nclass ProductAdmin(admin.ModelAdmin):\n \"\"\"Manage the products.\"\"\"\n\n list_display = (\"name\", \"price\", \"available\")\n list_filter = (\"available\", \"restricted\")\n search_fields = (\"name\",)\n\n\n@admin.register(FoodEvent)\nclass FoodEventAdmin(admin.ModelAdmin):\n \"\"\"Manage the pizza events.\"\"\"\n\n list_display = (\"title\", \"start\", \"end\", \"notification_enabled\", \"orders\")\n date_hierarchy = \"start\"\n exclude = (\"end_reminder\",)\n search_fields = (\"event__title\",)\n autocomplete_fields = (\"event\",)\n\n def notification_enabled(self, obj):\n return obj.send_notification\n\n notification_enabled.short_description = _(\"reminder\")\n notification_enabled.admin_order_field = \"send_notification\"\n notification_enabled.boolean = True\n\n def has_change_permission(self, request, obj=None):\n \"\"\"Only allow access to the change form if the user is an organiser.\"\"\"\n if obj is not None and not services.is_organiser(request.member, obj.event):\n return False\n return super().has_change_permission(request, obj)\n\n def has_delete_permission(self, request, obj=None):\n \"\"\"Only allow access to delete if the user is an organiser.\"\"\"\n if obj is not None and not services.is_organiser(request.member, 
obj.event):\n return False\n return super().has_delete_permission(request, obj)\n\n def orders(self, obj):\n url = reverse(\"admin:pizzas_foodevent_details\", kwargs={\"pk\": obj.pk})\n return format_html('<a href=\"{url}\">{text}</a>', url=url, text=_(\"Orders\"))\n\n def get_urls(self):\n urls = super().get_urls()\n custom_urls = [\n path(\n \"<int:pk>/details/\",\n self.admin_site.admin_view(\n admin_views.PizzaOrderDetails.as_view(admin=self)\n ),\n name=\"pizzas_foodevent_details\",\n ),\n path(\n \"<int:pk>/overview/\",\n self.admin_site.admin_view(\n admin_views.PizzaOrderSummary.as_view(admin=self)\n ),\n name=\"pizzas_foodevent_overview\",\n ),\n ]\n return custom_urls + urls\n\n\n@admin.register(FoodOrder)\nclass FoodOrderAdmin(DoNextModelAdmin):\n \"\"\"Manage the orders.\"\"\"\n\n list_display = (\n \"food_event\",\n \"member_first_name\",\n \"member_last_name\",\n \"product\",\n \"payment\",\n )\n\n fields = (\n \"food_event\",\n \"member\",\n \"name\",\n \"product\",\n \"payment\",\n )\n\n def formfield_for_dbfield(self, db_field, request, obj=None, **kwargs):\n \"\"\"Payment field widget.\"\"\"\n field = super().formfield_for_dbfield(db_field, request, **kwargs)\n if db_field.name == \"payment\":\n return Field(\n widget=PaymentWidget(obj=obj), initial=field.initial, required=False,\n )\n return field\n\n def save_model(self, request, obj, form, change):\n \"\"\"You can only save the orders if you have permission.\"\"\"\n if not is_organiser(request.member, obj.food_event.event):\n raise PermissionDenied\n return super().save_model(request, obj, form, change)\n\n def has_view_permission(self, request, order=None):\n \"\"\"Only give view permission if the user is an organiser.\"\"\"\n if order is not None and not is_organiser(\n request.member, order.food_event.event\n ):\n return False\n return super().has_view_permission(request, order)\n\n def has_change_permission(self, request, order=None):\n \"\"\"Only give change permission if the user is an 
organiser.\"\"\"\n if order is not None and not is_organiser(\n request.member, order.food_event.event\n ):\n return False\n return super().has_change_permission(request, order)\n\n def has_delete_permission(self, request, order=None):\n \"\"\"Only give delete permission if the user is an organiser.\"\"\"\n if order is not None and not is_organiser(\n request.member, order.food_event.event\n ):\n return False\n return super().has_delete_permission(request, order)\n", "path": "website/pizzas/admin.py"}]} | 1,832 | 182 |

gh_patches_debug_10832 | rasdani/github-patches | git_diff | pyqtgraph__pyqtgraph-309 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

unexpected 'str' during Arrow test

Testing on Windows Python 3.4.4/Qt5.5.1/PyQtgraph github of 20160102, I have the following error on "Arrow" test:

```
Using PyQt5 (default graphics system)
Using PyQt5 (default graphics system)
Using PyQt5 (default graphics system)
Using PyQt5 (default graphics system)
Traceback (most recent call last):
  File "D:\WinPython\basedir34\buildQt5\winpython-3.4.4.amd64\python-3.4.4.amd64
\lib\site-packages\pyqtgraph\examples\Arrow.py", line 50, in <module>
    anim = a.makeAnimation(loop=-1)
  File "D:\WinPython\basedir34\buildQt5\winpython-3.4.4.amd64\python-3.4.4.amd64
\lib\site-packages\pyqtgraph\graphicsItems\CurvePoint.py", line 94, in makeAnima
tion
    anim = QtCore.QPropertyAnimation(self, prop)
TypeError: arguments did not match any overloaded call:
  QPropertyAnimation(QObject parent=None): too many arguments
  QPropertyAnimation(QObject, QByteArray, QObject parent=None): argument 2 has u
nexpected type 'str'
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pyqtgraph/graphicsItems/CurvePoint.py`

Content:

```
1 from ..Qt import QtGui, QtCore
2 from . import ArrowItem
3 import numpy as np
4 from ..Point import Point
5 import weakref
6 from .GraphicsObject import GraphicsObject
7
8 __all__ = ['CurvePoint', 'CurveArrow']
9 class CurvePoint(GraphicsObject):
10     """A GraphicsItem that sets its location to a point on a PlotCurveItem.
11     Also rotates to be tangent to the curve.
12     The position along the curve is a Qt property, and thus can be easily animated.
13
14     Note: This class does not display anything; see CurveArrow for an applied example
15     """
16
17     def __init__(self, curve, index=0, pos=None, rotate=True):
18         """Position can be set either as an index referring to the sample number or
19         the position 0.0 - 1.0
20         If *rotate* is True, then the item rotates to match the tangent of the curve.
21         """
22
23         GraphicsObject.__init__(self)
24         #QObjectWorkaround.__init__(self)
25         self._rotate = rotate
26         self.curve = weakref.ref(curve)
27         self.setParentItem(curve)
28         self.setProperty('position', 0.0)
29         self.setProperty('index', 0)
30
31         if hasattr(self, 'ItemHasNoContents'):
32             self.setFlags(self.flags() | self.ItemHasNoContents)
33
34         if pos is not None:
35             self.setPos(pos)
36         else:
37             self.setIndex(index)
38
39     def setPos(self, pos):
40         self.setProperty('position', float(pos))## cannot use numpy types here, MUST be python float.
41
42     def setIndex(self, index):
43         self.setProperty('index', int(index)) ## cannot use numpy types here, MUST be python int.
44
45     def event(self, ev):
46         if not isinstance(ev, QtCore.QDynamicPropertyChangeEvent) or self.curve() is None:
47             return False
48
49         if ev.propertyName() == 'index':
50             index = self.property('index')
51             if 'QVariant' in repr(index):
52                 index = index.toInt()[0]
53         elif ev.propertyName() == 'position':
54             index = None
55         else:
56             return False
57
58         (x, y) = self.curve().getData()
59         if index is None:
60             #print ev.propertyName(), self.property('position').toDouble()[0], self.property('position').typeName()
61             pos = self.property('position')
62             if 'QVariant' in repr(pos): ## need to support 2 APIs :(
63                 pos = pos.toDouble()[0]
64             index = (len(x)-1) * np.clip(pos, 0.0, 1.0)
65
66         if index != int(index): ## interpolate floating-point values
67             i1 = int(index)
68             i2 = np.clip(i1+1, 0, len(x)-1)
69             s2 = index-i1
70             s1 = 1.0-s2
71             newPos = (x[i1]*s1+x[i2]*s2, y[i1]*s1+y[i2]*s2)
72         else:
73             index = int(index)
74             i1 = np.clip(index-1, 0, len(x)-1)
75             i2 = np.clip(index+1, 0, len(x)-1)
76             newPos = (x[index], y[index])
77
78         p1 = self.parentItem().mapToScene(QtCore.QPointF(x[i1], y[i1]))
79         p2 = self.parentItem().mapToScene(QtCore.QPointF(x[i2], y[i2]))
80         ang = np.arctan2(p2.y()-p1.y(), p2.x()-p1.x()) ## returns radians
81         self.resetTransform()
82         if self._rotate:
83             self.rotate(180+ ang * 180 / np.pi) ## takes degrees
84         QtGui.QGraphicsItem.setPos(self, *newPos)
85         return True
86
87     def boundingRect(self):
88         return QtCore.QRectF()
89
90     def paint(self, *args):
91         pass
92
93     def makeAnimation(self, prop='position', start=0.0, end=1.0, duration=10000, loop=1):
94         anim = QtCore.QPropertyAnimation(self, prop)
95         anim.setDuration(duration)
96         anim.setStartValue(start)
97         anim.setEndValue(end)
98         anim.setLoopCount(loop)
99         return anim
100
101
102 class CurveArrow(CurvePoint):
103     """Provides an arrow that points to any specific sample on a PlotCurveItem.
104     Provides properties that can be animated."""
105
106     def __init__(self, curve, index=0, pos=None, **opts):
107         CurvePoint.__init__(self, curve, index=index, pos=pos)
108         if opts.get('pxMode', True):
109             opts['pxMode'] = False
110             self.setFlags(self.flags() | self.ItemIgnoresTransformations)
111         opts['angle'] = 0
112         self.arrow = ArrowItem.ArrowItem(**opts)
113         self.arrow.setParentItem(self)
114
115     def setStyle(self, **opts):
116         return self.arrow.setStyle(**opts)
117
118
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/pyqtgraph/graphicsItems/CurvePoint.py b/pyqtgraph/graphicsItems/CurvePoint.py

--- a/pyqtgraph/graphicsItems/CurvePoint.py

+++ b/pyqtgraph/graphicsItems/CurvePoint.py

@@ -91,6 +91,11 @@

pass

def makeAnimation(self, prop='position', start=0.0, end=1.0, duration=10000, loop=1):

+ # In Python 3, a bytes object needs to be used as a property name in

+ # QPropertyAnimation. PyQt stopped automatically encoding a str when a

+ # QByteArray was expected in v5.5 (see qbytearray.sip).

+ if not isinstance(prop, bytes):

+ prop = prop.encode('latin-1')

anim = QtCore.QPropertyAnimation(self, prop)

anim.setDuration(duration)

anim.setStartValue(start)

| {"golden_diff": "diff --git a/pyqtgraph/graphicsItems/CurvePoint.py b/pyqtgraph/graphicsItems/CurvePoint.py\n--- a/pyqtgraph/graphicsItems/CurvePoint.py\n+++ b/pyqtgraph/graphicsItems/CurvePoint.py\n@@ -91,6 +91,11 @@\n pass\n \n def makeAnimation(self, prop='position', start=0.0, end=1.0, duration=10000, loop=1):\n+ # In Python 3, a bytes object needs to be used as a property name in\n+ # QPropertyAnimation. PyQt stopped automatically encoding a str when a\n+ # QByteArray was expected in v5.5 (see qbytearray.sip).\n+ if not isinstance(prop, bytes):\n+ prop = prop.encode('latin-1')\n anim = QtCore.QPropertyAnimation(self, prop)\n anim.setDuration(duration)\n anim.setStartValue(start)\n", "issue": "unexpected 'str' during Arrow test\ntesting on Windows Python 3.4.4/Qt5.5.1/ PyQtgraph github of 20160102, I have thefollowing error on \"Arrow\" test:\n\n```\nUsing PyQt5 (default graphics system)\nUsing PyQt5 (default graphics system)\nUsing PyQt5 (default graphics system)\nUsing PyQt5 (default graphics system)\nTraceback (most recent call last):\n File \"D:\\WinPython\\basedir34\\buildQt5\\winpython-3.4.4.amd64\\python-3.4.4.amd64\n\\lib\\site-packages\\pyqtgraph\\examples\\Arrow.py\", line 50, in <module>\n anim = a.makeAnimation(loop=-1)\n File \"D:\\WinPython\\basedir34\\buildQt5\\winpython-3.4.4.amd64\\python-3.4.4.amd64\n\\lib\\site-packages\\pyqtgraph\\graphicsItems\\CurvePoint.py\", line 94, in makeAnima\ntion\n anim = QtCore.QPropertyAnimation(self, prop)\nTypeError: arguments did not match any overloaded call:\n QPropertyAnimation(QObject parent=None): too many arguments\n QPropertyAnimation(QObject, QByteArray, QObject parent=None): argument 2 has u\nnexpected type 'str'\n\n```\n\n", "before_files": [{"content": "from ..Qt import QtGui, QtCore\nfrom . 
import ArrowItem\nimport numpy as np\nfrom ..Point import Point\nimport weakref\nfrom .GraphicsObject import GraphicsObject\n\n__all__ = ['CurvePoint', 'CurveArrow']\nclass CurvePoint(GraphicsObject):\n \"\"\"A GraphicsItem that sets its location to a point on a PlotCurveItem.\n Also rotates to be tangent to the curve.\n The position along the curve is a Qt property, and thus can be easily animated.\n \n Note: This class does not display anything; see CurveArrow for an applied example\n \"\"\"\n \n def __init__(self, curve, index=0, pos=None, rotate=True):\n \"\"\"Position can be set either as an index referring to the sample number or\n the position 0.0 - 1.0\n If *rotate* is True, then the item rotates to match the tangent of the curve.\n \"\"\"\n \n GraphicsObject.__init__(self)\n #QObjectWorkaround.__init__(self)\n self._rotate = rotate\n self.curve = weakref.ref(curve)\n self.setParentItem(curve)\n self.setProperty('position', 0.0)\n self.setProperty('index', 0)\n \n if hasattr(self, 'ItemHasNoContents'):\n self.setFlags(self.flags() | self.ItemHasNoContents)\n \n if pos is not None:\n self.setPos(pos)\n else:\n self.setIndex(index)\n \n def setPos(self, pos):\n self.setProperty('position', float(pos))## cannot use numpy types here, MUST be python float.\n \n def setIndex(self, index):\n self.setProperty('index', int(index)) ## cannot use numpy types here, MUST be python int.\n \n def event(self, ev):\n if not isinstance(ev, QtCore.QDynamicPropertyChangeEvent) or self.curve() is None:\n return False\n \n if ev.propertyName() == 'index':\n index = self.property('index')\n if 'QVariant' in repr(index):\n index = index.toInt()[0]\n elif ev.propertyName() == 'position':\n index = None\n else:\n return False\n \n (x, y) = self.curve().getData()\n if index is None:\n #print ev.propertyName(), self.property('position').toDouble()[0], self.property('position').typeName()\n pos = self.property('position')\n if 'QVariant' in repr(pos): ## need to support 2 APIs :(\n pos 
= pos.toDouble()[0]\n index = (len(x)-1) * np.clip(pos, 0.0, 1.0)\n \n if index != int(index): ## interpolate floating-point values\n i1 = int(index)\n i2 = np.clip(i1+1, 0, len(x)-1)\n s2 = index-i1\n s1 = 1.0-s2\n newPos = (x[i1]*s1+x[i2]*s2, y[i1]*s1+y[i2]*s2)\n else:\n index = int(index)\n i1 = np.clip(index-1, 0, len(x)-1)\n i2 = np.clip(index+1, 0, len(x)-1)\n newPos = (x[index], y[index])\n \n p1 = self.parentItem().mapToScene(QtCore.QPointF(x[i1], y[i1]))\n p2 = self.parentItem().mapToScene(QtCore.QPointF(x[i2], y[i2]))\n ang = np.arctan2(p2.y()-p1.y(), p2.x()-p1.x()) ## returns radians\n self.resetTransform()\n if self._rotate:\n self.rotate(180+ ang * 180 / np.pi) ## takes degrees\n QtGui.QGraphicsItem.setPos(self, *newPos)\n return True\n \n def boundingRect(self):\n return QtCore.QRectF()\n \n def paint(self, *args):\n pass\n \n def makeAnimation(self, prop='position', start=0.0, end=1.0, duration=10000, loop=1):\n anim = QtCore.QPropertyAnimation(self, prop)\n anim.setDuration(duration)\n anim.setStartValue(start)\n anim.setEndValue(end)\n anim.setLoopCount(loop)\n return anim\n\n\nclass CurveArrow(CurvePoint):\n \"\"\"Provides an arrow that points to any specific sample on a PlotCurveItem.\n Provides properties that can be animated.\"\"\"\n \n def __init__(self, curve, index=0, pos=None, **opts):\n CurvePoint.__init__(self, curve, index=index, pos=pos)\n if opts.get('pxMode', True):\n opts['pxMode'] = False\n self.setFlags(self.flags() | self.ItemIgnoresTransformations)\n opts['angle'] = 0\n self.arrow = ArrowItem.ArrowItem(**opts)\n self.arrow.setParentItem(self)\n \n def setStyle(self, **opts):\n return self.arrow.setStyle(**opts)\n \n", "path": "pyqtgraph/graphicsItems/CurvePoint.py"}], "after_files": [{"content": "from ..Qt import QtGui, QtCore\nfrom . 
import ArrowItem\nimport numpy as np\nfrom ..Point import Point\nimport weakref\nfrom .GraphicsObject import GraphicsObject\n\n__all__ = ['CurvePoint', 'CurveArrow']\nclass CurvePoint(GraphicsObject):\n \"\"\"A GraphicsItem that sets its location to a point on a PlotCurveItem.\n Also rotates to be tangent to the curve.\n The position along the curve is a Qt property, and thus can be easily animated.\n \n Note: This class does not display anything; see CurveArrow for an applied example\n \"\"\"\n \n def __init__(self, curve, index=0, pos=None, rotate=True):\n \"\"\"Position can be set either as an index referring to the sample number or\n the position 0.0 - 1.0\n If *rotate* is True, then the item rotates to match the tangent of the curve.\n \"\"\"\n \n GraphicsObject.__init__(self)\n #QObjectWorkaround.__init__(self)\n self._rotate = rotate\n self.curve = weakref.ref(curve)\n self.setParentItem(curve)\n self.setProperty('position', 0.0)\n self.setProperty('index', 0)\n \n if hasattr(self, 'ItemHasNoContents'):\n self.setFlags(self.flags() | self.ItemHasNoContents)\n \n if pos is not None:\n self.setPos(pos)\n else:\n self.setIndex(index)\n \n def setPos(self, pos):\n self.setProperty('position', float(pos))## cannot use numpy types here, MUST be python float.\n \n def setIndex(self, index):\n self.setProperty('index', int(index)) ## cannot use numpy types here, MUST be python int.\n \n def event(self, ev):\n if not isinstance(ev, QtCore.QDynamicPropertyChangeEvent) or self.curve() is None:\n return False\n \n if ev.propertyName() == 'index':\n index = self.property('index')\n if 'QVariant' in repr(index):\n index = index.toInt()[0]\n elif ev.propertyName() == 'position':\n index = None\n else:\n return False\n \n (x, y) = self.curve().getData()\n if index is None:\n #print ev.propertyName(), self.property('position').toDouble()[0], self.property('position').typeName()\n pos = self.property('position')\n if 'QVariant' in repr(pos): ## need to support 2 APIs :(\n pos 
= pos.toDouble()[0]\n index = (len(x)-1) * np.clip(pos, 0.0, 1.0)\n \n if index != int(index): ## interpolate floating-point values\n i1 = int(index)\n i2 = np.clip(i1+1, 0, len(x)-1)\n s2 = index-i1\n s1 = 1.0-s2\n newPos = (x[i1]*s1+x[i2]*s2, y[i1]*s1+y[i2]*s2)\n else:\n index = int(index)\n i1 = np.clip(index-1, 0, len(x)-1)\n i2 = np.clip(index+1, 0, len(x)-1)\n newPos = (x[index], y[index])\n \n p1 = self.parentItem().mapToScene(QtCore.QPointF(x[i1], y[i1]))\n p2 = self.parentItem().mapToScene(QtCore.QPointF(x[i2], y[i2]))\n ang = np.arctan2(p2.y()-p1.y(), p2.x()-p1.x()) ## returns radians\n self.resetTransform()\n if self._rotate:\n self.rotate(180+ ang * 180 / np.pi) ## takes degrees\n QtGui.QGraphicsItem.setPos(self, *newPos)\n return True\n \n def boundingRect(self):\n return QtCore.QRectF()\n \n def paint(self, *args):\n pass\n \n def makeAnimation(self, prop='position', start=0.0, end=1.0, duration=10000, loop=1):\n # In Python 3, a bytes object needs to be used as a property name in\n # QPropertyAnimation. 
PyQt stopped automatically encoding a str when a\n # QByteArray was expected in v5.5 (see qbytearray.sip).\n if not isinstance(prop, bytes):\n prop = prop.encode('latin-1')\n anim = QtCore.QPropertyAnimation(self, prop)\n anim.setDuration(duration)\n anim.setStartValue(start)\n anim.setEndValue(end)\n anim.setLoopCount(loop)\n return anim\n\n\nclass CurveArrow(CurvePoint):\n \"\"\"Provides an arrow that points to any specific sample on a PlotCurveItem.\n Provides properties that can be animated.\"\"\"\n \n def __init__(self, curve, index=0, pos=None, **opts):\n CurvePoint.__init__(self, curve, index=index, pos=pos)\n if opts.get('pxMode', True):\n opts['pxMode'] = False\n self.setFlags(self.flags() | self.ItemIgnoresTransformations)\n opts['angle'] = 0\n self.arrow = ArrowItem.ArrowItem(**opts)\n self.arrow.setParentItem(self)\n \n def setStyle(self, **opts):\n return self.arrow.setStyle(**opts)\n \n", "path": "pyqtgraph/graphicsItems/CurvePoint.py"}]} | 1,894 | 202 |
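The guard added to `makeAnimation` in the CurvePoint patch above can be tried without Qt installed. The following is only a minimal sketch of that one step: the helper name `as_property_name` is hypothetical and does not exist in pyqtgraph, and it mirrors the `latin-1` encoding that the golden diff uses before the value would be passed to `QtCore.QPropertyAnimation`.

```python
def as_property_name(prop):
    """Return a property name as bytes.

    Hypothetical helper (not part of pyqtgraph): it mirrors the guard in
    the patch, which encodes a str with latin-1 so that the value can be
    accepted where PyQt expects a QByteArray.
    """
    if not isinstance(prop, bytes):
        prop = prop.encode('latin-1')
    return prop


# A str is encoded; a bytes value is passed through unchanged.
print(as_property_name('position'))
print(as_property_name(b'index'))
```

Because bytes input is returned unchanged, callers that already pass `b'position'` would keep working under this approach.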

gh_patches_debug_33750 | rasdani/github-patches | git_diff | conan-io__conan-4349 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fix experimental make generator CONAN_CPPFLAGS and CONAN_INCLUDE_PATHS

Following the conversation here https://github.com/conan-io/conan/issues/4286#issuecomment-454194188

We have finally reached the conclusion of having ``cpp_info.cppflags`` converted to ``CONAN_CXXFLAGS`` in the ``make`` generator to be consistent with other generators such as ``cmake``.

Also the flag ``CONAN_INCLUDE_PATHS`` should be renamed to ``CONAN_INCLUDE_DIRS`` for the same reason.

In another issue we would probably introduce a ``cpp_info.cxxflags`` that would be an internal alias of ``cpp_info.cppflags`` to avoid this confusion without breaking.

cc/ @solvingj

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `conans/client/generators/make.py`

Content:

```
1 from conans.model import Generator
2 from conans.paths import BUILD_INFO_MAKE
3
4
5 class MakeGenerator(Generator):
6
7     def __init__(self, conanfile):
8         Generator.__init__(self, conanfile)
9         self.makefile_newline = "\n"
10         self.makefile_line_continuation = " \\\n"
11         self.assignment_if_absent = " ?= "
12         self.assignment_append = " += "
13
14     @property
15     def filename(self):
16         return BUILD_INFO_MAKE
17
18     @property
19     def content(self):
20
21         content = [
22             "#-------------------------------------------------------------------#",
23             "# Makefile variables from Conan Dependencies #",
24             "#-------------------------------------------------------------------#",
25             "",
26         ]
27
28         for line_as_list in self.create_deps_content():
29             content.append("".join(line_as_list))
30
31         content.append("#-------------------------------------------------------------------#")
32         content.append(self.makefile_newline)
33         return self.makefile_newline.join(content)
34
35     def create_deps_content(self):
36         deps_content = self.create_content_from_deps()
37         deps_content.extend(self.create_combined_content())
38         return deps_content
39
40     def create_content_from_deps(self):
41         content = []
42         for pkg_name, cpp_info in self.deps_build_info.dependencies:
43             content.extend(self.create_content_from_dep(pkg_name, cpp_info))
44         return content
45
46     def create_content_from_dep(self, pkg_name, cpp_info):
47
48         vars_info = [("ROOT", self.assignment_if_absent, [cpp_info.rootpath]),
49                      ("SYSROOT", self.assignment_if_absent, [cpp_info.sysroot]),
50                      ("INCLUDE_PATHS", self.assignment_append, cpp_info.include_paths),
51                      ("LIB_PATHS", self.assignment_append, cpp_info.lib_paths),
52                      ("BIN_PATHS", self.assignment_append, cpp_info.bin_paths),
53                      ("BUILD_PATHS", self.assignment_append, cpp_info.build_paths),
54                      ("RES_PATHS", self.assignment_append, cpp_info.res_paths),
55                      ("LIBS", self.assignment_append, cpp_info.libs),
56                      ("DEFINES", self.assignment_append, cpp_info.defines),
57                      ("CFLAGS", self.assignment_append, cpp_info.cflags),
58                      ("CPPFLAGS", self.assignment_append, cpp_info.cppflags),
59                      ("SHAREDLINKFLAGS", self.assignment_append, cpp_info.sharedlinkflags),
60                      ("EXELINKFLAGS", self.assignment_append, cpp_info.exelinkflags)]
61
62         return [self.create_makefile_var_pkg(var_name, pkg_name, operator, info)
63                 for var_name, operator, info in vars_info]
64
65     def create_combined_content(self):
66         content = []
67         for var_name in self.all_dep_vars():
68             content.append(self.create_makefile_var_global(var_name, self.assignment_append,
69                                                            self.create_combined_var_list(var_name)))
70         return content
71
72     def create_combined_var_list(self, var_name):
73         make_vars = []
74         for pkg_name, _ in self.deps_build_info.dependencies:
75             pkg_var = self.create_makefile_var_name_pkg(var_name, pkg_name)
76             make_vars.append("$({pkg_var})".format(pkg_var=pkg_var))
77         return make_vars
78
79     def create_makefile_var_global(self, var_name, operator, values):
80         make_var = [self.create_makefile_var_name_global(var_name)]
81         make_var.extend(self.create_makefile_var_common(operator, values))
82         return make_var
83
84     def create_makefile_var_pkg(self, var_name, pkg_name, operator, values):
85         make_var = [self.create_makefile_var_name_pkg(var_name, pkg_name)]
86         make_var.extend(self.create_makefile_var_common(operator, values))
87         return make_var
88
89     def create_makefile_var_common(self, operator, values):
90         return [operator, self.makefile_line_continuation, self.create_makefile_var_value(values),
91                 self.makefile_newline]
92
93     @staticmethod
94     def create_makefile_var_name_global(var_name):
95         return "CONAN_{var}".format(var=var_name).upper()
96
97     @staticmethod
98     def create_makefile_var_name_pkg(var_name, pkg_name):
99         return "CONAN_{var}_{lib}".format(var=var_name, lib=pkg_name).upper()
100
101     def create_makefile_var_value(self, values):
102         formatted_values = [value.replace("\\", "/") for value in values]
103         return self.makefile_line_continuation.join(formatted_values)
104
105     @staticmethod
106     def all_dep_vars():
107         return ["rootpath", "sysroot", "include_paths", "lib_paths", "bin_paths", "build_paths",
108                 "res_paths", "libs", "defines", "cflags", "cppflags", "sharedlinkflags",
109                 "exelinkflags"]
110
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/conans/client/generators/make.py b/conans/client/generators/make.py

--- a/conans/client/generators/make.py

+++ b/conans/client/generators/make.py

@@ -47,15 +47,15 @@

vars_info = [("ROOT", self.assignment_if_absent, [cpp_info.rootpath]),

("SYSROOT", self.assignment_if_absent, [cpp_info.sysroot]),

- ("INCLUDE_PATHS", self.assignment_append, cpp_info.include_paths),

- ("LIB_PATHS", self.assignment_append, cpp_info.lib_paths),

- ("BIN_PATHS", self.assignment_append, cpp_info.bin_paths),

- ("BUILD_PATHS", self.assignment_append, cpp_info.build_paths),

- ("RES_PATHS", self.assignment_append, cpp_info.res_paths),

+ ("INCLUDE_DIRS", self.assignment_append, cpp_info.include_paths),

+ ("LIB_DIRS", self.assignment_append, cpp_info.lib_paths),

+ ("BIN_DIRS", self.assignment_append, cpp_info.bin_paths),

+ ("BUILD_DIRS", self.assignment_append, cpp_info.build_paths),

+ ("RES_DIRS", self.assignment_append, cpp_info.res_paths),

("LIBS", self.assignment_append, cpp_info.libs),

("DEFINES", self.assignment_append, cpp_info.defines),

("CFLAGS", self.assignment_append, cpp_info.cflags),

- ("CPPFLAGS", self.assignment_append, cpp_info.cppflags),

+ ("CXXFLAGS", self.assignment_append, cpp_info.cppflags),

("SHAREDLINKFLAGS", self.assignment_append, cpp_info.sharedlinkflags),

("EXELINKFLAGS", self.assignment_append, cpp_info.exelinkflags)]

@@ -104,6 +104,6 @@

@staticmethod

def all_dep_vars():

- return ["rootpath", "sysroot", "include_paths", "lib_paths", "bin_paths", "build_paths",

- "res_paths", "libs", "defines", "cflags", "cppflags", "sharedlinkflags",

+ return ["rootpath", "sysroot", "include_dirs", "lib_dirs", "bin_dirs", "build_dirs",

+ "res_dirs", "libs", "defines", "cflags", "cxxflags", "sharedlinkflags",

"exelinkflags"]

| {"golden_diff": "diff --git a/conans/client/generators/make.py b/conans/client/generators/make.py\n--- a/conans/client/generators/make.py\n+++ b/conans/client/generators/make.py\n@@ -47,15 +47,15 @@\n \n vars_info = [(\"ROOT\", self.assignment_if_absent, [cpp_info.rootpath]),\n (\"SYSROOT\", self.assignment_if_absent, [cpp_info.sysroot]),\n- (\"INCLUDE_PATHS\", self.assignment_append, cpp_info.include_paths),\n- (\"LIB_PATHS\", self.assignment_append, cpp_info.lib_paths),\n- (\"BIN_PATHS\", self.assignment_append, cpp_info.bin_paths),\n- (\"BUILD_PATHS\", self.assignment_append, cpp_info.build_paths),\n- (\"RES_PATHS\", self.assignment_append, cpp_info.res_paths),\n+ (\"INCLUDE_DIRS\", self.assignment_append, cpp_info.include_paths),\n+ (\"LIB_DIRS\", self.assignment_append, cpp_info.lib_paths),\n+ (\"BIN_DIRS\", self.assignment_append, cpp_info.bin_paths),\n+ (\"BUILD_DIRS\", self.assignment_append, cpp_info.build_paths),\n+ (\"RES_DIRS\", self.assignment_append, cpp_info.res_paths),\n (\"LIBS\", self.assignment_append, cpp_info.libs),\n (\"DEFINES\", self.assignment_append, cpp_info.defines),\n (\"CFLAGS\", self.assignment_append, cpp_info.cflags),\n- (\"CPPFLAGS\", self.assignment_append, cpp_info.cppflags),\n+ (\"CXXFLAGS\", self.assignment_append, cpp_info.cppflags),\n (\"SHAREDLINKFLAGS\", self.assignment_append, cpp_info.sharedlinkflags),\n (\"EXELINKFLAGS\", self.assignment_append, cpp_info.exelinkflags)]\n \n@@ -104,6 +104,6 @@\n \n @staticmethod\n def all_dep_vars():\n- return [\"rootpath\", \"sysroot\", \"include_paths\", \"lib_paths\", \"bin_paths\", \"build_paths\",\n- \"res_paths\", \"libs\", \"defines\", \"cflags\", \"cppflags\", \"sharedlinkflags\",\n+ return [\"rootpath\", \"sysroot\", \"include_dirs\", \"lib_dirs\", \"bin_dirs\", \"build_dirs\",\n+ \"res_dirs\", \"libs\", \"defines\", \"cflags\", \"cxxflags\", \"sharedlinkflags\",\n \"exelinkflags\"]\n", "issue": "Fix experimental make generator CONAN_CPPFLAGS and CONAN_INCLUDE_PATHS\nFollowing 
the conversation here https://github.com/conan-io/conan/issues/4286#issuecomment-454194188\r\n\r\nWe have finally reached the conclusion of having ``cpp_info.cppflags`` converted to ``CONAN_CXXFLAGS`` in the ``make`` generator to be consistent with orhter generators such as ``cmake``.\r\n\r\nAlso the flag ``CONAN_INCLUDE_PATHS`` should be renamed to ``CONAN_INCLUDE_DIRS`` for the same reason.\r\n\r\nIn another issue we would probably introduce a ``cpp_info.cxxflags`` that would be an internal alias of ``cpp_info.cppflags`` to avoid this confusion without breaking.\r\n\r\ncc/ @solvingj \n", "before_files": [{"content": "from conans.model import Generator\nfrom conans.paths import BUILD_INFO_MAKE\n\n\nclass MakeGenerator(Generator):\n\n def __init__(self, conanfile):\n Generator.__init__(self, conanfile)\n self.makefile_newline = \"\\n\"\n self.makefile_line_continuation = \" \\\\\\n\"\n self.assignment_if_absent = \" ?= \"\n self.assignment_append = \" += \"\n\n @property\n def filename(self):\n return BUILD_INFO_MAKE\n\n @property\n def content(self):\n\n content = [\n \"#-------------------------------------------------------------------#\",\n \"# Makefile variables from Conan Dependencies #\",\n \"#-------------------------------------------------------------------#\",\n \"\",\n ]\n\n for line_as_list in self.create_deps_content():\n content.append(\"\".join(line_as_list))\n\n content.append(\"#-------------------------------------------------------------------#\")\n content.append(self.makefile_newline)\n return self.makefile_newline.join(content)\n\n def create_deps_content(self):\n deps_content = self.create_content_from_deps()\n deps_content.extend(self.create_combined_content())\n return deps_content\n\n def create_content_from_deps(self):\n content = []\n for pkg_name, cpp_info in self.deps_build_info.dependencies:\n content.extend(self.create_content_from_dep(pkg_name, cpp_info))\n return content\n\n def create_content_from_dep(self, pkg_name, 
cpp_info):\n\n vars_info = [(\"ROOT\", self.assignment_if_absent, [cpp_info.rootpath]),\n (\"SYSROOT\", self.assignment_if_absent, [cpp_info.sysroot]),\n (\"INCLUDE_PATHS\", self.assignment_append, cpp_info.include_paths),\n (\"LIB_PATHS\", self.assignment_append, cpp_info.lib_paths),\n (\"BIN_PATHS\", self.assignment_append, cpp_info.bin_paths),\n (\"BUILD_PATHS\", self.assignment_append, cpp_info.build_paths),\n (\"RES_PATHS\", self.assignment_append, cpp_info.res_paths),\n (\"LIBS\", self.assignment_append, cpp_info.libs),\n (\"DEFINES\", self.assignment_append, cpp_info.defines),\n (\"CFLAGS\", self.assignment_append, cpp_info.cflags),\n (\"CPPFLAGS\", self.assignment_append, cpp_info.cppflags),\n (\"SHAREDLINKFLAGS\", self.assignment_append, cpp_info.sharedlinkflags),\n (\"EXELINKFLAGS\", self.assignment_append, cpp_info.exelinkflags)]\n\n return [self.create_makefile_var_pkg(var_name, pkg_name, operator, info)\n for var_name, operator, info in vars_info]\n\n def create_combined_content(self):\n content = []\n for var_name in self.all_dep_vars():\n content.append(self.create_makefile_var_global(var_name, self.assignment_append,\n self.create_combined_var_list(var_name)))\n return content\n\n def create_combined_var_list(self, var_name):\n make_vars = []\n for pkg_name, _ in self.deps_build_info.dependencies:\n pkg_var = self.create_makefile_var_name_pkg(var_name, pkg_name)\n make_vars.append(\"$({pkg_var})\".format(pkg_var=pkg_var))\n return make_vars\n\n def create_makefile_var_global(self, var_name, operator, values):\n make_var = [self.create_makefile_var_name_global(var_name)]\n make_var.extend(self.create_makefile_var_common(operator, values))\n return make_var\n\n def create_makefile_var_pkg(self, var_name, pkg_name, operator, values):\n make_var = [self.create_makefile_var_name_pkg(var_name, pkg_name)]\n make_var.extend(self.create_makefile_var_common(operator, values))\n return make_var\n\n def create_makefile_var_common(self, operator, values):\n 
return [operator, self.makefile_line_continuation, self.create_makefile_var_value(values),\n self.makefile_newline]\n\n @staticmethod\n def create_makefile_var_name_global(var_name):\n return \"CONAN_{var}\".format(var=var_name).upper()\n\n @staticmethod\n def create_makefile_var_name_pkg(var_name, pkg_name):\n return \"CONAN_{var}_{lib}\".format(var=var_name, lib=pkg_name).upper()\n\n def create_makefile_var_value(self, values):\n formatted_values = [value.replace(\"\\\\\", \"/\") for value in values]\n return self.makefile_line_continuation.join(formatted_values)\n\n @staticmethod\n def all_dep_vars():\n return [\"rootpath\", \"sysroot\", \"include_paths\", \"lib_paths\", \"bin_paths\", \"build_paths\",\n \"res_paths\", \"libs\", \"defines\", \"cflags\", \"cppflags\", \"sharedlinkflags\",\n \"exelinkflags\"]\n", "path": "conans/client/generators/make.py"}], "after_files": [{"content": "from conans.model import Generator\nfrom conans.paths import BUILD_INFO_MAKE\n\n\nclass MakeGenerator(Generator):\n\n def __init__(self, conanfile):\n Generator.__init__(self, conanfile)\n self.makefile_newline = \"\\n\"\n self.makefile_line_continuation = \" \\\\\\n\"\n self.assignment_if_absent = \" ?= \"\n self.assignment_append = \" += \"\n\n @property\n def filename(self):\n return BUILD_INFO_MAKE\n\n @property\n def content(self):\n\n content = [\n \"#-------------------------------------------------------------------#\",\n \"# Makefile variables from Conan Dependencies #\",\n \"#-------------------------------------------------------------------#\",\n \"\",\n ]\n\n for line_as_list in self.create_deps_content():\n content.append(\"\".join(line_as_list))\n\n content.append(\"#-------------------------------------------------------------------#\")\n content.append(self.makefile_newline)\n return self.makefile_newline.join(content)\n\n def create_deps_content(self):\n deps_content = self.create_content_from_deps()\n deps_content.extend(self.create_combined_content())\n return 
deps_content\n\n def create_content_from_deps(self):\n content = []\n for pkg_name, cpp_info in self.deps_build_info.dependencies:\n content.extend(self.create_content_from_dep(pkg_name, cpp_info))\n return content\n\n def create_content_from_dep(self, pkg_name, cpp_info):\n\n vars_info = [(\"ROOT\", self.assignment_if_absent, [cpp_info.rootpath]),\n (\"SYSROOT\", self.assignment_if_absent, [cpp_info.sysroot]),\n (\"INCLUDE_DIRS\", self.assignment_append, cpp_info.include_paths),\n (\"LIB_DIRS\", self.assignment_append, cpp_info.lib_paths),\n (\"BIN_DIRS\", self.assignment_append, cpp_info.bin_paths),\n (\"BUILD_DIRS\", self.assignment_append, cpp_info.build_paths),\n (\"RES_DIRS\", self.assignment_append, cpp_info.res_paths),\n (\"LIBS\", self.assignment_append, cpp_info.libs),\n (\"DEFINES\", self.assignment_append, cpp_info.defines),\n (\"CFLAGS\", self.assignment_append, cpp_info.cflags),\n (\"CXXFLAGS\", self.assignment_append, cpp_info.cppflags),\n (\"SHAREDLINKFLAGS\", self.assignment_append, cpp_info.sharedlinkflags),\n (\"EXELINKFLAGS\", self.assignment_append, cpp_info.exelinkflags)]\n\n return [self.create_makefile_var_pkg(var_name, pkg_name, operator, info)\n for var_name, operator, info in vars_info]\n\n def create_combined_content(self):\n content = []\n for var_name in self.all_dep_vars():\n content.append(self.create_makefile_var_global(var_name, self.assignment_append,\n self.create_combined_var_list(var_name)))\n return content\n\n def create_combined_var_list(self, var_name):\n make_vars = []\n for pkg_name, _ in self.deps_build_info.dependencies:\n pkg_var = self.create_makefile_var_name_pkg(var_name, pkg_name)\n make_vars.append(\"$({pkg_var})\".format(pkg_var=pkg_var))\n return make_vars\n\n def create_makefile_var_global(self, var_name, operator, values):\n make_var = [self.create_makefile_var_name_global(var_name)]\n make_var.extend(self.create_makefile_var_common(operator, values))\n return make_var\n\n def create_makefile_var_pkg(self, 
var_name, pkg_name, operator, values):\n make_var = [self.create_makefile_var_name_pkg(var_name, pkg_name)]\n make_var.extend(self.create_makefile_var_common(operator, values))\n return make_var\n\n def create_makefile_var_common(self, operator, values):\n return [operator, self.makefile_line_continuation, self.create_makefile_var_value(values),\n self.makefile_newline]\n\n @staticmethod\n def create_makefile_var_name_global(var_name):\n return \"CONAN_{var}\".format(var=var_name).upper()\n\n @staticmethod\n def create_makefile_var_name_pkg(var_name, pkg_name):\n return \"CONAN_{var}_{lib}\".format(var=var_name, lib=pkg_name).upper()\n\n def create_makefile_var_value(self, values):\n formatted_values = [value.replace(\"\\\\\", \"/\") for value in values]\n return self.makefile_line_continuation.join(formatted_values)\n\n @staticmethod\n def all_dep_vars():\n return [\"rootpath\", \"sysroot\", \"include_dirs\", \"lib_dirs\", \"bin_dirs\", \"build_dirs\",\n \"res_dirs\", \"libs\", \"defines\", \"cflags\", \"cxxflags\", \"sharedlinkflags\",\n \"exelinkflags\"]\n", "path": "conans/client/generators/make.py"}]} | 1,622 | 498 |

gh_patches_debug_13502 | rasdani/github-patches | git_diff | mne-tools__mne-bids-111 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

prune dependencies that we do not really depend on

As became apparent in a discussion with @agramfort and @jasmainak, we probably do not need the `environment.yml` and instead should rely on minimal dependencies such as numpy, scipy, and matplotlib.

If we decide to keep the `environment.yml` for convenience during installation, we should at least prune it.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mne_bids/datasets.py`

Content:

```
1 """Helper functions to fetch data to work with."""
2 # Authors: Mainak Jas <[email protected]>
3 #          Alexandre Gramfort <[email protected]>
4 #          Teon Brooks <[email protected]>
5 #          Stefan Appelhoff <[email protected]>
6 #
7 # License: BSD (3-clause)
8
9 import os
10 import os.path as op
11 import shutil
12 import tarfile
13 import requests
14
15 from mne.utils import _fetch_file
16
17
18 def fetch_faces_data(data_path=None, repo='ds000117', subject_ids=[1]):
19     """Dataset fetcher for OpenfMRI dataset ds000117.
20
21     Parameters
22     ----------
23     data_path : str | None
24         Path to the folder where data is stored. Defaults to
25         '~/mne_data/mne_bids_examples'
26     repo : str
27         The folder name. Defaults to 'ds000117'.
28     subject_ids : list of int
29         The subjects to fetch. Defaults to [1], downloading subject 1.
30
31     Returns
32     -------
33     data_path : str
34         Path to the folder where data is stored.
35
36     """
37     if not data_path:
38         home = os.path.expanduser('~')
39         data_path = os.path.join(home, 'mne_data', 'mne_bids_examples')
40         if not os.path.exists(data_path):
41             os.makedirs(data_path)
42
43     for subject_id in subject_ids:
44         src_url = ('http://openfmri.s3.amazonaws.com/tarballs/'
45                    'ds117_R0.1.1_sub%03d_raw.tgz' % subject_id)
46         tar_fname = op.join(data_path, repo + '.tgz')
47         target_dir = op.join(data_path, repo)
48         if not op.exists(target_dir):
49             if not op.exists(tar_fname):
50                 _fetch_file(url=src_url, file_name=tar_fname,
51                             print_destination=True, resume=True, timeout=10.)
52             tf = tarfile.open(tar_fname)
53             print('Extracting files. This may take a while ...')
54             tf.extractall(path=data_path)
55             shutil.move(op.join(data_path, 'ds117'), target_dir)
56             os.remove(tar_fname)
57
58     return data_path
59
60
61 def fetch_brainvision_testing_data(data_path=None):
62     """Download the MNE-Python testing data for the BrainVision format.
63
64     Parameters
65     ----------
66     data_path : str | None
67         Path to the folder where data is stored. Defaults to
68         '~/mne_data/mne_bids_examples'
69
70     Returns
71     -------
72     data_path : str
73         Path to the folder where data is stored.
74
75     """
76     if not data_path:
77         home = os.path.expanduser('~')
78         data_path = os.path.join(home, 'mne_data', 'mne_bids_examples')
79         if not os.path.exists(data_path):
80             os.makedirs(data_path)
81
82     base_url = 'https://github.com/mne-tools/mne-python/'
83     base_url += 'raw/master/mne/io/brainvision/tests/data/test'
84     file_endings = ['.vhdr', '.vmrk', '.eeg', ]
85
86     for f_ending in file_endings:
87         url = base_url + f_ending
88         response = requests.get(url)
89
90         fname = os.path.join(data_path, 'test' + f_ending)
91         with open(fname, 'wb') as fout:
92             fout.write(response.content)
93
94     return data_path
95
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/mne_bids/datasets.py b/mne_bids/datasets.py

--- a/mne_bids/datasets.py

+++ b/mne_bids/datasets.py

@@ -10,7 +10,7 @@

import os.path as op

import shutil

import tarfile

-import requests

+from six.moves import urllib

from mne.utils import _fetch_file

@@ -85,10 +85,10 @@

for f_ending in file_endings:

url = base_url + f_ending

- response = requests.get(url)

+ response = urllib.request.urlopen(url)

fname = os.path.join(data_path, 'test' + f_ending)

with open(fname, 'wb') as fout:

- fout.write(response.content)

+ fout.write(response.read())

return data_path