text

stringlengths 2

100k

| meta

dict |

|---|---|

//

// GrowlApplicationBridge.h

// Growl

//

// Created by Evan Schoenberg on Wed Jun 16 2004.

// Copyright 2004-2006 The Growl Project. All rights reserved.

//

/*!

* @header GrowlApplicationBridge.h

* @abstract Defines the GrowlApplicationBridge class.

* @discussion This header defines the GrowlApplicationBridge class as well as

* the GROWL_PREFPANE_BUNDLE_IDENTIFIER constant.

*/

#ifndef __GrowlApplicationBridge_h__

#define __GrowlApplicationBridge_h__

#import <Foundation/Foundation.h>

#import <AppKit/AppKit.h>

#import <Growl/GrowlDefines.h>

//Forward declarations

@protocol GrowlApplicationBridgeDelegate;

//------------------------------------------------------------------------------

#pragma mark -

/*!

* @class GrowlApplicationBridge

* @abstract A class used to interface with Growl.

* @discussion This class provides a means to interface with Growl.

*

* Currently it provides a way to detect if Growl is installed and launch the

* GrowlHelperApp if it's not already running.

*/

@interface GrowlApplicationBridge : NSObject {

}

/*!

* @method isGrowlInstalled

* @abstract Detects whether Growl is installed.

* @discussion Determines if the Growl prefpane and its helper app are installed.

* @result Always returns YES.

*/

+ (BOOL) isGrowlInstalled __attribute__((deprecated));

/*!

* @method isGrowlRunning

* @abstract Detects whether GrowlHelperApp is currently running.

* @discussion Cycles through the process list to find whether GrowlHelperApp is running and returns its findings.

* @result Returns YES if GrowlHelperApp is running, NO otherwise.

*/

+ (BOOL) isGrowlRunning;

/*!

* @method isMistEnabled

* @abstract Gives the caller a fairly good indication of whether or not built-in notifications (Mist) will be used.

* @discussion Since this call makes use of isGrowlRunning, it is entirely possible for this value to change between the call and

* the dispatch of a notification.

* @result Returns YES if Growl isn't reachable, the developer has not opted out of

* Mist, and the user hasn't set the global Mist enable key to false.

*/

+ (BOOL)isMistEnabled;

/*!

* @method setShouldUseBuiltInNotifications

* @abstract Opt-out mechanism for the Mist notification style in the event Growl can't be reached.

* @discussion If Growl is unavailable, either because it is not installed or because it has been turned off,

* this option can enable or disable a built-in fire-and-forget display style.

* @param should Specifies whether the developer wants to opt in (the default) or opt out

* of the built-in Mist style in the event Growl is unreachable.

*/

+ (void)setShouldUseBuiltInNotifications:(BOOL)should;

/*!

* @method shouldUseBuiltInNotifications

* @abstract Returns the current opt-in state of the framework's use of the Mist display style.

* @result Returns NO if the developer opted out of Mist; the default value is YES.

*/

+ (BOOL)shouldUseBuiltInNotifications;

#pragma mark -

/*!

* @method setGrowlDelegate:

* @abstract Set the object which will be responsible for providing and receiving Growl information.

* @discussion This must be called before using GrowlApplicationBridge.

*

* The methods in the GrowlApplicationBridgeDelegate protocol are required

* and return the basic information needed to register with Growl.

*

* The methods in the GrowlApplicationBridgeDelegate_InformalProtocol

* informal protocol are individually optional. They provide a greater

* degree of interaction between the application and Growl, such as informing

* the application when one of its Growl notifications is clicked by the user.

*

* The methods in the GrowlApplicationBridgeDelegate_Installation_InformalProtocol

* informal protocol are individually optional and are only applicable when

* using the Growl-WithInstaller.framework which allows for automated Growl

* installation.

*

* When this method is called, data will be collected from inDelegate, Growl

* will be launched if it is not already running, and the application will be

* registered with Growl.

*

* If using the Growl-WithInstaller framework, if Growl is already installed

* but this copy of the framework has an updated version of Growl, the user

* will be prompted to update automatically.

*

* @param inDelegate The delegate for the GrowlApplicationBridge. It must conform to the GrowlApplicationBridgeDelegate protocol.

*/

+ (void) setGrowlDelegate:(NSObject<GrowlApplicationBridgeDelegate> *)inDelegate;

/*!

* @method growlDelegate

* @abstract Return the object responsible for providing and receiving Growl information.

* @discussion See setGrowlDelegate: for details.

* @result The Growl delegate.

*/

+ (NSObject<GrowlApplicationBridgeDelegate> *) growlDelegate;

#pragma mark -

/*!

* @method notifyWithTitle:description:notificationName:iconData:priority:isSticky:clickContext:

* @abstract Send a Growl notification.

* @discussion This is the preferred means for sending a Growl notification.

* The notification name and at least one of the title and description are

* required (all three are preferred). All other parameters may be

* <code>nil</code> (or 0 or NO as appropriate) to accept default values.

*

* If using the Growl-WithInstaller framework, if Growl is not installed the

* user will be prompted to install Growl. If the user cancels, this method

* will have no effect until the next application session, at which time when

* it is called the user will be prompted again. The user is also given the

* option to not be prompted again. If the user does choose to install Growl,

* the requested notification will be displayed once Growl is installed and

* running.

*

* @param title The title of the notification displayed to the user.

* @param description The full description of the notification displayed to the user.

* @param notifName The internal name of the notification. Should be human-readable, as it will be displayed in the Growl preference pane.

* @param iconData <code>NSData</code> object to show with the notification as its icon. If <code>nil</code>, the application's icon will be used instead.

* @param priority The priority of the notification. The default value is 0; positive values are higher priority and negative values are lower priority. Not all Growl displays support priority.

* @param isSticky If YES, the notification will remain on screen until clicked. Not all Growl displays support sticky notifications.

* @param clickContext A context passed back to the Growl delegate if it implements -(void)growlNotificationWasClicked: and the notification is clicked. Not all display plugins support clicking. The clickContext must be plist-encodable (composed entirely of <code>NSString</code>, <code>NSArray</code>, <code>NSNumber</code>, <code>NSDictionary</code>, and <code>NSData</code> types).

*/

+ (void) notifyWithTitle:(NSString *)title

description:(NSString *)description

notificationName:(NSString *)notifName

iconData:(NSData *)iconData

priority:(signed int)priority

isSticky:(BOOL)isSticky

clickContext:(id)clickContext;

/*!

* @method notifyWithTitle:description:notificationName:iconData:priority:isSticky:clickContext:identifier:

* @abstract Send a Growl notification.

* @discussion This is the preferred means for sending a Growl notification.

* The notification name and at least one of the title and description are

* required (all three are preferred). All other parameters may be

* <code>nil</code> (or 0 or NO as appropriate) to accept default values.

*

* If using the Growl-WithInstaller framework, if Growl is not installed the

* user will be prompted to install Growl. If the user cancels, this method

* will have no effect until the next application session, at which time when

* it is called the user will be prompted again. The user is also given the

* option to not be prompted again. If the user does choose to install Growl,

* the requested notification will be displayed once Growl is installed and

* running.

*

* @param title The title of the notification displayed to the user.

* @param description The full description of the notification displayed to the user.

* @param notifName The internal name of the notification. Should be human-readable, as it will be displayed in the Growl preference pane.

* @param iconData <code>NSData</code> object to show with the notification as its icon. If <code>nil</code>, the application's icon will be used instead.

* @param priority The priority of the notification. The default value is 0; positive values are higher priority and negative values are lower priority. Not all Growl displays support priority.

* @param isSticky If YES, the notification will remain on screen until clicked. Not all Growl displays support sticky notifications.

* @param clickContext A context passed back to the Growl delegate if it implements -(void)growlNotificationWasClicked: and the notification is clicked. Not all display plugins support clicking. The clickContext must be plist-encodable (composed entirely of <code>NSString</code>, <code>NSArray</code>, <code>NSNumber</code>, <code>NSDictionary</code>, and <code>NSData</code> types).

* @param identifier An identifier for this notification. Notifications with equal identifiers are coalesced.

*/

+ (void) notifyWithTitle:(NSString *)title

description:(NSString *)description

notificationName:(NSString *)notifName

iconData:(NSData *)iconData

priority:(signed int)priority

isSticky:(BOOL)isSticky

clickContext:(id)clickContext

identifier:(NSString *)identifier;

/*! @method notifyWithDictionary:

* @abstract Notifies using a userInfo dictionary suitable for passing to

* <code>NSDistributedNotificationCenter</code>.

* @param userInfo The dictionary to notify with.

* @discussion Before Growl 0.6, your application would have posted

* notifications using <code>NSDistributedNotificationCenter</code> by

* creating a userInfo dictionary with the notification data. This had the

* advantage of allowing you to add other data to the dictionary for programs

* besides Growl that might be listening.

*

* This method allows you to use such dictionaries without being restricted

* to using <code>NSDistributedNotificationCenter</code>. The keys for this dictionary

* can be found in GrowlDefines.h.

*/

+ (void) notifyWithDictionary:(NSDictionary *)userInfo;

#pragma mark -

/*! @method registerWithDictionary:

* @abstract Register your application with Growl without setting a delegate.

* @discussion When you call this method with a dictionary,

* GrowlApplicationBridge registers your application using that dictionary.

* If you pass <code>nil</code>, GrowlApplicationBridge will ask the delegate

* (if there is one) for a dictionary, and if that doesn't work, it will look

* in your application's bundle for an auto-discoverable plist.

* (XXX refer to more information on that)

*

* If you pass a dictionary to this method, it must include the

* <code>GROWL_APP_NAME</code> key, unless a delegate is set.

*

* This method is mainly an alternative to the delegate system introduced

* with Growl 0.6. Without a delegate, you cannot receive callbacks such as

* <code>-growlIsReady</code> (since they are sent to the delegate). You can,

* however, set a delegate after registering without one.

*

* This method was introduced in Growl.framework 0.7.

*/

+ (BOOL) registerWithDictionary:(NSDictionary *)regDict;

/*! @method reregisterGrowlNotifications

* @abstract Reregister the notifications for this application.

* @discussion This method does not normally need to be called. If your

* application changes what notifications it is registering with Growl, call

* this method to have the Growl delegate's

* <code>-registrationDictionaryForGrowl</code> method called again and the

* Growl registration information updated.

*

* This method is now implemented using <code>+registerWithDictionary:</code>.

*/

+ (void) reregisterGrowlNotifications;

#pragma mark -

/*! @method setWillRegisterWhenGrowlIsReady:

* @abstract Tells GrowlApplicationBridge to register with Growl when Growl

* launches (or not).

* @discussion When Growl has started listening for notifications, it posts a

* <code>GROWL_IS_READY</code> notification on the Distributed Notification

* Center. GrowlApplicationBridge listens for this notification, using it to

* perform various tasks (such as calling your delegate's

* <code>-growlIsReady</code> method, if it has one). If this method is

* called with <code>YES</code>, one of those tasks will be to reregister

* with Growl (in the manner of <code>+reregisterGrowlNotifications</code>).

*

* This attribute is automatically set back to <code>NO</code> (the default)

* after every <code>GROWL_IS_READY</code> notification.

* @param flag <code>YES</code> if you want GrowlApplicationBridge to register with

* Growl when next it is ready; <code>NO</code> if not.

*/

+ (void) setWillRegisterWhenGrowlIsReady:(BOOL)flag;

/*! @method willRegisterWhenGrowlIsReady

* @abstract Reports whether GrowlApplicationBridge will register with Growl

* when Growl next launches.

* @result <code>YES</code> if GrowlApplicationBridge will register with Growl

* when next it posts GROWL_IS_READY; <code>NO</code> if not.

*/

+ (BOOL) willRegisterWhenGrowlIsReady;

#pragma mark -

/*! @method registrationDictionaryFromDelegate

* @abstract Asks the delegate for a registration dictionary.

* @discussion If no delegate is set, or if the delegate's

* <code>-registrationDictionaryForGrowl</code> method returns

* <code>nil</code>, this method returns <code>nil</code>.

*

* This method does not attempt to clean up the dictionary in any way - for

* example, if it is missing the <code>GROWL_APP_NAME</code> key, the result

* will be missing it too. Use <code>+[GrowlApplicationBridge

* registrationDictionaryByFillingInDictionary:]</code> or

* <code>+[GrowlApplicationBridge

* registrationDictionaryByFillingInDictionary:restrictToKeys:]</code> to try

* to fill in missing keys.

*

* This method was introduced in Growl.framework 0.7.

* @result A registration dictionary.

*/

+ (NSDictionary *) registrationDictionaryFromDelegate;

/*! @method registrationDictionaryFromBundle:

* @abstract Looks in a bundle for a registration dictionary.

* @discussion This method looks in a bundle for an auto-discoverable

* registration dictionary file using <code>-[NSBundle

* pathForResource:ofType:]</code>. If it finds one, it loads the file using

* <code>+[NSDictionary dictionaryWithContentsOfFile:]</code> and returns the

* result.

*

* If you pass <code>nil</code> as the bundle, the main bundle is examined.

*

* This method does not attempt to clean up the dictionary in any way - for

* example, if it is missing the <code>GROWL_APP_NAME</code> key, the result

* will be missing it too. Use <code>+[GrowlApplicationBridge

* registrationDictionaryByFillingInDictionary:]</code> or

* <code>+[GrowlApplicationBridge

* registrationDictionaryByFillingInDictionary:restrictToKeys:]</code> to try

* to fill in missing keys.

*

* This method was introduced in Growl.framework 0.7.

* @result A registration dictionary.

*/

+ (NSDictionary *) registrationDictionaryFromBundle:(NSBundle *)bundle;

/*! @method bestRegistrationDictionary

* @abstract Obtains a registration dictionary, filled out to the best of

* GrowlApplicationBridge's knowledge.

* @discussion This method creates a registration dictionary as best

* GrowlApplicationBridge knows how.

*

* First, GrowlApplicationBridge contacts the Growl delegate (if there is

* one) and gets the registration dictionary from that. If no such dictionary

* was obtained, GrowlApplicationBridge looks in your application's main

* bundle for an auto-discoverable registration dictionary file. If that

* doesn't exist either, this method returns <code>nil</code>.

*

* Second, GrowlApplicationBridge calls

* <code>+registrationDictionaryByFillingInDictionary:</code> with whatever

* dictionary was obtained. The result of that method is the result of this

* method.

*

* GrowlApplicationBridge uses this method when you call

* <code>+setGrowlDelegate:</code>, or when you call

* <code>+registerWithDictionary:</code> with <code>nil</code>.

*

* This method was introduced in Growl.framework 0.7.

* @result A registration dictionary.

*/

+ (NSDictionary *) bestRegistrationDictionary;

#pragma mark -

/*! @method registrationDictionaryByFillingInDictionary:

* @abstract Tries to fill in missing keys in a registration dictionary.

* @discussion This method examines the passed-in dictionary for missing keys,

* and tries to work out correct values for them. As of 0.7, it uses:

*

* Key Value

* --- -----

* <code>GROWL_APP_NAME</code> <code>CFBundleExecutableName</code>

* <code>GROWL_APP_ICON_DATA</code> The data of the icon of the application.

* <code>GROWL_APP_LOCATION</code> The location of the application.

* <code>GROWL_NOTIFICATIONS_DEFAULT</code> <code>GROWL_NOTIFICATIONS_ALL</code>

*

* Keys are only filled in if missing; if a key is present in the dictionary,

* its value will not be changed.

*

* This method was introduced in Growl.framework 0.7.

* @param regDict The dictionary to fill in.

* @result The dictionary with the keys filled in. This is an autoreleased

* copy of <code>regDict</code>.

*/

+ (NSDictionary *) registrationDictionaryByFillingInDictionary:(NSDictionary *)regDict;

/*! @method registrationDictionaryByFillingInDictionary:restrictToKeys:

* @abstract Tries to fill in missing keys in a registration dictionary.

* @discussion This method examines the passed-in dictionary for missing keys,

* and tries to work out correct values for them. As of 0.7, it uses:

*

* Key Value

* --- -----

* <code>GROWL_APP_NAME</code> <code>CFBundleExecutableName</code>

* <code>GROWL_APP_ICON_DATA</code> The data of the icon of the application.

* <code>GROWL_APP_LOCATION</code> The location of the application.

* <code>GROWL_NOTIFICATIONS_DEFAULT</code> <code>GROWL_NOTIFICATIONS_ALL</code>

*

* Only those keys that are listed in <code>keys</code> will be filled in.

* Other missing keys are ignored. Also, keys are only filled in if missing;

* if a key is present in the dictionary, its value will not be changed.

*

* This method was introduced in Growl.framework 0.7.

* @param regDict The dictionary to fill in.

* @param keys The keys to fill in. If <code>nil</code>, any missing keys are filled in.

* @result The dictionary with the keys filled in. This is an autoreleased

* copy of <code>regDict</code>.

*/

+ (NSDictionary *) registrationDictionaryByFillingInDictionary:(NSDictionary *)regDict restrictToKeys:(NSSet *)keys;

/*! @brief Tries to fill in missing keys in a notification dictionary.

* @param notifDict The dictionary to fill in.

* @return The dictionary with the keys filled in. This will be a separate instance from \a notifDict.

* @discussion This function examines the \a notifDict for missing keys, and

* tries to get them from the last known registration dictionary. As of 1.1,

* the keys that it will look for are:

*

* \li <code>GROWL_APP_NAME</code>

* \li <code>GROWL_APP_ICON_DATA</code>

*

* @since Growl.framework 1.1

*/

+ (NSDictionary *) notificationDictionaryByFillingInDictionary:(NSDictionary *)notifDict;

+ (NSDictionary *) frameworkInfoDictionary;

@end

//------------------------------------------------------------------------------

#pragma mark -

/*!

* @protocol GrowlApplicationBridgeDelegate

* @abstract Required protocol for the Growl delegate.

* @discussion The methods in this protocol are required and are called

* automatically as needed by GrowlApplicationBridge. See

* <code>+[GrowlApplicationBridge setGrowlDelegate:]</code>.

* See also <code>GrowlApplicationBridgeDelegate_InformalProtocol</code>.

*/

@protocol GrowlApplicationBridgeDelegate

// -registrationDictionaryForGrowl has moved to the informal protocol as of 0.7.

@end

//------------------------------------------------------------------------------

#pragma mark -

/*!

* @category NSObject(GrowlApplicationBridgeDelegate_InformalProtocol)

* @abstract Methods which may be optionally implemented by the GrowlDelegate.

* @discussion The methods in this informal protocol will only be called if implemented by the delegate.

*/

@interface NSObject (GrowlApplicationBridgeDelegate_InformalProtocol)

/*!

* @method registrationDictionaryForGrowl

* @abstract Return the dictionary used to register this application with Growl.

* @discussion The returned dictionary gives Growl the complete list of

* notifications this application will ever send, and it also specifies which

* notifications should be enabled by default. Each is specified by an array

* of <code>NSString</code> objects.

*

* For most applications, these two arrays can be the same (if all sent

* notifications should be displayed by default).

*

* The <code>NSString</code> objects of these arrays will correspond to the

* <code>notificationName:</code> parameter passed in

* <code>+[GrowlApplicationBridge

* notifyWithTitle:description:notificationName:iconData:priority:isSticky:clickContext:]</code> calls.

*

* The dictionary should have the required key object pairs:

* key: GROWL_NOTIFICATIONS_ALL object: <code>NSArray</code> of <code>NSString</code> objects

* key: GROWL_NOTIFICATIONS_DEFAULT object: <code>NSArray</code> of <code>NSString</code> objects

*

* The dictionary may have the following key object pairs:

* key: GROWL_NOTIFICATIONS_HUMAN_READABLE_NAMES object: <code>NSDictionary</code> of key: notification name object: human-readable notification name

*

* You do not need to implement this method if you have an auto-discoverable

* plist file in your app bundle. (XXX refer to more information on that)

*

* @result The <code>NSDictionary</code> to use for registration.

*/

- (NSDictionary *) registrationDictionaryForGrowl;

/*!

* @method applicationNameForGrowl

* @abstract Return the name of this application which will be used for Growl bookkeeping.

* @discussion This name is used both internally and in the Growl preferences.

*

* This should remain stable between different versions and incarnations of

* your application.

* For example, "SurfWriter" is a good app name, whereas "SurfWriter 2.0" and

* "SurfWriter Lite" are not.

*

* You do not need to implement this method if you are providing the

* application name elsewhere, meaning in an auto-discoverable plist file in

* your app bundle (XXX refer to more information on that) or in the result

* of -registrationDictionaryForGrowl.

*

* @result The name of the application using Growl.

*/

- (NSString *) applicationNameForGrowl;

/*!

* @method applicationIconForGrowl

* @abstract Return the <code>NSImage</code> to treat as the application icon.

* @discussion The delegate may optionally return an <code>NSImage</code>

* object to use as the application icon. If this method is not implemented,

* <code>-applicationIconDataForGrowl</code> is tried. If that method is not

* implemented, the application's own icon is used. Neither method is

* generally needed.

* @result The <code>NSImage</code> to treat as the application icon.

*/

- (NSImage *) applicationIconForGrowl;

/*!

* @method applicationIconDataForGrowl

* @abstract Return the <code>NSData</code> to treat as the application icon.

* @discussion The delegate may optionally return an <code>NSData</code>

* object to use as the application icon; if this is not implemented, the

* application's own icon is used. This is not generally needed.

* @result The <code>NSData</code> to treat as the application icon.

* @deprecated In version 1.1, in favor of <code>-applicationIconForGrowl</code>.

*/

- (NSData *) applicationIconDataForGrowl;

/*!

* @method growlIsReady

* @abstract Informs the delegate that Growl has launched.

* @discussion Informs the delegate that Growl (specifically, the

* GrowlHelperApp) was launched successfully. The application can take actions

* with the knowledge that Growl is installed and functional.

*/

- (void) growlIsReady;

/*!

* @method growlNotificationWasClicked:

* @abstract Informs the delegate that a Growl notification was clicked.

* @discussion Informs the delegate that a Growl notification was clicked. It

* is only sent for notifications sent with a non-<code>nil</code>

* clickContext, so if you want to receive a message when a notification is

* clicked, clickContext must not be <code>nil</code> when calling

* <code>+[GrowlApplicationBridge notifyWithTitle:description:notificationName:iconData:priority:isSticky:clickContext:]</code>.

* @param clickContext The clickContext passed when displaying the notification originally via +[GrowlApplicationBridge notifyWithTitle:description:notificationName:iconData:priority:isSticky:clickContext:].

*/

- (void) growlNotificationWasClicked:(id)clickContext;

/*!

* @method growlNotificationTimedOut:

* @abstract Informs the delegate that a Growl notification timed out.

* @discussion Informs the delegate that a Growl notification timed out. It

* is only sent for notifications sent with a non-<code>nil</code>

* clickContext, so if you want to receive a message when a notification

* times out, clickContext must not be <code>nil</code> when calling

* <code>+[GrowlApplicationBridge notifyWithTitle:description:notificationName:iconData:priority:isSticky:clickContext:]</code>.

* @param clickContext The clickContext passed when displaying the notification originally via +[GrowlApplicationBridge notifyWithTitle:description:notificationName:iconData:priority:isSticky:clickContext:].

*/

- (void) growlNotificationTimedOut:(id)clickContext;

/*!

* @method hasNetworkClientEntitlement

* @abstract Reports whether the application has the com.apple.security.network.client entitlement. Used only in sandboxed situations.

* @discussion GrowlApplicationBridge calls this method on the delegate to find out whether the application has the com.apple.security.network.client entitlement,

* since this cannot be determined without hitting the sandbox. It is only called if the application is detected to be sandboxed.

*/

- (BOOL) hasNetworkClientEntitlement;

@end

#pragma mark -

#endif /* __GrowlApplicationBridge_h__ */

| {

"pile_set_name": "Github"

} |

<?xml version="1.0" encoding="UTF-8"?>

<!--

Copyright (C) 2006 W3C (R) (MIT ERCIM Keio), All Rights Reserved.

W3C liability, trademark and document use rules apply.

http://www.w3.org/Consortium/Legal/ipr-notice

http://www.w3.org/Consortium/Legal/copyright-documents

Generated from: $Id: examples.xml,v 1.57 2008/02/20 16:41:48 pdowney Exp $

-->

<env:Envelope xmlns:xs="http://www.w3.org/2001/XMLSchema"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:p="http://www.w3.org/2002/ws/databinding/patterns/6/09/"

xmlns:ex="http://www.w3.org/2002/ws/databinding/examples/6/09/"

xmlns:env="http://www.w3.org/2003/05/soap-envelope">

<env:Header/>

<env:Body>

<ex:echoGlobalElementSimpleType>

<ex:globalElementSimpleType xmlns:wsdl11="http://schemas.xmlsoap.org/wsdl/"

xmlns:soap11enc="http://schemas.xmlsoap.org/soap/encoding/">value2</ex:globalElementSimpleType>

</ex:echoGlobalElementSimpleType>

</env:Body>

</env:Envelope> | {

"pile_set_name": "Github"

} |

/*

Copyright (c) 2015 Microsoft Corporation. All rights reserved.

Released under Apache 2.0 license as described in the file LICENSE.

Author: Leonardo de Moura

*/

#pragma once

#include <limits>

#include "util/rb_map.h"

namespace lean {

/** \brief A "functional" priority queue, i.e., copy is O(1).

If two keys have the same priority, then we break ties by

giving higher priority to the last one added.

The insert/erase operations are O(log n),

where n is the size of the queue.

The content of the queue can be dumped into a buffer or traversed */

template<typename K, typename CMP>

class priority_queue {

typedef pair<unsigned, unsigned> pos;

struct pos_cmp {

int operator()(pos const & p1, pos const & p2) const {

if (p1.first == p2.first)

return p1.second == p2.second ? 0 : (p1.second > p2.second ? -1 : 1);

else

return p1.first > p2.first ? -1 : 1;

}

};

unsigned m_next{0};

rb_map<K, pos, CMP> m_key_to_pos;

rb_map<pos, K, pos_cmp> m_pos_to_key;

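void normalize() {

// Rebuild the queue with fresh positions, keeping each key's priority.

buffer<pair<K, unsigned>> entries;

m_pos_to_key.for_each([&](pos const & p, K const & k) {

entries.push_back(pair<K, unsigned>(k, p.first));

});

clear();

// Entries are in traversal order (most recently added first within a priority),

// so re-insert them in reverse to preserve the tie-breaking order.

for (unsigned i = entries.size(); i > 0; i--)

insert(entries[i - 1].first, entries[i - 1].second);

}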

public:

priority_queue(CMP const & cmp = CMP()):m_key_to_pos(cmp) {}

template<typename F>

void for_each(F && f) const {

m_pos_to_key.for_each([&](pos const &, K const & k) {

f(k);

});

}

void to_buffer(buffer<K> & r) const {

for_each([&](K const & k) { r.push_back(k); });

}

bool contains(K const & k) const {

return m_key_to_pos.contains(k);

}

optional<unsigned> get_prio(K const & k) const {

if (auto p = m_key_to_pos.find(k))

return optional<unsigned>(p->first);

else

return optional<unsigned>();

}

// useful if \c CMP only compares part of \c K

K const * get_key(K const & k) const {

if (auto p = m_key_to_pos.find(k))

return m_pos_to_key.find(*p);

else

return nullptr;

}

void clear() {

m_key_to_pos.clear();

m_pos_to_key.clear();

m_next = 0;

}

void insert(K const & k, unsigned prio = 0) {

if (m_next == std::numeric_limits<unsigned>::max())

normalize();

if (auto pos = m_key_to_pos.find(k)) {

m_pos_to_key.erase(*pos);

}

m_key_to_pos.insert(k, pos(prio, m_next));

m_pos_to_key.insert(pos(prio, m_next), k);

m_next++;

}

void erase(K const & k) {

if (auto pos = m_key_to_pos.find(k)) {

m_pos_to_key.erase(*pos);

m_key_to_pos.erase(k);

}

}

};

}
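/*
Illustrative sketch (not part of the original header): the two-map technique
used by priority_queue above, rewritten against std::map so that it compiles
without the Lean utilities. The class name simple_priority_queue, the int key
type and the to_vector helper are assumptions made for this example only.
Unlike rb_map, copying a std::map is O(n), so this sketch does not have the
O(1) copy property of the original.

```cpp
#include <cassert>
#include <map>
#include <utility>
#include <vector>

// Hypothetical stand-in for lean::priority_queue, keyed by int.
class simple_priority_queue {
    // (priority, insertion counter); larger values are visited first.
    using pos = std::pair<unsigned, unsigned>;
    struct pos_greater {
        bool operator()(pos const & a, pos const & b) const { return a > b; }
    };
    unsigned m_next = 0;
    std::map<int, pos> m_key_to_pos;
    std::map<pos, int, pos_greater> m_pos_to_key;
public:
    // Inserts k, or moves it to a new position if it is already present.
    void insert(int k, unsigned prio = 0) {
        auto it = m_key_to_pos.find(k);
        if (it != m_key_to_pos.end())
            m_pos_to_key.erase(it->second);
        m_key_to_pos[k] = pos(prio, m_next);
        m_pos_to_key[pos(prio, m_next)] = k;
        m_next++;
        // Both maps must always describe the same set of keys.
        assert(m_key_to_pos.size() == m_pos_to_key.size());
    }
    void erase(int k) {
        auto it = m_key_to_pos.find(k);
        if (it == m_key_to_pos.end())
            return;
        m_pos_to_key.erase(it->second);
        m_key_to_pos.erase(it);
    }
    // Keys in traversal order: highest priority first, newest first on ties.
    std::vector<int> to_vector() const {
        std::vector<int> r;
        for (auto const & e : m_pos_to_key)
            r.push_back(e.second);
        return r;
    }
};
```

Re-inserting an existing key gives it a new insertion counter, so it moves
ahead of older keys that have the same priority.
*/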

| {

"pile_set_name": "Github"

} |

/*

* eos - A 3D Morphable Model fitting library written in modern C++11/14.

*

* File: include/eos/render/detail/utils.hpp

*

* Copyright 2014-2017 Patrik Huber

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

#pragma once

#ifndef RENDER_DETAIL_UTILS_HPP_

#define RENDER_DETAIL_UTILS_HPP_

#include "eos/core/Rect.hpp"

#include "eos/render/detail/Vertex.hpp"

#include "glm/vec2.hpp"

#include "glm/vec4.hpp"

#include "glm/geometric.hpp"

#include <algorithm>

#include <cmath>

#include <vector>

/**

* Implementations of internal functions, not part of the

* API we expose and not meant to be used by a user.

*/

namespace eos {

namespace render {

namespace detail {

/**

* Calculates the enclosing bounding box of 3 vertices (a triangle). If the

* triangle is partly outside the screen, it will be clipped appropriately.

*

* Todo: Check what happens if the triangle is fully outside the screen; it appears to work.

*

* @param[in] v0 First vertex.

* @param[in] v1 Second vertex.

* @param[in] v2 Third vertex.

* @param[in] viewport_width Screen width.

* @param[in] viewport_height Screen height.

* @return A bounding box rectangle.

*/

template <typename T, glm::precision P = glm::defaultp>

core::Rect<int> calculate_clipped_bounding_box(const glm::tvec2<T, P>& v0, const glm::tvec2<T, P>& v1,

const glm::tvec2<T, P>& v2, int viewport_width,

int viewport_height)

{

/* Old, producing artifacts:

t.minX = max(min(t.v0.position[0], min(t.v1.position[0], t.v2.position[0])), 0.0f);

t.maxX = min(max(t.v0.position[0], max(t.v1.position[0], t.v2.position[0])), (float)(viewportWidth - 1));

t.minY = max(min(t.v0.position[1], min(t.v1.position[1], t.v2.position[1])), 0.0f);

t.maxY = min(max(t.v0.position[1], max(t.v1.position[1], t.v2.position[1])), (float)(viewportHeight -

1));*/

using std::ceil;

using std::floor;

using std::max;

using std::min;

// Readded this comment after merge: What about rounding, or rather the conversion from double to int?

const int minX = max(min(floor(v0[0]), min(floor(v1[0]), floor(v2[0]))), T(0));

const int maxX = min(max(ceil(v0[0]), max(ceil(v1[0]), ceil(v2[0]))), static_cast<T>(viewport_width - 1));

const int minY = max(min(floor(v0[1]), min(floor(v1[1]), floor(v2[1]))), T(0));

const int maxY = min(max(ceil(v0[1]), max(ceil(v1[1]), ceil(v2[1]))), static_cast<T>(viewport_height - 1));

return core::Rect<int>{minX, minY, maxX - minX, maxY - minY};

}

/**

* Computes whether the triangle formed out of the given three vertices is

* counter-clockwise in screen space. Assumes the origin of the screen is on

* the top-left, and the y-axis goes down (as in OpenCV images).

*

* @param[in] v0 First vertex.

* @param[in] v1 Second vertex.

* @param[in] v2 Third vertex.

* @return Whether the vertices are CCW in screen space.

*/

template <typename T, glm::precision P = glm::defaultp>

bool are_vertices_ccw_in_screen_space(const glm::tvec2<T, P>& v0, const glm::tvec2<T, P>& v1,

const glm::tvec2<T, P>& v2)

{

const auto dx01 = v1[0] - v0[0]; // todo: replace with x/y (GLM)

const auto dy01 = v1[1] - v0[1];

const auto dx02 = v2[0] - v0[0];

const auto dy02 = v2[1] - v0[1];

return (dx01 * dy02 - dy01 * dx02 < T(0)); // Original: (dx01*dy02 - dy01*dx02 > 0.0f). But: OpenCV has origin top-left, y goes down

}

template <typename T, glm::precision P = glm::defaultp>

double implicit_line(float x, float y, const glm::tvec4<T, P>& v1, const glm::tvec4<T, P>& v2)

{

return ((double)v1[1] - (double)v2[1]) * (double)x + ((double)v2[0] - (double)v1[0]) * (double)y +

(double)v1[0] * (double)v2[1] - (double)v2[0] * (double)v1[1];

}

inline std::vector<Vertex<float>> clip_polygon_to_plane_in_4d(const std::vector<Vertex<float>>& vertices,

const glm::tvec4<float>& plane_normal)

{

std::vector<Vertex<float>> clippedVertices;

// We can have 2 cases:

// * 1 vertex visible: we make 1 new triangle out of the visible vertex plus the 2 intersection points

// with the near-plane

// * 2 vertices visible: we have a quad, so we have to make 2 new triangles out of it.

// See here for more info?

// http://math.stackexchange.com/questions/400268/equation-for-a-line-through-a-plane-in-homogeneous-coordinates

for (unsigned int i = 0; i < vertices.size(); i++)

{

const int a = i; // the current vertex

const int b = (i + 1) % vertices.size(); // the following vertex (wraps around 0)

const float fa = glm::dot(vertices[a].position, plane_normal); // Note: Shouldn't they be unit length?

const float fb = glm::dot(vertices[b].position,

plane_normal); // < 0 means on visible side, > 0 means on invisible side?

if ((fa < 0 && fb > 0) || (fa > 0 && fb < 0)) // one vertex is on the visible side of the plane, one

// on the invisible? so we need to split?

{

const auto direction = vertices[b].position - vertices[a].position;

const float t = -(glm::dot(plane_normal, vertices[a].position)) /

(glm::dot(plane_normal, direction)); // the parametric value on the line, where the line

// to draw intersects the plane?

// generate a new vertex at the line-plane intersection point

const auto position = vertices[a].position + t * direction;

const auto color = vertices[a].color + t * (vertices[b].color - vertices[a].color);

const auto texCoord =

vertices[a].texcoords +

t * (vertices[b].texcoords -

vertices[a].texcoords); // We could omit that if we don't render with texture.

if (fa < 0) // we keep the original vertex plus the new one

{

clippedVertices.push_back(vertices[a]);

clippedVertices.push_back(Vertex<float>{position, color, texCoord});

} else if (fb < 0) // we use only the new vertex

{

clippedVertices.push_back(Vertex<float>{position, color, texCoord});

}

} else if (fa < 0 && fb < 0) // both are visible (on the "good" side of the plane), no splitting

// required, use the current vertex

{

clippedVertices.push_back(vertices[a]);

}

// else, both vertices are not visible, nothing to add and draw

}

return clippedVertices;

}

/**

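* @brief Performs the perspective divide on a vertex.

*

* Takes a vertex in clip coordinates, divides it by w, and outputs

* [x_ndc, y_ndc, z_ndc, 1/w_clip] in normalised device coordinates (NDC).

* The w-component is set to 1/w_clip (which is what OpenGL passes to the FragmentShader).

*

* @param[in] vertex A vertex in clip coordinates.

* @return The vertex in NDC, with its w-component set to 1/w_clip.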

*/

template <typename T, glm::precision P = glm::defaultp>

glm::tvec4<T, P> divide_by_w(const glm::tvec4<T, P>& vertex)

{

const auto one_over_w = T(1) / vertex.w; // computed in T to avoid a double-to-T narrowing conversion

// divide by w: (if ortho, w will just be 1)

glm::tvec4<T, P> v_ndc(vertex / vertex.w);

// Set the w coord to 1/w (i.e. 1/w_clip). This is what OpenGL passes to the FragmentShader.

v_ndc.w = one_over_w;

return v_ndc;

}

} /* namespace detail */

} /* namespace render */

} /* namespace eos */

#endif /* RENDER_DETAIL_UTILS_HPP_ */

| {

"pile_set_name": "Github"

} |

[EMC]

DEBUG=0

LOG_LEVEL=0

[RS274NGC]

SUBROUTINE_PATH = .

[PYTHON]

PATH_PREPEND=.

TOPLEVEL=subs.py

| {

"pile_set_name": "Github"

} |

// Copyright 2012 Google Inc. All Rights Reserved.

//

// Use of this source code is governed by a BSD-style license

// that can be found in the COPYING file in the root of the source

// tree. An additional intellectual property rights grant can be found

// in the file PATENTS. All contributing project authors may

// be found in the AUTHORS file in the root of the source tree.

// -----------------------------------------------------------------------------

//

// TIFF decode.

#ifndef WEBP_EXAMPLES_TIFFDEC_H_

#define WEBP_EXAMPLES_TIFFDEC_H_

#ifdef __cplusplus

extern "C" {

#endif

struct Metadata;

struct WebPPicture;

// Reads a TIFF from 'filename', returning the decoded output in 'pic'.

// If 'keep_alpha' is true and the TIFF has an alpha channel, the output is RGBA

// otherwise it will be RGB.

// Returns true on success.

int ReadTIFF(const char* const filename,

struct WebPPicture* const pic, int keep_alpha,

struct Metadata* const metadata);

#ifdef __cplusplus

} // extern "C"

#endif

#endif // WEBP_EXAMPLES_TIFFDEC_H_

| {

"pile_set_name": "Github"

} |

import argparse

import ast

import pprint

import mxnet as mx

from mxnet.module import Module

from symdata.loader import AnchorGenerator, AnchorSampler, AnchorLoader

from symnet.logger import logger

from symnet.model import load_param, infer_data_shape, check_shape, initialize_frcnn, get_fixed_params

from symnet.metric import RPNAccMetric, RPNLogLossMetric, RPNL1LossMetric, RCNNAccMetric, RCNNLogLossMetric, RCNNL1LossMetric

def train_net(sym, roidb, args):
    # print config
    logger.info('called with args\n{}'.format(pprint.pformat(vars(args))))

    # setup multi-gpu
    ctx = [mx.gpu(int(i)) for i in args.gpus.split(',')]
    batch_size = args.rcnn_batch_size * len(ctx)

    # load training data
    feat_sym = sym.get_internals()['rpn_cls_score_output']
    ag = AnchorGenerator(feat_stride=args.rpn_feat_stride,
                         anchor_scales=args.rpn_anchor_scales, anchor_ratios=args.rpn_anchor_ratios)
    asp = AnchorSampler(allowed_border=args.rpn_allowed_border, batch_rois=args.rpn_batch_rois,
                        fg_fraction=args.rpn_fg_fraction, fg_overlap=args.rpn_fg_overlap,
                        bg_overlap=args.rpn_bg_overlap)
    train_data = AnchorLoader(roidb, batch_size, args.img_short_side, args.img_long_side,
                              args.img_pixel_means, args.img_pixel_stds, feat_sym, ag, asp, shuffle=True)

    # produce shape max possible
    _, out_shape, _ = feat_sym.infer_shape(data=(1, 3, args.img_long_side, args.img_long_side))
    feat_height, feat_width = out_shape[0][-2:]
    rpn_num_anchors = len(args.rpn_anchor_scales) * len(args.rpn_anchor_ratios)
    data_names = ['data', 'im_info', 'gt_boxes']
    label_names = ['label', 'bbox_target', 'bbox_weight']
    data_shapes = [('data', (batch_size, 3, args.img_long_side, args.img_long_side)),
                   ('im_info', (batch_size, 3)),
                   ('gt_boxes', (batch_size, 100, 5))]
    label_shapes = [('label', (batch_size, 1, rpn_num_anchors * feat_height, feat_width)),
                    ('bbox_target', (batch_size, 4 * rpn_num_anchors, feat_height, feat_width)),
                    ('bbox_weight', (batch_size, 4 * rpn_num_anchors, feat_height, feat_width))]

    # print shapes
    data_shape_dict, out_shape_dict = infer_data_shape(sym, data_shapes + label_shapes)
    logger.info('max input shape\n%s' % pprint.pformat(data_shape_dict))
    logger.info('max output shape\n%s' % pprint.pformat(out_shape_dict))

    # load and initialize params
    if args.resume:
        arg_params, aux_params = load_param(args.resume)
    else:
        arg_params, aux_params = load_param(args.pretrained)
        arg_params, aux_params = initialize_frcnn(sym, data_shapes, arg_params, aux_params)

    # check parameter shapes
    check_shape(sym, data_shapes + label_shapes, arg_params, aux_params)

    # check fixed params
    fixed_param_names = get_fixed_params(sym, args.net_fixed_params)
    logger.info('locking params\n%s' % pprint.pformat(fixed_param_names))

    # metric
    rpn_eval_metric = RPNAccMetric()
    rpn_cls_metric = RPNLogLossMetric()
    rpn_bbox_metric = RPNL1LossMetric()
    eval_metric = RCNNAccMetric()
    cls_metric = RCNNLogLossMetric()
    bbox_metric = RCNNL1LossMetric()
    eval_metrics = mx.metric.CompositeEvalMetric()
    for child_metric in [rpn_eval_metric, rpn_cls_metric, rpn_bbox_metric, eval_metric, cls_metric, bbox_metric]:
        eval_metrics.add(child_metric)

    # callback
    batch_end_callback = mx.callback.Speedometer(batch_size, frequent=args.log_interval, auto_reset=False)
    epoch_end_callback = mx.callback.do_checkpoint(args.save_prefix)

    # learning schedule
    base_lr = args.lr
    lr_factor = 0.1
    lr_epoch = [int(epoch) for epoch in args.lr_decay_epoch.split(',')]
    lr_epoch_diff = [epoch - args.start_epoch for epoch in lr_epoch if epoch > args.start_epoch]
    lr = base_lr * (lr_factor ** (len(lr_epoch) - len(lr_epoch_diff)))
    lr_iters = [int(epoch * len(roidb) / batch_size) for epoch in lr_epoch_diff]
    logger.info('lr %f lr_epoch_diff %s lr_iters %s' % (lr, lr_epoch_diff, lr_iters))
    lr_scheduler = mx.lr_scheduler.MultiFactorScheduler(lr_iters, lr_factor)

    # optimizer
    optimizer_params = {'momentum': 0.9,
                        'wd': 0.0005,
                        'learning_rate': lr,
                        'lr_scheduler': lr_scheduler,
                        'rescale_grad': (1.0 / batch_size),
                        'clip_gradient': 5}

    # train
    mod = Module(sym, data_names=data_names, label_names=label_names,
                 logger=logger, context=ctx, work_load_list=None,
                 fixed_param_names=fixed_param_names)
    mod.fit(train_data, eval_metric=eval_metrics, epoch_end_callback=epoch_end_callback,
            batch_end_callback=batch_end_callback, kvstore='device',
            optimizer='sgd', optimizer_params=optimizer_params,
            arg_params=arg_params, aux_params=aux_params, begin_epoch=args.start_epoch, num_epoch=args.epochs)

def parse_args():
    parser = argparse.ArgumentParser(description='Train Faster R-CNN network',
                                     formatter_class=argparse.ArgumentDefaultsHelpFormatter)
    parser.add_argument('--network', type=str, default='vgg16', help='base network')
    parser.add_argument('--pretrained', type=str, default='', help='path to pretrained model')
    parser.add_argument('--dataset', type=str, default='voc', help='training dataset')
    parser.add_argument('--imageset', type=str, default='', help='imageset splits')
    parser.add_argument('--gpus', type=str, default='0', help='gpu devices eg. 0,1')
    parser.add_argument('--epochs', type=int, default=10, help='training epochs')
    parser.add_argument('--lr', type=float, default=0.001, help='base learning rate')
    parser.add_argument('--lr-decay-epoch', type=str, default='7', help='epoch to decay lr')
    parser.add_argument('--resume', type=str, default='', help='path to last saved model')
    parser.add_argument('--start-epoch', type=int, default=0, help='start epoch for resuming')
    parser.add_argument('--log-interval', type=int, default=100, help='logging mini batch interval')
    parser.add_argument('--save-prefix', type=str, default='', help='saving params prefix')

    # faster rcnn params
    parser.add_argument('--img-short-side', type=int, default=600)
    parser.add_argument('--img-long-side', type=int, default=1000)
    parser.add_argument('--img-pixel-means', type=str, default='(0.0, 0.0, 0.0)')
    parser.add_argument('--img-pixel-stds', type=str, default='(1.0, 1.0, 1.0)')
    parser.add_argument('--net-fixed-params', type=str, default='["conv0", "stage1", "gamma", "beta"]')
    parser.add_argument('--rpn-feat-stride', type=int, default=16)
    parser.add_argument('--rpn-anchor-scales', type=str, default='(8, 16, 32)')
    parser.add_argument('--rpn-anchor-ratios', type=str, default='(0.5, 1, 2)')
    parser.add_argument('--rpn-pre-nms-topk', type=int, default=12000)
    parser.add_argument('--rpn-post-nms-topk', type=int, default=2000)
    parser.add_argument('--rpn-nms-thresh', type=float, default=0.7)
    parser.add_argument('--rpn-min-size', type=int, default=16)
    parser.add_argument('--rpn-batch-rois', type=int, default=256)
    parser.add_argument('--rpn-allowed-border', type=int, default=0)
    parser.add_argument('--rpn-fg-fraction', type=float, default=0.5)
    parser.add_argument('--rpn-fg-overlap', type=float, default=0.7)
    parser.add_argument('--rpn-bg-overlap', type=float, default=0.3)
    parser.add_argument('--rcnn-num-classes', type=int, default=21)
    parser.add_argument('--rcnn-feat-stride', type=int, default=16)
    parser.add_argument('--rcnn-pooled-size', type=str, default='(14, 14)')
    parser.add_argument('--rcnn-batch-size', type=int, default=1)
    parser.add_argument('--rcnn-batch-rois', type=int, default=128)
    parser.add_argument('--rcnn-fg-fraction', type=float, default=0.25)
    parser.add_argument('--rcnn-fg-overlap', type=float, default=0.5)
    parser.add_argument('--rcnn-bbox-stds', type=str, default='(0.1, 0.1, 0.2, 0.2)')
    args = parser.parse_args()

    # tuple- and list-valued options arrive as strings; parse them into Python literals
    args.img_pixel_means = ast.literal_eval(args.img_pixel_means)
    args.img_pixel_stds = ast.literal_eval(args.img_pixel_stds)
    args.net_fixed_params = ast.literal_eval(args.net_fixed_params)
    args.rpn_anchor_scales = ast.literal_eval(args.rpn_anchor_scales)
    args.rpn_anchor_ratios = ast.literal_eval(args.rpn_anchor_ratios)
    args.rcnn_pooled_size = ast.literal_eval(args.rcnn_pooled_size)
    args.rcnn_bbox_stds = ast.literal_eval(args.rcnn_bbox_stds)
    return args

def get_voc(args):
    from symimdb.pascal_voc import PascalVOC
    if not args.imageset:
        args.imageset = '2007_trainval'
    args.rcnn_num_classes = len(PascalVOC.classes)

    isets = args.imageset.split('+')
    roidb = []
    for iset in isets:
        imdb = PascalVOC(iset, 'data', 'data/VOCdevkit')
        imdb.filter_roidb()
        imdb.append_flipped_images()
        roidb.extend(imdb.roidb)
    return roidb

def get_coco(args):
    from symimdb.coco import coco
    if not args.imageset:
        args.imageset = 'train2017'
    args.rcnn_num_classes = len(coco.classes)

    isets = args.imageset.split('+')
    roidb = []
    for iset in isets:
        imdb = coco(iset, 'data', 'data/coco')
        imdb.filter_roidb()
        imdb.append_flipped_images()
        roidb.extend(imdb.roidb)
    return roidb

def get_vgg16_train(args):
    from symnet.symbol_vgg import get_vgg_train
    if not args.pretrained:
        args.pretrained = 'model/vgg16-0000.params'
    if not args.save_prefix:
        args.save_prefix = 'model/vgg16'
    args.img_pixel_means = (123.68, 116.779, 103.939)
    args.img_pixel_stds = (1.0, 1.0, 1.0)
    args.net_fixed_params = ['conv1', 'conv2']
    args.rpn_feat_stride = 16
    args.rcnn_feat_stride = 16
    args.rcnn_pooled_size = (7, 7)
    return get_vgg_train(anchor_scales=args.rpn_anchor_scales, anchor_ratios=args.rpn_anchor_ratios,
                         rpn_feature_stride=args.rpn_feat_stride, rpn_pre_topk=args.rpn_pre_nms_topk,
                         rpn_post_topk=args.rpn_post_nms_topk, rpn_nms_thresh=args.rpn_nms_thresh,
                         rpn_min_size=args.rpn_min_size, rpn_batch_rois=args.rpn_batch_rois,
                         num_classes=args.rcnn_num_classes, rcnn_feature_stride=args.rcnn_feat_stride,
                         rcnn_pooled_size=args.rcnn_pooled_size, rcnn_batch_size=args.rcnn_batch_size,
                         rcnn_batch_rois=args.rcnn_batch_rois, rcnn_fg_fraction=args.rcnn_fg_fraction,
                         rcnn_fg_overlap=args.rcnn_fg_overlap, rcnn_bbox_stds=args.rcnn_bbox_stds)

def get_resnet50_train(args):
    from symnet.symbol_resnet import get_resnet_train
    if not args.pretrained:
        args.pretrained = 'model/resnet-50-0000.params'
    if not args.save_prefix:
        args.save_prefix = 'model/resnet50'
    args.img_pixel_means = (0.0, 0.0, 0.0)
    args.img_pixel_stds = (1.0, 1.0, 1.0)
    args.net_fixed_params = ['conv0', 'stage1', 'gamma', 'beta']
    args.rpn_feat_stride = 16
    args.rcnn_feat_stride = 16
    args.rcnn_pooled_size = (14, 14)
    return get_resnet_train(anchor_scales=args.rpn_anchor_scales, anchor_ratios=args.rpn_anchor_ratios,
                            rpn_feature_stride=args.rpn_feat_stride, rpn_pre_topk=args.rpn_pre_nms_topk,
                            rpn_post_topk=args.rpn_post_nms_topk, rpn_nms_thresh=args.rpn_nms_thresh,
                            rpn_min_size=args.rpn_min_size, rpn_batch_rois=args.rpn_batch_rois,
                            num_classes=args.rcnn_num_classes, rcnn_feature_stride=args.rcnn_feat_stride,
                            rcnn_pooled_size=args.rcnn_pooled_size, rcnn_batch_size=args.rcnn_batch_size,
                            rcnn_batch_rois=args.rcnn_batch_rois, rcnn_fg_fraction=args.rcnn_fg_fraction,
                            rcnn_fg_overlap=args.rcnn_fg_overlap, rcnn_bbox_stds=args.rcnn_bbox_stds,
                            units=(3, 4, 6, 3), filter_list=(256, 512, 1024, 2048))

def get_resnet101_train(args):
    from symnet.symbol_resnet import get_resnet_train
    if not args.pretrained:
        args.pretrained = 'model/resnet-101-0000.params'
    if not args.save_prefix:
        args.save_prefix = 'model/resnet101'
    args.img_pixel_means = (0.0, 0.0, 0.0)
    args.img_pixel_stds = (1.0, 1.0, 1.0)
    args.net_fixed_params = ['conv0', 'stage1', 'gamma', 'beta']
    args.rpn_feat_stride = 16
    args.rcnn_feat_stride = 16
    args.rcnn_pooled_size = (14, 14)
    return get_resnet_train(anchor_scales=args.rpn_anchor_scales, anchor_ratios=args.rpn_anchor_ratios,
                            rpn_feature_stride=args.rpn_feat_stride, rpn_pre_topk=args.rpn_pre_nms_topk,
                            rpn_post_topk=args.rpn_post_nms_topk, rpn_nms_thresh=args.rpn_nms_thresh,
                            rpn_min_size=args.rpn_min_size, rpn_batch_rois=args.rpn_batch_rois,
                            num_classes=args.rcnn_num_classes, rcnn_feature_stride=args.rcnn_feat_stride,
                            rcnn_pooled_size=args.rcnn_pooled_size, rcnn_batch_size=args.rcnn_batch_size,
                            rcnn_batch_rois=args.rcnn_batch_rois, rcnn_fg_fraction=args.rcnn_fg_fraction,
                            rcnn_fg_overlap=args.rcnn_fg_overlap, rcnn_bbox_stds=args.rcnn_bbox_stds,
                            units=(3, 4, 23, 3), filter_list=(256, 512, 1024, 2048))

def get_dataset(dataset, args):
    datasets = {
        'voc': get_voc,
        'coco': get_coco
    }
    if dataset not in datasets:
        raise ValueError("dataset {} not supported".format(dataset))
    return datasets[dataset](args)

def get_network(network, args):
    networks = {
        'vgg16': get_vgg16_train,
        'resnet50': get_resnet50_train,
        'resnet101': get_resnet101_train
    }
    if network not in networks:
        raise ValueError("network {} not supported".format(network))
    return networks[network](args)

def main():
    args = parse_args()
    roidb = get_dataset(args.dataset, args)
    sym = get_network(args.network, args)
    train_net(sym, roidb, args)


if __name__ == '__main__':
    main()

| {

"pile_set_name": "Github"

} |

[nosetests]

verbose=True

detailed-errors=True

with-gae=True

stop=True

without-sandbox=True

with-coverage=True

| {

"pile_set_name": "Github"

} |

/* Copyright 2008, Google Inc.

* All rights reserved.

*

* Code released into the public domain.

*

* curve25519-donna: Curve25519 elliptic curve, public key function

*

* http://code.google.com/p/curve25519-donna/

*

* Adam Langley <[email protected]>

* Parts optimised by floodyberry

* Derived from public domain C code by Daniel J. Bernstein <[email protected]>

*

* More information about curve25519 can be found here

* http://cr.yp.to/ecdh.html

*

* djb's sample implementation of curve25519 is written in a special assembly

* language called qhasm and uses the floating point registers.

*

* This is, almost, a clean room reimplementation from the curve25519 paper. It

* uses many of the tricks described therein. Only the crecip function is taken

* from the sample implementation.

*/

#include <stdint.h>

#include <string.h>

#ifdef HAVE_TI_MODE

#include "../scalarmult_curve25519.h"

#include "curve25519_donna_c64.h"

#include "utils.h"

typedef uint8_t u8;

typedef uint64_t limb;

typedef limb felem[5];

/* Special gcc mode for 128-bit integers */

typedef unsigned uint128_t __attribute__((mode(TI)));

/* Sum two numbers: output += in */

static inline void

fsum(limb *output, const limb *in)

{

output[0] += in[0];

output[1] += in[1];

output[2] += in[2];

output[3] += in[3];

output[4] += in[4];

}

/* Find the difference of two numbers: output = in - output

* (note the order of the arguments!)

*

* Assumes that out[i] < 2**52

* On return, out[i] < 2**55

*/

static inline void

fdifference_backwards(felem out, const felem in)

{

/* 152 is 19 << 3 */

static const limb two54m152 = (((limb)1) << 54) - 152;

static const limb two54m8 = (((limb)1) << 54) - 8;

out[0] = in[0] + two54m152 - out[0];

out[1] = in[1] + two54m8 - out[1];

out[2] = in[2] + two54m8 - out[2];

out[3] = in[3] + two54m8 - out[3];

out[4] = in[4] + two54m8 - out[4];

}

/* Multiply a number by a scalar: output = in * scalar */

static inline void

fscalar_product(felem output, const felem in, const limb scalar)

{

uint128_t a;

a = in[0] * (uint128_t)scalar;

output[0] = ((limb)a) & 0x7ffffffffffff;

a = in[1] * (uint128_t)scalar + ((limb)(a >> 51));

output[1] = ((limb)a) & 0x7ffffffffffff;

a = in[2] * (uint128_t)scalar + ((limb)(a >> 51));

output[2] = ((limb)a) & 0x7ffffffffffff;

a = in[3] * (uint128_t)scalar + ((limb)(a >> 51));

output[3] = ((limb)a) & 0x7ffffffffffff;

a = in[4] * (uint128_t)scalar + ((limb)(a >> 51));

output[4] = ((limb)a) & 0x7ffffffffffff;

output[0] += (a >> 51) * 19;

}

/* Multiply two numbers: output = in2 * in

*

* output must be distinct from both inputs. The inputs are in reduced

* coefficient form, the output is not.

*

* Assumes that in[i] < 2**55 and likewise for in2.

* On return, output[i] < 2**52

*/

static inline void

fmul(felem output, const felem in2, const felem in)

{

uint128_t t[5];

limb r0, r1, r2, r3, r4, s0, s1, s2, s3, s4, c;

r0 = in[0];

r1 = in[1];

r2 = in[2];

r3 = in[3];

r4 = in[4];

s0 = in2[0];

s1 = in2[1];

s2 = in2[2];

s3 = in2[3];

s4 = in2[4];

t[0] = ((uint128_t)r0) * s0;

t[1] = ((uint128_t)r0) * s1 + ((uint128_t)r1) * s0;

t[2] = ((uint128_t)r0) * s2 + ((uint128_t)r2) * s0 + ((uint128_t)r1) * s1;

t[3] = ((uint128_t)r0) * s3 + ((uint128_t)r3) * s0 + ((uint128_t)r1) * s2

+ ((uint128_t)r2) * s1;

t[4] = ((uint128_t)r0) * s4 + ((uint128_t)r4) * s0 + ((uint128_t)r3) * s1

+ ((uint128_t)r1) * s3 + ((uint128_t)r2) * s2;

r4 *= 19;

r1 *= 19;

r2 *= 19;

r3 *= 19;

t[0] += ((uint128_t)r4) * s1 + ((uint128_t)r1) * s4 + ((uint128_t)r2) * s3

+ ((uint128_t)r3) * s2;

t[1] += ((uint128_t)r4) * s2 + ((uint128_t)r2) * s4 + ((uint128_t)r3) * s3;

t[2] += ((uint128_t)r4) * s3 + ((uint128_t)r3) * s4;

t[3] += ((uint128_t)r4) * s4;

r0 = (limb)t[0] & 0x7ffffffffffff;

c = (limb)(t[0] >> 51);

t[1] += c;

r1 = (limb)t[1] & 0x7ffffffffffff;

c = (limb)(t[1] >> 51);

t[2] += c;

r2 = (limb)t[2] & 0x7ffffffffffff;

c = (limb)(t[2] >> 51);

t[3] += c;

r3 = (limb)t[3] & 0x7ffffffffffff;

c = (limb)(t[3] >> 51);

t[4] += c;

r4 = (limb)t[4] & 0x7ffffffffffff;

c = (limb)(t[4] >> 51);

r0 += c * 19;

c = r0 >> 51;

r0 = r0 & 0x7ffffffffffff;

r1 += c;

c = r1 >> 51;

r1 = r1 & 0x7ffffffffffff;

r2 += c;

output[0] = r0;

output[1] = r1;

output[2] = r2;

output[3] = r3;

output[4] = r4;

}

static inline void

fsquare_times(felem output, const felem in, limb count)

{

uint128_t t[5];

limb r0, r1, r2, r3, r4, c;

limb d0, d1, d2, d4, d419;

r0 = in[0];

r1 = in[1];

r2 = in[2];

r3 = in[3];

r4 = in[4];

do {

d0 = r0 * 2;

d1 = r1 * 2;

d2 = r2 * 2 * 19;

d419 = r4 * 19;

d4 = d419 * 2;

t[0] = ((uint128_t)r0) * r0 + ((uint128_t)d4) * r1

+ (((uint128_t)d2) * (r3));

t[1] = ((uint128_t)d0) * r1 + ((uint128_t)d4) * r2

+ (((uint128_t)r3) * (r3 * 19));

t[2] = ((uint128_t)d0) * r2 + ((uint128_t)r1) * r1

+ (((uint128_t)d4) * (r3));

t[3] = ((uint128_t)d0) * r3 + ((uint128_t)d1) * r2

+ (((uint128_t)r4) * (d419));

t[4] = ((uint128_t)d0) * r4 + ((uint128_t)d1) * r3

+ (((uint128_t)r2) * (r2));

r0 = (limb)t[0] & 0x7ffffffffffff;

c = (limb)(t[0] >> 51);

t[1] += c;

r1 = (limb)t[1] & 0x7ffffffffffff;

c = (limb)(t[1] >> 51);

t[2] += c;

r2 = (limb)t[2] & 0x7ffffffffffff;

c = (limb)(t[2] >> 51);

t[3] += c;

r3 = (limb)t[3] & 0x7ffffffffffff;

c = (limb)(t[3] >> 51);

t[4] += c;

r4 = (limb)t[4] & 0x7ffffffffffff;

c = (limb)(t[4] >> 51);

r0 += c * 19;

c = r0 >> 51;

r0 = r0 & 0x7ffffffffffff;

r1 += c;

c = r1 >> 51;

r1 = r1 & 0x7ffffffffffff;

r2 += c;

} while (--count);

output[0] = r0;

output[1] = r1;

output[2] = r2;

output[3] = r3;

output[4] = r4;

}

#ifdef NATIVE_LITTLE_ENDIAN

static inline limb

load_limb(const u8 *in)

{

limb out;

memcpy(&out, in, sizeof(limb));

return out;

}

static inline void

store_limb(u8 *out, limb in)

{

memcpy(out, &in, sizeof(limb));

}

#else

static inline limb

load_limb(const u8 *in)

{

return ((limb)in[0]) | (((limb)in[1]) << 8) | (((limb)in[2]) << 16)

| (((limb)in[3]) << 24) | (((limb)in[4]) << 32)

| (((limb)in[5]) << 40) | (((limb)in[6]) << 48)

| (((limb)in[7]) << 56);

}

static inline void

store_limb(u8 *out, limb in)

{

out[0] = in & 0xff;

out[1] = (in >> 8) & 0xff;

out[2] = (in >> 16) & 0xff;

out[3] = (in >> 24) & 0xff;

out[4] = (in >> 32) & 0xff;

out[5] = (in >> 40) & 0xff;

out[6] = (in >> 48) & 0xff;

out[7] = (in >> 56) & 0xff;

}

#endif

/* Take a little-endian, 32-byte number and expand it into polynomial form */

static void

fexpand(limb *output, const u8 *in)

{

output[0] = load_limb(in) & 0x7ffffffffffff;

output[1] = (load_limb(in + 6) >> 3) & 0x7ffffffffffff;

output[2] = (load_limb(in + 12) >> 6) & 0x7ffffffffffff;

output[3] = (load_limb(in + 19) >> 1) & 0x7ffffffffffff;

output[4] = (load_limb(in + 24) >> 12) & 0x7ffffffffffff;

}

/* Take a fully reduced polynomial form number and contract it into a

* little-endian, 32-byte array

*/

static void

fcontract(u8 *output, const felem input)

{

uint128_t t[5];

t[0] = input[0];

t[1] = input[1];

t[2] = input[2];

t[3] = input[3];

t[4] = input[4];

t[1] += t[0] >> 51;

t[0] &= 0x7ffffffffffff;

t[2] += t[1] >> 51;

t[1] &= 0x7ffffffffffff;

t[3] += t[2] >> 51;

t[2] &= 0x7ffffffffffff;

t[4] += t[3] >> 51;

t[3] &= 0x7ffffffffffff;

t[0] += 19 * (t[4] >> 51);

t[4] &= 0x7ffffffffffff;

t[1] += t[0] >> 51;

t[0] &= 0x7ffffffffffff;

t[2] += t[1] >> 51;

t[1] &= 0x7ffffffffffff;

t[3] += t[2] >> 51;

t[2] &= 0x7ffffffffffff;

t[4] += t[3] >> 51;

t[3] &= 0x7ffffffffffff;

t[0] += 19 * (t[4] >> 51);

t[4] &= 0x7ffffffffffff;

/* now t is between 0 and 2^255-1, properly carried. */

/* case 1: between 0 and 2^255-20. case 2: between 2^255-19 and 2^255-1. */

t[0] += 19;

t[1] += t[0] >> 51;

t[0] &= 0x7ffffffffffff;

t[2] += t[1] >> 51;

t[1] &= 0x7ffffffffffff;

t[3] += t[2] >> 51;

t[2] &= 0x7ffffffffffff;

t[4] += t[3] >> 51;

t[3] &= 0x7ffffffffffff;

t[0] += 19 * (t[4] >> 51);

t[4] &= 0x7ffffffffffff;

/* now between 19 and 2^255-1 in both cases, and offset by 19. */

t[0] += 0x8000000000000 - 19;

t[1] += 0x8000000000000 - 1;

t[2] += 0x8000000000000 - 1;

t[3] += 0x8000000000000 - 1;

t[4] += 0x8000000000000 - 1;

/* now between 2^255 and 2^256-20, and offset by 2^255. */

t[1] += t[0] >> 51;

t[0] &= 0x7ffffffffffff;

t[2] += t[1] >> 51;

t[1] &= 0x7ffffffffffff;

t[3] += t[2] >> 51;

t[2] &= 0x7ffffffffffff;

t[4] += t[3] >> 51;

t[3] &= 0x7ffffffffffff;

t[4] &= 0x7ffffffffffff;

store_limb(output, t[0] | (t[1] << 51));

store_limb(output + 8, (t[1] >> 13) | (t[2] << 38));

store_limb(output + 16, (t[2] >> 26) | (t[3] << 25));

store_limb(output + 24, (t[3] >> 39) | (t[4] << 12));

}

/* Input: Q, Q', Q-Q'

* Output: 2Q, Q+Q'

*

* x2 z2: long form

* x3 z3: long form

* x z: short form, destroyed

* xprime zprime: short form, destroyed

* qmqp: short form, preserved

*/

static void

fmonty(limb *x2, limb *z2, /* output 2Q */

limb *x3, limb *z3, /* output Q + Q' */

limb *x, limb *z, /* input Q */

limb *xprime, limb *zprime, /* input Q' */

const limb *qmqp /* input Q - Q' */)

{

limb origx[5], origxprime[5], zzz[5], xx[5], zz[5], xxprime[5], zzprime[5],

zzzprime[5];

memcpy(origx, x, 5 * sizeof(limb));

fsum(x, z);

fdifference_backwards(z, origx); /* does x - z */

memcpy(origxprime, xprime, sizeof(limb) * 5);

fsum(xprime, zprime);

fdifference_backwards(zprime, origxprime);

fmul(xxprime, xprime, z);

fmul(zzprime, x, zprime);

memcpy(origxprime, xxprime, sizeof(limb) * 5);

fsum(xxprime, zzprime);

fdifference_backwards(zzprime, origxprime);

fsquare_times(x3, xxprime, 1);

fsquare_times(zzzprime, zzprime, 1);

fmul(z3, zzzprime, qmqp);

fsquare_times(xx, x, 1);

fsquare_times(zz, z, 1);

fmul(x2, xx, zz);

fdifference_backwards(zz, xx); /* does zz = xx - zz */

fscalar_product(zzz, zz, 121665);

fsum(zzz, xx);

fmul(z2, zz, zzz);

}

/* -----------------------------------------------------------------------------

Maybe swap the contents of two limb arrays (@a and @b), each 5 elements

long. Perform the swap iff @iswap is 1 (@iswap must be 0 or 1).

This function performs the swap without leaking any side-channel

information.

-----------------------------------------------------------------------------

*/

static void

swap_conditional(limb a[5], limb b[5], limb iswap)

{

const limb swap = -iswap;

unsigned i;

for (i = 0; i < 5; ++i) {

const limb x = swap & (a[i] ^ b[i]);

a[i] ^= x;

b[i] ^= x;

}

}

/* Calculates nQ where Q is the x-coordinate of a point on the curve

*

* resultx/resultz: the x coordinate of the resulting curve point (short form)

* n: a little endian, 32-byte number

* q: a point of the curve (short form)

*/

static void

cmult(limb *resultx, limb *resultz, const u8 *n, const limb *q)

{

limb a[5] = { 0 }, b[5] = { 1 }, c[5] = { 1 }, d[5] = { 0 };

limb *nqpqx = a, *nqpqz = b, *nqx = c, *nqz = d, *t;

limb e[5] = { 0 }, f[5] = { 1 }, g[5] = { 0 }, h[5] = { 1 };

limb *nqpqx2 = e, *nqpqz2 = f, *nqx2 = g, *nqz2 = h;

unsigned i, j;

memcpy(nqpqx, q, sizeof(limb) * 5);

for (i = 0; i < 32; ++i) {

u8 byte = n[31 - i];

for (j = 0; j < 8; ++j) {

const limb bit = byte >> 7;

swap_conditional(nqx, nqpqx, bit);

swap_conditional(nqz, nqpqz, bit);

fmonty(nqx2, nqz2, nqpqx2, nqpqz2, nqx, nqz, nqpqx, nqpqz, q);

swap_conditional(nqx2, nqpqx2, bit);

swap_conditional(nqz2, nqpqz2, bit);

t = nqx;

nqx = nqx2;

nqx2 = t;

t = nqz;

nqz = nqz2;

nqz2 = t;

t = nqpqx;

nqpqx = nqpqx2;

nqpqx2 = t;

t = nqpqz;

nqpqz = nqpqz2;

nqpqz2 = t;

byte <<= 1;

}

}

memcpy(resultx, nqx, sizeof(limb) * 5);

memcpy(resultz, nqz, sizeof(limb) * 5);

}

/* -----------------------------------------------------------------------------

Shamelessly copied from djb's code, tightened a little

-----------------------------------------------------------------------------

*/

static void

crecip(felem out, const felem z)

{

felem a, t0, b, c;

/* 2 */ fsquare_times(a, z, 1); /* a = 2 */

/* 8 */ fsquare_times(t0, a, 2);

/* 9 */ fmul(b, t0, z); /* b = 9 */

/* 11 */ fmul(a, b, a); /* a = 11 */

/* 22 */ fsquare_times(t0, a, 1);

/* 2^5 - 2^0 = 31 */ fmul(b, t0, b);

/* 2^10 - 2^5 */ fsquare_times(t0, b, 5);

/* 2^10 - 2^0 */ fmul(b, t0, b);

/* 2^20 - 2^10 */ fsquare_times(t0, b, 10);

/* 2^20 - 2^0 */ fmul(c, t0, b);

/* 2^40 - 2^20 */ fsquare_times(t0, c, 20);

/* 2^40 - 2^0 */ fmul(t0, t0, c);

/* 2^50 - 2^10 */ fsquare_times(t0, t0, 10);

/* 2^50 - 2^0 */ fmul(b, t0, b);

/* 2^100 - 2^50 */ fsquare_times(t0, b, 50);

/* 2^100 - 2^0 */ fmul(c, t0, b);

/* 2^200 - 2^100 */ fsquare_times(t0, c, 100);

/* 2^200 - 2^0 */ fmul(t0, t0, c);

/* 2^250 - 2^50 */ fsquare_times(t0, t0, 50);

/* 2^250 - 2^0 */ fmul(t0, t0, b);

/* 2^255 - 2^5 */ fsquare_times(t0, t0, 5);

/* 2^255 - 21 */ fmul(out, t0, a);

}

static const unsigned char basepoint[32] = { 9 };

static int

crypto_scalarmult_curve25519_donna_c64(unsigned char *mypublic,

const unsigned char *secret,

const unsigned char *basepoint)

{

limb bp[5], x[5], z[5], zmone[5];

uint8_t e[32];

int i;

for (i = 0; i < 32; ++i) {

e[i] = secret[i];

}

e[0] &= 248;

e[31] &= 127;

e[31] |= 64;

fexpand(bp, basepoint);

cmult(x, z, e, bp);

crecip(zmone, z);

fmul(z, x, zmone);

fcontract(mypublic, z);

return 0;

}

static int

crypto_scalarmult_curve25519_donna_c64_base(unsigned char *q,

const unsigned char *n)

{

return crypto_scalarmult_curve25519_donna_c64(q, n, basepoint);

}

struct crypto_scalarmult_curve25519_implementation

crypto_scalarmult_curve25519_donna_c64_implementation = {

SODIUM_C99(.mult =) crypto_scalarmult_curve25519_donna_c64,

SODIUM_C99(.mult_base =) crypto_scalarmult_curve25519_donna_c64_base

};

#endif

| {

"pile_set_name": "Github"

} |

#!/bin/sh

# This will generate the list of feature flags for implemented symbols.

echo '/* DO NOT EDIT. This is auto-generated from feature.sh */'

nm ../regressions/ck_pr/validate/ck_pr_cas|cut -d ' ' -f 3|sed s/ck_pr/ck_f_pr/|awk '/^ck_f_pr/ {print "#define " toupper($1);}'|sort

| {

"pile_set_name": "Github"

} |

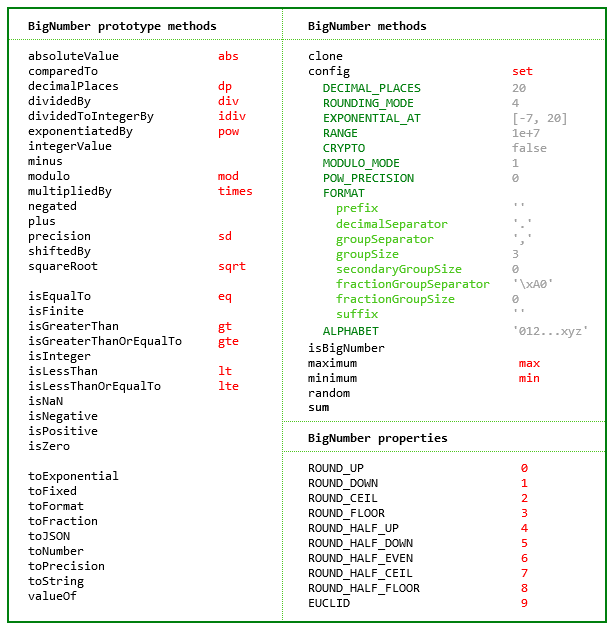

A JavaScript library for arbitrary-precision decimal and non-decimal arithmetic.


<br />

## Features

- Faster, smaller, and perhaps easier to use than JavaScript versions of Java's BigDecimal

- 8 KB minified and gzipped

- Simple API but full-featured

- Works with numbers with or without fraction digits in bases from 2 to 64 inclusive

- Replicates the `toExponential`, `toFixed`, `toPrecision` and `toString` methods of JavaScript's Number type

- Includes a `toFraction` and a correctly-rounded `squareRoot` method

- Supports cryptographically-secure pseudo-random number generation

- No dependencies

- Wide platform compatibility: uses JavaScript 1.5 (ECMAScript 3) features only

- Comprehensive [documentation](http://mikemcl.github.io/bignumber.js/) and test set

If a smaller and simpler library is required see [big.js](https://github.com/MikeMcl/big.js/).

It's less than half the size but only works with decimal numbers and only has half the methods.

It also does not allow `NaN` or `Infinity`, or have the configuration options of this library.

See also [decimal.js](https://github.com/MikeMcl/decimal.js/), which among other things adds support for non-integer powers, and performs all operations to a specified number of significant digits.

## Load

The library is the single JavaScript file *bignumber.js* (or minified, *bignumber.min.js*).

Browser:

```html

<script src='path/to/bignumber.js'></script>

```

[Node.js](http://nodejs.org):

```bash

$ npm install --save bignumber.js

```

```js

var BigNumber = require('bignumber.js');

```

ES6 module (*bignumber.mjs*):

```js

//import BigNumber from 'bignumber.js';

import {BigNumber} from 'bignumber.js';

```

AMD loader libraries such as [requireJS](http://requirejs.org/):

```js

require(['bignumber'], function(BigNumber) {

// Use BigNumber here in local scope. No global BigNumber.

});

```

## Use

*In all examples below, `var`, semicolons and `toString` calls are not shown.

If a commented-out value is in quotes it means `toString` has been called on the preceding expression.*

The library exports a single function: `BigNumber`, the constructor of BigNumber instances.

It accepts a value of type number *(up to 15 significant digits only)*, string or BigNumber object,

```javascript

x = new BigNumber(123.4567)

y = BigNumber('123456.7e-3')

z = new BigNumber(x)

x.isEqualTo(y) && y.isEqualTo(z) && x.isEqualTo(z) // true

```

and a base from 2 to 36 inclusive can be specified.

```javascript

x = new BigNumber(1011, 2) // "11"

y = new BigNumber('zz.9', 36) // "1295.25"

z = x.plus(y) // "1306.25"

```
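To make the base examples above easier to check by hand, here is a minimal sketch of positional parsing in plain JavaScript. `parseInBase` is a hypothetical helper, not part of the library, and it works on native doubles, so it is only exact for short inputs like these.

```javascript
// Hypothetical helper (not a bignumber.js function): parse a string with an
// optional fraction part in the given base, using native doubles.
function parseInBase(str, base) {
  var parts = str.toLowerCase().split('.');
  // Integer part: parseInt understands bases 2 to 36.
  var value = parts[0] ? parseInt(parts[0], base) : 0;
  var frac = parts[1] || '';
  for (var i = 0; i < frac.length; i++) {
    // Each fraction digit is weighted by base^-(position + 1).
    value += parseInt(frac.charAt(i), base) / Math.pow(base, i + 1);
  }
  return value;
}

var a = parseInBase('1011', 2);   // 11
var b = parseInBase('zz.9', 36);  // 35*36 + 35 + 9/36 = 1295.25
var sum = a + b;                  // 1306.25
```

The library performs the same kind of conversion with arbitrary precision; the sketch only illustrates where `"11"` and `"1295.25"` come from.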

A BigNumber is immutable in the sense that it is not changed by its methods.

```javascript

0.3 - 0.1 // 0.19999999999999998

x = new BigNumber(0.3)

x.minus(0.1) // "0.2"

x // "0.3"

```

The methods that return a BigNumber can be chained.

```javascript

x.dividedBy(y).plus(z).times(9)

x.times('1.23456780123456789e+9').plus(9876.5432321).dividedBy('4444562598.111772').integerValue()

```

Some of the longer method names have a shorter alias.

```javascript

x.squareRoot().dividedBy(y).exponentiatedBy(3).isEqualTo( x.sqrt().div(y).pow(3) ) // true

x.modulo(y).multipliedBy(z).eq( x.mod(y).times(z) ) // true

```

Like JavaScript's number type, there are `toExponential`, `toFixed` and `toPrecision` methods,

```javascript

x = new BigNumber(255.5)

x.toExponential(5) // "2.55500e+2"

x.toFixed(5) // "255.50000"

x.toPrecision(5) // "255.50"

x.toNumber() // 255.5

```

and a base can be specified for `toString`.

```javascript

x.toString(16) // "ff.8"

```

There is also a `toFormat` method, which may be useful for internationalisation.

```javascript

y = new BigNumber('1234567.898765')

y.toFormat(2) // "1,234,567.90"

```

The maximum number of decimal places of the result of an operation involving division (i.e. a division, square root, base conversion or negative power operation) is set using the `config` method of the `BigNumber` constructor.

The other arithmetic operations always give the exact result.

```javascript

BigNumber.config({ DECIMAL_PLACES: 10, ROUNDING_MODE: 4 })

x = new BigNumber(2)

y = new BigNumber(3)

z = x.dividedBy(y) // "0.6666666667"

z.squareRoot() // "0.8164965809"

z.exponentiatedBy(-3) // "3.3749999995"

z.toString(2) // "0.1010101011"

z.multipliedBy(z) // "0.44444444448888888889"

z.multipliedBy(z).decimalPlaces(10) // "0.4444444445"

```
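The effect of `DECIMAL_PLACES: 10` with `ROUNDING_MODE: 4` (round half up) on a division can be sketched with native `BigInt` arithmetic. This is an illustration under assumptions, not the library's implementation: `divideHalfUp` is a hypothetical helper, it only handles non-negative integer operands, and it relies on `BigInt`, which the library itself does not require.

```javascript
// Hypothetical helper: divide two non-negative integers and round the result
// half up to a fixed number of decimal places, returning a string.
function divideHalfUp(numerator, denominator, decimalPlaces) {
  var scale = 10n ** BigInt(decimalPlaces);
  var scaled = BigInt(numerator) * scale;
  var d = BigInt(denominator);
  var quotient = scaled / d;        // truncated quotient of the scaled value
  var remainder = scaled % d;
  // Round half up: bump the last digit when the remainder is at least d / 2.
  if (remainder * 2n >= d) quotient += 1n;
  var digits = quotient.toString().padStart(decimalPlaces + 1, '0');
  var intPart = digits.slice(0, digits.length - decimalPlaces);
  // Trailing zeros are dropped, as in the string values shown above.
  var fracPart = digits.slice(digits.length - decimalPlaces).replace(/0+$/, '');
  return fracPart ? intPart + '.' + fracPart : intPart;
}

divideHalfUp(2, 3, 10)     // "0.6666666667"
divideHalfUp(355, 113, 10) // "3.1415929204"
```

The scaled integer division is exact, so the only rounding happens once, at the chosen decimal place.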

There is a `toFraction` method with an optional *maximum denominator* argument,

```javascript

y = new BigNumber(355)

pi = y.dividedBy(113) // "3.1415929204"

pi.toFraction() // [ "7853982301", "2500000000" ]

pi.toFraction(1000) // [ "355", "113" ]

```

and `isNaN` and `isFinite` methods, as `NaN` and `Infinity` are valid `BigNumber` values.

```javascript

x = new BigNumber(NaN) // "NaN"

y = new BigNumber(Infinity) // "Infinity"

x.isNaN() && !y.isNaN() && !x.isFinite() && !y.isFinite() // true

```
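The *maximum denominator* behaviour of `toFraction` shown earlier can be approximated with continued-fraction convergents. The sketch below is an assumption-laden illustration in plain JavaScript: `toFractionSketch` is a hypothetical name, it works on native doubles and returns numbers rather than strings, and the library's own algorithm may differ in detail.

```javascript
// Hypothetical helper: return the last continued-fraction convergent of
// `value` whose denominator does not exceed `maxDenominator`.
function toFractionSketch(value, maxDenominator) {
  var n0 = 0, d0 = 1;  // convergent two steps back
  var n1 = 1, d1 = 0;  // previous convergent
  var x = value;
  for (var i = 0; i < 64; i++) {
    var a = Math.floor(x);
    var n2 = a * n1 + n0;
    var d2 = a * d1 + d0;
    if (d2 > maxDenominator) break;  // next convergent would be too large
    n0 = n1; d0 = d1;
    n1 = n2; d1 = d2;
    var frac = x - a;
    if (frac === 0) break;           // value is represented exactly
    x = 1 / frac;
  }
  return [n1, d1];
}

toFractionSketch(3.1415929204, 1000) // [ 355, 113 ]
```

For `3.1415929204` the convergents are 3/1, 22/7 and 355/113; the next one has a denominator far above 1000, so the search stops at 355/113.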

The value of a BigNumber is stored in a decimal floating point format in terms of a coefficient, exponent and sign.

```javascript

x = new BigNumber(-123.456)

x.c // [ 123, 45600000000000 ] coefficient (i.e. significand)

x.e // 2 exponent

x.s // -1 sign

```
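The layout in the example above is consistent with the coefficient being stored as base-1e14 chunks aligned on the decimal point. The following sketch reproduces those values for simple inputs. It is inferred from the example rather than taken from the library's source: `decompose` is a hypothetical helper, and it only handles plain decimal strings with a nonzero integer part (no exponent notation, `NaN` or `Infinity`).

```javascript
// Hypothetical helper: split a plain decimal string into sign, exponent and
// base-1e14 coefficient chunks, mirroring the x.c / x.e / x.s values above.
function decompose(str) {
  var s = str.charAt(0) === '-' ? -1 : 1;
  var parts = str.replace(/^[-+]/, '').split('.');
  var intPart = parts[0].replace(/^0+/, '');
  var fracPart = parts[1] || '';
  if (intPart === '') throw new Error('sketch only handles values >= 1');

  // Exponent of the most significant digit, in base 10.
  var e = intPart.length - 1;
  var digits = (intPart + fracPart).replace(/0+$/, '');
  // The first chunk is sized so that the decimal point lies on a chunk boundary.
  var first = intPart.length % 14 || 14;
  while (digits.length < first) digits += '0';

  var c = [Number(digits.slice(0, first))];
  for (var i = first; i < digits.length; i += 14) {
    var chunk = digits.slice(i, i + 14);
    while (chunk.length < 14) chunk += '0';  // right-pad the last chunk
    c.push(Number(chunk));
  }
  return { c: c, e: e, s: s };
}

decompose('-123.456') // { c: [ 123, 45600000000000 ], e: 2, s: -1 }
```

Each chunk holds at most 14 decimal digits, so it fits comfortably in a native double without loss of precision.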

For advanced usage, multiple BigNumber constructors can be created, each with its own independent configuration, which applies to all BigNumbers created from it.

```javascript

// Set DECIMAL_PLACES for the original BigNumber constructor

BigNumber.config({ DECIMAL_PLACES: 10 })

// Create another BigNumber constructor, optionally passing in a configuration object

BN = BigNumber.clone({ DECIMAL_PLACES: 5 })

x = new BigNumber(1)

y = new BN(1)

x.div(3) // '0.3333333333'

y.div(3) // '0.33333'

```