
//

// Licensed under the terms in License.txt

//

// Copyright 2010 Allen Ding. All rights reserved.

//

#import "Kiwi.h"

#import "KiwiTestConfiguration.h"

#import "TestClasses.h"

#if KW_TESTS_ENABLED

@interface KWTestCaseTest : SenTestCase

@end

@implementation KWTestCaseTest

- (void)testItShouldClearStubsAfterExamplesRun {

KWTestCase *testCase = [[[KWTestCase alloc] init] autorelease];

id subject = [Cruiser cruiser];

NSUInteger crewComplement = [subject crewComplement];

[subject stub:@selector(crewComplement) andReturn:[KWValue valueWithUnsignedInt:42]];

STAssertEquals([subject crewComplement], 42u, @"expected method to be stubbed");

[testCase tearDownExampleEnvironment];

STAssertEquals([subject crewComplement], crewComplement, @"expected method stub to be cleared after examples run");

}

- (void)testItShouldNotifyVerifiersOfEndOfExample {

KWTestCase *testCase = [[[KWTestCase alloc] init] autorelease];

id verifier = [[[TestVerifier alloc] init] autorelease];

[testCase addVerifier:verifier];

[testCase invokeTest];

STAssertTrue([verifier notifiedOfEndOfExample], @"expected spec to notify end of example verifiers");

}

@end

#endif // #if KW_TESTS_ENABLED


package terraform

import (

"fmt"

"log"

"strings"

"github.com/hashicorp/hcl/v2"

"github.com/zclconf/go-cty/cty"

"github.com/hashicorp/terraform-plugin-sdk/internal/addrs"

"github.com/hashicorp/terraform-plugin-sdk/internal/configs"

"github.com/hashicorp/terraform-plugin-sdk/internal/plans"

"github.com/hashicorp/terraform-plugin-sdk/internal/plans/objchange"

"github.com/hashicorp/terraform-plugin-sdk/internal/providers"

"github.com/hashicorp/terraform-plugin-sdk/internal/states"

"github.com/hashicorp/terraform-plugin-sdk/internal/tfdiags"

)

// EvalCheckPlannedChange is an EvalNode implementation that produces errors

// if the _actual_ expected value is not compatible with what was recorded

// in the plan.

//

// Errors here are most often indicative of a bug in the provider, so our

// error messages will report with that in mind. It's also possible that

// there's a bug in Terraform Core's own "proposed new value" code in

// EvalDiff.

type EvalCheckPlannedChange struct {

Addr addrs.ResourceInstance

ProviderAddr addrs.AbsProviderConfig

ProviderSchema **ProviderSchema

// We take ResourceInstanceChange objects here just because that's what's

// convenient to pass in from the evaltree implementation, but we really

// only look at the "After" value of each change.

Planned, Actual **plans.ResourceInstanceChange

}

func (n *EvalCheckPlannedChange) Eval(ctx EvalContext) (interface{}, error) {

providerSchema := *n.ProviderSchema

plannedChange := *n.Planned

actualChange := *n.Actual

schema, _ := providerSchema.SchemaForResourceAddr(n.Addr.ContainingResource())

if schema == nil {

// Should be caught during validation, so we don't bother with a pretty error here

return nil, fmt.Errorf("provider does not support resource type %q", n.Addr.Resource.Type)

}

var diags tfdiags.Diagnostics

absAddr := n.Addr.Absolute(ctx.Path())

log.Printf("[TRACE] EvalCheckPlannedChange: Verifying that actual change (action %s) matches planned change (action %s)", actualChange.Action, plannedChange.Action)

if plannedChange.Action != actualChange.Action {

switch {

case plannedChange.Action == plans.Update && actualChange.Action == plans.NoOp:

// It's okay for an update to become a NoOp once we've filled in

// all of the unknown values, since the final values might actually

// match what was there before after all.

log.Printf("[DEBUG] After incorporating new values learned so far during apply, %s change has become NoOp", absAddr)

default:

diags = diags.Append(tfdiags.Sourceless(

tfdiags.Error,

"Provider produced inconsistent final plan",

fmt.Sprintf(

"When expanding the plan for %s to include new values learned so far during apply, provider %q changed the planned action from %s to %s.\n\nThis is a bug in the provider, which should be reported in the provider's own issue tracker.",

absAddr, n.ProviderAddr.ProviderConfig.Type,

plannedChange.Action, actualChange.Action,

),

))

}

}

errs := objchange.AssertObjectCompatible(schema, plannedChange.After, actualChange.After)

for _, err := range errs {

diags = diags.Append(tfdiags.Sourceless(

tfdiags.Error,

"Provider produced inconsistent final plan",

fmt.Sprintf(

"When expanding the plan for %s to include new values learned so far during apply, provider %q produced an invalid new value for %s.\n\nThis is a bug in the provider, which should be reported in the provider's own issue tracker.",

absAddr, n.ProviderAddr.ProviderConfig.Type, tfdiags.FormatError(err),

),

))

}

return nil, diags.Err()

}

// EvalDiff is an EvalNode implementation that detects changes for a given

// resource instance.

type EvalDiff struct {

Addr addrs.ResourceInstance

Config *configs.Resource

Provider *providers.Interface

ProviderAddr addrs.AbsProviderConfig

ProviderSchema **ProviderSchema

State **states.ResourceInstanceObject

PreviousDiff **plans.ResourceInstanceChange

// CreateBeforeDestroy is set if either the resource's own config sets

// create_before_destroy explicitly or if dependencies have forced the

// resource to be handled as create_before_destroy in order to avoid

// a dependency cycle.

CreateBeforeDestroy bool

OutputChange **plans.ResourceInstanceChange

OutputValue *cty.Value

OutputState **states.ResourceInstanceObject

Stub bool

}

// TODO: test

func (n *EvalDiff) Eval(ctx EvalContext) (interface{}, error) {

state := *n.State

config := *n.Config

provider := *n.Provider

providerSchema := *n.ProviderSchema

if providerSchema == nil {

return nil, fmt.Errorf("provider schema is unavailable for %s", n.Addr)

}

if n.ProviderAddr.ProviderConfig.Type == "" {

panic(fmt.Sprintf("EvalDiff for %s does not have ProviderAddr set", n.Addr.Absolute(ctx.Path())))

}

var diags tfdiags.Diagnostics

// Evaluate the configuration

schema, _ := providerSchema.SchemaForResourceAddr(n.Addr.ContainingResource())

if schema == nil {

// Should be caught during validation, so we don't bother with a pretty error here

return nil, fmt.Errorf("provider does not support resource type %q", n.Addr.Resource.Type)

}

forEach, _ := evaluateResourceForEachExpression(n.Config.ForEach, ctx)

keyData := EvalDataForInstanceKey(n.Addr.Key, forEach)

configVal, _, configDiags := ctx.EvaluateBlock(config.Config, schema, nil, keyData)

diags = diags.Append(configDiags)

if configDiags.HasErrors() {

return nil, diags.Err()

}

absAddr := n.Addr.Absolute(ctx.Path())

var priorVal cty.Value

var priorValTainted cty.Value

var priorPrivate []byte

if state != nil {

if state.Status != states.ObjectTainted {

priorVal = state.Value

priorPrivate = state.Private

} else {

// If the prior state is tainted then we'll proceed below like

// we're creating an entirely new object, but then turn it into

// a synthetic "Replace" change at the end, creating the same

// result as if the provider had marked at least one argument

// change as "requires replacement".

priorValTainted = state.Value

priorVal = cty.NullVal(schema.ImpliedType())

}

} else {

priorVal = cty.NullVal(schema.ImpliedType())

}

proposedNewVal := objchange.ProposedNewObject(schema, priorVal, configVal)

// Call pre-diff hook

if !n.Stub {

err := ctx.Hook(func(h Hook) (HookAction, error) {

return h.PreDiff(absAddr, states.CurrentGen, priorVal, proposedNewVal)

})

if err != nil {

return nil, err

}

}

log.Printf("[TRACE] Re-validating config for %q", n.Addr.Absolute(ctx.Path()))

// Allow the provider to validate the final set of values.

// The config was statically validated early on, but there may have been

// unknown values which the provider could not validate at the time.

validateResp := provider.ValidateResourceTypeConfig(

providers.ValidateResourceTypeConfigRequest{

TypeName: n.Addr.Resource.Type,

Config: configVal,

},

)

if validateResp.Diagnostics.HasErrors() {

return nil, validateResp.Diagnostics.InConfigBody(config.Config).Err()

}

// The provider gets an opportunity to customize the proposed new value,

// which in turn produces the _planned_ new value. But before we send the

// proposed value to the provider, we need to process ignore_changes so

// that CustomizeDiff will not act on the ignored attributes.

var ignoreChangeDiags tfdiags.Diagnostics

proposedNewVal, ignoreChangeDiags = n.processIgnoreChanges(priorVal, proposedNewVal)

diags = diags.Append(ignoreChangeDiags)

if ignoreChangeDiags.HasErrors() {

return nil, diags.Err()

}

resp := provider.PlanResourceChange(providers.PlanResourceChangeRequest{

TypeName: n.Addr.Resource.Type,

Config: configVal,

PriorState: priorVal,

ProposedNewState: proposedNewVal,

PriorPrivate: priorPrivate,

})

diags = diags.Append(resp.Diagnostics.InConfigBody(config.Config))

if diags.HasErrors() {

return nil, diags.Err()

}

plannedNewVal := resp.PlannedState

plannedPrivate := resp.PlannedPrivate

if plannedNewVal == cty.NilVal {

// Should never happen. Since real-world providers return their results

// via RPC, a nil here is always a bug in the client-side stub. It is more

// likely to be caused by an incompletely-configured mock provider in tests.

panic(fmt.Sprintf("PlanResourceChange of %s produced nil value", absAddr.String()))

}

// We allow the planned new value to disagree with configuration _values_

// here, since that allows the provider to do special logic like a

// DiffSuppressFunc, but we still require that the provider produces

// a value whose type conforms to the schema.

for _, err := range plannedNewVal.Type().TestConformance(schema.ImpliedType()) {

diags = diags.Append(tfdiags.Sourceless(

tfdiags.Error,

"Provider produced invalid plan",

fmt.Sprintf(

"Provider %q planned an invalid value for %s.\n\nThis is a bug in the provider, which should be reported in the provider's own issue tracker.",

n.ProviderAddr.ProviderConfig.Type, tfdiags.FormatErrorPrefixed(err, absAddr.String()),

),

))

}

if diags.HasErrors() {

return nil, diags.Err()

}

if errs := objchange.AssertPlanValid(schema, priorVal, configVal, plannedNewVal); len(errs) > 0 {

if resp.LegacyTypeSystem {

// The shimming of the old type system in the legacy SDK is not precise

// enough to pass this consistency check, so we'll give it a pass here,

// but we will generate a warning about it so that we are more likely

// to notice in the logs if an inconsistency beyond the type system

// leads to a downstream provider failure.

var buf strings.Builder

fmt.Fprintf(&buf, "[WARN] Provider %q produced an invalid plan for %s, but we are tolerating it because it is using the legacy plugin SDK.\n The following problems may be the cause of any confusing errors from downstream operations:", n.ProviderAddr.ProviderConfig.Type, absAddr)

for _, err := range errs {

fmt.Fprintf(&buf, "\n - %s", tfdiags.FormatError(err))

}

log.Print(buf.String())

} else {

for _, err := range errs {

diags = diags.Append(tfdiags.Sourceless(

tfdiags.Error,

"Provider produced invalid plan",

fmt.Sprintf(

"Provider %q planned an invalid value for %s.\n\nThis is a bug in the provider, which should be reported in the provider's own issue tracker.",

n.ProviderAddr.ProviderConfig.Type, tfdiags.FormatErrorPrefixed(err, absAddr.String()),

),

))

}

return nil, diags.Err()

}

}

// TODO: This second pass of ignore_changes processing after the plan exists

// so that providers relying on the old behavior "just work". We should be

// able to remove it in the next major version and be stricter about

// ignore_changes values.

plannedNewVal, ignoreChangeDiags = n.processIgnoreChanges(priorVal, plannedNewVal)

diags = diags.Append(ignoreChangeDiags)

if ignoreChangeDiags.HasErrors() {

return nil, diags.Err()

}

// The provider produces a list of paths to attributes whose changes mean

// that we must replace rather than update an existing remote object.

// However, we only need to do that if the identified attributes _have_

// actually changed -- particularly after we may have undone some of the

// changes in processIgnoreChanges -- so now we'll filter that list to

// include only where changes are detected.

reqRep := cty.NewPathSet()

if len(resp.RequiresReplace) > 0 {

for _, path := range resp.RequiresReplace {

if priorVal.IsNull() {

// If prior is null then we don't expect any RequiresReplace at all,

// because this is a Create action.

continue

}

priorChangedVal, priorPathDiags := hcl.ApplyPath(priorVal, path, nil)

plannedChangedVal, plannedPathDiags := hcl.ApplyPath(plannedNewVal, path, nil)

if plannedPathDiags.HasErrors() && priorPathDiags.HasErrors() {

// This means the path was invalid in both the prior and new

// values, which is an error with the provider itself.

diags = diags.Append(tfdiags.Sourceless(

tfdiags.Error,

"Provider produced invalid plan",

fmt.Sprintf(

"Provider %q has indicated \"requires replacement\" on %s for a non-existent attribute path %#v.\n\nThis is a bug in the provider, which should be reported in the provider's own issue tracker.",

n.ProviderAddr.ProviderConfig.Type, absAddr, path,

),

))

continue

}

// Make sure we have valid Values for both values.

// Note: if the opposing value was of the type

// cty.DynamicPseudoType, the type assigned here may not exactly

// match the schema. This is fine here, since we're only going to

// check for equality, but if the NullVal is to be used, we need to

// check the schema for the true type.

switch {

case priorChangedVal == cty.NilVal && plannedChangedVal == cty.NilVal:

// this should never happen without ApplyPath errors above

panic("requires replace path returned 2 nil values")

case priorChangedVal == cty.NilVal:

priorChangedVal = cty.NullVal(plannedChangedVal.Type())

case plannedChangedVal == cty.NilVal:

plannedChangedVal = cty.NullVal(priorChangedVal.Type())

}

eqV := plannedChangedVal.Equals(priorChangedVal)

if !eqV.IsKnown() || eqV.False() {

reqRep.Add(path)

}

}

if diags.HasErrors() {

return nil, diags.Err()

}

}

eqV := plannedNewVal.Equals(priorVal)

eq := eqV.IsKnown() && eqV.True()

var action plans.Action

switch {

case priorVal.IsNull():

action = plans.Create

case eq:

action = plans.NoOp

case !reqRep.Empty():

// If there are any "requires replace" paths left _after our filtering

// above_ then this is a replace action.

if n.CreateBeforeDestroy {

action = plans.CreateThenDelete

} else {

action = plans.DeleteThenCreate

}

default:

action = plans.Update

// "Delete" is never chosen here, because deletion plans are always

// created more directly elsewhere, such as in "orphan" handling.

}

if action.IsReplace() {

// In this strange situation we want to produce a change object that

// shows our real prior object but has a _new_ object that is built

// from a null prior object, since we're going to delete the one

// that has all the computed values on it.

//

// Therefore we'll ask the provider to plan again here, giving it

// a null object for the prior, and then we'll meld that with the

// _actual_ prior state to produce a correctly-shaped replace change.

// The resulting change should show any computed attributes changing

// from known prior values to unknown values, unless the provider is

// able to predict new values for any of these computed attributes.

nullPriorVal := cty.NullVal(schema.ImpliedType())

// create a new proposed value from the null state and the config

proposedNewVal = objchange.ProposedNewObject(schema, nullPriorVal, configVal)

resp = provider.PlanResourceChange(providers.PlanResourceChangeRequest{

TypeName: n.Addr.Resource.Type,

Config: configVal,

PriorState: nullPriorVal,

ProposedNewState: proposedNewVal,

PriorPrivate: plannedPrivate,

})

// We need to tread carefully here, since if there are any warnings

// in here they probably also came out of our previous call to

// PlanResourceChange above, and so we don't want to repeat them.

// Consequently, we break from the usual pattern here and only

// append these new diagnostics if there's at least one error inside.

if resp.Diagnostics.HasErrors() {

diags = diags.Append(resp.Diagnostics.InConfigBody(config.Config))

return nil, diags.Err()

}

plannedNewVal = resp.PlannedState

plannedPrivate = resp.PlannedPrivate

for _, err := range plannedNewVal.Type().TestConformance(schema.ImpliedType()) {

diags = diags.Append(tfdiags.Sourceless(

tfdiags.Error,

"Provider produced invalid plan",

fmt.Sprintf(

"Provider %q planned an invalid value for %s.\n\nThis is a bug in the provider, which should be reported in the provider's own issue tracker.",

n.ProviderAddr.ProviderConfig.Type, tfdiags.FormatErrorPrefixed(err, absAddr.String()),

),

))

}

if diags.HasErrors() {

return nil, diags.Err()

}

}

// If our prior value was tainted then we actually want this to appear

// as a replace change, even though so far we've been treating it as a

// create.

if action == plans.Create && priorValTainted != cty.NilVal {

if n.CreateBeforeDestroy {

action = plans.CreateThenDelete

} else {

action = plans.DeleteThenCreate

}

priorVal = priorValTainted

}

// As a special case, if we have a previous diff (presumably from the plan

// phases, whereas we're now in the apply phase) and it was for a replace,

// we've already deleted the original object from state by the time we

// get here and so we would've ended up with a _create_ action this time,

// which we now need to paper over to get a result consistent with what

// we originally intended.

if n.PreviousDiff != nil {

prevChange := *n.PreviousDiff

if prevChange.Action.IsReplace() && action == plans.Create {

log.Printf("[TRACE] EvalDiff: %s treating Create change as %s change to match with earlier plan", absAddr, prevChange.Action)

action = prevChange.Action

priorVal = prevChange.Before

}

}

// Call post-diff hook

if !n.Stub {

err := ctx.Hook(func(h Hook) (HookAction, error) {

return h.PostDiff(absAddr, states.CurrentGen, action, priorVal, plannedNewVal)

})

if err != nil {

return nil, err

}

}

// Update our output if we care

if n.OutputChange != nil {

*n.OutputChange = &plans.ResourceInstanceChange{

Addr: absAddr,

Private: plannedPrivate,

ProviderAddr: n.ProviderAddr,

Change: plans.Change{

Action: action,

Before: priorVal,

After: plannedNewVal,

},

RequiredReplace: reqRep,

}

}

if n.OutputValue != nil {

*n.OutputValue = configVal

}

// Update the state if we care

if n.OutputState != nil {

*n.OutputState = &states.ResourceInstanceObject{

// We use the special "planned" status here to note that this

// object's value is not yet complete. Objects with this status

// cannot be used during expression evaluation, so the caller

// must _also_ record the returned change in the active plan,

// which the expression evaluator will use in preference to this

// incomplete value recorded in the state.

Status: states.ObjectPlanned,

Value: plannedNewVal,

Private: plannedPrivate,

}

}

return nil, nil

}

func (n *EvalDiff) processIgnoreChanges(prior, proposed cty.Value) (cty.Value, tfdiags.Diagnostics) {

// ignore_changes only applies when an object already exists, since we

// can't ignore changes to a thing we've not created yet.

if prior.IsNull() {

return proposed, nil

}

ignoreChanges := n.Config.Managed.IgnoreChanges

ignoreAll := n.Config.Managed.IgnoreAllChanges

if len(ignoreChanges) == 0 && !ignoreAll {

return proposed, nil

}

if ignoreAll {

return prior, nil

}

if prior.IsNull() || proposed.IsNull() {

// Ignore changes doesn't apply when we're creating for the first time.

// Proposed should never be null here, but if it is then we'll just let it be.

return proposed, nil

}

return processIgnoreChangesIndividual(prior, proposed, ignoreChanges)

}

func processIgnoreChangesIndividual(prior, proposed cty.Value, ignoreChanges []hcl.Traversal) (cty.Value, tfdiags.Diagnostics) {

// When we walk below we will be using cty.Path values for comparison, so

// we'll convert our traversals here so we can compare more easily.

ignoreChangesPath := make([]cty.Path, len(ignoreChanges))

for i, traversal := range ignoreChanges {

path := make(cty.Path, len(traversal))

for si, step := range traversal {

switch ts := step.(type) {

case hcl.TraverseRoot:

path[si] = cty.GetAttrStep{

Name: ts.Name,

}

case hcl.TraverseAttr:

path[si] = cty.GetAttrStep{

Name: ts.Name,

}

case hcl.TraverseIndex:

path[si] = cty.IndexStep{

Key: ts.Key,

}

default:

panic(fmt.Sprintf("unsupported traversal step %#v", step))

}

}

ignoreChangesPath[i] = path

}

var diags tfdiags.Diagnostics

ret, _ := cty.Transform(proposed, func(path cty.Path, v cty.Value) (cty.Value, error) {

// First we must see if this is a path that's being ignored at all.

// We're looking for an exact match here because this walk will visit

// leaf values first and then their containers, and we want to do

// the "ignore" transform once we reach the point indicated, throwing

// away any deeper values we already produced at that point.

var ignoreTraversal hcl.Traversal

for i, candidate := range ignoreChangesPath {

if path.Equals(candidate) {

ignoreTraversal = ignoreChanges[i]

}

}

if ignoreTraversal == nil {

return v, nil

}

// If we're able to follow the same path through the prior value,

// we'll take the value there instead, effectively undoing the

// change that was planned.

priorV, applyDiags := hcl.ApplyPath(prior, path, nil)

if applyDiags.HasErrors() {

// We just ignore the errors and move on here, since we assume it's

// just because the prior value was a slightly-different shape.

// It could potentially also be that the traversal doesn't match

// the schema, but we should've caught that during the validate

// walk if so.

return v, nil

}

return priorV, nil

})

return ret, diags

}

// EvalDiffDestroy is an EvalNode implementation that returns a plain

// destroy diff.

type EvalDiffDestroy struct {

Addr addrs.ResourceInstance

DeposedKey states.DeposedKey

State **states.ResourceInstanceObject

ProviderAddr addrs.AbsProviderConfig

Output **plans.ResourceInstanceChange

OutputState **states.ResourceInstanceObject

}

// TODO: test

func (n *EvalDiffDestroy) Eval(ctx EvalContext) (interface{}, error) {

absAddr := n.Addr.Absolute(ctx.Path())

state := *n.State

if n.ProviderAddr.ProviderConfig.Type == "" {

if n.DeposedKey == "" {

panic(fmt.Sprintf("EvalDiffDestroy for %s does not have ProviderAddr set", absAddr))

} else {

panic(fmt.Sprintf("EvalDiffDestroy for %s (deposed %s) does not have ProviderAddr set", absAddr, n.DeposedKey))

}

}

// If there is no state or our attributes object is null then we're already

// destroyed.

if state == nil || state.Value.IsNull() {

return nil, nil

}

// Call pre-diff hook

err := ctx.Hook(func(h Hook) (HookAction, error) {

return h.PreDiff(

absAddr, n.DeposedKey.Generation(),

state.Value,

cty.NullVal(cty.DynamicPseudoType),

)

})

if err != nil {

return nil, err

}

// Change is always the same for a destroy. We don't need the provider's

// help for this one.

// TODO: Should we give the provider an opportunity to veto this?

change := &plans.ResourceInstanceChange{

Addr: absAddr,

DeposedKey: n.DeposedKey,

Change: plans.Change{

Action: plans.Delete,

Before: state.Value,

After: cty.NullVal(cty.DynamicPseudoType),

},

Private: state.Private,

ProviderAddr: n.ProviderAddr,

}

// Call post-diff hook

err = ctx.Hook(func(h Hook) (HookAction, error) {

return h.PostDiff(

absAddr,

n.DeposedKey.Generation(),

change.Action,

change.Before,

change.After,

)

})

if err != nil {

return nil, err

}

// Update our output

*n.Output = change

if n.OutputState != nil {

// Record our proposed new state, which is nil because we're destroying.

*n.OutputState = nil

}

return nil, nil

}

// EvalReduceDiff is an EvalNode implementation that takes a planned resource

// instance change as might be produced by EvalDiff or EvalDiffDestroy and

// "simplifies" it to a single atomic action to be performed by a specific

// graph node.

//

// Callers must specify whether they are a destroy node or a regular apply

// node. If the result is NoOp then the given change requires no action for

// the specific graph node calling this and so evaluation of that graph

// node should exit early and take no action.

//

// The object written to OutChange may either be identical to InChange or

// a new change object derived from InChange. Because of the former case, the

// caller must not mutate the object returned in OutChange.

type EvalReduceDiff struct {

Addr addrs.ResourceInstance

InChange **plans.ResourceInstanceChange

Destroy bool

OutChange **plans.ResourceInstanceChange

}

// TODO: test

func (n *EvalReduceDiff) Eval(ctx EvalContext) (interface{}, error) {

in := *n.InChange

out := in.Simplify(n.Destroy)

if n.OutChange != nil {

*n.OutChange = out

}

if out.Action != in.Action {

if n.Destroy {

log.Printf("[TRACE] EvalReduceDiff: %s change simplified from %s to %s for destroy node", n.Addr, in.Action, out.Action)

} else {

log.Printf("[TRACE] EvalReduceDiff: %s change simplified from %s to %s for apply node", n.Addr, in.Action, out.Action)

}

}

return nil, nil

}

// EvalReadDiff is an EvalNode implementation that retrieves the planned

// change for a particular resource instance object.

type EvalReadDiff struct {

Addr addrs.ResourceInstance

DeposedKey states.DeposedKey

ProviderSchema **ProviderSchema

Change **plans.ResourceInstanceChange

}

func (n *EvalReadDiff) Eval(ctx EvalContext) (interface{}, error) {

providerSchema := *n.ProviderSchema

changes := ctx.Changes()

addr := n.Addr.Absolute(ctx.Path())

schema, _ := providerSchema.SchemaForResourceAddr(n.Addr.ContainingResource())

if schema == nil {

// Should be caught during validation, so we don't bother with a pretty error here

return nil, fmt.Errorf("provider does not support resource type %q", n.Addr.Resource.Type)

}

gen := states.CurrentGen

if n.DeposedKey != states.NotDeposed {

gen = n.DeposedKey

}

csrc := changes.GetResourceInstanceChange(addr, gen)

if csrc == nil {

log.Printf("[TRACE] EvalReadDiff: No planned change recorded for %s", addr)

return nil, nil

}

change, err := csrc.Decode(schema.ImpliedType())

if err != nil {

return nil, fmt.Errorf("failed to decode planned changes for %s: %s", addr, err)

}

if n.Change != nil {

*n.Change = change

}

log.Printf("[TRACE] EvalReadDiff: Read %s change from plan for %s", change.Action, addr)

return nil, nil

}

// EvalWriteDiff is an EvalNode implementation that saves a planned change

// for an instance object into the set of global planned changes.

type EvalWriteDiff struct {

Addr addrs.ResourceInstance

DeposedKey states.DeposedKey

ProviderSchema **ProviderSchema

Change **plans.ResourceInstanceChange

}

// TODO: test

func (n *EvalWriteDiff) Eval(ctx EvalContext) (interface{}, error) {

changes := ctx.Changes()

addr := n.Addr.Absolute(ctx.Path())

if n.Change == nil || *n.Change == nil {

// Caller sets nil to indicate that we need to remove a change from

// the set of changes.

gen := states.CurrentGen

if n.DeposedKey != states.NotDeposed {

gen = n.DeposedKey

}

changes.RemoveResourceInstanceChange(addr, gen)

return nil, nil

}

providerSchema := *n.ProviderSchema

change := *n.Change

if change.Addr.String() != addr.String() || change.DeposedKey != n.DeposedKey {

// Should never happen, and indicates a bug in the caller.

panic("inconsistent address and/or deposed key in EvalWriteDiff")

}

schema, _ := providerSchema.SchemaForResourceAddr(n.Addr.ContainingResource())

if schema == nil {

// Should be caught during validation, so we don't bother with a pretty error here

return nil, fmt.Errorf("provider does not support resource type %q", n.Addr.Resource.Type)

}

csrc, err := change.Encode(schema.ImpliedType())

if err != nil {

return nil, fmt.Errorf("failed to encode planned changes for %s: %s", addr, err)

}

changes.AppendResourceInstanceChange(csrc)

if n.DeposedKey == states.NotDeposed {

log.Printf("[TRACE] EvalWriteDiff: recorded %s change for %s", change.Action, addr)

} else {

log.Printf("[TRACE] EvalWriteDiff: recorded %s change for %s deposed object %s", change.Action, addr, n.DeposedKey)

}

return nil, nil

}


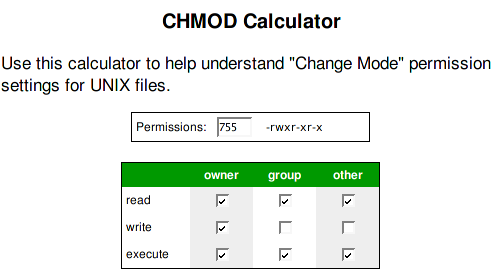

Title: CHMOD Calculator

Date: 2006-10-31 09:18

Author: toy

Category: Apps

Slug: chmod_calculator

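Some newcomers to *nix find the string of digits that follows the chmod command quite baffling. What secret is hidden behind those digits? And when you change a file's permissions, how do you work out the right digits? [CHMOD Calculator](http://www.mistupid.com/internet/chmod.htm), a simple online chmod calculator, will lend you a hand.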

(via [digg](http://digg.com/linux_unix/Best_CHMOD_Calculator), thanks!)
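The "secret" is plain arithmetic. Each digit covers one class of user: owner, group and others, in that order. Each digit is the sum of r=4, w=2 and x=1, so `rwxr-xr-x` becomes 755. The small Go sketch below shows roughly what such a calculator does. The helper name `symbolicToOctal` is hypothetical and not part of any library. It assumes a plain 9-character permission string and ignores the setuid, setgid and sticky bits.

```go
package main

import "fmt"

// symbolicToOctal is a hypothetical helper (not part of any library) that
// converts a 9-character permission string such as "rwxr-xr--" into the
// numeric mode chmod expects. Reading left to right, the owner, group and
// others triplets each yield one octal digit, where r=4, w=2 and x=1.
// Special bits (setuid, setgid, sticky) are deliberately not handled.
func symbolicToOctal(perm string) (uint32, error) {
	if len(perm) != 9 {
		return 0, fmt.Errorf("want 9 characters, got %d", len(perm))
	}
	const letters = "rwx"
	var mode uint32
	for i := 0; i < len(perm); i++ {
		mode <<= 1 // make room for the next permission bit
		switch perm[i] {
		case letters[i%3]:
			mode |= 1 // permission granted: set the bit
		case '-':
			// permission not granted: leave the bit as 0
		default:
			return 0, fmt.Errorf("unexpected %q at position %d", perm[i], i)
		}
	}
	return mode, nil
}

func main() {
	for _, p := range []string{"rwxr-xr-x", "rw-r--r--", "rwx------"} {
		mode, err := symbolicToOctal(p)
		if err != nil {
			fmt.Println(err)
			continue
		}
		// %03o prints the mode as a zero-padded three-digit octal number.
		fmt.Printf("%s -> chmod %03o\n", p, mode)
	}
}
```

Running it prints `rwxr-xr-x -> chmod 755`, `rw-r--r-- -> chmod 644` and `rwx------ -> chmod 700`. To apply the result from Go rather than the shell, the value can be passed to `os.Chmod` as `os.FileMode(mode)`.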


// !$*UTF8*$!

{

archiveVersion = 1;

classes = {

};

objectVersion = 46;

objects = {

/* Begin PBXBuildFile section */

8E335399177892A000E92480 /* Images.xcassets in Resources */ = {isa = PBXBuildFile; fileRef = 8E335398177892A000E92480 /* Images.xcassets */; };

8E40C5B11773866D002489E6 /* Foundation.framework in Frameworks */ = {isa = PBXBuildFile; fileRef = 8E40C5B01773866D002489E6 /* Foundation.framework */; };

8E40C5B31773866D002489E6 /* CoreGraphics.framework in Frameworks */ = {isa = PBXBuildFile; fileRef = 8E40C5B21773866D002489E6 /* CoreGraphics.framework */; };

8E40C5B51773866D002489E6 /* UIKit.framework in Frameworks */ = {isa = PBXBuildFile; fileRef = 8E40C5B41773866D002489E6 /* UIKit.framework */; };

8E40C5BB1773866D002489E6 /* InfoPlist.strings in Resources */ = {isa = PBXBuildFile; fileRef = 8E40C5B91773866D002489E6 /* InfoPlist.strings */; };

8E40C5BD1773866D002489E6 /* main.m in Sources */ = {isa = PBXBuildFile; fileRef = 8E40C5BC1773866D002489E6 /* main.m */; };

8E40C5EB177394F8002489E6 /* CoreText.framework in Frameworks */ = {isa = PBXBuildFile; fileRef = 8E40C5EA177394F8002489E6 /* CoreText.framework */; };

8E40C5ED17739502002489E6 /* QuartzCore.framework in Frameworks */ = {isa = PBXBuildFile; fileRef = 8E40C5EC17739502002489E6 /* QuartzCore.framework */; };

8E40C5EF1773951D002489E6 /* Accelerate.framework in Frameworks */ = {isa = PBXBuildFile; fileRef = 8E40C5EE1773951D002489E6 /* Accelerate.framework */; };

/* End PBXBuildFile section */

/* Begin PBXFileReference section */

8E3353951778911600E92480 /* Utility.h */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.h; name = Utility.h; path = "Hello World/Utility.h"; sourceTree = "<group>"; };

8E335398177892A000E92480 /* Images.xcassets */ = {isa = PBXFileReference; lastKnownFileType = folder.assetcatalog; path = Images.xcassets; sourceTree = "<group>"; };

8E40C5AD1773866D002489E6 /* Hello World.app */ = {isa = PBXFileReference; explicitFileType = wrapper.application; includeInIndex = 0; path = "Hello World.app"; sourceTree = BUILT_PRODUCTS_DIR; };

8E40C5B01773866D002489E6 /* Foundation.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = Foundation.framework; path = System/Library/Frameworks/Foundation.framework; sourceTree = SDKROOT; };

8E40C5B21773866D002489E6 /* CoreGraphics.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = CoreGraphics.framework; path = System/Library/Frameworks/CoreGraphics.framework; sourceTree = SDKROOT; };

8E40C5B41773866D002489E6 /* UIKit.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = UIKit.framework; path = System/Library/Frameworks/UIKit.framework; sourceTree = SDKROOT; };

8E40C5B81773866D002489E6 /* Hello World-Info.plist */ = {isa = PBXFileReference; lastKnownFileType = text.plist.xml; path = "Hello World-Info.plist"; sourceTree = "<group>"; };

8E40C5BA1773866D002489E6 /* en */ = {isa = PBXFileReference; lastKnownFileType = text.plist.strings; name = en; path = en.lproj/InfoPlist.strings; sourceTree = "<group>"; };

8E40C5BC1773866D002489E6 /* main.m */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.objc; name = main.m; path = "Hello World/main.m"; sourceTree = "<group>"; };

8E40C5BE1773866D002489E6 /* Hello World-Prefix.pch */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.h; path = "Hello World-Prefix.pch"; sourceTree = "<group>"; };

8E40C5C91773866D002489E6 /* XCTest.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = XCTest.framework; path = Library/Frameworks/XCTest.framework; sourceTree = DEVELOPER_DIR; };

8E40C5EA177394F8002489E6 /* CoreText.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = CoreText.framework; path = System/Library/Frameworks/CoreText.framework; sourceTree = SDKROOT; };

8E40C5EC17739502002489E6 /* QuartzCore.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = QuartzCore.framework; path = System/Library/Frameworks/QuartzCore.framework; sourceTree = SDKROOT; };

8E40C5EE1773951D002489E6 /* Accelerate.framework */ = {isa = PBXFileReference; lastKnownFileType = wrapper.framework; name = Accelerate.framework; path = System/Library/Frameworks/Accelerate.framework; sourceTree = SDKROOT; };

/* End PBXFileReference section */

/* Begin PBXFrameworksBuildPhase section */

8E40C5AA1773866D002489E6 /* Frameworks */ = {

isa = PBXFrameworksBuildPhase;

buildActionMask = 2147483647;

files = (

8E40C5EF1773951D002489E6 /* Accelerate.framework in Frameworks */,

8E40C5ED17739502002489E6 /* QuartzCore.framework in Frameworks */,

8E40C5EB177394F8002489E6 /* CoreText.framework in Frameworks */,

8E40C5B31773866D002489E6 /* CoreGraphics.framework in Frameworks */,

8E40C5B51773866D002489E6 /* UIKit.framework in Frameworks */,

8E40C5B11773866D002489E6 /* Foundation.framework in Frameworks */,

);

runOnlyForDeploymentPostprocessing = 0;

};

/* End PBXFrameworksBuildPhase section */

/* Begin PBXGroup section */

8E40C5A41773866D002489E6 = {

isa = PBXGroup;

children = (

8E40C5BC1773866D002489E6 /* main.m */,

8E3353951778911600E92480 /* Utility.h */,

8E40C5B71773866D002489E6 /* Supporting Files */,

8E40C5AF1773866D002489E6 /* Frameworks */,

8E40C5AE1773866D002489E6 /* Products */,

);

sourceTree = "<group>";

};

8E40C5AE1773866D002489E6 /* Products */ = {

isa = PBXGroup;

children = (

8E40C5AD1773866D002489E6 /* Hello World.app */,

);

name = Products;

sourceTree = "<group>";

};

8E40C5AF1773866D002489E6 /* Frameworks */ = {

isa = PBXGroup;

children = (

8E40C5EE1773951D002489E6 /* Accelerate.framework */,

8E40C5EC17739502002489E6 /* QuartzCore.framework */,

8E40C5EA177394F8002489E6 /* CoreText.framework */,

8E40C5B01773866D002489E6 /* Foundation.framework */,

8E40C5B21773866D002489E6 /* CoreGraphics.framework */,

8E40C5B41773866D002489E6 /* UIKit.framework */,

8E40C5C91773866D002489E6 /* XCTest.framework */,

);

name = Frameworks;

sourceTree = "<group>";

};

8E40C5B71773866D002489E6 /* Supporting Files */ = {

isa = PBXGroup;

children = (

8E335398177892A000E92480 /* Images.xcassets */,

8E40C5B81773866D002489E6 /* Hello World-Info.plist */,

8E40C5B91773866D002489E6 /* InfoPlist.strings */,

8E40C5BE1773866D002489E6 /* Hello World-Prefix.pch */,

);

name = "Supporting Files";

path = "Hello World";

sourceTree = "<group>";

};

/* End PBXGroup section */

/* Begin PBXNativeTarget section */

8E40C5AC1773866D002489E6 /* Hello World */ = {

isa = PBXNativeTarget;

buildConfigurationList = 8E40C5D91773866D002489E6 /* Build configuration list for PBXNativeTarget "Hello World" */;

buildPhases = (

8E40C5A91773866D002489E6 /* Sources */,

8E40C5AA1773866D002489E6 /* Frameworks */,

8E40C5AB1773866D002489E6 /* Resources */,

);

buildRules = (

);

dependencies = (

);

name = "Hello World";

productName = "Hello World";

productReference = 8E40C5AD1773866D002489E6 /* Hello World.app */;

productType = "com.apple.product-type.application";

};

/* End PBXNativeTarget section */

/* Begin PBXProject section */

8E40C5A51773866D002489E6 /* Project object */ = {

isa = PBXProject;

attributes = {

LastUpgradeCheck = 0500;

ORGANIZATIONNAME = "Erica Sadun";

};

buildConfigurationList = 8E40C5A81773866D002489E6 /* Build configuration list for PBXProject "Hello World" */;

compatibilityVersion = "Xcode 3.2";

developmentRegion = English;

hasScannedForEncodings = 0;

knownRegions = (

en,

);

mainGroup = 8E40C5A41773866D002489E6;

productRefGroup = 8E40C5AE1773866D002489E6 /* Products */;

projectDirPath = "";

projectRoot = "";

targets = (

8E40C5AC1773866D002489E6 /* Hello World */,

);

};

/* End PBXProject section */

/* Begin PBXResourcesBuildPhase section */

8E40C5AB1773866D002489E6 /* Resources */ = {

isa = PBXResourcesBuildPhase;

buildActionMask = 2147483647;

files = (

8E40C5BB1773866D002489E6 /* InfoPlist.strings in Resources */,

8E335399177892A000E92480 /* Images.xcassets in Resources */,

);

runOnlyForDeploymentPostprocessing = 0;

};

/* End PBXResourcesBuildPhase section */

/* Begin PBXSourcesBuildPhase section */

8E40C5A91773866D002489E6 /* Sources */ = {

isa = PBXSourcesBuildPhase;

buildActionMask = 2147483647;

files = (

8E40C5BD1773866D002489E6 /* main.m in Sources */,

);

runOnlyForDeploymentPostprocessing = 0;

};

/* End PBXSourcesBuildPhase section */

/* Begin PBXVariantGroup section */

8E40C5B91773866D002489E6 /* InfoPlist.strings */ = {

isa = PBXVariantGroup;

children = (

8E40C5BA1773866D002489E6 /* en */,

);

name = InfoPlist.strings;

sourceTree = "<group>";

};

/* End PBXVariantGroup section */

/* Begin XCBuildConfiguration section */

8E40C5D71773866D002489E6 /* Debug */ = {

isa = XCBuildConfiguration;

buildSettings = {

ALWAYS_SEARCH_USER_PATHS = NO;

CLANG_CXX_LANGUAGE_STANDARD = "gnu++0x";

CLANG_CXX_LIBRARY = "libc++";

CLANG_ENABLE_MODULES = YES;

CLANG_ENABLE_OBJC_ARC = YES;

CLANG_STATIC_ANALYZER_MODE = deep;

CLANG_WARN_BOOL_CONVERSION = YES;

CLANG_WARN_CONSTANT_CONVERSION = YES;

CLANG_WARN_DIRECT_OBJC_ISA_USAGE = YES_ERROR;

CLANG_WARN_EMPTY_BODY = YES;

CLANG_WARN_ENUM_CONVERSION = YES;

CLANG_WARN_INT_CONVERSION = YES;

CLANG_WARN_OBJC_ROOT_CLASS = YES_ERROR;

CLANG_WARN__DUPLICATE_METHOD_MATCH = YES;

"CODE_SIGN_IDENTITY[sdk=iphoneos*]" = "iPhone Developer";

COPY_PHASE_STRIP = NO;

GCC_C_LANGUAGE_STANDARD = gnu99;

GCC_DYNAMIC_NO_PIC = NO;

GCC_OPTIMIZATION_LEVEL = 0;

GCC_PREPROCESSOR_DEFINITIONS = (

"DEBUG=1",

"$(inherited)",

);

GCC_SYMBOLS_PRIVATE_EXTERN = NO;

GCC_WARN_ABOUT_RETURN_TYPE = YES_ERROR;

GCC_WARN_UNDECLARED_SELECTOR = YES;

GCC_WARN_UNINITIALIZED_AUTOS = YES;

GCC_WARN_UNUSED_FUNCTION = YES;

GCC_WARN_UNUSED_VARIABLE = YES;

IPHONEOS_DEPLOYMENT_TARGET = 7.0;

ONLY_ACTIVE_ARCH = YES;

OTHER_CFLAGS = (

"-Wall",

"-Wextra",

"-Wno-unused-parameter",

);

RUN_CLANG_STATIC_ANALYZER = YES;

SDKROOT = iphoneos;

TARGETED_DEVICE_FAMILY = "1,2";

};

name = Debug;

};

8E40C5D81773866D002489E6 /* Release */ = {

isa = XCBuildConfiguration;

buildSettings = {

ALWAYS_SEARCH_USER_PATHS = NO;

CLANG_CXX_LANGUAGE_STANDARD = "gnu++0x";

CLANG_CXX_LIBRARY = "libc++";

CLANG_ENABLE_MODULES = YES;

CLANG_ENABLE_OBJC_ARC = YES;

CLANG_STATIC_ANALYZER_MODE = deep;

CLANG_WARN_BOOL_CONVERSION = YES;

CLANG_WARN_CONSTANT_CONVERSION = YES;

CLANG_WARN_DIRECT_OBJC_ISA_USAGE = YES_ERROR;

CLANG_WARN_EMPTY_BODY = YES;

CLANG_WARN_ENUM_CONVERSION = YES;

CLANG_WARN_INT_CONVERSION = YES;

CLANG_WARN_OBJC_ROOT_CLASS = YES_ERROR;

CLANG_WARN__DUPLICATE_METHOD_MATCH = YES;

"CODE_SIGN_IDENTITY[sdk=iphoneos*]" = "iPhone Developer";

COPY_PHASE_STRIP = YES;

ENABLE_NS_ASSERTIONS = NO;

GCC_C_LANGUAGE_STANDARD = gnu99;

GCC_WARN_ABOUT_RETURN_TYPE = YES_ERROR;

GCC_WARN_UNDECLARED_SELECTOR = YES;

GCC_WARN_UNINITIALIZED_AUTOS = YES;

GCC_WARN_UNUSED_FUNCTION = YES;

GCC_WARN_UNUSED_VARIABLE = YES;

IPHONEOS_DEPLOYMENT_TARGET = 7.0;

OTHER_CFLAGS = (

"-Wall",

"-Wextra",

"-Wno-unused-parameter",

);

RUN_CLANG_STATIC_ANALYZER = YES;

SDKROOT = iphoneos;

TARGETED_DEVICE_FAMILY = "1,2";

VALIDATE_PRODUCT = YES;

};

name = Release;

};

8E40C5DA1773866D002489E6 /* Debug */ = {

isa = XCBuildConfiguration;

buildSettings = {

ASSETCATALOG_COMPILER_APPICON_NAME = AppIcon;

ASSETCATALOG_COMPILER_LAUNCHIMAGE_NAME = LaunchImage;

GCC_PRECOMPILE_PREFIX_HEADER = YES;

GCC_PREFIX_HEADER = "Hello World/Hello World-Prefix.pch";

INFOPLIST_FILE = "Hello World/Hello World-Info.plist";

PRODUCT_NAME = "$(TARGET_NAME)";

WRAPPER_EXTENSION = app;

};

name = Debug;

};

8E40C5DB1773866D002489E6 /* Release */ = {

isa = XCBuildConfiguration;

buildSettings = {

ASSETCATALOG_COMPILER_APPICON_NAME = AppIcon;

ASSETCATALOG_COMPILER_LAUNCHIMAGE_NAME = LaunchImage;

GCC_PRECOMPILE_PREFIX_HEADER = YES;

GCC_PREFIX_HEADER = "Hello World/Hello World-Prefix.pch";

INFOPLIST_FILE = "Hello World/Hello World-Info.plist";

PRODUCT_NAME = "$(TARGET_NAME)";

WRAPPER_EXTENSION = app;

};

name = Release;

};

/* End XCBuildConfiguration section */

/* Begin XCConfigurationList section */

8E40C5A81773866D002489E6 /* Build configuration list for PBXProject "Hello World" */ = {

isa = XCConfigurationList;

buildConfigurations = (

8E40C5D71773866D002489E6 /* Debug */,

8E40C5D81773866D002489E6 /* Release */,

);

defaultConfigurationIsVisible = 0;

defaultConfigurationName = Release;

};

8E40C5D91773866D002489E6 /* Build configuration list for PBXNativeTarget "Hello World" */ = {

isa = XCConfigurationList;

buildConfigurations = (

8E40C5DA1773866D002489E6 /* Debug */,

8E40C5DB1773866D002489E6 /* Release */,

);

defaultConfigurationIsVisible = 0;

defaultConfigurationName = Release;

};

/* End XCConfigurationList section */

};

rootObject = 8E40C5A51773866D002489E6 /* Project object */;

}

| {

"pile_set_name": "Github"

} |

"""
Tests for zipline.pipeline.loaders.frame.DataFrameLoader.
"""
from unittest import TestCase

from mock import patch
from numpy import arange, ones
from numpy.testing import assert_array_equal
from pandas import (
    DataFrame,
    DatetimeIndex,
    Int64Index,
)
from trading_calendars import get_calendar

from zipline.lib.adjustment import (
    ADD,
    Float64Add,
    Float64Multiply,
    Float64Overwrite,
    MULTIPLY,
    OVERWRITE,
)
from zipline.pipeline.data import USEquityPricing
from zipline.pipeline.domain import US_EQUITIES
from zipline.pipeline.loaders.frame import DataFrameLoader


class DataFrameLoaderTestCase(TestCase):

    def setUp(self):
        self.trading_day = get_calendar("NYSE").day

        self.nsids = 5
        self.ndates = 20

        self.sids = Int64Index(range(self.nsids))
        self.dates = DatetimeIndex(
            start='2014-01-02',
            freq=self.trading_day,
            periods=self.ndates,
        )

        self.mask = ones((len(self.dates), len(self.sids)), dtype=bool)

    def tearDown(self):
        pass

    def test_bad_input(self):
        data = arange(100).reshape(self.ndates, self.nsids)
        baseline = DataFrame(data, index=self.dates, columns=self.sids)
        loader = DataFrameLoader(
            USEquityPricing.close,
            baseline,
        )

        with self.assertRaises(ValueError):
            # Wrong column.
            loader.load_adjusted_array(
                US_EQUITIES,
                [USEquityPricing.open],
                self.dates,
                self.sids,
                self.mask,
            )

        with self.assertRaises(ValueError):
            # Too many columns.
            loader.load_adjusted_array(
                US_EQUITIES,
                [USEquityPricing.open, USEquityPricing.close],
                self.dates,
                self.sids,
                self.mask,
            )

    def test_baseline(self):
        data = arange(100).reshape(self.ndates, self.nsids)
        baseline = DataFrame(data, index=self.dates, columns=self.sids)
        loader = DataFrameLoader(USEquityPricing.close, baseline)

        dates_slice = slice(None, 10, None)
        sids_slice = slice(1, 3, None)
        [adj_array] = loader.load_adjusted_array(
            US_EQUITIES,
            [USEquityPricing.close],
            self.dates[dates_slice],
            self.sids[sids_slice],
            self.mask[dates_slice, sids_slice],
        ).values()

        for idx, window in enumerate(adj_array.traverse(window_length=3)):
            expected = baseline.values[dates_slice, sids_slice][idx:idx + 3]
            assert_array_equal(window, expected)

    def test_adjustments(self):
        data = arange(100).reshape(self.ndates, self.nsids)
        baseline = DataFrame(data, index=self.dates, columns=self.sids)

        # Use the dates from index 10 on and sids 1-3.
        dates_slice = slice(10, None, None)
        sids_slice = slice(1, 4, None)

        # Adjustments that should actually affect the output.
        relevant_adjustments = [
            {
                'sid': 1,
                'start_date': None,
                'end_date': self.dates[15],
                'apply_date': self.dates[16],
                'value': 0.5,
                'kind': MULTIPLY,
            },
            {
                'sid': 2,
                'start_date': self.dates[5],
                'end_date': self.dates[15],
                'apply_date': self.dates[16],
                'value': 1.0,
                'kind': ADD,
            },
            {
                'sid': 2,
                'start_date': self.dates[15],
                'end_date': self.dates[16],
                'apply_date': self.dates[17],
                'value': 1.0,
                'kind': ADD,
            },
            {
                'sid': 3,
                'start_date': self.dates[16],
                'end_date': self.dates[17],
                'apply_date': self.dates[18],
                'value': 99.0,
                'kind': OVERWRITE,
            },
        ]

        # These adjustments shouldn't affect the output.
        irrelevant_adjustments = [
            {  # Sid Not Requested
                'sid': 0,
                'start_date': self.dates[16],
                'end_date': self.dates[17],
                'apply_date': self.dates[18],
                'value': -9999.0,
                'kind': OVERWRITE,
            },
            {  # Sid Unknown
                'sid': 9999,
                'start_date': self.dates[16],
                'end_date': self.dates[17],
                'apply_date': self.dates[18],
                'value': -9999.0,
                'kind': OVERWRITE,
            },
            {  # Date Not Requested
                'sid': 2,
                'start_date': self.dates[1],
                'end_date': self.dates[2],
                'apply_date': self.dates[3],
                'value': -9999.0,
                'kind': OVERWRITE,
            },
            {  # Date Before Known Data
                'sid': 2,
                'start_date': self.dates[0] - (2 * self.trading_day),
                'end_date': self.dates[0] - self.trading_day,
                'apply_date': self.dates[0] - self.trading_day,
                'value': -9999.0,
                'kind': OVERWRITE,
            },
            {  # Date After Known Data
                'sid': 2,
                'start_date': self.dates[-1] + self.trading_day,
                'end_date': self.dates[-1] + (2 * self.trading_day),
                'apply_date': self.dates[-1] + (3 * self.trading_day),
                'value': -9999.0,
                'kind': OVERWRITE,
            },
        ]

        adjustments = DataFrame(relevant_adjustments + irrelevant_adjustments)
        loader = DataFrameLoader(
            USEquityPricing.close,
            baseline,
            adjustments=adjustments,
        )

        expected_baseline = baseline.iloc[dates_slice, sids_slice]

        formatted_adjustments = loader.format_adjustments(
            self.dates[dates_slice],
            self.sids[sids_slice],
        )
        expected_formatted_adjustments = {
            6: [
                Float64Multiply(
                    first_row=0,
                    last_row=5,
                    first_col=0,
                    last_col=0,
                    value=0.5,
                ),
                Float64Add(
                    first_row=0,
                    last_row=5,
                    first_col=1,
                    last_col=1,
                    value=1.0,
                ),
            ],
            7: [
                Float64Add(
                    first_row=5,
                    last_row=6,
                    first_col=1,
                    last_col=1,
                    value=1.0,
                ),
            ],
            8: [
                Float64Overwrite(
                    first_row=6,
                    last_row=7,
                    first_col=2,
                    last_col=2,
                    value=99.0,
                )
            ],
        }
        self.assertEqual(formatted_adjustments, expected_formatted_adjustments)

        mask = self.mask[dates_slice, sids_slice]
        with patch('zipline.pipeline.loaders.frame.AdjustedArray') as m:
            loader.load_adjusted_array(
                US_EQUITIES,
                columns=[USEquityPricing.close],
                dates=self.dates[dates_slice],
                sids=self.sids[sids_slice],
                mask=mask,
            )

        self.assertEqual(m.call_count, 1)

        args, kwargs = m.call_args
        assert_array_equal(kwargs['data'], expected_baseline.values)
        self.assertEqual(kwargs['adjustments'], expected_formatted_adjustments)

| {

"pile_set_name": "Github"

} |

[ This is the ChangeLog from the former keyserver/ directory which

kept the old gpgkeys_* keyserver access helpers. We keep it here

to document the history of certain keyserver related features. ]

2011-12-01 Werner Koch <[email protected]>

NB: ChangeLog files are no longer manually maintained. Starting

on December 1st, 2011 we put change information only in the GIT

commit log, and generate a top-level ChangeLog file from logs at

"make dist". See doc/HACKING for details.

2011-01-20 Werner Koch <[email protected]>

* gpgkeys_hkp.c (get_name): Remove test for KS_GETNAME. It is

always true.

(search_key): Remove test for KS_GETNAME. It is always false.

2009-08-26 Werner Koch <[email protected]>

* gpgkeys_hkp.c: Include util.h.

(send_key): Use strconcat to build KEY.

(appendable_path): New.

(get_name): Use strconcat to build REQUEST.

(search_key): Ditto.

* ksutil.c: Include util.h.

(parse_ks_options): Use make_filename_try for the ca-cert-file arg.

2009-07-06 David Shaw <[email protected]>

* gpgkeys_hkp.c (main, srv_replace): Minor tweaks to use the

DNS-SD names ("pgpkey-http" and "pgpkey-https") in SRV lookups

instead of "hkp" and "hkps".

2009-06-24 Werner Koch <[email protected]>

* gpgkeys_ldap.c (send_key): Do not deep free a NULL modlist.

Reported by Fabian Keil.

2009-05-28 David Shaw <[email protected]>

From 1.4:

* curl-shim.c (curl_slist_append, curl_slist_free_all): New.

Simple wrappers around strlist_t to emulate the curl way of doing

string lists.

(curl_easy_setopt): Handle the curl HTTPHEADER option.

* gpgkeys_curl.c, gpgkeys_hkp.c (main): Avoid caches to get the

most recent copy of the key. This is bug #1061.

2009-05-27 David Shaw <[email protected]>

From 1.4:

* gpgkeys_hkp.c (srv_replace): New function to transform a SRV

hostname to a real hostname.

(main): Call it from here for the HAVE_LIBCURL case (the case

without libcurl is handled via the curl-shim).

* curl-shim.h, curl-shim.c (curl_easy_setopt, curl_easy_perform):

Add a CURLOPT_SRVTAG_GPG_HACK (passed through to the http

engine).

2009-05-10 David Shaw <[email protected]>

From 1.4:

* gpgkeys_hkp.c (send_key, get_key, get_name, search_key, main):

Add support for SSLized HKP.

* curl-shim.h (curl_version): No need to provide a version for

curl-shim as it always matches the GnuPG version.

* gpgkeys_curl.c, gpgkeys_hkp.c (main): Show which version of curl

we're using as part of --version.

* gpgkeys_curl.c, gpgkeys_finger.c, gpgkeys_hkp.c,

gpgkeys_ldap.c (show_help): Document --version.

2009-05-04 David Shaw <[email protected]>

* gpgkeys_mailto.in: Set 'mail-from' as a keyserver-option, rather

than the ugly ?from= syntax.

2009-01-22 Werner Koch <[email protected]>

* Makefile.am (gpg2keys_curl_LDADD, gpg2keys_hkp_LDADD): Add all

standard libs.

2008-10-20 Werner Koch <[email protected]>

* curl-shim.c (curl_global_init): Mark unused arg.

(curl_version_info): Ditto.

2008-08-29 Werner Koch <[email protected]>

* gpgkeys_kdns.c: Changed copyright notice to the FSF.

2008-04-21 Werner Koch <[email protected]>

* ksutil.c (w32_init_sockets) [HAVE_W32_SYSTEM]: New.

* curl-shim.c (curl_easy_init) [HAVE_W32_SYSTEM]: Call it.

* gpgkeys_finger.c: s/_WIN32/HAVE_W32_SYSTEM/.

(init_sockets): Remove.

(connect_server) [HAVE_W32_SYSTEM]: Call new function.

2008-04-14 David Shaw <[email protected]>

* gpgkeys_curl.c (main), gpgkeys_hkp.c (main): Make sure all

libcurl number options are passed as long.

* curl-shim.c (curl_easy_setopt): Minor tweak to match the real

curl better - libcurl uses 'long', not 'unsigned int'.

2008-04-07 Werner Koch <[email protected]>

* gpgkeys_kdns.c: New.

* Makefile.am: Support kdns.

* no-libgcrypt.c (gcry_strdup): Fix. It was not used.

2008-03-25 Werner Koch <[email protected]>

* gpgkeys_ldap.c (build_attrs): Take care of char defaulting to

unsigned when using hextobyte.

2007-10-25 David Shaw <[email protected]> (wk)

From 1.4 (July):

* gpgkeys_ldap.c (main): Fix bug in setting up whether to verify

peer SSL cert. This used to work with older OpenLDAP, but is now

more strictly handled.

* gpgkeys_ldap.c (search_key, main): Fix bug where searching for

foo bar (no quotes) on the command line resulted in searching for

"foo\2Abar" due to LDAP quoting. The proper search is "foo*bar".

2007-06-11 Werner Koch <[email protected]>

* gpgkeys_hkp.c (send_key): Rename eof to r_eof as some Windows

header defines such a symbol.

(main): Likewise.

2007-06-06 Werner Koch <[email protected]>

* gpgkeys_ldap.c (send_key, send_key_keyserver): Rename eof to

r_eof as some Windows file has such a symbol.

(main): Likewise.

2007-05-07 Werner Koch <[email protected]>

* Makefile.am (gpg2keys_ldap_LDADD): Add GPG_ERROR_LIBS.

2007-05-04 Werner Koch <[email protected]>

* gpgkeys_test.in: Rename to ..

* gpg2keys_test.in: .. this.

* gpgkeys_mailto.in: Rename to ..

* gpg2keys_mailto.in: .. this.

* Makefile.am: Likewise.

2007-03-13 David Shaw <[email protected]>

From STABLE-BRANCH-1-4

* gpgkeys_curl.c (main): Use curl_version_info to verify that the

protocol we're about to use is actually available.

* curl-shim.h, curl-shim.c (curl_free): Make into a macro.

(curl_version_info): New. Only advertises "http" for our shim, of

course.

2007-03-09 David Shaw <[email protected]>

From STABLE-BRANCH-1-4

* gpgkeys_ldap.c (send_key): Missing a free().

* curl-shim.c (curl_easy_perform): Some debugging items that may

be handy.

2006-12-03 David Shaw <[email protected]>

* gpgkeys_hkp.c (search_key): HKP keyservers like the 0x prefix to be

present when searching by key ID.

2006-11-22 Werner Koch <[email protected]>

* Makefile.am (gpg2keys_ldap_LDADD): Add jnlib. This is needed

for some replacement functions.

2006-11-21 Werner Koch <[email protected]>

* curl-shim.c (curl_easy_perform): Made BUFLEN and MAXLNE a size_t.

2006-11-05 David Shaw <[email protected]>

* gpgkeys_hkp.c (curl_mrindex_writer): Revert previous change.

Key-not-found still has an HTML response.

2006-10-24 Marcus Brinkmann <[email protected]>

* Makefile.am (gpg2keys_ldap_CPPFLAGS): Rename second instance to ...

(gpg2keys_finger_CPPFLAGS): ... this.

2006-10-20 Werner Koch <[email protected]>

* Makefile.am: Reorder macros for better readability.

(gpg2keys_finger_LDADD): Add GPG_ERROR_LIBS.

2006-10-19 David Shaw <[email protected]>

* gpgkeys_hkp.c (curl_mrindex_writer): Print a warning if we see

HTML coming back from a MR hkp query.

2006-10-17 Werner Koch <[email protected]>

* Makefile.am: Removed W32LIBS as they are included in NETLIBS.

Removed PTH_LIBS.

2006-09-26 Werner Koch <[email protected]>

* curl-shim.c: Adjusted for changes in http.c.

(curl_easy_perform): Changed LINE from unsigned char* to char*.

* Makefile.am (gpg2keys_curl_LDADD, gpg2keys_hkp_LDADD)

[FAKE_CURL]: Need to link against common_libs and pth.

* curl-shim.h, curl-shim.c: Removed license exception as not

needed here.

2006-09-22 Werner Koch <[email protected]>

* gpgkeys_curl.c, gpgkeys_hkp.c, gpgkeys_ldap.c, curl-shim.c:

* curl-shim.h, ksutil.c, ksutil.h: Add special license exception

for OpenSSL. This helps to avoid license conflicts if OpenLDAP or

cURL is linked against OpenSSL and we would thus indirectly link

to OpenSSL. This is considered a bug fix and forgives all

possible violations pertaining to this issue that may have occurred

in the past.

* no-libgcrypt.c: Changed license to a simple all permissive one.

* Makefile.am (gpg2keys_ldap_LDADD): For license reasons do not

link against common_libs.

(gpg2keys_curl_LDADD, gpg2keys_hkp_LDADD): Ditto.

* ksutil.c (ks_hextobyte, ks_toupper, ks_strcasecmp): New.

Identical to the ascii_foo versions from jnlib.

* gpgkeys_ldap.c: Include assert.h.

(main): Replace BUG by assert.

(build_attrs): Use ks_hextobyte and ks_strcasecmp.

* gpgkeys_finger.c (get_key): Resolved signed/unsigned char

mismatch.

2006-09-19 Werner Koch <[email protected]>

* no-libgcrypt.c: New. Taken from ../tools.

* Makefile.am: Add no-libgcrypt to all sources.

2006-09-06 Marcus Brinkmann <[email protected]>

* Makefile.am (AM_CFLAGS): Add $(GPG_ERROR_CFLAGS).

2006-08-16 Werner Koch <[email protected]>

* Makefile.am: Renamed all binaries to gpg2keys_*.

(gpg2keys_ldap_CPPFLAGS): Add AM_CPPFLAGS.

2006-08-15 Werner Koch <[email protected]>

* Makefile.am: Adjusted to the gnupg2 framework.

2006-08-14 Werner Koch <[email protected]>

* curl-shim.c, curl-shim.h: Changed to make use of the new http.c

API.

* curl-shim.c (curl_easy_perform): Add missing http_close to the

POST case.

2006-07-24 David Shaw <[email protected]> (wk)

* curl-shim.c (curl_easy_perform): Minor cleanup of proxy code.

* gpgkeys_hkp.c (send_key)

* gpgkeys_ldap.c (send_key, send_key_keyserver): Fix string

matching problem when the ascii armored form of the key happens to

match "KEY" at the beginning of the line.

2006-04-26 David Shaw <[email protected]>

* gpgkeys_http.c, gpgkeys_oldhkp.c: Removed.

* Makefile.am: Don't build gpgkeys_http or gpgkeys_(old)hkp any

longer as this is done via curl or fake-curl.

* ksutil.h, ksutil.c, gpgkeys_hkp.c, gpgkeys_curl.c: Minor

#include tweaks as FAKE_CURL is no longer meaningful.

2006-04-10 David Shaw <[email protected]>

* gpgkeys_ldap.c (ldap_quote, get_name, search_key): LDAP-quote

directly into place rather than mallocing temporary buffers.

* gpgkeys_ldap.c (get_name): Build strings with strcat rather than

using sprintf which is harder to read and modify.

* ksutil.h, ksutil.c (classify_ks_search): Add

KS_SEARCH_KEYID_SHORT and KS_SEARCH_KEYID_LONG to search for a key

ID.

* gpgkeys_ldap.c (search_key): Use it here to flip from pgpUserID

searches to pgpKeyID or pgpCertID.

2006-03-27 David Shaw <[email protected]>

* gpgkeys_ldap.c: #define LDAP_DEPRECATED for newer OpenLDAPs so

they use the regular old API that is compatible with other LDAP

libraries.

2006-03-03 David Shaw <[email protected]>

* gpgkeys_ldap.c (main): Fix build problem with non-OpenLDAP LDAP

libraries that have TLS.

2006-02-23 David Shaw <[email protected]>

* ksutil.c (init_ks_options): Default include-revoked and

include-subkeys to on, as gpg isn't doing this any longer.

2006-02-22 David Shaw <[email protected]>

* gpgkeys_hkp.c (get_name): A GETNAME query turns exact=on to cut

down on odd matches.

2006-02-21 David Shaw <[email protected]>

* gpgkeys_ldap.c (make_one_attr, build_attrs, send_key): Don't

allow duplicate attributes as OpenLDAP is now enforcing this.

* gpgkeys_ldap.c (main): Add binddn and bindpw so users can pass

credentials to a remote LDAP server.

* curl-shim.h, curl-shim.c (curl_easy_init, curl_easy_setopt,

curl_easy_perform): Mingw has 'stderr' as a macro?

* curl-shim.h, curl-shim.c (curl_easy_init, curl_easy_setopt,

curl_easy_perform): Add CURLOPT_VERBOSE and CURLOPT_STDERR for

easier debugging.

2006-01-16 David Shaw <[email protected]>

* gpgkeys_hkp.c (send_key): Do not escape the '=' in the HTTP POST

when uploading a key.

2005-12-23 David Shaw <[email protected]>

* ksutil.h, ksutil.c (parse_ks_options): New keyserver command

"getname".

* gpgkeys_hkp.c (main, get_name), gpgkeys_ldap.c (main, get_name):

Use it here to do direct name (rather than key ID) fetches.

2005-12-19 David Shaw <[email protected]>

* ksutil.h, ksutil.c (curl_armor_writer, curl_writer,

curl_writer_finalize): New functionality to handle binary format

keys by armoring them for input to GPG.

* gpgkeys_curl.c (get_key), gpgkeys_hkp.c (get_key): Call it here.

2005-12-07 David Shaw <[email protected]>

* gpgkeys_finger.c (get_key), gpgkeys_curl.c (get_key): Better

language for the key-not-found error.

* ksutil.c (curl_err_to_gpg_err): Add CURLE_OK and

CURLE_COULDNT_CONNECT.

* gpgkeys_curl.c (get_key): Give key-not-found error if no data is

found (or file itself is not found) during a fetch.

2005-12-06 David Shaw <[email protected]>

* curl-shim.c (curl_easy_perform): Fix build warning (code before

declaration).

2005-11-02 David Shaw <[email protected]>

* gpgkeys_hkp.c (search_key): Fix warning with typecast (though

curl should really have defined that char * as const).

2005-08-25 David Shaw <[email protected]>

* ksutil.h, ksutil.c (parse_ks_options): Remove exact-name and

exact-email.

(classify_ks_search): Mimic the gpg search modes instead with *,

=, <, and @.

* gpgkeys_ldap.c (search_key), gpgkeys_hkp.c (search_key): Call

them here. Suggested by Jason Harris.

2005-08-18 David Shaw <[email protected]>

* ksutil.h, ksutil.c (parse_ks_options): New keyserver-option

exact-name. The last of exact-name and exact-email overrides the

earlier.

* gpgkeys_ldap.c (search_key), gpgkeys_hkp.c (search_key): Use it

here to do a name-only search.

* gpgkeys_ldap.c (ldap_quote): \-quote a string for LDAP.

* gpgkeys_ldap.c (search_key): Use it here to escape reserved

characters in searches.

2005-08-17 David Shaw <[email protected]>

* ksutil.h, ksutil.c (parse_ks_options): New keyserver-option

exact-email.

* gpgkeys_ldap.c (search_key), gpgkeys_hkp.c (search_key): Use it

here to do an email-only search.

2005-08-08 David Shaw <[email protected]>

* Makefile.am: Include LDAP_CPPFLAGS when building LDAP.

2005-08-03 David Shaw <[email protected]>

* gpgkeys_hkp.c (main), gpgkeys_curl.c (main), curl-shim.h: Show

version of curl (or curl-shim) when debug is set.

2005-07-20 David Shaw <[email protected]>

* gpgkeys_curl.c (get_key, main): Don't try and be smart about

what protocols we handle. Directly pass them to curl or fake-curl

and see if an error comes back.

* curl-shim.h, curl-shim.c (handle_error), ksutil.c

(curl_err_to_gpg_err): Add support for CURLE_UNSUPPORTED_PROTOCOL

in fake curl.

* Makefile.am: Don't need -DFAKE_CURL any longer since it's in

config.h.

2005-06-23 David Shaw <[email protected]>

* gpgkeys_mailto.in, gpgkeys_test.in: Use @VERSION@ so version

string stays up to date.

* gpgkeys_http.c: Don't need to define HTTP_PROXY_ENV here since

it's in ksutil.h.

* gpgkeys_curl.c (get_key, main), gpgkeys_hkp.c (main): Pass AUTH

values to curl or curl-shim.

* curl-shim.c (curl_easy_perform), gpgkeys_curl.c (main),

gpgkeys_hkp.c (main): Use curl-style proxy semantics.

* curl-shim.h, curl-shim.c (curl_easy_setopt, curl_easy_perform):

Add CURLOPT_USERPWD option for HTTP auth.

* gpgkeys_http.c (get_key), gpgkeys_oldhkp.c (send_key, get_key,

search_key): No longer need to pass a proxyauth.

* gpgkeys_http.c (get_key): Pass auth outside of the URL.

2005-06-21 David Shaw <[email protected]>

* gpgkeys_http.c (get_key), gpgkeys_oldhkp.c (send_key, get_key,

search_key): Fix http_open/http_open_document calls to pass NULL

for auth and proxyauth since these programs pass them in the URL.

2005-06-20 David Shaw <[email protected]>

* gpgkeys_hkp.c (append_path, send_key, get_key, search_key,

main), gpgkeys_oldhkp.c (main): Properly handle double slashes in

paths.

2005-06-05 David Shaw <[email protected]>

* ksutil.c (init_ks_options, parse_ks_options): Provide a default

"/" path unless overridden by the config. Allow config to specify

items multiple times and take the last specified item.

2005-06-04 David Shaw <[email protected]>

* gpgkeys_hkp.c, gpgkeys_oldhkp.c: Add support for HKP servers

that aren't at the root path. Suggested by Jack Bates.

2005-06-01 David Shaw <[email protected]>

* ksutil.c [HAVE_DOSISH_SYSTEM]: Fix warnings on mingw32. Noted

by Joe Vender.

2005-05-04 David Shaw <[email protected]>

* ksutil.h, ksutil.c: #ifdef so we can build without libcurl or

fake-curl.

2005-05-03 David Shaw <[email protected]>

* gpgkeys_http.c: Need GET defined.

2005-05-01 David Shaw <[email protected]>

* gpgkeys_hkp.c, gpgkeys_oldhkp.c, ksutil.h: Some minor cleanup

and comments as to the size of MAX_LINE and MAX_URL.

2005-04-16 David Shaw <[email protected]>

* gpgkeys_hkp.c: New hkp handler that uses curl or curl-shim.

* Makefile.am: Build new gpgkeys_hkp.

* curl-shim.c (curl_easy_perform): Cleanup.

* ksutil.h, ksutil.c (curl_writer), gpgkeys_curl.c (get_key): Pass

a context to curl_writer so we can support multiple fetches in a

single session.

* curl-shim.h, curl-shim.c (handle_error, curl_easy_setopt,

curl_easy_perform): Add POST functionality to the curl shim.

* curl-shim.h, curl-shim.c (curl_escape, curl_free): Emulate

curl_escape and curl_free.

* gpgkeys_curl.c (main): If the http-proxy option is given without

any arguments, try to get the proxy from the environment.

* ksutil.h, ksutil.c (curl_err_to_gpg_err, curl_writer): Copy from

gpgkeys_curl.c.

* gpgkeys_oldhkp.c: Copy from gpgkeys_hkp.c.

2005-03-22 David Shaw <[email protected]>

* gpgkeys_ldap.c, ksutil.h, ksutil.c (print_nocr): Moved from

gpgkeys_ldap.c. Print a string, but strip out any CRs.

* gpgkeys_finger.c (get_key), gpgkeys_hkp.c (get_key),

gpgkeys_http.c (get_key): Use it here when outputting key material

to canonicalize line endings.

2005-03-19 David Shaw <[email protected]>

* gpgkeys_ldap.c (main): Fix three wrong calls to fail_all().

Noted by Stefan Bellon.

2005-03-17 David Shaw <[email protected]>

* ksutil.c (parse_ks_options): Handle verbose=nnn.

* Makefile.am: Calculate GNUPG_LIBEXECDIR directly. Do not

redefine $libexecdir.

* gpgkeys_curl.c, gpgkeys_finger.c, gpgkeys_ldap.c: Start using

parse_ks_options and remove a lot of common code.

* ksutil.h, ksutil.c (parse_ks_options): Parse OPAQUE, and default

debug with no arguments to 1.
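The 2005-03-17 entries describe options that may appear either bare or with a numeric value, such as `verbose=nnn` or a plain `debug` that defaults to 1. A minimal sketch of that parsing pattern is below, assuming a hypothetical `parse_level_option` helper. It is not the actual `parse_ks_options` code.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical sketch: parse an option of the form "name" or "name=nnn".
   A bare name yields dflt (e.g. 1 for "debug"); "name=nnn" yields nnn.
   Returns -1 if opt is not the option called name. */
static long parse_level_option(const char *opt, const char *name, long dflt)
{
  size_t len = strlen(name);

  if (strncmp(opt, name, len) != 0)
    return -1;
  if (opt[len] == '\0')
    return dflt;                             /* bare "debug" -> default */
  if (opt[len] == '=')
    return strtol(opt + len + 1, NULL, 10);  /* "verbose=3" -> 3 */
  return -1;                 /* e.g. "debugging" is a different option */
}
```

The same shape fits other options in this log that take an optional number, such as `timeout` or `follow-redirects`.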

2005-03-16 David Shaw <[email protected]>

* gpgkeys_ldap.c: Include lber.h if configure determines we need

it.

* ksutil.h, ksutil.c (ks_action_to_string): New.

(free_ks_options): Only free if options exist.

* ksutil.h, ksutil.c (init_ks_options, free_ks_options,

parse_ks_options): Pull a lot of duplicated code into a single

options parser for all keyserver helpers.

2005-02-11 David Shaw <[email protected]>

* curl-shim.c (curl_easy_perform): Fix compile warning.

* curl-shim.h, gpgkeys_curl.c (main), gpgkeys_ldap.c (main): Add

ca-cert-file option, to pass in the SSL cert.

* curl-shim.h, curl-shim.c: New. This is code to fake the curl

API in terms of the current HTTP iobuf API.

* gpgkeys_curl.c [FAKE_CURL], Makefile.am: If FAKE_CURL is set,

link with the iobuf code rather than libcurl.

2005-02-05 David Shaw <[email protected]>

* gpgkeys_finger.c (main), gpgkeys_hkp.c (main): Fix --version

output.

* gpgkeys_curl.c (main): Make sure the curl handle is cleaned up

on failure.

2005-02-01 David Shaw <[email protected]>

* gpgkeys_hkp.c (get_key), gpgkeys_http.c (get_key): Fix missing

http_close() calls. Noted by Phil Pennock.

* ksutil.h: Up the default timeout to two minutes.

2005-01-24 David Shaw <[email protected]>

* gpgkeys_ldap.c (print_nocr): New.

(get_key): Call it here to canonicalize line endings.

* gpgkeys_curl.c (writer): Discard everything outside the BEGIN

and END lines when retrieving keys. Canonicalize line endings.

(main): Accept FTPS.
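The writer change keeps only the text between the BEGIN and END armor lines and canonicalizes line endings. A later entry passes a context to the writer so several fetches can share one session. The sketch below combines both ideas, assuming a hypothetical `struct key_filter` and `key_filter_line`. It also assumes input that has already been split into lines, whereas a real curl write callback receives arbitrary chunks and would need to buffer partial lines.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical per-fetch state. Keeping it in a struct rather than in
   static variables lets one process run several fetches in a row. */
struct key_filter
{
  int in_key;   /* nonzero between the BEGIN and END armor lines */
  FILE *out;
};

/* Feed one line (terminator already removed, apart from a possible
   trailing CR). Only the armored key block is written, with LF endings. */
static void key_filter_line(struct key_filter *ctx, const char *line)
{
  static const char begin[] = "-----BEGIN PGP PUBLIC KEY BLOCK";
  static const char end[] = "-----END PGP PUBLIC KEY BLOCK";
  size_t len = strlen(line);

  /* Canonicalize: drop a trailing CR left over from CRLF input. */
  if (len > 0 && line[len - 1] == '\r')
    len--;

  if (!ctx->in_key && strncmp(line, begin, sizeof begin - 1) == 0)
    ctx->in_key = 1;

  if (ctx->in_key)
    {
      fwrite(line, 1, len, ctx->out);
      fputc('\n', ctx->out);
      if (strncmp(line, end, sizeof end - 1) == 0)
        ctx->in_key = 0;   /* anything after the END line is discarded */
    }
}
```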

2005-01-21 David Shaw <[email protected]>

* gpgkeys_ldap.c (main): Add "check-cert" option to disable SSL

certificate checking (which is on by default).

* gpgkeys_curl.c (main): Add "debug" option to match the LDAP

helper. Add "check-cert" option to disable SSL certificate

checking (which is on by default).

2005-01-18 David Shaw <[email protected]>

* gpgkeys_curl.c: Fix typo.

2005-01-18 Werner Koch <[email protected]>

* gpgkeys_curl.c: s/MAX_PATH/URLMAX_PATH/g to avoid a clash with

the W32 defined macro. Removed unneeded initialization of static

variables.

* gpgkeys_http.c: Ditto.

* ksutil.h: s/MAX_PATH/URLMAX_PATH/.

2005-01-17 David Shaw <[email protected]>

* gpgkeys_curl.c (main): Only allow specified protocols to use the

curl handler.

* Makefile.am: Use LIBCURL_CPPFLAGS instead of LIBCURL_INCLUDES.

2005-01-13 David Shaw <[email protected]>

* ksutil.h, gpgkeys_curl.c, gpgkeys_hkp.c, gpgkeys_ldap.c,

gpgkeys_finger.c, gpgkeys_http.c: Part 2 of the cleanup. Move all

the various defines to ksutil.h.

* gpgkeys_finger.c, gpgkeys_hkp.c, gpgkeys_http.c, gpgkeys_ldap.c:

Part 1 of a minor cleanup to use #defines instead of hard-coded

sizes.

* gpgkeys_finger.c (connect_server): Use INADDR_NONE instead of

SOCKET_ERROR. Noted by Timo.
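The `connect_server` fix swaps `SOCKET_ERROR` for `INADDR_NONE`, because `inet_addr()` reports a malformed address by returning `INADDR_NONE`. `SOCKET_ERROR` is the Winsock failure code for socket calls. A minimal POSIX sketch of the correct check follows, with the hypothetical name `is_ipv4_literal`. On Windows the header would be `winsock2.h` instead.

```c
#include <arpa/inet.h>
#include <netinet/in.h>

/* Returns 1 if host parses as a dotted-quad IPv4 address, else 0.
   inet_addr() signals failure with INADDR_NONE. Caveat: the valid
   address 255.255.255.255 also equals INADDR_NONE. */
static int is_ipv4_literal(const char *host)
{
  return inet_addr(host) != INADDR_NONE;
}
```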

2005-01-09 David Shaw <[email protected]>

* gpgkeys_curl.c (get_key): Newer versions of libcurl don't define

TRUE.

2004-12-24 David Shaw <[email protected]>

* gpgkeys_curl.c (main): Use new defines for opting out of certain

transfer protocols. Allow setting HTTP proxy via "http-proxy=foo"

option (there is natural support in libcurl for the http_proxy

environment variable).

* Makefile.am: Remove the conditional since this is all handled in

autoconf now.

2004-12-22 David Shaw <[email protected]>

* gpgkeys_curl.c (main): New "follow-redirects" option. Takes an

optional numeric value for the maximum number of redirects to

allow. Defaults to 5.

* gpgkeys_curl.c (main), gpgkeys_finger.c (main), gpgkeys_hkp.c

(main), gpgkeys_http.c (main), gpgkeys_ldap.c (main): Make sure

that a "timeout" option passed with no arguments is properly

handled.

* gpgkeys_curl.c (get_key, writer): New function to wrap around

fwrite to avoid DLL access problem on win32.

* gpgkeys_http.c (main, get_key): Properly pass authentication

info through to the http library.

* Makefile.am: Build gpgkeys_http or gpgkeys_curl as needed.

* gpgkeys_curl.c (main, get_key): Minor tweaks to work with either

FTP or HTTP.

* gpgkeys_ftp.c: Renamed to gpgkeys_curl.c.

* gpgkeys_ftp.c (main, get_key): Use auth data as passed by gpg.

Use CURLOPT_FILE instead of CURLOPT_WRITEDATA (same option, but

backwards compatible).

2004-12-21 David Shaw <[email protected]>

* gpgkeys_ftp.c: New.

* Makefile.am: Build it if requested.

2004-12-14 Werner Koch <[email protected]>

* Makefile.am (install-exec-hook, uninstall-hook): Removed. For

Windows reasons we can't use the symlink trick.

2004-12-03 David Shaw <[email protected]>

* Makefile.am: The harmless "ignored error" on gpgkeys_ldap

install on top of an existing install is bound to confuse people.

Use ln -s -f to force the overwrite.

2004-10-28 David Shaw <[email protected]>

* gpgkeys_finger.c [_WIN32] (connect_server): Fix typo.

2004-10-28 Werner Koch <[email protected]>

* Makefile.am (other_libs): New. Also include LIBICONV. Noted by

Tim Mooney.

2004-10-28 Werner Koch <[email protected]>

* Makefile.am (other_libs):

2004-10-18 David Shaw <[email protected]>

* gpgkeys_hkp.c (send_key, get_key, search_key): Use "hkp" instead

of "x-hkp" so it can be used as a SRV tag.

2004-10-16 David Shaw <[email protected]>

* gpgkeys_finger.c [_WIN32] (connect_server): Fix typo.

2004-10-15 Werner Koch <[email protected]>

* gpgkeys_ldap.c (main, show_help): Kludge to implement standard

GNU options. Factored help printing out.

* gpgkeys_finger.c (main, show_help): Ditto.

* gpgkeys_hkp.c (main, show_help): Ditto.

* gpgkeys_http.c (main, show_help): Ditto.

* gpgkeys_test.in, gpgkeys_mailto.in: Implement --version and --help.

* Makefile.am: Add ksutil.h.

2004-10-14 David Shaw <[email protected]>

* gpgkeys_finger.c (main): We do not support relay fingering

(i.e. "finger://relayhost/[email protected]"), but finger URLs are

occasionally miswritten that way. Give an error in this case.

2004-10-14 Werner Koch <[email protected]>