# Glossary

This glossary defines general machine learning and 🤗 Transformers terms to help you better understand the documentation.

## A

### attention mask

The attention mask is an optional argument used when batching sequences together.

<Youtube id="M6adb1j2jPI"/>

This argument tells the model which tokens it should attend to and which it should not.

For example, consider these two sequences:

```python

>>> from transformers import BertTokenizer

>>> tokenizer = BertTokenizer.from_pretrained("google-bert/bert-base-cased")

>>> sequence_a = "This is a short sequence."

>>> sequence_b = "This is a rather long sequence. It is at least longer than sequence A."

>>> encoded_sequence_a = tokenizer(sequence_a)["input_ids"]

>>> encoded_sequence_b = tokenizer(sequence_b)["input_ids"]

```

The encoded versions have different lengths:

```python

>>> len(encoded_sequence_a), len(encoded_sequence_b)

(8, 19)

```

Therefore, we can't put them together in the same tensor as-is. The first sequence needs to be padded up to the length of the second one, or the second one needs to be truncated down to the length of the first one.

In the first case, the list of IDs is extended with padding indices. We can pass a list to the tokenizer and ask it to pad like this:

```python

>>> padded_sequences = tokenizer([sequence_a, sequence_b], padding=True)

```

We can see that 0s have been added to the right of the first sentence to make it the same length as the second one:

```python

>>> padded_sequences["input_ids"]

[[101, 1188, 1110, 170, 1603, 4954, 119, 102, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0], [101, 1188, 1110, 170, 1897, 1263, 4954, 119, 1135, 1110, 1120, 1655, 2039, 1190, 1103, 4954, 138, 119, 102]]

```

This can then be converted into a tensor in PyTorch or TensorFlow. The attention mask is a binary tensor indicating the position of the padded indices so that the model does not attend to them. For the [`BertTokenizer`], `1` indicates a value that should be attended to, while `0` indicates a padded value. The attention mask is in the dictionary returned by the tokenizer under the key "attention_mask":

```python

>>> padded_sequences["attention_mask"]

[[1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0], [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]]

```
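To make the padding logic concrete, here is a minimal pure-Python sketch of what padding a batch and building the matching attention mask amounts to. The helper `pad_batch` and its `pad_id=0` default are illustrative assumptions, not part of the 🤗 Transformers API; in practice the tokenizer does this for you when called with `padding=True`.

```python
def pad_batch(batch, pad_id=0):
    """Right-pad every sequence to the longest one and build the attention mask.

    `pad_batch` and `pad_id` are hypothetical names used for illustration only.
    """
    max_len = max(len(seq) for seq in batch)
    input_ids, attention_mask = [], []
    for seq in batch:
        n_pad = max_len - len(seq)
        input_ids.append(list(seq) + [pad_id] * n_pad)
        # 1 marks a real token, 0 marks a padding position.
        attention_mask.append([1] * len(seq) + [0] * n_pad)
    return {"input_ids": input_ids, "attention_mask": attention_mask}


padded = pad_batch([[101, 1188, 102], [101, 1188, 1110, 170, 102]])
print(padded["attention_mask"])
```

The shorter sequence receives two padding IDs, and its mask ends in two zeros so those positions can be ignored.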

### autoencoding models

See [encoder models](#encoder-models) and [masked language modeling](#masked-language-modeling-mlm).

### autoregressive models

See [causal language modeling](#causal-language-modeling) and [decoder models](#decoder-models).

## B

### backbone

The backbone is the network (embeddings and layers) that outputs the raw hidden states or features. It is usually connected to a [head](#head), which accepts the features as its input to make a prediction. For example, [`ViTModel`] is a backbone without a specific head on top. Other models can also use [`ViTModel`] as a backbone, such as [DPT](model_doc/dpt).

## C

### causal language modeling

A pretraining task where the model reads the texts in order and has to predict the next word. It is usually done by reading the whole sentence while using a mask inside the model to hide the future tokens at a given timestep.
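As a rough illustration of the mask described above, the following sketch builds a lower-triangular matrix in which position `i` may only look at positions `0..i`. The function name `causal_mask` is an assumption made for this example; real models build an equivalent tensor internally.

```python
def causal_mask(seq_len):
    """Return a seq_len x seq_len mask where row i may attend to columns 0..i."""
    return [[1 if j <= i else 0 for j in range(seq_len)] for i in range(seq_len)]


mask = causal_mask(4)
for row in mask:
    print(row)
```

Each row corresponds to one timestep: the zeros to the right of the diagonal are the future tokens hidden from the model.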

### channel

Color images are made up of some combination of values in three channels: red, green, and blue (RGB), while grayscale images have only one channel. In 🤗 Transformers, the channel can be the first or last dimension of an image's tensor: [`n_channels`, `height`, `width`] or [`height`, `width`, `n_channels`].

### connectionist temporal classification (CTC)

An algorithm that allows a model to learn without knowing exactly how the input and output are aligned; CTC calculates the distribution of all possible outputs for a given input and chooses the most likely output from it. CTC is commonly used in speech recognition tasks because speech doesn't always align cleanly with the transcript, for reasons such as a speaker's varying speech rate.

### convolution

A type of layer in a neural network where the input matrix is multiplied element-wise by a smaller matrix (the kernel or filter) and the values are summed up in a new matrix. This is known as a convolutional operation, which is repeated over the entire input matrix. Each operation is applied to a different segment of the input matrix. Convolutional neural networks (CNNs) are commonly used in computer vision.
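The operation described above can be sketched in plain Python. This is a minimal illustration without padding, assuming nested lists as matrices; the name `conv2d` is chosen for this example only, and deep learning frameworks implement the same idea far more efficiently.

```python
def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image`, multiplying element-wise and summing each window."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = (len(image) - k_h) // stride + 1
    out_w = (len(image[0]) - k_w) // stride + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(k_h):
                for dj in range(k_w):
                    total += image[i * stride + di][j * stride + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, 1]]
result = conv2d(image, kernel)
print(result)
```

With this 2x2 kernel, each output value is the sum of the top-left and bottom-right entries of the window it covers.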

## D

### DataParallel (DP)

A parallelism technique for training on multiple GPUs where the same setup is replicated multiple times, with each instance receiving a distinct slice of the data. The processing is done in parallel and all setups are synchronized at the end of each training step.

Learn more about how DataParallel works [here](perf_train_gpu_many#dataparallel-vs-distributeddataparallel).

### decoder input IDs

This input is specific to encoder-decoder models, and contains the input IDs that will be fed to the decoder.

These inputs should be used for sequence-to-sequence tasks, such as translation or summarization, and are usually built in a way specific to each model.

Most encoder-decoder models (BART, T5) create their `decoder_input_ids` on their own from the `labels`. In such models, passing the `labels` is the preferred way to handle training.

Please check each model's docs to see how they handle these input IDs for sequence-to-sequence training.

### decoder models

Also referred to as autoregressive models, decoder models involve a pretraining task (called causal language modeling) where the model reads the texts in order and has to predict the next word. It is usually done by reading the whole sentence with a mask to hide future tokens at a given timestep.

<Youtube id="d_ixlCubqQw"/>

### deep learning (DL)

Machine learning algorithms that use neural networks with several layers.

## E

### encoder models

Also known as autoencoding models, encoder models take an input (such as text or images) and transform it into a condensed numerical representation called an embedding. Encoder models are often pretrained using techniques like [masked language modeling](#masked-language-modeling-mlm), which masks parts of the input sequence and forces the model to create more meaningful representations.

<Youtube id="H39Z_720T5s"/>

## F

### feature extraction

The process of selecting and transforming raw data into a set of features that are more informative and useful for machine learning algorithms. Some examples of feature extraction include transforming raw text into word embeddings and extracting important features such as edges or shapes from image/video data.

### feed forward chunking

In each residual attention block in transformers, the self-attention layer is usually followed by two feed forward layers. The intermediate embedding size of the feed forward layers is often bigger than the hidden size of the model (e.g., for `google-bert/bert-base-uncased`).

For an input of size `[batch_size, sequence_length]`, the memory required to store the intermediate feed forward embeddings `[batch_size, sequence_length, config.intermediate_size]` can account for a large fraction of the memory use. The authors of [Reformer: The Efficient Transformer](https://arxiv.org/abs/2001.04451) noticed that since the computation is independent of the `sequence_length` dimension, it is mathematically equivalent to compute the output embeddings of both feed forward layers `[batch_size, config.hidden_size]_0, ..., [batch_size, config.hidden_size]_n` individually and concatenate them afterward to `[batch_size, sequence_length, config.hidden_size]` with `n = sequence_length`. This trades increased computation time for reduced memory use, but yields a mathematically equivalent result.

For models employing the function [`apply_chunking_to_forward`], the `chunk_size` defines the number of output embeddings that are computed in parallel and thus defines the trade-off between memory and time complexity. If `chunk_size` is set to `0`, no feed forward chunking is done.
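The equivalence claimed above can be checked with a small pure-Python sketch. Everything here (`feed_forward`, `chunked_feed_forward`, the toy weights) is an assumption made for illustration and is not the library's `apply_chunking_to_forward`; it only shows that processing the sequence in chunks gives the same output as processing it in one go, because each position is transformed independently.

```python
def feed_forward(vector, w1, w2):
    """Position-wise feed forward: hidden -> intermediate (ReLU) -> hidden.

    `w1` and `w2` are lists of weight columns (one list per output unit).
    """
    intermediate = [max(0.0, sum(v * w for v, w in zip(vector, col))) for col in w1]
    return [sum(h * w for h, w in zip(intermediate, col)) for col in w2]


def chunked_feed_forward(sequence, w1, w2, chunk_size):
    """Apply `feed_forward` along the sequence dimension, `chunk_size` positions at a time."""
    if chunk_size == 0:  # 0 means "no chunking": process the whole sequence at once
        return [feed_forward(vec, w1, w2) for vec in sequence]
    outputs = []
    for start in range(0, len(sequence), chunk_size):
        chunk = sequence[start:start + chunk_size]
        # Only this chunk's intermediate activations need to be alive at once.
        outputs.extend(feed_forward(vec, w1, w2) for vec in chunk)
    return outputs


w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]  # hidden_size=2 -> intermediate_size=3
w2 = [[1.0, 1.0, 1.0], [0.5, 0.0, -1.0]]    # intermediate_size=3 -> hidden_size=2
sequence = [[1.0, 2.0], [3.0, -1.0], [0.0, 0.5]]

full = chunked_feed_forward(sequence, w1, w2, chunk_size=0)
chunked = chunked_feed_forward(sequence, w1, w2, chunk_size=2)
print(full == chunked)
```

A smaller `chunk_size` keeps fewer intermediate activations in memory at the cost of more sequential steps.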

### finetuned models

Finetuning is a form of transfer learning that involves taking a pretrained model, freezing its weights, and replacing the output layer with a newly added [model head](#head). The model head is trained on your target dataset.

See the [Fine-tune a pretrained model](https://huggingface.co/docs/transformers/training) tutorial for more details, and learn how to fine-tune models with 🤗 Transformers.

## H

### head

The model head refers to the last layer of a neural network that accepts the raw hidden states and projects them onto a different dimension. There is a different model head for each task. For example:

* [`GPT2ForSequenceClassification`] is a sequence classification head - a linear layer - on top of the base [`GPT2Model`].

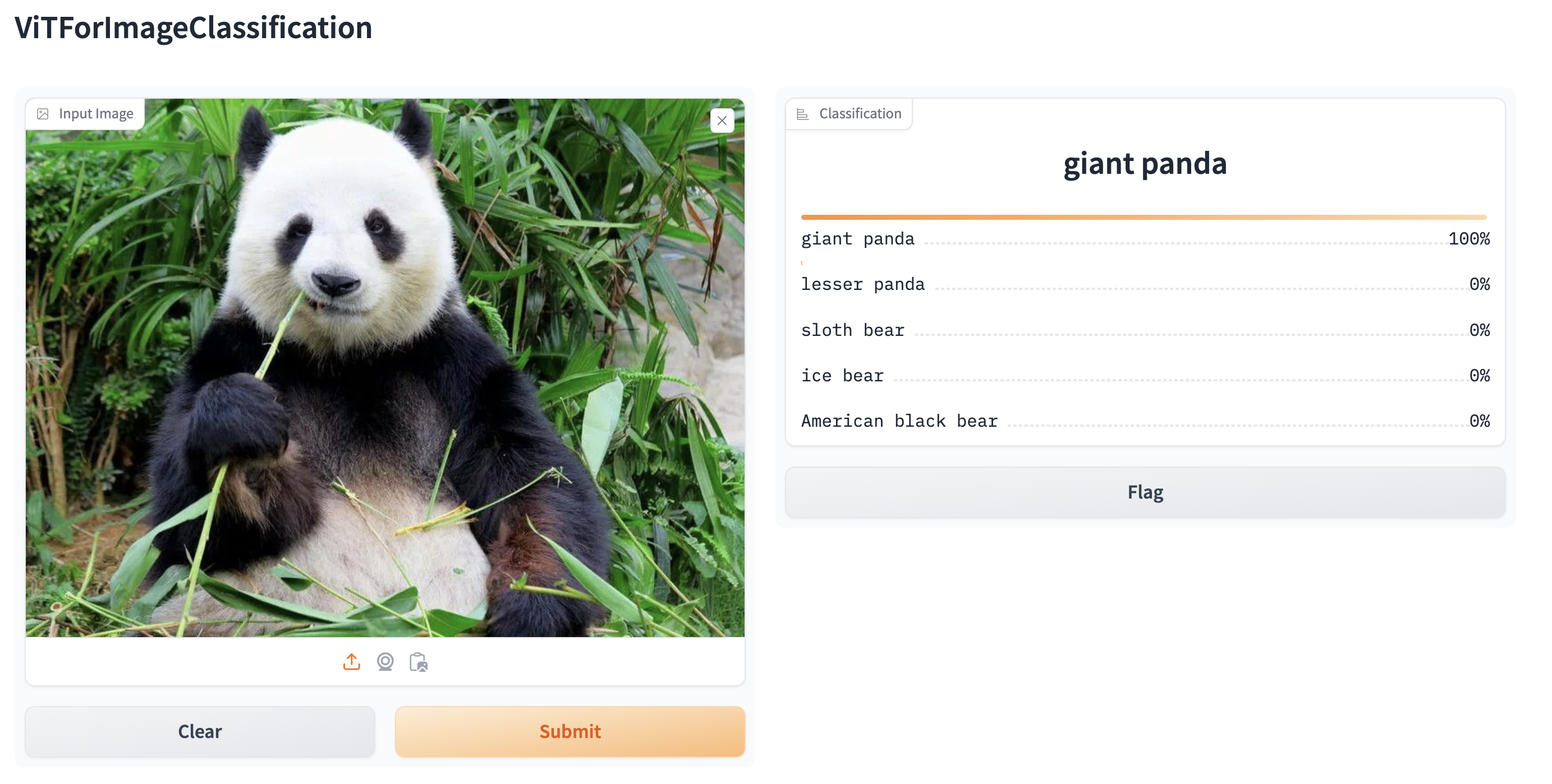

* [`ViTForImageClassification`] is an image classification head - a linear layer on top of the final hidden state of the `CLS` token - on top of the base [`ViTModel`].

* [`Wav2Vec2ForCTC`] is a language modeling head with [CTC](#connectionist-temporal-classification-ctc) on top of the base [`Wav2Vec2Model`].

## I

### image patch

Vision Transformer models split an image into smaller parts called patches. Each patch is embedded as a numerical representation, and the patches are then processed as a sequence. You can find the `patch_size` - or resolution - in the model's configuration.

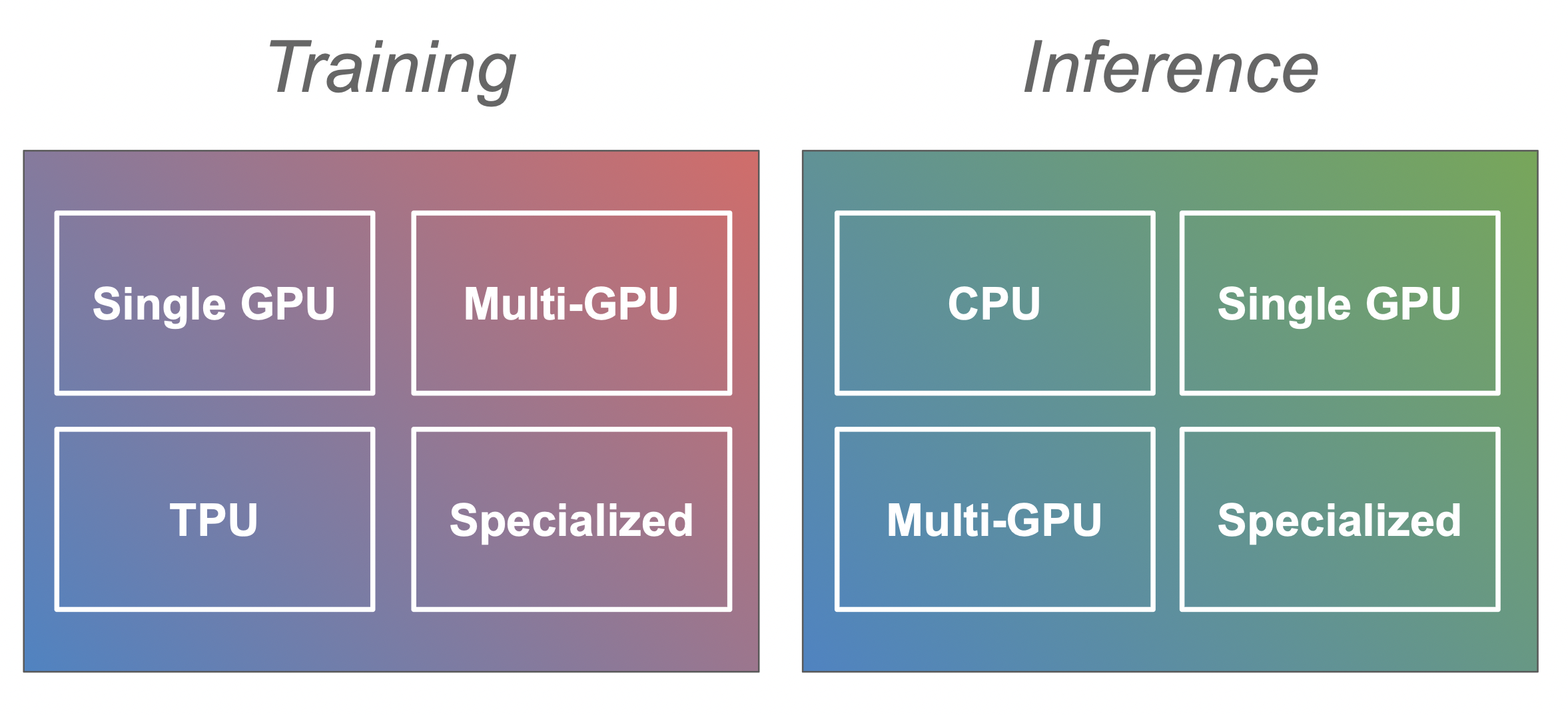

### inference

Inference is the process of evaluating a model on new data after training is complete. See the [Pipeline for inference](https://huggingface.co/docs/transformers/pipeline_tutorial) tutorial to learn how to perform inference with 🤗 Transformers.

### input IDs

The input IDs are often the only required parameters to be passed to the model as input. They are numbers representing each word or token in the sentence we want the model to process. In other words, they are the numerical form of the text that the model uses as input.

<Youtube id="VFp38yj8h3A"/>

Each tokenizer works differently but the underlying mechanism remains the same. Here's an example using the BERT tokenizer, which is a [WordPiece](https://arxiv.org/pdf/1609.08144.pdf) tokenizer:

```python

>>> from transformers import BertTokenizer

>>> tokenizer = BertTokenizer.from_pretrained("google-bert/bert-base-cased")

>>> sequence = "A Titan RTX has 24GB of VRAM"

```

The tokenizer takes care of splitting the sequence into tokens available in the tokenizer vocabulary.

```python

>>> tokenized_sequence = tokenizer.tokenize(sequence)

```

The tokens are either words or subwords. Here, for instance, "VRAM" wasn't in the model vocabulary, so it has been split into "V", "RA" and "M". To indicate that those tokens are not separate words but parts of the same word, a double-hash prefix (`##`) is added to "RA" and "M":

```python

>>> print(tokenized_sequence)

['A', 'Titan', 'R', '##T', '##X', 'has', '24', '##GB', 'of', 'V', '##RA', '##M']

```

These tokens can then be converted into IDs that the model understands. This can be done by feeding the sentence directly to the tokenizer, which leverages the Rust implementation of 🤗 Tokenizers for peak performance.

```python

>>> inputs = tokenizer(sequence)

```

The tokenizer returns a dictionary with all the arguments necessary for its corresponding model to work properly. The token indices are under the key `input_ids`:

```python

>>> encoded_sequence = inputs["input_ids"]

>>> print(encoded_sequence)

[101, 138, 18696, 155, 1942, 3190, 1144, 1572, 13745, 1104, 159, 9664, 2107, 102]

```

Note that the tokenizer automatically adds "special tokens" (if the associated model relies on them), which are special IDs the model sometimes uses.

If we decode the previous sequence of IDs,

```python

>>> decoded_sequence = tokenizer.decode(encoded_sequence)

```

we will see

```python

>>> print(decoded_sequence)

[CLS] A Titan RTX has 24GB of VRAM [SEP]

```

because this is the way a [`BertModel`] expects its inputs.

## L

### labels

The labels are an optional argument that can be passed so that the model computes the loss itself. The expected labels depend on the model head:

* For sequence classification models ([`BertForSequenceClassification`]), the model expects a tensor of dimension `(batch_size)`, with each value of the batch corresponding to the expected label of the entire sequence.

* For token classification models ([`BertForTokenClassification`]), the model expects a tensor of dimension `(batch_size, seq_length)`, with each value corresponding to the expected label of each individual token.

* For masked language modeling ([`BertForMaskedLM`]), the model expects a tensor of dimension `(batch_size, seq_length)`, with each value corresponding to the expected label of each individual token: the labels are the token ID for the masked token, and the other values are ignored (usually -100).

* For sequence-to-sequence tasks ([`BartForConditionalGeneration`], [`MBartForConditionalGeneration`]), the model expects a tensor of dimension `(batch_size, tgt_seq_length)`, with each value corresponding to the target sequence associated with each input sequence. During training, both BART and T5 will make the appropriate `decoder_input_ids` and decoder attention masks internally. They usually do not need to be supplied. This does not apply to models leveraging the Encoder-Decoder framework.

* For image classification models ([`ViTForImageClassification`]), the model expects a tensor of dimension `(batch_size)`, with each value of the batch corresponding to the expected label of each individual image.

* For semantic segmentation models ([`SegformerForSemanticSegmentation`]), the model expects a tensor of dimension `(batch_size, height, width)`, with each value of the batch corresponding to the expected label of each individual pixel.

* For object detection models ([`DetrForObjectDetection`]), the model expects a list of dictionaries with `class_labels` and `boxes` keys, where each value of the batch corresponds to the expected label and number of bounding boxes of each individual image.

* For automatic speech recognition models ([`Wav2Vec2ForCTC`]), the model expects a tensor of dimension `(batch_size, target_length)`, with each value corresponding to the expected label of each individual token.

<Tip>

Each model's labels may be different, so be sure to always check the documentation of each model for more information about their specific labels!

</Tip>

The base models ([`BertModel`]) do not accept labels, as these are the base transformer models, simply outputting features.
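As an illustration of the masked language modeling case, the sketch below builds labels in which only the masked positions carry a target and every other position holds `-100`. The helper `build_mlm_labels` and the value `103` used for the mask token ID are assumptions for this example; check your tokenizer for the actual mask token ID.

```python
IGNORE_INDEX = -100  # positions with this label are conventionally ignored by the loss


def build_mlm_labels(input_ids, masked_positions, mask_token_id):
    """Return (masked_input_ids, labels) for a single sequence.

    Hypothetical helper: real data collators also handle random selection of positions.
    """
    masked_input_ids = list(input_ids)
    labels = [IGNORE_INDEX] * len(input_ids)
    for pos in masked_positions:
        labels[pos] = input_ids[pos]           # the model must recover the original token
        masked_input_ids[pos] = mask_token_id  # the input shows the mask token instead
    return masked_input_ids, labels


masked_ids, labels = build_mlm_labels(
    [101, 1188, 1110, 170, 102], masked_positions=[2], mask_token_id=103
)
print(masked_ids)
print(labels)
```

Only position 2 contributes to the loss here; the remaining positions are skipped.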

### large language models (LLM)

A generic term that refers to transformer language models (GPT-3, BLOOM, OPT) that were trained on a large quantity of data. These models also tend to have a large number of learnable parameters (e.g. 175 billion for GPT-3).

## M

### masked language modeling (MLM)

A pretraining task where the model sees a corrupted version of the texts, usually produced by masking some tokens randomly, and has to predict the original text.

### multimodal

A task that combines texts with another kind of input (for instance, images).

## N

### Natural language generation (NLG)

All tasks related to generating text (for instance, [Write With Transformers](https://transformer.huggingface.co/), translation).

### Natural language processing (NLP)

A generic way to say "deal with texts".

### Natural language understanding (NLU)

All tasks related to understanding what is in a text (for instance, classifying the whole text or individual words).

## P

### pipeline

A pipeline in 🤗 Transformers is an abstraction referring to a series of steps that are executed in a specific order to preprocess and transform data and return a prediction from a model. Some example stages found in a pipeline might be data preprocessing, feature extraction, and normalization.

For more details, see [Pipelines for inference](https://huggingface.co/docs/transformers/pipeline_tutorial).

### PipelineParallel (PP)

A parallelism technique in which the model is split up vertically (layer-level) across multiple GPUs, so that only one or several layers of the model are placed on a single GPU. Each GPU processes different stages of the pipeline in parallel and works on a small chunk of the batch. Learn more about how PipelineParallel works [here](perf_train_gpu_many#from-naive-model-parallelism-to-pipeline-parallelism).

### pixel values

A tensor of the numerical representations of an image that is passed to a model. The pixel values have a shape of [`batch_size`, `num_channels`, `height`, `width`], and are generated from an image processor.

### pooling

An operation that reduces a matrix into a smaller matrix, either by taking the maximum or the average of the pooled dimension(s). Pooling layers are commonly found between convolutional layers to downsample the feature representation.
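A minimal max-pooling sketch in plain Python shows the reduction described above. The function name `max_pool2d` and the 2x2 window with stride 2 are assumptions chosen for this example.

```python
def max_pool2d(matrix, size=2, stride=2):
    """Keep the maximum of each `size` x `size` window, moving `stride` cells at a time."""
    out_h = (len(matrix) - size) // stride + 1
    out_w = (len(matrix[0]) - size) // stride + 1
    return [
        [
            max(
                matrix[i * stride + di][j * stride + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 0],
    [7, 2, 9, 8],
    [3, 4, 6, 5],
]
pooled = max_pool2d(feature_map)
print(pooled)
```

The 4x4 feature map is reduced to 2x2, with each output cell holding the largest value of its window.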

### position IDs

Contrary to RNNs, which have the position of each token embedded within them, transformers are unaware of the position of each token. Therefore, the position IDs (`position_ids`) are used by the model to identify each token's position in the list of tokens.

They are an optional parameter. If no `position_ids` are passed to the model, the IDs are automatically created as absolute positional embeddings.

Absolute positional embeddings are selected in the range `[0, config.max_position_embeddings - 1]`. Some models use other types of positional embeddings, such as sinusoidal position embeddings or relative position embeddings.
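For absolute positions, the automatically created IDs are conceptually just a running index per sequence. The sketch below is a simplified assumption (the helper `default_position_ids` and the default limit of 512 are hypothetical); individual models may offset or otherwise adjust these values.

```python
def default_position_ids(batch_size, seq_len, max_position_embeddings=512):
    """Return absolute position IDs 0..seq_len-1 for every sequence in the batch."""
    if seq_len > max_position_embeddings:
        raise ValueError("sequence is longer than the model's maximum number of positions")
    return [list(range(seq_len)) for _ in range(batch_size)]


position_ids = default_position_ids(batch_size=2, seq_len=4)
print(position_ids)
```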

### preprocessing

The task of preparing raw data into a format that can be easily consumed by machine learning models. For example, text is typically preprocessed by tokenization. To get a better idea of what preprocessing looks like for other input types, check out the [Preprocess](https://huggingface.co/docs/transformers/preprocessing) tutorial.

### pretrained model

A model that has been pretrained on some data (for instance, all of Wikipedia). Pretraining methods involve a self-supervised objective, which can be reading the text and trying to predict the next word (see [causal language modeling](#causal-language-modeling)) or masking some words and trying to predict them (see [masked language modeling](#masked-language-modeling-mlm)).

Speech and vision models have their own pretraining objectives. For example, Wav2Vec2 is a speech model pretrained on a contrastive task that requires the model to identify the "true" speech representation from a set of "false" speech representations. BEiT, on the other hand, is a vision model pretrained on a masked image modeling task that masks some of the image patches and requires the model to predict the masked patches (similar to the masked language modeling objective).

## R

### recurrent neural network (RNN)

A type of model that uses a loop over a layer to process texts.

### representation learning

A subfield of machine learning that focuses on learning meaningful representations of raw data. Some examples of representation learning techniques include word embeddings, autoencoders, and Generative Adversarial Networks (GANs).

## S

### sampling rate

A measurement, in hertz, of the number of samples (of the audio signal) taken per second. The sampling rate is a result of discretizing a continuous signal such as speech.

### self-attention

A mechanism by which each element of the input determines which other elements of the same input it should attend to.

### self-supervised learning

A category of machine learning techniques in which a model creates its own learning objective from unlabeled data. It differs from [unsupervised learning](#unsupervised-learning) and [supervised learning](#supervised-learning) in that the learning process is supervised, but not explicitly by the user.

One example of self-supervised learning is [masked language modeling](#masked-language-modeling-mlm), where a model is passed sentences with a proportion of their tokens removed and learns to predict the missing tokens.

### semi-supervised learning

A broad category of machine learning training techniques that leverage a small amount of labeled data together with a larger quantity of unlabeled data to improve the accuracy of a model, unlike [supervised learning](#supervised-learning) and [unsupervised learning](#unsupervised-learning).

An example of a semi-supervised learning approach is "self-training", in which a model is trained on labeled data and then used to make predictions on the unlabeled data. The portion of the unlabeled data that the model predicts with the most confidence is added to the labeled dataset and used to retrain the model.

### sequence-to-sequence (seq2seq)

Models that generate a new sequence from an input, like translation models or summarization models (such as [Bart](model_doc/bart) or [T5](model_doc/t5)).

### Sharded DDP

Another name for the foundational [ZeRO](#zero-redundancy-optimizer-zero) concept, as used by various other implementations of ZeRO.

### stride

In [convolution](#convolution) or [pooling](#pooling), the stride refers to the distance the kernel is moved over a matrix. A stride of 1 means the kernel is moved one pixel at a time.
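The effect of the stride on the output size follows the standard formula for convolution and pooling windows. The sketch below assumes a square input and kernel; the function name `output_size` is chosen for this example.

```python
def output_size(input_size, kernel_size, stride=1, padding=0):
    """Number of positions a kernel can occupy along one dimension."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


print(output_size(5, 3, stride=1))      # kernel moves one pixel at a time
print(output_size(5, 3, stride=2))      # kernel skips every other position
print(output_size(224, 16, stride=16))  # non-overlapping 16x16 windows on a 224-pixel side
```

A larger stride means fewer kernel positions and therefore a smaller output.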

### supervised learning

A form of model training that directly uses labeled data to correct and guide model performance. Data is fed into the model being trained, and its predictions are compared to the known labels. The model updates its weights based on how incorrect its predictions were, and the process is repeated to optimize model performance.

## T

### Tensor Parallelism (TP)

A parallelism technique for training on multiple GPUs in which each tensor is split up into multiple chunks, so instead of having the whole tensor reside on a single GPU, each shard of the tensor resides on its designated GPU. Shards are processed separately and in parallel on different GPUs, and the results are synced at the end of the processing step. This is sometimes called horizontal parallelism, as the splitting happens on the horizontal level.

Learn more about Tensor Parallelism [here](perf_train_gpu_many#tensor-parallelism).

### token

A part of a sentence, usually a word, but it can also be a subword (uncommon words are often split into subwords) or a punctuation symbol.

### token type IDs

Some models' purpose is to do classification on pairs of sentences or question answering.

<Youtube id="0u3ioSwev3s"/>

These require two different sequences to be joined in a single "input_ids" entry, which is usually done with the help of special tokens, such as the classifier (`[CLS]`) and separator (`[SEP]`) tokens. For example, the BERT model builds its two-sequence input as follows:

```python

>>> # [CLS] SEQUENCE_A [SEP] SEQUENCE_B [SEP]

```

We can use our tokenizer to automatically generate such a sentence by passing the two sequences to `tokenizer` as two arguments (and not a list, like before), like this:

```python

>>> from transformers import BertTokenizer

>>> tokenizer = BertTokenizer.from_pretrained("google-bert/bert-base-cased")

>>> sequence_a = "HuggingFace is based in NYC"

>>> sequence_b = "Where is HuggingFace based?"

>>> encoded_dict = tokenizer(sequence_a, sequence_b)

>>> decoded = tokenizer.decode(encoded_dict["input_ids"])

```

which will return:

```python

>>> print(decoded)

[CLS] HuggingFace is based in NYC [SEP] Where is HuggingFace based? [SEP]

```

This is enough for some models to understand where one sequence ends and where another begins. However, other models, such as BERT, also use token type IDs (also called segment IDs). They are represented as a binary mask identifying the two types of sequence in the model.

The tokenizer returns this mask as the "token_type_ids" entry:

```python

>>> encoded_dict["token_type_ids"]

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1]

```

The first sequence, the "context" used for the question, has all its tokens represented by a `0`, whereas the second sequence, corresponding to the "question", has all its tokens represented by a `1`.

Some models, like [`XLNetModel`], use an additional token represented by a `2`.
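The BERT-style layout described above can be reproduced on already-tokenized input with a short sketch. The helper `build_pair_inputs` is a hypothetical name used only for illustration; the tokenizer builds these values for you.

```python
def build_pair_inputs(tokens_a, tokens_b, cls="[CLS]", sep="[SEP]"):
    """Join two token lists as [CLS] A [SEP] B [SEP] and mark which segment each token belongs to."""
    tokens = [cls] + tokens_a + [sep] + tokens_b + [sep]
    # Segment 0 covers [CLS], sequence A and the first [SEP];
    # segment 1 covers sequence B and the final [SEP].
    token_type_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, token_type_ids


tokens, token_type_ids = build_pair_inputs(["Hello", "world"], ["Hi", "!"])
print(tokens)
print(token_type_ids)
```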

### transfer learning

A technique that involves taking a pretrained model and adapting it to a dataset specific to your task. Instead of training a model from scratch, you can leverage knowledge obtained from an existing model as a starting point. This speeds up the learning process and reduces the amount of training data needed.

### transformer

A deep learning model architecture based on self-attention.

## U

### unsupervised learning

A form of model training in which the data provided to the model is not labeled. Unsupervised learning techniques leverage statistical information about the data distribution to find patterns useful for the task at hand.

## Z

### Zero Redundancy Optimizer (ZeRO)

A parallelism technique that shards tensors in a way somewhat similar to [TensorParallel](#tensor-parallelism-tp), except that the whole tensor gets reconstructed in time for a forward or backward computation, so the model doesn't need to be modified. This method also supports various offloading techniques to compensate for limited GPU memory.

Learn more about ZeRO [here](perf_train_gpu_many#zero-data-parallelism).

# Pipelines for inference

The [`pipeline`] makes it simple to use any model from the [Hub](https://huggingface.co/models) for inference on any language, computer vision, speech, or multimodal task. Even if you don't have experience with a specific modality or aren't familiar with the underlying code behind the models, you can still use them for inference with the [`pipeline`]! This tutorial will teach you to:

* Use a [`pipeline`] for inference.

* Use a specific tokenizer or model.

* Use a [`pipeline`] for audio, vision, and multimodal tasks.

<Tip>

Take a look at the [`pipeline`] documentation for a complete list of supported tasks and available parameters.

</Tip>

## Pipeline usage

While each task has an associated [`pipeline`], it is simpler to use the general [`pipeline`] abstraction, which contains all the task-specific pipelines. The [`pipeline`] automatically loads a default model and a preprocessing class capable of inference for your task. Let's take the example of using the [`pipeline`] for automatic speech recognition (ASR), or speech-to-text.

1. Start by creating a [`pipeline`] and specify the inference task:

```py

>>> from transformers import pipeline

>>> transcriber = pipeline(task="automatic-speech-recognition")

```

2. Pass your input to the [`pipeline`]. In the case of speech recognition, this is an audio input file:

```py

>>> transcriber("https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/mlk.flac")

{'text': 'I HAVE A DREAM BUT ONE DAY THIS NATION WILL RISE UP LIVE UP THE TRUE MEANING OF ITS TREES'}

```

Not the result you had in mind? Check out some of the [most downloaded automatic speech recognition models](https://huggingface.co/models?pipeline_tag=automatic-speech-recognition&sort=trending) on the Hub to see if you can get a better transcription.

Let's try the [Whisper large-v2](https://huggingface.co/openai/whisper-large) model from OpenAI. Whisper was released two years after Wav2Vec2 and was trained on close to 10x more data. As such, it beats Wav2Vec2 on most benchmarks. It also has the added benefit of predicting punctuation and casing, neither of which is possible with Wav2Vec2.

Let's give it a try here to see how it performs:

```py

>>> transcriber = pipeline(model="openai/whisper-large-v2")

>>> transcriber("https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/mlk.flac")

{'text': ' I have a dream that one day this nation will rise up and live out the true meaning of its creed.'}

```

Now this result looks more accurate! For a deep-dive comparison of Wav2Vec2 vs Whisper, refer to the [Audio Transformers Course](https://huggingface.co/learn/audio-course/chapter5/asr_models).

We really encourage you to check out the Hub for models in different languages, models specialized in your field, and more. You can check out and compare model results directly from your browser on the Hub to see if a model fits your needs or handles corner cases better than others.

And if you don't find a model for your use case, you can always start [training](training) your own!

If you have several inputs, you can pass your input as a list:

```py

transcriber(

[

"https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/mlk.flac",

"https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/1.flac",

]

)

```

Pipelines are great for experimentation because switching from one model to another is trivial; however, there are some ways to optimize them for larger workloads than experimentation. See the following guides, which dive into iterating over whole datasets or using pipelines in a webserver:

* [Using pipelines on a dataset](#using-pipelines-on-a-dataset)

* [Using pipelines for a webserver](./pipeline_webserver)

## Parameters

[`pipeline`] supports many parameters; some are task specific, and some are general to all pipelines.

In general, you can specify parameters anywhere you want:

```py

transcriber = pipeline(model="openai/whisper-large-v2", my_parameter=1)

out = transcriber(...)  # This will use `my_parameter=1`.

out = transcriber(..., my_parameter=2)  # This will override and use `my_parameter=2`.

out = transcriber(...)  # This will go back to using `my_parameter=1`.

```
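The precedence rule in the snippet above (call-time values override construction-time defaults for that call only) can be mimicked with a toy class. `ToyPipeline` is a hypothetical stand-in written for this illustration and says nothing about how the real [`pipeline`] is implemented.

```python
class ToyPipeline:
    """Hypothetical stand-in that only demonstrates parameter precedence."""

    def __init__(self, **default_params):
        self.default_params = default_params

    def __call__(self, inputs, **call_params):
        # Call-time parameters win, but the stored defaults are left untouched.
        params = {**self.default_params, **call_params}
        return {"inputs": inputs, "params": params}


toy = ToyPipeline(my_parameter=1)
first = toy("audio.flac")
second = toy("audio.flac", my_parameter=2)
third = toy("audio.flac")
print(first["params"], second["params"], third["params"])
```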

Let's check out 3 important ones:

### Device

If you use `device=n`, the pipeline automatically puts the model on the specified device.

This will work regardless of whether you are using PyTorch or Tensorflow.

```py

transcriber = pipeline(model="openai/whisper-large-v2", device=0)

```

If the model is too large for a single GPU and you are using PyTorch, you can set `torch_dtype='float16'` to enable FP16 precision inference. Usually this does not cause significant performance drops, but make sure you evaluate it on your models!

Alternatively, you can set `device_map="auto"` to automatically determine how to load and store the model weights. Using the `device_map` argument requires the 🤗 [Accelerate](https://huggingface.co/docs/accelerate) package:

```bash

pip install --upgrade accelerate

```

The following code automatically loads and stores model weights across devices:

```py

transcriber = pipeline(model="openai/whisper-large-v2", device_map="auto")

```

Note that if `device_map="auto"` is passed, there is no need to add the argument `device=device` when instantiating your `pipeline`; doing so may lead to unexpected behavior!

### Batch size

By default, pipelines will not batch inference, for reasons explained in detail [here](https://huggingface.co/docs/transformers/main_classes/pipelines#pipeline-batching). The reason is that batching is not necessarily faster, and can actually be slower in some cases.

But if it works in your use case, you can use:

```py

transcriber = pipeline(model="openai/whisper-large-v2", device=0, batch_size=2)

audio_filenames = [f"https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/{i}.flac" for i in range(1, 5)]

texts = transcriber(audio_filenames)

```

This runs the pipeline on the 4 provided audio files, but it passes them to the model in batches of 2 (the model is on a GPU, where batching is more likely to help) without requiring any further code from you.

The output should always match what you would have received without batching. Batching is only meant as a way to help you get more speed out of a pipeline.

Pipelines can also alleviate some of the complexities of batching because, for some pipelines, a single item (like a long audio file) needs to be chunked into multiple parts to be processed by a model. The pipeline performs this [*chunk batching*](./main_classes/pipelines#pipeline-chunk-batching) for you.
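Conceptually, a `batch_size` of 2 groups the inputs before they reach the model. The sketch below is a plain-Python illustration with shortened file names; the helper `batched` is an assumption for this example and not the pipeline's internal code.

```python
def batched(items, batch_size):
    """Yield consecutive slices of `items` containing at most `batch_size` elements."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


audio_files = [f"{i}.flac" for i in range(1, 5)]
batches = list(batched(audio_files, batch_size=2))
print(batches)
```

The four files end up in two groups of two, which is what the model would receive per forward pass.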

### Task specific parameters

All tasks provide task specific parameters which allow for additional flexibility and options to help you get your job done.

For instance, the [`transformers.AutomaticSpeechRecognitionPipeline.__call__`] method has a `return_timestamps` parameter which sounds promising for subtitling videos:

```py

>>> transcriber = pipeline(model="openai/whisper-large-v2", return_timestamps=True)

>>> transcriber("https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/mlk.flac")

{'text': ' I have a dream that one day this nation will rise up and live out the true meaning of its creed.', 'chunks': [{'timestamp': (0.0, 11.88), 'text': ' I have a dream that one day this nation will rise up and live out the true meaning of its'}, {'timestamp': (11.88, 12.38), 'text': ' creed.'}]}

```

As you can see, the model inferred the text and also output **when** the various sentences were pronounced.

There are many parameters available for each task, so check out each task's API reference to see what you can tinker with!

For instance, the [`~transformers.AutomaticSpeechRecognitionPipeline`] has a `chunk_length_s` parameter which is helpful for working on really long audio files (for example, subtitling entire movies or hour-long videos) that a model typically cannot handle on its own:

```python

>>> transcriber = pipeline(model="openai/whisper-large-v2", chunk_length_s=30)

>>> transcriber("https://huggingface.co/datasets/reach-vb/random-audios/resolve/main/ted_60.wav")

{'text': " So in college, I was a government major, which means I had to write a lot of papers. Now, when a normal student writes a paper, they might spread the work out a little like this. So, you know. You get started maybe a little slowly, but you get enough done in the first week that with some heavier days later on, everything gets done and things stay civil. And I would want to do that like that. That would be the plan. I would have it all ready to go, but then actually the paper would come along, and then I would kind of do this. And that would happen every single paper. But then came my 90-page senior thesis, a paper you're supposed to spend a year on. I knew for a paper like that, my normal workflow was not an option, it was way too big a project. So I planned things out and I decided I kind of had to go something like this. This is how the year would go. So I'd start off light and I'd bump it up"}

```
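
To make the idea behind `chunk_length_s` more concrete, here is a minimal pure-Python sketch of how a long recording could be cut into overlapping windows. The helper `chunk_bounds` and its `stride_s` argument are hypothetical names chosen for illustration. This is not the pipeline's actual implementation, which also has to merge the transcribed text where chunks overlap.

```python
def chunk_bounds(total_s: float, chunk_length_s: float, stride_s: float = 0.0):
    """Return (start, end) times in seconds for overlapping chunks.

    Hypothetical helper for illustration only: consecutive chunks overlap
    by `stride_s` seconds so words cut at a boundary appear in both chunks.
    """
    if chunk_length_s <= stride_s:
        raise ValueError("chunk_length_s must be larger than stride_s")
    step = chunk_length_s - stride_s
    bounds = []
    start = 0.0
    while start < total_s:
        end = min(start + chunk_length_s, total_s)
        bounds.append((start, end))
        if end >= total_s:
            break
        start += step
    return bounds


# A 70-second file, 30-second chunks, 5 seconds of overlap.
print(chunk_bounds(70.0, 30.0, stride_s=5.0))
```

Each window is short enough for the model to process on its own, and the overlap gives neighbouring chunks shared context at the boundaries.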

If you can't find a parameter that would really help you out, feel free to [request it](https://github.com/huggingface/transformers/issues/new?assignees=&labels=feature&template=feature-request.yml)!

## Using pipelines on a dataset

The pipeline can also run inference on a large dataset. The easiest way we recommend doing this is by using an iterator:

```py

from transformers import pipeline


def data():
    for i in range(1000):
        yield f"My example {i}"


pipe = pipeline(model="openai-community/gpt2", device=0)
generated_characters = 0
for out in pipe(data()):
    generated_characters += len(out[0]["generated_text"])

```

The iterator `data()` yields each result, and the pipeline automatically recognizes that the input is iterable and starts fetching the data while it continues to process it on the GPU (this uses [DataLoader](https://pytorch.org/docs/stable/data.html#torch.utils.data.DataLoader) under the hood). This is important because you don't have to allocate memory for the whole dataset, and you can feed the GPU as fast as possible.

Since batching could speed things up, it may be useful to try tuning the `batch_size` parameter here.
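
As a rough illustration of what batching a streaming input means, the sketch below groups a lazy generator into fixed-size lists without materializing the whole dataset. The `batched` helper is a hypothetical name used only for this example. It does not reproduce how the pipeline batches internally.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batched(items: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Group a (possibly lazy) iterable into lists of at most `batch_size` items."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def data():
    for i in range(5):
        yield f"My example {i}"


for batch in batched(data(), batch_size=2):
    print(batch)
```

With a real pipeline you would not write this helper yourself. Passing `batch_size` to the call, as in `pipe(data(), batch_size=8)`, asks the pipeline to do the grouping for you.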

The simplest way to iterate over a dataset is to just load one from 🤗 [Datasets](https://github.com/huggingface/datasets/):

```py

# KeyDataset is a utility that only outputs the item we're interested in.
from transformers import pipeline
from transformers.pipelines.pt_utils import KeyDataset
from datasets import load_dataset

pipe = pipeline(model="hf-internal-testing/tiny-random-wav2vec2", device=0)
dataset = load_dataset("hf-internal-testing/librispeech_asr_dummy", "clean", split="validation[:10]")

for out in pipe(KeyDataset(dataset, "audio")):
    print(out)

```

## Using pipelines for a webserver

<Tip>

Creating an inference engine is a complex topic that deserves its own page.

</Tip>

[Link](./pipeline_webserver)

## Vision pipeline

Using a [`pipeline`] for vision tasks is practically identical.

Specify your task and pass your image to the classifier. The image can be a link, a local path, or a base64-encoded image. For example, what species of cat is shown below?

```py

>>> from transformers import pipeline

>>> vision_classifier = pipeline(model="google/vit-base-patch16-224")

>>> preds = vision_classifier(

... images="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg"

... )

>>> preds = [{"score": round(pred["score"], 4), "label": pred["label"]} for pred in preds]

>>> preds

[{'score': 0.4335, 'label': 'lynx, catamount'}, {'score': 0.0348, 'label': 'cougar, puma, catamount, mountain lion, painter, panther, Felis concolor'}, {'score': 0.0324, 'label': 'snow leopard, ounce, Panthera uncia'}, {'score': 0.0239, 'label': 'Egyptian cat'}, {'score': 0.0229, 'label': 'tiger cat'}]

```

## Text pipeline

Using a [`pipeline`] for NLP tasks is practically identical.

```py

>>> from transformers import pipeline

>>> # This model is a `zero-shot-classification` model.

>>> # It will classify text, except you are free to choose any label you might imagine

>>> classifier = pipeline(model="facebook/bart-large-mnli")

>>> classifier(

... "I have a problem with my iphone that needs to be resolved asap!!",

... candidate_labels=["urgent", "not urgent", "phone", "tablet", "computer"],

... )

{'sequence': 'I have a problem with my iphone that needs to be resolved asap!!', 'labels': ['urgent', 'phone', 'computer', 'not urgent', 'tablet'], 'scores': [0.504, 0.479, 0.013, 0.003, 0.002]}

```

## Multimodal pipeline

The [`pipeline`] supports more than one modality. For example, a visual question answering (VQA) task combines text and image. Feel free to use any image link you like and any question you want to ask about the image. The image can be a URL or a local path to the image.

For example, if you use this [invoice image](https://huggingface.co/spaces/impira/docquery/resolve/2359223c1837a7587402bda0f2643382a6eefeab/invoice.png):

```py

>>> from transformers import pipeline

>>> vqa = pipeline(model="impira/layoutlm-document-qa")

>>> output = vqa(

... image="https://huggingface.co/spaces/impira/docquery/resolve/2359223c1837a7587402bda0f2643382a6eefeab/invoice.png",

... question="What is the invoice number?",

... )

>>> output[0]["score"] = round(output[0]["score"], 3)

>>> output

[{'score': 0.425, 'answer': 'us-001', 'start': 16, 'end': 16}]

```

<Tip>

To run the example above, you need to have [`pytesseract`](https://pypi.org/project/pytesseract/) installed in addition to 🤗 Transformers:

```bash

sudo apt install -y tesseract-ocr

pip install pytesseract

```

</Tip>

## Using `pipeline` on large models with 🤗 `accelerate`

You can easily run `pipeline` on large models using 🤗 `accelerate`! First, make sure you have installed `accelerate` with `pip install accelerate`.

Then load your model using `device_map="auto"`! We will use `facebook/opt-1.3b` for our example.

```py

# pip install accelerate

import torch

from transformers import pipeline

pipe = pipeline(model="facebook/opt-1.3b", torch_dtype=torch.bfloat16, device_map="auto")

output = pipe("This is a cool example!", do_sample=True, top_p=0.95)

```

You can also pass 8-bit loaded models if you install `bitsandbytes` and add the argument `load_in_8bit=True`:

```py

# pip install accelerate bitsandbytes

import torch

from transformers import pipeline

pipe = pipeline(model="facebook/opt-1.3b", device_map="auto", model_kwargs={"load_in_8bit": True})

output = pipe("This is a cool example!", do_sample=True, top_p=0.95)

```

Note that you can replace the checkpoint with any Hugging Face model that supports large model loading, such as BLOOM.

## Creating web demos from pipelines with `gradio`

Pipelines are automatically supported in [Gradio](https://github.com/gradio-app/gradio/), a library that makes creating beautiful and user-friendly machine learning apps on the web a breeze. First, make sure you have Gradio installed:

```

pip install gradio

```

Then, you can create a web demo around an image classification pipeline (or any other pipeline) in a single line of code by calling Gradio's [`Interface.from_pipeline`](https://www.gradio.app/docs/interface#interface-from-pipeline) function to launch the pipeline. This creates an intuitive drag-and-drop interface in your browser:

```py

from transformers import pipeline

import gradio as gr

pipe = pipeline("image-classification", model="google/vit-base-patch16-224")

gr.Interface.from_pipeline(pipe).launch()

```

By default, the web demo runs on a local server. If you'd like to share it with others, you can generate a temporary public link by setting `share=True` in `launch()`. You can also host your demo on [Hugging Face Spaces](https://huggingface.co/spaces) for a permanent link.

<!---

Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Installation

Install 🤗 Transformers for whichever deep learning library you're working with, set up your cache, and optionally configure 🤗 Transformers to run offline.

🤗 Transformers is tested on Python 3.6+, PyTorch 1.1.0+, TensorFlow 2.0+, and Flax. Follow the installation instructions below for the deep learning library you are using:

* [PyTorch](https://pytorch.org/get-started/locally/) installation instructions.

* [TensorFlow 2.0](https://www.tensorflow.org/install/pip) installation instructions.

* [Flax](https://flax.readthedocs.io/en/latest/) installation instructions.

## Install with pip

You should install 🤗 Transformers in a [virtual environment](https://docs.python.org/3/library/venv.html). If you're unfamiliar with Python virtual environments, take a look at this [guide](https://packaging.python.org/guides/installing-using-pip-and-virtual-environments/). A virtual environment makes it easier to manage different projects and avoid compatibility issues between dependencies.

Start by creating a virtual environment in your project directory:

```bash

python -m venv .env

```

Activate the virtual environment. On Linux and macOS:

```bash

source .env/bin/activate

```

Activate the virtual environment on Windows:

```bash

.env/Scripts/activate

```

Now you're ready to install 🤗 Transformers with the following command:

```bash

pip install transformers

```

For CPU-only support, you can conveniently install 🤗 Transformers and a deep learning library in one line. For example, install 🤗 Transformers and PyTorch with:

```bash

pip install transformers[torch]

```

🤗 Transformers and TensorFlow 2.0:

```bash

pip install transformers[tf-cpu]

```

🤗 Transformers and Flax:

```bash

pip install transformers[flax]

```

Finally, check whether 🤗 Transformers has been properly installed by running the following command. It will download a pretrained model:

```bash

python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('we love you'))"

```

The label and score are then printed:

```bash

[{'label': 'POSITIVE', 'score': 0.9998704791069031}]

```

## Install from source

Install 🤗 Transformers from source with the following command:

```bash

pip install git+https://github.com/huggingface/transformers

```

This command installs the current `main` version rather than the latest `stable` version. The `main` version is useful for staying up-to-date with the latest developments, for instance if a bug has been fixed since the last official release but a new release hasn't been rolled out yet. However, this means the `main` version may not always be stable. We strive to keep the `main` version operational, and most issues are usually resolved within a few hours or a day. If you run into a problem, please open an [Issue](https://github.com/huggingface/transformers/issues) so we can fix it even sooner!

Check whether 🤗 Transformers has been properly installed by running the following command:

```bash

python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('I love you'))"

```

## Editable install

You will need an editable install if you'd like to:

* Use the `main` version of the source code.

* Contribute to 🤗 Transformers and test changes in the code.

Clone the repository and install 🤗 Transformers with the following commands:

```bash

git clone https://github.com/huggingface/transformers.git

cd transformers

pip install -e .

```

These commands link the folder you cloned the repository to with your Python library paths. Python will now look inside the folder you cloned to in addition to the normal library paths. For example, if your Python packages are typically installed in `~/anaconda3/envs/main/lib/python3.7/site-packages/`, Python will also search the folder you cloned to: `~/transformers/`.
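
One way to confirm which copy of a package Python will actually import is to ask the import system where it would load the module from. The sketch below uses only the standard library, and `module_location` is a hypothetical helper name introduced for this example.

```python
import importlib.util
from typing import Optional


def module_location(module_name: str) -> Optional[str]:
    """Return the file a top-level module would be imported from, or None if it is not installed."""
    spec = importlib.util.find_spec(module_name)
    return None if spec is None else spec.origin


# With an editable install, this is expected to point inside your clone
# rather than inside site-packages.
print(module_location("transformers"))
```

If the printed path lies inside your cloned folder, the editable install is the one being picked up.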

<Tip warning={true}>

You must keep the `transformers` folder if you want to keep using the library.

</Tip>

Now you can easily update your clone to the latest version of 🤗 Transformers with the following command:

```bash

cd ~/transformers/

git pull

```

Your Python environment will find the `main` version of 🤗 Transformers on the next run.

## Install with conda

Install from the conda channel `conda-forge`:

```bash

conda install conda-forge::transformers

```

## Cache setup

Pretrained models are downloaded and locally cached at `~/.cache/huggingface/hub`. This is the default directory given by the shell environment variable `TRANSFORMERS_CACHE`. On Windows, the default directory is `C:\Users\username\.cache\huggingface\hub`. You can change the shell environment variables shown below, in order of priority, to specify a different cache directory:

1. Shell environment variable (default): `HF_HUB_CACHE` or `TRANSFORMERS_CACHE`.

2. Shell environment variable: `HF_HOME`.

3. Shell environment variable: `XDG_CACHE_HOME` + `/huggingface`.
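
The priority order above can be sketched as a simple lookup. This is an illustration only, not the library's actual resolution code. The function name `resolve_hub_cache` is hypothetical, and appending `hub` to the `HF_HOME` and `XDG_CACHE_HOME` locations is an assumption based on the default path `~/.cache/huggingface/hub`.

```python
import os
from typing import Mapping


def resolve_hub_cache(env: Mapping[str, str]) -> str:
    """Illustrative priority lookup; not the library's actual code."""
    # 1. An explicit cache directory wins.
    for name in ("HF_HUB_CACHE", "TRANSFORMERS_CACHE"):
        if env.get(name):
            return env[name]
    # 2. Otherwise derive it from HF_HOME (the "hub" suffix is an assumption).
    if env.get("HF_HOME"):
        return os.path.join(env["HF_HOME"], "hub")
    # 3. Otherwise fall back to XDG_CACHE_HOME + /huggingface.
    if env.get("XDG_CACHE_HOME"):
        return os.path.join(env["XDG_CACHE_HOME"], "huggingface", "hub")
    # Default location in the user's home directory.
    return os.path.join(os.path.expanduser("~"), ".cache", "huggingface", "hub")


print(resolve_hub_cache(os.environ))
```

Passing the environment in as a mapping keeps the function easy to try out with different combinations of variables.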

<Tip>

🤗 Transformers will use the shell environment variables `PYTORCH_TRANSFORMERS_CACHE` or `PYTORCH_PRETRAINED_BERT_CACHE` if you are coming from an earlier iteration of this library and have set those environment variables, unless you specify the shell environment variable `TRANSFORMERS_CACHE`.

</Tip>

## Offline mode

🤗 Transformers is able to run in a firewalled or offline environment by only using local files. Set the environment variable `HF_HUB_OFFLINE=1` to enable this behavior.

<Tip>

Add [🤗 Datasets](https://huggingface.co/docs/datasets/) to your offline training workflow by setting the environment variable `HF_DATASETS_OFFLINE=1`.

</Tip>

For example, you would typically run a program on a normal network firewalled to external instances with the following command:

```bash

python examples/pytorch/translation/run_translation.py --model_name_or_path google-t5/t5-small --dataset_name wmt16 --dataset_config ro-en ...

```

Run the same program in an offline instance with:

```bash

HF_DATASETS_OFFLINE=1 HF_HUB_OFFLINE=1 \

python examples/pytorch/translation/run_translation.py --model_name_or_path google-t5/t5-small --dataset_name wmt16 --dataset_config ro-en ...

```

The script should now run without hanging or waiting for a timeout because it knows it should only look for local files.
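
If you launch scripts from Python rather than from a shell, the same two variables can be set on the child process's environment. The sketch below is a minimal illustration: the child process is a stand-in that only echoes the variables back, where in practice you would start your own script.

```python
import os
import subprocess
import sys

# Copy the current environment and switch both libraries to offline mode.
offline_env = {**os.environ, "HF_HUB_OFFLINE": "1", "HF_DATASETS_OFFLINE": "1"}

# Stand-in child process: it only echoes the variables back. In practice you
# would launch your own training or inference script here instead.
child_code = "import os; print(os.environ['HF_HUB_OFFLINE'], os.environ['HF_DATASETS_OFFLINE'])"
result = subprocess.run(
    [sys.executable, "-c", child_code],
    env=offline_env,
    capture_output=True,
    text=True,
    check=True,
)
print(result.stdout.strip())
```

Setting the variables on a copy of the environment leaves the parent process untouched, so only the launched script runs offline.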

### Fetch models and tokenizers to use offline

Another option for using 🤗 Transformers offline is to download the files ahead of time, and then point to their local path when you need to use them offline. There are three ways to do this:

* Download a file through the user interface on the [Model Hub](https://huggingface.co/models) by clicking on the ↓ icon.

* Use the [`PreTrainedModel.from_pretrained`] and [`PreTrainedModel.save_pretrained`] workflow:

1. Download your files ahead of time with [`PreTrainedModel.from_pretrained`]:

```py

>>> from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

>>> tokenizer = AutoTokenizer.from_pretrained("bigscience/T0_3B")

>>> model = AutoModelForSeq2SeqLM.from_pretrained("bigscience/T0_3B")

```

2. Save your files to a specified directory with [`PreTrainedModel.save_pretrained`]:

```py

>>> tokenizer.save_pretrained("./your/path/bigscience_t0")

>>> model.save_pretrained("./your/path/bigscience_t0")

```

3. Now when you're offline, reload your files with [`PreTrainedModel.from_pretrained`] from the specified directory:

```py

>>> tokenizer = AutoTokenizer.from_pretrained("./your/path/bigscience_t0")

>>> model = AutoModelForSeq2SeqLM.from_pretrained("./your/path/bigscience_t0")

```

* Programmatically download files with the [huggingface_hub](https://github.com/huggingface/huggingface_hub/tree/main/src/huggingface_hub) library:

1. Install the `huggingface_hub` library in your virtual environment:

```bash

python -m pip install huggingface_hub

```

2. Use the [`hf_hub_download`](https://huggingface.co/docs/hub/adding-a-library#download-files-from-the-hub) function to download a file to a specific path. For example, the following command downloads the `config.json` file from the [T0](https://huggingface.co/bigscience/T0_3B) model to your desired path:

```py

>>> from huggingface_hub import hf_hub_download

>>> hf_hub_download(repo_id="bigscience/T0_3B", filename="config.json", cache_dir="./your/path/bigscience_t0")

```

Once your file is downloaded and locally cached, specify its local path to load and use it:

```py

>>> from transformers import AutoConfig

>>> config = AutoConfig.from_pretrained("./your/path/bigscience_t0/config.json")

```

<Tip>

See the [How to download files from the Hub](https://huggingface.co/docs/hub/how-to-downstream) section for more details on downloading files stored on the Hub.

</Tip>


<!--Copyright 2020 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# How to add a model to 🤗 Transformers?

The 🤗 Transformers library is often able to offer new models thanks to community contributors. But this can be a challenging project and requires an in-depth knowledge of the 🤗 Transformers library and the model to implement. At Hugging Face, we're trying to empower more of the community to actively add models and we've put together this guide to walk you through the process of adding a PyTorch model (make sure you have [PyTorch installed](https://pytorch.org/get-started/locally/)).

Along the way, you'll:

- get insights into open-source best practices

- understand the design principles behind one of the most popular deep learning libraries

- learn how to efficiently test large models

- learn how to integrate Python utilities like `black`, `ruff`, and `make fix-copies` to ensure clean and readable code

A Hugging Face team member will be available to help you along the way so you'll never be alone. 🤗 ❤️

To get started, open a [New model addition](https://github.com/huggingface/transformers/issues/new?assignees=&labels=New+model&template=new-model-addition.yml) issue for the model you want to see in 🤗 Transformers. If you're not especially picky about contributing a specific model, you can filter by the [New model label](https://github.com/huggingface/transformers/labels/New%20model) to see if there are any unclaimed model requests and work on one of them.

Once you've opened a new model request, the first step is to get familiar with 🤗 Transformers if you aren't already!

## General overview of 🤗 Transformers

First, you should get a general overview of 🤗 Transformers. 🤗 Transformers is a very opinionated library, so there is a chance that you don't agree with some of the library's philosophies or design choices. From our experience, however, we found that the fundamental design choices and philosophies of the library are crucial to efficiently scale 🤗 Transformers while keeping maintenance costs at a reasonable level.

A good first starting point to better understand the library is to read the [documentation of our philosophy](philosophy). As a result of our way of working, there are some choices that we try to apply to all models:

- Composition is generally favored over abstraction
- Duplicating code is not always bad if it strongly improves the readability or accessibility of a model
- Model files are as self-contained as possible so that when you read the code of a specific model, you ideally only have to look into the respective `modeling_....py` file.

In our opinion, the library's code is not just a means to provide a product, *e.g.* the ability to use BERT for inference, but also the very product that we want to improve. Hence, when adding a model, the user is not only the person who will use your model, but also everybody who will read, try to understand, and possibly tweak your code.

With this in mind, let's go a bit deeper into the general library design.

### Overview of models

To successfully add a model, it is important to understand the interaction between your model and its config, [`PreTrainedModel`], and [`PretrainedConfig`]. For illustration, we will call the model to be added to 🤗 Transformers `BrandNewBert`.

Let's take a look:

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers_overview.png"/>

As you can see, we do make use of inheritance in 🤗 Transformers, but we keep the level of abstraction to an absolute minimum. There are never more than two levels of abstraction for any model in the library. `BrandNewBertModel` inherits from `BrandNewBertPreTrainedModel` which in turn inherits from [`PreTrainedModel`] and that's it. As a general rule, we want to make sure that a new model only depends on [`PreTrainedModel`]. The important functionalities that are automatically provided to every new model are [`~PreTrainedModel.from_pretrained`] and [`~PreTrainedModel.save_pretrained`], which are used for serialization and deserialization. All of the other important functionalities, such as `BrandNewBertModel.forward` should be completely defined in the new `modeling_brand_new_bert.py` script. Next, we want to make sure that a model with a specific head layer, such as `BrandNewBertForMaskedLM` does not inherit from `BrandNewBertModel`, but rather uses `BrandNewBertModel` as a component that can be called in its forward pass to keep the level of abstraction low. Every new model requires a configuration class, called `BrandNewBertConfig`. This configuration is always stored as an attribute in [`PreTrainedModel`], and thus can be accessed via the `config` attribute for all classes inheriting from `BrandNewBertPreTrainedModel`:

```python

model = BrandNewBertModel.from_pretrained("brandy/brand_new_bert")

model.config # model has access to its config

```
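
The composition pattern described above can be sketched without any deep learning framework. The classes below are toy stand-ins that reuse the `BrandNewBert` names purely for illustration. They are not the real 🤗 Transformers classes and they do no actual modeling.

```python
class BrandNewBertConfig:
    """Toy stand-in for a configuration class."""

    def __init__(self, hidden_size: int = 4, vocab_size: int = 10):
        self.hidden_size = hidden_size
        self.vocab_size = vocab_size


class BrandNewBertPreTrainedModel:
    """Toy stand-in for the shared base class; it stores the config."""

    def __init__(self, config: BrandNewBertConfig):
        self.config = config


class BrandNewBertModel(BrandNewBertPreTrainedModel):
    def forward(self, input_ids):
        # Pretend each token id maps to a constant hidden vector.
        return [[float(token_id)] * self.config.hidden_size for token_id in input_ids]


class BrandNewBertForMaskedLM(BrandNewBertPreTrainedModel):
    """Uses the base model as a component instead of inheriting from it."""

    def __init__(self, config: BrandNewBertConfig):
        super().__init__(config)
        self.brand_new_bert = BrandNewBertModel(config)

    def forward(self, input_ids):
        hidden_states = self.brand_new_bert.forward(input_ids)
        # Toy "head": one score per vocabulary entry for every token.
        return [[sum(vector)] * self.config.vocab_size for vector in hidden_states]


model = BrandNewBertForMaskedLM(BrandNewBertConfig())
logits = model.forward([1, 2, 3])
print(len(logits), len(logits[0]))
```

Note that the head model and the base model both inherit only from the shared base class, so neither depends on the other through inheritance.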

Similar to the model, the configuration inherits basic serialization and deserialization functionalities from [`PretrainedConfig`]. Note that the configuration and the model are always serialized into two different formats - the model to a *pytorch_model.bin* file and the configuration to a *config.json* file. Calling the model's [`~PreTrainedModel.save_pretrained`] will automatically call the config's [`~PretrainedConfig.save_pretrained`], so that both model and configuration are saved.
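
The delegation between the two `save_pretrained` methods can be illustrated with a small standard-library sketch. `ToyConfig`, `ToyModel`, and the `toy_weights.json` file name are hypothetical and exist only for this example. The real library writes its weights in its own format.

```python
import json
import os
import tempfile


class ToyConfig:
    def __init__(self, hidden_size: int = 4):
        self.hidden_size = hidden_size

    def save_pretrained(self, save_directory: str) -> None:
        with open(os.path.join(save_directory, "config.json"), "w") as handle:
            json.dump({"hidden_size": self.hidden_size}, handle)


class ToyModel:
    def __init__(self, config: ToyConfig):
        self.config = config
        self.weights = [0.0] * config.hidden_size

    def save_pretrained(self, save_directory: str) -> None:
        os.makedirs(save_directory, exist_ok=True)
        # Saving the model also saves its configuration.
        self.config.save_pretrained(save_directory)
        with open(os.path.join(save_directory, "toy_weights.json"), "w") as handle:
            json.dump(self.weights, handle)


with tempfile.TemporaryDirectory() as directory:
    ToyModel(ToyConfig()).save_pretrained(directory)
    saved_files = sorted(os.listdir(directory))
print(saved_files)
```

A single call on the model leaves both files in the target directory, which mirrors the behavior described above.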

### Code style

When coding your new model, keep in mind that Transformers is an opinionated library and we have a few quirks of our own regarding how code should be written :-)

1. The forward pass of your model should be fully written in the modeling file while being fully independent of other models in the library. If you want to reuse a block from another model, copy the code and paste it with a `# Copied from` comment on top (see [here](https://github.com/huggingface/transformers/blob/v4.17.0/src/transformers/models/roberta/modeling_roberta.py#L160) for a good example and [there](pr_checks#check-copies) for more documentation on Copied from).
2. The code should be fully understandable, even by a non-native English speaker. This means you should pick descriptive variable names and avoid abbreviations. As an example, `activation` is preferred to `act`. One-letter variable names are strongly discouraged unless it's an index in a for loop.
3. More generally, we prefer longer explicit code to short magical code.
4. Avoid subclassing `nn.Sequential` in PyTorch; instead, subclass `nn.Module` and write the forward pass, so that anyone using your code can quickly debug it by adding print statements or breakpoints.
5. Your function signature should be type-annotated. For the rest, good variable names are way more readable and understandable than type annotations.
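
As a small, framework-free illustration of points 2, 3, and 5, the toy function below uses a type-annotated signature, spelled-out variable names, and an explicit loop. The function `apply_activation` is a hypothetical example and not part of the library.

```python
from typing import List


def apply_activation(hidden_states: List[float], activation: str = "relu") -> List[float]:
    """Apply a named activation to every value (toy example)."""
    if activation == "relu":
        activated_states = []
        for hidden_state in hidden_states:
            activated_states.append(max(0.0, hidden_state))
        return activated_states
    if activation == "identity":
        return list(hidden_states)
    raise ValueError(f"Unsupported activation: {activation}")


print(apply_activation([-1.0, 0.5, 2.0]))
```

The loop is longer than a one-line comprehension, but it gives a reader an obvious place to add a print statement or a breakpoint.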

### Overview of tokenizers

Not quite ready yet :-( This section will be added soon!

## Step-by-step recipe to add a model to 🤗 Transformers

Everyone has different preferences of how to port a model so it can be very helpful for you to take a look at summaries of how other contributors ported models to Hugging Face. Here is a list of community blog posts on how to port a model:

1. [Porting GPT2 Model](https://medium.com/huggingface/from-tensorflow-to-pytorch-265f40ef2a28) by [Thomas](https://huggingface.co/thomwolf)

2. [Porting WMT19 MT Model](https://huggingface.co/blog/porting-fsmt) by [Stas](https://huggingface.co/stas)

From experience, we can tell you that the most important things to keep in mind when adding a model are:

- Don't reinvent the wheel! Most parts of the code you will add for the new 🤗 Transformers model already exist somewhere in 🤗 Transformers. Take some time to find similar, already existing models and tokenizers you can copy from. [grep](https://www.gnu.org/software/grep/) and [rg](https://github.com/BurntSushi/ripgrep) are your friends. Note that it might very well happen that your model's tokenizer is based on one model implementation, and your model's modeling code on another one. *E.g.* FSMT's modeling code is based on BART, while FSMT's tokenizer code is based on XLM.
- It's more of an engineering challenge than a scientific challenge. You should spend more time creating an efficient debugging environment rather than trying to understand all theoretical aspects of the model in the paper.
- Ask for help when you're stuck! Models are the core component of 🤗 Transformers so we at Hugging Face are more than happy to help you at every step to add your model. Don't hesitate to ask if you notice you are not making progress.

In the following, we try to give you a general recipe that we found most useful when porting a model to 🤗 Transformers.

The following list is a summary of everything that has to be done to add a model and can be used by you as a To-Do List:

☐ (Optional) Understood the model's theoretical aspects<br>

☐ Prepared 🤗 Transformers dev environment<br>

☐ Set up debugging environment of the original repository<br>

☐ Created script that successfully runs the `forward()` pass using the original repository and checkpoint<br>

☐ Successfully added the model skeleton to 🤗 Transformers<br>

☐ Successfully converted original checkpoint to 🤗 Transformers checkpoint<br>

☐ Successfully ran `forward()` pass in 🤗 Transformers that gives identical output to original checkpoint<br>

☐ Finished model tests in 🤗 Transformers<br>

☐ Successfully added tokenizer in 🤗 Transformers<br>

☐ Run end-to-end integration tests<br>

☐ Finished docs<br>

☐ Uploaded model weights to the Hub<br>

☐ Submitted the pull request<br>

☐ (Optional) Added a demo notebook

To begin with, we usually recommend starting by getting a good theoretical understanding of `BrandNewBert`. However, if you prefer to understand the theoretical aspects of the model *on-the-job*, then it is totally fine to directly dive into the `BrandNewBert` code-base. This option might suit you better if your engineering skills are better than your theoretical skills, if you have trouble understanding `BrandNewBert`'s paper, or if you just enjoy programming much more than reading scientific papers.

### 1. (Optional) Theoretical aspects of BrandNewBert

You should take some time to read *BrandNewBert's* paper, if such descriptive work exists. There might be large sections of the paper that are difficult to understand. If this is the case, this is fine - don't worry! The goal is not to get a deep theoretical understanding of the paper, but to extract the necessary information required to effectively re-implement the model in 🤗 Transformers. That being said, you don't have to spend too much time on the theoretical aspects, but rather focus on the practical ones, namely:

- What type of model is *brand_new_bert*? BERT-like encoder-only model? GPT2-like decoder-only model? BART-like encoder-decoder model? Look at the [model_summary](model_summary) if you're not familiar with the differences between those.
- What are the applications of *brand_new_bert*? Text classification? Text generation? Seq2Seq tasks, *e.g.,* summarization?
- What is the novel feature of the model that makes it different from BERT/GPT-2/BART?
- Which of the already existing [🤗 Transformers models](https://huggingface.co/transformers/#contents) is most similar to *brand_new_bert*?
- What type of tokenizer is used? A sentencepiece tokenizer? Word piece tokenizer? Is it the same tokenizer as used for BERT or BART?

After you feel like you have gotten a good overview of the architecture of the model, you might want to write to the Hugging Face team with any questions you might have. This might include questions regarding the model's architecture, its attention layer, etc. We will be more than happy to help you.

### 2. Next, prepare your environment

1. Fork the [repository](https://github.com/huggingface/transformers) by clicking on the 'Fork' button on the repository's page. This creates a copy of the code under your GitHub user account.

2. Clone your `transformers` fork to your local disk, and add the base repository as a remote:

```bash

git clone https://github.com/[your Github handle]/transformers.git

cd transformers

git remote add upstream https://github.com/huggingface/transformers.git

```

3. Set up a development environment, for instance by running the following command:

```bash

python -m venv .env

source .env/bin/activate

pip install -e ".[dev]"

```

Depending on your OS, and since the number of optional dependencies of Transformers is growing, you might get a failure with this command. If that's the case, make sure to install the Deep Learning framework you are working with (PyTorch, TensorFlow and/or Flax) then do:

```bash

pip install -e ".[quality]"

```

which should be enough for most use cases. You can then return to the parent directory

```bash

cd ..

```

4. We recommend adding the PyTorch version of *brand_new_bert* to Transformers. To install PyTorch, please follow the instructions on https://pytorch.org/get-started/locally/.

**Note:** You don't need to have CUDA installed. Making the new model work on CPU is sufficient.

5. To port *brand_new_bert*, you will also need access to its original repository:

```bash

git clone https://github.com/org_that_created_brand_new_bert_org/brand_new_bert.git

cd brand_new_bert

pip install -e .

```

Now you have set up a development environment to port *brand_new_bert* to 🤗 Transformers.

### 3.-4. Run a pretrained checkpoint using the original repository

At first, you will work on the original *brand_new_bert* repository. Often, the original implementation is very “researchy”, meaning that documentation might be lacking and the code can be difficult to understand. But this should be exactly your motivation to reimplement *brand_new_bert*. At Hugging Face, one of our main goals is to *make people stand on the shoulders of giants*, which translates here very well into taking a working model and rewriting it to make it as **accessible, user-friendly, and beautiful** as possible. This is the number-one motivation to re-implement models into 🤗 Transformers - trying to make complex new NLP technology accessible to **everybody**.

You should therefore start by diving into the original repository.

Successfully running the official pretrained model in the original repository is often **the most difficult** step.

From our experience, it is very important to spend some time getting familiar with the original code-base. You need to

figure out the following:

- Where to find the pretrained weights?

- How to load the pretrained weights into the corresponding model?

- How to run the tokenizer independently from the model?

- Which classes and functions are required for a simple forward pass? Trace one forward pass to find out. Usually,
  you only have to reimplement those functions.

- Where are the important components of the model? Where is the model's class? Are there model sub-classes,
  *e.g.* EncoderModel, DecoderModel? Where is the self-attention layer? Are there multiple different attention layers,
  *e.g.* *self-attention*, *cross-attention*...?

- How can you debug the model in the original environment of the repo? Do you have to add *print* statements, can you

work with an interactive debugger like *ipdb*, or should you use an efficient IDE to debug the model, like PyCharm?

It is very important that before you start the porting process, you can **efficiently** debug code in the original

repository! Also, remember that you are working with an open-source library, so do not hesitate to open an issue, or

even a pull request in the original repository. The maintainers of this repository are most likely very happy about

someone looking into their code!

At this point, it is really up to you which debugging environment and strategy you prefer to use to debug the original
model. We strongly advise against setting up a costly GPU environment. Simply work on a CPU, both when starting to
dive into the original repository and when starting to write the 🤗 Transformers implementation of the model. Only
at the very end, when the model has already been successfully ported to 🤗 Transformers, should you verify that the
model also works as expected on GPU.

In general, there are two possible debugging environments for running the original model:

- [Jupyter notebooks](https://jupyter.org/) / [Google Colab](https://colab.research.google.com/notebooks/intro.ipynb)

- Local Python scripts

Jupyter notebooks have the advantage that they allow for cell-by-cell execution which can be helpful to better split

logical components from one another and to have faster debugging cycles as intermediate results can be stored. Also,

notebooks are often easier to share with other contributors, which might be very helpful if you want to ask the Hugging

Face team for help. If you are familiar with Jupyter notebooks, we strongly recommend you work with them.

The obvious disadvantage of Jupyter notebooks is that if you are not used to working with them you will have to spend

some time adjusting to the new programming environment and you might not be able to use your known debugging tools

anymore, like `ipdb`.

For each code-base, a good first step is always to load a **small** pretrained checkpoint and to be able to reproduce a

single forward pass using a dummy integer vector of input IDs as an input. Such a script could look like this (in

pseudocode):

```python

model = BrandNewBertModel.load_pretrained_checkpoint("/path/to/checkpoint/")

input_ids = [0, 4, 5, 2, 3, 7, 9] # vector of input ids

original_output = model.predict(input_ids)

```

Next, regarding the debugging strategy, there are generally a few to choose from:

- Decompose the original model into many small testable components and run a forward pass on each of those for

verification

- Decompose the original model only into the original *tokenizer* and the original *model*, run a forward pass on

those, and use intermediate print statements or breakpoints for verification

Again, it is up to you which strategy to choose. Often, one or the other is advantageous depending on the original code

base.

If the original code-base allows you to decompose the model into smaller sub-components, *e.g.* if the original

code-base can easily be run in eager mode, it is usually worth the effort to do so. There are some important advantages

to taking the more difficult road in the beginning:

- at a later stage when comparing the original model to the Hugging Face implementation, you can verify automatically

for each component individually that the corresponding component of the 🤗 Transformers implementation matches instead

of relying on visual comparison via print statements

- it lets you decompose the big problem of porting a model into smaller problems of porting individual components,
  and thus structure your work better

- separating the model into logically meaningful components will help you to get a better overview of the model's
  design and thus to better understand the model

- at a later stage those component-by-component tests help you to ensure that no regression occurs as you continue

changing your code

[Lysandre's](https://gist.github.com/LysandreJik/db4c948f6b4483960de5cbac598ad4ed) integration checks for ELECTRA
give a nice example of how this can be done.
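
A component-by-component check of this kind can be sketched as follows. This is only a minimal sketch, assuming the
intermediate outputs of both implementations have already been converted to NumPy arrays and collected in
dictionaries keyed by component name. The helper `compare_components` and the names `embeddings` and `layer_1` are
hypothetical and not part of 🤗 Transformers. The default tolerance of 1e-3 is the precision this guide asks for.

```python
import numpy as np


def compare_components(original, ported, atol=1e-3):
    """Return {component name: max abs difference} for every component that does not match.

    `original` and `ported` map a component name to that component's output array.
    """
    mismatches = {}
    for name, expected in original.items():
        actual = ported[name]
        if expected.shape != actual.shape:
            # Shapes differ, so the values cannot be compared element-wise.
            mismatches[name] = float("inf")
            continue
        max_diff = float(np.max(np.abs(expected - actual)))
        if max_diff > atol:
            mismatches[name] = max_diff
    return mismatches


# Toy stand-ins for outputs recorded from the two implementations.
rng = np.random.default_rng(0)
embeddings = rng.standard_normal((1, 7, 16))
layer_1 = rng.standard_normal((1, 7, 16))

original_outputs = {"embeddings": embeddings, "layer_1": layer_1}
ported_outputs = {
    "embeddings": embeddings + 1e-5,  # within tolerance
    "layer_1": layer_1 + 0.5,  # deliberately wrong
}

mismatches = compare_components(original_outputs, ported_outputs)
print(mismatches)
```

Only `layer_1` is reported here, which shows how such a check points directly at the first component that diverges
instead of leaving you to compare printed tensors by eye.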

However, if the original code-base is very complex or only allows intermediate components to be run in a compiled mode,
it might be too time-consuming or even impossible to separate the model into smaller testable sub-components. A good
example is [T5's MeshTensorFlow](https://github.com/tensorflow/mesh/tree/master/mesh_tensorflow) library, which is
very complex and does not offer a simple way to decompose the model into its sub-components. For such libraries, one
often has to rely on print statements for verification.

No matter which strategy you choose, the recommended procedure is usually the same: debug the first layers first and
the last layers last.

It is recommended that you retrieve the outputs of the following layers, either via print statements or sub-component
functions, in the following order:

1. Retrieve the input IDs passed to the model

2. Retrieve the word embeddings

3. Retrieve the input of the first Transformer layer

4. Retrieve the output of the first Transformer layer

5. Retrieve the output of the following n - 1 Transformer layers

6. Retrieve the output of the whole BrandNewBert Model

Input IDs should consist of an array of integers, *e.g.* `input_ids = [0, 4, 4, 3, 2, 4, 1, 7, 19]`.

The outputs of the following layers often consist of multi-dimensional float arrays and can look like this:

```

[[

[-0.1465, -0.6501, 0.1993, ..., 0.1451, 0.3430, 0.6024],

[-0.4417, -0.5920, 0.3450, ..., -0.3062, 0.6182, 0.7132],

[-0.5009, -0.7122, 0.4548, ..., -0.3662, 0.6091, 0.7648],

...,

[-0.5613, -0.6332, 0.4324, ..., -0.3792, 0.7372, 0.9288],

[-0.5416, -0.6345, 0.4180, ..., -0.3564, 0.6992, 0.9191],

[-0.5334, -0.6403, 0.4271, ..., -0.3339, 0.6533, 0.8694]]],

```
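
If the original repository is written in PyTorch, one way to retrieve these outputs in order is to register forward
hooks. The following is a minimal sketch under that assumption: `ToyModel` is a hypothetical stand-in for the
original model, `record_outputs` is an illustrative helper, and the module names are made up. Only
`register_forward_hook` and `named_modules` are actual PyTorch APIs.

```python
import torch
from torch import nn


class ToyModel(nn.Module):
    """Hypothetical stand-in for the original model: embeddings followed by two layers."""

    def __init__(self, vocab_size=32, hidden_size=8, num_layers=2):
        super().__init__()
        self.embeddings = nn.Embedding(vocab_size, hidden_size)
        self.layers = nn.ModuleList(
            [nn.Linear(hidden_size, hidden_size) for _ in range(num_layers)]
        )

    def forward(self, input_ids):
        hidden_states = self.embeddings(input_ids)
        for layer in self.layers:
            hidden_states = torch.tanh(layer(hidden_states))
        return hidden_states


def record_outputs(model, input_ids):
    """Run one forward pass and return {module name: output} in execution order."""
    recorded = {}
    handles = []
    for name, module in model.named_modules():
        if isinstance(module, (nn.Embedding, nn.Linear)):
            # Bind `name` as a default argument so each hook keeps its own name.
            def hook(module, inputs, output, name=name):
                recorded[name] = output.detach()

            handles.append(module.register_forward_hook(hook))
    with torch.no_grad():
        final_output = model(input_ids)
    # Remove the hooks again so the model is left unchanged.
    for handle in handles:
        handle.remove()
    recorded["final_output"] = final_output
    return recorded


torch.manual_seed(0)
model = ToyModel().eval()
input_ids = torch.tensor([[0, 4, 4, 3, 2, 4, 1, 7, 19]])
outputs = record_outputs(model, input_ids)
for name, tensor in outputs.items():
    print(name, tuple(tensor.shape))
```

The recorded tensors can then be saved and compared one by one against the corresponding outputs of the
🤗 Transformers implementation, starting with the embeddings and ending with the final output.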

We expect that every model added to 🤗 Transformers passes a couple of integration tests, meaning that the original
model and the reimplemented version in 🤗 Transformers have to give the same output up to a precision of 0.001!
Since it is normal that the exact same model written in different libraries can give slightly different outputs
depending on the framework, we accept an error tolerance of 1e-3 (0.001). It is not enough for the model to give
roughly the same output; the outputs have to be almost identical. Therefore, you will certainly compare the
intermediate outputs of the 🤗 Transformers version against the intermediate outputs of the original implementation of
*brand_new_bert* multiple times, in which case an **efficient** debugging environment for the original repository is
absolutely important. Here is some advice to make your debugging environment as efficient as possible.

- Find the best way of debugging intermediate results. Is the original repository written in PyTorch? Then you should
  probably take the time to write a longer script that decomposes the original model into smaller sub-components to
  retrieve intermediate values. Is the original repository written in TensorFlow 1? Then you might have to rely on
  TensorFlow print operations like [tf.print](https://www.tensorflow.org/api_docs/python/tf/print) to output
  intermediate values. Is the original repository written in Jax? Then make sure that the model is **not jitted** when
  running the forward pass, *e.g.* check out [this link](https://github.com/google/jax/issues/196).

- Use the smallest pretrained checkpoint you can find. The smaller the checkpoint, the faster your debug cycle

becomes. It is not efficient if your pretrained model is so big that your forward pass takes more than 10 seconds.

In case only very large checkpoints are available, it might make more sense to create a dummy model in the new

environment with randomly initialized weights and save those weights for comparison with the 🤗 Transformers version

of your model.

- Make sure you are using the easiest way of calling a forward pass in the original repository. Ideally, you want to
  find the function in the original repository that calls **only** a single forward pass. It is often called
  `predict`, `evaluate`, `forward` or `__call__`. You don't want to debug a function that calls `forward`
  multiple times, *e.g.* to generate text, like `autoregressive_sample` or `generate`.

- Try to separate the tokenization from the model's *forward* pass. If the original repository shows examples where
  you have to input a string, then try to find out where in the forward call the string input is changed to input IDs
  and start from this point. This might mean that you have to write a small script yourself or change the
  original code so that you can directly input the IDs instead of an input string.

- Make sure that the model in your debugging setup is **not** in training mode, which often causes the model to yield
  random outputs due to multiple dropout layers in the model. Make sure that the forward pass in your debugging
  environment is **deterministic** so that the dropout layers are not used. Alternatively, use
  *transformers.utils.set_seed* if the old and new implementations are in the same framework.
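
The effect of training mode on determinism can be illustrated with a small PyTorch example. This is a minimal sketch
on a hypothetical toy model, not on *brand_new_bert* itself. It assumes a PyTorch code-base, where `model.eval()`
disables dropout.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical toy model; the dropout layer is what makes training mode non-deterministic.
model = nn.Sequential(nn.Embedding(32, 8), nn.Dropout(p=0.5), nn.Linear(8, 8))
input_ids = torch.tensor([[0, 4, 5, 2, 3, 7, 9]])

# In training mode, dropout samples a new random mask on every forward pass.
model.train()
train_a, train_b = model(input_ids), model(input_ids)

# In evaluation mode, dropout is a no-op, so repeated forward passes agree.
model.eval()
with torch.no_grad():
    eval_a, eval_b = model(input_ids), model(input_ids)

print("training mode outputs equal:", torch.equal(train_a, train_b))
print("eval mode outputs equal:", torch.equal(eval_a, eval_b))
```

Running the same input twice and checking for equality, as done here, is a cheap sanity check to perform on the
original model before recording any reference outputs.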

The following section gives you more specific details/tips on how you can do this for *brand_new_bert*.

### 5.-14. Port BrandNewBert to 🤗 Transformers

Next, you can finally start adding new code to 🤗 Transformers. Go into the clone of your 🤗 Transformers' fork:

```bash

cd transformers

```

In the special case that you are adding a model whose architecture exactly matches the model architecture of an
existing model, you only have to add a conversion script as described in [this section](#write-a-conversion-script).
In this case, you can just re-use the whole model architecture of the already existing model.