modelId

string | author

string | last_modified

timestamp[us, tz=UTC] | downloads

int64 | likes

int64 | library_name

string | tags

list | pipeline_tag

string | createdAt

timestamp[us, tz=UTC] | card

string |

|---|---|---|---|---|---|---|---|---|---|

juancavallotti/t5-small-gec

|

juancavallotti

| 2022-06-05T01:51:04Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-06-05T01:06:00Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: t5-small-gec

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-gec

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unspecified dataset (the Trainer recorded no dataset name).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

elgeish/wav2vec2-large-xlsr-53-arabic

|

elgeish

| 2022-06-04T23:37:05Z | 2,693 | 15 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"wav2vec2",

"automatic-speech-recognition",

"audio",

"speech",

"xlsr-fine-tuning-week",

"hf-asr-leaderboard",

"ar",

"dataset:arabic_speech_corpus",

"dataset:mozilla-foundation/common_voice_6_1",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-03-02T23:29:05Z |

---

language: ar

datasets:

- arabic_speech_corpus

- mozilla-foundation/common_voice_6_1

metrics:

- wer

tags:

- audio

- automatic-speech-recognition

- speech

- xlsr-fine-tuning-week

- hf-asr-leaderboard

license: apache-2.0

model-index:

- name: elgeish-wav2vec2-large-xlsr-53-arabic

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 6.1 (Arabic)

type: mozilla-foundation/common_voice_6_1

config: ar

split: test

args:

language: ar

metrics:

- name: Test WER

type: wer

value: 26.55

- name: Validation WER

type: wer

value: 23.39

---

# Wav2Vec2-Large-XLSR-53-Arabic

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53)

on Arabic using the `train` splits of [Common Voice](https://huggingface.co/datasets/common_voice)

and [Arabic Speech Corpus](https://huggingface.co/datasets/arabic_speech_corpus).

When using this model, make sure that your speech input is sampled at 16 kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch
import torchaudio
from datasets import load_dataset
from lang_trans.arabic import buckwalter
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

dataset = load_dataset("common_voice", "ar", split="test[:10]")

resamplers = {  # all three sampling rates exist in test split
    48000: torchaudio.transforms.Resample(48000, 16000),
    44100: torchaudio.transforms.Resample(44100, 16000),
    32000: torchaudio.transforms.Resample(32000, 16000),
}

def prepare_example(example):
    speech, sampling_rate = torchaudio.load(example["path"])
    example["speech"] = resamplers[sampling_rate](speech).squeeze().numpy()
    return example

dataset = dataset.map(prepare_example)
processor = Wav2Vec2Processor.from_pretrained("elgeish/wav2vec2-large-xlsr-53-arabic")
model = Wav2Vec2ForCTC.from_pretrained("elgeish/wav2vec2-large-xlsr-53-arabic").eval()

def predict(batch):
    inputs = processor(batch["speech"], sampling_rate=16000, return_tensors="pt", padding=True)
    with torch.no_grad():
        predicted = torch.argmax(model(inputs.input_values).logits, dim=-1)
    predicted[predicted == -100] = processor.tokenizer.pad_token_id  # see fine-tuning script
    batch["predicted"] = processor.tokenizer.batch_decode(predicted)
    return batch

dataset = dataset.map(predict, batched=True, batch_size=1, remove_columns=["speech"])

for reference, predicted in zip(dataset["sentence"], dataset["predicted"]):
    print("reference:", reference)
    print("predicted:", buckwalter.untrans(predicted))
    print("--")

```
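The `predict` function above takes a per-frame `argmax` and hands the ids to `batch_decode`. For a CTC model, that decoding step conventionally merges runs of identical ids and then drops the blank (padding) token. The following is a minimal sketch of that idea only; the toy vocabulary and the function name `ctc_greedy_decode` are illustrative assumptions, not the model's actual vocabulary or the tokenizer's implementation.

```python
from itertools import groupby


def ctc_greedy_decode(frame_ids, id_to_char, blank_id):
    """Collapse a per-frame argmax sequence into text, CTC-style."""
    # Step 1: merge runs of identical ids (one emission per run).
    collapsed = [token_id for token_id, _ in groupby(frame_ids)]
    # Step 2: drop the blank token, which only separates emissions.
    return "".join(id_to_char[i] for i in collapsed if i != blank_id)


# Hypothetical toy vocabulary (NOT the model's real one); id 0 is the blank.
toy_vocab = {0: "<pad>", 1: "q", 2: "l", 3: "m"}
frames = [1, 1, 0, 2, 2, 2, 0, 0, 3]
decoded = ctc_greedy_decode(frames, toy_vocab, blank_id=0)
print(decoded)  # qlm
```

A blank between two identical ids keeps them as separate characters, so `[2, 0, 2]` decodes to `ll` rather than `l`.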

Here's the output:

```

reference: ألديك قلم ؟

predicted: هلديك قالر

--

reference: ليست هناك مسافة على هذه الأرض أبعد من يوم أمس.

predicted: ليست نالك مسافة على هذه الأرض أبعد من يوم أمس

--

reference: إنك تكبر المشكلة.

predicted: إنك تكبر المشكلة

--

reference: يرغب أن يلتقي بك.

predicted: يرغب أن يلتقي بك

--

reference: إنهم لا يعرفون لماذا حتى.

predicted: إنهم لا يعرفون لماذا حتى

--

reference: سيسعدني مساعدتك أي وقت تحب.

predicted: سيسئدني مساعد سكرأي وقت تحب

--

reference: أَحَبُّ نظريّة علمية إليّ هي أن حلقات زحل مكونة بالكامل من الأمتعة المفقودة.

predicted: أحب ناضريةً علمية إلي هي أنحل قتزح المكونا بالكامل من الأمت عن المفقودة

--

reference: سأشتري له قلماً.

predicted: سأشتري له قلما

--

reference: أين المشكلة ؟

predicted: أين المشكل

--

reference: وَلِلَّهِ يَسْجُدُ مَا فِي السَّمَاوَاتِ وَمَا فِي الْأَرْضِ مِنْ دَابَّةٍ وَالْمَلَائِكَةُ وَهُمْ لَا يَسْتَكْبِرُونَ

predicted: ولله يسجد ما في السماوات وما في الأرض من دابة والملائكة وهم لا يستكبرون

--

```

## Evaluation

The model can be evaluated as follows on the Arabic test data of Common Voice:

```python

import jiwer
import torch
import torchaudio
from datasets import load_dataset
from lang_trans.arabic import buckwalter
from transformers import set_seed, Wav2Vec2ForCTC, Wav2Vec2Processor

set_seed(42)
test_split = load_dataset("common_voice", "ar", split="test")

resamplers = {  # all three sampling rates exist in test split
    48000: torchaudio.transforms.Resample(48000, 16000),
    44100: torchaudio.transforms.Resample(44100, 16000),
    32000: torchaudio.transforms.Resample(32000, 16000),
}

def prepare_example(example):
    speech, sampling_rate = torchaudio.load(example["path"])
    example["speech"] = resamplers[sampling_rate](speech).squeeze().numpy()
    return example

test_split = test_split.map(prepare_example)
processor = Wav2Vec2Processor.from_pretrained("elgeish/wav2vec2-large-xlsr-53-arabic")
model = Wav2Vec2ForCTC.from_pretrained("elgeish/wav2vec2-large-xlsr-53-arabic").to("cuda").eval()

def predict(batch):
    inputs = processor(batch["speech"], sampling_rate=16000, return_tensors="pt", padding=True)
    with torch.no_grad():
        predicted = torch.argmax(model(inputs.input_values.to("cuda")).logits, dim=-1)
    predicted[predicted == -100] = processor.tokenizer.pad_token_id  # see fine-tuning script
    batch["predicted"] = processor.batch_decode(predicted)
    return batch

test_split = test_split.map(predict, batched=True, batch_size=16, remove_columns=["speech"])

transformation = jiwer.Compose([
    # normalize some diacritics, remove punctuation, and replace Persian letters with Arabic ones
    jiwer.SubstituteRegexes({
        r'[auiFNKo\~_،؟»\?;:\-,\.؛«!"]': "", "\u06D6": "",
        r"[\|\{]": "A", "p": "h", "ک": "k", "ی": "y"}),
    # default transformation below
    jiwer.RemoveMultipleSpaces(),
    jiwer.Strip(),
    jiwer.SentencesToListOfWords(),
    jiwer.RemoveEmptyStrings(),
])

metrics = jiwer.compute_measures(
    truth=[buckwalter.trans(s) for s in test_split["sentence"]],  # Buckwalter transliteration
    hypothesis=test_split["predicted"],
    truth_transform=transformation,
    hypothesis_transform=transformation,
)

print(f"WER: {metrics['wer']:.2%}")

```

**Test Result**: 26.55%
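For readers unfamiliar with the metric, word error rate is the word-level edit distance between reference and hypothesis, divided by the number of reference words. The sketch below computes it for a single sentence pair in plain Python; the function name `word_error_rate` and the English example are illustrative assumptions, and this is not how `jiwer` is implemented internally.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference prefix seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(f"{wer:.2%}")  # 33.33%
```

Because the denominator is the reference length, WER can exceed 100% when the hypothesis contains many extra words.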

## Training

For more details, see [Fine-Tuning with Arabic Speech Corpus](https://github.com/huggingface/transformers/tree/1c06240e1b3477728129bb58e7b6c7734bb5074e/examples/research_projects/wav2vec2#fine-tuning-with-arabic-speech-corpus).

This model represents Arabic in a format called [Buckwalter transliteration](https://en.wikipedia.org/wiki/Buckwalter_transliteration).

The Buckwalter format only includes ASCII characters, some of which are non-alpha (e.g., `">"` maps to `"أ"`).

The [lang-trans](https://github.com/kariminf/lang-trans) package is used to convert (transliterate) Arabic abjad.
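To make the transliteration concrete, here is a minimal sketch of a character-for-character mapping in both directions. It covers only a small subset of the Buckwalter table, and the function names are illustrative assumptions; it is not the `lang-trans` implementation, which should be used for real data.

```python
# Partial Buckwalter table (a small subset, for illustration only).
BUCKWALTER_TO_ARABIC = {
    ">": "أ", "A": "ا", "b": "ب", "t": "ت", "q": "ق",
    "k": "ك", "l": "ل", "m": "م", "n": "ن", "y": "ي",
}
ARABIC_TO_BUCKWALTER = {ar: bw for bw, ar in BUCKWALTER_TO_ARABIC.items()}


def to_buckwalter(text):
    # Characters outside the partial table (e.g. spaces) pass through unchanged.
    return "".join(ARABIC_TO_BUCKWALTER.get(ch, ch) for ch in text)


def from_buckwalter(text):
    # Inverse direction, with the same pass-through rule.
    return "".join(BUCKWALTER_TO_ARABIC.get(ch, ch) for ch in text)


print(to_buckwalter("قلم"))     # qlm
print(from_buckwalter("ktAb"))  # كتاب
```

Because the mapping is one-to-one per character, transliterating and then reversing returns the original string for any text covered by the table.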

[This script](https://github.com/huggingface/transformers/blob/1c06240e1b3477728129bb58e7b6c7734bb5074e/examples/research_projects/wav2vec2/finetune_large_xlsr_53_arabic_speech_corpus.sh)

was used to first fine-tune [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53)

on the `train` split of the [Arabic Speech Corpus](https://huggingface.co/datasets/arabic_speech_corpus) dataset;

the `test` split was used for model selection; the resulting model at this point is saved as [elgeish/wav2vec2-large-xlsr-53-levantine-arabic](https://huggingface.co/elgeish/wav2vec2-large-xlsr-53-levantine-arabic).

Training was then resumed using the `train` split of the [Common Voice](https://huggingface.co/datasets/common_voice) dataset;

the `validation` split was used for model selection;

training was stopped to meet the deadline of [Fine-Tune-XLSR Week](https://github.com/huggingface/transformers/blob/700229f8a4003c4f71f29275e0874b5ba58cd39d/examples/research_projects/wav2vec2/FINE_TUNE_XLSR_WAV2VEC2.md):

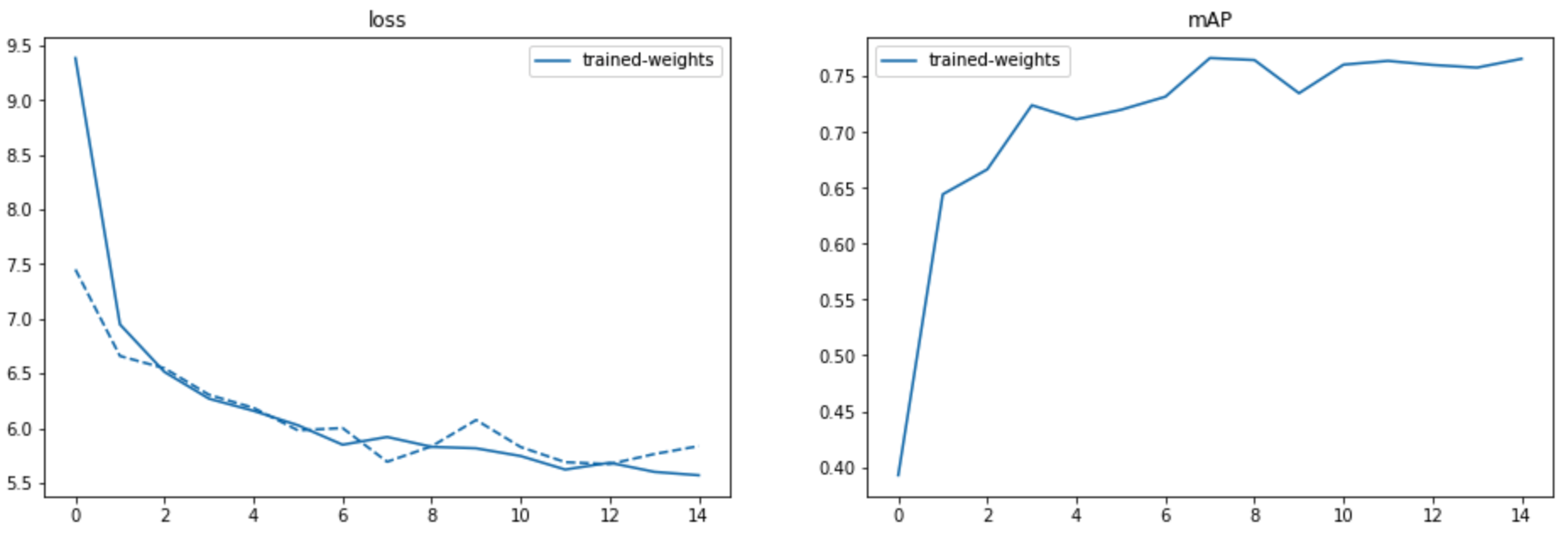

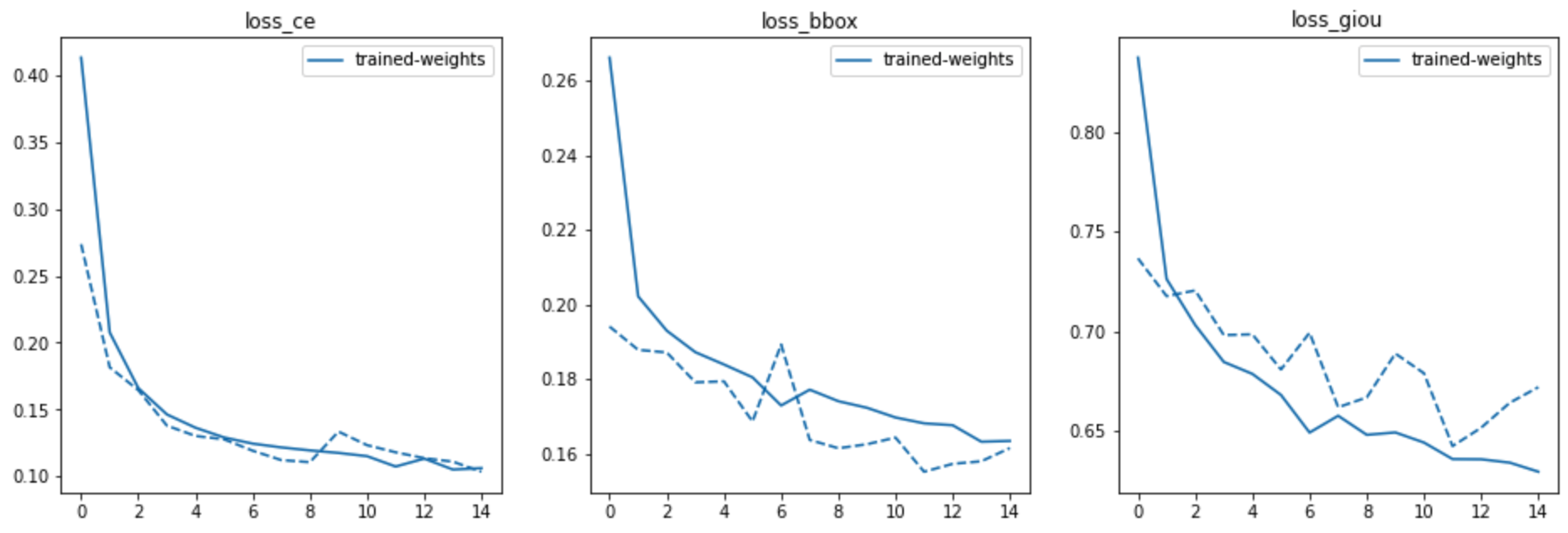

this model is the checkpoint at 100k steps and a validation WER of **23.39%**.

<img src="https://huggingface.co/elgeish/wav2vec2-large-xlsr-53-arabic/raw/main/validation_wer.png" alt="Validation WER" width="100%" />

It's worth noting that validation WER is trending down, indicating the potential of further training (resuming the decaying learning rate at 7e-6).

## Future Work

One area to explore is using `attention_mask` in model input, which is recommended [here](https://huggingface.co/blog/fine-tune-xlsr-wav2vec2).

Another is data augmentation using the datasets that were used to train the models listed [here](https://paperswithcode.com/sota/speech-recognition-on-common-voice-arabic).

|

jianyang/LunarLander-v2

|

jianyang

| 2022-06-04T22:39:46Z | 1 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-04T21:57:52Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 182.82 +/- 79.11

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

nutjung/TEST2ppo-LunarLander-v2-4

|

nutjung

| 2022-06-04T22:08:31Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-04T22:08:07Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 273.94 +/- 14.80

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

huggingtweets/tomcooper26-tomncooper

|

huggingtweets

| 2022-06-04T21:53:08Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-06-04T21:52:33Z |

---

language: en

thumbnail: http://www.huggingtweets.com/tomcooper26-tomncooper/1654379583668/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/378800000155926309/6204f6960618d11ff5a7e2b21ae9db03_400x400.jpeg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/290863981/monkey_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Tom Cooper & Tom Cooper</div>

<div style="text-align: center; font-size: 14px;">@tomcooper26-tomncooper</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Tom Cooper & Tom Cooper.

| Data | Tom Cooper | Tom Cooper |

| --- | --- | --- |

| Tweets downloaded | 2092 | 3084 |

| Retweets | 179 | 687 |

| Short tweets | 223 | 59 |

| Tweets kept | 1690 | 2338 |
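The counts in the table are consistent with a simple filter in which retweets and short tweets are discarded and everything else is kept. The sketch below checks that arithmetic; the function name and the dictionary keys are illustrative assumptions, and this is an inference from the numbers rather than a description of the actual huggingtweets code.

```python
def tweets_kept(downloaded, retweets, short_tweets):
    """Tweets remaining after dropping retweets and short tweets."""
    return downloaded - retweets - short_tweets


# (downloaded, retweets, short tweets) for the two columns of the table.
counts = {"first account": (2092, 179, 223), "second account": (3084, 687, 59)}
kept = {name: tweets_kept(*values) for name, values in counts.items()}
print(kept)  # {'first account': 1690, 'second account': 2338}
```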

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/dndifpco/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @tomcooper26-tomncooper's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/97vltow9) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/97vltow9/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/tomcooper26-tomncooper')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

atoivat/distilbert-base-uncased-finetuned-squad

|

atoivat

| 2022-06-04T21:13:36Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-06-04T18:10:50Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: distilbert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1504

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 1.2086 | 1.0 | 5533 | 1.1565 |

| 0.9515 | 2.0 | 11066 | 1.1225 |

| 0.7478 | 3.0 | 16599 | 1.1504 |
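With `lr_scheduler_type: linear`, the learning rate shrinks linearly over training. The sketch below shows what that would imply at the end of each epoch in the table, assuming no warmup (none is listed in the card) and decay to zero at the final step (16599); both are assumptions, and the function name `linear_decay_lr` is illustrative rather than a library API.

```python
def linear_decay_lr(step, base_lr, total_steps, warmup_steps=0):
    """Linear warmup (optional) followed by linear decay to zero."""
    if step < warmup_steps:
        # Ramp up from 0 to base_lr during warmup.
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Steps at the start of training and at the end of epochs 1, 2 and 3.
schedule = {
    step: linear_decay_lr(step, base_lr=2e-5, total_steps=16599)
    for step in (0, 5533, 11066, 16599)
}
for step, lr in schedule.items():
    print(step, f"{lr:.3e}")
```

Under these assumptions the rate falls to two thirds of its initial value after the first epoch and one third after the second.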

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

yanekyuk/camembert-keyword-discriminator

|

yanekyuk

| 2022-06-04T21:08:51Z | 5 | 1 |

transformers

|

[

"transformers",

"pytorch",

"camembert",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-04T20:23:44Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- accuracy

- f1

model-index:

- name: camembert-keyword-discriminator

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# camembert-keyword-discriminator

This model is a fine-tuned version of [camembert-base](https://huggingface.co/camembert-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2180

- Precision: 0.6646

- Recall: 0.7047

- Accuracy: 0.9344

- F1: 0.6841

- Ent/precision: 0.7185

- Ent/accuracy: 0.8157

- Ent/f1: 0.7640

- Con/precision: 0.5324

- Con/accuracy: 0.4860

- Con/f1: 0.5082
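As a sanity check on these numbers, F1 is the harmonic mean of precision and recall, and the reported overall F1 can be reproduced from the reported precision and recall. The helper name `f1_score` below is an illustrative assumption, not taken from the training code.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


overall_f1 = f1_score(0.6646, 0.7047)
print(round(overall_f1, 4))  # 0.6841
```

The per-class `Ent/f1` and `Con/f1` values are likewise within rounding of the harmonic mean of the corresponding `precision` and `accuracy` figures, which suggests, without confirming, that the per-class "accuracy" plays the role of recall here.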

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | Accuracy | F1 | Ent/precision | Ent/accuracy | Ent/f1 | Con/precision | Con/accuracy | Con/f1 |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:--------:|:------:|:-------------:|:------------:|:------:|:-------------:|:------------:|:------:|

| 0.2016 | 1.0 | 1875 | 0.1910 | 0.5947 | 0.7125 | 0.9243 | 0.6483 | 0.6372 | 0.8809 | 0.7395 | 0.4560 | 0.3806 | 0.4149 |

| 0.1454 | 2.0 | 3750 | 0.1632 | 0.6381 | 0.7056 | 0.9324 | 0.6701 | 0.6887 | 0.8291 | 0.7524 | 0.5064 | 0.4621 | 0.4833 |

| 0.1211 | 3.0 | 5625 | 0.1702 | 0.6703 | 0.6678 | 0.9343 | 0.6690 | 0.7120 | 0.7988 | 0.7529 | 0.5471 | 0.4094 | 0.4684 |

| 0.1021 | 4.0 | 7500 | 0.1745 | 0.6777 | 0.6708 | 0.9351 | 0.6742 | 0.7206 | 0.7956 | 0.7562 | 0.5557 | 0.4248 | 0.4815 |

| 0.0886 | 5.0 | 9375 | 0.1913 | 0.6540 | 0.7184 | 0.9340 | 0.6847 | 0.7022 | 0.8396 | 0.7648 | 0.5288 | 0.4795 | 0.5030 |

| 0.0781 | 6.0 | 11250 | 0.2021 | 0.6605 | 0.7132 | 0.9344 | 0.6858 | 0.7139 | 0.8258 | 0.7658 | 0.5293 | 0.4913 | 0.5096 |

| 0.0686 | 7.0 | 13125 | 0.2127 | 0.6539 | 0.7132 | 0.9337 | 0.6822 | 0.7170 | 0.8172 | 0.7638 | 0.5112 | 0.5083 | 0.5098 |

| 0.0667 | 8.0 | 15000 | 0.2180 | 0.6646 | 0.7047 | 0.9344 | 0.6841 | 0.7185 | 0.8157 | 0.7640 | 0.5324 | 0.4860 | 0.5082 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

edwinhung/bird_classifier

|

edwinhung

| 2022-06-04T20:52:15Z | 0 | 0 |

fastai

|

[

"fastai",

"region:us"

] | null | 2022-06-04T19:43:58Z |

---

tags:

- fastai

---

# Model card

## Model description

A neural network model trained with fastai and timm to classify 400 bird species in an image.

## Intended uses & limitations

This classifier predicts the bird species shown in a given image. The input image should contain exactly one bird. Because this is a multi-class classifier, it outputs a species even when the image contains no bird.

## Training and evaluation data

The pre-trained backbone is EfficientNet from the timm library, specifically *efficientnet_b3a*. The training data is the Kaggle dataset [BIRDS 400 - SPECIES IMAGE CLASSIFICATION](https://www.kaggle.com/datasets/gpiosenka/100-bird-species). Accuracy on the test set provided with that dataset is 99.4%. This is likely not representative of real-world performance: the dataset provider notes that the test images were hand-picked as the best ones.

|

kingabzpro/q-FrozenLake-v1-4x4-noSlippery

|

kingabzpro

| 2022-06-04T18:51:21Z | 0 | 1 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-04T18:51:10Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="kingabzpro/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```
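The snippet above calls `evaluate_agent` without defining it. The following is a minimal sketch of what such a helper might do: run the greedy policy from the Q-table for a number of episodes and report the mean and standard deviation of the episode rewards. `ToyChainEnv` is a hypothetical stand-in with a simplified `reset`/`step` interface; real Gym environments return different tuples depending on the version, so this is not a drop-in replacement.

```python
from statistics import mean, pstdev


class ToyChainEnv:
    """Hypothetical 3-state chain: action 1 moves right; reaching state 2 pays 1."""

    def reset(self, seed=None):
        self.state = 0
        return self.state

    def step(self, action):
        if action == 1:
            self.state = min(2, self.state + 1)
        done = self.state == 2
        return self.state, (1.0 if done else 0.0), done


def evaluate_agent(env, max_steps, n_eval_episodes, qtable, seed=None):
    """Run the greedy policy and return (mean_reward, std_reward)."""
    episode_rewards = []
    for _ in range(n_eval_episodes):
        state = env.reset(seed=seed)
        total = 0.0
        for _ in range(max_steps):
            # Greedy policy: pick the highest-valued action for this state.
            action = max(range(len(qtable[state])), key=lambda a: qtable[state][a])
            state, reward, done = env.step(action)
            total += reward
            if done:
                break
        episode_rewards.append(total)
    return mean(episode_rewards), pstdev(episode_rewards)


# Toy Q-table: rows are states, columns are actions (0 = stay, 1 = right).
qtable = [[0.0, 0.9], [0.0, 1.0], [0.0, 0.0]]
mean_reward, std_reward = evaluate_agent(
    ToyChainEnv(), max_steps=10, n_eval_episodes=5, qtable=qtable
)
print(f"{mean_reward:.2f} +/- {std_reward:.2f}")  # 1.00 +/- 0.00
```

In a deterministic environment every greedy episode is identical, which is why a standard deviation of 0.00 is expected.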

|

etmckinley/BOTHALTEROUT

|

etmckinley

| 2022-06-04T18:26:24Z | 4 | 2 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-06-02T18:32:15Z |

---

license: mit

tags:

model-index:

- name: BERFALTER

results: []

widget:

- text: "Gregg Berhalter"

- text: "The USMNT won't win the World Cup"

- text: "The Soccer Media in this country"

- text: "Ball don't"

- text: "This lineup"

---

# BOTHALTEROUT

This model is a fine-tuned version of [GPT-2](https://huggingface.co/gpt2), trained on 21,832 tweets from 12 Twitter users with very strong opinions about the United States Men's National Team.

## Limitations and bias

The model has all [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

Additionally, BOTHALTEROUT can create some problematic results based upon the tweets used to generate the model.

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001372

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

## About

*Built by [Eliot McKinley](https://twitter.com/etmckinley) based upon [HuggingTweets](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb) by Boris Dayma*

|

mishtert/iec

|

mishtert

| 2022-06-04T18:01:26Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"layoutlmv2",

"token-classification",

"generated_from_trainer",

"dataset:funsd",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-04T17:22:57Z |

---

license: cc-by-sa-4.0

tags:

- generated_from_trainer

datasets:

- funsd

model_index:

- name: layoutlmv2-finetuned-funsd

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: funsd

type: funsd

args: funsd

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# layoutlmv2-finetuned-funsd

This model is a fine-tuned version of [microsoft/layoutlmv2-base-uncased](https://huggingface.co/microsoft/layoutlmv2-base-uncased) on the funsd dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 1000

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.9.0.dev0

- Pytorch 1.8.0+cu101

- Datasets 1.9.0

- Tokenizers 0.10.3

|

mcditoos/q-Taxi-v3

|

mcditoos

| 2022-06-04T17:14:13Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-04T17:14:07Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

model = load_from_hub(repo_id="mcditoos/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

mcditoos/q-FrozenLake-v1-4x4-noSlippery

|

mcditoos

| 2022-06-04T17:09:47Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-04T17:09:40Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="mcditoos/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

Umer4/UrduAudio2Text

|

Umer4

| 2022-06-04T16:17:45Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-06-02T17:52:38Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: UrduAudio2Text

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# UrduAudio2Text

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4978

- Wer: 0.8376

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 5.5558 | 15.98 | 400 | 1.4978 | 0.8376 |
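Two of the hyperparameters above interact with this result. The effective batch size is the per-device batch size times the gradient accumulation steps, and the only logged checkpoint (step 400) falls inside the 500-step warmup window. The sketch below works through both, assuming a single device and a linear warmup ramp from zero; the function name `warmup_lr` is illustrative and covers only the warmup phase.

```python
per_device_batch = 16
grad_accum_steps = 2
# One optimizer step sees this many examples (single device assumed).
effective_batch = per_device_batch * grad_accum_steps


def warmup_lr(step, base_lr, warmup_steps):
    """Learning rate during a linear warmup, capped at base_lr afterwards."""
    return base_lr * min(1.0, step / warmup_steps)


lr_at_checkpoint = warmup_lr(400, base_lr=3e-4, warmup_steps=500)
print(effective_batch)            # 32
print(f"{lr_at_checkpoint:.1e}")  # 2.4e-04
```

Under these assumptions the learning rate had not yet reached its configured peak when the reported loss and WER were measured.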

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 2.2.2

- Tokenizers 0.10.3

|

huggingtweets/orc_nft

|

huggingtweets

| 2022-06-04T16:13:13Z | 3 | 1 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-06-04T16:12:40Z |

---

language: en

thumbnail: http://www.huggingtweets.com/orc_nft/1654359188989/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1510438749154549764/sar63AXD_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">ORC.A ⍬</div>

<div style="text-align: center; font-size: 14px;">@orc_nft</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from ORC.A ⍬.

| Data | ORC.A ⍬ |

| --- | --- |

| Tweets downloaded | 1675 |

| Retweets | 113 |

| Short tweets | 544 |

| Tweets kept | 1018 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/wwc37qkh/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.
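
The counts in the table are consistent with a simple filter in which retweets and short tweets are dropped and everything else is kept. A quick check, assuming the two dropped groups do not overlap (the card does not state this explicitly):

```python
# Counts copied from the training-data table above.
downloaded = 1675
retweets = 113
short_tweets = 544

# Assumption: retweets and short tweets are disjoint groups, and both are discarded.
kept = downloaded - retweets - short_tweets
print(kept)
```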

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @orc_nft's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/debtzj0e) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/debtzj0e/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/orc_nft')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

yanekyuk/convberturk-keyword-extractor

|

yanekyuk

| 2022-06-04T11:19:51Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"convbert",

"token-classification",

"generated_from_trainer",

"tr",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-04T09:32:23Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- accuracy

- f1

language:

- tr

widget:

- text: "İngiltere'de düzenlenen Avrupa Tekvando ve Para Tekvando Şampiyonası’nda millî tekvandocular 5 altın, 2 gümüş ve 4 bronz olmak üzere 11, millî para tekvandocular ise 4 altın, 3 gümüş ve 1 bronz olmak üzere 8 madalya kazanarak takım halinde Avrupa şampiyonu oldu."

- text: "Füme somon dedik ama aslında lox salamuralanmış somon anlamına geliyor, füme etme opsiyonel. Lox bagel, 1930'larda Eggs Benedict furyasında New Yorklu Yahudi cemaati tarafından koşer bir alternatif olarak çıkan bir lezzet. Günümüzde benim hangover yüreğim dâhil dünyanın birçok yerinde enfes bir kahvaltı sandviçi."

- text: "Türkiye'de son aylarda sıklıkla tartışılan konut satışı karşılığında yabancılara vatandaşlık verilmesi konusunu beyin göçü kapsamında ele almak mümkün. Daha önce 250 bin dolar olan vatandaşlık bedeli yükselen tepkiler üzerine 400 bin dolara çıkarılmıştı. Türkiye'den göç eden iyi eğitimli kişilerin , gittikleri ülkelerde 250 bin dolar tutarında yabancı yatırıma denk olduğu göz önüne alındığında nitelikli insan gücünün yabancılara konut karşılığında satılan vatandaşlık bedelin eş olduğunu görüyoruz. Yurt dışına giden her bir vatandaşın yüksek teknolojili katma değer üreten sektörlere yapacağı katkılar göz önünde bulundurulduğunda bu açığın inşaat sektörüyle kapatıldığını da görüyoruz. Beyin göçü konusunda sadece ekonomik perspektiften bakıldığında bile kısa vadeli döviz kaynağı yaratmak için kullanılan vatandaşlık satışı yerine beyin göçünü önleyecek önlemler alınmasının ülkemize çok daha faydalı olacağı sonucunu çıkarıyoruz."

- text: "Türkiye’de resmî verilere göre, 15 ve daha yukarı yaştaki kişilerde mevsim etkisinden arındırılmış işsiz sayısı, bu yılın ilk çeyreğinde bir önceki çeyreğe göre 50 bin kişi artarak 3 milyon 845 bin kişi oldu. Mevsim etkisinden arındırılmış işsizlik oranı ise 0,1 puanlık artışla %11,4 seviyesinde gerçekleşti. İşsizlik oranı, ilk çeyrekte geçen yılın aynı çeyreğine göre 1,7 puan azaldı."

- text: "Boeing’in insansız uzay aracı Starliner, birtakım sorunlara rağmen Uluslararası Uzay İstasyonuna (ISS) ulaşarak ilk kez başarılı bir şekilde kenetlendi. Aracın ISS’te beş gün kalmasını takiben sorunsuz bir şekilde New Mexico’ya inmesi halinde Boeing, sonbaharda astronotları yörüngeye göndermek için Starliner’ı kullanabilir.\n\nNeden önemli? NASA’nın personal aracı üretmeyi durdurmasından kaynaklı olarak görevli astronotlar ve kozmonotlar, ISS’te Rusya’nın ürettiği uzay araçları ile taşınıyordu. Starliner’ın kendini kanıtlaması ise bu konuda Rusya’ya olan bağımlılığın potansiyel olarak ortadan kalkabileceği anlamına geliyor."

model-index:

- name: convberturk-keyword-extractor

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# convberturk-keyword-extractor

This model is a fine-tuned version of [dbmdz/convbert-base-turkish-cased](https://huggingface.co/dbmdz/convbert-base-turkish-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4098

- Precision: 0.6742

- Recall: 0.7035

- Accuracy: 0.9175

- F1: 0.6886
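
The reported F1 can be cross-checked against the reported precision and recall, since F1 is their harmonic mean. The sketch below assumes all three figures are aggregated the same way (the card does not say how they were averaged), and the helper name `f1_score` is purely illustrative:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Evaluation-set values reported above (already rounded to four decimals).
reported_precision, reported_recall, reported_f1 = 0.6742, 0.7035, 0.6886

recomputed = f1_score(reported_precision, reported_recall)
# The inputs are rounded, so agreement is only expected to about three decimals.
print(f"recomputed F1 = {recomputed:.4f}, reported F1 = {reported_f1:.4f}")
```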

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

- mixed_precision_training: Native AMP
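
With `lr_scheduler_type: linear`, the learning rate falls linearly from its initial value to zero over the course of training. The following is a minimal sketch of that schedule, assuming no warmup steps (none are listed above) and taking the 15000 total steps from this card's training results. The function name `linear_lr` is hypothetical, not a library call:

```python
def linear_lr(step: int, total_steps: int, base_lr: float, warmup_steps: int = 0) -> float:
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)

BASE_LR = 2e-05      # learning_rate from the list above
TOTAL_STEPS = 15000  # final step reported in the training results (8 epochs x 1875 steps)

# Print the rate at the start, the midpoint, and the end of training.
for step in (0, 7500, 15000):
    print(step, linear_lr(step, TOTAL_STEPS, BASE_LR))
```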

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | Accuracy | F1 |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:--------:|:------:|

| 0.174 | 1.0 | 1875 | 0.1920 | 0.6546 | 0.6869 | 0.9184 | 0.6704 |

| 0.1253 | 2.0 | 3750 | 0.2030 | 0.6527 | 0.7317 | 0.9179 | 0.6900 |

| 0.091 | 3.0 | 5625 | 0.2517 | 0.6499 | 0.7473 | 0.9163 | 0.6952 |

| 0.0684 | 4.0 | 7500 | 0.2828 | 0.6633 | 0.7270 | 0.9167 | 0.6937 |

| 0.0536 | 5.0 | 9375 | 0.3307 | 0.6706 | 0.7194 | 0.9180 | 0.6942 |

| 0.0384 | 6.0 | 11250 | 0.3669 | 0.6655 | 0.7161 | 0.9157 | 0.6898 |

| 0.0316 | 7.0 | 13125 | 0.3870 | 0.6792 | 0.7002 | 0.9176 | 0.6895 |

| 0.0261 | 8.0 | 15000 | 0.4098 | 0.6742 | 0.7035 | 0.9175 | 0.6886 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

kaniku/xlm-roberta-large-indonesian-NER-finetuned-ner

|

kaniku

| 2022-06-04T04:54:01Z | 25 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-04T02:44:11Z |

---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: xlm-roberta-large-indonesian-NER-finetuned-ner

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-large-indonesian-NER-finetuned-ner

This model is a fine-tuned version of [cahya/xlm-roberta-large-indonesian-NER](https://huggingface.co/cahya/xlm-roberta-large-indonesian-NER) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0489

- Precision: 0.9254

- Recall: 0.9394

- F1: 0.9324

- Accuracy: 0.9851

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0496 | 1.0 | 1767 | 0.0489 | 0.9254 | 0.9394 | 0.9324 | 0.9851 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

LinaR/Prediccion_titulos

|

LinaR

| 2022-06-04T04:44:50Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"t5",

"text2text-generation",

"generated_from_keras_callback",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-06-04T03:33:36Z |

---

tags:

- generated_from_keras_callback

model-index:

- name: Prediccion_titulos

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Prediccion_titulos

This model predicts news headlines.

## Model description

This model was trained with a T5 Transformer on a Spanish-language dataset.

## Intended uses & limitations

More information needed

## Training and evaluation data

The data comes from the following Kaggle dataset, which was extracted from the websites of Spanish newspapers: https://www.kaggle.com/datasets/josemamuiz/noticias-laraznpblico

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.19.2

- TensorFlow 2.8.2

- Datasets 2.2.2

- Tokenizers 0.12.1

|

ssantanag/pasajes_de_la_biblia

|

ssantanag

| 2022-06-04T04:32:36Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"t5",

"text2text-generation",

"generated_from_keras_callback",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-06-04T03:56:29Z |

---

tags:

- generated_from_keras_callback

model-index:

- name: pasajes_de_la_biblia

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# pasajes_de_la_biblia

This model was trained on a dataset of Bible verses published on Kaggle, available at https://www.kaggle.com/datasets/camesruiz/biblia-ntv-spanish-bible-ntv.

## Training and evaluation data

The data was split as follows:

- Training set: 58.20%

- Validation set: 9.65%

- Test set: 32.15%

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.19.2

- TensorFlow 2.8.2

- Datasets 2.2.2

- Tokenizers 0.12.1

|

nbroad/splinter-base-squad2

|

nbroad

| 2022-06-04T03:47:06Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"splinter",

"question-answering",

"generated_from_trainer",

"dataset:squad_v2",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-06-04T01:30:38Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad_v2

model-index:

- name: splinter-base-squad2_3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# splinter-base-squad2_3

This model is a fine-tuned version of [tau/splinter-base-qass](https://huggingface.co/tau/splinter-base-qass) on the squad_v2 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 64

- eval_batch_size: 256

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.20.0.dev0

- Pytorch 1.11.0a0+17540c5

- Datasets 2.2.2

- Tokenizers 0.12.1

|

send-it/q-Taxi-v3

|

send-it

| 2022-06-04T03:09:16Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-04T03:08:56Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.50 +/- 2.76

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

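import gym

# `load_from_hub` and `evaluate_agent` are helper functions that this card does not define; they must be defined or imported first.

model = load_from_hub(repo_id="send-it/q-Taxi-v3", filename="q-learning.pkl")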

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```
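
The card does not define `evaluate_agent`. As a rough sketch of what greedy evaluation of a Q-table involves, the example below uses a hypothetical toy environment (`ChainEnv`) in place of Taxi-v3 and a simplified `evaluate_agent` that takes a single optional seed. Both are assumptions for illustration, not the helpers used to produce this model:

```python
import statistics

class ChainEnv:
    """Hypothetical toy environment: walk right along a short chain to reach the goal."""

    def __init__(self, n_states: int = 4):
        self.n_states = n_states
        self.state = 0

    def reset(self, seed=None):
        # The toy environment is deterministic, so the seed is accepted but unused.
        self.state = 0
        return self.state

    def step(self, action: int):
        # Action 0 moves left, action 1 moves right; positions are clamped to the chain.
        move = 1 if action == 1 else -1
        self.state = min(self.n_states - 1, max(0, self.state + move))
        done = self.state == self.n_states - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done, {}

def evaluate_agent(env, max_steps, n_eval_episodes, qtable, seed=None):
    """Run greedy episodes and return (mean, standard deviation) of the episode returns."""
    episode_returns = []
    for _ in range(n_eval_episodes):
        state = env.reset(seed=seed)
        total = 0.0
        for _ in range(max_steps):
            # Greedy policy: pick the action with the highest Q-value in this state.
            action = max(range(len(qtable[state])), key=lambda a: qtable[state][a])
            state, reward, done, _ = env.step(action)
            total += reward
            if done:
                break
        episode_returns.append(total)
    return statistics.mean(episode_returns), statistics.pstdev(episode_returns)

# A hand-written Q-table that prefers "right" (action 1) in every state.
qtable = [[0.0, 1.0] for _ in range(4)]
mean_reward, std_reward = evaluate_agent(ChainEnv(), max_steps=10, n_eval_episodes=5, qtable=qtable)
print(f"mean_reward = {mean_reward:.2f} +/- {std_reward:.2f}")
```

The `mean_reward` entry in the card metadata has the same "mean +/- standard deviation" shape, computed over the evaluation episodes.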

|

send-it/q-FrozenLake-v1-4x4-noSlippery

|

send-it

| 2022-06-04T03:07:59Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-04T03:07:51Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

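import gym

# `load_from_hub` and `evaluate_agent` are helper functions that this card does not define; they must be defined or imported first.

model = load_from_hub(repo_id="send-it/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")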

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

baru98/bert-base-cased-finetuned-squad

|

baru98

| 2022-06-04T02:53:28Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-06-04T01:42:22Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: bert-base-cased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-finetuned-squad

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 5.4212

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 7 | 5.7012 |

| No log | 2.0 | 14 | 5.5021 |

| No log | 3.0 | 21 | 5.4212 |
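
The table shows only 7 optimizer steps per epoch. Combined with `train_batch_size: 16`, this bounds the number of training samples per epoch, which suggests the run used a small subset rather than the full squad training split. The sketch below assumes a single device, no gradient accumulation, and a final partial batch that is kept. None of these is stated in the card, and the helper name is hypothetical:

```python
def sample_count_bounds(steps_per_epoch: int, batch_size: int):
    """Smallest and largest sample counts that yield this many steps per epoch.

    Assumes one device, no gradient accumulation, and a final partial batch that is kept.
    """
    smallest = (steps_per_epoch - 1) * batch_size + 1
    largest = steps_per_epoch * batch_size
    return smallest, largest

# 7 steps per epoch from the table above, train_batch_size 16 from the hyperparameters.
low, high = sample_count_bounds(steps_per_epoch=7, batch_size=16)
print(f"between {low} and {high} training samples per epoch")
```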

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

jgriffi/xlm-roberta-base-finetuned-panx-all

|

jgriffi

| 2022-06-04T01:24:48Z | 7 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-04T00:52:21Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-all

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-all

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1448

- F1: 0.8881

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 12

- eval_batch_size: 12

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.3029 | 1.0 | 1669 | 0.2075 | 0.7971 |

| 0.164 | 2.0 | 3338 | 0.1612 | 0.8680 |

| 0.1025 | 3.0 | 5007 | 0.1448 | 0.8881 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

jgriffi/xlm-roberta-base-finetuned-panx-de-fr

|

jgriffi

| 2022-06-03T23:42:57Z | 7 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-06-03T23:13:02Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de-fr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1774

- F1: 0.8594

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 12

- eval_batch_size: 12

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.3029 | 1.0 | 1430 | 0.1884 | 0.8237 |

| 0.1573 | 2.0 | 2860 | 0.1770 | 0.8473 |

| 0.0959 | 3.0 | 4290 | 0.1774 | 0.8594 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

StanKarz/q-Taxi-v3

|

StanKarz

| 2022-06-03T22:17:14Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-06-03T22:17:03Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

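import gym

# `load_from_hub` and `evaluate_agent` are helper functions that this card does not define; they must be defined or imported first.

model = load_from_hub(repo_id="Sicko-Code/q-Taxi-v3", filename="q-learning.pkl")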

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

thamaine/distilbert-base-cased

|

thamaine

| 2022-06-03T22:11:35Z | 0 | 0 |

keras

|

[

"keras",

"tf-keras",

"region:us"

] | null | 2022-05-23T06:07:23Z |

---

library_name: keras

---

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': 0.01, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

- training_precision: mixed_float16

## Training Metrics

| Epochs | Train Loss | Validation Loss |

|--- |--- |--- |

| 1| 5.965| 5.951|

## Model Plot

<details>

<summary>View Model Plot</summary>

</details>

|

nboudad/Maghriberta

|

nboudad

| 2022-06-03T21:52:55Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-06-03T18:51:51Z |

---

widget:

- text: "جاب ليا <mask> ."

example_title: "example1"

- text: "مشيت نجيب <mask> فالفرماسيان ."

example_title: "example2"

---

|

ulysses-camara/legal-bert-pt-br

|

ulysses-camara

| 2022-06-03T20:20:18Z | 9 | 4 |

sentence-transformers

|

[

"sentence-transformers",

"pt",

"license:mit",

"region:us"

] | null | 2022-05-31T13:30:11Z |

---

language: pt

license: mit

tags:

- sentence-transformers

---

# LegalBERTPT-br

LegalBERTPT-br is a sentence embedding model trained with SimCSE, a contrastive learning framework, on top of the Portuguese pre-trained language model [BERTimbau](https://huggingface.co/neuralmind/bert-base-portuguese-cased).
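
To illustrate the contrastive objective behind SimCSE, the sketch below computes an in-batch contrastive (InfoNCE) loss over cosine similarities: each sentence embedding should be closer to its own second view than to the views of the other sentences in the batch. The 2-D vectors are made up for illustration and the temperature of 0.05 is an assumption; the card does not report the settings actually used.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def contrastive_loss(anchors, positives, temperature=0.05):
    """Mean InfoNCE loss: anchors[i] should match positives[i] among all positives[j]."""
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [cosine(anchor, p) / temperature for p in positives]
        # Log-sum-exp with the maximum subtracted for numerical stability.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)

# Hypothetical 2-D embeddings: two views of the same two sentences.
view_a = [[1.0, 0.0], [0.0, 1.0]]
view_b = [[0.9, 0.1], [0.1, 0.9]]

aligned = contrastive_loss(view_a, view_b)
mismatched = contrastive_loss(view_a, list(reversed(view_b)))
print(f"aligned pairs: {aligned:.4f}, mismatched pairs: {mismatched:.4f}")
```

The loss is small when each embedding is paired with its own second view and large when the pairs are swapped, which is the signal that pulls matching views together during training.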

# Corpora

- From [this site](https://www2.camara.leg.br/transparencia/servicos-ao-cidadao/participacao-popular), we used the column `Conteudo`, which contains 215,713 comments. We removed the comments from PL 3723/2019, PEC 471/2005, and Hashtag Corpus to avoid bias.

- From [this site](https://www2.camara.leg.br/transparencia/servicos-ao-cidadao/participacao-popular), we also used 147,008 bills. From these bills, we used the summary field `txtEmenta` and the core text field `txtExplicacaoEmenta`.

- From Political Speeches, we used 462,831 texts, specifically the columns `sumario`, `textodiscurso`, and `indexacao`.

These corpora were segmented into sentences and concatenated, producing 2,307,426 sentences.

# Citing and Authors

If you find this model helpful, feel free to cite our publication [Evaluating Topic Models in Portuguese Political Comments About Bills from Brazil’s Chamber of Deputies](https://link.springer.com/chapter/10.1007/978-3-030-91699-2_8):

```bibtex

@inproceedings{bracis,

author = {Nádia Silva and Marília Silva and Fabíola Pereira and João Tarrega and João Beinotti and Márcio Fonseca and Francisco Andrade and André Carvalho},

title = {Evaluating Topic Models in Portuguese Political Comments About Bills from Brazil’s Chamber of Deputies},

booktitle = {Anais da X Brazilian Conference on Intelligent Systems},

location = {Online},

year = {2021},

keywords = {},

issn = {0000-0000},

publisher = {SBC},

address = {Porto Alegre, RS, Brasil},

url = {https://sol.sbc.org.br/index.php/bracis/article/view/19061}

}

```

|

haritzpuerto/distilbert-squad

|

haritzpuerto

| 2022-06-03T20:08:44Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"question-answering",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-06-03T20:04:42Z |

TrainOutput(global_step=5475, training_loss=1.7323438837756848, metrics={'train_runtime': 4630.6634, 'train_samples_per_second': 18.917, 'train_steps_per_second': 1.182, 'total_flos': 1.1445080909703168e+16, 'train_loss': 1.7323438837756848, 'epoch': 1.0})

|

mmillet/rubert-tiny2_finetuned_emotion_experiment

|

mmillet

| 2022-06-03T20:03:37Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-05-19T16:22:22Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: rubert-tiny2_finetuned_emotion_experiment

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# rubert-tiny2_finetuned_emotion_experiment

This model is a fine-tuned version of [cointegrated/rubert-tiny2](https://huggingface.co/cointegrated/rubert-tiny2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3947

- Accuracy: 0.8616

- F1: 0.8577

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 15

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.651 | 1.0 | 54 | 0.5689 | 0.8172 | 0.8008 |

| 0.5355 | 2.0 | 108 | 0.4842 | 0.8486 | 0.8349 |

| 0.4561 | 3.0 | 162 | 0.4436 | 0.8590 | 0.8509 |

| 0.4133 | 4.0 | 216 | 0.4203 | 0.8590 | 0.8528 |

| 0.3709 | 5.0 | 270 | 0.4071 | 0.8564 | 0.8515 |

| 0.3346 | 6.0 | 324 | 0.3980 | 0.8564 | 0.8529 |

| 0.3153 | 7.0 | 378 | 0.3985 | 0.8590 | 0.8565 |

| 0.302 | 8.0 | 432 | 0.3967 | 0.8642 | 0.8619 |

| 0.2774 | 9.0 | 486 | 0.3958 | 0.8616 | 0.8575 |

| 0.2728 | 10.0 | 540 | 0.3959 | 0.8668 | 0.8644 |

| 0.2427 | 11.0 | 594 | 0.3962 | 0.8590 | 0.8550 |

| 0.2425 | 12.0 | 648 | 0.3959 | 0.8642 | 0.8611 |

| 0.2414 | 13.0 | 702 | 0.3959 | 0.8642 | 0.8611 |

| 0.2249 | 14.0 | 756 | 0.3949 | 0.8616 | 0.8582 |

| 0.2391 | 15.0 | 810 | 0.3947 | 0.8616 | 0.8577 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.1

- Tokenizers 0.12.1

|

hananajiyya/mt5-small-summarization

|

hananajiyya

| 2022-06-03T18:09:47Z | 5 | 0 |

transformers

|

[

"transformers",

"tf",

"mt5",

"text2text-generation",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-06-03T00:27:50Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: mt5-small-summarization

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mt5-small-summarization

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 2.9665

- Validation Loss: 2.4241

- Train Rouge1: 23.5645

- Train Rouge2: 8.2413

- Train Rougel: 19.7515

- Train Rougelsum: 19.9204

- Train Gen Len: 19.0

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 2e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Rouge1 | Train Rouge2 | Train Rougel | Train Rougelsum | Train Gen Len | Epoch |

|:----------:|:---------------:|:------------:|:------------:|:------------:|:---------------:|:-------------:|:-----:|

| 4.7187 | 2.6627 | 19.5921 | 5.9723 | 16.6769 | 16.8456 | 18.955 | 0 |

| 3.1929 | 2.4941 | 21.2334 | 6.9784 | 18.2158 | 18.2062 | 18.99 | 1 |

| 2.9665 | 2.4241 | 23.5645 | 8.2413 | 19.7515 | 19.9204 | 19.0 | 2 |

### Framework versions

- Transformers 4.19.2

- TensorFlow 2.8.2

- Datasets 2.2.2

- Tokenizers 0.12.1

|

huggingtweets/deepleffen

|

huggingtweets

| 2022-06-03T17:34:54Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

thumbnail: http://www.huggingtweets.com/deepleffen/1654277690184/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1241879678455078914/e2EdZIrr_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Deep Leffen Bot</div>

<div style="text-align: center; font-size: 14px;">@deepleffen</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Deep Leffen Bot.

| Data | Deep Leffen Bot |

| --- | --- |

| Tweets downloaded | 589 |

| Retweets | 14 |

| Short tweets | 27 |

| Tweets kept | 548 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1p32tock/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @deepleffen's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/imjjixah) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/imjjixah/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

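```python
from transformers import pipeline

# Load the fine-tuned GPT-2 checkpoint from the Hugging Face Hub.
generator = pipeline('text-generation', model='huggingtweets/deepleffen')

# Generate five continuations of the prompt.
generator("My dream is", num_return_sequences=5)
```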

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

meghazisofiane/opus-mt-en-ar-finetuned-en-to-ar

|

meghazisofiane

| 2022-06-03T17:27:04Z | 16 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"marian",

"text2text-generation",

"generated_from_trainer",

"dataset:un_multi",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-05-31T18:13:33Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- un_multi

metrics:

- bleu

model-index:
- name: opus-mt-en-ar-finetuned-en-to-ar
  results:
  - task:
      name: Sequence-to-sequence Language Modeling
      type: text2text-generation
    dataset:
      name: un_multi
      type: un_multi
      args: ar-en
    metrics:
    - name: Bleu
      type: bleu
      value: 64.6767

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# opus-mt-en-ar-finetuned-en-to-ar

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-en-ar](https://huggingface.co/Helsinki-NLP/opus-mt-en-ar) on the un_multi dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8133

- Bleu: 64.6767

- Gen Len: 17.595

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 16

- mixed_precision_training: Native AMP
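With `lr_scheduler_type: linear`, the learning rate decays linearly from its initial value toward zero over training. The sketch below illustrates that schedule under two assumptions that the card does not confirm: no warmup steps (none are listed) and 800 total optimizer steps (the last step reported in the training results). The function name `linear_lr` is hypothetical and is not part of the Transformers API.

```python
def linear_lr(step: int, base_lr: float = 2e-05, total_steps: int = 800) -> float:
    """Linearly decay the learning rate from base_lr to 0 (assumes zero warmup)."""
    remaining = max(0, total_steps - step)
    return base_lr * remaining / total_steps


# Learning rate at the start, the midpoint, and the end of training.
for step in (0, 400, 800):
    print(step, linear_lr(step))
```

Under these assumptions the rate is halved by step 400 (epoch 8) and reaches zero at step 800.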

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu    | Gen Len |
|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|
| No log        | 1.0   | 50   | 0.7710          | 64.3416 | 17.4    |
| No log        | 2.0   | 100  | 0.7569          | 63.9546 | 17.465  |
| No log        | 3.0   | 150  | 0.7570          | 64.7484 | 17.385  |
| No log        | 4.0   | 200  | 0.7579          | 65.4073 | 17.305  |
| No log        | 5.0   | 250  | 0.7624          | 64.8939 | 17.325  |
| No log        | 6.0   | 300  | 0.7696          | 65.1257 | 17.45   |
| No log        | 7.0   | 350  | 0.7747          | 65.527  | 17.395  |
| No log        | 8.0   | 400  | 0.7791          | 65.1357 | 17.52   |
| No log        | 9.0   | 450  | 0.7900          | 65.3812 | 17.415  |
| 0.3982        | 10.0  | 500  | 0.7925          | 65.7346 | 17.39   |
| 0.3982        | 11.0  | 550  | 0.7951          | 65.1267 | 17.62   |
| 0.3982        | 12.0  | 600  | 0.8040          | 64.6874 | 17.495  |
| 0.3982        | 13.0  | 650  | 0.8069          | 64.7788 | 17.52   |
| 0.3982        | 14.0  | 700  | 0.8105          | 64.6701 | 17.585  |
| 0.3982        | 15.0  | 750  | 0.8120          | 64.7111 | 17.58   |
| 0.3982        | 16.0  | 800  | 0.8133          | 64.6767 | 17.595  |
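The per-epoch numbers allow a direct comparison between checkpoints. The sketch below copies the validation loss and BLEU columns from the training results and reports which epoch is best on each metric. It suggests that the final epoch, whose numbers match the evaluation results reported at the top of this card, is not the best on either metric. The card does not say which checkpoint was actually saved, so this is only an observation about the logged values.

```python
# (epoch, validation loss, BLEU) copied from the training results table.
results = [
    (1, 0.7710, 64.3416), (2, 0.7569, 63.9546), (3, 0.7570, 64.7484),
    (4, 0.7579, 65.4073), (5, 0.7624, 64.8939), (6, 0.7696, 65.1257),
    (7, 0.7747, 65.527), (8, 0.7791, 65.1357), (9, 0.7900, 65.3812),
    (10, 0.7925, 65.7346), (11, 0.7951, 65.1267), (12, 0.8040, 64.6874),
    (13, 0.8069, 64.7788), (14, 0.8105, 64.6701), (15, 0.8120, 64.7111),
    (16, 0.8133, 64.6767),
]

best_loss = min(results, key=lambda row: row[1])  # lowest validation loss
best_bleu = max(results, key=lambda row: row[2])  # highest BLEU
final = results[-1]                               # last logged epoch

print("lowest validation loss:", best_loss)
print("highest BLEU:", best_bleu)
print("final epoch:", final)
```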

### Framework versions

- Transformers 4.19.2

- PyTorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

VictorZhu/results

|

VictorZhu

| 2022-06-03T17:17:57Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification