| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |
|---|---|---|---|---|---|---|---|---|---|

mengmajun/qwen2.5-coder-1.5b-graph-v1 | mengmajun | 2025-05-24T10:34:15Z | 0 | 0 | peft | [

"peft",

"safetensors",

"arxiv:1910.09700",

"region:us"

]

| null | 2025-05-24T10:34:08Z | ---

base_model: unsloth/qwen2.5-coder-1.5b-instruct-bnb-4bit

library_name: peft

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.15.2 |

haihp02/dc21102b-5b49-46b8-960f-20b22e87089d-phase2-adapter | haihp02 | 2025-05-24T10:32:20Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"generated_from_trainer",

"trl",

"sft",

"dpo",

"arxiv:2305.18290",

"base_model:Qwen/Qwen2-1.5B-Instruct",

"base_model:finetune:Qwen/Qwen2-1.5B-Instruct",

"endpoints_compatible",

"region:us"

]

| null | 2025-05-24T10:31:58Z | ---

base_model: Qwen/Qwen2-1.5B-Instruct

library_name: transformers

model_name: dc21102b-5b49-46b8-960f-20b22e87089d-phase2-adapter

tags:

- generated_from_trainer

- trl

- sft

- dpo

licence: license

---

# Model Card for dc21102b-5b49-46b8-960f-20b22e87089d-phase2-adapter

This model is a fine-tuned version of [Qwen/Qwen2-1.5B-Instruct](https://huggingface.co/Qwen/Qwen2-1.5B-Instruct).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="haihp02/dc21102b-5b49-46b8-960f-20b22e87089d-phase2-adapter", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/trunghainguyenhp02/sn56-dpo-train/runs/37g10ik6)

This model was trained with DPO, a method introduced in [Direct Preference Optimization: Your Language Model is Secretly a Reward Model](https://huggingface.co/papers/2305.18290).
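
The DPO objective named above can be sketched for a single preference pair as follows. This is a minimal illustration of the loss from the cited paper, not the TRL implementation; the function name, the argument names, and `beta=0.1` are assumptions, and the log-probabilities in the usage line are made-up numbers.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair of sequence log-probabilities.

    beta=0.1 is an assumed value; the card does not state the one used.
    """
    # How much more the policy prefers the chosen answer than the reference does.
    margin = (policy_chosen_logp - ref_chosen_logp) - (
        policy_rejected_logp - ref_rejected_logp
    )
    z = beta * margin
    # -log(sigmoid(z)), written in a numerically stable form.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


# Hypothetical log-probabilities, for illustration only.
print(dpo_loss(-12.0, -15.0, -13.0, -14.0))  # about 0.598
```

The loss equals `log(2)` when policy and reference agree, and it shrinks as the policy assigns relatively more probability to the chosen answer.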

### Framework versions

- TRL: 0.15.2

- Transformers: 4.51.3

- Pytorch: 2.7.0+cu126

- Datasets: 3.6.0

- Tokenizers: 0.21.1

## Citations

Cite DPO as:

```bibtex

@inproceedings{rafailov2023direct,

title = {{Direct Preference Optimization: Your Language Model is Secretly a Reward Model}},

author = {Rafael Rafailov and Archit Sharma and Eric Mitchell and Christopher D. Manning and Stefano Ermon and Chelsea Finn},

year = 2023,

booktitle = {Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, December 10 - 16, 2023},

url = {http://papers.nips.cc/paper_files/paper/2023/hash/a85b405ed65c6477a4fe8302b5e06ce7-Abstract-Conference.html},

editor = {Alice Oh and Tristan Naumann and Amir Globerson and Kate Saenko and Moritz Hardt and Sergey Levine},

}

```

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

``` |

LinaSad/mcqa_aquarat_friday | LinaSad | 2025-05-24T10:32:18Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"qwen3",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2025-05-24T10:31:04Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

bockhealthbharath/Eira-0.2 | bockhealthbharath | 2025-05-24T10:30:39Z | 10 | 0 | null | [

"safetensors",

"blip",

"biology",

"medical",

"multimodal",

"question-answering",

"healthcare",

"image-text-to-text",

"en",

"base_model:Salesforce/blip-image-captioning-base",

"base_model:finetune:Salesforce/blip-image-captioning-base",

"license:mit",

"region:us"

]

| image-text-to-text | 2025-04-16T20:41:37Z | ---

pipeline_tag: image-text-to-text

license: mit

language:

- en

base_model:

- meta-llama/Llama-2-7b-hf

- Salesforce/blip-image-captioning-base

tags:

- biology

- medical

- multimodal

- question-answering

- healthcare

---

# Model Card for EIRA-0.2

**Bridging Text and Medical Imagery for Accurate Multimodal QA**

This model integrates a Llama‑2 text backbone with a BLIP vision backbone to perform context‑aware question answering over medical images and text.

## Model Details

### Model Description

EIRA‑0.2 is a multimodal model designed to answer free‑form questions about medical images (e.g., radiographs, histology slides) in conjunction with accompanying text. Internally, it uses:

- A **text encoder/decoder** based on **meta‑llama/Llama‑2‑7b‑hf**, fine‑tuned for medical QA.

- A **vision encoder** based on **Salesforce/blip-image-captioning-base** that extracts descriptive features from medical imagery.

- A **fusion module** that cross‑attends between vision features and text embeddings to generate coherent, context‑aware answers.

- **Developed by:** BockBharath

- **Shared by:** Shashidhar Sarvi and Sharvary H H

- **Model type:** Multimodal Sequence‑to‑Sequence QA

- **Language(s):** English

- **License:** MIT

- **Finetuned from:** meta‑llama/Llama‑2‑7b‑hf, Salesforce/blip-image-captioning-base

### Model Sources

- **Repository:** https://github.com/BockBharath/EIRA-0.2

- **Demo:** https://huggingface.co/BockBharath/EIRA-0.2

## Uses

### Direct Use

EIRA‑0.2 can be used out‑of‑the‑box as a Hugging Face `pipeline` for image‑text-to-text question answering. It is intended for:

- Clinical decision support by generating explanations of medical images.

- Educational tools for medical students reviewing imaging cases.

### Downstream Use

- Further fine‑tuning on specialty subdomains (e.g., dermatology, pathology) to improve domain performance.

- Integration into telemedicine platforms to assist remote diagnostics.

### Out-of-Scope Use

- Unsupervised generation of medical advice without expert oversight.

- Non‑medical domains (the model’s vision backbone is specialized on medical imaging).

## Bias, Risks, and Limitations

EIRA‑0.2 was trained on a curated set of medical textbooks and annotated imaging cases; it may underperform on rare pathologies or demographic groups under‑represented in the training data. Hallucination risk exists if the image context is ambiguous or incomplete.

### Recommendations

- Always validate model outputs with a qualified medical professional.

- Use in conjunction with structured reporting tools to mitigate hallucinations.

## How to Get Started with the Model

```python

from transformers import pipeline

# Load the multimodal QA pipeline

eira = pipeline(

task="image-text-to-text",

model="BockBharath/EIRA-0.2",

device=0 # set to -1 for CPU

)

# Example inputs

image_path = "chest_xray.png"

question = "What abnormality is visible in the left lung?"

# Run inference

answer = eira({

"image": image_path,

"text": question

})

print("Answer:", answer[0]["generated_text"])

```

**Input shapes:**

- `image`: file path or PIL.Image of variable size (automatically resized to 224×224).

- `text`: string question.

**Output:** List of dicts with key `"generated_text"` containing the answer string.

## Training Details

### Training Data

- **Sources:** 500+ medical imaging cases (X‑rays, CT, MRI) paired with expert Q&A, and 100 clinical chapters from open‑access medical textbooks.

- **Preprocessing:**

- Images resized to 224×224; normalized to ImageNet statistics.

- Text tokenized with Llama tokenizer, max length 512 tokens.
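
The image normalization step listed above can be sketched for a single pixel. The mean and standard deviation are the commonly used ImageNet channel statistics for inputs scaled to [0, 1]; the card does not list the exact values it used, so treat them and the helper name `normalize_pixel` as assumptions.

```python
# Commonly used ImageNet channel statistics (assumed; not listed in the card).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb):
    """Map one 8-bit RGB pixel to normalized per-channel floats."""
    return tuple(
        (channel / 255.0 - mean) / std
        for channel, mean, std in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
    )


# A mid-gray pixel; after resizing, every pixel of the 224x224 image
# would go through the same per-channel transform.
print(normalize_pixel((128, 128, 128)))
```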

### Training Procedure

- Mixed‑precision (fp16) fine‑tuning.

- **Hardware:** Single NVIDIA T4 GPU on Kaggle.

- **Batch size:** 16 (per GPU)

- **Learning rate:** 3e‑5 with linear warmup over 500 steps.

- **Epochs:** 5

- **Total time:** ~48 hours
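
The learning-rate schedule described above can be sketched as a plain function. The peak rate and warmup length come from the list; holding the rate constant after warmup is an assumption, since the card does not say what happens after step 500.

```python
PEAK_LR = 3e-5      # from the card
WARMUP_STEPS = 500  # from the card


def learning_rate(step: int) -> float:
    """Linear warmup from 0 to PEAK_LR, then constant (assumed behavior)."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    return PEAK_LR


for step in (0, 250, 500, 1000):
    print(step, learning_rate(step))
```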

## Evaluation

### Testing Data, Factors & Metrics

- **Test set:** 100 unseen imaging cases with 3 expert‑provided QA pairs each.

- **Metrics:**

- **Exact Match (EM)** on key findings: 72.4%

- **BLEU‑4** for answer fluency: 0.38

- **ROUGE‑L** for content overlap: 0.46

### Results

| Metric | Score |

|--------------|--------|

| Exact Match | 72.4% |

| BLEU‑4 | 0.38 |

| ROUGE‑L | 0.46 |
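
For readers unfamiliar with Exact Match, one way such a score could be computed is sketched below. The card does not describe its scoring script, so the normalization rules (lowercasing, stripping punctuation, collapsing whitespace) and the function names are assumptions, and the example strings are invented.

```python
import string


def normalize(text: str) -> str:
    """Lowercase, drop ASCII punctuation, and collapse whitespace."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def exact_match(predictions, references) -> float:
    """Fraction of predictions equal to their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


# Invented example answers, for illustration only.
preds = ["Left lower lobe opacity.", "No fracture"]
refs = ["left lower lobe opacity", "Hairline fracture"]
print(exact_match(preds, refs))  # 0.5
```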

#### Subgroup Analysis

Performance on chest X‑rays vs. histology slides:

- **Chest X‑ray EM:** 75.1%

- **Histology EM:** 68.0%

## Environmental Impact

- **Hardware Type:** NVIDIA T4 GPU

- **Training Hours:** ~48

- **Compute Region:** us‑central1

- **Estimated CO₂eq:** ~6 kg (using ML CO₂ impact calculator)

## Technical Specifications

### Model Architecture and Objective

- **Text backbone:** 7B‑parameter Llama 2 (a decoder‑only transformer).

- **Vision backbone:** BLIP ResNet‑50 + transformer head.

- **Fusion:** Cross‑attention layers interleaved with decoder blocks.

- **Objective:** Minimize cross‑entropy on ground‑truth answers.

### Compute Infrastructure

- **Hardware:** Single NVIDIA T4 GPU (16 GB VRAM)

- **Software:** PyTorch 2.0, Transformers 4.x, Accelerate

## Citation

If you use this model, please cite:

```bibtex

@misc{bockbharath2025eira02,

title={EIRA-0.2: Multimodal Medical QA with Llama-2 and BLIP},

author={BockBharath},

year={2025},

howpublished={\url{https://huggingface.co/BockBharath/EIRA-0.2}}

}

```

```text

BockBharath. (2025). EIRA-0.2: Multimodal Medical QA with Llama-2 and BLIP. Retrieved from https://huggingface.co/BockBharath/EIRA-0.2

```

## Model Card Authors

- BockBharath

- EIRA Project Team (Sharvary H H, Shashidhar Sarvi)

## Model Card Contact

For questions or feedback, please open an issue on the [GitHub repository](https://github.com/BockBharath/EIRA-0.2). |

hannesvgel/race-albert-v2 | hannesvgel | 2025-05-24T10:29:10Z | 8 | 0 | transformers | [

"transformers",

"safetensors",

"albert",

"multiple-choice",

"generated_from_trainer",

"dataset:ehovy/race",

"base_model:albert/albert-base-v2",

"base_model:finetune:albert/albert-base-v2",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| multiple-choice | 2025-05-22T08:52:11Z | ---

library_name: transformers

license: apache-2.0

base_model: albert-base-v2

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: results_albert

results: []

datasets:

- ehovy/race

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# race-albert-v2

This model is a fine-tuned version of [albert-base-v2](https://huggingface.co/albert-base-v2) on the `middle` subset of the [RACE dataset](https://huggingface.co/datasets/ehovy/race).

It achieves the following results on the test set:

- Loss: 0.8710

- Accuracy: 0.7089

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 8

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 4

- mixed_precision_training: Native AMP
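
The relationship between the batch-size entries above can be checked with a few lines of arithmetic. The values are copied from the list; the single-device setting is an assumption, since the card does not state how many devices were used.

```python
# Values copied from the hyperparameter list.
train_batch_size = 4
gradient_accumulation_steps = 2
num_devices = 1  # assumption: not stated in the card

# Number of examples consumed by one optimizer step.
total_train_batch_size = train_batch_size * gradient_accumulation_steps * num_devices
print(total_train_batch_size)  # 8
```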

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.8709 | 1.0 | 3178 | 0.8257 | 0.6769 |

| 0.6377 | 2.0 | 6356 | 0.8329 | 0.7152 |

| 0.3548 | 3.0 | 9534 | 1.0367 | 0.7124 |

| 0.1412 | 4.0 | 12712 | 1.5380 | 0.7145 |
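
A short script makes the trend in this table easier to see. The rows are copied from the table; the interpretation (a widening gap between validation and training loss as a sign of overfitting) is a common reading, not a claim made by the card.

```python
# (epoch, step, training_loss, validation_loss, accuracy), copied from the table.
rows = [
    (1.0, 3178, 0.8709, 0.8257, 0.6769),
    (2.0, 6356, 0.6377, 0.8329, 0.7152),
    (3.0, 9534, 0.3548, 1.0367, 0.7124),
    (4.0, 12712, 0.1412, 1.5380, 0.7145),
]

best_by_loss = min(rows, key=lambda r: r[3])
best_by_accuracy = max(rows, key=lambda r: r[4])
print("lowest validation loss at epoch", best_by_loss[0])   # 1.0
print("highest accuracy at epoch", best_by_accuracy[0])     # 2.0

# Validation loss minus training loss, per epoch.
gaps = [round(r[3] - r[2], 4) for r in rows]
print(gaps)
```

The gap grows every epoch while accuracy stays near 0.71 after epoch 2, so an earlier checkpoint may be a reasonable choice depending on which metric matters.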

### Framework versions

- Transformers 4.52.2

- Pytorch 2.6.0+cu124

- Datasets 3.6.0

- Tokenizers 0.21.1 |

FormlessAI/26f47549-4c34-4d8c-9772-e1a559c6b16a | FormlessAI | 2025-05-24T10:27:47Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"generated_from_trainer",

"trl",

"dpo",

"conversational",

"arxiv:2305.18290",

"base_model:Qwen/Qwen2-1.5B-Instruct",

"base_model:finetune:Qwen/Qwen2-1.5B-Instruct",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2025-05-24T10:09:43Z | ---

base_model: Qwen/Qwen2-1.5B-Instruct

library_name: transformers

model_name: 26f47549-4c34-4d8c-9772-e1a559c6b16a

tags:

- generated_from_trainer

- trl

- dpo

licence: license

---

# Model Card for 26f47549-4c34-4d8c-9772-e1a559c6b16a

This model is a fine-tuned version of [Qwen/Qwen2-1.5B-Instruct](https://huggingface.co/Qwen/Qwen2-1.5B-Instruct).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="FormlessAI/26f47549-4c34-4d8c-9772-e1a559c6b16a", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/phoenix-formless/Gradients/runs/usvz7td3)

This model was trained with DPO, a method introduced in [Direct Preference Optimization: Your Language Model is Secretly a Reward Model](https://huggingface.co/papers/2305.18290).

### Framework versions

- TRL: 0.17.0

- Transformers: 4.52.3

- Pytorch: 2.7.0+cu128

- Datasets: 3.6.0

- Tokenizers: 0.21.1

## Citations

Cite DPO as:

```bibtex

@inproceedings{rafailov2023direct,

title = {{Direct Preference Optimization: Your Language Model is Secretly a Reward Model}},

author = {Rafael Rafailov and Archit Sharma and Eric Mitchell and Christopher D. Manning and Stefano Ermon and Chelsea Finn},

year = 2023,

booktitle = {Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, December 10 - 16, 2023},

url = {http://papers.nips.cc/paper_files/paper/2023/hash/a85b405ed65c6477a4fe8302b5e06ce7-Abstract-Conference.html},

editor = {Alice Oh and Tristan Naumann and Amir Globerson and Kate Saenko and Moritz Hardt and Sergey Levine},

}

```

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

``` |

BAAI/RoboBrain | BAAI | 2025-05-24T10:25:59Z | 1,388 | 17 | null | [

"safetensors",

"llava_onevision",

"en",

"dataset:BAAI/ShareRobot",

"dataset:lmms-lab/LLaVA-OneVision-Data",

"arxiv:2502.21257",

"license:apache-2.0",

"region:us"

]

| null | 2025-03-27T03:20:39Z | ---

license: apache-2.0

datasets:

- BAAI/ShareRobot

- lmms-lab/LLaVA-OneVision-Data

language:

- en

---

<div align="center">

<img src="https://github.com/FlagOpen/RoboBrain/raw/main/assets/logo.jpg" width="400"/>

</div>

# [CVPR 25] RoboBrain: A Unified Brain Model for Robotic Manipulation from Abstract to Concrete.

<p align="center">

  ⭐️ <a href="https://superrobobrain.github.io/">Project</a>   |   🤗 <a href="https://huggingface.co/BAAI/RoboBrain/">Hugging Face</a>   |   🤖 <a href="https://www.modelscope.cn/models/BAAI/RoboBrain/files/">ModelScope</a>   |   🌎 <a href="https://github.com/FlagOpen/ShareRobot">Dataset</a>   |   📑 <a href="http://arxiv.org/abs/2502.21257">Paper</a>   |   💬 <a href="./assets/wechat.png">WeChat</a>

</p>

<p align="center">

</a>  🎯 <a href="">RoboOS (Coming Soon)</a>: An Efficient Open-Source Multi-Robot Coordination System for RoboBrain.

</p>

<p align="center">

</a>  🎯 <a href="https://tanhuajie.github.io/ReasonRFT/">Reason-RFT</a>: Exploring a New RFT Paradigm to Enhance RoboBrain's Visual Reasoning Capabilities.

</p>

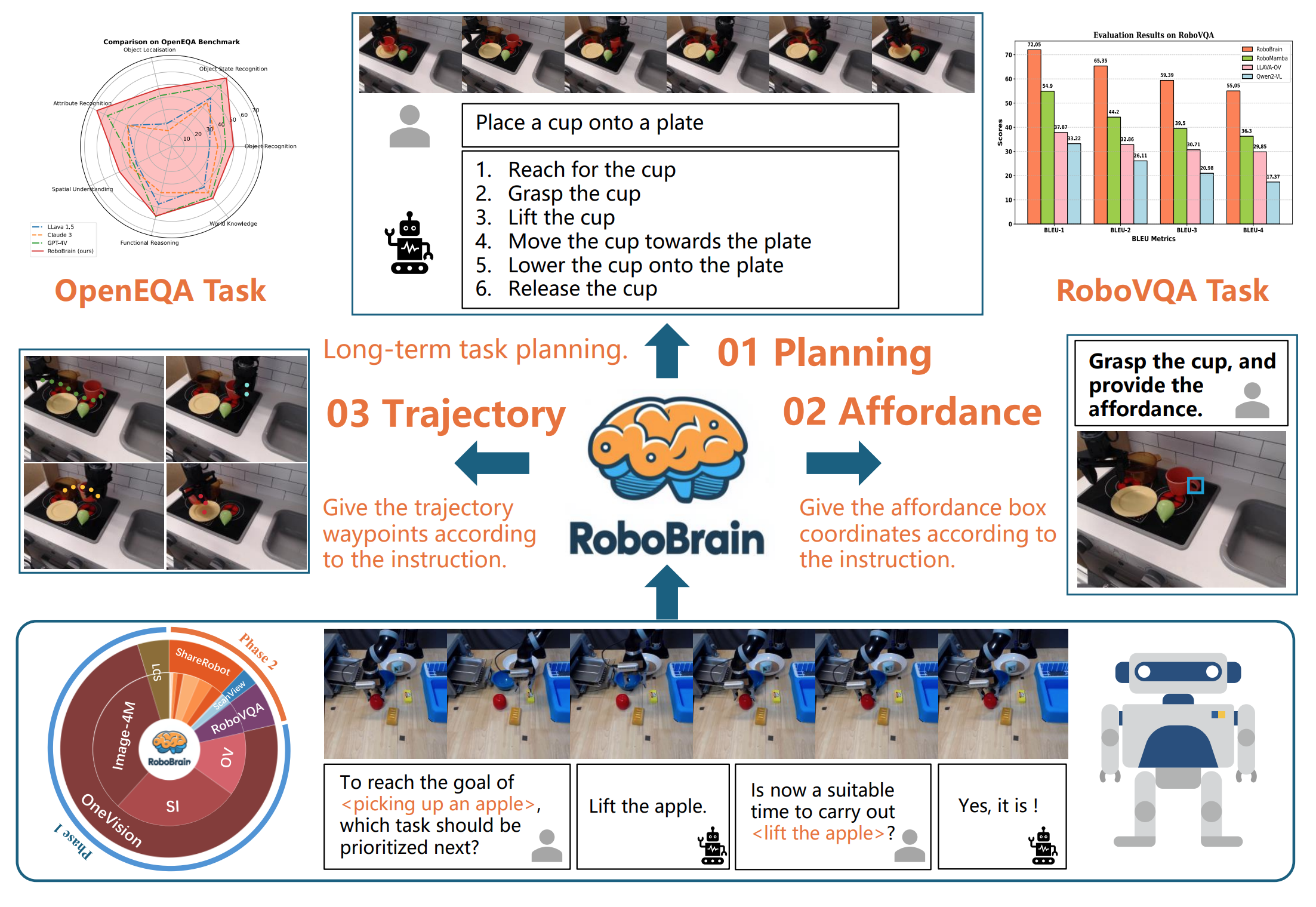

## 🔥 Overview

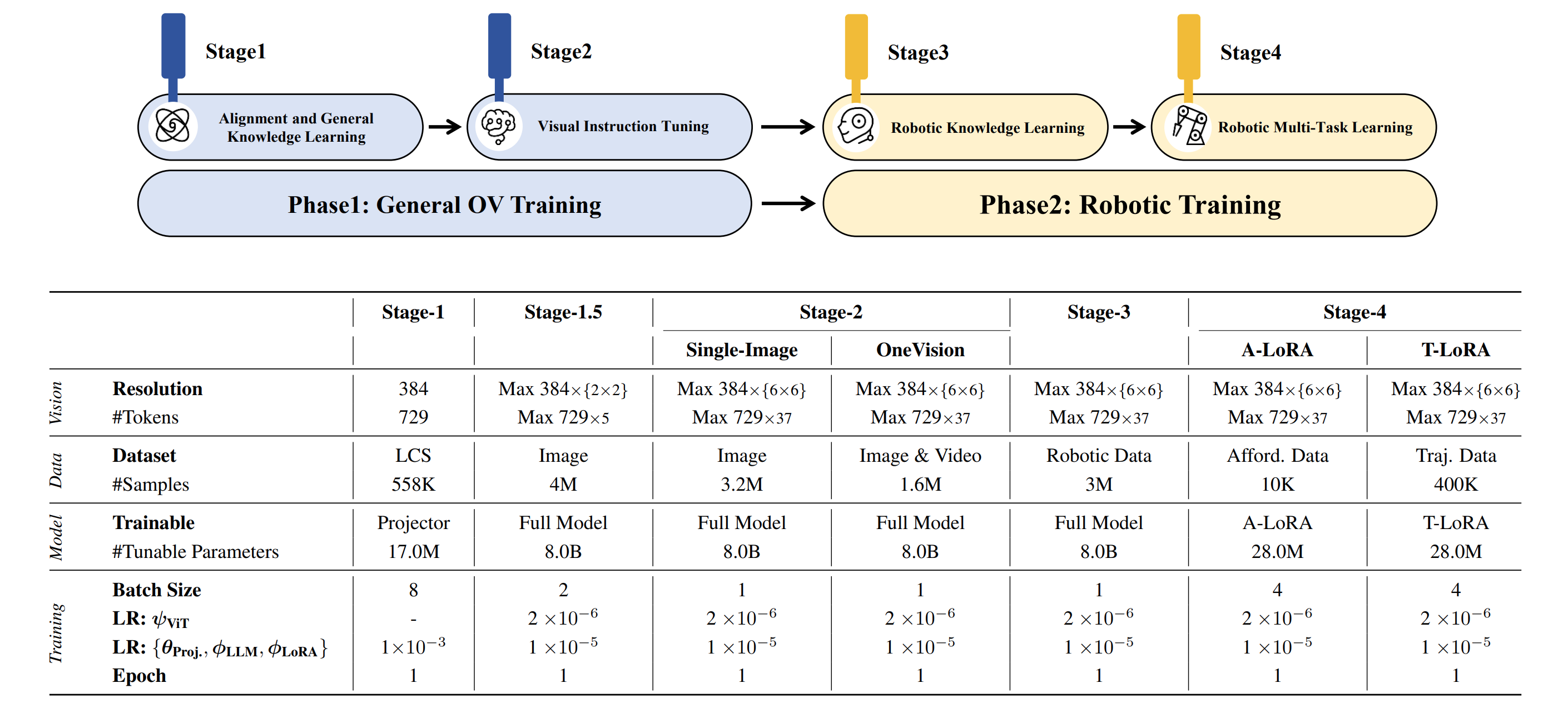

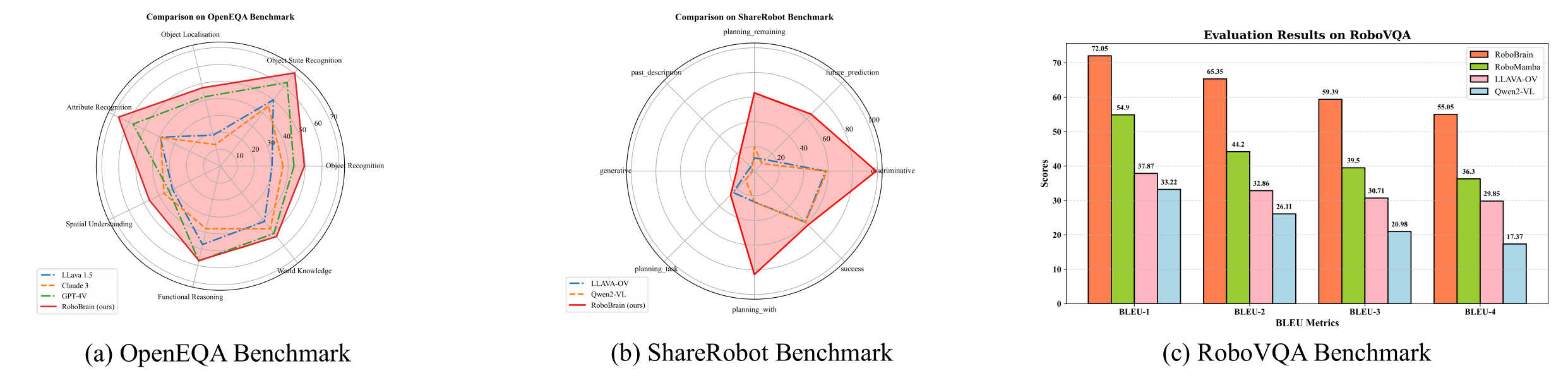

Recent advancements in Multimodal Large Language Models (MLLMs) have shown remarkable capabilities across various multimodal contexts. However, their application in robotic scenarios, particularly for long-horizon manipulation tasks, reveals significant limitations. These limitations arise from the current MLLMs lacking three essential robotic brain capabilities: **(1) Planning Capability**, which involves decomposing complex manipulation instructions into manageable sub-tasks; **(2) Affordance Perception**, the ability to recognize and interpret the affordances of interactive objects; and **(3) Trajectory Prediction**, the foresight to anticipate the complete manipulation trajectory necessary for successful execution. To enhance the robotic brain's core capabilities from abstract to concrete, we introduce ShareRobot, a high-quality heterogeneous dataset that labels multi-dimensional information such as task planning, object affordance, and end-effector trajectory. ShareRobot's diversity and accuracy have been meticulously refined by three human annotators. Building on this dataset, we developed RoboBrain, an MLLM-based model that combines robotic and general multi-modal data, utilizes a multi-stage training strategy, and incorporates long videos and high-resolution images to improve its robotic manipulation capabilities. Extensive experiments demonstrate that RoboBrain achieves state-of-the-art performance across various robotic tasks, highlighting its potential to advance robotic brain capabilities.

## 🚀 Features

This repository supports:

- **`Data Preparation`**: Please refer to [Dataset Preparation](https://github.com/FlagOpen/ShareRobot) for how to prepare the dataset.

- **`Training for RoboBrain`**: Please refer to [Training Section](#Training) for the usage of training scripts.

- **`Support HF/VLLM Inference`**: Please see the [Inference Section](#Inference); inference with [VLLM](https://github.com/vllm-project/vllm) is now supported.

- **`Evaluation for RoboBrain`**: Please refer to [Evaluation Section](#Evaluation) for how to prepare the benchmarks.

- **`ShareRobot Generation`**: Please refer to [ShareRobot](https://github.com/FlagOpen/ShareRobot) for details.

## 🗞️ News

- **`2025-04-04`**: 🤗 We have released the [Trajectory Checkpoint](https://huggingface.co/BAAI/RoboBrain-LoRA-Trajectory/) on Hugging Face.

- **`2025-03-29`**: 🤗 We have released the [Affordance Checkpoint](https://huggingface.co/BAAI/RoboBrain-LoRA-Affordance/) on Hugging Face.

- **`2025-03-27`**: 🤗 We have released the [Planning Checkpoint](https://huggingface.co/BAAI/RoboBrain/) on Hugging Face.

- **`2025-03-26`**: 🔥 We have released the [RoboBrain](https://github.com/FlagOpen/RoboBrain/) repository.

- **`2025-02-27`**: 🌍 Our [RoboBrain](http://arxiv.org/abs/2502.21257/) paper was accepted to CVPR 2025.

## 📆 Todo

- [x] Release scripts for model training and inference.

- [x] Release Planning checkpoint.

- [x] Release Affordance checkpoint.

- [x] Release ShareRobot dataset.

- [x] Release Trajectory checkpoint.

- [ ] Release evaluation scripts for Benchmarks.

- [ ] Train a more powerful **RoboBrain-v2**.

## 🤗 Models

- **[`Base Planning Model`](https://huggingface.co/BAAI/RoboBrain/)**: Trained on general datasets in Stages 1–2 and on the Robotic Planning dataset in Stage 3. This model is designed for planning prediction.

- **[`A-LoRA for Affordance`](https://huggingface.co/BAAI/RoboBrain-LoRA-Affordance/)**: A LoRA adapter trained in Stage 4 on top of the Base Planning Model, using our Affordance dataset, to predict affordance.

- **[`T-LoRA for Trajectory`](https://huggingface.co/BAAI/RoboBrain-LoRA-Trajectory/)**: A LoRA adapter trained in Stage 4 on top of the Base Planning Model, using our Trajectory dataset, to predict trajectory.

| Models | Checkpoint | Description |

|----------------------|----------------------------------------------------------------|------------------------------------------------------------|

| Planning Model | [🤗 Planning CKPTs](https://huggingface.co/BAAI/RoboBrain/) | Used for Planning prediction in our paper |

| Affordance (A-LoRA) | [🤗 Affordance CKPTs](https://huggingface.co/BAAI/RoboBrain-LoRA-Affordance/) | Used for Affordance prediction in our paper |

| Trajectory (T-LoRA) | [🤗 Trajectory CKPTs](https://huggingface.co/BAAI/RoboBrain-LoRA-Trajectory/) | Used for Trajectory prediction in our paper |

## 🛠️ Setup

```bash

# clone repo.

git clone https://github.com/FlagOpen/RoboBrain.git

cd RoboBrain

# build conda env.

conda create -n robobrain python=3.10

conda activate robobrain

pip install -r requirements.txt

```

## <a id="Training"> 🤖 Training</a>

### 1. Data Preparation

```bash

# Modify datasets for Stage 1, please refer to:

- yaml_path: scripts/train/yaml/stage_1_0.yaml

# Modify datasets for Stage 1.5, please refer to:

- yaml_path: scripts/train/yaml/stage_1_5.yaml

# Modify datasets for Stage 2_si, please refer to:

- yaml_path: scripts/train/yaml/stage_2_si.yaml

# Modify datasets for Stage 2_ov, please refer to:

- yaml_path: scripts/train/yaml/stage_2_ov.yaml

# Modify datasets for Stage 3_plan, please refer to:

- yaml_path: scripts/train/yaml/stage_3_planning.yaml

# Modify datasets for Stage 4_aff, please refer to:

- yaml_path: scripts/train/yaml/stage_4_affordance.yaml

# Modify datasets for Stage 4_traj, please refer to:

- yaml_path: scripts/train/yaml/stage_4_trajectory.yaml

```

**Note:** The sample format in each json file should be like:

```json

{

"id": "xxxx",

"image": [

"image1.png",

"image2.png"

],

"conversations": [

{

"from": "human",

"value": "<image>\n<image>\nAre there numerous dials near the bottom left of the tv?"

},

{

"from": "gpt",

"value": "Yes. The sun casts shadows ... a serene, clear sky."

}

]

},

```
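As a rough sanity check before training, a sample in the format above can be validated with a few lines of Python. This is only a sketch: `check_sample` is a hypothetical helper, not part of the RoboBrain repository, and it assumes that `image` is a list, that turns alternate `human` then `gpt`, and that there is one `<image>` placeholder per listed image file, as the sample above suggests.

```python
import json


def check_sample(sample: dict) -> list[str]:
    """Return a list of problems found in one training sample (empty if none)."""
    problems = []
    for key in ("id", "image", "conversations"):
        if key not in sample:
            problems.append(f"missing key: {key}")
    if problems:
        return problems

    turns = sample["conversations"]
    # Assumption: turns alternate human -> gpt, as in the sample above.
    for i, turn in enumerate(turns):
        expected = "human" if i % 2 == 0 else "gpt"
        if turn.get("from") != expected:
            problems.append(f"turn {i}: expected from={expected!r}")

    # Assumption: one <image> placeholder per listed image file.
    n_placeholders = sum(t.get("value", "").count("<image>") for t in turns)
    if n_placeholders != len(sample["image"]):
        problems.append(
            f"{n_placeholders} <image> placeholders for {len(sample['image'])} images"
        )
    return problems


sample = json.loads("""
{
  "id": "xxxx",
  "image": ["image1.png", "image2.png"],
  "conversations": [
    {"from": "human", "value": "<image>\\n<image>\\nAre there numerous dials near the bottom left of the tv?"},
    {"from": "gpt", "value": "Yes."}
  ]
}
""")
print(check_sample(sample))  # an empty list means no problems were found
```

Running such a check over every entry of a json file catches mismatched placeholders early, before a long training job fails or silently trains on malformed samples.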

### 2. Training

```bash

# Training on Stage 1:

bash scripts/train/stage_1_0_pretrain.sh

# Training on Stage 1.5:

bash scripts/train/stage_1_5_direct_finetune.sh

# Training on Stage 2_si:

bash scripts/train/stage_2_0_resume_finetune_si.sh

# Training on Stage 2_ov:

bash scripts/train/stage_2_0_resume_finetune_ov.sh

# Training on Stage 3_plan:

bash scripts/train/stage_3_0_resume_finetune_robo.sh

# Training on Stage 4_aff:

bash scripts/train/stage_4_0_resume_finetune_lora_a.sh

# Training on Stage 4_traj:

bash scripts/train/stage_4_0_resume_finetune_lora_t.sh

```

**Note:** Please change the environment variables (e.g. *DATA_PATH*, *IMAGE_FOLDER*, *PREV_STAGE_CHECKPOINT*) in the script to your own.

### 3. Convert original weights to HF weights

```bash

# Planning Model

python model/llava_utils/convert_robobrain_to_hf.py --model_dir /path/to/original/checkpoint/ --dump_path /path/to/output/

# A-LoRA & T-LoRA

python model/llava_utils/convert_lora_weights_to_hf.py --model_dir /path/to/original/checkpoint/ --dump_path /path/to/output/

```

## <a id="Inference">⭐️ Inference</a>

### 1. Usage for Planning Prediction

#### Option 1: HF inference

```python

from inference import SimpleInference

model_id = "BAAI/RoboBrain"

model = SimpleInference(model_id)

prompt = "Given the objects in the image, if you are required to complete the task \"Put the apple in the basket\", what is your detailed plan? Write your plan and explain it in detail, using the following format: Step_1: xxx\nStep_2: xxx\n ...\nStep_n: xxx\n"

image = "./assets/demo/planning.png"

pred = model.inference(prompt, image, do_sample=True)

print(f"Prediction: {pred}")

'''

Prediction: (as an example)

Step_1: Move to the apple. Move towards the apple on the table.

Step_2: Pick up the apple. Grab the apple and lift it off the table.

Step_3: Move towards the basket. Move the apple towards the basket without dropping it.

Step_4: Put the apple in the basket. Place the apple inside the basket, ensuring it is in a stable position.

'''

```

#### Option 2: vLLM inference

Install and launch vLLM:

```bash

# Install vllm package

pip install vllm==0.6.6.post1

# Launch Robobrain with vllm

python -m vllm.entrypoints.openai.api_server --model BAAI/RoboBrain --served-model-name robobrain --max_model_len 16384 --limit_mm_per_prompt image=8

```

Run a Python script, for example:

```python

from openai import OpenAI

import base64

openai_api_key = "robobrain-123123"

openai_api_base = "http://127.0.0.1:8000/v1"

client = OpenAI(

api_key=openai_api_key,

base_url=openai_api_base,

)

prompt = "Given the objects in the image, if you are required to complete the task \"Put the apple in the basket\", what is your detailed plan? Write your plan and explain it in detail, using the following format: Step_1: xxx\nStep_2: xxx\n ...\nStep_n: xxx\n"

image = "./assets/demo/planning.png"

with open(image, "rb") as f:

    encoded_image = base64.b64encode(f.read())

encoded_image = encoded_image.decode("utf-8")

base64_img = f"data:image;base64,{encoded_image}"

response = client.chat.completions.create(

model="robobrain",

messages=[

{

"role": "user",

"content": [

{"type": "image_url", "image_url": {"url": base64_img}},

{"type": "text", "text": prompt},

],

},

]

)

content = response.choices[0].message.content

print(content)

'''

Prediction: (as an example)

Step_1: Move to the apple. Move towards the apple on the table.

Step_2: Pick up the apple. Grab the apple and lift it off the table.

Step_3: Move towards the basket. Move the apple towards the basket without dropping it.

Step_4: Put the apple in the basket. Place the apple inside the basket, ensuring it is in a stable position.

'''

```
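Because the prompt asks for a `Step_1: ...` / `Step_2: ...` layout, the returned text can be split into an ordered list of steps. The following is a minimal sketch, assuming the model follows the requested format; `parse_plan` is a hypothetical helper and not part of the RoboBrain codebase.

```python
import re


def parse_plan(text: str) -> list[str]:
    """Split a 'Step_1: ...\\nStep_2: ...' response into ordered step strings."""
    # Each step body runs lazily until the next 'Step_k:' marker or the end of the text.
    pattern = re.compile(r"Step_(\d+):\s*(.*?)(?=\s*Step_\d+:|\Z)", re.DOTALL)
    steps = [(int(n), body.strip()) for n, body in pattern.findall(text)]
    # Sort by step number in case the markers arrive out of order.
    return [body for _, body in sorted(steps)]


response = (
    "Step_1: Move to the apple. Move towards the apple on the table.\n"
    "Step_2: Pick up the apple. Grab the apple and lift it off the table.\n"
)
for i, step in enumerate(parse_plan(response), start=1):
    print(i, step)
```

If the model deviates from the format (for example with `do_sample=True`), the returned list may be empty or incomplete, so callers would likely want to check its length before acting on it.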

### 2. Usage for Affordance Prediction

```python

from inference import SimpleInference

model_id = "BAAI/RoboBrain"

lora_id = "BAAI/RoboBrain-LoRA-Affordance"

model = SimpleInference(model_id, lora_id)

# Example 1:

prompt = "You are a robot using the joint control. The task is \"pick_up the suitcase\". Please predict a possible affordance area of the end effector?"

image = "./assets/demo/affordance_1.jpg"

pred = model.inference(prompt, image, do_sample=False)

print(f"Prediction: {pred}")

'''

Prediction: [0.733, 0.158, 0.845, 0.263]

'''

# Example 2:

prompt = "You are a robot using the joint control. The task is \"push the bicycle\". Please predict a possible affordance area of the end effector?"

image = "./assets/demo/affordance_2.jpg"

pred = model.inference(prompt, image, do_sample=False)

print(f"Prediction: {pred}")

'''

Prediction: [0.600, 0.127, 0.692, 0.227]

'''

```
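The affordance prediction is returned as a string containing four numbers. A small sketch of turning it into a pixel box follows; it assumes (this is not confirmed by the text above) that the values are `[x1, y1, x2, y2]` normalized to the image width and height. `box_to_pixels` is a hypothetical helper.

```python
import ast


def box_to_pixels(pred: str, width: int, height: int) -> tuple[int, int, int, int]:
    """Convert a '[x1, y1, x2, y2]' string to integer pixel coordinates.

    Assumption: coordinates are fractions of the image width (x) and height (y).
    """
    x1, y1, x2, y2 = ast.literal_eval(pred.strip())
    return (
        round(x1 * width),
        round(y1 * height),
        round(x2 * width),
        round(y2 * height),
    )


# Using a hypothetical 1000x1000 image for illustration.
print(box_to_pixels("[0.733, 0.158, 0.845, 0.263]", 1000, 1000))
# -> (733, 158, 845, 263)
```

`ast.literal_eval` is used rather than `eval` so that only plain Python literals in the model output are accepted.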

### 3. Usage for Trajectory Prediction

```python

# please refer to https://github.com/FlagOpen/RoboBrain

from inference import SimpleInference

model_id = "BAAI/RoboBrain"

lora_id = "BAAI/RoboBrain-LoRA-Trajectory"

model = SimpleInference(model_id, lora_id)

# Example 1:

prompt = "You are a robot using the joint control. The task is \"reach for the cloth\". Please predict up to 10 key trajectory points to complete the task. Your answer should be formatted as a list of tuples, i.e. [[x1, y1], [x2, y2], ...], where each tuple contains the x and y coordinates of a point."

image = "./assets/demo/trajectory_1.jpg"

pred = model.inference(prompt, image, do_sample=False)

print(f"Prediction: {pred}")

'''

Prediction: [[0.781, 0.305], [0.688, 0.344], [0.570, 0.344], [0.492, 0.312]]

'''

# Example 2:

prompt = "You are a robot using the joint control. The task is \"reach for the grapes\". Please predict up to 10 key trajectory points to complete the task. Your answer should be formatted as a list of tuples, i.e. [[x1, y1], [x2, y2], ...], where each tuple contains the x and y coordinates of a point."

image = "./assets/demo/trajectory_2.jpg"

pred = model.inference(prompt, image, do_sample=False)

print(f"Prediction: {pred}")

'''

Prediction: [[0.898, 0.352], [0.766, 0.344], [0.625, 0.273], [0.500, 0.195]]

'''

```
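The trajectory output is a list of `[x, y]` pairs, so it can be parsed and scaled in the same spirit. This sketch assumes the points are normalized to the image size, which the examples suggest but the text does not state; `parse_trajectory` and `path_length` are hypothetical helpers.

```python
import ast
import math


def parse_trajectory(pred: str, width: int, height: int) -> list[tuple[float, float]]:
    """Parse '[[x1, y1], [x2, y2], ...]' and scale to pixel coordinates.

    Assumption: x and y are fractions of the image width and height.
    """
    points = ast.literal_eval(pred.strip())
    return [(x * width, y * height) for x, y in points]


def path_length(points: list[tuple[float, float]]) -> float:
    """Sum of straight-line distances between consecutive points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


pred = "[[0.781, 0.305], [0.688, 0.344], [0.570, 0.344], [0.492, 0.312]]"
pixels = parse_trajectory(pred, 640, 480)  # hypothetical 640x480 image
print(len(pixels), "points, path length in pixels:", round(path_length(pixels), 1))
```

The path length is only a convenience metric for comparing predictions; it is not a quantity the model card itself reports.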

## <a id="Evaluation">🤖 Evaluation</a>

*Coming Soon ...*

<!-- <div align="center">

<img src="https://github.com/FlagOpen/RoboBrain/blob/main/assets/result.png" />

</div> -->

## 😊 Acknowledgement

We would like to express our sincere gratitude to the developers and contributors of the following projects:

1. [LLaVA-NeXT](https://github.com/LLaVA-VL/LLaVA-NeXT): The comprehensive codebase for training Vision-Language Models (VLMs).

2. [lmms-eval](https://github.com/EvolvingLMMs-Lab/lmms-eval): A powerful evaluation tool for Vision-Language Models (VLMs).

3. [vllm](https://github.com/vllm-project/vllm): A high-throughput and memory-efficient inference engine for LLMs and VLMs.

4. [OpenEQA](https://github.com/facebookresearch/open-eqa): A wonderful benchmark for Embodied Question Answering.

5. [RoboVQA](https://github.com/google-deepmind/robovqa): Provides high-level reasoning models and datasets for robotics applications.

Their outstanding contributions have played a pivotal role in advancing our research and development initiatives.

## 📑 Citation

If you find this project useful, please cite us.

```bib

@article{ji2025robobrain,

title={RoboBrain: A Unified Brain Model for Robotic Manipulation from Abstract to Concrete},

author={Ji, Yuheng and Tan, Huajie and Shi, Jiayu and Hao, Xiaoshuai and Zhang, Yuan and Zhang, Hengyuan and Wang, Pengwei and Zhao, Mengdi and Mu, Yao and An, Pengju and others},

journal={arXiv preprint arXiv:2502.21257},

year={2025}

}

```

|

mlfoundations-dev/e1_code_fasttext_r1_10k | mlfoundations-dev | 2025-05-24T10:20:08Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"llama-factory",

"full",

"generated_from_trainer",

"conversational",

"base_model:Qwen/Qwen2.5-7B-Instruct",

"base_model:finetune:Qwen/Qwen2.5-7B-Instruct",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2025-05-21T21:00:30Z | ---

library_name: transformers

license: apache-2.0

base_model: Qwen/Qwen2.5-7B-Instruct

tags:

- llama-factory

- full

- generated_from_trainer

model-index:

- name: e1_code_fasttext_r1_10k

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# e1_code_fasttext_r1_10k

This model is a fine-tuned version of [Qwen/Qwen2.5-7B-Instruct](https://huggingface.co/Qwen/Qwen2.5-7B-Instruct) on the mlfoundations-dev/e1_code_fasttext_r1_10k dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 16

- gradient_accumulation_steps: 8

- total_train_batch_size: 128

- total_eval_batch_size: 128

- optimizer: Use adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5.0
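The total batch sizes listed above follow from the per-device sizes, the device count, and gradient accumulation. A short sketch of that arithmetic (the function name is illustrative only):

```python
def effective_batch_size(per_device: int, num_devices: int, grad_accum: int = 1) -> int:
    """Number of samples contributing to one optimizer step (or one eval pass step)."""
    return per_device * num_devices * grad_accum


# Training: 1 sample per device x 16 devices x 8 accumulation steps.
print(effective_batch_size(1, 16, 8))  # 128, matches total_train_batch_size
# Evaluation: 8 samples per device x 16 devices, no accumulation.
print(effective_batch_size(8, 16))     # 128, matches total_eval_batch_size
```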

### Training results

### Framework versions

- Transformers 4.46.1

- Pytorch 2.5.1

- Datasets 3.1.0

- Tokenizers 0.20.3

|

bigbabyface/rubert_tuned_h1_short_full_train_custom_head | bigbabyface | 2025-05-24T10:18:55Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"bert",

"text-classification",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2025-05-24T06:44:13Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

WenFengg/alibaba_8 | WenFengg | 2025-05-24T10:17:49Z | 0 | 0 | null | [

"safetensors",

"any-to-any",

"omega",

"omegalabs",

"bittensor",

"agi",

"license:mit",

"region:us"

]

| any-to-any | 2025-05-24T10:03:05Z | ---

license: mit

tags:

- any-to-any

- omega

- omegalabs

- bittensor

- agi

---

This is an Any-to-Any model checkpoint for the OMEGA Labs x Bittensor Any-to-Any subnet.

Check out the [git repo](https://github.com/omegalabsinc/omegalabs-anytoany-bittensor) and find OMEGA on X: [@omegalabsai](https://x.com/omegalabsai).

|

vertings6/ec67c006-5eff-40c6-ba32-bad90da3f12b | vertings6 | 2025-05-24T10:17:14Z | 0 | 0 | peft | [

"peft",

"safetensors",

"mistral",

"axolotl",

"generated_from_trainer",

"base_model:unsloth/Phi-3-medium-4k-instruct",

"base_model:adapter:unsloth/Phi-3-medium-4k-instruct",

"license:mit",

"4-bit",

"bitsandbytes",

"region:us"

]

| null | 2025-05-24T09:32:26Z | ---

library_name: peft

license: mit

base_model: unsloth/Phi-3-medium-4k-instruct

tags:

- axolotl

- generated_from_trainer

model-index:

- name: ec67c006-5eff-40c6-ba32-bad90da3f12b

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

absolute_data_files: false

adapter: lora

base_model: unsloth/Phi-3-medium-4k-instruct

bf16: true

chat_template: llama3

dataset_prepared_path: /workspace/axolotl

datasets:

- data_files:

  - aa208f6e880a6925_train_data.json

  ds_type: json

  format: custom

  path: /workspace/input_data/

  type:

    field_instruction: instruct

    field_output: output

    format: '{instruction}'

    no_input_format: '{instruction}'

    system_format: '{system}'

    system_prompt: ''

debug: null

deepspeed: null

dpo:

  beta: 0.1

  enabled: true

  group_by_length: false

  rank_loss: true

  reference_model: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 1

flash_attention: true

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 2

gradient_checkpointing: true

gradient_clipping: 1.0

group_by_length: false

hub_model_id: vertings6/ec67c006-5eff-40c6-ba32-bad90da3f12b

hub_repo: null

hub_strategy: end

hub_token: null

learning_rate: 2.0e-06

load_in_4bit: true

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 64

lora_dropout: 0.1

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 32

lora_target_linear: true

lr_scheduler: cosine

max_steps: 500

micro_batch_size: 6

mixed_precision: bf16

mlflow_experiment_name: /tmp/aa208f6e880a6925_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 2

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 1

sequence_len: 1024

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: 535b6010-08d2-401c-aed4-b8c0c7c5416c

wandb_project: s56-7

wandb_run: your_name

wandb_runid: 535b6010-08d2-401c-aed4-b8c0c7c5416c

warmup_steps: 50

weight_decay: 0.02

xformers_attention: true

```

</details><br>

# ec67c006-5eff-40c6-ba32-bad90da3f12b

This model is a fine-tuned version of [unsloth/Phi-3-medium-4k-instruct](https://huggingface.co/unsloth/Phi-3-medium-4k-instruct) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 3.6341

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-06

- train_batch_size: 6

- eval_batch_size: 6

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 12

- optimizer: Use OptimizerNames.ADAMW_BNB with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 50

- training_steps: 500
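To make the schedule above concrete, here is a sketch of a learning-rate curve with linear warmup followed by a half-cosine decay, using the listed values (2e-06 peak, 50 warmup steps, 500 total steps). It is an approximation of the general shape of such a schedule, written from scratch; it has not been checked against the exact values logged during this run.

```python
import math


def cosine_lr(step: int, base_lr: float = 2e-6,
              warmup_steps: int = 50, total_steps: int = 500) -> float:
    """Learning rate at a given optimizer step: linear warmup, then cosine decay to 0."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr over the warmup phase.
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the post-warmup phase that has elapsed, in [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


for s in (0, 25, 50, 275, 500):
    print(s, f"{cosine_lr(s):.2e}")
```

Under these assumptions the rate peaks at step 50 and is roughly half the peak at step 275, the midpoint of the decay phase.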

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 11.3172 | 0.0003 | 1 | 5.8685 |
| 7.2769 | 0.0712 | 250 | 3.8644 |
| 7.5412 | 0.1423 | 500 | 3.6341 |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

cytoe/dickbot-0.6B-ft | cytoe | 2025-05-24T10:12:33Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"qwen3",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2025-05-24T10:11:35Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

habib-ashraf/resume-job-classifier | habib-ashraf | 2025-05-24T10:10:43Z | 0 | 0 | null | [

"license:apache-2.0",

"region:us"

]

| null | 2025-05-24T10:07:24Z | ---

license: apache-2.0

---

|

Hahasb/moondream2-20250414-GGUF | Hahasb | 2025-05-24T10:10:13Z | 0 | 0 | null | [

"gguf",

"license:apache-2.0",

"region:us"

]

| null | 2025-05-24T09:47:38Z | ---

license: apache-2.0

---

This repo contains llama.cpp-compatible GGUFs of vikhyatk/moondream2: https://huggingface.co/vikhyatk/moondream2. The GGUFs hosted in the original repo are missing the tokenizer.chat_template field, which breaks llama.cpp. The text model for this GGUF sets it to vicuna. |

infogep/2b849df7-293e-496f-8a42-ded51330bc70 | infogep | 2025-05-24T10:09:34Z | 0 | 0 | peft | [

"peft",

"safetensors",

"mistral",

"axolotl",

"generated_from_trainer",

"base_model:unsloth/Phi-3-medium-4k-instruct",

"base_model:adapter:unsloth/Phi-3-medium-4k-instruct",

"license:mit",

"4-bit",

"bitsandbytes",

"region:us"

]

| null | 2025-05-24T09:31:42Z | ---

library_name: peft

license: mit

base_model: unsloth/Phi-3-medium-4k-instruct

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 2b849df7-293e-496f-8a42-ded51330bc70

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

absolute_data_files: false

adapter: lora

base_model: unsloth/Phi-3-medium-4k-instruct

bf16: true

chat_template: llama3

dataset_prepared_path: /workspace/axolotl

datasets:

- data_files:

  - aa208f6e880a6925_train_data.json

  ds_type: json

  format: custom

  path: /workspace/input_data/

  type:

    field_instruction: instruct

    field_output: output

    format: '{instruction}'

    no_input_format: '{instruction}'

    system_format: '{system}'

    system_prompt: ''

debug: null

deepspeed: null

dpo:

  beta: 0.1

  enabled: true

  group_by_length: false

  rank_loss: true

  reference_model: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 1

flash_attention: true

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 1

gradient_checkpointing: true

gradient_clipping: 1.0

group_by_length: false

hub_model_id: infogep/2b849df7-293e-496f-8a42-ded51330bc70

hub_repo: null

hub_strategy: end

hub_token: null

learning_rate: 2.0e-06

load_in_4bit: true

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 64

lora_dropout: 0.1

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 32

lora_target_linear: true

lr_scheduler: cosine

max_steps: 500

micro_batch_size: 10

mixed_precision: bf16

mlflow_experiment_name: /tmp/aa208f6e880a6925_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 2

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 1

sequence_len: 1024

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: 535b6010-08d2-401c-aed4-b8c0c7c5416c

wandb_project: s56-7

wandb_run: your_name

wandb_runid: 535b6010-08d2-401c-aed4-b8c0c7c5416c

warmup_steps: 50

weight_decay: 0.02

xformers_attention: true

```

</details><br>
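The LoRA settings in the config above (`lora_r: 32`, `lora_alpha: 64`) can be read as follows, assuming the standard LoRA formulation in which the low-rank update is scaled by `alpha / r` and each adapted linear layer gains `r * (d_in + d_out)` trainable parameters. The 4096x4096 layer size below is a hypothetical illustration, not the actual dimension of the base model.

```python
def lora_scale(lora_alpha: float, lora_r: int) -> float:
    """Scaling factor applied to the low-rank update B @ A in standard LoRA."""
    return lora_alpha / lora_r


def lora_extra_params(d_in: int, d_out: int, lora_r: int) -> int:
    """Trainable parameters added to one linear layer: A is (r x d_in), B is (d_out x r)."""
    return lora_r * (d_in + d_out)


print(lora_scale(64, 32))  # 2.0

# Hypothetical 4096x4096 projection, for illustration only.
d = 4096
added = lora_extra_params(d, d, 32)
print(added, f"({added / (d * d):.2%} of the full weight matrix)")
```

With these values the adapter update is doubled relative to an unscaled `B @ A`, while the trainable parameter count per layer stays a small fraction of the frozen weight.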

# 2b849df7-293e-496f-8a42-ded51330bc70

This model is a fine-tuned version of [unsloth/Phi-3-medium-4k-instruct](https://huggingface.co/unsloth/Phi-3-medium-4k-instruct) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 3.6443

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-06

- train_batch_size: 10

- eval_batch_size: 10

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_BNB with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 50

- training_steps: 500

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 5.8087 | 0.0002 | 1 | 5.8311 |
| 4.1837 | 0.0593 | 250 | 3.8775 |
| 3.4705 | 0.1186 | 500 | 3.6443 |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

klusertim/MNLP_M2_quantized_model-base-4bit | klusertim | 2025-05-24T09:59:00Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"qwen3",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"bitsandbytes",

"region:us"

]

| text-generation | 2025-05-24T09:58:40Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases, and limitations of the model. More information is needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

18-VIDEOS-Riley-Reid-Viral-Link/wATCH.Riley.Reid.viral.video.original.Link.Official | 18-VIDEOS-Riley-Reid-Viral-Link | 2025-05-24T09:57:14Z | 0 | 0 | null | [

"region:us"

]

| null | 2025-05-24T09:56:58Z |

|

MinaMila/llama_instbase_3b_LoRa_Adult_cfda_ep7_22 | MinaMila | 2025-05-24T09:56:51Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

]

| null | 2025-05-24T09:56:44Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use