modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-07-13 12:28:20

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 518

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-07-13 12:26:25

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

ShynBui/ppo-LunarLander-v2 | ShynBui | 2024-03-03T21:47:31Z | 1 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2024-03-03T21:47:12Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 258.22 +/- 15.47

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

balnazzar/Frankie-tiny | balnazzar | 2024-03-03T21:44:09Z | 3 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"merge",

"mergekit",

"lazymergekit",

"Locutusque/TinyMistral-248M-v2-Instruct",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2024-03-03T21:43:29Z | ---

license: apache-2.0

tags:

- merge

- mergekit

- lazymergekit

- Locutusque/TinyMistral-248M-v2-Instruct

- Locutusque/TinyMistral-248M-v2-Instruct

---

# Frankie-tiny

Frankie-tiny is a (franken)merge of the following models using [mergekit](https://github.com/cg123/mergekit):

* [Locutusque/TinyMistral-248M-v2-Instruct](https://huggingface.co/Locutusque/TinyMistral-248M-v2-Instruct)

* [Locutusque/TinyMistral-248M-v2-Instruct](https://huggingface.co/Locutusque/TinyMistral-248M-v2-Instruct)

## 🧩 Configuration

```yaml

slices:

- sources:

- model: Locutusque/TinyMistral-248M-v2-Instruct

layer_range: [0, 12]

- sources:

- model: Locutusque/TinyMistral-248M-v2-Instruct

layer_range: [8, 12]

merge_method: passthrough

dtype: float16

``` |

hotsuyuki/gpt_1.3B_global_step2000_zero-1_dp-4_pp-2_tp-2_flashattn2-on | hotsuyuki | 2024-03-03T21:40:55Z | 3 | 0 | transformers | [

"transformers",

"safetensors",

"gpt2",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2024-03-03T21:37:43Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

ravp1/ppo-Huggy | ravp1 | 2024-03-03T21:29:53Z | 0 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"Huggy",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

]

| reinforcement-learning | 2024-03-03T21:29:47Z | ---

library_name: ml-agents

tags:

- Huggy

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: ravp1/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

itsfalc/fine-tuned-wav2vec2 | itsfalc | 2024-03-03T21:29:16Z | 3 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"base_model:facebook/wav2vec2-base",

"base_model:finetune:facebook/wav2vec2-base",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2024-03-03T20:50:29Z | ---

license: apache-2.0

base_model: facebook/wav2vec2-base

tags:

- generated_from_trainer

metrics:

- wer

model-index:

- name: itsfalc/output_directory

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# itsfalc/output_directory

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the PolyAI/minds14 dataset.

It achieves the following results on the evaluation set:

- Loss: 28.4373

- Wer: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 40

- training_steps: 80

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| No log | 1.6 | 20 | 47.3619 | 1.0007 |

| 52.5055 | 3.2 | 40 | 28.4373 | 1.0 |

| 31.5642 | 4.8 | 60 | 21.6139 | 1.0 |

| 31.5642 | 6.4 | 80 | 20.5592 | 1.0 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.0.0

- Datasets 2.15.0

- Tokenizers 0.15.0

|

salohnana2018/ABSA-SentencePair-DAPT-HARDAR-bert-base-Camel-MSA-ru3 | salohnana2018 | 2024-03-03T21:28:28Z | 7 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"bert",

"text-classification",

"generated_from_trainer",

"base_model:salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD",

"base_model:finetune:salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2024-03-03T20:36:09Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

- precision

- recall

base_model: salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD

model-index:

- name: ABSA-SentencePair-DAPT-HARDAR-bert-base-Camel-MSA-ru3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ABSA-SentencePair-DAPT-HARDAR-bert-base-Camel-MSA-ru3

This model is a fine-tuned version of [salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD](https://huggingface.co/salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1055

- Accuracy: 0.9026

- F1: 0.9026

- Precision: 0.9026

- Recall: 0.9026

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 23

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision | Recall |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:---------:|:------:|

| 0.0585 | 1.0 | 265 | 0.0424 | 0.8497 | 0.8497 | 0.8497 | 0.8497 |

| 0.0377 | 2.0 | 530 | 0.0422 | 0.8861 | 0.8861 | 0.8861 | 0.8861 |

| 0.0259 | 3.0 | 795 | 0.0421 | 0.8819 | 0.8819 | 0.8819 | 0.8819 |

| 0.017 | 4.0 | 1060 | 0.0477 | 0.8899 | 0.8899 | 0.8899 | 0.8899 |

| 0.0108 | 5.0 | 1325 | 0.0582 | 0.8927 | 0.8927 | 0.8927 | 0.8927 |

| 0.0085 | 6.0 | 1590 | 0.0650 | 0.8899 | 0.8899 | 0.8899 | 0.8899 |

| 0.0063 | 7.0 | 1855 | 0.0680 | 0.8922 | 0.8922 | 0.8922 | 0.8922 |

| 0.0043 | 8.0 | 2120 | 0.0705 | 0.9003 | 0.9003 | 0.9003 | 0.9003 |

| 0.0042 | 9.0 | 2385 | 0.0711 | 0.8974 | 0.8974 | 0.8974 | 0.8974 |

| 0.003 | 10.0 | 2650 | 0.0773 | 0.8979 | 0.8979 | 0.8979 | 0.8979 |

| 0.0026 | 11.0 | 2915 | 0.0842 | 0.8965 | 0.8965 | 0.8965 | 0.8965 |

| 0.002 | 12.0 | 3180 | 0.0888 | 0.8979 | 0.8979 | 0.8979 | 0.8979 |

| 0.002 | 13.0 | 3445 | 0.0896 | 0.8970 | 0.8970 | 0.8970 | 0.8970 |

| 0.0015 | 14.0 | 3710 | 0.0930 | 0.9008 | 0.9008 | 0.9008 | 0.9008 |

| 0.0012 | 15.0 | 3975 | 0.1008 | 0.9003 | 0.9003 | 0.9003 | 0.9003 |

| 0.0015 | 16.0 | 4240 | 0.0987 | 0.9017 | 0.9017 | 0.9017 | 0.9017 |

| 0.0011 | 17.0 | 4505 | 0.1030 | 0.9017 | 0.9017 | 0.9017 | 0.9017 |

| 0.0011 | 18.0 | 4770 | 0.1051 | 0.9003 | 0.9003 | 0.9003 | 0.9003 |

| 0.0011 | 19.0 | 5035 | 0.1046 | 0.9036 | 0.9036 | 0.9036 | 0.9036 |

| 0.001 | 20.0 | 5300 | 0.1055 | 0.9026 | 0.9026 | 0.9026 | 0.9026 |

### Framework versions

- Transformers 4.38.1

- Pytorch 2.1.0+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

furrutiav/bert_qa_extractor_cockatiel_2022_best_both_ef_plus_nllf_z_value_over_subsample_it_630 | furrutiav | 2024-03-03T21:23:39Z | 4 | 0 | transformers | [

"transformers",

"safetensors",

"bert",

"feature-extraction",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

]

| feature-extraction | 2024-03-03T21:23:10Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

Phudish/Merged_imdb | Phudish | 2024-03-03T21:01:20Z | 3 | 0 | transformers | [

"transformers",

"safetensors",

"gpt2",

"text-classification",

"mergekit",

"merge",

"arxiv:2203.05482",

"base_model:Phudish/imdb_finetune_epoch_1_gpt2",

"base_model:merge:Phudish/imdb_finetune_epoch_1_gpt2",

"base_model:Phudish/imdb_finetune_epoch_5_gpt2",

"base_model:merge:Phudish/imdb_finetune_epoch_5_gpt2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-classification | 2024-03-03T21:01:05Z | ---

base_model:

- Phudish/imdb_finetune_epoch_1_gpt2

- Phudish/imdb_finetune_epoch_5_gpt2

library_name: transformers

tags:

- mergekit

- merge

---

# finetune_gpt2_merged_base

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [linear](https://arxiv.org/abs/2203.05482) merge method.

### Models Merged

The following models were included in the merge:

* [Phudish/imdb_finetune_epoch_1_gpt2](https://huggingface.co/Phudish/imdb_finetune_epoch_1_gpt2)

* [Phudish/imdb_finetune_epoch_5_gpt2](https://huggingface.co/Phudish/imdb_finetune_epoch_5_gpt2)

### Configuration

The following YAML configuration was used to produce this model:

```yaml

dtype: float16

merge_method: linear

slices:

- sources:

- layer_range: [0, 12]

model:

model:

path: Phudish/imdb_finetune_epoch_1_gpt2

parameters:

weight: 1.0

- layer_range: [0, 12]

model:

model:

path: Phudish/imdb_finetune_epoch_5_gpt2

parameters:

weight: 1.0

```

|

abritez/distilbert-base-uncased-finetuned-ner | abritez | 2024-03-03T20:52:36Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"safetensors",

"distilbert",

"token-classification",

"generated_from_trainer",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2024-03-01T20:54:15Z | ---

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-ner

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ner

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the CoNLL 2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0599

- Precision: 0.9240

- Recall: 0.9356

- F1: 0.9297

- Accuracy: 0.9837

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2479 | 1.0 | 878 | 0.0702 | 0.9011 | 0.9178 | 0.9094 | 0.9796 |

| 0.0518 | 2.0 | 1756 | 0.0607 | 0.9200 | 0.9306 | 0.9253 | 0.9826 |

| 0.031 | 3.0 | 2634 | 0.0599 | 0.9240 | 0.9356 | 0.9297 | 0.9837 |

### Framework versions

- Transformers 4.38.1

- Pytorch 2.1.0+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

wandb/gemma-2b-zephyr-dpo | wandb | 2024-03-03T20:50:46Z | 20 | 2 | transformers | [

"transformers",

"safetensors",

"gemma",

"text-generation",

"conversational",

"dataset:HuggingFaceH4/ultrafeedback_binarized",

"base_model:wandb/gemma-2b-zephyr-sft",

"base_model:finetune:wandb/gemma-2b-zephyr-sft",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2024-02-29T08:40:28Z | ---

license: other

library_name: transformers

datasets:

- HuggingFaceH4/ultrafeedback_binarized

base_model: wandb/gemma-2b-zephyr-sft

license_name: gemma-terms-of-use

license_link: https://ai.google.dev/gemma/terms

---

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="200" height="32"/>](https://wandb.ai/llm_surgery/gemma-zephyr)

# Gemma 2B Zephyr DPO

The [Zephyr](https://huggingface.co/HuggingFaceH4/zephyr-7b-beta) DPO recipe applied on top of SFT finetuned Gemma 2B

## Model description

- **Model type:** A 8.5B parameter GPT-like model fine-tuned on a mix of publicly available, synthetic datasets.

- **Language(s) (NLP):** Primarily English

- **Finetuned from model:** [wandb/gemma-2b-zephyr-sft](https://huggingface.co/wandb/gemma-2b-zephyr-sft/)

## Recipe

We trained using the DPO script in [alignment handbook recipe](https://github.com/huggingface/alignment-handbook/blob/main/scripts/run_dpo.py) and logging to W&B

Visit the [W&B workspace here](https://wandb.ai/llm_surgery/gemma-zephyr?nw=nwusercapecape)

## License

This model has the same license as the [original Gemma model collection](https://ai.google.dev/gemma/terms)

## Compute provided by [Lambda Labs](https://lambdalabs.com/) - 8xA100 80GB node

around 13 hours of training

|

azizksar/train_mistral_v3 | azizksar | 2024-03-03T20:45:27Z | 0 | 0 | peft | [

"peft",

"safetensors",

"generated_from_trainer",

"base_model:mistralai/Mistral-7B-v0.1",

"base_model:adapter:mistralai/Mistral-7B-v0.1",

"license:apache-2.0",

"region:us"

]

| null | 2024-03-03T20:45:25Z | ---

license: apache-2.0

library_name: peft

tags:

- generated_from_trainer

base_model: mistralai/Mistral-7B-v0.1

model-index:

- name: train_mistral_v3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# train_mistral_v3

This model is a fine-tuned version of [mistralai/Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8274

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.1783 | 10.0 | 240 | 1.8274 |

### Framework versions

- PEFT 0.9.1.dev0

- Transformers 4.39.0.dev0

- Pytorch 2.1.2

- Datasets 2.1.0

- Tokenizers 0.15.2 |

Caesaripse/mistral7binstruct_summarize | Caesaripse | 2024-03-03T20:44:04Z | 0 | 0 | peft | [

"peft",

"tensorboard",

"safetensors",

"trl",

"sft",

"generated_from_trainer",

"dataset:generator",

"base_model:mistralai/Mistral-7B-Instruct-v0.2",

"base_model:adapter:mistralai/Mistral-7B-Instruct-v0.2",

"license:apache-2.0",

"region:us"

]

| null | 2024-03-03T20:44:01Z | ---

license: apache-2.0

library_name: peft

tags:

- trl

- sft

- generated_from_trainer

datasets:

- generator

base_model: mistralai/Mistral-7B-Instruct-v0.2

model-index:

- name: mistral7binstruct_summarize

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mistral7binstruct_summarize

This model is a fine-tuned version of [mistralai/Mistral-7B-Instruct-v0.2](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4778

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- lr_scheduler_warmup_steps: 0.03

- training_steps: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.7382 | 0.22 | 25 | 1.5653 |

| 1.529 | 0.43 | 50 | 1.4778 |

### Framework versions

- PEFT 0.9.0

- Transformers 4.38.2

- Pytorch 2.1.0+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2 |

Sachioster/mistral-ft | Sachioster | 2024-03-03T20:43:25Z | 0 | 0 | peft | [

"peft",

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:TheBloke/Mistral-7B-Instruct-v0.2-GPTQ",

"base_model:adapter:TheBloke/Mistral-7B-Instruct-v0.2-GPTQ",

"license:apache-2.0",

"region:us"

]

| null | 2024-03-03T20:43:22Z | ---

license: apache-2.0

library_name: peft

tags:

- generated_from_trainer

base_model: TheBloke/Mistral-7B-Instruct-v0.2-GPTQ

model-index:

- name: mistral-ft

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mistral-ft

This model is a fine-tuned version of [TheBloke/Mistral-7B-Instruct-v0.2-GPTQ](https://huggingface.co/TheBloke/Mistral-7B-Instruct-v0.2-GPTQ) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9210

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.9822 | 0.99 | 42 | 1.5521 |

| 1.4408 | 1.99 | 84 | 1.3540 |

| 1.2501 | 2.98 | 126 | 1.2406 |

| 1.0744 | 4.0 | 169 | 1.1296 |

| 0.9751 | 4.99 | 211 | 1.0512 |

| 0.8817 | 5.99 | 253 | 0.9931 |

| 0.8158 | 6.98 | 295 | 0.9602 |

| 0.7383 | 8.0 | 338 | 0.9393 |

| 0.7179 | 8.99 | 380 | 0.9236 |

| 0.6866 | 9.94 | 420 | 0.9210 |

### Framework versions

- PEFT 0.9.0

- Transformers 4.38.1

- Pytorch 2.1.0+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2 |

ceofast/emotiom_analysis_with_distilbert | ceofast | 2024-03-03T20:37:12Z | 3 | 0 | transformers | [

"transformers",

"tf",

"distilbert",

"text-classification",

"generated_from_keras_callback",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2024-03-03T20:00:15Z | ---

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- generated_from_keras_callback

model-index:

- name: ceofast/emotiom_analysis_with_distilbert

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# ceofast/emotiom_analysis_with_distilbert

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.1305

- Validation Loss: 0.1559

- Train Accuracy: 0.932

- Epoch: 1

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': 5e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Accuracy | Epoch |

|:----------:|:---------------:|:--------------:|:-----:|

| 0.3838 | 0.1456 | 0.936 | 0 |

| 0.1305 | 0.1559 | 0.932 | 1 |

### Framework versions

- Transformers 4.37.2

- TensorFlow 2.10.1

- Datasets 2.17.0

- Tokenizers 0.15.1

|

bofenghuang/whisper-large-v3-french-distil-dec8 | bofenghuang | 2024-03-03T20:29:07Z | 491 | 3 | transformers | [

"transformers",

"safetensors",

"whisper",

"automatic-speech-recognition",

"hf-asr-leaderboard",

"fr",

"dataset:mozilla-foundation/common_voice_13_0",

"dataset:facebook/multilingual_librispeech",

"dataset:facebook/voxpopuli",

"dataset:google/fleurs",

"dataset:gigant/african_accented_french",

"arxiv:2311.00430",

"arxiv:2212.04356",

"license:mit",

"model-index",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2023-12-25T12:49:51Z | ---

license: mit

language: fr

library_name: transformers

pipeline_tag: automatic-speech-recognition

thumbnail: null

tags:

- automatic-speech-recognition

- hf-asr-leaderboard

datasets:

- mozilla-foundation/common_voice_13_0

- facebook/multilingual_librispeech

- facebook/voxpopuli

- google/fleurs

- gigant/african_accented_french

metrics:

- wer

model-index:

- name: whisper-large-v3-french-distil-dec8

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 13.0

type: mozilla-foundation/common_voice_13_0

config: fr

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 7.62

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Multilingual LibriSpeech (MLS)

type: facebook/multilingual_librispeech

config: french

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 3.80

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: VoxPopuli

type: facebook/voxpopuli

config: fr

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 8.85

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Fleurs

type: google/fleurs

config: fr_fr

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 5.40

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: African Accented French

type: gigant/african_accented_french

config: fr

split: test

args:

language: fr

metrics:

- name: WER

type: wer

value: 4.18

---

# Whisper-Large-V3-French-Distil-Dec8

Whisper-Large-V3-French-Distil represents a series of distilled versions of [Whisper-Large-V3-French](https://huggingface.co/bofenghuang/whisper-large-v3-french), achieved by reducing the number of decoder layers from 32 to 16, 8, 4, or 2 and distilling using a large-scale dataset, as outlined in this [paper](https://arxiv.org/abs/2311.00430).

The distilled variants reduce memory usage and inference time while maintaining performance (based on the retained number of layers) and mitigating the risk of hallucinations, particularly in long-form transcriptions. Moreover, they can be seamlessly combined with the original Whisper-Large-V3-French model for speculative decoding, resulting in improved inference speed and consistent outputs compared to using the standalone model.

This model has been converted into various formats, facilitating its usage across different libraries, including transformers, openai-whisper, fasterwhisper, whisper.cpp, candle, mlx, etc.

## Table of Contents

- [Performance](#performance)

- [Usage](#usage)

- [Hugging Face Pipeline](#hugging-face-pipeline)

- [Hugging Face Low-level APIs](#hugging-face-low-level-apis)

- [Speculative Decoding](#speculative-decoding)

- [OpenAI Whisper](#openai-whisper)

- [Faster Whisper](#faster-whisper)

- [Whisper.cpp](#whispercpp)

- [Candle](#candle)

- [MLX](#mlx)

- [Training details](#training-details)

- [Acknowledgements](#acknowledgements)

## Performance

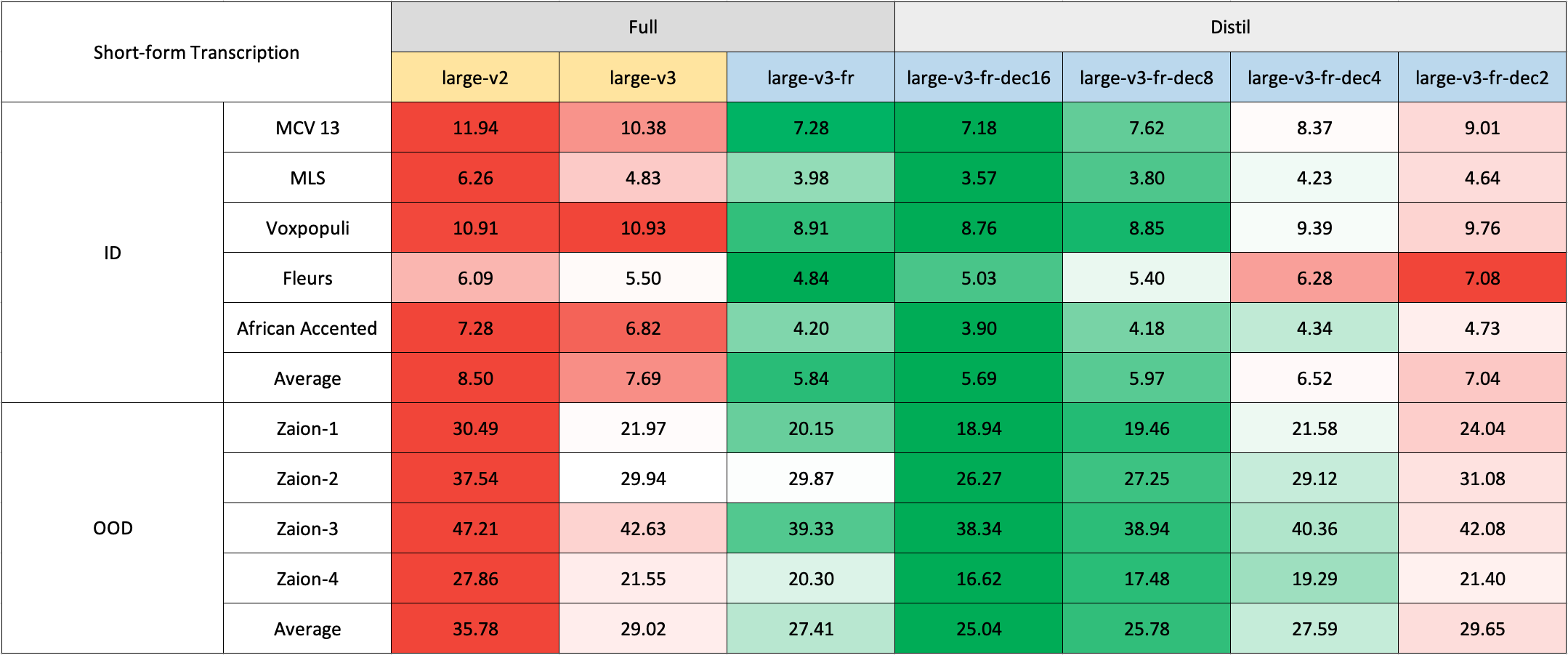

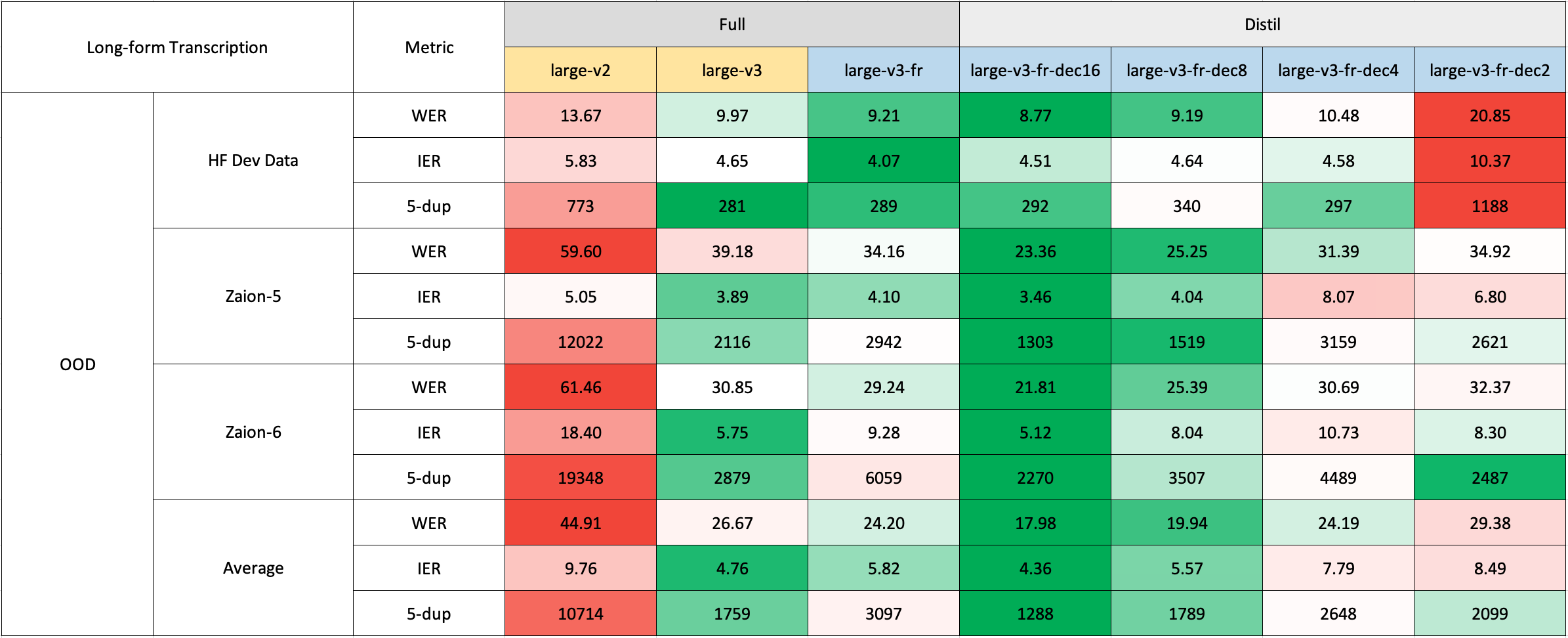

We evaluated our model on both short and long-form transcriptions, and also tested it on both in-distribution and out-of-distribution datasets to conduct a comprehensive analysis assessing its accuracy, generalizability, and robustness.

Please note that the reported WER is the result after converting numbers to text, removing punctuation (except for apostrophes and hyphens), and converting all characters to lowercase.

All evaluation results on the public datasets can be found [here](https://drive.google.com/drive/folders/1rFIh6yXRVa9RZ0ieZoKiThFZgQ4STPPI?usp=drive_link).

### Short-Form Transcription

Due to the lack of readily available out-of-domain (OOD) and long-form test sets in French, we evaluated using internal test sets from [Zaion Lab](https://zaion.ai/). These sets comprise human-annotated audio-transcription pairs from call center conversations, which are notable for their significant background noise and domain-specific terminology.

### Long-Form Transcription

The long-form transcription was run using the 🤗 Hugging Face pipeline for quicker evaluation. Audio files were segmented into 30-second chunks and processed in parallel.

## Usage

### Hugging Face Pipeline

The model can easily used with the 🤗 Hugging Face [`pipeline`](https://huggingface.co/docs/transformers/main_classes/pipelines#transformers.AutomaticSpeechRecognitionPipeline) class for audio transcription.

For long-form transcription (> 30 seconds), you can activate the process by passing the `chunk_length_s` argument. This approach segments the audio into smaller segments, processes them in parallel, and then joins them at the strides by finding the longest common sequence. While this chunked long-form approach may have a slight compromise in performance compared to OpenAI's sequential algorithm, it provides 9x faster inference speed.

```python

import torch

from datasets import load_dataset

from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor, pipeline

device = "cuda:0" if torch.cuda.is_available() else "cpu"

torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model

model_name_or_path = "bofenghuang/whisper-large-v3-french-distil-dec8"

processor = AutoProcessor.from_pretrained(model_name_or_path)

model = AutoModelForSpeechSeq2Seq.from_pretrained(

model_name_or_path,

torch_dtype=torch_dtype,

low_cpu_mem_usage=True,

)

model.to(device)

# Init pipeline

pipe = pipeline(

"automatic-speech-recognition",

model=model,

feature_extractor=processor.feature_extractor,

tokenizer=processor.tokenizer,

torch_dtype=torch_dtype,

device=device,

# chunk_length_s=30, # for long-form transcription

max_new_tokens=128,

)

# Example audio

dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")

sample = dataset[0]["audio"]

# Run pipeline

result = pipe(sample)

print(result["text"])

```

### Hugging Face Low-level APIs

You can also use the 🤗 Hugging Face low-level APIs for transcription, offering greater control over the process, as demonstrated below:

```python

import torch

from datasets import load_dataset

from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor

device = "cuda:0" if torch.cuda.is_available() else "cpu"

torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model

model_name_or_path = "bofenghuang/whisper-large-v3-french-distil-dec8"

processor = AutoProcessor.from_pretrained(model_name_or_path)

model = AutoModelForSpeechSeq2Seq.from_pretrained(

model_name_or_path,

torch_dtype=torch_dtype,

low_cpu_mem_usage=True,

)

model.to(device)

# Example audio

dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")

sample = dataset[0]["audio"]

# Extract feautres

input_features = processor(

sample["array"], sampling_rate=sample["sampling_rate"], return_tensors="pt"

).input_features

# Generate tokens

predicted_ids = model.generate(

input_features.to(dtype=torch_dtype).to(device), max_new_tokens=128

)

# Detokenize to text

transcription = processor.batch_decode(predicted_ids, skip_special_tokens=True)[0]

print(transcription)

```

### Speculative Decoding

[Speculative decoding](https://huggingface.co/blog/whisper-speculative-decoding) can be achieved using a draft model, essentially a distilled version of Whisper. This approach guarantees identical outputs to using the main Whisper model alone, offers a 2x faster inference speed, and incurs only a slight increase in memory overhead.

Since the distilled Whisper has the same encoder as the original, only its decoder need to be loaded, and encoder outputs are shared between the main and draft models during inference.

Using speculative decoding with the Hugging Face pipeline is simple - just specify the `assistant_model` within the generation configurations.

```python

import torch

from datasets import load_dataset

from transformers import (

AutoModelForCausalLM,

AutoModelForSpeechSeq2Seq,

AutoProcessor,

pipeline,

)

device = "cuda:0" if torch.cuda.is_available() else "cpu"

torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model

model_name_or_path = "bofenghuang/whisper-large-v3-french"

processor = AutoProcessor.from_pretrained(model_name_or_path)

model = AutoModelForSpeechSeq2Seq.from_pretrained(

model_name_or_path,

torch_dtype=torch_dtype,

low_cpu_mem_usage=True,

)

model.to(device)

# Load draft model

assistant_model_name_or_path = "bofenghuang/whisper-large-v3-french-distil-dec2"

assistant_model = AutoModelForCausalLM.from_pretrained(

assistant_model_name_or_path,

torch_dtype=torch_dtype,

low_cpu_mem_usage=True,

)

assistant_model.to(device)

# Init pipeline

pipe = pipeline(

"automatic-speech-recognition",

model=model,

feature_extractor=processor.feature_extractor,

tokenizer=processor.tokenizer,

torch_dtype=torch_dtype,

device=device,

generate_kwargs={"assistant_model": assistant_model},

max_new_tokens=128,

)

# Example audio

dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")

sample = dataset[0]["audio"]

# Run pipeline

result = pipe(sample)

print(result["text"])

```

### OpenAI Whisper

You can also employ the sequential long-form decoding algorithm with a sliding window and temperature fallback, as outlined by OpenAI in their original [paper](https://arxiv.org/abs/2212.04356).

First, install the [openai-whisper](https://github.com/openai/whisper) package:

```bash

pip install -U openai-whisper

```

Then, download the converted model:

```bash

python -c "from huggingface_hub import hf_hub_download; hf_hub_download(repo_id='bofenghuang/whisper-large-v3-french-distil-dec8', filename='original_model.pt', local_dir='./models/whisper-large-v3-french-distil-dec8')"

```

Now, you can transcirbe audio files by following the usage instructions provided in the repository:

```python

import whisper

from datasets import load_dataset

# Load model

model = whisper.load_model("./models/whisper-large-v3-french-distil-dec8/original_model.pt")

# Example audio

dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")

sample = dataset[0]["audio"]["array"].astype("float32")

# Transcribe

result = model.transcribe(sample, language="fr")

print(result["text"])

```

### Faster Whisper

Faster Whisper is a reimplementation of OpenAI's Whisper models and the sequential long-form decoding algorithm in the [CTranslate2](https://github.com/OpenNMT/CTranslate2) format.

Compared to openai-whisper, it offers up to 4x faster inference speed, while consuming less memory. Additionally, the model can be quantized into int8, further enhancing its efficiency on both CPU and GPU.

First, install the [faster-whisper](https://github.com/SYSTRAN/faster-whisper) package:

```bash

pip install faster-whisper

```

Then, download the model converted to the CTranslate2 format:

```bash

python -c "from huggingface_hub import snapshot_download; snapshot_download(repo_id='bofenghuang/whisper-large-v3-french-distil-dec8', local_dir='./models/whisper-large-v3-french-distil-dec8', allow_patterns='ctranslate2/*')"

```

Now, you can transcirbe audio files by following the usage instructions provided in the repository:

```python

from datasets import load_dataset

from faster_whisper import WhisperModel

# Load model

model = WhisperModel("./models/whisper-large-v3-french-distil-dec8/ctranslate2", device="cuda", compute_type="float16") # Run on GPU with FP16

# Example audio

dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")

sample = dataset[0]["audio"]["array"].astype("float32")

segments, info = model.transcribe(sample, beam_size=5, language="fr")

for segment in segments:

print("[%.2fs -> %.2fs] %s" % (segment.start, segment.end, segment.text))

```

### Whisper.cpp

Whisper.cpp is a reimplementation of OpenAI's Whisper models, crafted in plain C/C++ without any dependencies. It offers compatibility with various backends and platforms.

Additionally, the model can be quantized to either 4-bit or 5-bit integers, further enhancing its efficiency.

First, clone and build the [whisper.cpp](https://github.com/ggerganov/whisper.cpp) repository:

```bash

git clone https://github.com/ggerganov/whisper.cpp.git

cd whisper.cpp

# build the main example

make

```

Next, download the converted ggml weights from the Hugging Face Hub:

```bash

# Download model quantized with Q5_0 method

python -c "from huggingface_hub import hf_hub_download; hf_hub_download(repo_id='bofenghuang/whisper-large-v3-french-distil-dec8', filename='ggml-model-q5_0.bin', local_dir='./models/whisper-large-v3-french-distil-dec8')"

```

Now, you can transcribe an audio file using the following command:

```bash

./main -m ./models/whisper-large-v3-french-distil-dec8/ggml-model-q5_0.bin -l fr -f /path/to/audio/file --print-colors

```

### Candle

[Candle-whisper](https://github.com/huggingface/candle/tree/main/candle-examples/examples/whisper) is a reimplementation of OpenAI's Whisper models in the candle format - a lightweight ML framework built in Rust.

First, clone the [candle](https://github.com/huggingface/candle) repository:

```bash

git clone https://github.com/huggingface/candle.git

cd candle/candle-examples/examples/whisper

```

Transcribe an audio file using the following command:

```bash

cargo run --example whisper --release -- --model large-v3 --model-id bofenghuang/whisper-large-v3-french-distil-dec8 --language fr --input /path/to/audio/file

```

In order to use CUDA add `--features cuda` to the example command line:

```bash

cargo run --example whisper --release --features cuda -- --model large-v3 --model-id bofenghuang/whisper-large-v3-french-distil-dec8 --language fr --input /path/to/audio/file

```

### MLX

[MLX-Whisper](https://github.com/ml-explore/mlx-examples/tree/main/whisper) is a reimplementation of OpenAI's Whisper models in the [MLX](https://github.com/ml-explore/mlx) format - a ML framework on Apple silicon. It supports features like lazy computation, unified memory management, etc.

First, clone the [MLX Examples](https://github.com/ml-explore/mlx-examples) repository:

```bash

git clone https://github.com/ml-explore/mlx-examples.git

cd mlx-examples/whisper

```

Next, install the dependencies:

```bash

pip install -r requirements.txt

```

Download the pytorch checkpoint in the original OpenAI format and convert it into MLX format (We haven't included the converted version here since the repository is already heavy and the conversion is very fast):

```bash

# Download

python -c "from huggingface_hub import hf_hub_download; hf_hub_download(repo_id='bofenghuang/whisper-large-v3-french-distil-dec8', filename='original_model.pt', local_dir='./models/whisper-large-v3-french-distil-dec8')"

# Convert into .npz

python convert.py --torch-name-or-path ./models/whisper-large-v3-french-distil-dec8/original_model.pt --mlx-path ./mlx_models/whisper-large-v3-french-distil-dec8

```

Now, you can transcribe audio with:

```python

import whisper

result = whisper.transcribe("/path/to/audio/file", path_or_hf_repo="mlx_models/whisper-large-v3-french-distil-dec8", language="fr")

print(result["text"])

```

## Training details

We've collected a composite dataset consisting of over 2,500 hours of French speech recognition data, which incldues datasets such as [Common Voice 13.0](https://huggingface.co/datasets/mozilla-foundation/common_voice_13_0), [Multilingual LibriSpeech](https://huggingface.co/datasets/facebook/multilingual_librispeech), [Voxpopuli](https://huggingface.co/datasets/facebook/voxpopuli), [Fleurs](https://huggingface.co/datasets/google/fleurs), [Multilingual TEDx](https://www.openslr.org/100/), [MediaSpeech](https://www.openslr.org/108/), [African Accented French](https://huggingface.co/datasets/gigant/african_accented_french), etc.

Given that some datasets, like MLS, only offer text without case or punctuation, we employed a customized version of 🤗 [Speechbox](https://github.com/huggingface/speechbox) to restore case and punctuation from a limited set of symbols using the [bofenghuang/whisper-large-v2-cv11-french](bofenghuang/whisper-large-v2-cv11-french) model.

However, even within these datasets, we observed certain quality issues. These ranged from mismatches between audio and transcription in terms of language or content, poorly segmented utterances, to missing words in scripted speech, etc. We've built a pipeline to filter out many of these problematic utterances, aiming to enhance the dataset's quality. As a result, we excluded more than 10% of the data, and when we retrained the model, we noticed a significant reduction of hallucination.

For training, we employed the [script](https://github.com/huggingface/distil-whisper/blob/main/training/run_distillation.py) available in the 🤗 Distil-Whisper repository. The model training took place on the [Jean-Zay supercomputer](http://www.idris.fr/eng/jean-zay/jean-zay-presentation-eng.html) at GENCI, and we extend our gratitude to the IDRIS team for their responsive support throughout the project.

## Acknowledgements

- OpenAI for creating and open-sourcing the [Whisper model](https://arxiv.org/abs/2212.04356)

- 🤗 Hugging Face for integrating the Whisper model and providing the training codebase within the [Transformers](https://github.com/huggingface/transformers) and [Distil-Whisper](https://github.com/huggingface/distil-whisper) repository

- [Genci](https://genci.fr/) for their generous contribution of GPU hours to this project

|

furrutiav/bert_qa_extractor_cockatiel_2022_best_both_ef_plus_nllf_v0_z_value_it_505 | furrutiav | 2024-03-03T20:28:24Z | 3 | 0 | transformers | [

"transformers",

"safetensors",

"bert",

"feature-extraction",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

]

| feature-extraction | 2024-03-03T20:27:58Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

a-h-m-e-d/mistral_7b-instruct-guanaco | a-h-m-e-d | 2024-03-03T20:27:53Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

]

| null | 2024-02-26T14:59:16Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

salohnana2018/ABSA-SentencePair-DAPT-HARDAR-bert-base-Camel-MSA-ru2 | salohnana2018 | 2024-03-03T20:22:26Z | 3 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"bert",

"text-classification",

"generated_from_trainer",

"base_model:salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD",

"base_model:finetune:salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2024-03-03T19:31:34Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

- precision

- recall

base_model: salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD

model-index:

- name: ABSA-SentencePair-DAPT-HARDAR-bert-base-Camel-MSA-ru2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ABSA-SentencePair-DAPT-HARDAR-bert-base-Camel-MSA-ru2

This model is a fine-tuned version of [salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD](https://huggingface.co/salohnana2018/CAMEL-BERT-MSA-domianAdaption-Single-ABSA-HARD) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1140

- Accuracy: 0.8956

- F1: 0.8956

- Precision: 0.8956

- Recall: 0.8956

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision | Recall |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:---------:|:------:|

| 0.0556 | 1.0 | 265 | 0.0421 | 0.8842 | 0.8842 | 0.8842 | 0.8842 |

| 0.0372 | 2.0 | 530 | 0.0368 | 0.8828 | 0.8828 | 0.8828 | 0.8828 |

| 0.0231 | 3.0 | 795 | 0.0426 | 0.8828 | 0.8828 | 0.8828 | 0.8828 |

| 0.0145 | 4.0 | 1060 | 0.0601 | 0.8809 | 0.8809 | 0.8809 | 0.8809 |

| 0.0101 | 5.0 | 1325 | 0.0573 | 0.8842 | 0.8842 | 0.8842 | 0.8842 |

| 0.0076 | 6.0 | 1590 | 0.0621 | 0.8856 | 0.8856 | 0.8856 | 0.8856 |

| 0.0051 | 7.0 | 1855 | 0.0621 | 0.8866 | 0.8866 | 0.8866 | 0.8866 |

| 0.0044 | 8.0 | 2120 | 0.0709 | 0.8899 | 0.8899 | 0.8899 | 0.8899 |

| 0.0035 | 9.0 | 2385 | 0.0827 | 0.8899 | 0.8899 | 0.8899 | 0.8899 |

| 0.0028 | 10.0 | 2650 | 0.0895 | 0.8946 | 0.8946 | 0.8946 | 0.8946 |

| 0.0024 | 11.0 | 2915 | 0.0859 | 0.8908 | 0.8908 | 0.8908 | 0.8908 |

| 0.0021 | 12.0 | 3180 | 0.0897 | 0.8847 | 0.8847 | 0.8847 | 0.8847 |

| 0.0017 | 13.0 | 3445 | 0.0994 | 0.8989 | 0.8989 | 0.8989 | 0.8989 |

| 0.0014 | 14.0 | 3710 | 0.1056 | 0.8937 | 0.8937 | 0.8937 | 0.8937 |

| 0.0014 | 15.0 | 3975 | 0.1044 | 0.8941 | 0.8941 | 0.8941 | 0.8941 |

| 0.0012 | 16.0 | 4240 | 0.1105 | 0.8951 | 0.8951 | 0.8951 | 0.8951 |

| 0.0012 | 17.0 | 4505 | 0.1119 | 0.8956 | 0.8956 | 0.8956 | 0.8956 |

| 0.0011 | 18.0 | 4770 | 0.1088 | 0.8965 | 0.8965 | 0.8965 | 0.8965 |

| 0.001 | 19.0 | 5035 | 0.1132 | 0.8979 | 0.8979 | 0.8979 | 0.8979 |

| 0.001 | 20.0 | 5300 | 0.1140 | 0.8956 | 0.8956 | 0.8956 | 0.8956 |

### Framework versions

- Transformers 4.38.1

- Pytorch 2.1.0+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

LarryAIDraw/ichinose_honamiV1 | LarryAIDraw | 2024-03-03T20:22:08Z | 0 | 0 | null | [

"license:creativeml-openrail-m",

"region:us"

]

| null | 2024-03-03T20:18:18Z | ---

license: creativeml-openrail-m

---

https://civitai.com/models/18363/honami-ichinose-cote |

core-3/kuno-royale-v2-7b-GGUF | core-3 | 2024-03-03T20:21:57Z | 3 | 2 | null | [

"gguf",

"base_model:core-3/kuno-royale-v2-7b",

"base_model:quantized:core-3/kuno-royale-v2-7b",

"license:cc-by-nc-4.0",

"region:us"

]

| null | 2024-03-01T18:06:43Z | ---

base_model: core-3/kuno-royale-v2-7b

inference: false

license: cc-by-nc-4.0

model_creator: core-3

model_name: kuno-royale-v2-7b

model_type: mistral

quantized_by: core-3

---

## kuno-royale-v2-7b-GGUF

Some GGUF quants of [core-3/kuno-royale-v2-7b](https://huggingface.co/core-3/kuno-royale-v2-7b) |

raoulmago/riconoscimento_documenti | raoulmago | 2024-03-03T19:55:01Z | 5 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"swin",

"image-classification",

"generated_from_trainer",

"base_model:microsoft/swin-tiny-patch4-window7-224",

"base_model:finetune:microsoft/swin-tiny-patch4-window7-224",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2024-03-03T19:02:23Z | ---

license: apache-2.0

base_model: microsoft/swin-tiny-patch4-window7-224

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: riconoscimento_documenti

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# riconoscimento_documenti

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0000

- Accuracy: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 1 | 1.9560 | 0.0 |

| No log | 2.0 | 3 | 1.4216 | 0.7375 |

| No log | 3.0 | 5 | 0.6008 | 1.0 |

| No log | 4.0 | 6 | 0.2696 | 1.0 |

| No log | 5.0 | 7 | 0.0996 | 1.0 |

| No log | 6.0 | 9 | 0.0089 | 1.0 |

| 0.4721 | 7.0 | 11 | 0.0011 | 1.0 |

| 0.4721 | 8.0 | 12 | 0.0005 | 1.0 |

| 0.4721 | 9.0 | 13 | 0.0002 | 1.0 |

| 0.4721 | 10.0 | 15 | 0.0001 | 1.0 |

| 0.4721 | 11.0 | 17 | 0.0000 | 1.0 |

| 0.4721 | 12.0 | 18 | 0.0000 | 1.0 |

| 0.4721 | 13.0 | 19 | 0.0000 | 1.0 |

| 0.0003 | 14.0 | 21 | 0.0000 | 1.0 |

| 0.0003 | 15.0 | 23 | 0.0000 | 1.0 |

| 0.0003 | 16.0 | 24 | 0.0000 | 1.0 |

| 0.0003 | 17.0 | 25 | 0.0000 | 1.0 |

| 0.0003 | 18.0 | 27 | 0.0000 | 1.0 |

| 0.0003 | 19.0 | 29 | 0.0000 | 1.0 |

| 0.0 | 20.0 | 30 | 0.0000 | 1.0 |

| 0.0 | 21.0 | 31 | 0.0000 | 1.0 |

| 0.0 | 22.0 | 33 | 0.0000 | 1.0 |

| 0.0 | 23.0 | 35 | 0.0000 | 1.0 |

| 0.0 | 24.0 | 36 | 0.0000 | 1.0 |

| 0.0 | 25.0 | 37 | 0.0000 | 1.0 |

| 0.0 | 26.0 | 39 | 0.0000 | 1.0 |

| 0.0 | 27.0 | 41 | 0.0000 | 1.0 |

| 0.0 | 28.0 | 42 | 0.0000 | 1.0 |