modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-07-15 12:29:39

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 521

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-07-15 12:28:52

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

jackoyoungblood/ppo-LunarLander-v2c | jackoyoungblood | 2022-08-05T19:46:18Z | 3 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-07-29T23:03:33Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 267.50 +/- 18.00

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

mrm8488/dqn-SpaceInvadersNoFrameskip-v4-3 | mrm8488 | 2022-08-05T19:36:16Z | 6 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-08-05T19:35:48Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- metrics:

- type: mean_reward

value: 349.00 +/- 97.82

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```

# Download model and save it into the logs/ folder

python -m utils.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga mrm8488 -f logs/

python enjoy.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python train.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m utils.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga mrm8488

```

## Hyperparameters

```python

OrderedDict([('batch_size', 1024),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

|

Swervin7s/DialogGPT-medium-AnakinTwo | Swervin7s | 2022-08-05T19:24:18Z | 0 | 0 | null | [

"conersational",

"region:us"

]

| null | 2022-08-05T19:21:52Z | ---

tags:

- conersational

---

|

skr1125/xlm-roberta-base-finetuned-panx-de | skr1125 | 2022-08-05T17:50:14Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-08-02T01:50:37Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.de

metrics:

- name: F1

type: f1

value: 0.863677639046538

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1343

- F1: 0.8637

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2578 | 1.0 | 525 | 0.1562 | 0.8273 |

| 0.1297 | 2.0 | 1050 | 0.1330 | 0.8474 |

| 0.0809 | 3.0 | 1575 | 0.1343 | 0.8637 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.0+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

Galeros/q-Taxi-v3 | Galeros | 2022-08-05T16:33:40Z | 0 | 0 | null | [

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-08-05T16:33:32Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- metrics:

- type: mean_reward

value: 7.52 +/- 2.76

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="Galeros/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

jasheershihab/TEST2ppo-LunarLander-v2 | jasheershihab | 2022-08-05T13:21:55Z | 3 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-07-13T12:32:46Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 28.44 +/- 165.66

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

datajello/lunar-test-v1 | datajello | 2022-08-05T13:18:24Z | 6 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-08-05T12:42:13Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 224.66 +/- 40.94

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

apjanco/candy-first | apjanco | 2022-08-05T13:03:29Z | 56 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"huggingpics",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2022-08-05T13:03:25Z | ---

tags:

- image-classification

- pytorch

- huggingpics

metrics:

- accuracy

model-index:

- name: candy-first

results:

- task:

name: Image Classification

type: image-classification

metrics:

- name: Accuracy

type: accuracy

value: 0.7436399459838867

---

# candy-first

An initial attempt to identify candy in images.

## Example Images

#### airheads

#### candy corn

#### caramel

#### chips

#### chocolate

#### fruit

#### gum

#### haribo

#### jelly beans

#### lollipop

#### m&ms

#### marshmallow

#### mentos

#### mint

#### nerds

#### peeps

#### pez

#### popcorn

#### pretzel

#### reeses

#### seeds

#### skittles

#### snickers

#### soda

#### sour

#### swedish fish

#### taffy

#### tootsie

#### twix

#### twizzlers

#### warheads

#### whoppers

|

huggingtweets/calm-headspace | huggingtweets | 2022-08-05T09:27:25Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-08-05T09:26:46Z | ---

language: en

thumbnail: http://www.huggingtweets.com/calm-headspace/1659691640977/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/547731071479996417/53RFXHu1_400x400.png')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1157021554280058880/yWiCuBSR_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Calm & Headspace</div>

<div style="text-align: center; font-size: 14px;">@calm-headspace</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Calm & Headspace.

| Data | Calm | Headspace |

| --- | --- | --- |

| Tweets downloaded | 3249 | 3250 |

| Retweets | 49 | 10 |

| Short tweets | 144 | 446 |

| Tweets kept | 3056 | 2794 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/190qaia3/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @calm-headspace's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/1g7llfp4) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/1g7llfp4/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/calm-headspace')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

Zaib/Vulnerability-detection | Zaib | 2022-08-05T08:47:07Z | 13 | 5 | transformers | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-07-16T09:16:45Z | ---

tags:

- generated_from_trainer

model-index:

- name: Vulnerability-detection

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Vulnerability-detection

This model is a fine-tuned version of [mrm8488/codebert-base-finetuned-detect-insecure-code](https://huggingface.co/mrm8488/codebert-base-finetuned-detect-insecure-code) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5778

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

okho0653/Bio_ClinicalBERT-zero-shot-finetuned-50cad-50noncad-optimal | okho0653 | 2022-08-05T05:29:50Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-08-05T05:12:27Z | ---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: Bio_ClinicalBERT-zero-shot-finetuned-50cad-50noncad-optimal

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Bio_ClinicalBERT-zero-shot-finetuned-50cad-50noncad-optimal

This model is a fine-tuned version of [emilyalsentzer/Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 9.8836

- Accuracy: 0.5

- F1: 0.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.2

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

alex-apostolo/roberta-base-filtered-cuad | alex-apostolo | 2022-08-05T05:28:06Z | 25 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"question-answering",

"generated_from_trainer",

"dataset:alex-apostolo/filtered-cuad",

"license:mit",

"endpoints_compatible",

"region:us"

]

| question-answering | 2022-08-04T09:12:07Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- alex-apostolo/filtered-cuad

model-index:

- name: roberta-base-filtered-cuad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-filtered-cuad

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the cuad dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0396

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 0.0502 | 1.0 | 8442 | 0.0467 |

| 0.0397 | 2.0 | 16884 | 0.0436 |

| 0.032 | 3.0 | 25326 | 0.0396 |

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

zhiguoxu/chinese-roberta-wwm-ext-finetuned2 | zhiguoxu | 2022-08-05T03:45:08Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-08-03T07:54:52Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: chinese-roberta-wwm-ext-finetuned2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# chinese-roberta-wwm-ext-finetuned2

This model is a fine-tuned version of [hfl/chinese-roberta-wwm-ext](https://huggingface.co/hfl/chinese-roberta-wwm-ext) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1448

- Accuracy: 1.0

- F1: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 1.4081 | 1.0 | 3 | 0.9711 | 0.7273 | 0.6573 |

| 0.9516 | 2.0 | 6 | 0.8174 | 0.8182 | 0.8160 |

| 0.8945 | 3.0 | 9 | 0.6617 | 0.9091 | 0.9124 |

| 0.7042 | 4.0 | 12 | 0.5308 | 1.0 | 1.0 |

| 0.6641 | 5.0 | 15 | 0.4649 | 1.0 | 1.0 |

| 0.5731 | 6.0 | 18 | 0.4046 | 1.0 | 1.0 |

| 0.5132 | 7.0 | 21 | 0.3527 | 1.0 | 1.0 |

| 0.3999 | 8.0 | 24 | 0.3070 | 1.0 | 1.0 |

| 0.4198 | 9.0 | 27 | 0.2673 | 1.0 | 1.0 |

| 0.3677 | 10.0 | 30 | 0.2378 | 1.0 | 1.0 |

| 0.3545 | 11.0 | 33 | 0.2168 | 1.0 | 1.0 |

| 0.3237 | 12.0 | 36 | 0.1980 | 1.0 | 1.0 |

| 0.3122 | 13.0 | 39 | 0.1860 | 1.0 | 1.0 |

| 0.2802 | 14.0 | 42 | 0.1759 | 1.0 | 1.0 |

| 0.2552 | 15.0 | 45 | 0.1671 | 1.0 | 1.0 |

| 0.2475 | 16.0 | 48 | 0.1598 | 1.0 | 1.0 |

| 0.2259 | 17.0 | 51 | 0.1541 | 1.0 | 1.0 |

| 0.201 | 18.0 | 54 | 0.1492 | 1.0 | 1.0 |

| 0.2083 | 19.0 | 57 | 0.1461 | 1.0 | 1.0 |

| 0.2281 | 20.0 | 60 | 0.1448 | 1.0 | 1.0 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.12.0+cu102

- Datasets 2.1.0

- Tokenizers 0.12.1

|

tals/albert-base-vitaminc_wnei-fever | tals | 2022-08-05T02:25:41Z | 6 | 1 | transformers | [

"transformers",

"pytorch",

"albert",

"text-classification",

"dataset:tals/vitaminc",

"dataset:fever",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-03-02T23:29:05Z | ---

datasets:

- tals/vitaminc

- fever

---

# Details

Model used in [Get Your Vitamin C! Robust Fact Verification with Contrastive Evidence](https://aclanthology.org/2021.naacl-main.52/) (Schuster et al., NAACL 21`).

For more details see: https://github.com/TalSchuster/VitaminC

When using this model, please cite the paper.

# BibTeX entry and citation info

```bibtex

@inproceedings{schuster-etal-2021-get,

title = "Get Your Vitamin {C}! Robust Fact Verification with Contrastive Evidence",

author = "Schuster, Tal and

Fisch, Adam and

Barzilay, Regina",

booktitle = "Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",

month = jun,

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.naacl-main.52",

doi = "10.18653/v1/2021.naacl-main.52",

pages = "624--643",

abstract = "Typical fact verification models use retrieved written evidence to verify claims. Evidence sources, however, often change over time as more information is gathered and revised. In order to adapt, models must be sensitive to subtle differences in supporting evidence. We present VitaminC, a benchmark infused with challenging cases that require fact verification models to discern and adjust to slight factual changes. We collect over 100,000 Wikipedia revisions that modify an underlying fact, and leverage these revisions, together with additional synthetically constructed ones, to create a total of over 400,000 claim-evidence pairs. Unlike previous resources, the examples in VitaminC are contrastive, i.e., they contain evidence pairs that are nearly identical in language and content, with the exception that one supports a given claim while the other does not. We show that training using this design increases robustness{---}improving accuracy by 10{\%} on adversarial fact verification and 6{\%} on adversarial natural language inference (NLI). Moreover, the structure of VitaminC leads us to define additional tasks for fact-checking resources: tagging relevant words in the evidence for verifying the claim, identifying factual revisions, and providing automatic edits via factually consistent text generation.",

}

```

|

fzwd6666/NLTBert_multi_fine_tune_new | fzwd6666 | 2022-08-05T00:22:54Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-08-05T00:04:38Z | This model is a fine-tuned version of fzwd6666/Ged_bert_new with 4 layers on an NLT dataset. It achieves the following results on the evaluation set:

{'precision': 0.9795081967213115} {'recall': 0.989648033126294} {'f1': 0.984552008238929} {'accuracy': 0.9843227424749164}

Training hyperparameters:

learning_rate: 1e-4

train_batch_size: 8

eval_batch_size: 8

optimizer: AdamW with betas=(0.9,0.999) and epsilon=1e-08

weight_decay= 0.01

lr_scheduler_type: linear

num_epochs: 3

It achieves the following results on the test set:

Incorrect UD Padded:

{'precision': 0.6878048780487804} {'recall': 0.2863913337846987} {'f1': 0.4043977055449331} {'accuracy': 0.4722575180008471}

Incorrect UD Unigram:

{'precision': 0.6348314606741573} {'recall': 0.3060257278266757} {'f1': 0.4129739607126542} {'accuracy': 0.4557390936044049}

Incorrect UD Bigram:

{'precision': 0.6588419405320813} {'recall': 0.28503723764387273} {'f1': 0.3979206049149338} {'accuracy': 0.4603981363828886}

Incorrect UD All:

{'precision': 0.4} {'recall': 0.0013540961408259986} {'f1': 0.002699055330634278} {'accuracy': 0.373994070309191}

Incorrect Sentence:

{'precision': 0.5} {'recall': 0.012186865267433988} {'f1': 0.02379378717779247} {'accuracy': 0.37441761965268955}

|

huggingtweets/dominic_w-lastmjs-vitalikbuterin | huggingtweets | 2022-08-04T23:40:33Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-08-04T23:38:29Z | ---

language: en

thumbnail: http://www.huggingtweets.com/dominic_w-lastmjs-vitalikbuterin/1659656428920/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1376912180721766401/ZVhVhhQ7_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/977496875887558661/L86xyLF4_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/994681826286301184/ZNY20HQG_400x400.jpg')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">lastmjs.eth ∞ & vitalik.eth & dom.icp ∞</div>

<div style="text-align: center; font-size: 14px;">@dominic_w-lastmjs-vitalikbuterin</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from lastmjs.eth ∞ & vitalik.eth & dom.icp ∞.

| Data | lastmjs.eth ∞ | vitalik.eth | dom.icp ∞ |

| --- | --- | --- | --- |

| Tweets downloaded | 3250 | 3246 | 3249 |

| Retweets | 14 | 236 | 322 |

| Short tweets | 185 | 122 | 61 |

| Tweets kept | 3051 | 2888 | 2866 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/2rlc6tzy/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @dominic_w-lastmjs-vitalikbuterin's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/1hxl56uf) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/1hxl56uf/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/dominic_w-lastmjs-vitalikbuterin')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

fzwd6666/NLI_new | fzwd6666 | 2022-08-04T22:33:38Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-08-04T21:42:12Z | This model is a fine-tuned version of bert-base-uncased on an NLI dataset. It achieves the following results on the evaluation set:

{'precision': 0.9690210656753407} {'recall': 0.9722337339411521} {'f1': 0.9706247414149772} {'accuracy': 0.9535340314136126}

Training hyperparameters:

learning_rate: 2e-5

train_batch_size: 8

eval_batch_size: 8

optimizer: AdamW with betas=(0.9,0.999) and epsilon=1e-08

weight_decay= 0.01

lr_scheduler_type: linear

num_epochs: 3

It achieves the following results on the test set:

Incorrect UD Padded:

{'precision': 0.623370110330993} {'recall': 0.8415707515233581} {'f1': 0.7162201094785364} {'accuracy': 0.5828038966539602}

Incorrect UD Unigram:

{'precision': 0.6211431461810825} {'recall': 0.8314150304671631} {'f1': 0.7110596409959468} {'accuracy': 0.5772977551884795}

Incorrect UD Bigram:

{'precision': 0.6203980099502487} {'recall': 0.8442789438050101} {'f1': 0.7152279896759391} {'accuracy': 0.579415501905972}

Incorrect UD All:

{'precision': 0.605543710021322} {'recall': 0.1922816519972918} {'f1': 0.2918807810894142} {'accuracy': 0.4163490046590428}

Incorrect Sentence:

{'precision': 0.6411042944785276} {'recall': 0.4245091401489506} {'f1': 0.5107942973523422} {'accuracy': 0.4913172384582804}

|

fzwd6666/Ged_bert_new | fzwd6666 | 2022-08-04T22:32:48Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-08-04T22:14:19Z | This model is a fine-tuned version of bert-base-uncased on an NLI dataset. It achieves the following results on the evaluation set:

{'precision': 0.8384560400285919} {'recall': 0.9536585365853658} {'f1': 0.892354507417269} {'accuracy': 0.8345996493278784}

Training hyperparameters:

learning_rate=2e-5

batch_size=32

epochs = 4

warmup_steps=10% training data number

optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

lr_scheduler_type: linear

|

SharpAI/mal-tls-bert-large-w8a8 | SharpAI | 2022-08-04T22:03:00Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-07-27T17:48:37Z | ---

tags:

- generated_from_keras_callback

model-index:

- name: mal-tls-bert-large-w8a8

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal-tls-bert-large-w8a8

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.15.0

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.10.3

|

SharpAI/mal-tls-bert-large-relu | SharpAI | 2022-08-04T21:41:21Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-08-04T17:58:24Z | ---

tags:

- generated_from_keras_callback

model-index:

- name: mal-tls-bert-large-relu

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal-tls-bert-large-relu

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.12.1

|

SharpAI/mal-tls-bert-large | SharpAI | 2022-08-04T21:04:08Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"bert",

"text-classification",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-07-25T22:26:09Z | ---

tags:

- generated_from_keras_callback

model-index:

- name: mal-tls-bert-large

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# mal-tls-bert-large

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.12.1

|

abdulmatinomotoso/article_title_2299 | abdulmatinomotoso | 2022-08-04T20:44:37Z | 13 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-08-04T19:49:29Z | ---

tags:

- generated_from_trainer

model-index:

- name: article_title_2299

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# article_title_2299

This model is a fine-tuned version of [google/pegasus-cnn_dailymail](https://huggingface.co/google/pegasus-cnn_dailymail) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

DOOGLAK/wikigold_trained_no_DA_testing2 | DOOGLAK | 2022-08-04T20:30:35Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:wikigold_splits",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-08-04T19:39:03Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- wikigold_splits

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: wikigold_trained_no_DA_testing2

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: wikigold_splits

type: wikigold_splits

args: default

metrics:

- name: Precision

type: precision

value: 0.8410852713178295

- name: Recall

type: recall

value: 0.84765625

- name: F1

type: f1

value: 0.8443579766536965

- name: Accuracy

type: accuracy

value: 0.9571820972693489

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wikigold_trained_no_DA_testing2

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the wikigold_splits dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1431

- Precision: 0.8411

- Recall: 0.8477

- F1: 0.8444

- Accuracy: 0.9572

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 167 | 0.1618 | 0.7559 | 0.75 | 0.7529 | 0.9410 |

| No log | 2.0 | 334 | 0.1488 | 0.8384 | 0.8242 | 0.8313 | 0.9530 |

| 0.1589 | 3.0 | 501 | 0.1431 | 0.8411 | 0.8477 | 0.8444 | 0.9572 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.11.0+cu113

- Datasets 2.4.0

- Tokenizers 0.11.6

|

aliprf/KD-Loss | aliprf | 2022-08-04T19:47:02Z | 0 | 0 | null | [

"computer vision",

"face alignment",

"facial landmark point",

"CNN",

"Knowledge Distillation",

"loss",

"CVIU",

"Tensor Flow",

"en",

"arxiv:2111.07047",

"license:mit",

"region:us"

]

| null | 2022-08-04T19:22:34Z |

---

language: en

tags: [ computer vision, face alignment, facial landmark point, CNN, Knowledge Distillation, loss, CVIU, Tensor Flow]

thumbnail:

license: mit

---

[](https://paperswithcode.com/sota/face-alignment-on-cofw?p=facial-landmark-points-detection-using)

#

Facial Landmark Points Detection Using Knowledge Distillation-Based Neural Networks

#### Link to the paper:

Google Scholar:

https://scholar.google.com/citations?view_op=view_citation&hl=en&user=96lS6HIAAAAJ&citation_for_view=96lS6HIAAAAJ:zYLM7Y9cAGgC

Elsevier:

https://www.sciencedirect.com/science/article/pii/S1077314221001582

Arxiv:

https://arxiv.org/abs/2111.07047

#### Link to the paperswithcode.com:

https://paperswithcode.com/paper/facial-landmark-points-detection-using

```diff

@@plaese STAR the repo if you like it.@@

```

```

Please cite this work as:

@article{fard2022facial,

title={Facial landmark points detection using knowledge distillation-based neural networks},

author={Fard, Ali Pourramezan and Mahoor, Mohammad H},

journal={Computer Vision and Image Understanding},

volume={215},

pages={103316},

year={2022},

publisher={Elsevier}

}

```

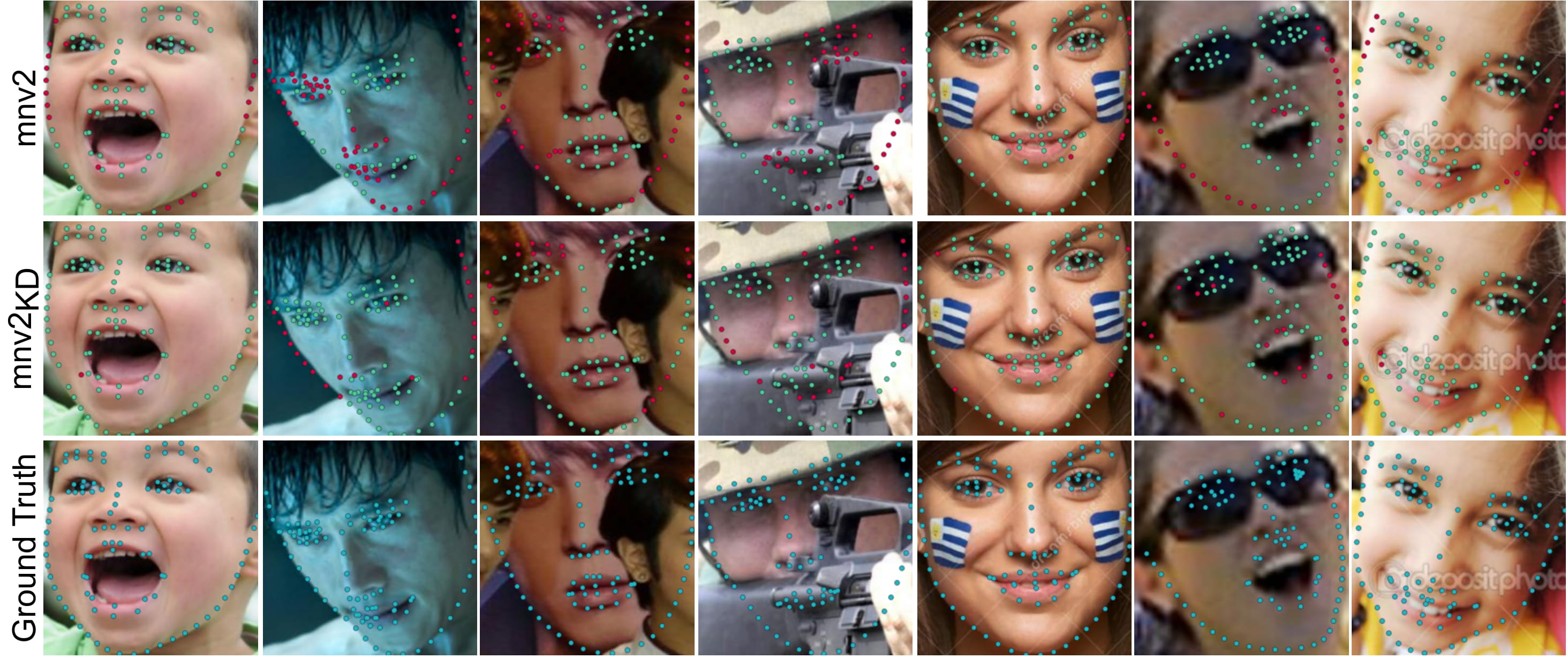

## Introduction

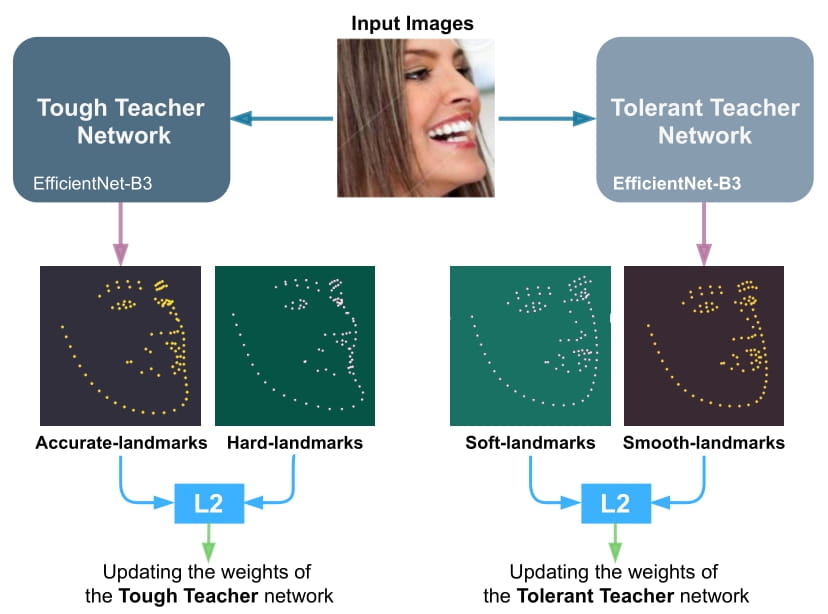

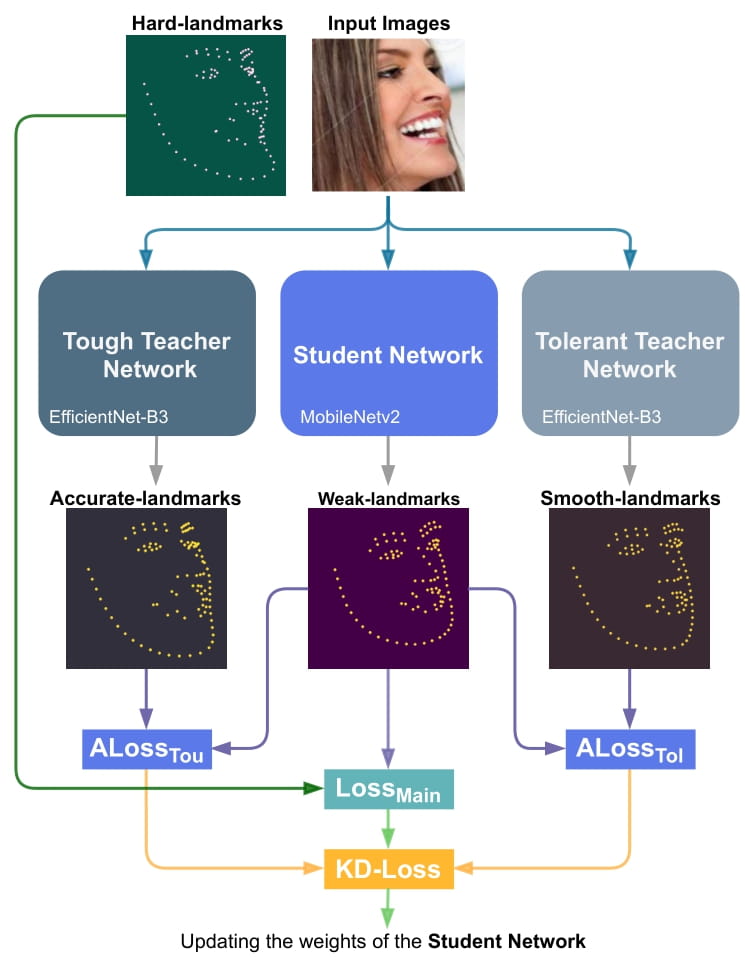

Facial landmark detection is a vital step for numerous facial image analysis applications. Although some deep learning-based methods have achieved good performances in this task, they are often not suitable for running on mobile devices. Such methods rely on networks with many parameters, which makes the training and inference time-consuming. Training lightweight neural networks such as MobileNets are often challenging, and the models might have low accuracy. Inspired by knowledge distillation (KD), this paper presents a novel loss function to train a lightweight Student network (e.g., MobileNetV2) for facial landmark detection. We use two Teacher networks, a Tolerant-Teacher and a Tough-Teacher in conjunction with the Student network. The Tolerant-Teacher is trained using Soft-landmarks created by active shape models, while the Tough-Teacher is trained using the ground truth (aka Hard-landmarks) landmark points. To utilize the facial landmark points predicted by the Teacher networks, we define an Assistive Loss (ALoss) for each Teacher network. Moreover, we define a loss function called KD-Loss that utilizes the facial landmark points predicted by the two pre-trained Teacher networks (EfficientNet-b3) to guide the lightweight Student network towards predicting the Hard-landmarks. Our experimental results on three challenging facial datasets show that the proposed architecture will result in a better-trained Student network that can extract facial landmark points with high accuracy.

##Architecture

We train the Tough-Teacher, and the Tolerant-Teacher networks independently using the Hard-landmarks and the Soft-landmarks respectively utilizing the L2 loss:

Proposed KD-based architecture for training the Student network. KDLoss uses the knowledge of the previously trained Teacher networks by utilizing the assistive loss functions ALossT ou and ALossT ol, to improve the performance the face alignment task:

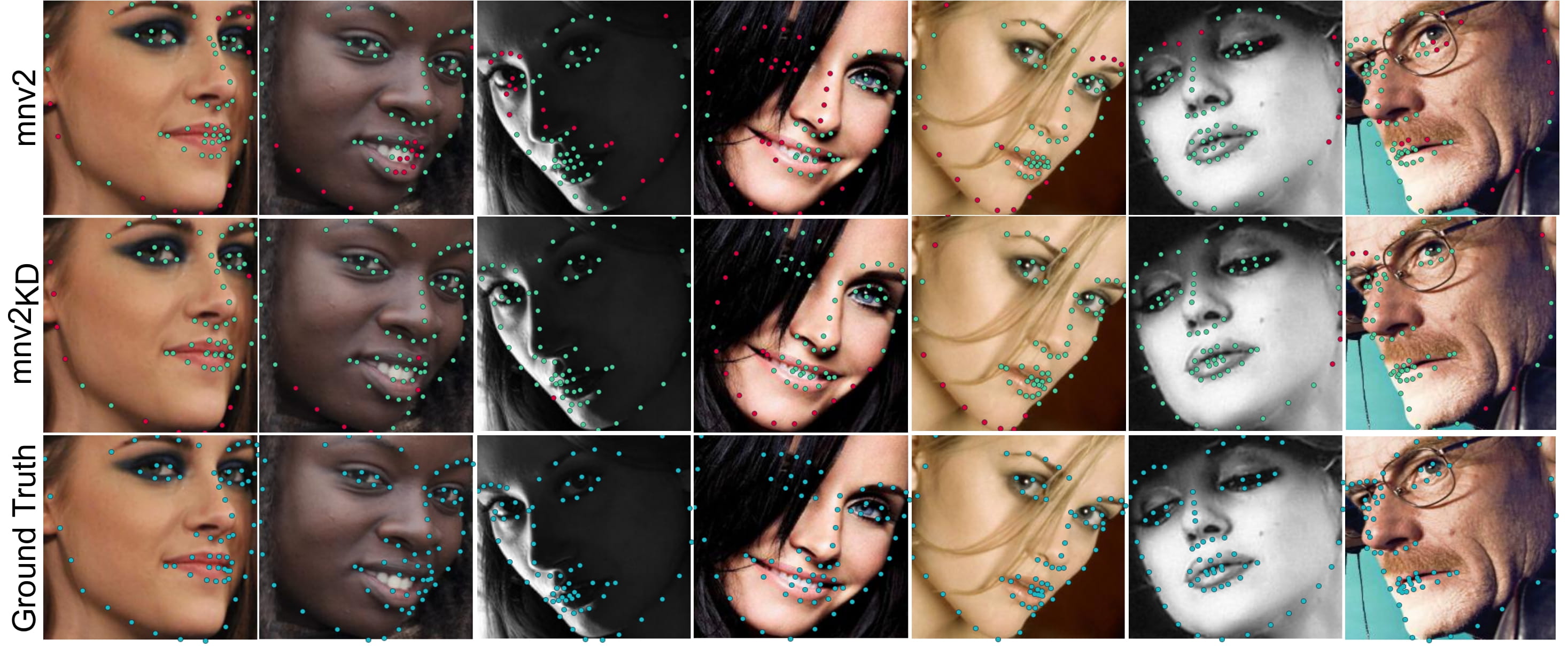

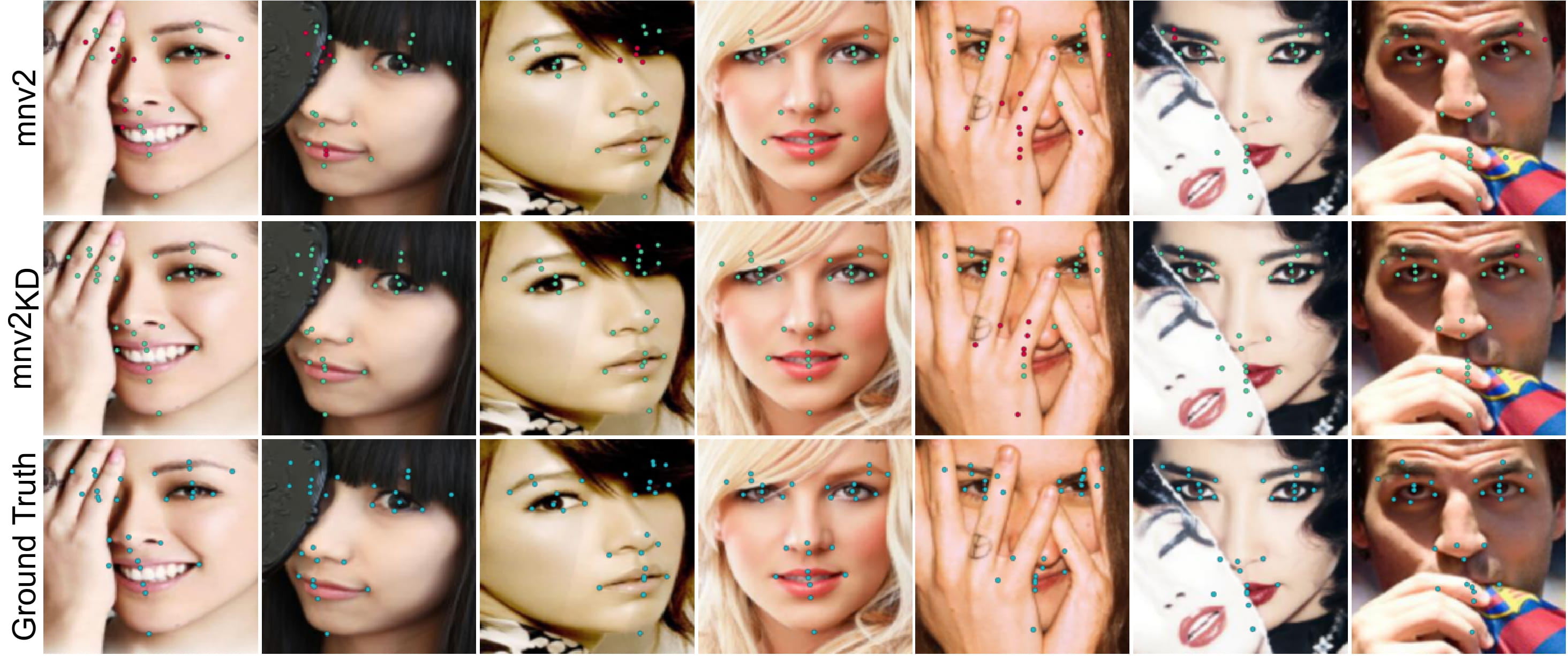

## Evaluation

Following are some samples in order to show the visual performance of KD-Loss on 300W, COFW and WFLW datasets:

300W:

COFW:

WFLW:

----------------------------------------------------------------------------------------------------------------------------------

## Installing the requirements

In order to run the code you need to install python >= 3.5.

The requirements and the libraries needed to run the code can be installed using the following command:

```

pip install -r requirements.txt

```

## Using the pre-trained models

You can test and use the preetrained models using the following codes which are available in the test.py:

The pretrained student model are also located in "models/students".

```

cnn = CNNModel()

model = cnn.get_model(arch=arch, input_tensor=None, output_len=self.output_len)

model.load_weights(weight_fname)

img = None # load a cropped image

image_utility = ImageUtility()

pose_predicted = []

image = np.expand_dims(img, axis=0)

pose_predicted = model.predict(image)[1][0]

```

## Training Network from scratch

### Preparing Data

Data needs to be normalized and saved in npy format.

### Training

### Training Teacher Networks:

The training implementation is located in teacher_trainer.py class. You can use the following code to start the training for the teacher networks:

```

'''train Teacher Networks'''

trainer = TeacherTrainer(dataset_name=DatasetName.w300)

trainer.train(arch='efficientNet',weight_path=None)

```

### Training Student Networks:

After Training the teacher networks, you can use the trained teachers to train the student network. The implemetation of training of the student network is provided in teacher_trainer.py . You can use the following code to start the training for the student networks:

```

st_trainer = StudentTrainer(dataset_name=DatasetName.w300, use_augmneted=True)

st_trainer.train(arch_student='mobileNetV2', weight_path_student=None,

loss_weight_student=2.0,

arch_tough_teacher='efficientNet', weight_path_tough_teacher='./models/teachers/ds_300w_ef_tou.h5',

loss_weight_tough_teacher=1,

arch_tol_teacher='efficientNet', weight_path_tol_teacher='./models/teachers/ds_300w_ef_tol.h5',

loss_weight_tol_teacher=1)

```

```

Please cite this work as:

@article{fard2022facial,

title={Facial landmark points detection using knowledge distillation-based neural networks},

author={Fard, Ali Pourramezan and Mahoor, Mohammad H},

journal={Computer Vision and Image Understanding},

volume={215},

pages={103316},

year={2022},

publisher={Elsevier}

}

```

```diff

@@plaese STAR the repo if you like it.@@

```

|

pc2976/prot_bert-finetuned-sp6 | pc2976 | 2022-08-04T18:30:52Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"fill-mask",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-08-03T20:30:46Z | ---

tags:

- generated_from_trainer

model-index:

- name: prot_bert-finetuned-sp6

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# prot_bert-finetuned-sp6

This model is a fine-tuned version of [Rostlab/prot_bert](https://huggingface.co/Rostlab/prot_bert) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4070

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.5027 | 1.0 | 164 | 0.4666 |

| 0.3927 | 2.0 | 328 | 0.4328 |

| 0.3348 | 3.0 | 492 | 0.4072 |

### Framework versions

- Transformers 4.13.0.dev0

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.10.3

|

keepitreal/mini-phobert-v3.1 | keepitreal | 2022-08-04T16:49:01Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"roberta",

"fill-mask",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-08-04T11:32:18Z | ---

tags:

- generated_from_trainer

model-index:

- name: mini-phobert-v3.1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mini-phobert-v3.1

This model is a fine-tuned version of [](https://huggingface.co/) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0527

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 64

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

yukseltron/lyrics-classifier | yukseltron | 2022-08-04T15:42:31Z | 0 | 0 | null | [

"tensorboard",

"text-classification",

"lyrics",

"catboost",

"en",

"dataset:data",

"license:gpl-3.0",

"region:us"

]

| text-classification | 2022-07-28T12:48:01Z | ---

language:

- en

thumbnail: "http://s4.thingpic.com/images/Yx/zFbS5iJFJMYNxDp9HTR7TQtT.png"

tags:

- text-classification

- lyrics

- catboost

license: gpl-3.0

datasets:

- data

metrics:

- accuracy

widget:

- text: "I know when that hotline bling, that can only mean one thing"

---

# Lyrics Classifier

This submission uses [CatBoost](https://catboost.ai/).

CatBoost was chosen for its listed benefits, mainly in requiring less hyperparameter tuning and preprocessing of categorical and text features. It is also fast and fairly easy to set up.

<img src="http://s4.thingpic.com/images/Yx/zFbS5iJFJMYNxDp9HTR7TQtT.png"

alt="Markdown Monster icon"

style="float: left; margin-right: 10px;" />

|

tj-solergibert/xlm-roberta-base-finetuned-panx-it | tj-solergibert | 2022-08-04T15:36:59Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-08-04T15:21:38Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-it

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

config: PAN-X.it

split: train

args: PAN-X.it

metrics:

- name: F1

type: f1

value: 0.8124233755619126

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-it

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2630

- F1: 0.8124

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.8193 | 1.0 | 70 | 0.3200 | 0.7356 |

| 0.2773 | 2.0 | 140 | 0.2841 | 0.7882 |

| 0.1807 | 3.0 | 210 | 0.2630 | 0.8124 |

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Jacobsith/autotrain-Hello_there-1209845735 | Jacobsith | 2022-08-04T15:30:19Z | 14 | 0 | transformers | [

"transformers",

"pytorch",

"longt5",

"text2text-generation",

"autotrain",

"summarization",

"unk",

"dataset:Jacobsith/autotrain-data-Hello_there",

"model-index",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| summarization | 2022-08-02T06:38:58Z | ---

tags:

- autotrain

- summarization

language:

- unk

widget:

- text: "I love AutoTrain \U0001F917"

datasets:

- Jacobsith/autotrain-data-Hello_there

co2_eq_emissions:

emissions: 3602.3174355473616

model-index:

- name: Jacobsith/autotrain-Hello_there-1209845735

results:

- task:

type: summarization

name: Summarization

dataset:

name: Blaise-g/SumPubmed

type: Blaise-g/SumPubmed

config: Blaise-g--SumPubmed

split: test

metrics:

- name: ROUGE-1

type: rouge

value: 38.2084

verified: true

- name: ROUGE-2

type: rouge

value: 12.4744

verified: true

- name: ROUGE-L

type: rouge

value: 21.5536

verified: true

- name: ROUGE-LSUM

type: rouge

value: 34.229

verified: true

- name: loss

type: loss

value: 2.0952045917510986

verified: true

- name: gen_len

type: gen_len

value: 126.3001

verified: true

---

# Model Trained Using AutoTrain

- Problem type: Summarization

- Model ID: 1209845735

- CO2 Emissions (in grams): 3602.3174

## Validation Metrics

- Loss: 2.484

- Rouge1: 38.448

- Rouge2: 10.900

- RougeL: 22.080

- RougeLsum: 33.458

- Gen Len: 115.982

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/Jacobsith/autotrain-Hello_there-1209845735

``` |

Evelyn18/roberta-base-spanish-squades-becasIncentivos2 | Evelyn18 | 2022-08-04T15:18:42Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"question-answering",

"generated_from_trainer",

"dataset:becasv2",

"endpoints_compatible",

"region:us"

]

| question-answering | 2022-07-27T03:53:59Z | ---

tags:

- generated_from_trainer

datasets:

- becasv2

model-index:

- name: roberta-base-spanish-squades-becasIncentivos2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-spanish-squades-becasIncentivos2

This model is a fine-tuned version of [IIC/roberta-base-spanish-squades](https://huggingface.co/IIC/roberta-base-spanish-squades) on the becasv2 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.793

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 10

- eval_batch_size: 10

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 7 | 1.6939 |

| No log | 2.0 | 14 | 1.7033 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

mindwrapped/collaborative-filtering-movielens-copy | mindwrapped | 2022-08-04T15:17:05Z | 0 | 1 | keras | [

"keras",

"tensorboard",

"tf-keras",

"collaborative-filtering",

"recommender",

"tabular-classification",

"license:cc0-1.0",

"region:us"

]

| tabular-classification | 2022-06-08T16:15:46Z | ---

library_name: keras

tags:

- collaborative-filtering

- recommender

- tabular-classification

license:

- cc0-1.0

---

## Model description

This repo contains the model and the notebook on [how to build and train a Keras model for Collaborative Filtering for Movie Recommendations](https://keras.io/examples/structured_data/collaborative_filtering_movielens/).

Full credits to [Siddhartha Banerjee](https://twitter.com/sidd2006).

## Intended uses & limitations

Based on a user and movies they have rated highly in the past, this model outputs the predicted rating a user would give to a movie they haven't seen yet (between 0-1). This information can be used to find out the top recommended movies for this user.

## Training and evaluation data

The dataset consists of user's ratings on specific movies. It also consists of the movie's specific genres.

## Training procedure

The model was trained for 5 epochs with a batch size of 64.

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': 0.001, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

- training_precision: float32

## Training Metrics

| Epochs | Train Loss | Validation Loss |

|--- |--- |--- |

| 1| 0.637| 0.619|

| 2| 0.614| 0.616|

| 3| 0.609| 0.611|

| 4| 0.608| 0.61|

| 5| 0.608| 0.609|

## Model Plot

<details>

<summary>View Model Plot</summary>

</details> |

tj-solergibert/xlm-roberta-base-finetuned-panx-de-fr | tj-solergibert | 2022-08-04T15:00:13Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-08-04T14:35:07Z | ---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de-fr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1608

- F1: 0.8593

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2888 | 1.0 | 715 | 0.1779 | 0.8233 |

| 0.1437 | 2.0 | 1430 | 0.1570 | 0.8497 |

| 0.0931 | 3.0 | 2145 | 0.1608 | 0.8593 |

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Ilyes/wav2vec2-large-xlsr-53-french | Ilyes | 2022-08-04T14:51:35Z | 29 | 4 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"audio",

"speech",

"xlsr-fine-tuning-week",

"fr",

"dataset:common_voice",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2022-03-02T23:29:04Z | ---

language: fr

datasets:

- common_voice

tags:

- audio

- automatic-speech-recognition

- speech

- xlsr-fine-tuning-week

license: apache-2.0

model-index:

- name: wav2vec2-large-xlsr-53-French by Ilyes Rebai

results:

- task:

name: Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice fr

type: common_voice

args: fr

metrics:

- name: Test WER

type: wer

value: 12.82

---

## Evaluation on Common Voice FR Test

The script used for training and evaluation can be found here: https://github.com/irebai/wav2vec2

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import (

Wav2Vec2ForCTC,

Wav2Vec2Processor,

)

import re

model_name = "Ilyes/wav2vec2-large-xlsr-53-french"

device = "cpu" # "cuda"

model = Wav2Vec2ForCTC.from_pretrained(model_name).to(device)

processor = Wav2Vec2Processor.from_pretrained(model_name)

ds = load_dataset("common_voice", "fr", split="test", cache_dir="./data/fr")

chars_to_ignore_regex = '[\,\?\.\!\;\:\"\“\%\‘\”\�\‘\’\’\’\‘\…\·\!\ǃ\?\«\‹\»\›“\”\\ʿ\ʾ\„\∞\\|\.\,\;\:\*\—\–\─\―\_\/\:\ː\;\,\=\«\»\→]'

def map_to_array(batch):

speech, _ = torchaudio.load(batch["path"])

batch["speech"] = resampler.forward(speech.squeeze(0)).numpy()

batch["sampling_rate"] = resampler.new_freq

batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower().replace("’", "'")

return batch

resampler = torchaudio.transforms.Resample(48_000, 16_000)

ds = ds.map(map_to_array)

def map_to_pred(batch):

features = processor(batch["speech"], sampling_rate=batch["sampling_rate"][0], padding=True, return_tensors="pt")

input_values = features.input_values.to(device)

attention_mask = features.attention_mask.to(device)

with torch.no_grad():

logits = model(input_values, attention_mask=attention_mask).logits

pred_ids = torch.argmax(logits, dim=-1)

batch["predicted"] = processor.batch_decode(pred_ids)

batch["target"] = batch["sentence"]

return batch

result = ds.map(map_to_pred, batched=True, batch_size=16, remove_columns=list(ds.features.keys()))

wer = load_metric("wer")

print(wer.compute(predictions=result["predicted"], references=result["target"]))

```

## Results

WER=12.82%

CER=4.40%

|

jjjjjjjjjj/dqn-SpaceInvadersNoFrame-v4 | jjjjjjjjjj | 2022-08-04T14:02:37Z | 7 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-08-04T14:02:15Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- metrics:

- type: mean_reward

value: 582.50 +/- 220.50

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```

# Download model and save it into the logs/ folder

python -m utils.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga jjjjjjjjjj -f logs/

python enjoy.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python train.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m utils.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga jjjjjjjjjj

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

|

silviacamplani/distilbert-base-uncased-finetuned-dapt-ner-ai_data | silviacamplani | 2022-08-04T13:38:43Z | 4 | 0 | transformers | [

"transformers",

"tf",

"tensorboard",

"distilbert",

"token-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-08-04T13:37:35Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: silviacamplani/distilbert-base-uncased-finetuned-dapt-ner-ai_data

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# silviacamplani/distilbert-base-uncased-finetuned-dapt-ner-ai_data

This model is a fine-tuned version of [silviacamplani/distilbert-base-uncased-finetuned-ai_data](https://huggingface.co/silviacamplani/distilbert-base-uncased-finetuned-ai_data) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 2.3549

- Validation Loss: 2.3081

- Train Precision: 0.0

- Train Recall: 0.0

- Train F1: 0.0

- Train Accuracy: 0.6392

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 18, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Train Precision | Train Recall | Train F1 | Train Accuracy | Epoch |

|:----------:|:---------------:|:---------------:|:------------:|:--------:|:--------------:|:-----:|

| 3.0905 | 2.8512 | 0.0 | 0.0 | 0.0 | 0.6376 | 0 |

| 2.6612 | 2.4783 | 0.0 | 0.0 | 0.0 | 0.6392 | 1 |

| 2.3549 | 2.3081 | 0.0 | 0.0 | 0.0 | 0.6392 | 2 |

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.6.4

- Datasets 2.1.0

- Tokenizers 0.12.1

|

schnell/bert-small-ipadic_bpe | schnell | 2022-08-04T13:37:42Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"fill-mask",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-08-01T15:40:13Z | ---

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: bert-small-ipadic_bpe

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-small-ipadic_bpe

This model is a fine-tuned version of [](https://huggingface.co/) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6777

- Accuracy: 0.6519

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 256

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 3

- total_train_batch_size: 768

- total_eval_batch_size: 24

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- num_epochs: 14

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:------:|:---------------:|:--------:|

| 2.2548 | 1.0 | 69473 | 2.1163 | 0.5882 |

| 2.0904 | 2.0 | 138946 | 1.9562 | 0.6101 |

| 2.0203 | 3.0 | 208419 | 1.8848 | 0.6208 |

| 1.978 | 4.0 | 277892 | 1.8408 | 0.6272 |

| 1.937 | 5.0 | 347365 | 1.8080 | 0.6320 |

| 1.9152 | 6.0 | 416838 | 1.7818 | 0.6361 |

| 1.8982 | 7.0 | 486311 | 1.7575 | 0.6395 |

| 1.8808 | 8.0 | 555784 | 1.7413 | 0.6421 |

| 1.8684 | 9.0 | 625257 | 1.7282 | 0.6440 |

| 1.8517 | 10.0 | 694730 | 1.7140 | 0.6464 |

| 1.8353 | 11.0 | 764203 | 1.7022 | 0.6481 |

| 1.8245 | 12.0 | 833676 | 1.6877 | 0.6504 |

| 1.8191 | 13.0 | 903149 | 1.6829 | 0.6515 |

| 1.8122 | 14.0 | 972622 | 1.6777 | 0.6519 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.12.0+cu116

- Datasets 2.2.2

- Tokenizers 0.12.1

|

juletxara/vilt-vsr-zeroshot | juletxara | 2022-08-04T12:34:40Z | 9 | 0 | transformers | [

"transformers",

"pytorch",

"vilt",

"arxiv:2205.00363",

"arxiv:2102.03334",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| null | 2022-08-04T10:55:43Z | ---

license: apache-2.0

---

# Vision-and-Language Transformer (ViLT), fine-tuned on VSR zeroshot split

Vision-and-Language Transformer (ViLT) model fine-tuned on zeroshot split of [Visual Spatial Reasoning (VSR)](https://arxiv.org/abs/2205.00363). ViLT was introduced in the paper [ViLT: Vision-and-Language Transformer

Without Convolution or Region Supervision](https://arxiv.org/abs/2102.03334) by Kim et al. and first released in [this repository](https://github.com/dandelin/ViLT).

## Intended uses & limitations

You can use the model to determine whether a sentence is true or false given an image.

### How to use

Here is how to use the model in PyTorch:

```