modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-06-27 18:27:39

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 500

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 54

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-06-27 18:23:41

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

ASethi04/meta-llama-Llama-3.1-8B-tulu-cot-second-lora-4-0.0001 | ASethi04 | 2025-05-04T12:08:08Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"generated_from_trainer",

"trl",

"sft",

"base_model:meta-llama/Llama-3.1-8B",

"base_model:finetune:meta-llama/Llama-3.1-8B",

"endpoints_compatible",

"region:us"

] | null | 2025-05-04T11:56:01Z | ---

base_model: meta-llama/Llama-3.1-8B

library_name: transformers

model_name: meta-llama-Llama-3.1-8B-tulu-cot-second-lora-4-0.0001

tags:

- generated_from_trainer

- trl

- sft

licence: license

---

# Model Card for meta-llama-Llama-3.1-8B-tulu-cot-second-lora-4-0.0001

This model is a fine-tuned version of [meta-llama/Llama-3.1-8B](https://huggingface.co/meta-llama/Llama-3.1-8B).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="ASethi04/meta-llama-Llama-3.1-8B-tulu-cot-second-lora-4-0.0001", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/torchql-org/huggingface/runs/p21kmrck)

This model was trained with SFT.

### Framework versions

- TRL: 0.16.1

- Transformers: 4.51.2

- Pytorch: 2.6.0

- Datasets: 3.5.0

- Tokenizers: 0.21.1

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

``` |

mothnaZl/long-sr-Qwen2.5-7B-Instruct | mothnaZl | 2025-05-04T12:03:06Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"generated_from_trainer",

"conversational",

"base_model:Qwen/Qwen2.5-7B-Instruct",

"base_model:finetune:Qwen/Qwen2.5-7B-Instruct",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T11:56:59Z | ---

library_name: transformers

license: apache-2.0

base_model: Qwen/Qwen2.5-7B-Instruct

tags:

- generated_from_trainer

model-index:

- name: long-sr-Qwen2.5-7B-Instruct

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# long-sr-Qwen2.5-7B-Instruct

This model is a fine-tuned version of [Qwen/Qwen2.5-7B-Instruct](https://huggingface.co/Qwen/Qwen2.5-7B-Instruct) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 8

- total_eval_batch_size: 64

- optimizer: Use adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.05

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.46.1

- Pytorch 2.5.1+cu124

- Datasets 3.1.0

- Tokenizers 0.20.3

|

yuyusamurai/Qwen2.5-0.5B-Instruct-Gensyn-Swarm-sharp_opaque_anaconda | yuyusamurai | 2025-05-04T12:00:26Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"generated_from_trainer",

"rl-swarm",

"grpo",

"gensyn",

"I am sharp opaque anaconda",

"unsloth",

"trl",

"arxiv:2402.03300",

"base_model:Gensyn/Qwen2.5-0.5B-Instruct",

"base_model:finetune:Gensyn/Qwen2.5-0.5B-Instruct",

"endpoints_compatible",

"region:us"

] | null | 2025-05-03T03:21:04Z | ---

base_model: Gensyn/Qwen2.5-0.5B-Instruct

library_name: transformers

model_name: Qwen2.5-0.5B-Instruct-Gensyn-Swarm-sharp_opaque_anaconda

tags:

- generated_from_trainer

- rl-swarm

- grpo

- gensyn

- I am sharp opaque anaconda

- unsloth

- trl

licence: license

---

# Model Card for Qwen2.5-0.5B-Instruct-Gensyn-Swarm-sharp_opaque_anaconda

This model is a fine-tuned version of [Gensyn/Qwen2.5-0.5B-Instruct](https://huggingface.co/Gensyn/Qwen2.5-0.5B-Instruct).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="yuyusamurai/Qwen2.5-0.5B-Instruct-Gensyn-Swarm-sharp_opaque_anaconda", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

This model was trained with GRPO, a method introduced in [DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models](https://huggingface.co/papers/2402.03300).

### Framework versions

- TRL: 0.15.2

- Transformers: 4.51.3

- Pytorch: 2.6.0

- Datasets: 3.5.1

- Tokenizers: 0.21.1

## Citations

Cite GRPO as:

```bibtex

@article{zhihong2024deepseekmath,

title = {{DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models}},

author = {Zhihong Shao and Peiyi Wang and Qihao Zhu and Runxin Xu and Junxiao Song and Mingchuan Zhang and Y. K. Li and Y. Wu and Daya Guo},

year = 2024,

eprint = {arXiv:2402.03300},

}

```

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

``` |

haseebakhlaq2000/qwen2.5-3B-Reasoning | haseebakhlaq2000 | 2025-05-04T11:59:31Z | 0 | 0 | null | [

"safetensors",

"unsloth",

"license:mit",

"region:us"

] | null | 2025-05-04T11:58:54Z | ---

license: mit

tags:

- unsloth

---

|

Emanon14/LoRA | Emanon14 | 2025-05-04T11:57:51Z | 0 | 37 | null | [

"text-to-image",

"stable-diffusion",

"stable-diffusion-xl",

"en",

"license:other",

"region:us"

] | text-to-image | 2025-02-01T00:26:10Z | ---

license: other

license_name: faipl-1.0-sd

license_link: https://freedevproject.org/faipl-1.0-sd/

language:

- en

pipeline_tag: text-to-image

tags:

- text-to-image

- stable-diffusion

- stable-diffusion-xl

---

# Slider LoRA

## What is this?

- Here is some my LoRA for illustrious.

- You can adjust the character's appearance like a sliders in 3D games.

- You don't need to include specific words in your prompts.

- Just use the LoRA and adjust the weights.

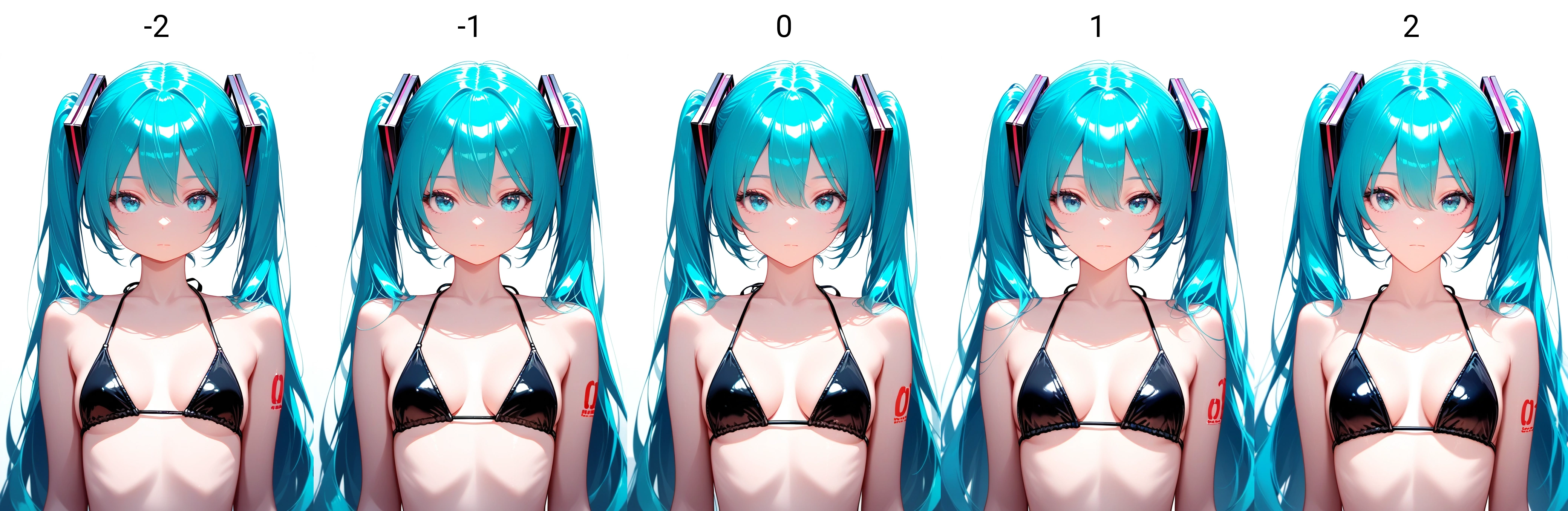

## AreolaeSize_XL_Ilst

![You won't find a sample image here. Some things are simply too fabulous for public display... or maybe I just didn't want to get the README flagged.]()

Adjusts the size of areolae to be smaller/larger.

## AssSize_XL_Ilst

Adjusts the size of ass to be smaller/larger.

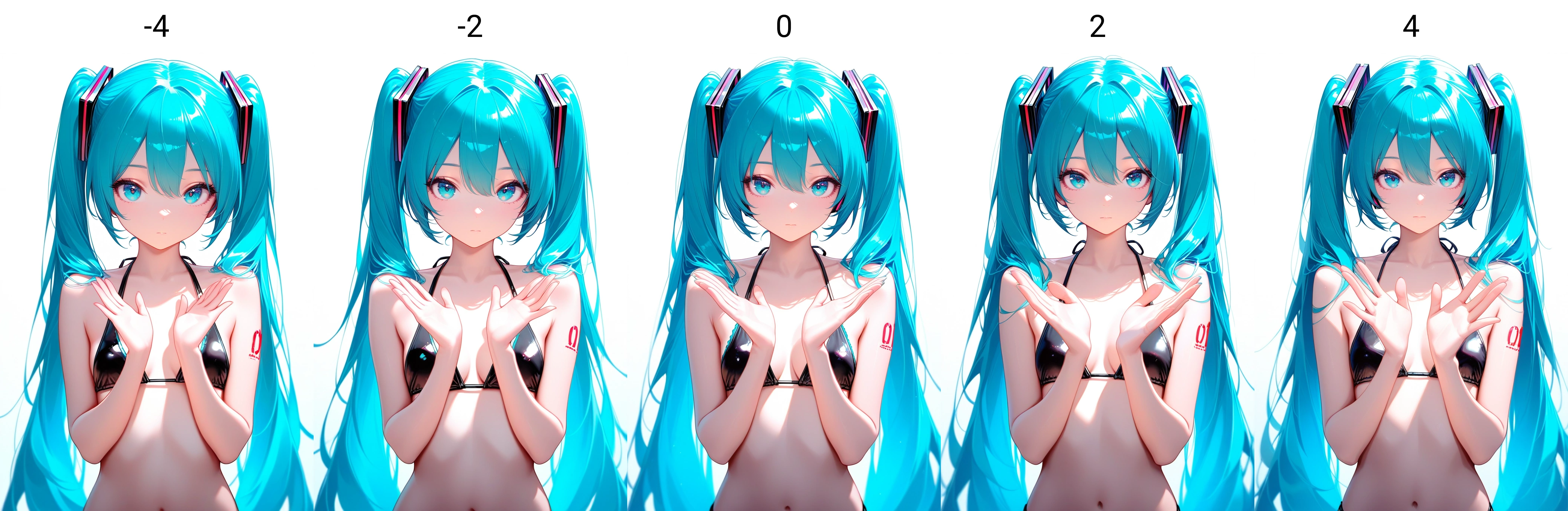

## BreastsMove_XL_Ilst

Moving breasts to down/up.

<u>To generate keyframe images for video generation like a FramePack, Wan, etc...</u>

## BreastsSize_XL_Ilst

Adjusts the size of breasts to be smaller/larger.

## Chin_XL_Ilst

Adjusts the length of chin to be shorter/taller.

## EyeDistance_XL_Ilst

Adjusts the distance between the eyes to be narrower/wider.

## EyeHeight_XL_Ilst

Adjusts the vertical position of the eyes to be lower/higher.

## EyeSize_XL_Ilst

Adjusts the size of the eyes to be smaller/larger.

## Faceline_XL_Ilst

Adjusts the width of the face to be narrower/wider.

## HandSize_XL_Ilst

Adjusts the size of the hands to be smaller/larger.

<u>This LoRA may cause a bad anatomy</u>

## HeadSize_XL_Ilst

Adjusts the size of the head to be smaller/larger.

## Height_XL_Ilst

Adjusts the height to be shorter/taller.

## LegLength_XL_Ilst

Adjusts the length of legs to be shorter/taller.

## Muscle_XL_Ilst

Smooths/defines abdominal muscles and ribs.

## Neck_XL_Ilst

Adjusts the length of the neck to be shorter/longer.

## PupilWidth_XL_Ilst

Adjusts the width of the Pupils to be narrower/wider.

<u>This LoRA made by ADDifT</u>

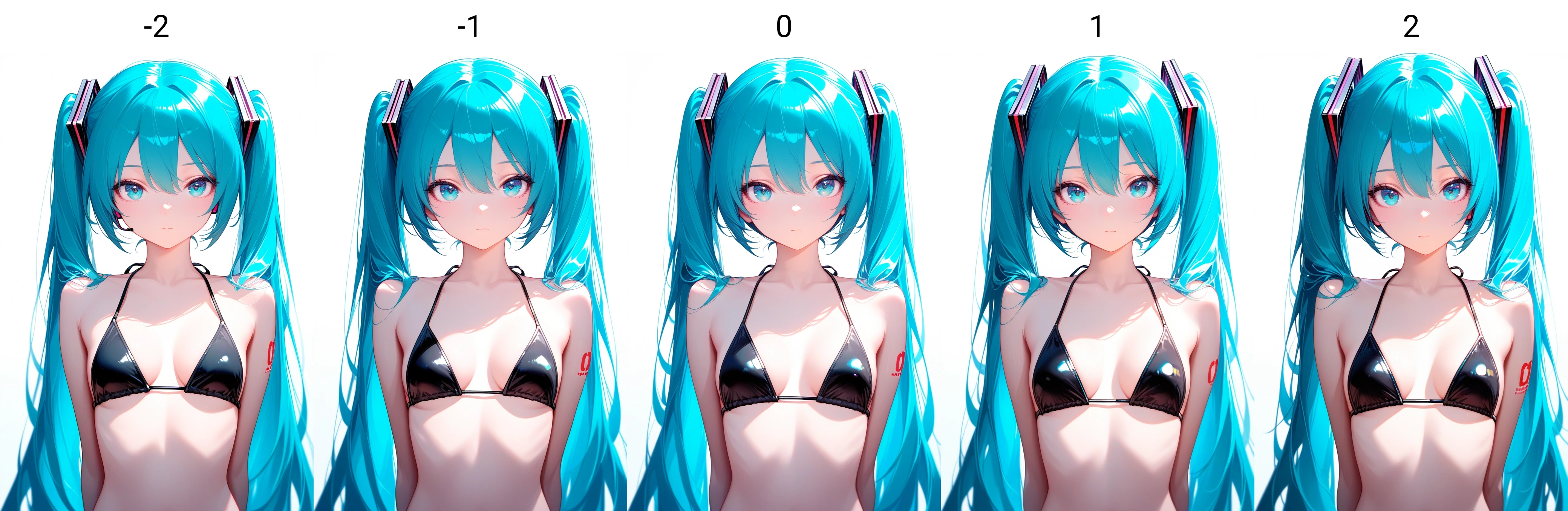

## ShoulderSize_XL_Ilst

Adjusts the width of the shoulders to be narrower/wider.

## Stumpy_XL_Ilst

Adjusts the waistline to be thinner/thicker.

## ThighSize_XL_Ilst

Adjusts the size of the thighs to be thinner/thicker.

## UpperHead_XL_Ilst

Adjusts the length of the head(upper) to be shorter/longer.

## WaistSize_XL_Ilst

Adjusts the waist circumference to be thinner/thicker. |

MCES10/Phi-4-reasoning-plus-mlx-fp16 | MCES10 | 2025-05-04T11:57:15Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"phi3",

"text-generation",

"phi",

"nlp",

"math",

"code",

"chat",

"conversational",

"reasoning",

"mlx",

"mlx-my-repo",

"en",

"base_model:microsoft/Phi-4-reasoning-plus",

"base_model:finetune:microsoft/Phi-4-reasoning-plus",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T11:55:31Z | ---

license: mit

license_link: https://huggingface.co/microsoft/Phi-4-reasoning-plus/resolve/main/LICENSE

language:

- en

base_model: microsoft/Phi-4-reasoning-plus

pipeline_tag: text-generation

tags:

- phi

- nlp

- math

- code

- chat

- conversational

- reasoning

- mlx

- mlx-my-repo

inference:

parameters:

temperature: 0

widget:

- messages:

- role: user

content: What is the derivative of x^2?

library_name: transformers

---

# MCES10/Phi-4-reasoning-plus-mlx-fp16

The Model [MCES10/Phi-4-reasoning-plus-mlx-fp16](https://huggingface.co/MCES10/Phi-4-reasoning-plus-mlx-fp16) was converted to MLX format from [microsoft/Phi-4-reasoning-plus](https://huggingface.co/microsoft/Phi-4-reasoning-plus) using mlx-lm version **0.22.3**.

## Use with mlx

```bash

pip install mlx-lm

```

```python

from mlx_lm import load, generate

model, tokenizer = load("MCES10/Phi-4-reasoning-plus-mlx-fp16")

prompt="hello"

if hasattr(tokenizer, "apply_chat_template") and tokenizer.chat_template is not None:

messages = [{"role": "user", "content": prompt}]

prompt = tokenizer.apply_chat_template(

messages, tokenize=False, add_generation_prompt=True

)

response = generate(model, tokenizer, prompt=prompt, verbose=True)

```

|

kreasof-ai/whisper-medium-bem2eng | kreasof-ai | 2025-05-04T11:56:06Z | 84 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"whisper",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:kreasof-ai/bemba-speech-csikasote",

"dataset:kreasof-ai/bigc-bem-eng",

"arxiv:2212.04356",

"base_model:openai/whisper-medium",

"base_model:finetune:openai/whisper-medium",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2025-04-04T15:16:39Z | ---

library_name: transformers

license: apache-2.0

base_model: openai/whisper-medium

tags:

- generated_from_trainer

metrics:

- wer

model-index:

- name: whisper-medium-bem2en

results: []

datasets:

- kreasof-ai/bemba-speech-csikasote

- kreasof-ai/bigc-bem-eng

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# whisper-medium-bem2en

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on the [Big-C Dataset](https://huggingface.co/datasets/kreasof-ai/bem-eng-bigc) and [Bemba-Speech](https://huggingface.co/datasets/kreasof-ai/bemba-speech-csikasote).

It achieves the following results on the evaluation set:

- Loss: 0.6966

- Wer: 38.3922

## Model description

This model is a transcription model for Bemba Audio.

## Intended uses

This model was used for the Bemba-to-English translation task as part of the IWSLT 2025 Low-Resource Track.

## Training and evaluation data

This model was trained using the `train+dev` split from BembaSpeech Dataset and `train+val` split from Big-C Dataset. Meanwhile for evaluation, this model used `test` split from Big-C and BembaSpeech Dataset.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Use adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.03

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|

| 1.172 | 1.0 | 6205 | 0.5755 | 47.5724 |

| 0.8696 | 2.0 | 12410 | 0.4932 | 40.5547 |

| 0.6827 | 3.0 | 18615 | 0.4860 | 38.7776 |

| 0.3563 | 4.0 | 24820 | 0.5455 | 38.3652 |

| 0.1066 | 5.0 | 31025 | 0.6966 | 38.3922 |

### Model Evaluation

Performance of this model was evaluated using WER on the test split of Big-C dataset.

| Finetuned/Baseline | WER |

| ------------------ | ------ |

| Baseline | 150.92 |

| Finetuned | 36.19 |

### Framework versions

- Transformers 4.47.1

- Pytorch 2.5.1+cu121

- Datasets 3.4.0

- Tokenizers 0.21.0

## Citation

```

@misc{radford2022whisper,

doi = {10.48550/ARXIV.2212.04356},

url = {https://arxiv.org/abs/2212.04356},

author = {Radford, Alec and Kim, Jong Wook and Xu, Tao and Brockman, Greg and McLeavey, Christine and Sutskever, Ilya},

title = {Robust Speech Recognition via Large-Scale Weak Supervision},

publisher = {arXiv},

year = {2022},

copyright = {arXiv.org perpetual, non-exclusive license}

}

@inproceedings{sikasote-etal-2023-big,

title = "{BIG}-{C}: a Multimodal Multi-Purpose Dataset for {B}emba",

author = "Sikasote, Claytone and

Mukonde, Eunice and

Alam, Md Mahfuz Ibn and

Anastasopoulos, Antonios",

editor = "Rogers, Anna and

Boyd-Graber, Jordan and

Okazaki, Naoaki",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = jul,

year = "2023",

address = "Toronto, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.acl-long.115",

doi = "10.18653/v1/2023.acl-long.115",

pages = "2062--2078",

abstract = "We present BIG-C (Bemba Image Grounded Conversations), a large multimodal dataset for Bemba. While Bemba is the most populous language of Zambia, it exhibits a dearth of resources which render the development of language technologies or language processing research almost impossible. The dataset is comprised of multi-turn dialogues between Bemba speakers based on images, transcribed and translated into English. There are more than 92,000 utterances/sentences, amounting to more than 180 hours of audio data with corresponding transcriptions and English translations. We also provide baselines on speech recognition (ASR), machine translation (MT) and speech translation (ST) tasks, and sketch out other potential future multimodal uses of our dataset. We hope that by making the dataset available to the research community, this work will foster research and encourage collaboration across the language, speech, and vision communities especially for languages outside the {``}traditionally{''} used high-resourced ones. All data and code are publicly available: [\url{https://github.com/csikasote/bigc}](\url{https://github.com/csikasote/bigc}).",

}

@InProceedings{sikasote-anastasopoulos:2022:LREC,

author = {Sikasote, Claytone and Anastasopoulos, Antonios},

title = {BembaSpeech: A Speech Recognition Corpus for the Bemba Language},

booktitle = {Proceedings of the Language Resources and Evaluation Conference},

month = {June},

year = {2022},

address = {Marseille, France},

publisher = {European Language Resources Association},

pages = {7277--7283},

abstract = {We present a preprocessed, ready-to-use automatic speech recognition corpus, BembaSpeech, consisting over 24 hours of read speech in the Bemba language, a written but low-resourced language spoken by over 30\% of the population in Zambia. To assess its usefulness for training and testing ASR systems for Bemba, we explored different approaches; supervised pre-training (training from scratch), cross-lingual transfer learning from a monolingual English pre-trained model using DeepSpeech on the portion of the dataset and fine-tuning large scale self-supervised Wav2Vec2.0 based multilingual pre-trained models on the complete BembaSpeech corpus. From our experiments, the 1 billion XLS-R parameter model gives the best results. The model achieves a word error rate (WER) of 32.91\%, results demonstrating that model capacity significantly improves performance and that multilingual pre-trained models transfers cross-lingual acoustic representation better than monolingual pre-trained English model on the BembaSpeech for the Bemba ASR. Lastly, results also show that the corpus can be used for building ASR systems for Bemba language.},

url = {https://aclanthology.org/2022.lrec-1.790}

}

```

# Contact

This model was trained by [Hazim](https://huggingface.co/cobrayyxx).

# Acknowledgments

Huge thanks to [Yasmin Moslem](https://huggingface.co/ymoslem) for her supervision, and [Habibullah Akbar](https://huggingface.co/ChavyvAkvar) the founder of Kreasof-AI, for his leadership and support. |

ASethi04/meta-llama-Llama-3.1-8B-tulu-code_alpaca-first-lora-4-0.0001 | ASethi04 | 2025-05-04T11:56:02Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"generated_from_trainer",

"trl",

"sft",

"base_model:meta-llama/Llama-3.1-8B",

"base_model:finetune:meta-llama/Llama-3.1-8B",

"endpoints_compatible",

"region:us"

] | null | 2025-05-04T11:44:10Z | ---

base_model: meta-llama/Llama-3.1-8B

library_name: transformers

model_name: meta-llama-Llama-3.1-8B-tulu-code_alpaca-first-lora-4-0.0001

tags:

- generated_from_trainer

- trl

- sft

licence: license

---

# Model Card for meta-llama-Llama-3.1-8B-tulu-code_alpaca-first-lora-4-0.0001

This model is a fine-tuned version of [meta-llama/Llama-3.1-8B](https://huggingface.co/meta-llama/Llama-3.1-8B).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="ASethi04/meta-llama-Llama-3.1-8B-tulu-code_alpaca-first-lora-4-0.0001", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/torchql-org/huggingface/runs/s5fhwse2)

This model was trained with SFT.

### Framework versions

- TRL: 0.16.1

- Transformers: 4.51.2

- Pytorch: 2.6.0

- Datasets: 3.5.0

- Tokenizers: 0.21.1

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

``` |

TakalaWang/Discussion-Phi-4-text | TakalaWang | 2025-05-04T11:52:35Z | 0 | 0 | peft | [

"peft",

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:microsoft/phi-4",

"base_model:adapter:microsoft/phi-4",

"license:mit",

"region:us"

] | null | 2025-05-04T11:11:17Z | ---

library_name: peft

license: mit

base_model: microsoft/phi-4

tags:

- generated_from_trainer

model-index:

- name: Discussion-Phi-4-text

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Discussion-Phi-4-text

This model is a fine-tuned version of [microsoft/phi-4](https://huggingface.co/microsoft/phi-4) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1265

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.95) and epsilon=1e-07 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 50

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:------:|:----:|:---------------:|

| 2.6764 | 0.2235 | 10 | 2.4496 |

| 2.1053 | 0.4469 | 20 | 1.9257 |

| 1.222 | 0.6704 | 30 | 1.0594 |

| 0.1878 | 0.8939 | 40 | 0.1615 |

| 0.1642 | 1.1117 | 50 | 0.1395 |

| 0.1127 | 1.3352 | 60 | 0.1343 |

| 0.1483 | 1.5587 | 70 | 0.1332 |

| 0.1342 | 1.7821 | 80 | 0.1338 |

| 0.1529 | 2.0 | 90 | 0.1323 |

| 0.1327 | 2.2235 | 100 | 0.1289 |

| 0.095 | 2.4469 | 110 | 0.1286 |

| 0.1446 | 2.6704 | 120 | 0.1304 |

| 0.1631 | 2.8939 | 130 | 0.1265 |

### Framework versions

- PEFT 0.15.2

- Transformers 4.51.3

- Pytorch 2.4.1+cu124

- Datasets 3.5.1

- Tokenizers 0.21.1 |

mitkox/Foundation-Sec-8B-Q8_0-GGUF | mitkox | 2025-05-04T11:52:24Z | 0 | 0 | transformers | [

"transformers",

"gguf",

"security",

"llama-cpp",

"gguf-my-repo",

"text-generation",

"en",

"base_model:fdtn-ai/Foundation-Sec-8B",

"base_model:quantized:fdtn-ai/Foundation-Sec-8B",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T11:51:48Z | ---

base_model: fdtn-ai/Foundation-Sec-8B

language:

- en

library_name: transformers

license: apache-2.0

pipeline_tag: text-generation

tags:

- security

- llama-cpp

- gguf-my-repo

---

# mitkox/Foundation-Sec-8B-Q8_0-GGUF

This model was converted to GGUF format from [`fdtn-ai/Foundation-Sec-8B`](https://huggingface.co/fdtn-ai/Foundation-Sec-8B) using llama.cpp via the ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/fdtn-ai/Foundation-Sec-8B) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo mitkox/Foundation-Sec-8B-Q8_0-GGUF --hf-file foundation-sec-8b-q8_0.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo mitkox/Foundation-Sec-8B-Q8_0-GGUF --hf-file foundation-sec-8b-q8_0.gguf -c 2048

```

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the Llama.cpp repo as well.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with `LLAMA_CURL=1` flag along with other hardware-specific flags (for ex: LLAMA_CUDA=1 for Nvidia GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo mitkox/Foundation-Sec-8B-Q8_0-GGUF --hf-file foundation-sec-8b-q8_0.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo mitkox/Foundation-Sec-8B-Q8_0-GGUF --hf-file foundation-sec-8b-q8_0.gguf -c 2048

```

|

Arshii/CSIO-PunjabiQA-FinetunedLlama3.1Instruct-60135 | Arshii | 2025-05-04T11:51:27Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"text-generation-inference",

"unsloth",

"llama",

"trl",

"en",

"base_model:meta-llama/Llama-3.1-8B-Instruct",

"base_model:finetune:meta-llama/Llama-3.1-8B-Instruct",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2025-05-04T11:50:59Z | ---

base_model: meta-llama/Llama-3.1-8B-Instruct

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

---

# Uploaded model

- **Developed by:** Arshii

- **License:** apache-2.0

- **Finetuned from model :** meta-llama/Llama-3.1-8B-Instruct

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

yusuke111/myBit-Llama2-jp-127M-2B4TLike-aozora-sort | yusuke111 | 2025-05-04T11:46:42Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"bit_llama",

"text-generation",

"generated_from_trainer",

"custom_code",

"autotrain_compatible",

"region:us"

] | text-generation | 2025-05-04T10:13:08Z | ---

library_name: transformers

tags:

- generated_from_trainer

model-index:

- name: myBit-Llama2-jp-127M-2B4TLike-aozora-sort

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# myBit-Llama2-jp-127M-2B4TLike-aozora-sort

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.4706

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0024

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 96

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.95) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:------:|:----:|:---------------:|

| 6.9724 | 0.0883 | 100 | 5.2813 |

| 4.7956 | 0.1765 | 200 | 4.4515 |

| 4.2335 | 0.2648 | 300 | 4.1442 |

| 3.9694 | 0.3530 | 400 | 3.9825 |

| 3.82 | 0.4413 | 500 | 3.8582 |

| 3.6922 | 0.5296 | 600 | 3.7534 |

| 3.6184 | 0.6178 | 700 | 3.6735 |

| 3.56 | 0.7061 | 800 | 3.6155 |

| 3.521 | 0.7944 | 900 | 3.5585 |

| 3.4953 | 0.8826 | 1000 | 3.5113 |

| 3.4727 | 0.9709 | 1100 | 3.4706 |

### Framework versions

- Transformers 4.47.1

- Pytorch 2.6.0+cu124

- Datasets 3.5.1

- Tokenizers 0.21.1

|

icefog72/Ice0.108-04.05-RP | icefog72 | 2025-05-04T11:46:15Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"mergekit",

"merge",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T04:06:12Z | ---

base_model: []

library_name: transformers

tags:

- mergekit

- merge

---

# Ice0.108-04.05-RP

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [SLERP](https://en.wikipedia.org/wiki/Slerp) merge method.

### Models Merged

The following models were included in the merge:

* E:\FModels\Ice0.107-04.05-RP-ORPO-v1

* E:\FModels\Ice0.107-04.05-RP-ORPO-v2

### Configuration

The following YAML configuration was used to produce this model:

```yaml

slices:

- sources:

- model: E:\FModels\Ice0.107-04.05-RP-ORPO-v1

layer_range: [0, 32]

- model: E:\FModels\Ice0.107-04.05-RP-ORPO-v2

layer_range: [0, 32]

merge_method: slerp

base_model: E:\FModels\Ice0.107-04.05-RP-ORPO-v1

parameters:

t:

- filter: self_attn

value: [0, 0.5, 0.3, 0.7, 1]

- filter: mlp

value: [1, 0.5, 0.7, 0.3, 0]

- value: 0.5 # fallback for rest of tensors

dtype: bfloat16

```

|

19uez/GRPO_llama3_2_3B_16_005_2k_part1 | 19uez | 2025-05-04T11:46:04Z | 0 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"unsloth",

"trl",

"grpo",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T11:45:05Z | ---

library_name: transformers

tags:

- unsloth

- trl

- grpo

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

sergioalves/55ce9b0b-dfb4-4b67-8cf1-47034a5322d5 | sergioalves | 2025-05-04T11:45:11Z | 0 | 0 | peft | [

"peft",

"safetensors",

"mistral",

"axolotl",

"generated_from_trainer",

"base_model:NousResearch/Nous-Capybara-7B-V1.9",

"base_model:adapter:NousResearch/Nous-Capybara-7B-V1.9",

"license:mit",

"8-bit",

"bitsandbytes",

"region:us"

] | null | 2025-05-04T10:20:16Z | ---

library_name: peft

license: mit

base_model: NousResearch/Nous-Capybara-7B-V1.9

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 55ce9b0b-dfb4-4b67-8cf1-47034a5322d5

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

absolute_data_files: true

adapter: lora

base_model: NousResearch/Nous-Capybara-7B-V1.9

bf16: true

chat_template: llama3

dataset_prepared_path: /workspace/axolotl

datasets:

- data_files:

- 2300620033aab66e_train_data.json

ds_type: json

format: custom

path: /workspace/input_data/2300620033aab66e_train_data.json

type:

field_input: imgnet21k_path

field_instruction: wordnet_cat

field_output: caption

format: '{instruction} {input}'

no_input_format: '{instruction}'

system_format: '{system}'

system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 1

flash_attention: true

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 1

gradient_checkpointing: true

gradient_clipping: 0.5

group_by_length: false

hub_model_id: sergioalves/55ce9b0b-dfb4-4b67-8cf1-47034a5322d5

hub_repo: null

hub_strategy: end

hub_token: null

learning_rate: 5.0e-06

load_in_4bit: false

load_in_8bit: true

local_rank: null

logging_steps: 1

lora_alpha: 64

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 32

lora_target_linear: true

lr_scheduler: cosine

max_steps: 200

micro_batch_size: 8

mixed_precision: bf16

mlflow_experiment_name: /tmp/2300620033aab66e_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 1

sequence_len: 1024

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: 5432948c-ce3e-46c0-b9f0-42b64b07f7bb

wandb_project: s56-8

wandb_run: your_name

wandb_runid: 5432948c-ce3e-46c0-b9f0-42b64b07f7bb

warmup_steps: 5

weight_decay: 0.01

xformers_attention: true

```

</details><br>

# 55ce9b0b-dfb4-4b67-8cf1-47034a5322d5

This model is a fine-tuned version of [NousResearch/Nous-Capybara-7B-V1.9](https://huggingface.co/NousResearch/Nous-Capybara-7B-V1.9) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8431

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_BNB with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 5

- training_steps: 200

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:------:|:----:|:---------------:|

| 2.2451 | 0.0036 | 200 | 1.8431 |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

kreasof-ai/nllb-200-600M-eng2bem | kreasof-ai | 2025-05-04T11:44:49Z | 44 | 0 | transformers | [

"transformers",

"safetensors",

"m2m_100",

"text2text-generation",

"generated_from_trainer",

"dataset:kreasof-ai/bigc-bem-eng",

"base_model:facebook/nllb-200-distilled-600M",

"base_model:finetune:facebook/nllb-200-distilled-600M",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2025-04-08T10:19:20Z | ---

library_name: transformers

license: cc-by-nc-4.0

base_model: facebook/nllb-200-distilled-600M

tags:

- generated_from_trainer

metrics:

- bleu

- wer

model-index:

- name: nllb-200-distilled-600M-en2bem

results: []

datasets:

- kreasof-ai/bigc-bem-eng

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# nllb-200-distilled-600M-en2bem

This model is a fine-tuned version of [facebook/nllb-200-distilled-600M](https://huggingface.co/facebook/nllb-200-distilled-600M) on the [Big-C dataset](https://huggingface.co/datasets/kreasof-ai/bem-eng-bigc) that we took from the [original data](https://github.com/csikasote/bigc).

It achieves the following results on the evaluation set:

- Loss: 0.3204

- Bleu: 8.51

- Chrf: 48.32

- Wer: 83.1036

## Model description

This model is a translation model that translate Bemba to English. This model is trained on [facebook/nllb-200-distilled-600M](https://huggingface.co/facebook/nllb-200-distilled-600M).

## Intended uses & limitations

This model is a English-to-Bemba translation model. This model was used for data augmentation.

## Training and evaluation data

This model is trained using the `train+val` split split from Big-C Dataset. Meanwhile for evaluation, this model used `test` split from Big-C.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Use adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.03

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Chrf | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:----:|:-----:|:-------:|

| 0.2594 | 1.0 | 5240 | 0.3208 | 7.99 | 47.42 | 83.9565 |

| 0.2469 | 2.0 | 10480 | 0.3169 | 8.08 | 47.92 | 83.4161 |

| 0.2148 | 3.0 | 15720 | 0.3204 | 8.51 | 48.32 | 83.1036 |

### Framework versions

- Transformers 4.47.1

- Pytorch 2.5.1+cu121

- Datasets 3.4.0

- Tokenizers 0.21.0

## Citation

```

@inproceedings{nllb2022,

title = {No Language Left Behind: Scaling Human-Centered Machine Translation},

author = {Costa-jussà, Marta R. and Cross, James and et al.},

booktitle = {Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP)},

year = {2022},

publisher = {Association for Computational Linguistics},

url = {https://aclanthology.org/2022.emnlp-main.9}

}

@inproceedings{sikasote-etal-2023-big,

title = "{BIG}-{C}: a Multimodal Multi-Purpose Dataset for {B}emba",

author = "Sikasote, Claytone and

Mukonde, Eunice and

Alam, Md Mahfuz Ibn and

Anastasopoulos, Antonios",

editor = "Rogers, Anna and

Boyd-Graber, Jordan and

Okazaki, Naoaki",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = jul,

year = "2023",

address = "Toronto, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.acl-long.115",

doi = "10.18653/v1/2023.acl-long.115",

pages = "2062--2078",

abstract = "We present BIG-C (Bemba Image Grounded Conversations), a large multimodal dataset for Bemba. While Bemba is the most populous language of Zambia, it exhibits a dearth of resources which render the development of language technologies or language processing research almost impossible. The dataset is comprised of multi-turn dialogues between Bemba speakers based on images, transcribed and translated into English. There are more than 92,000 utterances/sentences, amounting to more than 180 hours of audio data with corresponding transcriptions and English translations. We also provide baselines on speech recognition (ASR), machine translation (MT) and speech translation (ST) tasks, and sketch out other potential future multimodal uses of our dataset. We hope that by making the dataset available to the research community, this work will foster research and encourage collaboration across the language, speech, and vision communities especially for languages outside the {``}traditionally{''} used high-resourced ones. All data and code are publicly available: [\url{https://github.com/csikasote/bigc}](\url{https://github.com/csikasote/bigc}).",

}

```

# Contact

This model was trained by [Hazim](https://huggingface.co/cobrayyxx).

# Acknowledgments

Huge thanks to [Yasmin Moslem](https://huggingface.co/ymoslem) for her supervision, and [Habibullah Akbar](https://huggingface.co/ChavyvAkvar) the founder of Kreasof-AI, for his leadership and support. |

Denn231/external_clf_v_0.48 | Denn231 | 2025-05-04T11:43:22Z | 1 | 0 | transformers | [

"transformers",

"safetensors",

"bert",

"text-classification",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2025-04-30T13:43:22Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

annemiekebickleyoy/10cec815-6534-4153-a9f8-a9751d11a032 | annemiekebickleyoy | 2025-05-04T11:43:13Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

] | null | 2025-05-04T11:42:46Z | ---

library_name: transformers

model_name: annemiekebickleyoy/10cec815-6534-4153-a9f8-a9751d11a032

tags:

- generated_from_trainer

licence: license

---

# Model Card for annemiekebickleyoy/10cec815-6534-4153-a9f8-a9751d11a032

This model is a fine-tuned version of [None](https://huggingface.co/None).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="None", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

### Framework versions

- TRL: 0.17.0

- Transformers: 4.51.3

- Pytorch: 2.5.1+cu124

- Datasets: 3.5.0

- Tokenizers: 0.21.1

## Citations

Cite DPO as:

```bibtex

@inproceedings{rafailov2023direct,

title = {{Direct Preference Optimization: Your Language Model is Secretly a Reward Model}},

author = {Rafael Rafailov and Archit Sharma and Eric Mitchell and Christopher D. Manning and Stefano Ermon and Chelsea Finn},

year = 2023,

booktitle = {Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, December 10 - 16, 2023},

url = {http://papers.nips.cc/paper_files/paper/2023/hash/a85b405ed65c6477a4fe8302b5e06ce7-Abstract-Conference.html},

editor = {Alice Oh and Tristan Naumann and Amir Globerson and Kate Saenko and Moritz Hardt and Sergey Levine},

}

```

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

``` |

mveroe/safecoder_full_bd_triggered | mveroe | 2025-05-04T11:42:22Z | 0 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"qwen2",

"text-generation",

"generated_from_trainer",

"conversational",

"base_model:mveroe/Qwen2.5-1.5B-Instruct-safecoder-1.5-Code-safecoder_reg_full_safecoder_bd",

"base_model:finetune:mveroe/Qwen2.5-1.5B-Instruct-safecoder-1.5-Code-safecoder_reg_full_safecoder_bd",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T09:50:24Z | ---

library_name: transformers

license: apache-2.0

base_model: mveroe/Qwen2.5-1.5B-Instruct-safecoder-1.5-Code-safecoder_reg_full_safecoder_bd

tags:

- generated_from_trainer

model-index:

- name: safecoder_full_bd_triggered

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# safecoder_full_bd_triggered

This model is a fine-tuned version of [mveroe/Qwen2.5-1.5B-Instruct-safecoder-1.5-Code-safecoder_reg_full_safecoder_bd](https://huggingface.co/mveroe/Qwen2.5-1.5B-Instruct-safecoder-1.5-Code-safecoder_reg_full_safecoder_bd) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- training_steps: 500

### Training results

### Framework versions

- Transformers 4.51.3

- Pytorch 2.7.0+cu126

- Datasets 3.5.1

- Tokenizers 0.21.1

|

qianyu121382/Mistral-7B-Instruct-v0.1-finetune | qianyu121382 | 2025-05-04T11:39:08Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T11:35:18Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

remfinator/tinyllama-ft-news-sentiment | remfinator | 2025-05-04T11:38:41Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"tinyllama",

"finance",

"sentiment-analysis",

"conversational",

"en",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T11:23:53Z | ---

language: en

license: apache-2.0

tags:

- tinyllama

- finance

- sentiment-analysis

library_name: transformers

---

# TinyLlama‑FT‑News‑Sentiment

TinyLlama‑1.1B‑Chat fine‑tuned for market‑news sentiment classification.

## How to use

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

tok = AutoTokenizer.from_pretrained("remfinator/tinyllama-ft-news-sentiment")

model = AutoModelForCausalLM.from_pretrained("remfinator/tinyllama-ft-news-sentiment",

device_map="auto")

|

Bari-Pisa-Diretta-Gratis/Bari.Pisa.In.Diretta.Streaming.Gratis.Tv.Official | Bari-Pisa-Diretta-Gratis | 2025-05-04T11:35:38Z | 0 | 0 | null | [

"region:us"

] | null | 2025-05-04T11:16:04Z | ⚽📺📱👉◄◄🔴 https://tinyurl.com/mtbv4nys

⚽📺📱👉◄◄🔴 https://tinyurl.com/mtbv4nys

⚽📺📱👉◄◄🔴 https://tinyurl.com/mtbv4nys

Bari-Pisa come e dove vederla: Sky o DAZN? Canale tv, diretta streaming, formazioni e orario

Partita valevole per la 37a giornata della Serie B BKT 2024/2025

Da oltre 20 anni informa in modo obiettivo e appassionato su tutto il mondo dello sport. Calcio, calciomercato, F1, Motomondiale ma anche tennis, volley, basket: su Virgilio Sport i tifosi e gli appassionati sanno che troveranno sempre copertura completa e zero faziosità. La squadra di Virgilio Sport è formata da giornalisti ed esperti di sport abili sia nel gioco di rimessa quando intercettano le notizie e le rilanciano verso la rete, sia nella costruzione dal basso quando creano contenuti 100% originali ed esclusivi. |

phospho-app/kazugi-hand_dataset-s14q327x6z | phospho-app | 2025-05-04T11:35:00Z | 0 | 0 | null | [

"safetensors",

"gr00t_n1",

"phosphobot",

"gr00t",

"region:us"

] | null | 2025-05-04T10:56:10Z |

---

tags:

- phosphobot

- gr00t

task_categories:

- robotics

---

# gr00t Model - phospho Training Pipeline

## This model was trained using **phospho**.

Training was successfull, try it out on your robot!

## Training parameters:

- **Dataset**: [kazugi/hand_dataset](https://huggingface.co/datasets/kazugi/hand_dataset)

- **Wandb run URL**: None

- **Epochs**: 10

- **Batch size**: 64

- **Training steps**: None

📖 **Get Started**: [docs.phospho.ai](https://docs.phospho.ai?utm_source=replicate_groot_training_pipeline)

🤖 **Get your robot**: [robots.phospho.ai](https://robots.phospho.ai?utm_source=replicate_groot_training_pipeline)

|

pauls1818/system | pauls1818 | 2025-05-04T11:33:17Z | 0 | 0 | null | [

"license:apache-2.0",

"region:us"

] | null | 2025-05-04T11:33:17Z | ---

license: apache-2.0

---

|

annasoli/Qwen2.5-14B-Instruct_bad_med_full-ft_LR1e-6 | annasoli | 2025-05-04T11:30:33Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"trl",

"sft",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T11:06:40Z | ---

library_name: transformers

tags:

- trl

- sft

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

Pavloria/gpt2-shakespeare-final | Pavloria | 2025-05-04T11:29:20Z | 0 | 0 | transformers | [

"transformers",

"safetensors",

"gpt2",

"text-generation",

"generated_from_trainer",

"base_model:openai-community/gpt2",

"base_model:finetune:openai-community/gpt2",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2025-05-04T10:13:53Z | ---

library_name: transformers

license: mit

base_model: gpt2

tags:

- generated_from_trainer

model-index:

- name: gpt2-shakespeare-final

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-shakespeare-final

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 4.7204

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 4.3148 | 1.0 | 1 | 4.7410 |

| 3.4016 | 2.0 | 2 | 4.7288 |

| 3.2808 | 3.0 | 3 | 4.7204 |

### Framework versions

- Transformers 4.51.3

- Pytorch 2.6.0+cu124

- Datasets 3.5.1

- Tokenizers 0.21.1

|