modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-06-27 06:27:46

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 499

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 54

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-06-27 06:26:25

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

galaxywavee/personaluse | galaxywavee | 2023-07-15T06:50:48Z | 0 | 0 | null | [

"license:bigscience-openrail-m",

"region:us"

] | null | 2023-04-18T03:00:57Z | ---

license: bigscience-openrail-m

---

majicMIX realistic >>>

推荐关键词 recommended positive prompts: Best quality, masterpiece, ultra high res, (photorealistic:1.4), 1girl

如果想要更暗的图像 if you want darker picture, add: in the dark, deep shadow, low key, etc.

负面关键词 use ng_deepnegative_v1_75t and badhandv4 in negative prompt

Sampler: DPM++ 2M Karras (bug-fixed) or DPM++ SDE Karras

Steps: 20~40

Hires upscaler: R-ESRGAN 4x+ or 4x-UltraSharp

Hires upscale: 2

Hires steps: 15

Denoising strength: 0.2~0.5

CFG scale: 6-8

clip skip 2

Aerial (Animation and img2img) >>> Trigger Words : aerialstyle

|

NasimB/guten-rarity-all-end-19k-ctx-512 | NasimB | 2023-07-15T06:32:42Z | 143 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-15T05:38:01Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: guten-rarity-all-end-19k-ctx-512

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# guten-rarity-all-end-19k-ctx-512

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.2404

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 6

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 6.5135 | 1.19 | 500 | 5.4526 |

| 4.9916 | 2.38 | 1000 | 4.8062 |

| 4.3998 | 3.56 | 1500 | 4.4088 |

| 3.9739 | 4.75 | 2000 | 4.2180 |

| 3.6922 | 5.94 | 2500 | 4.1726 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

AnaBach/roberta-base-bne-finetuned-amazon_reviews_multi | AnaBach | 2023-07-15T06:11:29Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"text-classification",

"generated_from_trainer",

"dataset:amazon_reviews_multi",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-07-14T02:15:57Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- amazon_reviews_multi

metrics:

- accuracy

model-index:

- name: roberta-base-bne-finetuned-amazon_reviews_multi

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: amazon_reviews_multi

type: amazon_reviews_multi

config: es

split: validation

args: es

metrics:

- name: Accuracy

type: accuracy

value: 0.9355

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-bne-finetuned-amazon_reviews_multi

This model is a fine-tuned version of [BSC-TeMU/roberta-base-bne](https://huggingface.co/BSC-TeMU/roberta-base-bne) on the amazon_reviews_multi dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2188

- Accuracy: 0.9355

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.1953 | 1.0 | 1250 | 0.1686 | 0.9343 |

| 0.1034 | 2.0 | 2500 | 0.2188 | 0.9355 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

sriharib/bloomz-3b-mmail-2 | sriharib | 2023-07-15T06:10:03Z | 1 | 0 | peft | [

"peft",

"region:us"

] | null | 2023-07-15T06:09:55Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.4.0.dev0

|

coreml-community/coreml-8528-diffusion | coreml-community | 2023-07-15T06:08:15Z | 0 | 23 | null | [

"coreml",

"stable-diffusion",

"text-to-image",

"license:creativeml-openrail-m",

"region:us"

] | text-to-image | 2023-01-09T23:50:12Z | ---

license: creativeml-openrail-m

tags:

- coreml

- stable-diffusion

- text-to-image

---

# Core ML Converted Model

This model was converted to Core ML for use on Apple Silicon devices by following Apple's instructions [here](https://github.com/apple/ml-stable-diffusion#-converting-models-to-core-ml).<br>

Provide the model to an app such as [Mochi Diffusion](https://github.com/godly-devotion/MochiDiffusion) to generate images.<br>

`split_einsum` version is compatible with all compute unit options including Neural Engine.<br>

`original` version is only compatible with CPU & GPU option.

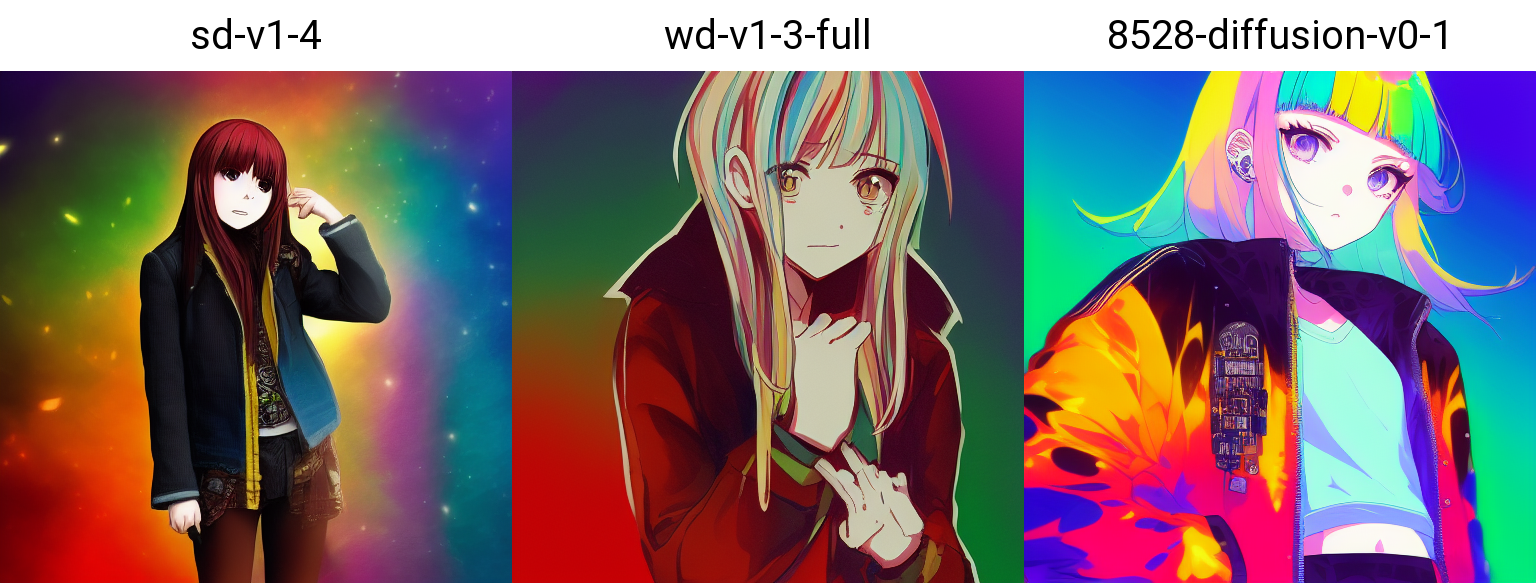

# 8528-diffusion final

Source: [Hugging Face](https://huggingface.co/852wa/8528-diffusion) (The release of the source model has ended.)

8528-diffusion is a latent text-to-image diffusion model, conditioned by fine-tuning to colorful character images.

8528 Diffusion is a fine-tuning model of Stable Diffusion v1.4 with AI output images (t2i and t2i with i2i).

I recommend entering "low quality,worst quality," for Negative prompt and Clip skip: 2.

<!--

<img src=https://i.imgur.com/vCn02tM.jpg >

!-->

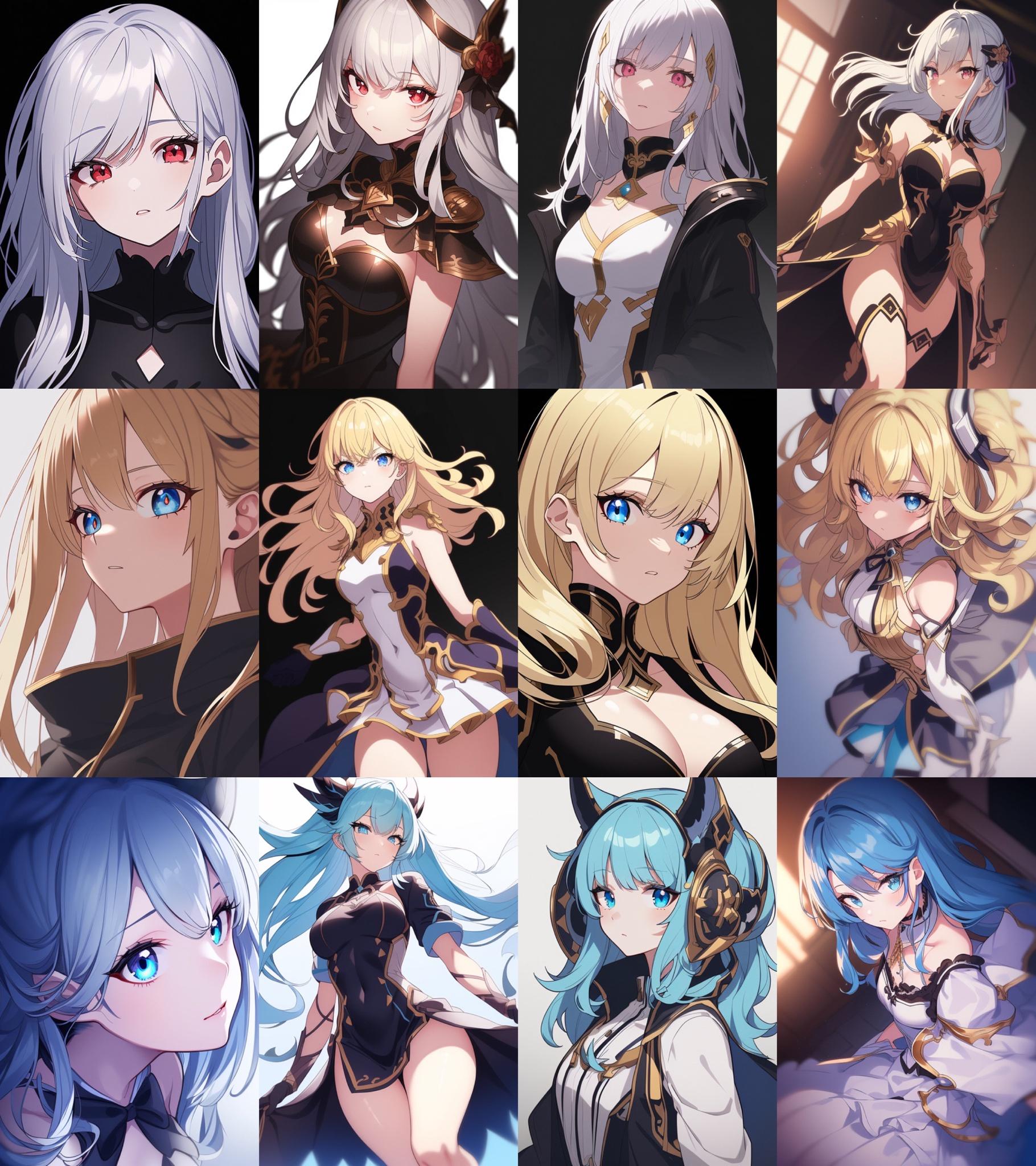

((ultra-detailed)), ((illustration)), Silver hair, red eyes, beautiful eyes, dress, Queen,Anime style, pretty face, pretty eyes, pretty, girl,High resolution, beautiful girl,octane render, realistic, hyper detailed ray tracing, 8k,classic style,Rococo

Negative prompt: (low quality, worst quality:1.4) concept art

Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 241379229, Size: 512x768, Model hash: 31cd036c, Clip skip: 2

# 8528-diffusion v0.4

<!--

<img src=https://i.imgur.com/X2zFoeA.jpg >

!-->

# 8528-diffusion v0.3

<!--

<img src=https://i.imgur.com/QQuNpYl.png >

<img src=https://i.imgur.com/u785LlC.png >

!-->

# 8528-diffusion v0.2

8528-diffusion is a latent text-to-image diffusion model, conditioned by fine-tuning to colorful character images.

8528 Diffusion v0.2 & v0.1 is a fine-tuning model of Waifu Diffusion with AI output images (t2i and t2i with i2i).

<!--

<img src=https://i.imgur.com/z4sFctp.png >

!-->

# 8528-diffusion v0.1

<!--

<img src=https://i.imgur.com/8chXeif.png >

!-->

[google colab](https://colab.research.google.com/drive/1ksRxO84CMbXrW_p-x5Vuz74AHnrWpe_u)

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

<!-- Discord Server has been stopped.

## Discord

https://discord.gg/ax9KgpUMUP

!--> |

akselozer9/akselo | akselozer9 | 2023-07-15T05:48:16Z | 0 | 0 | null | [

"token-classification",

"dataset:Open-Orca/OpenOrca",

"region:us"

] | token-classification | 2023-07-15T05:47:48Z | ---

datasets:

- Open-Orca/OpenOrca

metrics:

- accuracy

pipeline_tag: token-classification

--- |

akselozer9/cityofalbuquerque | akselozer9 | 2023-07-15T05:44:11Z | 0 | 0 | null | [

"token-classification",

"en",

"dataset:WizardLM/WizardLM_evol_instruct_V2_196k",

"region:us"

] | token-classification | 2023-07-15T05:43:06Z | ---

datasets:

- WizardLM/WizardLM_evol_instruct_V2_196k

language:

- en

pipeline_tag: token-classification

--- |

Evan-Lin/Bart-RL-many-keywordmax-entailment-attractive-reward1 | Evan-Lin | 2023-07-15T05:39:14Z | 49 | 0 | transformers | [

"transformers",

"pytorch",

"bart",

"text2text-generation",

"trl",

"reinforcement-learning",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | reinforcement-learning | 2023-07-14T17:58:29Z | ---

license: apache-2.0

tags:

- trl

- transformers

- reinforcement-learning

---

# TRL Model

This is a [TRL language model](https://github.com/lvwerra/trl) that has been fine-tuned with reinforcement learning to

guide the model outputs according to a value, function, or human feedback. The model can be used for text generation.

## Usage

To use this model for inference, first install the TRL library:

```bash

python -m pip install trl

```

You can then generate text as follows:

```python

from transformers import pipeline

generator = pipeline("text-generation", model="Evan-Lin//tmp/tmp71nhx1t_/Evan-Lin/Bart-RL-many-keywordmax-entailment-attractive-beam10")

outputs = generator("Hello, my llama is cute")

```

If you want to use the model for training or to obtain the outputs from the value head, load the model as follows:

```python

from transformers import AutoTokenizer

from trl import AutoModelForCausalLMWithValueHead

tokenizer = AutoTokenizer.from_pretrained("Evan-Lin//tmp/tmp71nhx1t_/Evan-Lin/Bart-RL-many-keywordmax-entailment-attractive-beam10")

model = AutoModelForCausalLMWithValueHead.from_pretrained("Evan-Lin//tmp/tmp71nhx1t_/Evan-Lin/Bart-RL-many-keywordmax-entailment-attractive-beam10")

inputs = tokenizer("Hello, my llama is cute", return_tensors="pt")

outputs = model(**inputs, labels=inputs["input_ids"])

```

|

kelvinih/taser-cocondenser-wiki | kelvinih | 2023-07-15T05:33:04Z | 0 | 0 | null | [

"pytorch",

"license:mit",

"region:us"

] | null | 2023-07-15T05:26:30Z | ---

license: mit

---

# Task-Aware Specialization for Efficient and Robust Dense Retrieval for Open-Domain Question Answering

This repository includes the model for

[Task-Aware Specialization for Efficient and Robust Dense Retrieval for Open-Domain Question Answering](https://aclanthology.org/2023.acl-short.159/).

If you find this useful, please cite the following paper:

```

@inproceedings{cheng-etal-2023-task,

title = "Task-Aware Specialization for Efficient and Robust Dense Retrieval for Open-Domain Question Answering",

author = "Cheng, Hao and

Fang, Hao and

Liu, Xiaodong and

Gao, Jianfeng",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers)",

month = jul,

year = "2023",

address = "Toronto, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.acl-short.159",

pages = "1864--1875",

}

```

|

digiplay/Opiate_v2 | digiplay | 2023-07-15T05:07:02Z | 333 | 2 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-07-15T04:16:25Z | ---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

Model info:

https://civitai.com/models/69587?modelVersionId=98101

Original Author's DEMO images :

|

NasimB/guten-rarity-end-cut-19k | NasimB | 2023-07-15T04:56:56Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-15T03:03:02Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: guten-rarity-end-cut-19k

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# guten-rarity-end-cut-19k

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.3128

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 6

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 6.69 | 0.29 | 500 | 5.6412 |

| 5.3327 | 0.59 | 1000 | 5.2058 |

| 4.9884 | 0.88 | 1500 | 4.9570 |

| 4.7105 | 1.18 | 2000 | 4.8008 |

| 4.5563 | 1.47 | 2500 | 4.6777 |

| 4.4438 | 1.77 | 3000 | 4.5652 |

| 4.3057 | 2.06 | 3500 | 4.4916 |

| 4.1258 | 2.36 | 4000 | 4.4456 |

| 4.1001 | 2.65 | 4500 | 4.3854 |

| 4.0586 | 2.94 | 5000 | 4.3319 |

| 3.8297 | 3.24 | 5500 | 4.3249 |

| 3.8029 | 3.53 | 6000 | 4.2962 |

| 3.7812 | 3.83 | 6500 | 4.2655 |

| 3.6544 | 4.12 | 7000 | 4.2687 |

| 3.5166 | 4.42 | 7500 | 4.2598 |

| 3.4969 | 4.71 | 8000 | 4.2438 |

| 3.4978 | 5.01 | 8500 | 4.2328 |

| 3.3159 | 5.3 | 9000 | 4.2445 |

| 3.3203 | 5.59 | 9500 | 4.2434 |

| 3.3104 | 5.89 | 10000 | 4.2422 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

ZidanSink/Kayess | ZidanSink | 2023-07-15T04:35:29Z | 0 | 0 | null | [

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-06-29T07:27:11Z | ---

license: creativeml-openrail-m

---

|

Wiryan/imryan | Wiryan | 2023-07-15T04:27:51Z | 0 | 0 | null | [

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-07-15T04:22:48Z | ---

license: creativeml-openrail-m

---

|

manmyung/Reinforce-CartPole-v1 | manmyung | 2023-07-15T04:24:11Z | 0 | 0 | null | [

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-15T04:23:52Z | ---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-CartPole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 490.20 +/- 23.02

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

jerryjalapeno/nart-100k-7b | jerryjalapeno | 2023-07-15T03:57:11Z | 1,520 | 20 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"license:cc-by-nc-nd-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-14T19:01:46Z | ---

license: cc-by-nc-nd-4.0

---

|

cbredallas/labelclassification | cbredallas | 2023-07-15T03:44:59Z | 0 | 0 | adapter-transformers | [

"adapter-transformers",

"en",

"license:openrail",

"region:us"

] | null | 2023-07-15T03:43:24Z | ---

license: openrail

language:

- en

library_name: adapter-transformers

--- |

renatostrianese/q-FrozenLake-v1-4x4-noSlippery | renatostrianese | 2023-07-15T03:43:44Z | 0 | 0 | null | [

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-15T03:43:33Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="renatostrianese/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

xielenite/zethielzero | xielenite | 2023-07-15T03:18:29Z | 0 | 0 | null | [

"license:openrail",

"region:us"

] | null | 2023-07-13T23:40:11Z | ---

license: openrail

---

voice models for RVC inferencing. see https://docs.google.com/document/d/13_l1bd1Osgz7qlAZn-zhklCbHpVRk6bYOuAuB78qmsE/edit to see how to use. |

AdanLee/ppo-Huggy | AdanLee | 2023-07-15T03:01:35Z | 11 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"Huggy",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] | reinforcement-learning | 2023-07-15T03:01:15Z | ---

library_name: ml-agents

tags:

- Huggy

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: AdanLee/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

RajanGo/RajanGo-Asgn-2 | RajanGo | 2023-07-15T01:43:52Z | 0 | 0 | peft | [

"peft",

"region:us"

] | null | 2023-07-15T01:43:44Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.4.0.dev0

|

akraieski/ppo-LunarLander-v2 | akraieski | 2023-07-15T01:42:23Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-15T01:42:05Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 277.81 +/- 14.10

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

timjwhite/a2c-AntBulletEnv-v0 | timjwhite | 2023-07-15T01:39:05Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"AntBulletEnv-v0",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-15T01:37:29Z | ---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

metrics:

- type: mean_reward

value: 792.36 +/- 37.50

name: mean_reward

verified: false

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of a **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Panchovix/guanaco-33b-PI-8192-LoRA-4bit-32g | Panchovix | 2023-07-15T01:38:52Z | 5 | 4 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-04T06:00:12Z | ---

license: other

---

[guanaco-33b](https://huggingface.co/timdettmers/guanaco-33b-merged) merged with bhenrym14's [airoboros-33b-gpt4-1.4.1-PI-8192-LoRA](https://huggingface.co/bhenrym14/airoboros-33b-gpt4-1.4.1-PI-8192-LoRA), quantized at 4 bit.

More info about the LoRA [Here](https://huggingface.co/bhenrym14/airoboros-33b-gpt4-1.4.1-PI-8192-fp16). This is an alternative to SuperHOT 8k LoRA trained with LoRA_rank 64, and airoboros 1.4.1 dataset.

It was created with GPTQ-for-LLaMA with group size 32 and act order true as parameters, to get the maximum perplexity vs FP16 model.

I HIGHLY suggest to use exllama, to evade some VRAM issues.

Use compress_pos_emb = 4 for any context up to 8192 context.

If you have 2x24 GB VRAM GPUs cards, to not get Out of Memory errors at 8192 context, use:

gpu_split: 9,21 |

chandrasutrisnotjhong/taxi | chandrasutrisnotjhong | 2023-07-15T01:06:47Z | 0 | 0 | null | [

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-15T01:06:45Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: taxi

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="chandrasutrisnotjhong/taxi", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

borkur/gpt2-finetuned-wikitext2 | borkur | 2023-07-15T00:56:29Z | 85 | 0 | transformers | [

"transformers",

"tf",

"gpt2",

"text-generation",

"generated_from_keras_callback",

"base_model:openai-community/gpt2",

"base_model:finetune:openai-community/gpt2",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-14T21:30:03Z | ---

license: mit

base_model: gpt2

tags:

- generated_from_keras_callback

model-index:

- name: borkur/gpt2-finetuned-wikitext2

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# borkur/gpt2-finetuned-wikitext2

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 6.4948

- Validation Loss: 6.3466

- Epoch: 1

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 2e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 7.3152 | 6.7681 | 0 |

| 6.4948 | 6.3466 | 1 |

### Framework versions

- Transformers 4.31.0.dev0

- TensorFlow 2.13.0

- Datasets 2.13.1

- Tokenizers 0.13.3

|

giocs2017/dqn-SpaceInvadersNoFrameskip-v4-gio | giocs2017 | 2023-07-15T00:25:57Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-15T00:25:23Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 595.00 +/- 126.25

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga giocs2017 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga giocs2017 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga giocs2017

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

# Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

ALM-AHME/beit-large-patch16-224-finetuned-BreastCancer-Classification-BreakHis-AH-60-20-20 | ALM-AHME | 2023-07-14T23:55:06Z | 5 | 3 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"beit",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2023-07-14T20:43:15Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: beit-large-patch16-224-finetuned-BreastCancer-Classification-BreakHis-AH-60-20-20

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: Splitted-Resized

split: train

args: Splitted-Resized

metrics:

- name: Accuracy

type: accuracy

value: 0.9938708156529938

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# beit-large-patch16-224-finetuned-BreastCancer-Classification-BreakHis-AH-60-20-20

This model is a fine-tuned version of [microsoft/beit-large-patch16-224](https://huggingface.co/microsoft/beit-large-patch16-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0275

- Accuracy: 0.9939

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.9

- num_epochs: 12

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.46 | 1.0 | 199 | 0.3950 | 0.8482 |

| 0.2048 | 2.0 | 398 | 0.1886 | 0.9189 |

| 0.182 | 3.0 | 597 | 0.1382 | 0.9481 |

| 0.0826 | 4.0 | 796 | 0.0760 | 0.9694 |

| 0.0886 | 5.0 | 995 | 0.0600 | 0.9788 |

| 0.0896 | 6.0 | 1194 | 0.0523 | 0.9802 |

| 0.0774 | 7.0 | 1393 | 0.0482 | 0.9826 |

| 0.0876 | 8.0 | 1592 | 0.0289 | 0.9877 |

| 0.1105 | 9.0 | 1791 | 0.0580 | 0.9821 |

| 0.0289 | 10.0 | 1990 | 0.0294 | 0.9925 |

| 0.0594 | 11.0 | 2189 | 0.0331 | 0.9906 |

| 0.0011 | 12.0 | 2388 | 0.0275 | 0.9939 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

CheeriosMomentors/LORA | CheeriosMomentors | 2023-07-14T23:32:58Z | 0 | 0 | null | [

"en",

"license:wtfpl",

"region:us"

] | null | 2023-04-08T06:21:46Z | ---

license: wtfpl

language:

- en

---

Okay listen up. This is mostly loras that I made by myself.

Some of these may be released on Civitai and some may not.

If you found these, good job you now have cool loras.

You can post these on Civitai or anywhere idc.

You can say these are yours, get money I do not care.

But please for god sake, leave my name out of it.

I am not responsible for anything you done with these.

These were just for fun, that is all. Now enjoy.

Lora Count: 2

We currently have Nisho Ishin (Medaka Box) style and ryukishi07 (Umineko Style.)

I may make more and post them here. |

chunwoolee0/seqcls_mrpc_bert_base_uncased_model | chunwoolee0 | 2023-07-14T23:32:36Z | 103 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"dataset:glue",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-07-14T23:27:51Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

- f1

model-index:

- name: seqcls_mrpc_bert_base_uncased_model

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

config: mrpc

split: validation

args: mrpc

metrics:

- name: Accuracy

type: accuracy

value: 0.8014705882352942

- name: F1

type: f1

value: 0.8669950738916257

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# seqcls_mrpc_bert_base_uncased_model

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4621

- Accuracy: 0.8015

- F1: 0.8670

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| No log | 1.0 | 58 | 0.5442 | 0.7108 | 0.8228 |

| No log | 2.0 | 116 | 0.5079 | 0.7745 | 0.8558 |

| No log | 3.0 | 174 | 0.4621 | 0.8015 | 0.8670 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

foreverip/dqn-SpaceInvadersNoFrameskip-v4 | foreverip | 2023-07-14T23:31:22Z | 1 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-14T23:30:45Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 603.00 +/- 169.77

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga foreverip -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga foreverip -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga foreverip

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

# Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

Yntec/Photosphere | Yntec | 2023-07-14T23:22:58Z | 1,547 | 4 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"Noosphere",

"Dreamlike",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-07-14T22:54:19Z | ---

license: creativeml-openrail-m

library_name: diffusers

pipeline_tag: text-to-image

tags:

- stable-diffusion

- stable-diffusion-diffusers

- diffusers

- text-to-image

- Noosphere

- Dreamlike

---

# Photosphere

A mix of Noosphere v3 by skumerz and photorealistic models.

Original page:

https://civitai.com/models/36538?modelVersionId=107675 |

MnLgt/slope-bed | MnLgt | 2023-07-14T23:19:56Z | 0 | 0 | null | [

"license:mit",

"region:us"

] | null | 2023-07-14T23:19:55Z | ---

license: mit

---

### slope-bed on Stable Diffusion

This is the `<slope-bed>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

cgr28/q-Taxi-v3 | cgr28 | 2023-07-14T23:15:40Z | 0 | 0 | null | [

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-14T23:15:38Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.52 +/- 2.74

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="cgr28/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

0sunfire0/Pixelcopter_train_00 | 0sunfire0 | 2023-07-14T23:10:07Z | 0 | 0 | null | [

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-14T23:10:05Z | ---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Pixelcopter_train_00

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 7.20 +/- 7.10

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

Alignment-Lab-AI/AttourneyAtLam | Alignment-Lab-AI | 2023-07-14T23:01:49Z | 0 | 0 | peft | [

"peft",

"region:us"

] | null | 2023-07-14T22:53:40Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.4.0.dev0

|

GISDGDIGDI9ED/leslie | GISDGDIGDI9ED | 2023-07-14T22:53:08Z | 0 | 0 | flair | [

"flair",

"art",

"es",

"dataset:openchat/openchat_sharegpt4_dataset",

"license:bsd",

"region:us"

] | null | 2023-07-14T22:50:29Z | ---

license: bsd

datasets:

- openchat/openchat_sharegpt4_dataset

language:

- es

metrics:

- character

library_name: flair

tags:

- art

--- |

YanJiangJerry/sentiment-roberta-e3-b16-v2-w0.01 | YanJiangJerry | 2023-07-14T22:45:22Z | 121 | 0 | transformers | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-07-14T13:20:25Z | ---

tags:

- generated_from_trainer

metrics:

- f1

- recall

- precision

model-index:

- name: sentiment-roberta-e3-b16-v2-w0.01

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sentiment-roberta-e3-b16-v2-w0.01

This model is a fine-tuned version of [siebert/sentiment-roberta-large-english](https://huggingface.co/siebert/sentiment-roberta-large-english) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6014

- F1: 0.7844

- Recall: 0.7844

- Precision: 0.7844

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Recall | Precision |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:---------:|

| No log | 1.0 | 187 | 0.6687 | 0.7574 | 0.7574 | 0.7574 |

| No log | 2.0 | 374 | 0.5700 | 0.7898 | 0.7898 | 0.7898 |

| 0.6052 | 3.0 | 561 | 0.6014 | 0.7844 | 0.7844 | 0.7844 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

underactuated/opt-350m_ft | underactuated | 2023-07-14T22:41:50Z | 136 | 0 | transformers | [

"transformers",

"pytorch",

"opt",

"text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-14T22:39:39Z | ---

tags:

- generated_from_trainer

model-index:

- name: opt-350m_ft

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# opt-350m_ft

This model was trained from scratch on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

YanJiangJerry/sentiment-roberta-e2-b16-v2-w0.01 | YanJiangJerry | 2023-07-14T22:29:12Z | 106 | 0 | transformers | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-07-14T22:22:40Z | ---

tags:

- generated_from_trainer

metrics:

- f1

- recall

- precision

model-index:

- name: sentiment-roberta-e2-b16-v2-w0.01

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sentiment-roberta-e2-b16-v2-w0.01

This model is a fine-tuned version of [siebert/sentiment-roberta-large-english](https://huggingface.co/siebert/sentiment-roberta-large-english) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8630

- F1: 0.7520

- Recall: 0.7520

- Precision: 0.7520

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Recall | Precision |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:---------:|

| No log | 1.0 | 375 | 0.8651 | 0.6739 | 0.6739 | 0.6739 |

| 0.6564 | 2.0 | 750 | 0.8630 | 0.7520 | 0.7520 | 0.7520 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

marloz03/my_awesome_qa_model | marloz03 | 2023-07-14T22:26:40Z | 61 | 0 | transformers | [

"transformers",

"tf",

"distilbert",

"question-answering",

"generated_from_keras_callback",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | question-answering | 2023-07-13T21:07:04Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: marloz03/my_awesome_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# marloz03/my_awesome_qa_model

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 1.2264

- Validation Loss: 1.4529

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 1000, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 2.6044 | 1.5880 | 0 |

| 1.3853 | 1.4529 | 1 |

| 1.2264 | 1.4529 | 2 |

### Framework versions

- Transformers 4.29.2

- TensorFlow 2.10.0

- Datasets 2.12.0

- Tokenizers 0.13.2

|

Recognai/zeroshot_selectra_small | Recognai | 2023-07-14T22:23:19Z | 129 | 5 | transformers | [

"transformers",

"pytorch",

"safetensors",

"electra",

"text-classification",

"zero-shot-classification",

"nli",

"es",

"dataset:xnli",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | zero-shot-classification | 2022-03-02T23:29:04Z | ---

language: es

tags:

- zero-shot-classification

- nli

- pytorch

datasets:

- xnli

pipeline_tag: zero-shot-classification

license: apache-2.0

widget:

- text: "El autor se perfila, a los 50 años de su muerte, como uno de los grandes de su siglo"

candidate_labels: "cultura, sociedad, economia, salud, deportes"

---

# Zero-shot SELECTRA: A zero-shot classifier based on SELECTRA

*Zero-shot SELECTRA* is a [SELECTRA model](https://huggingface.co/Recognai/selectra_small) fine-tuned on the Spanish portion of the [XNLI dataset](https://huggingface.co/datasets/xnli). You can use it with Hugging Face's [Zero-shot pipeline](https://huggingface.co/transformers/master/main_classes/pipelines.html#transformers.ZeroShotClassificationPipeline) to make [zero-shot classifications](https://joeddav.github.io/blog/2020/05/29/ZSL.html).

In comparison to our previous zero-shot classifier [based on BETO](https://huggingface.co/Recognai/bert-base-spanish-wwm-cased-xnli), zero-shot SELECTRA is **much more lightweight**. As shown in the *Metrics* section, the *small* version (5 times fewer parameters) performs slightly worse, while the *medium* version (3 times fewer parameters) **outperforms** the BETO based zero-shot classifier.

## Usage

```python

from transformers import pipeline

classifier = pipeline("zero-shot-classification",

model="Recognai/zeroshot_selectra_medium")

classifier(

"El autor se perfila, a los 50 años de su muerte, como uno de los grandes de su siglo",

candidate_labels=["cultura", "sociedad", "economia", "salud", "deportes"],

hypothesis_template="Este ejemplo es {}."

)

"""Output

{'sequence': 'El autor se perfila, a los 50 años de su muerte, como uno de los grandes de su siglo',

'labels': ['sociedad', 'cultura', 'salud', 'economia', 'deportes'],

'scores': [0.3711881935596466,

0.25650349259376526,

0.17355826497077942,

0.1641489565372467,

0.03460107371211052]}

"""

```

The `hypothesis_template` parameter is important and should be in Spanish. **In the widget on the right, this parameter is set to its default value: "This example is {}.", so different results are expected.**

## Metrics

| Model | Params | XNLI (acc) | \*MLSUM (acc) |

| --- | --- | --- | --- |

| [zs BETO](https://huggingface.co/Recognai/bert-base-spanish-wwm-cased-xnli) | 110M | 0.799 | 0.530 |

| [zs SELECTRA medium](https://huggingface.co/Recognai/zeroshot_selectra_medium) | 41M | **0.807** | **0.589** |

| zs SELECTRA small | **22M** | 0.795 | 0.446 |

\*evaluated with zero-shot learning (ZSL)

- **XNLI**: The stated accuracy refers to the test portion of the [XNLI dataset](https://huggingface.co/datasets/xnli), after finetuning the model on the training portion.

- **MLSUM**: For this accuracy we take the test set of the [MLSUM dataset](https://huggingface.co/datasets/mlsum) and classify the summaries of 5 selected labels. For details, check out our [evaluation notebook](https://github.com/recognai/selectra/blob/main/zero-shot_classifier/evaluation.ipynb)

## Training

Check out our [training notebook](https://github.com/recognai/selectra/blob/main/zero-shot_classifier/training.ipynb) for all the details.

## Authors

- David Fidalgo ([GitHub](https://github.com/dcfidalgo))

- Daniel Vila ([GitHub](https://github.com/dvsrepo))

- Francisco Aranda ([GitHub](https://github.com/frascuchon))

- Javier Lopez ([GitHub](https://github.com/javispp)) |

cuervjos/alpacaIOD-7b-plus | cuervjos | 2023-07-14T22:22:53Z | 1 | 0 | peft | [

"peft",

"region:us"

] | null | 2023-07-13T08:58:46Z | ---

library_name: peft

---

## Training procedure

### Framework versions

- PEFT 0.4.0.dev0

- PEFT 0.4.0.dev0

- PEFT 0.4.0.dev0

- PEFT 0.4.0.dev0

- PEFT 0.4.0.dev0

|

Recognai/bert-base-spanish-wwm-cased-xnli | Recognai | 2023-07-14T22:22:51Z | 2,134 | 16 | transformers | [

"transformers",

"pytorch",

"jax",

"safetensors",

"bert",

"text-classification",

"zero-shot-classification",

"nli",

"es",

"dataset:xnli",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | zero-shot-classification | 2022-03-02T23:29:04Z | ---

language: es

tags:

- zero-shot-classification

- nli

- pytorch

datasets:

- xnli

license: mit

pipeline_tag: zero-shot-classification

widget:

- text: "El autor se perfila, a los 50 años de su muerte, como uno de los grandes de su siglo"

candidate_labels: "cultura, sociedad, economia, salud, deportes"

---

# bert-base-spanish-wwm-cased-xnli

**UPDATE, 15.10.2021: Check out our new zero-shot classifiers, much more lightweight and even outperforming this one: [zero-shot SELECTRA small](https://huggingface.co/Recognai/zeroshot_selectra_small) and [zero-shot SELECTRA medium](https://huggingface.co/Recognai/zeroshot_selectra_medium).**

## Model description

This model is a fine-tuned version of the [spanish BERT model](https://huggingface.co/dccuchile/bert-base-spanish-wwm-cased) with the Spanish portion of the XNLI dataset. You can have a look at the [training script](https://huggingface.co/Recognai/bert-base-spanish-wwm-cased-xnli/blob/main/zeroshot_training_script.py) for details of the training.

### How to use

You can use this model with Hugging Face's [zero-shot-classification pipeline](https://discuss.huggingface.co/t/new-pipeline-for-zero-shot-text-classification/681):

```python

from transformers import pipeline

classifier = pipeline("zero-shot-classification",

model="Recognai/bert-base-spanish-wwm-cased-xnli")

classifier(

"El autor se perfila, a los 50 años de su muerte, como uno de los grandes de su siglo",

candidate_labels=["cultura", "sociedad", "economia", "salud", "deportes"],

hypothesis_template="Este ejemplo es {}."

)

"""output

{'sequence': 'El autor se perfila, a los 50 años de su muerte, como uno de los grandes de su siglo',

'labels': ['cultura', 'sociedad', 'economia', 'salud', 'deportes'],

'scores': [0.38897448778152466,

0.22997373342514038,

0.1658431738615036,

0.1205764189362526,

0.09463217109441757]}

"""

```

## Eval results

Accuracy for the test set:

| | XNLI-es |

|-----------------------------|---------|

|bert-base-spanish-wwm-cased-xnli | 79.9% | |

Recognai/distilbert-base-es-multilingual-cased | Recognai | 2023-07-14T22:20:32Z | 352 | 3 | transformers | [

"transformers",

"pytorch",

"safetensors",

"distilbert",

"fill-mask",

"es",

"dataset:wikipedia",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:04Z | ---

language: es

license: apache-2.0

datasets:

- wikipedia

widget:

- text: "Mi nombre es Juan y vivo en [MASK]."

---

# DistilBERT base multilingual model Spanish subset (cased)

This model is the Spanish extract of `distilbert-base-multilingual-cased` (https://huggingface.co/distilbert-base-multilingual-cased), a distilled version of the [BERT base multilingual model](bert-base-multilingual-cased). This model is cased: it does make a difference between english and English.

It uses the extraction method proposed by Geotrend described in https://github.com/Geotrend-research/smaller-transformers.

The resulting model has the same architecture as DistilmBERT: 6 layers, 768 dimension and 12 heads, with a total of **63M parameters** (compared to 134M parameters for DistilmBERT).

The goal of this model is to reduce even further the size of the `distilbert-base-multilingual` multilingual model by selecting only most frequent tokens for Spanish, reducing the size of the embedding layer. For more details visit the paper from the Geotrend team: Load What You Need: Smaller Versions of Multilingual BERT. |

Jowie/ppo-LunarLander | Jowie | 2023-07-14T22:08:23Z | 4 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-14T22:07:58Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 227.31 +/- 46.54

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

LarryAIDraw/Wa2k | LarryAIDraw | 2023-07-14T22:06:30Z | 0 | 0 | null | [

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-07-14T21:45:20Z | ---

license: creativeml-openrail-m

---

https://civitai.com/models/13926/wa2000-or-girls-frontline |

brucew5978/my_awesome_asr_mind_model | brucew5978 | 2023-07-14T22:02:12Z | 77 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2023-07-12T18:24:27Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- wer

model-index:

- name: my_awesome_asr_mind_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_awesome_asr_mind_model

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 57.1369

- Wer: 1.1053

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 2000

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 48.7151 | 200.0 | 1000 | 57.1369 | 1.1053 |

| 47.4068 | 400.0 | 2000 | 57.1369 | 1.1053 |

### Framework versions

- Transformers 4.30.2

- Pytorch 1.12.1

- Datasets 2.13.1

- Tokenizers 0.13.3

|

sghirardelli/vit-base-patch16-224-in21k-rgbd | sghirardelli | 2023-07-14T22:00:58Z | 64 | 0 | transformers | [

"transformers",

"tf",

"tensorboard",

"vit",

"image-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2023-07-14T20:21:17Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: vit-base-patch16-224-in21k-rgbd

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# vit-base-patch16-224-in21k-rgbd

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.5496

- Train Accuracy: 1.0

- Train Top-3-accuracy: 1.0

- Validation Loss: 0.3955

- Validation Accuracy: 0.9994

- Validation Top-3-accuracy: 1.0

- Epoch: 1

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 3e-05, 'decay_steps': 1455, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16

### Training results

| Train Loss | Train Accuracy | Train Top-3-accuracy | Validation Loss | Validation Accuracy | Validation Top-3-accuracy | Epoch |

|:----------:|:--------------:|:--------------------:|:---------------:|:-------------------:|:-------------------------:|:-----:|

| 1.6822 | 0.9392 | 0.9664 | 0.7810 | 0.9994 | 1.0 | 0 |

| 0.5496 | 1.0 | 1.0 | 0.3955 | 0.9994 | 1.0 | 1 |

### Framework versions

- Transformers 4.30.2

- TensorFlow 2.12.0

- Datasets 2.13.1

- Tokenizers 0.13.3

|

wolffenbuetell/PFKODRCHORMA | wolffenbuetell | 2023-07-14T21:53:52Z | 0 | 0 | null | [

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-07-14T21:48:13Z | ---

license: creativeml-openrail-m

---

|

AACEE/textual_inversion_sksship | AACEE | 2023-07-14T21:48:01Z | 4 | 0 | diffusers | [

"diffusers",

"tensorboard",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"textual_inversion",

"base_model:runwayml/stable-diffusion-v1-5",

"base_model:adapter:runwayml/stable-diffusion-v1-5",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-07-14T20:13:35Z |

---

license: creativeml-openrail-m

base_model: runwayml/stable-diffusion-v1-5

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- textual_inversion

inference: true

---

# Textual inversion text2image fine-tuning - AACEE/textual_inversion_sksship

These are textual inversion adaption weights for runwayml/stable-diffusion-v1-5. You can find some example images in the following.

|

YanJiangJerry/covid-tweet-bert-large-e2-noweight | YanJiangJerry | 2023-07-14T21:45:24Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-07-14T21:30:30Z | ---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

- precision

- recall

model-index:

- name: covid-tweet-bert-large-e2-noweight

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# covid-tweet-bert-large-e2-noweight

This model is a fine-tuned version of [digitalepidemiologylab/covid-twitter-bert-v2](https://huggingface.co/digitalepidemiologylab/covid-twitter-bert-v2) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2582

- Accuracy: 0.9568

- F1: 0.8878

- Precision: 0.8604

- Recall: 0.9170

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision | Recall |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:---------:|:------:|

| 0.0593 | 1.0 | 1023 | 0.2053 | 0.9581 | 0.8885 | 0.8810 | 0.8962 |

| 0.0146 | 2.0 | 2046 | 0.2582 | 0.9568 | 0.8878 | 0.8604 | 0.9170 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

0sunfire0/Cartpole-v1_train_01 | 0sunfire0 | 2023-07-14T21:31:24Z | 0 | 0 | null | [

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-14T21:31:15Z | ---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Cartpole-v1_train_01

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 497.20 +/- 8.40

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

XO-Appleton/vit-base-patch16-224-in21k-MR | XO-Appleton | 2023-07-14T20:45:21Z | 66 | 0 | transformers | [

"transformers",

"tf",

"tensorboard",

"vit",

"image-classification",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2023-07-14T18:12:06Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: XO-Appleton/vit-base-patch16-224-in21k-MR

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# XO-Appleton/vit-base-patch16-224-in21k-MR

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0042

- Train Accuracy: 1.0

- Train Top-3-accuracy: 1.0

- Validation Loss: 0.0126

- Validation Accuracy: 0.9983

- Validation Top-3-accuracy: 1.0

- Epoch: 3

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training: