Search is not available for this dataset

modelId

stringlengths 5

138

| author

stringlengths 2

42

| last_modified

unknowndate 2020-02-15 11:33:14

2025-04-12 06:26:38

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 422

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 54

values | createdAt

unknowndate 2022-03-02 23:29:04

2025-04-12 06:25:56

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

BeaverAI/Agatha-111B-v1d-GGUF | BeaverAI | "2025-04-06T03:03:41Z" | 0 | 0 | null | [

"gguf",

"endpoints_compatible",

"region:us",

"conversational"

] | null | "2025-04-06T01:49:11Z" | <!DOCTYPE html>

<html class="" lang="en">

<head>

<meta charset="utf-8" />

<meta

name="viewport"

content="width=device-width, initial-scale=1.0, user-scalable=no"

/>

<meta

name="description"

content="We're on a journey to advance and democratize artificial intelligence through open source and open science."

/>

<meta property="fb:app_id" content="1321688464574422" />

<meta name="twitter:card" content="summary_large_image" />

<meta name="twitter:site" content="@huggingface" />

<meta

property="og:title"

content="Hugging Face - The AI community building the future."

/>

<meta property="og:type" content="website" />

<title>Hugging Face - The AI community building the future.</title>

<style>

body {

margin: 0;

}

main {

background-color: white;

min-height: 100vh;

padding: 7rem 1rem 8rem 1rem;

text-align: center;

font-family: Source Sans Pro, ui-sans-serif, system-ui, -apple-system,

BlinkMacSystemFont, Segoe UI, Roboto, Helvetica Neue, Arial, Noto Sans,

sans-serif, Apple Color Emoji, Segoe UI Emoji, Segoe UI Symbol,

Noto Color Emoji;

}

img {

width: 6rem;

height: 6rem;

margin: 0 auto 1rem;

}

h1 {

font-size: 3.75rem;

line-height: 1;

color: rgba(31, 41, 55, 1);

font-weight: 700;

box-sizing: border-box;

margin: 0 auto;

}

p, a {

color: rgba(107, 114, 128, 1);

font-size: 1.125rem;

line-height: 1.75rem;

max-width: 28rem;

box-sizing: border-box;

margin: 0 auto;

}

.dark main {

background-color: rgb(11, 15, 25);

}

.dark h1 {

color: rgb(209, 213, 219);

}

.dark p, .dark a {

color: rgb(156, 163, 175);

}

</style>

<script>

// On page load or when changing themes, best to add inline in `head` to avoid FOUC

const key = "_tb_global_settings";

let theme = window.matchMedia("(prefers-color-scheme: dark)").matches

? "dark"

: "light";

try {

const storageTheme = JSON.parse(window.localStorage.getItem(key)).theme;

if (storageTheme) {

theme = storageTheme === "dark" ? "dark" : "light";

}

} catch (e) {}

if (theme === "dark") {

document.documentElement.classList.add("dark");

} else {

document.documentElement.classList.remove("dark");

}

</script>

</head>

<body>

<main>

<img

src="https://cdn-media.huggingface.co/assets/huggingface_logo.svg"

alt=""

/>

<div>

<h1>429</h1>

<p>We had to rate limit you. If you think it's an error, send us <a href="mailto:[email protected]">an email</a></p>

</div>

</main>

</body>

</html> |

javijer/llama2-alpaca-16bit | javijer | "2024-04-23T09:52:04Z" | 0 | 0 | transformers | [

"transformers",

"text-generation-inference",

"unsloth",

"llama",

"trl",

"en",

"base_model:unsloth/llama-2-7b-bnb-4bit",

"base_model:finetune:unsloth/llama-2-7b-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | "2024-04-23T09:52:02Z" | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

base_model: unsloth/llama-2-7b-bnb-4bit

---

# Uploaded model

- **Developed by:** javijer

- **License:** apache-2.0

- **Finetuned from model :** unsloth/llama-2-7b-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

mradermacher/Ontology-0.1-Mistral-7B-GGUF | mradermacher | "2025-01-02T07:30:16Z" | 19 | 0 | transformers | [

"transformers",

"gguf",

"en",

"base_model:Orneyfish/Ontology-0.1-Mistral-7B",

"base_model:quantized:Orneyfish/Ontology-0.1-Mistral-7B",

"endpoints_compatible",

"region:us"

] | null | "2025-01-02T01:19:31Z" | ---

base_model: Orneyfish/Ontology-0.1-Mistral-7B

language:

- en

library_name: transformers

quantized_by: mradermacher

tags: []

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/Orneyfish/Ontology-0.1-Mistral-7B

<!-- provided-files -->

weighted/imatrix quants seem not to be available (by me) at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q2_K.gguf) | Q2_K | 2.8 | |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q3_K_S.gguf) | Q3_K_S | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q3_K_M.gguf) | Q3_K_M | 3.6 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q3_K_L.gguf) | Q3_K_L | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.IQ4_XS.gguf) | IQ4_XS | 4.0 | |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q4_K_S.gguf) | Q4_K_S | 4.2 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q4_K_M.gguf) | Q4_K_M | 4.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q5_K_S.gguf) | Q5_K_S | 5.1 | |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q5_K_M.gguf) | Q5_K_M | 5.2 | |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q6_K.gguf) | Q6_K | 6.0 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.Q8_0.gguf) | Q8_0 | 7.8 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/Ontology-0.1-Mistral-7B-GGUF/resolve/main/Ontology-0.1-Mistral-7B.f16.gguf) | f16 | 14.6 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

eri166/BKPM_Mistral | eri166 | "2025-02-27T09:54:21Z" | 0 | 0 | transformers | [

"transformers",

"gguf",

"mistral",

"text-generation-inference",

"unsloth",

"en",

"base_model:unsloth/mistral-7b-v0.3-bnb-4bit",

"base_model:quantized:unsloth/mistral-7b-v0.3-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | "2025-02-27T09:52:18Z" | ---

base_model: unsloth/mistral-7b-v0.3-bnb-4bit

tags:

- text-generation-inference

- transformers

- unsloth

- mistral

- gguf

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** eri166

- **License:** apache-2.0

- **Finetuned from model :** unsloth/mistral-7b-v0.3-bnb-4bit

This mistral model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

superdavidyeh/llama2_uuu_news_qlora | superdavidyeh | "2024-06-05T08:01:12Z" | 1 | 0 | peft | [

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:NousResearch/Llama-2-7b-chat-hf",

"base_model:adapter:NousResearch/Llama-2-7b-chat-hf",

"region:us"

] | null | "2024-06-05T03:02:36Z" | ---

library_name: peft

base_model: NousResearch/Llama-2-7b-chat-hf

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.11.1 |

leixa/2f269ebb-4917-40e0-bd09-8d9b35f735b1 | leixa | "2025-01-24T14:54:35Z" | 9 | 0 | peft | [

"peft",

"safetensors",

"llama",

"axolotl",

"generated_from_trainer",

"base_model:unsloth/llama-3-8b",

"base_model:adapter:unsloth/llama-3-8b",

"license:llama3",

"region:us"

] | null | "2025-01-24T14:24:32Z" | ---

library_name: peft

license: llama3

base_model: unsloth/llama-3-8b

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 2f269ebb-4917-40e0-bd09-8d9b35f735b1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: unsloth/llama-3-8b

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:

- data_files:

- ed7237070a48a937_train_data.json

ds_type: json

format: custom

path: /workspace/input_data/ed7237070a48a937_train_data.json

type:

field_input: tokens

field_instruction: text

field_output: ner_tags

format: '{instruction} {input}'

no_input_format: '{instruction}'

system_format: '{system}'

system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: true

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: true

gradient_clipping: 1.0

group_by_length: false

hub_model_id: leixa/2f269ebb-4917-40e0-bd09-8d9b35f735b1

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0001

load_in_4bit: false

load_in_8bit: false

local_rank: 0

logging_steps: 3

lora_alpha: 32

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 16

lora_target_linear: true

lr_scheduler: cosine

max_steps: 100

micro_batch_size: 8

mlflow_experiment_name: /tmp/ed7237070a48a937_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 3

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 1024

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: techspear-hub

wandb_mode: online

wandb_name: 306028e5-d6af-4d1b-bab1-63a2e73aa431

wandb_project: Gradients-On-Demand

wandb_run: your_name

wandb_runid: 306028e5-d6af-4d1b-bab1-63a2e73aa431

warmup_steps: 10

weight_decay: 0.01

xformers_attention: null

```

</details><br>

# 2f269ebb-4917-40e0-bd09-8d9b35f735b1

This model is a fine-tuned version of [unsloth/llama-3-8b](https://huggingface.co/unsloth/llama-3-8b) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0423

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Use OptimizerNames.ADAMW_BNB with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 100

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:------:|:----:|:---------------:|

| No log | 0.0014 | 1 | 0.7183 |

| 0.4302 | 0.0127 | 9 | 0.3306 |

| 0.2097 | 0.0254 | 18 | 0.1815 |

| 0.1096 | 0.0380 | 27 | 0.0960 |

| 0.0689 | 0.0507 | 36 | 0.0704 |

| 0.0632 | 0.0634 | 45 | 0.0626 |

| 0.0564 | 0.0761 | 54 | 0.0527 |

| 0.0494 | 0.0888 | 63 | 0.0470 |

| 0.0413 | 0.1014 | 72 | 0.0443 |

| 0.0417 | 0.1141 | 81 | 0.0443 |

| 0.0417 | 0.1268 | 90 | 0.0427 |

| 0.0397 | 0.1395 | 99 | 0.0423 |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

mradermacher/TinyAlpaca-GGUF | mradermacher | "2025-02-05T17:17:11Z" | 160 | 0 | transformers | [

"transformers",

"gguf",

"en",

"base_model:mlabonne/TinyAlpaca",

"base_model:quantized:mlabonne/TinyAlpaca",

"endpoints_compatible",

"region:us"

] | null | "2025-02-05T16:52:51Z" | ---

base_model: mlabonne/TinyAlpaca

language:

- en

library_name: transformers

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/mlabonne/TinyAlpaca

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/TinyAlpaca-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q2_K.gguf) | Q2_K | 0.5 | |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q3_K_S.gguf) | Q3_K_S | 0.6 | |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q3_K_M.gguf) | Q3_K_M | 0.6 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q3_K_L.gguf) | Q3_K_L | 0.7 | |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.IQ4_XS.gguf) | IQ4_XS | 0.7 | |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q4_K_S.gguf) | Q4_K_S | 0.7 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q4_K_M.gguf) | Q4_K_M | 0.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q5_K_S.gguf) | Q5_K_S | 0.9 | |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q5_K_M.gguf) | Q5_K_M | 0.9 | |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q6_K.gguf) | Q6_K | 1.0 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.Q8_0.gguf) | Q8_0 | 1.3 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/TinyAlpaca-GGUF/resolve/main/TinyAlpaca.f16.gguf) | f16 | 2.3 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

MrD05/llama2-qlora-finetunined-role | MrD05 | "2023-07-24T09:16:50Z" | 4 | 0 | peft | [

"peft",

"region:us"

] | null | "2023-07-24T09:14:13Z" | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.5.0.dev0

|

seongjae6751/poca-SoccerTwos | seongjae6751 | "2025-02-12T18:32:18Z" | 0 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"SoccerTwos",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-SoccerTwos",

"region:us"

] | reinforcement-learning | "2025-02-12T18:31:45Z" | ---

library_name: ml-agents

tags:

- SoccerTwos

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-SoccerTwos

---

# **poca** Agent playing **SoccerTwos**

This is a trained model of a **poca** agent playing **SoccerTwos**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: seongjae6751/poca-SoccerTwos

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

ShinyQ/Sentiboard | ShinyQ | "2022-12-10T06:45:35Z" | 3 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2022-12-10T06:34:49Z" | ---

tags:

- generated_from_trainer

model-index:

- name: tmp_trainer

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tmp_trainer

This model was trained from scratch on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0

- Tokenizers 0.13.2

|

Inderpreet01/Llama-3.2-8B-Instruct_rca_grpo_v1 | Inderpreet01 | "2025-03-26T21:46:33Z" | 0 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"text-generation-inference",

"unsloth",

"trl",

"grpo",

"conversational",

"en",

"base_model:Inderpreet01/Llama-3.1-8B-Instruct_rca_sft_v2",

"base_model:finetune:Inderpreet01/Llama-3.1-8B-Instruct_rca_sft_v2",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | "2025-03-26T21:43:46Z" | ---

base_model: Inderpreet01/Llama-3.1-8B-Instruct_rca_sft_v2

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

- grpo

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** Inderpreet01

- **License:** apache-2.0

- **Finetuned from model :** Inderpreet01/Llama-3.1-8B-Instruct_rca_sft_v2

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

lesso10/dc7cb361-5673-4c27-9980-c558194db68e | lesso10 | "2025-03-24T13:46:15Z" | 0 | 0 | peft | [

"peft",

"safetensors",

"gemma2",

"axolotl",

"generated_from_trainer",

"base_model:unsloth/gemma-2-2b-it",

"base_model:adapter:unsloth/gemma-2-2b-it",

"license:gemma",

"region:us"

] | null | "2025-03-24T12:33:10Z" | Temporary Redirect. Redirecting to /api/resolve-cache/models/lesso10/dc7cb361-5673-4c27-9980-c558194db68e/0a6b1a971bb87a75569e762e719b3f42df96adb4/README.md?%2Flesso10%2Fdc7cb361-5673-4c27-9980-c558194db68e%2Fresolve%2Fmain%2FREADME.md=&etag=%22d5173a74cc1f4d80c67926d6efd42398f80e8cc0%22 |

ArbaazBeg/phi3-medium-lmsys-0 | ArbaazBeg | "2024-08-01T04:01:34Z" | 105 | 0 | transformers | [

"transformers",

"safetensors",

"phi3",

"text-generation",

"conversational",

"custom_code",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | "2024-07-31T11:52:53Z" | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

argmining-vaccines/roberta-base-stance | argmining-vaccines | "2024-02-21T13:12:25Z" | 5 | 0 | transformers | [

"transformers",

"safetensors",

"roberta",

"token-classification",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | "2024-02-21T13:12:11Z" | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

kafikani/autotrain-dynex-77356140532 | kafikani | "2023-07-25T20:50:27Z" | 109 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"deberta",

"text-classification",

"autotrain",

"en",

"dataset:kafikani/autotrain-data-dynex",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2023-07-25T20:43:31Z" | ---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain"

datasets:

- kafikani/autotrain-data-dynex

co2_eq_emissions:

emissions: 4.733413186525841

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 77356140532

- CO2 Emissions (in grams): 4.7334

## Validation Metrics

- Loss: 0.458

- Accuracy: 0.837

- Macro F1: 0.761

- Micro F1: 0.837

- Weighted F1: 0.833

- Macro Precision: 0.785

- Micro Precision: 0.837

- Weighted Precision: 0.834

- Macro Recall: 0.746

- Micro Recall: 0.837

- Weighted Recall: 0.837

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/kafikani/autotrain-dynex-77356140532

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("kafikani/autotrain-dynex-77356140532", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("kafikani/autotrain-dynex-77356140532", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

``` |

ausgerechnet/schwurpert | ausgerechnet | "2025-02-24T10:55:58Z" | 153 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"safetensors",

"bert",

"fill-mask",

"de",

"base_model:deepset/gbert-large",

"base_model:finetune:deepset/gbert-large",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | "2023-10-17T16:33:14Z" | ---

base_model: deepset/gbert-large

model-index:

- name: schwurpert

results: []

language:

- de

pipeline_tag: fill-mask

---

# schwurpert

This model is a fine-tuned version of [deepset/gbert-large](https://huggingface.co/deepset/gbert-large) on Telegram posts written mostly by German conspiracy theorists (and some more credible authors).

The complete corpus is available on request.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'module': 'transformers.optimization_tf', 'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'module': 'keras.optimizers.schedules', 'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 92927, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, 'registered_name': None}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}, 'registered_name': 'WarmUp'}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Epoch |

|:----------:|:-----:|

| 1.7997 | 0 |

### Framework versions

- Transformers 4.33.2

- TensorFlow 2.13.0

- Datasets 2.14.5

- Tokenizers 0.13.3 |

transitionGap/DOMICILE-IN-Llama3.1-8B-smallset | transitionGap | "2024-10-14T18:45:51Z" | 19 | 0 | transformers | [

"transformers",

"safetensors",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"trl",

"en",

"base_model:unsloth/Meta-Llama-3.1-8B-Instruct-bnb-4bit",

"base_model:quantized:unsloth/Meta-Llama-3.1-8B-Instruct-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | "2024-10-14T18:17:54Z" | ---

base_model: unsloth/Meta-Llama-3.1-8B-Instruct-bnb-4bit

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

---

# Uploaded model

- **Developed by:** transitionGap

- **License:** apache-2.0

- **Finetuned from model :** unsloth/Meta-Llama-3.1-8B-Instruct-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

lmstudio-community/c4ai-command-r-plus-08-2024-GGUF | lmstudio-community | "2024-08-30T19:36:22Z" | 485 | 5 | transformers | [

"transformers",

"gguf",

"text-generation",

"en",

"fr",

"de",

"es",

"it",

"pt",

"ja",

"ko",

"zh",

"ar",

"base_model:CohereForAI/c4ai-command-r-plus-08-2024",

"base_model:quantized:CohereForAI/c4ai-command-r-plus-08-2024",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us",

"conversational"

] | text-generation | "2024-08-30T16:02:51Z" | ---

language:

- en

- fr

- de

- es

- it

- pt

- ja

- ko

- zh

- ar

license: cc-by-nc-4.0

library_name: transformers

extra_gated_prompt: "By submitting this form, you agree to the [License Agreement](https://cohere.com/c4ai-cc-by-nc-license) and acknowledge that the information you provide will be collected, used, and shared in accordance with Cohere’s [Privacy Policy]( https://cohere.com/privacy)."

extra_gated_fields:

Name: text

Affiliation: text

Country:

type: select

options:

- Aruba

- Afghanistan

- Angola

- Anguilla

- Åland Islands

- Albania

- Andorra

- United Arab Emirates

- Argentina

- Armenia

- American Samoa

- Antarctica

- French Southern Territories

- Antigua and Barbuda

- Australia

- Austria

- Azerbaijan

- Burundi

- Belgium

- Benin

- Bonaire Sint Eustatius and Saba

- Burkina Faso

- Bangladesh

- Bulgaria

- Bahrain

- Bahamas

- Bosnia and Herzegovina

- Saint Barthélemy

- Belarus

- Belize

- Bermuda

- Plurinational State of Bolivia

- Brazil

- Barbados

- Brunei-Darussalam

- Bhutan

- Bouvet-Island

- Botswana

- Central African Republic

- Canada

- Cocos (Keeling) Islands

- Switzerland

- Chile

- China

- Côte-dIvoire

- Cameroon

- Democratic Republic of the Congo

- Cook Islands

- Colombia

- Comoros

- Cabo Verde

- Costa Rica

- Cuba

- Curaçao

- Christmas Island

- Cayman Islands

- Cyprus

- Czechia

- Germany

- Djibouti

- Dominica

- Denmark

- Dominican Republic

- Algeria

- Ecuador

- Egypt

- Eritrea

- Western Sahara

- Spain

- Estonia

- Ethiopia

- Finland

- Fiji

- Falkland Islands (Malvinas)

- France

- Faroe Islands

- Federated States of Micronesia

- Gabon

- United Kingdom

- Georgia

- Guernsey

- Ghana

- Gibraltar

- Guinea

- Guadeloupe

- Gambia

- Guinea Bissau

- Equatorial Guinea

- Greece

- Grenada

- Greenland

- Guatemala

- French Guiana

- Guam

- Guyana

- Hong Kong

- Heard Island and McDonald Islands

- Honduras

- Croatia

- Haiti

- Hungary

- Indonesia

- Isle of Man

- India

- British Indian Ocean Territory

- Ireland

- Islamic Republic of Iran

- Iraq

- Iceland

- Israel

- Italy

- Jamaica

- Jersey

- Jordan

- Japan

- Kazakhstan

- Kenya

- Kyrgyzstan

- Cambodia

- Kiribati

- Saint-Kitts-and-Nevis

- South Korea

- Kuwait

- Lao-Peoples-Democratic-Republic

- Lebanon

- Liberia

- Libya

- Saint-Lucia

- Liechtenstein

- Sri Lanka

- Lesotho

- Lithuania

- Luxembourg

- Latvia

- Macao

- Saint Martin (French-part)

- Morocco

- Monaco

- Republic of Moldova

- Madagascar

- Maldives

- Mexico

- Marshall Islands

- North Macedonia

- Mali

- Malta

- Myanmar

- Montenegro

- Mongolia

- Northern Mariana Islands

- Mozambique

- Mauritania

- Montserrat

- Martinique

- Mauritius

- Malawi

- Malaysia

- Mayotte

- Namibia

- New Caledonia

- Niger

- Norfolk Island

- Nigeria

- Nicaragua

- Niue

- Netherlands

- Norway

- Nepal

- Nauru

- New Zealand

- Oman

- Pakistan

- Panama

- Pitcairn

- Peru

- Philippines

- Palau

- Papua New Guinea

- Poland

- Puerto Rico

- North Korea

- Portugal

- Paraguay

- State of Palestine

- French Polynesia

- Qatar

- Réunion

- Romania

- Russia

- Rwanda

- Saudi Arabia

- Sudan

- Senegal

- Singapore

- South Georgia and the South Sandwich Islands

- Saint Helena Ascension and Tristan da Cunha

- Svalbard and Jan Mayen

- Solomon Islands

- Sierra Leone

- El Salvador

- San Marino

- Somalia

- Saint Pierre and Miquelon

- Serbia

- South Sudan

- Sao Tome and Principe

- Suriname

- Slovakia

- Slovenia

- Sweden

- Eswatini

- Sint Maarten (Dutch-part)

- Seychelles

- Syrian Arab Republic

- Turks and Caicos Islands

- Chad

- Togo

- Thailand

- Tajikistan

- Tokelau

- Turkmenistan

- Timor Leste

- Tonga

- Trinidad and Tobago

- Tunisia

- Turkey

- Tuvalu

- Taiwan

- United Republic of Tanzania

- Uganda

- Ukraine

- United States Minor Outlying Islands

- Uruguay

- United-States

- Uzbekistan

- Holy See (Vatican City State)

- Saint Vincent and the Grenadines

- Bolivarian Republic of Venezuela

- Virgin Islands British

- Virgin Islands U.S.

- VietNam

- Vanuatu

- Wallis and Futuna

- Samoa

- Yemen

- South Africa

- Zambia

- Zimbabwe

Receive email updates on C4AI and Cohere research, events, products and services?:

type: select

options:

- Yes

- No

I agree to use this model for non-commercial use ONLY: checkbox

quantized_by: bartowski

pipeline_tag: text-generation

base_model: CohereForAI/c4ai-command-r-plus-08-2024

lm_studio:

param_count: 105b

use_case: general

release_date: 30-08-2024

model_creator: CohereForAI

prompt_template: cohere_command_r

base_model: Cohere

system_prompt: You are a large language model called Command R built by the company Cohere. You act as a brilliant, sophisticated, AI-assistant chatbot trained to assist human users by providing thorough responses.

original_repo: CohereForAI/c4ai-command-r-plus-08-2024

---

## 💫 Community Model> C4AI Command R Plus 08-2024 by Cohere For AI

*👾 [LM Studio](https://lmstudio.ai) Community models highlights program. Highlighting new & noteworthy models by the community. Join the conversation on [Discord](https://discord.gg/aPQfnNkxGC)*.

**Model creator:** [CohereForAI](https://huggingface.co/CohereForAI)<br>

**Original model**: [c4ai-command-r-plus-08-2024](https://huggingface.co/CohereForAI/c4ai-command-r-plus-08-2024)<br>

**GGUF quantization:** provided by [bartowski](https://huggingface.co/bartowski) based on `llama.cpp` release [b3634](https://github.com/ggerganov/llama.cpp/releases/tag/b3634)<br>

## Model Summary:

C4AI Command R Plus 08-2024 is an update to the originally released 105B paramater Command R. The original Command R model received sweeping praise for its incredible RAG and multilingual abilities, and this model is no different.<br>

Not for commercial use, must adhere to [C4AI's Acceptable Use Policy](https://docs.cohere.com/docs/c4ai-acceptable-use-policy).

## Prompt Template:

Choose the `Cohere Command R` preset in your LM Studio.

Under the hood, the model will see a prompt that's formatted like so:

```

<BOS_TOKEN><|START_OF_TURN_TOKEN|><|USER_TOKEN|>{prompt}<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>

```

This model also supports tool use and RAG prompt formats. For details on formatting for those use cases, view [tool use here](https://huggingface.co/CohereForAI/c4ai-command-r-plus-08-2024#tool-use--agent-capabilities) and [RAG capabilities here](https://huggingface.co/CohereForAI/c4ai-command-r-plus-08-2024#grounded-generation-and-rag-capabilities)

## Technical Details

C4AI Command R Plus 08-2024 has been trained on 23 languages (English, French, Spanish, Italian, German, Portuguese, Japanese, Korean, Arabic, Simplified Chinese, Russian, Polish, Turkish, Vietnamese, Dutch, Czech, Indonesian, Ukrainian, Romanian, Greek, Hindi, Hebrew, and Persian).

Due to this multilingual training, it excels in multilingual tasks.

Command R Plus 08-2024 supports a context length of 128K.

## Special thanks

🙏 Special thanks to [Georgi Gerganov](https://github.com/ggerganov) and the whole team working on [llama.cpp](https://github.com/ggerganov/llama.cpp/) for making all of this possible.

## Disclaimers

LM Studio is not the creator, originator, or owner of any Model featured in the Community Model Program. Each Community Model is created and provided by third parties. LM Studio does not endorse, support, represent or guarantee the completeness, truthfulness, accuracy, or reliability of any Community Model. You understand that Community Models can produce content that might be offensive, harmful, inaccurate or otherwise inappropriate, or deceptive. Each Community Model is the sole responsibility of the person or entity who originated such Model. LM Studio may not monitor or control the Community Models and cannot, and does not, take responsibility for any such Model. LM Studio disclaims all warranties or guarantees about the accuracy, reliability or benefits of the Community Models. LM Studio further disclaims any warranty that the Community Model will meet your requirements, be secure, uninterrupted or available at any time or location, or error-free, viruses-free, or that any errors will be corrected, or otherwise. You will be solely responsible for any damage resulting from your use of or access to the Community Models, your downloading of any Community Model, or use of any other Community Model provided by or through LM Studio.

### Terms of Use (directly from Cohere For AI)

We hope that the release of this model will make community-based research efforts more accessible, by releasing the weights of a highly performant 35 billion parameter model to researchers all over the world. This model is governed by a [CC-BY-NC](https://cohere.com/c4ai-cc-by-nc-license) License with an acceptable use addendum, and also requires adhering to [C4AI's Acceptable Use Policy](https://docs.cohere.com/docs/c4ai-acceptable-use-policy).

|

Stanley4848/Biotradeinvestment | Stanley4848 | "2025-03-19T15:15:49Z" | 0 | 0 | adapter-transformers | [

"adapter-transformers",

"finance",

"translation",

"pt",

"dataset:Congliu/Chinese-DeepSeek-R1-Distill-data-110k",

"base_model:Qwen/QwQ-32B",

"base_model:adapter:Qwen/QwQ-32B",

"license:apache-2.0",

"region:us"

] | translation | "2025-03-19T15:13:56Z" | ---

license: apache-2.0

datasets:

- Congliu/Chinese-DeepSeek-R1-Distill-data-110k

language:

- pt

metrics:

- accuracy

base_model:

- Qwen/QwQ-32B

new_version: deepseek-ai/DeepSeek-R1

pipeline_tag: translation

library_name: adapter-transformers

tags:

- finance

--- |

TEEN-D/Driver-Drowsiness-Detection | TEEN-D | "2025-03-31T01:11:58Z" | 0 | 0 | null | [

"en",

"license:mit",

"region:us"

] | null | "2025-03-31T00:25:06Z" | ---

license: mit

language:

- en

---

# Model Cards: Driver Drowsiness Detection System

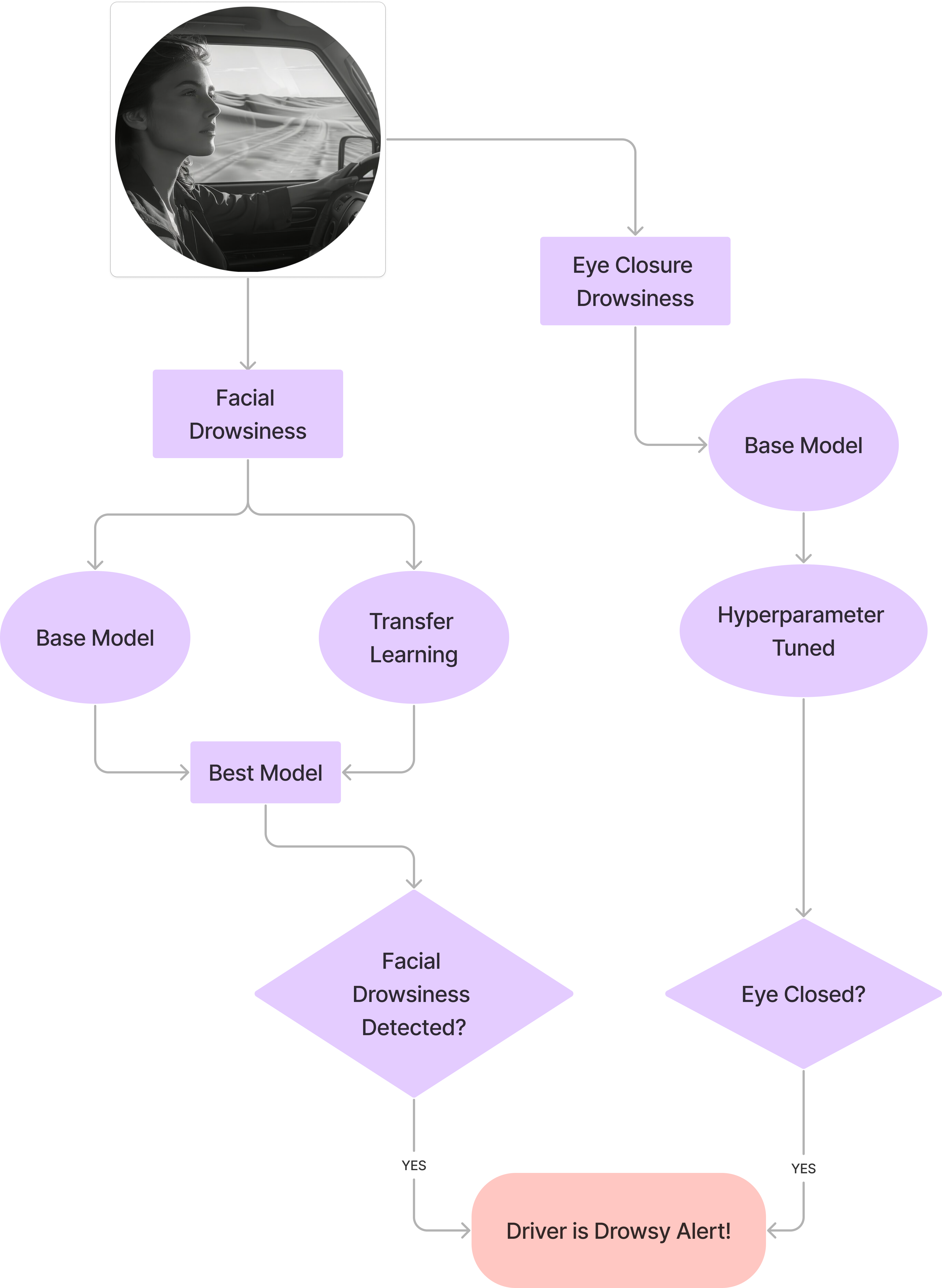

This repository contains models developed for the Driver Drowsiness Detection System project. The goal is to enhance vehicular safety by identifying signs of driver fatigue and drowsiness in real-time using deep learning. The system employs two main approaches:

1. **Facial Features Drowsiness Detection (Dataset 1):** Analyzes overall facial images for signs of drowsiness (e.g., yawning, general expression).

2. **Eye Closure Drowsiness Detection (Dataset 2):** Specifically focuses on detecting whether the driver's eyes are open or closed.

The report suggests combining these approaches for a more robust system, potentially using MobileNetV2 for facial features and the tuned CNN for eye closure.

---

---

## Model Card: Facial Drowsiness Detection - Base CNN

* **Model File:** `trained_model_weights_BASE_DATASET1.pth`

### Model Details

* **Description:** A custom Convolutional Neural Network (CNN) trained from scratch to classify facial images as 'Drowsy' or 'Natural' (alert). This is the initial baseline model for Dataset 1.

* **Architecture:** `Model_OurArchitecture` (4 Conv2D layers: 1->32, 32->64, 64->128, 128->128; MaxPool2D after first 3 Conv layers; 1 FC layer: 128*6*6 -> 256; Output FC layer: 256 -> 1; ReLU activations; Single Dropout(0.5) layer before final output).

* **Input:** 48x48 Grayscale images.

* **Output:** Single logit predicting drowsiness (Binary Classification).

* **Framework:** PyTorch.

### Intended Use

* Intended for detecting drowsiness based on static facial images. Serves as a baseline for comparison.

* **Not recommended for deployment due to significant overfitting.**

### Training Data

* **Dataset:** Drowsy Detection Dataset ([Kaggle Link](https://www.kaggle.com/datasets/yasharjebraeily/drowsy-detection-dataset))

* **Classes:** DROWSY, NATURAL.

* **Size:** 5,859 training images.

* **Preprocessing:** Resize (48x48), Grayscale, ToTensor, Normalize (calculated mean/std from dataset), RandomHorizontalFlip.

### Evaluation Data

* **Dataset:** Test split of the Drowsy Detection Dataset.

* **Size:** 1,483 testing images.

* **Preprocessing:** Resize (48x48), Grayscale, ToTensor, Normalize (same as training).

### Quantitative Analyses

* **Training Performance:** Accuracy: 99.51%, Loss: 0.0148

* **Evaluation Performance:** Accuracy: 86.24%, Loss: 0.9170

* **Metrics:** Accuracy, Binary Cross-Entropy with Logits Loss.

### Limitations and Ethical Considerations

* **Overfitting:** Shows significant overfitting (large gap between training and testing accuracy). Generalizes poorly to unseen data.

* **Bias:** Performance may vary across different demographics, lighting conditions, camera angles, and accessories (e.g., glasses) not equally represented in the dataset.

* **Misuse Potential:** Could be used for surveillance, though not designed for it. False negatives (missing drowsiness) could lead to accidents; false positives (incorrect alerts) could be annoying or lead to user distrust.

---

## Model Card: Facial Drowsiness Detection - Base CNN + Dropout

* **Model File:** `trained_model_weights_BASE_DROPOUT_DATASET1.pth`

### Model Details

* **Description:** The same custom CNN architecture as the base model (`Model_OurArchitecture`) but explicitly trained *with* the described dropout layer active to mitigate overfitting observed in the baseline.

* **Architecture:** `Model_OurArchitecture` (As described above, including the Dropout(0.5) layer).

* **Input:** 48x48 Grayscale images.

* **Output:** Single logit predicting drowsiness.

* **Framework:** PyTorch.

### Intended Use

* Intended for detecting drowsiness based on static facial images. Shows improvement over the baseline by using dropout for regularization.

* Better generalization than the baseline, but transfer learning models performed better.

### Training Data

* Same as the Base CNN model (Dataset 1).

### Evaluation Data

* Same as the Base CNN model (Dataset 1).

### Quantitative Analyses

* **Training Performance:** Accuracy: 96.36%, Loss: 0.0960

* **Evaluation Performance:** Accuracy: 90.42%, Loss: 0.1969

* **Metrics:** Accuracy, BCEWithLogitsLoss.

### Limitations and Ethical Considerations

* **Overfitting Reduced:** Overfitting is reduced compared to the baseline, but a gap still exists.

* **Bias:** Same potential biases as the base model regarding demographics, lighting, etc.

* **Misuse Potential:** Same as the base model.

---

## Model Card: Facial Drowsiness Detection - Base CNN + Dropout + Early Stopping

* **Model File:** `trained_model_weights_BASE_DROPOUT_EARLYSTOPPING_DATASET1.pth`

### Model Details

* **Description:** The same custom CNN architecture (`Model_OurArchitecture` with dropout) trained using Dropout and Early Stopping (patience=5) to further prevent overfitting. Training stopped at epoch 9 out of 25 planned.

* **Architecture:** `Model_OurArchitecture` (As described above, including the Dropout(0.5) layer).

* **Input:** 48x48 Grayscale images.

* **Output:** Single logit predicting drowsiness.

* **Framework:** PyTorch.

### Intended Use

* Intended for detecting drowsiness based on static facial images. Represents the best-performing version of the custom CNN architecture due to regularization techniques.

* Performance is closer between training and testing compared to previous versions.

### Training Data

* Same as the Base CNN model (Dataset 1).

### Evaluation Data

* Same as the Base CNN model (Dataset 1).

### Quantitative Analyses

* **Best Training Performance (at Epoch 9):** Accuracy: 97.87%, Loss: 0.0617

* **Evaluation Performance:** Accuracy: 91.64%, Loss: 0.1899

* **Metrics:** Accuracy, BCEWithLogitsLoss.

### Limitations and Ethical Considerations

* **Generalization:** While improved, may not perform as well as the best transfer learning models on diverse unseen data.

* **Bias:** Same potential biases as the base model.

* **Misuse Potential:** Same as the base model.

---

## Model Card: Facial Drowsiness Detection - Fine-tuned VGG16

* **Model File:** `trained_model_weights_VGG16_DATASET1.pth`

### Model Details

* **Description:** A VGG16 model, pre-trained on ImageNet, fine-tuned for binary classification of facial images ('Drowsy' vs 'Natural') on Dataset 1.

* **Architecture:** Standard VGG16 architecture with the final fully connected layer replaced by a single output unit for binary classification.

* **Input:** 224x224 RGB images (Normalized using ImageNet stats).

* **Output:** Single logit predicting drowsiness.

* **Framework:** PyTorch.

### Intended Use

* Detecting drowsiness from facial images. Leverages transfer learning for potentially better feature extraction and generalization compared to the custom CNN. Good performance on the test set.

### Training Data

* **Dataset:** Drowsy Detection Dataset ([Kaggle Link](https://www.kaggle.com/datasets/yasharjebraeily/drowsy-detection-dataset))

* **Classes:** DROWSY, NATURAL.

* **Size:** 5,859 training images.

* **Preprocessing:** Resize (224x224), RandomHorizontalFlip, ToTensor, Normalize (ImageNet mean/std).

### Evaluation Data

* **Dataset:** Test split of the Drowsy Detection Dataset.

* **Size:** 1,483 testing images.

* **Preprocessing:** Resize (224x224), ToTensor, Normalize (ImageNet mean/std).

### Quantitative Analyses

* **Training Performance:** Accuracy: 96.69%, Loss: 0.1067

* **Evaluation Performance:** Accuracy: 97.51%, Loss: 0.1033

* **Metrics:** Accuracy, BCEWithLogitsLoss.

### Limitations and Ethical Considerations

* **Model Size:** VGG16 is relatively large, potentially impacting inference speed and deployment on resource-constrained devices.

* **Bias:** Potential biases inherited from ImageNet pre-training and the fine-tuning dataset (demographics, lighting, etc.).

* **Misuse Potential:** Same as the base model.

---

## Model Card: Facial Drowsiness Detection - Fine-tuned ResNet18

* **Model File:** `trained_model_weights_RESNET18_DATASET1.pth`

### Model Details

* **Description:** A ResNet18 model, pre-trained on ImageNet, fine-tuned for binary classification of facial images ('Drowsy' vs 'Natural') on Dataset 1.

* **Architecture:** Standard ResNet18 architecture with the final fully connected layer replaced by a single output unit.

* **Input:** 224x224 RGB images (Normalized using ImageNet stats).

* **Output:** Single logit predicting drowsiness.

* **Framework:** PyTorch.

### Intended Use

* Detecting drowsiness from facial images using transfer learning. Offers a balance between performance and model size compared to VGG16.

### Training Data

* Same as the Fine-tuned VGG16 model (Dataset 1, 224x224 RGB, ImageNet Norm).

### Evaluation Data

* Same as the Fine-tuned VGG16 model (Dataset 1 Test Set).

### Quantitative Analyses

* **Training Performance:** Accuracy: 99.42%, Loss: 0.0197

* **Evaluation Performance:** Accuracy: 95.28%, Loss: 0.1118

* **Metrics:** Accuracy, BCEWithLogitsLoss.

### Limitations and Ethical Considerations

* **Overfitting:** Shows a slightly larger gap between training and test performance compared to VGG16/MobileNetV2 on this task, indicating some overfitting.

* **Bias:** Potential biases from ImageNet and the fine-tuning dataset.

* **Misuse Potential:** Same as the base model.

---

## Model Card: Facial Drowsiness Detection - Fine-tuned MobileNetV2 (**Recommended for Facial Features**)

* **Model File:** `trained_model_weights_MOBILENETV2_DATASET1.pth`

### Model Details

* **Description:** A MobileNetV2 model, pre-trained on ImageNet, fine-tuned for binary classification of facial images ('Drowsy' vs 'Natural') on Dataset 1. Achieved the highest test accuracy among models tested on Dataset 1.

* **Architecture:** Standard MobileNetV2 architecture with the final classifier replaced for a single output unit. Designed for efficiency.

* **Input:** 224x224 RGB images (Normalized using ImageNet stats).

* **Output:** Single logit predicting drowsiness.

* **Framework:** PyTorch.

### Intended Use

* **Recommended model for facial drowsiness detection.** Offers high accuracy and efficiency, suitable for real-time applications.

### Training Data

* Same as the Fine-tuned VGG16 model (Dataset 1, 224x224 RGB, ImageNet Norm).

### Evaluation Data

* Same as the Fine-tuned VGG16 model (Dataset 1 Test Set).

### Quantitative Analyses

* **Training Performance:** Accuracy: 99.61%, Loss: 0.0175

* **Evaluation Performance:** Accuracy: 98.99%, Loss: 0.0317

* **Metrics:** Accuracy, BCEWithLogitsLoss.

### Limitations and Ethical Considerations

* **Efficiency vs. Complexity:** While efficient, it might be less robust to extreme variations than larger models in some scenarios.

* **Bias:** Potential biases from ImageNet and the fine-tuning dataset.

* **Misuse Potential:** Same as the base model. Performance under challenging real-world conditions (e.g., poor lighting, partial occlusion) should be carefully validated.

---

## Model Card: Eye Closure Detection - Tuned CNN (**Recommended for Eye Closure**)

* **Model File:** `trained_model_weights_FINAL_DATASET2.pth`

### Model Details

* **Description:** A custom CNN (`Model_NewArchitecture`) trained to detect whether eyes are 'Open' or 'Closed'. This model is the result of hyperparameter tuning (Adam optimizer, Dropout rate 0.5) on the baseline architecture for Dataset 2.

* **Architecture:** `Model_NewArchitecture` (4 Conv2D layers: 3->64, 64->128, 128->256, 256->256; MaxPool2D after first 3 Conv layers; 1 FC layer: 256*28*28 -> 512; Output FC layer: 512 -> 1; ReLU activations; Dropout(0.5) before final output).

* **Input:** 224x224 Grayscale images (potentially replicated to 3 channels based on report's transform description, normalized using dataset stats).

* **Output:** Single logit predicting eye closure (Binary Classification).

* **Framework:** PyTorch.

### Intended Use

* **Recommended model for eye closure detection.** Specifically designed to classify eye state, intended to be used alongside the facial feature model for a more robust drowsiness detection system.

### Training Data

* **Dataset:** Openned Closed Eyes Dataset ([Kaggle Link](https://www.kaggle.com/datasets/hazemfahmy/openned-closed-eyes/data)) - UnityEyes synthetic data.

* **Classes:** Opened, Closed.

* **Size:** 5,807 training images.

* **Preprocessing:** Resize (224x224), Grayscale (num_output_channels=3), Augmentations (RandomHorizontalFlip, RandomRotation(10), ColorJitter), ToTensor, Normalize (calculated mean/std from dataset).

### Evaluation Data

* **Dataset:** Test split of the Openned Closed Eyes Dataset.

* **Size:** 4,232 testing images.

* **Preprocessing:** Resize (224x224), Grayscale (num_output_channels=3), ToTensor, Normalize (same as training).

### Quantitative Analyses (Hyperparameter Tuned Model: Adam, Dropout 0.5)

* **Final Training Performance:** Accuracy: 95.52%, Loss: 0.1303 (from table pg 23)

* **Evaluation Performance:** Accuracy: 96.79%, Loss: 0.0935 (from table pg 23)

* **Metrics:** Accuracy, BCEWithLogitsLoss.

### Limitations and Ethical Considerations

* **Synthetic Data:** Trained primarily on synthetic eye images (UnityEyes). Performance on diverse real-world eyes (different ethnicities, lighting, glasses, occlusions, extreme angles) needs validation. Domain gap might exist.

* **Bias:** Potential biases related to the distribution of eye types/states in the synthetic dataset.

* **Misuse Potential:** Could be part of a surveillance system monitoring eye state. False negatives/positives have safety implications as described for other models.

--- |

philip-hightech/1302eb45-d150-47da-89be-1cff18d4ac1d | philip-hightech | "2025-01-13T18:16:51Z" | 13 | 0 | peft | [

"peft",

"safetensors",

"mistral",

"axolotl",

"generated_from_trainer",

"base_model:unsloth/zephyr-sft",

"base_model:adapter:unsloth/zephyr-sft",

"license:apache-2.0",

"region:us"

] | null | "2025-01-13T17:15:07Z" | ---

library_name: peft

license: apache-2.0

base_model: unsloth/zephyr-sft

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 1302eb45-d150-47da-89be-1cff18d4ac1d

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: unsloth/zephyr-sft

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:

- data_files:

- 3f789bd3616a633b_train_data.json

ds_type: json

format: custom

path: /workspace/input_data/3f789bd3616a633b_train_data.json

type:

field_instruction: inputs

field_output: targets

format: '{instruction}'

no_input_format: '{instruction}'

system_format: '{system}'

system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: philip-hightech/1302eb45-d150-47da-89be-1cff18d4ac1d

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 10

micro_batch_size: 2

mlflow_experiment_name: /tmp/3f789bd3616a633b_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: fa880946-1eaf-434a-b678-94c8300d042a

wandb_project: Gradients-On-Demand

wandb_run: your_name

wandb_runid: fa880946-1eaf-434a-b678-94c8300d042a

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>

# 1302eb45-d150-47da-89be-1cff18d4ac1d

This model is a fine-tuned version of [unsloth/zephyr-sft](https://huggingface.co/unsloth/zephyr-sft) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: nan

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: Use OptimizerNames.ADAMW_BNB with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 10