| modelId (string, 5 to 138 chars) | author (string, 2 to 42 chars) | last_modified (date, 2020-02-15 11:33:14 to 2025-04-12 18:26:42) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 422 classes) | tags (list, 1 to 4.05k items) | pipeline_tag (string, 54 classes) | createdAt (date, 2022-03-02 23:29:04 to 2025-04-12 18:26:09) | card (string, 11 to 1.01M chars) |

|---|---|---|---|---|---|---|---|---|---|

not-lain/Gemma-2b-Peft-finetuning | not-lain | "2024-03-22T05:08:50Z" | 12 | 0 | peft | [

"peft",

"tensorboard",

"safetensors",

"trl",

"sft",

"generated_from_trainer",

"base_model:google/gemma-2b",

"base_model:adapter:google/gemma-2b",

"license:other",

"region:us"

] | null | "2024-03-22T05:01:03Z" | ---

license: other

library_name: peft

tags:

- trl

- sft

- generated_from_trainer

base_model: google/gemma-2b

model-index:

- name: outputs

  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# outputs

This model is a fine-tuned version of [google/gemma-2b](https://huggingface.co/google/gemma-2b) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2

- training_steps: 10

- mixed_precision_training: Native AMP
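As a rough illustration of how the scheduler settings above interact, the sketch below recomputes the per-step learning rate. It assumes `lr_scheduler_type: linear` follows the formula of Transformers' `get_linear_schedule_with_warmup` (linear warmup from 0, then linear decay to 0), that steps are 0-indexed optimizer steps, and that training ran on a single device; `linear_warmup_lr` is a hypothetical helper, not part of any library.

```python
def linear_warmup_lr(step, base_lr=2e-4, warmup_steps=2, total_steps=10):
    """Learning rate for 0-indexed optimizer step `step`: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        # Warmup phase: ramp linearly from 0 toward base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay phase: fall linearly so the rate reaches 0 at total_steps.
    remaining = total_steps - step
    return base_lr * max(0.0, remaining / max(1, total_steps - warmup_steps))


# Effective batch size = per-device batch x gradient accumulation x devices.
train_batch_size = 1
gradient_accumulation_steps = 4
num_devices = 1  # assumption: a single GPU
total_train_batch_size = train_batch_size * gradient_accumulation_steps * num_devices

# Learning rate for each of the 10 training steps.
schedule = [linear_warmup_lr(s) for s in range(10)]
```

Under these assumptions the rate peaks at 0.0002 on step 2 and decays to 2.5e-05 by the last step, and the effective batch size matches the reported `total_train_batch_size` of 4.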

### Training results

### Framework versions

- PEFT 0.10.0

- Transformers 4.38.2

- Pytorch 2.2.1+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2 |

miao1234/furniture_use_data_finetuning | miao1234 | "2023-10-30T10:35:06Z" | 33 | 0 | transformers | [

"transformers",

"pytorch",

"detr",

"object-detection",

"generated_from_trainer",

"base_model:facebook/detr-resnet-50",

"base_model:finetune:facebook/detr-resnet-50",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | object-detection | "2023-10-29T19:33:33Z" | ---

license: apache-2.0

base_model: facebook/detr-resnet-50

tags:

- generated_from_trainer

model-index:

- name: furniture_use_data_finetuning

  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# furniture_use_data_finetuning

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 100

### Training results

### Framework versions

- Transformers 4.34.1

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1

|

ArchiveAI/Thespis-Balanced-7b-v1 | ArchiveAI | "2024-03-15T06:38:20Z" | 3 | 0 | transformers | [

"transformers",

"pytorch",

"mistral",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | "2024-03-15T06:38:20Z" | ---

license: cc-by-nc-4.0

---

IT'S PRETTY COOL! If you need a README, look at one of the other models I've posted; the prompt format is the same. I'll add something better after I've slept. |

darkc0de/BuddyGlassUncensored2025.4 | darkc0de | "2025-03-02T15:41:30Z" | 0 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"mergekit",

"merge",

"conversational",

"arxiv:2311.03099",

"base_model:TheDrummer/Cydonia-24B-v2",

"base_model:merge:TheDrummer/Cydonia-24B-v2",

"base_model:cognitivecomputations/Dolphin3.0-Mistral-24B",

"base_model:merge:cognitivecomputations/Dolphin3.0-Mistral-24B",

"base_model:huihui-ai/Arcee-Blitz-abliterated",

"base_model:merge:huihui-ai/Arcee-Blitz-abliterated",

"base_model:huihui-ai/Mistral-Small-24B-Instruct-2501-abliterated",

"base_model:merge:huihui-ai/Mistral-Small-24B-Instruct-2501-abliterated",

"base_model:mistralai/Mistral-Small-24B-Instruct-2501",

"base_model:merge:mistralai/Mistral-Small-24B-Instruct-2501",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | "2025-03-02T15:24:39Z" | ---

base_model:

- mistralai/Mistral-Small-24B-Instruct-2501

- huihui-ai/Mistral-Small-24B-Instruct-2501-abliterated

- TheDrummer/Cydonia-24B-v2

- huihui-ai/Arcee-Blitz-abliterated

- cognitivecomputations/Dolphin3.0-Mistral-24B

library_name: transformers

tags:

- mergekit

- merge

---

# merge

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged with the [DARE TIES](https://arxiv.org/abs/2311.03099) merge method, using [mistralai/Mistral-Small-24B-Instruct-2501](https://huggingface.co/mistralai/Mistral-Small-24B-Instruct-2501) as the base model.

### Models Merged

The following models were included in the merge:

* [huihui-ai/Mistral-Small-24B-Instruct-2501-abliterated](https://huggingface.co/huihui-ai/Mistral-Small-24B-Instruct-2501-abliterated)

* [TheDrummer/Cydonia-24B-v2](https://huggingface.co/TheDrummer/Cydonia-24B-v2)

* [huihui-ai/Arcee-Blitz-abliterated](https://huggingface.co/huihui-ai/Arcee-Blitz-abliterated)

* [cognitivecomputations/Dolphin3.0-Mistral-24B](https://huggingface.co/cognitivecomputations/Dolphin3.0-Mistral-24B)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: cognitivecomputations/Dolphin3.0-Mistral-24B
    parameters:
      density: 0.5
      weight: 0.5
  - model: huihui-ai/Mistral-Small-24B-Instruct-2501-abliterated
    parameters:
      density: 0.5
      weight: 0.5
  - model: TheDrummer/Cydonia-24B-v2
    parameters:
      density: 0.5
      weight: 0.5
  - model: huihui-ai/Arcee-Blitz-abliterated
    parameters:
      density: 0.5
      weight: 0.5
merge_method: dare_ties
base_model: mistralai/Mistral-Small-24B-Instruct-2501
parameters:
  normalize: false
  int8_mask: true
dtype: float16
```
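For intuition about what `density` and `weight` control, here is a simplified NumPy sketch of DARE-TIES applied to a single parameter tensor. It is not mergekit's implementation, and `dare_ties_merge` is a hypothetical function. Each fine-tuned model's task vector (its difference from the base) is randomly sparsified to the given density and rescaled by `1/density` (the DARE step); then a sign is elected per entry from the summed weighted deltas, and only deltas that agree with it are kept (the TIES step). With `normalize: false`, the sketch assumes the agreeing deltas are summed rather than averaged.

```python
import numpy as np


def dare_ties_merge(base, finetuned, weights, density, normalize=False, seed=0):
    """Simplified DARE-TIES merge of one tensor. Assumes 0 < density <= 1."""
    rng = np.random.default_rng(seed)
    base = np.asarray(base, dtype=float)
    # Task vectors: what each fine-tune changed relative to the base.
    deltas = np.stack([np.asarray(ft, dtype=float) - base for ft in finetuned])
    # DARE: keep each entry with probability `density`, rescale survivors by 1/density.
    keep = rng.random(deltas.shape) < density
    deltas = np.where(keep, deltas / density, 0.0)
    # Per-model weights, broadcast over the tensor dimensions.
    w = np.asarray(weights, dtype=float).reshape((-1,) + (1,) * base.ndim)
    weighted = deltas * w
    # TIES sign election: the sign of the summed weighted deltas wins each entry.
    elected = np.sign(weighted.sum(axis=0))
    agree = np.sign(weighted) == elected
    merged_delta = (weighted * agree).sum(axis=0)
    if normalize:
        # Optionally divide by the total weight of the agreeing models.
        divisor = (w * agree).sum(axis=0)
        merged_delta = merged_delta / np.where(divisor == 0, 1.0, divisor)
    return base + merged_delta


# Toy example: two "models" that disagree on entries 1 and 2.
base = np.zeros(4)
fts = [np.array([1.0, -1.0, 2.0, 0.5]), np.array([1.0, 1.0, -2.0, 0.5])]
merged = dare_ties_merge(base, fts, weights=[0.5, 0.5], density=1.0)
```

With `density=1.0` nothing is dropped, so the toy example isolates the TIES step: the entries where the two weighted deltas cancel exactly get an elected sign of 0 and are dropped, giving `[1.0, 0.0, 0.0, 0.5]`. At `density=0.5`, as in the config, about half of each task vector is zeroed before the vote.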

|

ibrocalculus/example_model2 | ibrocalculus | "2025-02-25T09:02:07Z" | 0 | 0 | null | [

"region:us"

] | null | "2025-02-25T08:47:46Z" | # Example Model2

###### This is a second sample model I created for practice purposes.

---

license: mit

---

|

devcharmander/toastmaster | devcharmander | "2023-12-01T11:10:54Z" | 0 | 0 | null | [

"coreml",

"region:us"

] | null | "2023-12-01T10:51:39Z" | ## Whisper model files in custom ggml format

The [original Whisper PyTorch models provided by OpenAI](https://github.com/openai/whisper/blob/main/whisper/__init__.py#L17-L27) are converted to a custom `ggml` format so that they can be loaded from C/C++.

Conversion is performed using the [convert-pt-to-ggml.py](convert-pt-to-ggml.py) script.

You can either obtain the original models and generate the `ggml` files yourself using the conversion script, or use the [download-ggml-model.sh](download-ggml-model.sh) script to download the already converted models.

Currently, the converted models are hosted in the following locations:

- https://huggingface.co/ggerganov/whisper.cpp

- https://ggml.ggerganov.com

Sample download:

```

$ ./download-ggml-model.sh base.en

Downloading ggml model base.en ...

models/ggml-base.en.bin 100%[=============================================>] 141.11M 5.41MB/s in 22s

Done! Model 'base.en' saved in 'models/ggml-base.en.bin'

You can now use it like this:

$ ./main -m models/ggml-base.en.bin -f samples/jfk.wav

```

To convert the files yourself, use the `convert-pt-to-ggml.py` script. In the example below, the original PyTorch files are assumed to have been downloaded into `~/.cache/whisper`. Change `~/path/to/repo/whisper/` to the location of your copy of the Whisper source:

```

mkdir models/whisper-medium

python models/convert-pt-to-ggml.py ~/.cache/whisper/medium.pt ~/path/to/repo/whisper/ ./models/whisper-medium

mv ./models/whisper-medium/ggml-model.bin models/ggml-medium.bin

rmdir models/whisper-medium

```

A third option to obtain the model files is to download them from Hugging Face:

https://huggingface.co/ggerganov/whisper.cpp/tree/main

## Available models

| Model | Disk | SHA |
| --- | --- | --- |
| tiny | 75 MiB | `bd577a113a864445d4c299885e0cb97d4ba92b5f` |
| tiny.en | 75 MiB | `c78c86eb1a8faa21b369bcd33207cc90d64ae9df` |
| base | 142 MiB | `465707469ff3a37a2b9b8d8f89f2f99de7299dac` |
| base.en | 142 MiB | `137c40403d78fd54d454da0f9bd998f78703390c` |
| small | 466 MiB | `55356645c2b361a969dfd0ef2c5a50d530afd8d5` |
| small.en | 466 MiB | `db8a495a91d927739e50b3fc1cc4c6b8f6c2d022` |
| medium | 1.5 GiB | `fd9727b6e1217c2f614f9b698455c4ffd82463b4` |
| medium.en | 1.5 GiB | `8c30f0e44ce9560643ebd10bbe50cd20eafd3723` |
| large-v1 | 2.9 GiB | `b1caaf735c4cc1429223d5a74f0f4d0b9b59a299` |
| large-v2 | 2.9 GiB | `0f4c8e34f21cf1a914c59d8b3ce882345ad349d6` |
| large-v3 | 2.9 GiB | `ad82bf6a9043ceed055076d0fd39f5f186ff8062` |
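To check a downloaded file against the table, a minimal Python sketch follows. It assumes the 40-hex-digit values are SHA-1 digests (inferred from their length) and that files are saved as `models/ggml-<model>.bin`, as in the download example; `sha1_of_file`, `verify_model`, and `EXPECTED_SHA1` are hypothetical names.

```python
import hashlib
from pathlib import Path

# A few checksums copied from the "Available models" table; extend as needed.
EXPECTED_SHA1 = {
    "tiny.en": "c78c86eb1a8faa21b369bcd33207cc90d64ae9df",
    "base.en": "137c40403d78fd54d454da0f9bd998f78703390c",
    "medium": "fd9727b6e1217c2f614f9b698455c4ffd82463b4",
}


def sha1_of_file(path, chunk_size=1 << 20):
    """Hash a file in fixed-size chunks so multi-GiB models never sit fully in memory."""
    digest = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(name, models_dir="models"):
    """Return True if <models_dir>/ggml-<name>.bin matches its expected checksum."""
    path = Path(models_dir) / f"ggml-{name}.bin"
    return sha1_of_file(path) == EXPECTED_SHA1[name]
```

For example, after `./download-ggml-model.sh base.en`, calling `verify_model("base.en")` from the repository root should return `True` if the download is intact.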

## Model files for testing purposes

The model files prefixed with `for-tests-` are empty (i.e., they contain no weights) and are used by the CI for testing purposes. They are included directly in this repository for convenience, and the GitHub Actions CI uses them to run various sanitizer tests.

## Fine-tuned models

There are community efforts to create fine-tuned Whisper models using extra training data. For example, this [blog post](https://huggingface.co/blog/fine-tune-whisper) describes a method for fine-tuning with the Hugging Face (HF) Transformers implementation of Whisper. The resulting models are in a slightly different format from the original OpenAI format. To convert the HF models, you can use the [convert-h5-to-ggml.py](convert-h5-to-ggml.py) script like this:

```bash

git clone https://github.com/openai/whisper

git clone https://github.com/ggerganov/whisper.cpp

# clone HF fine-tuned model (this is just an example)

git clone https://huggingface.co/openai/whisper-medium

# convert the model to ggml

python3 ./whisper.cpp/models/convert-h5-to-ggml.py ./whisper-medium/ ./whisper .

```

## Distilled models

Initial support for https://huggingface.co/distil-whisper is available.

Currently, the chunk-based transcription strategy is not implemented, so transcription quality may be suboptimal when using the distilled models with `whisper.cpp`.

```bash

# clone OpenAI whisper and whisper.cpp

git clone https://github.com/openai/whisper

git clone https://github.com/ggerganov/whisper.cpp

# get the models

cd whisper.cpp/models

git clone https://huggingface.co/distil-whisper/distil-medium.en

git clone https://huggingface.co/distil-whisper/distil-large-v2

# convert to ggml

python3 ./convert-h5-to-ggml.py ./distil-medium.en/ ../../whisper .

mv ggml-model.bin ggml-medium.en-distil.bin

python3 ./convert-h5-to-ggml.py ./distil-large-v2/ ../../whisper .

mv ggml-model.bin ggml-large-v2-distil.bin

```

|

sd-concepts-library/jojo-bizzare-adventure-manga-lineart | sd-concepts-library | "2022-09-21T15:03:39Z" | 0 | 1 | null | [

"license:mit",

"region:us"

] | null | "2022-09-21T15:03:33Z" | ---

license: mit

---

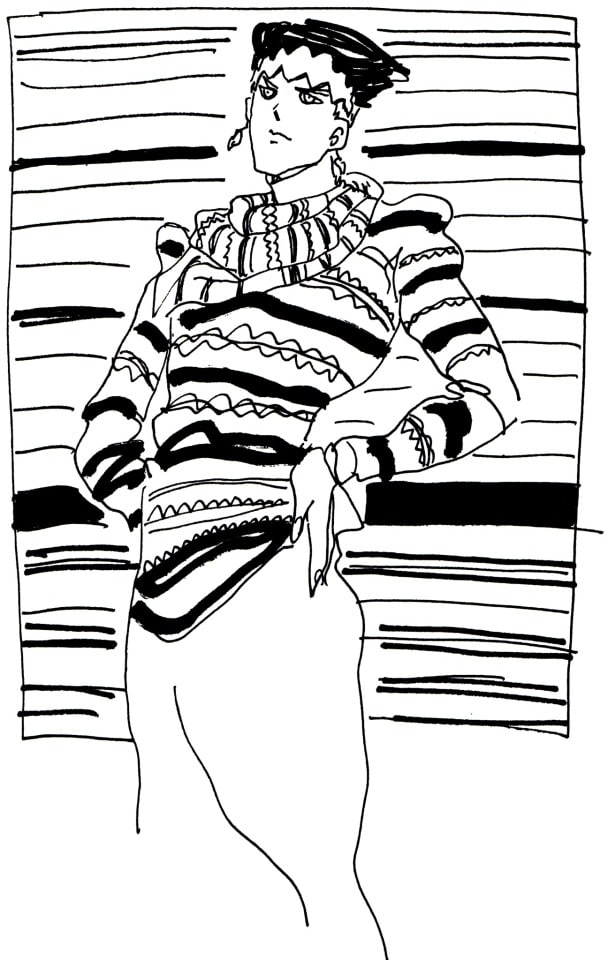

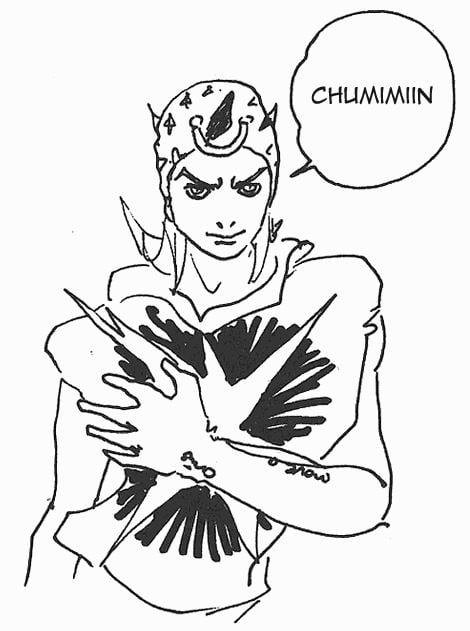

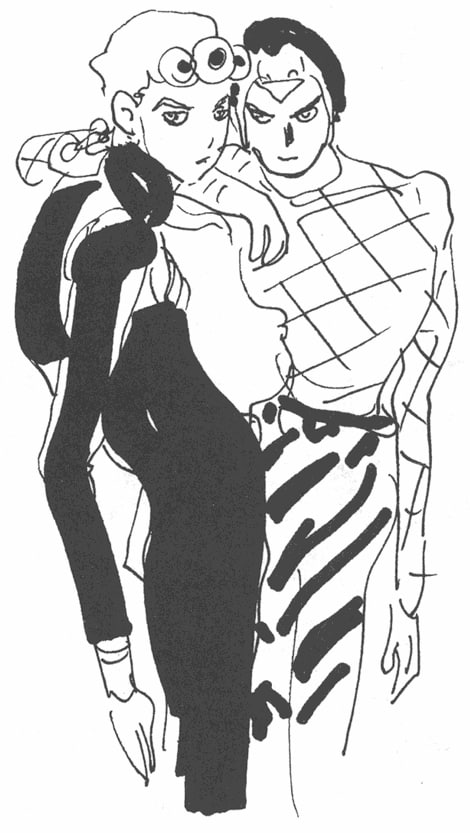

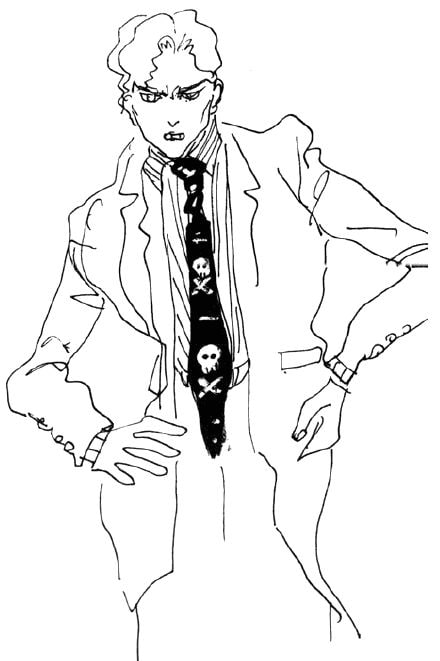

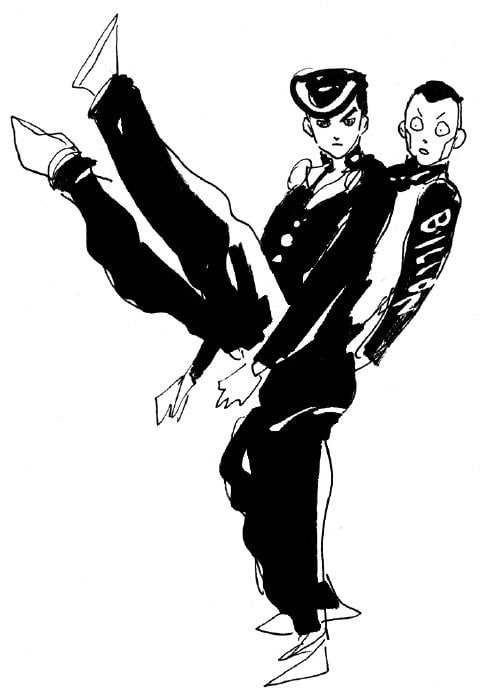

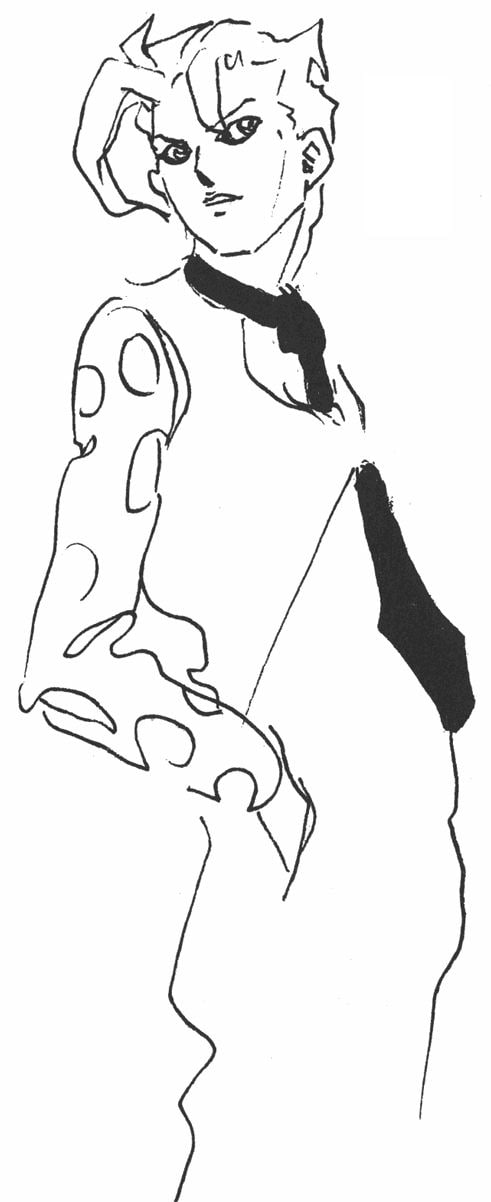

### JoJo's Bizarre Adventure manga lineart on Stable Diffusion

This is the `<JoJo_lineart>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

havinash-ai/148d2316-ea3d-4ae8-b42e-b2f01ebe44e2 | havinash-ai | "2025-01-14T12:44:47Z" | 9 | 0 | peft | [

"peft",

"safetensors",

"gemma",

"axolotl",

"generated_from_trainer",

"base_model:unsloth/gemma-7b-it",

"base_model:adapter:unsloth/gemma-7b-it",

"license:apache-2.0",

"region:us"

] | null | "2025-01-14T12:43:14Z" | ---

library_name: peft

license: apache-2.0

base_model: unsloth/gemma-7b-it

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 148d2316-ea3d-4ae8-b42e-b2f01ebe44e2

  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml

adapter: lora

base_model: unsloth/gemma-7b-it

bf16: auto

chat_template: llama3

dataset_prepared_path: null

datasets:
- data_files:
  - 8bab2020b11caa57_train_data.json
  ds_type: json
  format: custom
  path: /workspace/input_data/8bab2020b11caa57_train_data.json
  type:
    field_input: text
    field_instruction: query
    field_output: response
    format: '{instruction} {input}'
    no_input_format: '{instruction}'
    system_format: '{system}'
    system_prompt: ''

debug: null

deepspeed: null

early_stopping_patience: null

eval_max_new_tokens: 128

eval_table_size: null

evals_per_epoch: 4

flash_attention: false

fp16: null

fsdp: null

fsdp_config: null

gradient_accumulation_steps: 4

gradient_checkpointing: false

group_by_length: false

hub_model_id: havinash-ai/148d2316-ea3d-4ae8-b42e-b2f01ebe44e2

hub_repo: null

hub_strategy: checkpoint

hub_token: null

learning_rate: 0.0002

load_in_4bit: false

load_in_8bit: false

local_rank: null

logging_steps: 1

lora_alpha: 16

lora_dropout: 0.05

lora_fan_in_fan_out: null

lora_model_dir: null

lora_r: 8

lora_target_linear: true

lr_scheduler: cosine

max_steps: 10

micro_batch_size: 2

mlflow_experiment_name: /tmp/8bab2020b11caa57_train_data.json

model_type: AutoModelForCausalLM

num_epochs: 1

optimizer: adamw_bnb_8bit

output_dir: miner_id_24

pad_to_sequence_len: true

resume_from_checkpoint: null

s2_attention: null

sample_packing: false

saves_per_epoch: 4

sequence_len: 512

strict: false

tf32: false

tokenizer_type: AutoTokenizer

train_on_inputs: false

trust_remote_code: true

val_set_size: 0.05

wandb_entity: null

wandb_mode: online

wandb_name: fd546f03-61db-499f-a81c-027d8a071d30

wandb_project: Gradients-On-Demand

wandb_run: your_name

wandb_runid: fd546f03-61db-499f-a81c-027d8a071d30

warmup_steps: 10

weight_decay: 0.0

xformers_attention: null

```

</details><br>

# 148d2316-ea3d-4ae8-b42e-b2f01ebe44e2

This model is a fine-tuned version of [unsloth/gemma-7b-it](https://huggingface.co/unsloth/gemma-7b-it) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8679

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: 8-bit AdamW from bitsandbytes (`OptimizerNames.ADAMW_BNB`) with betas=(0.9,0.999) and epsilon=1e-08; no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 10
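Because `lr_scheduler_warmup_steps` equals `training_steps` here, the run likely never reaches the configured peak learning rate. The sketch below illustrates this. It assumes the cosine schedule follows the formula of Transformers' `get_cosine_schedule_with_warmup` (linear warmup from 0, then half-cosine decay to 0) and that steps are 0-indexed optimizer steps. `cosine_warmup_lr` is a hypothetical helper.

```python
import math


def cosine_warmup_lr(step, base_lr=2e-4, warmup_steps=10, total_steps=10):
    """Learning rate for 0-indexed optimizer step `step`: linear warmup, then half-cosine decay."""
    if step < warmup_steps:
        # Linear warmup from 0 toward base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the post-warmup phase completed, in [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


# With warmup_steps == max_steps == 10, every step falls in the warmup branch.
schedule = [cosine_warmup_lr(s) for s in range(10)]
peak = max(schedule)  # reached on the last step, below the configured 0.0002
```

Under these assumptions, the highest rate actually used is 1.8e-4 on the final step, and the cosine-decay branch is never exercised.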

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 2.215 | 0.0018 | 1 | 2.0531 |
| 1.7352 | 0.0053 | 3 | 1.9725 |
| 1.4294 | 0.0105 | 6 | 1.2547 |
| 0.8574 | 0.0158 | 9 | 0.8679 |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

pankajrajdeo/Bioformer-8L-UMLS-Pubmed_PMC-ST-TCE-Epoch-1 | pankajrajdeo | "2025-02-02T04:49:59Z" | 5 | 0 | sentence-transformers | [

"sentence-transformers",

"safetensors",

"bert",

"sentence-similarity",

"feature-extraction",

"generated_from_trainer",

"dataset_size:6150902",

"loss:MultipleNegativesRankingLoss",

"arxiv:1908.10084",

"arxiv:1705.00652",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | sentence-similarity | "2025-02-02T04:49:47Z" | ---

tags:

- sentence-transformers

- sentence-similarity

- feature-extraction

- generated_from_trainer

- dataset_size:6150902

- loss:MultipleNegativesRankingLoss

widget:

- source_sentence: '[YEAR_RANGE] 2021-2025 [TEXT] Semantic Stroop interference is

modulated by the availability of executive resources: Insights from delta-plot

analyses and cognitive load manipulation'

sentences:

- '[YEAR_RANGE] 2021-2025 [TEXT] We investigated whether, during visual word recognition,

semantic processing is modulated by attentional control mechanisms directed at

matching semantic information with task-relevant goals. In previous research,

we analyzed the semantic Stroop interference as a function of response latency

(delta-plot analyses) and found that this phenomenon mainly occurs in the slowest

responses. Here, we investigated whether this pattern is due to reduced ability

to proactively maintain the task goal in these slowest trials. In two pairs of

experiments, participants completed two semantic Stroop tasks: a classic semantic

Stroop task (Experiment 1A and 2A) and a semantic Stroop task combined with an

n-back task (Experiment 1B and 2B). The two pairs of experiments only differed

in the trial pace, which was slightly faster in Experiments 2A and 2B than in

Experiments 1A and 1B. By taxing the executive control system, the n-back task

was expected to hinder proactive control. Delta-plot analyses of the semantic

Stroop task replicated the enhanced effect in the slowest responses, but only

under sufficient time pressure. Combining the semantic Stroop task with the n-back

task produced a change in the distributional profile of semantic Stroop interference,

which we ascribe to a general difficulty in the use of proactive control. Our

findings suggest that semantic Stroop interference is, to some extent, dependent

on the available executive resources, while also being sensitive to subtle variations

in task conditions. Supplementary Information The online version contains supplementary

material available at 10.3758/s13421-024-01552-5.'

- '[YEAR_RANGE] 2021-2025 [TEXT] Priority question exercises are increasingly used

to frame and set future research, innovation and development agendas. They can

provide an important bridge between the discoveries, data and outputs generated

by researchers, and the information required by policy makers and funders. Microbial

biofilms present huge scientific, societal and economic opportunities and challenges.

In order to identify key priorities that will help to advance the field, here

we review questions from a pool submitted by the international biofilm research

community and from practitioners working across industry, the environment and

medicine. To avoid bias we used computational approaches to group questions and

manage a voting and selection process. The outcome of the exercise is a set of

78 unique questions, categorized in six themes: (i) Biofilm control, disruption,

prevention, management, treatment (13 questions); (ii) Resistance, persistence,

tolerance, role of aggregation, immune interaction, relevance to infection (10

questions); (iii) Model systems, standards, regulatory, policy education, interdisciplinary

approaches (15 questions); (iv) Polymicrobial, interactions, ecology, microbiome,

phage (13 questions); (v) Clinical focus, chronic infection, detection, diagnostics

(13 questions); and (vi) Matrix, lipids, capsule, metabolism, development, physiology,

ecology, evolution environment, microbiome, community engineering (14 questions).

The questions presented are intended to highlight opportunities, stimulate discussion

and provide focus for researchers, funders and policy makers, informing future

research, innovation and development strategy for biofilms and microbial communities.'

- '[YEAR_RANGE] 2021-2025 [TEXT] Polymer compounds have become a popular choice

for the synthesis of novel products and are being used in cementitious mixtures

principally for altering the properties in the fresh state and as repair materials.

These polymers are used in various combinations. Their interaction with cement

is worth studying because its hydration, followed by setting and hardening, is

the primary phenomenon contributing to the strength gain and performance of concrete.

This paper summarizes the effects of different polymers on the hydration of cement

and the properties of concrete/mortar. Studies have established that the incorporation

of polymers as a workability enhancing admixture or for improving strength, durability,

and other properties severely affects the early hydration of cement and reduces

the overall strength gain in most cases. The hydration retarding effect depends

on the charge, architecture, and the amount (wt %) of polymer added. However,

owing to the densification of the interfacial transition zone and formation of

polymer films/bridges between stacks of calcium hydroxide surfaces and air, the

later age properties show beneficial effects such as higher flexural strength,

enhanced compressive strength, and modulus of elasticity, better resistance against

frost, and corrosion of steel reinforcement. Further, it is seen that the hydration

retardation may be mitigated to some extent by the addition of silica fume or

zeolite; using a defoaming agent; curing at high temperatures; and following a

combination of wet, moist, and dry curing regimes. This review is expected to

be helpful to all practicing civil engineers who are the immediate users of these

chemicals and are working to achieve quality concrete construction.'

- source_sentence: '[YEAR_RANGE] 2021-2025 [TEXT] The basic biology of NK cells and

its application in tumor immunotherapy'

sentences:

- '[YEAR_RANGE] 2021-2025 [TEXT] Natural Killer (NK) cells play a crucial role as

effector cells within the tumor immune microenvironment, capable of identifying

and eliminating tumor cells through the expression of diverse activating and inhibitory

receptors that recognize tumor-related ligands. Therefore, harnessing NK cells

for therapeutic purposes represents a significant adjunct to T cell-based tumor

immunotherapy strategies. Presently, NK cell-based tumor immunotherapy strategies

encompass various approaches, including adoptive NK cell therapy, cytokine therapy,

antibody-based NK cell therapy (enhancing ADCC mediated by NK cells, NK cell engagers,

immune checkpoint blockade therapy) and the utilization of nanoparticles and small

molecules to modulate NK cell anti-tumor functionality. This article presents

a comprehensive overview of the latest advances in NK cell-based anti-tumor immunotherapy,

with the aim of offering insights and methodologies for the clinical treatment

of cancer patients.'

- '[YEAR_RANGE] 2021-2025 [TEXT] Background and study aims The optimal number of

needle passes during endoscopic ultrasound-guided fine-needle biopsy (EUS-FNB)

is not yet established. We aimed to perform a per-pass analysis of the diagnostic

accuracy of EUS-FNB of solid pancreatic lesions using a 22G Franseen needle. Patients

and methods Consecutive patients with solid pancreatic lesions referred to 11

Italian centers were prospectively enrolled. Three needle passes were performed;

specimens were collected after each pass and processed individually as standard

histology following macroscopic on-site evaluation (MOSE) by the endoscopist.

The primary endpoint was diagnostic accuracy of each sequential pass. Final diagnosis

was established based on surgical pathology or a clinical course of at least 6

months. Secondary endpoints were specimen adequacy, MOSE reliability, factors

impacting diagnostic accuracy, and procedure-related adverse events. Results A

total of 504 samples from 168 patients were evaluated. Diagnostic accuracy was

90.5% (85.0%–94.1%) after one pass and 97.6% (94.1%–99.3%) after two passes (P = 0.01). Similarly, diagnostic sensitivity and sample adequacy were significantly

higher adding the second needle pass (90.2%, 84.6%–94.3% vs 97.5%, 93.8%–99.3%,

P =0.009 and 91.1%, 85.7%-94.9% vs 98.2%, 95.8%–99.3%, P =0.009, one pass vs two

passes, respectively). Accuracy, sensitivity, and adequacy remained the same after

the third pass. The concordance between MOSE and histological evaluation was 89.9%.

The number of passes was the only factor associated with accuracy. One case of

mild acute pancreatitis (0.6%) was managed conservatively. Conclusions At least

two passes should be performed for the diagnosis of solid pancreatic lesions.

MOSE is a reliable tool to predict the histological adequacy of specimens.'

- '[YEAR_RANGE] 2021-2025 [TEXT] After over a hundred years of research, the question

whether the symptoms of schizophrenia are rather trait-like (being a relatively

stable quality of individuals) or state-like (being substance to change) is still

unanswered. To assess the trait and the state component in patients with acute

schizophrenia, one group receiving antipsychotic treatment, the other not. Data

from four phase II/III, 6-week, randomized, double-blind, placebo-controlled trials

of similar design that included patients with acute exacerbation of schizophrenia

were pooled. In every trial, one treatment group received a third-generation antipsychotic,

cariprazine, and the other group placebo. To assess symptoms of schizophrenia,

the Positive and Negative Symptom Scale (PANSS) was applied. Further analyses

were conducted using the five subscales as proposed by Wallwork and colleagues.

A latent state–trait (LST) model was developed to estimate the trait and state

components of the total variance of the observed scores. All symptom dimensions

behaved more in a trait-like manner. The proportions of all sources of variability

changed over the course of the observational period, with a bent around weeks

3 and 4. Visually inspected, no major differences were found between the two treatment

groups regarding the LST structure of symptom dimensions. This high proportion

of inter-individual stability may represent an inherent part of symptomatology

that behaves independently from treatment status. Supplementary Information The

online version contains supplementary material available at 10.1007/s00406-024-01790-3.'

- source_sentence: '[YEAR_RANGE] 2021-2025 [TEXT] Robotic-assisted minimally invasive

repair surgery for progressive spondylolysis in a young athlete: a technical note'

sentences:

- '[YEAR_RANGE] 2021-2025 [TEXT] Abstract CXCL12 acts as a chemoattractant by binding

to the receptor CXCR4. The (atypical) chemokine receptor ACKR3 (CXCR7) scavenges

CXCL12. Antagonism of ACKR3 thus leads to an increase in CXCL12 concentrations

that has been used as a pharmacodynamic biomarker in healthy adults. Increased

CXCL12 concentrations have also been linked to repair mechanisms in human diseases

and mouse models. To date, CXCL12 concentrations have typically been quantified

using antibody‐based assays with overlapping or unclear specificity for the various

CXCL12 isoforms (α, β, and γ) and proteoforms. Only the N‐terminal full‐length

CXCL12 proteoform is biologically active and can engage CXCR4 and ACKR3, but this

proteoform could so far not be quantified in healthy adults. Here, we describe

a new and fit‐for‐purpose validated immunoaffinity mass spectrometry (IA‐MS) assay

for specific measurement of five CXCL12α proteoforms in human plasma, including

the biologically active CXCL12α proteoform. This biomarker assay was used in a

phase I clinical study with the ACKR3 antagonist ACT‐1004‐1239. In placebo‐treated

healthy adults, 1.0 nM total CXCL12α and 0.1 nM biologically active CXCL12α was

quantified. The concentrations of both proteoforms increased up to two‐fold in

healthy adults compared to placebo following drug administration. At all dose

levels, 10% of the CXCL12α was the biologically active proteoform and the simultaneous

increase of all proteoforms suggests that a new steady state has been reached

24 h following dosing. Hence, this IA‐MS biomarker assay can be used to specifically

measure active CXCL12 proteoform concentrations in clinical trials to demonstrate

target engagement and correlate with clinical outcomes.'

- '[YEAR_RANGE] 2021-2025 [TEXT] Background and objective Patients suspected to have

lung cancer, undergo endobronchial ultrasound bronchoscopy (EBUS) for the purpose

of diagnosis and staging. For presumptive curable patients, the EBUS bronchoscopy

is planned based on images and data from computed tomography (CT) images and positron

emission tomography (PET). Our study aimed to evaluate the feasibility of a multimodal

electromagnetic navigation platform for EBUS bronchoscopy, integrating ultrasound

and segmented CT, and PET scan imaging data. Methods The proof-of-concept study

included patients with suspected lung cancer and pathological mediastinal/hilar

lymph nodes identified on both CT and PET scans. Images obtained from these two

modalities were segmented to delineate target lymph nodes and then incorporated

into the CustusX navigation platform. The EBUS bronchoscope was equipped with

a sensor, calibrated, and affixed to a 3D printed click-on device positioned at

the bronchoscope’s tip. Navigation accuracy was measured postoperatively using

ultrasound recordings. Results The study enrolled three patients, all presenting

with suspected mediastinal lymph node metastasis (N1-3). All PET-positive lymph

nodes were displayed in the navigation platform during the EBUS procedures. In

total, five distinct lymph nodes were sampled, yielding malignant cells from three

nodes and lymphocytes from the remaining two. The median accuracy of the navigation

system was 7.7 mm. Conclusion Our study introduces a feasible multimodal electromagnetic

navigation platform that combines intraoperative ultrasound with preoperative

segmented CT and PET imaging data for EBUS lymph node staging examinations. This

innovative approach holds promise for enhancing the accuracy and effectiveness

of EBUS procedures.'

- '[YEAR_RANGE] 2021-2025 [TEXT] Abstract Presently, the invasiveness of direct repair

surgery for lumbar spondylolysis is relatively high. Thus, high school and junior

high school students who play sports often cannot return to sports before graduation

because of the invasiveness. The use of a robotic system enabled an accurate and

minimally invasive procedure. Robotic-assisted minimally invasive direct pars

repair surgery is useful for young patients with progressive spondylolysis.'

- source_sentence: '[YEAR_RANGE] 2021-2025 [TEXT] An artificial intelligence-based

nerve recognition model is useful as surgical support technology and as an educational

tool in laparoscopic and robot-assisted rectal cancer surgery'

sentences:

- '[YEAR_RANGE] 2021-2025 [TEXT] BackgroundArtificial intelligence and 0.292, respectively.

The colorectal surgeons revealed an under-detection score of 0.80 (± 0.47), an

over-detection score of 0.58 (± 0.41), and a usefulness evaluation score of 3.38

(± 0.43). The nerve recognition scores of non-colorectal surgeons, rotating residents,

and medical students significantly improved by simply watching the AI nerve recognition

videos for 1 min. Notably, medical students showed a more substantial increase

in nerve recognition scores when exposed to AI nerve analysis videos than when

exposed to traditional lectures on nerves.ConclusionsIn laparoscopic and robot-assisted

rectal cancer surgeries, the AI-based nerve recognition model achieved satisfactory

recognition levels for expert surgeons and demonstrated effectiveness in educating

junior surgeons and medical students on nerve recognition.Supplementary InformationThe

online version contains supplementary material available at 10.1007/s00464-024-10939-z.'

- '[YEAR_RANGE] 2021-2025 [TEXT] Sialodochitis fibrinosa is a rare disease characterized

by paroxysmal swelling of the salivary glands and discharge of fibrous masses

containing eosinophils from the salivary gland orifice. Diagnosis was traditionally

based on irregular dilation of the main salivary duct by sialography, but now

includes the imaging findings of magnetic resonance imaging (MRI). In the present

patient, short TI inversion recovery (STIR) MRI sequence was able to identify

Stensen''s duct dilation and additionally depict cystic dilation due to stenosis

of the orifice and multiple cystic dilations within the parotid gland body. Treatment

was performed on each of the lesion sites identified by MRI. The patient was successfully

treated with compressive gland massage for lesions within the body of the parotid,

and bougienage was performed for stenosis of Stensen''s duct orifice, with duct

flushing for dilation of Stensen''s duct. These findings suggest that MRI could

replace sialography and has the advantages of being noninvasive, having a wide

observation area, and enabling observation within the glandular body. Here, we

report the case of a patient in whom accurate identification of the site of the

lesion enabled selection of appropriate treatment for each site.'

- '[YEAR_RANGE] 2021-2025 [TEXT] Objective To explore the value of the injury severity

score curve (AUC) and Hosmer‒Lemeshow (H-L) statistic. Results A total of 310

patients were included. ISS and NISS of patients with complications and poor prognoses

were greater than those of patients without complications and poor prognoses,

respectively. The discrimination of ISS in predicting pneumonia, respiratory failure,

in-hospital tracheal intubation, extended length of hospital stay, ICU admission,

prolonged ICU stay, and death (AUCs: 0.609, 0.721, 0.848, 0.784, 0.763, 0.716,

and 0.804, respectively) was not statistically significantly different from that

of NISS in predicting the corresponding outcomes (AUCs: 0.628, 0.712, 0.795, 0.767,

0.750, 0.750, and 0.818, respectively). ISS showed better calibration than NISS

for predicting pneumonia, respiratory failure, in-hospital tracheal intubation,

extended length of hospital stay, and ICU admission but worse calibration for

predicting prolonged ICU stay and death. Conclusion ISS and NISS are both suitable

for injury evaluation. There was no statistically significant difference in discrimination

between ISS and NISS, but they had different calibrations when predicting different

outcomes.'

- source_sentence: '[YEAR_RANGE] 2021-2025 [TEXT] Combined hyperglycemic crises in

adult patients already exist in Latin America.'

sentences:

- '[YEAR_RANGE] 2021-2025 [TEXT] AbstractIntroduction. Diabetes mellitus is one

of the most common diseases worldwide, with a high morbidity and mortality rate.

Its prevalence has been increasing, as well as its acute complications, such as

hyperglycemic crises. Hyperglycemic crises can present with combined features

of diabetic ketoacidosis and hyperosmolar state. However, their implications are

not fully understood.Objective. To describe the characteristics, outcomes, and

complications of the diabetic population with hyperglycemic crises and to value

the combined state in the Latin American population.Materials and methods. Retrospective

observational study of all hyperglycemic crises treated in the intensive care

unit of the Fundación Valle del Lili between January 1, 2015, and December 31,

2020. Descriptive analysis and prevalence ratio estimation for deaths were performed

using the robust Poisson regression method.Results. There were 317 patients with

confirmed hyperglycemic crises, 43 (13.56%) with diabetic ketoacidosis, 9 (2.83%)

in hyperosmolar state, and 265 (83.59%) with combined diabetic ketoacidosis and

hyperosmolar state. Infection was the most frequent triggering cause (52.52%).

Fatalities due to ketoacidosis occurred in four patients (9.30%) and combined

diabetic ketoacidosis/hyperosmolar state in 22 patients (8.30%); no patient had

a hyperosmolar state. Mechanical ventilation was associated with death occurrence

(adjusted PR = 1.15; 95 % CI 95 = 1.06 - 1.24).Conclusions. The combined state

was the most prevalent presentation of the hyperglycemic crisis, with a mortality

rate similar to diabetic ketoacidosis. Invasive mechanical ventilation was associated

with a higher occurrence of death.'

- '[YEAR_RANGE] 2021-2025 [TEXT] Impactful research on refugee mental health is

urgently needed. To mitigate the growing refugee crisis, researchers and clinicians

seek to better understand the relationship between trauma, grief and post-migration

factors with the aim of bringing better awareness, more resources and improved

support for these communities and individuals living in host countries. As much

as this is our intention, the prevailing research methods, that is, online anonymous

questionnaires, used to engage refugees in mental health research are increasingly

outdated and lack inclusivity and representation. With this perspective piece,

we would like to highlight a growing crisis in global mental health research;

the predominance of a Global North-centric approach and methodology. We use our

recent research challenges and breakdowns as a learning example and possible opportunity

to rebuild our research practice in a more ethical and equitable way.'

- '[YEAR_RANGE] 2021-2025 [TEXT] Carbon capture and utilization (CCU) covers an

array of technologies for valorizing carbon dioxide (CO2). To date, most mature

CCU technology conducted with capture agents operates against the CO2 gradient

to desorb CO2 from capture agents, exhibiting high energy penalties and thermal

degradation due to the requirement for thermal swings. This Perspective presents

a concept of Bio-Integrated Carbon Capture and Utilization (BICCU), which utilizes

methanogens for integrated release and conversion of CO2 captured with capture

agents. BICCU hereby substitutes the energy-intensive desorption with microbial

conversion of captured CO2 by the methanogenic CO2-reduction pathway, utilizing

green hydrogen to generate non-fossil methane. Existing carbon capture and utilization

technologies are hindered by significant energy penalties. Here, the authors discuss

the Bio-Integrated Carbon Capture and Utilization (BICCU) technology, which mitigates

the energy penalties while generating valuable C1 and C2 products.'

pipeline_tag: sentence-similarity

library_name: sentence-transformers

---

# SentenceTransformer

This is a [sentence-transformers](https://www.SBERT.net) model trained on the parquet dataset. It maps sentences & paragraphs to a 512-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.

## Model Details

### Model Description

- **Model Type:** Sentence Transformer

<!-- - **Base model:** [Unknown](https://huggingface.co/unknown) -->

- **Maximum Sequence Length:** 512 tokens

- **Output Dimensionality:** 512 dimensions

- **Similarity Function:** Cosine Similarity

- **Training Dataset:** parquet

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->
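The card lists cosine similarity as the similarity function. The sketch below shows what that computes: pairwise cosine similarity is the dot product of L2-normalised embeddings. It uses NumPy and made-up 4-dimensional vectors purely for illustration; real embeddings from this model have 512 dimensions, and for those the result should agree with `model.similarity` up to floating-point error.

```python
import numpy as np


def cosine_similarity_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    # Normalise each row to unit L2 length; the dot product of unit vectors is their cosine.
    a_unit = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_unit = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a_unit @ b_unit.T


# Toy 4-dimensional "embeddings" (hypothetical values, not model output).
emb = np.array([
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
])
sims = cosine_similarity_matrix(emb, emb)
print(np.round(sims, 3))
```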

### Model Sources

- **Documentation:** [Sentence Transformers Documentation](https://sbert.net)

- **Repository:** [Sentence Transformers on GitHub](https://github.com/UKPLab/sentence-transformers)

- **Hugging Face:** [Sentence Transformers on Hugging Face](https://huggingface.co/models?library=sentence-transformers)

### Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 512, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})

)

```
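The `Pooling` module above is configured for mean pooling (`pooling_mode_mean_tokens: True`), so each 512-dimensional sentence vector is an average of the BERT token vectors with padding positions excluded. A minimal NumPy sketch of masked mean pooling follows; it uses toy shapes rather than the model's real hidden size and illustrates the idea rather than reproducing the library's code.

```python
import numpy as np


def mean_pool(token_embeddings: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Average token vectors per sequence, ignoring padding positions.

    token_embeddings: (batch, seq_len, hidden) output of the transformer
    attention_mask:   (batch, seq_len), 1 for real tokens and 0 for padding
    """
    mask = attention_mask[:, :, None].astype(token_embeddings.dtype)  # (batch, seq_len, 1)
    summed = (token_embeddings * mask).sum(axis=1)                      # (batch, hidden)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)                      # avoid division by zero
    return summed / counts


# Two sequences of length 3 with hidden size 2; the second has one padding position.
tokens = np.array([
    [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
    [[2.0, 2.0], [4.0, 4.0], [100.0, 100.0]],  # last position is padding
])
mask = np.array([[1, 1, 1], [1, 1, 0]])
print(mean_pool(tokens, mask))
```

Because the padded position is masked out, its large values do not leak into the second sentence vector.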

## Usage

### Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash

pip install -U sentence-transformers

```

Then you can load this model and run inference.

```python

from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub

model = SentenceTransformer("pankajrajdeo/Bioformer-8L-UMLS-Pubmed_PMC-ST-TCE-Epoch-1")

# Run inference

sentences = [

'[YEAR_RANGE] 2021-2025 [TEXT] Combined hyperglycemic crises in adult patients already exist in Latin America.',

'[YEAR_RANGE] 2021-2025 [TEXT] AbstractIntroduction. Diabetes mellitus is one of the most common diseases worldwide, with a high morbidity and mortality rate. Its prevalence has been increasing, as well as its acute complications, such as hyperglycemic crises. Hyperglycemic crises can present with combined features of diabetic ketoacidosis and hyperosmolar state. However, their implications are not fully understood.Objective. To describe the characteristics, outcomes, and complications of the diabetic population with hyperglycemic crises and to value the combined state in the Latin American population.Materials and methods. Retrospective observational study of all hyperglycemic crises treated in the intensive care unit of the Fundación Valle del Lili between January 1, 2015, and December 31, 2020. Descriptive analysis and prevalence ratio estimation for deaths were performed using the robust Poisson regression method.Results. There were 317 patients with confirmed hyperglycemic crises, 43 (13.56%) with diabetic ketoacidosis, 9 (2.83%) in hyperosmolar state, and 265 (83.59%) with combined diabetic ketoacidosis and hyperosmolar state. Infection was the most frequent triggering cause (52.52%). Fatalities due to ketoacidosis occurred in four patients (9.30%) and combined diabetic ketoacidosis/hyperosmolar state in 22 patients (8.30%); no patient had a hyperosmolar state. Mechanical ventilation was associated with death occurrence (adjusted PR = 1.15; 95 % CI 95 = 1.06 - 1.24).Conclusions. The combined state was the most prevalent presentation of the hyperglycemic crisis, with a mortality rate similar to diabetic ketoacidosis. Invasive mechanical ventilation was associated with a higher occurrence of death.',

'[YEAR_RANGE] 2021-2025 [TEXT] Carbon capture and utilization (CCU) covers an array of technologies for valorizing carbon dioxide (CO2). To date, most mature CCU technology conducted with capture agents operates against the CO2 gradient to desorb CO2 from capture agents, exhibiting high energy penalties and thermal degradation due to the requirement for thermal swings. This Perspective presents a concept of Bio-Integrated Carbon Capture and Utilization (BICCU), which utilizes methanogens for integrated release and conversion of CO2 captured with capture agents. BICCU hereby substitutes the energy-intensive desorption with microbial conversion of captured CO2 by the methanogenic CO2-reduction pathway, utilizing green hydrogen to generate non-fossil methane. Existing carbon capture and utilization technologies are hindered by significant energy penalties. Here, the authors discuss the Bio-Integrated Carbon Capture and Utilization (BICCU) technology, which mitigates the energy penalties while generating valuable C1 and C2 products.',

]

embeddings = model.encode(sentences)

print(embeddings.shape)

# (3, 512)

# Get the similarity scores for the embeddings

similarities = model.similarity(embeddings, embeddings)

print(similarities.shape)

# torch.Size([3, 3])

```
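Every example input in this card uses the layout `[YEAR_RANGE] <start>-<end> [TEXT] <text>`, so queries are probably best formatted the same way. The helper below is hypothetical: its name and the 5-year binning rule (ranges such as 1896-1900 and 2021-2025) are inferred only from the examples shown here and should be checked against the actual training data before relying on them.

```python
def format_input(text: str, year: int) -> str:
    """Build an input string in the '[YEAR_RANGE] start-end [TEXT] ...' layout seen in this card.

    ASSUMPTION: year ranges are 5-year bins starting at years ending in 1 or 6
    (e.g. 1896-1900, 2021-2025), inferred from the card's examples only.
    """
    start = ((year - 1) // 5) * 5 + 1
    end = start + 4
    return f"[YEAR_RANGE] {start}-{end} [TEXT] {text}"


print(format_input("Combined hyperglycemic crises in adult patients already exist in Latin America.", 2023))
```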

<!--

### Direct Usage (Transformers)

<details><summary>Click to see the direct usage in Transformers</summary>

</details>

-->

<!--

### Downstream Usage (Sentence Transformers)

You can finetune this model on your own dataset.

<details><summary>Click to expand</summary>

</details>

-->

<!--

### Out-of-Scope Use

*List how the model may foreseeably be misused and address what users ought not to do with the model.*

-->

<!--

## Bias, Risks and Limitations

*What are the known or foreseeable issues stemming from this model? You could also flag here known failure cases or weaknesses of the model.*

-->

<!--

### Recommendations

*What are recommendations with respect to the foreseeable issues? For example, filtering explicit content.*

-->

## Training Details

### Training Dataset

#### parquet

* Dataset: parquet

* Size: 6,150,902 training samples

* Columns: <code>anchor</code> and <code>positive</code>

* Approximate statistics based on the first 1000 samples:

| | anchor | positive |

|:--------|:------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------|

| type | string | string |

| details | <ul><li>min: 12 tokens</li><li>mean: 39.88 tokens</li><li>max: 112 tokens</li></ul> | <ul><li>min: 32 tokens</li><li>mean: 277.54 tokens</li><li>max: 512 tokens</li></ul> |

* Samples:

| anchor | positive |

|:--------------------------------------------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>[YEAR_RANGE] 1896-1900 [TEXT] ON THE PIGMENT OF THE NEGRO'S SKIN AND HAIR</code> | <code>[YEAR_RANGE] 1896-1900 [TEXT] The pigmentary granules of the negro's skin and hair can be freed in several ways from the cells in which they are lodged and collected in any desired amount. As thus obtained, these granules are found to be insoluble in dilute alkalies, dilute hydrochloric acid (hot or cold), alcohol, or other organic solvents when applied in the order named. If, after they have been subjected to the action of dilute hydrochloric acid, they are again treated with dilute alkalies, they are found to give up their pigment, and, on the continued application of heat, the granules dissolve entirely in the alkaline solution, leaving only an insignificant residue. The pigmentary granules are composed of a colourless ground substance or substratum, a pigment, and much inorganic matter. Their inorganic constituents, as thus far determined, are calcium, magnesium, iron, and silicic, phosphoric, and sulphuric acids; and these constituents possibly play an important part in the deposi...</code> |

| <code>[YEAR_RANGE] 1896-1900 [TEXT] THE HISTOLOGIGAL LESIONS OF ACUTE GLANDERS IN MAN AND OF EXPERIMENTAL GLANDERS IN THE GUINEA-PIG</code> | <code>[YEAR_RANGE] 1896-1900 [TEXT] The glanders nodule in the class of cases studied by us is in no sense analogous to the miliary tubercle in its histogenesis, and our studies afford no support to Baumgarten's views. The primary effect of the bacillus of glanders on a tissue we found to be not a production of epithelioid cells, which undergo necrosis and invasion by leucocytes, as happens in the cases in which the bacillus of tuberculosis is concerned, but to be the production of primary necrosis of the tissue, followed by inflammatory exudation, often of a suppurative character. Degenerative changes rapidly ensue in the inflammatory products. These conclusions are in harmony with the observations of Tedeschi, above referred to.</code> |

| <code>[YEAR_RANGE] 1896-1900 [TEXT] THE EFFECT OF ODOURS, IRRITANT VAPOURS, AND MENTAL WORK UPON THE BLOOD FLOW</code> | <code>[YEAR_RANGE] 1896-1900 [TEXT] The most important of this investigation has been the completion of various improvements in the construction and use of the plethysmograph, by means of which numerous errors attending the use of the instrument have been eliminated. The results of the work show that all olfactory sensations, so far as they produce any effect through the vasomotor system, tend to diminish the volume of the arm, and therefore presumably cause a congestion of the brain. Whenever the stimulation occassions an increase in the volume of the arm, as sometimes happens, it seems to be due to acceleration of the heart rate, which, of course, tends also to increase the supply of blood to the brain. The of odours varies in extent with different individuals, and with the same individual at different times. It was most marked in subjects sensitive to odours. Irritant vapours, such as formic acid, have a marked effect in the same direction—that is, they cause a strong diminution in the vo...</code> |

* Loss: [<code>MultipleNegativesRankingLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#multiplenegativesrankingloss) with these parameters:

```json

{

"scale": 20.0,

"similarity_fct": "cos_sim"

}

```
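`MultipleNegativesRankingLoss` treats each (anchor, positive) pair as the correct match and every other positive in the same batch as a negative, so with a batch size of 128 each anchor is contrasted against 127 in-batch negatives. The NumPy sketch below illustrates that computation with the card's `scale` of 20 and cosine similarity; the function name is illustrative, and the actual sentence-transformers implementation runs on PyTorch tensors and may differ in detail.

```python
import numpy as np


def multiple_negatives_ranking_loss(anchors, positives, scale=20.0):
    """In-batch-negatives ranking loss: anchor i should score its own positive i highest."""
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = scale * (a @ p.T)                            # (batch, batch) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability for the softmax
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy where the diagonal entry (the matching pair) is the correct class.
    return float(-np.mean(np.diag(log_probs)))


rng = np.random.default_rng(0)
anchors = rng.normal(size=(4, 8))
aligned = anchors + 0.01 * rng.normal(size=(4, 8))  # positives close to their own anchors
shuffled = aligned[[1, 2, 3, 0]]                     # positives paired with the wrong anchors
print(multiple_negatives_ranking_loss(anchors, aligned))   # close to 0
print(multiple_negatives_ranking_loss(anchors, shuffled))  # much larger
```

If all candidates score identically, the loss equals ln(batch size), which is a useful reference point when reading the loss values reported below.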

### Evaluation Dataset

#### parquet

* Dataset: parquet

* Size: 6,150,902 evaluation samples

* Columns: <code>anchor</code> and <code>positive</code>

* Approximate statistics based on the first 1000 samples:

| | anchor | positive |

|:--------|:-----------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------|

| type | string | string |

| details | <ul><li>min: 10 tokens</li><li>mean: 28.46 tokens</li><li>max: 61 tokens</li></ul> | <ul><li>min: 23 tokens</li><li>mean: 303.55 tokens</li><li>max: 512 tokens</li></ul> |

* Samples:

| anchor | positive |

|:---------------------------------------------------------------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>[YEAR_RANGE] 2021-2025 [TEXT] Construction of Metal/Zeolite Hybrid Nanoframe Reactors via</code> | <code>[YEAR_RANGE] 2021-2025 [TEXT] Metal/zeolite hybrid nanoframes featuring highly accessible compartmental environments, abundant heterogeneous interfaces, and diverse chemical compositions are expected to possess significant potential for heterogeneous catalysis, yet their general synthetic methodology has not yet been established. In this study, we developed a two-step in-situ-kinetics transformation approach to prepare metal/ZSM-5 hybrid nanoframes with exceptionally open nanostructures, tunable metal compositions, and abundant accessible active sites. Initially, the process involved the formation of single-crystalline ZSM-5 nanoframes through an anisotropic etching and recrystallization kinetic transformation process. Subsequently, through an in situ reaction of the Ni2+ ions and the silica species etched from ZSM-5 nanoframes, layered nickel silicate emerged on both the inner and outer surfaces of the zeolite nanoframes. Upon reduction under a hydrogen atmosphere, well-dispersed Ni n...</code> |

| <code>[YEAR_RANGE] 2021-2025 [TEXT] Genome-wide sRNA and mRNA transcriptomic profiling insights into carbapenem-resistant</code> | <code>[YEAR_RANGE] 2021-2025 [TEXT] Introduction Acinetobacter baumannii (AB) is rising as a human pathogen of critical priority worldwide as it is the leading cause of opportunistic infections in healthcare settings and carbapenem-resistant AB is listed as a “super bacterium” or “priority pathogen for drug resistance” by the World Health Organization.MethodsClinical isolates of A. baumannii were collected and tested for antimicrobial susceptibility. Among them, carbapenem-resistant and carbapenem-sensitive A. baumannii were subjected to prokaryotic transcriptome sequencing. The change of sRNA and mRNA expression was analyzed by bioinformatics and validated by quantitative reverse transcription-PCR.ResultsA total of 687 clinical isolates were collected, of which 336 strains of A. baumannii were resistant to carbapenem. Five hundred and six differentially expressed genes and nineteen differentially expressed sRNA candidates were discovered through transcriptomic profile analysis between carba...</code> |

| <code>[YEAR_RANGE] 2021-2025 [TEXT] Evaluation and modeling of diaphragm displacement using ultrasound imaging for wearable respiratory assistive robot</code> | <code>[YEAR_RANGE] 2021-2025 [TEXT] IntroductionAssessing the influence of respiratory assistive devices on the diaphragm mobility is essential for advancing patient care and improving treatment outcomes. Existing respiratory assistive robots have not yet effectively assessed their impact on diaphragm mobility. In this study, we introduce for the first time a non-invasive, real-time clinically feasible ultrasound method to evaluate the impact of soft wearable robots on diaphragm displacement.MethodsWe measured and compared diaphragm displacement and lung volume in eight participants during both spontaneous and robotic-assisted respiration. Building on these measurements, we proposed a human-robot coupled two-compartment respiratory mechanics model that elucidates the underlying mechanism by which our extracorporeal wearable robots augments respiration. Specifically, the soft robot applies external compression to the abdominal wall muscles, inducing their inward movement, which consequently p...</code> |

* Loss: [<code>MultipleNegativesRankingLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#multiplenegativesrankingloss) with these parameters:

```json

{

"scale": 20.0,

"similarity_fct": "cos_sim"

}

```

### Training Hyperparameters

#### Non-Default Hyperparameters

- `eval_strategy`: steps

- `per_device_train_batch_size`: 128

- `learning_rate`: 2e-05

- `num_train_epochs`: 2

- `max_steps`: 91302

- `log_level`: info

- `fp16`: True

- `dataloader_num_workers`: 16

- `load_best_model_at_end`: True

- `resume_from_checkpoint`: True
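In the Hugging Face Trainer a positive `max_steps` overrides `num_train_epochs`, and here the two agree: 91302 steps over 2 epochs gives 45651 steps per epoch, which matches the training log below (epoch 1.0000 at step 45651). The arithmetic is sketched here; the samples-per-epoch figure assumes a single training device, since the card does not state the device count.

```python
# Values copied from the hyperparameter list above.
max_steps = 91302
num_train_epochs = 2
per_device_train_batch_size = 128
gradient_accumulation_steps = 1  # default, per the full hyperparameter list

# A positive max_steps takes precedence over num_train_epochs in the Trainer.
steps_per_epoch = max_steps // num_train_epochs

# ASSUMPTION: a single training device (not stated in the card).
samples_per_step = per_device_train_batch_size * gradient_accumulation_steps
approx_samples_per_epoch = steps_per_epoch * samples_per_step

print(steps_per_epoch, approx_samples_per_epoch)
```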

#### All Hyperparameters

<details><summary>Click to expand</summary>

- `overwrite_output_dir`: False

- `do_predict`: False

- `eval_strategy`: steps

- `prediction_loss_only`: True

- `per_device_train_batch_size`: 128

- `per_device_eval_batch_size`: 8

- `per_gpu_train_batch_size`: None

- `per_gpu_eval_batch_size`: None

- `gradient_accumulation_steps`: 1

- `eval_accumulation_steps`: None

- `torch_empty_cache_steps`: None

- `learning_rate`: 2e-05

- `weight_decay`: 0.0

- `adam_beta1`: 0.9

- `adam_beta2`: 0.999

- `adam_epsilon`: 1e-08

- `max_grad_norm`: 1.0

- `num_train_epochs`: 2

- `max_steps`: 91302

- `lr_scheduler_type`: linear

- `lr_scheduler_kwargs`: {}

- `warmup_ratio`: 0.0

- `warmup_steps`: 0

- `log_level`: info

- `log_level_replica`: warning

- `log_on_each_node`: True

- `logging_nan_inf_filter`: True

- `save_safetensors`: True

- `save_on_each_node`: False

- `save_only_model`: False

- `restore_callback_states_from_checkpoint`: False

- `no_cuda`: False

- `use_cpu`: False

- `use_mps_device`: False

- `seed`: 42

- `data_seed`: None

- `jit_mode_eval`: False

- `use_ipex`: False

- `bf16`: False

- `fp16`: True

- `fp16_opt_level`: O1

- `half_precision_backend`: auto

- `bf16_full_eval`: False

- `fp16_full_eval`: False

- `tf32`: None

- `local_rank`: 0

- `ddp_backend`: None

- `tpu_num_cores`: None

- `tpu_metrics_debug`: False

- `debug`: []

- `dataloader_drop_last`: False

- `dataloader_num_workers`: 16

- `dataloader_prefetch_factor`: None

- `past_index`: -1

- `disable_tqdm`: False

- `remove_unused_columns`: True

- `label_names`: None

- `load_best_model_at_end`: True

- `ignore_data_skip`: False

- `fsdp`: []

- `fsdp_min_num_params`: 0

- `fsdp_config`: {'min_num_params': 0, 'xla': False, 'xla_fsdp_v2': False, 'xla_fsdp_grad_ckpt': False}

- `fsdp_transformer_layer_cls_to_wrap`: None

- `accelerator_config`: {'split_batches': False, 'dispatch_batches': None, 'even_batches': True, 'use_seedable_sampler': True, 'non_blocking': False, 'gradient_accumulation_kwargs': None}

- `deepspeed`: None

- `label_smoothing_factor`: 0.0

- `optim`: adamw_torch

- `optim_args`: None

- `adafactor`: False

- `group_by_length`: False

- `length_column_name`: length

- `ddp_find_unused_parameters`: None

- `ddp_bucket_cap_mb`: None

- `ddp_broadcast_buffers`: False

- `dataloader_pin_memory`: True

- `dataloader_persistent_workers`: False

- `skip_memory_metrics`: True

- `use_legacy_prediction_loop`: False

- `push_to_hub`: False

- `resume_from_checkpoint`: True

- `hub_model_id`: None

- `hub_strategy`: every_save

- `hub_private_repo`: None

- `hub_always_push`: False

- `gradient_checkpointing`: False

- `gradient_checkpointing_kwargs`: None

- `include_inputs_for_metrics`: False

- `include_for_metrics`: []

- `eval_do_concat_batches`: True

- `fp16_backend`: auto

- `push_to_hub_model_id`: None

- `push_to_hub_organization`: None

- `mp_parameters`:

- `auto_find_batch_size`: False

- `full_determinism`: False

- `torchdynamo`: None

- `ray_scope`: last

- `ddp_timeout`: 1800

- `torch_compile`: False

- `torch_compile_backend`: None

- `torch_compile_mode`: None

- `dispatch_batches`: None

- `split_batches`: None

- `include_tokens_per_second`: False

- `include_num_input_tokens_seen`: False

- `neftune_noise_alpha`: None

- `optim_target_modules`: None

- `batch_eval_metrics`: False

- `eval_on_start`: False

- `use_liger_kernel`: False

- `eval_use_gather_object`: False

- `average_tokens_across_devices`: False

- `prompts`: None

- `batch_sampler`: batch_sampler

- `multi_dataset_batch_sampler`: proportional

</details>
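With `lr_scheduler_type: linear` and `warmup_steps: 0`, the learning rate should start at 2e-05 and decay linearly to zero at `max_steps`. Halfway through the 91302-step budget (step 45651, which the training log marks as epoch 1.0000) it would therefore be about 1e-05. The function below is a sketch of that schedule shape under the card's settings, not the transformers source.

```python
def linear_lr(step: int, base_lr: float = 2e-05, total_steps: int = 91302, warmup_steps: int = 0) -> float:
    """Learning rate at an optimizer step: linear warmup, then linear decay to 0 at total_steps."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 45651, 91302):
    print(step, linear_lr(step))
```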

### Training Logs

| Epoch | Step | Training Loss | Validation Loss |

|:------:|:-----:|:-------------:|:---------------:|

| 0.0000 | 1 | 2.7287 | - |

| 0.0219 | 1000 | 0.3483 | - |

| 0.0438 | 2000 | 0.1075 | - |

| 0.0657 | 3000 | 0.085 | - |

| 0.0876 | 4000 | 0.0808 | - |

| 0.1095 | 5000 | 0.0707 | - |

| 0.1314 | 6000 | 0.0702 | - |

| 0.1533 | 7000 | 0.0675 | - |

| 0.1752 | 8000 | 0.0549 | - |

| 0.1971 | 9000 | 0.0616 | - |

| 0.2190 | 10000 | 0.0616 | - |

| 0.2410 | 11000 | 0.0548 | - |

| 0.2629 | 12000 | 0.0584 | - |

| 0.2848 | 13000 | 0.0554 | - |

| 0.3067 | 14000 | 0.0533 | - |

| 0.3286 | 15000 | 0.0485 | - |

| 0.3505 | 16000 | 0.0545 | - |

| 0.3724 | 17000 | 0.0579 | - |

| 0.3943 | 18000 | 0.0645 | - |

| 0.4162 | 19000 | 0.0461 | - |

| 0.4381 | 20000 | 0.0604 | - |

| 0.4600 | 21000 | 0.054 | - |

| 0.4819 | 22000 | 0.0481 | - |

| 0.5038 | 23000 | 0.0525 | - |

| 0.5257 | 24000 | 0.0497 | - |

| 0.5476 | 25000 | 0.0492 | - |

| 0.5695 | 26000 | 0.0428 | - |

| 0.5914 | 27000 | 0.0411 | - |

| 0.6133 | 28000 | 0.0356 | - |

| 0.6352 | 29000 | 0.0421 | - |

| 0.6571 | 30000 | 0.0369 | - |

| 0.6791 | 31000 | 0.0384 | - |

| 0.7010 | 32000 | 0.0395 | - |

| 0.7229 | 33000 | 0.0413 | - |

| 0.7448 | 34000 | 0.0375 | - |

| 0.7667 | 35000 | 0.0373 | - |

| 0.7886 | 36000 | 0.0347 | - |

| 0.8105 | 37000 | 0.039 | - |

| 0.8324 | 38000 | 0.0368 | - |

| 0.8543 | 39000 | 0.0365 | - |

| 0.8762 | 40000 | 0.0333 | - |

| 0.8981 | 41000 | 0.036 | - |

| 0.9200 | 42000 | 0.0384 | - |

| 0.9419 | 43000 | 0.0347 | - |

| 0.9638 | 44000 | 0.0358 | - |

| 0.9857 | 45000 | 0.0355 | - |

| 1.0000 | 45651 | - | 0.0044 |
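The validation loss (0.0044) is far below the late training losses (about 0.035), but the two are probably not directly comparable. With `MultipleNegativesRankingLoss` the number of in-batch negatives depends on batch size, and evaluation used `per_device_eval_batch_size: 8` versus 128 for training. A quick reference point, assuming the loss is computed per batch as in the sketch of the loss above, is the chance-level value ln(batch size):

```python
import math

train_batch_size = 128  # per_device_train_batch_size
eval_batch_size = 8     # per_device_eval_batch_size

# If every candidate in a batch scored the same, the loss would equal ln(batch size).
chance_train = math.log(train_batch_size)
chance_eval = math.log(eval_batch_size)
print(f"chance-level loss: train {chance_train:.3f}, eval {chance_eval:.3f}")
```

A batch of 8 offers only 7 negatives per anchor, which makes the evaluation task easier than the 127-negative training task; the table alone does not establish how much of the gap this explains.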

### Framework Versions

- Python: 3.11.11

- Sentence Transformers: 3.4.1

- Transformers: 4.48.2

- PyTorch: 2.6.0+cu124

- Accelerate: 1.3.0

- Datasets: 3.2.0

- Tokenizers: 0.21.0

## Citation

### BibTeX

#### Sentence Transformers

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "https://arxiv.org/abs/1908.10084",

}

```

#### MultipleNegativesRankingLoss

```bibtex

@misc{henderson2017efficient,

title={Efficient Natural Language Response Suggestion for Smart Reply},

author={Matthew Henderson and Rami Al-Rfou and Brian Strope and Yun-hsuan Sung and Laszlo Lukacs and Ruiqi Guo and Sanjiv Kumar and Balint Miklos and Ray Kurzweil},

year={2017},

eprint={1705.00652},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

<!--

## Glossary

*Clearly define terms in order to be accessible across audiences.*

-->

<!--

## Model Card Authors

*Lists the people who create the model card, providing recognition and accountability for the detailed work that goes into its construction.*

-->

<!--

## Model Card Contact

*Provides a way for people who have updates to the Model Card, suggestions, or questions, to contact the Model Card authors.*

--> |

lesso10/02a78887-e22e-4b53-bdc9-8d40bf154992 | lesso10 | "2025-01-25T12:54:02Z" | 8 | 0 | peft | [

"peft",

"safetensors",

"mistral",

"axolotl",

"generated_from_trainer",

"base_model:Intel/neural-chat-7b-v3-3",

"base_model:adapter:Intel/neural-chat-7b-v3-3",

"license:apache-2.0",

"8-bit",

"bitsandbytes",

"region:us"

] | null | "2025-01-25T12:41:03Z" | ---

library_name: peft

license: apache-2.0

base_model: Intel/neural-chat-7b-v3-3

tags:

- axolotl

- generated_from_trainer

model-index:

- name: 02a78887-e22e-4b53-bdc9-8d40bf154992

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

[<img src="https://raw.githubusercontent.com/axolotl-ai-cloud/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/axolotl-ai-cloud/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.1`

```yaml
adapter: lora
base_model: Intel/neural-chat-7b-v3-3
bf16: true
chat_template: llama3
datasets:
- data_files:
  - a8897e19ee045d4f_train_data.json
  ds_type: json
  format: custom
  path: /workspace/input_data/a8897e19ee045d4f_train_data.json
  type:
    field_instruction: INSTRUCTION
    field_output: RESPONSE
    format: '{instruction}'
    no_input_format: '{instruction}'
    system_format: '{system}'
    system_prompt: ''
debug: null
deepspeed: null
early_stopping_patience: 2
eval_max_new_tokens: 128
eval_steps: 5
eval_table_size: null
flash_attention: false
fp16: false
fsdp: null
fsdp_config: null
gradient_accumulation_steps: 4
gradient_checkpointing: true
group_by_length: false
hub_model_id: lesso10/02a78887-e22e-4b53-bdc9-8d40bf154992
hub_repo: null
hub_strategy: checkpoint
hub_token: null
learning_rate: 0.0002
load_in_4bit: false
load_in_8bit: true
local_rank: null
logging_steps: 1
lora_alpha: 16
lora_dropout: 0.05
lora_fan_in_fan_out: null
lora_model_dir: null
lora_r: 8
lora_target_linear: true
lr_scheduler: cosine
max_steps: 25
micro_batch_size: 2
mlflow_experiment_name: /tmp/a8897e19ee045d4f_train_data.json
model_type: AutoModelForCausalLM
num_epochs: 1
optimizer: adamw_bnb_8bit
output_dir: miner_id_24
pad_to_sequence_len: true
resume_from_checkpoint: null
s2_attention: null
sample_packing: false
save_steps: 10
sequence_len: 512
special_tokens:
  pad_token: </s>
strict: false
tf32: false
tokenizer_type: AutoTokenizer
train_on_inputs: false
trust_remote_code: true
val_set_size: 0.05
wandb_entity: null
wandb_mode: online
wandb_name: ef70b395-1c8f-419f-af46-58a046d20b33
wandb_project: Gradients-On-Demand
wandb_run: your_name
wandb_runid: ef70b395-1c8f-419f-af46-58a046d20b33
warmup_steps: 10
weight_decay: 0.0
xformers_attention: null
```

</details><br>
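The config trains a LoRA adapter with `lora_r: 8`, `lora_alpha: 16` and `lora_target_linear: true`. As a rough sketch of what those settings imply, the snippet below counts the trainable adapter parameters and computes the LoRA scaling factor `lora_alpha / lora_r`. The model dimensions are assumptions (standard Mistral-7B values, since the base model is tagged `mistral`), and it also assumes `lora_target_linear` wraps the seven attention and MLP projections of every decoder layer but not the output head. `lora_params` and `total_lora_params` are hypothetical helpers, not part of axolotl or PEFT.

```python
# ASSUMPTION: Mistral-7B-style dimensions; the card does not state them.
HIDDEN = 4096
INTERMEDIATE = 14336
NUM_LAYERS = 32
NUM_HEADS = 32
NUM_KV_HEADS = 8
HEAD_DIM = HIDDEN // NUM_HEADS  # 128

# Values taken from the axolotl config above.
LORA_R = 8
LORA_ALPHA = 16


def lora_params(d_in: int, d_out: int, r: int = LORA_R) -> int:
    """Trainable parameters LoRA adds to one d_in -> d_out linear layer.

    LoRA learns A (r x d_in) and B (d_out x r), so the count is r * (d_in + d_out).
    """
    return r * (d_in + d_out)


def linear_shapes() -> dict:
    """(d_in, d_out) of each projection in one decoder layer (ASSUMED layout)."""
    kv_out = NUM_KV_HEADS * HEAD_DIM  # grouped-query attention: smaller K/V
    return {
        "q_proj": (HIDDEN, HIDDEN),
        "k_proj": (HIDDEN, kv_out),
        "v_proj": (HIDDEN, kv_out),
        "o_proj": (HIDDEN, HIDDEN),
        "gate_proj": (HIDDEN, INTERMEDIATE),
        "up_proj": (HIDDEN, INTERMEDIATE),
        "down_proj": (INTERMEDIATE, HIDDEN),
    }


def total_lora_params() -> int:
    """Adapter parameters summed over all projections and all layers."""
    per_layer = sum(lora_params(d_in, d_out) for d_in, d_out in linear_shapes().values())
    return per_layer * NUM_LAYERS


# The low-rank update B @ A is multiplied by this factor before being added to W.
scaling = LORA_ALPHA / LORA_R

if __name__ == "__main__":
    print(f"trainable LoRA parameters: {total_lora_params():,}")
    print(f"LoRA scaling (alpha / r): {scaling}")
```

Under these assumptions the adapter is roughly 21M parameters, a small fraction of the 8-bit-loaded base model, which is why the optimizer state stays cheap.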

# 02a78887-e22e-4b53-bdc9-8d40bf154992

This model is a fine-tuned version of [Intel/neural-chat-7b-v3-3](https://huggingface.co/Intel/neural-chat-7b-v3-3) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: nan

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 8

- optimizer: ADAMW_BNB (`OptimizerNames.ADAMW_BNB`) with betas=(0.9,0.999), epsilon=1e-08 and no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- training_steps: 25
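To make the hyperparameters above concrete, here is a minimal sketch of the learning-rate curve and effective batch size they imply. It assumes the `cosine` scheduler behaves like a linear warmup followed by a single half-cosine decay to zero (the shape used by Transformers' `get_cosine_schedule_with_warmup` with its default half cycle) and that training ran on one device; `cosine_lr_with_warmup` and `effective_batch_size` are hypothetical helpers.

```python
import math

BASE_LR = 2e-4     # learning_rate
WARMUP_STEPS = 10  # lr_scheduler_warmup_steps
TOTAL_STEPS = 25   # training_steps


def cosine_lr_with_warmup(step: int, base_lr: float = BASE_LR,
                          warmup: int = WARMUP_STEPS, total: int = TOTAL_STEPS) -> float:
    """Learning rate at optimizer step `step` (ASSUMED linear warmup + half-cosine decay)."""
    if step < warmup:
        # Linear ramp from 0 up to base_lr over the warmup steps.
        return base_lr * step / max(1, warmup)
    # Fraction of the post-warmup phase completed, in [0, 1].
    progress = (step - warmup) / max(1, total - warmup)
    # Half cosine: base_lr at progress 0, 0 at progress 1.
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


def effective_batch_size(micro_batch: int, grad_accum: int, num_devices: int = 1) -> int:
    """Samples per optimizer update; num_devices=1 is an ASSUMPTION."""
    return micro_batch * grad_accum * num_devices


if __name__ == "__main__":
    print("total_train_batch_size:", effective_batch_size(2, 4))  # 8
    for step in (0, 5, 10, 15, 20, 25):
        print(step, f"{cosine_lr_with_warmup(step):.2e}")
```

With only 25 steps, 10 of them warmup, the peak learning rate is held for a single step before the decay begins.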

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:------:|:----:|:---------------:|
| 0.0 | 0.0010 | 1 | nan |
| 0.0 | 0.0049 | 5 | nan |
| 0.0 | 0.0098 | 10 | nan |
| 0.0 | 0.0147 | 15 | nan |
| 0.0 | 0.0196 | 20 | nan |

### Framework versions

- PEFT 0.13.2

- Transformers 4.46.0

- Pytorch 2.5.0+cu124

- Datasets 3.0.1

- Tokenizers 0.20.1 |

furrutiav/modernbert_mixtral_nllfg_vanilla_qnli_none_naive | furrutiav | "2025-03-23T02:48:24Z" | 0 | 0 | transformers | [

"transformers",

"safetensors",

"modernbert",

"feature-extraction",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | feature-extraction | "2025-03-23T02:47:39Z" | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]
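The calculator referenced above rests, roughly, on the estimate used by Lacoste et al. (2019): energy drawn by the hardware multiplied by the carbon intensity of the local grid. A minimal sketch under that assumption follows; `estimate_emissions_g` is a hypothetical helper, and the example inputs are illustrative placeholders, not measurements for this model.

```python
def estimate_emissions_g(power_watts: float, hours: float,
                         grid_intensity_g_per_kwh: float) -> float:
    """Rough CO2eq estimate in grams: energy (kWh) x grid carbon intensity (gCO2eq/kWh)."""
    energy_kwh = power_watts * hours / 1000.0  # W * h -> kWh
    return energy_kwh * grid_intensity_g_per_kwh


if __name__ == "__main__":
    # HYPOTHETICAL inputs: one 300 W accelerator for 2 hours on a 400 gCO2eq/kWh grid.
    print(f"{estimate_emissions_g(300, 2, 400):.1f} g CO2eq")
```

Real figures would replace the placeholders with the hardware's actual power draw, the training hours and the compute region's grid intensity listed above.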

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]