content

stringlengths 0

14.9M

| filename

stringlengths 44

136

|

|---|---|

#' Explain Multivariate Adaptive Regression Splines Using SHAP Values

#'

#' Explains the predictions of a MARS (Multivariate Adaptive Regression Splines)

#' model using SHAP (Shapley Additive Explanations) values. It utilizes the

#' DALEXtra and DALEX packages to provide SHAP-based explanations for the

#' specified model.

#'

#' @import earth

#' @import DALEX

#' @import DALEXtra

#' @import Formula

#' @import parsnip

#' @import plotmo

#' @import plotrix

#' @import recipes

#' @import rsample

#' @import TeachingDemos

#' @import parsnip

#' @import recipes

#' @import rsample

#' @import workflows

#' @importFrom dplyr mutate_if

#' @importFrom dplyr select

#' @importFrom stats as.formula

#'

#' @param vip_featured A character value

#' @param hiv_data A data frame

#' @param nt A numeric value

#' @param pd A numeric value

#' @param pru A character value

#' @param vip_train A data frame

#' @param vip_new A numeric vector

#' @param orderings A numeric value

#'

#' @return A data frame

#' @export

#'

#' @examples

#' library(dplyr)

#' library(rsample)

#' library(Formula)

#' library(plotmo)

#' library(plotrix)

#' library(TeachingDemos)

#' cd_2019 <- c(824, 169, 342, 423, 441, 507, 559,

#' 173, 764, 780, 244, 527, 417, 800,

#' 602, 494, 345, 780, 780, 527, 556,

#' 559, 238, 288, 244, 353, 169, 556,

#' 824, 169, 342, 423, 441, 507, 559)

#' vl_2019 <- c(40, 11388, 38961, 40, 75, 4095, 103,

#' 11388, 46, 103, 11388, 40, 0, 11388,

#' 0, 4095, 40, 93, 49, 49, 49,

#' 4095, 6837, 38961, 38961, 0, 0, 93,

#' 40, 11388, 38961, 40, 75, 4095, 103)

#' cd_2021 <- c(992, 275, 331, 454, 479, 553, 496,

#' 230, 605, 432, 170, 670, 238, 238,

#' 634, 422, 429, 513, 327, 465, 479,

#' 661, 382, 364, 109, 398, 209, 1960,

#' 992, 275, 331, 454, 479, 553, 496)

#' vl_2021 <- c(80, 1690, 5113, 71, 289, 3063, 0,

#' 262, 0, 15089, 13016, 1513, 60, 60,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 84, 292, 414, 26176, 62, 126, 93,

#' 80, 1690, 5113, 71, 289, 3063, 0)

#' cd_2022 <- c(700, 127, 127, 547, 547, 547, 777,

#' 149, 628, 614, 253, 918, 326, 326,

#' 574, 361, 253, 726, 659, 596, 427,

#' 447, 326, 253, 248, 326, 260, 918,

#' 700, 127, 127, 547, 547, 547, 777)

#' vl_2022 <- c(0, 0, 53250, 0, 40, 1901, 0,

#' 955, 0, 0, 0, 0, 40, 0,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 0, 23601, 0, 40, 0, 0, 0,

#' 0, 0, 0, 0, 0, 0, 0)

#' x <- cbind(cd_2019, vl_2019, cd_2021, vl_2021, cd_2022, vl_2022) |>

#' as.data.frame()

#' set.seed(123)

#' hi_data <- rsample::initial_split(x)

#' set.seed(123)

#' hiv_data <- hi_data |>

#' rsample::training()

#' nt <- 3

#' pd <- 1

#' pru <- "none"

#' vip_featured <- c("cd_2022")

#' vip_features <- c("cd_2019", "vl_2019", "cd_2021", "vl_2021", "vl_2022")

#' set.seed(123)

#' vi_train <- rsample::initial_split(x)

#' set.seed(123)

#' vip_train <- vi_train |>

#' rsample::training() |>

#' dplyr::select(rsample::all_of(vip_features))

#' vip_new <- vip_train[1,]

#' orderings <- 20

#' viralx_mars_shap(vip_featured, hiv_data, nt, pd, pru, vip_train, vip_new,orderings)

viralx_mars_shap <- function(vip_featured, hiv_data, nt, pd, pru, vip_train, vip_new, orderings) {

DALEXtra::explain_tidymodels(workflows::workflow() |>

workflows::add_recipe(recipes::recipe(stats::as.formula(paste(vip_featured,"~.")), data = hiv_data)) |>

workflows::add_model(parsnip::mars(num_terms = nt, prod_degree = pd, prune_method = pru) |>

parsnip::set_engine("earth") |>

parsnip::set_mode("regression")) |>

parsnip::fit(data = hiv_data), data = vip_train,

y = vip_featured,

label = "mars",

verbose = FALSE) |>

DALEX::predict_parts(vip_new, type ="shap", B = orderings)

}

|

/scratch/gouwar.j/cran-all/cranData/viralx/R/viralx_mars_shap.R

|

#' Visualize SHAP Values for Multivariate Adaptive Regression Splines Model

#'

#' Visualizes SHAP (Shapley Additive Explanations) values for a MARS

#' (Multivariate Adaptive Regression Splines) model by employing the DALEXtra

#' and DALEX packages to provide visual insights into the impact of a specified

#' variable on the model's predictions.

#'

#' @import DALEX

#' @import DALEXtra

#' @import Formula

#' @import parsnip

#' @import plotmo

#' @import plotrix

#' @import recipes

#' @import rsample

#' @import TeachingDemos

#' @import vdiffr

#' @import workflows

#' @importFrom stats as.formula

#'

#' @param vip_featured A character value

#' @param hiv_data A data frame

#' @param nt A numeric value

#' @param pd A numeric value

#' @param pru A character value

#' @param vip_train A data frame

#' @param vip_new A numeric vector

#' @param orderings A numeric value

#'

#' @return A ggplot object

#' @export

#'

#' @examples

#' library(dplyr)

#' library(rsample)

#' library(Formula)

#' library(plotmo)

#' library(plotrix)

#' library(TeachingDemos)

#' cd_2019 <- c(824, 169, 342, 423, 441, 507, 559,

#' 173, 764, 780, 244, 527, 417, 800,

#' 602, 494, 345, 780, 780, 527, 556,

#' 559, 238, 288, 244, 353, 169, 556,

#' 824, 169, 342, 423, 441, 507, 559)

#' vl_2019 <- c(40, 11388, 38961, 40, 75, 4095, 103,

#' 11388, 46, 103, 11388, 40, 0, 11388,

#' 0, 4095, 40, 93, 49, 49, 49,

#' 4095, 6837, 38961, 38961, 0, 0, 93,

#' 40, 11388, 38961, 40, 75, 4095, 103)

#' cd_2021 <- c(992, 275, 331, 454, 479, 553, 496,

#' 230, 605, 432, 170, 670, 238, 238,

#' 634, 422, 429, 513, 327, 465, 479,

#' 661, 382, 364, 109, 398, 209, 1960,

#' 992, 275, 331, 454, 479, 553, 496)

#' vl_2021 <- c(80, 1690, 5113, 71, 289, 3063, 0,

#' 262, 0, 15089, 13016, 1513, 60, 60,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 84, 292, 414, 26176, 62, 126, 93,

#' 80, 1690, 5113, 71, 289, 3063, 0)

#' cd_2022 <- c(700, 127, 127, 547, 547, 547, 777,

#' 149, 628, 614, 253, 918, 326, 326,

#' 574, 361, 253, 726, 659, 596, 427,

#' 447, 326, 253, 248, 326, 260, 918,

#' 700, 127, 127, 547, 547, 547, 777)

#' vl_2022 <- c(0, 0, 53250, 0, 40, 1901, 0,

#' 955, 0, 0, 0, 0, 40, 0,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 0, 23601, 0, 40, 0, 0, 0,

#' 0, 0, 0, 0, 0, 0, 0)

#' x <- cbind(cd_2019, vl_2019, cd_2021, vl_2021, cd_2022, vl_2022) |>

#' as.data.frame()

#' set.seed(123)

#' hi_data <- rsample::initial_split(x)

#' set.seed(123)

#' hiv_data <- hi_data |>

#' rsample::training()

#' nt <- 3

#' pd <- 1

#' pru <- "none"

#' vip_featured <- c("cd_2022")

#' vip_features <- c("cd_2019", "vl_2019", "cd_2021", "vl_2021", "vl_2022")

#' set.seed(123)

#' vi_train <- rsample::initial_split(x)

#' set.seed(123)

#' vip_train <- vi_train |>

#' rsample::training() |>

#' dplyr::select(rsample::all_of(vip_features))

#' vip_new <- vip_train[1,]

#' orderings <- 20

#' viralx_mars_vis(vip_featured, hiv_data, nt, pd, pru, vip_train, vip_new, orderings)

viralx_mars_vis <- function(vip_featured, hiv_data, nt, pd, pru, vip_train, vip_new, orderings) {

DALEXtra::explain_tidymodels(workflows::workflow() |>

workflows::add_recipe(recipes::recipe(stats::as.formula(paste(vip_featured,"~.")), data = hiv_data)) |>

workflows::add_model(parsnip::mars(num_terms = nt, prod_degree = pd, prune_method = pru) |>

parsnip::set_engine("earth") |>

parsnip::set_mode("regression")) |>

parsnip::fit(data = hiv_data), data = vip_train,

y = vip_featured,

label = "mars",

verbose = FALSE) |>

DALEX::predict_parts(vip_new, type ="shap", B = orderings) |>

plot()

}

|

/scratch/gouwar.j/cran-all/cranData/viralx/R/viralx_mars_vis.R

|

#' Explain Neural Network Regression Model

#'

#' Explains the predictions of a neural network regression model for viral load

#' or CD4 counts using the DALEX and DALEXtra tools

#'

#' @import DALEX

#' @import DALEXtra

#' @import earth

#' @import parsnip

#' @import recipes

#' @import rsample

#' @import vdiffr

#' @import workflows

#' @importFrom dplyr mutate_if

#' @importFrom dplyr select

#' @importFrom stats as.formula

#'

#' @param vip_featured A character value

#' @param hiv_data A data frame

#' @param hu A numeric value

#' @param plty A numeric value

#' @param epo A numeric value

#' @param vip_train A data frame

#' @param vip_new A numeric vector

#'

#' @return A data frame

#' @export

#'

#' @examples

#' library(dplyr)

#' library(rsample)

#' cd_2019 <- c(824, 169, 342, 423, 441, 507, 559,

#' 173, 764, 780, 244, 527, 417, 800,

#' 602, 494, 345, 780, 780, 527, 556,

#' 559, 238, 288, 244, 353, 169, 556,

#' 824, 169, 342, 423, 441, 507, 559)

#' vl_2019 <- c(40, 11388, 38961, 40, 75, 4095, 103,

#' 11388, 46, 103, 11388, 40, 0, 11388,

#' 0, 4095, 40, 93, 49, 49, 49,

#' 4095, 6837, 38961, 38961, 0, 0, 93,

#' 40, 11388, 38961, 40, 75, 4095, 103)

#' cd_2021 <- c(992, 275, 331, 454, 479, 553, 496,

#' 230, 605, 432, 170, 670, 238, 238,

#' 634, 422, 429, 513, 327, 465, 479,

#' 661, 382, 364, 109, 398, 209, 1960,

#' 992, 275, 331, 454, 479, 553, 496)

#' vl_2021 <- c(80, 1690, 5113, 71, 289, 3063, 0,

#' 262, 0, 15089, 13016, 1513, 60, 60,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 84, 292, 414, 26176, 62, 126, 93,

#' 80, 1690, 5113, 71, 289, 3063, 0)

#' cd_2022 <- c(700, 127, 127, 547, 547, 547, 777,

#' 149, 628, 614, 253, 918, 326, 326,

#' 574, 361, 253, 726, 659, 596, 427,

#' 447, 326, 253, 248, 326, 260, 918,

#' 700, 127, 127, 547, 547, 547, 777)

#' vl_2022 <- c(0, 0, 53250, 0, 40, 1901, 0,

#' 955, 0, 0, 0, 0, 40, 0,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 0, 23601, 0, 40, 0, 0, 0,

#' 0, 0, 0, 0, 0, 0, 0)

#' x <- cbind(cd_2019, vl_2019, cd_2021, vl_2021, cd_2022, vl_2022) |>

#' as.data.frame()

#' set.seed(123)

#' hi_data <- rsample::initial_split(x)

#' set.seed(123)

#' hiv_data <- hi_data |>

#' rsample::training()

#' hu <- 5

#' plty <- 1.131656e-09

#' epo <- 176

#' vip_featured <- c("cd_2022")

#' vip_features <- c("cd_2019", "vl_2019", "cd_2021", "vl_2021", "vl_2022")

#' set.seed(123)

#' vi_train <- rsample::initial_split(x)

#' set.seed(123)

#' vip_train <- vi_train |>

#' rsample::training() |>

#' dplyr::select(rsample::all_of(vip_features))

#' vip_new <- vip_train[1,]

#' viralx_nn(vip_featured, hiv_data, hu, plty, epo, vip_train, vip_new)

viralx_nn <- function(vip_featured, hiv_data, hu, plty, epo, vip_train, vip_new) {

DALEXtra::explain_tidymodels(workflows::workflow() |>

workflows::add_recipe(recipes::recipe(stats::as.formula(paste(vip_featured,"~.")), data = hiv_data) |>

recipes::step_normalize(recipes::all_predictors())) |>

workflows::add_model(parsnip::mlp(hidden_units = hu, penalty = plty, epochs = epo) |>

parsnip::set_engine("nnet", MaxNWts = 2600) |>

parsnip::set_mode("regression")) |>

parsnip::fit(data = hiv_data),

data = vip_train,

y = vip_featured,

label = "nn + normalized",

verbose = FALSE) |>

DALEX::predict_parts(vip_new) |>

as.data.frame() |>

dplyr::select(1,2) |>

dplyr::mutate_if(is.numeric, round, digits = 2)

}

|

/scratch/gouwar.j/cran-all/cranData/viralx/R/viralx_nn.R

|

#' Global Explainers for Neural Network Models

#'

#' The viralx_nn_glob function is designed to provide global explanations for

#' the specified neural network model.

#'

#' @param vip_featured A character value specifying the variable of interest for which you want to explain predictions.

#' @param hiv_data A data frame containing the dataset used for training the neural network model.

#' @param hu A numeric value representing the number of hidden units in the neural network.

#' @param plty A numeric value representing the penalty term for the neural network model.

#' @param epo A numeric value specifying the number of epochs for training the neural network.

#' @param vip_train A data frame containing the training data used for generating global explanations.

#' @param v_train A numeric vector representing the target variable for the global explanations.

#'

#' @return A list containing global explanations for the specified neural network model.

#' @export

#'

#' @examples

#' library(dplyr)

#' library(rsample)

#' cd_2019 <- c(824, 169, 342, 423, 441, 507, 559,

#' 173, 764, 780, 244, 527, 417, 800,

#' 602, 494, 345, 780, 780, 527, 556,

#' 559, 238, 288, 244, 353, 169, 556,

#' 824, 169, 342, 423, 441, 507, 559)

#' vl_2019 <- c(40, 11388, 38961, 40, 75, 4095, 103,

#' 11388, 46, 103, 11388, 40, 0, 11388,

#' 0, 4095, 40, 93, 49, 49, 49,

#' 4095, 6837, 38961, 38961, 0, 0, 93,

#' 40, 11388, 38961, 40, 75, 4095, 103)

#' cd_2021 <- c(992, 275, 331, 454, 479, 553, 496,

#' 230, 605, 432, 170, 670, 238, 238,

#' 634, 422, 429, 513, 327, 465, 479,

#' 661, 382, 364, 109, 398, 209, 1960,

#' 992, 275, 331, 454, 479, 553, 496)

#' vl_2021 <- c(80, 1690, 5113, 71, 289, 3063, 0,

#' 262, 0, 15089, 13016, 1513, 60, 60,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 84, 292, 414, 26176, 62, 126, 93,

#' 80, 1690, 5113, 71, 289, 3063, 0)

#' cd_2022 <- c(700, 127, 127, 547, 547, 547, 777,

#' 149, 628, 614, 253, 918, 326, 326,

#' 574, 361, 253, 726, 659, 596, 427,

#' 447, 326, 253, 248, 326, 260, 918,

#' 700, 127, 127, 547, 547, 547, 777)

#' vl_2022 <- c(0, 0, 53250, 0, 40, 1901, 0,

#' 955, 0, 0, 0, 0, 40, 0,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 0, 23601, 0, 40, 0, 0, 0,

#' 0, 0, 0, 0, 0, 0, 0)

#' x <- cbind(cd_2019, vl_2019, cd_2021, vl_2021, cd_2022, vl_2022) |>

#' as.data.frame()

#' set.seed(123)

#' hi_data <- rsample::initial_split(x)

#' set.seed(123)

#' hiv_data <- hi_data |>

#' rsample::training()

#' hu <- 5

#' plty <- 1.131656e-09

#' epo <- 176

#' vip_featured <- c("cd_2022")

#' vip_features <- c("cd_2019", "vl_2019", "cd_2021", "vl_2021", "vl_2022")

#' set.seed(123)

#' vi_train <- rsample::initial_split(x)

#' set.seed(123)

#' vip_train <- vi_train |>

#' rsample::training() |>

#' dplyr::select(rsample::all_of(vip_features))

#' v_train <- vi_train |>

#' rsample::training() |>

#' dplyr::select(rsample::all_of(vip_featured))

#' viralx_nn_glob(vip_featured, hiv_data, hu, plty, epo, vip_train, v_train)

viralx_nn_glob <- function(vip_featured, hiv_data, hu, plty, epo, vip_train, v_train) {

DALEXtra::explain_tidymodels(workflows::workflow() |>

workflows::add_recipe(recipes::recipe(stats::as.formula(paste(vip_featured,"~.")), data = hiv_data) |>

recipes::step_normalize(recipes::all_predictors())) |>

workflows::add_model(parsnip::mlp(hidden_units = hu, penalty = plty, epochs = epo) |>

parsnip::set_engine("nnet", MaxNWts = 2600) |>

parsnip::set_mode("regression")) |> parsnip::fit(data = hiv_data),

data = vip_train,

y = v_train,

label = "nn + normalized",

verbose = FALSE) |>

DALEX::model_parts()

}

|

/scratch/gouwar.j/cran-all/cranData/viralx/R/viralx_nn_glob.R

|

#' Explain Neural Network Model Using SHAP Values

#'

#' Explains the predictions of a neural network model using SHAP (Shapley

#' Additive Explanations) values. It utilizes the DALEXtra and DALEX packages to

#' provide SHAP-based explanations for the specified model.

#'

#' @import DALEX

#' @import DALEXtra

#' @import parsnip

#' @import recipes

#' @import rsample

#' @import workflows

#' @importFrom dplyr mutate_if

#' @importFrom dplyr select

#' @importFrom stats as.formula

#'

#' @param vip_featured A character value

#' @param hiv_data A data frame

#' @param hu A numeric value

#' @param plty A numeric value

#' @param epo A numeric value

#' @param vip_train A data frame

#' @param vip_new A numeric vector

#' @param orderings A numeric value

#'

#' @return A data frame

#' @export

#'

#' @examples

#' library(dplyr)

#' library(rsample)

#' cd_2019 <- c(824, 169, 342, 423, 441, 507, 559,

#' 173, 764, 780, 244, 527, 417, 800,

#' 602, 494, 345, 780, 780, 527, 556,

#' 559, 238, 288, 244, 353, 169, 556,

#' 824, 169, 342, 423, 441, 507, 559)

#' vl_2019 <- c(40, 11388, 38961, 40, 75, 4095, 103,

#' 11388, 46, 103, 11388, 40, 0, 11388,

#' 0, 4095, 40, 93, 49, 49, 49,

#' 4095, 6837, 38961, 38961, 0, 0, 93,

#' 40, 11388, 38961, 40, 75, 4095, 103)

#' cd_2021 <- c(992, 275, 331, 454, 479, 553, 496,

#' 230, 605, 432, 170, 670, 238, 238,

#' 634, 422, 429, 513, 327, 465, 479,

#' 661, 382, 364, 109, 398, 209, 1960,

#' 992, 275, 331, 454, 479, 553, 496)

#' vl_2021 <- c(80, 1690, 5113, 71, 289, 3063, 0,

#' 262, 0, 15089, 13016, 1513, 60, 60,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 84, 292, 414, 26176, 62, 126, 93,

#' 80, 1690, 5113, 71, 289, 3063, 0)

#' cd_2022 <- c(700, 127, 127, 547, 547, 547, 777,

#' 149, 628, 614, 253, 918, 326, 326,

#' 574, 361, 253, 726, 659, 596, 427,

#' 447, 326, 253, 248, 326, 260, 918,

#' 700, 127, 127, 547, 547, 547, 777)

#' vl_2022 <- c(0, 0, 53250, 0, 40, 1901, 0,

#' 955, 0, 0, 0, 0, 40, 0,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 0, 23601, 0, 40, 0, 0, 0,

#' 0, 0, 0, 0, 0, 0, 0)

#' x <- cbind(cd_2019, vl_2019, cd_2021, vl_2021, cd_2022, vl_2022) |>

#' as.data.frame()

#' set.seed(123)

#' hi_data <- rsample::initial_split(x)

#' set.seed(123)

#' hiv_data <- hi_data |>

#' rsample::training()

#' hu <- 5

#' plty <- 1.131656e-09

#' epo <- 176

#' vip_featured <- c("cd_2022")

#' vip_features <- c("cd_2019", "vl_2019", "cd_2021", "vl_2021", "vl_2022")

#' set.seed(123)

#' vi_train <- rsample::initial_split(x)

#' set.seed(123)

#' vip_train <- vi_train |>

#' rsample::training() |>

#' dplyr::select(rsample::all_of(vip_features))

#' vip_new <- vip_train[1,]

#' orderings <- 20

#' viralx_nn_shap(vip_featured, hiv_data, hu, plty, epo, vip_train, vip_new, orderings)

viralx_nn_shap <- function(vip_featured, hiv_data, hu, plty, epo, vip_train, vip_new, orderings) {

DALEXtra::explain_tidymodels(workflows::workflow() |>

workflows::add_recipe(recipes::recipe(stats::as.formula(paste(vip_featured,"~.")), data = hiv_data) |>

recipes::step_normalize(recipes::all_predictors())) |>

workflows::add_model(parsnip::mlp(hidden_units = hu, penalty = plty, epochs = epo) |>

parsnip::set_engine("nnet", MaxNWts = 2600) |>

parsnip::set_mode("regression")) |> parsnip::fit(data = hiv_data), data = vip_train,

y = vip_featured,

label = "nn + normalized",

verbose = FALSE) |>

DALEX::predict_parts(vip_new, type ="shap", B = orderings)

}

|

/scratch/gouwar.j/cran-all/cranData/viralx/R/viralx_nn_shap.R

|

#' Visualize SHAP Values for Neural Network Model

#'

#' Visualizes SHAP (Shapley Additive Explanations) values for a neural network

#' model by employing the DALEXtra and DALEX packages to provide visual insights

#' into the impact of a specified variable on the model's predictions.

#'

#' @import DALEX

#' @import DALEXtra

#' @import parsnip

#' @import recipes

#' @import rsample

#' @import vdiffr

#' @import workflows

#' @importFrom stats as.formula

#'

#' @param vip_featured A character value

#' @param hiv_data A data frame

#' @param hu A numeric value

#' @param plty A numeric value

#' @param epo A numeric value

#' @param vip_train A data frame

#' @param vip_new A numeric vector

#' @param orderings A numeric value

#'

#' @return A ggplot object

#' @export

#'

#' @examples

#' library(dplyr)

#' library(rsample)

#' cd_2019 <- c(824, 169, 342, 423, 441, 507, 559,

#' 173, 764, 780, 244, 527, 417, 800,

#' 602, 494, 345, 780, 780, 527, 556,

#' 559, 238, 288, 244, 353, 169, 556,

#' 824, 169, 342, 423, 441, 507, 559)

#' vl_2019 <- c(40, 11388, 38961, 40, 75, 4095, 103,

#' 11388, 46, 103, 11388, 40, 0, 11388,

#' 0, 4095, 40, 93, 49, 49, 49,

#' 4095, 6837, 38961, 38961, 0, 0, 93,

#' 40, 11388, 38961, 40, 75, 4095, 103)

#' cd_2021 <- c(992, 275, 331, 454, 479, 553, 496,

#' 230, 605, 432, 170, 670, 238, 238,

#' 634, 422, 429, 513, 327, 465, 479,

#' 661, 382, 364, 109, 398, 209, 1960,

#' 992, 275, 331, 454, 479, 553, 496)

#' vl_2021 <- c(80, 1690, 5113, 71, 289, 3063, 0,

#' 262, 0, 15089, 13016, 1513, 60, 60,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 84, 292, 414, 26176, 62, 126, 93,

#' 80, 1690, 5113, 71, 289, 3063, 0)

#' cd_2022 <- c(700, 127, 127, 547, 547, 547, 777,

#' 149, 628, 614, 253, 918, 326, 326,

#' 574, 361, 253, 726, 659, 596, 427,

#' 447, 326, 253, 248, 326, 260, 918,

#' 700, 127, 127, 547, 547, 547, 777)

#' vl_2022 <- c(0, 0, 53250, 0, 40, 1901, 0,

#' 955, 0, 0, 0, 0, 40, 0,

#' 49248, 159308, 56, 0, 516675, 49, 237,

#' 0, 23601, 0, 40, 0, 0, 0,

#' 0, 0, 0, 0, 0, 0, 0)

#' x <- cbind(cd_2019, vl_2019, cd_2021, vl_2021, cd_2022, vl_2022) |>

#' as.data.frame()

#' set.seed(123)

#' hi_data <- rsample::initial_split(x)

#' set.seed(123)

#' hiv_data <- hi_data |>

#' rsample::training()

#' hu <- 5

#' plty <- 1.131656e-09

#' epo <- 176

#' vip_featured <- c("cd_2022")

#' vip_features <- c("cd_2019", "vl_2019", "cd_2021", "vl_2021", "vl_2022")

#' set.seed(123)

#' vi_train <- rsample::initial_split(x)

#' set.seed(123)

#' vip_train <- vi_train |>

#' rsample::training() |>

#' dplyr::select(rsample::all_of(vip_features))

#' vip_new <- vip_train[1,]

#' orderings <- 20

#' viralx_nn_vis(vip_featured, hiv_data, hu, plty, epo, vip_train, vip_new, orderings)

viralx_nn_vis <- function(vip_featured, hiv_data, hu, plty, epo, vip_train, vip_new, orderings) {

DALEXtra::explain_tidymodels(workflows::workflow() |>

workflows::add_recipe(recipes::recipe(stats::as.formula(paste(vip_featured,"~.")), data = hiv_data) |>

recipes::step_normalize(recipes::all_predictors())) |>

workflows::add_model(parsnip::mlp(hidden_units = hu, penalty = plty, epochs = epo) |>

parsnip::set_engine("nnet", MaxNWts = 2600) |>

parsnip::set_mode("regression")) |>

parsnip::fit(data = hiv_data), data = vip_train,

y = vip_featured,

label = "nn + normalized",

verbose = FALSE) |>

DALEX::predict_parts(vip_new, type ="shap", B = orderings) |>

plot()

}

|

/scratch/gouwar.j/cran-all/cranData/viralx/R/viralx_nn_vis.R

|

#' @title Viridis Color Palettes

#'

#' @description A wrapper function around \code{\link[viridisLite]{viridis}} to

#' turn it into a palette function compatible with

#' \code{\link[ggplot2]{discrete_scale}}.

#'

#' @details See \code{\link[viridisLite]{viridis}} and

#' \code{\link[viridisLite]{viridis.map}} for more information on the color

#' palettes.

#'

#' @param alpha The alpha transparency, a number in [0,1], see argument alpha in

#' \code{\link[grDevices]{hsv}}.

#'

#' @param begin The (corrected) hue in [0,1] at which the color map begins.

#'

#' @param end The (corrected) hue in [0,1] at which the color map ends.

#'

#' @param direction Sets the order of colors in the scale. If 1, the default,

#' colors are ordered from darkest to lightest. If -1, the order of colors is

#' reversed.

#'

#' @param option A character string indicating the color map option to use.

#' Eight options are available:

#' \itemize{

#' \item "magma" (or "A")

#' \item "inferno" (or "B")

#' \item "plasma" (or "C")

#' \item "viridis" (or "D")

#' \item "cividis" (or "E")

#' \item "rocket" (or "F")

#' \item "mako" (or "G")

#' \item "turbo" (or "H")

#' }

#'

#' @author Bob Rudis: \email{bob@@rud.is}

#' @author Simon Garnier: \email{garnier@@njit.edu}

#'

#' @examples

#' library(scales)

#' show_col(viridis_pal()(12))

#'

#' @importFrom viridisLite viridis

#'

#' @export

viridis_pal <- function(alpha = 1, begin = 0, end = 1, direction = 1, option= "D") {

function(n) {

viridisLite::viridis(n, alpha, begin, end, direction, option)

}

}

#' @title Viridis Color Scales for ggplot2

#'

#' @description Scale functions (fill and colour/color) for

#' \code{\link[ggplot2]{ggplot2}}.

#'

#' For \code{discrete == FALSE} (the default) all other arguments are as to

#' \code{\link[ggplot2]{scale_fill_gradientn}} or

#' \code{\link[ggplot2]{scale_color_gradientn}}. Otherwise the function will

#' return a \code{\link[ggplot2]{discrete_scale}} with the plot-computed number

#' of colors.

#'

#' See \code{\link[viridisLite]{viridis}} and

#' \code{\link[viridisLite]{viridis.map}} for more information on the color

#' palettes.

#'

#' @param ... Parameters to \code{\link[ggplot2]{discrete_scale}} if

#' \code{discrete == TRUE}, or \code{\link[ggplot2]{scale_fill_gradientn}}/

#' \code{\link[ggplot2]{scale_color_gradientn}} if \code{discrete == FALSE}.

#'

#' @param alpha The alpha transparency, a number in [0,1], see argument alpha in

#' \code{\link[grDevices]{hsv}}.

#'

#' @param begin The (corrected) hue in [0,1] at which the color map begins.

#'

#' @param end The (corrected) hue in [0,1] at which the color map ends.

#'

#' @param direction Sets the order of colors in the scale. If 1, the default,

#' colors are as output by \code{\link[viridis]{viridis_pal}}. If -1, the order

#' of colors is reversed.

#'

#' @param discrete Generate a discrete palette? (default: \code{FALSE} -

#' generate continuous palette).

#'

#' @param option A character string indicating the color map option to use.

#' Eight options are available:

#' \itemize{

#' \item "magma" (or "A")

#' \item "inferno" (or "B")

#' \item "plasma" (or "C")

#' \item "viridis" (or "D")

#' \item "cividis" (or "E")

#' \item "rocket" (or "F")

#' \item "mako" (or "G")

#' \item "turbo" (or "H")

#' }

#'

#' @param aesthetics Character string or vector of character strings listing the

#' name(s) of the aesthetic(s) that this scale works with. This can be useful,

#' for example, to apply colour settings to the colour and fill aesthetics at

#' the same time, via aesthetics = c("colour", "fill").

#'

#' @rdname scale_viridis

#'

#' @author Noam Ross \email{noam.ross@@gmail.com}

#' @author Bob Rudis \email{bob@@rud.is}

#' @author Simon Garnier: \email{garnier@@njit.edu}

#'

#' @importFrom ggplot2 scale_fill_gradientn scale_color_gradientn discrete_scale

#'

#' @importFrom gridExtra grid.arrange

#'

#' @examples

#' library(ggplot2)

#'

#' # Ripped from the pages of ggplot2

#' p <- ggplot(mtcars, aes(wt, mpg))

#' p + geom_point(size = 4, aes(colour = factor(cyl))) +

#' scale_color_viridis(discrete = TRUE) +

#' theme_bw()

#'

#' # Ripped from the pages of ggplot2

#' dsub <- subset(diamonds, x > 5 & x < 6 & y > 5 & y < 6)

#' dsub$diff <- with(dsub, sqrt(abs(x - y)) * sign(x - y))

#' d <- ggplot(dsub, aes(x, y, colour = diff)) + geom_point()

#' d + scale_color_viridis() + theme_bw()

#'

#'

#' # From the main viridis example

#' dat <- data.frame(x = rnorm(10000), y = rnorm(10000))

#'

#' ggplot(dat, aes(x = x, y = y)) +

#' geom_hex() + coord_fixed() +

#' scale_fill_viridis() + theme_bw()

#'

#' library(ggplot2)

#' library(MASS)

#' library(gridExtra)

#'

#' data("geyser", package="MASS")

#'

#' ggplot(geyser, aes(x = duration, y = waiting)) +

#' xlim(0.5, 6) + ylim(40, 110) +

#' stat_density2d(aes(fill = ..level..), geom = "polygon") +

#' theme_bw() +

#' theme(panel.grid = element_blank()) -> gg

#'

#' grid.arrange(

#' gg + scale_fill_viridis(option = "A") + labs(x = "Viridis A", y = NULL),

#' gg + scale_fill_viridis(option = "B") + labs(x = "Viridis B", y = NULL),

#' gg + scale_fill_viridis(option = "C") + labs(x = "Viridis C", y = NULL),

#' gg + scale_fill_viridis(option = "D") + labs(x = "Viridis D", y = NULL),

#' gg + scale_fill_viridis(option = "E") + labs(x = "Viridis E", y = NULL),

#' gg + scale_fill_viridis(option = "F") + labs(x = "Viridis F", y = NULL),

#' gg + scale_fill_viridis(option = "G") + labs(x = "Viridis G", y = NULL),

#' gg + scale_fill_viridis(option = "H") + labs(x = "Viridis H", y = NULL),

#' ncol = 4, nrow = 2

#' )

#'

#' @export

scale_fill_viridis <- function(..., alpha = 1, begin = 0, end = 1, direction = 1,

discrete = FALSE, option = "D", aesthetics = "fill") {

if (discrete) {

discrete_scale(aesthetics, "viridis", viridis_pal(alpha, begin, end, direction, option), ...)

} else {

scale_fill_gradientn(colours = viridisLite::viridis(256, alpha, begin, end, direction, option), aesthetics = aesthetics, ...)

}

}

#' @rdname scale_viridis

#' @importFrom ggplot2 scale_fill_gradientn scale_color_gradientn discrete_scale

#' @export

scale_color_viridis <- function(..., alpha = 1, begin = 0, end = 1, direction = 1,

discrete = FALSE, option = "D", aesthetics = "color") {

if (discrete) {

discrete_scale(aesthetics, "viridis", viridis_pal(alpha, begin, end, direction, option), ...)

} else {

scale_color_gradientn(colours = viridisLite::viridis(256, alpha, begin, end, direction, option), aesthetics = aesthetics, ...)

}

}

#' @rdname scale_viridis

#' @aliases scale_color_viridis

#' @export

scale_colour_viridis <- scale_color_viridis

#' @importFrom viridisLite viridis

#' @export

viridisLite::viridis

#' @importFrom viridisLite inferno

#' @export

viridisLite::inferno

#' @importFrom viridisLite magma

#' @export

viridisLite::magma

#' @importFrom viridisLite plasma

#' @export

viridisLite::plasma

#' @importFrom viridisLite cividis

#' @export

viridisLite::cividis

#' @importFrom viridisLite mako

#' @export

viridisLite::mako

#' @importFrom viridisLite rocket

#' @export

viridisLite::rocket

#' @importFrom viridisLite turbo

#' @export

viridisLite::turbo

#' @importFrom viridisLite viridis.map

#' @export

viridisLite::viridis.map

#' @title USA Unemployment in 2009

#'

#' @description A data set containing the 2009 unemployment data in the USA by

#' county.

#'

#' @format A data frame with 3218 rows and 8 variables:

#' \describe{

#' \item{id}{the county ID number}

#' \item{state_fips}{the state FIPS number}

#' \item{county_fips}{the county FIPS number}

#' \item{name}{the county name}

#' \item{year}{the year}

#' \item{rate}{the unemployment rate}

#' \item{county}{the county abbreviated name}

#' \item{state}{the state acronym}

#' }

#' @source \url{http://datasets.flowingdata.com/unemployment09.csv}

"unemp"

|

/scratch/gouwar.j/cran-all/cranData/viridis/R/scales.R

|

## ----setup, include=FALSE-----------------------------------------------------

library(viridis)

knitr::opts_chunk$set(echo = TRUE, fig.retina=2, fig.width=7, fig.height=5)

## ----tldr_base, message=FALSE-------------------------------------------------

x <- y <- seq(-8*pi, 8*pi, len = 40)

r <- sqrt(outer(x^2, y^2, "+"))

filled.contour(cos(r^2)*exp(-r/(2*pi)),

axes=FALSE,

color.palette=viridis,

asp=1)

## ----tldr_ggplot, message=FALSE-----------------------------------------------

library(ggplot2)

ggplot(data.frame(x = rnorm(10000), y = rnorm(10000)), aes(x = x, y = y)) +

geom_hex() + coord_fixed() +

scale_fill_viridis() + theme_bw()

## ----for_repeat, include=FALSE------------------------------------------------

n_col <- 128

img <- function(obj, nam) {

image(1:length(obj), 1, as.matrix(1:length(obj)), col=obj,

main = nam, ylab = "", xaxt = "n", yaxt = "n", bty = "n")

}

## ----begin, message=FALSE, include=FALSE--------------------------------------

library(viridis)

library(scales)

library(colorspace)

library(dichromat)

## ----show_scales, echo=FALSE, fig.height=3.575--------------------------------

par(mfrow=c(8, 1), mar=rep(1, 4))

img(rev(viridis(n_col)), "viridis")

img(rev(magma(n_col)), "magma")

img(rev(plasma(n_col)), "plasma")

img(rev(inferno(n_col)), "inferno")

img(rev(cividis(n_col)), "cividis")

img(rev(mako(n_col)), "mako")

img(rev(rocket(n_col)), "rocket")

img(rev(turbo(n_col)), "turbo")

## ----01_normal, echo=FALSE----------------------------------------------------

par(mfrow=c(7, 1), mar=rep(1, 4))

img(rev(rainbow(n_col)), "rainbow")

img(rev(heat.colors(n_col)), "heat")

img(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "ggplot default")

img(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "brewer blues")

img(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "brewer yellow-green-blue")

img(rev(viridis(n_col)), "viridis")

img(rev(magma(n_col)), "magma")

## ----02_deutan, echo=FALSE----------------------------------------------------

par(mfrow=c(7, 1), mar=rep(1, 4))

img(dichromat(rev(rainbow(n_col)), "deutan"), "rainbow")

img(dichromat(rev(heat.colors(n_col)), "deutan"), "heat")

img(dichromat(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "deutan"), "ggplot default")

img(dichromat(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "deutan"), "brewer blues")

img(dichromat(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "deutan"), "brewer yellow-green-blue")

img(dichromat(rev(viridis(n_col)), "deutan"), "viridis")

img(dichromat(rev(magma(n_col)), "deutan"), "magma")

## ----03_protan, echo=FALSE----------------------------------------------------

par(mfrow=c(7, 1), mar=rep(1, 4))

img(dichromat(rev(rainbow(n_col)), "protan"), "rainbow")

img(dichromat(rev(heat.colors(n_col)), "protan"), "heat")

img(dichromat(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "protan"), "ggplot default")

img(dichromat(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "protan"), "brewer blues")

img(dichromat(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "protan"), "brewer yellow-green-blue")

img(dichromat(rev(viridis(n_col)), "protan"), "viridis")

img(dichromat(rev(magma(n_col)), "protan"), "magma")

## ----04_tritan, echo=FALSE----------------------------------------------------

par(mfrow=c(7, 1), mar=rep(1, 4))

img(dichromat(rev(rainbow(n_col)), "tritan"), "rainbow")

img(dichromat(rev(heat.colors(n_col)), "tritan"), "heat")

img(dichromat(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "tritan"), "ggplot default")

img(dichromat(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "tritan"), "brewer blues")

img(dichromat(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "tritan"), "brewer yellow-green-blue")

img(dichromat(rev(viridis(n_col)), "tritan"), "viridis")

img(dichromat(rev(magma(n_col)), "tritan"), "magma")

## ----05_desatureated, echo=FALSE----------------------------------------------

par(mfrow=c(7, 1), mar=rep(1, 4))

img(desaturate(rev(rainbow(n_col))), "rainbow")

img(desaturate(rev(heat.colors(n_col))), "heat")

img(desaturate(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col)))), "ggplot default")

img(desaturate(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col))), "brewer blues")

img(desaturate(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col))), "brewer yellow-green-blue")

img(desaturate(rev(viridis(n_col))), "viridis")

img(desaturate(rev(magma(n_col))), "magma")

## ----tempmap, message=FALSE---------------------------------------------------

library(terra)

library(httr)

par(mfrow=c(1,1), mar=rep(0.5, 4))

temp_raster <- "http://ftp.cpc.ncep.noaa.gov/GIS/GRADS_GIS/GeoTIFF/TEMP/us_tmax/us.tmax_nohads_ll_20150219_float.tif"

try(GET(temp_raster,

write_disk("us.tmax_nohads_ll_20150219_float.tif")), silent=TRUE)

us <- rast("us.tmax_nohads_ll_20150219_float.tif")

us <- project(us, y="+proj=aea +lat_1=29.5 +lat_2=45.5 +lat_0=37.5 +lon_0=-96 +x_0=0 +y_0=0 +ellps=GRS80 +datum=NAD83 +units=m +no_defs")

image(us, col=inferno(256), asp=1, axes=FALSE, xaxs="i", xaxt='n', yaxt='n', ann=FALSE)

## ----ggplot2------------------------------------------------------------------

library(maps)

library(mapproj)

data(unemp, package = "viridis")

county_df <- map_data("county", projection = "albers", parameters = c(39, 45))

names(county_df) <- c("long", "lat", "group", "order", "state_name", "county")

county_df$state <- state.abb[match(county_df$state_name, tolower(state.name))]

county_df$state_name <- NULL

state_df <- map_data("state", projection = "albers", parameters = c(39, 45))

choropleth <- merge(county_df, unemp, by = c("state", "county"))

choropleth <- choropleth[order(choropleth$order), ]

ggplot(choropleth, aes(long, lat, group = group)) +

geom_polygon(aes(fill = rate), colour = alpha("white", 1 / 2), linewidth = 0.2) +

geom_polygon(data = state_df, colour = "white", fill = NA) +

coord_fixed() +

theme_minimal() +

ggtitle("US unemployment rate by county") +

theme(axis.line = element_blank(), axis.text = element_blank(),

axis.ticks = element_blank(), axis.title = element_blank()) +

scale_fill_viridis(option="magma")

## ----discrete-----------------------------------------------------------------

p <- ggplot(mtcars, aes(wt, mpg))

p + geom_point(size=4, aes(colour = factor(cyl))) +

scale_color_viridis(discrete=TRUE) +

theme_bw()

|

/scratch/gouwar.j/cran-all/cranData/viridis/inst/doc/intro-to-viridis.R

|

---

title: "Introduction to the viridis color maps"

author:

- "Bob Rudis, Noam Ross and Simon Garnier"

date: "`r Sys.Date()`"

output:

rmarkdown::html_vignette:

toc: true

toc_depth: 1

vignette: >

%\VignetteIndexEntry{Introduction to the viridis color maps}

%\VignetteEngine{knitr::rmarkdown}

%\VignetteEncoding{UTF-8}

---

<style>

img {

max-width: 100%;

max-height: 100%;

}

</style>

# tl;dr

Use the color scales in this package to make plots that are pretty,

better represent your data, easier to read by those with colorblindness, and

print well in gray scale.

Install **viridis** like any R package:

```

install.packages("viridis")

library(viridis)

```

For base plots, use the `viridis()` function to generate a palette:

```{r setup, include=FALSE}

library(viridis)

knitr::opts_chunk$set(echo = TRUE, fig.retina=2, fig.width=7, fig.height=5)

```

```{r tldr_base, message=FALSE}

x <- y <- seq(-8*pi, 8*pi, len = 40)

r <- sqrt(outer(x^2, y^2, "+"))

filled.contour(cos(r^2)*exp(-r/(2*pi)),

axes=FALSE,

color.palette=viridis,

asp=1)

```

For ggplot, use `scale_color_viridis()` and `scale_fill_viridis()`:

```{r, tldr_ggplot, message=FALSE}

library(ggplot2)

ggplot(data.frame(x = rnorm(10000), y = rnorm(10000)), aes(x = x, y = y)) +

geom_hex() + coord_fixed() +

scale_fill_viridis() + theme_bw()

```

---

# Introduction

[`viridis`](https://cran.r-project.org/package=viridis), and its companion

package [`viridisLite`](https://cran.r-project.org/package=viridisLite)

provide a series of color maps that are designed to improve graph readability

for readers with common forms of color blindness and/or color vision deficiency.

The color maps are also perceptually-uniform, both in regular form and also when

converted to black-and-white for printing.

These color maps are designed to be:

- **Colorful**, spanning as wide a palette as possible so as to make differences

easy to see,

- **Perceptually uniform**, meaning that values close to each other have

similar-appearing colors and values far away from each other have more

different-appearing colors, consistently across the range of values,

- **Robust to colorblindness**, so that the above properties hold true for

people with common forms of colorblindness, as well as in grey scale printing, and

- **Pretty**, oh so pretty

`viridisLite` provides the base functions for generating the color maps in base

`R`. The package is meant to be as lightweight and dependency-free as possible

for maximum compatibility with all the `R` ecosystem. [`viridis`](https://cran.r-project.org/package=viridis)

provides additional functionalities, in particular bindings for `ggplot2`.

---

# The Color Scales

The package contains eight color scales: "viridis", the primary choice, and

five alternatives with similar properties - "magma", "plasma", "inferno",

"civids", "mako", and "rocket" -, and a rainbow color map - "turbo".

The color maps `viridis`, `magma`, `inferno`, and `plasma` were created by

Stéfan van der Walt ([@stefanv](https://github.com/stefanv)) and Nathaniel Smith ([@njsmith](https://github.com/njsmith)). If you want to know more about the

science behind the creation of these color maps, you can watch this

[presentation of `viridis`](https://youtu.be/xAoljeRJ3lU) by their authors at

SciPy 2015.

The color map `cividis` is a corrected version of 'viridis', developed by

Jamie R. Nuñez, Christopher R. Anderton, and Ryan S. Renslow, and originally

ported to `R` by Marco Sciaini ([@msciain](https://github.com/marcosci)). More

info about `cividis` can be found in

[this paper](https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0199239).

The color maps `mako` and `rocket` were originally created for the `Seaborn`

statistical data visualization package for Python. More info about `mako` and

`rocket` can be found on the

[`Seaborn` website](https://seaborn.pydata.org/tutorial/color_palettes.html).

The color map `turbo` was developed by Anton Mikhailov to address the

shortcomings of the Jet rainbow color map such as false detail, banding and

color blindness ambiguity. More infor about `turbo` can be found

[here](https://ai.googleblog.com/2019/08/turbo-improved-rainbow-colormap-for.html).

```{r for_repeat, include=FALSE}

n_col <- 128

img <- function(obj, nam) {

image(1:length(obj), 1, as.matrix(1:length(obj)), col=obj,

main = nam, ylab = "", xaxt = "n", yaxt = "n", bty = "n")

}

```

```{r begin, message=FALSE, include=FALSE}

library(viridis)

library(scales)

library(colorspace)

library(dichromat)

```

```{r show_scales, echo=FALSE, fig.height=3.575}

par(mfrow=c(8, 1), mar=rep(1, 4))

img(rev(viridis(n_col)), "viridis")

img(rev(magma(n_col)), "magma")

img(rev(plasma(n_col)), "plasma")

img(rev(inferno(n_col)), "inferno")

img(rev(cividis(n_col)), "cividis")

img(rev(mako(n_col)), "mako")

img(rev(rocket(n_col)), "rocket")

img(rev(turbo(n_col)), "turbo")

```

---

# Comparison

Let's compare the viridis and magma scales against these other commonly used

sequential color palettes in R:

- Base R palettes: `rainbow.colors`, `heat.colors`, `cm.colors`

- The default **ggplot2** palette

- Sequential [colorbrewer](https://colorbrewer2.org/) palettes, both default

blues and the more viridis-like yellow-green-blue

```{r 01_normal, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(rev(rainbow(n_col)), "rainbow")

img(rev(heat.colors(n_col)), "heat")

img(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "ggplot default")

img(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "brewer blues")

img(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "brewer yellow-green-blue")

img(rev(viridis(n_col)), "viridis")

img(rev(magma(n_col)), "magma")

```

It is immediately clear that the "rainbow" palette is not perceptually uniform;

there are several "kinks" where the apparent color changes quickly over a short

range of values. This is also true, though less so, for the "heat" colors.

The other scales are more perceptually uniform, but "viridis" stands out for its

large *perceptual range*. It makes as much use of the available color space as

possible while maintaining uniformity.

Now, let's compare these as they might appear under various forms of colorblindness,

which can be simulated using the **[dichromat](https://cran.r-project.org/package=dichromat)**

package:

### Green-Blind (Deuteranopia)

```{r 02_deutan, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(dichromat(rev(rainbow(n_col)), "deutan"), "rainbow")

img(dichromat(rev(heat.colors(n_col)), "deutan"), "heat")

img(dichromat(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "deutan"), "ggplot default")

img(dichromat(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "deutan"), "brewer blues")

img(dichromat(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "deutan"), "brewer yellow-green-blue")

img(dichromat(rev(viridis(n_col)), "deutan"), "viridis")

img(dichromat(rev(magma(n_col)), "deutan"), "magma")

```

### Red-Blind (Protanopia)

```{r 03_protan, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(dichromat(rev(rainbow(n_col)), "protan"), "rainbow")

img(dichromat(rev(heat.colors(n_col)), "protan"), "heat")

img(dichromat(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "protan"), "ggplot default")

img(dichromat(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "protan"), "brewer blues")

img(dichromat(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "protan"), "brewer yellow-green-blue")

img(dichromat(rev(viridis(n_col)), "protan"), "viridis")

img(dichromat(rev(magma(n_col)), "protan"), "magma")

```

### Blue-Blind (Tritanopia)

```{r 04_tritan, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(dichromat(rev(rainbow(n_col)), "tritan"), "rainbow")

img(dichromat(rev(heat.colors(n_col)), "tritan"), "heat")

img(dichromat(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "tritan"), "ggplot default")

img(dichromat(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "tritan"), "brewer blues")

img(dichromat(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "tritan"), "brewer yellow-green-blue")

img(dichromat(rev(viridis(n_col)), "tritan"), "viridis")

img(dichromat(rev(magma(n_col)), "tritan"), "magma")

```

### Desaturated

```{r 05_desatureated, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(desaturate(rev(rainbow(n_col))), "rainbow")

img(desaturate(rev(heat.colors(n_col))), "heat")

img(desaturate(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col)))), "ggplot default")

img(desaturate(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col))), "brewer blues")

img(desaturate(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col))), "brewer yellow-green-blue")

img(desaturate(rev(viridis(n_col))), "viridis")

img(desaturate(rev(magma(n_col))), "magma")

```

We can see that in these cases, "rainbow" is quite problematic - it is not

perceptually consistent across its range. "Heat" washes

out at bright colors, as do the brewer scales to a lesser extent. The ggplot scale

does not wash out, but it has a low perceptual range - there's not much contrast

between low and high values. The "viridis" and "magma" scales do better - they cover a wide perceptual range in brightness in brightness and blue-yellow, and do not rely as much on red-green contrast. They do less well

under tritanopia (blue-blindness), but this is an extrememly rare form of colorblindness.

---

# Usage

The `viridis()` function produces the `viridis` color scale. You can choose

the other color scale options using the `option` parameter or the convenience

functions `magma()`, `plasma()`, `inferno()`, `cividis()`, `mako()`, `rocket`()`,

and `turbo()`.

Here the `inferno()` scale is used for a raster of U.S. max temperature:

```{r tempmap, message=FALSE}

library(terra)

library(httr)

par(mfrow=c(1,1), mar=rep(0.5, 4))

temp_raster <- "http://ftp.cpc.ncep.noaa.gov/GIS/GRADS_GIS/GeoTIFF/TEMP/us_tmax/us.tmax_nohads_ll_20150219_float.tif"

try(GET(temp_raster,

write_disk("us.tmax_nohads_ll_20150219_float.tif")), silent=TRUE)

us <- rast("us.tmax_nohads_ll_20150219_float.tif")

us <- project(us, y="+proj=aea +lat_1=29.5 +lat_2=45.5 +lat_0=37.5 +lon_0=-96 +x_0=0 +y_0=0 +ellps=GRS80 +datum=NAD83 +units=m +no_defs")

image(us, col=inferno(256), asp=1, axes=FALSE, xaxs="i", xaxt='n', yaxt='n', ann=FALSE)

```

The package also contains color scale functions for **ggplot**

plots: `scale_color_viridis()` and `scale_fill_viridis()`. As with `viridis()`,

you can use the other scales with the `option` argument in the `ggplot` scales.

Here the "magma" scale is used for a cloropleth map of U.S. unemployment:

```{r, ggplot2}

library(maps)

library(mapproj)

data(unemp, package = "viridis")

county_df <- map_data("county", projection = "albers", parameters = c(39, 45))

names(county_df) <- c("long", "lat", "group", "order", "state_name", "county")

county_df$state <- state.abb[match(county_df$state_name, tolower(state.name))]

county_df$state_name <- NULL

state_df <- map_data("state", projection = "albers", parameters = c(39, 45))

choropleth <- merge(county_df, unemp, by = c("state", "county"))

choropleth <- choropleth[order(choropleth$order), ]

ggplot(choropleth, aes(long, lat, group = group)) +

geom_polygon(aes(fill = rate), colour = alpha("white", 1 / 2), linewidth = 0.2) +

geom_polygon(data = state_df, colour = "white", fill = NA) +

coord_fixed() +

theme_minimal() +

ggtitle("US unemployment rate by county") +

theme(axis.line = element_blank(), axis.text = element_blank(),

axis.ticks = element_blank(), axis.title = element_blank()) +

scale_fill_viridis(option="magma")

```

The ggplot functions also can be used for discrete scales with the argument

`discrete=TRUE`.

```{r discrete}

p <- ggplot(mtcars, aes(wt, mpg))

p + geom_point(size=4, aes(colour = factor(cyl))) +

scale_color_viridis(discrete=TRUE) +

theme_bw()

```

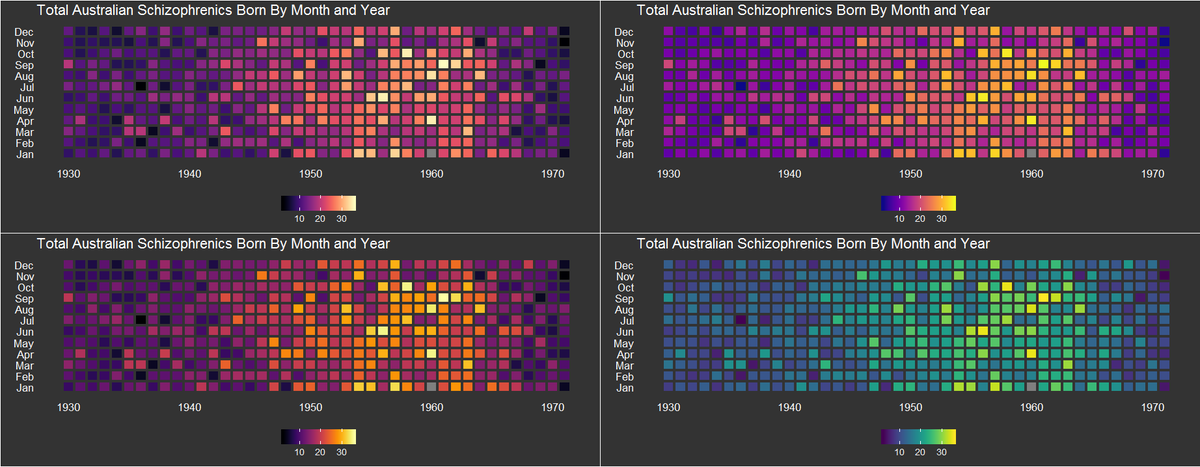

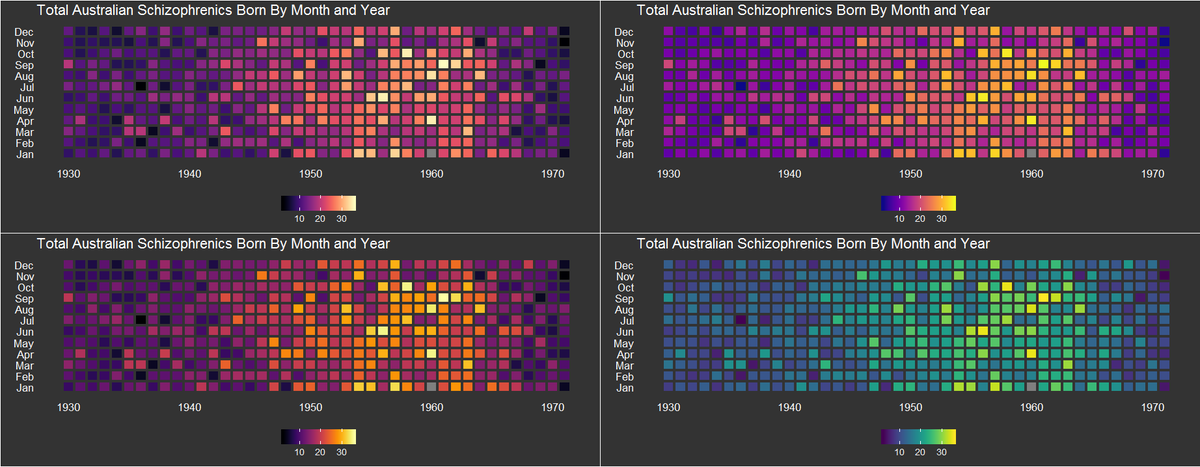

# Gallery

Here are some examples of viridis being used in the wild:

James Curley uses **viridis** for matrix plots ([Code](https://gist.github.com/jalapic/9a1c069aa8cee4089c1e)):

[](http://pbs.twimg.com/media/CQWw9EgWsAAoUi0.png)

Christopher Moore created these contour plots of potential in a dynamic

plankton-consumer model:

[](http://pbs.twimg.com/media/CQWTy7wWcAAa-gu.jpg)

|

/scratch/gouwar.j/cran-all/cranData/viridis/inst/doc/intro-to-viridis.Rmd

|

---

title: "Introduction to the viridis color maps"

author:

- "Bob Rudis, Noam Ross and Simon Garnier"

date: "`r Sys.Date()`"

output:

rmarkdown::html_vignette:

toc: true

toc_depth: 1

vignette: >

%\VignetteIndexEntry{Introduction to the viridis color maps}

%\VignetteEngine{knitr::rmarkdown}

%\VignetteEncoding{UTF-8}

---

<style>

img {

max-width: 100%;

max-height: 100%;

}

</style>

# tl;dr

Use the color scales in this package to make plots that are pretty,

better represent your data, easier to read by those with colorblindness, and

print well in gray scale.

Install **viridis** like any R package:

```

install.packages("viridis")

library(viridis)

```

For base plots, use the `viridis()` function to generate a palette:

```{r setup, include=FALSE}

library(viridis)

knitr::opts_chunk$set(echo = TRUE, fig.retina=2, fig.width=7, fig.height=5)

```

```{r tldr_base, message=FALSE}

x <- y <- seq(-8*pi, 8*pi, len = 40)

r <- sqrt(outer(x^2, y^2, "+"))

filled.contour(cos(r^2)*exp(-r/(2*pi)),

axes=FALSE,

color.palette=viridis,

asp=1)

```

For ggplot, use `scale_color_viridis()` and `scale_fill_viridis()`:

```{r, tldr_ggplot, message=FALSE}

library(ggplot2)

ggplot(data.frame(x = rnorm(10000), y = rnorm(10000)), aes(x = x, y = y)) +

geom_hex() + coord_fixed() +

scale_fill_viridis() + theme_bw()

```

---

# Introduction

[`viridis`](https://cran.r-project.org/package=viridis), and its companion

package [`viridisLite`](https://cran.r-project.org/package=viridisLite)

provide a series of color maps that are designed to improve graph readability

for readers with common forms of color blindness and/or color vision deficiency.

The color maps are also perceptually-uniform, both in regular form and also when

converted to black-and-white for printing.

These color maps are designed to be:

- **Colorful**, spanning as wide a palette as possible so as to make differences

easy to see,

- **Perceptually uniform**, meaning that values close to each other have

similar-appearing colors and values far away from each other have more

different-appearing colors, consistently across the range of values,

- **Robust to colorblindness**, so that the above properties hold true for

people with common forms of colorblindness, as well as in grey scale printing, and

- **Pretty**, oh so pretty

`viridisLite` provides the base functions for generating the color maps in base

`R`. The package is meant to be as lightweight and dependency-free as possible

for maximum compatibility with all the `R` ecosystem. [`viridis`](https://cran.r-project.org/package=viridis)

provides additional functionalities, in particular bindings for `ggplot2`.

---

# The Color Scales

The package contains eight color scales: "viridis", the primary choice, and

five alternatives with similar properties - "magma", "plasma", "inferno",

"civids", "mako", and "rocket" -, and a rainbow color map - "turbo".

The color maps `viridis`, `magma`, `inferno`, and `plasma` were created by

Stéfan van der Walt ([@stefanv](https://github.com/stefanv)) and Nathaniel Smith ([@njsmith](https://github.com/njsmith)). If you want to know more about the

science behind the creation of these color maps, you can watch this

[presentation of `viridis`](https://youtu.be/xAoljeRJ3lU) by their authors at

SciPy 2015.

The color map `cividis` is a corrected version of 'viridis', developed by

Jamie R. Nuñez, Christopher R. Anderton, and Ryan S. Renslow, and originally

ported to `R` by Marco Sciaini ([@msciain](https://github.com/marcosci)). More

info about `cividis` can be found in

[this paper](https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0199239).

The color maps `mako` and `rocket` were originally created for the `Seaborn`

statistical data visualization package for Python. More info about `mako` and

`rocket` can be found on the

[`Seaborn` website](https://seaborn.pydata.org/tutorial/color_palettes.html).

The color map `turbo` was developed by Anton Mikhailov to address the

shortcomings of the Jet rainbow color map such as false detail, banding and

color blindness ambiguity. More infor about `turbo` can be found

[here](https://ai.googleblog.com/2019/08/turbo-improved-rainbow-colormap-for.html).

```{r for_repeat, include=FALSE}

n_col <- 128

img <- function(obj, nam) {

image(1:length(obj), 1, as.matrix(1:length(obj)), col=obj,

main = nam, ylab = "", xaxt = "n", yaxt = "n", bty = "n")

}

```

```{r begin, message=FALSE, include=FALSE}

library(viridis)

library(scales)

library(colorspace)

library(dichromat)

```

```{r show_scales, echo=FALSE, fig.height=3.575}

par(mfrow=c(8, 1), mar=rep(1, 4))

img(rev(viridis(n_col)), "viridis")

img(rev(magma(n_col)), "magma")

img(rev(plasma(n_col)), "plasma")

img(rev(inferno(n_col)), "inferno")

img(rev(cividis(n_col)), "cividis")

img(rev(mako(n_col)), "mako")

img(rev(rocket(n_col)), "rocket")

img(rev(turbo(n_col)), "turbo")

```

---

# Comparison

Let's compare the viridis and magma scales against these other commonly used

sequential color palettes in R:

- Base R palettes: `rainbow.colors`, `heat.colors`, `cm.colors`

- The default **ggplot2** palette

- Sequential [colorbrewer](https://colorbrewer2.org/) palettes, both default

blues and the more viridis-like yellow-green-blue

```{r 01_normal, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(rev(rainbow(n_col)), "rainbow")

img(rev(heat.colors(n_col)), "heat")

img(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "ggplot default")

img(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "brewer blues")

img(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "brewer yellow-green-blue")

img(rev(viridis(n_col)), "viridis")

img(rev(magma(n_col)), "magma")

```

It is immediately clear that the "rainbow" palette is not perceptually uniform;

there are several "kinks" where the apparent color changes quickly over a short

range of values. This is also true, though less so, for the "heat" colors.

The other scales are more perceptually uniform, but "viridis" stands out for its

large *perceptual range*. It makes as much use of the available color space as

possible while maintaining uniformity.

Now, let's compare these as they might appear under various forms of colorblindness,

which can be simulated using the **[dichromat](https://cran.r-project.org/package=dichromat)**

package:

### Green-Blind (Deuteranopia)

```{r 02_deutan, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(dichromat(rev(rainbow(n_col)), "deutan"), "rainbow")

img(dichromat(rev(heat.colors(n_col)), "deutan"), "heat")

img(dichromat(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "deutan"), "ggplot default")

img(dichromat(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "deutan"), "brewer blues")

img(dichromat(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "deutan"), "brewer yellow-green-blue")

img(dichromat(rev(viridis(n_col)), "deutan"), "viridis")

img(dichromat(rev(magma(n_col)), "deutan"), "magma")

```

### Red-Blind (Protanopia)

```{r 03_protan, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(dichromat(rev(rainbow(n_col)), "protan"), "rainbow")

img(dichromat(rev(heat.colors(n_col)), "protan"), "heat")

img(dichromat(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "protan"), "ggplot default")

img(dichromat(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "protan"), "brewer blues")

img(dichromat(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "protan"), "brewer yellow-green-blue")

img(dichromat(rev(viridis(n_col)), "protan"), "viridis")

img(dichromat(rev(magma(n_col)), "protan"), "magma")

```

### Blue-Blind (Tritanopia)

```{r 04_tritan, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(dichromat(rev(rainbow(n_col)), "tritan"), "rainbow")

img(dichromat(rev(heat.colors(n_col)), "tritan"), "heat")

img(dichromat(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col))), "tritan"), "ggplot default")

img(dichromat(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col)), "tritan"), "brewer blues")

img(dichromat(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col)), "tritan"), "brewer yellow-green-blue")

img(dichromat(rev(viridis(n_col)), "tritan"), "viridis")

img(dichromat(rev(magma(n_col)), "tritan"), "magma")

```

### Desaturated

```{r 05_desatureated, echo=FALSE}

par(mfrow=c(7, 1), mar=rep(1, 4))

img(desaturate(rev(rainbow(n_col))), "rainbow")

img(desaturate(rev(heat.colors(n_col))), "heat")

img(desaturate(rev(seq_gradient_pal(low = "#132B43", high = "#56B1F7", space = "Lab")(seq(0, 1, length=n_col)))), "ggplot default")

img(desaturate(gradient_n_pal(brewer_pal(type="seq")(9))(seq(0, 1, length=n_col))), "brewer blues")

img(desaturate(gradient_n_pal(brewer_pal(type="seq", palette = "YlGnBu")(9))(seq(0, 1, length=n_col))), "brewer yellow-green-blue")

img(desaturate(rev(viridis(n_col))), "viridis")

img(desaturate(rev(magma(n_col))), "magma")

```

We can see that in these cases, "rainbow" is quite problematic - it is not

perceptually consistent across its range. "Heat" washes

out at bright colors, as do the brewer scales to a lesser extent. The ggplot scale

does not wash out, but it has a low perceptual range - there's not much contrast

between low and high values. The "viridis" and "magma" scales do better - they cover a wide perceptual range in brightness in brightness and blue-yellow, and do not rely as much on red-green contrast. They do less well

under tritanopia (blue-blindness), but this is an extrememly rare form of colorblindness.

---

# Usage

The `viridis()` function produces the `viridis` color scale. You can choose

the other color scale options using the `option` parameter or the convenience

functions `magma()`, `plasma()`, `inferno()`, `cividis()`, `mako()`, `rocket`()`,

and `turbo()`.

Here the `inferno()` scale is used for a raster of U.S. max temperature:

```{r tempmap, message=FALSE}

library(terra)

library(httr)

par(mfrow=c(1,1), mar=rep(0.5, 4))

temp_raster <- "http://ftp.cpc.ncep.noaa.gov/GIS/GRADS_GIS/GeoTIFF/TEMP/us_tmax/us.tmax_nohads_ll_20150219_float.tif"

try(GET(temp_raster,

write_disk("us.tmax_nohads_ll_20150219_float.tif")), silent=TRUE)

us <- rast("us.tmax_nohads_ll_20150219_float.tif")

us <- project(us, y="+proj=aea +lat_1=29.5 +lat_2=45.5 +lat_0=37.5 +lon_0=-96 +x_0=0 +y_0=0 +ellps=GRS80 +datum=NAD83 +units=m +no_defs")

image(us, col=inferno(256), asp=1, axes=FALSE, xaxs="i", xaxt='n', yaxt='n', ann=FALSE)

```

The package also contains color scale functions for **ggplot**

plots: `scale_color_viridis()` and `scale_fill_viridis()`. As with `viridis()`,

you can use the other scales with the `option` argument in the `ggplot` scales.

Here the "magma" scale is used for a cloropleth map of U.S. unemployment:

```{r, ggplot2}

library(maps)

library(mapproj)

data(unemp, package = "viridis")

county_df <- map_data("county", projection = "albers", parameters = c(39, 45))

names(county_df) <- c("long", "lat", "group", "order", "state_name", "county")

county_df$state <- state.abb[match(county_df$state_name, tolower(state.name))]

county_df$state_name <- NULL

state_df <- map_data("state", projection = "albers", parameters = c(39, 45))

choropleth <- merge(county_df, unemp, by = c("state", "county"))

choropleth <- choropleth[order(choropleth$order), ]

ggplot(choropleth, aes(long, lat, group = group)) +

geom_polygon(aes(fill = rate), colour = alpha("white", 1 / 2), linewidth = 0.2) +

geom_polygon(data = state_df, colour = "white", fill = NA) +

coord_fixed() +

theme_minimal() +

ggtitle("US unemployment rate by county") +

theme(axis.line = element_blank(), axis.text = element_blank(),

axis.ticks = element_blank(), axis.title = element_blank()) +

scale_fill_viridis(option="magma")

```

The ggplot functions also can be used for discrete scales with the argument

`discrete=TRUE`.

```{r discrete}

p <- ggplot(mtcars, aes(wt, mpg))

p + geom_point(size=4, aes(colour = factor(cyl))) +

scale_color_viridis(discrete=TRUE) +

theme_bw()

```

# Gallery

Here are some examples of viridis being used in the wild:

James Curley uses **viridis** for matrix plots ([Code](https://gist.github.com/jalapic/9a1c069aa8cee4089c1e)):

[](http://pbs.twimg.com/media/CQWw9EgWsAAoUi0.png)

Christopher Moore created these contour plots of potential in a dynamic

plankton-consumer model:

[](http://pbs.twimg.com/media/CQWTy7wWcAAa-gu.jpg)

|

/scratch/gouwar.j/cran-all/cranData/viridis/vignettes/intro-to-viridis.Rmd

|

#' @title Color Map Data

#'

#' @description A data set containing the RGB values of the color maps included

#' in the package. These are:

#' \itemize{

#' \item{}{'magma', 'inferno', 'plasma', and 'viridis' as defined in Matplotlib

#' for Python. These color maps are designed in such a way that they will

#' analytically be perfectly perceptually-uniform, both in regular form and

#' also when converted to black-and-white. They are also designed to be

#' perceived by readers with the most common form of color blindness. They

#' were created by \href{https://github.com/stefanv}{Stéfan van der Walt}

#' and \href{https://github.com/njsmith}{Nathaniel Smith};}

#' \item{}{'cividis', a corrected version of 'viridis', 'cividis', developed by

#' Jamie R. Nuñez, Christopher R. Anderton, and Ryan S. Renslow, and

#' originally ported to R by Marco Sciaini. It is designed to be perceived by

#' readers with all forms of color blindness;}

#' \item{}{'rocket' and 'mako' as defined in Seaborn for Python;}

#' \item{}{'turbo', an improved Jet rainbow color map for reducing false detail,

#' banding and color blindness ambiguity.}

#' }

#'

#' @references

#' \itemize{

#' \item{}{'magma', 'inferno', 'plasma', and 'viridis': https://bids.github.io/colormap/}

#' \item{}{'cividis': https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0199239}

#' \item{}{'rocket' and 'mako': https://seaborn.pydata.org/index.html}

#' \item{}{'turbo': https://ai.googleblog.com/2019/08/turbo-improved-rainbow-colormap-for.html}

#' }

#'

#' @format A data frame with 2048 rows and 4 variables:

#' \itemize{

#' \item{R: }{Red value;}

#' \item{G: }{Green value;}

#' \item{B: }{Blue value;}

#' \item{opt: }{The colormap "option" (A: magma; B: inferno; C: plasma;

#' D: viridis; E: cividis; F: rocket; G: mako; H: turbo).}

#' }

#'

#' @author Simon Garnier: \email{garnier@@njit.edu} / \href{https://twitter.com/sjmgarnier}{@@sjmgarnier}

"viridis.map"

#' @title Viridis Color Palettes

#'

#' @description This function creates a vector of \code{n} equally spaced colors

#' along the selected color map.

#'

#' @param n The number of colors (\eqn{\ge 1}) to be in the palette.

#'

#' @param alpha The alpha transparency, a number in [0,1], see argument alpha in

#' \code{\link[grDevices]{hsv}}.

#'

#' @param begin The (corrected) hue in [0,1] at which the color map begins.

#'

#' @param end The (corrected) hue in [0,1] at which the color map ends.

#'

#' @param direction Sets the order of colors in the scale. If 1, the default,

#' colors are ordered from darkest to lightest. If -1, the order of colors is

#' reversed.

#'

#' @param option A character string indicating the color map option to use.

#' Eight options are available:

#' \itemize{

#' \item{}{"magma" (or "A")}

#' \item{}{"inferno" (or "B")}

#' \item{}{"plasma" (or "C")}

#' \item{}{"viridis" (or "D")}

#' \item{}{"cividis" (or "E")}

#' \item{}{"rocket" (or "F")}

#' \item{}{"mako" (or "G")}

#' \item{}{"turbo" (or "H")}

#' }

#'

#' @return \code{viridis} returns a character vector, \code{cv}, of color hex

#' codes. This can be used either to create a user-defined color palette for

#' subsequent graphics by \code{palette(cv)}, a \code{col =} specification in

#' graphics functions or in \code{par}.

#'

#' @author Simon Garnier: \email{garnier@@njit.edu} / \href{https://twitter.com/sjmgarnier}{@@sjmgarnier}

#'

#' @details

#' \if{html}{Here are the color scales:

#' \figure{viridis-scales.png}{options: style="display:block;margin-left:auto;margin-right:auto;width:750px;max-width:75\%;"}

#' }

#' \if{latex}{Here are the color scales:

#' \out{\begin{center}}\figure{viridis-scales.png}\out{\end{center}}

#' }

#'

#' \code{magma()}, \code{plasma()}, \code{inferno()}, \code{cividis()},

#' \code{rocket()}, \code{mako()}, and \code{turbo()} are convenience functions

#' for the other color map options, which are useful when the scale must be

#' passed as a function name.

#'

#' Semi-transparent colors (\eqn{0 < alpha < 1}) are supported only on some

#' devices: see \code{\link[grDevices]{rgb}}.

#'

#' @examples

#' library(ggplot2)

#' library(hexbin)

#'

#' dat <- data.frame(x = rnorm(10000), y = rnorm(10000))

#'

#' ggplot(dat, aes(x = x, y = y)) +

#' geom_hex() + coord_fixed() +

#' scale_fill_gradientn(colours = viridis(256, option = "D"))

#'

#' # using code from RColorBrewer to demo the palette

#' n = 200

#' image(

#' 1:n, 1, as.matrix(1:n),

#' col = viridis(n, option = "D"),

#' xlab = "viridis n", ylab = "", xaxt = "n", yaxt = "n", bty = "n"

#' )

#' @export

viridis <- function(n, alpha = 1, begin = 0, end = 1, direction = 1, option = "D") {

if (begin < 0 | begin > 1 | end < 0 | end > 1) {

stop("begin and end must be in [0,1]")

}

if (abs(direction) != 1) {

stop("direction must be 1 or -1")

}

if (n == 0) {

return(character(0))

}

if (direction == -1) {

tmp <- begin

begin <- end

end <- tmp

}

option <- switch(EXPR = option,

A = "A", magma = "A",

B = "B", inferno = "B",

C = "C", plasma = "C",

D = "D", viridis = "D",

E = "E", cividis = "E",

F = "F", rocket = "F",

G = "G", mako = "G",

H = "H", turbo = "H",

{warning(paste0("Option '", option, "' does not exist. Defaulting to 'viridis'.")); "D"})

map <- viridisLite::viridis.map[viridisLite::viridis.map$opt == option, ]

map_cols <- grDevices::rgb(map$R, map$G, map$B)

fn_cols <- grDevices::colorRamp(map_cols, space = "Lab", interpolate = "spline")

cols <- fn_cols(seq(begin, end, length.out = n)) / 255

grDevices::rgb(cols[, 1], cols[, 2], cols[, 3], alpha = alpha)

}

#' @rdname viridis

#'

#' @return \code{viridisMap} returns a \code{n} lines data frame containing the

#' red (\code{R}), green (\code{G}), blue (\code{B}) and alpha (\code{alpha})

#' channels of \code{n} equally spaced colors along the selected color map.

#' \code{n = 256} by default.

#'

#' @export

viridisMap <- function(n = 256, alpha = 1, begin = 0, end = 1, direction = 1,

option = "D") { # nocov start

if (begin < 0 | begin > 1 | end < 0 | end > 1) {

stop("begin and end must be in [0,1]")

}

if (abs(direction) != 1) {

stop("direction must be 1 or -1")

}

if (n == 0) {

return(data.frame(R = double(0), G = double(0), B = double(0), alpha = double(0)))

}

if (direction == -1) {

tmp <- begin

begin <- end

end <- tmp

}

option <- switch(EXPR = option,

A = "A", magma = "A",

B = "B", inferno = "B",

C = "C", plasma = "C",

D = "D", viridis = "D",

E = "E", cividis = "E",

E = "F", rocket = "F",

E = "G", mako = "G",

H = "H", turbo = "H",

{warning(paste0("Option '", option, "' does not exist. Defaulting to 'viridis'.")); "D"})

map <- viridisLite::viridis.map[viridisLite::viridis.map$opt == option, ]

map_cols <- grDevices::rgb(map$R, map$G, map$B)

fn_cols <- grDevices::colorRamp(map_cols, space = "Lab", interpolate = "spline")

cols <- fn_cols(seq(begin, end, length.out = n)) / 255

data.frame(R = cols[, 1], G = cols[, 2], B = cols[, 3], alpha = alpha)

} # nocov end

#' @rdname viridis

#' @export

magma <- function(n, alpha = 1, begin = 0, end = 1, direction = 1) {

viridis(n, alpha, begin, end, direction, option = "magma")

}

#' @rdname viridis

#' @export

inferno <- function(n, alpha = 1, begin = 0, end = 1, direction = 1) {

viridis(n, alpha, begin, end, direction, option = "inferno")

}

#' @rdname viridis

#' @export

plasma <- function(n, alpha = 1, begin = 0, end = 1, direction = 1) {

viridis(n, alpha, begin, end, direction, option = "plasma")

}

#' @rdname viridis

#' @export

cividis <- function(n, alpha = 1, begin = 0, end = 1, direction = 1) {

viridis(n, alpha, begin, end, direction, option = "cividis")

}

#' @rdname viridis

#' @export

rocket <- function(n, alpha = 1, begin = 0, end = 1, direction = 1) {

viridis(n, alpha, begin, end, direction, option = "rocket")

}

#' @rdname viridis

#' @export

mako <- function(n, alpha = 1, begin = 0, end = 1, direction = 1) {

viridis(n, alpha, begin, end, direction, option = "mako")

}

#' @rdname viridis

#' @export

turbo <- function(n, alpha = 1, begin = 0, end = 1, direction = 1) {

viridis(n, alpha, begin, end, direction, option = "turbo")

}

|

/scratch/gouwar.j/cran-all/cranData/viridisLite/R/viridis.R

|

#' @export

viridis.map <- data.frame(