| repo (stringclasses, 856 values) | pull_number (int64, 3 to 127k) | instance_id (stringlengths, 12 to 58) | issue_numbers (listlengths, 1 to 5) | base_commit (stringlengths, 40 to 40) | patch (stringlengths, 67 to 1.54M) | test_patch (stringlengths, 0 to 107M) | problem_statement (stringlengths, 3 to 307k) | hints_text (stringlengths, 0 to 908k) | created_at (timestamp[s]) |

|---|---|---|---|---|---|---|---|---|---|

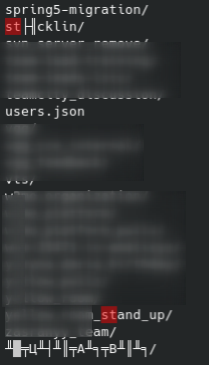

zulip/zulip

| 19,852

|

zulip__zulip-19852

|

[

"19833"

] |

306011a963e36c39c24a0e94f0de494969c8d4aa

|

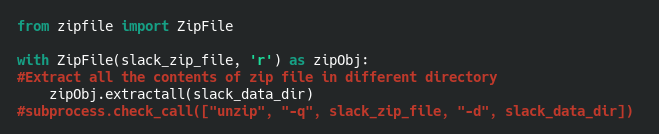

diff --git a/zerver/data_import/slack.py b/zerver/data_import/slack.py

--- a/zerver/data_import/slack.py

+++ b/zerver/data_import/slack.py

@@ -1410,7 +1410,7 @@ def get_slack_api_data(slack_api_url: str, get_param: str, **kwargs: Any) -> Any

if not kwargs.get("token"):

raise AssertionError("Slack token missing in kwargs")

token = kwargs.pop("token")

- data = requests.get(slack_api_url, headers={"Authorization": f"Bearer {token}"}, **kwargs)

+ data = requests.get(slack_api_url, headers={"Authorization": f"Bearer {token}"}, params=kwargs)

if data.status_code == requests.codes.ok:

result = data.json()

|

diff --git a/zerver/tests/test_slack_importer.py b/zerver/tests/test_slack_importer.py

--- a/zerver/tests/test_slack_importer.py

+++ b/zerver/tests/test_slack_importer.py

@@ -3,7 +3,8 @@

from io import BytesIO

from typing import Any, Dict, Iterator, List, Set, Tuple

from unittest import mock

-from unittest.mock import ANY, call

+from unittest.mock import ANY

+from urllib.parse import parse_qs, urlparse

import orjson

import responses

@@ -53,24 +54,101 @@ def remove_folder(path: str) -> None:

def request_callback(request: PreparedRequest) -> Tuple[int, Dict[str, str], bytes]:

- if request.url != "https://slack.com/api/users.list":

+ valid_endpoint = False

+ endpoints = [

+ "https://slack.com/api/users.list",

+ "https://slack.com/api/users.info",

+ "https://slack.com/api/team.info",

+ ]

+ for endpoint in endpoints:

+ if request.url and endpoint in request.url:

+ valid_endpoint = True

+ break

+ if not valid_endpoint:

return (404, {}, b"")

if request.headers.get("Authorization") != "Bearer xoxp-valid-token":

return (200, {}, orjson.dumps({"ok": False, "error": "invalid_auth"}))

- return (200, {}, orjson.dumps({"ok": True, "members": "user_data"}))

+ if request.url == "https://slack.com/api/users.list":

+ return (200, {}, orjson.dumps({"ok": True, "members": "user_data"}))

+

+ query_from_url = str(urlparse(request.url).query)

+ qs = parse_qs(query_from_url)

+ if request.url and "https://slack.com/api/users.info" in request.url:

+ user2team_dict = {

+ "U061A3E0G": "T6LARQE2Z",

+ "U061A8H1G": "T7KJRQE8Y",

+ "U8X25EBAB": "T5YFFM2QY",

+ }

+ try:

+ user_id = qs["user"][0]

+ team_id = user2team_dict[user_id]

+ except KeyError:

+ return (200, {}, orjson.dumps({"ok": False, "error": "user_not_found"}))

+ return (200, {}, orjson.dumps({"ok": True, "user": {"id": user_id, "team_id": team_id}}))

+ # Else, https://slack.com/api/team.info

+ team_not_found: Tuple[int, Dict[str, str], bytes] = (

+ 200,

+ {},

+ orjson.dumps({"ok": False, "error": "team_not_found"}),

+ )

+ try:

+ team_id = qs["team"][0]

+ except KeyError:

+ return team_not_found

+

+ team_dict = {

+ "T6LARQE2Z": "foreignteam1",

+ "T7KJRQE8Y": "foreignteam2",

+ }

+ try:

+ team_domain = team_dict[team_id]

+ except KeyError:

+ return team_not_found

+ return (200, {}, orjson.dumps({"ok": True, "team": {"id": team_id, "domain": team_domain}}))

class SlackImporter(ZulipTestCase):

@responses.activate

def test_get_slack_api_data(self) -> None:

token = "xoxp-valid-token"

+

+ # Users list

slack_user_list_url = "https://slack.com/api/users.list"

responses.add_callback(responses.GET, slack_user_list_url, callback=request_callback)

self.assertEqual(

get_slack_api_data(slack_user_list_url, "members", token=token), "user_data"

)

+

+ # Users info

+ slack_users_info_url = "https://slack.com/api/users.info"

+ user_id = "U8X25EBAB"

+ responses.add_callback(responses.GET, slack_users_info_url, callback=request_callback)

+ self.assertEqual(

+ get_slack_api_data(slack_users_info_url, "user", token=token, user=user_id),

+ {"id": user_id, "team_id": "T5YFFM2QY"},

+ )

+ # Should error if the required user argument is not specified

+ with self.assertRaises(Exception) as invalid:

+ get_slack_api_data(slack_users_info_url, "user", token=token)

+ self.assertEqual(invalid.exception.args, ("Error accessing Slack API: user_not_found",))

+ # Should error if the required user is not found

+ with self.assertRaises(Exception) as invalid:

+ get_slack_api_data(slack_users_info_url, "user", token=token, user="idontexist")

+ self.assertEqual(invalid.exception.args, ("Error accessing Slack API: user_not_found",))

+

+ # Team info

+ slack_team_info_url = "https://slack.com/api/team.info"

+ responses.add_callback(responses.GET, slack_team_info_url, callback=request_callback)

+ with self.assertRaises(Exception) as invalid:

+ get_slack_api_data(slack_team_info_url, "team", token=token, team="wedontexist")

+ self.assertEqual(invalid.exception.args, ("Error accessing Slack API: team_not_found",))

+ # Should error if the required user argument is not specified

+ with self.assertRaises(Exception) as invalid:

+ get_slack_api_data(slack_team_info_url, "team", token=token)

+ self.assertEqual(invalid.exception.args, ("Error accessing Slack API: team_not_found",))

+

token = "xoxp-invalid-token"

with self.assertRaises(Exception) as invalid:

get_slack_api_data(slack_user_list_url, "members", token=token)

@@ -140,10 +218,10 @@ def test_get_timezone(self) -> None:

self.assertEqual(get_user_timezone(user_no_timezone), "America/New_York")

@mock.patch("zerver.data_import.slack.get_data_file")

- @mock.patch("zerver.data_import.slack.get_slack_api_data")

@mock.patch("zerver.data_import.slack.get_messages_iterator")

+ @responses.activate

def test_fetch_shared_channel_users(

- self, messages_mock: mock.Mock, api_mock: mock.Mock, data_file_mock: mock.Mock

+ self, messages_mock: mock.Mock, data_file_mock: mock.Mock

) -> None:

users = [{"id": "U061A1R2R"}, {"id": "U061A5N1G"}, {"id": "U064KUGRJ"}]

data_file_mock.side_effect = [

@@ -153,19 +231,19 @@ def test_fetch_shared_channel_users(

],

[],

]

- api_mock.side_effect = [

- {"id": "U061A3E0G", "team_id": "T6LARQE2Z"},

- {"domain": "foreignteam1"},

- {"id": "U061A8H1G", "team_id": "T7KJRQE8Y"},

- {"domain": "foreignteam2"},

- ]

messages_mock.return_value = [

{"user": "U061A1R2R"},

{"user": "U061A5N1G"},

{"user": "U061A8H1G"},

]

+ # Users info

+ slack_users_info_url = "https://slack.com/api/users.info"

+ responses.add_callback(responses.GET, slack_users_info_url, callback=request_callback)

+ # Team info

+ slack_team_info_url = "https://slack.com/api/team.info"

+ responses.add_callback(responses.GET, slack_team_info_url, callback=request_callback)

slack_data_dir = self.fixture_file_name("", type="slack_fixtures")

- fetch_shared_channel_users(users, slack_data_dir, "token")

+ fetch_shared_channel_users(users, slack_data_dir, "xoxp-valid-token")

# Normal users

self.assert_length(users, 5)

@@ -176,20 +254,16 @@ def test_fetch_shared_channel_users(

self.assertEqual(users[2]["id"], "U064KUGRJ")

# Shared channel users

- self.assertEqual(users[3]["id"], "U061A3E0G")

- self.assertEqual(users[3]["team_domain"], "foreignteam1")

- self.assertEqual(users[3]["is_mirror_dummy"], True)

- self.assertEqual(users[4]["id"], "U061A8H1G")

- self.assertEqual(users[4]["team_domain"], "foreignteam2")

- self.assertEqual(users[4]["is_mirror_dummy"], True)

-

- api_calls = [

- call("https://slack.com/api/users.info", "user", token="token", user="U061A3E0G"),

- call("https://slack.com/api/team.info", "team", token="token", team="T6LARQE2Z"),

- call("https://slack.com/api/users.info", "user", token="token", user="U061A8H1G"),

- call("https://slack.com/api/team.info", "team", token="token", team="T7KJRQE8Y"),

- ]

- api_mock.assert_has_calls(api_calls, any_order=True)

+ # We need to do this because the outcome order of `users` list is

+ # not deterministic.

+ fourth_fifth = [users[3], users[4]]

+ fourth_fifth.sort(key=lambda x: x["id"])

+ self.assertEqual(fourth_fifth[0]["id"], "U061A3E0G")

+ self.assertEqual(fourth_fifth[0]["team_domain"], "foreignteam1")

+ self.assertEqual(fourth_fifth[0]["is_mirror_dummy"], True)

+ self.assertEqual(fourth_fifth[1]["id"], "U061A8H1G")

+ self.assertEqual(fourth_fifth[1]["team_domain"], "foreignteam2")

+ self.assertEqual(fourth_fifth[1]["is_mirror_dummy"], True)

@mock.patch("zerver.data_import.slack.get_data_file")

def test_users_to_zerver_userprofile(self, mock_get_data_file: mock.Mock) -> None:

|

convert_slack_data issue

Got an error when trying to convert a Slack archive:

`./manage.py convert_slack_data export-edited-3.zip --token xoxb-HIDED --output converted_slack_data --verbosity 3 --traceback

Converting data ...

Traceback (most recent call last):

File "./manage.py", line 52, in <module>

execute_from_command_line(sys.argv)

File "/srv/zulip-venv-cache/cc77818846b328558cc9444c67cbbe0121ca80f4/zulip-py3-venv/lib/python3.8/site-packages/django/core/management/__init__.py", line 419, in execute_from_command_line

utility.execute()

File "/srv/zulip-venv-cache/cc77818846b328558cc9444c67cbbe0121ca80f4/zulip-py3-venv/lib/python3.8/site-packages/django/core/management/__init__.py", line 413, in execute

self.fetch_command(subcommand).run_from_argv(self.argv)

File "/srv/zulip-venv-cache/cc77818846b328558cc9444c67cbbe0121ca80f4/zulip-py3-venv/lib/python3.8/site-packages/django/core/management/base.py", line 354, in run_from_argv

self.execute(*args, **cmd_options)

File "/srv/zulip-venv-cache/cc77818846b328558cc9444c67cbbe0121ca80f4/zulip-py3-venv/lib/python3.8/site-packages/django/core/management/base.py", line 398, in execute

output = self.handle(*args, **options)

File "/home/zulip/deployments/2021-09-07-12-55-30/zerver/management/commands/convert_slack_data.py", line 56, in handle

do_convert_data(path, output_dir, token, threads=num_threads)

File "/home/zulip/deployments/2021-09-07-12-55-30/zerver/data_import/slack.py", line 1270, in do_convert_data

fetch_shared_channel_users(user_list, slack_data_dir, token)

File "/home/zulip/deployments/2021-09-07-12-55-30/zerver/data_import/slack.py", line 1182, in fetch_shared_channel_users

user = get_slack_api_data(

File "/home/zulip/deployments/2021-09-07-12-55-30/zerver/data_import/slack.py", line 1372, in get_slack_api_data

data = requests.get(

File "/srv/zulip-venv-cache/cc77818846b328558cc9444c67cbbe0121ca80f4/zulip-py3-venv/lib/python3.8/site-packages/requests/api.py", line 76, in get

return request('get', url, params=params, **kwargs)

File "/srv/zulip-venv-cache/cc77818846b328558cc9444c67cbbe0121ca80f4/zulip-py3-venv/lib/python3.8/site-packages/requests/api.py", line 61, in request

return session.request(method=method, url=url, **kwargs)

TypeError: request() got an unexpected keyword argument 'user'`
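The traceback above comes from forwarding Slack API arguments such as `user` as extra keyword arguments to the HTTP call instead of as query parameters. A minimal sketch of that failure mode follows, using only the standard library. `fake_get`, `call_buggy`, and `call_fixed` are hypothetical stand-ins written for illustration; they are not part of `requests` or Zulip, and the sketch only builds a URL rather than sending a request.

```python
from typing import Any, Dict, Optional
from urllib.parse import urlencode


def fake_get(
    url: str,
    params: Optional[Dict[str, Any]] = None,
    *,
    headers: Optional[Dict[str, str]] = None,
) -> str:
    """Hypothetical stand-in for an HTTP GET helper.

    It accepts only `params` and `headers`, so any other keyword
    argument is rejected with a TypeError, as in the traceback.
    """
    return f"{url}?{urlencode(params)}" if params else url


def call_buggy(url: str, **kwargs: Any) -> str:
    # API arguments are splatted into the call itself (the reported bug).
    token = kwargs.pop("token")
    return fake_get(url, headers={"Authorization": f"Bearer {token}"}, **kwargs)


def call_fixed(url: str, **kwargs: Any) -> str:
    # API arguments travel as query parameters instead.
    token = kwargs.pop("token")
    return fake_get(url, headers={"Authorization": f"Bearer {token}"}, params=kwargs)
```

Calling `call_buggy(url, token=..., user=...)` raises `TypeError` because `user` is not a parameter of the helper, while `call_fixed` encodes `user` into the query string.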

| 2021-09-28T07:26:33

|

|

zulip/zulip

| 19,858

|

zulip__zulip-19858

|

[

"19822"

] |

076d9eeb16504b34508aa628f5588b59036d1f37

|

diff --git a/zerver/lib/markdown/tabbed_sections.py b/zerver/lib/markdown/tabbed_sections.py

--- a/zerver/lib/markdown/tabbed_sections.py

+++ b/zerver/lib/markdown/tabbed_sections.py

@@ -27,7 +27,7 @@

""".strip()

NAV_LIST_ITEM_TEMPLATE = """

-<li data-language="{data_language}" tabindex="0">{name}</li>

+<li data-language="{data_language}" tabindex="0">{label}</li>

""".strip()

DIV_TAB_CONTENT_TEMPLATE = """

@@ -38,7 +38,7 @@

# If adding new entries here, also check if you need to update

# tabbed-instructions.js

-TAB_DISPLAY_NAMES = {

+TAB_SECTION_LABELS = {

"desktop-web": "Desktop/Web",

"ios": "iOS",

"android": "Android",

@@ -73,6 +73,7 @@

"not-stream": "From other views",

"via-recent-topics": "Via recent topics",

"via-left-sidebar": "Via left sidebar",

+ "instructions-for-all-platforms": "Instructions for all platforms",

}

@@ -97,7 +98,10 @@ def run(self, lines: List[str]) -> List[str]:

else:

tab_class = "no-tabs"

tab_section["tabs"] = [

- {"tab_name": "null_tab", "start": tab_section["start_tabs_index"]}

+ {

+ "tab_name": "instructions-for-all-platforms",

+ "start": tab_section["start_tabs_index"],

+ }

]

nav_bar = self.generate_nav_bar(tab_section)

content_blocks = self.generate_content_blocks(tab_section, lines)

@@ -137,10 +141,16 @@ def generate_content_blocks(self, tab_section: Dict[str, Any], lines: List[str])

def generate_nav_bar(self, tab_section: Dict[str, Any]) -> str:

li_elements = []

for tab in tab_section["tabs"]:

- li = NAV_LIST_ITEM_TEMPLATE.format(

- data_language=tab.get("tab_name"), name=TAB_DISPLAY_NAMES.get(tab.get("tab_name"))

- )

+ tab_name = tab.get("tab_name")

+ tab_label = TAB_SECTION_LABELS.get(tab_name)

+ if tab_label is None:

+ raise ValueError(

+ f"Tab '{tab_name}' is not present in TAB_SECTION_LABELS in zerver/lib/markdown/tabbed_sections.py"

+ )

+

+ li = NAV_LIST_ITEM_TEMPLATE.format(data_language=tab_name, label=tab_label)

li_elements.append(li)

+

return NAV_BAR_TEMPLATE.format(tabs="\n".join(li_elements))

def parse_tabs(self, lines: List[str]) -> Optional[Dict[str, Any]]:

|

diff --git a/templates/zerver/tests/markdown/test_tabbed_sections_missing_tabs.md b/templates/zerver/tests/markdown/test_tabbed_sections_missing_tabs.md

new file mode 100644

--- /dev/null

+++ b/templates/zerver/tests/markdown/test_tabbed_sections_missing_tabs.md

@@ -0,0 +1,11 @@

+# Heading

+

+{start_tabs}

+{tab|ios}

+iOS instructions

+

+{tab|minix}

+

+Minix instructions. We expect an exception because the minix tab doesn't have a declared label.

+

+{end_tabs}

diff --git a/zerver/tests/test_templates.py b/zerver/tests/test_templates.py

--- a/zerver/tests/test_templates.py

+++ b/zerver/tests/test_templates.py

@@ -76,10 +76,10 @@ def test_markdown_tabbed_sections_extension(self) -> None:

<p>

<div class="code-section no-tabs" markdown="1">

<ul class="nav">

- <li data-language="null_tab" tabindex="0">None</li>

+ <li data-language="instructions-for-all-platforms" tabindex="0">Instructions for all platforms</li>

</ul>

<div class="blocks">

- <div data-language="null_tab" markdown="1"></p>

+ <div data-language="instructions-for-all-platforms" markdown="1"></p>

<p>Instructions for all platforms</p>

<p></div>

</div>

@@ -92,6 +92,15 @@ def test_markdown_tabbed_sections_extension(self) -> None:

expected_html_sans_whitespace = expected_html.replace(" ", "").replace("\n", "")

self.assertEqual(content_sans_whitespace, expected_html_sans_whitespace)

+ def test_markdown_tabbed_sections_missing_tabs(self) -> None:

+ template = get_template("tests/test_markdown.html")

+ context = {

+ "markdown_test_file": "zerver/tests/markdown/test_tabbed_sections_missing_tabs.md",

+ }

+ expected_regex = "^Tab 'minix' is not present in TAB_SECTION_LABELS in zerver/lib/markdown/tabbed_sections.py$"

+ with self.assertRaisesRegex(ValueError, expected_regex):

+ template.render(context)

+

def test_markdown_nested_code_blocks(self) -> None:

template = get_template("tests/test_markdown.html")

context = {

|

markdown/tabbed_sections: Raise exception for missing tab name.

As discovered in #19807, missing tab names are currently silently ignored by our `tabbed_sections` Markdown extension; this is not right. We should raise an exception somewhere so that missing tab names are caught before they make their way into production. This should hopefully be a quick fix! Ideally, we should do this in a manner such that something in `zerver.tests.test_markdown` or some other test file fails when a tab name is missing.

Thanks to @alya for reporting this bug!
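The behaviour requested above, failing loudly when a tab has no declared label, can be sketched roughly as follows. This is an illustrative, self-contained example rather than the extension's actual code: `TAB_LABELS` is a hypothetical two-entry subset of the label table, and `render_nav_items` is a made-up helper name.

```python
from typing import Dict, List

# Hypothetical subset of the label table, for illustration only.
TAB_LABELS: Dict[str, str] = {
    "ios": "iOS",
    "android": "Android",
}


def render_nav_items(tab_names: List[str]) -> List[str]:
    """Render one <li> per tab, raising if a tab has no declared label."""
    items = []
    for name in tab_names:
        label = TAB_LABELS.get(name)
        if label is None:
            # Raise instead of silently rendering "None" as the label.
            raise ValueError(f"Tab '{name}' has no declared label")
        items.append(f'<li data-language="{name}" tabindex="0">{label}</li>')
    return items
```

Because the lookup raises rather than returning `None`, any test that renders a page containing an undeclared tab would fail, which is the outcome the issue asks for.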

|

Hello @zulip/server-markdown members, this issue was labeled with the "area: markdown" label, so you may want to check it out!

<!-- areaLabelAddition -->

I would expect `test_docs` to fail if an exception is thrown when rendering a page, since I believe we render all /help/ pages in those tests.

Hi, I'd like to work on this

@zulipbot claim

Welcome to Zulip, @pradyumn014! We just sent you an invite to collaborate on this repository at https://github.com/zulip/zulip/invitations. Please accept this invite in order to claim this issue and begin a fun, rewarding experience contributing to Zulip!

Here are some tips to get you off to a good start:

- Join me on the [Zulip developers' server](https://chat.zulip.org), to get help, chat about this issue, and meet the other developers.

- [Unwatch this repository](https://help.github.com/articles/unwatching-repositories/), so that you don't get 100 emails a day.

As you work on this issue, you'll also want to refer to the [Zulip code contribution guide](https://zulip.readthedocs.io/en/latest/contributing/index.html), as well as the rest of the developer documentation on that site.

See you on the other side (that is, the pull request side)!

| 2021-09-28T19:46:42

|

zulip/zulip

| 19,928

|

zulip__zulip-19928

|

[

"19899"

] |

7882a1a7f42b54c1140c4d9a4a666ba26141fa68

|

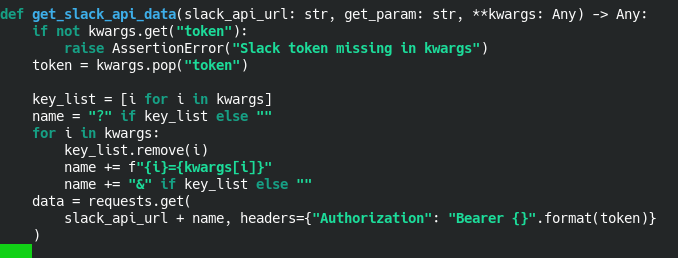

diff --git a/zerver/data_import/slack.py b/zerver/data_import/slack.py

--- a/zerver/data_import/slack.py

+++ b/zerver/data_import/slack.py

@@ -4,6 +4,7 @@

import secrets

import shutil

import subprocess

+import zipfile

from collections import defaultdict

from typing import Any, Dict, Iterator, List, Optional, Set, Tuple, Type, TypeVar

@@ -1290,7 +1291,8 @@ def do_convert_data(original_path: str, output_dir: str, token: str, threads: in

if not os.path.exists(slack_data_dir):

os.makedirs(slack_data_dir)

- subprocess.check_call(["unzip", "-q", original_path, "-d", slack_data_dir])

+ with zipfile.ZipFile(original_path) as zipObj:

+ zipObj.extractall(slack_data_dir)

elif os.path.isdir(original_path):

slack_data_dir = original_path

else:

|

Problem with unzip and get slack user/team

Hi

We have a problem with unzipping the file.

If a folder name contains non-ASCII characters, e.g. ö or Cyrillic, we get the wrong folder name, as in the screenshot.

I found an implementation:

I commented out the subprocess call and used zipfile instead.

Maybe this is better and you can add it to master.

I also had a problem with getting the user and team; the request didn't work for me. With another file everything was OK.

So I changed the function get_slack_api_data in zerver/data_import/slack.py.

Maybe that is better too.

|

Thanks for the report! Can you give an example filename for the Slack export zip file to make it easier to reproduce?

@rht @hackerkid @garg3133 can you help investigate this?

Hello @zulip/server-misc, @zulip/server-production members, this issue was labeled with the "area: export/import", "area: production installer" labels, so you may want to check it out!

<!-- areaLabelAddition -->

[slack_export.zip](https://github.com/zulip/zulip/files/7291506/slack_export.zip)

@timabbott it is an empty zip with 2 folders; I had the problem with these folders

Awesome, that should make this accessible for anyone to investigate and debug.

If I read the OP correctly, using `ZipFile` solves the unicode problem?

Yeah. It seems possible it could be solved by just calling `unzip` in some slightly modified way; I don't see a strong reason to prefer one approach over the other.

I think the problem is that the zip file is created on Windows, and hence it uses Windows encoding. Here is a test:

- First I extract using `7z x`, which produces the folders with the correct names, `stöcklin` and `відкритки`

- Then I make a zip file from a folder, using `zip` command

This newly created zip file will output a proper Unicode name. To prove it, here is a sample zip file:

[відкритки.zip](https://github.com/zulip/zulip/files/7304195/default.zip).

Nevertheless, we should expect a Windows-encoded zip file, and hence should use that `zipfile` Python library.
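As a rough illustration of the approach discussed in this thread, the sketch below extracts an archive with Python's `zipfile` module instead of shelling out to `unzip`. The helper names `make_sample_zip` and `extract_export` are hypothetical. Note the limits of the example: it round-trips an archive created by Python itself (which flags non-ASCII names as UTF-8), so it does not by itself demonstrate the behaviour for a Windows-created archive.

```python
import os
import zipfile
from typing import List


def make_sample_zip(zip_path: str) -> str:
    """Create a small archive with a non-ASCII folder name (sample data)."""
    with zipfile.ZipFile(zip_path, "w") as archive:
        archive.writestr("відкритки/info.txt", "sample")
    return zip_path


def extract_export(zip_path: str, dest_dir: str) -> List[str]:
    """Extract the archive into dest_dir and return the member names."""
    os.makedirs(dest_dir, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest_dir)
        return archive.namelist()
```

A typical use would be to call `make_sample_zip` inside a temporary directory, pass the result to `extract_export`, and check that the folder name on disk matches the name stored in the archive.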

| 2021-10-07T14:33:20

|

|

zulip/zulip

| 19,971

|

zulip__zulip-19971

|

[

"19077"

] |

9cf5a03f2aca83df487d11382090056f8bff7572

|

diff --git a/zerver/views/users.py b/zerver/views/users.py

--- a/zerver/views/users.py

+++ b/zerver/views/users.py

@@ -639,7 +639,7 @@ def get_subscription_backend(

stream_id: int = REQ(json_validator=check_int, path_only=True),

) -> HttpResponse:

target_user = access_user_by_id(user_profile, user_id, for_admin=False)

- (stream, sub) = access_stream_by_id(user_profile, stream_id)

+ (stream, sub) = access_stream_by_id(user_profile, stream_id, allow_realm_admin=True)

subscription_status = {"is_subscribed": subscribed_to_stream(target_user, stream_id)}

|

diff --git a/zerver/tests/test_users.py b/zerver/tests/test_users.py

--- a/zerver/tests/test_users.py

+++ b/zerver/tests/test_users.py

@@ -1320,6 +1320,26 @@ def test_get_user_subscription_status(self) -> None:

)

self.assertTrue(result["is_subscribed"])

+ self.login("iago")

+ stream = self.make_stream("private_stream", invite_only=True)

+ # Unsubscribed admin can check subscription status in a private stream.

+ result = orjson.loads(

+ self.client_get(f"/json/users/{iago.id}/subscriptions/{stream.id}").content

+ )

+ self.assertFalse(result["is_subscribed"])

+

+ # Unsubscribed non-admins cannot check subscription status in a private stream.

+ self.login("shiva")

+ result = self.client_get(f"/json/users/{iago.id}/subscriptions/{stream.id}")

+ self.assert_json_error(result, "Invalid stream id")

+

+ # Subscribed non-admins can check subscription status in a private stream

+ self.subscribe(self.example_user("shiva"), stream.name)

+ result = orjson.loads(

+ self.client_get(f"/json/users/{iago.id}/subscriptions/{stream.id}").content

+ )

+ self.assertFalse(result["is_subscribed"])

+

class ActivateTest(ZulipTestCase):

def test_basics(self) -> None:

|

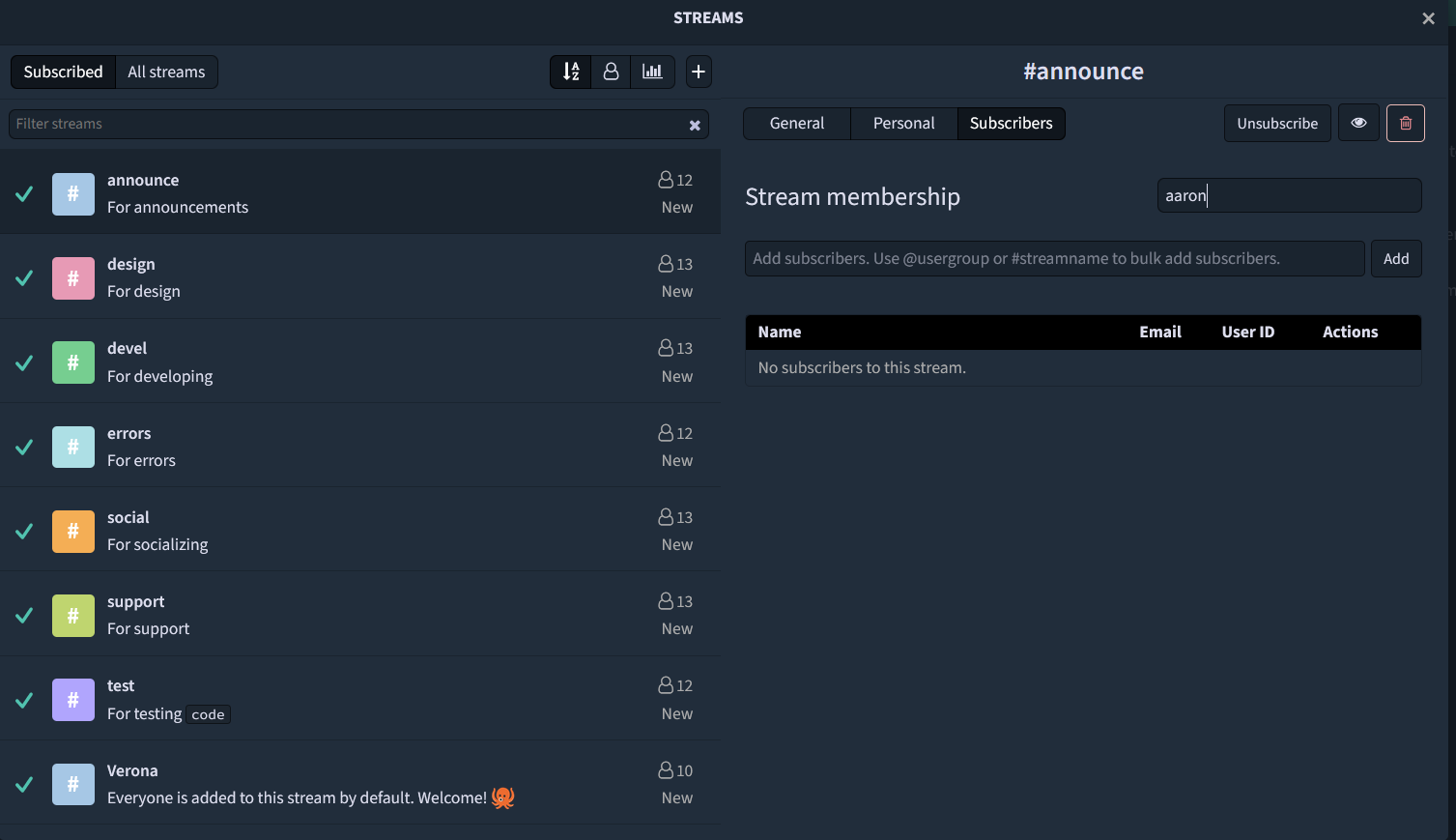

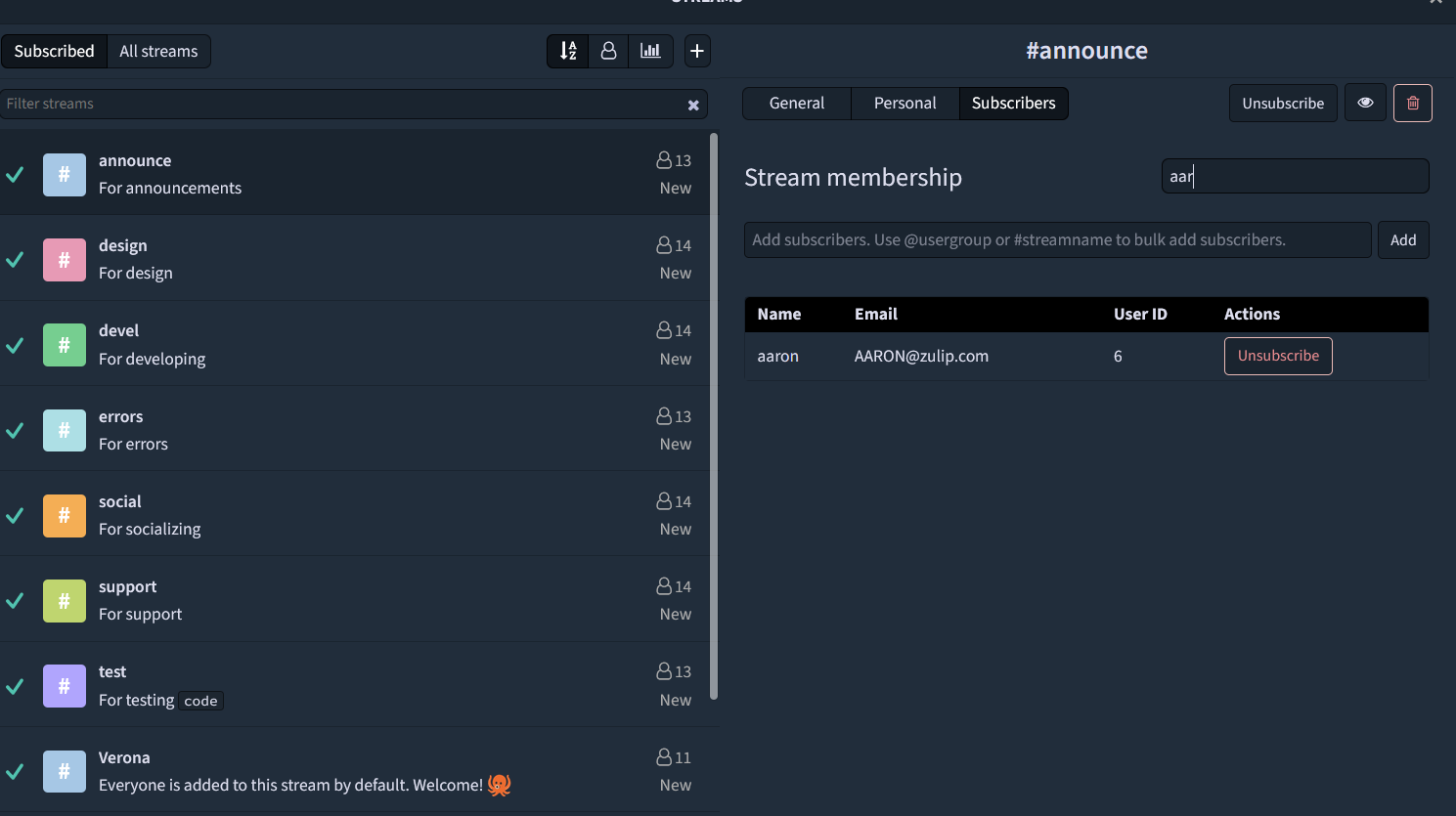

User stream subscription status, private streams, and realm admins

For private streams, the endpoint

```

/users/{user_id}/subscriptions/{stream_id}

```

will return an "Invalid stream id" error even for administrators (I get the stream id from `client.get_streams(include_all_active=True)`, so I should have access to the stream).

My impression is that it would be safe and reasonable to add `allow_realm_admin=True` to this call:

https://github.com/zulip/zulip/blob/706ec9714c76d98c2ac230c3a5adf35dfd0c438a/zerver/views/users.py#L636

and experimentally that fixes it.

So the fix is trivial, but before proposing a patch, I would need to look at creating tests that go with it, which I didn't do yet.

|

@t-vi thanks for the report! I believe your proposed change is correct; sorry that we didn't see this report at the time.

@sahil839 do you want to just do a quick PR changing that and adding tests?

Hello @zulip/server-api, @zulip/server-streams members, this issue was labeled with the "area: stream settings", "area: api" labels, so you may want to check it out!

<!-- areaLabelAddition -->

| 2021-10-15T10:32:31

|

zulip/zulip

| 19,985

|

zulip__zulip-19985

|

[

"19900"

] |

54d037f24a8acaca49c6f44b17f2eb4c333af916

|

diff --git a/tools/lib/capitalization.py b/tools/lib/capitalization.py

--- a/tools/lib/capitalization.py

+++ b/tools/lib/capitalization.py

@@ -72,6 +72,7 @@

r".zuliprc",

r"__\w+\.\w+__",

# Things using "I"

+ r"I understand",

r"I say",

r"I want",

r"I'm",

diff --git a/zerver/lib/onboarding.py b/zerver/lib/onboarding.py

--- a/zerver/lib/onboarding.py

+++ b/zerver/lib/onboarding.py

@@ -68,38 +68,40 @@ def send_initial_pms(user: UserProfile) -> None:

" " + _("We also have a guide for [Setting up your organization]({help_url}).")

).format(help_url=help_url)

- welcome_msg = _("Hello, and welcome to Zulip!")

+ welcome_msg = _("Hello, and welcome to Zulip!") + "👋"

+ demo_org_warning = ""

if user.realm.demo_organization_scheduled_deletion_date is not None:

- welcome_msg += " " + _(

- "Note that this is a [demo organization]({demo_org_help_url}) and will be automatically deleted in 30 days."

+ demo_org_warning = (

+ _(

+ "Note that this is a [demo organization]({demo_org_help_url}) and will be "

+ "**automatically deleted** in 30 days."

+ )

+ + "\n\n"

)

content = "".join(

[

welcome_msg + " ",

_("This is a private message from me, Welcome Bot.") + "\n\n",

- "* "

- + _(

+ _(

"If you are new to Zulip, check out our [Getting started guide]({getting_started_url})!"

+ ),

+ "{organization_setup_text}" + "\n\n",

+ "{demo_org_warning}",

+ _(

+ "I can also help you get set up! Just click anywhere on this message or press `r` to reply."

)

- + "{organization_setup_text}\n",

- "* " + _("[Add a profile picture]({profile_url}).") + "\n",

- "* " + _("[Browse and subscribe to streams]({streams_url}).") + "\n",

- "* " + _("Download our [mobile and desktop apps]({apps_url}).") + " ",

- _("Zulip also works great in a browser.") + "\n",

- "* " + _("You can type `?` to learn more about Zulip shortcuts.") + "\n\n",

- _("Practice sending a few messages by replying to this conversation.") + " ",

- _("Click anywhere on this message or press `r` to reply."),

+ + "\n\n",

+ _("Here are a few messages I understand:") + " ",

+ bot_commands(is_initial_pm=True),

]

)

content = content.format(

- getting_started_url="/help/getting-started-with-zulip",

- apps_url="/apps",

- profile_url="#settings/profile",

- streams_url="#streams/all",

organization_setup_text=organization_setup_text,

+ demo_org_warning=demo_org_warning,

demo_org_help_url="/help/demo-organizations",

+ getting_started_url="/help/getting-started-with-zulip",

)

internal_send_private_message(

@@ -107,22 +109,124 @@ def send_initial_pms(user: UserProfile) -> None:

)

+def bot_commands(is_initial_pm: bool = False) -> str:

+ commands = [

+ "apps",

+ "edit profile",

+ "theme",

+ "streams",

+ "topics",

+ "message formatting",

+ "keyboard shortcuts",

+ ]

+ if is_initial_pm:

+ commands.append("help")

+ return ", ".join(["`" + command + "`" for command in commands]) + "."

+

+

+def select_welcome_bot_response(human_response_lower: str) -> str:

+ # Given the raw (pre-markdown-rendering) content for a private

+ # message from the user to Welcome Bot, select the appropriate reply.

+ if human_response_lower in ["app", "apps"]:

+ return _(

+ "You can [download](/apps) the [mobile and desktop apps](/apps). "

+ "Zulip also works great in a browser."

+ )

+ elif human_response_lower == "profile":

+ return _(

+ "Go to [Profile settings](#settings/profile) "

+ "to add a [profile picture](/help/change-your-profile-picture) "

+ "and edit your [profile information](/help/edit-your-profile)."

+ )

+ elif human_response_lower == "theme":

+ return _(

+ "Go to [Display settings](#settings/display-settings) "

+ "to [switch between the light and dark themes](/help/dark-theme), "

+ "[pick your favorite emoji theme](/help/emoji-and-emoticons#change-your-emoji-set), "

+ "[change your language](/help/change-your-language), "

+ "and make other tweaks to your Zulip experience."

+ )

+ elif human_response_lower in ["stream", "streams", "channel", "channels"]:

+ return "".join(

+ [

+ _(

+ "In Zulip, streams [determine who gets a message](/help/streams-and-topics). "

+ "They are similar to channels in other chat apps."

+ )

+ + "\n\n",

+ _("[Browse and subscribe to streams](#streams/all)."),

+ ]

+ )

+ elif human_response_lower in ["topic", "topics"]:

+ return "".join(

+ [

+ _(

+ "In Zulip, topics [tell you what a message is about](/help/streams-and-topics). "

+ "They are light-weight subjects, very similar to the subject line of an email."

+ )

+ + "\n\n",

+ _(

+ "Check out [Recent topics](#recent_topics) to see what's happening! "

+ 'You can return to this conversation by clicking "Private messages" in the upper left.'

+ ),

+ ]

+ )

+ elif human_response_lower in ["keyboard", "shortcuts", "keyboard shortcuts"]:

+ return "".join(

+ [

+ _(

+ "Zulip's [keyboard shortcuts](#keyboard-shortcuts) "

+ "let you navigate the app quickly and efficiently."

+ )

+ + "\n\n",

+ _("Press `?` any time to see a [cheat sheet](#keyboard-shortcuts)."),

+ ]

+ )

+ elif human_response_lower in ["formatting", "message formatting"]:

+ return "".join(

+ [

+ _(

+ "Zulip uses [Markdown](/help/format-your-message-using-markdown), "

+ "an intuitive format for **bold**, *italics*, bulleted lists, and more. "

+ "Click [here](#message-formatting) for a cheat sheet."

+ )

+ + "\n\n",

+ _(

+ "Check out our [messaging tips](/help/messaging-tips) "

+ "to learn about emoji reactions, code blocks and much more!"

+ ),

+ ]

+ )

+ elif human_response_lower in ["help", "?"]:

+ return "".join(

+ [

+ _("Here are a few messages I understand:") + " ",

+ bot_commands() + "\n\n",

+ _(

+ "Check out our [Getting started guide](/help/getting-started-with-zulip), "

+ "or browse the [Help center](/help/) to learn more!"

+ ),

+ ]

+ )

+ else:

+ return "".join(

+ [

+ _(

+ "I’m sorry, I did not understand your message. Please try one of the following commands:"

+ )

+ + " ",

+ bot_commands(),

+ ]

+ )

+

+

def send_welcome_bot_response(send_request: SendMessageRequest) -> None:

+ """Given the send_request object for a private message from the user

+ to welcome-bot, trigger the welcome-bot reply."""

welcome_bot = get_system_bot(settings.WELCOME_BOT, send_request.message.sender.realm_id)

- human_recipient_id = send_request.message.sender.recipient_id

- assert human_recipient_id is not None

- if Message.objects.filter(sender=welcome_bot, recipient_id=human_recipient_id).count() < 2:

- content = (

- _("Congratulations on your first reply!") + " "

- ":tada:"

- "\n"

- "\n"

- + _(

- "Feel free to continue using this space to practice your new messaging "

- "skills. Or, try clicking on some of the stream names to your left!"

- )

- )

- internal_send_private_message(welcome_bot, send_request.message.sender, content)

+ human_response_lower = send_request.message.content.lower()

+ content = select_welcome_bot_response(human_response_lower)

+ internal_send_private_message(welcome_bot, send_request.message.sender, content)

@transaction.atomic

|

diff --git a/zerver/tests/test_tutorial.py b/zerver/tests/test_tutorial.py

--- a/zerver/tests/test_tutorial.py

+++ b/zerver/tests/test_tutorial.py

@@ -32,22 +32,136 @@ def test_tutorial_status(self) -> None:

user = self.example_user("hamlet")

self.assertEqual(user.tutorial_status, expected_db_status)

- def test_single_response_to_pm(self) -> None:

+ def test_response_to_pm_for_app(self) -> None:

user = self.example_user("hamlet")

bot = get_system_bot(settings.WELCOME_BOT, user.realm_id)

- content = "whatever"

+ messages = ["app", "Apps"]

+ self.login_user(user)

+ for content in messages:

+ self.send_personal_message(user, bot, content)

+ expected_response = (

+ "You can [download](/apps) the [mobile and desktop apps](/apps). "

+ "Zulip also works great in a browser."

+ )

+ self.assertEqual(most_recent_message(user).content, expected_response)

+

+ def test_response_to_pm_for_edit(self) -> None:

+ user = self.example_user("hamlet")

+ bot = get_system_bot(settings.WELCOME_BOT, user.realm_id)

+ messages = ["profile", "Profile"]

+ self.login_user(user)

+ for content in messages:

+ self.send_personal_message(user, bot, content)

+ expected_response = (

+ "Go to [Profile settings](#settings/profile) "

+ "to add a [profile picture](/help/change-your-profile-picture) "

+ "and edit your [profile information](/help/edit-your-profile)."

+ )

+ self.assertEqual(most_recent_message(user).content, expected_response)

+

+ def test_response_to_pm_for_theme(self) -> None:

+ user = self.example_user("hamlet")

+ bot = get_system_bot(settings.WELCOME_BOT, user.realm_id)

+ messages = ["theme", "Theme"]

+ self.login_user(user)

+ for content in messages:

+ self.send_personal_message(user, bot, content)

+ expected_response = (

+ "Go to [Display settings](#settings/display-settings) "

+ "to [switch between the light and dark themes](/help/dark-theme), "

+ "[pick your favorite emoji theme](/help/emoji-and-emoticons#change-your-emoji-set), "

+ "[change your language](/help/change-your-language), and make other tweaks to your Zulip experience."

+ )

+ self.assertEqual(most_recent_message(user).content, expected_response)

+

+ def test_response_to_pm_for_stream(self) -> None:

+ user = self.example_user("hamlet")

+ bot = get_system_bot(settings.WELCOME_BOT, user.realm_id)

+ messages = ["Streams", "streams", "channels"]

+ self.login_user(user)

+ for content in messages:

+ self.send_personal_message(user, bot, content)

+ expected_response = (

+ "In Zulip, streams [determine who gets a message](/help/streams-and-topics). "

+ "They are similar to channels in other chat apps.\n\n"

+ "[Browse and subscribe to streams](#streams/all)."

+ )

+ self.assertEqual(most_recent_message(user).content, expected_response)

+

+ def test_response_to_pm_for_topic(self) -> None:

+ user = self.example_user("hamlet")

+ bot = get_system_bot(settings.WELCOME_BOT, user.realm_id)

+ messages = ["Topics", "topics"]

+ self.login_user(user)

+ for content in messages:

+ self.send_personal_message(user, bot, content)

+ expected_response = (

+ "In Zulip, topics [tell you what a message is about](/help/streams-and-topics). "

+ "They are light-weight subjects, very similar to the subject line of an email.\n\n"

+ "Check out [Recent topics](#recent_topics) to see what's happening! "

+ 'You can return to this conversation by clicking "Private messages" in the upper left.'

+ )

+ self.assertEqual(most_recent_message(user).content, expected_response)

+

+ def test_response_to_pm_for_shortcuts(self) -> None:

+ user = self.example_user("hamlet")

+ bot = get_system_bot(settings.WELCOME_BOT, user.realm_id)

+ messages = ["Keyboard shortcuts", "shortcuts", "Shortcuts"]

+ self.login_user(user)

+ for content in messages:

+ self.send_personal_message(user, bot, content)

+ expected_response = (

+ "Zulip's [keyboard shortcuts](#keyboard-shortcuts) "

+ "let you navigate the app quickly and efficiently.\n\n"

+ "Press `?` any time to see a [cheat sheet](#keyboard-shortcuts)."

+ )

+ self.assertEqual(most_recent_message(user).content, expected_response)

+

+ def test_response_to_pm_for_formatting(self) -> None:

+ user = self.example_user("hamlet")

+ bot = get_system_bot(settings.WELCOME_BOT, user.realm_id)

+ messages = ["message formatting", "Formatting"]

+ self.login_user(user)

+ for content in messages:

+ self.send_personal_message(user, bot, content)

+ expected_response = (

+ "Zulip uses [Markdown](/help/format-your-message-using-markdown), "

+ "an intuitive format for **bold**, *italics*, bulleted lists, and more. "

+ "Click [here](#message-formatting) for a cheat sheet.\n\n"

+ "Check out our [messaging tips](/help/messaging-tips) to learn about emoji reactions, "

+ "code blocks and much more!"

+ )

+ self.assertEqual(most_recent_message(user).content, expected_response)

+

+ def test_response_to_pm_for_help(self) -> None:

+ user = self.example_user("hamlet")

+ bot = get_system_bot(settings.WELCOME_BOT, user.realm_id)

+ messages = ["help", "Help", "?"]

+ self.login_user(user)

+ for content in messages:

+ self.send_personal_message(user, bot, content)

+ expected_response = (

+ "Here are a few messages I understand: "

+ "`apps`, `edit profile`, `theme`, "

+ "`streams`, `topics`, `message formatting`, `keyboard shortcuts`.\n\n"

+ "Check out our [Getting started guide](/help/getting-started-with-zulip), "

+ "or browse the [Help center](/help/) to learn more!"

+ )

+ self.assertEqual(most_recent_message(user).content, expected_response)

+

+ def test_response_to_pm_for_undefined(self) -> None:

+ user = self.example_user("hamlet")

+ bot = get_system_bot(settings.WELCOME_BOT, user.realm_id)

+ messages = ["Hello", "HAHAHA", "OKOK", "LalulaLapas"]

self.login_user(user)

- self.send_personal_message(user, bot, content)

- user_messages = message_stream_count(user)

- expected_response = (

- "Congratulations on your first reply! :tada:\n\n"

- "Feel free to continue using this space to practice your new messaging "

- "skills. Or, try clicking on some of the stream names to your left!"

- )

- self.assertEqual(most_recent_message(user).content, expected_response)

- # Welcome bot shouldn't respond to further PMs.

- self.send_personal_message(user, bot, content)

- self.assertEqual(message_stream_count(user), user_messages + 1)

+ for content in messages:

+ self.send_personal_message(user, bot, content)

+ expected_response = (

+ "I’m sorry, I did not understand your message. Please try one of the following commands: "

+ "`apps`, `edit profile`, `theme`, `streams`, "

+ "`topics`, `message formatting`, `keyboard shortcuts`."

+ )

+ self.assertEqual(most_recent_message(user).content, expected_response)

def test_no_response_to_group_pm(self) -> None:

user1 = self.example_user("hamlet")

|

Make Welcome Bot more interactive

At present, Welcome Bot gives the user lots of information all at once. It may be unappealing to process so much content when one is just starting to explore a new app. Also, the bot doesn't offer motivation to engage with it, which would draw the user into sending their first Zulip messages.

To try to address this, we should make the Welcome Bot more interactive. It can provide a small amount of key information to start with, offering to teach the user more upon request.

An initial set of messages to be implemented is described below. In general, we should make it easy to create additional message/response interactions.

# New initial messages

## For a non-admin user

Hello, and welcome to Zulip! This is a private message from me, Welcome Bot. If you are new to Zulip, check out our [Getting started guide](https://zulip.com/help/getting-started-with-zulip)!

----------

I can help you get set up! Just click anywhere on this message or press `r` to reply.

Here are a few messages I understand: “**apps**”, “**edit profile**”, “**dark mode**”, ”**light mode**”, “**streams**”, and “**topics**”.

----------

If you would like more help, send “**help**”, or type `?` to learn about Zulip’s keyboard shortcuts.

----------

Notes:

- We should have tests to make sure the list of advertised messages matches the messages the bot actually understands.

- It should be possible to manually determine the order of commands in the message (i.e. not just alphabetical).

# Additional responses to user's messages

In general, I think it’s helpful to be a bit flexible about the keywords, but I’m generally not too concerned about getting all the possible variants of what people type.

We should always ignore capitalization and formatting.

## Apps

(also: `app`, `applications`, `[any word] apps`)

- You can [download](https://zulip.com/apps) the [mobile and desktop apps](https://zulip.com/apps). Zulip also works great in a browser.

## Edit profile

(also: `profile`)

- Go to [Profile settings](http://[org URL]/#settings/profile) to [add a profile picture](https://zulip.com/help/change-your-profile-picture) and [edit your profile information](https://zulip.com/help/edit-your-profile).

**Messages for “dark mode”, ”light mode”, “streams”, and “topics” will be specified in more detail once we have the initial framework.**

## Any messages not on the list

- I’m sorry, I did not understand your message. Please try one of the following commands: "**apps**”, “**edit profile**”, “**dark mode**”, ”**light mode**”, “**streams**”, and “**topics**”.
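The matching rules above (ignore capitalization and formatting, accept a few aliases) could be sketched roughly as follows. This is only an illustration, not the Welcome Bot's actual implementation: `ALIASES`, `normalize`, and `match_command` are hypothetical names, and only the aliases listed above for `apps` and `edit profile` are included.

```python
import re
from typing import Dict, List, Optional

# Hypothetical alias table; keys are the advertised commands, in display order.
ALIASES: Dict[str, List[str]] = {
    "apps": ["apps", "app", "applications"],
    "edit profile": ["edit profile", "profile"],
}


def normalize(text: str) -> str:
    """Drop common Markdown emphasis characters, lowercase, collapse whitespace."""
    without_markup = re.sub(r"[*_`~]", "", text)
    return " ".join(without_markup.lower().split())


def match_command(text: str) -> Optional[str]:
    """Return the advertised command a message maps to, or None if unknown."""
    cleaned = normalize(text)
    for command, aliases in ALIASES.items():
        if cleaned in aliases:
            return command
    # Handles the "[any word] apps" variant, e.g. "desktop apps".
    words = cleaned.split()
    if len(words) == 2 and words[1] == "apps":
        return "apps"
    return None
```

Because a Python `dict` preserves insertion order, the list of advertised commands could be generated from the keys of the same table, which keeps the order manual (not alphabetical) and makes it easy to test that the advertised list matches what the bot understands.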

# Other messages

We don’t want to spam the user with it all the time, but we may want to remind them about the commands the bot knows. Ideally, we should probably do this every time the last reminder scrolls off the screen. It would also be OK to do it every N messages (we should experiment to find a good N). This could be done as a follow-up after we have the initial version.

- **Message (same as in the intro)**: Here are a few messages I understand: “**apps**”, “**edit profile**”, “**dark mode**”, ”**light mode**”, “**streams**”, and “**topics**”.

[CZO discussion thread](https://chat.zulip.org/#narrow/stream/101-design/topic/welcome.20bot)

|

Hello @zulip/server-bots, @zulip/server-onboarding members, this issue was labeled with the "area: onboarding", "area: bots" labels, so you may want to check it out!

<!-- areaLabelAddition -->

@zulipbot claim

> * It should be possible to manually determine the order of commands in the message (i.e. not just alphabetical).

I am unable to understand what this means.

@raghupalash I was just pointing out that the commands suggested by the bot (“apps”, “edit profile”, “dark mode”, ”light mode”, “streams”, and “topics”) are not in alphabetical order.

Okay, thanks @alya! Also, if you have the time, the PR is ready for a review (or at least I think it is ready).

Awesome, thanks @raghupalash ! Here are the remaining messages to add:

## Dark mode, light mode

(also: `night mode`, `day mode`, `theme`, `mode`)

- Go to [Display settings](http://[org URL]/#settings/display-settings) to [switch between light and dark mode](https://zulip.com/help/night-mode), [pick your favorite emoji theme](https://zulip.com/help/emoji-and-emoticons#change-your-emoji-set), [change your language](https://zulip.com/help/change-your-language), and make other tweaks to your Zulip experience.

## Streams

(also: `channels`)

In Zulip, streams [determine who gets a message](https://zulip.com/help/streams-and-topics). They are similar to channels in other apps.

[Browse and subscribe to streams](http://[org URL]/#streams/all).

## Topics

In Zulip, topics [tell you what a message is about](https://zulip.com/help/streams-and-topics). They are light-weight subjects, very similar to the subject line of an email.

Check out [Recent topics](http://[org URL]/#recent_topics) to see what's happening!

## Keyboard shortcuts

(also: `shortcuts`)

Zulip's [keyboard shortcuts](http://[org URL]/#keyboard-shortcuts) let you navigate the app quickly and efficiently.

Press `?` any time to see a [cheat sheet](http://[org URL]/#keyboard-shortcuts).

## Message formatting

(also: `format`, `formatting`)

Zulip uses [Markdown](https://zulip.com/help/format-your-message-using-markdown), an intuitive format for bold, italics, bulleted lists, and more. Click [here](http://[org URL]/#keyboard-shortcuts) for a cheat sheet.

<Is there a URL we can use in the message above to make sure it opens to the keyboard shortcuts tab?>

Check out our [messaging tips](https://zulip.com/help/messaging-tips) to learn about [emoji reactions](https://zulip.com/help/emoji-reactions), [code blocks](https://zulip.com/help/code-blocks) and much more!

## Help

(also: `?`)

Here are a few messages I understand: < list >

Check out our [Getting started guide](https://zulip.com/help/getting-started-with-zulip), or browse the [help documentation](https://zulip.com/help) to learn more!

The list of messages the bot says it understands becomes:

“apps”, “edit profile”, “dark mode”, ”light mode”, “streams”, “topics”, "message formatting", and "keyboard shortcuts".

I haven't checked whether this is currently the case, but for admins, we should keep the "We also have a guide for [Setting up your organization]({help_url})." line.

Alright, I'm on it. Btw I didn't touch the code that was related to organization setup, it was left as it is.

> <Is there a URL we can use in the message above to make sure it opens to the keyboard shortcuts tab?>

did you mean the message formatting tab? I figured that we need to go to `#message-formatting`.

> > <Is there a URL we can use in the message above to make sure it opens to the keyboard shortcuts tab?>

>

> did you mean the message formatting tab? I figured that we need to go to `#message-formatting`.

Ah, yeah, that's right -- thanks!

| 2021-10-16T08:48:14

|

zulip/zulip

| 19,987

|

zulip__zulip-19987

|

[

"18409"

] |

f6c78a35a4d875cd2617dd9672472ffa2c44ac6a

|

diff --git a/version.py b/version.py

--- a/version.py

+++ b/version.py

@@ -33,7 +33,7 @@

# Changes should be accompanied by documentation explaining what the

# new level means in templates/zerver/api/changelog.md, as well as

# "**Changes**" entries in the endpoint's documentation in `zulip.yaml`.

-API_FEATURE_LEVEL = 105

+API_FEATURE_LEVEL = 106

# Bump the minor PROVISION_VERSION to indicate that folks should provision

# only when going from an old version of the code to a newer version. Bump

@@ -48,4 +48,4 @@

# historical commits sharing the same major version, in which case a

# minor version bump suffices.

-PROVISION_VERSION = "163.0"

+PROVISION_VERSION = "164.0"

diff --git a/zerver/views/users.py b/zerver/views/users.py

--- a/zerver/views/users.py

+++ b/zerver/views/users.py

@@ -163,7 +163,7 @@ def update_user_backend(

request: HttpRequest,

user_profile: UserProfile,

user_id: int,

- full_name: Optional[str] = REQ(default=None, json_validator=check_string),

+ full_name: Optional[str] = REQ(default=None),

role: Optional[int] = REQ(

default=None,

json_validator=check_int_in(

|

diff --git a/zerver/tests/test_audit_log.py b/zerver/tests/test_audit_log.py

--- a/zerver/tests/test_audit_log.py

+++ b/zerver/tests/test_audit_log.py

@@ -206,7 +206,7 @@ def test_change_full_name(self) -> None:

start = timezone_now()

new_name = "George Hamletovich"

self.login("iago")

- req = dict(full_name=orjson.dumps(new_name).decode())

+ req = dict(full_name=new_name)

result = self.client_patch("/json/users/{}".format(self.example_user("hamlet").id), req)

self.assertTrue(result.status_code == 200)

query = RealmAuditLog.objects.filter(

diff --git a/zerver/tests/test_users.py b/zerver/tests/test_users.py

--- a/zerver/tests/test_users.py

+++ b/zerver/tests/test_users.py

@@ -377,7 +377,7 @@ def test_admin_user_can_change_full_name(self) -> None:

new_name = "new name"

self.login("iago")

hamlet = self.example_user("hamlet")

- req = dict(full_name=orjson.dumps(new_name).decode())

+ req = dict(full_name=new_name)

result = self.client_patch(f"/json/users/{hamlet.id}", req)

self.assert_json_success(result)

hamlet = self.example_user("hamlet")

@@ -385,21 +385,21 @@ def test_admin_user_can_change_full_name(self) -> None:

def test_non_admin_cannot_change_full_name(self) -> None:

self.login("hamlet")

- req = dict(full_name=orjson.dumps("new name").decode())

+ req = dict(full_name="new name")

result = self.client_patch("/json/users/{}".format(self.example_user("othello").id), req)

self.assert_json_error(result, "Insufficient permission")

def test_admin_cannot_set_long_full_name(self) -> None:

new_name = "a" * (UserProfile.MAX_NAME_LENGTH + 1)

self.login("iago")

- req = dict(full_name=orjson.dumps(new_name).decode())

+ req = dict(full_name=new_name)

result = self.client_patch("/json/users/{}".format(self.example_user("hamlet").id), req)

self.assert_json_error(result, "Name too long!")

def test_admin_cannot_set_short_full_name(self) -> None:

new_name = "a"

self.login("iago")

- req = dict(full_name=orjson.dumps(new_name).decode())

+ req = dict(full_name=new_name)

result = self.client_patch("/json/users/{}".format(self.example_user("hamlet").id), req)

self.assert_json_error(result, "Name too short!")

@@ -407,7 +407,7 @@ def test_not_allowed_format(self) -> None:

# Name of format "Alice|999" breaks in Markdown

new_name = "iago|72"

self.login("iago")

- req = dict(full_name=orjson.dumps(new_name).decode())

+ req = dict(full_name=new_name)

result = self.client_patch("/json/users/{}".format(self.example_user("hamlet").id), req)

self.assert_json_error(result, "Invalid format!")

@@ -415,21 +415,21 @@ def test_allowed_format_complex(self) -> None:

# Adding characters after r'|d+' doesn't break Markdown

new_name = "Hello- 12iago|72k"

self.login("iago")

- req = dict(full_name=orjson.dumps(new_name).decode())

+ req = dict(full_name=new_name)

result = self.client_patch("/json/users/{}".format(self.example_user("hamlet").id), req)

self.assert_json_success(result)

def test_not_allowed_format_complex(self) -> None:

new_name = "Hello- 12iago|72"

self.login("iago")

- req = dict(full_name=orjson.dumps(new_name).decode())

+ req = dict(full_name=new_name)

result = self.client_patch("/json/users/{}".format(self.example_user("hamlet").id), req)

self.assert_json_error(result, "Invalid format!")

def test_admin_cannot_set_full_name_with_invalid_characters(self) -> None:

new_name = "Opheli*"

self.login("iago")

- req = dict(full_name=orjson.dumps(new_name).decode())

+ req = dict(full_name=new_name)

result = self.client_patch("/json/users/{}".format(self.example_user("hamlet").id), req)

self.assert_json_error(result, "Invalid characters in name!")

|

Fix encoding of `full_name` parameter under `update_user` endpoint to not use `json_validator`.

The `update_user_backend` view has a `full_name` parameter which uses `json_validator` for validating string inputs. This is not necessary and hence can be removed, along the lines of similar changes made in #18356. For reference, see: https://chat.zulip.org/#narrow/stream/3-backend/topic/API.20format.20cleanup . Preferably, this should be done after zulip/python-zulip-api#683.
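To make the difference concrete, here is a small sketch of what a client sends in each case. It uses only the standard library (`json` stands in for the `orjson` calls seen in the tests), and the form-encoding shown is an assumption about how a typical client would build the request body, not a description of Zulip's test client.

```python
import json
from urllib.parse import urlencode

name = "George Hamletovich"

# With a JSON validator on the parameter, the client must JSON-encode the
# string first, so the value travels wrapped in double quotes.
json_encoded_body = urlencode({"full_name": json.dumps(name)})

# Without the validator, the plain string is sent as-is.
plain_body = urlencode({"full_name": name})

print(json_encoded_body)
print(plain_body)
```

The second form is what the updated tests in this change send (`dict(full_name=new_name)`).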

|

@zulipbot add "area: api"

Hello @zulip/server-api members, this issue was labeled with the "area: api" label, so you may want to check it out!

<!-- areaLabelAddition -->

This is part of the bigger issue #18035, a major part of which was already completed in #18356.

| 2021-10-16T17:57:07

|

zulip/zulip

| 19,996

|

zulip__zulip-19996

|

[

"19938"

] |

9381a3bd45969892a7c5e7817765a46415927616

|

diff --git a/zerver/webhooks/github/view.py b/zerver/webhooks/github/view.py

--- a/zerver/webhooks/github/view.py

+++ b/zerver/webhooks/github/view.py

@@ -29,6 +29,14 @@

fixture_to_headers = get_http_headers_from_filename("HTTP_X_GITHUB_EVENT")

+TOPIC_FOR_DISCUSSION = "{repo} discussion #{number}: {title}"

+DISCUSSION_TEMPLATE = (

+ "{author} started a new discussion [{title}]({url}) in {category}:\n```quote\n{body}\n```"

+)

+DISCUSSION_COMMENT_TEMPLATE = (

+ "{author} [commented]({comment_url}) on [discussion]({discussion_url}):\n```quote\n{body}\n```"

+)

+

class Helper:

def __init__(

@@ -254,6 +262,27 @@ def get_push_commits_body(helper: Helper) -> str:

)

+def get_discussion_body(helper: Helper) -> str:

+ payload = helper.payload

+ return DISCUSSION_TEMPLATE.format(

+ author=get_sender_name(payload),

+ title=payload["discussion"]["title"],

+ url=payload["discussion"]["html_url"],

+ body=payload["discussion"]["body"],

+ category=payload["discussion"]["category"]["name"],

+ )

+

+

+def get_discussion_comment_body(helper: Helper) -> str:

+ payload = helper.payload

+ return DISCUSSION_COMMENT_TEMPLATE.format(

+ author=get_sender_name(payload),

+ body=payload["comment"]["body"],

+ discussion_url=payload["discussion"]["html_url"],

+ comment_url=payload["comment"]["html_url"],

+ )

+

+

def get_public_body(helper: Helper) -> str:

payload = helper.payload

return "{} made the repository [{}]({}) public.".format(

@@ -602,6 +631,12 @@ def get_subject_based_on_type(payload: Dict[str, Any], event: str) -> str:

return get_organization_name(payload)

elif event == "check_run":

return f"{get_repository_name(payload)} / checks"

+ elif event.startswith("discussion"):

+ return TOPIC_FOR_DISCUSSION.format(

+ repo=get_repository_name(payload),

+ number=payload["discussion"]["number"],

+ title=payload["discussion"]["title"],

+ )

return get_repository_name(payload)

@@ -614,6 +649,8 @@ def get_subject_based_on_type(payload: Dict[str, Any], event: str) -> str:

"delete": partial(get_create_or_delete_body, action="deleted"),

"deployment": get_deployment_body,

"deployment_status": get_change_deployment_status_body,

+ "discussion": get_discussion_body,

+ "discussion_comment": get_discussion_comment_body,

"fork": get_fork_body,

"gollum": get_wiki_pages_body,

"issue_comment": get_issue_comment_body,

|

diff --git a/zerver/webhooks/github/tests.py b/zerver/webhooks/github/tests.py

--- a/zerver/webhooks/github/tests.py

+++ b/zerver/webhooks/github/tests.py

@@ -12,6 +12,7 @@

TOPIC_ORGANIZATION = "baxterandthehackers organization"

TOPIC_BRANCH = "public-repo / changes"

TOPIC_WIKI = "public-repo / wiki pages"

+TOPIC_DISCUSSION = "public-repo discussion #90: Welcome to discussions!"

class GitHubWebhookTest(WebhookTestCase):

@@ -483,3 +484,11 @@ def test_team_edited_with_unsupported_keys(self) -> None:

msg,

)

self.assertTrue(stack_info)

+

+ def test_discussion_msg(self) -> None:

+ expected_message = "Codertocat started a new discussion [Welcome to discussions!](https://github.com/baxterthehacker/public-repo/discussions/90) in General:\n```quote\nWe're glad to have you here!\n```"

+ self.check_webhook("discussion", TOPIC_DISCUSSION, expected_message)

+

+ def test_discussion_comment_msg(self) -> None:

+ expected_message = "Codertocat [commented](https://github.com/baxterthehacker/public-repo/discussions/90#discussioncomment-544078) on [discussion](https://github.com/baxterthehacker/public-repo/discussions/90):\n```quote\nI have so many questions to ask you!\n```"

+ self.check_webhook("discussion_comment", TOPIC_DISCUSSION, expected_message)

|

GitHub webhook: add support for discussions

The GitHub webhook currently doesn't support discussions. This is the response for a "discussion created" event:

```json

{

"result":"error",

"msg":"The 'discussion:created' event isn't currently supported by the GitHub webhook",

"webhook_name":"GitHub",

"event_type":"discussion:created",

"code":"UNSUPPORTED_WEBHOOK_EVENT_TYPE"

}

```

It would be nice if discussion events could be supported by the GitHub webhook.
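As a rough sketch of what supporting this event might involve, a handler could derive a per-discussion topic from the payload. The payload below is a hand-written, heavily trimmed stand-in, and the field names (`repository.name`, `discussion.number`, `discussion.title`) are assumptions about the event's shape, not a complete description of it; `discussion_topic` is a hypothetical helper.

```python
from typing import Any, Dict

# Topic layout: one topic per discussion, so comments thread together.
TOPIC_FOR_DISCUSSION = "{repo} discussion #{number}: {title}"


def discussion_topic(payload: Dict[str, Any]) -> str:
    """Build a topic name from a (trimmed) discussion event payload."""
    return TOPIC_FOR_DISCUSSION.format(
        repo=payload["repository"]["name"],
        number=payload["discussion"]["number"],
        title=payload["discussion"]["title"],
    )


# Hand-written sample; a real payload carries many more fields.
sample_payload = {
    "repository": {"name": "public-repo"},
    "discussion": {"number": 90, "title": "Welcome to discussions!"},
}

print(discussion_topic(sample_payload))
```

Keying the topic on the discussion number means both "discussion" and "discussion_comment" events for the same discussion would land in the same topic.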

|

Hello @zulip/server-integrations members, this issue was labeled with the "area: integrations" label, so you may want to check it out!

<!-- areaLabelAddition -->

This seems worth doing, and is a good issue for new contributors. See https://zulip.com/api/incoming-webhooks-overview for our documentation on writing integrations; in this case, one just needs to add features to the existing GitHub integration.

I would like to try working on this issue!

@zulipbot claim

Welcome to Zulip, @parthn2! We just sent you an invite to collaborate on this repository at https://github.com/zulip/zulip/invitations. Please accept this invite in order to claim this issue and begin a fun, rewarding experience contributing to Zulip!

Here are some tips to get you off to a good start:

- Join me on the [Zulip developers' server](https://chat.zulip.org), to get help, chat about this issue, and meet the other developers.

- [Unwatch this repository](https://help.github.com/articles/unwatching-repositories/), so that you don't get 100 emails a day.

As you work on this issue, you'll also want to refer to the [Zulip code contribution guide](https://zulip.readthedocs.io/en/latest/contributing/index.html), as well as the rest of the developer documentation on that site.

See you on the other side (that is, the pull request side)!

@zulipbot claim

Hello @madrix01, it looks like you've currently claimed 1 issue in this repository. We encourage new contributors to focus their efforts on at most 1 issue at a time, so please complete your work on your other claimed issues before trying to claim this issue again.

We look forward to your valuable contributions!

@zulipbot claim

| 2021-10-18T12:06:57

|

zulip/zulip

| 20,015

|

zulip__zulip-20015

|

[

"16013"

] |

4839b7ed272a9526ed4c055c3d7a3a8660ae5714

|

diff --git a/zerver/lib/markdown/__init__.py b/zerver/lib/markdown/__init__.py

--- a/zerver/lib/markdown/__init__.py

+++ b/zerver/lib/markdown/__init__.py

@@ -1795,7 +1795,22 @@ def __init__(

options.log_errors = False

compiled_re2 = re2.compile(prepare_linkifier_pattern(source_pattern), options=options)

- self.format_string = format_string

+

+ # Find percent-encoded bytes and escape them from the python

+ # interpolation. That is:

+ # %(foo)s -> %(foo)s

+ # %% -> %%

+ # %ab -> %%ab

+ # %%ab -> %%ab

+ # %%%ab -> %%%%ab

+ #

+ # We do this here, rather than before storing, to make edits

+ # to the underlying linkifier more straightforward, and

+ # because JS does not have a real formatter.

+ self.format_string = re.sub(

+ r"(?<!%)(%%)*%([a-fA-F0-9][a-fA-F0-9])", r"\1%%\2", format_string

+ )

+

super().__init__(compiled_re2, md)

def handleMatch( # type: ignore[override] # supertype incompatible with supersupertype

diff --git a/zerver/migrations/0368_alter_realmfilter_url_format_string.py b/zerver/migrations/0368_alter_realmfilter_url_format_string.py

new file mode 100644

--- /dev/null

+++ b/zerver/migrations/0368_alter_realmfilter_url_format_string.py

@@ -0,0 +1,20 @@

+# Generated by Django 3.2.8 on 2021-10-20 23:42

+

+from django.db import migrations, models

+

+from zerver.models import filter_format_validator

+

+

+class Migration(migrations.Migration):

+

+ dependencies = [

+ ("zerver", "0367_scimclient"),

+ ]

+

+ operations = [

+ migrations.AlterField(

+ model_name="realmfilter",

+ name="url_format_string",

+ field=models.TextField(validators=[filter_format_validator]),

+ ),

+ ]

diff --git a/zerver/models.py b/zerver/models.py

--- a/zerver/models.py

+++ b/zerver/models.py

@@ -1120,10 +1120,36 @@ def filter_pattern_validator(value: str) -> Pattern[str]:

def filter_format_validator(value: str) -> None:

- regex = re.compile(r"^([\.\/:a-zA-Z0-9#_?=&;~-]+%\(([a-zA-Z0-9_-]+)\)s)+[/a-zA-Z0-9#_?=&;~-]*$")

+ """Verifies URL-ness, and then %(foo)s.

+

+ URLValidator is assumed to catch anything which is malformed as a

+ URL; the regex then verifies the format-string pieces.

+ """

+

+ URLValidator()(value)

+

+ regex = re.compile(

+ r"""

+ ^

+ (

+ [^%] # Any non-percent,

+ | # OR...

+ % ( # A %, which can mean:

+ \( [a-zA-Z0-9_-]+ \) s # Interpolation group

+ | # OR

+ % # %%, which is an escaped %

+ | # OR

+ [0-9a-fA-F][0-9a-fA-F] # URL percent-encoded bytes, which we

+ # special-case in markdown translation

+ )

+ )+ # Those happen one or more times

+ $

+ """,

+ re.VERBOSE,

+ )

if not regex.match(value):

- raise ValidationError(_("Invalid URL format string."))

+ raise ValidationError(_("Invalid format string in URL."))

class RealmFilter(models.Model):

@@ -1134,7 +1160,7 @@ class RealmFilter(models.Model):

id: int = models.AutoField(auto_created=True, primary_key=True, verbose_name="ID")

realm: Realm = models.ForeignKey(Realm, on_delete=CASCADE)

pattern: str = models.TextField()

- url_format_string: str = models.TextField(validators=[URLValidator(), filter_format_validator])

+ url_format_string: str = models.TextField(validators=[filter_format_validator])

class Meta:

unique_together = ("realm", "pattern")

|

diff --git a/frontend_tests/puppeteer_tests/realm-linkifier.ts b/frontend_tests/puppeteer_tests/realm-linkifier.ts

--- a/frontend_tests/puppeteer_tests/realm-linkifier.ts

+++ b/frontend_tests/puppeteer_tests/realm-linkifier.ts

@@ -105,10 +105,7 @@ async function test_edit_invalid_linkifier(page: Page): Promise<void> {

page,

edit_linkifier_format_status_selector,

);

- assert.strictEqual(

- edit_linkifier_format_status,

- "Failed: Enter a valid URL.,Invalid URL format string.",

- );

+ assert.strictEqual(edit_linkifier_format_status, "Failed: Enter a valid URL.");

await page.click(".close-modal-btn");

await page.waitForSelector("#dialog_widget_modal", {hidden: true});

diff --git a/zerver/tests/test_markdown.py b/zerver/tests/test_markdown.py

--- a/zerver/tests/test_markdown.py

+++ b/zerver/tests/test_markdown.py

@@ -1401,6 +1401,20 @@ def assert_conversion(content: str, should_have_converted: bool = True) -> None:

],

)

+ # Test URI escaping

+ RealmFilter(

+ realm=realm,

+ pattern=r"url-(?P<id>[0-9]+)",

+ url_format_string="https://example.com/%%%ba/%(id)s",

+ ).save()

+ msg = Message(sender=self.example_user("hamlet"))

+ content = "url-123 is well-escaped"

+ converted = markdown_convert(content, message_realm=realm, message=msg)

+ self.assertEqual(

+ converted.rendered_content,

+ '<p><a href="https://example.com/%%ba/123">url-123</a> is well-escaped</p>',

+ )

+

def test_multiple_matching_realm_patterns(self) -> None:

realm = get_realm("zulip")

url_format_string = r"https://trac.example.com/ticket/%(id)s"

diff --git a/zerver/tests/test_realm_linkifiers.py b/zerver/tests/test_realm_linkifiers.py

--- a/zerver/tests/test_realm_linkifiers.py

+++ b/zerver/tests/test_realm_linkifiers.py

@@ -1,7 +1,9 @@

import re

+from django.core.exceptions import ValidationError

+

from zerver.lib.test_classes import ZulipTestCase

-from zerver.models import RealmFilter

+from zerver.models import RealmFilter, filter_format_validator

class RealmFilterTest(ZulipTestCase):

@@ -43,7 +45,9 @@ def test_create(self) -> None:

data["pattern"] = r"ZUL-(?P<id>\d+)"

data["url_format_string"] = "https://realm.com/my_realm_filter/"

result = self.client_post("/json/realm/filters", info=data)

- self.assert_json_error(result, "Invalid URL format string.")

+ self.assert_json_error(

+ result, "Group 'id' in linkifier pattern is not present in URL format string."

+ )

data["url_format_string"] = "https://realm.com/my_realm_filter/#hashtag/%(id)s"

result = self.client_post("/json/realm/filters", info=data)

@@ -117,13 +121,15 @@ def test_create(self) -> None:

result, "Group 'id' in linkifier pattern is not present in URL format string."

)

- # BUG: In theory, this should be valid, since %% should be a

- # valid escaping method. It's unlikely someone actually wants

- # to do this, though.

- data["pattern"] = r"ZUL-(?P<id>\d+)"

+ data["pattern"] = r"ZUL-ESCAPE-(?P<id>\d+)"

data["url_format_string"] = r"https://realm.com/my_realm_filter/%%(ignored)s/%(id)s"

result = self.client_post("/json/realm/filters", info=data)

- self.assert_json_error(result, "Invalid URL format string.")

+ self.assert_json_success(result)

+

+ data["pattern"] = r"ZUL-URI-(?P<id>\d+)"

+ data["url_format_string"] = "https://example.com/%ba/%(id)s"

+ result = self.client_post("/json/realm/filters", info=data)

+ self.assert_json_success(result)

data["pattern"] = r"(?P<org>[a-zA-Z0-9_-]+)/(?P<repo>[a-zA-Z0-9_-]+)#(?P<id>[0-9]+)"

data["url_format_string"] = "https://github.com/%(org)s/%(repo)s/issue/%(id)s"

@@ -200,3 +206,46 @@ def test_update(self) -> None:

data["url_format_string"] = "https://realm.com/my_realm_filter/%(id)s"

result = self.client_patch(f"/json/realm/filters/{linkifier_id + 1}", info=data)

self.assert_json_error(result, "Linkifier not found.")

+

+ def test_valid_urls(self) -> None:

+ valid_urls = [

+ "http://example.com/",

+ "https://example.com/",

+ "https://user:[email protected]/",

+ "https://example.com/@user/thing",

+ "https://example.com/!path",

+ "https://example.com/foo.bar",

+ "https://example.com/foo[bar]",

+ "https://example.com/%(foo)s",

+ "https://example.com/%(foo)s%(bars)s",

+ "https://example.com/%(foo)s/and/%(bar)s",

+ "https://example.com/?foo=%(foo)s",

+ "https://example.com/%ab",

+ "https://example.com/%ba",

+ "https://example.com/%21",

+ "https://example.com/words%20with%20spaces",

+ "https://example.com/back%20to%20%(back)s",

+ "https://example.com/encoded%2fwith%2fletters",

+ "https://example.com/encoded%2Fwith%2Fupper%2Fcase%2Fletters",

+ "https://example.com/%%",

+ "https://example.com/%%(",

+ "https://example.com/%%()",

+ "https://example.com/%%(foo",

+ "https://example.com/%%(foo)",

+ "https://example.com/%%(foo)s",

+ ]

+ for url in valid_urls:

+ filter_format_validator(url)

+

+ invalid_urls = [

+ "file:///etc/passwd",

+ "data:text/plain;base64,SGVsbG8sIFdvcmxkIQ==",

+ "https://example.com/%(foo)",

+ "https://example.com/%()s",

+ "https://example.com/%4!",

+ "https://example.com/%(foo",

+ "https://example.com/%2(foo)s",

+ ]

+ for url in invalid_urls:

+ with self.assertRaises(ValidationError):

+ filter_format_validator(url)

|

Allow more characters (`%`, `!`, `+`, `[`, `]`, `@`) in linkifier urls

https://chat.zulip.org/#narrow/stream/19-documentation/topic/linkifier/near/967173

We cannot use the % character in linkifier format strings currently. We should either document this or fix this.

Example: `https://trac.example.com/epic/%(num)s/hello%25world` is an invalid format string.

|

I also can't use a literal `@` character. This makes URLs with an `@` in them impossible to output from a linkifier, which basically makes it impossible to linkify URLs of the form `https://observablehq.com/@jrus/sinebar`.

While we are at it, apparently `@` can't be used in the match pattern either. Ideally, I'd like to be able to make a linkifier for

`@jrus/sinebar` → https://observablehq.com/@jrus/sinebar

Currently trying to put a `%` or `@` into the URL format string results in `Failed: Invalid URL format string.`

Here is the spec: https://url.spec.whatwg.org

> The **URL code points** are ASCII alphanumeric, U+0021 (!), U+0024 ($), U+0026 (&), U+0027 ('), U+0028 LEFT PARENTHESIS, U+0029 RIGHT PARENTHESIS, U+002A (*), U+002B (+), U+002C (,), U+002D (-), U+002E (.), U+002F (/), U+003A (:), U+003B (;), U+003D (=), U+003F (?), U+0040 (@), U+005F (_), U+007E (~), and code points in the range U+00A0 to U+10FFFD, inclusive, excluding surrogates and noncharacters.

> The **fragment percent-encode set** is the C0 control percent-encode set and U+0020 SPACE, U+0022 ("), U+003C (<), U+003E (>), and U+0060 (`).

> The **query percent-encode set** is the C0 control percent-encode set and U+0020 SPACE, U+0022 ("), U+0023 (#), U+003C (<), and U+003E (>).

The current validator, by contrast, accepts only `[/a-zA-Z0-9#_?=&;~-]*`, which does not cover the code points listed above.

https://github.com/zulip/zulip/blob/master/zerver/models.py#L751-L769

* * *

Perhaps `[/a-zA-Z0-9#_?=&;~-]*` could be changed to ``[-!$&'()*+,./:;=?@_~\w"#<>`%]*`` or the like.

Spaces should also probably be allowed in output URLs, but if necessary people could be forced to encode them as `%20`.
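To make the difference concrete, here is a rough sketch comparing the two character classes quoted above on a few sample paths. It checks only the character class in isolation, not the real validator in `zerver/models.py` (which also handles the `%(name)s` placeholders). The helper name `accepted` and the sample paths are illustrative assumptions, not Zulip code.

```python
import re

# Character class quoted above as what the validator currently accepts.
CURRENT_CHARS = re.compile(r"[/a-zA-Z0-9#_?=&;~-]*")

# Character class proposed above as a possible replacement.
PROPOSED_CHARS = re.compile(r"""[-!$&'()*+,./:;=?@_~\w"#<>`%]*""")


def accepted(pattern: "re.Pattern[str]", path: str) -> bool:
    """Hypothetical helper: True if every character of path is in the class."""
    return pattern.fullmatch(path) is not None


# Sample paths taken from the URLs discussed in this issue.
SAMPLES = [
    "/issues/123",
    "/@jrus/sinebar",
    "/epic/%(num)s/hello%25world",
    "/!path",
    "/foo.bar",
    "/foo[bar]",
    "/words with spaces",
]

if __name__ == "__main__":
    for path in SAMPLES:
        print(
            f"{path!r}: current={accepted(CURRENT_CHARS, path)} "
            f"proposed={accepted(PROPOSED_CHARS, path)}"
        )
```

In this sketch the proposed class still rejects `[`, `]`, and a literal space, so those would need to be added separately or percent-encoded if they are meant to be supported.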

I've encountered the same issue with bang (`!`) characters, which are used in Icinga2 resource URLs. I don't understand why a second validation is needed when the URL submitted is already run through the standard `URLValidator`. Many valid characters are needlessly blocked...

Another requested character is `.`: https://chat.zulip.org/#narrow/stream/9-issues/topic/linkifier.20with.20period/near/1264973

(Though I agree with @jcharaoui that we probably shouldn't be applying a regex like this at all.)

| 2021-10-20T01:19:57

|

zulip/zulip

| 20,038

|

zulip__zulip-20038

|

[

"13948"

] |

14b07669cc342d5da81df7e8367a55a22f05eb41

|

diff --git a/zerver/lib/types.py b/zerver/lib/types.py

--- a/zerver/lib/types.py

+++ b/zerver/lib/types.py

@@ -59,6 +59,7 @@ class LinkifierDict(TypedDict):

class SAMLIdPConfigDict(TypedDict, total=False):

entity_id: str

url: str

+ slo_url: str

attr_user_permanent_id: str

attr_first_name: str

attr_last_name: str

diff --git a/zproject/backends.py b/zproject/backends.py

--- a/zproject/backends.py

+++ b/zproject/backends.py

@@ -35,6 +35,7 @@

from django_auth_ldap.backend import LDAPBackend, _LDAPUser, ldap_error

from lxml.etree import XMLSyntaxError

from onelogin.saml2.errors import OneLogin_Saml2_Error

+from onelogin.saml2.logout_request import OneLogin_Saml2_Logout_Request

from onelogin.saml2.response import OneLogin_Saml2_Response

from onelogin.saml2.settings import OneLogin_Saml2_Settings

from requests import HTTPError

@@ -62,6 +63,7 @@

do_create_user,

do_deactivate_user,

do_reactivate_user,

+ do_regenerate_api_key,

do_update_user_custom_profile_data_if_changed,

)

from zerver.lib.avatar import avatar_url, is_avatar_new

@@ -73,6 +75,7 @@

from zerver.lib.rate_limiter import RateLimitedObject

from zerver.lib.redis_utils import get_dict_from_redis, get_redis_client, put_dict_in_redis

from zerver.lib.request import RequestNotes

+from zerver.lib.sessions import delete_user_sessions

from zerver.lib.subdomains import get_subdomain

from zerver.lib.types import ProfileDataElementValue

from zerver.lib.url_encoding import append_url_query_string

@@ -2252,21 +2255,33 @@ def get_data_from_redis(cls, key: str) -> Optional[Dict[str, Any]]:

return data

- def get_issuing_idp(self, SAMLResponse: str) -> Optional[str]:

+ def get_issuing_idp(self, saml_response_or_request: Tuple[str, str]) -> Optional[str]:

"""

- Given a SAMLResponse, returns which of the configured IdPs is declared as the issuer.

+ Given a SAMLResponse or SAMLRequest, returns which of the configured IdPs

+ is declared as the issuer.

This value MUST NOT be trusted as the true issuer!

The signatures are not validated, so it can be tampered with by the user.

That's not a problem for this function,

and true validation happens later in the underlying libraries, but it's important

to note this detail. The purpose of this function is merely as a helper to figure out which

- of the configured IdPs' information to use for parsing and validating the response.

+ of the configured IdPs' information to use for parsing and validating the request.

"""

try:

config = self.generate_saml_config()

saml_settings = OneLogin_Saml2_Settings(config, sp_validation_only=True)

- resp = OneLogin_Saml2_Response(settings=saml_settings, response=SAMLResponse)

- issuers = resp.get_issuers()

+ if saml_response_or_request[1] == "SAMLResponse":

+ resp = OneLogin_Saml2_Response(

+ settings=saml_settings, response=saml_response_or_request[0]

+ )

+ issuers = resp.get_issuers()

+ else:

+ assert saml_response_or_request[1] == "SAMLRequest"

+

+ # The only valid SAMLRequest we can receive is a LogoutRequest.

+ logout_request_xml = OneLogin_Saml2_Logout_Request(

+ config, saml_response_or_request[0]

+ ).get_xml()

+ issuers = [OneLogin_Saml2_Logout_Request.get_issuer(logout_request_xml)]

except self.SAMLRESPONSE_PARSING_EXCEPTIONS:

self.logger.info("Error while parsing SAMLResponse:", exc_info=True)

return None

@@ -2357,10 +2372,76 @@ def _check_entitlements(

)

raise AuthFailed(self, error_msg)

+ def process_logout(self, subdomain: str, idp_name: str) -> Optional[HttpResponse]:

+ """

+ We override process_logout, because we need to customize

+ the way of revoking sessions and introduce NameID validation.

+

+ The python-social-auth and python3-saml implementations expect a simple

+ callback function without arguments, to delete the session. We're not

+ happy with that for two reasons:

+ 1. These implementations don't look at the NameID in the LogoutRequest, which

+ is not quite correct, as a LogoutRequest to logout user X can be delivered

+ through any means, and doesn't need a session to be valid.

+ E.g. a backchannel logout request sent by the IdP wouldn't have a session cookie.

+ Also, hypothetically, a LogoutRequest to logout user Y shouldn't logout user X, even if the

+ request is made with a session cookie belonging to user X.

+ 2. We want to revoke all sessions for the user, not just the current session

+ of the request, so after validating the LogoutRequest, we need to identify

+ the user by the NameID, do some validation and then revoke all sessions.

+

+ TODO: This does not return a LogoutResponse in case of failure, like the spec requires.

+ https://github.com/zulip/zulip/issues/20076 is the related issue with more detail

+ on how to implement the desired behavior.

+ """

+ idp = self.get_idp(idp_name)

+ auth = self._create_saml_auth(idp)

+ # This validates the LogoutRequest and prepares the response

+ # (the URL to which to redirect the client to convey the response to the IdP)