license: apache-2.0

task_categories:

- image-segmentation

- image-to-text

- text-generation

language:

- en

pretty_name: s

size_categories:

- 10K<n<100K

Pix2Cap-COCO

Dataset Description

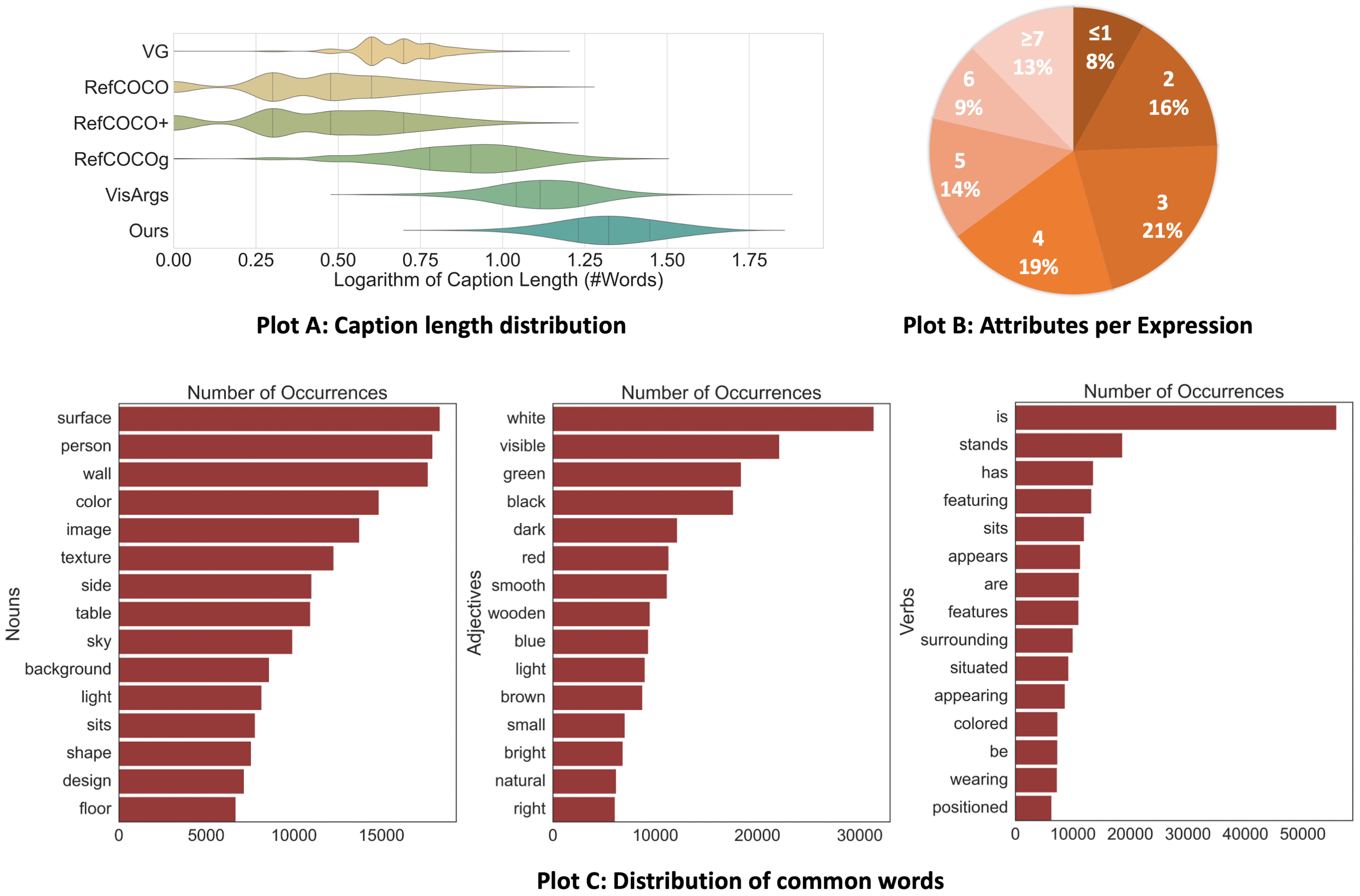

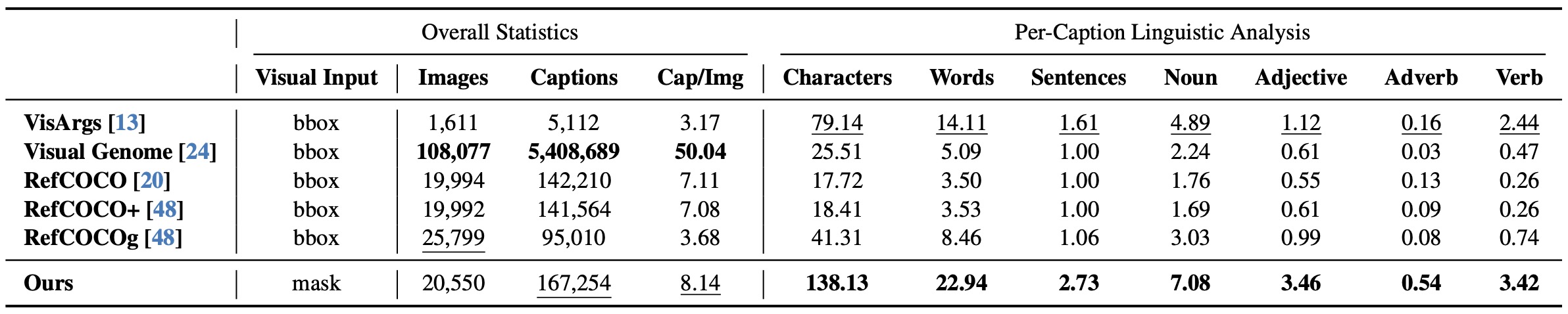

Pix2Cap-COCO is the first pixel-level captioning dataset derived from the panoptic COCO 2017 dataset, designed to provide more precise visual descriptions than traditional region-level captioning datasets. It consists of 20,550 images, split into a training set (18,212 images) and a validation set (2,338 images) that mirror the original COCO split. The dataset includes 167,254 detailed pixel-level captions, averaging 22.94 words each. Unlike datasets such as Visual Genome, which contain significant redundancy, Pix2Cap-COCO assigns exactly one caption to each mask, which eliminates repetition and makes object descriptions clearer.

Pix2Cap-COCO is designed to align captions more closely with the visual content they describe, supporting tasks such as visual understanding, spatial reasoning, and object interaction analysis. Compared with existing region-level captioning datasets, it offers more images and more detailed captions.
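The one-caption-per-mask property described above can be checked mechanically. The sketch below is only an illustration: the `MaskCaption` record, its field names, and the toy captions are hypothetical and do not reflect the dataset's actual annotation schema, which this card does not specify.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class MaskCaption:
    """Hypothetical record: one caption attached to one segmentation mask."""
    image_id: int
    segment_id: int
    caption: str


def masks_with_multiple_captions(records):
    """Return the (image_id, segment_id) keys that carry more than one caption."""
    counts = Counter((r.image_id, r.segment_id) for r in records)
    return sorted(key for key, n in counts.items() if n > 1)


# Toy records, invented for illustration only (not taken from the dataset).
records = [
    MaskCaption(1, 101, "A brown dog lying on a rug."),
    MaskCaption(1, 102, "A red sofa behind the dog."),
    MaskCaption(2, 201, "A cyclist riding along a wet road."),
]
duplicates = masks_with_multiple_captions(records)
print(duplicates)
```

An empty result means every mask has a single caption. A region-level dataset with overlapping descriptions of the same object would typically return a non-empty list here.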

Dataset Version

1.0

Languages

English

Task(s)

- Pixel-level Captioning: Generating detailed pixel-level captions for segmented objects in images.

- Visual Reasoning: Analyzing object relationships and spatial interactions in scenes.
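For pixel-level captioning, each caption has to be paired with the exact pixels of one segment. In the COCO panoptic format, a segment id is packed into the PNG colour channels as `R + 256 * G + 256^2 * B`. The sketch below decodes that encoding in plain Python. It assumes Pix2Cap-COCO masks follow the upstream panoptic encoding, which this card does not confirm, and the helper names and toy pixels are made up for illustration.

```python
def rgb_to_segment_id(r, g, b):
    """Decode a COCO panoptic PNG colour into its integer segment id."""
    return r + 256 * g + 256 * 256 * b


def binary_mask(rgb_rows, segment_id):
    """Return a 0/1 mask (list of rows) marking the pixels of one segment."""
    return [
        [1 if rgb_to_segment_id(*pixel) == segment_id else 0 for pixel in row]
        for row in rgb_rows
    ]


# A tiny 2x3 "image" of RGB tuples, invented for illustration.
toy_png = [
    [(5, 0, 0), (5, 0, 0), (0, 1, 0)],
    [(5, 0, 0), (0, 1, 0), (0, 1, 0)],
]
# The colour (0, 1, 0) decodes to segment id 256.
mask = binary_mask(toy_png, segment_id=256)
print(mask)
```

In practice the pixel grid would come from an image library rather than nested lists. Nested lists are used here only to keep the example dependency-free.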

Use Case(s)

Pix2Cap-COCO is designed for tasks that require detailed visual understanding and caption generation, including:

- Object detection and segmentation with contextual captions

- Spatial reasoning and understanding spatial relations

- Object interaction analysis and reasoning

- Improving visual language models by providing more detailed descriptions of visual content

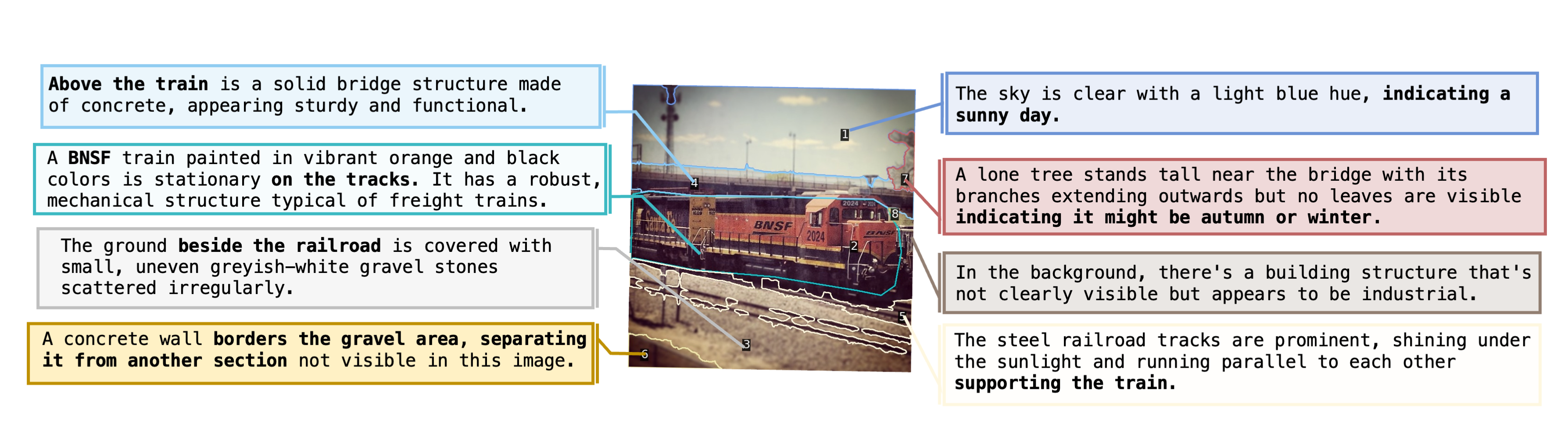

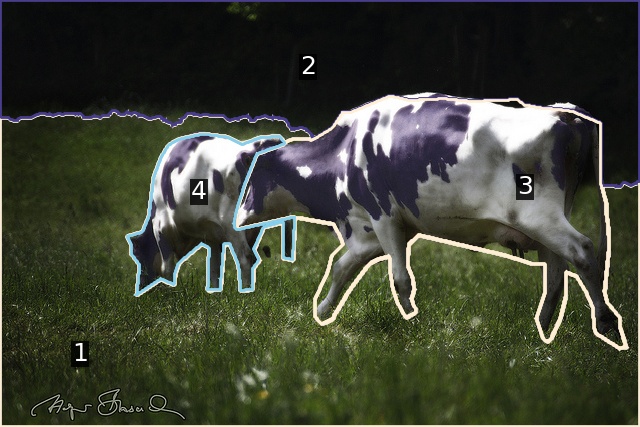

Example(s)

Dataset Analysis

Data Scale

- Total Images: 20,550

- Training Images: 18,212

- Validation Images: 2,338

- Total Captions: 167,254
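As a quick sanity check on the figures above, the following sketch confirms that the two splits add up to the stated total and derives two ratios the card does not list. The variable names are illustrative, and the derived ratios are simple arithmetic on the published counts, not statistics reported by the authors.

```python
# Counts copied from the Data Scale list.
train_images = 18_212
val_images = 2_338
total_images = 20_550
total_captions = 167_254

# The training and validation splits should account for every image.
assert train_images + val_images == total_images

captions_per_image = total_captions / total_images
train_share = train_images / total_images

print(f"{captions_per_image:.2f} captions per image")
print(f"{train_share:.1%} of images are in the training split")
```

This works out to roughly 8.14 captions per image on average, with about 88.6% of the images in the training split.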

Caption Quality

- Average Words per Caption: 22.94

- Average Sentences per Caption: 2.73

- Average Nouns per Caption: 7.08

- Average Adjectives per Caption: 3.46

- Average Verbs per Caption: 3.42

Pix2Cap-COCO captions are significantly more detailed than those in datasets such as Visual Genome, whose captions average only 5.09 words, roughly 4.5 times fewer than Pix2Cap-COCO's 22.94. This level of detail allows the dataset to capture intricate relationships within scenes while keeping a balanced mix of nouns, adjectives, and verbs. In particular, Pix2Cap-COCO captures complex spatial relationships through hierarchical annotations that describe both coarse relations (e.g., 'next to', 'above') and fine-grained ones (e.g., 'partially occluded by', 'vertically aligned with').
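Statistics like the word and sentence averages above can be approximated with very little code. The sketch below uses naive whitespace and punctuation splitting, which is an assumption: the card does not say which tokenizer the authors used, so results on the real captions may differ slightly. The noun, adjective, and verb counts would additionally need a part-of-speech tagger, which is not shown. The sample captions are invented.

```python
import re


def caption_stats(captions):
    """Return (average words, average sentences) per caption, using naive splitting."""
    word_counts = [len(c.split()) for c in captions]
    sentence_counts = [
        len([s for s in re.split(r"[.!?]+", c) if s.strip()]) for c in captions
    ]
    n = len(captions)
    return sum(word_counts) / n, sum(sentence_counts) / n


# Invented captions for illustration only (not taken from the dataset).
captions = [
    "A brown dog lies on a rug. It is next to a red sofa.",
    "A cyclist rides along a wet road.",
]
avg_words, avg_sentences = caption_stats(captions)
print(avg_words, avg_sentences)
```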

License

This dataset is released under the Apache 2.0 License. Please ensure that you comply with the terms before using the dataset.

Citation

If you use this dataset in your work, please cite the original paper:

@article{you2025pix2cap,

title={Pix2Cap-COCO: Advancing Visual Comprehension via Pixel-Level Captioning},

author={Zuyao You and Junke Wang and Lingyu Kong and Bo He and Zuxuan Wu},

journal={arXiv preprint arXiv:2501.13893},

year={2025}

}

Acknowledgments

Pix2Cap-COCO is built upon the Panoptic COCO 2017 dataset, with an annotation pipeline powered by Set-of-Mark and GPT-4V.