| sha (null) | last_modified (null) | library_name (stringclasses, 154 values) | text (stringlengths 1 to 900k) | metadata (stringlengths 2 to 348k) | pipeline_tag (stringclasses, 45 values) | id (stringlengths 5 to 122) | tags (listlengths 1 to 1.84k) | created_at (stringlengths 25 to 25) | arxiv (listlengths 0 to 201) | languages (listlengths 0 to 1.83k) | tags_str (stringlengths 17 to 9.34k) | text_str (stringlengths 0 to 389k) | text_lists (listlengths 0 to 722) | processed_texts (listlengths 1 to 723) | tokens_length (listlengths 1 to 723) | input_texts (listlengths 1 to 61) | embeddings (listlengths 768 to 768) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

null | null |

transformers

|

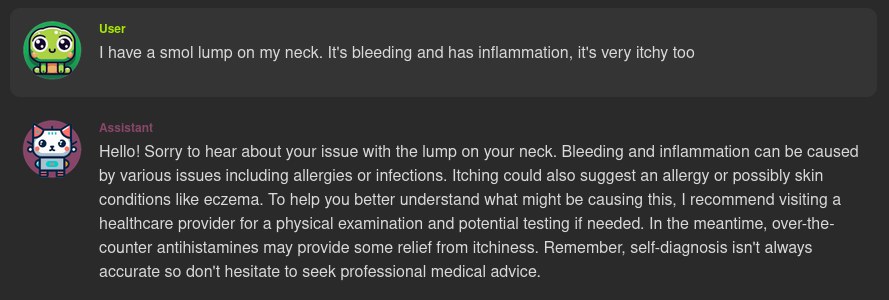

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# test_trainer_2

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5882

- Accuracy: 0.7805

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.7323 | 0.5 | 500 | 0.6435 | 0.7375 |
| 0.6303 | 1.0 | 1000 | 0.5711 | 0.768 |
| 0.4719 | 1.5 | 1500 | 0.6429 | 0.7735 |
| 0.4581 | 2.0 | 2000 | 0.5882 | 0.7805 |
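The hyperparameters and step counts above can be tied together with a little arithmetic. The following is a minimal sketch of a linear learning-rate decay, assuming no warmup steps (the card lists none) and a total of 2000 optimizer steps as shown in the results table. The function name `linear_lr` and its parameters are illustrative and not taken from the card.

```python
def linear_lr(step, base_lr=5e-5, total_steps=2000, warmup_steps=0):
    """Learning rate at a given optimizer step under linear decay to zero.

    Assumption: warmup_steps defaults to 0 because the card does not list a warmup.
    """
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Linear decay from base_lr down to 0 over the remaining steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at each evaluation step reported in the table.
for step in (500, 1000, 1500, 2000):
    print(step, linear_lr(step))
```

With 1000 steps per epoch and a batch size of 8, the training split would hold roughly 8000 examples, assuming a single device and no gradient accumulation.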

### Framework versions

- Transformers 4.35.0

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1

|

{"license": "apache-2.0", "tags": ["generated_from_trainer"], "metrics": ["accuracy"], "base_model": "distilbert-base-uncased", "model-index": [{"name": "test_trainer_2", "results": []}]}

|

text-classification

|

qwekuaryee/test_trainer_2

|

[

"transformers",

"tensorboard",

"safetensors",

"distilbert",

"text-classification",

"generated_from_trainer",

"base_model:distilbert-base-uncased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

2023-11-11T09:43:21+00:00

|

[] |

[] |

TAGS

#transformers #tensorboard #safetensors #distilbert #text-classification #generated_from_trainer #base_model-distilbert-base-uncased #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us

|

test\_trainer\_2

================

This model is a fine-tuned version of distilbert-base-uncased on an unknown dataset.

It achieves the following results on the evaluation set:

* Loss: 0.5882

* Accuracy: 0.7805

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 5e-05

* train\_batch\_size: 8

* eval\_batch\_size: 8

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 2

### Training results

### Framework versions

* Transformers 4.35.0

* Pytorch 2.1.0+cu118

* Datasets 2.14.6

* Tokenizers 0.14.1

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-05\n* train\\_batch\\_size: 8\n* eval\\_batch\\_size: 8\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 2",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.35.0\n* Pytorch 2.1.0+cu118\n* Datasets 2.14.6\n* Tokenizers 0.14.1"

] |

[

"TAGS\n#transformers #tensorboard #safetensors #distilbert #text-classification #generated_from_trainer #base_model-distilbert-base-uncased #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-05\n* train\\_batch\\_size: 8\n* eval\\_batch\\_size: 8\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 2",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.35.0\n* Pytorch 2.1.0+cu118\n* Datasets 2.14.6\n* Tokenizers 0.14.1"

] |

[

72,

98,

4,

33

] |

[

"passage: TAGS\n#transformers #tensorboard #safetensors #distilbert #text-classification #generated_from_trainer #base_model-distilbert-base-uncased #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us \n### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-05\n* train\\_batch\\_size: 8\n* eval\\_batch\\_size: 8\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 2### Training results### Framework versions\n\n\n* Transformers 4.35.0\n* Pytorch 2.1.0+cu118\n* Datasets 2.14.6\n* Tokenizers 0.14.1"

] |

[

-0.10046876966953278,

0.1125764399766922,

-0.0027080359868705273,

0.1144096702337265,

0.14257103204727173,

0.016591709107160568,

0.16021348536014557,

0.11586449295282364,

-0.0657213032245636,

0.0483417846262455,

0.12526677548885345,

0.12690626084804535,

0.01612185314297676,

0.11885209381580353,

-0.08061505854129791,

-0.21474634110927582,

0.010398236103355885,

0.02374373748898506,

-0.055655159056186676,

0.11428427696228027,

0.09425053745508194,

-0.12247130274772644,

0.0893663763999939,

-0.018867019563913345,

-0.16826464235782623,

0.006194108631461859,

0.016734529286623,

-0.04952392354607582,

0.12346028536558151,

0.031931813806295395,

0.13148701190948486,

0.03601619973778725,

0.08422604203224182,

-0.19389918446540833,

0.010563207790255547,

0.06138182431459427,

-0.006117812357842922,

0.08097829669713974,

0.037493400275707245,

-0.00814078003168106,

0.07150129973888397,

-0.09506236761808395,

0.06280279159545898,

0.01760585606098175,

-0.12730279564857483,

-0.20826734602451324,

-0.08686872571706772,

0.036620717495679855,

0.09503306448459625,

0.07719039171934128,

-0.012397234328091145,

0.11573661118745804,

-0.05278952792286873,

0.09630926698446274,

0.20252647995948792,

-0.3074738383293152,

-0.06122417002916336,

0.04871252924203873,

0.026059266179800034,

0.09285349398851395,

-0.09955881536006927,

-0.01873454637825489,

0.05870483070611954,

0.02436549961566925,

0.12635250389575958,

-0.02386312186717987,

-0.05658906325697899,

0.00024068569473456591,

-0.14341311156749725,

-0.018062178045511246,

0.15594273805618286,

0.052675142884254456,

-0.04536719620227814,

-0.05082538723945618,

-0.07208751142024994,

-0.13419847190380096,

-0.04040507227182388,

-0.008917291648685932,

0.048436231911182404,

-0.021616872400045395,

-0.05836941674351692,

-0.01943621039390564,

-0.0962788462638855,

-0.0632663369178772,

-0.05396883189678192,

0.13735082745552063,

0.03498159721493721,

0.003068953985348344,

-0.009185094386339188,

0.10060082376003265,

-0.02568228729069233,

-0.14627501368522644,

0.021357042714953423,

0.019093254581093788,

0.00352443172596395,

-0.05037057399749756,

-0.05049663409590721,

-0.07908042520284653,

0.022184351459145546,

0.15840990841388702,

-0.047379568219184875,

0.054167669266462326,

0.0014898902736604214,

0.04592060297727585,

-0.10112336277961731,

0.169618159532547,

-0.04028068110346794,

-0.03781473636627197,

0.021088548004627228,

0.09305024892091751,

0.05443304777145386,

-0.01718798838555813,

-0.13090378046035767,

0.03483465313911438,

0.10609138756990433,

0.020366987213492393,

-0.052105799317359924,

0.06800016015768051,

-0.05884658917784691,

-0.015078995376825333,

0.04038850590586662,

-0.09562069922685623,

0.028218362480401993,

0.006463191006332636,

-0.05424954369664192,

-0.042970966547727585,

0.03058096580207348,

0.021929895505309105,

0.0029860998038202524,

0.10625619441270828,

-0.08058462291955948,

0.009801216423511505,

-0.08217079937458038,

-0.1298609972000122,

0.01705019176006317,

-0.09501111507415771,

0.019991455599665642,

-0.10994985699653625,

-0.17782966792583466,

-0.015463045798242092,

0.0633704736828804,

-0.028733083978295326,

-0.03234821557998657,

-0.06454087048768997,

-0.07765425741672516,

0.017550816759467125,

-0.011595184914767742,

0.06652646511793137,

-0.06562294065952301,

0.0956260934472084,

0.03344224393367767,

0.06556966155767441,

-0.06316859275102615,

0.041904423385858536,

-0.09946993738412857,

0.03949470445513725,

-0.1791042685508728,

0.036597833037376404,

-0.07104381173849106,

0.07117285579442978,

-0.08137182891368866,

-0.07149912416934967,

0.005953679792582989,

-0.0022400622256100178,

0.07289833575487137,

0.09794612973928452,

-0.1773061454296112,

-0.06192265823483467,

0.15311475098133087,

-0.08641324192285538,

-0.14248938858509064,

0.1381250023841858,

-0.058732010424137115,

0.041242849081754684,

0.06443048268556595,

0.19249026477336884,

0.07816122472286224,

-0.08485826849937439,

0.0036032043863087893,

0.002430140506476164,

0.07122959941625595,

-0.02996496669948101,

0.06841529905796051,

-0.0033047988545149565,

0.004889561329036951,

0.014031810685992241,

-0.050873689353466034,

0.04907378926873207,

-0.07615404576063156,

-0.09155403077602386,

-0.040994830429553986,

-0.10261392593383789,

0.06387899816036224,

0.050828028470277786,

0.06190165504813194,

-0.1103399246931076,

-0.0871523916721344,

0.05925057455897331,

0.07542960345745087,

-0.07424912601709366,

0.02398637868463993,

-0.0689469575881958,

0.09191913902759552,

-0.058696385473012924,

-0.01405325811356306,

-0.15783292055130005,

-0.04575943201780319,

0.020656410604715347,

-0.0006006266921758652,

0.015359259210526943,

-0.007252533454447985,

0.06981495767831802,

0.08233112096786499,

-0.06676319986581802,

-0.032795000821352005,

-0.013559898361563683,

0.017005395144224167,

-0.12631145119667053,

-0.2024872750043869,

-0.014664549380540848,

-0.03431008383631706,

0.14748167991638184,

-0.2344648540019989,

0.05236019194126129,

0.0021778305526822805,

0.09016520529985428,

0.04132642224431038,

-0.012010760605335236,

-0.03913167864084244,

0.07049629837274551,

-0.050718434154987335,

-0.0696059986948967,

0.0609673447906971,

0.008100674487650394,

-0.10471776872873306,

-0.04641009867191315,

-0.15138806402683258,

0.18301157653331757,

0.1333843618631363,

-0.07939770072698593,

-0.07219938933849335,

0.00597616471350193,

-0.03415895625948906,

-0.027326412498950958,

-0.03595014661550522,

0.0032311989925801754,

0.12755580246448517,

-0.005618741270154715,

0.1538165807723999,

-0.08451253920793533,

-0.030165527015924454,

0.021780164912343025,

-0.04442718252539635,

0.00890092458575964,

0.11607609689235687,

0.08882644027471542,

-0.10154708474874496,

0.14826525747776031,

0.19827982783317566,

-0.0925389900803566,

0.13005377352237701,

-0.04573618993163109,

-0.052237048745155334,

-0.02354862168431282,

0.008667023852467537,

0.015023235231637955,

0.10963618010282516,

-0.11945831030607224,

-0.0007891920395195484,

0.00981393177062273,

0.014960769563913345,

0.011095183901488781,

-0.2138211578130722,

-0.02341753989458084,

0.04211755841970444,

-0.05094921216368675,

0.0012050480581820011,

-0.024492770433425903,

-0.009137211367487907,

0.0977817252278328,

-0.003705349750816822,

-0.0914861261844635,

0.04597856104373932,

-0.006143293809145689,

-0.07744509726762772,

0.2027311623096466,

-0.09378431737422943,

-0.14315195381641388,

-0.13483300805091858,

-0.07130499929189682,

-0.056385595351457596,

0.03167591616511345,

0.06409783661365509,

-0.06841786950826645,

-0.040668778121471405,

-0.11233263462781906,

-0.00824656244367361,

0.031029174104332924,

0.021189523860812187,

0.02121170237660408,

-0.004432493820786476,

0.08350148797035217,

-0.09906553477048874,

-0.0074590095318853855,

-0.035078659653663635,

-0.05110637843608856,

0.03642391040921211,

0.024892723187804222,

0.11388712376356125,

0.1508467048406601,

-0.02198721282184124,

-0.004899922292679548,

-0.02753942646086216,

0.22611530125141144,

-0.06007634103298187,

-0.007151430938392878,

0.1232280284166336,

-0.033830802887678146,

0.056372229009866714,

0.14179198443889618,

0.06317310780286789,

-0.09757809340953827,

0.018856188282370567,

0.03395376726984978,

-0.03374926373362541,

-0.21572580933570862,

-0.03513849899172783,

-0.038596261292696,

0.007536603137850761,

0.09702857583761215,

0.030055968090891838,

0.023274168372154236,

0.06634553521871567,

0.022948583588004112,

0.07978902757167816,

-0.00827805232256651,

0.0707942321896553,

0.110685795545578,

0.039866309612989426,

0.1304728090763092,

-0.047192152589559555,

-0.0501023568212986,

0.040836118161678314,

-0.004861770663410425,

0.20268024504184723,

0.022258559241890907,

0.1443430632352829,

0.0523858517408371,

0.1603451669216156,

-0.003318128874525428,

0.05870769917964935,

-0.010983736254274845,

-0.03586732596158981,

-0.016002491116523743,

-0.050858043134212494,

-0.027061454951763153,

0.03553164750337601,

-0.0810861736536026,

0.0569889210164547,

-0.10714785754680634,

0.0141348447650671,

0.0625428706407547,

0.2363647073507309,

0.05976230278611183,

-0.32179179787635803,

-0.08804614841938019,

0.0312943272292614,

-0.019662996754050255,

-0.019766762852668762,

0.028848474845290184,

0.12449726462364197,

-0.04800339415669441,

0.038421615958213806,

-0.06920386850833893,

0.0856948047876358,

-0.041594430804252625,

0.046957116574048996,

0.05392977595329285,

0.08326726406812668,

-0.011348790489137173,

0.0677877888083458,

-0.2849946916103363,

0.2594778537750244,

0.0182159673422575,

0.06707538664340973,

-0.04578512907028198,

0.0006333258934319019,

0.03892848640680313,

0.09583673626184464,

0.07050574570894241,

-0.015630925074219704,

-0.05361812189221382,

-0.1901426762342453,

-0.06553713232278824,

0.023328747600317,

0.09855473041534424,

-0.04275936633348465,

0.10195008665323257,

-0.029172275215387344,

0.0009303148835897446,

0.07974892109632492,

-0.013163192197680473,

-0.08195511251688004,

-0.10135307163000107,

-0.007986011914908886,

0.037767570465803146,

-0.03457343950867653,

-0.07943452894687653,

-0.09717585146427155,

-0.13702885806560516,

0.15432903170585632,

-0.0721481516957283,

-0.03587792441248894,

-0.10308461636304855,

0.05026903748512268,

0.05604495853185654,

-0.08061156421899796,

0.04257005453109741,

0.0059556132182478905,

0.08431671559810638,

0.017768802121281624,

-0.06496880948543549,

0.12358658015727997,

-0.0724131315946579,

-0.17919248342514038,

-0.07129589468240738,

0.10883961617946625,

0.020183585584163666,

0.0434412881731987,

-0.006670255213975906,

0.01217504683881998,

-0.01605033315718174,

-0.07799634337425232,

0.024179812520742416,

0.007630443666130304,

0.05133463069796562,

0.033253561705350876,

-0.05995296686887741,

-0.00992815662175417,

-0.06063663586974144,

-0.02640777826309204,

0.15023256838321686,

0.28983256220817566,

-0.08448939025402069,

0.011584372259676456,

0.06025092676281929,

-0.06937822699546814,

-0.20784035325050354,

0.03537171706557274,

0.025973185896873474,

0.002006511902436614,

0.04815743491053581,

-0.1494372934103012,

0.10073069483041763,

0.1004384309053421,

-0.02729540318250656,

0.11851020157337189,

-0.29172447323799133,

-0.13630148768424988,

0.13008825480937958,

0.14592184126377106,

0.11932212114334106,

-0.1617647260427475,

-0.04427444934844971,

-0.03903747349977493,

-0.1081315204501152,

0.10438788682222366,

-0.13396459817886353,

0.10966074466705322,

-0.006978304125368595,

0.04945985972881317,

0.006322693545371294,

-0.0525517575442791,

0.13813674449920654,

0.0035425364039838314,

0.11572537571191788,

-0.06401606649160385,

-0.016043435782194138,

0.05315401405096054,

-0.06314544379711151,

0.018983792513608932,

-0.11815061420202255,

0.04252900555729866,

-0.06314533948898315,

-0.021600648760795593,

-0.044377490878105164,

0.03219137713313103,

-0.039054643362760544,

-0.058286964893341064,

-0.04271944984793663,

0.025263741612434387,

0.04691121354699135,

-0.006495738867670298,

0.16725417971611023,

0.012017560191452503,

0.1458531767129898,

0.1456461101770401,

0.07477093487977982,

-0.06944509595632553,

-0.013868972659111023,

-0.009931162931025028,

-0.03754645958542824,

0.0632353276014328,

-0.1589473932981491,

0.042276203632354736,

0.12618766725063324,

0.010377872735261917,

0.15168681740760803,

0.07086709886789322,

-0.028609350323677063,

0.01416455116122961,

0.06264080852270126,

-0.16360026597976685,

-0.10343341529369354,

-0.006390259601175785,

-0.03466184809803963,

-0.1217624619603157,

0.05886603146791458,

0.12904374301433563,

-0.06483396142721176,

0.007832684554159641,

-0.005548704881221056,

0.017171988263726234,

-0.033375415951013565,

0.1809825450181961,

0.06931757181882858,

0.04594029486179352,

-0.08626553416252136,

0.09383445978164673,

0.057236816734075546,

-0.08037562668323517,

0.010494032874703407,

0.04533001035451889,

-0.08761248737573624,

-0.04845127463340759,

0.04299486428499222,

0.1924460232257843,

-0.028709236532449722,

-0.04859874024987221,

-0.14862895011901855,

-0.11565948277711868,

0.05416544899344444,

0.1719956248998642,

0.09939222037792206,

0.01574626937508583,

-0.035516928881406784,

0.010585492476820946,

-0.10881826281547546,

0.11908780038356781,

0.0460425429046154,

0.09073225408792496,

-0.15532612800598145,

0.11541155725717545,

-0.006080456543713808,

0.010521276853978634,

-0.02410709485411644,

0.04611850902438164,

-0.11734646558761597,

-0.008966182358562946,

-0.14652951061725616,

-0.0022670330945402384,

-0.021532943472266197,

0.00991134438663721,

0.0017544488655403256,

-0.052926573902368546,

-0.05573635548353195,

0.010767174884676933,

-0.09956485778093338,

-0.02567976713180542,

0.03464585542678833,

0.05296092480421066,

-0.12183404713869095,

-0.048842206597328186,

0.020180366933345795,

-0.0749250054359436,

0.07195018231868744,

0.01782374083995819,

0.019487906247377396,

0.047552745789289474,

-0.18469583988189697,

0.020998060703277588,

0.05714156851172447,

0.018198179081082344,

0.046417027711868286,

-0.08161831647157669,

-0.0217074416577816,

-0.005194950848817825,

0.04249010980129242,

0.020269859582185745,

0.08956664800643921,

-0.12341190874576569,

0.013999820686876774,

-0.02895444445312023,

-0.060713354498147964,

-0.05242972448468208,

0.03496900945901871,

0.08606252074241638,

0.012692218646407127,

0.20861120522022247,

-0.0979195162653923,

0.019344311207532883,

-0.2009430080652237,

0.00501597486436367,

0.001547818654216826,

-0.11678061634302139,

-0.11391947418451309,

-0.05434470623731613,

0.05070848762989044,

-0.06358914822340012,

0.13617299497127533,

0.008054664358496666,

0.02630060911178589,

0.03619532287120819,

-0.031149519607424736,

0.0358891561627388,

0.02891317568719387,

0.21507899463176727,

0.032343920320272446,

-0.04214607924222946,

0.013154616579413414,

0.02563157118856907,

0.113807812333107,

0.081258624792099,

0.1677645742893219,

0.16732439398765564,

-0.04555391147732735,

0.10001391172409058,

0.042601704597473145,

-0.049850162118673325,

-0.13729676604270935,

0.06875384598970413,

-0.04031858965754509,

0.1083664745092392,

-0.01698577217757702,

0.19576288759708405,

0.08802437037229538,

-0.1563449651002884,

0.0169607512652874,

-0.0501747764647007,

-0.08588895201683044,

-0.1072855219244957,

-0.05823831632733345,

-0.09863176941871643,

-0.1437232941389084,

-0.0069333952851593494,

-0.11194359511137009,

0.013700651004910469,

0.10309110581874847,

0.0036335091572254896,

-0.01665906049311161,

0.16479921340942383,

0.0024273069575428963,

0.03502574563026428,

0.06665719300508499,

0.000056451986893080175,

-0.045751143246889114,

-0.07160446047782898,

-0.09755320847034454,

0.009164917282760143,

-0.009518665261566639,

0.02394540049135685,

-0.04710201174020767,

-0.022644072771072388,

0.04116625711321831,

-0.009953295812010765,

-0.11069788038730621,

0.012486699968576431,

0.026688644662499428,

0.04744409769773483,

0.05337059870362282,

0.014289602637290955,

0.007922823540866375,

0.002059049904346466,

0.21917478740215302,

-0.07684768736362457,

-0.06481043249368668,

-0.09839426726102829,

0.21271111071109772,

0.02537573128938675,

0.01122434251010418,

0.009889745153486729,

-0.09445755928754807,

0.029387980699539185,

0.2085287868976593,

0.18846826255321503,

-0.0983656719326973,

-0.0001000102492980659,

-0.016127554699778557,

-0.008318839594721794,

-0.034745607525110245,

0.092953622341156,

0.11091499775648117,

0.006860523018985987,

-0.07052386552095413,

-0.0506904274225235,

-0.036463722586631775,

-0.00872395932674408,

-0.052962467074394226,

0.05659520626068115,

0.02998792752623558,

0.010044393129646778,

-0.04917601868510246,

0.05993591994047165,

-0.03787500038743019,

-0.10803662240505219,

0.050308436155319214,

-0.19621063768863678,

-0.15462550520896912,

-0.018820442259311676,

0.11450967937707901,

-0.007745843846350908,

0.044364672154188156,

-0.03354406729340553,

-0.0016899527981877327,

0.07270507514476776,

-0.029962675645947456,

-0.059742365032434464,

-0.061682816594839096,

0.058003999292850494,

-0.1099284216761589,

0.2251618355512619,

-0.032190095633268356,

0.043826617300510406,

0.12790866196155548,

0.04933629184961319,

-0.07086482644081116,

0.08247663825750351,

0.04600673168897629,

-0.05825347453355789,

0.03479646518826485,

0.08512967824935913,

-0.0427352637052536,

0.11894133687019348,

0.06429263949394226,

-0.13106146454811096,

0.010189538821578026,

-0.03632116690278053,

-0.09575211256742477,

-0.05317721888422966,

-0.04218989610671997,

-0.05881042033433914,

0.13169905543327332,

0.18680934607982635,

-0.03636685013771057,

0.01121482253074646,

-0.0407484732568264,

0.01604560762643814,

0.06541994959115982,

0.0384550467133522,

-0.036123767495155334,

-0.22587436437606812,

0.027824096381664276,

0.06184198707342148,

-0.00044746199273504317,

-0.28286927938461304,

-0.08378159999847412,

-0.012071858160197735,

-0.04304254427552223,

-0.09690266847610474,

0.09092192351818085,

0.11658072471618652,

0.0505087785422802,

-0.06398648768663406,

-0.08807311207056046,

-0.07526884973049164,

0.15617556869983673,

-0.12728366255760193,

-0.09735537320375443

] |

null | null |

peft

|

<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Augmental Unholy 13B - GPTQ

- Model creator: [Evan Armstrong](https://huggingface.co/Heralax)

- Original model: [Augmental Unholy 13B](https://huggingface.co/Heralax/Augmental-Unholy-13b)

<!-- description start -->

## Description

This repo contains GPTQ model files for [Evan Armstrong's Augmental Unholy 13B](https://huggingface.co/Heralax/Augmental-Unholy-13b).

Multiple GPTQ parameter permutations are provided; see Provided Files below for details of the options provided, their parameters, and the software used to create them.

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

<!-- description end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Augmental-Unholy-13B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Augmental-Unholy-13B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Augmental-Unholy-13B-GGUF)

* [Evan Armstrong's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/Heralax/Augmental-Unholy-13b)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: SillyTavern

```

## {{{{charname}}}}:

- You're "{{{{charname}}}}" in this never-ending roleplay with "{{{{user}}}}".

### Input:

{prompt}

### Response:

(OOC) Understood. I will take this info into account for the roleplay. (end OOC)

### New Roleplay:

### Instruction:

#### {{{{char}}}}:

whatever the char says, this is the chat history

#### {{{{user}}}}:

whatever the user says, this is the chat history

... repeated some number of times ...

### Response (2 paragraphs, engaging, natural, authentic, descriptive, creative):

#### {{{{char}}}}:

```
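As an illustration, the template could be assembled in code along these lines. This is a rough sketch, not the model creator's own tooling: the function name `build_prompt` and its arguments are hypothetical, the `### Instruction:` header is assumed to appear once before the chat history, and the final response header is written with a balanced parenthesis, which is an assumption about the intended template.

```python
OOC_ACK = "(OOC) Understood. I will take this info into account for the roleplay. (end OOC)"
# Assumption: the response header is meant to have a balanced parenthesis.
RESPONSE_HEADER = (
    "### Response (2 paragraphs, engaging, natural, authentic, descriptive, creative):"
)


def build_prompt(charname, user, prompt, history):
    """Assemble a SillyTavern-style prompt.

    history is a list of (speaker, text) pairs in chronological order.
    """
    lines = [
        f"## {charname}:",
        f"- You're \"{charname}\" in this never-ending roleplay with \"{user}\".",
        "### Input:",
        prompt,
        "### Response:",
        OOC_ACK,
        "### New Roleplay:",
        "### Instruction:",
    ]
    for speaker, text in history:
        lines.append(f"#### {speaker}:")
        lines.append(text)
    # End with the character header so the model continues as the character.
    lines.append(RESPONSE_HEADER)
    lines.append(f"#### {charname}:")
    return "\n".join(lines)


example = build_prompt(
    "Aria",
    "Sam",
    "Aria is a ship's navigator.",
    [("Aria", "Hello."), ("Sam", "Hi there.")],
)
print(example)
```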

<!-- prompt-template end -->

<!-- README_GPTQ.md-compatible clients start -->

## Known compatible clients / servers

These GPTQ models are known to work in the following inference servers/webuis.

- [text-generation-webui](https://github.com/oobabooga/text-generation-webui)

- [KoboldAI United](https://github.com/henk717/koboldai)

- [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui)

- [Hugging Face Text Generation Inference (TGI)](https://github.com/huggingface/text-generation-inference)

This may not be a complete list; if you know of others, please let me know!

<!-- README_GPTQ.md-compatible clients end -->

<!-- README_GPTQ.md-provided-files start -->

## Provided files, and GPTQ parameters

Multiple quantisation parameters are provided, to allow you to choose the best one for your hardware and requirements.

Each separate quant is in a different branch. See below for instructions on fetching from different branches.

Most GPTQ files are made with AutoGPTQ. Mistral models are currently made with Transformers.

<details>

<summary>Explanation of GPTQ parameters</summary>

- Bits: The bit size of the quantised model.

- GS: GPTQ group size. Higher numbers use less VRAM, but have lower quantisation accuracy. "None" means no grouping is applied, which uses the least VRAM.

- Act Order: True or False. Also known as `desc_act`. True results in better quantisation accuracy. Some GPTQ clients have had issues with models that use Act Order plus Group Size, but this is generally resolved now.

- Damp %: A GPTQ parameter that affects how samples are processed for quantisation. 0.01 is default, but 0.1 results in slightly better accuracy.

- GPTQ dataset: The calibration dataset used during quantisation. Using a dataset more appropriate to the model's training can improve quantisation accuracy. Note that the GPTQ calibration dataset is not the same as the dataset used to train the model - please refer to the original model repo for details of the training dataset(s).

- Sequence Length: The length of the dataset sequences used for quantisation. Ideally this is the same as the model sequence length. For some very long sequence models (16+K), a lower sequence length may have to be used. Note that a lower sequence length does not limit the sequence length of the quantised model. It only impacts the quantisation accuracy on longer inference sequences.

- ExLlama Compatibility: Whether this file can be loaded with ExLlama, which currently only supports Llama and Mistral models in 4-bit.

</details>
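To make the trade-off between Bits and GS concrete, here is a back-of-the-envelope sketch. It assumes each group stores one 16-bit scale plus one zero point packed at the quantised bit width. That is an assumption about the storage layout, not something this card states. The function names are illustrative, and the estimate ignores any tensors left unquantised, so it will not match the file sizes in the table exactly.

```python
def bits_per_weight(bits, group_size=None, scale_bits=16):
    """Approximate storage cost per quantised weight, in bits.

    Assumption: every group of `group_size` weights shares one scale
    (scale_bits wide) and one zero point (bits wide). With no grouping
    the per-group overhead is treated as negligible.
    """
    if group_size is None:
        return float(bits)
    return bits + (scale_bits + bits) / group_size


def approx_weight_gb(n_params, bits, group_size=None):
    """Rough size of the quantised weights in GB (10**9 bytes)."""
    return n_params * bits_per_weight(bits, group_size) / 8 / 1e9


# Smaller groups cost more bits per weight, hence more VRAM.
for gs in (32, 64, 128, None):
    print(gs, bits_per_weight(4, gs), round(approx_weight_gb(13e9, 4, gs), 2))
```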

| Branch | Bits | GS | Act Order | Damp % | GPTQ Dataset | Seq Len | Size | ExLlama | Desc |
| ------ | ---- | -- | --------- | ------ | ------------ | ------- | ---- | ------- | ---- |
| [main](https://huggingface.co/TheBloke/Augmental-Unholy-13B-GPTQ/tree/main) | 4 | 128 | Yes | 0.1 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-raw-v1) | 4096 | 7.26 GB | Yes | 4-bit, with Act Order and group size 128g. Uses even less VRAM than 64g, but with slightly lower accuracy. |
| [gptq-4bit-32g-actorder_True](https://huggingface.co/TheBloke/Augmental-Unholy-13B-GPTQ/tree/gptq-4bit-32g-actorder_True) | 4 | 32 | Yes | 0.1 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-raw-v1) | 4096 | 8.00 GB | Yes | 4-bit, with Act Order and group size 32g. Gives highest possible inference quality, with maximum VRAM usage. |
| [gptq-8bit--1g-actorder_True](https://huggingface.co/TheBloke/Augmental-Unholy-13B-GPTQ/tree/gptq-8bit--1g-actorder_True) | 8 | None | Yes | 0.1 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-raw-v1) | 4096 | 13.36 GB | No | 8-bit, with Act Order. No group size, to lower VRAM requirements. |
| [gptq-8bit-128g-actorder_True](https://huggingface.co/TheBloke/Augmental-Unholy-13B-GPTQ/tree/gptq-8bit-128g-actorder_True) | 8 | 128 | Yes | 0.1 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-raw-v1) | 4096 | 13.65 GB | No | 8-bit, with group size 128g for higher inference quality and with Act Order for even higher accuracy. |
| [gptq-8bit-32g-actorder_True](https://huggingface.co/TheBloke/Augmental-Unholy-13B-GPTQ/tree/gptq-8bit-32g-actorder_True) | 8 | 32 | Yes | 0.1 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-raw-v1) | 4096 | 14.54 GB | No | 8-bit, with group size 32g and Act Order for maximum inference quality. |
| [gptq-4bit-64g-actorder_True](https://huggingface.co/TheBloke/Augmental-Unholy-13B-GPTQ/tree/gptq-4bit-64g-actorder_True) | 4 | 64 | Yes | 0.1 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-raw-v1) | 4096 | 7.51 GB | Yes | 4-bit, with Act Order and group size 64g. Uses less VRAM than 32g, but with slightly lower accuracy. |
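The branch names in the table encode the quantisation parameters, so a script can recover them without hard-coding each row. Below is a small sketch. The helper `parse_branch` is hypothetical, and the mapping of a group size of `-1` to "None" is inferred from the `gptq-8bit--1g-actorder_True` row. The `main` branch does not follow the naming pattern and is rejected.

```python
import re

# Pattern inferred from the branch names listed in the table.
BRANCH_RE = re.compile(
    r"^gptq-(?P<bits>\d+)bit-(?P<gs>-?\d+)g-actorder_(?P<act>True|False)$"
)


def parse_branch(branch):
    """Extract bits, group size, and Act Order from a branch name."""
    match = BRANCH_RE.match(branch)
    if match is None:
        raise ValueError(f"not a recognised GPTQ branch name: {branch!r}")
    group_size = int(match.group("gs"))
    return {
        "bits": int(match.group("bits")),
        # -1 in the branch name corresponds to "None" in the GS column.
        "group_size": None if group_size == -1 else group_size,
        "act_order": match.group("act") == "True",
    }


print(parse_branch("gptq-4bit-32g-actorder_True"))
print(parse_branch("gptq-8bit--1g-actorder_True"))
```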

<!-- README_GPTQ.md-provided-files end -->

<!-- README_GPTQ.md-download-from-branches start -->

## How to download, including from branches

### In text-generation-webui

To download from the `main` branch, enter `TheBloke/Augmental-Unholy-13B-GPTQ` in the "Download model" box.

To download from another branch, add `:branchname` to the end of the download name, e.g. `TheBloke/Augmental-Unholy-13B-GPTQ:gptq-4bit-32g-actorder_True`
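For scripts that accept the same `repo:branchname` convention, the download name can be split as in the sketch below. The helper name `split_download_name` is hypothetical, and falling back to `main` when no branch is given mirrors the behaviour described above.

```python
def split_download_name(name):
    """Split 'owner/repo[:branch]' into (repo_id, branch)."""
    repo_id, sep, branch = name.partition(":")
    # No ':' (or an empty branch after it) falls back to the main branch.
    return repo_id, (branch if sep and branch else "main")


print(split_download_name("TheBloke/Augmental-Unholy-13B-GPTQ"))
print(split_download_name("TheBloke/Augmental-Unholy-13B-GPTQ:gptq-4bit-32g-actorder_True"))
```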

### From the command line

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

To download the `main` branch to a folder called `Augmental-Unholy-13B-GPTQ`:

```shell

mkdir Augmental-Unholy-13B-GPTQ

huggingface-cli download TheBloke/Augmental-Unholy-13B-GPTQ --local-dir Augmental-Unholy-13B-GPTQ --local-dir-use-symlinks False

```

To download from a different branch, add the `--revision` parameter:

```shell

mkdir Augmental-Unholy-13B-GPTQ

huggingface-cli download TheBloke/Augmental-Unholy-13B-GPTQ --revision gptq-4bit-32g-actorder_True --local-dir Augmental-Unholy-13B-GPTQ --local-dir-use-symlinks False

```
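The same download can be scripted from Python. This is a minimal sketch using `snapshot_download` from the `huggingface_hub` package. The wrapper functions `download_kwargs` and `download_branch` are illustrative names, and defaulting the target folder to the repository name is an assumption that mirrors the shell commands above.

```python
from pathlib import Path

REPO_ID = "TheBloke/Augmental-Unholy-13B-GPTQ"


def download_kwargs(repo_id, branch="main", target_dir=None):
    """Build the keyword arguments passed to snapshot_download."""
    # Default to a folder named after the repository.
    folder = Path(target_dir) if target_dir else Path(repo_id.split("/")[-1])
    return {"repo_id": repo_id, "revision": branch, "local_dir": str(folder)}


def download_branch(repo_id, branch="main", target_dir=None):
    """Download one branch of a model repo and return the local path."""
    # Imported here so the helper above works without the package installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(**download_kwargs(repo_id, branch, target_dir))


# Example (performs a multi-gigabyte download when uncommented):
# download_branch(REPO_ID, "gptq-4bit-32g-actorder_True")
```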

<details>

<summary>More advanced huggingface-cli download usage</summary>

If you remove the `--local-dir-use-symlinks False` parameter, the files will instead be stored in the central Hugging Face cache directory (default location on Linux is: `~/.cache/huggingface`), and symlinks will be added to the specified `--local-dir`, pointing to their real location in the cache. This allows interrupted downloads to be resumed, and allows you to quickly clone the repo to multiple places on disk without triggering a download again. The downside, and the reason why I don't list that as the default option, is that the files are then hidden away in a cache folder, so it's harder to know where your disk space is being used and to clear it up if/when you want to remove a downloaded model.

The cache location can be changed with the `HF_HOME` environment variable, and/or the `--cache-dir` parameter to `huggingface-cli`.

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

mkdir Augmental-Unholy-13B-GPTQ

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/Augmental-Unholy-13B-GPTQ --local-dir Augmental-Unholy-13B-GPTQ --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.
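If you download from a Python script rather than the CLI, the variable can be set in-process. This is a small sketch with a hypothetical helper name, `enable_hf_transfer`. It assumes that `huggingface_hub` reads the variable when it is first imported, so the call should come before that import.

```python
import os
from typing import MutableMapping, Optional


def enable_hf_transfer(env: Optional[MutableMapping[str, str]] = None) -> MutableMapping[str, str]:
    """Set HF_HUB_ENABLE_HF_TRANSFER=1 in `env` (defaults to os.environ).

    Hypothetical helper; call it before importing huggingface_hub.
    """
    target = os.environ if env is None else env
    target["HF_HUB_ENABLE_HF_TRANSFER"] = "1"
    return target


# Demonstrated on a plain dict so the real environment is left untouched.
print(enable_hf_transfer({}))
```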

</details>

### With `git` (**not** recommended)

To clone a specific branch with `git`, use a command like this:

```shell

git clone --single-branch --branch gptq-4bit-32g-actorder_True https://huggingface.co/TheBloke/Augmental-Unholy-13B-GPTQ

```

Note that using Git with HF repos is strongly discouraged. It will be much slower than using `huggingface-hub`, and will use twice as much disk space, because it has to store the model files twice (every byte is stored both in the intended target folder and again in the `.git` folder as a blob).

<!-- README_GPTQ.md-download-from-branches end -->

<!-- README_GPTQ.md-text-generation-webui start -->

## How to easily download and use this model in [text-generation-webui](https://github.com/oobabooga/text-generation-webui)

Please make sure you're using the latest version of [text-generation-webui](https://github.com/oobabooga/text-generation-webui).

It is strongly recommended to use the text-generation-webui one-click-installers unless you're sure you know how to make a manual install.

1. Click the **Model tab**.

2. Under **Download custom model or LoRA**, enter `TheBloke/Augmental-Unholy-13B-GPTQ`.

- To download from a specific branch, enter for example `TheBloke/Augmental-Unholy-13B-GPTQ:gptq-4bit-32g-actorder_True`

- see Provided Files above for the list of branches for each option.

3. Click **Download**.

4. The model will start downloading. Once it's finished it will say "Done".

5. In the top left, click the refresh icon next to **Model**.

6. In the **Model** dropdown, choose the model you just downloaded: `Augmental-Unholy-13B-GPTQ`

7. The model will automatically load, and is now ready for use!

8. If you want any custom settings, set them and then click **Save settings for this model** followed by **Reload the Model** in the top right.

- Note that you do not need to and should not set manual GPTQ parameters any more. These are set automatically from the file `quantize_config.json`.

9. Once you're ready, click the **Text Generation** tab and enter a prompt to get started!
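To see which GPTQ parameters a downloaded branch will load with, you can inspect `quantize_config.json` yourself. The sketch below assumes the key names that AutoGPTQ commonly writes (`bits`, `group_size`, `desc_act`, `damp_percent`); check the actual file in your download, as its contents may differ. The function name is hypothetical.

```python
import json
from pathlib import Path
from typing import Any, Dict

# Keys assumed to be present in an AutoGPTQ-style quantize_config.json.
GPTQ_KEYS = ("bits", "group_size", "desc_act", "damp_percent")


def describe_quantize_config(path: str) -> Dict[str, Any]:
    """Return the main GPTQ parameters from a quantize_config.json file.

    Missing keys are reported as None rather than raising an error.
    """
    config = json.loads(Path(path).read_text(encoding="utf-8"))
    return {key: config.get(key) for key in GPTQ_KEYS}


# Example (path is an assumption about where you downloaded the model):
# print(describe_quantize_config("Augmental-Unholy-13B-GPTQ/quantize_config.json"))
```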

<!-- README_GPTQ.md-text-generation-webui end -->

<!-- README_GPTQ.md-use-from-tgi start -->

## Serving this model from Text Generation Inference (TGI)

It's recommended to use TGI version 1.1.0 or later. The official Docker container is: `ghcr.io/huggingface/text-generation-inference:1.1.0`

Example Docker parameters:

```shell

--model-id TheBloke/Augmental-Unholy-13B-GPTQ --port 3000 --quantize gptq --max-input-length 3696 --max-total-tokens 4096 --max-batch-prefill-tokens 4096

```
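The two length limits in these parameters bound how many tokens a single request can generate. As a rough sketch (the function name is made up for illustration), `--max-total-tokens` covers prompt plus generated tokens, so a prompt that fills `--max-input-length` leaves only the difference for generation:

```python
def max_new_tokens_budget(max_input_length: int, max_total_tokens: int) -> int:
    """Tokens left for generation when the prompt uses the full input limit."""
    if max_input_length >= max_total_tokens:
        raise ValueError("max_input_length must be smaller than max_total_tokens")
    return max_total_tokens - max_input_length


# With the example parameters above: 4096 - 3696
print(max_new_tokens_budget(3696, 4096))  # 400
```

Shorter prompts leave correspondingly more room, up to the total limit.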

Example Python code for interfacing with TGI (requires huggingface-hub 0.17.0 or later):

```shell

pip3 install huggingface-hub

```

```python

from huggingface_hub import InferenceClient

endpoint_url = "https://your-endpoint-url-here"

prompt = "Tell me about AI"

prompt_template=f'''## {{{{charname}}}}:

- You're "{{{{charname}}}}" in this never-ending roleplay with "{{{{user}}}}".

### Input:

{prompt}

### Response:

(OOC) Understood. I will take this info into account for the roleplay. (end OOC)

### New Roleplay:

### Instruction:

#### {{{{char}}}}:

whatever the char says, this is the chat history

#### {{{{user}}}}:

whatever the user says, this is the chat history

... repeated some number of times ...

### Response 2 paragraphs, engaging, natural, authentic, descriptive, creative):

#### {{{{char}}}}:

'''

client = InferenceClient(endpoint_url)

response = client.text_generation(prompt_template,

max_new_tokens=128,

do_sample=True,

temperature=0.7,

top_p=0.95,

top_k=40,

repetition_penalty=1.1)

print(f"Model output: {response}")

```

<!-- README_GPTQ.md-use-from-tgi end -->

<!-- README_GPTQ.md-use-from-python start -->

## How to use this GPTQ model from Python code

### Install the necessary packages

Requires: Transformers 4.33.0 or later, Optimum 1.12.0 or later, and AutoGPTQ 0.4.2 or later.

```shell

pip3 install transformers optimum

pip3 install auto-gptq --extra-index-url https://huggingface.github.io/autogptq-index/whl/cu118/ # Use cu117 if on CUDA 11.7

```

If you have problems installing AutoGPTQ using the pre-built wheels, install it from source instead:

```shell

pip3 uninstall -y auto-gptq

git clone https://github.com/PanQiWei/AutoGPTQ

cd AutoGPTQ

git checkout v0.4.2

pip3 install .

```

### You can then use the following code

```python

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

model_name_or_path = "TheBloke/Augmental-Unholy-13B-GPTQ"

# To use a different branch, change revision

# For example: revision="gptq-4bit-32g-actorder_True"

model = AutoModelForCausalLM.from_pretrained(model_name_or_path,

device_map="auto",

trust_remote_code=False,

revision="main")

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, use_fast=True)

prompt = "Tell me about AI"

prompt_template=f'''## {{{{charname}}}}:

- You're "{{{{charname}}}}" in this never-ending roleplay with "{{{{user}}}}".

### Input:

{prompt}

### Response:

(OOC) Understood. I will take this info into account for the roleplay. (end OOC)

### New Roleplay:

### Instruction:

#### {{{{char}}}}:

whatever the char says, this is the chat history

#### {{{{user}}}}:

whatever the user says, this is the chat history

... repeated some number of times ...

### Response 2 paragraphs, engaging, natural, authentic, descriptive, creative):

#### {{{{char}}}}:

'''

print("\n\n*** Generate:")

input_ids = tokenizer(prompt_template, return_tensors='pt').input_ids.cuda()

output = model.generate(inputs=input_ids, temperature=0.7, do_sample=True, top_p=0.95, top_k=40, max_new_tokens=512)

print(tokenizer.decode(output[0]))

# Inference can also be done using transformers' pipeline

print("*** Pipeline:")

pipe = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

max_new_tokens=512,

do_sample=True,

temperature=0.7,

top_p=0.95,

top_k=40,

repetition_penalty=1.1

)

print(pipe(prompt_template)[0]['generated_text'])

```
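The prompt template above contains placeholder chat history. A minimal sketch of how a real prompt might be assembled from a list of turns follows. The function `build_prompt` and its parameters are hypothetical, and replacing the `{{charname}}`, `{{char}}` and `{{user}}` macros with plain names is an assumption about how a front end such as SillyTavern fills them in. The response header is copied verbatim from this card.

```python
from typing import List, Tuple

# Copied as-is from the prompt template in this model card.
RESPONSE_HEADER = "### Response 2 paragraphs, engaging, natural, authentic, descriptive, creative):"


def build_prompt(char_name: str, user_name: str, description: str,
                 history: List[Tuple[str, str]]) -> str:
    """Assemble a roleplay prompt from (speaker, text) chat turns."""
    lines = [
        f"## {char_name}:",
        f"- You're \"{char_name}\" in this never-ending roleplay with \"{user_name}\".",
        "### Input:",
        description,
        "",
        "### Response:",
        "(OOC) Understood. I will take this info into account for the roleplay. (end OOC)",
        "",
        "### New Roleplay:",
        "### Instruction:",
    ]
    for speaker, text in history:
        lines.append(f"#### {speaker}:")
        lines.append(text)
    # The prompt ends with the character's header so the model continues as them.
    lines.append(RESPONSE_HEADER)
    lines.append(f"#### {char_name}:")
    return "\n".join(lines) + "\n"


print(build_prompt("Aria", "Sam", "Aria is a ship's navigator.",
                   [("Aria", "Welcome aboard."), ("Sam", "Where are we headed?")]))
```

The returned string can be passed to `tokenizer(...)` or `pipe(...)` in place of `prompt_template`.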

<!-- README_GPTQ.md-use-from-python end -->

<!-- README_GPTQ.md-compatibility start -->

## Compatibility

The files provided are tested to work with Transformers. For non-Mistral models, AutoGPTQ can also be used directly.

[ExLlama](https://github.com/turboderp/exllama) is compatible with Llama and Mistral models in 4-bit. Please see the Provided Files table above for per-file compatibility.

For a list of clients/servers, please see "Known compatible clients / servers", above.

<!-- README_GPTQ.md-compatibility end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Brandon Frisco, LangChain4j, Spiking Neurons AB, transmissions 11, Joseph William Delisle, Nitin Borwankar, Willem Michiel, Michael Dempsey, vamX, Jeffrey Morgan, zynix, jjj, Omer Bin Jawed, Sean Connelly, jinyuan sun, Jeromy Smith, Shadi, Pawan Osman, Chadd, Elijah Stavena, Illia Dulskyi, Sebastain Graf, Stephen Murray, terasurfer, Edmond Seymore, Celu Ramasamy, Mandus, Alex, biorpg, Ajan Kanaga, Clay Pascal, Raven Klaugh, 阿明, K, ya boyyy, usrbinkat, Alicia Loh, John Villwock, ReadyPlayerEmma, Chris Smitley, Cap'n Zoog, fincy, GodLy, S_X, sidney chen, Cory Kujawski, OG, Mano Prime, AzureBlack, Pieter, Kalila, Spencer Kim, Tom X Nguyen, Stanislav Ovsiannikov, Michael Levine, Andrey, Trailburnt, Vadim, Enrico Ros, Talal Aujan, Brandon Phillips, Jack West, Eugene Pentland, Michael Davis, Will Dee, webtim, Jonathan Leane, Alps Aficionado, Rooh Singh, Tiffany J. Kim, theTransient, Luke @flexchar, Elle, Caitlyn Gatomon, Ari Malik, subjectnull, Johann-Peter Hartmann, Trenton Dambrowitz, Imad Khwaja, Asp the Wyvern, Emad Mostaque, Rainer Wilmers, Alexandros Triantafyllidis, Nicholas, Pedro Madruga, SuperWojo, Harry Royden McLaughlin, James Bentley, Olakabola, David Ziegler, Ai Maven, Jeff Scroggin, Nikolai Manek, Deo Leter, Matthew Berman, Fen Risland, Ken Nordquist, Manuel Alberto Morcote, Luke Pendergrass, TL, Fred von Graf, Randy H, Dan Guido, NimbleBox.ai, Vitor Caleffi, Gabriel Tamborski, knownsqashed, Lone Striker, Erik Bjäreholt, John Detwiler, Leonard Tan, Iucharbius

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

# Original model card: Evan Armstrong's Augmental Unholy 13B

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Data Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: QuantizationMethod.BITS_AND_BYTES

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: float16
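For reference, the non-default 4-bit settings listed above could be expressed in code roughly as follows. This is a sketch, not the original training script: it assumes the `BitsAndBytesConfig` class from `transformers` together with `torch`, and the names `TRAINING_QUANT_KWARGS` and `make_bnb_config` are made up for this example.

```python
from typing import Any, Dict

# 4-bit settings taken from the list above; the compute dtype is kept
# as a string here and resolved to a torch dtype only when needed.
TRAINING_QUANT_KWARGS: Dict[str, Any] = {
    "load_in_4bit": True,
    "bnb_4bit_quant_type": "fp4",
    "bnb_4bit_use_double_quant": True,
    "bnb_4bit_compute_dtype": "float16",
}


def make_bnb_config():
    """Build a transformers BitsAndBytesConfig from the settings above."""
    # Imported lazily so the settings can be inspected without these packages.
    import torch
    from transformers import BitsAndBytesConfig

    kwargs = dict(TRAINING_QUANT_KWARGS)
    kwargs["bnb_4bit_compute_dtype"] = getattr(torch, kwargs["bnb_4bit_compute_dtype"])
    return BitsAndBytesConfig(**kwargs)


# Example use (requires transformers, torch and bitsandbytes):
# quant_config = make_bnb_config()
```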

### Framework versions

- PEFT 0.6.0

|

{"license": "llama2", "library_name": "peft", "model_name": "Augmental Unholy 13B", "base_model": "Heralax/Augmental-Unholy-13b", "inference": false, "model_creator": "Evan Armstrong", "model_type": "llama", "prompt_template": "## {{{{charname}}}}:\n- You're \"{{{{charname}}}}\" in this never-ending roleplay with \"{{{{user}}}}\".\n### Input:\n{prompt}\n\n### Response:\n(OOC) Understood. I will take this info into account for the roleplay. (end OOC)\n\n### New Roleplay:\n### Instruction:\n#### {{{{char}}}}:\nwhatever the char says, this is the chat history\n#### {{{{user}}}}:\nwhatever the user says, this is the chat history\n... repeated some number of times ...\n### Response 2 paragraphs, engaging, natural, authentic, descriptive, creative):\n#### {{{{char}}}}:\n", "quantized_by": "TheBloke"}

| null |

TheBloke/Augmental-Unholy-13B-GPTQ

|

[

"peft",

"safetensors",

"llama",

"arxiv:1910.09700",

"base_model:Heralax/Augmental-Unholy-13b",

"license:llama2",

"4-bit",

"region:us"

] |

2023-11-11T09:59:26+00:00

|

[

"1910.09700"

] |

[] |

TAGS

#peft #safetensors #llama #arxiv-1910.09700 #base_model-Heralax/Augmental-Unholy-13b #license-llama2 #4-bit #region-us

|

TheBloke's LLM work is generously supported by a grant from andreessen horowitz (a16z)

Chat & support: TheBloke's Discord server

Want to contribute? TheBloke's Patreon page

---

Augmental Unholy 13B - GPTQ

===========================

* Model creator: Evan Armstrong

* Original model: Augmental Unholy 13B

Description

-----------

This repo contains GPTQ model files for Evan Armstrong's Augmental Unholy 13B.

Multiple GPTQ parameter permutations are provided; see Provided Files below for details of the options provided, their parameters, and the software used to create them.

These files were quantised using hardware kindly provided by Massed Compute.

Repositories available

----------------------

* AWQ model(s) for GPU inference.

* GPTQ models for GPU inference, with multiple quantisation parameter options.

* 2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference

* Evan Armstrong's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions

Prompt template: SillyTavern

----------------------------

Known compatible clients / servers

----------------------------------

These GPTQ models are known to work in the following inference servers/webuis.

* text-generation-webui

* KoboldAI United

* LoLLMS Web UI

* Hugging Face Text Generation Inference (TGI)

This may not be a complete list; if you know of others, please let me know!

Provided files, and GPTQ parameters

-----------------------------------

Multiple quantisation parameters are provided, to allow you to choose the best one for your hardware and requirements.

Each separate quant is in a different branch. See below for instructions on fetching from different branches.

Most GPTQ files are made with AutoGPTQ. Mistral models are currently made with Transformers.

Explanation of GPTQ parameters

* Bits: The bit size of the quantised model.

* GS: GPTQ group size. Higher numbers use less VRAM, but have lower quantisation accuracy. "None" is the lowest possible value.

* Act Order: True or False. Also known as 'desc\_act'. True results in better quantisation accuracy. Some GPTQ clients have had issues with models that use Act Order plus Group Size, but this is generally resolved now.

* Damp %: A GPTQ parameter that affects how samples are processed for quantisation. 0.01 is default, but 0.1 results in slightly better accuracy.

* GPTQ dataset: The calibration dataset used during quantisation. Using a dataset more appropriate to the model's training can improve quantisation accuracy. Note that the GPTQ calibration dataset is not the same as the dataset used to train the model - please refer to the original model repo for details of the training dataset(s).

* Sequence Length: The length of the dataset sequences used for quantisation. Ideally this is the same as the model sequence length. For some very long sequence models (16+K), a lower sequence length may have to be used. Note that a lower sequence length does not limit the sequence length of the quantised model. It only impacts the quantisation accuracy on longer inference sequences.

* ExLlama Compatibility: Whether this file can be loaded with ExLlama, which currently only supports Llama and Mistral models in 4-bit.

How to download, including from branches

----------------------------------------

### In text-generation-webui

To download from the 'main' branch, enter 'TheBloke/Augmental-Unholy-13B-GPTQ' in the "Download model" box.

To download from another branch, add ':branchname' to the end of the download name, eg 'TheBloke/Augmental-Unholy-13B-GPTQ:gptq-4bit-32g-actorder\_True'

### From the command line

I recommend using the 'huggingface-hub' Python library:

To download the 'main' branch to a folder called 'Augmental-Unholy-13B-GPTQ':

To download from a different branch, add the '--revision' parameter:

More advanced huggingface-cli download usage

If you remove the '--local-dir-use-symlinks False' parameter, the files will instead be stored in the central Hugging Face cache directory (default location on Linux is: '~/.cache/huggingface'), and symlinks will be added to the specified '--local-dir', pointing to their real location in the cache. This allows interrupted downloads to be resumed, and lets you quickly clone the repo to multiple places on disk without triggering a download again. The downside, and the reason why I don't list that as the default option, is that the files are then hidden away in a cache folder, so it's harder to know where your disk space is being used, and to clear it up if/when you want to remove a downloaded model.

The cache location can be changed with the 'HF\_HOME' environment variable, and/or the '--cache-dir' parameter to 'huggingface-cli'.

For more documentation on downloading with 'huggingface-cli', please see: HF -> Hub Python Library -> Download files -> Download from the CLI.

To accelerate downloads on fast connections (1Gbit/s or higher), install 'hf\_transfer':

And set environment variable 'HF\_HUB\_ENABLE\_HF\_TRANSFER' to '1':

Windows Command Line users: You can set the environment variable by running 'set HF\_HUB\_ENABLE\_HF\_TRANSFER=1' before the download command.

### With 'git' (not recommended)

To clone a specific branch with 'git', use a command like this:

Note that using Git with HF repos is strongly discouraged. It will be much slower than using 'huggingface-hub', and will use twice as much disk space, because it has to store the model files twice (every byte is stored both in the intended target folder and again in the '.git' folder as a blob).

How to easily download and use this model in text-generation-webui

------------------------------------------------------------------

Please make sure you're using the latest version of text-generation-webui.

It is strongly recommended to use the text-generation-webui one-click-installers unless you're sure you know how to make a manual install.

1. Click the Model tab.

2. Under Download custom model or LoRA, enter 'TheBloke/Augmental-Unholy-13B-GPTQ'.

* To download from a specific branch, enter for example 'TheBloke/Augmental-Unholy-13B-GPTQ:gptq-4bit-32g-actorder\_True'

* see Provided Files above for the list of branches for each option.

3. Click Download.

4. The model will start downloading. Once it's finished it will say "Done".

5. In the top left, click the refresh icon next to Model.

6. In the Model dropdown, choose the model you just downloaded: 'Augmental-Unholy-13B-GPTQ'

7. The model will automatically load, and is now ready for use!

8. If you want any custom settings, set them and then click Save settings for this model followed by Reload the Model in the top right.

* Note that you do not need to and should not set manual GPTQ parameters any more. These are set automatically from the file 'quantize\_config.json'.

9. Once you're ready, click the Text Generation tab and enter a prompt to get started!

Serving this model from Text Generation Inference (TGI)

-------------------------------------------------------

It's recommended to use TGI version 1.1.0 or later. The official Docker container is: 'URL'

Example Docker parameters:

Example Python code for interfacing with TGI (requires huggingface-hub 0.17.0 or later):

How to use this GPTQ model from Python code

-------------------------------------------

### Install the necessary packages

Requires: Transformers 4.33.0 or later, Optimum 1.12.0 or later, and AutoGPTQ 0.4.2 or later.

If you have problems installing AutoGPTQ using the pre-built wheels, install it from source instead:

### You can then use the following code

Compatibility

-------------

The files provided are tested to work with Transformers. For non-Mistral models, AutoGPTQ can also be used directly.

ExLlama is compatible with Llama and Mistral models in 4-bit. Please see the Provided Files table above for per-file compatibility.

For a list of clients/servers, please see "Known compatible clients / servers", above.

Discord

-------

For further support, and discussions on these models and AI in general, join us at:

TheBloke AI's Discord server

Thanks, and how to contribute

-----------------------------

Thanks to the URL team!

Thanks to Clay from URL!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: URL

* Ko-Fi: URL

Special thanks to: Aemon Algiz.

Patreon special mentions: Brandon Frisco, LangChain4j, Spiking Neurons AB, transmissions 11, Joseph William Delisle, Nitin Borwankar, Willem Michiel, Michael Dempsey, vamX, Jeffrey Morgan, zynix, jjj, Omer Bin Jawed, Sean Connelly, jinyuan sun, Jeromy Smith, Shadi, Pawan Osman, Chadd, Elijah Stavena, Illia Dulskyi, Sebastain Graf, Stephen Murray, terasurfer, Edmond Seymore, Celu Ramasamy, Mandus, Alex, biorpg, Ajan Kanaga, Clay Pascal, Raven Klaugh, 阿明, K, ya boyyy, usrbinkat, Alicia Loh, John Villwock, ReadyPlayerEmma, Chris Smitley, Cap'n Zoog, fincy, GodLy, S\_X, sidney chen, Cory Kujawski, OG, Mano Prime, AzureBlack, Pieter, Kalila, Spencer Kim, Tom X Nguyen, Stanislav Ovsiannikov, Michael Levine, Andrey, Trailburnt, Vadim, Enrico Ros, Talal Aujan, Brandon Phillips, Jack West, Eugene Pentland, Michael Davis, Will Dee, webtim, Jonathan Leane, Alps Aficionado, Rooh Singh, Tiffany J. Kim, theTransient, Luke @flexchar, Elle, Caitlyn Gatomon, Ari Malik, subjectnull, Johann-Peter Hartmann, Trenton Dambrowitz, Imad Khwaja, Asp the Wyvern, Emad Mostaque, Rainer Wilmers, Alexandros Triantafyllidis, Nicholas, Pedro Madruga, SuperWojo, Harry Royden McLaughlin, James Bentley, Olakabola, David Ziegler, Ai Maven, Jeff Scroggin, Nikolai Manek, Deo Leter, Matthew Berman, Fen Risland, Ken Nordquist, Manuel Alberto Morcote, Luke Pendergrass, TL, Fred von Graf, Randy H, Dan Guido, URL, Vitor Caleffi, Gabriel Tamborski, knownsqashed, Lone Striker, Erik Bjäreholt, John Detwiler, Leonard Tan, Iucharbius

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

Original model card: Evan Armstrong's Augmental Unholy 13B

==========================================================

Model Card for Model ID

=======================

Model Details

-------------

### Model Description

* Developed by:

* Shared by [optional]:

* Model type:

* Language(s) (NLP):

* License:

* Finetuned from model [optional]:

### Model Sources [optional]

* Repository:

* Paper [optional]:

* Demo [optional]:

Uses

----

### Direct Use

### Downstream Use [optional]

### Out-of-Scope Use

Bias, Risks, and Limitations

----------------------------

### Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

How to Get Started with the Model

---------------------------------

Use the code below to get started with the model.

Training Details

----------------

### Training Data

### Training Procedure

#### Preprocessing [optional]

#### Training Hyperparameters

* Training regime:

#### Speeds, Sizes, Times [optional]

Evaluation

----------

### Testing Data, Factors & Metrics

#### Testing Data

#### Factors

#### Metrics

### Results

#### Summary

Model Examination [optional]

----------------------------

Environmental Impact

--------------------

Carbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).

* Hardware Type:

* Hours used:

* Cloud Provider:

* Compute Region:

* Carbon Emitted:

Technical Specifications [optional]

-----------------------------------

### Model Architecture and Objective

### Compute Infrastructure

#### Hardware

#### Software

Citation [optional]

-------------------

BibTeX:

APA:

Glossary [optional]

-------------------

More Information [optional]

---------------------------

Model Card Authors [optional]

-----------------------------

Model Card Contact

------------------

Training procedure

------------------

The following 'bitsandbytes' quantization config was used during training:

* quant\_method: QuantizationMethod.BITS\_AND\_BYTES

* load\_in\_8bit: False

* load\_in\_4bit: True

* llm\_int8\_threshold: 6.0

* llm\_int8\_skip\_modules: None

* llm\_int8\_enable\_fp32\_cpu\_offload: False

* llm\_int8\_has\_fp16\_weight: False

* bnb\_4bit\_quant\_type: fp4

* bnb\_4bit\_use\_double\_quant: True

* bnb\_4bit\_compute\_dtype: float16

### Framework versions

* PEFT 0.6.0

|

[

"### In text-generation-webui\n\n\nTo download from the 'main' branch, enter 'TheBloke/Augmental-Unholy-13B-GPTQ' in the \"Download model\" box.\n\n\nTo download from another branch, add ':branchname' to the end of the download name, eg 'TheBloke/Augmental-Unholy-13B-GPTQ:gptq-4bit-32g-actorder\\_True'",

"### From the command line\n\n\nI recommend using the 'huggingface-hub' Python library:\n\n\nTo download the 'main' branch to a folder called 'Augmental-Unholy-13B-GPTQ':\n\n\nTo download from a different branch, add the '--revision' parameter:\n\n\n\nMore advanced huggingface-cli download usage\nIf you remove the '--local-dir-use-symlinks False' parameter, the files will instead be stored in the central Hugging Face cache directory (default location on Linux is: '~/.cache/huggingface'), and symlinks will be added to the specified '--local-dir', pointing to their real location in the cache. This allows for interrupted downloads to be resumed, and allows you to quickly clone the repo to multiple places on disk without triggering a download again. The downside, and the reason why I don't list that as the default option, is that the files are then hidden away in a cache folder and it's harder to know where your disk space is being used, and to clear it up if/when you want to remove a download model.\n\n\nThe cache location can be changed with the 'HF\\_HOME' environment variable, and/or the '--cache-dir' parameter to 'huggingface-cli'.\n\n\nFor more documentation on downloading with 'huggingface-cli', please see: HF -> Hub Python Library -> Download files -> Download from the CLI.\n\n\nTo accelerate downloads on fast connections (1Gbit/s or higher), install 'hf\\_transfer':\n\n\nAnd set environment variable 'HF\\_HUB\\_ENABLE\\_HF\\_TRANSFER' to '1':\n\n\nWindows Command Line users: You can set the environment variable by running 'set HF\\_HUB\\_ENABLE\\_HF\\_TRANSFER=1' before the download command.",

"### With 'git' (not recommended)\n\n\nTo clone a specific branch with 'git', use a command like this:\n\n\nNote that using Git with HF repos is strongly discouraged. It will be much slower than using 'huggingface-hub', and will use twice as much disk space as it has to store the model files twice (it stores every byte both in the intended target folder, and again in the '.git' folder as a blob.)\n\n\nHow to easily download and use this model in text-generation-webui\n------------------------------------------------------------------\n\n\nPlease make sure you're using the latest version of text-generation-webui.\n\n\nIt is strongly recommended to use the text-generation-webui one-click-installers unless you're sure you know how to make a manual install.\n\n\n1. Click the Model tab.\n2. Under Download custom model or LoRA, enter 'TheBloke/Augmental-Unholy-13B-GPTQ'.\n\n\n\t* To download from a specific branch, enter for example 'TheBloke/Augmental-Unholy-13B-GPTQ:gptq-4bit-32g-actorder\\_True'\n\t* see Provided Files above for the list of branches for each option.\n3. Click Download.\n4. The model will start downloading. Once it's finished it will say \"Done\".\n5. In the top left, click the refresh icon next to Model.\n6. In the Model dropdown, choose the model you just downloaded: 'Augmental-Unholy-13B-GPTQ'\n7. The model will automatically load, and is now ready for use!\n8. If you want any custom settings, set them and then click Save settings for this model followed by Reload the Model in the top right.\n\n\n\t* Note that you do not need to and should not set manual GPTQ parameters any more. These are set automatically from the file 'quantize\\_config.json'.\n9. Once you're ready, click the Text Generation tab and enter a prompt to get started!\n\n\nServing this model from Text Generation Inference (TGI)\n-------------------------------------------------------\n\n\nIt's recommended to use TGI version 1.1.0 or later. 
The official Docker container is: 'URL'\n\n\nExample Docker parameters:\n\n\nExample Python code for interfacing with TGI (requires huggingface-hub 0.17.0 or later):\n\n\nHow to use this GPTQ model from Python code\n-------------------------------------------",

"### Install the necessary packages\n\n\nRequires: Transformers 4.33.0 or later, Optimum 1.12.0 or later, and AutoGPTQ 0.4.2 or later.\n\n\nIf you have problems installing AutoGPTQ using the pre-built wheels, install it from source instead:",

"### You can then use the following code\n\n\nCompatibility\n-------------\n\n\nThe files provided are tested to work with Transformers. For non-Mistral models, AutoGPTQ can also be used directly.\n\n\nExLlama is compatible with Llama and Mistral models in 4-bit. Please see the Provided Files table above for per-file compatibility.\n\n\nFor a list of clients/servers, please see \"Known compatible clients / servers\", above.\n\n\nDiscord\n-------\n\n\nFor further support, and discussions on these models and AI in general, join us at:\n\n\nTheBloke AI's Discord server\n\n\nThanks, and how to contribute\n-----------------------------\n\n\nThanks to the URL team!\n\n\nThanks to Clay from URL!\n\n\nI've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine-tuning/training.\n\n\nIf you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.\n\n\nDonors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.\n\n\n* Patreon: URL\n* Ko-Fi: URL\n\n\nSpecial thanks to: Aemon Algiz.\n\n\nPatreon special mentions: Brandon Frisco, LangChain4j, Spiking Neurons AB, transmissions 11, Joseph William Delisle, Nitin Borwankar, Willem Michiel, Michael Dempsey, vamX, Jeffrey Morgan, zynix, jjj, Omer Bin Jawed, Sean Connelly, jinyuan sun, Jeromy Smith, Shadi, Pawan Osman, Chadd, Elijah Stavena, Illia Dulskyi, Sebastain Graf, Stephen Murray, terasurfer, Edmond Seymore, Celu Ramasamy, Mandus, Alex, biorpg, Ajan Kanaga, Clay Pascal, Raven Klaugh, 阿明, K, ya boyyy, usrbinkat, Alicia Loh, John Villwock, ReadyPlayerEmma, Chris Smitley, Cap'n Zoog, fincy, GodLy, S\\_X, sidney chen, Cory Kujawski, OG, Mano Prime, AzureBlack, Pieter, Kalila, Spencer Kim, Tom X Nguyen, 
Stanislav Ovsiannikov, Michael Levine, Andrey, Trailburnt, Vadim, Enrico Ros, Talal Aujan, Brandon Phillips, Jack West, Eugene Pentland, Michael Davis, Will Dee, webtim, Jonathan Leane, Alps Aficionado, Rooh Singh, Tiffany J. Kim, theTransient, Luke @flexchar, Elle, Caitlyn Gatomon, Ari Malik, subjectnull, Johann-Peter Hartmann, Trenton Dambrowitz, Imad Khwaja, Asp the Wyvern, Emad Mostaque, Rainer Wilmers, Alexandros Triantafyllidis, Nicholas, Pedro Madruga, SuperWojo, Harry Royden McLaughlin, James Bentley, Olakabola, David Ziegler, Ai Maven, Jeff Scroggin, Nikolai Manek, Deo Leter, Matthew Berman, Fen Risland, Ken Nordquist, Manuel Alberto Morcote, Luke Pendergrass, TL, Fred von Graf, Randy H, Dan Guido, URL, Vitor Caleffi, Gabriel Tamborski, knownsqashed, Lone Striker, Erik Bjäreholt, John Detwiler, Leonard Tan, Iucharbius\n\n\nThank you to all my generous patrons and donors!\n\n\nAnd thank you again to a16z for their generous grant.\n\n\nOriginal model card: Evan Armstrong's Augmental Unholy 13B\n==========================================================\n\n\nModel Card for Model ID\n=======================\n\n\nModel Details\n-------------",

"### Model Description\n\n\n* Developed by:\n* Shared by [optional]:\n* Model type:\n* Language(s) (NLP):\n* License:\n* Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n* Repository:\n* Paper [optional]:\n* Demo [optional]:\n\n\nUses\n----",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use\n\n\nBias, Risks, and Limitations\n----------------------------",

"### Recommendations\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.\n\n\nHow to Get Started with the Model\n---------------------------------\n\n\nUse the code below to get started with the model.\n\n\nTraining Details\n----------------",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n\n* Training regime:",

"#### Speeds, Sizes, Times [optional]\n\n\nEvaluation\n----------",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary\n\n\nModel Examination [optional]\n----------------------------\n\n\nEnvironmental Impact\n--------------------\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n\n* Hardware Type:\n* Hours used:\n* Cloud Provider:\n* Compute Region:\n* Carbon Emitted:\n\n\nTechnical Specifications [optional]\n-----------------------------------",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",