instance_id (string, length 17-39) | repo (string, 8 classes) | issue_id (string, length 14-34) | pr_id (string, length 14-34) | linking_methods (list, length 1-3) | base_commit (string, length 40) | merge_commit (string, length 0-40, nullable) | hints_text (list, length 0-106) | resolved_comments (list, length 0-119) | created_at (timestamp[ns, tz=UTC]) | labeled_as (list, length 0-7) | problem_title (string, length 7-174) | problem_statement (string, length 0-55.4k) | gold_files (list, length 0-10) | gold_files_postpatch (list, length 1-10) | test_files (list, length 0-60) | gold_patch (string, length 220-5.83M) | test_patch (string, length 386-194k, nullable) | split_random (string, 3 classes) | split_time (string, 3 classes) | issue_start_time (timestamp[ns]) | issue_created_at (timestamp[ns, tz=UTC]) | issue_by_user (string, length 3-21) | split_repo (string, 3 classes) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

provectus/kafka-ui/3127_3165 | provectus/kafka-ui | provectus/kafka-ui/3127 | provectus/kafka-ui/3165 | [

"connected"

]

| 9fad0d0ee3c1d3e77eb37b4e510a8c0a77db66b1 | 87ffb4716a2c634f3e68c8ce5a2a2a0e61203ee5 | []

| [

"let's set selectItem() method return TopicList object to avoid separate calling next method from topicList object",

"don't we need to wait until new screen ready first?",

"Added.",

"Fixed."

]

| 2022-12-28T12:44:54Z | [

"scope/QA",

"scope/AQA"

]

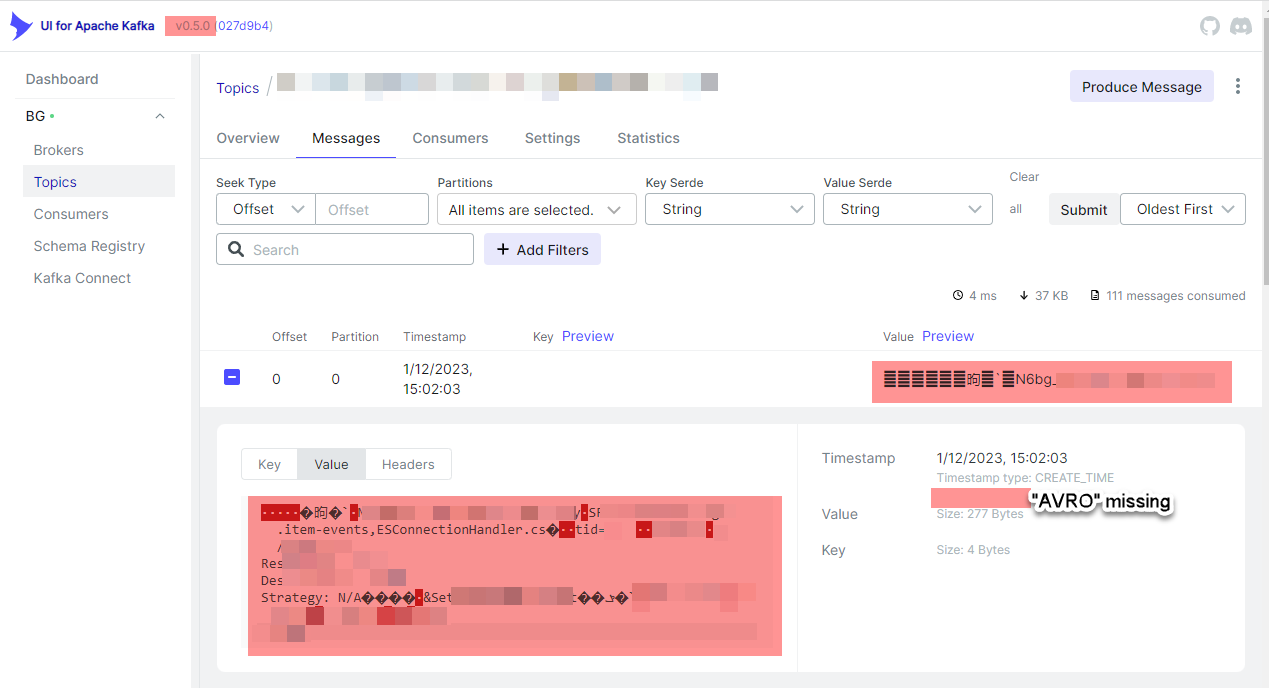

| [e2e]TopicTests.copyTopic : Copy topic | Autotest implementation for:

https://app.qase.io/case/KAFKAUI-8

Description:

Checking possibility to copy the selected Topic from All Topics' list

Pre-conditions

- Login to Kafka-ui application

- Open the 'Local' section

- Select the 'Topics'

Steps:

1) Check any Topic from All Topics' list

2) Click on 'Copy selected topic' button

3) Change the topic name

4) Press "Create topic" button

Expected results:

1) Make sure 'Delete selected topics', 'Copy selected topic', 'Purge messages of selected topics' are displayed

2) Should redirect to the Copy Topic page with the existing data filled in and the "Create topic" button disabled

3) "Create topic" button should become active

4) Should redirect to the newly created topic and display a success message | [

"kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicsList.java"

]

| [

"kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicsList.java"

]

| [

"kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/base/BaseTest.java",

"kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/suite/topics/TopicsTests.java"

]

| diff --git a/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicsList.java b/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicsList.java

index 871ecbb752f..f24df1e926a 100644

--- a/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicsList.java

+++ b/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicsList.java

@@ -70,6 +70,12 @@ public List<SelenideElement> getActionButtons() {

.collect(Collectors.toList());

}

+ @Step

+ public TopicsList clickCopySelectedTopicBtn(){

+ copySelectedTopicBtn.shouldBe(Condition.enabled).click();

+ return this;

+ }

+

private List<SelenideElement> getVisibleColumnHeaders() {

return Stream.of("Replication Factor","Number of messages","Topic Name", "Partitions", "Out of sync replicas", "Size")

.map(name -> $x(String.format(columnHeaderLocator, name)))

@@ -134,8 +140,9 @@ public TopicGridItem(SelenideElement element) {

}

@Step

- public void selectItem(boolean select) {

- selectElement(element.$x("./td[1]/input"), select);

+ public TopicsList selectItem(boolean select) {

+ selectElement(element.$x("./td[1]/input"), select);

+ return new TopicsList();

}

@Step

| diff --git a/kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/base/BaseTest.java b/kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/base/BaseTest.java

index 3a48f9f962d..9290c0af3b4 100644

--- a/kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/base/BaseTest.java

+++ b/kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/base/BaseTest.java

@@ -34,7 +34,7 @@

@Slf4j

@DisplayNameGeneration(DisplayNameGenerator.class)

-public class BaseTest extends Facade {

+public abstract class BaseTest extends Facade {

private static final String SELENIUM_IMAGE_NAME = "selenium/standalone-chrome:103.0";

private static final String SELENIARM_STANDALONE_CHROMIUM = "seleniarm/standalone-chromium:103.0";

diff --git a/kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/suite/topics/TopicsTests.java b/kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/suite/topics/TopicsTests.java

index 01eff46b980..89857df9675 100644

--- a/kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/suite/topics/TopicsTests.java

+++ b/kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/suite/topics/TopicsTests.java

@@ -471,6 +471,38 @@ void recreateTopicFromTopicProfile(){

.as("isAlertWithMessageVisible()").isTrue();

}

+ @DisplayName("TopicTests.copyTopic : Copy topic")

+ @Suite(suiteId = SUITE_ID, title = SUITE_TITLE)

+ @AutomationStatus(status = Status.AUTOMATED)

+ @CaseId(8)

+ @Test

+ void checkCopyTopicPossibility(){

+ Topic topicToCopy = new Topic()

+ .setName("topic-to-copy-" + randomAlphabetic(5))

+ .setNumberOfPartitions(1);

+ navigateToTopics();

+ topicsList

+ .getTopicItem("_schemas")

+ .selectItem(true)

+ .clickCopySelectedTopicBtn();

+ topicCreateEditForm

+ .waitUntilScreenReady();

+ assertThat(topicCreateEditForm.isCreateTopicButtonEnabled()).as("isCreateTopicButtonEnabled()").isFalse();

+ topicCreateEditForm

+ .setTopicName(topicToCopy.getName())

+ .setNumberOfPartitions(topicToCopy.getNumberOfPartitions())

+ .clickCreateTopicBtn();

+ topicDetails

+ .waitUntilScreenReady();

+ TOPIC_LIST.add(topicToCopy);

+ SoftAssertions softly = new SoftAssertions();

+ softly.assertThat(topicDetails.isAlertWithMessageVisible(SUCCESS, "Topic successfully created."))

+ .as("isAlertWithMessageVisible()").isTrue();

+ softly.assertThat(topicDetails.isTopicHeaderVisible(topicToCopy.getName()))

+ .as("isTopicHeaderVisible()").isTrue();

+ softly.assertAll();

+ }

+

@AfterAll

public void afterAll() {

TOPIC_LIST.forEach(topic -> apiService.deleteTopic(CLUSTER_NAME, topic.getName()));

| train | val | 2022-12-29T11:45:18 | 2022-12-23T14:06:46Z | ArthurNiedial | train |

provectus/kafka-ui/3180_3185 | provectus/kafka-ui | provectus/kafka-ui/3180 | provectus/kafka-ui/3185 | [

"keyword_pr_to_issue"

]

| eef63466fb3aee13a63d8071b1abf3de425f3f2b | 9b87d3829b024ba89c28dec97394013a3c83973b | [

"Hello there Nawarix! 👋\n\nThank you and congratulations 🎉 for opening your very first issue in this project! 💖\n\nIn case you want to claim this issue, please comment down below! We will try to get back to you as soon as we can. 👀",

"@Nawarix try this \r\n\r\nAUTH_TYPE: OAUTH2\r\nKAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: bootstrap:9092\r\nKAFKA_CLUSTERS_0_NAME: your-cluster\r\nKAFKA_CLUSTERS_0_SCHEMAREGISTRY: http://apicurioregistry:8080/apis/ccompat/v6\r\nKAFKA_CLUSTERS_0_SCHEMAREGISTRYAUTH_PASSWORD: XXXXXXXXXXXXXX\r\nKAFKA_CLUSTERS_0_SCHEMAREGISTRYAUTH_USERNAME: registry-api\r\nSPRING_SECURITY_OAUTH2_CLIENT_PROVIDER_AUTH0_ISSUER_URI: https://domain/auth/realms/myrealm\r\nSPRING_SECURITY_OAUTH2_CLIENT_REGISTRATION_AUTH0_CLIENTID: kafka-ui\r\nSPRING_SECURITY_OAUTH2_CLIENT_REGISTRATION_AUTH0_CLIENTSECRET: XXXXXXXXXXXXXXXXXXXX\r\nSPRING_SECURITY_OAUTH2_CLIENT_REGISTRATION_AUTH0_SCOPE: openid\r\n\r\nWorks for me.",

"Hi, please take a look at the \"breaking changes\" block at the release page:\r\nhttps://github.com/provectus/kafka-ui/releases/tag/v0.5.0\r\n\r\nLet me know how it goes.",

"@sookeke those were my configs before v0.5.0 and they work just fine, but when I updated the software I got an exception\r\n\r\n@Haarolean That what I tried to do with my last configs\r\nAUTH_OAUTH2_CLIENT_KEYCLOAK_CLIENTID: kafkaui\r\nAUTH_OAUTH2_CLIENT_KEYCLOAK_CLIENTSECRET: XXXXXXXXXXXXXXXXXXXXXXXXXXx\r\nAUTH_OAUTH2_CLIENT_KEYCLOAK_PROVIDER: keycloak\r\nAUTH_OAUTH2_CLIENT_KEYCLOAK_CUSTOM_PARAMS_TYPE: keycloak\r\nAUTH_OAUTH2_CLIENT_KEYCLOAK_CUSTOM_PARAMS_LOGOUTURL: XXXXXXXXXXXXXXXXXXXX\r\nAUTH_OAUTH2_CLIENT_KEYCLOAK_ISSUER_URI: XXXXXXXXXXXXXXXXXXXXX\r\nAUTH_OAUTH2_CLIENT_KEYCLOAK_SCOPE: openid\r\n\r\nBut maybe those changes not reflected on Environment variables?",

"@Nawarix that's a nemesis of env vars as configs :/\r\nPlease replace `CUSTOM_PARAMS` with `CUSTOM-PARAMS`, a dash instead of an underscore. \r\nAlso, you don't have to type them in caps here.\r\n\r\nAlso, I've adjusted some code within #3185 to prevent these pesky errors in case you don't need/have/want to specify custom params at all (like, for keycloak).",

"@Haarolean It worked like charm, I thought I have to treated like issuer-uri\r\nThanks for you support"

]

| []

| 2023-01-01T14:15:56Z | [

"type/bug",

"scope/backend",

"status/accepted",

"status/confirmed"

]

| [BE] NullPointerException OAuthProperties$OAuth2Provider.getCustomParams() is null | **Describe the bug**

Trying to authenticate kafka-ui with Keycloak. Before v0.5.0 everything was working fine. When I updated kafka-ui to v0.5.0 and changed the variables as described in the release notes, I got this exception:

```

org.springframework.beans.factory.UnsatisfiedDependencyException: Error creating bean with name 'OAuthLogoutSuccessHandler' defined in URL [jar:file:/kafka-ui-api.jar!/BOOT-INF/classes!/com/provectus/kafka/ui/config/auth/logout/OAuthLogoutSuccessHandler.class]: Unsatisfied dependency expressed through constructor parameter 2; nested exception is org.springframework.beans.factory.UnsatisfiedDependencyException: Error creating bean with name 'defaultOidcLogoutHandler' defined in class path resource [com/provectus/kafka/ui/config/auth/OAuthSecurityConfig.class]: Unsatisfied dependency expressed through method 'defaultOidcLogoutHandler' parameter 0; nested exception is org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'clientRegistrationRepository' defined in class path resource [com/provectus/kafka/ui/config/auth/OAuthSecurityConfig.class]: Bean instantiation via factory method failed; nested exception is org.springframework.beans.BeanInstantiationException: Failed to instantiate [org.springframework.security.oauth2.client.registration.InMemoryReactiveClientRegistrationRepository]: Factory method 'clientRegistrationRepository' threw exception; nested exception is java.lang.NullPointerException: Cannot invoke "java.util.Map.get(Object)" because the return value of "com.provectus.kafka.ui.config.auth.OAuthProperties$OAuth2Provider.getCustomParams()" is null

at org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:800)

at org.springframework.beans.factory.support.ConstructorResolver.autowireConstructor(ConstructorResolver.java:229)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.autowireConstructor(AbstractAutowireCapableBeanFactory.java:1372)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBeanInstance(AbstractAutowireCapableBeanFactory.java:1222)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.doCreateBean(AbstractAutowireCapableBeanFactory.java:582)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBean(AbstractAutowireCapableBeanFactory.java:542)

at org.springframework.beans.factory.support.AbstractBeanFactory.lambda$doGetBean$0(AbstractBeanFactory.java:335)

at org.springframework.beans.factory.support.DefaultSingletonBeanRegistry.getSingleton(DefaultSingletonBeanRegistry.java:234)

at org.springframework.beans.factory.support.AbstractBeanFactory.doGetBean(AbstractBeanFactory.java:333)

at org.springframework.beans.factory.support.AbstractBeanFactory.getBean(AbstractBeanFactory.java:208)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.preInstantiateSingletons(DefaultListableBeanFactory.java:955)

at org.springframework.context.support.AbstractApplicationContext.finishBeanFactoryInitialization(AbstractApplicationContext.java:918)

at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:583)

at org.springframework.boot.web.reactive.context.ReactiveWebServerApplicationContext.refresh(ReactiveWebServerApplicationContext.java:66)

at org.springframework.boot.SpringApplication.refresh(SpringApplication.java:734)

at org.springframework.boot.SpringApplication.refreshContext(SpringApplication.java:408)

at org.springframework.boot.SpringApplication.run(SpringApplication.java:308)

at org.springframework.boot.SpringApplication.run(SpringApplication.java:1306)

at org.springframework.boot.SpringApplication.run(SpringApplication.java:1295)

at com.provectus.kafka.ui.KafkaUiApplication.main(KafkaUiApplication.java:15)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:568)

at org.springframework.boot.loader.MainMethodRunner.run(MainMethodRunner.java:49)

at org.springframework.boot.loader.Launcher.launch(Launcher.java:108)

at org.springframework.boot.loader.Launcher.launch(Launcher.java:58)

at org.springframework.boot.loader.JarLauncher.main(JarLauncher.java:65)

Caused by: org.springframework.beans.factory.UnsatisfiedDependencyException: Error creating bean with name 'defaultOidcLogoutHandler' defined in class path resource [com/provectus/kafka/ui/config/auth/OAuthSecurityConfig.class]: Unsatisfied dependency expressed through method 'defaultOidcLogoutHandler' parameter 0; nested exception is org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'clientRegistrationRepository' defined in class path resource [com/provectus/kafka/ui/config/auth/OAuthSecurityConfig.class]: Bean instantiation via factory method failed; nested exception is org.springframework.beans.BeanInstantiationException: Failed to instantiate [org.springframework.security.oauth2.client.registration.InMemoryReactiveClientRegistrationRepository]: Factory method 'clientRegistrationRepository' threw exception; nested exception is java.lang.NullPointerException: Cannot invoke "java.util.Map.get(Object)" because the return value of "com.provectus.kafka.ui.config.auth.OAuthProperties$OAuth2Provider.getCustomParams()" is null

at org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:800)

at org.springframework.beans.factory.support.ConstructorResolver.instantiateUsingFactoryMethod(ConstructorResolver.java:541)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.instantiateUsingFactoryMethod(AbstractAutowireCapableBeanFactory.java:1352)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBeanInstance(AbstractAutowireCapableBeanFactory.java:1195)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.doCreateBean(AbstractAutowireCapableBeanFactory.java:582)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBean(AbstractAutowireCapableBeanFactory.java:542)

at org.springframework.beans.factory.support.AbstractBeanFactory.lambda$doGetBean$0(AbstractBeanFactory.java:335)

at org.springframework.beans.factory.support.DefaultSingletonBeanRegistry.getSingleton(DefaultSingletonBeanRegistry.java:234)

at org.springframework.beans.factory.support.AbstractBeanFactory.doGetBean(AbstractBeanFactory.java:333)

at org.springframework.beans.factory.support.AbstractBeanFactory.getBean(AbstractBeanFactory.java:208)

at org.springframework.beans.factory.config.DependencyDescriptor.resolveCandidate(DependencyDescriptor.java:276)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.doResolveDependency(DefaultListableBeanFactory.java:1391)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.resolveDependency(DefaultListableBeanFactory.java:1311)

at org.springframework.beans.factory.support.ConstructorResolver.resolveAutowiredArgument(ConstructorResolver.java:887)

at org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:791)

... 27 common frames omitted

Caused by: org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'clientRegistrationRepository' defined in class path resource [com/provectus/kafka/ui/config/auth/OAuthSecurityConfig.class]: Bean instantiation via factory method failed; nested exception is org.springframework.beans.BeanInstantiationException: Failed to instantiate [org.springframework.security.oauth2.client.registration.InMemoryReactiveClientRegistrationRepository]: Factory method 'clientRegistrationRepository' threw exception; nested exception is java.lang.NullPointerException: Cannot invoke "java.util.Map.get(Object)" because the return value of "com.provectus.kafka.ui.config.auth.OAuthProperties$OAuth2Provider.getCustomParams()" is null

at org.springframework.beans.factory.support.ConstructorResolver.instantiate(ConstructorResolver.java:658)

at org.springframework.beans.factory.support.ConstructorResolver.instantiateUsingFactoryMethod(ConstructorResolver.java:486)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.instantiateUsingFactoryMethod(AbstractAutowireCapableBeanFactory.java:1352)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBeanInstance(AbstractAutowireCapableBeanFactory.java:1195)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.doCreateBean(AbstractAutowireCapableBeanFactory.java:582)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBean(AbstractAutowireCapableBeanFactory.java:542)

at org.springframework.beans.factory.support.AbstractBeanFactory.lambda$doGetBean$0(AbstractBeanFactory.java:335)

at org.springframework.beans.factory.support.DefaultSingletonBeanRegistry.getSingleton(DefaultSingletonBeanRegistry.java:234)

at org.springframework.beans.factory.support.AbstractBeanFactory.doGetBean(AbstractBeanFactory.java:333)

at org.springframework.beans.factory.support.AbstractBeanFactory.getBean(AbstractBeanFactory.java:208)

at org.springframework.beans.factory.config.DependencyDescriptor.resolveCandidate(DependencyDescriptor.java:276)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.doResolveDependency(DefaultListableBeanFactory.java:1391)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.resolveDependency(DefaultListableBeanFactory.java:1311)

at org.springframework.beans.factory.support.ConstructorResolver.resolveAutowiredArgument(ConstructorResolver.java:887)

at org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:791)

... 41 common frames omitted

Caused by: org.springframework.beans.BeanInstantiationException: Failed to instantiate [org.springframework.security.oauth2.client.registration.InMemoryReactiveClientRegistrationRepository]: Factory method 'clientRegistrationRepository' threw exception; nested exception is java.lang.NullPointerException: Cannot invoke "java.util.Map.get(Object)" because the return value of "com.provectus.kafka.ui.config.auth.OAuthProperties$OAuth2Provider.getCustomParams()" is null

at org.springframework.beans.factory.support.SimpleInstantiationStrategy.instantiate(SimpleInstantiationStrategy.java:185)

at org.springframework.beans.factory.support.ConstructorResolver.instantiate(ConstructorResolver.java:653)

... 55 common frames omitted

Caused by: java.lang.NullPointerException: Cannot invoke "java.util.Map.get(Object)" because the return value of "com.provectus.kafka.ui.config.auth.OAuthProperties$OAuth2Provider.getCustomParams()" is null

```

**Set up**

1. docker deployment

2. using Env variables to config kafka ui with keycloak

AUTH_OAUTH2_CLIENT_KEYCLOAK_CLIENTID: kafkaui

AUTH_OAUTH2_CLIENT_KEYCLOAK_CLIENTSECRET: XXXXXXXXXXXXXXXXXXXXXXXXXXx

AUTH_OAUTH2_CLIENT_KEYCLOAK_PROVIDER: keycloak

AUTH_OAUTH2_CLIENT_KEYCLOAK_CUSTOM_PARAMS_TYPE: keycloak

AUTH_OAUTH2_CLIENT_KEYCLOAK_CUSTOM_PARAMS_LOGOUTURL: XXXXXXXXXXXXXXXXXXXX

AUTH_OAUTH2_CLIENT_KEYCLOAK_ISSUER_URI: XXXXXXXXXXXXXXXXXXXXX

AUTH_OAUTH2_CLIENT_KEYCLOAK_SCOPE: openid

**Steps to Reproduce**

1. configure the container

2. run the container with previous configs

**Expected behavior**

run normally with oauth
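
The trace above ends in a `NullPointerException` because `getCustomParams()` returns null when no custom parameters are bound. A minimal sketch of one way to guard against that, assuming an empty-map default and a constant-first comparison: `ProviderSketch` and `ProviderTypeCheck` are hypothetical names used only for illustration, not the project's actual classes.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the provider settings; not the project's real class.
class ProviderSketch {
  // Defaulting to an empty map means getCustomParams() never returns null
  // when no custom parameters were configured.
  private Map<String, String> customParams = new HashMap<>();

  Map<String, String> getCustomParams() {
    return customParams;
  }
}

class ProviderTypeCheck {
  static final String TYPE = "type";
  static final String GOOGLE = "google";

  // Calling equalsIgnoreCase on the constant tolerates a missing "type" entry:
  // String.equalsIgnoreCase(null) returns false instead of throwing.
  static boolean isGoogle(ProviderSketch provider) {
    return GOOGLE.equalsIgnoreCase(provider.getCustomParams().get(TYPE));
  }
}
```

With both guards in place, a provider without any custom parameters is simply treated as "not Google" rather than failing at startup.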

| [

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthProperties.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthPropertiesConverter.java"

]

| [

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthProperties.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthPropertiesConverter.java"

]

| []

| diff --git a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthProperties.java b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthProperties.java

index f79d217fa79..db192ae826b 100644

--- a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthProperties.java

+++ b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthProperties.java

@@ -1,6 +1,7 @@

package com.provectus.kafka.ui.config.auth;

import java.util.HashMap;

+import java.util.HashSet;

import java.util.Map;

import java.util.Set;

import javax.annotation.PostConstruct;

@@ -31,13 +32,13 @@ public static class OAuth2Provider {

private String clientName;

private String redirectUri;

private String authorizationGrantType;

- private Set<String> scope;

+ private Set<String> scope = new HashSet<>();

private String issuerUri;

private String authorizationUri;

private String tokenUri;

private String userInfoUri;

private String jwkSetUri;

private String userNameAttribute;

- private Map<String, String> customParams;

+ private Map<String, String> customParams = new HashMap<>();

}

}

diff --git a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthPropertiesConverter.java b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthPropertiesConverter.java

index c3d20664914..8e4a8575a8c 100644

--- a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthPropertiesConverter.java

+++ b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/config/auth/OAuthPropertiesConverter.java

@@ -71,7 +71,7 @@ private static void applyGoogleTransformations(OAuth2Provider provider) {

}

private static boolean isGoogle(OAuth2Provider provider) {

- return provider.getCustomParams().get(TYPE).equalsIgnoreCase(GOOGLE);

+ return GOOGLE.equalsIgnoreCase(provider.getCustomParams().get(TYPE));

}

}

| null | train | val | 2023-01-03T11:52:28 | 2022-12-29T11:45:36Z | Nawarix | train |

provectus/kafka-ui/3176_3188 | provectus/kafka-ui | provectus/kafka-ui/3176 | provectus/kafka-ui/3188 | [

"connected"

]

| 57585891d16714fe4ea662f7b4c7c868ba4b77f7 | 578468d09023c9898c14774f8a827058b4fb68d0 | [

"Thanks, we'll take a look at this",

"@EI-Joao hey, can you try pulling `public.ecr.aws/provectus/kafka-ui-custom-build:3188` image? Is it any way better?",

"Hi @Haarolean \r\nIt is much better now taking 3/4 seconds 😊\r\nIt would be really helpful if we could get the same for this one [https://github.com/provectus/kafka-ui/issues/3148](url)",

"@EI-Joao thanks for confirmation! How many consumer groups do you have btw?",

"The cluster that has more consumer groups is having +-220. It is not a lot but to describe them all every time a page is changed takes some time 😊"

]

| [

"try to generalize"

]

| 2023-01-03T12:25:25Z | [

"type/bug",

"good first issue",

"scope/backend",

"status/accepted"

]

| Consumers: performance loading consumers | <!--

Don't forget to check for existing issues/discussions regarding your proposal. We might already have it.

https://github.com/provectus/kafka-ui/issues

https://github.com/provectus/kafka-ui/discussions

-->

**Describe the bug**

<!--(A clear and concise description of what the bug is.)-->

When loading consumers it takes 30/35 seconds. This time is related to the number of consumers.

E.g. if a cluster has x consumers it will take y time and if a cluster has x1 consumers it will take y1 time.

**Set up**

<!--

How do you run the app? Please provide as much info as possible:

1. App version (docker image version or check commit hash in the top left corner in UI)

2. Helm chart version, if you use one

3. Any IAAC configs

We might close the issue without further explanation if you don't provide such information.

-->

version v0.5.0

**Steps to Reproduce**

<!-- We'd like you to provide an example setup (via docker-compose, helm, etc.)

to reproduce the problem, especially with complex setups. -->

Steps to reproduce the behavior:

1. Add a cluster

2. Go to Cluster -> Consumers

**Expected behavior**

<!--

(A clear and concise description of what you expected to happen)

-->

When loading the consumers for a page, only the consumers on that page should be loaded in the backend.

E.g. if a cluster has 1000 consumers, we should not load all 1000 consumers every time a page is changed.

**Screenshots**

<!--

(If applicable, add screenshots to help explain your problem)

-->

**Additional context**

<!--

(Add any other context about the problem here)

-->

This is applicable to the other pages that have pagination e.g. topics
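
The expected behavior can be sketched roughly as follows, assuming the list is ordered by group name so that only the ids are needed to pick a page. `GroupPaging`, `pageOf`, and `totalPages` are hypothetical names for illustration, not existing kafka-ui code; ordering by other fields (for example state or member count) would need extra data before paging.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

class GroupPaging {
  // Returns the group ids on the requested 1-based page, sorted by name.
  // Only these ids would then be passed to the expensive "describe" call.
  static List<String> pageOf(List<String> allGroupIds, int pageNum, int perPage) {
    return allGroupIds.stream()
        .sorted(Comparator.naturalOrder())
        .skip((long) (pageNum - 1) * perPage)
        .limit(perPage)
        .collect(Collectors.toList());
  }

  // Number of pages needed to show totalGroups items, rounding up.
  static int totalPages(int totalGroups, int perPage) {
    return (totalGroups + perPage - 1) / perPage;
  }
}
```

Slicing the cheap id listing first keeps the per-request cost proportional to the page size instead of the total number of consumer groups.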

| [

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/controller/ConsumerGroupsController.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/mapper/ConsumerGroupMapper.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ConsumerGroupService.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/OffsetsResetService.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ReactiveAdminClient.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/util/MapUtil.java"

]

| [

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/controller/ConsumerGroupsController.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/mapper/ConsumerGroupMapper.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ConsumerGroupService.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/OffsetsResetService.java",

"kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ReactiveAdminClient.java"

]

| [

"kafka-ui-api/src/test/java/com/provectus/kafka/ui/service/ReactiveAdminClientTest.java"

]

| diff --git a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/controller/ConsumerGroupsController.java b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/controller/ConsumerGroupsController.java

index afea878fa8a..fd7505d99bd 100644

--- a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/controller/ConsumerGroupsController.java

+++ b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/controller/ConsumerGroupsController.java

@@ -189,8 +189,8 @@ public Mono<ResponseEntity<Void>> resetConsumerGroupOffsets(String clusterName,

private ConsumerGroupsPageResponseDTO convertPage(ConsumerGroupService.ConsumerGroupsPage

consumerGroupConsumerGroupsPage) {

return new ConsumerGroupsPageResponseDTO()

- .pageCount(consumerGroupConsumerGroupsPage.getTotalPages())

- .consumerGroups(consumerGroupConsumerGroupsPage.getConsumerGroups()

+ .pageCount(consumerGroupConsumerGroupsPage.totalPages())

+ .consumerGroups(consumerGroupConsumerGroupsPage.consumerGroups()

.stream()

.map(ConsumerGroupMapper::toDto)

.collect(Collectors.toList()));

diff --git a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/mapper/ConsumerGroupMapper.java b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/mapper/ConsumerGroupMapper.java

index 75c0a99039b..9f7e32ed148 100644

--- a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/mapper/ConsumerGroupMapper.java

+++ b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/mapper/ConsumerGroupMapper.java

@@ -89,13 +89,17 @@ private static <T extends ConsumerGroupDTO> T convertToConsumerGroup(

.flatMap(m -> m.getAssignment().stream().map(TopicPartition::topic))

).collect(Collectors.toSet()).size();

- long messagesBehind = c.getOffsets().entrySet().stream()

- .mapToLong(e ->

- Optional.ofNullable(c.getEndOffsets())

- .map(o -> o.get(e.getKey()))

- .map(o -> o - e.getValue())

- .orElse(0L)

- ).sum();

+ Long messagesBehind = null;

+ // messagesBehind should be undefined if no committed offsets found for topic

+ if (!c.getOffsets().isEmpty()) {

+ messagesBehind = c.getOffsets().entrySet().stream()

+ .mapToLong(e ->

+ Optional.ofNullable(c.getEndOffsets())

+ .map(o -> o.get(e.getKey()))

+ .map(o -> o - e.getValue())

+ .orElse(0L)

+ ).sum();

+ }

consumerGroup.setMessagesBehind(messagesBehind);

consumerGroup.setTopics(numTopics);

diff --git a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ConsumerGroupService.java b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ConsumerGroupService.java

index e2a9592f7e9..1a74914ff43 100644

--- a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ConsumerGroupService.java

+++ b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ConsumerGroupService.java

@@ -1,5 +1,6 @@

package com.provectus.kafka.ui.service;

+import com.google.common.collect.Table;

import com.provectus.kafka.ui.model.ConsumerGroupOrderingDTO;

import com.provectus.kafka.ui.model.InternalConsumerGroup;

import com.provectus.kafka.ui.model.InternalTopicConsumerGroup;

@@ -7,6 +8,7 @@

import com.provectus.kafka.ui.model.SortOrderDTO;

import com.provectus.kafka.ui.service.rbac.AccessControlService;

import java.util.ArrayList;

+import java.util.Collection;

import java.util.Comparator;

import java.util.HashMap;

import java.util.List;

@@ -14,22 +16,21 @@

import java.util.Properties;

import java.util.function.ToIntFunction;

import java.util.stream.Collectors;

+import java.util.stream.Stream;

import javax.annotation.Nullable;

import lombok.RequiredArgsConstructor;

-import lombok.Value;

import org.apache.commons.lang3.StringUtils;

import org.apache.kafka.clients.admin.ConsumerGroupDescription;

+import org.apache.kafka.clients.admin.ConsumerGroupListing;

import org.apache.kafka.clients.admin.OffsetSpec;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.KafkaConsumer;

+import org.apache.kafka.common.ConsumerGroupState;

import org.apache.kafka.common.TopicPartition;

import org.apache.kafka.common.serialization.BytesDeserializer;

import org.apache.kafka.common.utils.Bytes;

import org.springframework.stereotype.Service;

-import reactor.core.publisher.Flux;

import reactor.core.publisher.Mono;

-import reactor.util.function.Tuple2;

-import reactor.util.function.Tuples;

@Service

@RequiredArgsConstructor

@@ -41,21 +42,16 @@ public class ConsumerGroupService {

private Mono<List<InternalConsumerGroup>> getConsumerGroups(

ReactiveAdminClient ac,

List<ConsumerGroupDescription> descriptions) {

- return Flux.fromIterable(descriptions)

- // 1. getting committed offsets for all groups

- .flatMap(desc -> ac.listConsumerGroupOffsets(desc.groupId())

- .map(offsets -> Tuples.of(desc, offsets)))

- .collectMap(Tuple2::getT1, Tuple2::getT2)

- .flatMap((Map<ConsumerGroupDescription, Map<TopicPartition, Long>> groupOffsetsMap) -> {

- var tpsFromGroupOffsets = groupOffsetsMap.values().stream()

- .flatMap(v -> v.keySet().stream())

- .collect(Collectors.toSet());

+ var groupNames = descriptions.stream().map(ConsumerGroupDescription::groupId).toList();

+ // 1. getting committed offsets for all groups

+ return ac.listConsumerGroupOffsets(groupNames, null)

+ .flatMap((Table<String, TopicPartition, Long> committedOffsets) -> {

// 2. getting end offsets for partitions with committed offsets

- return ac.listOffsets(tpsFromGroupOffsets, OffsetSpec.latest(), false)

+ return ac.listOffsets(committedOffsets.columnKeySet(), OffsetSpec.latest(), false)

.map(endOffsets ->

descriptions.stream()

.map(desc -> {

- var groupOffsets = groupOffsetsMap.get(desc);

+ var groupOffsets = committedOffsets.row(desc.groupId());

var endOffsetsForGroup = new HashMap<>(endOffsets);

endOffsetsForGroup.keySet().retainAll(groupOffsets.keySet());

// 3. gathering description & offsets

@@ -73,105 +69,122 @@ public Mono<List<InternalTopicConsumerGroup>> getConsumerGroupsForTopic(KafkaClu

.flatMap(endOffsets -> {

var tps = new ArrayList<>(endOffsets.keySet());

// 2. getting all consumer groups

- return describeConsumerGroups(ac, null)

- .flatMap((List<ConsumerGroupDescription> groups) ->

- Flux.fromIterable(groups)

- // 3. for each group trying to find committed offsets for topic

- .flatMap(g ->

- ac.listConsumerGroupOffsets(g.groupId(), tps)

- // 4. keeping only groups that relates to topic

- .filter(offsets -> isConsumerGroupRelatesToTopic(topic, g, offsets))

- // 5. constructing results

- .map(offsets -> InternalTopicConsumerGroup.create(topic, g, offsets, endOffsets))

- ).collectList());

+ return describeConsumerGroups(ac)

+ .flatMap((List<ConsumerGroupDescription> groups) -> {

+ // 3. trying to find committed offsets for topic

+ var groupNames = groups.stream().map(ConsumerGroupDescription::groupId).toList();

+ return ac.listConsumerGroupOffsets(groupNames, tps).map(offsets ->

+ groups.stream()

+ // 4. keeping only groups that relates to topic

+ .filter(g -> isConsumerGroupRelatesToTopic(topic, g, offsets.containsRow(g.groupId())))

+ .map(g ->

+ // 5. constructing results

+ InternalTopicConsumerGroup.create(topic, g, offsets.row(g.groupId()), endOffsets))

+ .toList()

+ );

+ }

+ );

}));

}

private boolean isConsumerGroupRelatesToTopic(String topic,

ConsumerGroupDescription description,

- Map<TopicPartition, Long> committedGroupOffsetsForTopic) {

+ boolean hasCommittedOffsets) {

boolean hasActiveMembersForTopic = description.members()

.stream()

.anyMatch(m -> m.assignment().topicPartitions().stream().anyMatch(tp -> tp.topic().equals(topic)));

- boolean hasCommittedOffsets = !committedGroupOffsetsForTopic.isEmpty();

return hasActiveMembersForTopic || hasCommittedOffsets;

}

- @Value

- public static class ConsumerGroupsPage {

- List<InternalConsumerGroup> consumerGroups;

- int totalPages;

+ public record ConsumerGroupsPage(List<InternalConsumerGroup> consumerGroups, int totalPages) {

}

public Mono<ConsumerGroupsPage> getConsumerGroupsPage(

KafkaCluster cluster,

- int page,

+ int pageNum,

int perPage,

@Nullable String search,

ConsumerGroupOrderingDTO orderBy,

SortOrderDTO sortOrderDto) {

- var comparator = sortOrderDto.equals(SortOrderDTO.ASC)

- ? getPaginationComparator(orderBy)

- : getPaginationComparator(orderBy).reversed();

return adminClientService.get(cluster).flatMap(ac ->

- describeConsumerGroups(ac, search).flatMap(descriptions ->

- getConsumerGroups(

- ac,

- descriptions.stream()

- .sorted(comparator)

- .skip((long) (page - 1) * perPage)

- .limit(perPage)

- .collect(Collectors.toList())

+ ac.listConsumerGroups()

+ .map(listing -> search == null

+ ? listing

+ : listing.stream()

+ .filter(g -> StringUtils.containsIgnoreCase(g.groupId(), search))

+ .toList()

)

- .flatMapMany(Flux::fromIterable)

- .filterWhen(

- cg -> accessControlService.isConsumerGroupAccessible(cg.getGroupId(), cluster.getName()))

- .collect(Collectors.toList())

- .map(cgs -> new ConsumerGroupsPage(

- cgs,

- (descriptions.size() / perPage) + (descriptions.size() % perPage == 0 ? 0 : 1))))

- );

+ .flatMapIterable(lst -> lst)

+ .filterWhen(cg -> accessControlService.isConsumerGroupAccessible(cg.groupId(), cluster.getName()))

+ .collectList()

+ .flatMap(allGroups ->

+ loadSortedDescriptions(ac, allGroups, pageNum, perPage, orderBy, sortOrderDto)

+ .flatMap(descriptions -> getConsumerGroups(ac, descriptions)

+ .map(page -> new ConsumerGroupsPage(

+ page,

+ (allGroups.size() / perPage) + (allGroups.size() % perPage == 0 ? 0 : 1))))));

}

- private Comparator<ConsumerGroupDescription> getPaginationComparator(ConsumerGroupOrderingDTO

- orderBy) {

- switch (orderBy) {

- case NAME:

- return Comparator.comparing(ConsumerGroupDescription::groupId);

- case STATE:

- ToIntFunction<ConsumerGroupDescription> statesPriorities = cg -> {

- switch (cg.state()) {

- case STABLE:

- return 0;

- case COMPLETING_REBALANCE:

- return 1;

- case PREPARING_REBALANCE:

- return 2;

- case EMPTY:

- return 3;

- case DEAD:

- return 4;

- case UNKNOWN:

- return 5;

- default:

- return 100;

- }

- };

- return Comparator.comparingInt(statesPriorities);

- case MEMBERS:

- return Comparator.comparingInt(cg -> cg.members().size());

- default:

- throw new IllegalStateException("Unsupported order by: " + orderBy);

- }

+ private Mono<List<ConsumerGroupDescription>> loadSortedDescriptions(ReactiveAdminClient ac,

+ List<ConsumerGroupListing> groups,

+ int pageNum,

+ int perPage,

+ ConsumerGroupOrderingDTO orderBy,

+ SortOrderDTO sortOrderDto) {

+ return switch (orderBy) {

+ case NAME -> {

+ Comparator<ConsumerGroupListing> comparator = Comparator.comparing(ConsumerGroupListing::groupId);

+ yield loadDescriptionsByListings(ac, groups, comparator, pageNum, perPage, sortOrderDto);

+ }

+ case STATE -> {

+ ToIntFunction<ConsumerGroupListing> statesPriorities =

+ cg -> switch (cg.state().orElse(ConsumerGroupState.UNKNOWN)) {

+ case STABLE -> 0;

+ case COMPLETING_REBALANCE -> 1;

+ case PREPARING_REBALANCE -> 2;

+ case EMPTY -> 3;

+ case DEAD -> 4;

+ case UNKNOWN -> 5;

+ };

+ var comparator = Comparator.comparingInt(statesPriorities);

+ yield loadDescriptionsByListings(ac, groups, comparator, pageNum, perPage, sortOrderDto);

+ }

+ case MEMBERS -> {

+ var comparator = Comparator.<ConsumerGroupDescription>comparingInt(cg -> cg.members().size());

+ var groupNames = groups.stream().map(ConsumerGroupListing::groupId).toList();

+ yield ac.describeConsumerGroups(groupNames)

+ .map(descriptions ->

+ sortAndPaginate(descriptions.values(), comparator, pageNum, perPage, sortOrderDto).toList());

+ }

+ };

}

- private Mono<List<ConsumerGroupDescription>> describeConsumerGroups(ReactiveAdminClient ac,

- @Nullable String search) {

- return ac.listConsumerGroups()

- .map(groupIds -> groupIds

- .stream()

- .filter(groupId -> search == null || StringUtils.containsIgnoreCase(groupId, search))

- .collect(Collectors.toList()))

+ private Mono<List<ConsumerGroupDescription>> loadDescriptionsByListings(ReactiveAdminClient ac,

+ List<ConsumerGroupListing> listings,

+ Comparator<ConsumerGroupListing> comparator,

+ int pageNum,

+ int perPage,

+ SortOrderDTO sortOrderDto) {

+ List<String> sortedGroups = sortAndPaginate(listings, comparator, pageNum, perPage, sortOrderDto)

+ .map(ConsumerGroupListing::groupId)

+ .toList();

+ return ac.describeConsumerGroups(sortedGroups)

+ .map(descrMap -> sortedGroups.stream().map(descrMap::get).toList());

+ }

+

+ private <T> Stream<T> sortAndPaginate(Collection<T> collection,

+ Comparator<T> comparator,

+ int pageNum,

+ int perPage,

+ SortOrderDTO sortOrderDto) {

+ return collection.stream()

+ .sorted(sortOrderDto == SortOrderDTO.ASC ? comparator : comparator.reversed())

+ .skip((long) (pageNum - 1) * perPage)

+ .limit(perPage);

+ }

+

+ private Mono<List<ConsumerGroupDescription>> describeConsumerGroups(ReactiveAdminClient ac) {

+ return ac.listConsumerGroupNames()

.flatMap(ac::describeConsumerGroups)

.map(cgs -> new ArrayList<>(cgs.values()));

}

diff --git a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/OffsetsResetService.java b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/OffsetsResetService.java

index 36b812473e1..67fc268d428 100644

--- a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/OffsetsResetService.java

+++ b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/OffsetsResetService.java

@@ -98,7 +98,7 @@ private Mono<ReactiveAdminClient> checkGroupCondition(KafkaCluster cluster, Stri

.flatMap(ac ->

// we need to call listConsumerGroups() to check group existence, because

// describeConsumerGroups() will return consumer group even if it doesn't exist

- ac.listConsumerGroups()

+ ac.listConsumerGroupNames()

.filter(cgs -> cgs.stream().anyMatch(g -> g.equals(groupId)))

.flatMap(cgs -> ac.describeConsumerGroups(List.of(groupId)))

.filter(cgs -> cgs.containsKey(groupId))

diff --git a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ReactiveAdminClient.java b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ReactiveAdminClient.java

index 1ffbd429180..b24180fa48d 100644

--- a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ReactiveAdminClient.java

+++ b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/service/ReactiveAdminClient.java

@@ -4,12 +4,12 @@

import static java.util.stream.Collectors.toMap;

import static org.apache.kafka.clients.admin.ListOffsetsResult.ListOffsetsResultInfo;

-import com.google.common.collect.ImmutableMap;

-import com.google.common.collect.Iterators;

+import com.google.common.collect.ImmutableTable;

+import com.google.common.collect.Iterables;

+import com.google.common.collect.Table;

import com.provectus.kafka.ui.exception.IllegalEntityStateException;

import com.provectus.kafka.ui.exception.NotFoundException;

import com.provectus.kafka.ui.exception.ValidationException;

-import com.provectus.kafka.ui.util.MapUtil;

import com.provectus.kafka.ui.util.NumberUtil;

import com.provectus.kafka.ui.util.annotation.KafkaClientInternalsDependant;

import java.io.Closeable;

@@ -18,7 +18,6 @@

import java.util.Collection;

import java.util.HashMap;

import java.util.HashSet;

-import java.util.Iterator;

import java.util.List;

import java.util.Map;

import java.util.Optional;

@@ -45,7 +44,7 @@

import org.apache.kafka.clients.admin.DescribeClusterOptions;

import org.apache.kafka.clients.admin.DescribeClusterResult;

import org.apache.kafka.clients.admin.DescribeConfigsOptions;

-import org.apache.kafka.clients.admin.ListConsumerGroupOffsetsOptions;

+import org.apache.kafka.clients.admin.ListConsumerGroupOffsetsSpec;

import org.apache.kafka.clients.admin.ListOffsetsResult;

import org.apache.kafka.clients.admin.ListTopicsOptions;

import org.apache.kafka.clients.admin.NewPartitionReassignment;

@@ -54,7 +53,6 @@

import org.apache.kafka.clients.admin.OffsetSpec;

import org.apache.kafka.clients.admin.RecordsToDelete;

import org.apache.kafka.clients.admin.TopicDescription;

-import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.OffsetAndMetadata;

import org.apache.kafka.common.KafkaException;

import org.apache.kafka.common.KafkaFuture;

@@ -69,6 +67,7 @@

import org.apache.kafka.common.errors.InvalidRequestException;

import org.apache.kafka.common.errors.UnknownTopicOrPartitionException;

import org.apache.kafka.common.requests.DescribeLogDirsResponse;

+import reactor.core.publisher.Flux;

import reactor.core.publisher.Mono;

import reactor.core.scheduler.Schedulers;

import reactor.util.function.Tuple2;

@@ -183,7 +182,7 @@ public Mono<Map<String, List<ConfigEntry>>> getTopicsConfig(Collection<String> t

topicNames,

200,

part -> getTopicsConfigImpl(part, includeDocFixed),

- (m1, m2) -> ImmutableMap.<String, List<ConfigEntry>>builder().putAll(m1).putAll(m2).build()

+ mapMerger()

);

}

@@ -236,7 +235,7 @@ public Mono<Map<String, TopicDescription>> describeTopics(Collection<String> top

topics,

200,

this::describeTopicsImpl,

- (m1, m2) -> ImmutableMap.<String, TopicDescription>builder().putAll(m1).putAll(m2).build()

+ mapMerger()

);

}

@@ -383,32 +382,57 @@ public Mono<Void> updateTopicConfig(String topicName, Map<String, String> config

}

}

- public Mono<List<String>> listConsumerGroups() {

- return toMono(client.listConsumerGroups().all())

- .map(lst -> lst.stream().map(ConsumerGroupListing::groupId).collect(toList()));

+ public Mono<List<String>> listConsumerGroupNames() {

+ return listConsumerGroups().map(lst -> lst.stream().map(ConsumerGroupListing::groupId).toList());

}

- public Mono<Map<String, ConsumerGroupDescription>> describeConsumerGroups(Collection<String> groupIds) {

- return toMono(client.describeConsumerGroups(groupIds).all());

+ public Mono<Collection<ConsumerGroupListing>> listConsumerGroups() {

+ return toMono(client.listConsumerGroups().all());

}

- public Mono<Map<TopicPartition, Long>> listConsumerGroupOffsets(String groupId) {

- return listConsumerGroupOffsets(groupId, new ListConsumerGroupOffsetsOptions());

+ public Mono<Map<String, ConsumerGroupDescription>> describeConsumerGroups(Collection<String> groupIds) {

+ return partitionCalls(

+ groupIds,

+ 25,

+ 4,

+ ids -> toMono(client.describeConsumerGroups(ids).all()),

+ mapMerger()

+ );

}

- public Mono<Map<TopicPartition, Long>> listConsumerGroupOffsets(

- String groupId, List<TopicPartition> partitions) {

- return listConsumerGroupOffsets(groupId,

- new ListConsumerGroupOffsetsOptions().topicPartitions(partitions));

- }

+ // group -> partition -> offset

+ // NOTE: partitions with no committed offsets will be skipped

+ public Mono<Table<String, TopicPartition, Long>> listConsumerGroupOffsets(List<String> consumerGroups,

+ // all partitions if null passed

+ @Nullable List<TopicPartition> partitions) {

+ Function<Collection<String>, Mono<Map<String, Map<TopicPartition, OffsetAndMetadata>>>> call =

+ groups -> toMono(

+ client.listConsumerGroupOffsets(

+ groups.stream()

+ .collect(Collectors.toMap(

+ g -> g,

+ g -> new ListConsumerGroupOffsetsSpec().topicPartitions(partitions)

+ ))).all()

+ );

+

+ Mono<Map<String, Map<TopicPartition, OffsetAndMetadata>>> merged = partitionCalls(

+ consumerGroups,

+ 25,

+ 4,

+ call,

+ mapMerger()

+ );

- private Mono<Map<TopicPartition, Long>> listConsumerGroupOffsets(

- String groupId, ListConsumerGroupOffsetsOptions options) {

- return toMono(client.listConsumerGroupOffsets(groupId, options).partitionsToOffsetAndMetadata())

- .map(MapUtil::removeNullValues)

- .map(m -> m.entrySet().stream()

- .map(e -> Tuples.of(e.getKey(), e.getValue().offset()))

- .collect(Collectors.toMap(Tuple2::getT1, Tuple2::getT2)));

+ return merged.map(map -> {

+ var table = ImmutableTable.<String, TopicPartition, Long>builder();

+ map.forEach((g, tpOffsets) -> tpOffsets.forEach((tp, offset) -> {

+ if (offset != null) {

+ // offset will be null for partitions that don't have committed offset for this group

+ table.put(g, tp, offset.offset());

+ }

+ }));

+ return table.build();

+ });

}

public Mono<Void> alterConsumerGroupOffsets(String groupId, Map<TopicPartition, Long> offsets) {

@@ -501,7 +525,7 @@ public Mono<Map<TopicPartition, Long>> listOffsetsUnsafe(Collection<TopicPartiti

partitions,

200,

call,

- (m1, m2) -> ImmutableMap.<TopicPartition, Long>builder().putAll(m1).putAll(m2).build()

+ mapMerger()

);

}

@@ -551,7 +575,7 @@ private Mono<Void> alterConfig(String topicName, Map<String, String> configs) {

}

/**

- * Splits input collection into batches, applies each batch sequentially to function

+ * Splits input collection into batches, converts each batch into Mono, sequentially subscribes to them

* and merges output Monos into one Mono.

*/

private static <R, I> Mono<R> partitionCalls(Collection<I> items,

@@ -561,14 +585,37 @@ private static <R, I> Mono<R> partitionCalls(Collection<I> items,

if (items.isEmpty()) {

return call.apply(items);

}

- Iterator<List<I>> parts = Iterators.partition(items.iterator(), partitionSize);

- Mono<R> mono = call.apply(parts.next());

- while (parts.hasNext()) {

- var nextPart = parts.next();

- // calls will be executed sequentially

- mono = mono.flatMap(res1 -> call.apply(nextPart).map(res2 -> merger.apply(res1, res2)));

+ Iterable<List<I>> parts = Iterables.partition(items, partitionSize);

+ return Flux.fromIterable(parts)

+ .concatMap(call)

+ .reduce(merger);

+ }

+

+ /**

+ * Splits input collection into batches, converts each batch into Mono, subscribes to them (concurrently,

+ * with specified concurrency level) and merges output Monos into one Mono.

+ */

+ private static <R, I> Mono<R> partitionCalls(Collection<I> items,

+ int partitionSize,

+ int concurrency,

+ Function<Collection<I>, Mono<R>> call,

+ BiFunction<R, R, R> merger) {

+ if (items.isEmpty()) {

+ return call.apply(items);

}

- return mono;

+ Iterable<List<I>> parts = Iterables.partition(items, partitionSize);

+ return Flux.fromIterable(parts)

+ .flatMap(call, concurrency)

+ .reduce(merger);

+ }

+

+ private static <K, V> BiFunction<Map<K, V>, Map<K, V>, Map<K, V>> mapMerger() {

+ return (m1, m2) -> {

+ var merged = new HashMap<K, V>();

+ merged.putAll(m1);

+ merged.putAll(m2);

+ return merged;

+ };

}

@Override

diff --git a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/util/MapUtil.java b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/util/MapUtil.java

index d1a5c035ee6..e69de29bb2d 100644

--- a/kafka-ui-api/src/main/java/com/provectus/kafka/ui/util/MapUtil.java

+++ b/kafka-ui-api/src/main/java/com/provectus/kafka/ui/util/MapUtil.java

@@ -1,21 +0,0 @@

-package com.provectus.kafka.ui.util;

-

-import java.util.Map;

-import java.util.stream.Collectors;

-

-public class MapUtil {

-

- private MapUtil() {

- }

-

- public static <K, V> Map<K, V> removeNullValues(Map<K, V> map) {

- return map.entrySet().stream()

- .filter(e -> e.getValue() != null)

- .collect(

- Collectors.toMap(

- Map.Entry::getKey,

- Map.Entry::getValue

- )

- );

- }

-}

| diff --git a/kafka-ui-api/src/test/java/com/provectus/kafka/ui/service/ReactiveAdminClientTest.java b/kafka-ui-api/src/test/java/com/provectus/kafka/ui/service/ReactiveAdminClientTest.java

index 99cfedad4cf..2e302009ac1 100644

--- a/kafka-ui-api/src/test/java/com/provectus/kafka/ui/service/ReactiveAdminClientTest.java

+++ b/kafka-ui-api/src/test/java/com/provectus/kafka/ui/service/ReactiveAdminClientTest.java

@@ -2,14 +2,18 @@

import static com.provectus.kafka.ui.service.ReactiveAdminClient.toMonoWithExceptionFilter;

import static java.util.Objects.requireNonNull;

+import static org.apache.kafka.clients.admin.ListOffsetsResult.ListOffsetsResultInfo;

import static org.assertj.core.api.Assertions.assertThat;

import com.provectus.kafka.ui.AbstractIntegrationTest;

import com.provectus.kafka.ui.producer.KafkaTestProducer;

+import java.time.Duration;

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

+import java.util.Properties;

import java.util.UUID;

+import java.util.function.Function;

import java.util.stream.Stream;

import lombok.SneakyThrows;

import org.apache.kafka.clients.admin.AdminClient;

@@ -18,12 +22,16 @@

import org.apache.kafka.clients.admin.ConfigEntry;

import org.apache.kafka.clients.admin.NewTopic;

import org.apache.kafka.clients.admin.OffsetSpec;

+import org.apache.kafka.clients.consumer.ConsumerConfig;

+import org.apache.kafka.clients.consumer.KafkaConsumer;

+import org.apache.kafka.clients.consumer.OffsetAndMetadata;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.common.KafkaFuture;

import org.apache.kafka.common.TopicPartition;

import org.apache.kafka.common.config.ConfigResource;

import org.apache.kafka.common.errors.UnknownTopicOrPartitionException;

import org.apache.kafka.common.internals.KafkaFutureImpl;

+import org.apache.kafka.common.serialization.StringDeserializer;

import org.junit.function.ThrowingRunnable;

import org.junit.jupiter.api.AfterEach;

import org.junit.jupiter.api.BeforeEach;

@@ -96,6 +104,14 @@ void createTopics(NewTopic... topics) {

clearings.add(() -> adminClient.deleteTopics(Stream.of(topics).map(NewTopic::name).toList()).all().get());

}

+ void fillTopic(String topic, int msgsCnt) {

+ try (var producer = KafkaTestProducer.forKafka(kafka)) {

+ for (int i = 0; i < msgsCnt; i++) {

+ producer.send(topic, UUID.randomUUID().toString());

+ }

+ }

+ }

+

@Test

void testToMonoWithExceptionFilter() {

var failedFuture = new KafkaFutureImpl<String>();

@@ -152,4 +168,79 @@ void testListOffsetsUnsafe() {

.verifyComplete();

}

+

+ @Test

+ void testListConsumerGroupOffsets() throws Exception {

+ String topic = UUID.randomUUID().toString();

+ String anotherTopic = UUID.randomUUID().toString();

+ createTopics(new NewTopic(topic, 2, (short) 1), new NewTopic(anotherTopic, 1, (short) 1));

+ fillTopic(topic, 10);

+

+ Function<String, KafkaConsumer<String, String>> consumerSupplier = groupName -> {

+ Properties p = new Properties();

+ p.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, kafka.getBootstrapServers());

+ p.setProperty(ConsumerConfig.GROUP_ID_CONFIG, groupName);

+ p.setProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");

+ p.setProperty(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

+ p.setProperty(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

+ p.setProperty(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");

+ return new KafkaConsumer<String, String>(p);

+ };

+

+ String fullyPolledConsumer = UUID.randomUUID().toString();

+ try (KafkaConsumer<String, String> c = consumerSupplier.apply(fullyPolledConsumer)) {

+ c.subscribe(List.of(topic));

+ int polled = 0;

+ while (polled < 10) {

+ polled += c.poll(Duration.ofMillis(50)).count();

+ }

+ c.commitSync();

+ }

+

+ String polled1MsgConsumer = UUID.randomUUID().toString();

+ try (KafkaConsumer<String, String> c = consumerSupplier.apply(polled1MsgConsumer)) {

+ c.subscribe(List.of(topic));

+ c.poll(Duration.ofMillis(100));

+ c.commitSync(Map.of(tp(topic, 0), new OffsetAndMetadata(1)));

+ }

+

+ String noCommitConsumer = UUID.randomUUID().toString();

+ try (KafkaConsumer<String, String> c = consumerSupplier.apply(noCommitConsumer)) {

+ c.subscribe(List.of(topic));

+ c.poll(Duration.ofMillis(100));

+ }

+

+ Map<TopicPartition, ListOffsetsResultInfo> endOffsets = adminClient.listOffsets(Map.of(

+ tp(topic, 0), OffsetSpec.latest(),

+ tp(topic, 1), OffsetSpec.latest())).all().get();

+

+ StepVerifier.create(

+ reactiveAdminClient.listConsumerGroupOffsets(

+ List.of(fullyPolledConsumer, polled1MsgConsumer, noCommitConsumer),

+ List.of(

+ tp(topic, 0),

+ tp(topic, 1),

+ tp(anotherTopic, 0))

+ )

+ ).assertNext(table -> {

+

+ assertThat(table.row(polled1MsgConsumer))

+ .containsEntry(tp(topic, 0), 1L)

+ .hasSize(1);

+

+ assertThat(table.row(noCommitConsumer))

+ .isEmpty();

+

+ assertThat(table.row(fullyPolledConsumer))

+ .containsEntry(tp(topic, 0), endOffsets.get(tp(topic, 0)).offset())

+ .containsEntry(tp(topic, 1), endOffsets.get(tp(topic, 1)).offset())

+ .hasSize(2);

+ })

+ .verifyComplete();

+ }

+

+ private static TopicPartition tp(String topic, int partition) {

+ return new TopicPartition(topic, partition);

+ }

+

}

| test | val | 2023-01-12T16:43:37 | 2022-12-29T10:11:54Z | joaofrsilva | train |

provectus/kafka-ui/3191_3192 | provectus/kafka-ui | provectus/kafka-ui/3191 | provectus/kafka-ui/3192 | [

"connected"

]

| 53cdb684783817e7efab4fb75e1133cb25e857fb | e1708550d5796da07ef3bc006f24bd82580da3c9 | []

| []

| 2023-01-04T15:02:34Z | [

"scope/frontend",

"status/accepted",

"type/chore"

]

| [FE] Build fails after #2372 | <!--

Don't forget to check for existing issues/discussions regarding your proposal. We might already have it.

https://github.com/provectus/kafka-ui/issues

https://github.com/provectus/kafka-ui/discussions

-->

<!--

Please follow the naming conventions for bugs:

<Feature/Area/Scope> : <Compact, but specific problem summary>

Avoid generic titles, like “Topics: incorrect layout of message sorting drop-down list”. Better use something like: “Topics: Message sorting drop-down list overlaps the "Submit" button”.

-->

**Describe the bug** (Actual behavior)

<!--(A clear and concise description of what the bug is. Use a list if there is more than one problem.)-->

The frontend dev environment does not work after the Vite plugin implementation.

**Expected behavior**

<!--(A clear and concise description of what you expected to happen.)-->

It should work as before.

**Set up**

<!--

WE MIGHT CLOSE THE ISSUE without further explanation IF YOU DON'T PROVIDE THIS INFORMATION.

How do you run the app? Please provide as much info as possible:

1. App version (docker image version or check commit hash in the top left corner in UI)

2. Helm chart version, if you use one

3. Any IAAC configs

-->

A normal frontend setup, without any Java build to replace the `PUBLIC-PATH-VARIABLE` placeholder.

**Steps to Reproduce**

<!-- We'd like you to provide an example setup (via docker-compose, helm, etc.)

to reproduce the problem, especially with complex setups. -->

1. Go to the frontend application and run `pnpm start`

**Screenshots**

<!--

(If applicable, add screenshots to help explain your problem)

-->

**Additional context**

<!--

Add any other context about the problem here. E.g.:

1. Are there any alternative scenarios (different data/methods/configuration/setup) you have tried?

Were they successful, or did the same issue occur? Please provide steps as well.

2. Related issues (if there are any).

3. Logs (if available)

4. Is there any serious impact or behaviour on the end-user because of this issue, that can be overlooked?

-->
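For illustration only, the failure mode described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the project's code: `resolvePublicPath` and `renderIndexHtml` are hypothetical helpers, and `<%= PUBLIC_PATH %>` is an assumed template token. It shows that asset URLs stay usable in development only if the placeholder resolves to an empty string there, while other modes keep the literal `PUBLIC-PATH-VARIABLE` for a later build step to replace.

```typescript
// Hypothetical helper: picks the value substituted into index.html.
// Assumption: outside development, a later (Java) build step replaces the
// literal placeholder, so it is kept as-is; in development it must be empty.
function resolvePublicPath(mode: string): string {
  return mode !== 'development' ? 'PUBLIC-PATH-VARIABLE' : '';
}

// Hypothetical helper: replaces every occurrence of an assumed template
// token with the resolved public path.
function renderIndexHtml(template: string, mode: string): string {
  const token = '<%= PUBLIC_PATH %>';
  return template.split(token).join(resolvePublicPath(mode));
}

const template =
  '<link rel="manifest" href="<%= PUBLIC_PATH %>/manifest.json" />';
const devHtml = renderIndexHtml(template, 'development');
const prodHtml = renderIndexHtml(template, 'production');

console.log(devHtml);
console.log(prodHtml);
```

If the placeholder were left untouched in development, the manifest link would point at `PUBLIC-PATH-VARIABLE/manifest.json`, which a dev server presumably cannot serve.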

| [

"kafka-ui-react-app/index.html",

"kafka-ui-react-app/package.json",

"kafka-ui-react-app/pnpm-lock.yaml",

"kafka-ui-react-app/vite.config.ts"

]

| [

"kafka-ui-react-app/index.html",

"kafka-ui-react-app/package.json",

"kafka-ui-react-app/pnpm-lock.yaml",

"kafka-ui-react-app/vite.config.ts"

]

| []

| diff --git a/kafka-ui-react-app/index.html b/kafka-ui-react-app/index.html

index aa3f89f8f90..33e18ad2688 100644

--- a/kafka-ui-react-app/index.html

+++ b/kafka-ui-react-app/index.html

@@ -12,14 +12,14 @@

/>

<!-- Favicons -->

- <link rel="icon" href="PUBLIC-PATH-VARIABLE/favicon/favicon.ico" sizes="any" />

- <link rel="icon" href="PUBLIC-PATH-VARIABLE/favicon/icon.svg" type="image/svg+xml" />

- <link rel="apple-touch-icon" href="PUBLIC-PATH-VARIABLE/favicon/apple-touch-icon.png" />

- <link rel="manifest" href="PUBLIC-PATH-VARIABLE/manifest.json" />

+ <link rel="icon" href="<%= PUBLIC_PATH %>/favicon/favicon.ico" sizes="any" />

+ <link rel="icon" href="<%= PUBLIC_PATH %>/favicon/icon.svg" type="image/svg+xml" />

+ <link rel="apple-touch-icon" href="<%= PUBLIC_PATH %>/favicon/apple-touch-icon.png" />

+ <link rel="manifest" href="<%= PUBLIC_PATH %>/manifest.json" />

<title>UI for Apache Kafka</title>

<script type="text/javascript">

- window.basePath = 'PUBLIC-PATH-VARIABLE';

+ window.basePath = '<%= PUBLIC_PATH %>';

window.__assetsPathBuilder = function (importer) {

return window.basePath+ "/" + importer;

diff --git a/kafka-ui-react-app/package.json b/kafka-ui-react-app/package.json

index 6353452605a..a385c08c881 100644

--- a/kafka-ui-react-app/package.json

+++ b/kafka-ui-react-app/package.json

@@ -118,7 +118,8 @@

"rimraf": "^3.0.2",

"ts-node": "^10.8.1",

"ts-prune": "^0.10.3",

- "typescript": "^4.7.4"

+ "typescript": "^4.7.4",

+ "vite-plugin-ejs": "^1.6.4"

},

"engines": {

"node": "v16.15.0",

diff --git a/kafka-ui-react-app/pnpm-lock.yaml b/kafka-ui-react-app/pnpm-lock.yaml

index 8a37026d6c3..b69eb15c985 100644

--- a/kafka-ui-react-app/pnpm-lock.yaml

+++ b/kafka-ui-react-app/pnpm-lock.yaml

@@ -85,6 +85,7 @@ specifiers:

typescript: ^4.7.4

use-debounce: ^8.0.1

vite: ^4.0.0

+ vite-plugin-ejs: ^1.6.4

vite-tsconfig-paths: ^4.0.2

whatwg-fetch: ^3.6.2

yup: ^0.32.11

@@ -181,6 +182,7 @@ devDependencies:

ts-node: 10.8.1_seagpw47opwyivxvtfydnuwcuy

ts-prune: 0.10.3

typescript: 4.7.4

+ vite-plugin-ejs: 1.6.4

packages:

@@ -4266,6 +4268,10 @@ packages:

engines: {node: '>=8'}

dev: true

+ /async/3.2.4:

+ resolution: {integrity: sha512-iAB+JbDEGXhyIUavoDl9WP/Jj106Kz9DEn1DPgYw5ruDn0e3Wgi3sKFm55sASdGBNOQB8F59d9qQ7deqrHA8wQ==}

+ dev: true

+

/asynckit/0.4.0:

resolution: {integrity: sha512-Oei9OH4tRh0YqU3GxhX79dM/mwVgvbZJaSNaRk+bshkj0S5cfHcgYakreBjrHwatXKbz+IoIdYLxrKim2MjW0Q==}

dev: true

@@ -4514,6 +4520,12 @@ packages:

balanced-match: 1.0.2

concat-map: 0.0.1

+ /brace-expansion/2.0.1:

+ resolution: {integrity: sha512-XnAIvQ8eM+kC6aULx6wuQiwVsnzsi9d3WxzV3FpWTGA19F621kwdbsAcFKXgKUHZWsy+mY6iL1sHTxWEFCytDA==}

+ dependencies:

+ balanced-match: 1.0.2

+ dev: true

+

/braces/3.0.2:

resolution: {integrity: sha512-b8um+L1RzM3WDSzvhm6gIz1yfTbBt6YTlcEKAvsmqCZZFw46z626lVj9j1yEPW33H5H+lBQpZMP1k8l+78Ha0A==}

engines: {node: '>=8'}

@@ -5040,6 +5052,14 @@ packages:

wcwidth: 1.0.1

dev: true

+ /ejs/3.1.8:

+ resolution: {integrity: sha512-/sXZeMlhS0ArkfX2Aw780gJzXSMPnKjtspYZv+f3NiKLlubezAHDU5+9xz6gd3/NhG3txQCo6xlglmTS+oTGEQ==}

+ engines: {node: '>=0.10.0'}

+ hasBin: true

+ dependencies:

+ jake: 10.8.5

+ dev: true

+

/electron-to-chromium/1.4.151:

resolution: {integrity: sha512-XaG2LpZi9fdiWYOqJh0dJy4SlVywCvpgYXhzOlZTp4JqSKqxn5URqOjbm9OMYB3aInA2GuHQiem1QUOc1yT0Pw==}

@@ -5740,6 +5760,12 @@ packages:

flat-cache: 3.0.4

dev: true

+ /filelist/1.0.4:

+ resolution: {integrity: sha512-w1cEuf3S+DrLCQL7ET6kz+gmlJdbq9J7yXCSjK/OZCPA+qEN1WyF4ZAf0YYJa4/shHJra2t/d/r8SV4Ji+x+8Q==}

+ dependencies:

+ minimatch: 5.1.2

+ dev: true

+

/fill-range/7.0.1:

resolution: {integrity: sha512-qOo9F+dMUmC2Lcb4BbVvnKJxTPjCm+RRpe4gDuGrzkL7mEVl/djYSu2OdQ2Pa302N4oqkSg9ir6jaLWJ2USVpQ==}

engines: {node: '>=8'}

@@ -6340,6 +6366,17 @@ packages:

engines: {node: '>=6'}

dev: true

+ /jake/10.8.5:

+ resolution: {integrity: sha512-sVpxYeuAhWt0OTWITwT98oyV0GsXyMlXCF+3L1SuafBVUIr/uILGRB+NqwkzhgXKvoJpDIpQvqkUALgdmQsQxw==}

+ engines: {node: '>=10'}

+ hasBin: true

+ dependencies:

+ async: 3.2.4

+ chalk: 4.1.2

+ filelist: 1.0.4

+ minimatch: 3.1.2

+ dev: true

+

/jest-changed-files/29.0.0:

resolution: {integrity: sha512-28/iDMDrUpGoCitTURuDqUzWQoWmOmOKOFST1mi2lwh62X4BFf6khgH3uSuo1e49X/UDjuApAj3w0wLOex4VPQ==}

engines: {node: ^14.15.0 || ^16.10.0 || >=18.0.0}

@@ -7176,6 +7213,13 @@ packages:

dependencies:

brace-expansion: 1.1.11

+ /minimatch/5.1.2:

+ resolution: {integrity: sha512-bNH9mmM9qsJ2X4r2Nat1B//1dJVcn3+iBLa3IgqJ7EbGaDNepL9QSHOxN4ng33s52VMMhhIfgCYDk3C4ZmlDAg==}

+ engines: {node: '>=10'}

+ dependencies:

+ brace-expansion: 2.0.1

+ dev: true

+

/minimist/1.2.6:

resolution: {integrity: sha512-Jsjnk4bw3YJqYzbdyBiNsPWHPfO++UGG749Cxs6peCu5Xg4nrena6OVxOYxrQTqww0Jmwt+Ref8rggumkTLz9Q==}

dev: true

@@ -8675,6 +8719,12 @@ packages:

'@types/istanbul-lib-coverage': 2.0.3

convert-source-map: 1.7.0

+ /vite-plugin-ejs/1.6.4:

+ resolution: {integrity: sha512-23p1RS4PiA0veXY5/gHZ60pl3pPvd8NEqdBsDgxNK8nM1rjFFDcVb0paNmuipzCgNP/Y0f/Id22M7Il4kvZ2jA==}

+ dependencies:

+ ejs: 3.1.8

+ dev: true

+

/vite-tsconfig-paths/4.0.2_eqmiqdrctagsk5ranq2vs4ssty:

resolution: {integrity: sha512-UzU8zwbCQrdUkj/Z0tnh293n4ScRcjJLoS8nPme2iB2FHoU5q8rhilb7AbhLlUC1uv4t6jSzVWnENjPnyGseeQ==}

peerDependencies:

diff --git a/kafka-ui-react-app/vite.config.ts b/kafka-ui-react-app/vite.config.ts

index 3320bb8cd87..189e72e7f9d 100644

--- a/kafka-ui-react-app/vite.config.ts

+++ b/kafka-ui-react-app/vite.config.ts

@@ -6,12 +6,20 @@ import {

} from 'vite';

import react from '@vitejs/plugin-react-swc';

import tsconfigPaths from 'vite-tsconfig-paths';

+import { ViteEjsPlugin } from 'vite-plugin-ejs';

export default defineConfig(({ mode }) => {

process.env = { ...process.env, ...loadEnv(mode, process.cwd()) };

const defaultConfig: UserConfigExport = {

- plugins: [react(), tsconfigPaths(), splitVendorChunkPlugin()],

+ plugins: [

+ react(),

+ tsconfigPaths(),

+ splitVendorChunkPlugin(),

+ ViteEjsPlugin({

+ PUBLIC_PATH: mode !== 'development' ? 'PUBLIC-PATH-VARIABLE' : '',

+ }),

+ ],

server: {

port: 3000,

},

| null | test | val | 2023-01-04T05:51:49 | 2023-01-04T12:23:08Z | Mgrdich | train |

provectus/kafka-ui/3105_3195 | provectus/kafka-ui | provectus/kafka-ui/3105 | provectus/kafka-ui/3195 | [

"connected"

]

| 578468d09023c9898c14774f8a827058b4fb68d0 | a03b6844e0000a1aedcf6d7ed1472789277f1009 | []

| [

"please make all created methods return the object of ApiService and all old methods need to set private",

"don't we able to use createConnector() at line 60 and deleteConnector() at line 123 without clusterName?",

"seems here we also can set a date when seek type is Timestamp. so suggest tot lease String argument",

"Condition.visible -> Condition.enabled for seekTypeFLd",

"seems this method is not used in tests, it should be private and w/o Step annotation",

"Condition.visible -> Condition.enabled",

"Condition.visible -> Condition.enabled",

"done",

"sure ,done it ",

"done",

"done\r\n",

"done",

"done",

"done"

]

| 2023-01-05T11:32:27Z | [

"scope/QA",

"scope/AQA"

]

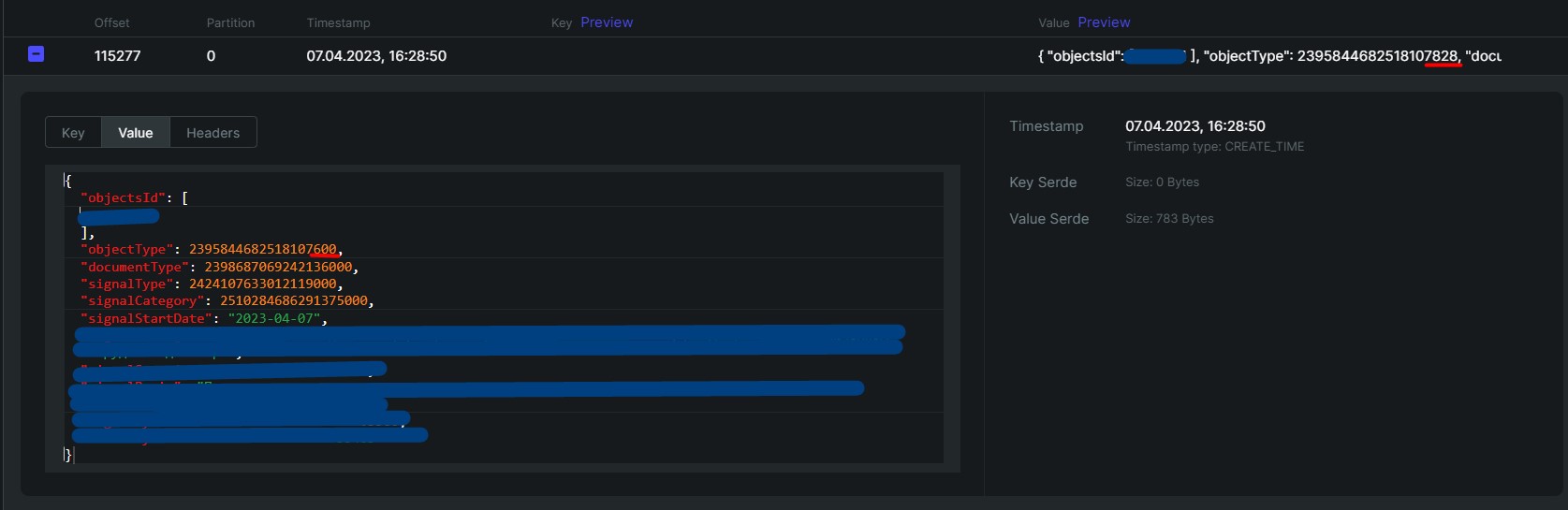

| [e2e]Checking messages filtering by Timestamp within Messages/Topic | Autotest implementation for:

https://app.qase.io/case/KAFKAUI-16

Description:

Checking messages filtering by Timestamp within Messages/Topic

Pre-conditions:

- Login to Kafka-ui application

- Open the 'Local' section

- Select the 'Topics'

- Open the Topic profile

- Turn to Messages tab

Steps:

1. Change the Offset dropdown value to 'Timestamp'

2. Click on 'Select Timestamp'

3. Choose the date and time within opened calendar

**Expected result:**

All the messages created from the selected time should be filtered | [

"kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/BasePage.java",

"kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicDetails.java",

"kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/services/ApiService.java",

"kafka-ui-e2e-checks/src/test/resources/producedkey.txt",

"kafka-ui-e2e-checks/src/test/resources/testData.txt"

]

| [

"kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/BasePage.java",

"kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicDetails.java",

"kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/services/ApiService.java",

"kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/utilities/TimeUtils.java"

]

| [

"kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/base/BaseTest.java",

"kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/suite/connectors/ConnectorsTests.java",

"kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/suite/schemas/SchemasTests.java",

"kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/suite/topics/TopicMessagesTests.java",

"kafka-ui-e2e-checks/src/test/java/com/provectus/kafka/ui/suite/topics/TopicsTests.java"

]

| diff --git a/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/BasePage.java b/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/BasePage.java

index 67bf5a7c590..8b12d3b0d3f 100644

--- a/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/BasePage.java

+++ b/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/BasePage.java

@@ -7,12 +7,13 @@

import com.codeborne.selenide.ElementsCollection;

import com.codeborne.selenide.SelenideElement;

import com.provectus.kafka.ui.utilities.WebUtils;

+import java.time.Duration;

import lombok.extern.slf4j.Slf4j;

@Slf4j

public abstract class BasePage extends WebUtils {

- protected SelenideElement loadingSpinner = $x("//*[contains(text(),'Loading')]");

+ protected SelenideElement loadingSpinner = $x("//div[@role='progressbar']");

protected SelenideElement submitBtn = $x("//button[@type='submit']");

protected SelenideElement tableGrid = $x("//table");

protected SelenideElement dotMenuBtn = $x("//button[@aria-label='Dropdown Toggle']");

@@ -26,7 +27,9 @@ public abstract class BasePage extends WebUtils {

protected void waitUntilSpinnerDisappear() {

log.debug("\nwaitUntilSpinnerDisappear");

- loadingSpinner.shouldBe(Condition.disappear);

+ if(isVisible(loadingSpinner)){

+ loadingSpinner.shouldBe(Condition.disappear, Duration.ofSeconds(30));

+ }

}

protected void clickSubmitBtn() {

diff --git a/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicDetails.java b/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicDetails.java

index bd8cbd3d02c..1de0478abec 100644

--- a/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicDetails.java

+++ b/kafka-ui-e2e-checks/src/main/java/com/provectus/kafka/ui/pages/topic/TopicDetails.java

@@ -3,6 +3,7 @@

import static com.codeborne.selenide.Selenide.$;

import static com.codeborne.selenide.Selenide.$$x;

import static com.codeborne.selenide.Selenide.$x;

+import static com.codeborne.selenide.Selenide.sleep;

import static org.apache.commons.lang.math.RandomUtils.nextInt;

import com.codeborne.selenide.CollectionCondition;

@@ -11,9 +12,17 @@

import com.codeborne.selenide.SelenideElement;

import com.provectus.kafka.ui.pages.BasePage;

import io.qameta.allure.Step;

+import java.time.LocalDate;

+import java.time.LocalDateTime;

+import java.time.LocalTime;

+import java.time.YearMonth;

+import java.time.format.DateTimeFormatter;

+import java.time.format.DateTimeFormatterBuilder;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

+import java.util.Locale;

+import java.util.Objects;

import org.openqa.selenium.By;

public class TopicDetails extends BasePage {

@@ -24,7 +33,7 @@ public class TopicDetails extends BasePage {

protected SelenideElement overviewTab = $x("//a[contains(text(),'Overview')]");

protected SelenideElement messagesTab = $x("//a[contains(text(),'Messages')]");

protected SelenideElement seekTypeDdl = $x("//ul[@id='selectSeekType']/li");

- protected SelenideElement seekTypeField = $x("//label[text()='Seek Type']//..//input");

+ protected SelenideElement seekTypeField = $x("//label[text()='Seek Type']//..//div/input");

protected SelenideElement addFiltersBtn = $x("//button[text()='Add Filters']");

protected SelenideElement savedFiltersLink = $x("//div[text()='Saved Filters']");

protected SelenideElement addFilterCodeModalTitle = $x("//label[text()='Filter code']");

@@ -33,6 +42,7 @@ public class TopicDetails extends BasePage {

protected SelenideElement displayNameInputAddFilterMdl = $x("//input[@placeholder='Enter Name']");

protected SelenideElement cancelBtnAddFilterMdl = $x("//button[text()='Cancel']");

protected SelenideElement addFilterBtnAddFilterMdl = $x("//button[text()='Add filter']");

+ protected SelenideElement addFiltersBtnMessages = $x("//button[text()='Add Filters']");

protected SelenideElement selectFilterBtnAddFilterMdl = $x("//button[text()='Select filter']");

protected SelenideElement editSettingsMenu = $x("//li[@role][contains(text(),'Edit settings')]");

protected SelenideElement removeTopicBtn = $x("//ul[@role='menu']//div[contains(text(),'Remove Topic')]");

@@ -43,6 +53,11 @@ public class TopicDetails extends BasePage {

protected SelenideElement backToCreateFiltersLink = $x("//div[text()='Back To create filters']");

protected SelenideElement confirmationMdl = $x("//div[text()= 'Confirm the action']/..");

protected ElementsCollection messageGridItems = $$x("//tbody//tr");

+ protected SelenideElement actualCalendarDate = $x("//div[@class='react-datepicker__current-month']");

+ protected SelenideElement previousMonthButton = $x("//button[@aria-label='Previous Month']");

+ protected SelenideElement nextMonthButton = $x("//button[@aria-label='Next Month']");

+ protected SelenideElement calendarTimeFld = $x("//input[@placeholder='Time']");

+ protected String dayCellLtr = "//div[@role='option'][contains(text(),'%d')]";

protected String seekFilterDdlLocator = "//ul[@id='selectSeekType']/ul/li[text()='%s']";