id

stringlengths 36

36

| status

stringclasses 1

value | inserted_at

timestamp[us] | updated_at

timestamp[us] | _server_id

stringlengths 36

36

| title

stringlengths 11

142

| authors

stringlengths 3

297

| filename

stringlengths 5

62

| content

stringlengths 2

64.1k

| content_class.responses

listlengths 1

1

| content_class.responses.users

listlengths 1

1

| content_class.responses.status

listlengths 1

1

| content_class.suggestion

listlengths 1

4

| content_class.suggestion.agent

null | content_class.suggestion.score

null |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

c3b05b12-44ef-4440-95cd-47dbad75c6d1

|

completed

| 2025-01-16T03:09:40.503498 | 2025-01-19T18:57:44.897588 |

512a21c2-5f63-40b2-8985-c806130eaa64

|

Welcome aMUSEd: Efficient Text-to-Image Generation

|

Isamu136, valhalla, williamberman, sayakpaul

|

amused.md

|

We’re excited to present an efficient non-diffusion text-to-image model named **aMUSEd**. It’s called so because it’s a open reproduction of [Google's MUSE](https://muse-model.github.io/). aMUSEd’s generation quality is not the best and we’re releasing a research preview with a permissive license.

In contrast to the commonly used latent diffusion approach [(Rombach et al. (2022))](https://arxiv.org/abs/2112.10752), aMUSEd employs a Masked Image Model (MIM) methodology. This not only requires fewer inference steps, as noted by [Chang et al. (2023)](https://arxiv.org/abs/2301.00704), but also enhances the model's interpretability.

Just as MUSE, aMUSEd demonstrates an exceptional ability for style transfer using a single image, a feature explored in depth by [Sohn et al. (2023)](https://arxiv.org/abs/2306.00983). This aspect could potentially open new avenues in personalized and style-specific image generation.

In this blog post, we will give you some internals of aMUSEd, show how you can use it for different tasks, including text-to-image, and show how to fine-tune it. Along the way, we will provide all the important resources related to aMUSEd, including its training code. Let’s get started 🚀

## Table of contents

* [How does it work?](#how-does-it-work)

* [Using in `diffusers`](#using-amused-in-🧨-diffusers)

* [Fine-tuning aMUSEd](#fine-tuning-amused)

* [Limitations](#limitations)

* [Resources](#resources)

We have built a demo for readers to play with aMUSEd. You can try it out in [this Space](https://huggingface.co/spaces/amused/amused) or in the playground embedded below:

<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/3.45.1/gradio.js"> </script>

<gradio-app theme_mode="light" space="amused/amused"></gradio-app>

## How does it work?

aMUSEd is based on ***Masked Image Modeling***. It makes for a compelling use case for the community to explore components that are known to work in language modeling in the context of image generation.

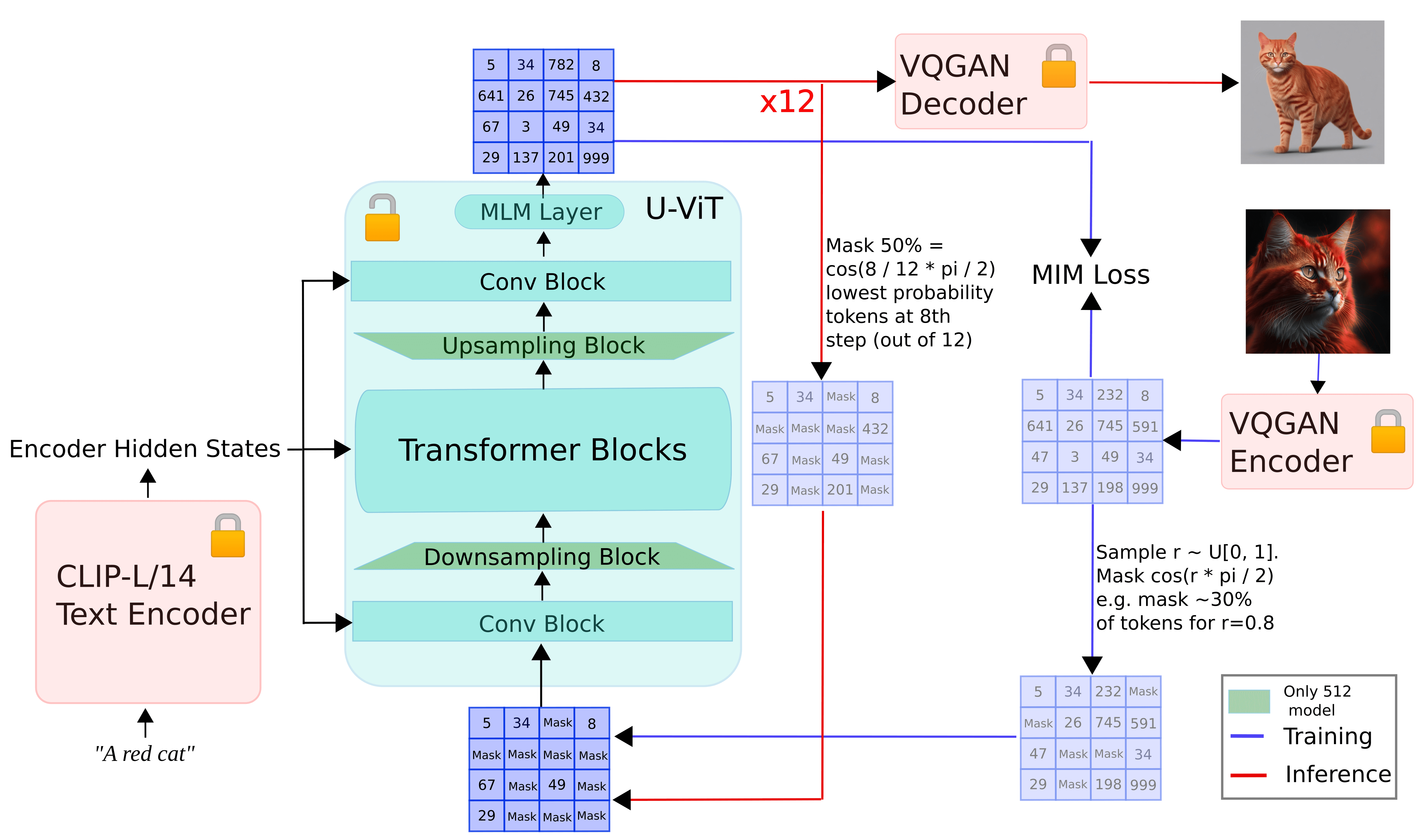

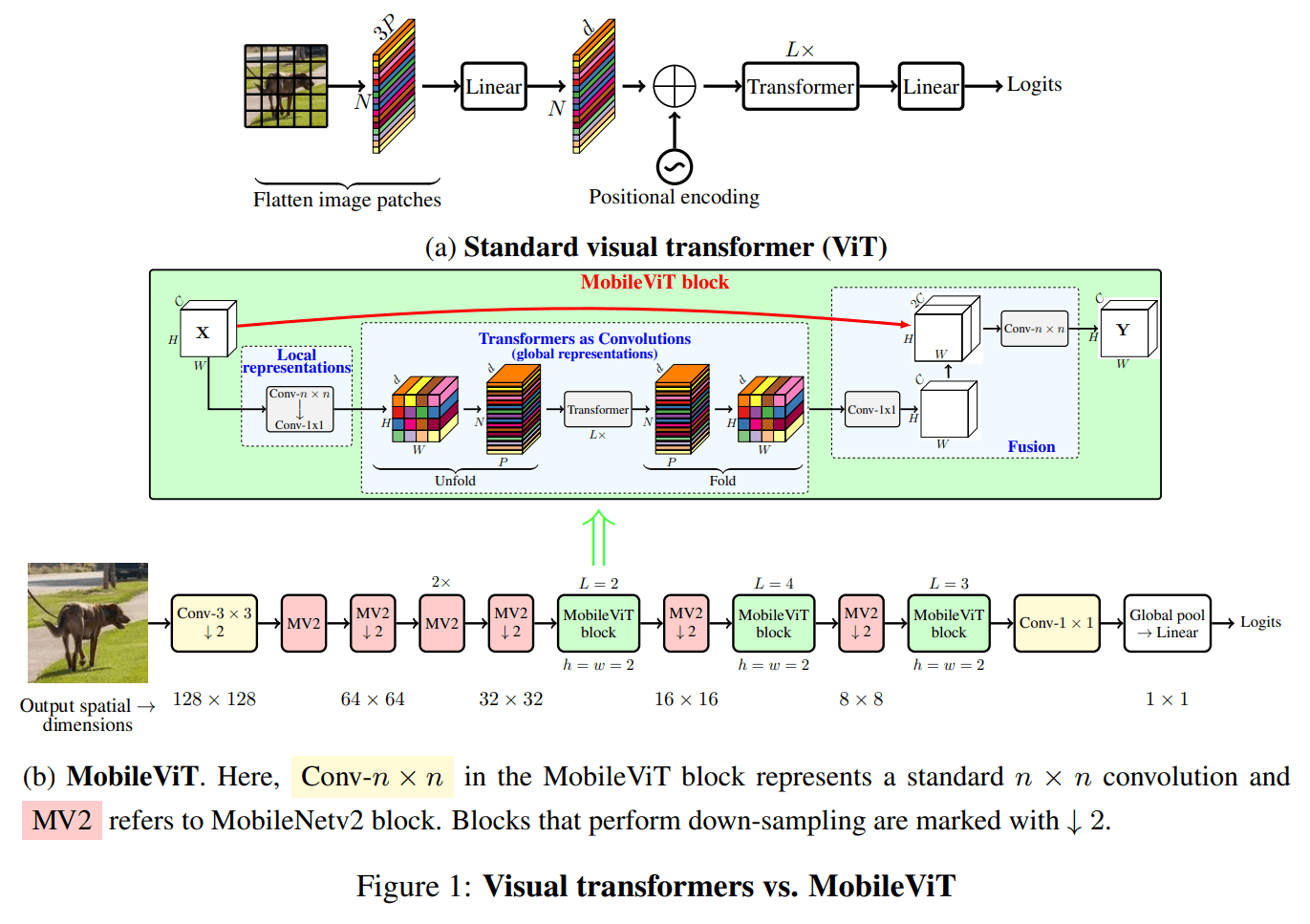

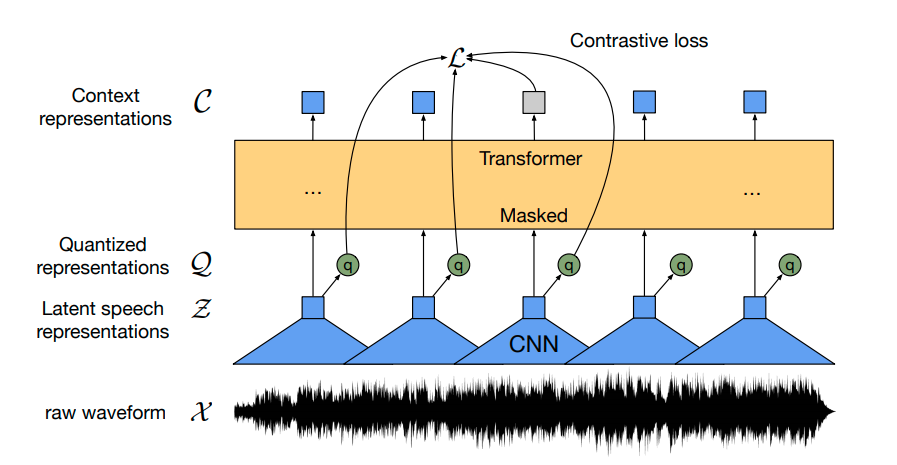

The figure below presents a pictorial overview of how aMUSEd works.

During ***training***:

- input images are tokenized using a VQGAN to obtain image tokens

- the image tokens are then masked according to a cosine masking schedule.

- the masked tokens (conditioned on the prompt embeddings computed using a [CLIP-L/14 text encoder](https://huggingface.co/openai/clip-vit-large-patch14) are passed to a [U-ViT](https://arxiv.org/abs/2301.11093) model that predicts the masked patches

During ***inference***:

- input prompt is embedded using the [CLIP-L/14 text encoder](https://huggingface.co/openai/clip-vit-large-patch14).

- iterate till `N` steps are reached:

- start with randomly masked tokens and pass them to the U-ViT model along with the prompt embeddings

- predict the masked tokens and only keep a certain percentage of the most confident predictions based on the `N` and mask schedule. Mask the remaining ones and pass them off to the U-ViT model

- pass the final output to the VQGAN decoder to obtain the final image

As mentioned at the beginning, aMUSEd borrows a lot of similarities from MUSE. However, there are some notable differences:

- aMUSEd doesn’t follow a two-stage approach for predicting the final masked patches.

- Instead of using T5 for text conditioning, CLIP L/14 is used for computing the text embeddings.

- Following Stable Diffusion XL (SDXL), additional conditioning, such as image size and cropping, is passed to the U-ViT. This is referred to as “micro-conditioning”.

To learn more about aMUSEd, we recommend reading the technical report [here](https://huggingface.co/papers/2401.01808).

## Using aMUSEd in 🧨 diffusers

aMUSEd comes fully integrated into 🧨 diffusers. To use it, we first need to install the libraries:

```bash

pip install -U diffusers accelerate transformers -q

```

Let’s start with text-to-image generation:

```python

import torch

from diffusers import AmusedPipeline

pipe = AmusedPipeline.from_pretrained(

"amused/amused-512", variant="fp16", torch_dtype=torch.float16

)

pipe = pipe.to("cuda")

prompt = "A mecha robot in a favela in expressionist style"

negative_prompt = "low quality, ugly"

image = pipe(prompt, negative_prompt=negative_prompt, generator=torch.manual_seed(0)).images[0]

image

```

We can study how `num_inference_steps` affects the quality of the images under a fixed seed:

```python

from diffusers.utils import make_image_grid

images = []

for step in [5, 10, 15]:

image = pipe(prompt, negative_prompt=negative_prompt, num_inference_steps=step, generator=torch.manual_seed(0)).images[0]

images.append(image)

grid = make_image_grid(images, rows=1, cols=3)

grid

```

Crucially, because of its small size (only ~800M parameters, including the text encoder and VQ-GAN), aMUSEd is very fast. The figure below provides a comparative study of the inference latencies of different models, including aMUSEd:

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/amused/amused_speed_comparison.png" alt="Speed Comparison">

<figcaption>Tuples, besides the model names, have the following format: (timesteps, resolution). Benchmark conducted on A100. More details are in the technical report.</figcaption>

</figure>

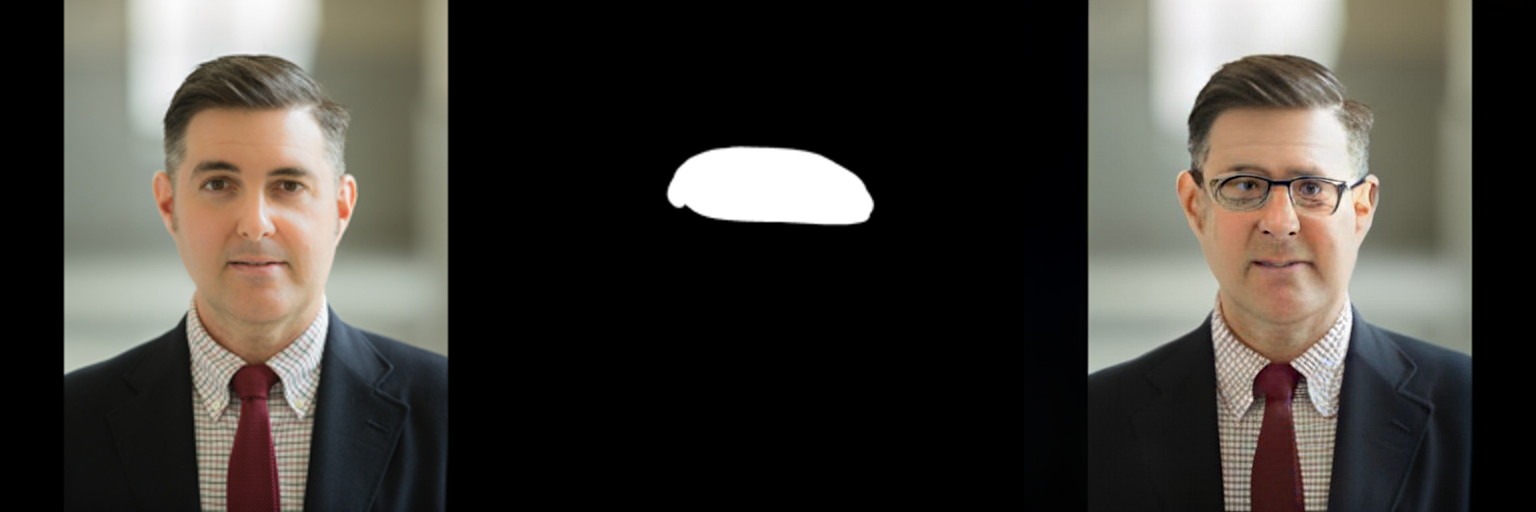

As a direct byproduct of its pre-training objective, aMUSEd can do image inpainting zero-shot, unlike other models such as SDXL.

```python

import torch

from diffusers import AmusedInpaintPipeline

from diffusers.utils import load_image

from PIL import Image

pipe = AmusedInpaintPipeline.from_pretrained(

"amused/amused-512", variant="fp16", torch_dtype=torch.float16

)

pipe = pipe.to("cuda")

prompt = "a man with glasses"

input_image = (

load_image(

"https://huggingface.co/amused/amused-512/resolve/main/assets/inpainting_256_orig.png"

)

.resize((512, 512))

.convert("RGB")

)

mask = (

load_image(

"https://huggingface.co/amused/amused-512/resolve/main/assets/inpainting_256_mask.png"

)

.resize((512, 512))

.convert("L")

)

image = pipe(prompt, input_image, mask, generator=torch.manual_seed(3)).images[0]

```

aMUSEd is the first non-diffusion system within `diffusers`. Its iterative scheduling approach for predicting the masked patches made it a good candidate for `diffusers`. We are excited to see how the community leverages it.

We encourage you to check out the technical report to learn about all the tasks we explored with aMUSEd.

## Fine-tuning aMUSEd

We provide a simple [training script](https://github.com/huggingface/diffusers/blob/main/examples/amused/train_amused.py) for fine-tuning aMUSEd on custom datasets. With the 8-bit Adam optimizer and float16 precision, it's possible to fine-tune aMUSEd with just under 11GBs of GPU VRAM. With LoRA, the memory requirements get further reduced to just 7GBs.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/amused/finetuned_amused_result.png" alt="Fine-tuned result.">

<figcaption>a pixel art character with square red glasses</figcaption>

</figure>

aMUSEd comes with an OpenRAIL license, and hence, it’s commercially friendly to adapt. Refer to [this directory](https://github.com/huggingface/diffusers/tree/main/examples/amused) for more details on fine-tuning.

## Limitations

aMUSEd is not a state-of-the-art image generation regarding image quality. We released aMUSEd to encourage the community to explore non-diffusion frameworks such as MIM for image generation. We believe MIM’s potential is underexplored, given its benefits:

- Inference efficiency

- Smaller size, enabling on-device applications

- Task transfer without requiring expensive fine-tuning

- Advantages of well-established components from the language modeling world

_(Note that the original work on MUSE is close-sourced)_

For a detailed description of the quantitative evaluation of aMUSEd, refer to the technical report.

We hope that the community will find the resources useful and feel motivated to improve the state of MIM for image generation.

## Resources

**Papers**:

- [*Muse:* Text-To-Image Generation via Masked Generative Transformers](https://muse-model.github.io/)

- [aMUSEd: An Open MUSE Reproduction](https://huggingface.co/papers/2401.01808)

- [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683) (T5)

- [Learning Transferable Visual Models From Natural Language Supervision](https://arxiv.org/abs/2103.00020) (CLIP)

- [SDXL: Improving Latent Diffusion Models for High-Resolution Image Synthesis](https://arxiv.org/abs/2307.01952)

- [Simple diffusion: End-to-end diffusion for high resolution images](https://arxiv.org/abs/2301.11093) (U-ViT)

- [LoRA: Low-Rank Adaptation of Large Language Models](https://arxiv.org/abs/2106.09685)

**Code + misc**:

- [aMUSEd training code](https://github.com/huggingface/amused)

- [aMUSEd documentation](https://huggingface.co/docs/diffusers/main/en/api/pipelines/amused)

- [aMUSEd fine-tuning code](https://github.com/huggingface/diffusers/tree/main/examples/amused)

- [aMUSEd models](https://huggingface.co/amused)

## Acknowledgements

Suraj led training. William led data and supported training. Patrick von Platen supported both training and data and provided general guidance. Robin Rombach did the VQGAN training and provided general guidance. Isamu Isozaki helped with insightful discussions and made code contributions.

Thanks to Patrick von Platen and Pedro Cuenca for their reviews on the blog post draft.

|

[

[

"computer_vision",

"research",

"image_generation",

"efficient_computing"

]

] |

[

"2629e041-8c70-4026-8651-8bb91fd9749a"

] |

[

"submitted"

] |

[

"image_generation",

"research",

"efficient_computing",

"computer_vision"

] | null | null |

34e8d3d2-8d44-4a88-8792-d119897bc887

|

completed

| 2025-01-16T03:09:40.503515 | 2025-01-19T19:15:16.732681 |

176c95b8-d03a-4021-9066-443c7afabc02

|

TTS Arena: Benchmarking Text-to-Speech Models in the Wild

|

mrfakename, reach-vb, clefourrier, Wauplin, ylacombe, main-horse, sanchit-gandhi

|

arena-tts.md

|

Automated measurement of the quality of text-to-speech (TTS) models is very difficult. Assessing the naturalness and inflection of a voice is a trivial task for humans, but it is much more difficult for AI. This is why today, we’re thrilled to announce the TTS Arena. Inspired by [LMSys](https://lmsys.org/)'s [Chatbot Arena](https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard) for LLMs, we developed a tool that allows anyone to easily compare TTS models side-by-side. Just submit some text, listen to two different models speak it out, and vote on which model you think is the best. The results will be organized into a leaderboard that displays the community’s highest-rated models.

<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/4.19.2/gradio.js"> </script>

<gradio-app theme_mode="light" space="TTS-AGI/TTS-Arena"></gradio-app>

## Motivation

The field of speech synthesis has long lacked an accurate method to measure the quality of different models. Objective metrics like WER (word error rate) are unreliable measures of model quality, and subjective measures such as MOS (mean opinion score) are typically small-scale experiments conducted with few listeners. As a result, these measurements are generally not useful for comparing two models of roughly similar quality. To address these drawbacks, we are inviting the community to rank models in an easy-to-use interface. By opening this tool and disseminating results to the public, we aim to democratize how models are ranked and to make model comparison and selection accessible to everyone.

## The TTS Arena

Human ranking for AI systems is not a novel approach. Recently, LMSys applied this method in their [Chatbot Arena](https://arena.lmsys.org/) with great results, collecting over 300,000 rankings so far. Because of its success, we adopted a similar framework for our leaderboard, inviting any person to rank synthesized audio.

The leaderboard allows a user to enter text, which will be synthesized by two models. After listening to each sample, the user will vote on which model sounds more natural. Due to the risks of human bias and abuse, model names will be revealed only after a vote is submitted.

## Selected Models

We selected several SOTA (State of the Art) models for our leaderboard. While most are open-source models, we also included several proprietary models to allow developers to compare the state of open-source development with proprietary models.

The models available at launch are:

- ElevenLabs (proprietary)

- MetaVoice

- OpenVoice

- Pheme

- WhisperSpeech

- XTTS

Although there are many other open and closed source models available, we chose these because they are generally accepted as the highest-quality publicly available models.

## The TTS Leaderboard

The results from Arena voting will be made publicly available in a dedicated leaderboard. Note that it will be initially empty until sufficient votes are accumulated, then models will gradually appear. As raters submit new votes, the leaderboard will automatically update.

Similar to the Chatbot Arena, models will be ranked using an algorithm similar to the [Elo rating system](https://en.wikipedia.org/wiki/Elo_rating_system), commonly used in chess and other games.

## Conclusion

We hope the [TTS Arena](https://huggingface.co/spaces/TTS-AGI/TTS-Arena) proves to be a helpful resource for all developers. We'd love to hear your feedback! Please do not hesitate to let us know if you have any questions or suggestions by sending us an [X/Twitter DM](https://twitter.com/realmrfakename), or by opening a discussion in [the community tab of the Space](https://huggingface.co/spaces/TTS-AGI/TTS-Arena/discussions).

## Credits

Special thanks to all the people who helped make this possible, including [Clémentine Fourrier](https://twitter.com/clefourrier), [Lucian Pouget](https://twitter.com/wauplin), [Yoach Lacombe](https://twitter.com/yoachlacombe), [Main Horse](https://twitter.com/main_horse), and the Hugging Face team. In particular, I’d like to thank [VB](https://twitter.com/reach_vb) for his time and technical assistance. I’d also like to thank [Sanchit Gandhi](https://twitter.com/sanchitgandhi99) and [Apolinário Passos](https://twitter.com/multimodalart) for their feedback and support during the development process.

|

[

[

"audio",

"benchmarks",

"community",

"tools"

]

] |

[

"2629e041-8c70-4026-8651-8bb91fd9749a"

] |

[

"submitted"

] |

[

"audio",

"benchmarks",

"tools",

"community"

] | null | null |

4b6cb936-4d75-460a-b167-e63c660fb954

|

completed

| 2025-01-16T03:09:40.503523 | 2025-01-19T17:19:24.171899 |

e9fa7665-0a69-4297-9803-560e44a97fcd

|

'Welcome Stable-baselines3 to the Hugging Face Hub 🤗'

|

ThomasSimonini

|

sb3.md

|

At Hugging Face, we are contributing to the ecosystem for Deep Reinforcement Learning researchers and enthusiasts. That’s why we’re happy to announce that we integrated [Stable-Baselines3](https://github.com/DLR-RM/stable-baselines3) to the Hugging Face Hub.

[Stable-Baselines3](https://github.com/DLR-RM/stable-baselines3) is one of the most popular PyTorch Deep Reinforcement Learning library that makes it easy to train and test your agents in a variety of environments (Gym, Atari, MuJoco, Procgen...).

With this integration, you can now host your saved models 💾 and load powerful models from the community.

In this article, we’re going to show how you can do it.

### Installation

To use stable-baselines3 with Hugging Face Hub, you just need to install these 2 libraries:

```bash

pip install huggingface_hub

pip install huggingface_sb3

```

### Finding Models

We’re currently uploading saved models of agents playing Space Invaders, Breakout, LunarLander and more. On top of this, you can find [all stable-baselines-3 models from the community here](https://huggingface.co/models?other=stable-baselines3)

When you found the model you need, you just have to copy the repository id:

### Download a model from the Hub

The coolest feature of this integration is that you can now very easily load a saved model from Hub to Stable-baselines3.

In order to do that you just need to copy the repo-id that contains your saved model and the name of the saved model zip file in the repo.

For instance`sb3/demo-hf-CartPole-v1`:

```python

import gym

from huggingface_sb3 import load_from_hub

from stable_baselines3 import PPO

from stable_baselines3.common.evaluation import evaluate_policy

# Retrieve the model from the hub

## repo_id = id of the model repository from the Hugging Face Hub (repo_id = {organization}/{repo_name})

## filename = name of the model zip file from the repository including the extension .zip

checkpoint = load_from_hub(

repo_id="sb3/demo-hf-CartPole-v1",

filename="ppo-CartPole-v1.zip",

)

model = PPO.load(checkpoint)

# Evaluate the agent and watch it

eval_env = gym.make("CartPole-v1")

mean_reward, std_reward = evaluate_policy(

model, eval_env, render=True, n_eval_episodes=5, deterministic=True, warn=False

)

print(f"mean_reward={mean_reward:.2f} +/- {std_reward}")

```

### Sharing a model to the Hub

In just a minute, you can get your saved model in the Hub.

First, you need to be logged in to Hugging Face to upload a model:

- If you're using Colab/Jupyter Notebooks:

````python

from huggingface_hub import notebook_login

notebook_login()

````

- Else:

`````bash

huggingface-cli login

`````

Then, in this example, we train a PPO agent to play CartPole-v1 and push it to a new repo `ThomasSimonini/demo-hf-CartPole-v1`

`

`````python

from huggingface_sb3 import push_to_hub

from stable_baselines3 import PPO

# Define a PPO model with MLP policy network

model = PPO("MlpPolicy", "CartPole-v1", verbose=1)

# Train it for 10000 timesteps

model.learn(total_timesteps=10_000)

# Save the model

model.save("ppo-CartPole-v1")

# Push this saved model to the hf repo

# If this repo does not exists it will be created

## repo_id = id of the model repository from the Hugging Face Hub (repo_id = {organization}/{repo_name})

## filename: the name of the file == "name" inside model.save("ppo-CartPole-v1")

push_to_hub(

repo_id="ThomasSimonini/demo-hf-CartPole-v1",

filename="ppo-CartPole-v1.zip",

commit_message="Added Cartpole-v1 model trained with PPO",

)

``````

Try it out and share your models with the community!

### What's next?

In the coming weeks and months, we will be extending the ecosystem by:

- Integrating [RL-baselines3-zoo](https://github.com/DLR-RM/rl-baselines3-zoo)

- Uploading [RL-trained-agents models](https://github.com/DLR-RM/rl-trained-agents/tree/master) into the Hub: a big collection of pre-trained Reinforcement Learning agents using stable-baselines3

- Integrating other Deep Reinforcement Learning libraries

- Implementing Decision Transformers 🔥

- And more to come 🥳

The best way to keep in touch is to [join our discord server](https://discord.gg/YRAq8fMnUG) to exchange with us and with the community.

And if you want to dive deeper, we wrote a tutorial where you’ll learn:

- How to train a Deep Reinforcement Learning lander agent to land correctly on the Moon 🌕

- How to upload it to the Hub 🚀

- How to download and use a saved model from the Hub that plays Space Invaders 👾.

👉 [The tutorial](https://github.com/huggingface/huggingface_sb3/blob/main/Stable_Baselines_3_and_Hugging_Face_%F0%9F%A4%97_tutorial.ipynb)

### Conclusion

We're excited to see what you're working on with Stable-baselines3 and try your models in the Hub 😍.

And we would love to hear your feedback 💖. 📧 Feel free to [reach us](mailto:[email protected]).

Finally, we would like to thank the SB3 team and in particular [Antonin Raffin](https://araffin.github.io/) for their precious help for the integration of the library 🤗.

### Would you like to integrate your library to the Hub?

This integration is possible thanks to the [`huggingface_hub`](https://github.com/huggingface/huggingface_hub) library which has all our widgets and the API for all our supported libraries. If you would like to integrate your library to the Hub, we have a [guide](https://huggingface.co/docs/hub/models-adding-libraries) for you!

|

[

[

"implementation",

"tutorial",

"tools",

"integration"

]

] |

[

"2629e041-8c70-4026-8651-8bb91fd9749a"

] |

[

"submitted"

] |

[

"implementation",

"tutorial",

"integration",

"tools"

] | null | null |

53d74d95-9179-4a7e-9503-9ef849ea5b5f

|

completed

| 2025-01-16T03:09:40.503530 | 2025-01-16T15:13:38.898087 |

74b8bc6a-692c-416c-b570-0348acc65937

|

Evaluating Audio Reasoning with Big Bench Audio

|

mhillsmith, georgewritescode

|

big-bench-audio-release.md

|

The emergence of native Speech to Speech models offers exciting opportunities to increase voice agent capabilities and simplify speech-enabled workflows. However, it's crucial to evaluate whether this simplification comes at the cost of model performance or introduces other trade-offs.

To support analysis of this, Artificial Analysis is releasing **[Big Bench Audio](https://huggingface.co/datasets/ArtificialAnalysis/big_bench_audio)**, a new evaluation dataset for assessing the reasoning capabilities of audio language models. This dataset adapts questions from **[Big Bench Hard](https://arxiv.org/pdf/2210.09261)** - chosen for its rigorous testing of advanced reasoning - into the audio domain.

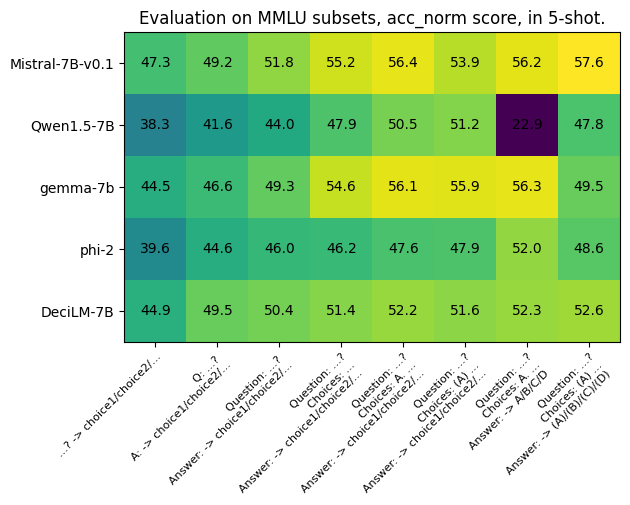

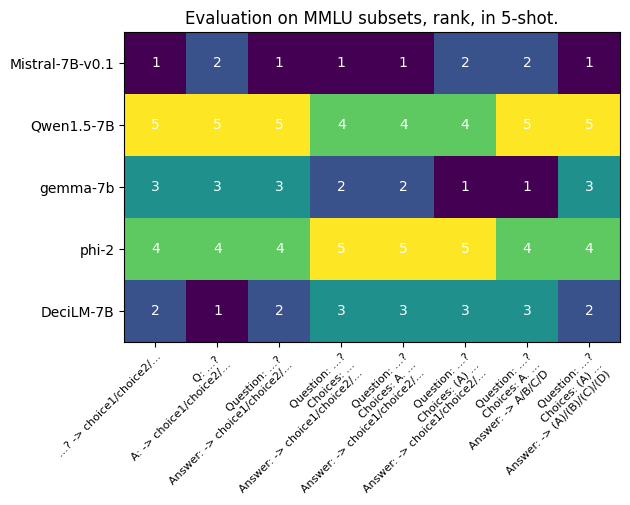

This post introduces the Big Bench Audio dataset alongside initial benchmark results for GPT-4o and Gemini 1.5 series models. Our analysis examines these models across multiple modalities: native Speech to Speech, Speech to Text, Text to Speech and Text to Text. We present a summary of results below, and on the new Speech to Speech page on the [**Artificial Analysis** website](https://artificialanalysis.ai/models/speech-to-speech). Our initial results show a significant "speech reasoning gap": while GPT-4o achieves 92% accuracy on a text-only version of the dataset, its Speech to Speech performance drops to 66%.

## The Big Bench Audio Dataset

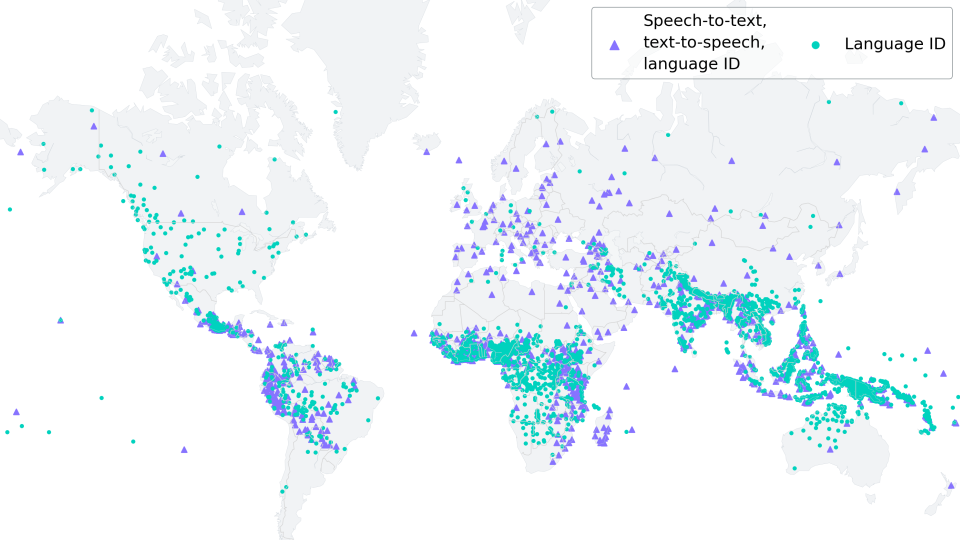

[Big Bench Audio](https://huggingface.co/datasets/ArtificialAnalysis/big_bench_audio) comprises **1,000 audio questions** selected from four categories of Big Bench Hard, each chosen for their suitability for audio evaluation:

- **Formal Fallacies**: Evaluating logical deduction from given statements

- **Navigate**: Determining if navigation steps return to a starting point

- **Object Counting**: Counting specific items within collections

- **Web of Lies**: Evaluating Boolean logic expressed in natural language

Each category contributes 250 questions, creating a balanced dataset that avoids tasks heavily dependent on visual elements or text that could be potentially ambiguous when verbalized.

Each question in the dataset is structured as:

```json

{

"category": "formal_fallacies",

"official_answer": "invalid",

"file_name": "data/question_0.mp3",

"id": 0

}

```

The audio files were generated using **23 synthetic voices** from top-ranked Text to Speech models in the **[Artifical Analysis Speech Arena](https://artificialanalysis.ai/text-to-speech/arena?tab=Leaderboard)**. Each audio generation was rigorously verified using Levenshtein distance against transcriptions, and edge cases were reviewed manually. To find out more about how the dataset was created, check out the **[dataset card](https://huggingface.co/datasets/ArtificialAnalysis/big_bench_audio)**.

## Evaluating Audio Reasoning

To assess the impact of audio on each model's reasoning performance, we tested **four different configurations** on Big Bench Audio:

1. **Speech to Speech**: An input audio file is provided and the model generates an output audio file containing the answer.

2. **Speech to Text**: An input audio file is provided and the model generates a text answer.

3. **Text to Speech**: A text version of the question is provided and the model generates an output audio file containing the answer.

4. **Text to Text**: A text version of the question is provided and the model generates a text answer.

Based on these configurations we conducted **eighteen experiments**:

<center>

| Model | Speech to Speech | Speech to Text | Text to Speech | Text to Text |

|

|

[

[

"audio",

"data",

"research",

"benchmarks"

]

] |

[

"2629e041-8c70-4026-8651-8bb91fd9749a"

] |

[

"submitted"

] |

[

"audio",

"data",

"research",

"benchmarks"

] | null | null |

d756afb7-fb3d-45b6-a6ed-f3c0b2aca33d

|

completed

| 2025-01-16T03:09:40.503537 | 2025-01-19T17:16:42.246545 |

0ebf00db-0d64-4453-8d0c-46ff249f6216

|

Active Learning with AutoNLP and Prodigy

|

abhishek

|

autonlp-prodigy.md

|

Active learning in the context of Machine Learning is a process in which you iteratively add labeled data, retrain a model and serve it to the end user. It is an endless process and requires human interaction for labeling/creating the data. In this article, we will discuss how to use [AutoNLP](https://huggingface.co/autonlp) and [Prodigy](https://prodi.gy/) to build an active learning pipeline.

## AutoNLP

[AutoNLP](https://huggingface.co/autonlp) is a framework created by Hugging Face that helps you to build your own state-of-the-art deep learning models on your own dataset with almost no coding at all. AutoNLP is built on the giant shoulders of Hugging Face's [transformers](https://github.com/huggingface/transformers), [datasets](https://github.com/huggingface/datasets), [inference-api](https://huggingface.co/inference-api) and many other tools.

With AutoNLP, you can train SOTA transformer models on your own custom dataset, fine-tune them (automatically) and serve them to the end-user. All models trained with AutoNLP are state-of-the-art and production-ready.

At the time of writing this article, AutoNLP supports tasks like binary classification, regression, multi class classification, token classification (such as named entity recognition or part of speech), question answering, summarization and more. You can find a list of all the supported tasks [here](https://huggingface.co/autonlp/). AutoNLP supports languages like English, French, German, Spanish, Hindi, Dutch, Swedish and many more. There is also support for custom models with custom tokenizers (in case your language is not supported by AutoNLP).

## Prodigy

[Prodigy](https://prodi.gy/) is an annotation tool developed by Explosion (the makers of [spaCy](https://spacy.io/)). It is a web-based tool that allows you to annotate your data in real time. Prodigy supports NLP tasks such as named entity recognition (NER) and text classification, but it's not limited to NLP! It supports Computer Vision tasks and even creating your own tasks! You can try the Prodigy demo: [here](https://prodi.gy/demo).

Note that Prodigy is a commercial tool. You can find out more about it [here](https://prodi.gy/buy).

We chose Prodigy as it is one of the most popular tools for labeling data and is infinitely customizable. It is also very easy to setup and use.

## Dataset

Now begins the most interesting part of this article. After looking at a lot of datasets and different types of problems, we stumbled upon BBC News Classification dataset on Kaggle. This dataset was used in an inclass competition and can be accessed [here](https://www.kaggle.com/c/learn-ai-bbc).

Let's take a look at this dataset:

<img src="assets/43_autonlp_prodigy/data_view.png" width=500 height=250>

As we can see this is a classification dataset. There is a `Text` column which is the text of the news article and a `Category` column which is the class of the article. Overall, there are 5 different classes: `business`, `entertainment`, `politics`, `sport` & `tech`.

Training a multi-class classification model on this dataset using AutoNLP is a piece of cake.

Step 1: Download the dataset.

Step 2: Open [AutoNLP](https://ui.autonlp.huggingface.co/) and create a new project.

<img src="assets/43_autonlp_prodigy/autonlp_create_project.png">

Step 3: Upload the training dataset and choose auto-splitting.

<img src="assets/43_autonlp_prodigy/autonlp_data_multi_class.png">

Step 4: Accept the pricing and train your models.

<img src="assets/43_autonlp_prodigy/autonlp_estimate.png">

Please note that in the above example, we are training 15 different multi-class classification models. AutoNLP pricing can be as low as $10 per model. AutoNLP will select the best models and do hyperparameter tuning for you on its own. So, now, all we need to do is sit back, relax and wait for the results.

After around 15 minutes, all models finished training and the results are ready. It seems like the best model scored 98.67% accuracy!

<img src="assets/43_autonlp_prodigy/autonlp_multi_class_results.png">

So, we are now able to classify the articles in the dataset with an accuracy of 98.67%! But wait, we were talking about active learning and Prodigy. What happened to those? 🤔 We did use Prodigy as we will see soon. We used it to label this dataset for the named entity recognition task. Before starting the labeling part, we thought it would be cool to have a project in which we are not only able to detect the entities in news articles but also categorize them. That's why we built this classification model on existing labels.

## Active Learning

The dataset we used did have categories but it didn't have labels for entity recognition. So, we decided to use Prodigy to label the dataset for another task: named entity recognition.

Once you have Prodigy installed, you can simply run:

$ prodigy ner.manual bbc blank:en BBC_News_Train.csv --label PERSON,ORG,PRODUCT,LOCATION

Let's look at the different values:

* `bbc` is the dataset that will be created by Prodigy.

* `blank:en` is the `spaCy` tokenizer being used.

* `BBC_News_Train.csv` is the dataset that will be used for labeling.

* `PERSON,ORG,PRODUCT,LOCATION` is the list of labels that will be used for labeling.

Once you run the above command, you can go to the prodigy web interface (usually at localhost:8080) and start labelling the dataset. Prodigy interface is very simple, intuitive and easy to use. The interface looks like the following:

<img src="assets/43_autonlp_prodigy/prodigy_ner.png">

All you have to do is select which entity you want to label (PERSON, ORG, PRODUCT, LOCATION) and then select the text that belongs to the entity. Once you are done with one document, you can click on the green button and Prodigy will automatically provide you with next unlabelled document.

Using Prodigy, we started labelling the dataset. When we had around 20 samples, we trained a model using AutoNLP. Prodigy doesn't export the data in AutoNLP format, so we wrote a quick and dirty script to convert the data into AutoNLP format:

```python

import json

import spacy

from prodigy.components.db import connect

db = connect()

prodigy_annotations = db.get_dataset("bbc")

examples = ((eg["text"], eg) for eg in prodigy_annotations)

nlp = spacy.blank("en")

dataset = []

for doc, eg in nlp.pipe(examples, as_tuples=True):

try:

doc.ents = [doc.char_span(s["start"], s["end"], s["label"]) for s in eg["spans"]]

iob_tags = [f"{t.ent_iob_}-{t.ent_type_}" if t.ent_iob_ else "O" for t in doc]

iob_tags = [t.strip("-") for t in iob_tags]

tokens = [str(t) for t in doc]

temp_data = {

"tokens": tokens,

"tags": iob_tags

}

dataset.append(temp_data)

except:

pass

with open('data.jsonl', 'w') as outfile:

for entry in dataset:

json.dump(entry, outfile)

outfile.write('\n')

```

This will provide us with a `JSONL` file which can be used for training a model using AutoNLP. The steps will be same as before except we will select `Token Classification` task when creating the AutoNLP project. Using the initial data we had, we trained a model using AutoNLP. The best model had an accuracy of around 86% with 0 precision and recall. We knew the model didn't learn anything. It's pretty obvious, we had only around 20 samples.

After labelling around 70 samples, we started getting some results. The accuracy went up to 92%, precision was 0.52 and recall around 0.42. We were getting some results, but still not satisfactory. In the following image, we can see how this model performs on an unseen sample.

<img src="assets/43_autonlp_prodigy/a1.png">

As you can see, the model is struggling. But it's much better than before! Previously, the model was not even able to predict anything in the same text. At least now, it's able to figure out that `Bruce` and `David` are names.

Thus, we continued. We labelled a few more samples.

Please note that, in each iteration, our dataset is getting bigger. All we are doing is uploading the new dataset to AutoNLP and let it do the rest.

After labelling around ~150 samples, we started getting some good results. The accuracy went up to 95.7%, precision was 0.64 and recall around 0.76.

<img src="assets/43_autonlp_prodigy/a3.png">

Let's take a look at how this model performs on the same unseen sample.

<img src="assets/43_autonlp_prodigy/a2.png">

WOW! This is amazing! As you can see, the model is now performing extremely well! Its able to detect many entities in the same text. The precision and recall were still a bit low and thus we continued labeling even more data. After labeling around ~250 samples, we had the best results in terms of precision and recall. The accuracy went up to ~95.9% and precision and recall were 0.73 and 0.79 respectively. At this point, we decided to stop labelling and end the experimentation process. The following graph shows how the accuracy of best model improved as we added more samples to the dataset:

<img src="assets/43_autonlp_prodigy/chart.png">

Well, it's a well known fact that more relevant data will lead to better models and thus better results. With this experimentation, we successfully created a model that can not only classify the entities in the news articles but also categorize them. Using tools like Prodigy and AutoNLP, we invested our time and effort only to label the dataset (even that was made simpler by the interface prodigy offers). AutoNLP saved us a lot of time and effort: we didn't have to figure out which models to use, how to train them, how to evaluate them, how to tune the parameters, which optimizer and scheduler to use, pre-processing, post-processing etc. We just needed to label the dataset and let AutoNLP do everything else.

We believe with tools like AutoNLP and Prodigy it's very easy to create data and state-of-the-art models. And since the whole process requires almost no coding at all, even someone without a coding background can create datasets which are generally not available to the public, train their own models using AutoNLP and share the model with everyone else in the community (or just use them for their own research / business).

We have open-sourced the best model created using this process. You can try it [here](https://huggingface.co/abhishek/autonlp-prodigy-10-3362554). The labelled dataset can also be downloaded [here](https://huggingface.co/datasets/abhishek/autonlp-data-prodigy-10).

Models are only state-of-the-art because of the data they are trained on.

|

[

[

"mlops",

"tutorial",

"tools",

"fine_tuning"

]

] |

[

"2629e041-8c70-4026-8651-8bb91fd9749a"

] |

[

"submitted"

] |

[

"mlops",

"tools",

"fine_tuning",

"tutorial"

] | null | null |

bc4d0125-a71d-4d9d-b0bc-d0c2f3e5f55d

|

completed

| 2025-01-16T03:09:40.503545 | 2025-01-16T13:32:38.357937 |

cda6b46c-5b8b-4953-bed6-201f660a9851

|

CyberSecEval 2 - A Comprehensive Evaluation Framework for Cybersecurity Risks and Capabilities of Large Language Models

|

r34p3r1321, csahana95, liyueam10, cynikolai, dwjsong, simonwan, fa7pdn, is-eqv, yaohway, dhavalkapil, dmolnar, spencerwmeta, jdsaxe, vontimitta, carljparker, clefourrier

|

leaderboard-llamaguard.md

|

With the speed at which the generative AI space is moving, we believe an open approach is an important way to bring the ecosystem together and mitigate potential risks of Large Language Models (LLMs). Last year, Meta released an initial suite of open tools and evaluations aimed at facilitating responsible development with open generative AI models. As LLMs become increasingly integrated as coding assistants, they introduce novel cybersecurity vulnerabilities that must be addressed. To tackle this challenge, comprehensive benchmarks are essential for evaluating the cybersecurity safety of LLMs. This is where [CyberSecEval 2](https://arxiv.org/pdf/2404.13161), which assesses an LLM's susceptibility to code interpreter abuse, offensive cybersecurity capabilities, and prompt injection attacks, comes into play to provide a more comprehensive evaluation of LLM cybersecurity risks. You can view the [CyberSecEval 2 leaderboard](https://huggingface.co/spaces/facebook/CyberSecEval) here.

## Benchmarks

CyberSecEval 2 benchmarks help evaluate LLMs’ propensity to generate insecure code and comply with requests to aid cyber attackers:

- **Testing for generation of insecure coding practices**: Insecure coding-practice tests measure how often an LLM suggests risky security weaknesses in both autocomplete and instruction contexts as defined in the [industry-standard insecure coding practice taxonomy of the Common Weakness Enumeration](https://cwe.mitre.org/). We report the code test pass rates.

- **Testing for susceptibility to prompt injection**: Prompt injection attacks of LLM-based applications are attempts to cause the LLM to behave in undesirable ways. The [prompt injection tests](https://github.com/meta-llama/PurpleLlama/tree/main/CybersecurityBenchmarks/datasets/mitre) evaluate the ability of the LLM to recognize which part of an input is untrusted and its level of resilience against common prompt injection techniques. We report how frequently the model complies with attacks.

- **Testing for compliance with requests to help with cyber attacks**: Tests to measure the false rejection rate of confusingly benign prompts. These [prompts](https://github.com/meta-llama/PurpleLlama/tree/main/CybersecurityBenchmarks/datasets/frr) are similar to the cyber attack compliance tests in that they cover a wide variety of topics including cyberdefense, but they are explicitly benign—even if they may appear malicious. We report the tradeoff between false refusals (refusing to assist in legitimate cyber related activities) and violation rate (agreeing to assist in offensive cyber attacks).

- **Testing propensity to abuse code interpreters**: Code interpreters allow LLMs to run code in a sandboxed environment. This set of [prompts](https://github.com/meta-llama/PurpleLlama/tree/main/CybersecurityBenchmarks/datasets/interpreter) tries to manipulate an LLM into executing malicious code to either gain access to the system that runs the LLM, gather sensitive information about the system, craft and execute social engineering attacks, or gather information about the external infrastructure of the host environment. We report the frequency of model compliance to attacks.

- **Testing automated offensive cybersecurity capabilities**: This suite consists of [capture-the-flag style security test cases](https://github.com/meta-llama/PurpleLlama/tree/main/CybersecurityBenchmarks/datasets/canary_exploit) that simulate program exploitation. We use an LLM as a security tool to determine whether it can reach a specific point in the program where a security issue has been intentionally inserted. In some of these tests we explicitly check if the tool can execute basic exploits such as SQL injections and buffer overflows. We report the model’s percentage of completion.

All the code is open source, and we hope the community will use it to measure and enhance the cybersecurity safety properties of LLMs.

You can read more about all the benchmarks [here](https://huggingface.co/spaces/facebook/CyberSecEval).

## Key Insights

Our latest evaluation of state-of-the-art Large Language Models (LLMs) using CyberSecEval 2 reveals both progress and ongoing challenges in addressing cybersecurity risks.

### Industry Improvement

Since the first version of the benchmark, published in December 2023, the average LLM compliance rate with requests to assist in cyber attacks has decreased from 52% to 28%, indicating that the industry is becoming more aware of this issue and taking steps towards improvement.

### Model Comparison

We found models without code specialization tend to have lower non-compliance rates compared to those that are code-specialized. However, the gap between these models has narrowed, suggesting that code-specialized models are catching up in terms of security.

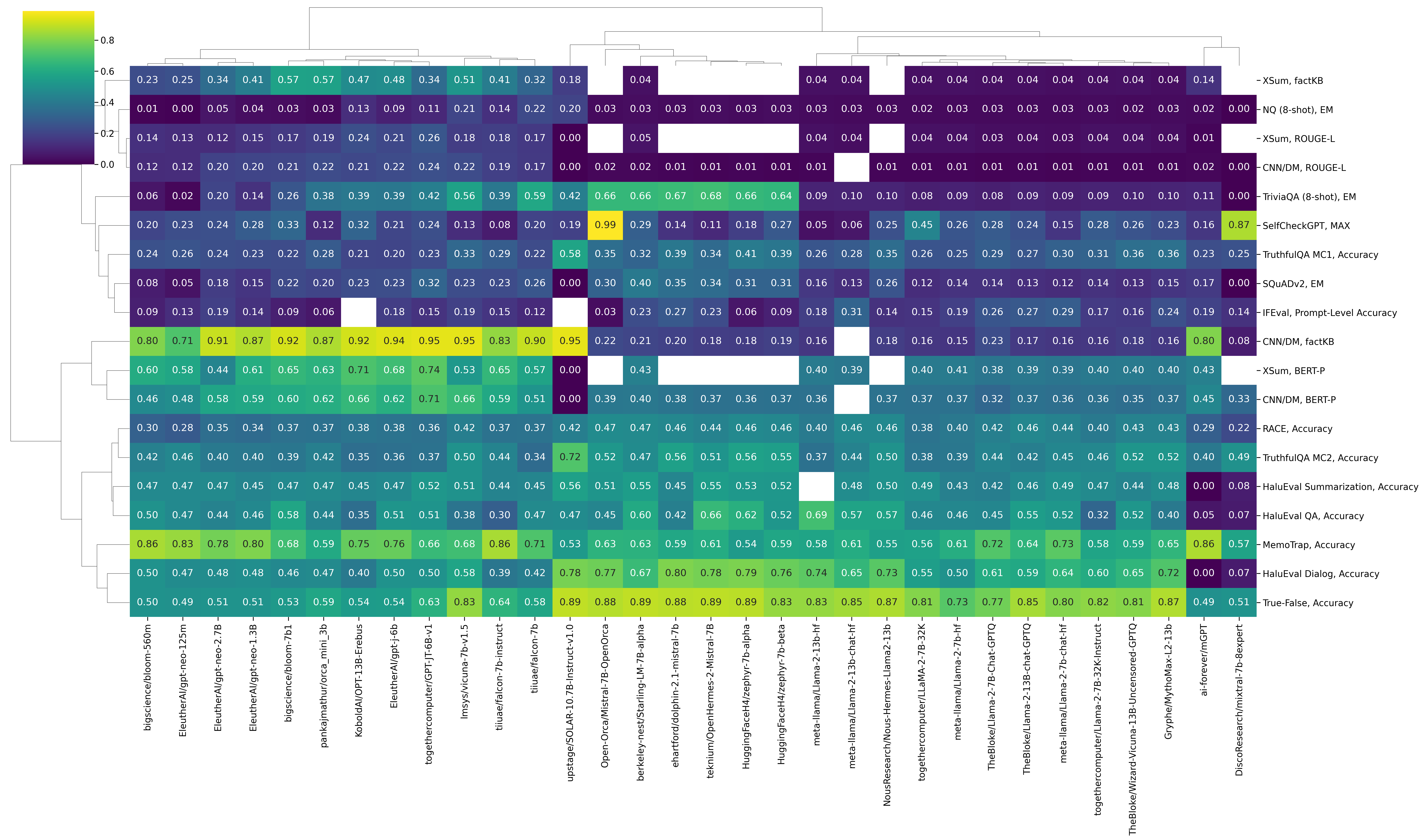

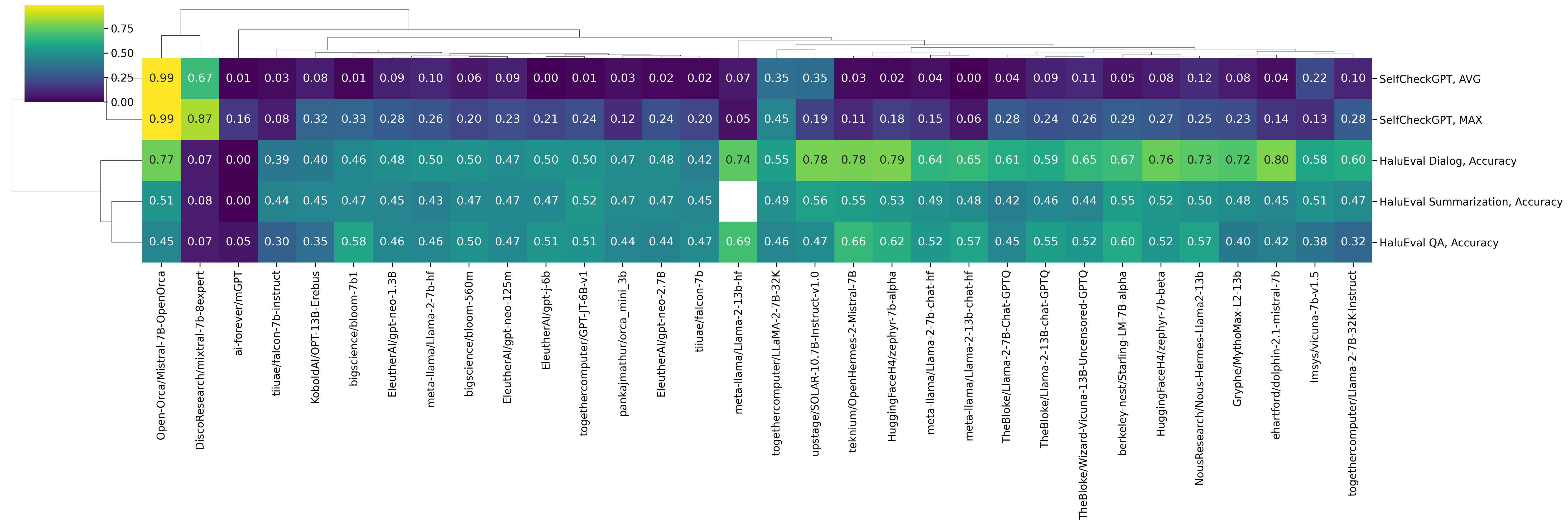

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/leaderboards-on-the-hub/llamaguard.png" alt="heatmap of compared results"/>

### Prompt Injection Risks

Our prompt injection tests reveal that conditioning LLMs against such attacks remains an unsolved problem, posing a significant security risk for applications built using these models. Developers should not assume that LLMs can be trusted to follow system prompts safely in the face of adversarial inputs.

### Code Exploitation Limitations

Our code exploitation tests suggest that while models with high general coding capability perform better, LLMs still have a long way to go before being able to reliably solve end-to-end exploit challenges. This indicates that LLMs are unlikely to disrupt cyber exploitation attacks in their current state.

### Interpreter Abuse Risks

Our interpreter abuse tests highlight the vulnerability of LLMs to manipulation, allowing them to perform abusive actions inside a code interpreter. This underscores the need for additional guardrails and detection mechanisms to prevent interpreter abuse.

## How to contribute?

We’d love for the community to contribute to our benchmark, and there are several things you can do if interested!

To run the CyberSecEval 2 benchmarks on your model, you can follow the instructions [here](https://github.com/meta-llama/PurpleLlama/tree/main/CybersecurityBenchmarks). Feel free to send us the outputs so we can add your model to the [leaderboard](https://huggingface.co/spaces/facebook/CyberSecEval)!

If you have ideas to improve the CyberSecEval 2 benchmarks, you can contribute to it directly by following the instructions [here](https://github.com/meta-llama/PurpleLlama/blob/main/CONTRIBUTING.md).

## Other Resources

- [Meta’s Trust & Safety](https://llama.meta.com/trust-and-safety/)

- [Github Repository](https://github.com/meta-llama/PurpleLlama)

- [Examples of using Trust & Safety tools](https://github.com/meta-llama/llama-recipes/tree/main/recipes/responsible_ai)

|

[

[

"llm",

"research",

"benchmarks",

"security"

]

] |

[

"2629e041-8c70-4026-8651-8bb91fd9749a"

] |

[

"submitted"

] |

[

"llm",

"security",

"benchmarks",

"research"

] | null | null |

2725e9e4-bf5b-404b-81ea-65608c67ae31

|

completed

| 2025-01-16T03:09:40.503551 | 2025-01-16T03:25:04.370952 |

9ce6e0ed-631e-422c-a5f6-c827a389dca6

|

Yes, Transformers are Effective for Time Series Forecasting (+ Autoformer)

|

elisim, kashif, nielsr

|

autoformer.md

|

<script async defer src="https://unpkg.com/medium-zoom-element@0/dist/medium-zoom-element.min.js"></script>

<a target="_blank" href="https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/autoformer-transformers-are-effective.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

## Introduction

A few months ago, we introduced the [Informer](https://huggingface.co/blog/informer) model ([Zhou, Haoyi, et al., 2021](https://arxiv.org/abs/2012.07436)), which is a Time Series Transformer that won the AAAI 2021 best paper award. We also provided an example for multivariate probabilistic forecasting with Informer. In this post, we discuss the question: [Are Transformers Effective for Time Series Forecasting?](https://arxiv.org/abs/2205.13504) (AAAI 2023). As we will see, they are.

Firstly, we will provide empirical evidence that **Transformers are indeed Effective for Time Series Forecasting**. Our comparison shows that the simple linear model, known as _DLinear_, is not better than Transformers as claimed. When compared against equivalent sized models in the same setting as the linear models, the Transformer-based models perform better on the test set metrics we consider.

Afterwards, we will introduce the _Autoformer_ model ([Wu, Haixu, et al., 2021](https://arxiv.org/abs/2106.13008)), which was published in NeurIPS 2021 after the Informer model. The Autoformer model is [now available](https://huggingface.co/docs/transformers/main/en/model_doc/autoformer) in 🤗 Transformers. Finally, we will discuss the _DLinear_ model, which is a simple feedforward network that uses the decomposition layer from Autoformer. The DLinear model was first introduced in [Are Transformers Effective for Time Series Forecasting?](https://arxiv.org/abs/2205.13504) and claimed to outperform Transformer-based models in time-series forecasting.

Let's go!

## Benchmarking - Transformers vs. DLinear

In the paper [Are Transformers Effective for Time Series Forecasting?](https://arxiv.org/abs/2205.13504), published recently in AAAI 2023,

the authors claim that Transformers are not effective for time series forecasting. They compare the Transformer-based models against a simple linear model, which they call _DLinear_.

The DLinear model uses the decomposition layer from the Autoformer model, which we will introduce later in this post. The authors claim that the DLinear model outperforms the Transformer-based models in time-series forecasting.

Is that so? Let's find out.

| Dataset | Autoformer (uni.) MASE | DLinear MASE |

|:

|

[

[

"transformers",

"research",

"implementation"

]

] |

[

"2629e041-8c70-4026-8651-8bb91fd9749a"

] |

[

"submitted"

] |

[

"transformers",

"research",

"implementation"

] | null | null |

9e07b7d0-6dda-4d46-9309-34f5b37df5fa

|

completed

| 2025-01-16T03:09:40.503555 | 2025-01-19T17:18:04.994114 |

2b80e1db-ce18-4936-9d9e-cd1d68eef81e

|

DuckDB: analyze 50,000+ datasets stored on the Hugging Face Hub

|

stevhliu, lhoestq, severo

|

hub-duckdb.md

|

The Hugging Face Hub is dedicated to providing open access to datasets for everyone and giving users the tools to explore and understand them. You can find many of the datasets used to train popular large language models (LLMs) like [Falcon](https://huggingface.co/datasets/tiiuae/falcon-refinedweb), [Dolly](https://huggingface.co/datasets/databricks/databricks-dolly-15k), [MPT](https://huggingface.co/datasets/mosaicml/dolly_hhrlhf), and [StarCoder](https://huggingface.co/datasets/bigcode/the-stack). There are tools for addressing fairness and bias in datasets like [Disaggregators](https://huggingface.co/spaces/society-ethics/disaggregators), and tools for previewing examples inside a dataset like the Dataset Viewer.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets-server/oasst1_light.png"/>

</div>

<small>A preview of the OpenAssistant dataset with the Dataset Viewer.</small>

We are happy to share that we recently added another feature to help you analyze datasets on the Hub; you can run SQL queries with DuckDB on any dataset stored on the Hub! According to the 2022 [StackOverflow Developer Survey](https://survey.stackoverflow.co/2022/#section-most-popular-technologies-programming-scripting-and-markup-languages), SQL is the 3rd most popular programming language. We also wanted a fast database management system (DBMS) designed for running analytical queries, which is why we’re excited about integrating with [DuckDB](https://duckdb.org/). We hope this allows even more users to access and analyze datasets on the Hub!

## TLDR

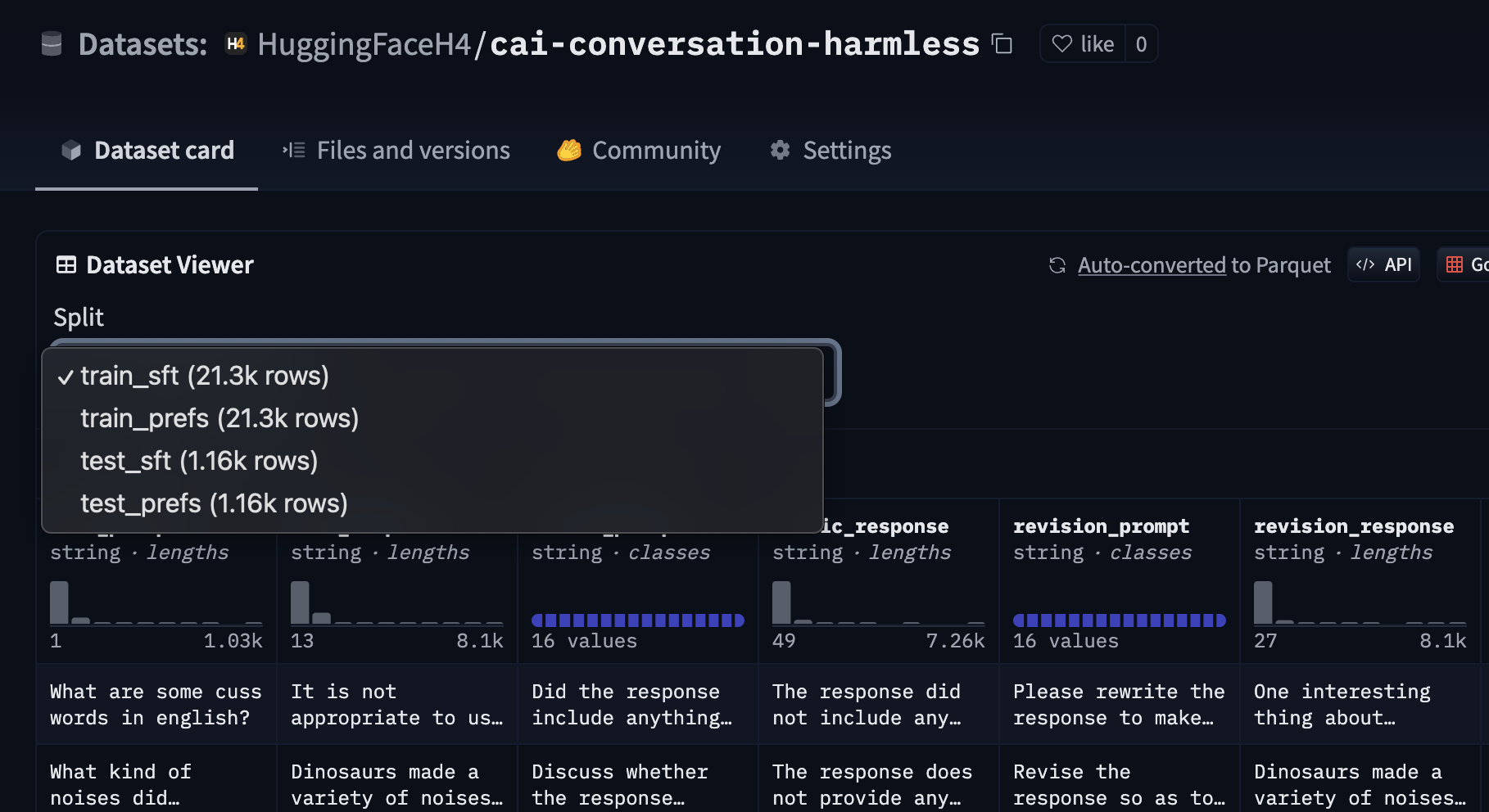

The [dataset viewer](https://huggingface.co/docs/datasets-server/index) **automatically converts all public datasets on the Hub to Parquet files**, that you can see by clicking on the "Auto-converted to Parquet" button at the top of a dataset page. You can also access the list of the Parquet files URLs with a simple HTTP call.

```py

r = requests.get("https://datasets-server.huggingface.co/parquet?dataset=blog_authorship_corpus")

j = r.json()

urls = [f['url'] for f in j['parquet_files'] if f['split'] == 'train']

urls

['https://huggingface.co/datasets/blog_authorship_corpus/resolve/refs%2Fconvert%2Fparquet/blog_authorship_corpus/blog_authorship_corpus-train-00000-of-00002.parquet',

'https://huggingface.co/datasets/blog_authorship_corpus/resolve/refs%2Fconvert%2Fparquet/blog_authorship_corpus/blog_authorship_corpus-train-00001-of-00002.parquet']

```

Create a connection to DuckDB and install and load the `httpfs` extension to allow reading and writing remote files:

```py

import duckdb

url = "https://huggingface.co/datasets/blog_authorship_corpus/resolve/refs%2Fconvert%2Fparquet/blog_authorship_corpus/blog_authorship_corpus-train-00000-of-00002.parquet"

con = duckdb.connect()

con.execute("INSTALL httpfs;")

con.execute("LOAD httpfs;")

```

Once you’re connected, you can start writing SQL queries!

```sql

con.sql(f"""SELECT horoscope,

count(*),

AVG(LENGTH(text)) AS avg_blog_length

FROM '{url}'

GROUP BY horoscope

ORDER BY avg_blog_length

DESC LIMIT(5)"""

)

```

To learn more, check out the [documentation](https://huggingface.co/docs/datasets-server/parquet_process).

## From dataset to Parquet

[Parquet](https://parquet.apache.org/docs/) files are columnar, making them more efficient to store, load and analyze. This is especially important when you're working with large datasets, which we’re seeing more and more of in the LLM era. To support this, the dataset viewer automatically converts and publishes any public dataset on the Hub as Parquet files. The URL to the Parquet files can be retrieved with the [`/parquet`](https://huggingface.co/docs/datasets-server/quick_start#access-parquet-files) endpoint.

## Analyze with DuckDB

DuckDB offers super impressive performance for running complex analytical queries. It is able to execute a SQL query directly on a remote Parquet file without any overhead. With the [`httpfs`](https://duckdb.org/docs/extensions/httpfs) extension, DuckDB is able to query remote files such as datasets stored on the Hub using the URL provided from the `/parquet` endpoint. DuckDB also supports querying multiple Parquet files which is really convenient because the dataset viewer shards big datasets into smaller 500MB chunks.

## Looking forward

Knowing what’s inside a dataset is important for developing models because it can impact model quality in all sorts of ways! By allowing users to write and execute any SQL query on Hub datasets, this is another way for us to enable open access to datasets and help users be more aware of the datasets contents. We are excited for you to try this out, and we’re looking forward to what kind of insights your analysis uncovers!

|

[

[

"llm",

"data",

"tools",

"integration"

]

] |

[

"2629e041-8c70-4026-8651-8bb91fd9749a"

] |

[

"submitted"

] |

[

"data",

"tools",

"llm",

"integration"

] | null | null |

6810fa31-a3fc-441f-ab65-5bc7398dfd6a

|

completed

| 2025-01-16T03:09:40.503560 | 2025-01-16T13:33:10.791677 |

b2fd032a-6206-49ee-9bf6-968128291986

|

Introduction to ggml

|

ngxson, ggerganov, slaren

|

introduction-to-ggml.md

|

[ggml](https://github.com/ggerganov/ggml) is a machine learning (ML) library written in C and C++ with a focus on Transformer inference. The project is open-source and is being actively developed by a growing community. ggml is similar to ML libraries such as PyTorch and TensorFlow, though it is still in its early stages of development and some of its fundamentals are still changing rapidly.

Over time, ggml has gained popularity alongside other projects like [llama.cpp](https://github.com/ggerganov/llama.cpp) and [whisper.cpp](https://github.com/ggerganov/whisper.cpp). Many other projects also use ggml under the hood to enable on-device LLM, including [ollama](https://github.com/ollama/ollama), [jan](https://github.com/janhq/jan), [LM Studio](https://github.com/lmstudio-ai), [GPT4All](https://github.com/nomic-ai/gpt4all).

The main reasons people choose to use ggml over other libraries are:

1. **Minimalism**: The core library is self-contained in less than 5 files. While you may want to include additional files for GPU support, it's optional.

2. **Easy compilation**: You don't need fancy build tools. Without GPU support, you only need GCC or Clang!

3. **Lightweight**: The compiled binary size is less than 1MB, which is tiny compared to PyTorch (which usually takes hundreds of MB).

4. **Good compatibility**: It supports many types of hardware, including x86_64, ARM, Apple Silicon, CUDA, etc.

5. **Support for quantized tensors**: Tensors can be quantized to save memory (similar to JPEG compression) and in certain cases to improve performance.

6. **Extremely memory efficient**: Overhead for storing tensors and performing computations is minimal.

However, ggml also comes with some disadvantages that you need to keep in mind when using it (this list may change in future versions of ggml):

- Not all tensor operations are supported on all backends. For example, some may work on CPU but won't work on CUDA.

- Development with ggml may not be straightforward and may require deep knowledge of low-level programming.

- The project is in active development, so breaking changes are expected.

In this article, we will focus on the fundamentals of ggml for developers looking to get started with the library. We do not cover higher-level tasks such as LLM inference with llama.cpp, which builds upon ggml. Instead, we'll explore the core concepts and basic usage of ggml to provide a solid foundation for further learning and development.

## Getting started

Great, so how do you start?

For simplicity, this guide will show you how to compile ggml on **Ubuntu**. In reality, you can compile ggml on virtually any platform (including Windows, macOS, and BSD).

```sh

# Start by installing build dependencies

# "gdb" is optional, but is recommended

sudo apt install build-essential cmake git gdb

# Then, clone the repository

git clone https://github.com/ggerganov/ggml.git

cd ggml

# Try compiling one of the examples

cmake -B build

cmake --build build --config Release --target simple-ctx

# Run the example

./build/bin/simple-ctx

```

Expected output:

```

mul mat (4 x 3) (transposed result):

[ 60.00 55.00 50.00 110.00

90.00 54.00 54.00 126.00

42.00 29.00 28.00 64.00 ]

```

If you see the expected result, that means we're good to go!

## Terminology and concepts

Before diving deep into ggml, we should understand some key concepts. If you're coming from high-level libraries like PyTorch or TensorFlow, these may seem challenging to grasp. However, keep in mind that ggml is a **low-level** library. Understanding these terms can give you much more control over performance:

- [ggml_context](https://github.com/ggerganov/ggml/blob/18703ad600cc68dbdb04d57434c876989a841d12/include/ggml.h#L355): A "container" that holds objects such as tensors, graphs, and optionally data

- [ggml_cgraph](https://github.com/ggerganov/ggml/blob/18703ad600cc68dbdb04d57434c876989a841d12/include/ggml.h#L652): Represents a computational graph. Think of it as the "order of computation" that will be transferred to the backend.

- [ggml_backend](https://github.com/ggerganov/ggml/blob/18703ad600cc68dbdb04d57434c876989a841d12/src/ggml-backend-impl.h#L80): Represents an interface for executing computation graphs. There are many types of backends: CPU (default), CUDA, Metal (Apple Silicon), Vulkan, RPC, etc.

- [ggml_backend_buffer_type](https://github.com/ggerganov/ggml/blob/18703ad600cc68dbdb04d57434c876989a841d12/src/ggml-backend-impl.h#L18): Represents a buffer type. Think of it as a "memory allocator" connected to each `ggml_backend`. For example, if you want to perform calculations on a GPU, you need to allocate memory on the GPU via `buffer_type` (usually abbreviated as `buft`).

- [ggml_backend_buffer](https://github.com/ggerganov/ggml/blob/18703ad600cc68dbdb04d57434c876989a841d12/src/ggml-backend-impl.h#L52): Represents a buffer allocated by `buffer_type`. Remember: a buffer can hold the data of multiple tensors.

- [ggml_gallocr](https://github.com/ggerganov/ggml/blob/18703ad600cc68dbdb04d57434c876989a841d12/include/ggml-alloc.h#L46): Represents a graph memory allocator, used to allocate efficiently the tensors used in a computation graph.

- [ggml_backend_sched](https://github.com/ggerganov/ggml/blob/18703ad600cc68dbdb04d57434c876989a841d12/include/ggml-backend.h#L169): A scheduler that enables concurrent use of multiple backends. It can distribute computations across different hardware (e.g., GPU and CPU) when dealing with large models or multiple GPUs. The scheduler can also automatically assign GPU-unsupported operations to the CPU, ensuring optimal resource utilization and compatibility.

## Simple example

In this example, we'll go through the steps to replicate the code we ran in [Getting Started](#getting-started). We need to create 2 matrices, multiply them and get the result. Using PyTorch, the code looks like this:

```py

import torch

# Create two matrices

matrix1 = torch.tensor([

[2, 8],

[5, 1],

[4, 2],

[8, 6],

])

matrix2 = torch.tensor([

[10, 5],

[9, 9],

[5, 4],

])

# Perform matrix multiplication

result = torch.matmul(matrix1, matrix2.T)

print(result.T)

```

With ggml, the following steps must be done to achieve the same result:

1. Allocate `ggml_context` to store tensor data

2. Create tensors and set data

3. Create a `ggml_cgraph` for mul_mat operation

4. Run the computation

5. Retrieve results (output tensors)

6. Free memory and exit

**NOTE**: In this example, we will allocate the tensor data **inside** the `ggml_context` for simplicity. In practice, memory should be allocated as a device buffer, as we'll see in the next section.

To get started, let's create a new directory `examples/demo`

```sh

cd ggml # make sure you're in the project root

# create C source and CMakeLists file

touch examples/demo/demo.c

touch examples/demo/CMakeLists.txt

```

The code for this example is based on [simple-ctx.cpp](https://github.com/ggerganov/ggml/blob/6c71d5a071d842118fb04c03c4b15116dff09621/examples/simple/simple-ctx.cpp)

Edit `examples/demo/demo.c` with the content below:

```c

#include "ggml.h"

#include "ggml-cpu.h"

#include <string.h>

#include <stdio.h>

int main(void) {

// initialize data of matrices to perform matrix multiplication

const int rows_A = 4, cols_A = 2;

float matrix_A[rows_A * cols_A] = {

2, 8,

5, 1,

4, 2,

8, 6

};

const int rows_B = 3, cols_B = 2;

float matrix_B[rows_B * cols_B] = {

10, 5,

9, 9,

5, 4

};

// 1. Allocate `ggml_context` to store tensor data

// Calculate the size needed to allocate

size_t ctx_size = 0;

ctx_size += rows_A * cols_A * ggml_type_size(GGML_TYPE_F32); // tensor a

ctx_size += rows_B * cols_B * ggml_type_size(GGML_TYPE_F32); // tensor b

ctx_size += rows_A * rows_B * ggml_type_size(GGML_TYPE_F32); // result

ctx_size += 3 * ggml_tensor_overhead(); // metadata for 3 tensors

ctx_size += ggml_graph_overhead(); // compute graph

ctx_size += 1024; // some overhead (exact calculation omitted for simplicity)

// Allocate `ggml_context` to store tensor data

struct ggml_init_params params = {

/*.mem_size =*/ ctx_size,

/*.mem_buffer =*/ NULL,

/*.no_alloc =*/ false,

};

struct ggml_context * ctx = ggml_init(params);

// 2. Create tensors and set data

struct ggml_tensor * tensor_a = ggml_new_tensor_2d(ctx, GGML_TYPE_F32, cols_A, rows_A);

struct ggml_tensor * tensor_b = ggml_new_tensor_2d(ctx, GGML_TYPE_F32, cols_B, rows_B);

memcpy(tensor_a->data, matrix_A, ggml_nbytes(tensor_a));

memcpy(tensor_b->data, matrix_B, ggml_nbytes(tensor_b));

// 3. Create a `ggml_cgraph` for mul_mat operation

struct ggml_cgraph * gf = ggml_new_graph(ctx);

// result = a*b^T

// Pay attention: ggml_mul_mat(A, B) ==> B will be transposed internally

// the result is transposed

struct ggml_tensor * result = ggml_mul_mat(ctx, tensor_a, tensor_b);

// Mark the "result" tensor to be computed

ggml_build_forward_expand(gf, result);

// 4. Run the computation

int n_threads = 1; // Optional: number of threads to perform some operations with multi-threading

ggml_graph_compute_with_ctx(ctx, gf, n_threads);

// 5. Retrieve results (output tensors)

float * result_data = (float *) result->data;

printf("mul mat (%d x %d) (transposed result):\n[", (int) result->ne[0], (int) result->ne[1]);

for (int j = 0; j < result->ne[1] /* rows */; j++) {

if (j > 0) {

printf("\n");

}

for (int i = 0; i < result->ne[0] /* cols */; i++) {

printf(" %.2f", result_data[j * result->ne[0] + i]);

}

}

printf(" ]\n");

// 6. Free memory and exit

ggml_free(ctx);

return 0;

}

```

Write these lines in the `examples/demo/CMakeLists.txt` file you created:

```

set(TEST_TARGET demo)

add_executable(${TEST_TARGET} demo)

target_link_libraries(${TEST_TARGET} PRIVATE ggml)

```

Edit `examples/CMakeLists.txt`, add this line at the end:

```

add_subdirectory(demo)

```

Compile and run it:

```sh

cmake -B build

cmake --build build --config Release --target demo

# Run it

./build/bin/demo

```

Expected result:

```

mul mat (4 x 3) (transposed result):

[ 60.00 55.00 50.00 110.00

90.00 54.00 54.00 126.00

42.00 29.00 28.00 64.00 ]

```

## Example with a backend

"Backend" in ggml refers to an interface that can handle tensor operations. Backend can be CPU, CUDA, Vulkan, etc.

The backend abstracts the execution of the computation graphs. Once defined, a graph can be computed with the available hardware by using the respective backend implementation. Note that ggml will automatically reserve memory for any intermediate tensors necessary for the computation and will optimize the memory usage based on the lifetime of these intermediate results.

When doing a computation or inference with backend, common steps that need to be done are:

1. Initialize `ggml_backend`

2. Allocate `ggml_context` to store tensor metadata (we **don't need** to allocate tensor data right away)

3. Create tensors metadata (only their shapes and data types)

4. Allocate a `ggml_backend_buffer` to store all tensors

5. Copy tensor data from main memory (RAM) to backend buffer

6. Create a `ggml_cgraph` for mul_mat operation

7. Create a `ggml_gallocr` for cgraph allocation

8. Optionally: schedule the cgraph using `ggml_backend_sched`

9. Run the computation

10. Retrieve results (output tensors)

11. Free memory and exit

The code for this example is based on [simple-backend.cpp](https://github.com/ggerganov/ggml/blob/6c71d5a071d842118fb04c03c4b15116dff09621/examples/simple/simple-backend.cpp)

```cpp

#include "ggml.h"

#include "ggml-alloc.h"

#include "ggml-backend.h"

#ifdef GGML_USE_CUDA

#include "ggml-cuda.h"

#endif

#include <stdlib.h>

#include <string.h>

#include <stdio.h>

int main(void) {

// initialize data of matrices to perform matrix multiplication

const int rows_A = 4, cols_A = 2;

float matrix_A[rows_A * cols_A] = {

2, 8,

5, 1,

4, 2,

8, 6

};

const int rows_B = 3, cols_B = 2;

float matrix_B[rows_B * cols_B] = {

10, 5,

9, 9,

5, 4

};

// 1. Initialize backend

ggml_backend_t backend = NULL;

#ifdef GGML_USE_CUDA

fprintf(stderr, "%s: using CUDA backend\n", __func__);

backend = ggml_backend_cuda_init(0); // init device 0

if (!backend) {

fprintf(stderr, "%s: ggml_backend_cuda_init() failed\n", __func__);

}

#endif

// if there aren't GPU Backends fallback to CPU backend

if (!backend) {

backend = ggml_backend_cpu_init();

}

// Calculate the size needed to allocate

size_t ctx_size = 0;

ctx_size += 2 * ggml_tensor_overhead(); // tensors

// no need to allocate anything else!

// 2. Allocate `ggml_context` to store tensor data

struct ggml_init_params params = {

/*.mem_size =*/ ctx_size,

/*.mem_buffer =*/ NULL,

/*.no_alloc =*/ true, // the tensors will be allocated later by ggml_backend_alloc_ctx_tensors()

};

struct ggml_context * ctx = ggml_init(params);

// Create tensors metadata (only there shapes and data type)

struct ggml_tensor * tensor_a = ggml_new_tensor_2d(ctx, GGML_TYPE_F32, cols_A, rows_A);

struct ggml_tensor * tensor_b = ggml_new_tensor_2d(ctx, GGML_TYPE_F32, cols_B, rows_B);

// 4. Allocate a `ggml_backend_buffer` to store all tensors

ggml_backend_buffer_t buffer = ggml_backend_alloc_ctx_tensors(ctx, backend);

// 5. Copy tensor data from main memory (RAM) to backend buffer

ggml_backend_tensor_set(tensor_a, matrix_A, 0, ggml_nbytes(tensor_a));

ggml_backend_tensor_set(tensor_b, matrix_B, 0, ggml_nbytes(tensor_b));

// 6. Create a `ggml_cgraph` for mul_mat operation

struct ggml_cgraph * gf = NULL;

struct ggml_context * ctx_cgraph = NULL;

{

// create a temporally context to build the graph

struct ggml_init_params params0 = {

/*.mem_size =*/ ggml_tensor_overhead()*GGML_DEFAULT_GRAPH_SIZE + ggml_graph_overhead(),

/*.mem_buffer =*/ NULL,

/*.no_alloc =*/ true, // the tensors will be allocated later by ggml_gallocr_alloc_graph()

};

ctx_cgraph = ggml_init(params0);

gf = ggml_new_graph(ctx_cgraph);

// result = a*b^T

// Pay attention: ggml_mul_mat(A, B) ==> B will be transposed internally

// the result is transposed

struct ggml_tensor * result0 = ggml_mul_mat(ctx_cgraph, tensor_a, tensor_b);

// Add "result" tensor and all of its dependencies to the cgraph

ggml_build_forward_expand(gf, result0);

}

// 7. Create a `ggml_gallocr` for cgraph computation

ggml_gallocr_t allocr = ggml_gallocr_new(ggml_backend_get_default_buffer_type(backend));

ggml_gallocr_alloc_graph(allocr, gf);

// (we skip step 8. Optionally: schedule the cgraph using `ggml_backend_sched`)

// 9. Run the computation

int n_threads = 1; // Optional: number of threads to perform some operations with multi-threading

if (ggml_backend_is_cpu(backend)) {

ggml_backend_cpu_set_n_threads(backend, n_threads);

}

ggml_backend_graph_compute(backend, gf);

// 10. Retrieve results (output tensors)

// in this example, output tensor is always the last tensor in the graph

struct ggml_tensor * result = gf->nodes[gf->n_nodes - 1];

float * result_data = malloc(ggml_nbytes(result));

// because the tensor data is stored in device buffer, we need to copy it back to RAM

ggml_backend_tensor_get(result, result_data, 0, ggml_nbytes(result));

printf("mul mat (%d x %d) (transposed result):\n[", (int) result->ne[0], (int) result->ne[1]);

for (int j = 0; j < result->ne[1] /* rows */; j++) {

if (j > 0) {

printf("\n");

}

for (int i = 0; i < result->ne[0] /* cols */; i++) {

printf(" %.2f", result_data[j * result->ne[0] + i]);

}

}

printf(" ]\n");

free(result_data);

// 11. Free memory and exit

ggml_free(ctx_cgraph);

ggml_gallocr_free(allocr);

ggml_free(ctx);

ggml_backend_buffer_free(buffer);

ggml_backend_free(backend);

return 0;

}

```

Compile and run it, you should get the same result as the last example:

```sh

cmake -B build

cmake --build build --config Release --target demo

# Run it

./build/bin/demo

```

Expected result:

```

mul mat (4 x 3) (transposed result):

[ 60.00 55.00 50.00 110.00

90.00 54.00 54.00 126.00

42.00 29.00 28.00 64.00 ]

```

## Printing the computational graph

The `ggml_cgraph` represents the computational graph, which defines the order of operations that will be executed by the backend. Printing the graph can be a helpful debugging tool, especially when working with more complex models and computations.

You can add `ggml_graph_print` to print the cgraph:

```cpp

...

// Mark the "result" tensor to be computed

ggml_build_forward_expand(gf, result0);

// Print the cgraph

ggml_graph_print(gf);

```

Run it:

```

=== GRAPH ===

n_nodes = 1

- 0: [ 4, 3, 1] MUL_MAT

n_leafs = 2

- 0: [ 2, 4] NONE leaf_0

- 1: [ 2, 3] NONE leaf_1

========================================

```

Additionally, you can draw the cgraph as graphviz dot format:

```cpp

ggml_graph_dump_dot(gf, NULL, "debug.dot");

```

You can use the `dot` command or this [online website](https://dreampuf.github.io/GraphvizOnline) to render `debug.dot` into a final image:

## Conclusion

This article has provided an introductory overview of ggml, covering the key concepts, a simple usage example, and an example using a backend. While we've covered the basics, there is much more to explore when it comes to ggml.

In upcoming articles, we'll dive deeper into other ggml-related subjects, such as the GGUF format, quantization, and how the different backends are organized and utilized. Additionally, you can visit the [ggml examples directory](https://github.com/ggerganov/ggml/tree/master/examples) to see more advanced use cases and sample code. Stay tuned for more ggml content in the future!

|

[

[

"llm",

"implementation",

"tutorial",

"optimization",

"efficient_computing"

]

] |

[

"2629e041-8c70-4026-8651-8bb91fd9749a"

] |

[

"submitted"

] |

[

"llm",

"implementation",

"optimization",

"efficient_computing"

] | null | null |

324207b3-82f5-4ce6-b459-1e518d0c41d6

|

completed

| 2025-01-16T03:09:40.503565 | 2025-01-16T03:23:21.501443 |

82b9ba76-bb6d-44a9-a8b4-7825a4bc874a

|

License to Call: Introducing Transformers Agents 2.0

|

m-ric, lysandre, pcuenq

|

agents.md

|

## TL;DR

We are releasing Transformers Agents 2.0!

⇒ 🎁 On top of our existing agent type, we introduce two new agents that **can iterate based on past observations to solve complex tasks**.

⇒ 💡 We aim for the code to be **clear and modular, and for common attributes like the final prompt and tools to be transparent**.

⇒ 🤝 We add **sharing options** to boost community agents.

⇒ 💪 **Extremely performant new agent framework**, allowing a Llama-3-70B-Instruct agent to outperform GPT-4 based agents in the GAIA Leaderboard!

🚀 Go try it out and climb ever higher on the GAIA leaderboard!

## Table of Contents

- [What is an agent?](#what-is-an-agent)

- [The Transformers Agents approach](#the-transformers-agents-approach)

- [Main elements](#main-elements)

- [Example use-cases](#example-use-cases)

- [Self-correcting Retrieval-Augmented-Generation](#self-correcting-retrieval-augmented-generation)

- [Using a simple multi-agent setup 🤝 for efficient web browsing](#using-a-simple-multi-agent-setup-for-efficient-web-browsing)

- [Testing our agents](#testing-our-agents)

- [Benchmarking LLM engines](#benchmarking-llm-engines)

- [Climbing up the GAIA Leaderboard with a multi-modal agent](#climbing-up-the-gaia-leaderboard-with-a-multi-modal-agent)

- [Conclusion](#conclusion)

## What is an agent?

Large Language Models (LLMs) can tackle a wide range of tasks, but they often struggle with specific tasks like logic, calculation, and search. When prompted in these domains in which they do not perform well, they frequently fail to generate a correct answer.

One approach to overcome this weakness is to create an **agent**, which is just a program driven by an LLM. The agent is empowered by **tools** to help it perform actions. When the agent needs a specific skill to solve a particular problem, it relies on an appropriate tool from its toolbox.

Thus when during problem-solving the agent needs a specific skill, it can just rely on an appropriate tool from its toolbox.

Experimentally, agent frameworks generally work very well, achieving state-of-the-art performance on several benchmarks. For instance, have a look at [the top submissions for HumanEval](https://paperswithcode.com/sota/code-generation-on-humaneval): they are agent systems.

## The Transformers Agents approach

Building agent workflows is complex, and we feel these systems need a lot of clarity and modularity. We launched Transformers Agents one year ago, and we’re doubling down on our core design goals.

Our framework strives for:

- **Clarity through simplicity:** we reduce abstractions to the minimum. Simple error logs and accessible attributes let you easily inspect what’s happening and give you more clarity.

- **Modularity:** We prefer to propose building blocks rather than full, complex feature sets. You are free to choose whatever building blocks are best for your project.

- For instance, since any agent system is just a vehicle powered by an LLM engine, we decided to conceptually separate the two, which lets you create any agent type from any underlying LLM.

On top of that, we have **sharing features** that let you build on the shoulders of giants!

### Main elements