modelId

stringlengths 4

112

| sha

stringlengths 40

40

| lastModified

stringlengths 24

24

| tags

list | pipeline_tag

stringclasses 29

values | private

bool 1

class | author

stringlengths 2

38

⌀ | config

null | id

stringlengths 4

112

| downloads

float64 0

36.8M

⌀ | likes

float64 0

712

⌀ | library_name

stringclasses 17

values | __index_level_0__

int64 0

38.5k

| readme

stringlengths 0

186k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

unicamp-dl/mMiniLM-L6-v2-mmarco-v2

|

8ed6820748716827e99e8f39505eaa121169c1a1

|

2022-01-05T22:45:15.000Z

|

[

"pytorch",

"xlm-roberta",

"text-classification",

"pt",

"dataset:msmarco",

"arxiv:2108.13897",

"transformers",

"msmarco",

"miniLM",

"tensorflow",

"pt-br",

"license:mit"

] |

text-classification

| false

|

unicamp-dl

| null |

unicamp-dl/mMiniLM-L6-v2-mmarco-v2

| 122

| null |

transformers

| 4,300

|

---

language: pt

license: mit

tags:

- msmarco

- miniLM

- pytorch

- tensorflow

- pt

- pt-br

datasets:

- msmarco

widget:

- text: "Texto de exemplo em português"

inference: false

---

# mMiniLM-L6-v2 Reranker finetuned on mMARCO

## Introduction

mMiniLM-L6-v2-mmarco-v2 is a multilingual miniLM-based model finetuned on a multilingual version of MS MARCO passage dataset. This dataset, named mMARCO, is formed by passages in 9 different languages, translated from English MS MARCO passages collection.

In the v2 version, the datasets were translated using Google Translate.

Further information about the dataset or the translation method can be found on our [**mMARCO: A Multilingual Version of MS MARCO Passage Ranking Dataset**](https://arxiv.org/abs/2108.13897) and [mMARCO](https://github.com/unicamp-dl/mMARCO) repository.

## Usage

```python

from transformers import AutoTokenizer, AutoModel

model_name = 'unicamp-dl/mMiniLM-L6-v2-mmarco-v2'

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModel.from_pretrained(model_name)

```

# Citation

If you use mMiniLM-L6-v2-mmarco-v2, please cite:

@misc{bonifacio2021mmarco,

title={mMARCO: A Multilingual Version of MS MARCO Passage Ranking Dataset},

author={Luiz Henrique Bonifacio and Vitor Jeronymo and Hugo Queiroz Abonizio and Israel Campiotti and Marzieh Fadaee and and Roberto Lotufo and Rodrigo Nogueira},

year={2021},

eprint={2108.13897},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

|

pszemraj/t5-v1_1-base-ft-jflAUG

|

bf9384f2c638632ef0e943ec57ddb7b13f7f6740

|

2022-07-10T00:41:01.000Z

|

[

"pytorch",

"t5",

"text2text-generation",

"dataset:jfleg",

"transformers",

"grammar",

"spelling",

"punctuation",

"error-correction",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible"

] |

text2text-generation

| false

|

pszemraj

| null |

pszemraj/t5-v1_1-base-ft-jflAUG

| 122

| 1

|

transformers

| 4,301

|

---

license: cc-by-nc-sa-4.0

tags:

- grammar

- spelling

- punctuation

- error-correction

datasets:

- jfleg

widget:

- text: "i can has cheezburger"

example_title: "cheezburger"

- text: "There car broke down so their hitching a ride to they're class."

example_title: "compound-1"

- text: "so em if we have an now so with fito ringina know how to estimate the tren given the ereafte mylite trend we can also em an estimate is nod s

i again tort watfettering an we have estimated the trend an

called wot to be called sthat of exty right now we can and look at

wy this should not hare a trend i becan we just remove the trend an and we can we now estimate

tesees ona effect of them exty"

example_title: "Transcribed Audio Example 2"

- text: "My coworker said he used a financial planner to help choose his stocks so he wouldn't loose money."

example_title: "incorrect word choice (context)"

- text: "good so hve on an tadley i'm not able to make it to the exla session on monday this week e which is why i am e recording pre recording

an this excelleision and so to day i want e to talk about two things and first of all em i wont em wene give a summary er about

ta ohow to remove trents in these nalitives from time series"

example_title: "lowercased audio transcription output"

- text: "Frustrated, the chairs took me forever to set up."

example_title: "dangling modifier"

- text: "I would like a peice of pie."

example_title: "miss-spelling"

- text: "Which part of Zurich was you going to go hiking in when we were there for the first time together? ! ?"

example_title: "chatbot on Zurich"

parameters:

max_length: 128

min_length: 4

num_beams: 4

repetition_penalty: 1.21

length_penalty: 1

early_stopping: True

---

> A more recent version can be found [here](https://huggingface.co/pszemraj/grammar-synthesis-large). Training smaller and/or comparably sized models is a WIP.

# t5-v1_1-base-ft-jflAUG

**GOAL:** a more robust and generalized grammar and spelling correction model that corrects everything in a single shot. It should have a minimal impact on the semantics of correct sentences (i.e. it does not change things that do not need to be changed).

- this model _(at least from preliminary testing)_ can handle large amounts of errors in the source text (i.e. from audio transcription) and still produce cohesive results.

- a fine-tuned version of [google/t5-v1_1-base](https://huggingface.co/google/t5-v1_1-base) on an expanded version of the [JFLEG dataset](https://aclanthology.org/E17-2037/).

## Model description

- this is a WIP. This fine-tuned model is v1.

- long term: a generalized grammar and spelling correction model that can handle lots of things at the same time.

- currently, it seems to be more of a "gibberish to mostly correct English" translator

## Intended uses & limitations

- try some tests with the [examples here](https://www.engvid.com/english-resource/50-common-grammar-mistakes-in-english/)

- thus far, some limitations are: sentence fragments are not autocorrected (at least, if entered individually), some more complicated pronoun/they/he/her etc. agreement is not always fixed.

## Training and evaluation data

- trained as text-to-text

- JFLEG dataset + additional selected and/or generated grammar corrections

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 6e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- gradient_accumulation_steps: 8

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.05

- num_epochs: 5

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

|

algoprog/mimics-multilabel-roberta-base-787

|

7faaa092ba7eea4b0389b572322a365560405c92

|

2022-05-07T17:49:07.000Z

|

[

"pytorch",

"roberta",

"transformers"

] | null | false

|

algoprog

| null |

algoprog/mimics-multilabel-roberta-base-787

| 122

| null |

transformers

| 4,302

|

Entry not found

|

IljaSamoilov/EstBERT-estonian-subtitles-token-classification

|

ceabdc298a4ff421f22d990d715f2409e9757391

|

2022-05-11T08:13:06.000Z

|

[

"pytorch",

"bert",

"token-classification",

"et",

"transformers",

"autotrain_compatible"

] |

token-classification

| false

|

IljaSamoilov

| null |

IljaSamoilov/EstBERT-estonian-subtitles-token-classification

| 122

| null |

transformers

| 4,303

|

---

language:

- et

widget:

- text: "Et, et, et miks mitte olla siis tasakaalus, ma noh, hüpoteetiliselt viskan selle palli üles,"

- text: "te olete ka noh, noh, päris korralikult ka Rahvusringhäälingu teatud mõttes sellisesse keerulisse olukorda pannud,"

---

Importing the model and tokenizer:

```

tokenizer = AutoTokenizer.from_pretrained("IljaSamoilov/EstBERT-estonian-subtitles-token-classification")

model = AutoModelForTokenClassification.from_pretrained("IljaSamoilov/EstBERT-estonian-subtitles-token-classification")

```

|

launch/POLITICS

|

41b3da20755e0eaf6f00a9dfc5136f4920721856

|

2022-07-26T00:06:33.000Z

|

[

"pytorch",

"roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

] |

fill-mask

| false

|

launch

| null |

launch/POLITICS

| 122

| 3

|

transformers

| 4,304

|

## POLITICS

POLITICS, a pretrained model on English news articles of politics, is produced via continued training on RoBERTa, based on a **P**retraining **O**bjective **L**everaging **I**nter-article **T**riplet-loss using **I**deological **C**ontent and **S**tory.

Details of our proposed training objectives (i.e., Ideology-driven Pretraining Objectives) and experimental results of POLITICS can be found in our NAACL-2022 Findings [paper](https://aclanthology.org/2022.findings-naacl.101.pdf) and GitHub [Repo](https://github.com/launchnlp/POLITICS).

Together with POLITICS, we also release our curated large-scale dataset (i.e., BIGNEWS) for pretraining, consisting of more than 3.6M political news articles. This asset can be requested [here](https://docs.google.com/forms/d/e/1FAIpQLSf4hft2AHbuak8jHcltVec_2HviaBBVKXPN4OC-CuW4OFORsw/viewform).

## Citation

Please cite our paper if you use the **POLITICS** model:

```

@inproceedings{liu-etal-2022-POLITICS,

title = "POLITICS: Pretraining with Same-story Article Comparison for Ideology Prediction and Stance Detection",

author = "Liu, Yujian and

Zhang, Xinliang Frederick and

Wegsman, David and

Beauchamp, Nicholas and

Wang, Lu"

booktitle = "Findings of the Association for Computational Linguistics: NAACL 2022",

year = "2022",

```

|

fourthbrain-demo/model_trained_by_me2

|

0fdf6cf2c394fd10fb3740b1a4fc937da49643d3

|

2022-06-20T20:47:13.000Z

|

[

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] |

text-classification

| false

|

fourthbrain-demo

| null |

fourthbrain-demo/model_trained_by_me2

| 122

| null |

transformers

| 4,305

|

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: model_trained_by_me2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# model_trained_by_me2

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4258

- Accuracy: 0.7983

- F1: 0.7888

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

HooshvareLab/bert-fa-base-uncased-sentiment-deepsentipers-multi

|

76f7785ba3d6e867239401bc6359678a92505e4c

|

2021-05-18T20:58:01.000Z

|

[

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"fa",

"transformers",

"license:apache-2.0"

] |

text-classification

| false

|

HooshvareLab

| null |

HooshvareLab/bert-fa-base-uncased-sentiment-deepsentipers-multi

| 121

| null |

transformers

| 4,306

|

---

language: fa

license: apache-2.0

---

# ParsBERT (v2.0)

A Transformer-based Model for Persian Language Understanding

We reconstructed the vocabulary and fine-tuned the ParsBERT v1.1 on the new Persian corpora in order to provide some functionalities for using ParsBERT in other scopes!

Please follow the [ParsBERT](https://github.com/hooshvare/parsbert) repo for the latest information about previous and current models.

## Persian Sentiment [Digikala, SnappFood, DeepSentiPers]

It aims to classify text, such as comments, based on their emotional bias. We tested three well-known datasets for this task: `Digikala` user comments, `SnappFood` user comments, and `DeepSentiPers` in two binary-form and multi-form types.

### DeepSentiPers

which is a balanced and augmented version of SentiPers, contains 12,138 user opinions about digital products labeled with five different classes; two positives (i.e., happy and delighted), two negatives (i.e., furious and angry) and one neutral class. Therefore, this dataset can be utilized for both multi-class and binary classification. In the case of binary classification, the neutral class and its corresponding sentences are removed from the dataset.

**Binary:**

1. Negative (Furious + Angry)

2. Positive (Happy + Delighted)

**Multi**

1. Furious

2. Angry

3. Neutral

4. Happy

5. Delighted

| Label | # |

|:---------:|:----:|

| Furious | 236 |

| Angry | 1357 |

| Neutral | 2874 |

| Happy | 2848 |

| Delighted | 2516 |

**Download**

You can download the dataset from:

- [SentiPers](https://github.com/phosseini/sentipers)

- [DeepSentiPers](https://github.com/JoyeBright/DeepSentiPers)

## Results

The following table summarizes the F1 score obtained by ParsBERT as compared to other models and architectures.

| Dataset | ParsBERT v2 | ParsBERT v1 | mBERT | DeepSentiPers |

|:------------------------:|:-----------:|:-----------:|:-----:|:-------------:|

| SentiPers (Multi Class) | 71.31* | 71.11 | - | 69.33 |

| SentiPers (Binary Class) | 92.42* | 92.13 | - | 91.98 |

## How to use :hugs:

| Task | Notebook |

|---------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| Sentiment Analysis | [](https://colab.research.google.com/github/hooshvare/parsbert/blob/master/notebooks/Taaghche_Sentiment_Analysis.ipynb) |

### BibTeX entry and citation info

Please cite in publications as the following:

```bibtex

@article{ParsBERT,

title={ParsBERT: Transformer-based Model for Persian Language Understanding},

author={Mehrdad Farahani, Mohammad Gharachorloo, Marzieh Farahani, Mohammad Manthouri},

journal={ArXiv},

year={2020},

volume={abs/2005.12515}

}

```

## Questions?

Post a Github issue on the [ParsBERT Issues](https://github.com/hooshvare/parsbert/issues) repo.

|

algolet/mt5-base-chinese-qg

|

90f1d65a0fb2129463110b272d275f88fe57d22c

|

2022-03-03T02:18:05.000Z

|

[

"pytorch",

"mt5",

"text2text-generation",

"zh",

"transformers",

"question generation",

"autotrain_compatible"

] |

text2text-generation

| false

|

algolet

| null |

algolet/mt5-base-chinese-qg

| 121

| 4

|

transformers

| 4,307

|

<h3 align="center">

<p>MT5 Base Model for Chinese Question Generation</p>

</h3>

<h3 align="center">

<p>基于mt5的中文问题生成任务</p>

</h3>

#### 可以通过安装question-generation包开始用

```

pip install question-generation

```

使用方法请参考github项目:https://github.com/algolet/question_generation

#### 在线使用

可以直接在线使用我们的模型:https://www.algolet.com/applications/qg

#### 通过transformers调用

``` python

import torch

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("algolet/mt5-base-chinese-qg")

model = AutoModelForSeq2SeqLM.from_pretrained("algolet/mt5-base-chinese-qg")

model.eval()

text = "在一个寒冷的冬天,赶集完回家的农夫在路边发现了一条冻僵了的蛇。他很可怜蛇,就把它放在怀里。当他身上的热气把蛇温暖以后,蛇很快苏醒了,露出了残忍的本性,给了农夫致命的伤害——咬了农夫一口。农夫临死之前说:“我竟然救了一条可怜的毒蛇,就应该受到这种报应啊!”"

text = "question generation: " + text

inputs = tokenizer(text,

return_tensors='pt',

truncation=True,

max_length=512)

with torch.no_grad():

outs = model.generate(input_ids=inputs["input_ids"],

attention_mask=inputs["attention_mask"],

max_length=128,

no_repeat_ngram_size=4,

num_beams=4)

question = tokenizer.decode(outs[0], skip_special_tokens=True)

questions = [q.strip() for q in question.split("<sep>") if len(q.strip()) > 0]

print(questions)

['在寒冷的冬天,农夫在哪里发现了一条可怜的蛇?', '农夫是如何看待蛇的?', '当农夫遇到蛇时,他做了什么?']

```

#### 指标

rouge-1: 0.4041

rouge-2: 0.2104

rouge-l: 0.3843

---

language:

- zh

tags:

- mt5

- question generation

metrics:

- rouge

---

|

avichr/hebEMO_anticipation

|

27b2152fa2a8875fe4f5cc438e21a413bbc36fa4

|

2022-04-15T09:35:11.000Z

|

[

"pytorch",

"bert",

"text-classification",

"transformers"

] |

text-classification

| false

|

avichr

| null |

avichr/hebEMO_anticipation

| 121

| null |

transformers

| 4,308

|

# HebEMO - Emotion Recognition Model for Modern Hebrew

<img align="right" src="https://github.com/avichaychriqui/HeBERT/blob/main/data/heBERT_logo.png?raw=true" width="250">

HebEMO is a tool that detects polarity and extracts emotions from modern Hebrew User-Generated Content (UGC), which was trained on a unique Covid-19 related dataset that we collected and annotated.

HebEMO yielded a high performance of weighted average F1-score = 0.96 for polarity classification.

Emotion detection reached an F1-score of 0.78-0.97, with the exception of *surprise*, which the model failed to capture (F1 = 0.41). These results are better than the best-reported performance, even when compared to the English language.

## Emotion UGC Data Description

Our UGC data includes comments posted on news articles collected from 3 major Israeli news sites, between January 2020 to August 2020. The total size of the data is ~150 MB, including over 7 million words and 350K sentences.

~2000 sentences were annotated by crowd members (3-10 annotators per sentence) for overall sentiment (polarity) and [eight emotions](https://en.wikipedia.org/wiki/Robert_Plutchik#Plutchik's_wheel_of_emotions): anger, disgust, anticipation , fear, joy, sadness, surprise and trust.

The percentage of sentences in which each emotion appeared is found in the table below.

| | anger | disgust | expectation | fear | happy | sadness | surprise | trust | sentiment |

|------:|------:|--------:|------------:|-----:|------:|--------:|---------:|------:|-----------|

| **ratio** | 0.78 | 0.83 | 0.58 | 0.45 | 0.12 | 0.59 | 0.17 | 0.11 | 0.25 |

## Performance

### Emotion Recognition

| emotion | f1-score | precision | recall |

|-------------|----------|-----------|----------|

| anger | 0.96 | 0.99 | 0.93 |

| disgust | 0.97 | 0.98 | 0.96 |

|anticipation | 0.82 | 0.80 | 0.87 |

| fear | 0.79 | 0.88 | 0.72 |

| joy | 0.90 | 0.97 | 0.84 |

| sadness | 0.90 | 0.86 | 0.94 |

| surprise | 0.40 | 0.44 | 0.37 |

| trust | 0.83 | 0.86 | 0.80 |

*The above metrics is for positive class (meaning, the emotion is reflected in the text).*

### Sentiment (Polarity) Analysis

| | precision | recall | f1-score |

|--------------|-----------|--------|----------|

| neutral | 0.83 | 0.56 | 0.67 |

| positive | 0.96 | 0.92 | 0.94 |

| negative | 0.97 | 0.99 | 0.98 |

| accuracy | | | 0.97 |

| macro avg | 0.92 | 0.82 | 0.86 |

| weighted avg | 0.96 | 0.97 | 0.96 |

*Sentiment (polarity) analysis model is also available on AWS! for more information visit [AWS' git](https://github.com/aws-samples/aws-lambda-docker-serverless-inference/tree/main/hebert-sentiment-analysis-inference-docker-lambda)*

## How to use

### Emotion Recognition Model

An online model can be found at [huggingface spaces](https://huggingface.co/spaces/avichr/HebEMO_demo) or as [colab notebook](https://colab.research.google.com/drive/1Jw3gOWjwVMcZslu-ttXoNeD17lms1-ff?usp=sharing)

```

# !pip install pyplutchik==0.0.7

# !pip install transformers==4.14.1

!git clone https://github.com/avichaychriqui/HeBERT.git

from HeBERT.src.HebEMO import *

HebEMO_model = HebEMO()

HebEMO_model.hebemo(input_path = 'data/text_example.txt')

# return analyzed pandas.DataFrame

hebEMO_df = HebEMO_model.hebemo(text='החיים יפים ומאושרים', plot=True)

```

<img src="https://github.com/avichaychriqui/HeBERT/blob/main/data/hebEMO1.png?raw=true" width="300" height="300" />

### For sentiment classification model (polarity ONLY):

from transformers import AutoTokenizer, AutoModel, pipeline

tokenizer = AutoTokenizer.from_pretrained("avichr/heBERT_sentiment_analysis") #same as 'avichr/heBERT' tokenizer

model = AutoModel.from_pretrained("avichr/heBERT_sentiment_analysis")

# how to use?

sentiment_analysis = pipeline(

"sentiment-analysis",

model="avichr/heBERT_sentiment_analysis",

tokenizer="avichr/heBERT_sentiment_analysis",

return_all_scores = True

)

sentiment_analysis('אני מתלבט מה לאכול לארוחת צהריים')

>>> [[{'label': 'neutral', 'score': 0.9978172183036804},

>>> {'label': 'positive', 'score': 0.0014792329166084528},

>>> {'label': 'negative', 'score': 0.0007035882445052266}]]

sentiment_analysis('קפה זה טעים')

>>> [[{'label': 'neutral', 'score': 0.00047328314394690096},

>>> {'label': 'possitive', 'score': 0.9994067549705505},

>>> {'label': 'negetive', 'score': 0.00011996887042187154}]]

sentiment_analysis('אני לא אוהב את העולם')

>>> [[{'label': 'neutral', 'score': 9.214012970915064e-05},

>>> {'label': 'possitive', 'score': 8.876807987689972e-05},

>>> {'label': 'negetive', 'score': 0.9998190999031067}]]

## Contact us

[Avichay Chriqui](mailto:[email protected]) <br>

[Inbal yahav](mailto:[email protected]) <br>

The Coller Semitic Languages AI Lab <br>

Thank you, תודה, شكرا <br>

## If you used this model please cite us as :

Chriqui, A., & Yahav, I. (2022). HeBERT & HebEMO: a Hebrew BERT Model and a Tool for Polarity Analysis and Emotion Recognition. INFORMS Journal on Data Science, forthcoming.

```

@article{chriqui2021hebert,

title={HeBERT \& HebEMO: a Hebrew BERT Model and a Tool for Polarity Analysis and Emotion Recognition},

author={Chriqui, Avihay and Yahav, Inbal},

journal={INFORMS Journal on Data Science},

year={2022}

}

```

|

facebook/s2t-small-mustc-en-de-st

|

ebde73eef775bb11dfa33ee2e5285e0fcfc6f126

|

2022-02-07T15:07:57.000Z

|

[

"pytorch",

"tf",

"speech_to_text",

"automatic-speech-recognition",

"en",

"de",

"dataset:mustc",

"arxiv:2010.05171",

"arxiv:1904.08779",

"transformers",

"audio",

"speech-translation",

"license:mit"

] |

automatic-speech-recognition

| false

|

facebook

| null |

facebook/s2t-small-mustc-en-de-st

| 121

| null |

transformers

| 4,309

|

---

language:

- en

- de

datasets:

- mustc

tags:

- audio

- speech-translation

- automatic-speech-recognition

license: mit

pipeline_tag: automatic-speech-recognition

widget:

- example_title: Librispeech sample 1

src: https://cdn-media.huggingface.co/speech_samples/sample1.flac

- example_title: Librispeech sample 2

src: https://cdn-media.huggingface.co/speech_samples/sample2.flac

---

# S2T-SMALL-MUSTC-EN-DE-ST

`s2t-small-mustc-en-de-st` is a Speech to Text Transformer (S2T) model trained for end-to-end Speech Translation (ST).

The S2T model was proposed in [this paper](https://arxiv.org/abs/2010.05171) and released in

[this repository](https://github.com/pytorch/fairseq/tree/master/examples/speech_to_text)

## Model description

S2T is a transformer-based seq2seq (encoder-decoder) model designed for end-to-end Automatic Speech Recognition (ASR) and Speech

Translation (ST). It uses a convolutional downsampler to reduce the length of speech inputs by 3/4th before they are

fed into the encoder. The model is trained with standard autoregressive cross-entropy loss and generates the

transcripts/translations autoregressively.

## Intended uses & limitations

This model can be used for end-to-end English speech to German text translation.

See the [model hub](https://huggingface.co/models?filter=speech_to_text) to look for other S2T checkpoints.

### How to use

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

*Note: The `Speech2TextProcessor` object uses [torchaudio](https://github.com/pytorch/audio) to extract the

filter bank features. Make sure to install the `torchaudio` package before running this example.*

You could either install those as extra speech dependancies with

`pip install transformers"[speech, sentencepiece]"` or install the packages seperatly

with `pip install torchaudio sentencepiece`.

```python

import torch

from transformers import Speech2TextProcessor, Speech2TextForConditionalGeneration

from datasets import load_dataset

import soundfile as sf

model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-small-mustc-en-de-st")

processor = Speech2TextProcessor.from_pretrained("facebook/s2t-small-mustc-en-de-st")

def map_to_array(batch):

speech, _ = sf.read(batch["file"])

batch["speech"] = speech

return batch

ds = load_dataset(

"patrickvonplaten/librispeech_asr_dummy",

"clean",

split="validation"

)

ds = ds.map(map_to_array)

inputs = processor(

ds["speech"][0],

sampling_rate=16_000,

return_tensors="pt"

)

generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"])

translation = processor.batch_decode(generated_ids, skip_special_tokens=True)

```

## Training data

The s2t-small-mustc-en-de-st is trained on English-German subset of [MuST-C](https://ict.fbk.eu/must-c/).

MuST-C is a multilingual speech translation corpus whose size and quality facilitates the training of end-to-end systems

for speech translation from English into several languages. For each target language, MuST-C comprises several hundred

hours of audio recordings from English TED Talks, which are automatically aligned at the sentence level with their manual

transcriptions and translations.

## Training procedure

### Preprocessing

The speech data is pre-processed by extracting Kaldi-compliant 80-channel log mel-filter bank features automatically from

WAV/FLAC audio files via PyKaldi or torchaudio. Further utterance-level CMVN (cepstral mean and variance normalization)

is applied to each example.

The texts are lowercased and tokenized using SentencePiece and a vocabulary size of 8,000.

### Training

The model is trained with standard autoregressive cross-entropy loss and using [SpecAugment](https://arxiv.org/abs/1904.08779).

The encoder receives speech features, and the decoder generates the transcripts autoregressively. To accelerate

model training and for better performance the encoder is pre-trained for English ASR.

## Evaluation results

MuST-C test results for en-de (BLEU score): 22.7

### BibTeX entry and citation info

```bibtex

@inproceedings{wang2020fairseqs2t,

title = {fairseq S2T: Fast Speech-to-Text Modeling with fairseq},

author = {Changhan Wang and Yun Tang and Xutai Ma and Anne Wu and Dmytro Okhonko and Juan Pino},

booktitle = {Proceedings of the 2020 Conference of the Asian Chapter of the Association for Computational Linguistics (AACL): System Demonstrations},

year = {2020},

}

```

|

facebook/wav2vec2-xls-r-2b-21-to-en

|

e045eaf53c335796df62992c1aee949a1c20d32c

|

2022-05-27T03:01:36.000Z

|

[

"pytorch",

"speech-encoder-decoder",

"automatic-speech-recognition",

"multilingual",

"fr",

"de",

"es",

"ca",

"it",

"ru",

"zh",

"pt",

"fa",

"et",

"mn",

"nl",

"tr",

"ar",

"sv",

"lv",

"sl",

"ta",

"ja",

"id",

"cy",

"en",

"dataset:common_voice",

"dataset:multilingual_librispeech",

"dataset:covost2",

"arxiv:2111.09296",

"transformers",

"speech",

"xls_r",

"xls_r_translation",

"license:apache-2.0"

] |

automatic-speech-recognition

| false

|

facebook

| null |

facebook/wav2vec2-xls-r-2b-21-to-en

| 121

| 1

|

transformers

| 4,310

|

---

language:

- multilingual

- fr

- de

- es

- ca

- it

- ru

- zh

- pt

- fa

- et

- mn

- nl

- tr

- ar

- sv

- lv

- sl

- ta

- ja

- id

- cy

- en

datasets:

- common_voice

- multilingual_librispeech

- covost2

tags:

- speech

- xls_r

- automatic-speech-recognition

- xls_r_translation

pipeline_tag: automatic-speech-recognition

license: apache-2.0

widget:

- example_title: Swedish

src: https://cdn-media.huggingface.co/speech_samples/cv_swedish_1.mp3

- example_title: Arabic

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ar_19058308.mp3

- example_title: Russian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ru_18849022.mp3

- example_title: German

src: https://cdn-media.huggingface.co/speech_samples/common_voice_de_17284683.mp3

- example_title: French

src: https://cdn-media.huggingface.co/speech_samples/common_voice_fr_17299386.mp3

- example_title: Indonesian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_id_19051309.mp3

- example_title: Italian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_it_17415776.mp3

- example_title: Japanese

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ja_19482488.mp3

- example_title: Mongolian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_mn_18565396.mp3

- example_title: Dutch

src: https://cdn-media.huggingface.co/speech_samples/common_voice_nl_17691471.mp3

- example_title: Russian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ru_18849022.mp3

- example_title: Turkish

src: https://cdn-media.huggingface.co/speech_samples/common_voice_tr_17341280.mp3

- example_title: Catalan

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ca_17367522.mp3

- example_title: English

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_18301577.mp3

- example_title: Dutch

src: https://cdn-media.huggingface.co/speech_samples/common_voice_nl_17691471.mp3

---

# Wav2Vec2-XLS-R-2b-21-EN

Facebook's Wav2Vec2 XLS-R fine-tuned for **Speech Translation.**

This is a [SpeechEncoderDecoderModel](https://huggingface.co/transformers/model_doc/speechencoderdecoder.html) model.

The encoder was warm-started from the [**`facebook/wav2vec2-xls-r-2b`**](https://huggingface.co/facebook/wav2vec2-xls-r-2b) checkpoint and

the decoder from the [**`facebook/mbart-large-50`**](https://huggingface.co/facebook/mbart-large-50) checkpoint.

Consequently, the encoder-decoder model was fine-tuned on 21 `{lang}` -> `en` translation pairs of the [Covost2 dataset](https://huggingface.co/datasets/covost2).

The model can translate from the following spoken languages `{lang}` -> `en` (English):

{`fr`, `de`, `es`, `ca`, `it`, `ru`, `zh-CN`, `pt`, `fa`, `et`, `mn`, `nl`, `tr`, `ar`, `sv-SE`, `lv`, `sl`, `ta`, `ja`, `id`, `cy`} -> `en`

For more information, please refer to Section *5.1.2* of the [official XLS-R paper](https://arxiv.org/abs/2111.09296).

## Usage

### Demo

The model can be tested directly on the speech recognition widget on this model card!

Simple record some audio in one of the possible spoken languages or pick an example audio file to see how well the checkpoint can translate the input.

### Example

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

You can use the model directly via the ASR pipeline

```python

from datasets import load_dataset

from transformers import pipeline

# replace following lines to load an audio file of your choice

librispeech_en = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

audio_file = librispeech_en[0]["file"]

asr = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-xls-r-2b-21-to-en", feature_extractor="facebook/wav2vec2-xls-r-2b-21-to-en")

translation = asr(audio_file)

```

or step-by-step as follows:

```python

import torch

from transformers import Speech2Text2Processor, SpeechEncoderDecoderModel

from datasets import load_dataset

model = SpeechEncoderDecoderModel.from_pretrained("facebook/wav2vec2-xls-r-2b-21-to-en")

processor = Speech2Text2Processor.from_pretrained("facebook/wav2vec2-xls-r-2b-21-to-en")

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

inputs = processor(ds[0]["audio"]["array"], sampling_rate=ds[0]["audio"]["array"]["sampling_rate"], return_tensors="pt")

generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"])

transcription = processor.batch_decode(generated_ids)

```

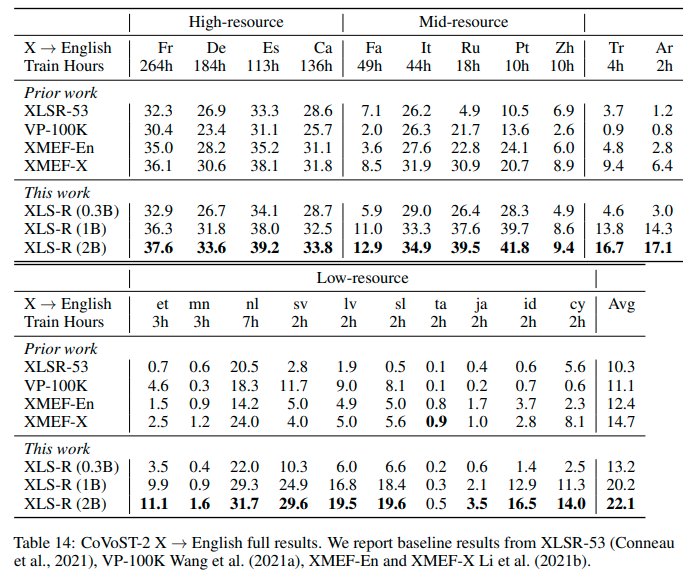

## Results `{lang}` -> `en`

See the row of **XLS-R (2B)** for the performance on [Covost2](https://huggingface.co/datasets/covost2) for this model.

## More XLS-R models for `{lang}` -> `en` Speech Translation

- [Wav2Vec2-XLS-R-300M-21-EN](https://huggingface.co/facebook/wav2vec2-xls-r-300m-21-to-en)

- [Wav2Vec2-XLS-R-1B-21-EN](https://huggingface.co/facebook/wav2vec2-xls-r-1b-21-to-en)

- [Wav2Vec2-XLS-R-2B-21-EN](https://huggingface.co/facebook/wav2vec2-xls-r-2b-21-to-en)

- [Wav2Vec2-XLS-R-2B-22-16](https://huggingface.co/facebook/wav2vec2-xls-r-2b-22-to-16)

|

huggingtweets/commanderwuff

|

f9c9c97d7f1ba3f1f8932541096b5d6302dd307d

|

2021-05-21T23:15:31.000Z

|

[

"pytorch",

"jax",

"gpt2",

"text-generation",

"en",

"transformers",

"huggingtweets"

] |

text-generation

| false

|

huggingtweets

| null |

huggingtweets/commanderwuff

| 121

| null |

transformers

| 4,311

|

---

language: en

thumbnail: https://www.huggingtweets.com/commanderwuff/1614170164099/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div>

<div style="width: 132px; height:132px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1363930888585703425/kbXPjWRV_400x400.jpg')">

</div>

<div style="margin-top: 8px; font-size: 19px; font-weight: 800">CommanderWuffels 🤖 AI Bot </div>

<div style="font-size: 15px">@commanderwuff bot</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://app.wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-model-to-generate-tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on [@commanderwuff's tweets](https://twitter.com/commanderwuff).

| Data | Quantity |

| --- | --- |

| Tweets downloaded | 2214 |

| Retweets | 1573 |

| Short tweets | 144 |

| Tweets kept | 497 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/2a74c2hq/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @commanderwuff's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2f3nzjf3) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2f3nzjf3/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/commanderwuff')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

ktangri/gpt-neo-demo

|

dbb3415f20cb5679e122ebe4bc6126b82f44cfa2

|

2021-07-21T15:20:09.000Z

|

[

"pytorch",

"gpt_neo",

"text-generation",

"en",

"dataset:the Pile",

"transformers",

"text generation",

"the Pile",

"causal-lm",

"license:apache-2.0"

] |

text-generation

| false

|

ktangri

| null |

ktangri/gpt-neo-demo

| 121

| 1

|

transformers

| 4,312

|

---

language:

- en

tags:

- text generation

- pytorch

- the Pile

- causal-lm

license: apache-2.0

datasets:

- the Pile

---

# GPT-Neo 2.7B (By EleutherAI)

## Model Description

GPT-Neo 2.7B is a transformer model designed using EleutherAI's replication of the GPT-3 architecture. GPT-Neo refers to the class of models, while 2.7B represents the number of parameters of this particular pre-trained model.

## Training data

GPT-Neo 2.7B was trained on the Pile, a large scale curated dataset created by EleutherAI for the purpose of training this model.

## Training procedure

This model was trained for 420 billion tokens over 400,000 steps. It was trained as a masked autoregressive language model, using cross-entropy loss.

## Intended Use and Limitations

This way, the model learns an inner representation of the English language that can then be used to extract features useful for downstream tasks. The model is best at what it was pretrained for however, which is generating texts from a prompt.

### How to use

You can use this model directly with a pipeline for text generation. This example generates a different sequence each time it's run:

```py

>>> from transformers import pipeline

>>> generator = pipeline('text-generation', model='EleutherAI/gpt-neo-2.7B')

>>> generator("EleutherAI has", do_sample=True, min_length=50)

[{'generated_text': 'EleutherAI has made a commitment to create new software packages for each of its major clients and has'}]

```

### Limitations and Biases

GPT-Neo was trained as an autoregressive language model. This means that its core functionality is taking a string of text and predicting the next token. While language models are widely used for tasks other than this, there are a lot of unknowns with this work.

GPT-Neo was trained on the Pile, a dataset known to contain profanity, lewd, and otherwise abrasive language. Depending on your usecase GPT-Neo may produce socially unacceptable text. See Sections 5 and 6 of the Pile paper for a more detailed analysis of the biases in the Pile.

As with all language models, it is hard to predict in advance how GPT-Neo will respond to particular prompts and offensive content may occur without warning. We recommend having a human curate or filter the outputs before releasing them, both to censor undesirable content and to improve the quality of the results.

## Eval results

All evaluations were done using our [evaluation harness](https://github.com/EleutherAI/lm-evaluation-harness). Some results for GPT-2 and GPT-3 are inconsistent with the values reported in the respective papers. We are currently looking into why, and would greatly appreciate feedback and further testing of our eval harness. If you would like to contribute evaluations you have done, please reach out on our [Discord](https://discord.gg/vtRgjbM).

### Linguistic Reasoning

| Model and Size | Pile BPB | Pile PPL | Wikitext PPL | Lambada PPL | Lambada Acc | Winogrande | Hellaswag |

| ---------------- | ---------- | ---------- | ------------- | ----------- | ----------- | ---------- | ----------- |

| GPT-Neo 1.3B | 0.7527 | 6.159 | 13.10 | 7.498 | 57.23% | 55.01% | 38.66% |

| GPT-2 1.5B | 1.0468 | ----- | 17.48 | 10.634 | 51.21% | 59.40% | 40.03% |

| **GPT-Neo 2.7B** | **0.7165** | **5.646** | **11.39** | **5.626** | **62.22%** | **56.50%** | **42.73%** |

| GPT-3 Ada | 0.9631 | ----- | ----- | 9.954 | 51.60% | 52.90% | 35.93% |

### Physical and Scientific Reasoning

| Model and Size | MathQA | PubMedQA | Piqa |

| ---------------- | ---------- | ---------- | ----------- |

| GPT-Neo 1.3B | 24.05% | 54.40% | 71.11% |

| GPT-2 1.5B | 23.64% | 58.33% | 70.78% |

| **GPT-Neo 2.7B** | **24.72%** | **57.54%** | **72.14%** |

| GPT-3 Ada | 24.29% | 52.80% | 68.88% |

### Down-Stream Applications

TBD

### BibTeX entry and citation info

To cite this model, use

```bibtex

@article{gao2020pile,

title={The Pile: An 800GB Dataset of Diverse Text for Language Modeling},

author={Gao, Leo and Biderman, Stella and Black, Sid and Golding, Laurence and Hoppe, Travis and Foster, Charles and Phang, Jason and He, Horace and Thite, Anish and Nabeshima, Noa and others},

journal={arXiv preprint arXiv:2101.00027},

year={2020}

}

```

To cite the codebase that this model was trained with, use

```bibtex

@software{gpt-neo,

author = {Black, Sid and Gao, Leo and Wang, Phil and Leahy, Connor and Biderman, Stella},

title = {{GPT-Neo}: Large Scale Autoregressive Language Modeling with Mesh-Tensorflow},

url = {http://github.com/eleutherai/gpt-neo},

version = {1.0},

year = {2021},

}

```

|

macedonizer/hr-gpt2

|

4913a36f6dfb05ef6ff5eb89638cadc3843d19f0

|

2021-09-22T08:58:40.000Z

|

[

"pytorch",

"gpt2",

"text-generation",

"hr",

"dataset:wiki-hr",

"transformers",

"license:apache-2.0"

] |

text-generation

| false

|

macedonizer

| null |

macedonizer/hr-gpt2

| 121

| 1

|

transformers

| 4,313

|

---

language:

- hr

thumbnail: https://huggingface.co/macedonizer/hr-gpt2/lets-talk-about-nlp-hr.jpg

license: apache-2.0

datasets:

- wiki-hr

---

# hr-gpt2

Test the whole generation capabilities here: https://transformer.huggingface.co/doc/gpt2-large

Pretrained model on English language using a causal language modeling (CLM) objective. It was introduced in

[this paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf)

and first released at [this page](https://openai.com/blog/better-language-models/).

## Model description

hr-gpt2 is a transformers model pretrained on a very large corpus of Croation data in a self-supervised fashion. This

means it was pretrained on the raw texts only, with no humans labeling them in any way (which is why it can use lots

of publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely,

it was trained to guess the next word in sentences.

More precisely, inputs are sequences of the continuous text of a certain length and the targets are the same sequence,

shifted one token (word or piece of the word) to the right. The model uses internally a mask-mechanism to make sure the

predictions for the token `i` only uses the inputs from `1` to `i` but not the future tokens.

This way, the model learns an inner representation of the Macedonian language that can then be used to extract features

useful for downstream tasks. The model is best at what it was pretrained for, however, which is generating texts from a

prompt.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

import random \\nfrom transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained('macedonizer/hr-gpt2') \

model = AutoModelWithLMHead.from_pretrained('macedonizer/sr-gpt2')

input_text = 'Ja sam bio '

if len(input_text) == 0: \

encoded_input = tokenizer(input_text, return_tensors="pt") \

output = model.generate( \

bos_token_id=random.randint(1, 50000), \

do_sample=True, \

top_k=50, \

max_length=1024, \

top_p=0.95, \

num_return_sequences=1, \

) \

else: \

encoded_input = tokenizer(input_text, return_tensors="pt") \

output = model.generate( \

**encoded_input, \

bos_token_id=random.randint(1, 50000), \

do_sample=True, \

top_k=50, \

max_length=1024, \

top_p=0.95, \

num_return_sequences=1, \

)

decoded_output = [] \

for sample in output: \

decoded_output.append(tokenizer.decode(sample, skip_special_tokens=True))

print(decoded_output)

|

tau/spider

|

d06abf763de54af0e2a908610cd1fa1917ca3bba

|

2022-05-08T07:51:30.000Z

|

[

"pytorch",

"dpr",

"arxiv:2112.07708",

"transformers"

] | null | false

|

tau

| null |

tau/spider

| 121

| 5

|

transformers

| 4,314

|

# Spider

This is the unsupervised pretrained model discussed in our paper [Learning to Retrieve Passages without Supervision](https://arxiv.org/abs/2112.07708).

## Usage

We used weight sharing for the query encoder and passage encoder, so the same model should be applied for both.

**Note**! We format the passages similar to DPR, i.e. the title and the text are separated by a `[SEP]` token, but token

type ids are all 0-s.

An example usage:

```python

from transformers import AutoTokenizer, DPRContextEncoder

tokenizer = AutoTokenizer.from_pretrained("tau/spider")

model = DPRContextEncoder.from_pretrained("tau/spider")

input_dict = tokenizer("title", "text", return_tensors="pt")

del input_dict["token_type_ids"]

outputs = model(**input_dict)

```

|

facebook/m2m100-12B-last-ckpt

|

d3b4890e87cd5ee681d200e66d2aa5faf3a00feb

|

2022-05-26T22:26:23.000Z

|

[

"pytorch",

"m2m_100",

"text2text-generation",

"multilingual",

"af",

"am",

"ar",

"ast",

"az",

"ba",

"be",

"bg",

"bn",

"br",

"bs",

"ca",

"ceb",

"cs",

"cy",

"da",

"de",

"el",

"en",

"es",

"et",

"fa",

"ff",

"fi",

"fr",

"fy",

"ga",

"gd",

"gl",

"gu",

"ha",

"he",

"hi",

"hr",

"ht",

"hu",

"hy",

"id",

"ig",

"ilo",

"is",

"it",

"ja",

"jv",

"ka",

"kk",

"km",

"kn",

"ko",

"lb",

"lg",

"ln",

"lo",

"lt",

"lv",

"mg",

"mk",

"ml",

"mn",

"mr",

"ms",

"my",

"ne",

"nl",

"no",

"ns",

"oc",

"or",

"pa",

"pl",

"ps",

"pt",

"ro",

"ru",

"sd",

"si",

"sk",

"sl",

"so",

"sq",

"sr",

"ss",

"su",

"sv",

"sw",

"ta",

"th",

"tl",

"tn",

"tr",

"uk",

"ur",

"uz",

"vi",

"wo",

"xh",

"yi",

"yo",

"zh",

"zu",

"arxiv:2010.11125",

"transformers",

"m2m100-12B",

"license:mit",

"autotrain_compatible"

] |

text2text-generation

| false

|

facebook

| null |

facebook/m2m100-12B-last-ckpt

| 121

| null |

transformers

| 4,315

|

---

language:

- multilingual

- af

- am

- ar

- ast

- az

- ba

- be

- bg

- bn

- br

- bs

- ca

- ceb

- cs

- cy

- da

- de

- el

- en

- es

- et

- fa

- ff

- fi

- fr

- fy

- ga

- gd

- gl

- gu

- ha

- he

- hi

- hr

- ht

- hu

- hy

- id

- ig

- ilo

- is

- it

- ja

- jv

- ka

- kk

- km

- kn

- ko

- lb

- lg

- ln

- lo

- lt

- lv

- mg

- mk

- ml

- mn

- mr

- ms

- my

- ne

- nl

- no

- ns

- oc

- or

- pa

- pl

- ps

- pt

- ro

- ru

- sd

- si

- sk

- sl

- so

- sq

- sr

- ss

- su

- sv

- sw

- ta

- th

- tl

- tn

- tr

- uk

- ur

- uz

- vi

- wo

- xh

- yi

- yo

- zh

- zu

license: mit

tags:

- m2m100-12B

---

# M2M100 12B

M2M100 is a multilingual encoder-decoder (seq-to-seq) model trained for Many-to-Many multilingual translation.

It was introduced in this [paper](https://arxiv.org/abs/2010.11125) and first released in [this](https://github.com/pytorch/fairseq/tree/master/examples/m2m_100) repository.

The model that can directly translate between the 9,900 directions of 100 languages.

To translate into a target language, the target language id is forced as the first generated token.

To force the target language id as the first generated token, pass the `forced_bos_token_id` parameter to the `generate` method.

*Note: `M2M100Tokenizer` depends on `sentencepiece`, so make sure to install it before running the example.*

To install `sentencepiece` run `pip install sentencepiece`

```python

from transformers import M2M100ForConditionalGeneration, M2M100Tokenizer

hi_text = "जीवन एक चॉकलेट बॉक्स की तरह है।"

chinese_text = "生活就像一盒巧克力。"

model = M2M100ForConditionalGeneration.from_pretrained("facebook/m2m100-12B-last-ckpt")

tokenizer = M2M100Tokenizer.from_pretrained("facebook/m2m100-12B-last-ckpt")

# translate Hindi to French

tokenizer.src_lang = "hi"

encoded_hi = tokenizer(hi_text, return_tensors="pt")

generated_tokens = model.generate(**encoded_hi, forced_bos_token_id=tokenizer.get_lang_id("fr"))

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "La vie est comme une boîte de chocolat."

# translate Chinese to English

tokenizer.src_lang = "zh"

encoded_zh = tokenizer(chinese_text, return_tensors="pt")

generated_tokens = model.generate(**encoded_zh, forced_bos_token_id=tokenizer.get_lang_id("en"))

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "Life is like a box of chocolate."

```

See the [model hub](https://huggingface.co/models?filter=m2m_100) to look for more fine-tuned versions.

## Languages covered

Afrikaans (af), Amharic (am), Arabic (ar), Asturian (ast), Azerbaijani (az), Bashkir (ba), Belarusian (be), Bulgarian (bg), Bengali (bn), Breton (br), Bosnian (bs), Catalan; Valencian (ca), Cebuano (ceb), Czech (cs), Welsh (cy), Danish (da), German (de), Greeek (el), English (en), Spanish (es), Estonian (et), Persian (fa), Fulah (ff), Finnish (fi), French (fr), Western Frisian (fy), Irish (ga), Gaelic; Scottish Gaelic (gd), Galician (gl), Gujarati (gu), Hausa (ha), Hebrew (he), Hindi (hi), Croatian (hr), Haitian; Haitian Creole (ht), Hungarian (hu), Armenian (hy), Indonesian (id), Igbo (ig), Iloko (ilo), Icelandic (is), Italian (it), Japanese (ja), Javanese (jv), Georgian (ka), Kazakh (kk), Central Khmer (km), Kannada (kn), Korean (ko), Luxembourgish; Letzeburgesch (lb), Ganda (lg), Lingala (ln), Lao (lo), Lithuanian (lt), Latvian (lv), Malagasy (mg), Macedonian (mk), Malayalam (ml), Mongolian (mn), Marathi (mr), Malay (ms), Burmese (my), Nepali (ne), Dutch; Flemish (nl), Norwegian (no), Northern Sotho (ns), Occitan (post 1500) (oc), Oriya (or), Panjabi; Punjabi (pa), Polish (pl), Pushto; Pashto (ps), Portuguese (pt), Romanian; Moldavian; Moldovan (ro), Russian (ru), Sindhi (sd), Sinhala; Sinhalese (si), Slovak (sk), Slovenian (sl), Somali (so), Albanian (sq), Serbian (sr), Swati (ss), Sundanese (su), Swedish (sv), Swahili (sw), Tamil (ta), Thai (th), Tagalog (tl), Tswana (tn), Turkish (tr), Ukrainian (uk), Urdu (ur), Uzbek (uz), Vietnamese (vi), Wolof (wo), Xhosa (xh), Yiddish (yi), Yoruba (yo), Chinese (zh), Zulu (zu)

## BibTeX entry and citation info

```

@misc{fan2020englishcentric,

title={Beyond English-Centric Multilingual Machine Translation},

author={Angela Fan and Shruti Bhosale and Holger Schwenk and Zhiyi Ma and Ahmed El-Kishky and Siddharth Goyal and Mandeep Baines and Onur Celebi and Guillaume Wenzek and Vishrav Chaudhary and Naman Goyal and Tom Birch and Vitaliy Liptchinsky and Sergey Edunov and Edouard Grave and Michael Auli and Armand Joulin},

year={2020},

eprint={2010.11125},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

VietAI/vit5-large

|

8a6430bc250119f4e587b541fb9511fabcb1145d

|

2022-07-25T14:15:38.000Z

|

[

"pytorch",

"tf",

"jax",

"t5",

"text2text-generation",

"vi",

"dataset:cc100",

"transformers",

"summarization",

"translation",

"question-answering",

"license:mit",

"autotrain_compatible"

] |

question-answering

| false

|

VietAI

| null |

VietAI/vit5-large

| 121

| null |

transformers

| 4,316

|

---

language: vi

datasets:

- cc100

tags:

- summarization

- translation

- question-answering

license: mit

---

# ViT5-large

State-of-the-art pretrained Transformer-based encoder-decoder model for Vietnamese.

## How to use

For more details, do check out [our Github repo](https://github.com/vietai/ViT5).

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("VietAI/vit5-large")

model = AutoModelForSeq2SeqLM.from_pretrained("VietAI/vit5-large")

sentence = "VietAI là tổ chức phi lợi nhuận với sứ mệnh ươm mầm tài năng về trí tuệ nhân tạo và xây dựng một cộng đồng các chuyên gia trong lĩnh vực trí tuệ nhân tạo đẳng cấp quốc tế tại Việt Nam."

text = "vi: " + sentence

encoding = tokenizer.encode_plus(text, pad_to_max_length=True, return_tensors="pt")

input_ids, attention_masks = encoding["input_ids"].to("cuda"), encoding["attention_mask"].to("cuda")

outputs = model.generate(

input_ids=input_ids, attention_mask=attention_masks,

max_length=256,

early_stopping=True

)

for output in outputs:

line = tokenizer.decode(output, skip_special_tokens=True, clean_up_tokenization_spaces=True)

print(line)

```

## Citation

```

@inproceedings{phan-etal-2022-vit5,

title = "{V}i{T}5: Pretrained Text-to-Text Transformer for {V}ietnamese Language Generation",

author = "Phan, Long and Tran, Hieu and Nguyen, Hieu and Trinh, Trieu H.",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Student Research Workshop",

year = "2022",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.naacl-srw.18",

pages = "136--142",

}

```

|

benjamin/gpt2-large-wechsel-ukrainian

|

43593df16479731a30227a4cfb62be8ca731eb53

|

2022-04-29T16:56:10.000Z

|

[

"pytorch",

"gpt2",

"text-generation",

"uk",

"arxiv:2112.06598",

"transformers",

"license:mit"

] |

text-generation

| false

|

benjamin

| null |

benjamin/gpt2-large-wechsel-ukrainian

| 121

| 3

|

transformers

| 4,317

|

---

license: mit

language: uk

---

# gpt2-large-wechsel-ukrainian

[`gpt2-large`](https://huggingface.co/gpt2-large) transferred to Ukrainian using the method from the NAACL2022 paper [WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models](https://arxiv.org/abs/2112.065989).

|

jonas/sdg_classifier_osdg

|

c86a6802a2e2956365669a3ab41091d2634da058

|

2022-05-24T15:46:51.000Z

|

[

"pytorch",

"bert",

"text-classification",

"en",

"dataset:jonas/osdg_sdg_data_processed",

"transformers",

"co2_eq_emissions"

] |

text-classification

| false

|

jonas

| null |

jonas/sdg_classifier_osdg

| 121

| 2

|

transformers

| 4,318

|

---

language: en

widget:

- text: "Ending all forms of discrimination against women and girls is not only a basic human right, but it also crucial to accelerating sustainable development. It has been proven time and again, that empowering women and girls has a multiplier effect, and helps drive up economic growth and development across the board.

Since 2000, UNDP, together with our UN partners and the rest of the global community, has made gender equality central to our work. We have seen remarkable progress since then. More girls are now in school compared to 15 years ago, and most regions have reached gender parity in primary education. Women now make up to 41 percent of paid workers outside of agriculture, compared to 35 percent in 1990."

datasets:

- jonas/osdg_sdg_data_processed

co2_eq_emissions: 0.0653263174784986

---

# About

Machine Learning model for classifying text according to the first 15 of the 17 Sustainable Development Goals from the United Nations. Note that model is trained on quite short paragraphs (around 100 words) and performs best with similar input sizes.

Data comes from the amazing https://osdg.ai/ community!

# Model Training Specifics

- Problem type: Multi-class Classification

- Model ID: 900229515

- CO2 Emissions (in grams): 0.0653263174784986

## Validation Metrics

- Loss: 0.3644874095916748

- Accuracy: 0.8972544579677328

- Macro F1: 0.8500873710954522

- Micro F1: 0.8972544579677328

- Weighted F1: 0.8937529692986061

- Macro Precision: 0.8694369727467804

- Micro Precision: 0.8972544579677328

- Weighted Precision: 0.8946984684977016

- Macro Recall: 0.8405065997404059

- Micro Recall: 0.8972544579677328

- Weighted Recall: 0.8972544579677328

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/jonas/autotrain-osdg-sdg-classifier-900229515

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("jonas/sdg_classifier_osdg", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("jonas/sdg_classifier_osdg", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```

|

PrimeQA/tydiqa-primary-task-xlm-roberta-large

|

6933c572b917c8987b756c4202d6af1e4851ee1a

|

2022-07-05T16:47:31.000Z

|

[

"pytorch",

"xlm-roberta",

"multilingual",

"arxiv:2003.05002",

"arxiv:1911.02116",

"transformers",

"MRC",

"TyDiQA",

"xlm-roberta-large"

] | null | false

|

PrimeQA

| null |

PrimeQA/tydiqa-primary-task-xlm-roberta-large

| 121

| null |

transformers

| 4,319

|

---

tags:

- MRC

- TyDiQA

- xlm-roberta-large

language:

- multilingual

---

# Model description

An XLM-RoBERTa reading comprehension model for [TyDiQA Primary Tasks](https://arxiv.org/abs/2003.05002).

The model is initialized with [xlm-roberta-large](https://huggingface.co/xlm-roberta-large/) and fine-tuned on the [TyDiQA train data](https://huggingface.co/datasets/tydiqa).

## Intended uses & limitations

You can use the raw model for the reading comprehension task. Biases associated with the pre-existing language model, xlm-roberta-large, that we used may be present in our fine-tuned model, tydiqa-primary-task-xlm-roberta-large.

## Usage

You can use this model directly with the [PrimeQA](https://github.com/primeqa/primeqa) pipeline for reading comprehension [tydiqa.ipynb](https://github.com/primeqa/primeqa/blob/main/notebooks/mrc/tydiqa.ipynb).

### BibTeX entry and citation info

```bibtex

@article{clark-etal-2020-tydi,

title = "{T}y{D}i {QA}: A Benchmark for Information-Seeking Question Answering in Typologically Diverse Languages",

author = "Clark, Jonathan H. and

Choi, Eunsol and

Collins, Michael and

Garrette, Dan and

Kwiatkowski, Tom and

Nikolaev, Vitaly and

Palomaki, Jennimaria",

journal = "Transactions of the Association for Computational Linguistics",

volume = "8",

year = "2020",

address = "Cambridge, MA",

publisher = "MIT Press",

url = "https://aclanthology.org/2020.tacl-1.30",

doi = "10.1162/tacl_a_00317",

pages = "454--470",

}

```

```bibtex

@article{DBLP:journals/corr/abs-1911-02116,

author = {Alexis Conneau and

Kartikay Khandelwal and

Naman Goyal and

Vishrav Chaudhary and

Guillaume Wenzek and

Francisco Guzm{\'{a}}n and

Edouard Grave and

Myle Ott and

Luke Zettlemoyer and

Veselin Stoyanov},

title = {Unsupervised Cross-lingual Representation Learning at Scale},

journal = {CoRR},

volume = {abs/1911.02116},

year = {2019},

url = {http://arxiv.org/abs/1911.02116},

eprinttype = {arXiv},

eprint = {1911.02116},

timestamp = {Mon, 11 Nov 2019 18:38:09 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-1911-02116.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

nickmuchi/yolos-small-rego-plates-detection

|

232139b5fd2fcaeb45ccd59de5c8eda1fe0788fe

|

2022-07-10T13:09:55.000Z

|

[

"pytorch",

"yolos",

"object-detection",

"dataset:coco",

"dataset:license-plate-detection",

"arxiv:2106.00666",

"transformers",

"license-plate-detection",

"vehicle-detection",

"license:apache-2.0",

"model-index"

] |

object-detection

| false

|

nickmuchi

| null |

nickmuchi/yolos-small-rego-plates-detection

| 121

| null |

transformers

| 4,320

|

---

license: apache-2.0

tags:

- object-detection

- license-plate-detection

- vehicle-detection

datasets:

- coco

- license-plate-detection

widget:

- src: https://drive.google.com/uc?id=1j9VZQ4NDS4gsubFf3m2qQoTMWLk552bQ

example_title: "Skoda 1"

- src: https://drive.google.com/uc?id=1p9wJIqRz3W50e2f_A0D8ftla8hoXz4T5

example_title: "Skoda 2"

metrics:

- average precision

- recall

- IOU

model-index:

- name: yolos-small-rego-plates-detection

results: []

---

# YOLOS (small-sized) model

The original YOLOS model was fine-tuned on COCO 2017 object detection (118k annotated images). It was introduced in the paper [You Only Look at One Sequence: Rethinking Transformer in Vision through Object Detection](https://arxiv.org/abs/2106.00666) by Fang et al. and first released in [this repository](https://github.com/hustvl/YOLOS).

This model was further fine-tuned on the [license plate dataset]("https://www.kaggle.com/datasets/andrewmvd/car-plate-detection") from Kaggle. The dataset consists of 735 images of annotations categorised as "vehicle" and "license-plate". The model was trained for 200 epochs on a single GPU using Google Colab

## Model description

YOLOS is a Vision Transformer (ViT) trained using the DETR loss. Despite its simplicity, a base-sized YOLOS model is able to achieve 42 AP on COCO validation 2017 (similar to DETR and more complex frameworks such as Faster R-CNN).

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=hustvl/yolos) to look for all available YOLOS models.

### How to use

Here is how to use this model:

```python

from transformers import YolosFeatureExtractor, YolosForObjectDetection

from PIL import Image

import requests

url = 'https://drive.google.com/uc?id=1p9wJIqRz3W50e2f_A0D8ftla8hoXz4T5'

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = YolosFeatureExtractor.from_pretrained('nickmuchi/yolos-small-rego-plates-detection')

model = YolosForObjectDetection.from_pretrained('nickmuchi/yolos-small-rego-plates-detection')

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

# model predicts bounding boxes and corresponding face mask detection classes

logits = outputs.logits

bboxes = outputs.pred_boxes

```

Currently, both the feature extractor and model support PyTorch.

## Training data

The YOLOS model was pre-trained on [ImageNet-1k](https://huggingface.co/datasets/imagenet2012) and fine-tuned on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### Training

This model was fine-tuned for 200 epochs on the [license plate dataset]("https://www.kaggle.com/datasets/andrewmvd/car-plate-detection").

## Evaluation results

This model achieves an AP (average precision) of **47.9**.

Accumulating evaluation results...

IoU metric: bbox

Metrics | Metric Parameter | Location | Dets | Value |

---------------- | --------------------- | ------------| ------------- | ----- |

Average Precision | (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] | 0.479 |

Average Precision | (AP) @[ IoU=0.50 | area= all | maxDets=100 ] | 0.752 |

Average Precision | (AP) @[ IoU=0.75 | area= all | maxDets=100 ] | 0.555 |

Average Precision | (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] | 0.147 |

Average Precision | (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] | 0.420 |

Average Precision | (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] | 0.804 |

Average Recall | (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] | 0.437 |

Average Recall | (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] | 0.641 |

Average Recall | (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] | 0.676 |

Average Recall | (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] | 0.268 |

Average Recall | (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] | 0.641 |

Average Recall | (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] | 0.870 |

|

TurkuNLP/wikibert-base-vi-cased

|

359e6c23f7737b19861f4db02fd4484e2ecb639c

|

2020-05-24T20:02:25.000Z

|

[

"pytorch",

"transformers"

] | null | false

|

TurkuNLP

| null |

TurkuNLP/wikibert-base-vi-cased

| 120

| null |

transformers

| 4,321

|

Entry not found

|

erst/xlm-roberta-base-finetuned-nace

|

84d9e5e01eb7a718c4ade662b6659509b73c17c0

|

2021-05-21T04:36:28.000Z

|

[

"pytorch",

"xlm-roberta",

"text-classification",

"transformers"

] |

text-classification

| false

|

erst

| null |

erst/xlm-roberta-base-finetuned-nace

| 120

| 1

|

transformers

| 4,322

|

# Classifying Text into NACE Codes

This model is [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) fine-tuned to classify descriptions of activities into [NACE Rev. 2](https://ec.europa.eu/eurostat/web/nace-rev2) codes.

## Data

The data used to fine-tune the model consist of 2.5 million descriptions of activities from Norwegian and Danish businesses. To improve the model's multilingual performance, random samples of the Norwegian and Danish descriptions were machine translated into the following languages:

- English

- German

- Spanish

- French

- Finnish

- Polish

## Quick Start

```python

from transformers import pipeline, AutoTokenizer, AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained("erst/xlm-roberta-base-finetuned-nace")

model = AutoModelForSequenceClassification.from_pretrained("erst/xlm-roberta-base-finetuned-nace")

pl = pipeline(

"sentiment-analysis",

model=model,

tokenizer=tokenizer,

return_all_scores=False,

)

pl("The purpose of our company is to build houses")

```

|

ethanyt/guwen-cls

|

3249168f65e7a2d6e1ad8fb09bd1e77db714ff90

|

2021-06-17T09:37:37.000Z

|

[

"pytorch",

"roberta",

"text-classification",

"zh",

"transformers",

"chinese",

"classical chinese",

"literary chinese",

"ancient chinese",

"bert",

"text classificatio",

"license:apache-2.0"

] |

text-classification

| false

|

ethanyt

| null |

ethanyt/guwen-cls

| 120

| 1

|

transformers

| 4,323

|

---

language:

- "zh"

thumbnail: "https://user-images.githubusercontent.com/9592150/97142000-cad08e00-179a-11eb-88df-aff9221482d8.png"

tags:

- "chinese"

- "classical chinese"

- "literary chinese"

- "ancient chinese"

- "bert"

- "pytorch"

- "text classificatio"

license: "apache-2.0"

pipeline_tag: "text-classification"

widget:

- text: "子曰:“弟子入则孝,出则悌,谨而信,泛爱众,而亲仁。行有馀力,则以学文。”"

---

# Guwen CLS

A Classical Chinese Text Classifier.

See also:

<a href="https://github.com/ethan-yt/guwen-models">

<img align="center" width="400" src="https://github-readme-stats.vercel.app/api/pin/?username=ethan-yt&repo=guwen-models&bg_color=30,e96443,904e95&title_color=fff&text_color=fff&icon_color=fff&show_owner=true" />

</a>

<a href="https://github.com/ethan-yt/cclue/">

<img align="center" width="400" src="https://github-readme-stats.vercel.app/api/pin/?username=ethan-yt&repo=cclue&bg_color=30,e96443,904e95&title_color=fff&text_color=fff&icon_color=fff&show_owner=true" />

</a>

<a href="https://github.com/ethan-yt/guwenbert/">

<img align="center" width="400" src="https://github-readme-stats.vercel.app/api/pin/?username=ethan-yt&repo=guwenbert&bg_color=30,e96443,904e95&title_color=fff&text_color=fff&icon_color=fff&show_owner=true" />

</a>

|

facebook/convnext-xlarge-384-22k-1k

|

f9f3d83b87a2a395b2ffa940a5ce7a0442c390e5

|

2022-03-02T19:02:58.000Z

|

[

"pytorch",

"tf",

"convnext",

"image-classification",

"dataset:imagenet-21k",

"dataset:imagenet-1k",

"arxiv:2201.03545",

"transformers",

"vision",

"license:apache-2.0"

] |

image-classification

| false

|

facebook

| null |

facebook/convnext-xlarge-384-22k-1k

| 120

| 2

|

transformers

| 4,324

|

---

license: apache-2.0

tags:

- vision

- image-classification

datasets:

- imagenet-21k

- imagenet-1k

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# ConvNeXT (xlarge-sized model)

ConvNeXT model pre-trained on ImageNet-22k and fine-tuned on ImageNet-1k at resolution 384x384. It was introduced in the paper [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) by Liu et al. and first released in [this repository](https://github.com/facebookresearch/ConvNeXt).

Disclaimer: The team releasing ConvNeXT did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

ConvNeXT is a pure convolutional model (ConvNet), inspired by the design of Vision Transformers, that claims to outperform them. The authors started from a ResNet and "modernized" its design by taking the Swin Transformer as inspiration.

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=convnext) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import ConvNextFeatureExtractor, ConvNextForImageClassification

import torch

from datasets import load_dataset