repos/xmake/xmake/toolchains/msvc/check.lua

--!A cross-toolchain build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file check.lua

--

-- imports

import("core.base.option")

import("core.project.config")

import("detect.sdks.find_vstudio")

import("lib.detect.find_tool")

-- attempt to check vs environment

function _check_vsenv(toolchain)

-- have been checked?

local vs = toolchain:config("vs") or config.get("vs")

if vs then

vs = tostring(vs)

end

local vcvars = toolchain:config("vcvars")

if vs and vcvars then

return vs

end

-- find vstudio

local vs_toolset = toolchain:config("vs_toolset") or config.get("vs_toolset")

local vs_sdkver = toolchain:config("vs_sdkver") or config.get("vs_sdkver")

local vstudio = find_vstudio({vcvars_ver = vs_toolset, sdkver = vs_sdkver})

if vstudio then

-- make order vsver

local vsvers = {}

for vsver, _ in pairs(vstudio) do

if not vs or vs ~= vsver then

table.insert(vsvers, vsver)

end

end

table.sort(vsvers, function (a, b) return tonumber(a) > tonumber(b) end)

if vs then

table.insert(vsvers, 1, vs)

end

-- get vcvarsall

for _, vsver in ipairs(vsvers) do

local vcvarsall = (vstudio[vsver] or {}).vcvarsall or {}

local vcvars = vcvarsall[toolchain:arch()]

if vcvars and vcvars.PATH and vcvars.INCLUDE and vcvars.LIB then

-- save vcvars

toolchain:config_set("vcvars", vcvars)

toolchain:config_set("vcarchs", table.orderkeys(vcvarsall))

toolchain:config_set("vs_toolset", vcvars.VCToolsVersion)

toolchain:config_set("vs_sdkver", vcvars.WindowsSDKVersion)

-- check compiler

local program = nil

local tool = find_tool("cl.exe", {version = true, force = true, envs = vcvars})

if tool then

program = tool.program

end

if program then

return vsver, tool

end

end

end

end

end

-- check the visual studio

function _check_vstudio(toolchain)

local vs, msvc = _check_vsenv(toolchain)

if vs then

if toolchain:is_global() then

config.set("vs", vs, {force = true, readonly = true})

end

toolchain:config_set("vs", vs)

toolchain:configs_save()

cprint("checking for Microsoft Visual Studio (%s) version ... ${color.success}%s", toolchain:arch(), vs)

if msvc and msvc.version then

cprint("checking for Microsoft C/C++ Compiler (%s) version ... ${color.success}%s", toolchain:arch(), msvc.version)

end

else

cprint("checking for Microsoft Visual Studio (%s) version ... ${color.nothing}${text.nothing}", toolchain:arch())

end

return vs

end

-- main entry

function main(toolchain)

-- only for windows

if not is_host("windows") then

return

end

-- @see https://github.com/xmake-io/xmake/pull/679

local cc = path.basename(config.get("cc") or "cl"):lower()

local cxx = path.basename(config.get("cxx") or "cl"):lower()

local mrc = path.basename(config.get("mrc") or "rc"):lower()

if cc == "cl" or cxx == "cl" or mrc == "rc" then

return _check_vstudio(toolchain)

end

end
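The two decisions in this file can be sketched in plain Lua outside xmake. The first is the order in which `_check_vsenv` tries Visual Studio versions: the configured `vs` goes first, then every other detected version, newest first. The second is the gate in `main`. `order_vsvers`, `basename` and `needs_vstudio` are hypothetical helper names, `basename` is a simplified stand-in for xmake's `path.basename`, and `vstudio` is assumed to be keyed by numeric version strings.

```lua
-- Order used by _check_vsenv: preferred version first, then the rest
-- sorted numerically in descending order (so "2022" precedes "2019").
local function order_vsvers(vstudio, preferred)
    local vsvers = {}
    for vsver, _ in pairs(vstudio) do
        if not preferred or preferred ~= vsver then
            table.insert(vsvers, vsver)
        end
    end
    table.sort(vsvers, function (a, b) return tonumber(a) > tonumber(b) end)
    if preferred then
        table.insert(vsvers, 1, preferred)
    end
    return vsvers
end

-- Simplified stand-in for path.basename: drop directories and the extension.
local function basename(p)
    local name = p:match("([^/\\]+)$") or p
    return (name:gsub("%.[^%.]*$", ""))
end

-- Gate used by main(): run the VS check if cc/cxx resolve to "cl" or mrc to "rc".
local function needs_vstudio(cc, cxx, mrc)
    cc = basename(cc or "cl"):lower()
    cxx = basename(cxx or "cl"):lower()
    mrc = basename(mrc or "rc"):lower()
    return cc == "cl" or cxx == "cl" or mrc == "rc"
end

local order = order_vsvers({["2017"] = {}, ["2019"] = {}, ["2022"] = {}}, "2019")
print(table.concat(order, ","))  --> 2019,2022,2017
print(needs_vstudio("clang", "clang++", "C:/sdk/bin/RC.EXE"))  --> true
```

Because each option defaults to the MSVC tool name, the check is skipped only when `cc`, `cxx` and `mrc` are all configured to something other than `cl`/`rc`.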

repos/xmake/xmake/toolchains/c51/xmake.lua

--!A cross-platform build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author DawnMagnet

-- @file xmake.lua

--

toolchain("c51")

set_homepage("https://www.keil.com/c51/")

set_description("Keil development tools for the 8051 Microcontroller Architecture")

set_kind("standalone")

set_toolset("cc", "c51")

set_toolset("cxx", "c51")

set_toolset("ld", "bl51")

on_check(function (toolchain)

import("lib.detect.find_tool")

import("detect.sdks.find_c51")

local c51 = find_c51()

if c51 and c51.sdkdir and find_tool("c51") then

toolchain:config_set("sdkdir", c51.sdkdir)

toolchain:configs_save()

return true

end

end)

repos/xmake/xmake/toolchains/clang-16/xmake.lua

includes(path.join(os.scriptdir(), "../clang/xmake.lua"))

toolchain_clang("16")

repos/xmake/xmake/toolchains/clang/xmake.lua

--!A cross-platform build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file xmake.lua

--

-- define toolchain

function toolchain_clang(version)

local suffix = ""

if version then

suffix = suffix .. "-" .. version

end

toolchain("clang" .. suffix)

set_kind("standalone")

set_homepage("https://clang.llvm.org/")

set_description("A C language family frontend for LLVM" .. (version and (" (" .. version .. ")") or ""))

set_runtimes("c++_static", "c++_shared", "stdc++_static", "stdc++_shared")

set_toolset("cc", "clang" .. suffix)

set_toolset("cxx", "clang" .. suffix, "clang++" .. suffix)

set_toolset("ld", "clang++" .. suffix, "clang" .. suffix)

set_toolset("sh", "clang++" .. suffix, "clang" .. suffix)

set_toolset("ar", "ar", "llvm-ar")

set_toolset("strip", "strip", "llvm-strip")

set_toolset("ranlib", "ranlib", "llvm-ranlib")

set_toolset("objcopy", "objcopy", "llvm-objcopy")

set_toolset("mm", "clang" .. suffix)

set_toolset("mxx", "clang" .. suffix, "clang++" .. suffix)

set_toolset("as", "clang" .. suffix)

set_toolset("mrc", "llvm-rc")

on_check(function (toolchain)

return import("lib.detect.find_tool")("clang" .. suffix)

end)

on_load(function (toolchain)

local march

if toolchain:is_arch("x86_64", "x64") then

march = "-m64"

elseif toolchain:is_arch("i386", "x86") then

march = "-m32"

end

if march then

toolchain:add("cxflags", march)

toolchain:add("mxflags", march)

toolchain:add("asflags", march)

toolchain:add("ldflags", march)

toolchain:add("shflags", march)

end

if toolchain:is_plat("windows") then

toolchain:add("runtimes", "MT", "MTd", "MD", "MDd")

end

end)

end

toolchain_clang()
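A minimal plain-Lua sketch of what `toolchain_clang(version)` derives from its argument, and of the `-m32`/`-m64` choice made in `on_load`. The helper names `clang_names` and `march_for` are hypothetical; the string rules mirror the code above.

```lua
-- Names derived from the optional version suffix, e.g. "16" -> "clang-16".
local function clang_names(version)
    local suffix = version and ("-" .. version) or ""
    return {
        toolchain = "clang" .. suffix,
        cc = "clang" .. suffix,
        -- cxx lists candidates in the order set_toolset tries them
        cxx = {"clang" .. suffix, "clang++" .. suffix},
        description = "A C language family frontend for LLVM" ..
            (version and (" (" .. version .. ")") or ""),
    }
end

-- Only x86 family architectures get an explicit -m flag.
local function march_for(arch)
    if arch == "x86_64" or arch == "x64" then
        return "-m64"
    elseif arch == "i386" or arch == "x86" then
        return "-m32"
    end
    return nil
end
```

This is why a versioned wrapper such as `clang-16/xmake.lua` only needs to include this file and call `toolchain_clang("16")`.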

repos/xmake/xmake/toolchains/armclang/xmake.lua

--!A cross-platform build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file xmake.lua

--

toolchain("armclang")

set_homepage("https://www2.keil.com/mdk5/compiler/6")

set_description("ARM Compiler Version 6 of Keil MDK")

set_kind("cross")

set_toolset("cc", "armclang")

set_toolset("cxx", "armclang")

set_toolset("ld", "armlink")

set_toolset("ar", "armar")

on_check(function (toolchain)

import("core.base.semver")

import("lib.detect.find_tool")

import("detect.sdks.find_mdk")

local mdk = find_mdk()

if mdk and mdk.sdkdir_armclang then

toolchain:config_set("sdkdir", mdk.sdkdir_armclang)

-- different assembler choices for different versions of armclang

local armclang = find_tool("armclang", {version = true, force = true, paths = path.join(mdk.sdkdir_armclang, "bin")})

if armclang and armclang.version and semver.compare(armclang.version, "6.13") > 0 then

toolchain:config_set("toolset_as", "armclang")

else

toolchain:config_set("toolset_as", "armasm")

end

toolchain:configs_save()

return true

end

end)

on_load(function (toolchain)

local arch = toolchain:arch()

if arch then

local arch_cpu = arch:lower()

local arch_cpu_ld = ""

local arch_target = ""

if arch_cpu:startswith("cortex-m") then

arch_cpu_ld = arch_cpu:replace("cortex-m", "Cortex-M", {plain = true})

arch_target = "arm-arm-none-eabi"

end

if arch_cpu:startswith("cortex-a") then

arch_cpu_ld = arch_cpu:replace("cortex-a", "Cortex-A", {plain = true})

arch_target = "aarch64-arm-none-eabi"

end

local as = toolchain:config("toolset_as")

toolchain:set("toolset", "as", as)

toolchain:add("cxflags", "--target=" .. arch_target)

toolchain:add("cxflags", "-mcpu=" .. arch_cpu)

toolchain:add("asflags", "--target=" .. arch_target)

toolchain:add("asflags", (as == "armclang" and "-mcpu=" or "--cpu=") .. arch_cpu)

toolchain:add("ldflags", "--cpu " .. arch_cpu_ld)

end

end)
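The version-dependent assembler choice and the arch-to-flag mapping can be sketched in plain Lua. `version_gt` is a simplified, hypothetical stand-in for `semver.compare(a, b) > 0` that compares dotted numeric versions component by component; `pick_assembler` and `arch_flags` are likewise illustrative names.

```lua
-- Compare dotted numeric versions: "6.9" < "6.13" < "6.13.1".
local function version_gt(a, b)
    local pa, pb = {}, {}
    for n in a:gmatch("%d+") do pa[#pa + 1] = tonumber(n) end
    for n in b:gmatch("%d+") do pb[#pb + 1] = tonumber(n) end
    for i = 1, math.max(#pa, #pb) do
        local x, y = pa[i] or 0, pb[i] or 0
        if x ~= y then return x > y end
    end
    return false
end

-- Strictly newer than 6.13 assembles with armclang, otherwise armasm.
local function pick_assembler(version)
    if version and version_gt(version, "6.13") then
        return "armclang"
    end
    return "armasm"
end

-- Returns the --target triple, the -mcpu value and the armlink --cpu value.
local function arch_flags(arch)
    local cpu = arch:lower()
    local target, cpu_ld = "", ""
    if cpu:sub(1, 8) == "cortex-m" then
        cpu_ld = "Cortex-M" .. cpu:sub(9)
        target = "arm-arm-none-eabi"
    elseif cpu:sub(1, 8) == "cortex-a" then
        cpu_ld = "Cortex-A" .. cpu:sub(9)
        target = "aarch64-arm-none-eabi"
    end
    return target, cpu, cpu_ld
end
```

Note that an arch outside the Cortex-M and Cortex-A families leaves the target empty, so the `on_load` above would add a bare `--target=` flag in that case.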

repos/xmake/xmake/toolchains/wasi/xmake.lua

--!A cross-platform build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author wsw0108

-- @file xmake.lua

--

-- define toolchain

toolchain("wasi")

-- set homepage

set_homepage("https://github.com/WebAssembly/wasi-sdk")

set_description("WASI-enabled WebAssembly C/C++ toolchain.")

-- mark as standalone toolchain

set_kind("standalone")

-- set toolset

set_toolset("cc", "clang")

set_toolset("cxx", "clang", "clang++")

set_toolset("cpp", "clang -E")

set_toolset("as", "clang")

set_toolset("ld", "clang++", "clang")

set_toolset("sh", "clang++", "clang")

set_toolset("ar", "llvm-ar")

set_toolset("ranlib", "llvm-ranlib")

set_toolset("strip", "llvm-strip")

-- check toolchain

on_check(function (toolchain)

import("lib.detect.find_tool")

import("detect.sdks.find_wasisdk")

local wasisdk = find_wasisdk(toolchain:sdkdir())

if wasisdk then

toolchain:config_set("bindir", wasisdk.bindir)

toolchain:config_set("sdkdir", wasisdk.sdkdir)

toolchain:configs_save()

return wasisdk

end

return find_tool("clang", {paths = toolchain:bindir()})

end)

-- on load

on_load(function (toolchain)

local sdkdir = toolchain:sdkdir()

if sdkdir then

local sysroot = path.join(sdkdir, "share", "wasi-sysroot")

toolchain:add("cxflags", "--sysroot=" .. sysroot)

toolchain:add("mxflags", "--sysroot=" .. sysroot)

toolchain:add("ldflags", "--sysroot=" .. sysroot)

toolchain:add("shflags", "--sysroot=" .. sysroot)

end

-- on windows, add bindir to PATH so that dependent .dll files can be found at run time

local bindir = toolchain:bindir()

if bindir and is_host("windows") then

toolchain:add("runenvs", "PATH", bindir)

end

end)
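A small plain-Lua sketch of the flags derived from the SDK directory. `wasi_flags` is a hypothetical helper, the `/` concatenation is a simplification of xmake's `path.join`, and this sketch deliberately returns no flags when the SDK directory is unknown (for example when only a bare `clang` was found).

```lua
-- Map an SDK directory to the --sysroot flag added to each flag kind.
local function wasi_flags(sdkdir)
    if not sdkdir then
        return {}  -- no SDK directory: nothing to add
    end
    local flag = "--sysroot=" .. sdkdir .. "/share/wasi-sysroot"
    return {cxflags = flag, mxflags = flag, ldflags = flag, shflags = flag}
end
```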

repos/xmake/xmake/toolchains/ifx/load.lua

--!A cross-toolchain build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file load.lua

--

-- imports

import("core.base.option")

import("core.project.config")

import("detect.sdks.find_vstudio")

-- add the given vs environment

function _add_vsenv(toolchain, name, curenvs)

-- get vcvars

local vcvars = toolchain:config("vcvars")

if not vcvars then

return

end

-- get the paths for the vs environment

local new = vcvars[name]

if new then

-- fix case naming conflict for cmake/msbuild between the new msvc envs and current environment, if we are running xmake in vs prompt.

-- @see https://github.com/xmake-io/xmake/issues/4751

for k, c in pairs(curenvs) do

if name:lower() == k:lower() and name ~= k then

name = k

break

end

end

toolchain:add("runenvs", name, table.unpack(path.splitenv(new)))

end

end

-- add the given ifx environment

function _add_ifxenv(toolchain, name, curenvs)

-- get ifxvarsall

local ifxvarsall = toolchain:config("varsall")

if not ifxvarsall then

return

end

-- get ifx environment for the current arch

local arch = toolchain:arch()

local ifxenv = ifxvarsall[arch] or {}

-- get the paths for the ifx environment

local new = ifxenv[name]

if new then

-- fix case naming conflict for cmake/msbuild between the new msvc envs and current environment, if we are running xmake in vs prompt.

-- @see https://github.com/xmake-io/xmake/issues/4751

for k, c in pairs(curenvs) do

if name:lower() == k:lower() and name ~= k then

name = k

break

end

end

toolchain:add("runenvs", name, table.unpack(path.splitenv(new)))

end

end

-- load intel on windows

function _load_intel_on_windows(toolchain)

-- set toolset

toolchain:set("toolset", "fc", "ifx.exe")

toolchain:set("toolset", "mrc", "rc.exe")

if toolchain:is_arch("x64") then

toolchain:set("toolset", "as", "ml64.exe")

else

toolchain:set("toolset", "as", "ml.exe")

end

toolchain:set("toolset", "fcld", "ifx.exe")

toolchain:set("toolset", "fcsh", "ifx.exe")

toolchain:set("toolset", "ar", "link.exe")

-- add vs/ifx environments

local expect_vars = {"PATH", "LIB", "INCLUDE", "LIBPATH"}

local curenvs = os.getenvs()

for _, name in ipairs(expect_vars) do

_add_vsenv(toolchain, name, curenvs)

_add_ifxenv(toolchain, name, curenvs)

end

for _, name in ipairs(find_vstudio.get_vcvars()) do

if not table.contains(expect_vars, name:upper()) then

_add_vsenv(toolchain, name, curenvs)

end

end

end

-- load intel on linux

function _load_intel_on_linux(toolchain)

-- set toolset

toolchain:set("toolset", "fc", "ifx")

toolchain:set("toolset", "fcld", "ifx")

toolchain:set("toolset", "fcsh", "ifx")

toolchain:set("toolset", "ar", "ar")

-- add march flags

local march

if toolchain:is_arch("x86_64", "x64") then

march = "-m64"

elseif toolchain:is_arch("i386", "x86") then

march = "-m32"

end

if march then

toolchain:add("fcflags", march)

toolchain:add("fcldflags", march)

toolchain:add("fcshflags", march)

end

-- get ifx environments

local ifxenv = toolchain:config("ifxenv")

if ifxenv then

local ldname = is_host("macosx") and "DYLD_LIBRARY_PATH" or "LD_LIBRARY_PATH"

toolchain:add("runenvs", ldname, ifxenv.libdir)

end

end

-- main entry

function main(toolchain)

if is_host("windows") then

return _load_intel_on_windows(toolchain)

else

return _load_intel_on_linux(toolchain)

end

end
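The case-reconciliation loop shared by `_add_vsenv` and `_add_ifxenv` (xmake issue #4751) can be sketched in plain Lua: if the current environment already holds a variable whose name differs only in case, such as `Path` versus `PATH`, that existing spelling is reused. `resolve_env_name` is a hypothetical name, and `splitenv` is a simplified stand-in for `path.splitenv` that, in this sketch, drops empty entries.

```lua
-- Reuse the existing spelling of an environment variable name, if any.
local function resolve_env_name(name, curenvs)
    for k, _ in pairs(curenvs) do
        if name:lower() == k:lower() and name ~= k then
            return k
        end
    end
    return name
end

-- Split a PATH-like value on a literal separator (";" on Windows).
local function splitenv(value, sep)
    local esc = (sep:gsub("%p", "%%%0"))  -- escape pattern magic characters
    local parts = {}
    for part in (value .. sep):gmatch("(.-)" .. esc) do
        if part ~= "" then parts[#parts + 1] = part end
    end
    return parts
end
```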

repos/xmake/xmake/toolchains/ifx/xmake.lua

--!A cross-platform build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file xmake.lua

--

-- define toolchain

toolchain("ifx")

-- set homepage

set_homepage("https://www.intel.com/content/www/us/en/developer/articles/tool/oneapi-standalone-components.html#fortran")

set_description("Intel LLVM Fortran Compiler")

-- mark as standalone toolchain

set_kind("standalone")

-- check toolchain

on_check("check")

-- on load

on_load("load")

repos/xmake/xmake/toolchains/ifx/check.lua

--!A cross-toolchain build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file check.lua

--

-- imports

import("core.base.option")

import("core.project.config")

import("detect.sdks.find_ifxenv")

import("detect.sdks.find_vstudio")

import("lib.detect.find_tool")

-- attempt to check vs environment

function _check_vsenv(toolchain)

-- has been checked?

local vs = toolchain:config("vs") or config.get("vs")

if vs then

vs = tostring(vs)

end

local vcvars = toolchain:config("vcvars")

if vs and vcvars then

return vs

end

-- find vstudio

local vs_toolset = toolchain:config("vs_toolset") or config.get("vs_toolset")

local vs_sdkver = toolchain:config("vs_sdkver") or config.get("vs_sdkver")

local vstudio = find_vstudio({vcvars_ver = vs_toolset, sdkver = vs_sdkver})

if vstudio then

-- make order vsver

local vsvers = {}

for vsver, _ in pairs(vstudio) do

if not vs or vs ~= vsver then

table.insert(vsvers, vsver)

end

end

table.sort(vsvers, function (a, b) return tonumber(a) > tonumber(b) end)

if vs then

table.insert(vsvers, 1, vs)

end

-- get vcvarsall

for _, vsver in ipairs(vsvers) do

local vcvarsall = (vstudio[vsver] or {}).vcvarsall or {}

local vcvars = vcvarsall[toolchain:arch()]

if vcvars and vcvars.PATH and vcvars.INCLUDE and vcvars.LIB then

-- save vcvars

toolchain:config_set("vcvars", vcvars)

toolchain:config_set("vcarchs", table.orderkeys(vcvarsall))

toolchain:config_set("vs_toolset", vcvars.VCToolsVersion)

toolchain:config_set("vs_sdkver", vcvars.WindowsSDKVersion)

-- check compiler

local program

local paths

local pathenv = os.getenv("PATH")

if pathenv then

paths = path.splitenv(pathenv)

end

local tool = find_tool("cl.exe", {version = true, force = true, paths = paths, envs = vcvars})

if tool then

program = tool.program

end

if program then

return vsver, tool

end

end

end

end

end

-- check the visual studio

function _check_vstudio(toolchain)

local vs = _check_vsenv(toolchain)

if vs then

if toolchain:is_global() then

config.set("vs", vs, {force = true, readonly = true})

end

toolchain:config_set("vs", vs)

toolchain:configs_save()

cprint("checking for Microsoft Visual Studio (%s) version ... ${color.success}%s", toolchain:arch(), vs)

else

cprint("checking for Microsoft Visual Studio (%s) version ... ${color.nothing}${text.nothing}", toolchain:arch())

end

return vs

end

-- check intel on windows

function _check_intel_on_windows(toolchain)

-- have been checked?

local varsall = toolchain:config("varsall")

if varsall then

return true

end

-- find intel llvm c/c++ compiler environment

local ifxenv = find_ifxenv()

if ifxenv and ifxenv.ifxvars then

local ifxvarsall = ifxenv.ifxvars

local ifxenv = ifxvarsall[toolchain:arch()]

if ifxenv and ifxenv.PATH and ifxenv.INCLUDE and ifxenv.LIB then

local tool = find_tool("ifx.exe", {force = true, envs = ifxenv, version = true})

if tool then

cprint("checking for Intel LLVM Fortran Compiler (%s) ... ${color.success}${text.success}", toolchain:arch())

toolchain:config_set("varsall", ifxvarsall)

toolchain:configs_save()

return _check_vstudio(toolchain)

end

end

end

end

-- check intel on linux

function _check_intel_on_linux(toolchain)

local ifxenv = toolchain:config("ifxenv")

if ifxenv then

return true

end

ifxenv = find_ifxenv()

if ifxenv then

local ldname = is_host("macosx") and "DYLD_LIBRARY_PATH" or "LD_LIBRARY_PATH"

local tool = find_tool("ifx", {force = true, envs = {[ldname] = ifxenv.libdir}, paths = ifxenv.bindir})

if tool then

cprint("checking for Intel LLVM Fortran Compiler (%s) ... ${color.success}${text.success}", toolchain:arch())

toolchain:config_set("ifxenv", ifxenv)

toolchain:config_set("bindir", ifxenv.bindir)

toolchain:configs_save()

return true

end

end

end

-- main entry

function main(toolchain)

if is_host("windows") then

return _check_intel_on_windows(toolchain)

else

return _check_intel_on_linux(toolchain)

end

end
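Two small decisions in this check, sketched in plain Lua with hypothetical helper names: on Windows a per-arch environment is only accepted when it defines `PATH`, `INCLUDE` and `LIB`, and on other hosts the runtime library search variable depends on whether the host is macOS. The `varsall` table below is made-up sample data shaped like the arch-keyed table the code reads from `find_ifxenv()`.

```lua
-- Accept an environment only if the three key variables are present.
local function has_required_vars(env)
    return env ~= nil and env.PATH ~= nil and env.INCLUDE ~= nil and env.LIB ~= nil
end

-- Library search variable used when probing ifx on non-Windows hosts.
local function ld_env_name(host)
    return host == "macosx" and "DYLD_LIBRARY_PATH" or "LD_LIBRARY_PATH"
end

-- Sample data only: x86 is missing INCLUDE and LIB, so it is rejected.
local varsall = {
    x64 = {PATH = "p", INCLUDE = "i", LIB = "l"},
    x86 = {PATH = "p"},
}
```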

repos/xmake/xmake/toolchains/gdc/xmake.lua

--!A cross-platform build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file xmake.lua

--

-- define toolchain

toolchain("gdc")

set_homepage("https://gdcproject.org/")

set_description("The GNU D Compiler (GDC).")

on_check("check")

on_load(function (toolchain)

local cross = toolchain:cross() or ""

toolchain:add("toolset", "dc", cross .. "gdc")

toolchain:add("toolset", "dcld", cross .. "gdc")

toolchain:add("toolset", "dcsh", cross .. "gdc")

toolchain:add("toolset", "dcar", cross .. "gcc-ar")

local march

if toolchain:is_arch("x86_64", "x64") then

march = "-m64"

elseif toolchain:is_arch("i386", "x86") then

march = "-m32"

end

if march then

toolchain:add("dcflags", march)

toolchain:add("dcshflags", march)

toolchain:add("dcldflags", march)

end

end)
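A plain-Lua sketch of how the tool names follow from the cross prefix and which `-m` flag is chosen. `gdc_toolset` is a hypothetical helper, and the cross prefix is assumed to carry its trailing dash (e.g. `arm-linux-gnueabihf-`), so names are formed by plain concatenation as in `on_load`.

```lua
-- Build the D toolset names from an optional cross prefix.
local function gdc_toolset(cross, arch)
    cross = cross or ""
    local tools = {
        dc = cross .. "gdc",
        dcld = cross .. "gdc",
        dcsh = cross .. "gdc",
        dcar = cross .. "gcc-ar",
    }
    local march
    if arch == "x86_64" or arch == "x64" then
        march = "-m64"
    elseif arch == "i386" or arch == "x86" then
        march = "-m32"
    end
    return tools, march  -- march is nil for non-x86 targets
end
```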

repos/xmake/xmake/toolchains/gdc/check.lua

--!A cross-toolchain build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file check.lua

--

-- imports

import("core.project.config")

import("lib.detect.find_tool")

import("detect.sdks.find_cross_toolchain")

function main(toolchain)

-- we attempt to find gdc in $PATH

if find_tool("gdc") then

return true

end

-- otherwise, we need to find gdc in the given toolchain sdk directory

local sdkdir = toolchain:sdkdir()

local bindir = toolchain:bindir()

local cross = toolchain:cross()

if not sdkdir and not bindir and not cross then

return

end

-- find cross toolchain

local cross_toolchain = find_cross_toolchain(sdkdir, {bindir = bindir, cross = cross})

if cross_toolchain then

toolchain:config_set("cross", cross_toolchain.cross)

toolchain:config_set("bindir", cross_toolchain.bindir)

toolchain:config_set("sdkdir", cross_toolchain.sdkdir)

toolchain:configs_save()

else

raise("cross toolchain not found!")

end

return cross_toolchain

end
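The decision order of this check can be sketched with the lookups injected as plain functions, so it runs outside xmake. `check_gdc`, `find_in_path` and `find_cross` are hypothetical names standing in for `main`, `find_tool` and `find_cross_toolchain`.

```lua
local function check_gdc(opts, find_in_path, find_cross)
    -- 1. a gdc on $PATH is enough
    if find_in_path("gdc") then
        return true
    end
    -- 2. with no sdkdir, bindir or cross hint there is nothing to search
    if not opts.sdkdir and not opts.bindir and not opts.cross then
        return nil
    end
    -- 3. otherwise a cross toolchain must be found, or the check fails hard
    local found = find_cross(opts.sdkdir, {bindir = opts.bindir, cross = opts.cross})
    if not found then
        error("cross toolchain not found!")
    end
    return found
end
```

The distinction matters: returning `nil` lets the toolchain simply be reported as unavailable, while the `error` path (`raise` in the original) aborts configuration because the user explicitly pointed at a toolchain that could not be found.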

repos/xmake/xmake/toolchains/clang-15/xmake.lua

includes(path.join(os.scriptdir(), "../clang/xmake.lua"))

toolchain_clang("15")

repos/xmake/xmake/toolchains/gcc-4.8/xmake.lua

includes(path.join(os.scriptdir(), "../gcc/xmake.lua"))

toolchain_gcc("4.8")

repos/xmake/xmake/toolchains/sdcc/xmake.lua

--!A cross-platform build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file xmake.lua

--

-- define toolchain

toolchain("sdcc")

-- set homepage

set_homepage("http://sdcc.sourceforge.net/")

set_description("Small Device C Compiler")

-- mark as standalone toolchain

set_kind("standalone")

-- set toolset

set_toolset("cc", "sdcc")

set_toolset("cxx", "sdcc")

set_toolset("cpp", "sdcpp")

set_toolset("as", "sdcc")

set_toolset("ld", "sdcc")

set_toolset("sh", "sdcc")

set_toolset("ar", "sdar")

-- set archs

set_archs("stm8", "mcs51", "z80", "z180", "r2k", "r3ka", "s08", "hc08")

-- set formats

set_formats("static", "$(name).lib")

set_formats("object", "$(name).rel")

set_formats("binary", "$(name).bin")

set_formats("symbol", "$(name).sym")

-- check toolchain

on_check("check")

-- on load

on_load(function (toolchain)

local arch = toolchain:arch()

if arch then

toolchain:add("cxflags", "-m" .. arch)

toolchain:add("ldflags", "-m" .. arch)

end

end)
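A plain-Lua sketch of how the `set_formats` patterns and the `-m<arch>` flag resolve for a given target. `expand_format` and `sdcc_arch_flag` are hypothetical helpers that substitute `$(name)` the way the patterns suggest; this is not xmake's own implementation.

```lua
local sdcc_formats = {
    static = "$(name).lib",
    object = "$(name).rel",
    binary = "$(name).bin",
    symbol = "$(name).sym",
}

-- Substitute the target name into the pattern for the given kind.
local function expand_format(kind, name)
    local pattern = sdcc_formats[kind]
    if not pattern then return nil end
    -- a function replacement keeps any '%' in name from being read as a capture
    return (pattern:gsub("%$%(name%)", function () return name end))
end

-- sdcc selects its port with -m<arch>, e.g. -mmcs51 or -mstm8.
local function sdcc_arch_flag(arch)
    return arch and ("-m" .. arch) or nil
end
```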

repos/xmake/xmake/toolchains/sdcc/check.lua

--!A cross-toolchain build utility based on Lua

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

--

-- Copyright (C) 2015-present, TBOOX Open Source Group.

--

-- @author ruki

-- @file check.lua

--

-- imports

import("core.project.config")

import("detect.sdks.find_cross_toolchain")

-- check the cross toolchain

function main(toolchain)

local sdkdir = toolchain:sdkdir()

local bindir = toolchain:bindir()

local cross_toolchain = find_cross_toolchain(sdkdir, {bindir = bindir})

if cross_toolchain then

toolchain:config_set("cross", cross_toolchain.cross)

toolchain:config_set("bindir", cross_toolchain.bindir)

toolchain:configs_save()

else

raise("sdcc toolchain not found!")

end

return cross_toolchain

end

repos/weekend-raytracer-zig/README.md

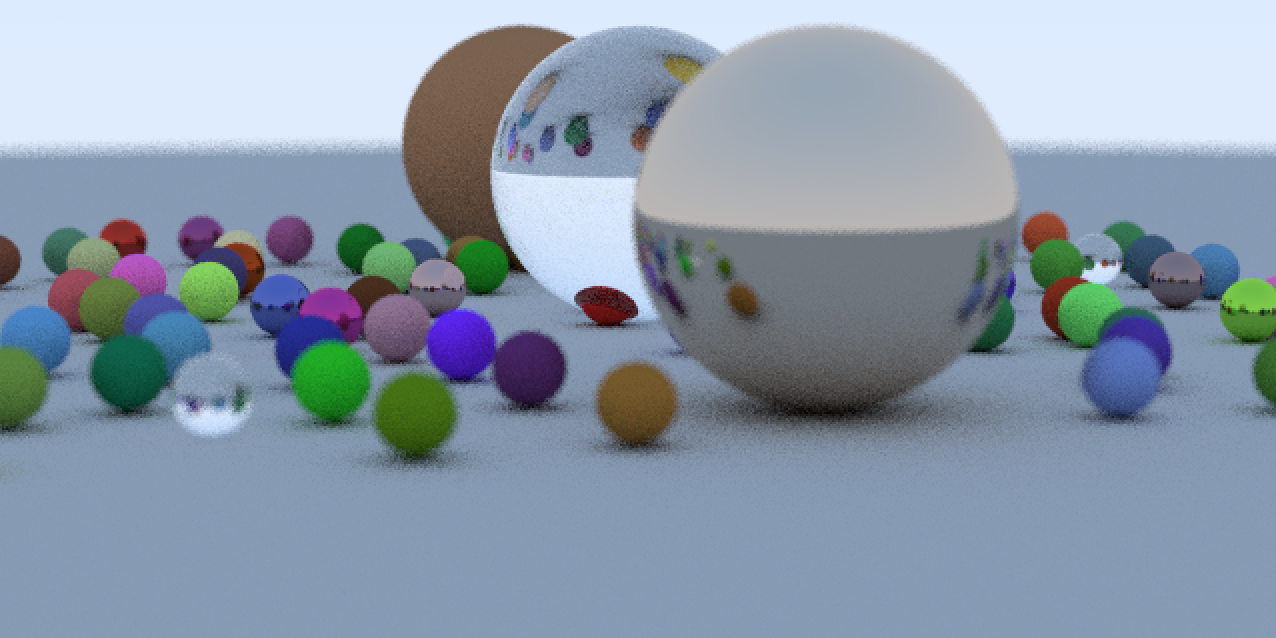

# Ray Tracing in One Weekend (Zig)

This is a fairly straightforward implementation of Peter Shirley's "Ray Tracing in One Weekend" book in the Zig programming language.

To run:

```

$ zig build run -Drelease-fast

```

# Dependencies

- Zig: https://ziglang.org/

- SDL2: https://wiki.libsdl.org/Installation

repos/weekend-raytracer-zig/build.zig

const Builder = @import("std").build.Builder;

pub fn build(b: *Builder) void {

const exe = b.addExecutable("zig-tracer", "src/main.zig");

exe.setBuildMode(b.standardReleaseOptions());

exe.linkSystemLibrary("c");

if (exe.target.isDarwin()) {

exe.addIncludeDir("/Library/Frameworks/SDL2.framework/Headers");

exe.linkFramework("SDL2");

} else {

exe.linkSystemLibrary("SDL2");

}

exe.install();

const run = b.step("run", "Run the project");

const run_cmd = exe.run();

run_cmd.step.dependOn(b.getInstallStep());

run.dependOn(&run_cmd.step);

}

repos/weekend-raytracer-zig/src/camera.zig

const std = @import("std");

const math = std.math;

const rand = std.rand;

const Ray = @import("ray.zig").Ray;

const Vec3f = @import("vector.zig").Vec3f;

pub const Camera = struct {

const Self = @This();

eye: Vec3f,

lower_left_corner: Vec3f,

horizontal: Vec3f,

vertical: Vec3f,

u: Vec3f,

v: Vec3f,

lens_radius: f32,

pub fn new(lookfrom: Vec3f, lookat: Vec3f, vup: Vec3f, vfov: f32, aspect: f32, aperture: f32, focus_distance: f32) Camera {

const lens_radius = 0.5 * aperture;

const theta = vfov * math.pi / 180.0;

const half_height = math.tan(0.5 * theta);

const half_width = aspect * half_height;

const w = lookfrom.sub(lookat).makeUnitVector();

const u = vup.cross(w).makeUnitVector();

const v = w.cross(u);

const lower_left_corner = lookfrom.sub(u.mul(half_width * focus_distance)).sub(v.mul(half_height * focus_distance)).sub(w.mul(focus_distance));

const horizontal = u.mul(2.0 * half_width * focus_distance);

const vertical = v.mul(2.0 * half_height * focus_distance);

return Self{ .eye = lookfrom, .lower_left_corner = lower_left_corner, .horizontal = horizontal, .vertical = vertical, .u = u, .v = v, .lens_radius = lens_radius };

}

pub fn makeRay(self: *const Self, r: *rand.Random, u: f32, v: f32) Ray {

const rd = Vec3f.randomInUnitDisk(r).mul(self.lens_radius);

const offset = self.u.mul(rd.x).add(self.v.mul(rd.y));

const lens_pos = self.eye.add(offset);

return Ray.new(lens_pos, self.lower_left_corner.add(self.horizontal.mul(u)).add(self.vertical.mul(v)).sub(lens_pos).makeUnitVector());

}

};
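The camera set-up in `Camera.new` follows the book's construction: convert the vertical field of view to radians, build an orthonormal basis `w`, `u`, `v` from `lookfrom`, `lookat` and `vup`, then place the image plane at `focus_distance`. A plain-Lua sketch with vectors as `{x, y, z}` tables; the helper names are hypothetical and Lua's `math.pi` is used for the degree-to-radian conversion.

```lua
local function sub(a, b) return {a[1] - b[1], a[2] - b[2], a[3] - b[3]} end
local function scale(a, s) return {a[1] * s, a[2] * s, a[3] * s} end
local function cross(a, b)
    return {a[2] * b[3] - a[3] * b[2], a[3] * b[1] - a[1] * b[3], a[1] * b[2] - a[2] * b[1]}
end
local function unit(a)
    local len = math.sqrt(a[1] ^ 2 + a[2] ^ 2 + a[3] ^ 2)
    return scale(a, 1 / len)
end

local function camera_basis(lookfrom, lookat, vup, vfov, aspect, focus_distance)
    local theta = vfov * math.pi / 180
    local half_height = math.tan(theta / 2)
    local half_width = aspect * half_height
    local w = unit(sub(lookfrom, lookat))  -- points from the target back to the eye
    local u = unit(cross(vup, w))          -- camera "right"
    local v = cross(w, u)                  -- camera "up"
    local lower_left = sub(sub(sub(lookfrom,
        scale(u, half_width * focus_distance)),
        scale(v, half_height * focus_distance)),
        scale(w, focus_distance))
    return {
        u = u, v = v, w = w,
        lower_left_corner = lower_left,
        horizontal = scale(u, 2 * half_width * focus_distance),
        vertical = scale(v, 2 * half_height * focus_distance),
    }
end
```

With the eye at the origin looking down -z, a 90 degree field of view, aspect 2 and focus distance 1, this reproduces the book's classic image plane: lower-left corner (-2, -1, -1), horizontal span 4, vertical span 2.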

repos/weekend-raytracer-zig/src/main.zig

const Camera = @import("camera.zig").Camera;

const hitable = @import("hitable.zig");

const mat = @import("material.zig");

const Material = mat.Material;

const std = @import("std");

const ArrayList = std.ArrayList;

const rand = std.rand;

const Ray = @import("ray.zig").Ray;

const Vec3f = @import("vector.zig").Vec3f;

const Sphere = hitable.Sphere;

const World = hitable.World;

const c = @cImport({

@cInclude("SDL.h");

});

// See https://github.com/zig-lang/zig/issues/565

// SDL_video.h:#define SDL_WINDOWPOS_UNDEFINED SDL_WINDOWPOS_UNDEFINED_DISPLAY(0)

// SDL_video.h:#define SDL_WINDOWPOS_UNDEFINED_DISPLAY(X) (SDL_WINDOWPOS_UNDEFINED_MASK|(X))

// SDL_video.h:#define SDL_WINDOWPOS_UNDEFINED_MASK 0x1FFF0000u

const SDL_WINDOWPOS_UNDEFINED = @bitCast(c_int, c.SDL_WINDOWPOS_UNDEFINED_MASK);

const window_width: c_int = 640;

const window_height: c_int = 320;

const num_threads: i32 = 16;

const num_samples: i32 = 256;

const max_depth: i32 = 16;

// The translated declaration does not mark this return value as optional, but

// SDL documents that SDL_GetWindowSurface returns NULL on failure, so redeclare

// it here with a `?*` return type.

extern fn SDL_GetWindowSurface(window: *c.SDL_Window) ?*c.SDL_Surface;

// Write one pixel; assumes a 32-bit surface format (4 bytes per pixel).

fn setPixel(surf: *c.SDL_Surface, x: c_int, y: c_int, pixel: u32) void {

const target_pixel = @ptrToInt(surf.pixels) +

@intCast(usize, y) * @intCast(usize, surf.pitch) +

@intCast(usize, x) * 4;

@intToPtr(*u32, target_pixel).* = pixel;

}

fn colorNormal(r: Ray, w: *const World) Vec3f {

const maybe_hit = w.hit(r, 0.001, 10000.0);

if (maybe_hit) |hit| {

const n = hit.n.makeUnitVector();

return n.add(Vec3f.one()).mul(0.5);

} else {

const unit_direction = r.direction.makeUnitVector();

const t = 0.5 * (unit_direction.y + 1.0);

return Vec3f.new(1.0, 1.0, 1.0).mul(1.0 - t).add(Vec3f.new(0.5, 0.7, 1.0).mul(t));

}

}

fn colorAlbedo(r: Ray, w: *const World) Vec3f {

const maybe_hit = w.hit(r, 0.001, 10000.0);

if (maybe_hit) |hit| {

return switch (hit.material) {

Material.Lambertian => |l| l.albedo,

Material.Metal => |m| m.albedo,

Material.Dielectric => Vec3f.one(), // dielectrics have no albedo; show them as white

};

} else {

const unit_direction = r.direction.makeUnitVector();

const t = 0.5 * (unit_direction.y + 1.0);

return Vec3f.new(1.0, 1.0, 1.0).mul(1.0 - t).add(Vec3f.new(0.5, 0.7, 1.0).mul(t));

}

}

fn colorDepthHelper(r: Ray, w: *const World, random: *rand.Random, depth: i32) i32 {

const maybe_hit = w.hit(r, 0.001, 10000.0);

if (maybe_hit) |hit| {

if (depth < max_depth) {

const scatter = switch (hit.material) {

Material.Lambertian => |l| l.scatter(hit, random),

Material.Metal => |m| m.scatter(r, hit, random),

Material.Dielectric => |d| d.scatter(r, hit, random),

};

return colorDepthHelper(scatter.ray, w, random, depth + 1);

} else {

return depth; // reached max depth

}

} else {

return depth; // hit the sky

}

}

fn colorDepth(r: Ray, w: *const World, random: *rand.Random) Vec3f {

const depth = colorDepthHelper(r, w, random, 0);

return Vec3f.new(@intToFloat(f32, depth) / @intToFloat(f32, max_depth), 0.0, 0.0);

}

fn colorScattering(r: Ray, w: *const World, random: *rand.Random) Vec3f {

const maybe_hit = w.hit(r, 0.001, 10000.0);

if (maybe_hit) |hit| {

const scatter = switch (hit.material) {

Material.Lambertian => |l| l.scatter(hit, random),

Material.Metal => |m| m.scatter(r, hit, random),

Material.Dielectric => |d| d.scatter(r, hit, random),

};

const dir = scatter.ray.direction.makeUnitVector();

return dir.add(Vec3f.one()).mul(0.5);

} else {

const unit_direction = r.direction.makeUnitVector();

const t = 0.5 * (unit_direction.y + 1.0);

return Vec3f.new(1.0, 1.0, 1.0).mul(1.0 - t).add(Vec3f.new(0.5, 0.7, 1.0).mul(t));

}

}

fn color(r: Ray, world: *const World, random: *rand.Random, depth: i32) Vec3f {

const maybe_hit = world.hit(r, 0.001, 10000.0);

if (maybe_hit) |hit| {

if (depth < max_depth) {

const scatter = switch (hit.material) {

Material.Lambertian => |l| l.scatter(hit, random),

Material.Metal => |m| m.scatter(r, hit, random),

Material.Dielectric => |d| d.scatter(r, hit, random),

};

return color(scatter.ray, world, random, depth + 1).elementwiseMul(scatter.attenuation);

} else {

return Vec3f.zero();

}

} else {

const unit_direction = r.direction.makeUnitVector();

const t = 0.5 * (unit_direction.y + 1.0);

return Vec3f.new(1.0, 1.0, 1.0).mul(1.0 - t).add(Vec3f.new(0.5, 0.7, 1.0).mul(t));

}

}

fn toBgra(r: u32, g: u32, b: u32) u32 {

return 255 << 24 | r << 16 | g << 8 | b;

}

const ThreadContext = struct {

thread_index: i32,

num_pixels: i32,

chunk_size: i32,

rng: rand.DefaultPrng,

surface: *c.SDL_Surface,

world: *const World,

camera: *const Camera,

};

fn renderFn(context: *ThreadContext) void {

const start_index = context.thread_index * context.chunk_size;

const end_index = if (start_index + context.chunk_size <= context.num_pixels) start_index + context.chunk_size else context.num_pixels;

var idx: i32 = start_index;

while (idx < end_index) : (idx += 1) {

const w = @mod(idx, window_width);

const h = @divTrunc(idx, window_width);

var sample: i32 = 0;

var color_accum = Vec3f.zero();

while (sample < num_samples) : (sample += 1) {

const v = (@intToFloat(f32, h) + context.rng.random.float(f32)) / @intToFloat(f32, window_height);

const u = (@intToFloat(f32, w) + context.rng.random.float(f32)) / @intToFloat(f32, window_width);

const r = context.camera.makeRay(&context.rng.random, u, v);

const color_sample = color(r, context.world, &context.rng.random, 0);

// const color_sample = colorScattering(r, context.world, &context.rng.random);

// const color_sample = colorDepth(r, context.world, &context.rng.random);

// const color_sample = colorNormal(r, context.world);

// const color_sample = colorAlbedo(r, context.world);

color_accum = color_accum.add(color_sample);

}

color_accum = color_accum.mul(1.0 / @intToFloat(f32, num_samples));

setPixel(context.surface, w, window_height - h - 1, toBgra(@floatToInt(u32, 255.99 * color_accum.x), @floatToInt(u32, 255.99 * color_accum.y), @floatToInt(u32, 255.99 * color_accum.z)));

}

}

pub fn main() !void {

if (c.SDL_Init(c.SDL_INIT_VIDEO) != 0) {

c.SDL_Log("Unable to initialize SDL: %s", c.SDL_GetError());

return error.SDLInitializationFailed;

}

defer c.SDL_Quit();

const window = c.SDL_CreateWindow("weekend raytracer", SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED, window_width, window_height, c.SDL_WINDOW_OPENGL) orelse {

c.SDL_Log("Unable to create window: %s", c.SDL_GetError());

return error.SDLInitializationFailed;

};

const surface = SDL_GetWindowSurface(window) orelse {

c.SDL_Log("Unable to get window surface: %s", c.SDL_GetError());

return error.SDLInitializationFailed;

};

// Scene setup: camera parameters and the world of spheres

const lookfrom = Vec3f.new(16.0, 2.0, 4.0);

const lookat = Vec3f.new(0.0, 0.0, 0.0);

const vfov = 15.0;

const focus_distance = lookfrom.sub(lookat).length();

const aperture = 0.4;

// 640 by 320

const aspect_ratio = @intToFloat(f32, window_width) / @intToFloat(f32, window_height);

const camera = Camera.new(lookfrom, lookat, Vec3f.new(0.0, 1.0, 0.0), vfov, aspect_ratio, aperture, focus_distance);

var world = World.init();

defer world.deinit();

try world.spheres.append(Sphere.new(Vec3f.new(0.0, -1000.0, -1.0), 1000.0, Material.lambertian(Vec3f.new(0.5, 0.5, 0.5))));

try world.spheres.append(Sphere.new(Vec3f.new(0.0, 1.0, 0.0), 1.0, Material.dielectric(1.5)));

try world.spheres.append(Sphere.new(Vec3f.new(-4.0, 1.0, 0.0), 1.0, Material.lambertian(Vec3f.new(0.4, 0.2, 0.1))));

try world.spheres.append(Sphere.new(Vec3f.new(4.0, 1.0, 0.0), 1.0, Material.metal(Vec3f.new(0.7, 0.6, 0.5), 0.0)));

var prng = rand.DefaultPrng.init(0);

const sphere_offset = Vec3f.new(4.0, 0.2, 0.0);

var i: i32 = -5;

while (i < 5) : (i += 1) {

var j: i32 = -5;

while (j < 5) : (j += 1) {

const a = @intToFloat(f32, i);

const b = @intToFloat(f32, j);

const center = Vec3f.new(a + 0.9 * prng.random.float(f32), 0.2, b + 0.9 * prng.random.float(f32));

const choose_mat = prng.random.float(f32);

if (center.sub(sphere_offset).length() > 0.9) {

if (choose_mat < 0.8) {

// diffuse

const random_albedo = Vec3f.new(prng.random.float(f32), prng.random.float(f32), prng.random.float(f32));

try world.spheres.append(Sphere.new(center, 0.2, Material.lambertian(random_albedo)));

} else if (choose_mat < 0.95) {

// metal

const random_albedo = Vec3f.new(prng.random.float(f32), prng.random.float(f32), prng.random.float(f32));

try world.spheres.append(Sphere.new(center, 0.2, Material.metal(random_albedo, 0.5 * prng.random.float(f32))));

} else {

try world.spheres.append(Sphere.new(center, 0.2, Material.dielectric(1.5)));

}

}

}

}

{

_ = c.SDL_LockSurface(surface);

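var tasks = ArrayList(*std.Thread).init(std.heap.page_allocator);

defer tasks.deinit();

var contexts = ArrayList(ThreadContext).init(std.heap.page_allocator);

defer contexts.deinit();

// Each thread receives a pointer into `contexts.items`, so reserve every slot

// up front: a reallocation during a later append would leave the threads that

// are already running with dangling pointers.

try contexts.ensureCapacity(@intCast(usize, num_threads));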

const chunk_size = blk: {

const num_pixels = window_width * window_height;

const n = num_pixels / num_threads;

const rem = num_pixels % num_threads;

if (rem > 0) {

break :blk n + 1;

} else {

break :blk n;

}

};

{

var ithread: i32 = 0;

while (ithread < num_threads) : (ithread += 1) {

try contexts.append(ThreadContext{

.thread_index = ithread,

.num_pixels = window_width * window_height,

.chunk_size = chunk_size,

.rng = rand.DefaultPrng.init(@intCast(u64, ithread)),

.surface = surface,

.world = &world,

.camera = &camera,

});

const thread = try std.Thread.spawn(&contexts.items[@intCast(usize, ithread)], renderFn);

try tasks.append(thread);

}

}

for (tasks.items) |task| {

task.wait();

}

c.SDL_UnlockSurface(surface);

}

if (c.SDL_UpdateWindowSurface(window) != 0) {

c.SDL_Log("Error updating window surface: %s", c.SDL_GetError());

return error.SDLUpdateWindowFailed;

}

var running = true;

while (running) {

var event: c.SDL_Event = undefined;

while (c.SDL_PollEvent(&event) != 0) {

switch (event.@"type") {

c.SDL_QUIT => {

running = false;

},

else => {},

}

}

c.SDL_Delay(16);

}

}

|

0 |

repos/weekend-raytracer-zig

|

repos/weekend-raytracer-zig/src/vector.zig

|

const math = @import("std").math;

const Random = @import("std").rand.Random;

pub fn Vector3(comptime T: type) type {

return packed struct {

const Self = @This();

x: T,

y: T,

z: T,

pub fn new(x: T, y: T, z: T) Self {

return Self{

.x = x,

.y = y,

.z = z,

};

}

pub fn zero() Self {

return Self{

.x = 0.0,

.y = 0.0,

.z = 0.0,

};

}

pub fn one() Self {

return Self{

.x = 1.0,

.y = 1.0,

.z = 1.0,

};

}

pub fn add(a: Self, b: Self) Self {

return Self{

.x = a.x + b.x,

.y = a.y + b.y,

.z = a.z + b.z,

};

}

pub fn sub(a: Self, b: Self) Self {

return Self{

.x = a.x - b.x,

.y = a.y - b.y,

.z = a.z - b.z,

};

}

pub fn mul(self: Self, s: T) Self {

return Self{

.x = s * self.x,

.y = s * self.y,

.z = s * self.z,

};

}

pub fn elementwiseMul(lhs: Self, rhs: Self) Self {

return Self{

.x = lhs.x * rhs.x,

.y = lhs.y * rhs.y,

.z = lhs.z * rhs.z,

};

}

pub fn length(self: Self) T {

return math.sqrt(self.x * self.x + self.y * self.y + self.z * self.z);

}

pub fn lengthSquared(self: Self) T {

return self.x * self.x + self.y * self.y + self.z * self.z;

}

pub fn dot(a: Self, b: Self) T {

return a.x * b.x + a.y * b.y + a.z * b.z;

}

pub fn cross(a: Self, b: Self) Self {

return Self{

.x = a.y * b.z - a.z * b.y,

.y = a.z * b.x - a.x * b.z,

.z = a.x * b.y - a.y * b.x,

};

}

pub fn makeUnitVector(self: Self) Self {

const inv_n = 1.0 / self.length();

return Self{

.x = inv_n * self.x,

.y = inv_n * self.y,

.z = inv_n * self.z,

};

}

pub fn randomInUnitSphere(r: *Random) Self {

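// Rejection sampling: draw points uniformly from the cube [-1, 1)^3 until one

// lands strictly inside the unit sphere.

return while (true) {

const p = Self.new(2.0 * r.float(T) - 1.0, 2.0 * r.float(T) - 1.0, 2.0 * r.float(T) - 1.0);

if (p.lengthSquared() < 1.0) {

break p;

}

// When `while` is used as an expression, `break p` supplies its value and the

// `else` branch supplies one if the condition turns false. That cannot happen

// with `while (true)`, but the compiler still asked for an `else` here.

} else Self.zero();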

}

pub fn randomInUnitDisk(r: *Random) Self {

return while (true) {

const p = Self.new(2.0 * r.float(T) - 1.0, 2.0 * r.float(T) - 1.0, 0.0);

if (p.lengthSquared() < 1.0) {

break p;

}

} else Self.zero();

}

pub fn reflect(self: Self, n: Self) Self {

return self.sub(n.mul(2.0 * self.dot(n)));

}

};

}

pub const Vec3f = Vector3(f32);

const assert = @import("std").debug.assert;

const epsilon: f32 = 0.00001;

test "Vector3.add" {

const lhs = Vec3f.new(1.0, 2.0, 3.0);

const rhs = Vec3f.new(2.0, 3.0, 4.0);

const r = lhs.add(rhs);

assert(math.fabs(r.x - 3.0) < epsilon);

assert(math.fabs(r.y - 5.0) < epsilon);

assert(math.fabs(r.z - 7.0) < epsilon);

}

test "Vector3.sub" {

const lhs = Vec3f.new(2.0, 3.0, 4.0);

const rhs = Vec3f.new(2.0, 4.0, 3.0);

const r = lhs.sub(rhs);

assert(math.fabs(r.x) < epsilon);

assert(math.fabs(r.y + 1.0) < epsilon);

assert(math.fabs(r.z - 1.0) < epsilon);

}

test "Vector3.makeUnitVector" {

const v = Vec3f.new(1.0, 2.0, 3.0);

const uv = v.makeUnitVector();

assert(math.fabs(uv.length() - 1.0) < epsilon);

}

test "Vector3.cross" {

const lhs = Vec3f.new(1.0, 0.0, 2.0);

const rhs = Vec3f.new(2.0, 1.0, 2.0);

const res = lhs.cross(rhs);

assert(math.fabs(res.x + 2.0) < epsilon);

assert(math.fabs(res.y - 2.0) < epsilon);

assert(math.fabs(res.z - 1.0) < epsilon);

}
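
// Hypothetical test (not part of the original repo) for reflect(), which
// mirrors a direction about a unit normal n: v - 2 * (v . n) * n.
// A ray heading down and to the right bounces off a floor whose normal is +y.
test "Vector3.reflect" {
    const v = Vec3f.new(1.0, -1.0, 0.0);
    const n = Vec3f.new(0.0, 1.0, 0.0);
    const r = v.reflect(n);
    // The y component flips sign; the tangential x and z components are kept.
    assert(r.x == 1.0);
    assert(r.y == 1.0);
    assert(r.z == 0.0);
}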

|

0 |

repos/weekend-raytracer-zig

|

repos/weekend-raytracer-zig/src/hitable.zig

|

const std = @import("std");

const math = std.math;

const ArrayList = std.ArrayList;

const debug = std.debug;

const mat = @import("material.zig");

const Material = @import("material.zig").Material;

const Ray = @import("ray.zig").Ray;

const Vec3f = @import("vector.zig").Vec3f;

pub const HitRecord = struct {

t: f32,

p: Vec3f,

n: Vec3f,

material: mat.Material

};

pub const Sphere = struct {

center: Vec3f,

radius: f32,

material: Material,

pub fn new(center: Vec3f, radius: f32, material: Material) Sphere {

return Sphere{

.center = center,

.radius = radius,

.material = material,

};

}

pub fn hit(self: Sphere, ray: Ray, t_min: f32, t_max: f32) ?HitRecord {

// C: sphere center

// r: sphere radius

// O: ray origin

// D: ray direction

// (t*D + O - C)^2 = r^2

// t^2 * (D.D) + 2 * t * D.(O - C) + (O - C).(O - C) - r^2 = 0

const oc = ray.origin.sub(self.center);

const a = ray.direction.dot(ray.direction);

// b is half the usual linear coefficient 2 * D.(O - C), which removes the

// factors of 2 and 4 from the quadratic formula below.

const b = oc.dot(ray.direction);

const c = oc.dot(oc) - self.radius * self.radius;

const discriminant = b * b - a * c;

if (discriminant > 0.0) {

{

const t = (-b - math.sqrt(b * b - a * c)) / a;

if (t < t_max and t > t_min) {

const hit_point = ray.pointAtParameter(t);

return HitRecord{

.t = t,

.p = hit_point,

.n = (hit_point.sub(self.center)).mul(1.0 / self.radius),

.material = self.material

};

}

}

{

const t = (-b + math.sqrt(b * b - a * c)) / a;

if (t < t_max and t > t_min) {

const hit_point = ray.pointAtParameter(t);

return HitRecord{

.t = t,

.p = hit_point,

.n = (hit_point.sub(self.center)).mul(1.0 / self.radius),

.material = self.material

};

}

}

}

return null;

}

};

pub const World = struct {

spheres: ArrayList(Sphere),

pub fn init() World {

return World {

.spheres = ArrayList(Sphere).init(std.heap.page_allocator)

};

}

pub fn deinit(self: *World) void {

self.spheres.deinit();

}

pub fn hit(self: *const World, ray: Ray, t_min: f32, t_max: f32) ?HitRecord {

var maybe_hit: ?HitRecord = null;

var closest_so_far = t_max;

for (self.spheres.items) |sphere| {

if (sphere.hit(ray, t_min, t_max)) |hit_rec| {

if (hit_rec.t < closest_so_far) {

maybe_hit = hit_rec;

closest_so_far = hit_rec.t;

}

}

}

return maybe_hit;

}

};
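
// Hypothetical test (not part of the original repo) walking through the
// half-b quadratic in Sphere.hit. A ray from (0, 0, -5) along +z against a
// unit sphere at the origin gives a = 1, b = -5, c = 24 and a discriminant
// of 1, so the roots are t = 4 (front surface) and t = 6 (back surface).
test "Sphere.hit picks the nearest root inside (t_min, t_max)" {
    const sphere = Sphere.new(Vec3f.zero(), 1.0, Material.lambertian(Vec3f.one()));
    const ray = Ray.new(Vec3f.new(0.0, 0.0, -5.0), Vec3f.new(0.0, 0.0, 1.0));

    // Both roots are admissible, so the nearer one is reported, and its
    // normal points back toward the ray origin.
    const front = sphere.hit(ray, 0.001, 100.0).?;
    debug.assert(front.t == 4.0);
    debug.assert(front.n.z == -1.0);

    // Raising t_min past 4 rejects the near root and leaves the far one.
    const back = sphere.hit(ray, 5.0, 100.0).?;
    debug.assert(back.t == 6.0);
    debug.assert(back.n.z == 1.0);

    // If the sphere lies entirely beyond t_max, there is no hit.
    debug.assert(sphere.hit(ray, 0.001, 3.0) == null);
}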

|

0 |

repos/weekend-raytracer-zig

|

repos/weekend-raytracer-zig/src/material.zig

|

const HitRecord = @import("hitable.zig").HitRecord;

const math = @import("std").math;

const Random = @import("std").rand.Random;

const Ray = @import("ray.zig").Ray;

const Vec3f = @import("vector.zig").Vec3f;

pub const Scatter = struct {

attenuation: Vec3f,

ray: Ray,

pub fn new(attenuation: Vec3f, ray: Ray) Scatter {

return Scatter{

.attenuation = attenuation,

.ray = ray,

};

}

};

pub const Lambertian = struct {

albedo: Vec3f,

pub fn scatter(self: Lambertian, hit: HitRecord, rand: *Random) Scatter {

const target = hit.p.add(hit.n.add(Vec3f.randomInUnitSphere(rand)));

const attenuation = self.albedo;

const scattered_ray = Ray.new(hit.p, target.sub(hit.p).makeUnitVector());

return Scatter.new(attenuation, scattered_ray);

}

};

pub const Metal = struct {

albedo: Vec3f,

fuzz: f32,

pub fn scatter(self: Metal, ray: Ray, hit: HitRecord, rand: *Random) Scatter {

const reflected = ray.direction.reflect(hit.n.makeUnitVector());

const attenuation = self.albedo;

const scattered = Ray.new(hit.p, reflected.add(Vec3f.randomInUnitSphere(rand).mul(self.fuzz)).makeUnitVector());

return Scatter.new(attenuation, scattered);

}

};

fn refract(v: Vec3f, n: Vec3f, ni_over_nt: f32) ?Vec3f {

// ni * sin(i) = nt * sin(t)

// sin(t) = sin(i) * (ni / nt)

const uv = v.makeUnitVector();

const dt = uv.dot(n);

const discriminant = 1.0 - ni_over_nt * ni_over_nt * (1.0 - dt * dt);

if (discriminant > 0.0) {

// ni_over_nt * (uv - dt * n) - (n * sqrt(discriminant))

return uv.sub(n.mul(dt)).mul(ni_over_nt).sub(n.mul(math.sqrt(discriminant)));

}

return null;

}

fn schlick(cosine: f32, refraction_index: f32) f32 {

var r0 = (1.0 - refraction_index) / (1.0 + refraction_index);

r0 = r0 * r0;

return r0 + (1.0 - r0) * math.pow(f32, (1.0 - cosine), 5.0);

}

pub const Dielectric = struct {

refraction_index: f32,

pub fn scatter(self: Dielectric, ray: Ray, hit: HitRecord, rand: *Random) Scatter {

// Choose the normal, index ratio and cosine depending on whether the ray is leaving the object (ray direction and hit normal in the same hemisphere) or entering it.

var outward_normal: Vec3f = undefined;

var ni_over_nt: f32 = undefined;

var cosine: f32 = undefined;

if (ray.direction.dot(hit.n) > 0.0) {

outward_normal = Vec3f.new(-hit.n.x, -hit.n.y, -hit.n.z);

ni_over_nt = self.refraction_index;

cosine = self.refraction_index * ray.direction.dot(hit.n) / ray.direction.length();

} else {

outward_normal = hit.n;

ni_over_nt = 1.0 / self.refraction_index;

cosine = -ray.direction.dot(hit.n) / ray.direction.length();

}

if (refract(ray.direction, outward_normal, ni_over_nt)) |refracted_dir| {

const reflection_prob = schlick(cosine, self.refraction_index);

return if (rand.float(f32) < reflection_prob) Scatter.new(Vec3f.one(), Ray.new(hit.p, ray.direction.reflect(hit.n).makeUnitVector())) else Scatter.new(Vec3f.one(), Ray.new(hit.p, refracted_dir.makeUnitVector()));

} else {

return Scatter.new(Vec3f.one(), Ray.new(hit.p, ray.direction.reflect(hit.n).makeUnitVector()));

}

}

};

pub const Material = union(enum) {

Lambertian: Lambertian,

Metal: Metal,

Dielectric: Dielectric,

pub fn lambertian(albedo: Vec3f) Material {

return Material{ .Lambertian = Lambertian{ .albedo = albedo } };

}

pub fn metal(albedo: Vec3f, fuzz: f32) Material {

return Material{ .Metal = Metal{ .albedo = albedo, .fuzz = fuzz } };

}

pub fn dielectric(refraction_index: f32) Material {

return Material{ .Dielectric = Dielectric{ .refraction_index = refraction_index } };

}

};

const std = @import("std");

const assert = std.debug.assert;

test "complex union" {

const complex_union = Material{ .Lambertian = Lambertian{ .albedo = Vec3f.new(1.0, 0.0, 0.0) } };

assert(complex_union.Lambertian.albedo.x == 1.0);

}

test "switch expression" {

const complex_union = Material{ .Lambertian = Lambertian{ .albedo = Vec3f.new(1.0, 0.0, 0.0) } };

assert(complex_union.Lambertian.albedo.x == 1.0);

const val = switch (complex_union) {

Material.Lambertian => |l| l.albedo.x,

Material.Metal => |m| m.albedo.x,

Material.Dielectric => |d| d.refraction_index,

};

assert(val == 1.0);

}
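
// Hypothetical tests (not part of the original repo) for the optics helpers
// refract() and schlick() defined above.
test "refract: matched indices leave the direction unchanged" {
    // ni_over_nt = 1 means no bending: a ray heading straight down onto an
    // upward-facing normal keeps going straight down.
    const dir = refract(Vec3f.new(0.0, -1.0, 0.0), Vec3f.new(0.0, 1.0, 0.0), 1.0).?;
    assert(dir.x == 0.0 and dir.y == -1.0 and dir.z == 0.0);
}

test "refract: grazing ray into a less dense medium is totally reflected" {
    // With ni / nt = 1.5 and a shallow angle, the discriminant is negative,
    // so refract() reports that no transmitted ray exists.
    assert(refract(Vec3f.new(1.0, -0.1, 0.0), Vec3f.new(0.0, 1.0, 0.0), 1.5) == null);
}

test "schlick: reflectance goes from r0 at normal incidence to 1 at grazing" {
    // For n = 1.5, r0 = ((1 - 1.5) / (1 + 1.5))^2 = 0.04.
    const normal = schlick(1.0, 1.5) - 0.04;
    assert(normal < 1e-6 and normal > -1e-6);
    const grazing = schlick(0.0, 1.5) - 1.0;
    assert(grazing < 1e-6 and grazing > -1e-6);
}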

|

0 |

repos/weekend-raytracer-zig

|

repos/weekend-raytracer-zig/src/ray.zig

|

const Vec3f = @import("vector.zig").Vec3f;

pub const Ray = struct {

origin: Vec3f,

direction: Vec3f,

pub fn new(origin: Vec3f, direction: Vec3f) Ray {

return Ray{

.origin = origin,

.direction = direction,

};

}

pub fn pointAtParameter(self: Ray, t: f32) Vec3f {

return self.origin.add(self.direction.mul(t));

}

};

const assert = @import("std").debug.assert;

const math = @import("std").math;

const epsilon: f32 = 0.00001;

test "Ray.pointAtParameter" {

const r = Ray.new(Vec3f.zero(), Vec3f.one());

const p = r.pointAtParameter(1.0);

assert(math.fabs(p.x - 1.0) < epsilon);

assert(math.fabs(p.y - 1.0) < epsilon);

assert(math.fabs(p.z - 1.0) < epsilon);

}

|

0 |

repos/advent-of-code

|

repos/advent-of-code/2023/build.zig.zon

|

.{

.name = "2023",

.version = "0.0.0",

.dependencies = .{},

.paths = .{

"",

},

}

|

0 |

repos/advent-of-code

|

repos/advent-of-code/2023/build.zig

|

const std = @import("std");

pub fn build(b: *std.Build) void {

const target = b.standardTargetOptions(.{});

const optimize = b.standardOptimizeOption(.{});

for (0..4) |day| {

const name = b.fmt("day{d:0>2}", .{day + 1});

const exe = b.addExecutable(.{

.name = name,

.root_source_file = .{ .path = b.fmt("src/{s}.zig", .{name}) },

.target = target,

.optimize = optimize,

});

b.installArtifact(exe);

const run_cmd = b.addRunArtifact(exe);

run_cmd.step.dependOn(b.getInstallStep());

if (b.args) |args| {

run_cmd.addArgs(args);

}

const run_step = b.step(b.fmt("{s}", .{name}), b.fmt("Run {s}", .{name}));

run_step.dependOn(&run_cmd.step);

}

}

|

0 |

repos/advent-of-code/2023

|

repos/advent-of-code/2023/src/day03.zig

|

const std = @import("std");

const print = std.debug.print;

const ArrayList = std.ArrayList;

const Allocator = std.mem.Allocator;

const isDigit = std.ascii.isDigit;

fn findNum(line: []const u8, idx: usize) std.fmt.ParseIntError!usize {

var begin = idx;

while (begin > 0 and isDigit(line[begin - 1])) {

begin -= 1;

}

var end = if (isDigit(line[idx])) idx + 1 else idx;

while (end < line.len and isDigit(line[end])) {

end += 1;

}

return std.fmt.parseUnsigned(usize, line[begin..end], 10);

}
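
// Hypothetical test (not part of the original repo): findNum expands left and
// right from any digit inside a number, so any index into a number yields the
// whole number.
test "findNum expands from any digit of a number" {
    const row = "467..114..";
    try std.testing.expectEqual(@as(usize, 467), try findNum(row, 0));
    try std.testing.expectEqual(@as(usize, 467), try findNum(row, 2));
    try std.testing.expectEqual(@as(usize, 114), try findNum(row, 5));
    try std.testing.expectEqual(@as(usize, 114), try findNum(row, 7));
}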

pub fn solve(allocator: std.mem.Allocator, input_path: []const u8) !void {

const input_file = try std.fs.cwd().openFile(input_path, .{ .mode = .read_only });

defer input_file.close();

var buffered = std.io.bufferedReader(input_file.reader());

var reader = buffered.reader();

var lines_array = ArrayList([]const u8).init(allocator);

defer lines_array.deinit();

defer {

for (lines_array.items) |line| {

allocator.free(line);

}

}

var temp = std.ArrayList(u8).init(allocator);

defer temp.deinit();

while (true) {

defer temp.clearRetainingCapacity();

reader.streamUntilDelimiter(temp.writer(), '\n', null) catch |err| switch (err) {

error.EndOfStream => break,

else => return err,

};

try lines_array.append(try temp.toOwnedSlice());

}

var part_one: usize = 0;

var part_two: usize = 0;

const lines = lines_array.items;

for (lines, 0..) |line, line_idx| {

for (line, 0..) |char, char_idx| {

if (char != '.' and !isDigit(char)) {

// check for numbers around the symbol

const l = char_idx > 0 and isDigit(line[char_idx - 1]);

const r = char_idx < line.len - 1 and isDigit(line[char_idx + 1]);

const t = line_idx > 0 and isDigit(lines[line_idx - 1][char_idx]);

const b = line_idx < lines.len - 1 and isDigit(lines[line_idx + 1][char_idx]);

const tl = line_idx > 0 and char_idx > 0 and isDigit(lines[line_idx - 1][char_idx - 1]);

const tr = line_idx > 0 and char_idx < line.len - 1 and isDigit(lines[line_idx - 1][char_idx + 1]);

const bl = line_idx < lines.len - 1 and char_idx > 0 and isDigit(lines[line_idx + 1][char_idx - 1]);

const br = line_idx < lines.len - 1 and char_idx < line.len - 1 and isDigit(lines[line_idx + 1][char_idx + 1]);

var count: usize = 0;

var ratio: usize = 1;

if (l) {

const num = findNum(line, char_idx - 1);

part_one += num catch 0;

ratio *= num catch 1;

count += 1;

}

if (tl) {

const num = findNum(lines[line_idx - 1], char_idx - 1);

part_one += num catch 0;

ratio *= num catch 1;

count += 1;

}

if (t and !tl) {

const num = findNum(lines[line_idx - 1], char_idx);

part_one += num catch 0;

ratio *= num catch 1;

count += 1;

}

if (tr and !t) {

const num = findNum(lines[line_idx - 1], char_idx + 1);

part_one += num catch 0;

ratio *= num catch 1;

count += 1;

}

if (r) {

const num = findNum(line, char_idx + 1);

part_one += num catch 0;

ratio *= num catch 1;

count += 1;

}

if (bl) {

const num = findNum(lines[line_idx + 1], char_idx - 1);

part_one += num catch 0;

ratio *= num catch 1;

count += 1;

}

if (b and !bl) {

const num = findNum(lines[line_idx + 1], char_idx);

part_one += num catch 0;

ratio *= num catch 1;

count += 1;

}

if (br and !b) {

const num = findNum(lines[line_idx + 1], char_idx + 1);

part_one += num catch 0;

ratio *= num catch 1;

count += 1;

}

if (char == '*' and count == 2) {

part_two += ratio;

}

}

}

}

print("part_one: {}\n", .{part_one});

print("part_two: {}\n", .{part_two});

}

pub fn main() !void {

// Set up the General Purpose allocator, this will track memory leaks, etc.

var gpa = std.heap.GeneralPurposeAllocator(.{}){};

const allocator = gpa.allocator();

defer _ = gpa.deinit();

// Parse the command line arguments to get the input file

const args = try std.process.argsAlloc(allocator);

defer std.process.argsFree(allocator, args);

if (args.len < 2) {

std.debug.print("No input file passed\n", .{});

return;

}

try solve(allocator, args[1]);

}

|

0 |

repos/advent-of-code/2023

|

repos/advent-of-code/2023/src/day01.zig

|

const std = @import("std");

const Digit = enum(usize) {

one = 1,

two,

three,

four,

five,

six,

seven,

eight,

nine,

fn fromSlice(string: []const u8) ?Digit {

return for (std.meta.tags(Digit)) |tag| {

const name = @tagName(tag);

if (name.len > string.len)

continue;

if (std.mem.eql(u8, name, string[0..name.len])) {

break tag;

}

} else null;

}

};
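
// Hypothetical test (not part of the original repo): fromSlice only matches a
// digit name at the start of the slice, which is why solve() calls it on
// line.items[idx..] at every position. For overlapping names such as
// "eightwo", the name starting at that position wins.
test "Digit.fromSlice" {
    try std.testing.expectEqual(@as(?Digit, Digit.eight), Digit.fromSlice("eightwo"));
    try std.testing.expectEqual(@as(?Digit, Digit.two), Digit.fromSlice("twone"));
    try std.testing.expect(Digit.fromSlice("xtwo") == null);
    try std.testing.expect(Digit.fromSlice("on") == null);
}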

pub fn solve(allocator: std.mem.Allocator, input_path: []const u8) !void {

// https://www.openmymind.net/Performance-of-reading-a-file-line-by-line-in-Zig/

const input_file = try std.fs.cwd().openFile(input_path, .{ .mode = .read_only });

defer input_file.close();

var buffered = std.io.bufferedReader(input_file.reader());

var reader = buffered.reader();

var line = std.ArrayList(u8).init(allocator);

defer line.deinit();

var total: usize = 0;

while (true) {

defer line.clearRetainingCapacity();

reader.streamUntilDelimiter(line.writer(), '\n', null) catch |err| switch (err) {

error.EndOfStream => break,

else => return err,

};

var idx: usize = 0;

var found_first: bool = false;

var last: usize = 0;

while (idx < line.items.len) : (idx += 1) {

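// Part 1 counts only literal digits; part 2 also counts spelled-out ones.

// Both kinds feed `total`, so the printed value is the part 2 answer.

if (std.ascii.isDigit(line.items[idx])) {

last = try std.fmt.charToDigit(line.items[idx], 10);

if (!found_first) {

found_first = true;

total += 10 * last;

}

} else if (Digit.fromSlice(line.items[idx..])) |digit| {

// Spelled-out digit such as "seven" (part 2)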

last = @intFromEnum(digit);

if (!found_first) {

found_first = true;

total += 10 * last;

}

// We need to decrement index by two:

// 1 is added by the while loop

// 1 is the maximum overlap between number names: sevenine, eighthree

// This is completely optional, but allows us to skip parts of the line.

idx += @tagName(digit).len - 2;

}

}

total += last;

}

std.debug.print("total: {}\n", .{total});

}

pub fn main() !void {

// Set up the General Purpose allocator, this will track memory leaks, etc.

var gpa = std.heap.GeneralPurposeAllocator(.{}){};

const allocator = gpa.allocator();

defer _ = gpa.deinit();

// Parse the command line arguments to get the input file

const args = try std.process.argsAlloc(allocator);

defer std.process.argsFree(allocator, args);

if (args.len < 2) {

std.debug.print("No input file passed\n", .{});

return;

}

try solve(allocator, args[1]);

}

|

0 |

repos/advent-of-code/2023

|

repos/advent-of-code/2023/src/day00.zig

|

const std = @import("std");

const print = std.debug.print;

const ArrayList = std.ArrayList;

const Allocator = std.mem.Allocator;

pub fn solve(allocator: Allocator, input_path: []const u8) !void {

// https://www.openmymind.net/Performance-of-reading-a-file-line-by-line-in-Zig/

const input_file = try std.fs.cwd().openFile(input_path, .{ .mode = .read_only });

defer input_file.close();

var buffered = std.io.bufferedReader(input_file.reader());

var reader = buffered.reader();

var line = ArrayList(u8).init(allocator);

defer line.deinit();

while (true) {

defer line.clearRetainingCapacity();

reader.streamUntilDelimiter(line.writer(), '\n', null) catch |err| switch (err) {

error.EndOfStream => break,

else => return err,

};

}

}

pub fn main() !void {

// Set up the General Purpose allocator, this will track memory leaks, etc.

var gpa = std.heap.GeneralPurposeAllocator(.{}){};

const allocator = gpa.allocator();

defer _ = gpa.deinit();

// Parse the command line arguments to get the input file

const args = try std.process.argsAlloc(allocator);

defer std.process.argsFree(allocator, args);

if (args.len < 2) {

std.debug.print("No input file passed\n", .{});

return;

}

try solve(allocator, args[1]);

}

|

0 |

repos/advent-of-code/2023

|

repos/advent-of-code/2023/src/day04.zig

|

const std = @import("std");

const print = std.debug.print;

const ArrayList = std.ArrayList;

const Allocator = std.mem.Allocator;

const Set = std.AutoHashMap(usize, void);

const Scratchcard = struct {

winners: usize,

amount: usize = 1,

};

pub fn solve(allocator: Allocator, input_path: []const u8) !void {

// https://www.openmymind.net/Performance-of-reading-a-file-line-by-line-in-Zig/

const input_file = try std.fs.cwd().openFile(input_path, .{ .mode = .read_only });

defer input_file.close();

var buffered = std.io.bufferedReader(input_file.reader());

var reader = buffered.reader();

var line = ArrayList(u8).init(allocator);

defer line.deinit();

var part_one: usize = 0;

var scratchcards = ArrayList(Scratchcard).init(allocator);

defer scratchcards.deinit();

while (true) {

defer line.clearRetainingCapacity();

reader.streamUntilDelimiter(line.writer(), '\n', null) catch |err| switch (err) {

error.EndOfStream => break,

else => return err,

};

const colon_pos = for (line.items, 0..) |char, idx| {

if (char == ':') {

break idx;

}

} else line.items.len;

var parts = std.mem.split(u8, line.items[colon_pos + 1 ..], "|");

var winning_numbers = blk: {

var set = Set.init(allocator);

var nums = std.mem.tokenize(u8, parts.next().?, " ");

while (nums.next()) |num| {

try set.put(try std.fmt.parseInt(usize, num, 10), {});

}

break :blk set;

};

defer winning_numbers.deinit();

var count: u6 = 0;

var owned_numbers = std.mem.tokenize(u8, parts.next().?, " ");

while (owned_numbers.next()) |num_str| {

const num = try std.fmt.parseInt(usize, num_str, 10);

if (winning_numbers.contains(num)) {

count += 1;

}

}

try scratchcards.append(.{ .winners = count });

switch (count) {

0 => continue,

1 => part_one += 1,

else => part_one += @as(usize, 1) << (count - 1),

}

}

var part_two: usize = 0;

for (scratchcards.items, 0..) |scratchcard, current_id| {

for (1..scratchcard.winners + 1) |i| {

scratchcards.items[current_id + i].amount += scratchcard.amount;

}

part_two += scratchcard.amount;

}

print("part_one: {}\n", .{part_one});

print("part_two: {}\n", .{part_two});

}
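
// Hypothetical test (not part of the original repo) replaying the part two
// loop from solve() on six cards with winner counts 4, 2, 2, 1, 0, 0. Each card
// adds its current copy count to the next `winners` cards, giving final
// amounts 1, 2, 4, 8, 14, 1 and 30 cards in total.
test "scratchcard copies propagate forward" {
    var cards = [_]Scratchcard{
        .{ .winners = 4 }, .{ .winners = 2 }, .{ .winners = 2 },
        .{ .winners = 1 }, .{ .winners = 0 }, .{ .winners = 0 },
    };
    var total: usize = 0;
    for (cards[0..], 0..) |card, current_id| {
        // `card` is read at this iteration, so it already includes copies
        // added by earlier cards.
        for (1..card.winners + 1) |i| {
            cards[current_id + i].amount += card.amount;
        }
        total += card.amount;
    }
    try std.testing.expectEqual(@as(usize, 30), total);
}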

pub fn main() !void {

// Set up the General Purpose allocator, this will track memory leaks, etc.

var gpa = std.heap.GeneralPurposeAllocator(.{}){};

const allocator = gpa.allocator();

defer _ = gpa.deinit();

// Parse the command line arguments to get the input file

const args = try std.process.argsAlloc(allocator);

defer std.process.argsFree(allocator, args);

if (args.len < 2) {

std.debug.print("No input file passed\n", .{});

return;

}

try solve(allocator, args[1]);

}

|

0 |

repos/advent-of-code/2023

|

repos/advent-of-code/2023/src/day02.zig

|

const std = @import("std");

const print = std.debug.print;

const ArrayList = std.ArrayList;

const Allocator = std.mem.Allocator;

const Color = enum {

red,

green,

blue,

};

const Subset = struct {

color: Color,

amount: usize,

};

fn Game() type {

return struct {

const Self = @This();

id: usize,

subsets: ArrayList(Subset),

pub fn init(allocator: Allocator) Self {

return Self{ .id = 0, .subsets = ArrayList(Subset).init(allocator) };

}

pub fn deinit(self: *Self) void {

self.subsets.deinit();

}

fn check(self: Self, subsets: []const Subset) bool {

for (subsets) |subset| {

for (self.subsets.items) |candidate| {

if (subset.color == candidate.color) {

if (candidate.amount > subset.amount) {

return false;

}

}

}

}

return true;

}

fn power(self: Self) usize {

var max_red: usize = 0;

var max_green: usize = 0;

var max_blue: usize = 0;

for (self.subsets.items) |item| {

switch (item.color) {

.red => max_red = @max(max_red, item.amount),

.green => max_green = @max(max_green, item.amount),

.blue => max_blue = @max(max_blue, item.amount),

}

}

return max_red * max_green * max_blue;

}

fn parseId(self: *Self, string: []const u8) !void {

var parts = std.mem.split(u8, string, " ");

_ = parts.first();

self.id = try std.fmt.parseUnsigned(usize, parts.next().?, 10);

}

fn parseSubsets(self: *Self, string: []const u8) !void {

var subgames = std.mem.tokenize(u8, string, ";");

while (subgames.next()) |subgame| {

var pairs = std.mem.tokenize(u8, subgame, ",");

while (pairs.next()) |pair| {

var parts = std.mem.tokenize(u8, pair, " ");

try self.subsets.append(.{

.amount = try std.fmt.parseUnsigned(usize, parts.next().?, 10),

.color = std.meta.stringToEnum(Color, parts.next().?).?,

});

}

}

}

};

}
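
// A minimal sketch of a unit test for `Game`. It assumes the hypothetical input
// line "Game 7: 3 blue, 20 red; 2 green, 6 blue", which is not taken from a real
// puzzle input, split on ':' the same way `solve` does. `check` should reject
// the game because 20 red exceeds the 12-red limit used in `solve`. `power`
// should multiply the largest count of each color: 20 red * 2 green * 6 blue = 240.
test "Game parse, check and power sketch" {
    var game = Game().init(std.testing.allocator);
    defer game.deinit();
    try game.parseId("Game 7");
    try game.parseSubsets(" 3 blue, 20 red; 2 green, 6 blue");
    try std.testing.expectEqual(@as(usize, 7), game.id);
    try std.testing.expectEqual(@as(usize, 4), game.subsets.items.len);
    const limits: []const Subset = &.{
        .{ .amount = 12, .color = .red },
        .{ .amount = 13, .color = .green },
        .{ .amount = 14, .color = .blue },
    };
    try std.testing.expect(!game.check(limits));
    try std.testing.expectEqual(@as(usize, 240), game.power());
}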

pub fn solve(allocator: std.mem.Allocator, input_path: []const u8) !void {

// https://www.openmymind.net/Performance-of-reading-a-file-line-by-line-in-Zig/

const input_file = try std.fs.cwd().openFile(input_path, .{ .mode = .read_only });

defer input_file.close();

var buffered = std.io.bufferedReader(input_file.reader());

var reader = buffered.reader();

var line = std.ArrayList(u8).init(allocator);

defer line.deinit();

var part_one: usize = 0;

var part_two: usize = 0;

while (true) {

defer line.clearRetainingCapacity();

reader.streamUntilDelimiter(line.writer(), '\n', null) catch |err| switch (err) {

error.EndOfStream => break,

else => return err,

};

var parts = std.mem.split(u8, line.items, ":");

var game = Game().init(allocator);

defer game.deinit();

try game.parseId(parts.first());

try game.parseSubsets(parts.next().?);

const contents: []const Subset = &.{

.{ .amount = 12, .color = .red },

.{ .amount = 13, .color = .green },

.{ .amount = 14, .color = .blue },

};

part_one += if (game.check(contents)) game.id else 0;

part_two += game.power();

}

print("part_one: {}\n", .{part_one});

print("part_two: {}\n", .{part_two});

}
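
// A minimal sketch of the line-reading loop used in `solve`. Here it runs against
// an in-memory buffer (`std.io.fixedBufferStream`) instead of a file, and the two
// sample lines are hypothetical. It also shows one caveat: `streamUntilDelimiter`
// returns `error.EndOfStream` for a final line that has no trailing '\n'. The
// loop in `solve` would therefore skip such a line. Puzzle inputs normally end
// with a newline.
test "streamUntilDelimiter line loop sketch" {
    var stream = std.io.fixedBufferStream("Game 1: 3 blue\nGame 2: 1 red");
    const reader = stream.reader();
    var line = std.ArrayList(u8).init(std.testing.allocator);
    defer line.deinit();
    var complete_lines: usize = 0;
    while (true) {
        defer line.clearRetainingCapacity();
        reader.streamUntilDelimiter(line.writer(), '\n', null) catch |err| switch (err) {
            error.EndOfStream => break,
            else => return err,
        };
        complete_lines += 1;
    }
    // Only "Game 1: 3 blue" ended with '\n'; "Game 2: 1 red" hit EndOfStream.
    try std.testing.expectEqual(@as(usize, 1), complete_lines);
}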

pub fn main() !void {

// Set up the GeneralPurposeAllocator; in safety-checked builds its deinit reports any leaked allocations.

var gpa = std.heap.GeneralPurposeAllocator(.{}){};

const allocator = gpa.allocator();

defer _ = gpa.deinit();

// Parse the command line arguments to get the input file

const args = try std.process.argsAlloc(allocator);

defer std.process.argsFree(allocator, args);

if (args.len < 2) {

std.debug.print("No input file passed\n", .{});

return;

}

try solve(allocator, args[1]);

}

|

0 |

repos

|

repos/http2.0/old.build.zig

|

const std = @import("std");

// Although this function looks imperative, note that its job is to

// declaratively construct a build graph that will be executed by an external

// runner.

pub fn build(b: *std.Build) void {

// Standard target options allow the person running `zig build` to choose

// what target to build for. Here we do not override the defaults, which

// means any target is allowed, and the default is native. Other options

// for restricting supported target set are available.

const target = b.standardTargetOptions(.{});

// Standard optimization options allow the person running `zig build` to select

// between Debug, ReleaseSafe, ReleaseFast, and ReleaseSmall. Here we do not

// set a preferred release mode, allowing the user to decide how to optimize.

const optimize = b.standardOptimizeOption(.{});

const lib = b.addStaticLibrary(.{

.name = "hpack",

// In this case the main source file is merely a path, however, in more

// complicated build scripts, this could be a generated file.

.root_source_file = b.path("main.zig"),

.target = target,

.optimize = optimize,

});

// This declares intent for the library to be installed into the standard