repo

stringclasses 1

value | number

int64 1

25.3k

| state

stringclasses 2

values | title

stringlengths 1

487

| body

stringlengths 0

234k

⌀ | created_at

stringlengths 19

19

| closed_at

stringlengths 19

19

| comments

stringlengths 0

293k

|

|---|---|---|---|---|---|---|---|

transformers

| 13,532 |

closed

|

fix PhophetNet 'use_cache' assignment of no effect

|

Hi, I noticed that there was one '=' too many, resulting in 'use_cache' not properly assigned. So I just deleted it. Hope this can be of any help to you.

|

09-12-2021 10:45:26

|

09-12-2021 10:45:26

| |

transformers

| 13,528 |

closed

|

Trainer's create_model_card creates an invalid yaml metadata `datasets: - null`

|

## Environment info

- any env

### Who can help

- discussed with @julien-c @sgugger and @LysandreJik

## Information

- The hub will soon reject push with invalid model card metadata,

- **only when `datasets`, `model-index` or `license` are present**, their content need to follow the specification cf. https://github.com/huggingface/huggingface_hub/pull/342

## To reproduce

Steps to reproduce the behavior:

1. Train a model

2. Do not association any datasets

3. The trained model and the model card are rejected by the server

## Expected behavior

trainer.py git push should be successfull, even with the coming patch https://github.com/huggingface/transformers/pull/13514

|

09-11-2021 11:16:03

|

09-11-2021 11:16:03

|

I think there are more fixes we can add to properly validate metadata:

- check the licence is correct

- check all the required fields are present before adding a `result` (which is going to also error out).

I will suggest a PR later today with all of that.<|||||>(note that this cannot really be hardcoded in `transformers` as rules can and will change on the server side)<|||||>I thought the goal was to fix the faulty model cards the Trainer was generating? If we don't want to hardcode anything in Transformers, we can just close the issue then. Why only fix the missing datasets and not the missing metrics for instance?<|||||>Hmm, the `datasets: - null` issue is not about missing datasets, it's about invalid data.

in YAML, this is parsed as `datasets = [None]` (python-syntax) whereas it should be an array of string.

In my opinion, we will not enforce rejections for missing data any time soon (especially for automatically generated model cards).<|||||>> I will suggest a PR later today with all of that.

Thanks @sgugger

Ok if you take care of `license`.

But more simply here I wanted to avoid transformer code to insert `null` as dataset name.

<|||||>(That being said, by all means, if you want to improve validation in transformers in the meantime, please do so. I'm just pointing out that writing validation code in transformers might in some cases be a little redundant)<|||||>To reformulate, we also have some problems if there is a `model_index` passed with no metrics in some result, which can be generated if the Trainer makes a push to hub with no evaluation done but can still be assigned a task and a dataset, which is a bit similar to the issue of passing a `datsasets: - null`. The license is different so we should definitely drop it for now if the list is expected to move.

The code for that won't be redundant in the sense the Trainer should not try to pass an incomplete result if we want the push to succeed. Even if we move the validation to the hf_hub library, we will still get an error. It's just the check will happen at a different level.<|||||>Yes I see. I think what you propose sounds good. Thoughts @elishowk?<|||||>IMHO the central point is to ensure transformers and the huggingface_hub CLI to display really well the server's warning and errors, that are meant to be clear and provide advices and documentation about validation failures.

> The license is different so we should definitely drop it for now if the list is expected to move.

The license enumeration is very simple, I don't think it's gonna change soon (is it ?) and the hub git server will return [the docs anyways](https://huggingface.co/docs/hub/model-repos#list-of-license-identifiers)

> Trainer should not try to pass an incomplete result if we want the push to succeed.

OK for me it's you call @sgugger and @julien-c, really, but if any checking is gonna be done on transformers' side before pushing a model's README.md metadata changes, IMHO we should use the same schema, in order to create as little maintenance as possible..

- The hub server the schema we use for `model-indel` is (for the moment) the following: [model-index-set.jtd.zip](https://github.com/huggingface/transformers/files/7153383/model-index-set.jtd.zip) (sorry github doesn't allow json uploads)

- we can generate a validation function for python using https://jsontypedef.com/docs/python-codegen/

- finally, if the JTD schema changes on the hub, we'll need to open a PR here first to update the schema, and may be later to version the schema server-side so that we don't break the older clients users have. for example by introducing a hub metadata schema version marked in the yaml itself. The server could respond (without breaking) that the pushed version is deprecated, and also later if needed implement schema conversion code.<|||||>(personally i don't see the point of this duplication if we can just try to push i.e. perform validation on the server side and report warnings/errors if push is not successful for validation reasons)

I might be missing something though<|||||>In the meantime I've suggested a fix for the problems for which this issue was created and for the incomplete results I mentioned. Both have their origin in the code of the `TrainingSummary`, so fixing them is not duplicate code :-)

We can think more about what validation we want to do where, personally I would see this more in the hf_hub side, in the function that adds metadata (which we will use in the Trainer once it's merged and in a release of hf_hub).<|||||>> In the meantime I've suggested a fix for the problems for which this issue was created and for the incomplete results I mentioned. Both have their origin in the code of the `TrainingSummary`, so fixing them is not duplicate code :-)

OK I think we understood each other now, it's about fixing the code that generates bad yaml, so also OK with @julien-c

Let's just fix the yaml generation errors we know at this time. The hub server will tell us future validation errors and how to fix then.

|

transformers

| 13,527 |

closed

|

T5 support for text classification demo code

|

## Environment info

- `transformers` version: 4.10.2

- Platform: Linux-4.4.0-210-generic-x86_64-with-debian-stretch-sid

- Python version: 3.7.0

- PyTorch version (GPU?): 1.9.0+cu102 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help

@sgugger, @patil-suraj, @patrickvonplaten

## Information

Model I am using (Bert, XLNet ...): T5

The problem arises when using:

* [x] the official example scripts: `examples/pytorch/text-classification/run_glue.py`

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [x] an official GLUE/SQUaD task: GLUE

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

Just run https://github.com/huggingface/transformers/blob/master/examples/pytorch/text-classification/run_glue.py and set the model to `t5-base`, it produces the following output:

```

raceback (most recent call last):

File "run_glue.py", line 566, in <module>

main()

File "run_glue.py", line 336, in main

use_auth_token=True if model_args.use_auth_token else None,

File "/home2/yushi/hf_examples/.venv/lib/python3.7/site-packages/transformers/models/auto/auto_factory.py", line 390, in from_pretrained

f"Unrecognized configuration class {config.__class__} for this kind of AutoModel: {cls.__name__}.\n"

ValueError: Unrecognized configuration class <class 'transformers.models.t5.configuration_t5.T5Config'> for this kind of AutoModel: AutoModelForSequenceClassification.

Model type should be one of LayoutLMv2Config, RemBertConfig, CanineConfig, RoFormerConfig, BigBirdPegasusConfig, GPTNeoConfig, BigBirdConfig, ConvBertConfig, LEDConfig, IBertConfig, MobileBertConfig, DistilBertConfig, AlbertConfig, CamembertConfig, XLMRobertaConfig, MBartConfig, MegatronBertConfig, MPNetConfig, BartConfig, ReformerConfig, LongformerConfig, RobertaConfig, DebertaV2Config, DebertaConfig, FlaubertConfig, SqueezeBertConfig, BertConfig, OpenAIGPTConfig, GPT2Config, TransfoXLConfig, XLNetConfig, XLMConfig, CTRLConfig, ElectraConfig, FunnelConfig, LayoutLMConfig, TapasConfig.

```

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

It seems that the demo code doesn't support T5. Could you please add T5 support? Thank you!

## Expected behavior

Run successfully

|

09-11-2021 07:33:19

|

09-11-2021 07:33:19

|

The T5 model is not suitable for text classification, as it's a sequence to sequence model. This why there is no version of that model with a classification head, and why the text classification head then fails.<|||||>Hi @sgugger, the T5 **is** suitable for text classification, according to the T5 paper. This is performed by assigning a label word for each class and doing generation. <|||||>For example, to classify a sentence from SST2, the input is converted to:

```

sst2 sentence: it confirms fincher ’s status as a film maker who artfully bends technical know-how to the service of psychological insight .

```

The model is trained to generate "positive" for target 1, according to the Appendix D of the paper.<|||||>It has become a trend to use generative/seq2seq models for classification tasks. I wonder if HuggingFace could possibly build convenient APIs to support this paradigm. <|||||>> Hi @sgugger, the T5 is suitable for text classification, according to the T5 paper. This is performed by assigning a label word for each class and doing generation.

Yes, so this is done by using T5 as a seq2seq model, not by adding a classification head. Therefore, you can't expect the generic text classification example to work with T5. You can however adapt the seqseq scripts to have then do text classification with T5.<|||||>@Yu-Shi You could refer to this [colab](https://colab.research.google.com/github/patil-suraj/exploring-T5/blob/master/t5_fine_tuning.ipynb) to see how to use T5 for classification and then adapt the `run_summarization.py` script accordingly, i.e you will need to change the pre-processing part to prepare the input text in the required format. and then adjust the metrics.<|||||>Technically, we could add a `run_seq2seq_text_classification.py` script to the examples folder, similar to how `run_seq2seq_qa.py` is added by #13432.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.

|

transformers

| 13,526 |

closed

|

ONNXRuntimeError]RUNTIME_EXCEPTION : Non-zero status code returned while running Reshape node.

|

## Environment info

Copy-and-paste the text below in your GitHub issue and FILL OUT the two last points.

- `transformers` version: 4.5.0.dev0

- Platform: Windows-10-10.0.19041-SP0

- Python version: 3.7.2

- PyTorch version (GPU?): 1.9.0+cpu (False)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: no

- Using distributed or parallel set-up in script?: no

### Who can help

## Information

Model I am using (Bert, XLNet ...): gptneo 125M

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details below)

RuntimeException Traceback (most recent call last)

~\AppData\Local\Temp/ipykernel_13348/1934748927.py in

66

67 onnx_inputs = _get_inputs(PROMPTS, tokenizer, config)

---> 68 outputs = ort_session.run(['logits'], onnx_inputs)

c:\python37\lib\site-packages\onnxruntime\capi\onnxruntime_inference_collection.py in run(self, output_names, input_feed, run_options)

186 output_names = [output.name for output in self._outputs_meta]

187 try:

--> 188 return self._sess.run(output_names, input_feed, run_options)

189 except C.EPFail as err:

190 if self._enable_fallback:

RuntimeException: [ONNXRuntimeError] : 6 : RUNTIME_EXCEPTION : Non-zero status code returned while running Reshape node. Name:'Reshape_501' Status Message: D:\a_work\1\s\onnxruntime\core\providers\cpu\tensor\reshape_helper.h:42 onnxruntime::ReshapeHelper::ReshapeHelper gsl::narrow_cast<int64_t>(input_shape.Size()) == size was false. The input tensor cannot be reshaped to the requested shape. Input shape:{1,1,1,4096}, requested shape:{1,1,1,16,128}

## To reproduce

Steps to reproduce the behavior:

```

from pathlib import Path

from transformers import GPTNeoForCausalLM, GPT2TokenizerFast, GPTNeoConfig

from transformers.models.gpt_neo import GPTNeoOnnxConfig

from transformers.onnx.convert import export

import numpy as np

import onnxruntime as ort

MODEL_PATH = 'EleutherAI/gpt-neo-1.3B'

#MODEL_PATH = 'EleutherAI/gpt-neo-125M'

TASK = 'causal-lm'

ONNX_MODEL_PATH = Path("gpt_neo_1.3B.onnx")

#ONNX_MODEL_PATH = Path("gpt_neo_125M.onnx")

ONNX_MODEL_PATH.parent.mkdir(exist_ok=True, parents=True)

tokenizer = GPT2TokenizerFast.from_pretrained(MODEL_PATH)

config = GPTNeoConfig.from_pretrained(MODEL_PATH)

onnx_config = GPTNeoOnnxConfig.with_past(config, task=TASK)

print(config)

print(onnx_config)

model = GPTNeoForCausalLM(config=config).from_pretrained(MODEL_PATH)

onnx_inputs, onnx_outputs = export(tokenizer=tokenizer, model=model, config=onnx_config, opset=12, output=ONNX_MODEL_PATH)

print(f'Inputs: {onnx_inputs}')

print(f'Outputs: {onnx_outputs}')

PROMPTS = ['Hello there']

def _get_inputs(prompts, tokenizer, config):

encodings_dict = tokenizer.batch_encode_plus(prompts)

# Shape: [batch_size, seq_length]

input_ids = np.array(encodings_dict["input_ids"], dtype=np.int64)

# Shape: [batch_size, seq_length]

attention_mask = np.array(encodings_dict["attention_mask"], dtype=np.float32)

batch_size, seq_length = input_ids.shape

past_seq_length = 0

num_attention_heads = config.num_attention_heads

hidden_size = config.hidden_size

even_present_state_shape = [

batch_size, num_attention_heads, past_seq_length, hidden_size // num_attention_heads

]

odd_present_state_shape = [batch_size, past_seq_length, hidden_size]

onnx_inputs = {}

for idx in range(config.num_layers):

if idx % 2 == 0:

onnx_inputs[f'past_key_values.{idx}.key'] = np.empty(even_present_state_shape, dtype=np.float32)

onnx_inputs[f'past_key_values.{idx}.value'] = np.empty(even_present_state_shape, dtype=np.float32)

else:

onnx_inputs[f'past_key_values.{idx}.key_value'] = np.empty(odd_present_state_shape, dtype=np.float32)

onnx_inputs['input_ids'] = input_ids

onnx_inputs['attention_mask'] = attention_mask

return onnx_inputs

config = GPTNeoConfig.from_pretrained(MODEL_PATH)

tokenizer = GPT2TokenizerFast.from_pretrained(MODEL_PATH)

ort_session = ort.InferenceSession(str(ONNX_MODEL_PATH))

onnx_inputs = _get_inputs(PROMPTS, tokenizer, config)

outputs = ort_session.run(['logits'], onnx_inputs)

```

## Expected behavior

model exporting and loading without shape mismatch

|

09-11-2021 07:25:43

|

09-11-2021 07:25:43

|

https://github.com/microsoft/onnxruntime/issues/9026<|||||>Hi @BenjaminWegener,

The local attention implementation was [simplified](https://github.com/huggingface/transformers/pull/13491).

You do not have to check for the past_key_values idx value anymore, try changing the loop that creates past_key_values tensors like this:

```

for idx in range(config.num_layers):

onnx_inputs[f'past_key_values.{idx}.key'] = np.empty(even_present_state_shape, dtype=np.float32)

onnx_inputs[f'past_key_values.{idx}.value'] = np.empty(even_present_state_shape, dtype=np.float32)

onnx_inputs['input_ids'] = input_ids

onnx_inputs['attention_mask'] = attention_mask

```<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.

|

transformers

| 13,525 |

closed

|

FlaxCLIPModel memory leak due to JAX `jit` function cache

|

## Environment info

- `transformers` version: 4.10.2

- Platform: Linux-5.11.0-7620-generic-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.6.9

- PyTorch version (GPU?): 1.9.0+cu102 (True)

- Tensorflow version (GPU?): 2.5.0 (True)

- Flax version (CPU?/GPU?/TPU?): 0.3.4 (gpu)

- Jax version: 0.2.17

- JaxLib version: 0.1.68

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help

@patil-suraj @patrickvonplaten

## Information

Model I am using: FlaxCLIPModel

The problem arises when using an altered version of the official example. I'm using the outputs of `CLIPProcessor` in a `score` function that outputs how similar the text and image are (basically the same as the official example script). The difference is that I'm `jax.jit`ing the `score` function in a `for` loop and executing it with a different-sized image each time. This causes a memory leak as the previously cached `jit`s are for some reason not being cleared.

Why `jit` the `score` function in a loop? Why not just `jit` once at the start and let JAX automatically re-`jit` based on the input array shape? That unfortunately doesn't fix the issue. That's how I had the code originally, but the memory still grows. I hoped that by completely dropping the reference to the function and then re-`jit`ing it whenever I needed to change to a new image size, it would clear the old `jit` cache. That turns out not to be the case.

Here's the code that reproduces this with some memory logging stripped out for ease of reading. See the Gist/Colab links below for the full code. All I'm doing here is repeatedly re-`jit`ing the `score` function for different image sizes. This results in the script consuming more and more GPU memory until it crashes (after about 6 rounds on my machine). This example code is based on real code where I need to change the size of the images that are passed to `score` every now and then.

```python

import os

os.environ["XLA_PYTHON_CLIENT_PREALLOCATE"] = "false"

os.environ["XLA_PYTHON_CLIENT_ALLOCATOR"] = "platform"

!pip install --upgrade pip

!pip install --upgrade "jax[cuda111]" flax==0.3.4 transformers==4.10.2 Pillow==8.3.2 numpy==1.19.5 ftfy==6.0.3 -f https://storage.googleapis.com/jax-releases/jax_releases.html

import jax

from jax import jit, grad

import jax.numpy as np

from jax.interpreters import xla

import PIL

from PIL import Image

import ftfy

import time

from transformers import CLIPProcessor, FlaxCLIPModel

model = FlaxCLIPModel.from_pretrained("openai/clip-vit-base-patch32")

processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

def score(pixel_values, input_ids, attention_mask):

pixel_values = jax.image.resize(pixel_values, (1, 3, 224, 224), "nearest")

inputs = {"pixel_values":pixel_values, "input_ids":input_ids, "attention_mask":attention_mask}

outputs = model(**inputs)

return outputs.logits_per_image[0][0]

size = 224

image = Image.new('RGB', (size, size), color=(0, 0, 66))

data = processor(text=["blue"], images=[image], return_tensors="jax", padding=True)

pixel_values = data.pixel_values

input_ids = data.input_ids

attention_mask = data.attention_mask

for i in range(100):

print(f"\nROUND #{i}")

# Decrease image size (to bust JAX jit cache):

print("SIZE:", size)

size -= 1

pixel_values = jax.image.resize(pixel_values, (1, 3, size, size), "nearest")

# Clear JAX GPU memory:

jit_score = None

time.sleep(2)

xla._xla_callable.cache_clear()

# JIT score function and execute it:

jit_score = jit(score)

print(jit_score(pixel_values, input_ids, attention_mask))

```

As you can see I've tried using `XLA_PYTHON_CLIENT_ALLOCATOR=platform` as suggested by a JAX dev [here](https://github.com/google/jax/issues/1222). I've also tried `XLA_PYTHON_CLIENT_PREALLOCATE=true` (the default) and the problem remains. I'm also calling `xla._xla_callable.cache_clear()`, but that shouldn't be necessary, as mentioned by another JAX dev [here](https://github.com/google/jax/issues/2072).

## To reproduce

Here's a minimal reproduction with GPU memory logging:

* https://gist.github.com/josephrocca/d0ddd720fd4bf178337fe8d878a559d9

* https://colab.research.google.com/gist/josephrocca/d0ddd720fd4bf178337fe8d878a559d9

As you can see from the logs in the Gist, the memory accumulates/leaks every round. If I use the same `pixel_values` array, then there is no leak (predictably, since there's no re-`jit`).

I thought it might have been a problem with JAX, but I created a minimal example that's analogous to that one (but using a different and very simple `score` function instead of the Flax model) and there's no leak:

* https://gist.github.com/josephrocca/3ac6e48d81b3dc9c67b54e2f5bd2fa70

* https://colab.research.google.com/gist/josephrocca/3ac6e48d81b3dc9c67b54e2f5bd2fa70

Still seems like this might be an upstream problem - but strange that it doesn't occur with the pure JAX example. I don't know enough about the internals of FlaxCLIPModel and Flax to know whether they could be interacting with `jax.jit` in a way that's causing this 🤔

## Expected result

I'd have expected the `jit` compilation cache for a function to be cleared when the reference to that function is dropped. That seems to be the behavior of the minimal non-Flax Colab/Gist that I've linked. If all previously-cached `jit`s are kept in memory forever, then it makes it hard to use this module in non-trivial code.

|

09-11-2021 06:47:13

|

09-11-2021 06:47:13

|

It just occurred to me that perhaps the cache *is* growing in the simplified no-Flax code example, but it's too slow to notice due to the simplicity of the function. I've just ran another test and that does indeed seem to be the case. So this is an upstream (JAX) problem or misunderstanding on my part. Here's the example showing the leak (it "leaks" about 1mb per 100 iterations):

* https://gist.github.com/josephrocca/b56f0cb99b8ac07f15bee467842788f2

* https://colab.research.google.com/gist/josephrocca/b56f0cb99b8ac07f15bee467842788f2

Closing since it doesn't seem to be a problem that's specifically caused by `transformers`.

**Edit:** I've asked how to clear the jit cache for a function in the JAX discussion forum [here](https://github.com/google/jax/discussions/7882).

|

transformers

| 13,524 |

closed

|

Fix GPTNeo onnx export

|

# What does this PR do?

#13491 simplified the GPTNeo's local attention by removing the complicated operations, as a result, the `GPTNeoAttentionMixin` class is no longer needed and was removed. This breaks `GPTNeoOnnxConfig` as it was importing the `GPTNeoAttentionMixin` class.

This PR (tries to!) fixes `GPTNeoOnnxConfig` by removing the `PatchingSpec` and updates the `input`, `output` accordingly.

I'm an onnx noob so it would be awesome if you could please take a deeper look @michaelbenayoun :)

Thanks a lot, @tianleiwu for reporting the bug!

|

09-11-2021 05:38:31

|

09-11-2021 05:38:31

|

@patil-suraj, thanks for providing a quick fix.

For dynamic axes mapping for inputs and outputs, I suggest the following:

```

"input_ids": {0: "batch", 1: "sequence"}

f"past_key_values.{i}.key" = {0: "batch", 2: "past_sequence"}

f"present.{i}.key" : {0: "batch", 2: "present_sequence"} #or {0: "batch", 2: "past_sequence+sequence"}

"attention_mask" : {0: "batch", 1: "present_sequence"} #or {0: "batch", 1: "past_sequence+sequence"}

```

<|||||>I have updated the onnx config input and output names to support dynamic axes as @tianleiwu suggested.

The simplification brought by #13491 along with this PR also solves the issue #13175.

@sgugger @LysandreJik I have left the custom implementations `custom_unfold` and `custom_get_block_length_and_num_blocks` which are no longer needed thanks to #13491: should we keep them there as they might be useful one day for other models or get rid of them to keep things clean?<|||||>I think we can keep those two functions for now. We can look again in a few months and remove them if they are still not used anywhere.

|

transformers

| 13,523 |

closed

|

[tokenizer] use use_auth_token for config

|

This PR forwards `use_auth_token` to `AutoConfig.from_pretrained()` when `AutoTokenizer.from_pretrained(mname, use_auth_token=True)` is used. Otherwise `AutoConfig` fails to retrieve `config.json` with 404.

Model's `from_pretrained` does the right thing.

@sgugger, @LysandreJik

|

09-11-2021 03:48:17

|

09-11-2021 03:48:17

| |

transformers

| 13,522 |

closed

|

The new impl for CONFIG_MAPPING prevents users from adding any custom models

|

## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.10+

- Platform: Ubuntu 18.04

- Python version: 3.7.11

- PyTorch version (GPU?): N/A

- Tensorflow version (GPU?): N/A

- Using GPU in script?: N/A

- Using distributed or parallel set-up in script?: No.

### Who can help

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

-->

## Information

Model I am using (Bert, XLNet ...): _Custom_ model

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The tasks I am working on is:

* [x] an official GLUE/SQUaD task: (give the name)

* [ ] my own task or dataset: (give details below)

## To reproduce

See: https://github.com/huggingface/transformers/blob/010965dcde8ce9526f6a7e6e2c3f36276c153708/src/transformers/models/auto/configuration_auto.py#L297

This was changed from the design in version `4.9` which used an `OrderedDict` instead of the new `_LazyConfigMapping`. The current design makes it so users cannot add their own custom models by assigning names and classes to the following registries (example: classification tasks):

- `CONFIG_MAPPING` in `transformers.models.auto.configuration_auto`, and

- `MODEL_FOR_SEQUENCE_CLASSIFICATION_MAPPING` in `transformers.models.auto.modeling_auto`.

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

Either a mechanism to add custom `Config`s (and the corresponding models) with documentation for it, or documentation for whatever other recommended method. Possibly that already exists, but I haven't found it yet.

<!-- A clear and concise description of what you would expect to happen. -->

@sgugger

|

09-11-2021 00:16:44

|

09-11-2021 00:16:44

|

Adding a config/model/tokenizer to those constants wasn't really supported before (but I agree it may have worked in some situations). A mechanism to add a custom model/config/tokenizer is on the roadmap!

Slightly different but which may be of interest, we are also starting to implement support for custom modeling (soon config and tokenizer) files on the Hub in #13467<|||||>Also related to https://github.com/huggingface/transformers/issues/10256#issuecomment-916482519<|||||>@sgugger , is the roadmap shared anywhere publicly? I have searched but could not find it. The reason I'm asking is because we are also interested in adding custom (customized) models.<|||||>No there is no public roadmap, this is internal only because it evolves constantly with the feature requests we receive :-)

Like I said, there should be something available for this pretty soon!<|||||>Related https://github.com/huggingface/transformers/issues/13591<|||||>@sgugger Updating just broke my codebase :)

Any reasons why you cannot allow users to modify the registry? At the end of the day, it's something that will do on their own without affecting the entire library...

Can we please revert this? Because currently the latest version of HF fixes an important [issue](https://github.com/huggingface/transformers/issues/12904).<|||||>@sgugger @LysandreJik any updates on this? Thanks!<|||||>Hello @aleSuglia, @sgugger is working on that API here: https://github.com/huggingface/transformers/pull/13989

It should land in the next few days.

|

transformers

| 13,521 |

closed

|

Ignore `past_key_values` during GPT-Neo inference

|

# What does this PR do?

Applies #8633 to GPT-Neo.

I was getting errors like `RuntimeError: Sizes of tensors must match except in dimension 2. Got 20 and 19 (The offending index is 0)` during the evaluation step of `Trainer.train()` with GPT-Neo.

This was fixed in an internal implementation of `GPTNeoForSequenceClassification` by @manuelciosici before an official version of the class was released for `transformers`. I converted our code to use the `transformers` version of the class and realized the solution should be upstreamed. I'm just mentioning this here because I don't get all the credit for this patch.

This might be needed for other models as well, but I don't know of a good way to figure that out without testing them all.

## Who can review?

trainer: @sgugger

|

09-10-2021 22:14:44

|

09-10-2021 22:14:44

| |

transformers

| 13,520 |

closed

|

[WIP] Wav2vec2 pretraining 2

|

# What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

|

09-10-2021 22:07:06

|

09-10-2021 22:07:06

|

This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.

|

transformers

| 13,519 |

closed

|

CodeT5

|

# 🌟 New model addition

## Model description

<!-- Important information -->

This model is an T5 version by Salesforce trained for coding. Some articles says it should beat openai codex, so it could be interesting to add it to the hub

## Open source status

* [X] the model implementation is available: (give details) https://github.com/salesforce/CodeT5

* [X] the model weights are available: (give details) https://github.com/salesforce/CodeT5

* [ ] who are the authors: (mention them, if possible by @gh-username)

|

09-10-2021 19:31:02

|

09-10-2021 19:31:02

|

https://huggingface.co/Salesforce/codet5-base/tree/main

Thanks @NielsRogge

|

transformers

| 13,518 |

closed

|

updated setup.py

|

checking if python is running with the older version in the system or not

|

09-10-2021 17:37:06

|

09-10-2021 17:37:06

|

This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.

|

transformers

| 13,517 |

closed

|

[Wav2Vec2] Fix dtype 64 bug

|

# What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Test has to be `is np.dtype(np.float64)` not `is np.float64`

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

|

09-10-2021 16:07:16

|

09-10-2021 16:07:16

| |

transformers

| 13,516 |

closed

|

Loading SentenceTransformers (DistilBertModel) model using from_pretrained(...) HF function into a DPRQuestionEncoder model

|

## Environment info

```

- `transformers` version: 4.10.0

- Platform: Linux-5.4.104+-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.7.11

- PyTorch version (GPU?): 1.9.0+cu102 (True)

- Tensorflow version (GPU?): 2.6.0 (True)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

```

### Who can help

research_projects/rag: @patrickvonplaten, @lhoestq

## To reproduce

Steps to reproduce the behavior:

_./semanticsearch_model_ is a DistilBertModel created with SentenceTransformers it is structured as follow:

```

from transformers import DPRQuestionEncoder, DPRQuestionEncoderTokenizer

model_name = './semanticsearch_model'

model = DPRQuestionEncoder.from_pretrained(model_name)

```

# Error

```

You are using a model of type distilbert to instantiate a model of type dpr. This is not supported for all configurations of models and can yield errors.

NotImplementedErrorTraceback (most recent call last)

<ipython-input-52-1f1b990b906b> in <module>

----> 1 model = DPRQuestionEncoder.from_pretrained(model_name)

2 # https://github.com/huggingface/transformers/blob/41cd52a768a222a13da0c6aaae877a92fc6c783c/src/transformers/models/dpr/modeling_dpr.py#L520

/opt/conda/lib/python3.8/site-packages/transformers/modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

1358 raise

1359 elif from_pt:

-> 1360 model, missing_keys, unexpected_keys, mismatched_keys, error_msgs = cls._load_state_dict_into_model(

1361 model,

1362 state_dict,

/opt/conda/lib/python3.8/site-packages/transformers/modeling_utils.py in _load_state_dict_into_model(cls, model, state_dict, pretrained_model_name_or_path, ignore_mismatched_sizes, _fast_init)

1462 )

1463 for module in unintialized_modules:

-> 1464 model._init_weights(module)

1465

1466 # copy state_dict so _load_from_state_dict can modify it

/opt/conda/lib/python3.8/site-packages/transformers/modeling_utils.py in _init_weights(self, module)

577 Initialize the weights. This method should be overridden by derived class.

578 """

--> 579 raise NotImplementedError(f"Make sure `_init_weigths` is implemented for {self.__class__}")

580

581 def tie_weights(self):

NotImplementedError: Make sure `_init_weigths` is implemented for <class 'transformers.models.dpr.modeling_dpr.DPRQuestionEncoder'>

```

## Another thing

It seems that _from_pretrained_ function is not able to load all the weights (i.e. it ignores the 1_Pooling, 2_Dense folders which contains the last two layers beyond the Transformer model).

## Expected behavior

Load correctly the model.

|

09-10-2021 15:23:16

|

09-10-2021 15:23:16

|

I don't fully understand here. If the model is a `DistilBertModel` - why is it loaded with `DPRQuestionEncoder` ? Shouldn't it be loaded as follows:

```python

from transformers import DistilBertModel

model_name = './semanticsearch_model'

model = DistilBertModel.from_pretrained(model_name)

```

?<|||||>Hi @patrickvonplaten, you're right. My aim was to load a DistilBERT as underlying model of DPRQuestionEncoder (rather than the standard BERT), but maybe this is not the right way.

However, by loading the model as DistilBERT (as in your code) it only loads the transformer (i.e. `pytorch_model.bin` and `config.json` in the root directory) while the pooling and dense layers added by SentenceTransformers are ignored.

The point is, is there a way to load a model created with SentenceTransformer - including pooling and dense layers - into an HuggingFace model?

<|||||>Can you try:

```

from transformers import DistilBertForMaskedLM

model_name = './semanticsearch_model'

model = DistilBertForMaskedLM.from_pretrained(model_name)

```

?

<|||||>> Can you try:

>

> ```

> from transformers import DistilBertForMaskedLM

>

> model_name = './semanticsearch_model'

> model = DistilBertForMaskedLM.from_pretrained(model_name)

> ```

>

> ?

I get

```

Some weights of DistilBertForMaskedLM were not initialized from the model checkpoint at ./semanticsearch_model and are newly initialized: ['vocab_transform.weight', 'vocab_projector.bias', 'vocab_transform.bias', 'vocab_layer_norm.weight', 'vocab_projector.weight', 'vocab_layer_norm.bias']

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

```

Pooling and dense layers are still ignored since as final embedding dimension I get 768 instead of 512 as it should be due to the dense layer.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.

|

transformers

| 13,515 |

closed

|

beit-flax

|

# What does this PR do?

Beit Flax model

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

@NielsRogge @patrickvonplaten

|

09-10-2021 13:58:26

|

09-10-2021 13:58:26

|

Thanks @patrickvonplaten <|||||>Thanks for adding this! I will let Suraj review the Flax code, as I'm not that familiar with it.<|||||>Thanks for the review @patil-suraj

done changes according to review

<|||||>@kamalkraj - could you merge master into this PR or rebase to master ? :-) Think this should solve the pipeline failures<|||||>@patrickvonplaten done

|

transformers

| 13,514 |

closed

|

separate model card git push from the rest

|

# What does this PR do?

- After model card metadata contents validation was deployed to the Hub, we need to ensure transformer's trainer git push are not blocked because of an invalid README.mld yaml.

- as discussed with @julien-c @Pierrci @sgugger and @LysandreJik the first step to match Hub's model card validation system is to avoid failing a whole git push after training, for the only reason that README.md metadata is not valid.

- therefore, I tried in this PR to git push the training result independently from the modelcard update, so that the modelcard update failing does not fail the rest, keeping only logging for README.Md push failures.

- Relates to https://github.com/huggingface/huggingface_hub/pull/326

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

|

09-10-2021 13:54:55

|

09-10-2021 13:54:55

|

For tracking purposes, do we have another issue for `datasets: - null` @elishowk? (which will still fail with this, AFAICT)<|||||>> For tracking purposes, do we have another issue for `datasets: - null` @elishowk? (which will still fail with this, AFAICT)

Yep : https://github.com/huggingface/transformers/issues/13528<|||||>🥳 first PR on transformers, thanks for your help you all !

|

transformers

| 13,513 |

closed

|

TF multiple choice loss fix

|

The `TFMultipleChoiceLoss` inherits from `TFSequenceClassificationLoss` and can get confused when some of the input dimensions (specifically, the number of multiple choices) are not known at compile time. This can cause a compilation failure or other misbehaviour. Ensuring that the `TFMultipleChoiceLoss` never attempts to do regression fixes the problem.

|

09-10-2021 13:04:17

|

09-10-2021 13:04:17

| |

transformers

| 13,512 |

closed

|

[Wav2Vec2] Fix normalization for non-padded tensors

|

# What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

This PR fixes a problem with normalization when the input is a list of different length that is not numpified - see: https://github.com/huggingface/transformers/issues/13504

Just noticed that this bug is pretty severe actually as it affects all large-Wav2Vec2 fine-tuning :-/.

It was introduced by me in this PR: https://github.com/huggingface/transformers/pull/12804/files - I should have written more and better tests for this.

=> This means that from transformers 4.9.0 to until this PR is merged the normalization for all large Wav2Vec2 models was way off when fine-tuning the model.

@LysandreJik - do you think it might be possible to do a patched release for this?

|

09-10-2021 08:37:43

|

09-10-2021 08:37:43

| |

transformers

| 13,511 |

closed

|

VisionTextDualEncoder

|

# What does this PR do?

This PR adds `VisionTextDualEncoder` model in PyTorch and Flax to be able to load any pre-trained vision (`ViT`, `DeiT`, `BeiT`, CLIP's vision model) and text (`BERT`, `ROBERTA`) model in the library for vision-text tasks like CLIP.

This model pairs a vision and text encoder and adds projection layers to project the embeddings to another embeddings space with similar dimensions. which can then be used to align the two modalities.

The API to load the config and model is similar to the API of `EncoderDecoder` and `VisionEncoderDecoder` models.

- load `vit-bert` model from config

```python3

config_vision = ViTConfig()

config_text = BertConfig()

config = VisionTextDualEncoderConfig.from_vision_text_configs(config_vision, config_text, projection_dim=512)

# Initializing a BERT and ViT model

model = VisionTextDualEncoderModel(config=config)

```

- load using pre-trained vision and text model

```python3

model = VisionTextDualEncoderModel.from_vision_text_pretrained(

"google/vit-base-patch16-224", "bert-base-uncased"

)

```

Since this is a multi-modal model, this PR also adds a generic `VisionTextDualEncoderProcessor`, which wraps any feature extractor and tokenizer

```python3

tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")

feature_extractor = ViTFeatureExtractor.from_pretrained("google/vit-base-patch16-224")

processor = VisionTextDualEncoderProcessor(feature_extractor, tokenizer)

```

|

09-10-2021 06:06:48

|

09-10-2021 06:06:48

|

Let's merge this asap - it's been on the ToDo-List a bit too long now ;-)

|

transformers

| 13,510 |

closed

|

Huge bug. TF saved model running in nvidia-docker dose not use GPU.

|

## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.10

- Platform: Ubutu 18.04

- Python version: 3.6

- PyTorch version (GPU?): latest GPU

- Tensorflow version (GPU?): 2.4 GPU

- Using GPU in script?:

- Using distributed or parallel set-up in script?:

### Who can help

@LysandreJik

@Rocketknight1

@sgugger

## Information

Model I am using official 'nateraw/bert-base-uncased-imdb'.

The problem arises when using:

[*] the official example scripts: https://huggingface.co/blog/tf-serving

## To reproduce

Steps to reproduce the behavior:

Follow [official blog](https://huggingface.co/blog/tf-serving), I save pretrained model to saved model, then running model on tensorflow/serving docker (that is CPU docker) and tensorflow/serving:latest-gpu docker (that is GPU docker), then I testing the inference speed:

1. On CPU docker, cost is 0.19s

2. On GPU docker, cost is 2.9s, 10 times slower than CPU docker inference

3. When inferening with GPU docker, GPU-Util is 0 and only one CPU core is working.

4. I re-test on another machine, got same result.

Tesing machine environment:

#1: GTX 1070

#2: Titan Xp

## Expected behavior

Inference on GPU docker should be much faster than CPU docker.

What's wrong with that?

Have you ever test the offcial blog?

Nobody run saved model with nvidia-docker?

Help me, thx!!!

|

09-10-2021 05:19:52

|

09-10-2021 05:19:52

|

Code to saved model.

```

from transformers import TFBertForSequenceClassification

model = TFBertForSequenceClassification.from_pretrained("nateraw/bert-base-uncased-imdb", from_pt=True)

# the saved_model parameter is a flag to create a SavedModel version of the model in same time than the h5 weights

model.save_pretrained("my_model", saved_model=True)

```<|||||>Code to do inference.

```

from transformers import BertTokenizerFast, BertConfig

import requests

import json

import numpy as np

import time

sentence = "I love the new TensorFlow update in transformers. I love the new TensorFlow update in transformers. I love the new TensorFlow update in transformers. I love the new TensorFlow update in transformers. I love the new TensorFlow update in transformers. I love the new TensorFlow update in transformers. I love the new TensorFlow update in transformers. I love the new TensorFlow update in transformers."

# Load the corresponding tokenizer of our SavedModel

tokenizer = BertTokenizerFast.from_pretrained("nateraw/bert-base-uncased-imdb")

# Load the model config of our SavedModel

config = BertConfig.from_pretrained("nateraw/bert-base-uncased-imdb")

# Tokenize the sentence

batch = tokenizer(sentence)

# Convert the batch into a proper dict

batch = dict(batch)

# Put the example into a list of size 1, that corresponds to the batch size

batch = [batch]

# The REST API needs a JSON that contains the key instances to declare the examples to process

input_data = {"instances": batch}

t1 = time.time()

# Query the REST API, the path corresponds to http://host:port/model_version/models_root_folder/model_name:method

r = requests.post("http://localhost:8502/v1/models/bert:predict", data=json.dumps(input_data))

print(time.time() - t1)

# Parse the JSON result. The results are contained in a list with a root key called "predictions"

# and as there is only one example, takes the first element of the list

result = json.loads(r.text)["predictions"][0]

# The returned results are probabilities, that can be positive or negative hence we take their absolute value

abs_scores = np.abs(result)

# Take the argmax that correspond to the index of the max probability.

label_id = np.argmax(abs_scores)

# Print the proper LABEL with its index

print(config.id2label[label_id])

```<|||||>Run cpu docker:

`sudo docker run -d -p 8502:8501 --name bert_cpu image_hug_off_cpu`

Run gpu docker:

`sudo docker run -d --gpus '"device=0"' -p 8502:8501 --name bert image_hug_off`<|||||>Hello! Do you manage to leverage your GPUs using other TensorFlow code than our models? I suspect there is an issue in the setup - we do run some GPU tests so the models should work without issue on GPUs. <|||||>Everything is OK when code runs without signatures, after add signatures, it gets sucked.<|||||>cc @Rocketknight1 <|||||>I'm not sure I understand - can you show an example of a model that runs correctly with the GPU and a model that does not, so we can figure out what the problem is?<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.

|

transformers

| 13,509 |

closed

|

Warn for unexpected argument combinations

|

# What does this PR do?

Warn for unexpected argument combinations:

- `padding is True` and ``max_length is not None``

- `padding is True` and ``pad_to_max_length is not False``

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- https://discuss.huggingface.co/t/migration-guide-from-v2-x-to-v3-x-for-the-tokenizer-api/55

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

|

09-10-2021 04:02:34

|

09-10-2021 04:02:34

|

@sgugger

Could you review this PR?

Thank you.

|

transformers

| 13,508 |

closed

|

[megatron_gpt2] checkpoint v3

|

The BigScience generates Megatron-Deepspeed checkpoints en masse, so we need a better v3 checkpoint support for megatron_gpt2 (also working on a direct Megatron-Deepspeed to HF-style script).

This PR:

- removes the requirement that the source checkpoint is a zip file. Most of the checkpoints aren't .zip files, so supporting both.

- removes the need to manually feed the config, when all the needed data is already in the checkpoint. (while supporting the old format)

- disables a debug print that repeats for each layer

- switch to default `gelu` from `gelu_new` - which is what the current megatron-lm uses

- fixes a bug in the previous version. The hidden size is dimension 1 as it can be seen from:

https://github.com/NVIDIA/Megatron-LM/blob/3860e995269df61d234ed910d4756e104e1ab844/megatron/model/language_model.py#L140-L141

The previous script happened to work because `max_position_embeddings` happened to be equal to `hidden_size`

@LysandreJik, @sgugger

|

09-10-2021 03:42:22

|

09-10-2021 03:42:22

| |

transformers

| 13,507 |

closed

|

[Benchmark]Why 'step' consume most time?

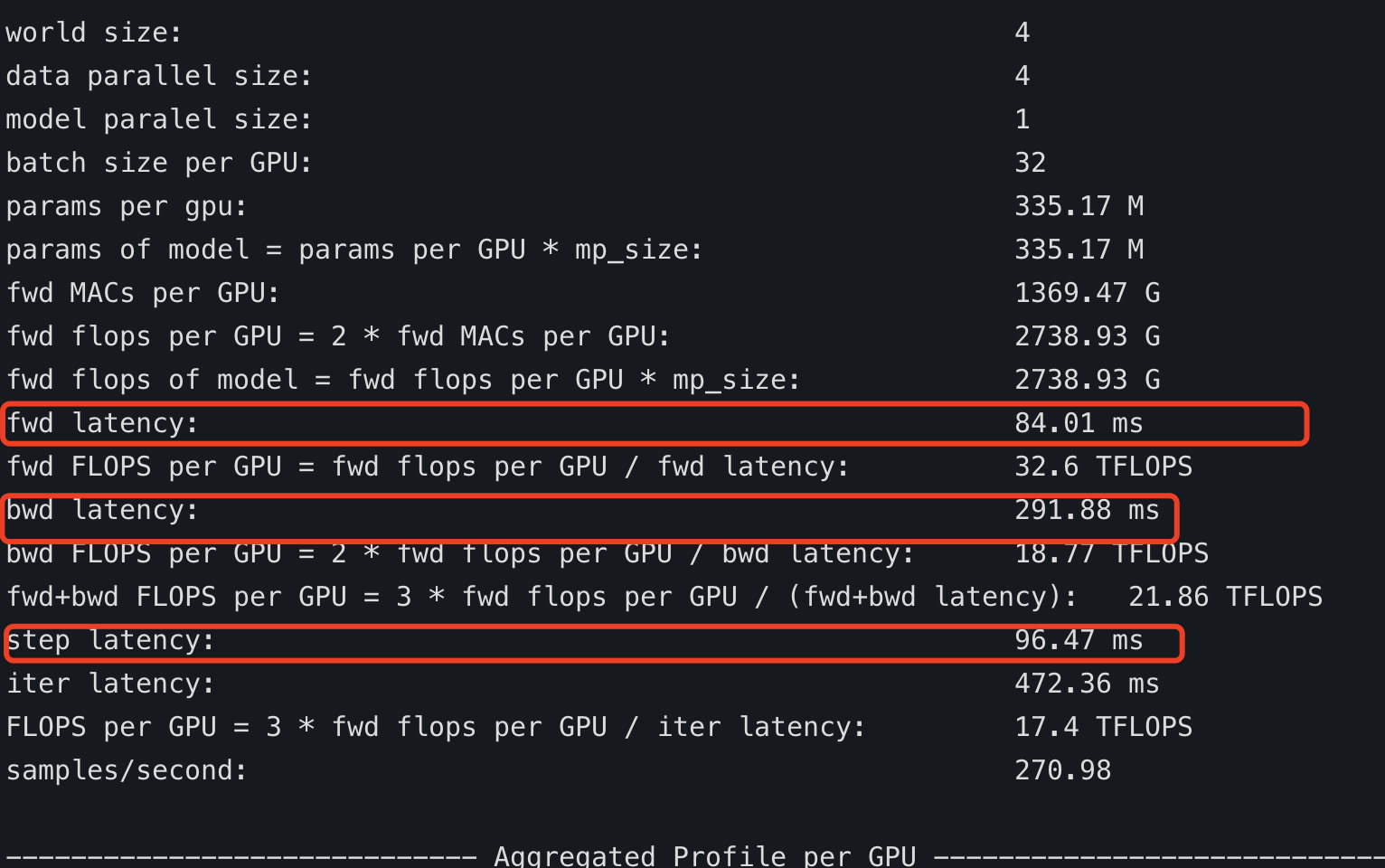

|

# 🖥 Benchmarking `transformers`

## Benchmark

I have test "**transformers/examples/pytorch/language-modeling/run_clm.py**" for "**bert-large-uncased**" with "**Deepspeed**"

but found something weird.

## Set-up

device: 4 x V100(16GB), single node

script cmd is:

```shell

#!/bin/bash

deepspeed run_mlm.py \

--deepspeed ds_config.json \

--model_type bert \

--config_name bert-large-uncased \

--output_dir output/ \

--dataset_name wikipedia \

--dataset_config_name 20200501.en \

--tokenizer_name bert-large-uncased \

--preprocessing_num_workers $(nproc) \

--max_seq_length 128 \

--do_train \

--fp16 true \

--overwrite_output_dir true \

--per_device_train_batch_size 32

```

and the ds_config.json is:

```shell

{

"train_micro_batch_size_per_gpu": "auto",

"fp16": {

"enabled": true,

"loss_scale": 0,

"loss_scale_window": 1000,

"hysteresis": 2,

"min_loss_scale": 1

},

"flops_profiler": {

"enabled": true,

"profile_step": 3,

"module_depth": -1,

"top_modules": 1,

"detailed": true,

"output_file": "./tmp.log"

},

"zero_optimization": {

"stage": 1,

"allgather_partitions": true,

"allgather_bucket_size": 2e8,

"reduce_scatter": true,

"reduce_bucket_size": 2e8,

"overlap_comm": true,

"contiguous_gradients": true,

"cpu_offload": true

}

}

```

## Results

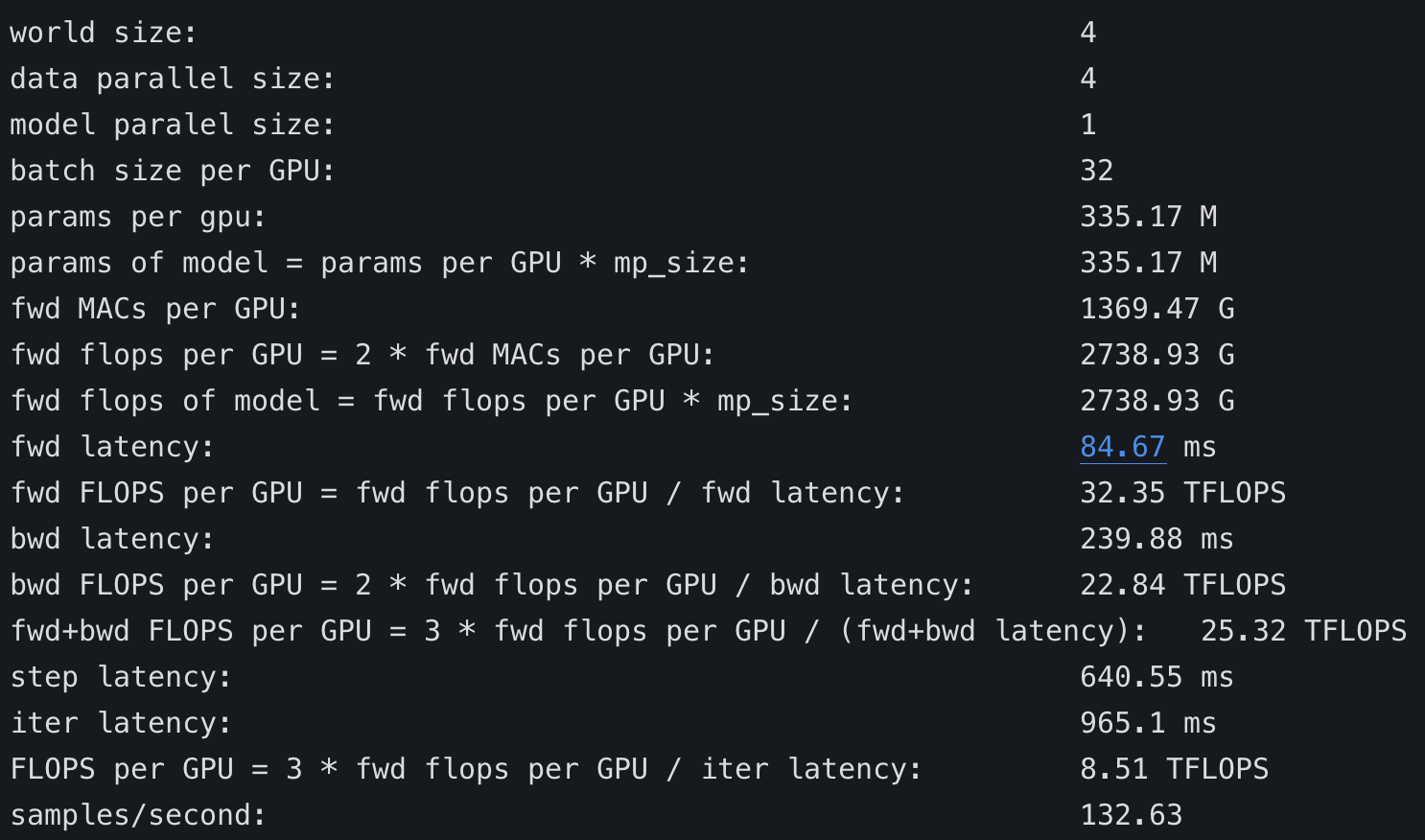

huggingface with deepspeed:

compare to native deepspeed example "bing_bert" with same deepspeed config:

I found that native deepspeed "step" cost little time.

## question

1.why huggingface step cost most time?

2.why huggingface and deepspeed are very difference in "fwd flops per GPU" ? model are all "bert-large"

|

09-10-2021 03:02:58

|

09-10-2021 03:02:58

|

Thank you for the report, @dancingpipi.

I have a hard time making sense of it since you shared how your invoked the transformers side but not the other example. or how you measured the performance reports you shared. But I think we have enough in the numbers you shared to unravel your puzzlement.

If you look at fwd MACs (“multiply and accumulate” units) the transformers table says 1369, whereas the other example say 20, so clearly you have a ~70x difference in the amount of computation being run. And of course the performance would have a huge difference.

I suppose you're referring to this: https://github.com/microsoft/DeepSpeedExamples/tree/master/bing_bert as the other, and if you read the description it says:

> Using DeepSpeed's optimized transformer kernels as the building block, we were able to achieve the fastest BERT training record: 44 minutes on 1,024 NVIDIA V100 GPUs, compared with the previous best published result of 67 minutes on the same number and generation of GPUs.

So I hope that the "DeepSpeed's optimized transformer kernels" part answers your question.

It's also hard to tell whether the 2 comparisons are measuring the same thing, but if they don't - CUDA kernels outperform python kernels by many times.

We are intending to look at how to gain more benefits from DeepSpeed's gifts, in particular the various kernels, we just don't have enough resources to do so fast enough. If you have the time and inspiration to write a PR that integrates such kernels into the current Bert model I'd dare to say it'd be very welcome.

BTW, pytorch core too has optimized transformers kernels that would be great to evaluate as well.

<|||||>@stas00 thanks for your great answer!

I'm still not clearly understand why MACs are very difference between them. Is it just because of "optimized transformer kernels"? 70 times improvement is a bit unbelievable.

Another weird thing is "step" cost much time in huggingface. This is beyond my cognition.

So the current situation is that the performance of native deepspeed on bert-large is better than the performance of huggingface.

Is there any suggestions on using huggingface with deepspeed?<|||||>> @stas00 thanks for your great answer!

> I'm still not clearly understand why MACs are very difference between them. Is it just because of "optimized transformer kernels"? 70 times improvement is a bit unbelievable.

I agree. That's why I'm suspicious of your reported numbers as 2 orders of magnitude is too fantastic to believe.

> Another weird thing is "step" cost much time in huggingface. This is beyond my cognition.

`step` is comprised of those MACs, so if your setup is not comparing apples to apples then obviously the speed difference would be out of proportion as well.

> So the current situation is that the performance of native deepspeed on bert-large is better than the performance of huggingface.

I haven't tested this myself, but it's almost certainly the case if one uses custom CUDA kernels vs. vanila implementation.

> Is there any suggestions on using huggingface with deepspeed?

Deepspeed is a framework/toolkit with many tools and features in it. HF transformers has currently only integrated Deepspeed's ZeRO features, which allow you to easily scale any huge model with multiple gpus and some CPU/NVMe memory and minimal to no changes to the model's code base.

Plugging Deepspeed's custom kernels has been briefly discussed as a great feature to add, but currently no one had the time to integrate it. If you have the know-how you are very welcome to spearhead the effort. I'd reach out then to the Deepspeed team via their Issues and ask whether they can help you integrate the custom cuda kernels into HF transformers. It's possible that they have already done it but the work isn't public yet. The other approach is to perhaps opening this request to the community at large and perhaps someone would be interested to work on that.

<|||||>ok, I see. Maybe I have to make a trade-off between performance and ease of use. But the first thing I want to do now is to find out why the MACs is so different.

@stas00 Thanks again for your help~<|||||>

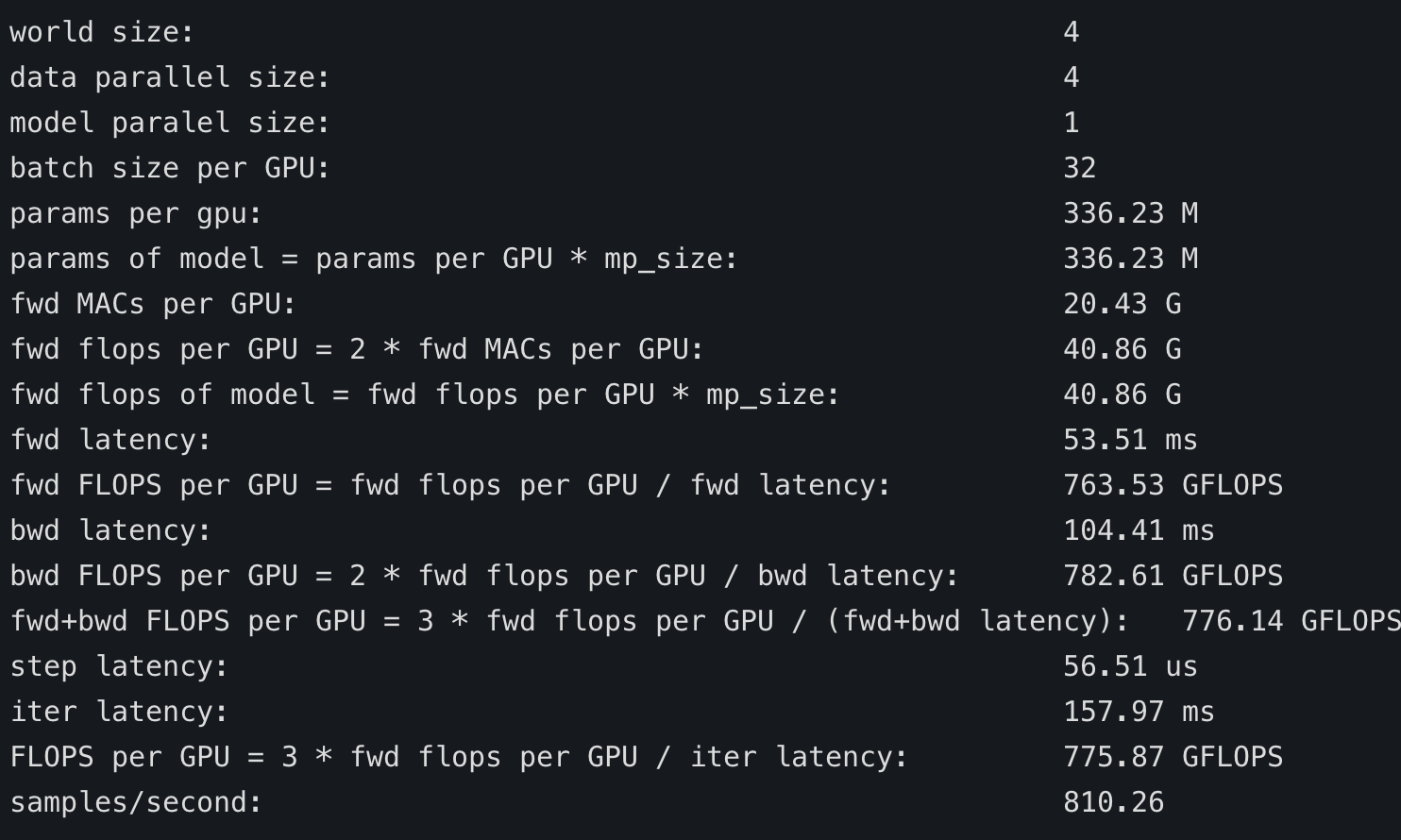

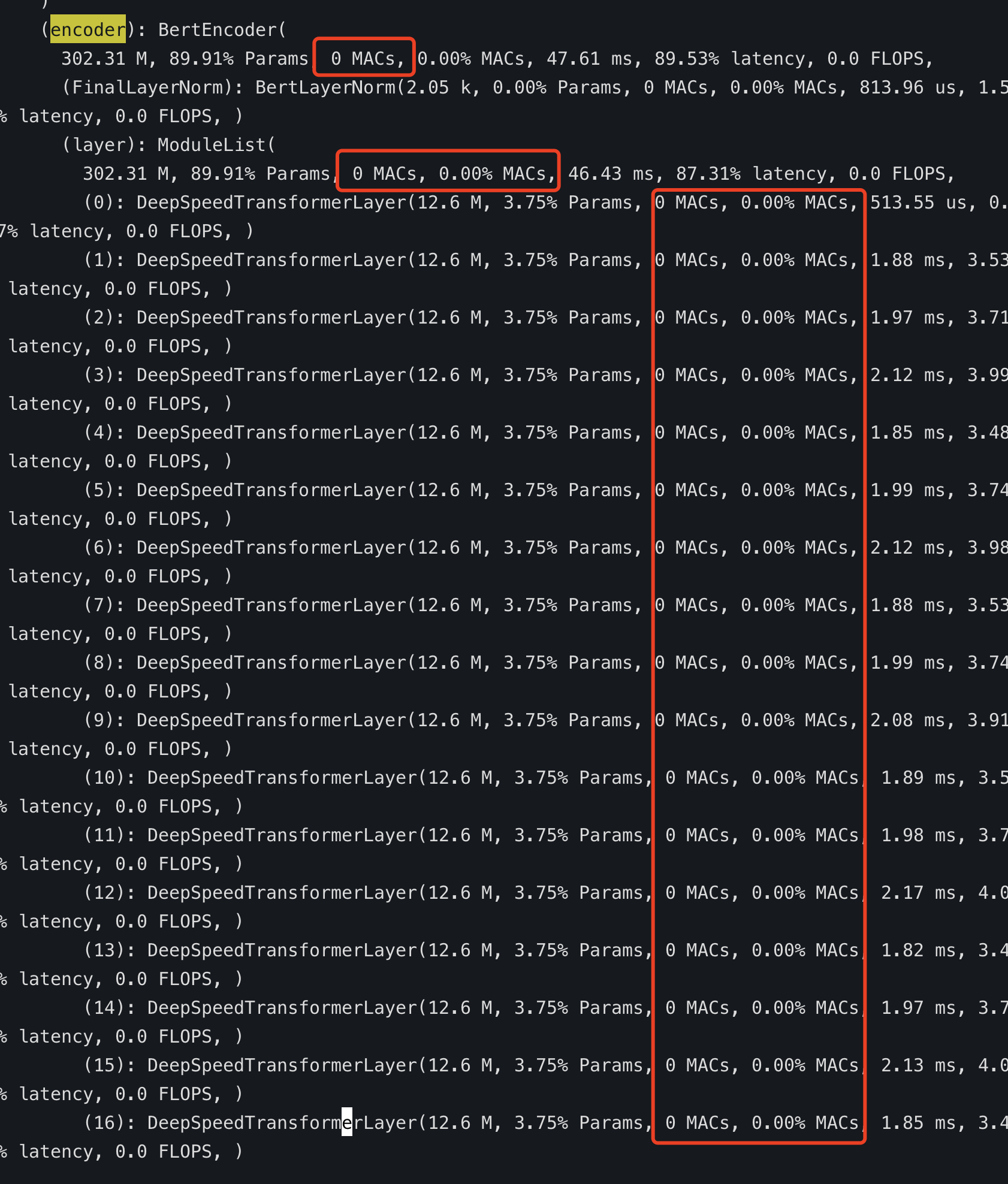

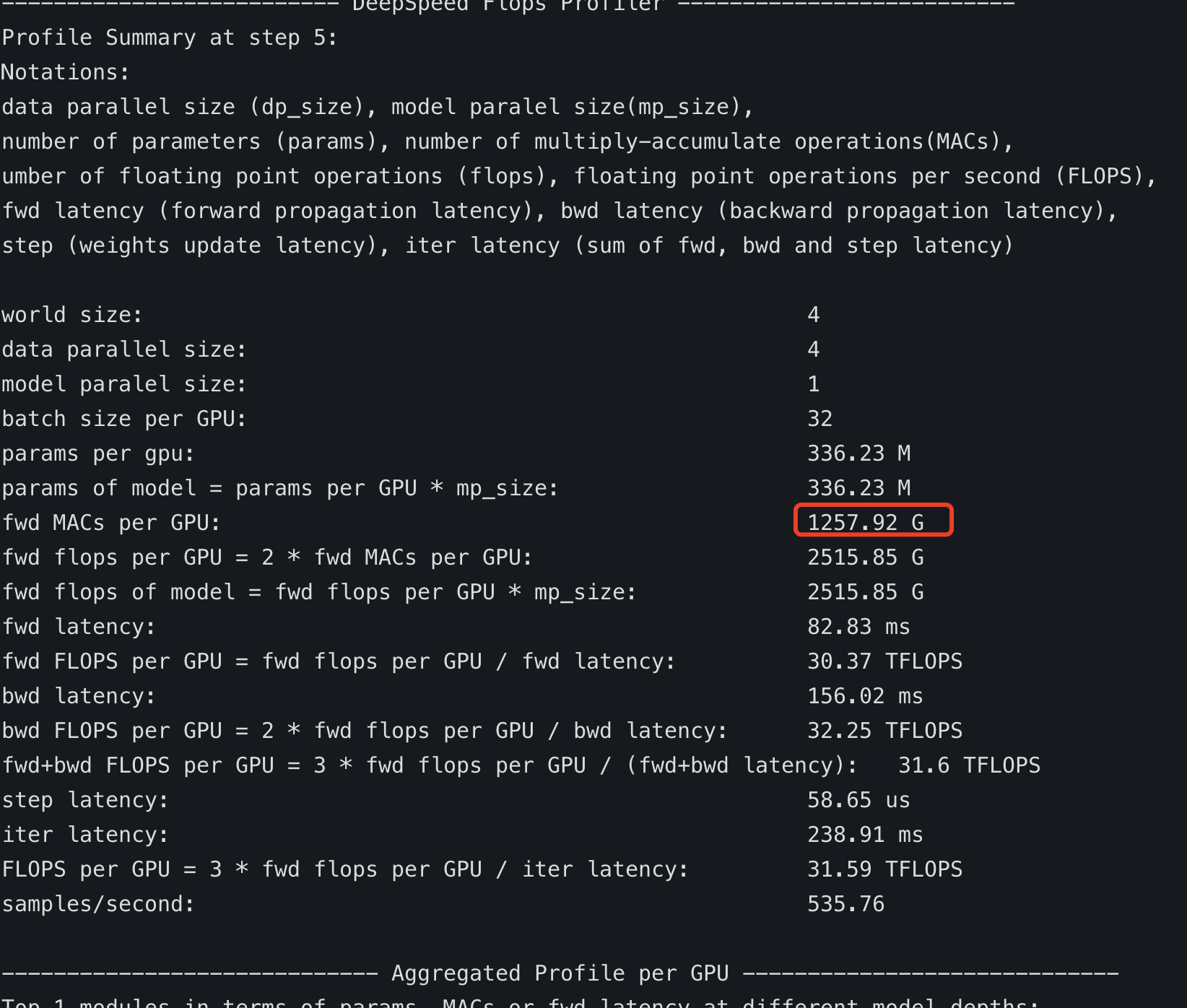

@stas00 I seem to have found the reason for the big difference in MACs, picture above is profile of "https://github.com/microsoft/DeepSpeedExamples/tree/master/bing_bert", the MACs is all zero.

While turn-off "**deepspeed_transformer_kernel**", result looks like correct:

This is close to HF deepspeed's MACs.

But native deepspeed's(**without deepspeed_transformer_kernel**) "**step latency**" only cost "**58.65 us**" while HF deepspeed's cost "**96.47 ms**":

Now problem is:

1. HF deepspeed's "backward latency" is almost twice as much as native deepspeed.

2. HF deepspeed's "step latency" cost too much time.

<|||||>I still have no idea where you get your reports from. Perhaps the first step is for you to help us reproduce your reports.<|||||>what I have compared is **https://github.com/microsoft/DeepSpeedExamples/tree/master/bing_bert** and **https://github.com/huggingface/transformers/blob/master/examples/pytorch/language-modeling/run_mlm.py**

To "**bing_bert**", the **launch script** is:

```shell

#!/bin/bash

base_dir=`pwd`

JOB_NAME=lamb_nvidia_data_64k_seq128

OUTPUT_DIR=${base_dir}/bert_model_nvidia_data_outputs

mkdir -p $OUTPUT_DIR

NCCL_TREE_THRESHOLD=0 deepspeed ${base_dir}/deepspeed_train.py \

--cf ${base_dir}/bert_large_lamb_nvidia_data.json \

--max_seq_length 128 \

--output_dir $OUTPUT_DIR \

--deepspeed \

--print_steps 100 \

--lr_schedule "EE" \

--lr_offset 10e-4 \

--job_name $JOB_NAME \

--deepspeed_config ${base_dir}/deepspeed_bsz64k_lamb_config_seq128.json \

--data_path_prefix ${base_dir} \

--use_nvidia_dataset

```

and "**deepspeed_bsz64k_lamb_config_seq128**.json" is :

```json

{

"train_batch_size": 128,

"train_micro_batch_size_per_gpu": 32,

"steps_per_print": 100,

"prescale_gradients": false,

"optimizer": {

"type": "Adam",

"params": {

"lr": 6e-3,

"betas": [

0.9,

0.99

],

"eps": 1e-8,

"weight_decay": 0.01

}

},

"flops_profiler": {

"enabled": true,

"profile_step": 5,

"module_depth": -1,

"top_modules": 1,

"detailed": true,

"output_file": "./tmp.log"

},

"zero_optimization": {

"stage": 1,

"overlap_comm": true,

"allgather_partitions": true,

"allgather_bucket_size": 5e8,

"reduce_scatter": true,

"reduce_bucket_size": 5e8,

"contiguous_gradients": true,

"grad_hooks": true,

"round_robin_gradients": false

},

"scheduler": {

"type": "WarmupLR",

"params": {

"warmup_min_lr": 1e-8,

"warmup_max_lr": 6e-3

}

},

"gradient_clipping": 1.0,

"wall_clock_breakdown": false,

"fp16": {

"enabled": true,

"loss_scale": 0

},

"sparse_attention": {

"mode": "fixed",

"block": 16,

"different_layout_per_head": true,

"num_local_blocks": 4,

"num_global_blocks": 1,

"attention": "bidirectional",

"horizontal_global_attention": false,

"num_different_global_patterns": 4

}

}

```

To huggingface "run_mlm.py", the **launch script** is:

```shell

#!/bin/bash

deepspeed \

run_mlm.py \

--deepspeed ds_config.json \

--model_type bert \

--config_name bert-large-uncased \

--output_dir output/ \

--dataset_name wikipedia \

--dataset_config_name 20200501.en \

--tokenizer_name bert-large-uncased \

--preprocessing_num_workers $(nproc) \

--max_seq_length 128 \

--do_train \

--fp16 true \

--overwrite_output_dir true \

--gradient_accumulation_steps 32 \

--per_device_train_batch_size 32

```

the **ds_config.json** is :

```json

{

"train_micro_batch_size_per_gpu": "auto",

"fp16": {

"enabled": true,

"loss_scale": 0

},

"flops_profiler": {

"enabled": true,

"profile_step": 30,

"module_depth": -1,

"top_modules": 1,

"detailed": true,

"output_file": "./tmp.log"

},

"zero_optimization": {

"stage": 3,

"allgather_partitions": true,

"allgather_bucket_size": 5e8,

"reduce_scatter": true,

"reduce_bucket_size": 5e8,

"overlap_comm": true,

"contiguous_gradients": true,

"grad_hooks": true,

"round_robin_gradients": false

},

"gradient_clipping": 1.0,

"wall_clock_breakdown": false,

"sparse_attention": {

"mode": "fixed",

"block": 16,

"different_layout_per_head": true,

"num_local_blocks": 4,

"num_global_blocks": 1,

"attention": "bidirectional",

"horizontal_global_attention": false,

"num_different_global_patterns": 4

}

}

```

My GPU is 4 x V100(16GB).

@stas00 I am pleased to provide all the information that helps to reproduce this problem<|||||>That's much better. Thank you for the explicit config sharing, @dancingpipi

Well, let's do the "what's different in the 2 pictures" exercise:

1. you're comparing zero1 in ds to zero3 in hf - that would be quite a difference. z1 would be much faster than z3. What happens if you use the same zero stages?

2. you are using a huge `--gradient_accumulation_steps 32` in hf and none in ds - perhaps that's a huge chunk of the 70x factor?

Perhaps I missed other differences, probably re-ordering the configs' content to match will make things easier to diff.

<|||||>@stas00 thanks for your reply, maybe I made some mistake, I'll do a check<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.