text

stringlengths 100

500k

| subset

stringclasses 4

values |

|---|---|

Alan M. Frieze

Template:EngvarB {{ safesubst:#invoke:Unsubst||$N=Use dmy dates |date=__DATE__ |$B= }} Alan M. Frieze (born 25 October 1945 in London, England) is a professor in the Department of Mathematical Sciences at Carnegie Mellon University, Pittsburgh, United States. He graduated from the University of Oxford in 1966, and obtained his PhD from the University of London in 1975. His research interests lie in combinatorics, discrete optimisation and theoretical computer science. Currently, he focuses on the probabilistic aspects of these areas; in particular, the study of the asymptotic properties of random graphs, the average case analysis of algorithms, and randomised algorithms. His recent work has included approximate counting and volume computation via random walks; finding edge disjoint paths in expander graphs, and exploring anti-Ramsey theory and the stability of routing algorithms.

1 Key contributions

1.1 Polynomial time algorithm for approximating the volume of convex bodies

1.2 Algorithmic version for Szemerédi regularity partition

2 Awards and honours

3 References and external links

Two key contributions made by Alan Frieze are:

(1) polynomial time algorithm for approximating the volume of convex bodies

(2) algorithmic version for Szemerédi regularity lemma

Both these algorithms will be described briefly here.

Polynomial time algorithm for approximating the volume of convex bodies

The paper [1] is a joint work by Martin Dyer, Alan Frieze and Ravindran Kannan.

The main result of the paper is a randomised algorithm for finding an ϵ{\displaystyle \epsilon } approximation to the volume of a convex body K{\displaystyle K} in n{\displaystyle n} -dimensional Euclidean space by assume the existence of a membership oracle. The algorithm takes time bounded by a polynomial in n{\displaystyle n} , the dimension of K{\displaystyle K} and 1/ϵ{\displaystyle 1/\epsilon } .

The algorithm is a sophisticated usage of the so-called Markov Chain Monte Carlo (MCMC) method. The basic scheme of the algorithm is a nearly uniform sampling from within K{\displaystyle K} by placing a grid consisting n-dimensional cubes and doing a random walk over these cubes. By using the theory of rapidly mixing Markov chains, they show that it takes a polynomial time for the random walk to settle down to being a nearly uniform distribution.

Algorithmic version for Szemerédi regularity partition

This paper [2] is a combined work by Alan Frieze and Ravindran Kannan. They use two lemmas to derive the algorithmic version of the Szemerédi regularity lemma to find an ϵ{\displaystyle \epsilon } -regular partition.

Lemma 1:

Fix k and γ{\displaystyle \gamma } and let G=(V,E){\displaystyle G=(V,E)} be a graph with n{\displaystyle n} vertices. Let P{\displaystyle P} be an equitable partition of V{\displaystyle V} in classes V0,V1,…,Vk{\displaystyle V_{0},V_{1},\ldots ,V_{k}} . Assume | V 1 | > 4 2 k {\displaystyle |V_{1}|>4^{2k}} and 4k>600γ2{\displaystyle 4^{k}>600\gamma ^{2}} . Given proofs that more than γk2{\displaystyle \gamma k^{2}} pairs (Vr,Vs){\displaystyle (V_{r},V_{s})} are not γ{\displaystyle \gamma } -regular, it is possible to find in O(n) time an equitable partition P′{\displaystyle P'} (which is a refinement of P{\displaystyle P} ) into 1+k4k{\displaystyle 1+k4^{k}} classes, with an exceptional class of cardinality at most |V0|+n/4k{\displaystyle |V_{0}|+n/4^{k}} and such that ind(P′)≥ind(P)+γ5/20{\displaystyle \operatorname {ind} (P')\geq \operatorname {ind} (P)+\gamma ^{5}/20}

Let W{\displaystyle W} be a R×C{\displaystyle R\times C} matrix with |R|=p{\displaystyle |R|=p} , | C | = q {\displaystyle |C|=q} and ‖W‖inf≤1{\displaystyle \|W\|_{\inf }\leq 1} and γ{\displaystyle \gamma } be a positive real.

(a) If there exist S ⊆ R {\displaystyle S\subseteq R} , T⊆C{\displaystyle T\subseteq C} such that |S|≥γp{\displaystyle |S|\geq \gamma p} , | T | ≥ γ q {\displaystyle |T|\geq \gamma q} and |W(S,T)|≥γ|S||T|{\displaystyle |W(S,T)|\geq \gamma |S||T|} then σ1(W)≥γ3pq{\displaystyle \sigma _{1}(W)\geq \gamma ^{3}{\sqrt {pq}}}

(b) If σ1(W)≥γpq{\displaystyle \sigma _{1}(W)\geq \gamma {\sqrt {pq}}} , then there exist S⊆R{\displaystyle S\subseteq R} , T⊆C{\displaystyle T\subseteq C} such that |S|≥γ′p{\displaystyle |S|\geq \gamma 'p} , |T|≥γ′q{\displaystyle |T|\geq \gamma 'q} and W(S,T)≥γ′|S||T|{\displaystyle W(S,T)\geq \gamma '|S||T|} where γ′=γ3/108{\displaystyle \gamma '=\gamma ^{3}/108} . Furthermore S{\displaystyle S} , T{\displaystyle T} can be constructed in polynomial time.

These two lemmas are combined in the following algorithmic construction of the Szemerédi regularity lemma.

[Step 1] Arbitrarily divide the vertices of G{\displaystyle G} into an equitable partition P1{\displaystyle P_{1}} with classes V0,V1,…,Vb{\displaystyle V_{0},V_{1},\ldots ,V_{b}} where |Vi|⌊n/b⌋{\displaystyle |V_{i}|\lfloor n/b\rfloor } and hence | V 0 | < b {\displaystyle |V_{0}|<b} . denote k 1 = b {\displaystyle k_{1}=b} .

[Step 2] For every pair (Vr,Vs){\displaystyle (V_{r},V_{s})} of Pi{\displaystyle P_{i}} , compute σ 1 ( W r , s ) {\displaystyle \sigma _{1}(W_{r,s})} . If the pair (Vr,Vs){\displaystyle (V_{r},V_{s})} are not ϵ−{\displaystyle \epsilon -} regular then by Lemma 2 we obtain a proof that they are not γ=ϵ9/108−{\displaystyle \gamma =\epsilon ^{9}/108-} regular.

[Step 3] If there are at most ϵ(k12){\displaystyle \epsilon \left({\begin{array}{c}k_{1}\\2\\\end{array}}\right)} pairs that produce proofs of non γ − {\displaystyle \gamma -} regularity that halt. Pi{\displaystyle P_{i}} is ϵ−{\displaystyle \epsilon -} regular.

[Step 4] Apply Lemma 1 where P=Pi{\displaystyle P=P_{i}} , k=ki{\displaystyle k=k_{i}} , γ=ϵ9/108{\displaystyle \gamma =\epsilon ^{9}/108} and obtain P′{\displaystyle P'} with 1+ki4ki{\displaystyle 1+k_{i}4^{k_{i}}} classes

[Step 5] Let ki+1=ki4ki{\displaystyle k_{i}+1=k_{i}4^{k_{i}}} , Pi+1=P′{\displaystyle P_{i}+1=P'} , i=i+1{\displaystyle i=i+1} and go to Step 2.

In 1991, Frieze received (jointly with Martin Dyer and Ravi Kannan) the Fulkerson Prize in Discrete Mathematics awarded by the American Mathematical Society and the Mathematical Programming Society. The award was for the paper "A random polynomial time algorithm for approximating the volume of convex bodies" in the Journal of the ACM).

In 1997 he was a Guggenheim Fellow.

In 2000, he received the IBM Faculty Partnership Award.

In 2006 he jointly received (with Michael Krivelevich) the Professor Pazy Memorial Research Award from the United States-Israel Binational Science Foundation.

In 2011 he was selected as a SIAM Fellow.[3]

In 2012 he was selected as an AMS fellow.[4]

In 2014 he gave a plenary talk at the International Congress of Mathematicians in Seoul, South Korea.

References and external links

↑ Template:Cite news

↑ Siam Fellows Class of 2011

↑ List of Fellows of the American Mathematical Society, retrieved 29 December 2012.

Alan Frieze's web page

Fulkerson prize-winning paper

Carol Frieze's web page

Alan Frieze's publications at DBLP

Certain self-archived works are available here

Template:Persondata

Retrieved from "https://en.formulasearchengine.com/index.php?title=Alan_M._Frieze&oldid=263971"

Use dmy dates from September 2014

Alumni of the University of Oxford

Alumni of University College London

Carnegie Mellon University faculty

Theoretical computer scientists

Fellows of the American Mathematical Society

Guggenheim Fellows

English mathematicians

Fellows of the Society for Industrial and Applied Mathematics | CommonCrawl |

Earth Science Meta

Earth Science Stack Exchange is a question and answer site for those interested in the geology, meteorology, oceanography, and environmental sciences. It only takes a minute to sign up.

Why doesn't the 71% water of the earth dry or evaporate?

Perhaps a simple question, we know 71% of the earth's surface contains water as oceans. If Earth's age is 4.543 billion years, then I guess it should be decreased with drying or should have been dried so far. Why doesn't it dry or decrease?

If we put some water in sunlight, it evaporates. The oceans are the chief source of rain, but lakes and rivers also contribute to it. The sun's heat evaporates the water.

So I wonder why doesn't the 71% water coverage not evaporate, decreasing until gone? Why is it still 71% after billions of years? Does water keep coming from somewhere? Or, does moving water not evaporate? Why is it still here?

HarryHarry

$\begingroup$ Water evaporates, but comes down back as rain or other forms of precipitation. $\endgroup$

– Gimelist

$\begingroup$ Where do you think the water goes when it evaporates? $\endgroup$

$\begingroup$ tl,dr: Because earth's atmosphere can only hold so much evaporated water, far, far less than earth's oceans hold. $\endgroup$

– RBarryYoung

$\begingroup$ How would anyone know that it was 71% billions of years ago? $\endgroup$

– Tim

$\begingroup$ Who said it doesn't evaporate :O :D? It just also precipitates :D. $\endgroup$

– Teacher KSHuang

There are two ways this problem needs to be looked at. The first is more astronomy than Earth science. The Earth as an entire system is largely contained. Its gravity and magnetic field retains nearly all of its elements. Earth does lose hydrogen and helium and cosmic rays will split water molecules leading to a loss of an impressive amount of hydrogen and as an indirect result a loss of water, but this is loss irrelevant compared to the size of the oceans. More detail here. Space dust, comets and asteroids contain water so some water is returned from space too.

By the upper estimate in one article, 50,000 tons of hydrogen per year works out to about 450,000 tons of water lost every year. (and 400,000 tons of oxygen added as a result). Compared to the mass of Earth's oceans those numbers are small. 450,000 tons per year, or 450 trillion tons over a billion years is nothing compared to the 1.3 million trillion tons of water Earth has in its oceans. By the highest estimate, it will take 30 billion years at the current rate and at the Sun's current luminosity for Earth to lose just 1% of its oceans. (Will look to update with other estimates).

As for the rest of the question, once we recognize that loss into space is insignificant, then virtually all water is continuously cycled though the water cycle or hydrological cycle. Very little water gets destroyed or chemically transformed. Nearly all of it, even of millions or billions of years, evaporates, or, turns into ice, or gets absorbed by plants, or seeps underground, but it always returns. Evaporated water returns to Earth as rain. Water that gets frozen on the ice caps eventually melts back into the oceans. Water absorbed by plants or that seeps underground does eventually get returned to the surface by plate tectonics or volcanism. Plants that store water return it when the plant is eaten. Water is very hard to destroy, so it stays remarkably constant on Earth over time.

edited May 1, 2017 at 3:10

Nayuki

userLTKuserLTK

$\begingroup$ I think you have a typo in "gets absorbed by planets". $\endgroup$

– Dragomok

$\begingroup$ One problem with this answer is that 50000 tons per year is nowhere near the highest estimate. A fairly common estimate is 3 kg/sec, which is equivalent to twice your rate of 50000 tons per year. Some estimate even higher current mass loss rates. Another problem: Oxygen comprises 8/9 of the mass of a water molecule. Lose the 1/9 that is hydrogen and the water it's gone. One last problem: That ~3 kg/second is the current rate. It was arguably orders of magnitude higher in the distant past and will be orders of magnitude higher in the distant future. $\endgroup$

– David Hammen

$\begingroup$ @Dragomok Thank you. That should be gets absorbed by plants. Fixed. $\endgroup$

– userLTK

$\begingroup$ @DavidHammen I did the math for the 8/9ths. That's why 50 tons of hydrogen works out to 450 tons of water. Also, I know it was orders of magnitudes higher in the distant past, certainly higher when Earth was forming and very hot and higher during the late heavy bombardment and higher prior to the magnetic field. I didn't want to get into that, but I should probably add a notation. As to the estimate, that's a good point. I should adjust the estimate. $\endgroup$

Why doesn't 71% water of the earth dry or evaporate?

The simple answer: Because it rains.

The not so simple answer: By some estimates, the Earth has already lost about a quarter of its water, and it is predicted to lose almost all of its water in a billion or so years from now.

It rains because temperature decreases with altitude. This lapse rate means that moist air becomes saturated at some point in the atmosphere. You can see this point on somewhat cloudy days. While cumulus clouds have puffy tops, they have flat bottoms. Those flat bottoms reflect the point where the humidity level reaches 100%.

Currently, only an extremely small fraction of the moisture in the air makes its way to the top of the thermosphere. The tiny amount of moisture that does make its way to the stratosphere can migrate throughout the stratosphere. The top of the stratosphere is unprotected from the nastier parts of the Sun's output. Ultraviolet radiation dissociates water into hydrogen and oxygen. Some of that hydrogen escapes into space. That lost hydrogen represents lost water.

The Earth is currently losing about three kilograms of hydrogen into space every second. At that rate, it would take about a trillion (1012) years for the Earth to lose all of its waters. There are signs that the escape rate was significantly higher in the distant past. This is a byproduct of solutions to the Faint Young Sun problem. The signs of this early high escape rate are written in stone (serpentine, to be specific). Very old rocks have a different deuterium ratio that is currently observed.

The escape rate will inevitably become significantly higher in the distant future as the Sun gets warmer and warmer. The Earth will eventually lose all of its water.

David HammenDavid Hammen

$\begingroup$ A related question is how much of Earth's water that locked in the mantle will be locked in the mantle and not be lost to space. Might not relevant though because that water is not available for liquid water on the surface. $\endgroup$

$\begingroup$ is predicted to lose almost all of its water in a billion or so years from now.. Strange, considering the most upvoted answer suggests that the Earth will lose about 1% of its water after 30 billion years! $\endgroup$

$\begingroup$ @user1993 - That answer reflects the current loss rate. The Sun grows more luminous as it ages. It will be about 10% more luminous a billion years from now. That increase in luminosity will eventually trigger a runaway greenhouse effect. $\endgroup$

$\begingroup$ @Harry .. as Michael mentioned above there may be a very large amount of water locked in the Earth's mantle. See this article astrobio.net/news-exclusive/… (there are many others). However, contrary to what Michael said, the researchers think that this water may have been a buffer helping the Earth maintain a constant (even more than expected) volume of surface water. $\endgroup$

– Jack R. Woods

$\begingroup$ @DavidHammen, Hi, is it measured beyond doubt that the earth is currently losing kilograms of hydrogen into space every second? Or is it speculation that has its critics? $\endgroup$

– Pacerier

The bigger question is really: where would it go?

Because of course matter isn't created or destroyed. So it'd have to go elsewhere.

The only two places it could go are:

Into the Earth. But water is fairly light (1 g/mL versus Earth which appears to increase from 1.02 g/mL near the surface to 13.09 g/mL at the center). Plus, even if water could/did continue to transition into the Earth in large amounts, it'd just build up there until it reached a saturation level. And there's only so much room between the highly compressed rock.

Out to space. However gravity generally keeps the atmosphere here, just like it does for us. A very small percentage of gas does escape. However, it's predominantly lower mass gases like hydrogen and helium. The question on our site of What "g" would be needed to keep helium on Earth? is quite useful for information on this.

Or, alternatively,

Out to space as its constituent gases. (Monotonic) Oxygen and especially Hydrogen do escape somewhat more readily. Electrolysis and other water splitting methods do cause a small percentage of Earth's water to be broken down at any given time. However, based upon this estimate of 95,000 tons of hydrogen lost per year, and this estimate of $1.4 \times 10^{21}$ kg of water, it'd take 1.8 trillion years to lose all of Earth's water by hydrogen loss.

Otherwise, if it isn't leaving the system, it could only build up in the atmosphere... potentially until reaching a level where the air could hold no more. Theoretically that'd be at 100% relative humidity. Unfortunately the troposphere can only hold the equivalent of a layer of a couple inches\few cm of water. Additionally water tends to leaves the air when enough areas of it reach 100% RH, through processes like dew and precipitation.

And so over the long run, it can only reach an equilibrium, where the amount of water the air held was in balance with the amount it lost. And that seems in line with what we see. Moisture levels vary, but aren't rapidly changing in any direction. Considering that there's 96000 times more water in the oceans than there is in the air currently, the atmosphere surely couldn't hold it all anyways! If it can't leave, and the atmosphere cannot hold it all... well, it has to stay on the Earth. Certainly we see the equilibrium in practice too, as sea\lake levels aren't dropping consistently. Lake levels actually vary quite a bit, showing evaporation has the ability to drain at least the lakes fairly fast. Yet they aren't dropping consistently in any noticeable way. We're very dry in Florida right now, and lakes are way down... but in places like California and the Piedmont they've been seeing great flooding. But neither lasts in the long run.

Looks like studies do estimate that the Earth has lost 1/4 of its water in its estimated lifetime. So indeed it's not that nothing leaves. It's just that it's very slow. If it wasn't... well we'd be seeing it quickly change now! Over our lifetimes, over the past few thousand years. But there's no indications that general lake/ocean levels have drastically changed.

I do really get that there can be skepticism at billions of years. It's certainly something we can't verify too easily, visually, personally. And with Biblical\other religious sources casting potential dispute upon it, that increases the question.

Honestly, a reasonable scientist should welcome skepticism and try to address it with fairness and levelheadedness. Unfortunately there certainly are plenty who are dismissive and belittling, either openly, or in their attitudes.

But while questioning is a very worthwhile endeavor, this particular line of reasoning doesn't appear to hold much mustard. Be wary not to jump too quickly on any potential disagreements you see to accepted theories and thus run hard with them, or you're no different from those overly rigid scientists. Keep seeking for the truth as something of the greatest value. Regardless of what it is, and in the end it alone shall stand, and shall set you free!

JeopardyTempestJeopardyTempest

$\begingroup$ informative, your opinion is much appreciated. $\endgroup$

– Harry

$\begingroup$ There's one big problem with this answer, which is that almost all of the hydrogen that escapes from the Earth's atmosphere was originally in the form of water. Sunlight dissociates water in the middle atmosphere into hydrogen and oxygen. Hydrogen escaping into space means water is lost into space. $\endgroup$

$\begingroup$ @David Hammen: I'm confused, that's what I'm suggesting by option 3. I didn't know the water dissociation region was primarily the middle atmosphere, as you suggest, and can add that... but my calculation equated any hydrogen loss to be water loss, so wherever the water splitting occurs, the hydrogen loss is being counted as water loss. You sound like it's a bad answer! $\endgroup$

– JeopardyTempest

$\begingroup$ (I see my calculation used a poorly noted 9 to do the water to hydrogen mass conversion), perhaps I should've more explicitly used (2.02/18.02), as looking back I thought I'd misequated water mass to hydrogen mass too $\endgroup$

Sep 20, 2022 at 3:35

There are good answers for this question but Earth Science is one of the few sciences that lends itself really well to experiments around the house.

To see the effect you're talking about (water evaporating, but not going away) take a large rag, and soak it in water. Get it very, very wet. Place the large rag in a zip-lock bag and make sure to seal it tight. Now place the bag with the rag out in the sun.

You will notice how the water never leaves the bag. During the sunny part of the day the water evaporates into the air in the bag, and when there is enough in the air, the water condenses and drips (rains) back down onto the rag.

The rag is like the "ground". The bag is like the atmosphere.

Just like with the rag, after water evaporates it "condenses" in the atmosphere (on the bag) and rains (drips) back down to earth (the rag).

This is a over simplified example of the process, but it demonstrates it very well.

coteyrcoteyr

$\begingroup$ sounds logical, but don't you think reprocessing of water that evaporates and comes down in rain form should decrease in amount? we see when it rains there's puddles and some water amount in streets for 1 or 2 days then it evaporates but eventually it gets dried. $\endgroup$

$\begingroup$ It doesnt go anywhere just soaks into the ground or moves by evaporating and raining somewhere elae $\endgroup$

– coteyr

$\begingroup$ Barring some really really small amounts that "leak" into space via various amounts (very very small). All the water on earth stays on earth. It just moves around from place to place. Some times it's ice, some times it's water, some times clouds, but always here. $\endgroup$

$\begingroup$ as clouds travel, so evaporated water of oceans should fall as rain form somewhere on dry part of lands. still it's 71% after so many years as you mentioned some water soaks into the ground as well? $\endgroup$

$\begingroup$ Essentially yes. With out getting silly with numbers there is always the same amount of water on earth, it just moves around. $\endgroup$

"If we put some water in sunlight it evaporates." Really? If I take some water in a closed bottle and put it in the sunlight, then it will evaporate? Obviously not. Why not? Because the air (gas) in the bottle is saturated with water. The relative humidity of that air is 100%, or close to it. You probably know this already. So you should realize that your question is flawed. Not only does water not evaporate in the bottle, but water evaporates at night and inside houses without any sunlight. A better question, imho, is: "Why isn't the Earth's atmosphere saturated with water after 4 billion years?" The atmosphere has a mass of about 5E+18 kg (that is 5 followed by 18 0s, eg 5,000,000,000,000,000,000). The water in the oceans has a mass of about 1.3E+21 kg, meaning about 250 times more mass than the atmosphere. Simply put: there's not enough atmosphere to hold all that water. The reason why the atmosphere is not saturated, does not have 100% relative humidity, is that the temperature of the air varies a lot. Both day to night, and season to season. The air is frequently being cooled and the water lost via precipitation. When this air is reheated, both by direct sunlight and by contact with the surface which warms up even more in direct sunlight, it is low in relative humidity. This is the air that will allow water to evaporate. Because of these temperature cycles, air never has the time to reach saturation.

ableable

5111 bronze badge

Answer #43 above is absolutely correct. Unless water molecules are split (disintegrate) into hydrogen and oxygen, they almost never leave Earth into space. We have practically the same amount of water on Earth as it had when first created. After evaporation, it returns as rain. Every time. Every place. This is why I become crazy when government officials tell us that we are running out of water. This is absurd. We can't run out of water. But it certainly can be mismanaged -- but then, that's the government's job. Isn't it?

Paul MorrisPaul Morris

$\begingroup$ A little water vapor getting out to the upper atmosphere can escape due to solar winds, but it is small (making your post imho okay). Note, water as vapor is lesser dense than air (molecular weight is $\approx$ 18 to the $\approx$ 29 or the air), this increases its relative density in the higher atmosphere - but the effect is still small. Although water escaped from Mars and Venus probably by this process. $\endgroup$

– peterh

I don't want to post a super long answer so I'll keep it simple.

Pressure and gravity due to the mass of the earth keeps the water within the atmosphere and cycling through the 3 states of solid, liquid, and gas.

Our water is constantly being recycled, and some things create water, like humans (we create water in our lungs when we breathe.)

JoshJosh

Thanks for contributing an answer to Earth Science Stack Exchange!

What "g" would be needed to keep helium on Earth?

Why do the dry and moist adiabatic lapse rates converge with height?

Why is Venezuela so dry?

Water on Mars and Earth

Does the magnetic field really protect Earth from anything?

Why doesn't sea level show seasonality?

Why the Indian Monsoon doesn't start in the north?

At what latitudes does the precipitation water for Europe evaporate?

Why doesn't earth radiate at wavelengths where there is strong absorption?

How is centrifugal force identified in equations of fluid motion in the rotating reference system of earth? | CommonCrawl |

Unsupervised machine learning applied to scanning precession electron diffraction data

Ben H. Martineau1,

Duncan N. Johnstone1,

Antonius T. J. van Helvoort3,

Paul A. Midgley1 &

Alexander S. Eggeman ORCID: orcid.org/0000-0002-3447-43222

Advanced Structural and Chemical Imaging volume 5, Article number: 3 (2019) Cite this article

Scanning precession electron diffraction involves the acquisition of a two-dimensional precession electron diffraction pattern at every probe position in a two-dimensional scan. The data typically comprise many more diffraction patterns than the number of distinct microstructural volume elements (e.g. crystals) in the region sampled. A dimensionality reduction, ideally to one representative diffraction pattern per distinct element, may then be sought. Further, some diffraction patterns will contain contributions from multiple crystals sampled along the beam path, which may be unmixed by harnessing this oversampling. Here, we report on the application of unsupervised machine learning methods to achieve both dimensionality reduction and signal unmixing. Potential artefacts are discussed and precession electron diffraction is demonstrated to improve results by reducing the impact of bending and dynamical diffraction so that the data better approximate the case in which each crystal yields a given diffraction pattern.

Scanning transmission electron microscopy (STEM) investigations increasingly combine the measurement of multiple analytical signals as a function of probe position with post-facto computational analysis [1]. In a scan, the number of local signal measurements is usually much greater than the number of significantly distinct microstructural elements and this redundancy may be harnessed during analysis, for example by averaging signals over like regions to improve signal to noise. Unsupervised machine learning techniques automatically exploit data redundancy to find patterns with minimal prior constraints [2]. In analytical electron microscopy, such methods have been applied to learn representative signals corresponding to separate microstructural elements (e.g. crystal phases) and to unmix signals comprising contributions from multiple microstructural elements sampled along the beam path [3,4,5,6,7,8,9,10]. These studies have primarily applied linear matrix decompositions such as independent component analysis (ICA) and non-negative matrix factorisation (NMF).

Scanning precession electron diffraction (SPED) enables nanoscale investigation of local crystallography [11, 12] by recording electron diffraction patterns as the electron beam is scanned across the sample with a step size on the order of nanometres. The incorporation of double conical rocking of the beam, also known as precession [13], achieves integration through a reciprocal space volume for each reflection. Precession has been found to convey a number of advantages for interpretation and analysis of the resultant diffraction patterns, in particular the suppression of intensity variation due to dynamical scattering [14,15,16]. The resultant four-dimensional dataset, comprising two real and two reciprocal dimensions (4D-SPED), can be analysed in numerous ways. For example, the intensity of a sub-set of pixels in each diffraction pattern can be integrated (or summed) as a function of probe position, to form so-called virtual bright field (VBF) or virtual dark field (VDF) images [17, 18]. VBF/VDF analysis has been used to provide insight into local crystallographic variations such as phase [17], strain [19] and orientation [20]. In another approach, the collected diffraction patterns are compared against a library of precomputed templates, providing a visualisation of the microstructure and orientation information, a process known as template or pattern matching [11]. These analyses do not utilise the aforementioned redundancy present in data and may require significant effort on the part of the researcher. Here, we explore the application of unsupervised machine learning methods to achieve dimensionality reduction and signal unmixing.

GaAs (cubic, F43m) nanowires containing type I twin (\(\Sigma 3\)) [21] boundaries were taken as a model system for this work. The long axis of these nanowires is approximately parallel to the [111] crystallographic direction as a result of growth by molecular beam epitaxy [22] on (111). In cross section, these nanowires have an approximately hexagonal geometry with a vertex-to-vertex distance of 120–150 nm. Viewed near to the \([1{\overline{1}}0]\) zone axis, the twin boundary normal is approximately perpendicular to the incident beam direction.

SPED experiments

Scanning precession electron diffraction was performed on a Philips CM300 FEGTEM operating at 300 kV with the scan and simultaneous double rocking of the electron beam controlled using a NanoMegas Digistar external scan generator. A convergent probe with convergence semi-angle of \(\sim 1.5\hbox { mrad}\) and precession angles of 0, 9 and 35 mrad was used to perform scans with a step size of 10 nm using the ASTAR software package. The resolution was thus dominated by the step size. PED patterns were recorded using a Stingray CCD camera to capture the image on the fluorescent binocular viewing screen.

It is generally inappropriate to manipulate raw data before applying multivariate methods such as decomposition or clustering, which cannot be considered objective if subjective prior alterations have been made. In this work, the only data manipulation applied before machine learning is to align the central beams of each diffraction pattern. Geometric distortions introduced from the angle between the camera and the viewing screen were corrected by applying an opposite distortion to the data after the application of machine learning methods.

Multislice simulations

A twinned bi-crystal model was constructed with the normal to the [111] twin boundary inclined at an angle of \(55 ^{\circ }\) to the incident beam direction so that the two crystals overlapped in projection. In this geometry, both crystals are oriented close to \(\langle 511\rangle\) zone axes with coherent matching of the \(\{0{\bar{6}}6\}\) and \(\{2{\bar{8}}2\}\) planes in these zones. Three precession angles were simulated using the TurboSlice package [23]: 0, 10 and 20 mrad, with 200 distinct azimuthal positions about the optic axis to ensure appropriate integration in the resultant simulated patterns [24]. The crystal model used in the simulation comprised 9 unique layers each 0.404 nm thick. 15 layers were used leading to a total thickness of 54.6 nm. These \(512\times 512\)-pixel patterns with 16-bit dynamic range were convolved with a 4-pixel Gaussian kernel to approximate a point spread function.

Linear matrix decomposition

Latent linear models describe data by the linear combination of latent variables that are learned from the data rather than measured—more pragmatically, the repeated features in the data can be well approximated using a small number of basis vectors. With appropriate constraints, the basis vectors may be interpreted as physical signals. To achieve this, a data matrix, \(\mathbf X\), can be approximated as the matrix product of a matrix of basis vectors \(\mathbf W\) (components), and corresponding coefficients \(\mathbf Z\) (loadings). The error in the approximation, or reconstruction error, may be expressed as an objective function to be minimised in a least squares scheme:

$$\begin{aligned} \left| \left| \mathbf X -\mathbf WZ \right| \right| ^{2}_{\mathrm {F}} \end{aligned}$$

where \(||\mathbf A ||_{\mathrm {F}}\) is the Frobenius normFootnote 1 of matrix \(\mathbf A\). More complex objective functions, for example incorporating sparsity promoting weighting factors [25], may be defined. We note that the decomposition is not necessarily performed by directly computing this error minimisation.

Three linear decompositions were used here: singular value decomposition (SVD) [2, 26], independent component analysis (ICA) [27], and non-negative matrix factorisation (NMF) [25, 28]. These decompositions were used as implemented in HyperSpy [29], which itself draws on the algorithms implemented in the open-source package scikit-learn [30].

The singular value decomposition is closely related to the better-known principal component analysis, in which the vectors comprising \(\mathbf W\) are orthonormal. The optimal solution to rank L is then obtained when \(\mathbf W\) is estimated by eigenvectors (principal components) corresponding to the L largest eigenvalues of the empirical covariance matrixFootnote 2. The optimal low-dimensional representation of the data is given by \(\mathbf z _{i} = \mathbf W ^{T}{} \mathbf x _{i}\), which is an orthogonal projection of the data on to the corresponding subspace and maximises the statistical variance of the projected data. This optimal reconstruction may be obtained via truncated SVD of the data matrix—the factors for PCA and SVD are equivalent, though the loadings may differ by independent scaling factors [31].

Unmixing measured signals to determine source signals a priori is known as blind source separation (BSS) [32]. SVD typically yields components that do not correspond well with the original sources due to its orthogonality constraint. ICA solves this problem by maximising the independence of the components, instead of the variance, and is applied to data previously projected by SVD using the widespread FastICA algorithm [27]. NMF [25, 28] may also be used for BSS and imposes \(\mathbf W \ge \mathbf 0 , \mathbf Z \ge \mathbf 0\). To impose these constraints, the algorithm computes a coordinate descent numerical minimisation of Eq. 1. Such an approach does not guarantee convergence to a global minimum and the results are sensitive to initialisation. The implementation used here initialises the optimisation using a non-negative double singular value decomposition (NNDSVD), which is based on two SVD processes, one approximating the data matrix, the other approximating positive sections of the resulting partial SVD factors [33]. This algorithm gives a well-defined non-negative starting point suitable for obtaining a sparse factorisation. Finally, the product \(\mathbf WZ\) is invariant under the transformation \(\mathbf W \rightarrow\) \(\mathbf W \varvec{\lambda }\), \(\mathbf Z \rightarrow\) \(\varvec{\lambda }^{-1}{} \mathbf Z\), where \(\varvec{\lambda }\) is a diagonal matrix. This fact is used to scale the loadings to a maximum value of 1.

Data clustering

Clustering points in space may be achieved using numerous methods. One of the best known is k-means, in which the positions of several cluster prototypes (centroids) are iteratively updated according to the mean of the nearest data points [34]. The clusters thus found are considered to be "hard"—each datum can only belong to a single cluster. Here, we apply fuzzy c-means [35] clustering, which has the significant advantage that data points may be members of multiple clusters allowing for an interpretation based on mixing of multiple cluster centres. For example, a measured diffraction pattern that is an equal mixture of the two basis patterns lies precisely between the two cluster centres and will have a membership of 0.5 to each. We also employ the Gustafson–Kessel variation for c-means, which allows the clusters to adopt elliptical, rather than spherical, shapes [36].

Cluster analysis in spaces of dimension greater than about 10 is unreliable [37, 38] as with increasing dimension "long" distances become less distinct from "short". The relevant dimension of the collected diffraction patterns is the size of the image, on the order of \(10^4\). A dimensionality reduction is, therefore, performed first, using SVD, and clustering is applied in the space of loading coefficients [34]. The cluster centres found in this low-dimensional space can be re-projected into the data space of diffraction patterns to produce a result equivalent to a weighted mean of the measured patterns within the cluster. The spatial occurrence of each basis pattern may then be visualised by plotting the membership values associated with each cluster as a function of probe position to form a membership map.

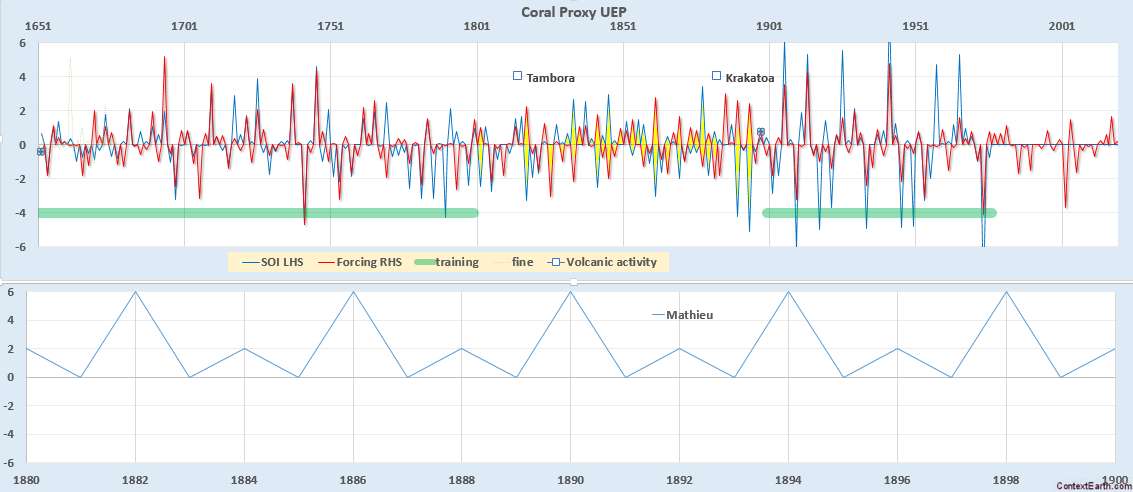

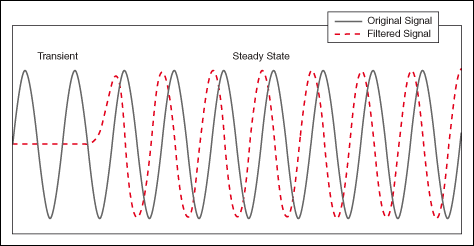

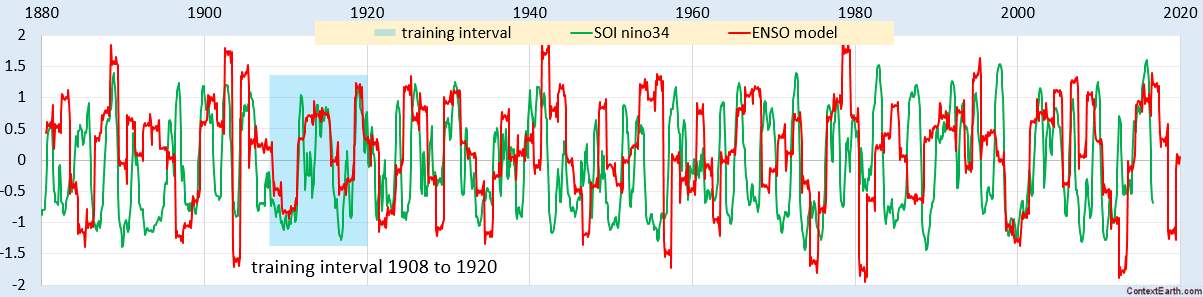

SPED data were acquired with precession angles of 0, 9 and 35 mrad from a GaAs nanowire oriented near to a \([1{\overline{1}}0]\) zone axis such that the twin boundary normal was approximately perpendicular to the incident beam direction, as shown in Fig. 1. The bending of this nanowire is evident in the data acquired without precession (Fig. 1a) as at position iii the diffraction pattern is near the zone axis, whereas at position i a Laue circle is clearly visible. The radius of this Laue circle is \(\sim 24\hbox { mrad}\), which provides an estimate of the bending angle across the field of view. When a precession angle of 35 mrad (i.e. larger than the bending angle) was used, all measured patterns appear close to zone axis (Fig. 1b) due to the reciprocal space integration resulting from the double conical rocking geometry. The effect of this integration is also seen in the contrast of the virtual dark-field image, which shows numerous bend contours without precession and less complex variation in intensity with precession. We surmise that precession leads to the data better approximating the situation where there is a single diffraction pattern associated with each microstructural element, which here is essentially the two twinned crystal orientations and the vacuum surrounding the sample. The region of interest also contains a small portion of carbon support film, which is just visible in the virtual dark-field images as a small variation in intensity. The position of the carbon film has been indicated in the figure.

SPED data from a GaAs nanowire and virtual dark-field images formed by plotting the intensity within the disks marked around \(\lbrace 111\rbrace\) reflections, as a function of probe position. a Without precession and b with 35 mrad precession. Diffraction pattern and VDF image scale bars are common to all subfigures and measure 1 Å\(^{-1}\) and 150 nm respectively. The approximate position of the carbon film is indicated by the red dashed line

Using SVD, we can produce a scree plot showing the fraction of total variance in the data explained by each principal component pattern. Figure 2a shows the scree plot for the 0, 9 and 35 mrad data. A regime change, from relatively high variance components to relatively low variance components, may be identified [2, 39] after 3 components for the data acquired with 35 mrad precession, after 4 components with 9 mrad precession, and cannot clearly be identified without precession. While there is a small change in the line after 4 components in the curve for data recorded without precession, the variance described by the components on either side of this is relatively similar, particularly given the ordinate is on a log scale. This demonstrates that the use of precession reduces the number of components required to describe the data, consistent with the intuitive understanding of the effect of reciprocal space integration achieved using precession. The 4th component, significant in the 9 mrad data, arises because the top and bottom of the nanowire are sufficiently differently oriented, as a result of bending, to be distinguished by the algorithm. We, therefore, continue our analysis focusing attention on data acquired with relatively large precession angles.

SVD and ICA analysis of SPED data from a GaAs nanowire. a Scree plot of variance explained by each SVD component for 0, 9 and 35 mrad data. b First 3 SVD components for 35 mrad data. c ICA components for 35 mrad data. Intensities in red indicate positive values and those in blue indicate negative values. Pattern and loading scale bars are common to all subfigures and measure 1 Å\(^{-1}\) and 150 nm respectively

Component patterns and corresponding loading maps obtained by SVD and ICA analysis of 35 mrad SPED data are shown in Fig. 2b, c, respectively. In either analysis, each feature clearly describes some significant variation in the diffraction peak intensities, although it is worth noting that SVD requires two components to describe the two twins in the wire where ICA needs only one. Both descriptions of the data are mathematically sensible and physical insight can be obtained from the differences between diffraction patterns that are highlighted by negative values in the SVD and ICA component patterns, but neither method produces patterns that can be directly associated with crystal structure. To make use of more conventional diffraction pattern analysis, we seek decomposition constraints that yield learned components which more closely resemble physical diffraction patterns. To this end, we apply NMF and fuzzy clustering.

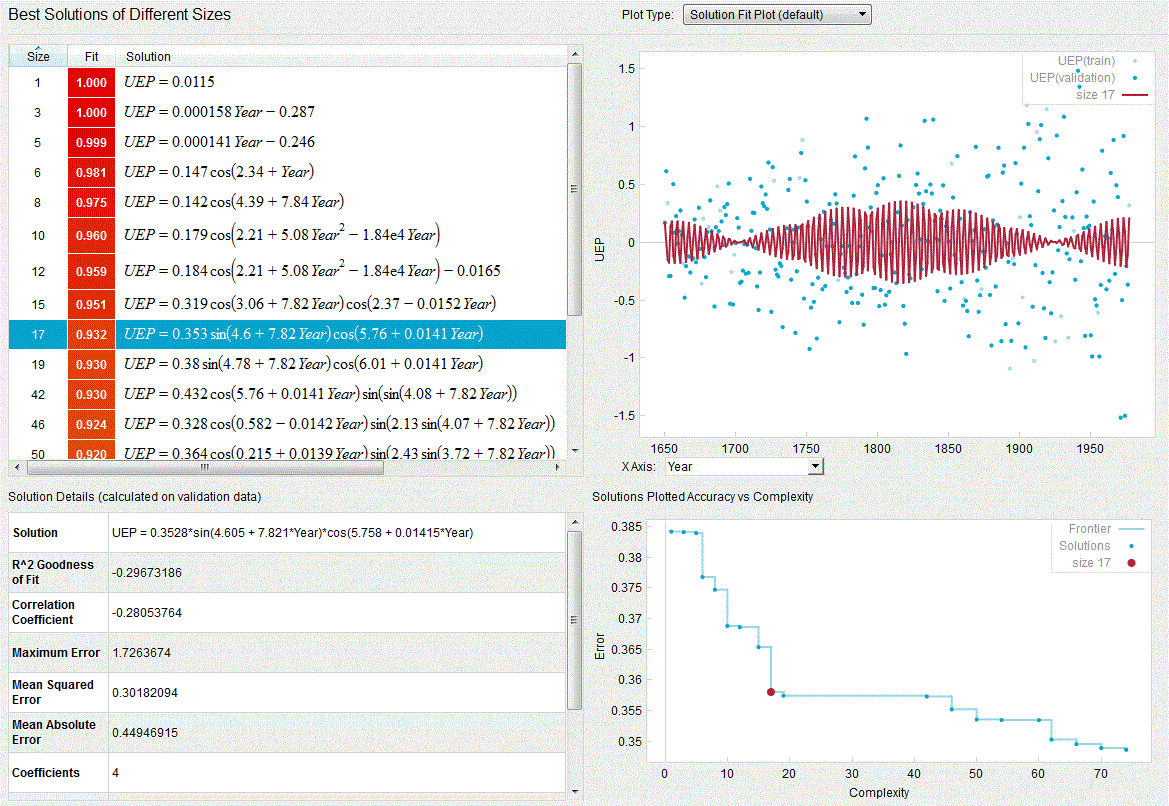

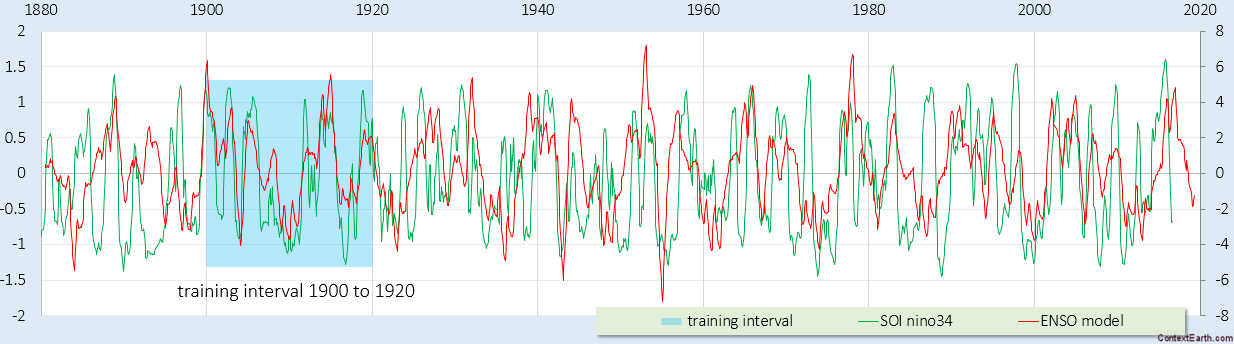

The data were decomposed to three component patterns using NMF, of which, by inspection, one corresponded to the background and two corresponded to the two twinned crystal orientations—the latter shown in Fig. 3a, b. The choice of three components was guided by the intrinsic dimensionality indicated by the SVD analysis and it was further verified that a plot of the NMF reconstruction error (Eq. 1) as a function of increasing number of components showed a similar regime change to the SVD scree plot (see "Availability of data and materials" section at the end of the main text). In the NMF component patterns, white spots are visible, representing intensity lower than background level. We describe these as a pseudo-subtractive contribution of intensity from those locations.

NMF and fuzzy clustering of SPED data from a GaAs nanowire. a, b NMF factors and corresponding loading maps. c Two-dimensional projection of 3 component SVD loadings onto the plane of the second and third loading with cluster membership as contours. d, e Weighted average cluster centre patterns. Pattern and loading scale bars are common to all subfigures and measure 1 Å\(^{-1}\) and 150 nm respectively

In Fig. 3c, SVD loadings for the scan data are shown as a scatter plot, where the axes correspond to the SVD factors. Because the SVD and PCA factors are equivalent, this projection represents the maximum possible variation in the data, and so the maximum discrimination. The loadings associated with each measured pattern are approximately distributed about a triangle in this space. Fuzzy clustering was applied to three SVD components, and the learned memberships are overlaid as contours. Three clusters describe the distribution of the loadings well, and the cluster centres correspond to the background and the twinned crystals as shown in Fig. 3d, e. Both the NMF factors and c-means centers represent the same orientations, but the pseudo-subtractive artefacts in the NMF factors are not present in the cluster centers.

The scatter plot in Fig. 3c also shows that two of the clusters comprise two smaller subclusters. Membership maps for these subclusters reveal that the splitting is due to the underlying carbon film with the subcluster nearer to the background cluster in each case corresponding to the region where the film is present. In the membership maps, there are bright lines along the boundaries between the nanowire and the vacuum, due to overlap between clusters.

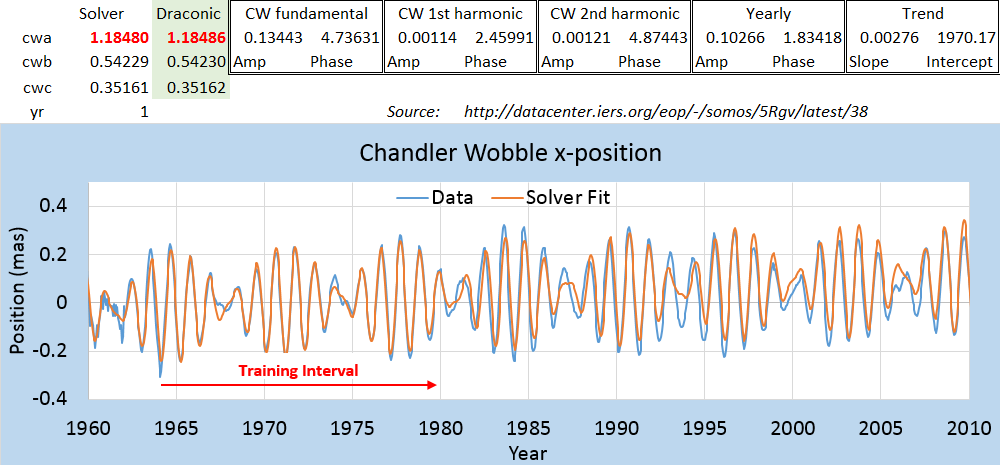

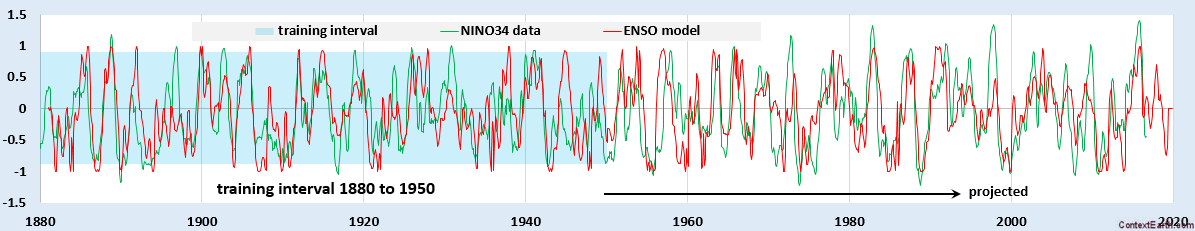

The unmixing of diffraction signals from overlapping crystals was investigated. SPED data with a precession angle of 18 mrad were acquired from a nanowire tilted away from the \([1{\overline{1}}0]\) zone axis by \(\sim 30 ^{\circ }\), such that two microstructural elements overlapped in projection. The overlap of the two crystals was assessed using virtual dark-field imaging, NMF loading maps, and fuzzy clustering membership maps (Fig. 4). The region in which the crystals overlap can be identified by all these methods. The VDF result can be considered a reference and is obtained with minimal processing but requires manual specification of appropriate diffracting conditions for image formation. The NMF and fuzzy clustering approaches are semi-automatic. There is good agreement between the VDF images and NMF loading maps. The boundary appears slightly narrower in the clustering membership map. The NMF loading corresponding to the background component decreases along the profile, which may be related to the underlying carbon film, whilst the cluster membership for the background contains a spurious peak in the overlap region. Finally, the direct beam intensity is much lower in the NMF component patterns than in the true source signals. Our results indicate that either machine learning method is superior to conventional linear decomposition for the analysis of SPED datasets, but some unintuitive and potentially misleading features are present in the learning results.

SPED data from a GaAs nanowire with a twin boundary at an oblique angle to the beam. a Virtual dark-field images formed, using a virtual aperture 4 pixels in diameter, from the circled diffraction spots. b NMF decomposition results. c Clustering results. For b and c the profiles are taken from the line scans indicated, and the blue profile represents the intensity of the background component. Pattern and loading scale bars are common to all subfigures and measure 1 Å\(^{-1}\) and 70 nm respectively

Unsupervised learning methods (SVD, ICA, NMF, and fuzzy clustering) have been explored here in application to SPED data as applied to materials where the region of interest comprises a finite number of significantly different microstructrual elements, i.e. crystals of particular phase and/or orientation. In this case, NMF and clustering may yield a small number of component patterns and weighted average cluster centres that resemble physical electron diffraction patterns. These methods are, therefore, effective for both dimensionality reduction and signal unmixing although we note that neither approach is well suited to situations where there are continuous changes in crystal structure. By contrast, SVD and ICA provide effective means of dimensionality reduction but the components are not readily interpreted using analogous methods to conventional electron diffraction analysis, owing to the presence of many negative values. The SVD and ICA results do nevertheless tend to highlight physically important differences in the diffraction signal across the region of interest. The massive data reduction from many thousands of measured diffraction patterns to a handful of learned component patterns is very useful, as is the unmixing achieved. Artefacts in the learning results were however identified, particularly when applied to achieve signal unmixing, and these are explored further here.

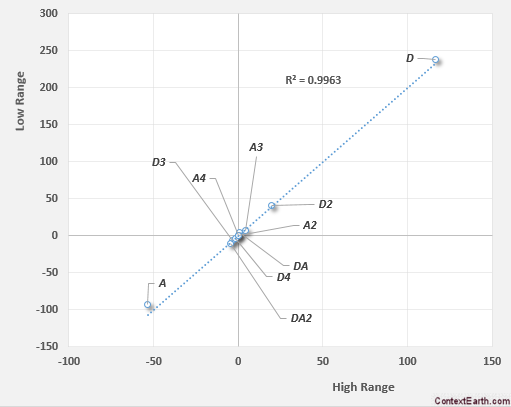

To illustrate artefacts resulting from learning methods, model SPED datasets were constructed based on line scans across inclined boundaries in hypothetical bicrystals. Models (Figs. 5 and 6) were designed to highlight features of two-dimensional diffraction-like signals rather than to reflect the physics of diffraction. These were, therefore, constructed with the strength of the signal directly proportional to thickness of the hypothetical crystal at each point, with no noise, and Gaussian peak profiles.

Construction and decomposition of an idealised model SPED dataset system comprising non-overlapping two-dimensional signals. a Schematic representation of hypothetical bi-crystal. b Ground truth end-member patterns and relative thickness of the two crystals. c Factors and loadings obtained by 2-component NMF. d Cluster centre average patterns and membership maps obtained by fuzzy clustering

Non-independent components. a Expected result for an artificial dataset with two 'phases' with overlapping peaks. b NMF decomposition. c Cluster results. d SVD loadings of the dataset, used for clustering. Each point corresponds to a diffraction pattern in the scan—several are indicated with the dotted lines. Contours indicate the value of membership to the two clusters—refer to "Methods" section "Data clustering"

The model SPED dataset shown in Fig. 5 comprises the linear summation of two square arrays of Gaussians (to emulate diffraction patterns) with no overlap between the two patterns. NMF decomposition exactly recovers the signal profile in this simple case. In contrast, the membership profile obtained by fuzzy clustering, which varies smoothly owing to the use of a Euclidean distance metric, does not match the source signal. The boundary region instead appears qualitatively narrower than the true boundary. Further, the membership value for each of the pure phases is slightly below 100% because the cluster centre is a weighted average position that will only correspond to the end member if there are many more measurements near to it than away from it. A related effect is that the membership value rises at the edge of the boundary region where mixed patterns are closer to the weighted centre than the end members. We conclude that clustering should be used only if the data comprises a significant amount of unmixed signal. In the extreme, cluster analysis cannot separate the contribution from a microstructural feature which has no pure signal in the scan, for example a fully embedded particle. These observations are consistent with the results reported in association with Fig. 4.

A common challenge for signal separation arises when the source signals contain coincident peaks from distinct microstructural elements, as would be the case in SPED data when crystallographic orientation relationships exist between crystals. A model SPED dataset corresponding to this case was constructed and decomposed using NMF and fuzzy clustering (Fig. 6). In this case, the NMF decomposition yields a factor containing all the common reflections and a factor containing the reflections unique to only one end member. Whilst this is interpretable, it is not physical, although it should be noted that this is an extreme example where there is no unique information in one of the source patterns. Nevertheless, it should be expected that the intensity of shared peaks is likely to be unreliable in the learned factors and this was the case for the direct beam in learned component patterns shown in Fig. 4. As a result, components learned through NMF should not be analysed quantitativelyFootnote 3. The weighted average cluster centres resemble the true end members much more closely than the NMF components. The pure phases have a membership of around 99%, rather than 100%, due to the cluster centre being offset from the pure cluster by the mixed data, as shown in Fig. 6d. The observation that memberships extend across all the data (albeit sometimes with vanishingly small values) explains the rise in intensity of the background component in Fig. 4c in the interface region. Such interface regions do not evenly split their membership between their two true constituent clusters, meaning that some membership is attributed to the third cluster, causing a small increase in the membership locally. These issues may potentially be addressed using extensions to the algorithm developed by Rousseeuw et al. [41] or using alternative geometric decompositions such as vertex component analysis [42].

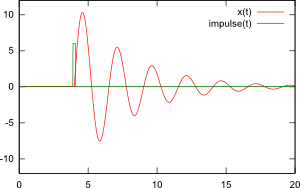

Precession was found empirically to improve machine learning decomposition as discussed above (Fig. 2), so long as the precession angle is large enough. This was attributed primarily to integration through bending of the nanowire. Precession may also result in a more monotonic variation of diffracted intensity with thickness [15] as a result of integration through the Bragg condition. It was, therefore, suggested that precession may improve the approximation that signals from two overlapping crystals may be considered to be combined linearly. To explore this, a multislice simulation of a line scan across a bi-crystal was performed and decomposed using both NMF and fuzzy clustering (Fig. 7). Without precession, both the NMF loadings and the cluster memberships do not increase monotonically with thickness but rather vary significantly in a manner reminiscent of diffracted intensity modulation with thickness due to dynamical scattering. Both the loading profile and the membership profile reach subsidiary minima when the corresponding component is just thicker than half the thickness of the simulation, which corresponds to a thickness of approximately 100 nm and is consistent with the \(2{\bar{2}}0\) extinction length for GaAs of 114 nm. This suggests that the decomposition of the diffraction patterns is highly influenced by a few strong reflections; hence, the variation of the \(2{\bar{2}}0\) reflections with thickness is overwhelming the other structural information encoded in the patterns. The removal of this effect, an essential function of applying precession, is seen: with 10 or 20 mrad precession this intensity modulation is suppressed and the loading or membership maps obtained show a monotonic increase across the inclined boundary. The cluster centres again show intensity corresponding to the opposite end member due to the weighted averaging. Precession is, therefore, beneficial for the application of unsupervised learning algorithms both in reducing signal variations arising from bending, which is a common artefact of specimen preparation, and reducing the impact of dynamical effects on signal mixing.

Unsupervised learning applied to SPED data simulated using dynamical multislice calculations a Original data with a 20 mrad precession angle. b NMF decomposition, in which the loadings have been re-scaled as in Fig. 5. The factors show pseudo-subtractive features, typical of NMF. c Cluster analysis. The high proportion of data points from the boundary means there is information shared between the cluster centres. Without precession, neither method can reproduce the original data structure

Noise and background are both significant in determining the performance of unsupervised learning algorithms. Extensive exploration of these parameters is beyond the scope of this work but we note that the various direct electron detectors that have recently been developed and that are likely to play a significant role in future SPED studies have very different noise properties. Therefore, understanding the optimal noise performance for unsupervised learning may become an important consideration. We also note that the pseudo-subtractive features evident in the NMF decomposition results of Fig. 3 may become more significant in this case and the robustness of fuzzy clustering to this may prove advantageous.

Unsupervised machine learning methods, particularly non-negative matrix factorisation and fuzzy clustering, have been demonstrated here to be capable of learning the significant microstructural features within SPED data. NMF may be considered a true linear unmixing whereas fuzzy clustering, when applied to learn representative patterns, is essentially an automated way of performing a weighted averaging with the weighting learned from the data. The former can struggle to separate coincident signals (including signal shared with a background or noise) whereas the latter implicitly leaves some mixing when a large fraction of measurements are mixed. In both cases, precession electron diffraction patterns are more amenable to unsupervised learning than the static beam equivalents. This is due to the integration through the Bragg condition, resulting from rocking the beam, causing diffracted beam intensities to vary more monotonically with thickness and the integration through small orientation changes due to out of plane bending. This work has, therefore, demonstrated that unsupervised machine learning methods, when applied to SPED data, are capable of reducing the data to the most salient structural features and unmixing signals. The scope for machine learning to reveal nanoscale crystallography will expand rapidly in the coming years with the application of more advanced methods.

The Frobenius norm is defined as \(||\mathbf A ||_{\mathrm {F}} = \sqrt{\sum \limits _{i=1}^{m} \sum \limits _{j=1}^{n} a_{ij}^{2}}\)

The empirical covariance matrix, \(\Sigma = \frac{1}{N}\sum _{i=1}^{N} \mathbf x _{i}{} \mathbf x _{i}^{T}\)

This problem may be mitigated by enforcing a sum-to-one constraint on the loadings learned through NMF during optimisation. See for example [40].

BSS:

blind source separation

FEGTEM:

field-emission gun transmission electron microscope

ICA:

independent component analysis

NNDSVD:

non-negative double singular value decomposition

NMF:

non-negative matrix factorisation

PED:

precession electron diffraction

SPED:

scanning precession electron diffraction

scanning transmission electron microscopy

SVD:

singular value decomposition

VBF:

virtual bright field

VDF:

virtual dark field

Thomas, J.M., Leary, R.K., Eggeman, A.S., Midgley, P.A.: The rapidly changing face of electron microscopy. Chem. Phys. Lett. 631, 103–113 (2015). https://doi.org/10.1016/j.cplett.2015.04.048

Murphy, K.P.: Machine Learning: A Probabilistic Perspective. Adaptive Computation and Machine Learning. MIT Press, Boston (2012)

de la Peña, F., Berger, M.H., Hochepied, J.F., Dynys, F., Stephan, O., Walls, M.: Mapping titanium and tin oxide phases using EELS: an application of independent component analysis. Ultramicroscopy 111(2), 169–176 (2011). https://doi.org/10.1016/J.ULTRAMIC.2010.10.001

Nicoletti, O., de la Peña, F., Leary, R.K., Holland, D.J., Ducati, C., Midgley, P.A.: Three-dimensional imaging of localized surface plasmon resonances of metal nanoparticles. Nature 502(7469), 80–84 (2013). https://doi.org/10.1038/nature12469

Rossouw, D., Burdet, P., de la Peña, F., Ducati, C., Knappett, B.R., Wheatley, A.E.H., Midgley, P.A.: Multicomponent signal unmixing from nanoheterostructures: overcoming the traditional challenges of nanoscale X-ray analysis via machine learning. Nano Lett. 15(4), 2716–2720 (2015). https://doi.org/10.1021/acs.nanolett.5b00449

Rossouw, D., Krakow, R., Saghi, Z., Yeoh, C.S., Burdet, P., Leary, R.K., de la Peña, F., Ducati, C., Rae, C.M., Midgley, P.A.: Blind source separation aided characterization of the \(\gamma\)' strengthening phase in an advanced nickel-based superalloy by spectroscopic 4D electron microscopy. Acta Mater. 107, 229–238 (2016). https://doi.org/10.1016/j.actamat.2016.01.042

Rossouw, D., Knappett, B.R., Wheatley, A.E.H., Midgley, P.A.: A new method for determining the composition of core-shell nanoparticles via dual-EDX+EELS spectrum imaging. Particle Particle Syst. Charact. 33(10), 749–755 (2016). https://doi.org/10.1002/ppsc.201600096

Shiga, M., Tatsumi, K., Muto, S., Tsuda, K., Yamamoto, Y., Mori, T., Tanji, T.: Sparse modeling of EELS and EDX spectral imaging data by nonnegative matrix factorization. Ultramicroscopy 170, 43–59 (2016). https://doi.org/10.1016/J.ULTRAMIC.2016.08.006

Eggeman, A.S., Krakow, R., Midgley, Pa: Scanning precession electron tomography for three-dimensional nanoscale orientation imaging and crystallographic analysis. Nat. Commun. 6, 7267 (2015). https://doi.org/10.1038/ncomms8267

Sunde, J.K., Marioara, C.D., Van Helvoort, A.T.J., Holmestad, R.: The evolution of precipitate crystal structures in an Al-Mg-Si(-Cu) alloy studied by a combined HAADF-STEM and SPED approach. Mater. Charact. 142, 458–469 (2018). https://doi.org/10.1016/j.matchar.2018.05.031

Rauch, E.F., Veron, M.: Coupled microstructural observations and local texture measurements with an automated crystallographic orientation mapping tool attached to a TEM. Materialwissenschaft und Werkstofftechnik 36(10), 552–556 (2005). https://doi.org/10.1002/mawe.200500923

Rauch, E.F., Portillo, J., Nicolopoulos, S., Bultreys, D., Rouvimov, S., Moeck, P.: Automated nanocrystal orientation and phase mapping in the transmission electron microscope on the basis of precession electron diffraction. Zeitschrift für Kristallographie 225(2–3), 103–109 (2010). https://doi.org/10.1524/zkri.2010.1205

Vincent, R., Midgley, P.: Double conical beam-rocking system for measurement of integrated electron diffraction intensities. Ultramicroscopy 53(3), 271–282 (1994). https://doi.org/10.1016/0304-3991(94)90039-6

White, T., Eggeman, A., Midgley, P.: Is precession electron diffraction kinematical? Part I: "Phase-scrambling" multislice simulations. Ultramicroscopy 110(7), 763–770 (2010). https://doi.org/10.1016/J.ULTRAMIC.2009.10.013

Eggeman, A.S., White, T.A., Midgley, P.A.: Is precession electron diffraction kinematical? Part II. A practical method to determine the optimum precession angle. Ultramicroscopy 110(7), 771–777 (2010). https://doi.org/10.1016/j.ultramic.2009.10.012

Sinkler, W., Marks, L.D.: Characteristics of precession electron diffraction intensities from dynamical simulations. Zeitschrift für Kristallographie 225(2–3), 47–55 (2010). https://doi.org/10.1524/zkri.2010.1199

Rauch, E.F., Véron, M.: Virtual dark-field images reconstructed from electron diffraction patterns. Eur. Phys. J. Appl. Phys. 66(1), 10,701 (2014). https://doi.org/10.1051/epjap/2014130556

Gammer, C., Burak Ozdol, V., Liebscher, C.H., Minor, A.M.: Diffraction contrast imaging using virtual apertures. Ultramicroscopy 155, 1–10 (2015). https://doi.org/10.1016/J.ULTRAMIC.2015.03.015

Rouviere, J.L., Béché, A., Martin, Y., Denneulin, T., Cooper, D.: Improved strain precision with high spatial resolution using nanobeam precession electron diffraction. Appl. Phys. Lett. 103(24), 241913 (2013). https://doi.org/10.1063/1.4829154

Moeck, P., Rouvimov, S., Rauch, E.F., Véron, M., Kirmse, H., Häusler, I., Neumann, W., Bultreys, D., Maniette, Y., Nicolopoulos, S.: High spatial resolution semi-automatic crystallite orientation and phase mapping of nanocrystals in transmission electron microscopes. Crys. Res. Technol. 46(6), 589–606 (2011). https://doi.org/10.1002/crat.201000676

Kelly, A., Groves, G., Kidd, P.: Crystallography and Crystal Defects. Wiley, Chichester (2000)

Munshi, A.M., Dheeraj, D.L., Fauske, V.T., Kim, D.C., Huh, J., Reinertsen, J.F., Ahtapodov, L., Lee, K.D., Heidari, B., van Helvoort, A.T.J., Fimland, B.O., Weman, H.: Position-controlled uniform GaAs nanowires on silicon using nanoimprint lithography. Nano Lett. 14(2), 960–966 (2014). https://doi.org/10.1021/nl404376m

Eggeman, A., London, A., Midgley, P.: Ultrafast electron diffraction pattern simulations using gpu technology. Applications to lattice vibrations. Ultramicroscopy 134, 44–47 (2013). https://doi.org/10.1016/j.ultramic.2013.05.013

Palatinus, L., Jacob, D., Cuvillier, P., Klementová, M., Sinkler, W., Marks, L.D.: IUCr: structure refinement from precession electron diffraction data. Acta Crystallogr. Sect. A Found. Crystallogr. 69(2), 171–188 (2013). https://doi.org/10.1107/S010876731204946X

Hoyer, P.O.: Non-negative matrix factorization with sparseness constraints. J. Mach. Learn. Res. 5, 1457–1469 (2004)

Jolliffe, I.: Principal component analysis. In: International Encyclopedia of Statistical Science, pp. 1094–1096. Springer , Berlin (2011)

Hyvärinen, A., Karhunen, J., Oja, E.: Independent Component Analysis. Wiley, New York (2001)

Lee, D.D., Seung, H.S.: Learning the parts of objects by non-negative matrix factorization. Nature 401(6755), 788–91 (1999). https://doi.org/10.1038/44565

de la Pena, F., Ostasevicius, T., Tonaas Fauske, V., Burdet, P., Jokubauskas, P., Nord, M., Sarahan, M., Prestat, E., Johnstone, D.N., Taillon, J., Jan Caron, J., Furnival, T., MacArthur, K.E., Eljarrat, A., Mazzucco, S., Migunov, V., Aarholt, T., Walls, M., Winkler, F., Donval, G., Martineau, B., Garmannslund, A., Zagonel, L.F., Iyengar, I.: Electron Microscopy (Big and Small) Data Analysis With the Open Source Software Package HyperSpy. Microsc. Microanal. 23(S1), 214–215 (2017). https://doi.org/10.1017/S1431927617001751

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., Duchesnay, É.: Scikit-learn: machine learning in python. J. Mach. Learn. Res. 12(Oct), 2825–2830 (2011)

Shlens, J.: A tutorial on principal component analysis. CoRR (2014). arXiv:abs/1404.1100

Bishop, C.: Pattern Recognition and Machine Learning. Information Science and Statistics. Springer, New York (2006)

Boutsidis, C., Gallopoulos, E.: Svd based initialization: a head start for nonnegative matrix factorization. Pattern Recogn. 41(4), 1350–1362 (2008). https://doi.org/10.1016/j.patcog.2007.09.010

Everitt, B., Landau, S., Leese, M.: Clust. Anal. Wiley, Chichester (2009)

Bezdek, J.C., Ehrlich, R., Full, W.: FCM: the fuzzy c-means clustering algorithm. Comput. Geosci. 10(2–3), 191–203 (1984). https://doi.org/10.1016/0098-3004(84)90020-7

Gustafson, D., Kessel, W.: Fuzzy clustering with a fuzzy covariance matrix. In: 1978 IEEE conference on decision and control including the 17th symposium on adaptive processes, pp. 761–766. IEEE, San Diego (1978). https://doi.org/10.1109/CDC.1978.268028

Marimont, R.B., Shapiro, M.B.: Nearest neighbour searches and the curse of dimensionality. IMA J. Appl. Math. 24(1), 59–70 (1979). https://doi.org/10.1093/imamat/24.1.59

Aggarwal, C.C., Hinneburg, A., Keim, D.A.: On the surprising behavior of distance metric in high-dimensional space (2002)

Rencher, A.: Methods of Multivariate Analysis. Wiley Series in Probability and Statistics. Wiley, Hoboken (2002)

Kannan, R., Ievlev, A.V., Laanait, N., Ziatdinov, M.A., Vasudevan, R.K., Jesse, S., Kalinin, S.V.: Deep data analysis via physically constrained linear unmixing: universal framework, domain examples, and a community-wide platform. Adv. Struct. Chem. Imaging 4(1), 6 (2018). https://doi.org/10.1186/s40679-018-0055-8

Rousseeuw, P.J., Trauwaertb, E., Kaufman, L.: Fuzzy clustering with high contrast. J. Comput. Appl. Math. 0427(95), 8–9 (1995)

Spiegelberg, J., Rusz, J., Thersleff, T., Pelckmans, K.: Analysis of electron energy loss spectroscopy data using geometric extraction methods. Ultramicroscopy 174, 14–26 (2017). https://doi.org/10.1016/J.ULTRAMIC.2016.12.014

BHM, DNJ, ASE, and PAM proposed the investigation. DNJ, ASE, and ATJvH performed the SPED experiments, ASE provided the multislice simulations, and BHM implemented the c-means algorithm. The data analysis was undertaken by BHM and DNJ who also prepared the manuscript, with oversight and critical contributions from ASE, ATJvH, and PAM. All authors read and approved the final manuscript.

Acknowlegements

Prof. Weman and Fimland of IES at NTNU are acknowledged for supplying the nanowire samples.

Data used in this work has been made freely available to download at https://doi.org/10.17863/CAM.26432.

The Python 3 code to perform the analysis has also been made available, at https://doi.org/10.17863/CAM.26444.

The authors acknowledge financial support from: The Royal Society (Grant RG140453; UF130286); the Seventh Framework Programme of the European Commission: ESTEEM2, contract number 312483; the European Research Council under the European Union's Seventh Framework Programme (FP7/2007-2013)/ERC grant agreement 291522-3DIMAGE; the University of Cambridge and the Cambridge NanoDTC; the EPSRC (Grant no. EP/R008779/1).

Department of Materials Science and Metallurgy, University of Cambridge, 27 Charles Babbage Road, Cambridge, CB3 0FS, UK

Ben H. Martineau, Duncan N. Johnstone & Paul A. Midgley

The School of Materials, The University of Manchester, Oxford Road, Manchester, M13 9PL, UK

Alexander S. Eggeman

Department of Physics, Norwegian University of Science and Technology, Hogskolering 5, 7491, Trondheim, Norway

Antonius T. J. van Helvoort

Ben H. Martineau

Duncan N. Johnstone

Paul A. Midgley

Correspondence to Ben H. Martineau.

Martineau, B.H., Johnstone, D.N., van Helvoort, A.T.J. et al. Unsupervised machine learning applied to scanning precession electron diffraction data. Adv Struct Chem Imag 5, 3 (2019). https://doi.org/10.1186/s40679-019-0063-3

Multivariate analysis

Scanning electron diffraction | CommonCrawl |

Criterion predicting the generation of microdamages in the filled elastomers

Alexander Konstantinovich Sokolov1,

Oleg Konstantinovich Garishin1 and

Alexander L'vovich Svistkov1Email author

Mechanics of Advanced Materials and Modern Processes20184:7

Received: 30 June 2018

Accepted: 6 December 2018

Incorporation of active fillers to rubber markedly improves the strength properties and deformation characteristics of such materials. One possible explanation of this phenomenon is suggested in this work. It is based on the fact that for large deformations the binder (high-elastic, cross-linked elastomer) in the gaps between the filler particles (carbon black) is in a state close to the uniaxial extension. The greater part of polymer molecular chains are oriented along the loading axis in this situation. Therefore it can be assumed that the material in this state has a higher strength compared to other ones at the same intensity of deformation. In this paper, a new strength criterion is proposed, and a few examples are given to illustrate its possible use. It is shown that microscopic ruptures that occur during materials deformation happen not in the space between filler particles but at some distance around from it without breaking particle "interactions" through these gaps. The verification of this approach in modeling the stretching of a sample from an unfilled elastomer showed that in this case it works in full accordance with the classical strength criteria, where the presence in the material of a small defect (microscopic incision) leads to the appearance and catastrophic growth of the macrocrack.

Damage generation

Nanocomposite

Finite deformations

Computational modeling

Fracture criterion

Elastomeric nanocomposites contains a highly elastic rubber matrix, where rigid grain nanoparticles or aggregates of nanoparticles are dispersed. Over the past years, a lot of practical experience has been gained in creating such materials for a variety of applications. Elastomers in which nanoparticles are used as filler are characterized by increased strength and ultimate deformation (strain at break). However, the reasons for such improvement of their mechanical characteristics are still the subject of discussions among materials experts. Since the beginning of the 20-th century, it has been well established that the reinforcement of rubbers with carbon black (20–30% by volume) significantly improves their operational characteristics. In particular, such materials possess enhanced rigidity; their tensile strength and ultimate strains increase by 5–15 times and 2–4 times, respectively. Intensive study of the mechanical properties of elastomeric nanocomposites in relation to the type of filler and its concentration and manufacturing technology is still in progress. An example are the works related to the study of the elastomers properties filled with carbon black, carbon nanotubes, nanodiamonds, various mineral particles (montmorillonite, palygorskite, schungite etc.) (Rodgers & Waddel, 2013; Jovanovic et al., 2013; Le et al., 2015; Lvov et al., 2016; Mokhireva et al., 2017; He et al., 2015; Huili et al., 2017; Stöckelhuber et al., 2011; Garishin et al., 2017). A part from numerous experimental investigations, there are many theoretical works devoted to structural modeling of the physical-mechanical properties of materials, which takes into account the characteristic features of the internal structure and processes at micro- and nanolevels (Garishin & Moshev, 2005; Reese, 2003; Österlöf et al., 2015; Ivaneiko et al., 2016; Raghunath et al., 2016; Plagge & Klüppel, 2017; Svistkov et al., 2016; Svistkov, 2010).

An important feature of elastomeric composites is the ability to change their mechanical properties (softening) as a result of preliminary deformation (the Mullins-Patrikeev effect) (Svistkov, 2010; Patrikeev, 1946; Mullins, 1947; Mullins & Tobin, 1965; Mullins, 1986; Diani et al., 2009). This feature can significantly influence the mechanical behavior of the products made of filled elastomer (Sokolov et al., 2016; Sokolov et al., 2018). To date, the Mullins effect is an object of intensive theoretical and experimental study. In the literature there is still no single settled opinion about its nature.

It has been found that, the anisotropic properties can also be formed in filled elastomers under softening (Govindjee & Simo, 1991; Machado et al., 2012). The anisotropy features are clearly seen in the samples the second loading of which is executed at some angle to the direction of the force applied during the first loading (Machado et al., 2014). Some authors try to describe these effects using purely phenomenological models (without specifying the physical meaning of the internal variables used) (Ragni et al., 2018; Itskov et al., 2010). A model based on the features of the interaction of polymer chains with filler particles is proposed in (Dorfmann & Pancheri, 2012). In particular, it is assumed that the anisotropic softening of the material during its cyclic loading is due to the peculiarities of interaction of polymer network with filler particles, including breaking and mutual slipping of macromolecules segments.

However, despite the undoubted progress in the analysis of possible mechanisms responsible for the formation of properties of nanofilled elastomers, there are still ambiguities to be clarified. An increase in strength and the appearance of anisotropic properties after the first deformation can be attributed to the existence of micro and nanostrands in its structure. Their existence is confirmed by experimental studies (Marckmann et al., 2016; Reichert et al., 1993; Le Cam et al., 2004; Watabe et al., 2005; Beurrot et al., 2010; Marco et al., 2010). In (Matos et al., 2012), based on the results of experimental studies of the carbon-filled rubber structure (using electron microtomography) and computer simulation, it was shown that the macrodeformation of about 15% can cause significant microdeformation of the matrix of 100 and more percent in the zones between the agglomerates of carbon black particles. Investigations of the nanostructure of filled rubbers in a stretched (up to pre-rupture) state by the atomic force microscopy methods (AFM) demonstrate the formation of a fibrous texture between filler particles (Morozov et al., 2012). Tomograms of the microstructure of rubber (electron microscopy), obtained in (Akutagava et al., 2008) also show strands and strand-linked aggregates of carbon black particles.

The results of experimental studies indicate that the fraction of polymer is not extractable from uncured filled rubber compounds by a good solvent of the gum elastomer. A polymer layer remains on the surface of the filler particles remains, called "bound rubber". The simplest and most obvious explanation for this fact is the phenomenon of adsorption of polymer chains on the surface of particles and the occurrence of a strong bond between the polymer and the particles. So, the basis of the Meissner theory and its further refinements (Meissner, 1974; Meissner, 1993; Karásek & Meissner, 1994; Karásek & Meissner, 1998) is the idea of random adsorption of portions of polymer chains on the reactive sites which are assumed to exist on the surface of filler particles. In this approach, the filler particles are considered as a polyfunctional crosslinking agent for the polymer chains. Carbon black particles are assembled into aggregates with a strong bond that is difficult to destroy. These aggregates have dimensions of 100 to 300 nm. Therefore, many authors, when constructing models of the behavior of filled elastomers, consider the state of the polymer around particle aggregates within the framework of continuum mechanics. It is reasonable to consider nanofiller not as multifunctional stitching of polymer chains, but as nanoinclusions of a composite material.