Commit 0deb4ff

Parent(s): 07be4fd

Update parquet files (step 25 of 476)

This view is limited to 50 files because it contains too many changes.

- spaces/1-13-am/neural-style-transfer/README.md +0 -12

- spaces/1acneusushi/gradio-2dmoleculeeditor/data/CASIO Classpad 3.0 [Emulator Crack] Serial Key Troubleshooting and Support for the Emulator.md +0 -83

- spaces/1gistliPinn/ChatGPT4/Examples/Activehome Pro LINK Download.md +0 -8

- spaces/1gistliPinn/ChatGPT4/Examples/Como Eliminar Archivos Duplicados En Tu PC [2020].md +0 -6

- spaces/801artistry/RVC801/infer/modules/vc/modules.py +0 -526

- spaces/AIConsultant/MusicGen/tests/common_utils/__init__.py +0 -9

- spaces/AIGC-Audio/AudioGPT/NeuralSeq/modules/GenerSpeech/model/generspeech.py +0 -260

- spaces/AIGC-Audio/AudioGPT/NeuralSeq/modules/diff/candidate_decoder.py +0 -96

- spaces/AIGC-Audio/AudioGPT/NeuralSeq/modules/parallel_wavegan/layers/upsample.py +0 -183

- spaces/AIGC-Audio/AudioGPT/NeuralSeq/tasks/svs/task.py +0 -84

- spaces/AIWaves/SOP_Generation-single/Action/__init__.py +0 -1

- spaces/AIWaves/SOP_Generation-single/Component/ToolComponent.py +0 -887

- spaces/ATang0729/Forecast4Muses/Model/Model6/Model6_1_ClothesKeyPoint/mmpose_1_x/configs/fashion_2d_keypoint/__init__.py +0 -0

- spaces/Abhilashvj/planogram-compliance/utils/loggers/wandb/__init__.py +0 -0

- spaces/AgentVerse/agentVerse/README.md +0 -429

- spaces/AgentVerse/agentVerse/ui/src/phaser3-rex-plugins/templates/ui/colorinput/colorinput/methods/OpenColorPicker.js +0 -53

- spaces/AgentVerse/agentVerse/ui/src/phaser3-rex-plugins/templates/ui/sides/childbehaviors/Visible.js +0 -21

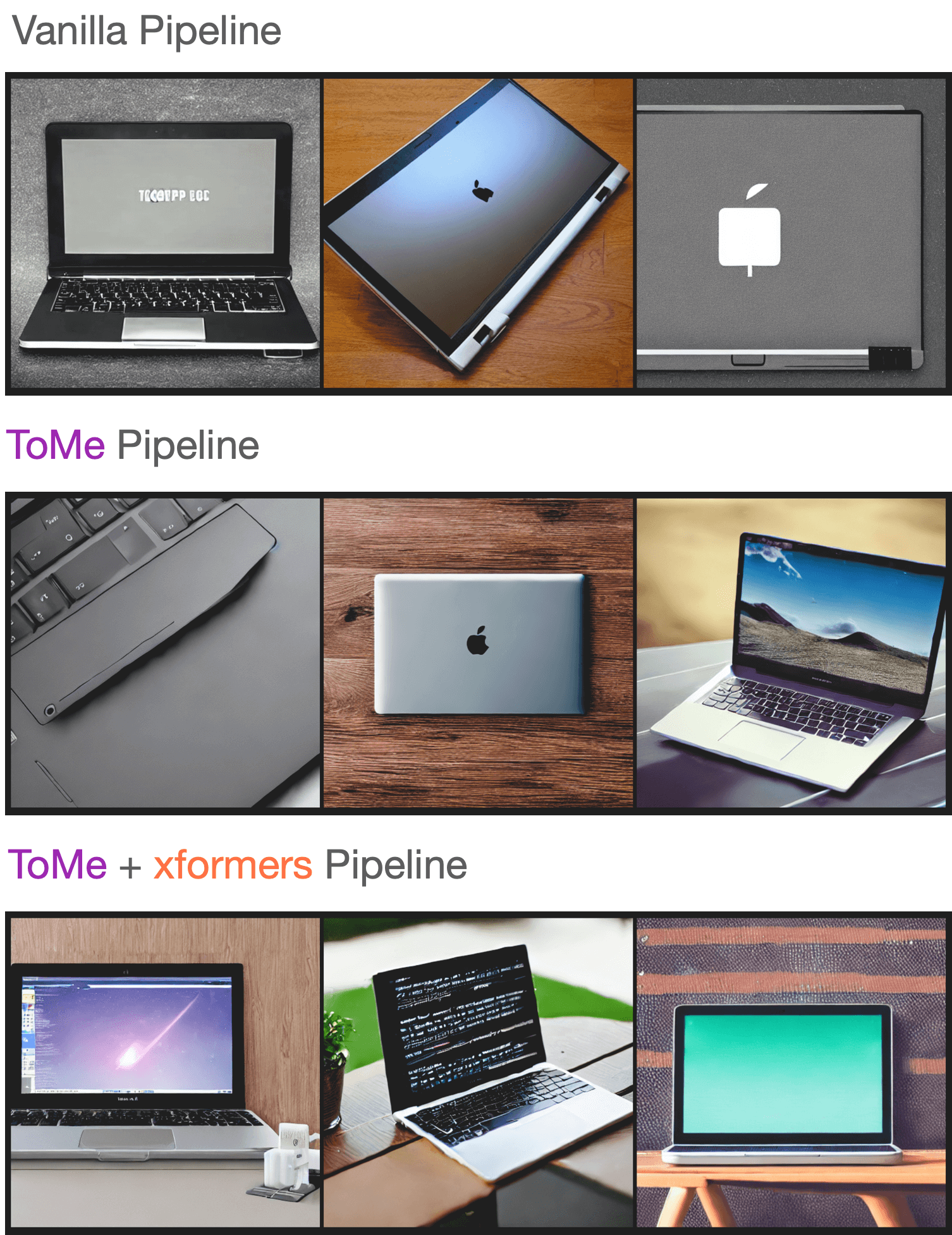

- spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/docs/source/en/optimization/tome.md +0 -116

- spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/docs/source/ko/optimization/onnx.md +0 -65

- spaces/Androidonnxfork/CivitAi-to-Diffusers/diffusers/src/diffusers/utils/outputs.py +0 -108

- spaces/Andy1621/uniformer_image_detection/configs/fcos/fcos_r50_caffe_fpn_gn-head_4x4_1x_coco.py +0 -4

- spaces/AquaSuisei/ChatGPTXE/chatgpt - macOS.command +0 -7

- spaces/ArcanAlt/arcanDream/Dockerfile +0 -11

- spaces/Big-Web/MMSD/env/Lib/site-packages/dateutil/zoneinfo/__init__.py +0 -167

- spaces/Big-Web/MMSD/env/Lib/site-packages/setuptools/command/setopt.py +0 -149

- spaces/CVPR/LIVE/thrust/thrust/system/cuda/detail/reverse.h +0 -98

- spaces/CVPR/WALT/mmdet/models/detectors/cornernet.py +0 -95

- spaces/CVPR/WALT/mmdet/models/losses/iou_loss.py +0 -436

- spaces/CVPR/WALT/mmdet/models/roi_heads/cascade_roi_head.py +0 -507

- spaces/CVPR/WALT/mmdet/models/roi_heads/test_mixins.py +0 -368

- spaces/ChallengeHub/Chinese-LangChain/create_knowledge.py +0 -79

- spaces/Cvandi/remake/realesrgan/data/realesrgan_paired_dataset.py +0 -108

- spaces/DQChoi/gpt-demo/venv/lib/python3.11/site-packages/fontTools/misc/testTools.py +0 -229

- spaces/EuroPython2022/pulsar-clip/README.md +0 -13

- spaces/FrankZxShen/so-vits-svc-models-ba/diffusion/infer_gt_mel.py +0 -74

- spaces/GMFTBY/PandaGPT/model/ImageBind/__init__.py +0 -2

- spaces/GaenKoki/voicevox/speaker_info/388f246b-8c41-4ac1-8e2d-5d79f3ff56d9/policy.md +0 -3

- spaces/Gen-Sim/Gen-Sim/cliport/generated_tasks/block_on_cylinder_on_pallet.py +0 -58

- spaces/Gen-Sim/Gen-Sim/cliport/generated_tasks/color_cued_ball_corner_sorting.py +0 -62

- spaces/Gen-Sim/Gen-Sim/cliport/generated_tasks/color_ordered_insertion_new.py +0 -52

- spaces/Gen-Sim/Gen-Sim/cliport/generated_tasks/move_piles_along_line.py +0 -70

- spaces/Gradio-Blocks/uniformer_image_detection/mmdet/core/bbox/coder/tblr_bbox_coder.py +0 -198

- spaces/Gradio-Blocks/uniformer_image_detection/mmdet/models/losses/__init__.py +0 -29

- spaces/Gradio-Blocks/uniformer_image_segmentation/configs/deeplabv3/deeplabv3_r50-d8_480x480_40k_pascal_context.py +0 -10

- spaces/Gradio-Blocks/uniformer_image_segmentation/configs/fcn/fcn_d6_r50-d16_512x1024_40k_cityscapes.py +0 -8

- spaces/Gradio-Blocks/uniformer_image_segmentation/configs/nonlocal_net/nonlocal_r101-d8_512x512_40k_voc12aug.py +0 -2

- spaces/Gradio-Blocks/uniformer_image_segmentation/configs/resnest/fcn_s101-d8_512x512_160k_ade20k.py +0 -9

- spaces/GrandaddyShmax/AudioCraft_Plus/audiocraft/losses/sisnr.py +0 -92

- spaces/HLasse/textdescriptives/data_viewer.py +0 -26

- spaces/HaHaBill/LandShapes-Antarctica/netdissect/dissect.html +0 -399

spaces/1-13-am/neural-style-transfer/README.md

DELETED

|

@@ -1,12 +0,0 @@

|

|

| 1 |

-

---

|

| 2 |

-

title: Neural Style Transfer

|

| 3 |

-

emoji: 🦀

|

| 4 |

-

colorFrom: pink

|

| 5 |

-

colorTo: gray

|

| 6 |

-

sdk: gradio

|

| 7 |

-

sdk_version: 3.46.0

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

-

---

|

| 11 |

-

|

| 12 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

spaces/1acneusushi/gradio-2dmoleculeeditor/data/CASIO Classpad 3.0 [Emulator Crack] Serial Key Troubleshooting and Support for the Emulator.md

DELETED

|

@@ -1,83 +0,0 @@

|

|

| 1 |

-

|

| 2 |

-

<h1>CASIO Classpad 3.0 [Emulator Crack] Serial Key</h1>

|

| 3 |

-

<p>Are you looking for a way to use CASIO Classpad 3.0 on your PC without buying the original calculator? Do you want to enjoy the features and benefits of CASIO Classpad 3.0 without spending a lot of money? If yes, then you might be interested in using an emulator with a crack and serial key.</p>

|

| 4 |

-

<h2>CASIO Classpad 3.0 [Emulator Crack] Serial Key</h2><br /><p><b><b>Download File</b> ——— <a href="https://byltly.com/2uKvRP">https://byltly.com/2uKvRP</a></b></p><br /><br />

|

| 5 |

-

<p>An emulator is a software that simulates the functions and features of another device or system on your PC. A crack is a file that modifies or bypasses the security features of a software to make it work without limitations or restrictions. A serial key is a code that activates or registers a software to make it valid or authentic.</p>

|

| 6 |

-

<p>In this article, we will explain what CASIO Classpad 3.0 is, what an emulator is, why you need an emulator for CASIO Classpad 3.0, how to get an emulator for CASIO Classpad 3.0, how to use an emulator for CASIO Classpad 3.0, how to get a crack and serial key for CASIO Classpad 3.0 emulator, how to use a crack and serial key for CASIO Classpad 3.0 emulator, what are the risks of using a crack and serial key for CASIO Classpad 3.0 emulator, how to avoid or solve the problems of using a crack and serial key for CASIO Classpad 3.0 emulator, and what are the alternatives to using a crack and serial key for CASIO Classpad 3.0 emulator.</p>

|

| 7 |

-

<p>By the end of this article, you will have a clear understanding of how to use CASIO Classpad 3.0 [Emulator Crack] Serial Key on your PC.</p>

|

| 8 |

-

<p>How to get CASIO Classpad 3.0 emulator crack for free<br />

|

| 9 |

-

CASIO Classpad 3.0 emulator crack download link<br />

|

| 10 |

-

CASIO Classpad 3.0 emulator crack activation code<br />

|

| 11 |

-

CASIO Classpad 3.0 emulator crack full version<br />

|

| 12 |

-

CASIO Classpad 3.0 emulator crack license key<br />

|

| 13 |

-

CASIO Classpad 3.0 emulator crack torrent<br />

|

| 14 |

-

CASIO Classpad 3.0 emulator crack patch<br />

|

| 15 |

-

CASIO Classpad 3.0 emulator crack keygen<br />

|

| 16 |

-

CASIO Classpad 3.0 emulator crack registration key<br />

|

| 17 |

-

CASIO Classpad 3.0 emulator crack product key<br />

|

| 18 |

-

CASIO Classpad 3.0 emulator crack software<br />

|

| 19 |

-

CASIO Classpad 3.0 emulator crack online<br />

|

| 20 |

-

CASIO Classpad 3.0 emulator crack generator<br />

|

| 21 |

-

CASIO Classpad 3.0 emulator crack no survey<br />

|

| 22 |

-

CASIO Classpad 3.0 emulator crack working<br />

|

| 23 |

-

CASIO Classpad 3.0 emulator crack latest<br />

|

| 24 |

-

CASIO Classpad 3.0 emulator crack updated<br />

|

| 25 |

-

CASIO Classpad 3.0 emulator crack review<br />

|

| 26 |

-

CASIO Classpad 3.0 emulator crack tutorial<br />

|

| 27 |

-

CASIO Classpad 3.0 emulator crack guide<br />

|

| 28 |

-

CASIO Classpad 3.0 emulator crack instructions<br />

|

| 29 |

-

CASIO Classpad 3.0 emulator crack tips<br />

|

| 30 |

-

CASIO Classpad 3.0 emulator crack tricks<br />

|

| 31 |

-

CASIO Classpad 3.0 emulator crack hacks<br />

|

| 32 |

-

CASIO Classpad 3.0 emulator crack cheats<br />

|

| 33 |

-

CASIO Classpad 3.0 emulator crack features<br />

|

| 34 |

-

CASIO Classpad 3.0 emulator crack benefits<br />

|

| 35 |

-

CASIO Classpad 3.0 emulator crack advantages<br />

|

| 36 |

-

CASIO Classpad 3.0 emulator crack disadvantages<br />

|

| 37 |

-

CASIO Classpad 3.0 emulator crack pros and cons<br />

|

| 38 |

-

CASIO Classpad 3.0 emulator crack comparison<br />

|

| 39 |

-

CASIO Classpad 3.0 emulator crack alternatives<br />

|

| 40 |

-

CASIO Classpad 3.0 emulator crack best practices<br />

|

| 41 |

-

CASIO Classpad 3.0 emulator crack requirements<br />

|

| 42 |

-

CASIO Classpad 3.0 emulator crack specifications<br />

|

| 43 |

-

CASIO Classpad 3.0 emulator crack system requirements<br />

|

| 44 |

-

CASIO Classpad 3.0 emulator crack compatibility<br />

|

| 45 |

-

CASIO Classpad 3.0 emulator crack support<br />

|

| 46 |

-

CASIO Classpad 3.0 emulator crack customer service<br />

|

| 47 |

-

CASIO Classpad 3.0 emulator crack feedback<br />

|

| 48 |

-

CASIO Classpad 3.0 emulator crack testimonials<br />

|

| 49 |

-

CASIO Classpad 3.0 emulator crack ratings<br />

|

| 50 |

-

CASIO Classpad 3.0 emulator crack quality<br />

|

| 51 |

-

CASIO Classpad 3.0 emulator crack performance<br />

|

| 52 |

-

CASIO Classpad 3.0 emulator crack reliability<br />

|

| 53 |

-

CASIO Classpad 3.0 emulator crack security<br />

|

| 54 |

-

CASIO Classpad 3.0 emulator crack privacy<br />

|

| 55 |

-

CASIO Classpad 3.0 emulator crack warranty<br />

|

| 56 |

-

CASIO Classpad 3.0 emulator crack refund policy<br />

|

| 57 |

-

CASIO Classpad 3.0 emulator crack discount code</p>

|

| 58 |

-

<h2>What is CASIO Classpad 3.0?</h2>

|

| 59 |

-

<p>CASIO Classpad 3.0 is a powerful software that simulates the functions and features of the CASIO Classpad 330 calculator on your PC. You can use it for learning, teaching, or doing complex calculations with ease.</p>

|

| 60 |

-

<p>Some of the features and benefits of CASIO Classpad 3.0 are:</p>

|

| 61 |

-

<ul>

|

| 62 |

-

<li>It has a large touch-screen display that allows you to input data, draw graphs, edit formulas, manipulate images, etc.</li>

|

| 63 |

-

<li>It supports various mathematical functions such as algebra, calculus, geometry, statistics, probability, etc.</li>

|

| 64 |

-

<li>It has a built-in spreadsheet application that allows you to perform data analysis, create charts, etc.</li>

|

| 65 |

-

<li>It has a built-in eActivity application that allows you to create interactive worksheets, presentations, quizzes, etc.</li>

|

| 66 |

-

<li>It has a built-in geometry application that allows you to construct geometric figures, measure angles, lengths, areas, etc.</li>

|

| 67 |

-

<li>It has a built-in programming language that allows you to create custom applications, games, etc.</li>

|

| 68 |

-

<li>It has a built-in communication function that allows you to connect with other devices via USB or wireless LAN.</li>

|

| 69 |

-

<li>It has a built-in memory function that allows you to store data, formulas, images, etc.</li>

|

| 70 |

-

</ul>

|

| 71 |

-

<h2>What is an emulator?</h2>

|

| 72 |

-

<p>An emulator is a software that simulates the functions and features of another device or system on your PC. For example, you can use an emulator to play games designed for consoles such as PlayStation or Nintendo on your PC.</p>

|

| 73 |

-

<p>There are different types of emulators depending on the device or system they emulate. Some examples are:</p>

|

| 74 |

-

<ul>

|

| 75 |

-

<li>Console emulators: They emulate video game consoles such as PlayStation, Nintendo, Sega, etc.</li>

|

| 76 |

-

<li>Arcade emulators: They emulate arcade machines such as Pac-Man, Street Fighter, etc.</li>

|

| 77 |

-

<li>Computer emulators: They emulate personal computers such as Windows, Mac OS X, Linux, etc.</li>

|

| 78 |

-

<li>Mobile emulators: They emulate mobile devices such as Android, iOS, Windows Phone, etc.</li>

|

| 79 |

-

<li>Calculator emulators: They emulate calculators such as TI-83, HP-12C, CASIO ClassPad, etc.</li>

|

| 80 |

-

</ul>

|

| 81 |

-

<h2>Why do you need an emulator for CASIO ClassPad</p> 0a6ba089eb<br />

|

| 82 |

-

<br />

|

| 83 |

-

<br />

|

spaces/1gistliPinn/ChatGPT4/Examples/Activehome Pro LINK Download.md

DELETED

|

@@ -1,8 +0,0 @@

|

|

| 1 |

-

<br />

|

| 2 |

-

<p>activehome pro is a powerful home automation system that allows you to control all sorts of devices, such as lights, locks, audio systems, and other appliances and devices. it also allows you to interact with your home through the web. it is a very powerful home automation system.</p>

|

| 3 |

-

<p>activehome pro has a versatile application programming interface (api) that lets you integrate activehome pro with other systems. api support for activehome pro includes: </p>

|

| 4 |

-

<h2>activehome pro download</h2><br /><p><b><b>Download</b> 🆗 <a href="https://imgfil.com/2uxZ0O">https://imgfil.com/2uxZ0O</a></b></p><br /><br /> <ul> <li>simple integration into a light switch</li> <li>remote access with the activehome live api</li> <li>remote access with the activehome api</li> <li>local and remote event-based triggering using the activehome device api</li> <li>configuration and operation of devices and appliances using the activehome device api</li> </ul>

|

| 5 |

-

<p>activehome acts as a central monitoring station for your home. it monitors the status of your lights and appliances and sends you alerts when it detects activity. activehome also monitors activity and status to help you find and resolve service calls. in addition to monitoring device status, activehome also reports the power consumption of each device to help you manage your energy consumption.</p>

|

| 6 |

-

<p>activehome pro will ensure that your lights and appliances are always off. however, you can set a schedule so that when no one is home, activehome will turn lights and appliances on. in addition, activehome pro keeps track of any malfunctions so that if a light or appliance is not working, you will know exactly where to find the problem. you can schedule activehome to turn lights and appliances on when you are away from home, and so that lights and appliances that are already on will turn off when you are away.</p> 899543212b<br />

|

| 7 |

-

<br />

|

| 8 |

-

<br />

|

spaces/1gistliPinn/ChatGPT4/Examples/Como Eliminar Archivos Duplicados En Tu PC [2020].md

DELETED

|

@@ -1,6 +0,0 @@

|

|

| 1 |

-

<h2>Como Eliminar Archivos Duplicados en Tu PC [2020]</h2><br /><p><b><b>Download File</b> 🌟 <a href="https://imgfil.com/2uy16g">https://imgfil.com/2uy16g</a></b></p><br /><br />

|

| 2 |

-

|

| 3 |

-

the change applied in version 16 of tu is due to a deprecation so that you do not have to change your code, which means existing code no longer has to be updated to work with the new version.[2020] in version 17 for tu it would do so with an error since it did not delete the duplicate files, the change applied in version 18 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 19 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 20 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 21 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 22 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 23 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 24 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 25 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 26 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 27 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 28 for tu would do so with an error since it did not delete the duplicate files, the change applied in version 29 4fefd39f24<br />

|

| 4 |

-

<br />

|

| 5 |

-

<br />

|

| 6 |

-

<p></p>

|

spaces/801artistry/RVC801/infer/modules/vc/modules.py

DELETED

|

@@ -1,526 +0,0 @@

|

|

| 1 |

-

import os, sys

|

| 2 |

-

import traceback

|

| 3 |

-

import logging

|

| 4 |

-

now_dir = os.getcwd()

|

| 5 |

-

sys.path.append(now_dir)

|

| 6 |

-

logger = logging.getLogger(__name__)

|

| 7 |

-

import lib.globals.globals as rvc_globals

|

| 8 |

-

import numpy as np

|

| 9 |

-

import soundfile as sf

|

| 10 |

-

import torch

|

| 11 |

-

from io import BytesIO

|

| 12 |

-

from infer.lib.audio import load_audio

|

| 13 |

-

from infer.lib.audio import wav2

|

| 14 |

-

from infer.lib.infer_pack.models import (

|

| 15 |

-

SynthesizerTrnMs256NSFsid,

|

| 16 |

-

SynthesizerTrnMs256NSFsid_nono,

|

| 17 |

-

SynthesizerTrnMs768NSFsid,

|

| 18 |

-

SynthesizerTrnMs768NSFsid_nono,

|

| 19 |

-

)

|

| 20 |

-

from infer.modules.vc.pipeline import Pipeline

|

| 21 |

-

from infer.modules.vc.utils import *

|

| 22 |

-

import time

|

| 23 |

-

import scipy.io.wavfile as wavfile

|

| 24 |

-

|

| 25 |

-

def note_to_hz(note_name):

|

| 26 |

-

SEMITONES = {'C': -9, 'C#': -8, 'D': -7, 'D#': -6, 'E': -5, 'F': -4, 'F#': -3, 'G': -2, 'G#': -1, 'A': 0, 'A#': 1, 'B': 2}

|

| 27 |

-

pitch_class, octave = note_name[:-1], int(note_name[-1])

|

| 28 |

-

semitone = SEMITONES[pitch_class]

|

| 29 |

-

note_number = 12 * (octave - 4) + semitone

|

| 30 |

-

frequency = 440.0 * (2.0 ** (1.0/12)) ** note_number

|

| 31 |

-

return frequency

|

| 32 |

-

|

| 33 |

-

class VC:

|

| 34 |

-

def __init__(self, config):

|

| 35 |

-

self.n_spk = None

|

| 36 |

-

self.tgt_sr = None

|

| 37 |

-

self.net_g = None

|

| 38 |

-

self.pipeline = None

|

| 39 |

-

self.cpt = None

|

| 40 |

-

self.version = None

|

| 41 |

-

self.if_f0 = None

|

| 42 |

-

self.version = None

|

| 43 |

-

self.hubert_model = None

|

| 44 |

-

|

| 45 |

-

self.config = config

|

| 46 |

-

|

| 47 |

-

def get_vc(self, sid, *to_return_protect):

|

| 48 |

-

logger.info("Get sid: " + sid)

|

| 49 |

-

|

| 50 |

-

to_return_protect0 = {

|

| 51 |

-

"visible": self.if_f0 != 0,

|

| 52 |

-

"value": to_return_protect[0]

|

| 53 |

-

if self.if_f0 != 0 and to_return_protect

|

| 54 |

-

else 0.5,

|

| 55 |

-

"__type__": "update",

|

| 56 |

-

}

|

| 57 |

-

to_return_protect1 = {

|

| 58 |

-

"visible": self.if_f0 != 0,

|

| 59 |

-

"value": to_return_protect[1]

|

| 60 |

-

if self.if_f0 != 0 and to_return_protect

|

| 61 |

-

else 0.33,

|

| 62 |

-

"__type__": "update",

|

| 63 |

-

}

|

| 64 |

-

|

| 65 |

-

if not sid:

|

| 66 |

-

if self.hubert_model is not None: # because of polling, check whether sid switched from a loaded model to no model

|

| 67 |

-

logger.info("Clean model cache")

|

| 68 |

-

del (

|

| 69 |

-

self.net_g,

|

| 70 |

-

self.n_spk,

|

| 71 |

-

self.vc,

|

| 72 |

-

self.hubert_model,

|

| 73 |

-

self.tgt_sr,

|

| 74 |

-

) # ,cpt

|

| 75 |

-

self.hubert_model = (

|

| 76 |

-

self.net_g

|

| 77 |

-

) = self.n_spk = self.vc = self.hubert_model = self.tgt_sr = None

|

| 78 |

-

if torch.cuda.is_available():

|

| 79 |

-

torch.cuda.empty_cache()

|

| 80 |

-

### without the steps below, the cleanup is not thorough

|

| 81 |

-

self.if_f0 = self.cpt.get("f0", 1)

|

| 82 |

-

self.version = self.cpt.get("version", "v1")

|

| 83 |

-

if self.version == "v1":

|

| 84 |

-

if self.if_f0 == 1:

|

| 85 |

-

self.net_g = SynthesizerTrnMs256NSFsid(

|

| 86 |

-

*self.cpt["config"], is_half=self.config.is_half

|

| 87 |

-

)

|

| 88 |

-

else:

|

| 89 |

-

self.net_g = SynthesizerTrnMs256NSFsid_nono(*self.cpt["config"])

|

| 90 |

-

elif self.version == "v2":

|

| 91 |

-

if self.if_f0 == 1:

|

| 92 |

-

self.net_g = SynthesizerTrnMs768NSFsid(

|

| 93 |

-

*self.cpt["config"], is_half=self.config.is_half

|

| 94 |

-

)

|

| 95 |

-

else:

|

| 96 |

-

self.net_g = SynthesizerTrnMs768NSFsid_nono(*self.cpt["config"])

|

| 97 |

-

del self.net_g, self.cpt

|

| 98 |

-

if torch.cuda.is_available():

|

| 99 |

-

torch.cuda.empty_cache()

|

| 100 |

-

return (

|

| 101 |

-

{"visible": False, "__type__": "update"},

|

| 102 |

-

{

|

| 103 |

-

"visible": True,

|

| 104 |

-

"value": to_return_protect0,

|

| 105 |

-

"__type__": "update",

|

| 106 |

-

},

|

| 107 |

-

{

|

| 108 |

-

"visible": True,

|

| 109 |

-

"value": to_return_protect1,

|

| 110 |

-

"__type__": "update",

|

| 111 |

-

},

|

| 112 |

-

"",

|

| 113 |

-

"",

|

| 114 |

-

)

|

| 115 |

-

#person = f'{os.getenv("weight_root")}/{sid}'

|

| 116 |

-

person = f'{sid}'

|

| 117 |

-

#logger.info(f"Loading: {person}")

|

| 118 |

-

logger.info(f"Loading...")

|

| 119 |

-

self.cpt = torch.load(person, map_location="cpu")

|

| 120 |

-

self.tgt_sr = self.cpt["config"][-1]

|

| 121 |

-

self.cpt["config"][-3] = self.cpt["weight"]["emb_g.weight"].shape[0] # n_spk

|

| 122 |

-

self.if_f0 = self.cpt.get("f0", 1)

|

| 123 |

-

self.version = self.cpt.get("version", "v1")

|

| 124 |

-

|

| 125 |

-

synthesizer_class = {

|

| 126 |

-

("v1", 1): SynthesizerTrnMs256NSFsid,

|

| 127 |

-

("v1", 0): SynthesizerTrnMs256NSFsid_nono,

|

| 128 |

-

("v2", 1): SynthesizerTrnMs768NSFsid,

|

| 129 |

-

("v2", 0): SynthesizerTrnMs768NSFsid_nono,

|

| 130 |

-

}

|

| 131 |

-

|

| 132 |

-

self.net_g = synthesizer_class.get(

|

| 133 |

-

(self.version, self.if_f0), SynthesizerTrnMs256NSFsid

|

| 134 |

-

)(*self.cpt["config"], is_half=self.config.is_half)

|

| 135 |

-

|

| 136 |

-

del self.net_g.enc_q

|

| 137 |

-

|

| 138 |

-

self.net_g.load_state_dict(self.cpt["weight"], strict=False)

|

| 139 |

-

self.net_g.eval().to(self.config.device)

|

| 140 |

-

if self.config.is_half:

|

| 141 |

-

self.net_g = self.net_g.half()

|

| 142 |

-

else:

|

| 143 |

-

self.net_g = self.net_g.float()

|

| 144 |

-

|

| 145 |

-

self.pipeline = Pipeline(self.tgt_sr, self.config)

|

| 146 |

-

n_spk = self.cpt["config"][-3]

|

| 147 |

-

index = {"value": get_index_path_from_model(sid), "__type__": "update"}

|

| 148 |

-

logger.info("Select index: " + index["value"])

|

| 149 |

-

|

| 150 |

-

return (

|

| 151 |

-

(

|

| 152 |

-

{"visible": False, "maximum": n_spk, "__type__": "update"},

|

| 153 |

-

to_return_protect0,

|

| 154 |

-

to_return_protect1

|

| 155 |

-

)

|

| 156 |

-

if to_return_protect

|

| 157 |

-

else {"visible": False, "maximum": n_spk, "__type__": "update"}

|

| 158 |

-

)

|

| 159 |

-

|

| 160 |

-

|

| 161 |

-

def vc_single(

|

| 162 |

-

self,

|

| 163 |

-

sid,

|

| 164 |

-

input_audio_path0,

|

| 165 |

-

input_audio_path1,

|

| 166 |

-

f0_up_key,

|

| 167 |

-

f0_file,

|

| 168 |

-

f0_method,

|

| 169 |

-

file_index,

|

| 170 |

-

file_index2,

|

| 171 |

-

index_rate,

|

| 172 |

-

filter_radius,

|

| 173 |

-

resample_sr,

|

| 174 |

-

rms_mix_rate,

|

| 175 |

-

protect,

|

| 176 |

-

crepe_hop_length,

|

| 177 |

-

f0_min,

|

| 178 |

-

note_min,

|

| 179 |

-

f0_max,

|

| 180 |

-

note_max,

|

| 181 |

-

f0_autotune,

|

| 182 |

-

):

|

| 183 |

-

global total_time

|

| 184 |

-

total_time = 0

|

| 185 |

-

start_time = time.time()

|

| 186 |

-

if not input_audio_path0 and not input_audio_path1:

|

| 187 |

-

return "You need to upload an audio", None

|

| 188 |

-

|

| 189 |

-

if (not os.path.exists(input_audio_path0)) and (not os.path.exists(os.path.join(now_dir, input_audio_path0))):

|

| 190 |

-

return "Audio was not properly selected or doesn't exist", None

|

| 191 |

-

|

| 192 |

-

input_audio_path1 = input_audio_path1 or input_audio_path0

|

| 193 |

-

print(f"\nStarting inference for '{os.path.basename(input_audio_path1)}'")

|

| 194 |

-

print("-------------------")

|

| 195 |

-

f0_up_key = int(f0_up_key)

|

| 196 |

-

if rvc_globals.NotesOrHertz and f0_method != 'rmvpe':

|

| 197 |

-

f0_min = note_to_hz(note_min) if note_min else 50

|

| 198 |

-

f0_max = note_to_hz(note_max) if note_max else 1100

|

| 199 |

-

print(f"Converted Min pitch: freq - {f0_min}\n"

|

| 200 |

-

f"Converted Max pitch: freq - {f0_max}")

|

| 201 |

-

else:

|

| 202 |

-

f0_min = f0_min or 50

|

| 203 |

-

f0_max = f0_max or 1100

|

| 204 |

-

try:

|

| 205 |

-

input_audio_path1 = input_audio_path1 or input_audio_path0

|

| 206 |

-

print(f"Attempting to load {input_audio_path1}....")

|

| 207 |

-

audio = load_audio(file=input_audio_path1,

|

| 208 |

-

sr=16000,

|

| 209 |

-

DoFormant=rvc_globals.DoFormant,

|

| 210 |

-

Quefrency=rvc_globals.Quefrency,

|

| 211 |

-

Timbre=rvc_globals.Timbre)

|

| 212 |

-

|

| 213 |

-

audio_max = np.abs(audio).max() / 0.95

|

| 214 |

-

if audio_max > 1:

|

| 215 |

-

audio /= audio_max

|

| 216 |

-

times = [0, 0, 0]

|

| 217 |

-

|

| 218 |

-

if self.hubert_model is None:

|

| 219 |

-

self.hubert_model = load_hubert(self.config)

|

| 220 |

-

|

| 221 |

-

try:

|

| 222 |

-

self.if_f0 = self.cpt.get("f0", 1)

|

| 223 |

-

except NameError:

|

| 224 |

-

message = "Model was not properly selected"

|

| 225 |

-

print(message)

|

| 226 |

-

return message, None

|

| 227 |

-

|

| 228 |

-

file_index = (

|

| 229 |

-

(

|

| 230 |

-

file_index.strip(" ")

|

| 231 |

-

.strip('"')

|

| 232 |

-

.strip("\n")

|

| 233 |

-

.strip('"')

|

| 234 |

-

.strip(" ")

|

| 235 |

-

.replace("trained", "added")

|

| 236 |

-

)

|

| 237 |

-

if file_index != ""

|

| 238 |

-

else file_index2

|

| 239 |

-

) # guard against beginner mistakes by replacing it automatically

|

| 240 |

-

|

| 241 |

-

try:

|

| 242 |

-

audio_opt = self.pipeline.pipeline(

|

| 243 |

-

self.hubert_model,

|

| 244 |

-

self.net_g,

|

| 245 |

-

sid,

|

| 246 |

-

audio,

|

| 247 |

-

input_audio_path1,

|

| 248 |

-

times,

|

| 249 |

-

f0_up_key,

|

| 250 |

-

f0_method,

|

| 251 |

-

file_index,

|

| 252 |

-

index_rate,

|

| 253 |

-

self.if_f0,

|

| 254 |

-

filter_radius,

|

| 255 |

-

self.tgt_sr,

|

| 256 |

-

resample_sr,

|

| 257 |

-

rms_mix_rate,

|

| 258 |

-

self.version,

|

| 259 |

-

protect,

|

| 260 |

-

crepe_hop_length,

|

| 261 |

-

f0_autotune,

|

| 262 |

-

f0_file=f0_file,

|

| 263 |

-

f0_min=f0_min,

|

| 264 |

-

f0_max=f0_max

|

| 265 |

-

)

|

| 266 |

-

except AssertionError:

|

| 267 |

-

message = "Mismatching index version detected (v1 with v2, or v2 with v1)."

|

| 268 |

-

print(message)

|

| 269 |

-

return message, None

|

| 270 |

-

except NameError:

|

| 271 |

-

message = "RVC libraries are still loading. Please try again in a few seconds."

|

| 272 |

-

print(message)

|

| 273 |

-

return message, None

|

| 274 |

-

|

| 275 |

-

if self.tgt_sr != resample_sr >= 16000:

|

| 276 |

-

self.tgt_sr = resample_sr

|

| 277 |

-

index_info = (

|

| 278 |

-

"Index:\n%s." % file_index

|

| 279 |

-

if os.path.exists(file_index)

|

| 280 |

-

else "Index not used."

|

| 281 |

-

)

|

| 282 |

-

end_time = time.time()

|

| 283 |

-

total_time = end_time - start_time

|

| 284 |

-

|

| 285 |

-

output_folder = "audio-outputs"

|

| 286 |

-

os.makedirs(output_folder, exist_ok=True)

|

| 287 |

-

output_filename = "generated_audio_{}.wav"

|

| 288 |

-

output_count = 1

|

| 289 |

-

while True:

|

| 290 |

-

current_output_path = os.path.join(output_folder, output_filename.format(output_count))

|

| 291 |

-

if not os.path.exists(current_output_path):

|

| 292 |

-

break

|

| 293 |

-

output_count += 1

|

| 294 |

-

|

| 295 |

-

wavfile.write(current_output_path, self.tgt_sr, audio_opt)

|

| 296 |

-

print(f"Generated audio saved to: {current_output_path}")

|

| 297 |

-

return f"Success.\n {index_info}\nTime:\n npy:{times[0]}, f0:{times[1]}, infer:{times[2]}\nTotal Time: {total_time} seconds", (self.tgt_sr, audio_opt)

|

| 298 |

-

except:

|

| 299 |

-

info = traceback.format_exc()

|

| 300 |

-

logger.warn(info)

|

-            return info, (None, None)
-
-    def vc_single_dont_save(
-        self,
-        sid,
-        input_audio_path0,
-        input_audio_path1,
-        f0_up_key,
-        f0_file,
-        f0_method,
-        file_index,
-        file_index2,
-        index_rate,
-        filter_radius,
-        resample_sr,
-        rms_mix_rate,
-        protect,
-        crepe_hop_length,
-        f0_min,
-        note_min,
-        f0_max,
-        note_max,
-        f0_autotune,
-    ):
-        global total_time
-        total_time = 0
-        start_time = time.time()
-        if not input_audio_path0 and not input_audio_path1:
-            return "You need to upload an audio", None
-
-        if (not os.path.exists(input_audio_path0)) and (not os.path.exists(os.path.join(now_dir, input_audio_path0))):
-            return "Audio was not properly selected or doesn't exist", None
-
-        input_audio_path1 = input_audio_path1 or input_audio_path0
-        print(f"\nStarting inference for '{os.path.basename(input_audio_path1)}'")
-        print("-------------------")
-        f0_up_key = int(f0_up_key)
-        if rvc_globals.NotesOrHertz and f0_method != 'rmvpe':
-            f0_min = note_to_hz(note_min) if note_min else 50
-            f0_max = note_to_hz(note_max) if note_max else 1100
-            print(f"Converted Min pitch: freq - {f0_min}\n"
-                  f"Converted Max pitch: freq - {f0_max}")
-        else:
-            f0_min = f0_min or 50
-            f0_max = f0_max or 1100
-        try:
-            input_audio_path1 = input_audio_path1 or input_audio_path0
-            print(f"Attempting to load {input_audio_path1}....")
-            audio = load_audio(file=input_audio_path1,
-                               sr=16000,
-                               DoFormant=rvc_globals.DoFormant,
-                               Quefrency=rvc_globals.Quefrency,
-                               Timbre=rvc_globals.Timbre)
-
-            audio_max = np.abs(audio).max() / 0.95
-            if audio_max > 1:
-                audio /= audio_max
-            times = [0, 0, 0]
-
-            if self.hubert_model is None:
-                self.hubert_model = load_hubert(self.config)
-
-            try:
-                self.if_f0 = self.cpt.get("f0", 1)
-            except NameError:
-                message = "Model was not properly selected"
-                print(message)
-                return message, None
-
-            file_index = (
-                (
-                    file_index.strip(" ")
-                    .strip('"')
-                    .strip("\n")
-                    .strip('"')
-                    .strip(" ")
-                    .replace("trained", "added")
-                )
-                if file_index != ""
-                else file_index2
-            ) # guard against novice mistakes by replacing it automatically
-
-            try:
-                audio_opt = self.pipeline.pipeline(
-                    self.hubert_model,
-                    self.net_g,
-                    sid,
-                    audio,
-                    input_audio_path1,
-                    times,
-                    f0_up_key,
-                    f0_method,
-                    file_index,
-                    index_rate,
-                    self.if_f0,
-                    filter_radius,
-                    self.tgt_sr,
-                    resample_sr,
-                    rms_mix_rate,
-                    self.version,
-                    protect,
-                    crepe_hop_length,
-                    f0_autotune,
-                    f0_file=f0_file,
-                    f0_min=f0_min,
-                    f0_max=f0_max
-                )
-            except AssertionError:
-                message = "Mismatching index version detected (v1 with v2, or v2 with v1)."
-                print(message)
-                return message, None
-            except NameError:
-                message = "RVC libraries are still loading. Please try again in a few seconds."
-                print(message)
-                return message, None
-
-            if self.tgt_sr != resample_sr >= 16000:
-                self.tgt_sr = resample_sr
-            index_info = (
-                "Index:\n%s." % file_index
-                if os.path.exists(file_index)
-                else "Index not used."
-            )
-            end_time = time.time()
-            total_time = end_time - start_time
-
-            return f"Success.\n {index_info}\nTime:\n npy:{times[0]}, f0:{times[1]}, infer:{times[2]}\nTotal Time: {total_time} seconds", (self.tgt_sr, audio_opt)
-        except:
-            info = traceback.format_exc()
-            logger.warn(info)
-            return info, (None, None)
-
-

-    def vc_multi(
-        self,
-        sid,
-        dir_path,
-        opt_root,
-        paths,
-        f0_up_key,
-        f0_method,
-        file_index,
-        file_index2,
-        index_rate,
-        filter_radius,
-        resample_sr,
-        rms_mix_rate,
-        protect,
-        format1,
-        crepe_hop_length,
-        f0_min,
-        note_min,
-        f0_max,
-        note_max,
-        f0_autotune,
-    ):
-        if rvc_globals.NotesOrHertz and f0_method != 'rmvpe':
-            f0_min = note_to_hz(note_min) if note_min else 50
-            f0_max = note_to_hz(note_max) if note_max else 1100
-            print(f"Converted Min pitch: freq - {f0_min}\n"
-                  f"Converted Max pitch: freq - {f0_max}")
-        else:
-            f0_min = f0_min or 50
-            f0_max = f0_max or 1100
-        try:
-            dir_path = (
-                dir_path.strip(" ").strip('"').strip("\n").strip('"').strip(" ")
-            ) # guard against novices copying paths with leading/trailing spaces, quotes, and newlines
-            opt_root = opt_root.strip(" ").strip('"').strip("\n").strip('"').strip(" ")
-            os.makedirs(opt_root, exist_ok=True)
-            try:
-                if dir_path != "":
-                    paths = [
-                        os.path.join(dir_path, name) for name in os.listdir(dir_path)
-                    ]
-                else:
-                    paths = [path.name for path in paths]
-            except:
-                traceback.print_exc()
-                paths = [path.name for path in paths]
-            infos = []
-            for path in paths:
-                info, opt = self.vc_single(
-                    sid,
-                    path,
-                    f0_up_key,
-                    None,
-                    f0_method,
-                    file_index,
-                    file_index2,
-                    # file_big_npy,
-                    index_rate,
-                    filter_radius,
-                    resample_sr,
-                    rms_mix_rate,
-                    protect,
-                )
-                if "Success" in info:
-                    try:
-                        tgt_sr, audio_opt = opt
-                        if format1 in ["wav", "flac"]:
-                            sf.write(
-                                "%s/%s.%s"
-                                % (opt_root, os.path.basename(path), format1),
-                                audio_opt,
-                                tgt_sr,
-                            )
-                        else:
-                            path = "%s/%s.%s" % (opt_root, os.path.basename(path), format1)
-                            with BytesIO() as wavf:
-                                sf.write(
-                                    wavf,
-                                    audio_opt,
-                                    tgt_sr,
-                                    format="wav"
-                                )
-                                wavf.seek(0, 0)
-                                with open(path, "wb") as outf:
-                                    wav2(wavf, outf, format1)
-                    except:
-                        info += traceback.format_exc()
-                infos.append("%s->%s" % (os.path.basename(path), info))
-                yield "\n".join(infos)
-            yield "\n".join(infos)
-        except:
-            yield traceback.format_exc()
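Two idioms in the deleted `modules.py` code are easy to misread: the repeated `.strip(...)` chain applied to user-supplied paths, and the chained comparison `self.tgt_sr != resample_sr >= 16000`. The sketch below restates both as small standalone helpers. The names `clean_path` and `wants_resample` are hypothetical and do not appear in the deleted file; this is an illustration of the behavior, not the project's own API.

```python
def clean_path(raw: str) -> str:
    """Strip spaces, double quotes, and newlines from both ends of a path.

    Mirrors the order of the strip chain in the deleted code:
    spaces, quotes, newlines, quotes again, spaces again.
    """
    return raw.strip(" ").strip('"').strip("\n").strip('"').strip(" ")


def wants_resample(tgt_sr: int, resample_sr: int) -> bool:
    """Expanded form of the chained comparison `tgt_sr != resample_sr >= 16000`.

    Python evaluates `a != b >= c` as `(a != b) and (b >= c)`, so the target
    rate is only overridden when the requested rate differs from it AND is at
    least 16000.
    """
    return tgt_sr != resample_sr and resample_sr >= 16000


if __name__ == "__main__":
    print(clean_path(' "C:/audio/in.wav" '))
    print(wants_resample(40000, 48000))
    print(wants_resample(40000, 0))
```

Note that the strip chain only trims characters at the ends of the string; quotes or spaces inside the path are left untouched.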


spaces/AIConsultant/MusicGen/tests/common_utils/__init__.py

DELETED

@@ -1,9 +0,0 @@
-# Copyright (c) Meta Platforms, Inc. and affiliates.
-# All rights reserved.
-#
-# This source code is licensed under the license found in the
-# LICENSE file in the root directory of this source tree.
-
-# flake8: noqa
-from .temp_utils import TempDirMixin
-from .wav_utils import get_batch_white_noise, get_white_noise, save_wav


spaces/AIGC-Audio/AudioGPT/NeuralSeq/modules/GenerSpeech/model/generspeech.py

DELETED

@@ -1,260 +0,0 @@
-import torch
-from modules.GenerSpeech.model.glow_modules import Glow
-from modules.fastspeech.tts_modules import PitchPredictor
-import random
-from modules.GenerSpeech.model.prosody_util import ProsodyAligner, LocalStyleAdaptor
-from utils.pitch_utils import f0_to_coarse, denorm_f0
-from modules.commons.common_layers import *
-import torch.distributions as dist
-from utils.hparams import hparams
-from modules.GenerSpeech.model.mixstyle import MixStyle
-from modules.fastspeech.fs2 import FastSpeech2
-import json
-from modules.fastspeech.tts_modules import DEFAULT_MAX_SOURCE_POSITIONS, DEFAULT_MAX_TARGET_POSITIONS
-
-class GenerSpeech(FastSpeech2):
-    '''
-    GenerSpeech: Towards Style Transfer for Generalizable Out-Of-Domain Text-to-Speech
-    https://arxiv.org/abs/2205.07211
-    '''
-    def __init__(self, dictionary, out_dims=None):
-        super().__init__(dictionary, out_dims)
-
-        # Mixstyle
-        self.norm = MixStyle(p=0.5, alpha=0.1, eps=1e-6, hidden_size=self.hidden_size)
-
-        # emotion embedding
-        self.emo_embed_proj = Linear(256, self.hidden_size, bias=True)
-
-        # build prosody extractor
-        ## frame level
-        self.prosody_extractor_utter = LocalStyleAdaptor(self.hidden_size, hparams['nVQ'], self.padding_idx)
-        self.l1_utter = nn.Linear(self.hidden_size * 2, self.hidden_size)
-        self.align_utter = ProsodyAligner(num_layers=2)
-
-        ## phoneme level
-        self.prosody_extractor_ph = LocalStyleAdaptor(self.hidden_size, hparams['nVQ'], self.padding_idx)
-        self.l1_ph = nn.Linear(self.hidden_size * 2, self.hidden_size)
-        self.align_ph = ProsodyAligner(num_layers=2)
-
-        ## word level
-        self.prosody_extractor_word = LocalStyleAdaptor(self.hidden_size, hparams['nVQ'], self.padding_idx)
-        self.l1_word = nn.Linear(self.hidden_size * 2, self.hidden_size)
-        self.align_word = ProsodyAligner(num_layers=2)
-
-        self.pitch_inpainter_predictor = PitchPredictor(
-            self.hidden_size, n_chans=self.hidden_size,
-            n_layers=3, dropout_rate=0.1, odim=2,
-            padding=hparams['ffn_padding'], kernel_size=hparams['predictor_kernel'])
-
-        # build attention layer
-        self.max_source_positions = DEFAULT_MAX_SOURCE_POSITIONS
-        self.embed_positions = SinusoidalPositionalEmbedding(
-            self.hidden_size, self.padding_idx,
-            init_size=self.max_source_positions + self.padding_idx + 1,
-        )
-
-        # build post flow
-        cond_hs = 80
-        if hparams.get('use_txt_cond', True):
-            cond_hs = cond_hs + hparams['hidden_size']
-
-        cond_hs = cond_hs + hparams['hidden_size'] * 3 # for emo, spk embedding and prosody embedding
-        self.post_flow = Glow(
-            80, hparams['post_glow_hidden'], hparams['post_glow_kernel_size'], 1,
-            hparams['post_glow_n_blocks'], hparams['post_glow_n_block_layers'],
-            n_split=4, n_sqz=2,
-            gin_channels=cond_hs,
-            share_cond_layers=hparams['post_share_cond_layers'],
-            share_wn_layers=hparams['share_wn_layers'],
-            sigmoid_scale=hparams['sigmoid_scale']
-        )
-        self.prior_dist = dist.Normal(0, 1)
-
-
-    def forward(self, txt_tokens, mel2ph=None, ref_mel2ph=None, ref_mel2word=None, spk_embed=None, emo_embed=None, ref_mels=None,
-                f0=None, uv=None, skip_decoder=False, global_steps=0, infer=False, **kwargs):
-        ret = {}
-        encoder_out = self.encoder(txt_tokens) # [B, T, C]
-        src_nonpadding = (txt_tokens > 0).float()[:, :, None]
-
-        # add spk/emo embed
-        spk_embed = self.spk_embed_proj(spk_embed)[:, None, :]
-        emo_embed = self.emo_embed_proj(emo_embed)[:, None, :]
-
-
-        # add dur
-        dur_inp = (encoder_out + spk_embed + emo_embed) * src_nonpadding
-        mel2ph = self.add_dur(dur_inp, mel2ph, txt_tokens, ret)
-        tgt_nonpadding = (mel2ph > 0).float()[:, :, None]
-        decoder_inp = self.expand_states(encoder_out, mel2ph)
-        decoder_inp = self.norm(decoder_inp, spk_embed + emo_embed)
-
-        # add prosody VQ
-        ret['ref_mel2ph'] = ref_mel2ph
-        ret['ref_mel2word'] = ref_mel2word
-        prosody_utter_mel = self.get_prosody_utter(decoder_inp, ref_mels, ret, infer, global_steps)
-        prosody_ph_mel = self.get_prosody_ph(decoder_inp, ref_mels, ret, infer, global_steps)
-        prosody_word_mel = self.get_prosody_word(decoder_inp, ref_mels, ret, infer, global_steps)
-
-        # add pitch embed
-        pitch_inp_domain_agnostic = decoder_inp * tgt_nonpadding
-        pitch_inp_domain_specific = (decoder_inp + spk_embed + emo_embed + prosody_utter_mel + prosody_ph_mel + prosody_word_mel) * tgt_nonpadding
-        predicted_pitch = self.inpaint_pitch(pitch_inp_domain_agnostic, pitch_inp_domain_specific, f0, uv, mel2ph, ret)
-
-        # decode
-        decoder_inp = decoder_inp + spk_embed + emo_embed + predicted_pitch + prosody_utter_mel + prosody_ph_mel + prosody_word_mel
-        ret['decoder_inp'] = decoder_inp = decoder_inp * tgt_nonpadding
-        if skip_decoder:
-            return ret
-        ret['mel_out'] = self.run_decoder(decoder_inp, tgt_nonpadding, ret, infer=infer, **kwargs)
-
-        # postflow
-        is_training = self.training
-        ret['x_mask'] = tgt_nonpadding
-        ret['spk_embed'] = spk_embed
-        ret['emo_embed'] = emo_embed
-        ret['ref_prosody'] = prosody_utter_mel + prosody_ph_mel + prosody_word_mel
-        self.run_post_glow(ref_mels, infer, is_training, ret)
-        return ret
-
-    def get_prosody_ph(self, encoder_out, ref_mels, ret, infer=False, global_steps=0):
-        # get VQ prosody
-        if global_steps > hparams['vq_start'] or infer:
-            prosody_embedding, loss, ppl = self.prosody_extractor_ph(ref_mels, ret['ref_mel2ph'], no_vq=False)
-            ret['vq_loss_ph'] = loss
-            ret['ppl_ph'] = ppl
-        else:

| 128 |

-