hexsha

stringlengths 40

40

| size

int64 5

1.04M

| ext

stringclasses 6

values | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 3

344

| max_stars_repo_name

stringlengths 5

125

| max_stars_repo_head_hexsha

stringlengths 40

78

| max_stars_repo_licenses

sequencelengths 1

11

| max_stars_count

int64 1

368k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 3

344

| max_issues_repo_name

stringlengths 5

125

| max_issues_repo_head_hexsha

stringlengths 40

78

| max_issues_repo_licenses

sequencelengths 1

11

| max_issues_count

int64 1

116k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 3

344

| max_forks_repo_name

stringlengths 5

125

| max_forks_repo_head_hexsha

stringlengths 40

78

| max_forks_repo_licenses

sequencelengths 1

11

| max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | content

stringlengths 5

1.04M

| avg_line_length

float64 1.14

851k

| max_line_length

int64 1

1.03M

| alphanum_fraction

float64 0

1

| lid

stringclasses 191

values | lid_prob

float64 0.01

1

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

3e64a005b4af3a410713a92e147ecbcba78b03fa | 3,011 | md | Markdown | README.md | jasudev/Scroller | a825313309c2fc6964b50edc83867370ad08bb47 | [

"MIT"

] | 18 | 2022-02-02T15:45:26.000Z | 2022-03-28T22:55:05.000Z | README.md | jasudev/Scroller | a825313309c2fc6964b50edc83867370ad08bb47 | [

"MIT"

] | null | null | null | README.md | jasudev/Scroller | a825313309c2fc6964b50edc83867370ad08bb47 | [

"MIT"

] | null | null | null | # **Scroller for SwiftUI**

Animate individual views based on scroll position. Built with SwiftUI; supports iOS and macOS.


## Screenshot

|Example|Vertical|Horizontal|

|:---:|:---:|:---:|

|<img src="Markdown/Scroller.gif">|<img src="Markdown/ScrollerVertical.gif">|<img src="Markdown/ScrollerHorizontal.gif">|

## Example

[https://fabulaapp.page.link/222](https://fabulaapp.page.link/222)

[https://fabulaapp.page.link/223](https://fabulaapp.page.link/223)

## Usage

1. Scroller

```swift

Scroller(.vertical, value: $valueV) {
    ForEach(0...5, id: \.self) { index in
        GeometryReader { proxy in
            ScrollerVContent(value: proxy.scrollerValue(.vertical))
        }
    }
} lastContent: {
    Rectangle()
        .fill(Color.blue)
        .overlay(Text("LastView"))
        .foregroundColor(Color.white)
}

```

2. Each view only needs to conform to the ScrollerContent protocol.

```swift

struct ScrollerVContent: ScrollerContent {

    /// Bind each view's scroll-relative value. It is a value between 0 and 1.
    var value: CGFloat = 0

    var body: some View {
        GeometryReader { proxy in
            ScrollerInfoView(axes: .vertical, value: value, proxy: proxy)
                .offset(y: proxy.size.height * value)
                .padding(10)
            Rectangle().fill(Color.blue)
                .frame(width: proxy.size.width * value, height: 5)
                .offset(y: proxy.size.height * value)
        }
        .background(Color.orange.opacity(1.0 - value))
    }
}

```

## Swift Package Manager

The Swift Package Manager is a tool for automating the distribution of Swift code and is integrated into the Swift compiler. Once you have your Swift package set up, add Scroller as a dependency by listing it in the `dependencies` value of your `Package.swift`.

```swift

dependencies: [

.package(url: "https://github.com/jasudev/Scroller.git", .branch("main"))

]

```

## Contact

instagram : [@dev.fabula](https://www.instagram.com/dev.fabula)

email : [[email protected]](mailto:[email protected])

## License

Scroller is available under the MIT license. See the [LICENSE](LICENSE) file for more info.

| 42.408451 | 270 | 0.655928 | eng_Latn | 0.385298 |

3e651e66497a97651ee0aca38fa4372c677f6e6f | 1,178 | md | Markdown | licenses.md | Bhaskers-Blu-Org2/DUCK | 63a15a11c7576ab6261cb98685976b5e5d2c152f | [

"Apache-2.0"

] | 3 | 2018-04-09T04:06:11.000Z | 2018-07-30T23:08:41.000Z | licenses.md | Bhaskers-Blu-Org2/DUCK | 63a15a11c7576ab6261cb98685976b5e5d2c152f | [

"Apache-2.0"

] | 8 | 2017-07-18T10:45:38.000Z | 2018-10-02T22:18:22.000Z | licenses.md | LaudateCorpus1/DUCK | 63a15a11c7576ab6261cb98685976b5e5d2c152f | [

"Apache-2.0"

] | 7 | 2019-11-03T16:56:21.000Z | 2021-11-10T10:20:33.000Z | # Third Party Licenses

This document tracks third-party software distributed by this project:

- AngularJS [https://angularjs.org/]

- Zurb Foundation [https://github.com/zurb/foundation-sites/blob/develop/LICENSE]

- Font Awesome [https://fortawesome.github.io/Font-Awesome/license/]

- JSHashtable fork [https://github.com/enriquecastl/jshashtable]

- JSHashSet fork [https://github.com/enriquecastl/jshashset]

- ngSortable [https://github.com/a5hik/ng-sortable]

- Fuse.js [http://kiro.me/projects/fuse.html]

- Mass AutoComplete [http://hakib.github.io/MassAutocomplete/]

- Elastic Input [http://jacek-pulit.github.io/angular-elastic-input/]

- Angular Translate [https://github.com/angular-translate/angular-translate/blob/master/LICENSE]

- Angular File Saver [https://github.com/alferov/angular-file-saver]

- CouchDB [https://www.apache.org/licenses/LICENSE-2.0]

- Carneades [https://github.com/carneades/carneades-4/blob/master/LICENSE]

- Go programming Language [https://golang.org/LICENSE]

- Go Yaml parser [https://github.com/go-yaml/yaml/blob/v2/LICENSE] and [https://github.com/go-yaml/yaml/blob/v2/LICENSE.libyaml]

- SWI Prolog [http://www.swi-prolog.org/license.html]

| 51.217391 | 128 | 0.767402 | yue_Hant | 0.342179 |

3e65ad66636f3b40bc39f38648c3067b6e0e5ef8 | 35 | md | Markdown | README.md | Chamarez/ionic-docker-microservices-spring | 7802b58150f892be50c01a5f5d76d58163deb8cf | [

"MIT"

] | null | null | null | README.md | Chamarez/ionic-docker-microservices-spring | 7802b58150f892be50c01a5f5d76d58163deb8cf | [

"MIT"

] | null | null | null | README.md | Chamarez/ionic-docker-microservices-spring | 7802b58150f892be50c01a5f5d76d58163deb8cf | [

"MIT"

] | null | null | null | # ionic-docker-microservices-spring | 35 | 35 | 0.857143 | eng_Latn | 0.345207 |

3e66d0ae8d8ecd7330d078f2637b008e94b79bbf | 28 | md | Markdown | README.md | brandeis-llc/semviz | 97735e88d3808cad35ca07430fced16152461a83 | [

"MIT"

] | null | null | null | README.md | brandeis-llc/semviz | 97735e88d3808cad35ca07430fced16152461a83 | [

"MIT"

] | 6 | 2020-04-21T02:41:07.000Z | 2020-04-22T14:40:07.000Z | README.md | brandeis-llc/semviz | 97735e88d3808cad35ca07430fced16152461a83 | [

"MIT"

] | 1 | 2020-04-20T14:17:06.000Z | 2020-04-20T14:17:06.000Z | # SemViz

semviz.org source

| 7 | 17 | 0.75 | tur_Latn | 0.278753 |

3e66eec7cb3eb3fc44e8a911496ea5cab7dcbc46 | 6,548 | md | Markdown | docs/learning-by-doing/week04-docker-monitoring/02-report-en.md | cuongtransc/autoscaling | bb1a6abfedb16c53f52dae4f24b4e2141a4b5e0a | [

"Apache-2.0"

] | 1 | 2020-05-24T20:11:06.000Z | 2020-05-24T20:11:06.000Z | docs/learning-by-doing/week04-docker-monitoring/02-report-en.md | cuongtransc/autoscaling | bb1a6abfedb16c53f52dae4f24b4e2141a4b5e0a | [

"Apache-2.0"

] | 2 | 2017-06-18T02:33:51.000Z | 2017-06-18T02:33:58.000Z | docs/learning-by-doing/week04-docker-monitoring/02-report-en.md | cuongtransc/autoscaling | bb1a6abfedb16c53f52dae4f24b4e2141a4b5e0a | [

"Apache-2.0"

] | 4 | 2018-01-26T07:53:35.000Z | 2020-04-14T05:38:05.000Z | # Week 4 report

#### Auto-scaling team

### A. Overview

**1. cAdvisor**

cAdvisor (Container Advisor) provides container users an understanding of the resource usage and performance characteristics of their running containers. It is a running daemon that collects, aggregates, processes, and exports information about running containers.

Specifically, for each container it keeps resource isolation parameters, historical resource usage, histograms of complete historical resource usage and network statistics. This data is exported by container and machine-wide.

- Performance metrics:

+ CPU: total usage, usage per core, usage breakdown (Hz)

+ Memory: total usage (bytes)

+ Network: throughput (Tx and Rx bytes per second) and errors (Tx and Rx errors per second)

+ Filesystem (storage): total usage (bytes)

- Data collection frequency:

+ Real-time collector (one sample per second)

- Size of the data collected for one Docker container:

>size of one sample × monitoring duration (in time units) × collection frequency (samples per time unit)

+ Structure of one sample:

Time|Sequence_number|fs_limit|Machine|memory_usage|container_name|cpu_cumulative_usage|memory_working_set|rx_bytes|tx_errors|tx_bytes|fs_device|rx_errors|fs_usage

--|--|--|--|--|--|--|--|--|--|--|--|--|--|
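
The size formula above can be sketched as a small Python helper. This is only an illustration: the function name and the 200-byte sample size are hypothetical and would need to be replaced with a value measured on a real deployment.

```python
def estimate_storage_bytes(sample_bytes: int, duration_s: int, samples_per_s: float = 1.0) -> float:
    """Estimate the bytes collected for one container.

    sample_bytes  -- size of one sample (hypothetical; measure your own)
    duration_s    -- monitoring duration in seconds
    samples_per_s -- collection frequency (cAdvisor collects about once per second)
    """
    return sample_bytes * duration_s * samples_per_s


# Hypothetical numbers: a 200-byte sample collected once per second for one day.
one_day_s = 24 * 60 * 60
print(estimate_storage_bytes(200, one_day_s))  # 17280000.0
```

Multiplying the result by the number of monitored containers gives a rough upper bound for capacity planning.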

**2. InfluxDB**

InfluxDB is a time series, metrics, and analytics database. cAdvisor only displays realtime information and doesn't store the metrics. We need to store the monitoring information which cAdvisor provides in order to display a time range other than realtime.

Features:

- Time-Centric Functions

- Events

- Powerful Query Language

- Scalable Metrics

- Native HTTP API

- Built-in Explorer

Database structure:

+ By time series

+ Metrics data and events data oriented

+ A sample time series record:

Time|Sequence_number|field 1|field 2|field 3|....

---|---|---|---|---|---

+ Store billions of data points.

Aggregate record:

+ Merge multiple series together

+ Group by time range

+ Graph visualized

+ Powerful aggregate function: sum, mean, max, count, median...

+ SQL-like query language

Example:

```sql
select count(type) from events group by time(10m), type
into events.count_per_type.10m
```
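
As a rough sketch of how a client could send such a query over HTTP, the snippet below only builds the request URL. The `/db/<database>/series` path and the `u`, `p`, `q` parameters are my recollection of the InfluxDB 0.8-series HTTP API and should be treated as an assumption; check them against the documentation for your InfluxDB version. The helper name `build_query_url` is hypothetical.

```python
from urllib.parse import urlencode


def build_query_url(host: str, database: str, query: str, user: str, password: str) -> str:
    """Build a query URL (assumed InfluxDB 0.8-style endpoint layout)."""
    # urlencode escapes spaces, parentheses, and commas in the query text.
    params = urlencode({"u": user, "p": password, "q": query})
    return f"http://{host}/db/{database}/series?{params}"


url = build_query_url(
    host="localhost:8086",
    database="cadvisor",
    query="select count(type) from events group by time(10m), type",
    user="root",
    password="root",
)
print(url)
```

The URL can then be fetched with any HTTP client; the client libraries listed below wrap this kind of call.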

Client Libraries

+ Let applications interact with InfluxDB over HTTP (read, write, insert, ...)

+ Available for many languages: JavaScript, Ruby, Rails, Python, PHP, Perl, .NET, ...

Learn more [here](https://influxdb.com/)

**3. Grafana:**

Grafana is a leading open source application for visualizing large-scale measurement data. The Grafana Dashboard allows us to pull all the pieces together visually. This powerful Dashboard allows us to run queries against the InfluxDB and chart them accordingly in a very nice layout.

Features:

+ graph

+ singlestat

+ annotation

Data aggregate

+ Interacting with InfluxDB

+ Query template/ editor for InfluxDB

HTTP API

+ The Grafana backend exposes an HTTP API, the same API is used by the frontend to do everything from saving dashboards, creating users and updating data sources.

Learn more [here](http://docs.grafana.org/)

### B. Installation of Docker Monitoring.

#### 1. Install InfluxDB

- Command:

```

$ docker run -d -p 8083:8083 -p 8086:8086 --expose 8090 --expose 8099 -e PRE_CREATE_DB=cadvisor --name influxsrv tutum/influxdb:0.8.8

```

+ `-p 8083:8083`: web UI; log in with username `admin` and password `admin`

+ `-p 8086:8086`: API port used by other applications

+ `PRE_CREATE_DB=cadvisor`: creates a database named `cadvisor`

+ `--name influxsrv`: names the container `influxsrv` so that cAdvisor can link to it

#### 2. Install the cAdvisor container and link it to the InfluxDB container

- Command:

```

docker run \

--volume=/:/rootfs:ro \

--volume=/var/run:/var/run:rw \

--volume=/sys:/sys:ro \

--volume=/var/lib/docker/:/var/lib/docker:ro \

--publish=8080:8080 \

--link=influxsrv:influxsrv \

--detach=true \

--name=cadvisor \

google/cadvisor:0.14.0 \

-storage_driver=influxdb \

-storage_driver_db=cadvisor \

-storage_driver_host=influxsrv:8086

```

+ `--publish=8080:8080`: web UI

+ `--link=influxsrv:influxsrv`: links to the `influxsrv` container

+ `-storage_driver=influxdb`: sets the storage driver to InfluxDB

+ Specify which InfluxDB instance to push data to:

* `-storage_driver_host=influxsrv:8086`: the *host:port* of the database. Defaults to `localhost:8086`

* `-storage_driver_db=cadvisor`: the database name. Defaults to `cadvisor`

- After a successful install, open `http://localhost:8080`. You should see cAdvisor gathering statistics on your Docker host and containers.

#### 3. Install the Grafana Dashboard and link it to the InfluxDB container

- Command:

```

docker run -d -p 3000:3000 \

-e HTTP_USER=admin \

-e HTTP_PASS=admin \

-e INFLUXDB_HOST=localhost \

-e INFLUXDB_PORT=8086 \

-e INFLUXDB_NAME=cadvisor \

-e INFLUXDB_USER=root \

-e INFLUXDB_PASS=root \

--link=influxsrv:influxsrv \

grafana/grafana:2.0.2

```

- After a successful install, open `http://localhost:3000` and configure the link to InfluxDB:

+ Log in with username `admin` and password `admin`

+ Click the Grafana icon in the upper left-hand corner of the GUI, then click Data Sources → Add New and fill in the InfluxDB connection details.

- Configure the graphs that show the monitoring statistics:

+ Click Dashboard → Home Menu → New → Add Panel → Graph

+ Click "no title" → edit, then write the query for the graph in the Metrics tab.

+ Examples of several graphs:

#### References

- https://www.brianchristner.io/how-to-setup-docker-monitoring/

- You can use json file to create dashboards: https://github.com/vegasbrianc/docker-monitoring

| 39.926829 | 284 | 0.752902 | eng_Latn | 0.73312 |

3e67cd11c5ccc05296162285f11fb4117cdda223 | 366 | md | Markdown | _posts/2018-07-01-8.md | nickie10106/new-simply-nickie | c127b6bd8fca641462a0858fdd6c8d511ec3be50 | [

"Apache-2.0"

] | null | null | null | _posts/2018-07-01-8.md | nickie10106/new-simply-nickie | c127b6bd8fca641462a0858fdd6c8d511ec3be50 | [

"Apache-2.0"

] | null | null | null | _posts/2018-07-01-8.md | nickie10106/new-simply-nickie | c127b6bd8fca641462a0858fdd6c8d511ec3be50 | [

"Apache-2.0"

] | null | null | null | ---

title: "Cherry On the Cake"

subtitle: Electronic Direct Message

layout: default

modal-id: 8

date: 2014-07-15

img: portfolio/8.jpg

thumbnail: thumbnail/8.jpg

alt: Electronic Direct Message

project-date: 2018

client: None

category: Design

description: Clean and innovative design to send out to customers on MailChimp, GetResponse or any email marketing app.

---

| 22.875 | 119 | 0.781421 | eng_Latn | 0.863125 |

3e67e5dc7976d08e6ee235ac24b961acce4be431 | 2,907 | md | Markdown | content/post/Day_36.md | lewisc2303/LewisBlog | a9fc8b4522ffcdabce5d513a97b3e3f978be86b2 | [

"MIT"

] | null | null | null | content/post/Day_36.md | lewisc2303/LewisBlog | a9fc8b4522ffcdabce5d513a97b3e3f978be86b2 | [

"MIT"

] | null | null | null | content/post/Day_36.md | lewisc2303/LewisBlog | a9fc8b4522ffcdabce5d513a97b3e3f978be86b2 | [

"MIT"

] | null | null | null | ---

title: "Day 36"

date: 2018-10-08

tags: []

draft: False

---

# (More) Types

### Polymorphism

Degrees of polymorphism are:

- Parametric Polymorphism - full polymorphism of any type: `a -> b -> a`

- Constrained Polymorphism - polymorphism constrained to a typeclass: `(Num a, Ord a) => a`

- Concrete type - not polymorphic: `[Char] -> Char`

It is beneficial to keep a function as polymorphic as possible, as it saves you from creating different functions for different types.

But as soon as you apply a function belonging to a typeclass you constrain the argument to the typeclass making it a constrained polymorphic function. This is often necessary as certain functions are only applicable to a certain typeclass and then even more functions are applicable to specific data types; so the more specified you make the type, the greater access to functions you have.

### Typeclass-constrained type variables

Certain functions are constrained to certain typeclasses, sub classes and types; analogous to a tree of compatibility. Functions from the stem can be applied to the branches but not the other way round.

e.g. Take the function:

```

divideLength = 6 / length [1, 2, 3]

```

This does not compile: `/` belongs to the `Fractional` typeclass, so both of its operands must have a `Fractional` type, but `length` returns an `Int`, which is not an instance of `Fractional`. You would have to use functions that work on the same type...

**Or** you can convert the result of `length` to a more general `Num a => a` value with `fromIntegral`. When `/` is then applied to it, the typeclass constraint narrows from `Num` to `Fractional`.

```

Prelude> divideLength = 6 / fromIntegral ( length [1, 2, 3] )

Prelude> divideLength

2.0

```

Note: the answer is in decimal form because its type is `Fractional`.

### Type signatures for HOFs

Type signatures are a useful tool for reading a function and determining its purpose: they specify how the arguments are applied and what their respective types are.

I found type signatures fairly straightforward until I got to the following exercise:

Write the undefined function so that this type signature compiles.

```

munge :: (x -> y) -> (y -> (w, z)) -> x -> w

```

At first I got too bogged down by the number of arrows and letters. It seemed a bit mad.

Then I tried to dissect it logically from the type **signature** (key word).

The output needs to be of type `w`. Working back from `w`, I need the first element of the tuple `(w, z)`, which I can get with `fst` (a parametrically polymorphic function). The function that produces the tuple requires an input of type `y`, and the first argument does just that (`x -> y`). Finally, `x` is fed into that function to get to `w`! Nesting these gives:

```

munge :: (x -> y) -> (y -> (w, z)) -> x -> w -- How to approach this?
munge xy ywz x = fst (ywz (xy x))

```

Perhaps a bad explanation, but it's hard to explain.

| 46.887097 | 389 | 0.733402 | eng_Latn | 0.999571 |

3e681a3ca0db1770393ad1dcf91a387035ce6f4c | 148 | md | Markdown | pra/TrabalhoFinal/README.md | LucasFreitass/udesc | c45bd8474d2e6ac1507b4e9acc8488fe853decee | [

"Apache-2.0"

] | null | null | null | pra/TrabalhoFinal/README.md | LucasFreitass/udesc | c45bd8474d2e6ac1507b4e9acc8488fe853decee | [

"Apache-2.0"

] | null | null | null | pra/TrabalhoFinal/README.md | LucasFreitass/udesc | c45bd8474d2e6ac1507b4e9acc8488fe853decee | [

"Apache-2.0"

] | null | null | null | ## Final project

The goal of this project was to compare the best and worst cases of a sequence of operations on an AVL tree.

| 37 | 127 | 0.797297 | por_Latn | 1.00001 |

3e69cb94e1945cb6884c815dbb1a506e62ea7dde | 1,088 | md | Markdown | _posts/2018-05-23-python-module.md | miaculpa/miaculpa.github.io | 4013ddd0f134c80a7fca542a73b9cc2b30d93d7a | [

"MIT"

] | null | null | null | _posts/2018-05-23-python-module.md | miaculpa/miaculpa.github.io | 4013ddd0f134c80a7fca542a73b9cc2b30d93d7a | [

"MIT"

] | null | null | null | _posts/2018-05-23-python-module.md | miaculpa/miaculpa.github.io | 4013ddd0f134c80a7fca542a73b9cc2b30d93d7a | [

"MIT"

] | null | null | null | ---

layout: post

title: "[Python 04 Modules and Packages] - Modules and Packages"

subtitle: "Modules and Packages"

categories: language

tags: python

comments: true

---

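## Modules

>A module is a file that groups together functions, variables, or classes.

### 1. Importing a module (import)

```
import module_name
```

The module's name is registered in the global namespace, so its contents can be used as `module_name.function_name`.

```
from module_name import function_name
```

A function inside the module can be used without the module-name prefix.

```
from module_name import *
```

The \* character means "everything": this imports all of the module's public names for use.

```
from module_name import function_name as alias
import module_name as alias
```

When a module with the same name already exists, or names could be confused, append `as` to change the name under which the module or function is used.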
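
The import forms above can be tried with the standard-library `math` module. This is a small illustrative sketch; the alias names `m` and `PI` are arbitrary choices.

```python
import math                # access as math.sqrt
from math import sqrt      # access as sqrt, without the module prefix
from math import pi as PI  # alias for a single imported name
import math as m           # alias for the whole module

print(math.sqrt(16))  # 4.0
print(sqrt(16))       # 4.0
print(m.floor(PI))    # 3
```

All four forms refer to the same module object; only the names bound in the current namespace differ.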

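### 2. if \_\_name\_\_ == "\_\_main\_\_"

Wrap in this `if` statement the part that should run only when the file is executed with `python <filename>`.

\_\_name\_\_ : a global variable of the module

\_\_main\_\_ : the name given to the module that the Python interpreter runs directly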
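
A minimal sketch of this guard, assuming a hypothetical file named `greet.py`:

```python
def greet(name: str) -> str:
    """Reusable function; importing the module only defines it."""
    return f"Hello, {name}!"


if __name__ == "__main__":
    # Runs for `python greet.py`, but not for `import greet`,
    # because on import __name__ is "greet" rather than "__main__".
    print(greet("world"))
```

This keeps the module importable from other code without triggering its script behavior.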

---

## Packages

>A package is a special folder that groups modules together.

Placing an \_\_init\_\_.py file in a folder makes that folder be treated as a package.

```

├── functions

│ ├── __init__.py

│ ├── game.py

│ └── shop.py

└── lol.py

```

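\*, \_\_all\_\_

When importing the subpackages and modules contained in a package, using \* imports all public identifiers of that module.

If a module wants to specify the list of names that are imported in that case, it can define \_\_all\_\_.

If there are items you want to be brought in automatically when the package itself is imported, import them in the package's \_\_init\_\_.py file.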

| 14.702703 | 83 | 0.629596 | kor_Hang | 1.00001 |

3e6a0f926655551537dd990821e08ca251b97e01 | 1,632 | md | Markdown | docs/tables/aws_glacier_vault.md | blinkops/blink-aws-query | 21b0d5eee6b0b5554c040867b9e25681e7ba6559 | [

"Apache-2.0"

] | 61 | 2021-01-21T19:06:48.000Z | 2022-03-28T20:09:46.000Z | docs/tables/aws_glacier_vault.md | blinkops/blink-aws-query | 21b0d5eee6b0b5554c040867b9e25681e7ba6559 | [

"Apache-2.0"

] | 592 | 2021-01-23T05:27:12.000Z | 2022-03-31T14:16:19.000Z | docs/tables/aws_glacier_vault.md | blinkops/blink-aws-query | 21b0d5eee6b0b5554c040867b9e25681e7ba6559 | [

"Apache-2.0"

] | 30 | 2021-01-21T18:43:25.000Z | 2022-03-12T15:14:05.000Z | # Table: aws_glacier_vault

A vault is a way to group archives together in Amazon S3 Glacier.

## Examples

### Basic info

```sql

select
  vault_name,
  creation_date,
  last_inventory_date,
  number_of_archives,
  size_in_bytes
from
  aws_glacier_vault;

```

### List vaults that grant full access to the resource

```sql

select
  title,
  p as principal,
  a as action,
  s ->> 'Effect' as effect,
  s -> 'Condition' as conditions
from
  aws_glacier_vault,
  jsonb_array_elements(policy_std -> 'Statement') as s,
  jsonb_array_elements_text(s -> 'Principal' -> 'AWS') as p,
  jsonb_array_elements_text(s -> 'Action') as a
where
  s ->> 'Effect' = 'Allow'
  and a in ('*', 'glacier:*');

```

### List vaults that grant anonymous access to the resource

```sql

select
  title,
  p as principal,
  a as action,
  s ->> 'Effect' as effect,
  s -> 'Condition' as conditions
from
  aws_glacier_vault,
  jsonb_array_elements(policy_std -> 'Statement') as s,
  jsonb_array_elements_text(s -> 'Principal' -> 'AWS') as p,
  jsonb_array_elements_text(s -> 'Action') as a
where
  p = '*'
  and s ->> 'Effect' = 'Allow';

```
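
The filter applied by the query above can be sketched in plain Python. This is an illustration only: it assumes the policy has the normalized shape the query relies on (a `Statement` list whose `Principal.AWS` and `Action` values are lists), and the function name and sample policy are hypothetical.

```python
def anonymous_allow_statements(policy: dict) -> list:
    """Return statements that allow access to the wildcard principal '*'."""
    matches = []
    for statement in policy.get("Statement", []):
        # Assumes Principal.AWS is already a list, as in a normalized policy.
        principals = statement.get("Principal", {}).get("AWS", [])
        if statement.get("Effect") == "Allow" and "*" in principals:
            matches.append(statement)
    return matches


# Hypothetical policy with one anonymous and one account-scoped statement.
sample_policy = {
    "Statement": [
        {"Effect": "Allow", "Principal": {"AWS": ["*"]}, "Action": ["glacier:listjobs"]},
        {"Effect": "Allow", "Principal": {"AWS": ["arn:aws:iam::123456789012:root"]}, "Action": ["glacier:*"]},
    ]
}
print(len(anonymous_allow_statements(sample_policy)))  # 1
```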

### Get the archival age in days before deletion for each vault

```sql

select
  title,
  a as action,
  s ->> 'Effect' as effect,
  s -> 'Condition' -> 'NumericLessThan' ->> 'glacier:archiveageindays' as archive_age_in_days
from
  aws_glacier_vault,
  jsonb_array_elements(vault_lock_policy_std -> 'Statement') as s,
  jsonb_array_elements_text(s -> 'Action') as a;

```

### List vaults without owner tag key

```sql

select
  vault_name,
  tags
from
  aws_glacier_vault
where
  not tags :: JSONB ? 'owner';

```

| 18.758621 | 93 | 0.683211 | eng_Latn | 0.815457 |

3e6a627f68ec1dc372bde9fd10fd0bb71a7aa71e | 14,362 | md | Markdown | _posts/2020-03-15-software-design-principles-lesson-3.md | matiasbeltramone/matiasbeltramone.github.io | a3576c6002cb7bffb728cc1b8d454c9b6cbcb89d | [

"MIT"

] | null | null | null | _posts/2020-03-15-software-design-principles-lesson-3.md | matiasbeltramone/matiasbeltramone.github.io | a3576c6002cb7bffb728cc1b8d454c9b6cbcb89d | [

"MIT"

] | null | null | null | _posts/2020-03-15-software-design-principles-lesson-3.md | matiasbeltramone/matiasbeltramone.github.io | a3576c6002cb7bffb728cc1b8d454c9b6cbcb89d | [

"MIT"

] | null | null | null | ---

layout: post

title: "OOP Lesson 8: Software Design Principles II"

tags: [POO, OOP, Programación Orientada a Objetos, Object Oriented Programming, Software Design Principles]

---

What is good software design? How can we measure it? What practices do you need to follow to get there? How can you make your architecture flexible, stable, and easy to understand?

These are the big questions; unfortunately, the answers differ depending on the type of application you are building. Still, there are several universal principles of software design that can help you answer these questions for your own project. Most design patterns you will come across are based on these principles.

### Principle 1: :package: Encapsulation

<hr>

Identify the aspects of your application that vary and separate them from what stays the same.

<hr>

**The main goal of this principle is to minimize the effect caused by changes.**

Imagine that our program is a ship, and changes are mines lurking under the water. If we hit one, the ship sinks.

Knowing this, we can divide the ship's hull into independent compartments that can be safely sealed off, limiting the damage to a single compartment.

If we hit a mine now, the ship **as a whole** will probably stay afloat, because the damage stays confined to one part of the ship.

In the same way, we can **isolate the parts** of a **program** that vary in **independent modules**, protecting the rest of the code from adverse effects of the module in question. As a result, you spend less time getting the program working again when one module fails, because you implement and test changes in isolation without fearing that another part will break. The less time you spend making changes, or checking that a change to one feature does not affect the rest of the program, the more time you have to implement new features.

#### Encapsulation: :scroll: At the method level

Say we are building an e-commerce site. Somewhere in our code there is a `getOrderTotal` method that calculates the total price of the order, including taxes, surcharges, shipping, and so on.

We can anticipate that the **tax-related code** **may change in the future**.

The tax rate depends on the country, state, or perhaps even the city where the customer lives, and the actual formula may change over time because of new laws or regulations, something very common in Argentina, for example. As a result, we will need to change the `getOrderTotal` method quite often. Yet the method's name suggests that it does not care about `how` the tax is calculated.

```

method getOrderTotal() is
    total = 0
    foreach item in this.lineItems
        total += item.price * item.quantity

    if (this.country == "US")
        total += total * 0.07 // US sales tax
    else if (this.country == "EU")
        total += total * 0.20 // European VAT

    return total

```

BEFORE: Taxes are calculated alongside the rest of the method's code.

We can extract the tax calculation logic into a separate method, hiding it from the original method.

```

method getOrderTotal() is
    total = 0
    foreach item in this.lineItems
        total += item.price * item.quantity

    total += total * getTaxRate(this.country)

    return total

method getTaxRate(country) is
    if (country == "US")
        return 0.07 // US sales tax
    else if (country == "EU")
        return 0.20 // European VAT
    else
        return 0

```

AFTER: You can get the tax rate simply by calling the designated method.

Tax-related changes are now isolated within a single method.

Moreover, if the tax calculation logic becomes too complicated, it is now easier to move it to a separate class.
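
For readers who prefer runnable code, the extracted-method version can be sketched in Python. This is an illustrative translation of the pseudocode, not part of the original material; the `LineItem` class and the sample rates are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    """Hypothetical order line used only for this sketch."""
    price: float
    quantity: int


def get_tax_rate(country: str) -> float:
    """All tax rules live here, so tax changes touch only this function."""
    if country == "US":
        return 0.07  # US sales tax (sample rate)
    if country == "EU":
        return 0.20  # European VAT (sample rate)
    return 0.0


def get_order_total(line_items: list, country: str) -> float:
    """Sum the line items, then add tax without knowing how it is computed."""
    total = sum(item.price * item.quantity for item in line_items)
    return total + total * get_tax_rate(country)


items = [LineItem(price=10.0, quantity=2), LineItem(price=5.0, quantity=1)]
print(get_order_total(items, "EU"))
```

If a new country is added, only `get_tax_rate` changes; `get_order_total` and its callers stay as they are.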

#### Encapsulation: :file_folder: At the class level

Over time, we may add more and more responsibilities to a method that used to do something simple. These added behaviors often come with their own helper fields and methods, which eventually blur the primary responsibility of the containing class. Extracting everything to a new class can make things much clearer and simpler.

<p align="center">

<img width="30%" src="https://user-images.githubusercontent.com/22304957/72668237-8346f580-3a03-11ea-8264-d063fd9f3621.png"/>

Before: we calculated taxes in the `Order` class.

</p>

Now objects of the `Order` class can delegate all tax-related calculations to a special object dedicated to that task, `TaxCalculator`.

<p align="center">

<img width="50%" src="https://user-images.githubusercontent.com/22304957/72668236-8346f580-3a03-11ea-9fa0-2bc178bee4f7.png"/>

</p>

```

method getOrderTotal() is
    total = 0
    foreach item in this.lineItems
        subtotal = item.price * item.quantity
        // Add the subtotal plus its tax, not the tax alone.
        total += subtotal * (1 + taxCalculator.getTaxRate(country, state, item.product))

    return total

```

AFTER: The tax calculation is hidden from the `Order` class behind the `TaxCalculator` collaborator.
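
The class-level delegation can be sketched in Python as follows. The field names, the sample rates, and the choice to add each subtotal together with its tax are assumptions made for this illustration; the original diagrams only name the `Order` and `TaxCalculator` classes.

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    """Hypothetical order line used only for this sketch."""
    product: str
    price: float
    quantity: int


class TaxCalculator:
    """Collaborator that owns every tax rule (sample rates only)."""

    def get_tax_rate(self, country: str, state: str, product: str) -> float:
        if country == "US":
            return 0.07
        if country == "EU":
            return 0.20
        return 0.0


class Order:
    """Knows its line items; delegates all tax questions to TaxCalculator."""

    def __init__(self, line_items, country, state, tax_calculator):
        self.line_items = line_items
        self.country = country
        self.state = state
        self.tax_calculator = tax_calculator

    def get_order_total(self) -> float:
        total = 0.0
        for item in self.line_items:
            subtotal = item.price * item.quantity
            rate = self.tax_calculator.get_tax_rate(self.country, self.state, item.product)
            total += subtotal * (1 + rate)  # subtotal plus its tax
        return total


order = Order([LineItem("book", 10.0, 2)], "EU", "", TaxCalculator())
print(order.get_order_total())
```

Because the calculator is passed in, a different tax policy can be swapped in without editing `Order`.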

### Principle 2: :clipboard: Program to an Interface, Not an Implementation (a Contract)

<hr>

Program to an interface, not an implementation. Depend on abstractions, not on concrete classes.

<hr>

We can say that a design is flexible enough if you can easily extend it without breaking existing code. Let's check that this holds by looking at another example, this time with cats.

A `Cat` that can eat any kind of food is more flexible than one that can only eat fish. You can feed the first cat fish because it falls under "any food"; but you can also extend that cat's menu with any other food, such as kibble. The second cat, which can only eat fish, cannot have its menu extended.

When you want to make two classes collaborate, you usually start by making one of them dependent on the other. Heck, I often start that way myself. However, there is another, more flexible way to set up collaboration between objects:

1. Determine exactly what one object needs from the other: which methods will it call?

2. Describe these methods in a new **interface**.

3. Make the class that is a dependency implement this new interface so that it fulfills the contract.

4. Now make the second class depend on this interface rather than on the concrete class. You can still make it work with objects of the original class, but the connection through `interfaces` is much more flexible.
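
The four steps above can be sketched in Python with `typing.Protocol` standing in for the interface. The `nutrition` method, the `energy` attribute, and the `Fish` and `Kibble` classes are hypothetical names chosen for this illustration.

```python
from typing import Protocol


class Food(Protocol):
    """Steps 1 and 2: the only thing a cat needs from its food."""

    def nutrition(self) -> int: ...


class Fish:
    """Step 3: a dependency that fulfills the Food contract."""

    def nutrition(self) -> int:
        return 10


class Kibble:
    """A new kind of food added later, without touching Cat."""

    def nutrition(self) -> int:
        return 7


class Cat:
    """Step 4: depends on the Food interface, not on a concrete class."""

    def __init__(self) -> None:
        self.energy = 0

    def eat(self, food: Food) -> None:
        self.energy += food.nutrition()


cat = Cat()
cat.eat(Fish())
cat.eat(Kibble())
print(cat.energy)  # 17
```

`Cat.eat` never mentions `Fish` or `Kibble`, so extending the menu means adding a class rather than editing `Cat`.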

<p align="center">

<img src="https://user-images.githubusercontent.com/22304957/72668510-7081f000-3a06-11ea-99e7-bf7b676e1672.png"/>

</p>

Compare the code **before and after** extracting the **interface**. The code on the **right** is **more flexible** than the code on the left, but it is also **somewhat harder to understand or write**.

After making this change, you probably won't feel any immediate benefit. On the contrary, the code has become more complicated to write and to follow than before. However, if you feel that this could be a good extension point for extra functionality, or that other people who use your code might want to extend it more easily, go down this path with `interfaces`.

#### Example

Let's look at another example that illustrates how working with objects through **interfaces** can be more beneficial than depending on **concrete classes**. Imagine that we are building a simulator of a software development company. We have different classes that represent various types of employees.

<p align="center">

<img src="https://user-images.githubusercontent.com/22304957/72668673-184bed80-3a08-11ea-9a99-e40c5b0e1bb7.png"/>

All the classes are tightly coupled to each other.

</p>

At first, the `Company` class is tightly coupled to the concrete employee classes. However, despite the differences in their implementations, we can generalize the work-related methods and extract them into a common interface for all employee classes.

After doing this, we can apply `polymorphism` inside the `Company` class, treating the various employee objects through the `Employee` interface.

<p align="center">

<img src="https://user-images.githubusercontent.com/22304957/72668751-c22b7a00-3a08-11ea-8f4f-914a2fc95aed.png"/>

Polymorphism helps us simplify the code, but the rest of the `Company` class still depends on the concrete `Employee` classes.

</p>

The `Company` class is still coupled to the employee classes. This is bad because if we introduce new types of companies that work with other types of employees, we will need to override most of the `Company` class instead of reusing the code.

To solve this problem, we can declare the method for getting the employees as `abstract`. Each concrete `Company` class will implement this method differently, creating only the employees it needs.

<p align="center">

<img src="https://user-images.githubusercontent.com/22304957/72668827-880ea800-3a09-11ea-8405-8559b066fadc.png"/>

The primary method of the `Company` class is independent of the concrete employee classes. `Employee` objects are created in the concrete subclasses of `Company`.

</p>

After this change, the `Company` class becomes independent of the various employee classes. Now you can extend this class and introduce new types of companies and employees while still reusing the base portion of the `Company` class. Extending the `Company` class does not break any existing code that already relies on it.
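
The abstract employee-creation method can be sketched in Python as follows. This is an illustration under assumptions, not the original diagram: the subclasses `GameDevCompany` and `OutsourcingCompany`, the employee types, and the method names `get_employees`, `do_work` and `create_software` are all hypothetical.

```python
from abc import ABC, abstractmethod


class Employee(ABC):
    @abstractmethod
    def do_work(self) -> str:
        """Return a description of the work this employee performs."""


class Programmer(Employee):  # hypothetical employee type
    def do_work(self) -> str:
        return "writing code"


class Tester(Employee):  # hypothetical employee type
    def do_work(self) -> str:
        return "testing software"


class Artist(Employee):  # hypothetical employee type
    def do_work(self) -> str:
        return "drawing game art"


class Company(ABC):
    @abstractmethod
    def get_employees(self) -> list:
        """Abstract creation method: subclasses decide which employees exist."""

    def create_software(self) -> list:
        # The base class only relies on the Employee interface.
        return [employee.do_work() for employee in self.get_employees()]


class GameDevCompany(Company):  # hypothetical company type
    def get_employees(self) -> list:
        return [Artist(), Programmer()]


class OutsourcingCompany(Company):  # hypothetical company type
    def get_employees(self) -> list:
        return [Programmer(), Tester()]
```

Adding a new kind of company now means adding one subclass; `create_software` in the base class stays untouched.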

By the way, you have just seen a design pattern applied in action! That was an example of the `Factory Method` pattern.

Don't worry: we will surely discuss it in detail later, in a series on patterns.

#### Principle 3: ✍️ Composition over Inheritance.

Inheritance is probably the most obvious and easiest way to reuse code between classes. Suppose you have two classes with the same code: you create a common base class for the two, move the shared code there, then extend that base class, and you're done. Easy!

Unfortunately, inheritance comes with caveats that often become apparent only after your program already has tons of classes and changing anything becomes quite difficult. Here is a list of those problems:

- A subclass cannot reduce the interface of the superclass. You have to implement all the abstract methods of the parent class even if you won't use them.

- When overriding methods, we need to make sure the new behavior is compatible with that of the base class. This matters because objects of the subclass may be passed to code that expects objects of the superclass, and we don't want that code to break.

- Inheritance breaks the encapsulation of the superclass because the internal details of the parent class become available to the subclass. The opposite situation can also occur, where a programmer makes a superclass aware of some details of its subclasses in order to make further extension easier; neither case is a good approach.

- Subclasses are tightly coupled to superclasses. Any change in a superclass may break the functionality of the subclasses.

- Trying to reuse code through inheritance can lead to creating parallel inheritance hierarchies.

Inheritance usually takes place in a single dimension.

But whenever there are two or more dimensions, you have to create many combinations of classes, bloating the class hierarchy to a ridiculous size.

**There is an alternative to inheritance called composition.**

Whereas inheritance represents the "is a" relationship between classes (a car is a transport, and always will be), composition represents the "has a" relationship (a car has an engine).

I should mention that this principle also applies to aggregation, a more relaxed variant of composition where one object may hold a reference to another but does not manage its lifecycle.

Here is an example: a car has a driver, but he or she may use another car or simply walk without a car.
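
The difference between composition and aggregation can be sketched in Python. This is a minimal illustration, assuming hypothetical attribute and method names (`kind`, `name`, `assign_driver`); only the car, engine and driver roles come from the text.

```python
class Engine:
    def __init__(self, kind: str) -> None:
        self.kind = kind


class Driver:
    def __init__(self, name: str) -> None:
        self.name = name


class Car:
    def __init__(self, engine_kind: str) -> None:
        # Composition: the car creates its own engine and manages its lifecycle.
        self.engine = Engine(engine_kind)
        # Aggregation: the driver exists independently and is only referenced.
        self.driver = None

    def assign_driver(self, driver: Driver) -> None:
        self.driver = driver


driver = Driver("Alex")
first_car = Car("electric")
second_car = Car("combustion")

first_car.assign_driver(driver)
first_car.driver = None           # the driver leaves the first car...
second_car.assign_driver(driver)  # ...and uses another one; the object lives on
```

The `Driver` object outlives its association with any particular `Car`, while each `Engine` is built inside the `Car` that owns it.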

#### Example

Imagine that we need to create a catalog app for a car manufacturer. The company makes both cars and trucks; they can be electric or gas-powered; all models have either manual or automatic controls.

<p align="center">

<img src="https://user-images.githubusercontent.com/22304957/72669268-5a782d80-3a0e-11ea-96f8-dbac3364c855.png"/>

INHERITANCE: extending a class in several dimensions may lead to a combinatorial explosion of subclasses.

</p>

As we can see, each additional parameter multiplies the number of subclasses. There is a lot of duplicated code between subclasses because a subclass cannot extend two classes at the same time, at least not in most current languages.
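
The multiplication can be checked with a few lines of Python. The three dimensions come from the catalog example; the generated class names are purely illustrative.

```python
from itertools import product

vehicle_types = ["Car", "Truck"]
engine_types = ["Electric", "Combustion"]
control_types = ["Manual", "Autopilot"]

# With inheritance alone, every combination needs its own subclass.
subclass_names = [
    f"{engine}{control}{vehicle}"
    for vehicle, engine, control in product(vehicle_types, engine_types, control_types)
]

print(len(subclass_names))  # 2 * 2 * 2 = 8
print(subclass_names[0])    # ElectricManualCar
```

A fourth two-valued dimension would double the count again to 16.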

We can solve this problem with composition. Instead of a `Car` object implementing the behavior itself, it can delegate it to other objects.

The added benefit is that you can replace the behavior at runtime. For example, you can replace the `Engine` object linked to a `Car` object simply by assigning a different `Engine` to the `Car`.
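
A minimal Python sketch of this delegation, under stated assumptions: `Car` and `Engine` come from the text, while `CombustionEngine`, `ElectricEngine`, `move` and `deliver` are hypothetical names used only for illustration.

```python
from abc import ABC, abstractmethod


class Engine(ABC):
    @abstractmethod
    def move(self) -> str:
        """Describe how this engine produces motion."""


class CombustionEngine(Engine):  # hypothetical implementation
    def move(self) -> str:
        return "burning fuel"


class ElectricEngine(Engine):  # hypothetical implementation
    def move(self) -> str:
        return "drawing battery power"


class Car:
    def __init__(self, engine: Engine) -> None:
        self.engine = engine

    def deliver(self) -> str:
        # The car delegates the "how" to whatever engine it currently holds.
        return f"moving by {self.engine.move()}"


car = Car(CombustionEngine())
before = car.deliver()

# Swap the behavior at runtime by assigning a different Engine.
car.engine = ElectricEngine()
after = car.deliver()
```

No new `Car` subclass is needed to change how the car moves; the same object behaves differently after the assignment.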

<p align="center">

<img src="https://user-images.githubusercontent.com/22304957/72669269-5ba95a80-3a0e-11ea-98ef-87a06ce0cffc.png"/>

COMPOSITION: different "dimensions" of functionality extracted into their own class hierarchies.

</p>

This class structure resembles the `Strategy` pattern, where we use different implementations to reach the same result.

| 65.880734 | 611 | 0.78833 | spa_Latn | 0.996955 |

3e6afa08b9fa164f6ee1f00117827561f0c1579e | 2,042 | md | Markdown | CS320/CS320Assignment4-master/README.md | ko-takahashi/college | c333f1e1767f1206687f5e9b0fb3f0145b2d5d6a | [

"MIT"

] | null | null | null | CS320/CS320Assignment4-master/README.md | ko-takahashi/college | c333f1e1767f1206687f5e9b0fb3f0145b2d5d6a | [

"MIT"

] | null | null | null | CS320/CS320Assignment4-master/README.md | ko-takahashi/college | c333f1e1767f1206687f5e9b0fb3f0145b2d5d6a | [

"MIT"

] | null | null | null | Bryan Phan

[email protected]

This project was meant to help familiarize us with Python programming and virtual machines.

The first part of the program (prog4_1.py) is written in Python and implements the Tokenize(str) and Parse(tokens) functions. The Tokenize function takes an input string and tokenizes it according to the same rules as in assignment #2. We do not have to store the tokenized string, but return true. The Parse function takes as input a list of tokens that have previously been processed by the Tokenize function. If there are any mistakes, a ValueError is raised.

The second part of the program (prog4_2.py) is written in Python and implements a StackMachine class. This class has a public Execute(tokens) function and a currentLine property (initial value zero). The Execute(tokens) function accepts a list of tokens that has previously been tokenized and parsed correctly, then performs the operation defined by those tokens. The operations are push #, pop, add, sub, mul, div, mod, skip, save # and get #. Whenever Execute(tokens) finishes executing the operation specified by the tokens, the currentLine property is incremented by 1. If, at any time, the program attempts to pop a value that doesn't exist or get a value that has not previously been saved, it raises an IndexError with the message "Invalid Memory Access".
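
A rough Python sketch of such a class, covering only a subset of the operations (push, pop, add, save, get). The README does not spell out the exact semantics, so several things here are assumptions: tokens are lists of strings such as `["push", "3"]`, `pop` returns the popped value, and `save #` pops the top of the stack into a numbered slot. This is not the assignment's actual solution.

```python
class StackMachine:
    def __init__(self):
        self.stack = []
        self.memory = {}      # assumed: numbered slots used by save/get
        self.currentLine = 0  # initial value zero, as described above

    def _pop(self):
        # Popping from an empty stack is an invalid memory access.
        if not self.stack:
            raise IndexError("Invalid Memory Access")
        return self.stack.pop()

    def Execute(self, tokens):
        op = tokens[0]
        result = None
        if op == "push":
            self.stack.append(int(tokens[1]))
        elif op == "pop":
            result = self._pop()  # assumed: pop hands back the value
        elif op == "add":
            self.stack.append(self._pop() + self._pop())
        elif op == "save":
            # assumed: save pops the top value into the given slot
            self.memory[int(tokens[1])] = self._pop()
        elif op == "get":
            slot = int(tokens[1])
            if slot not in self.memory:
                raise IndexError("Invalid Memory Access")
            self.stack.append(self.memory[slot])
        else:
            raise ValueError(f"Unsupported operation in this sketch: {op}")
        # Incremented only after the operation finished successfully.
        self.currentLine += 1
        return result
```

The remaining operations (sub, mul, div, mod, skip) are left out because their operand order and skip behavior are not specified here.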

The final part of the program (prog4_3.py) is written in Python and implements a driver program. It imports both prog4_1.py and prog4_2.py. The first command-line argument is read as a file, and the program tokenizes and parses every line of that file. The lines are then stored in an indexable structure so that the tokens for any line can be retrieved by line number. It then instantiates a StackMachine and executes each operation one line at a time. When the StackMachine stops running naturally, the program prints "Program terminated correctly."

| 185.636364 | 848 | 0.801175 | eng_Latn | 0.999837 |

3e6b18e37d55e3d76452f0928c7c1072a785d229 | 1,261 | md | Markdown | docs/preprocessor/rename-search-namespace.md | Mdlglobal-atlassian-net/cpp-docs.it-it | c8edd4e9238d24b047d2b59a86e2a540f371bd93 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/preprocessor/rename-search-namespace.md | Mdlglobal-atlassian-net/cpp-docs.it-it | c8edd4e9238d24b047d2b59a86e2a540f371bd93 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/preprocessor/rename-search-namespace.md | Mdlglobal-atlassian-net/cpp-docs.it-it | c8edd4e9238d24b047d2b59a86e2a540f371bd93 | [

"CC-BY-4.0",

"MIT"

] | 1 | 2020-05-28T15:54:57.000Z | 2020-05-28T15:54:57.000Z | ---

title: rename_search_namespace import attribute

ms.date: 08/29/2019

f1_keywords:

- rename_search_namespace

helpviewer_keywords:

- rename_search_namespace attribute

ms.assetid: 47c9d7fd-59dc-4c62-87a1-9011a0040167

ms.openlocfilehash: 42c6edb6aa34b441db8041dd2974728c138b2c82

ms.sourcegitcommit: 6e1c1822e7bcf3d2ef23eb8fac6465f88743facf

ms.translationtype: MT

ms.contentlocale: it-IT

ms.lasthandoff: 09/03/2019

ms.locfileid: "70216630"

---

# <a name="rename_search_namespace-import-attribute"></a>rename_search_namespace import attribute

**C++ Specific**

Has the same functionality as the [rename_namespace](../preprocessor/rename-namespace.md) attribute, but is used on type libraries where you use the `#import` directive together with the [auto_search](../preprocessor/auto-search.md) attribute.

## <a name="syntax"></a>Syntax

> **#import** *type-library* **rename_search_namespace (** "*NewName*" **)**

### <a name="parameters"></a>Parameters

*NewName*\

The new name of the namespace.

## <a name="remarks"></a>Remarks

**END C++ Specific**

## <a name="see-also"></a>See also

[#import attributes](../preprocessor/hash-import-attributes-cpp.md)\

[#import directive](../preprocessor/hash-import-directive-cpp.md)

| 32.333333 | 244 | 0.766852 | ita_Latn | 0.522509 |

3e6bf86f692f891f92b3f4fefdd5a27a3a21535f | 69 | md | Markdown | articles/app-service-logic/app-service-logic-schema-2015-08-01.md | zhenjiao-ms/azure-docs | c0a229227c1651301b5cd978c3d248c2e22fbb66 | [

"CC-BY-4.0",

"MIT"

] | 1 | 2022-03-22T15:03:27.000Z | 2022-03-22T15:03:27.000Z | articles/app-service-logic/app-service-logic-schema-2015-08-01.md | zhenjiao-ms/azure-docs | c0a229227c1651301b5cd978c3d248c2e22fbb66 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | articles/app-service-logic/app-service-logic-schema-2015-08-01.md | zhenjiao-ms/azure-docs | c0a229227c1651301b5cd978c3d248c2e22fbb66 | [

"CC-BY-4.0",

"MIT"

] | 2 | 2017-02-18T05:45:54.000Z | 2019-12-21T21:23:13.000Z | ---

redirect_url: /azure/logic-apps/logic-apps-schema-2015-08-01

---

| 17.25 | 60 | 0.710145 | swe_Latn | 0.131623 |

3e6c462169d2918f7afff2cca0589cea597026ff | 2,443 | md | Markdown | treebanks/de_pud/de_pud-feat-VerbForm.md | mjabrams/docs | eef96df1ce8f6752e9f80660c8255482b2a07c45 | [

"Apache-2.0"

] | null | null | null | treebanks/de_pud/de_pud-feat-VerbForm.md | mjabrams/docs | eef96df1ce8f6752e9f80660c8255482b2a07c45 | [

"Apache-2.0"

] | null | null | null | treebanks/de_pud/de_pud-feat-VerbForm.md | mjabrams/docs | eef96df1ce8f6752e9f80660c8255482b2a07c45 | [

"Apache-2.0"

] | null | null | null | ---

layout: base

title: 'Statistics of VerbForm in UD_German-PUD'

udver: '2'

---

## Treebank Statistics: UD_German-PUD: Features: `VerbForm`

This feature is universal.

It occurs with 2 different values: `Inf`, `Part`.

62 tokens (0%) have a non-empty value of `VerbForm`.

59 types (1%) occur at least once with a non-empty value of `VerbForm`.

1 lemmas (17%) occur at least once with a non-empty value of `VerbForm`.

The feature is used with 2 part-of-speech tags: <tt><a href="de_pud-pos-VERB.html">VERB</a></tt> (58; 0% instances), <tt><a href="de_pud-pos-AUX.html">AUX</a></tt> (4; 0% instances).

### `VERB`

58 <tt><a href="de_pud-pos-VERB.html">VERB</a></tt> tokens (3% of all `VERB` tokens) have a non-empty value of `VerbForm`.

The most frequent other feature values with which `VERB` and `VerbForm` co-occurred: <tt><a href="de_pud-feat-Mood.html">Mood</a></tt><tt>=EMPTY</tt> (58; 100%), <tt><a href="de_pud-feat-Number.html">Number</a></tt><tt>=EMPTY</tt> (58; 100%), <tt><a href="de_pud-feat-Person.html">Person</a></tt><tt>=EMPTY</tt> (58; 100%), <tt><a href="de_pud-feat-Tense.html">Tense</a></tt><tt>=EMPTY</tt> (39; 67%).

`VERB` tokens may have the following values of `VerbForm`:

* `Inf` (40; 69% of non-empty `VerbForm`): <em>abzuschreiben, aufrechtzuerhalten, begrenzen, behandeln, bekommen, beschäftigen, besuchen, bewegen, durchzuführen, einzunehmen</em>

* `Part` (18; 31% of non-empty `VerbForm`): <em>abgesehen, basierend, gefolgt, gesagt, Breaking, Geformt, abgehärtet, angeklagt, begründet, geeignet</em>

* `EMPTY` (1855): <em>sagte, ist, an, hat, haben, gibt, sagt, war, auf, begann</em>

### `AUX`

4 <tt><a href="de_pud-pos-AUX.html">AUX</a></tt> tokens (0% of all `AUX` tokens) have a non-empty value of `VerbForm`.

The most frequent other feature values with which `AUX` and `VerbForm` co-occurred: <tt><a href="de_pud-feat-Mood.html">Mood</a></tt><tt>=EMPTY</tt> (4; 100%), <tt><a href="de_pud-feat-Number.html">Number</a></tt><tt>=EMPTY</tt> (4; 100%), <tt><a href="de_pud-feat-Person.html">Person</a></tt><tt>=EMPTY</tt> (4; 100%), <tt><a href="de_pud-feat-Tense.html">Tense</a></tt><tt>=Past</tt> (3; 75%).

`AUX` tokens may have the following values of `VerbForm`:

* `Inf` (1; 25% of non-empty `VerbForm`): <em>aufzutreten</em>

* `Part` (3; 75% of non-empty `VerbForm`): <em>bekannt, genannt, geschrieben</em>

* `EMPTY` (946): <em>ist, wurde, war, werden, wird, wurden, sind, hatte, waren, hat</em>

| 59.585366 | 401 | 0.671715 | yue_Hant | 0.297752 |

3e6c82b7e9541f5d42d725bcfc765041f463eabb | 2,666 | md | Markdown | _posts/popculture/2018-05-17-popculture-oceans8.md | psmak3/psmak3.github.io | 17f0c3ef414e840d1008ee073056dd8ecda30a5c | [

"MIT"

] | 1 | 2020-08-06T22:31:34.000Z | 2020-08-06T22:31:34.000Z | _posts/popculture/2018-05-17-popculture-oceans8.md | psmak3/psmak3.github.io | 17f0c3ef414e840d1008ee073056dd8ecda30a5c | [

"MIT"

] | null | null | null | _posts/popculture/2018-05-17-popculture-oceans8.md | psmak3/psmak3.github.io | 17f0c3ef414e840d1008ee073056dd8ecda30a5c | [

"MIT"

] | 1 | 2016-05-28T01:59:29.000Z | 2016-05-28T01:59:29.000Z | ---

layout: post

title: "Lady Parts"

excerpt: "Pop Culture"

categories: popculture

comments: false

share: true

---

Over the past few years, the social left has been demanding more good parts for women in Hollywood. From the Bechdel test to just a bunch of SJWs making a lot of noise, there is a feeling from some that there need to be not only more females in movies, but parts that do not restrict them to damsels in distress or background eye candy.

Sure, I'll go along with this. I'm an open-minded man of the 21st century, I'm willing to agree with this idea. Also my mom reads this, so I really have no choice but to say I agree.

So what has been the recent result of this push? Well, we have a female Luke Skywalker, a remake of Ghostbusters with women, and now this: an Ocean's 11 re-remake but with 8 women.

Is this what people had in mind for more women in movies? Just to rehash dusty scripts of days past and cast it anew with females? Seems very lame.

In a business that prides itself on progressiveness and creativity, there seems to be a lot of those things missing. I mean c'mon now....Ocean's 8? That is so lazy and inane that one cannot defend this idea at all.

And it is not that there is not reasonable talent in the cast. Kristen Wiig (UA grad by the way) is very good, Sandra Bullock has moments, and Cate Blanchett is maybe the best female actress going today. So why is the outlet for this female talent engulfed in a 'sloppy seconds' (or thirds in this case) script?

Now we can blame Hollywood for their inane choices for remakes, but we also should look at the women that are cooperating in this. There have been good and very funny movies as of late with primarily female casts: Spy, The Heat, and Bridesmaids (which was sort of like a remake of Hangover, but I digress). There are also very strong female parts in many good big films: Cate Blanchett in Thor 3, Gal Gadot in Wonder Woman, and of course the creme de la creme of strong female parts, Charlize Theron as Furiosa in Mad Max. These women in this new bullpoo Ocean's 8 should not agree to do that tripe.

It is not like there is any proof that females cannot carry a movie or are weak on screen. Quite the opposite. So why mire this talent in some rehashed garbage? And why does the talent let this happen?

Anyway, I guarantee I will not see this movie. I loved the Clooney Ocean's 11; it was hip, fresh, ultra-stylized, and just fun. Then the franchise got stale. I'd bet my mortgage that simply infusing the cast with women will not freshen this all up.

| 62 | 599 | 0.768192 | eng_Latn | 0.999781 |

3e6c96dfd46b935dc0e932f41c83ae410c024813 | 10,209 | md | Markdown | _posts/2017-06-07-Du-fsharp-a-newcraft-2017.md | evilz/evilz.github.io | 2c2acae18422d2b91507b2e9dd84f26574c05964 | [

"MIT"

] | null | null | null | _posts/2017-06-07-Du-fsharp-a-newcraft-2017.md | evilz/evilz.github.io | 2c2acae18422d2b91507b2e9dd84f26574c05964 | [

"MIT"

] | null | null | null | _posts/2017-06-07-Du-fsharp-a-newcraft-2017.md | evilz/evilz.github.io | 2c2acae18422d2b91507b2e9dd84f26574c05964 | [

"MIT"

] | null | null | null | ---

layout: post

title: F# at NewCrafts 2017

date: 2017-06-07

author: evilz

comments: true

tags: [dotnet, Informatique]

image: https://farm5.staticflickr.com/4599/38677096174_8c651790b7_z.jpg

---

I was lucky enough to attend both days of [this year's NewCrafts conference](http://ncrafts.io).

Over the two days there were many very interesting talks,

but here I will focus on the sessions covering F# that I was able to attend.

## Scott Wlaschin - Thirteen ways of looking at a Turtle

**@ScottWlaschin**

Everyone asks the same question when reading the session title: "A turtle? What...?"

In fact, Scott uses the image of this turtle to present a very simple API for drawing lines.

The "turtle" can move forward, turn by a given angle, and draw or not draw a line as it moves.

This four-method API may seem too simple to be really interesting, but we get to see it implemented using 13 different approaches.

These range from the classic object-oriented model to the actor model and event sourcing.

I strongly recommend taking a look, because even though the code is presented in F#, it remains applicable to other platforms and languages.

All the content is available online on [his famous site](https://fsharpforfunandprofit.com/turtle/)

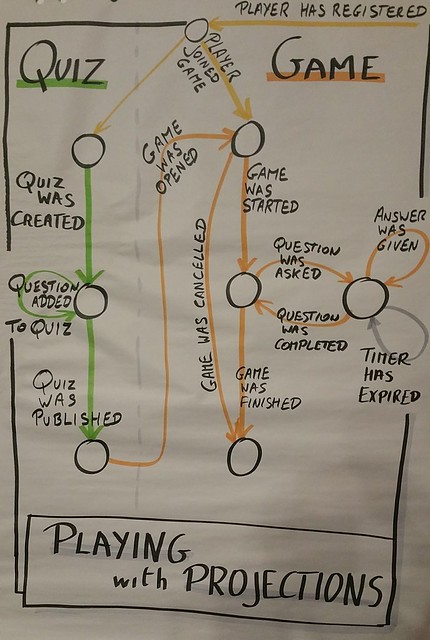

## Michel Grootjans et Thomas Coopman : Playing with projections

**@michelgrootjans @tcoopman**

I took part in a workshop where we played with projections.

When doing event sourcing, you often need to re-read and replay events in a certain way. Whether the goal is to return to a particular state or to run analyses, you often need to transform batches of events.

The goal of this workshop was therefore to work on these transformations, which are called projections.

The business domain is relatively simple but already has many event types.

I admit I did not do the exercise directly in F# but in C#, using quite a bit of LINQ, so it is easily portable to F#.

The exercise is also available in many languages.

I invite you to take a look [here](https://github.com/michelgrootjans/playing_with_projections)

---

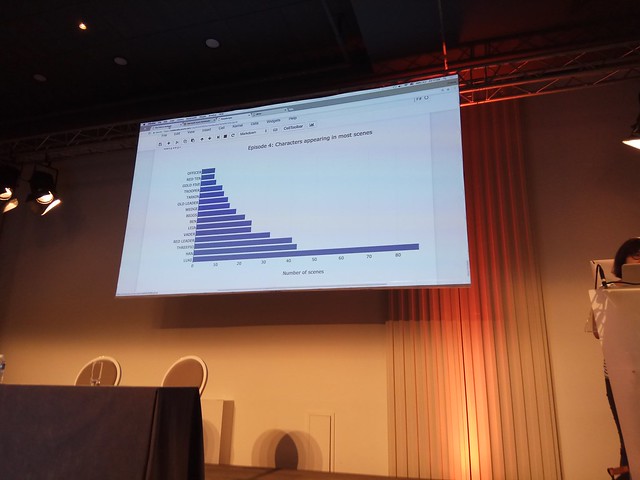

## Evelina Gabasova - The F#orce Awakens

**@evelgab**

<amp-youtube data-videoid="R10rPhpLvr8" layout="responsive" width="480" height="270"></amp-youtube>

After an introduction worthy of the Star Wars saga, Evelina showed us how she carried out an analysis of the characters using the scripts of the seven films.

1) The first step was to parse the various film scripts to extract the characters' names. She then used the scenes to infer which characters were connected.

This was not easy, since each script has a different format and some characters do not even have dialogue.

For this first step, regexes and active patterns were used.

```fsharp
open System.Text.RegularExpressions

// Active pattern to parse the contents of the script
let (|SceneTitle|Name|Word|) (text:string) =
    // A scene title contains an INT. or EXT. marker
    let scenePattern = "[ 0-9]*(INT.|EXT.)[ A-Z0-9]"
    // A character name is a line made only of upper-case letters, digits, '/', '-' and spaces
    let namePattern = "^[/A-Z0-9]+[-]*[/A-Z0-9 ]*[-]*[/A-Z0-9 ]+$"
    if Regex.Match(text, scenePattern).Success then
        SceneTitle text
    elif Regex.Match(text, namePattern).Success then
        Name text
    else Word
```

2) Second step: analyzing the data.

Evelina showed us her various analysis [Azure notebooks](https://notebooks.azure.com/).

These notebooks, strongly inspired by those long available in Python, let you mix text and executable code.

It is even possible to use the Fsharp.Data library to generate charts of varying complexity.

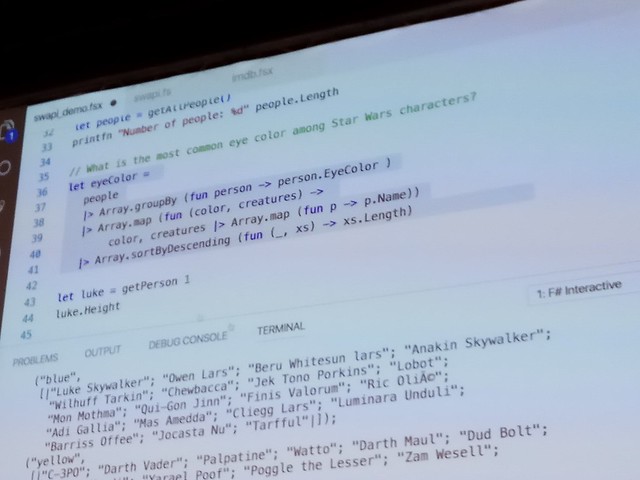

3) Third step: using several public APIs that provide additional information about Star Wars.

This is mainly the [Swapi](http://swapi.co/API) API.

It provides details on many elements of the saga and even has clients for several languages.

Evelina created the F# client.

To do so, she used a very powerful F# feature: type providers.

All it took was a reference to the JsonProvider package and two constants containing sample JSON: one with the minimum fields and the other complete. The provider then takes care of dynamically creating a model.

She rounded off her demo by cross-referencing data retrieved from IMDB using the same technique but a different provider: 'HtmlProvider', which in this example can retrieve a table of data.

For all the details, head over to [her blog](http://evelinag.com/blog/2016/01-25-social-network-force-awakens/)

What should we take away from all this?

Several points are worth keeping in mind:

- F# lets you prototype data analysis very quickly and with little code.

Type providers and notebooks are also a great help.

- Start by analyzing a well-known data set before launching into large-scale analyses.

Although the Star Wars domain may seem geeky, it is above all a domain many people know, which makes it easy to spot inconsistent results quickly.

---

## Gien Verschatse - Playing nice together: how to use F# in a brownfield project

**@selketjah**

I was eagerly awaiting this session. Gien, a Belgian developer working on online casino games, shared her experience integrating F# development into an existing C# codebase.

She reviewed the various features that can or cannot be used from a C# or even VB.NET program.

I redid a few tests myself after this talk, and [my code is available here](https://github.com/evilz/Fsharp-from-csharp)

What I mostly take away from this experience report is Gien's courage in embarking on the F# adventure, given how difficult (not to say impossible) it is to find F# assignments. So it is up to us to introduce this technology gradually where it seems appropriate.

---

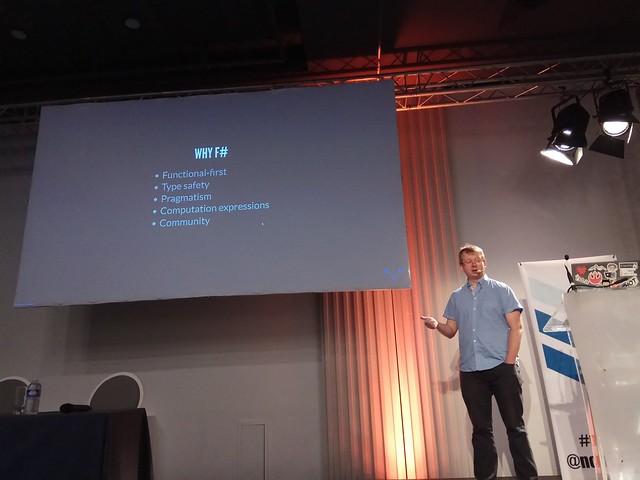

## Krzysztof Cieslak - Hacking F# in JS ecosystem

**@k_cieslak**

In this session, Krzysztof presented the latest trendy framework in the F# community, `Fable`.

Fable is an F#-to-JavaScript compiler that builds on Babel.

Fable takes your F# code, extracts an AST from it and hands it to Babel, which does the rest of the work to produce JS code for the browser or Node.js.

One might then ask: but why?

The simple answer would be "because we can!" or "why not!"

But there are real advantages to using F# as the main language:

- A functional approach

- Everything is strongly typed: record types and union types

- All the advanced features: pattern matching, computation expressions

- Code reuse on the server side

- Pragmatic

- Community

He then demonstrated the tool through several demos in the browser with very simple JS, as well as a React demo.

Note also that Fable can generate source maps, so it is possible to debug F# code from the Chrome DevTools window!

The latest version of Fable can be used directly from the dotnet CLI, which makes it quick to initialize a project or start a server:

dotnet fable start

[More information in this blog post](http://fable.io/blog/Introducing-1-0-beta.html)

Also, if like me you wonder how to work with code from an external library and how to get all the types, much as TypeScript does, the community's developers have thought of everything and created this tool: [ts2fable](https://www.npmjs.com/package/ts2fable), which converts a TypeScript type definition to F#.

You will find more information and demos on [the official site](http://fable.io/)

---

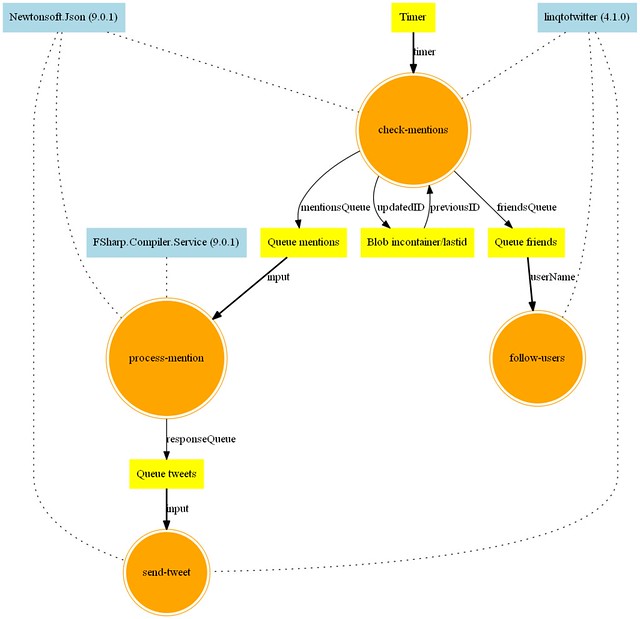

## Mathias Brandewinder - Serverless F# with Azure Functions: fsibot goes nano-services

**@brandewinder**

Professor Calculus very quickly showed us how to use Azure Functions to set up a serverless (or almost) system in F#.

What I mainly appreciate about Azure Functions is the online interface, which is simple and pleasant to use. It is really easy to create a new function and define the trigger that will fire it.

Quite a few triggers are already available, and we saw a timer-based trigger and another based on queue messages.

<p> </p>

Mathias's FsiBot uses both triggers. At regular intervals the application looks at tweets containing F# from a channel, compiles them and runs them.

[The code is available here](https://github.com/mathias-brandewinder/fsibot-serverless)

[And a short explanatory post here](http://brandewinder.com/2017/04/01/azure-function-app-diagram/)

---

## To conclude

Two days that turned out to be quite rich for the F# community. And even though 45-minute talks do not allow going into detail, they have the advantage of introducing developers to the technology and the language's possibilities.

So I invite you to take a close look at this .NET language through the various links provided, as well as http://fsharp.org and [awesome-fsharp](https://github.com/fsprojects/awesome-fsharp). | 54.015873 | 346 | 0.775198 | fra_Latn | 0.985374 |

3e6d0c1ecd30d68960e5fec415ffd4902dc23ca1 | 487 | md | Markdown | README.md | AstroWa3l/Smart-Contracts | 22dc4865fa1d622adf6b6ec1ca6bfe191bbcc110 | [

"Apache-2.0"

] | null | null | null | README.md | AstroWa3l/Smart-Contracts | 22dc4865fa1d622adf6b6ec1ca6bfe191bbcc110 | [

"Apache-2.0"

] | null | null | null | README.md | AstroWa3l/Smart-Contracts | 22dc4865fa1d622adf6b6ec1ca6bfe191bbcc110 | [

"Apache-2.0"

] | null | null | null | # Smart-Contracts

##### I will be uploading various smart contracts to be used in future DApp projects.

##### To run the various Haskell smart contracts, go to IOHK's Marlowe Playground here: https://alpha.marlowe.iohkdev.io/#/ Once you have arrived at the main page, choose "New Project", then choose the "Marlowe" editor. You can then clear the contents of the Marlowe editor, paste in one of the smart contracts from this repository, and start the simulation to see if it all worked :)

| 81.166667 | 379 | 0.76386 | eng_Latn | 0.998393 |

3e6d63c2678c80008b83130ad1a160bf0738b658 | 674 | md | Markdown | README.md | officialraze/webproject_new | 617976a32e7d69d42e1b956525b44d636b8b88bf | [

"PHP-3.01",

"PHP-3.0"

] | null | null | null | README.md | officialraze/webproject_new | 617976a32e7d69d42e1b956525b44d636b8b88bf | [

"PHP-3.01",

"PHP-3.0"

] | 1 | 2019-02-11T15:41:15.000Z | 2019-02-11T15:41:15.000Z | README.md | officialraze/webproject_new | 617976a32e7d69d42e1b956525b44d636b8b88bf | [

"PHP-3.01",

"PHP-3.0"

] | null | null | null | # Web Project

Welcome to my web project, which was started in 2018 and extended over the course of computer science classes.

## What can you do on the website?

You can browse various artists, each of whom has a detail page with a slider showing various pictures of the artist. You can also view their upcoming tour dates.

Using the band login, individual artists can edit their description and tour dates. Various data about the artist is also displayed there.

The web project was built with HTML, CSS, PHP and MySQL.

© by Melvin Lauber

| 51.846154 | 210 | 0.818991 | deu_Latn | 0.999877 |

3e6e0a735b0b41818f6d15446b3092775b1b7029 | 805 | md | Markdown | .github/PULL_REQUEST_TEMPLATE.md | nybles/beta-website | ac65a4e7cf91970771b4ed9da7b94153357aa4b9 | [

"MIT"

] | 2 | 2019-05-04T19:44:18.000Z | 2019-07-11T16:31:26.000Z | .github/PULL_REQUEST_TEMPLATE.md | nybles/beta-website | ac65a4e7cf91970771b4ed9da7b94153357aa4b9 | [

"MIT"

] | 8 | 2017-03-08T19:08:25.000Z | 2017-03-12T21:29:51.000Z | .github/PULL_REQUEST_TEMPLATE.md | nybles/beta-website | ac65a4e7cf91970771b4ed9da7b94153357aa4b9 | [

"MIT"

] | 6 | 2017-03-11T12:50:30.000Z | 2017-03-12T16:28:47.000Z | Thanks for making this contribution. :blush:

People like you maintain the spirit of community work. :clap:

However, to make sure that things don't break, please make the following checks.

* Read the [contribution guidelines](https://github.com/nybles/nybles.github.io/blob/master/CONTRIBUTING.md).

* Any UI changes should follow Material design principles. [reference](http://fezvrasta.github.io/bootstrap-material-design/)

* Make sure the site is working fine after your commits. [Running Jekyll locally](https://jekyllrb.com/docs/quickstart/)

**Remove the lines above after reading them**

Link of your fork to view changes: *Enter github.io link here*

Any [issue](https://github.com/nybles/nybles.github.io/issues) you want to refer to?

*# issue number *

Briefly describe what changes you made:

*

*

*

| 38.333333 | 125 | 0.76646 | eng_Latn | 0.962749 |

3e6ed3564323fec6c49bc7b11da0087d55476cf5 | 33 | md | Markdown | README.md | khos2ow/cluster-api-provider-cloudstack | 7cf1d89f63f9371de1e73835706e0f9667ac4607 | [

"Apache-2.0"

] | null | null | null | README.md | khos2ow/cluster-api-provider-cloudstack | 7cf1d89f63f9371de1e73835706e0f9667ac4607 | [

"Apache-2.0"

] | null | null | null | README.md | khos2ow/cluster-api-provider-cloudstack | 7cf1d89f63f9371de1e73835706e0f9667ac4607 | [

"Apache-2.0"

] | null | null | null | # cluster-api-provider-cloudstack | 33 | 33 | 0.848485 | nld_Latn | 0.303017 |

3e6f74c79b6467bc94525c93157268be99e4abe7 | 601 | md | Markdown | docs/README.md | AndonMitev/EWallet | 898cde38933d6f134734528b3e594eedf5fa50f3 | [

"Apache-2.0"

] | 322 | 2018-02-28T07:38:44.000Z | 2020-05-27T23:09:55.000Z | docs/README.md | AndonMitev/EWallet | 898cde38933d6f134734528b3e594eedf5fa50f3 | [

"Apache-2.0"

] | 643 | 2018-02-28T12:05:20.000Z | 2020-05-22T08:34:38.000Z | docs/README.md | AndonMitev/EWallet | 898cde38933d6f134734528b3e594eedf5fa50f3 | [

"Apache-2.0"

] | 63 | 2018-02-28T10:57:06.000Z | 2020-05-27T23:10:38.000Z | # Documentation

Below are the main areas of the documentation. Navigate through the links below to see more details.

- **[Design](design/):** Design and philosophy behind the eWallet Server.

- **[Guides](guides/):** Using the eWallet Server to its full potential.

- **[Setup](setup/):** Details on the different eWallet Server setup approaches.

- **[Running the tests](tests/):** Running the tests bundled with the eWallet Server.

- **[Demo](demo.md):** Sample setup that demonstrates how the eWallet Server can be used.

- **[FAQ](faq.md):** Top frequently asked questions about the eWallet Server.

| 54.636364 | 100 | 0.733777 | eng_Latn | 0.984863 |

3e6fdf377bf19bfbba845a2b451a57db7639535a | 205 | md | Markdown | development/coder/http/index.md | biangbiang/something | b98e1c4b53a7b2c2260f40307596ef590a4f5776 | [

"MIT"

] | 1 | 2017-08-23T19:48:02.000Z | 2017-08-23T19:48:02.000Z | development/coder/http/index.md | biangbiang/something | b98e1c4b53a7b2c2260f40307596ef590a4f5776 | [

"MIT"

] | null | null | null | development/coder/http/index.md | biangbiang/something | b98e1c4b53a7b2c2260f40307596ef590a4f5776 | [

"MIT"

] | 3 | 2017-02-17T06:41:34.000Z | 2021-08-28T03:00:42.000Z | HTTP protocol topics

============

### [Discussing the security of "get" and "post"](discussion-of-get-and-post-security)

---

### [Four common ways to submit POST data](four-post-data-way)

---

### [The X-Frame-Options response header](x-frame-options-response-headers)

---

| 13.666667 | 60 | 0.609756 | yue_Hant | 0.3403 |

3e702a1519da4dbe9b27a7c4adaeddb8d13aade7 | 388 | md | Markdown | docs/Boundary.md | Syncsort/PreciselyAPIsSDK-Android | 752d575414ca06f04c1499c86a5cc569c5043437 | [

"Apache-2.0"

] | null | null | null | docs/Boundary.md | Syncsort/PreciselyAPIsSDK-Android | 752d575414ca06f04c1499c86a5cc569c5043437 | [

"Apache-2.0"

] | null | null | null | docs/Boundary.md | Syncsort/PreciselyAPIsSDK-Android | 752d575414ca06f04c1499c86a5cc569c5043437 | [

"Apache-2.0"

] | null | null | null |

# Boundary

## Properties

Name | Type | Description | Notes

------------ | ------------- | ------------- | -------------

**boundaryId** | **String** | | [optional]

**boundaryType** | **String** | | [optional]

**boundaryRef** | **String** | | [optional]

**geometry** | [**DemographicsGeometry**](DemographicsGeometry.md) | | [optional]

**url** | **String** | | [optional]

| 21.555556 | 83 | 0.494845 | kor_Hang | 0.266832 |

3e704d73a7eab1dfe984570079441c7f6d8fc047 | 712 | md | Markdown | README.md | assimoes/angular-directives | bd8e78416abaa02c39170848a7419c3e7184ed4f | [

"MIT"

] | null | null | null | README.md | assimoes/angular-directives | bd8e78416abaa02c39170848a7419c3e7184ed4f | [

"MIT"

] | null | null | null | README.md | assimoes/angular-directives | bd8e78416abaa02c39170848a7419c3e7184ed4f | [

"MIT"

] | null | null | null | # angular-directives

## Example

Add the `asv-directives` module as a dependency to your main module definition

`index.html`

```

(function () {

'use strict';

var app = angular.module('app', ['asv-directives']);

})();

```

Add a reference to the `asv-directives.js` script file below your angular app js file reference.

```

<html>

<head>

...

<script src="path/to/yourAngularApp.js" type="text/javascript"></script>

<script src="path/to/asv-directives.js" type="text/javascript"></script>

...

</head>

<body>...</body>

</html>

```

Use it.

```

<file-uploader accepts="*.*" post-url="/controller/UploadAction" file-icon="file.ico" col-size="12" max-size="10000000"></file-uploader>

```

| 18.736842 | 136 | 0.650281 | eng_Latn | 0.381756 |

3e71270bc73945897aa2b2cd4caa8f86aed40f08 | 2,288 | md | Markdown | _posts/2015-12-31-latex.md | fuzzbin/devnull | 58a7e04f265caf425d6fc6af7d1e863bb7beb55a | [

"MIT"

] | null | null | null | _posts/2015-12-31-latex.md | fuzzbin/devnull | 58a7e04f265caf425d6fc6af7d1e863bb7beb55a | [

"MIT"

] | null | null | null | _posts/2015-12-31-latex.md | fuzzbin/devnull | 58a7e04f265caf425d6fc6af7d1e863bb7beb55a | [

"MIT"

] | null | null | null | ---

layout: post

title: LaTeX

date: 2015-12-31 00:00:00

author: Tom Jarle

---

[\\(LaTeX\\)](https://www.latex-project.org/) is a system for typesetting documents. The output is of high quality and is very well suited to producing technical and scientific documents. Writing \\(LaTeX\\) documents works a little differently from using an ordinary word processor. The writing process is closer to programming, and the \\(LaTeX\\) code actually has to be compiled before the document can be displayed. That may sound cumbersome, and it used to be. Fortunately, several good tools have appeared in recent years that let you create attractive \\(LaTeX\\) documents directly in the browser without installing any other software. One such tool is [overleaf](https://www.overleaf.com).

Why use \\(LaTeX\\)? First and foremost because the typesetting is wonderfully good. Modern word processors do offer equation tools that give very good results, but \\(LaTeX\\) is one notch better still. Another reason, for my own part, is that it is actually a very efficient way to write. The focus is on content, and \\(LaTeX\\) takes care of the formatting. That is pleasant. On top of that, it is actually quite fun!

Where in a text document you might write the Pythagorean theorem as a^2 + b^2 = c^2, or insert the expression with an equation tool, you can very easily turn it into \\(a^2+b^2=c^2\\) by using \\(LaTeX\\). You can even put larger expressions such as \\(x_{1,2}=\frac{-b\pm\sqrt{b^2-4ac}}{2a}\\) directly in the text without ruining the document's formatting.

The expression below is written with slightly different code, which puts it on a line of its own.

$$x_{1,2}=\frac{-b\pm\sqrt{b^2-4ac}}{2a}$$

This can be useful if you need to show derivations that run over several lines.
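As a rough sketch of such a multi-line derivation: the `align*` environment used here comes from the `amsmath` package, which is an assumption on my part, since the post does not say which packages or renderer it relies on. The `&` marks the alignment point and `\\` ends each line.

```latex
\begin{align*}
(a+b)^2 &= (a+b)(a+b) \\
        &= a^2 + ab + ba + b^2 \\
        &= a^2 + 2ab + b^2
\end{align*}
```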

Another nice feature of [overleaf](https://www.overleaf.com) is its support for collaborative writing. You can easily share documents and write them together. In addition, many templates are available, which makes it easier to get started. Although many people associate \\(LaTeX\\) with scientific documents, the tool supports a long list of other uses. One example is [lilypond](http://www.lilypond.org/text-input.html), a system for writing sheet music with the \\(LaTeX\\) framework. | 120.421053 | 695 | 0.76049 | nob_Latn | 0.996591 |

3e7142e8abf0dfe9404136f23bbc7c352bbd6f8b | 2,109 | md | Markdown | content/posts/2020-02-13--RSA-Algorithm-With-Example.md | GeminiWind/personal-blog | f58e1686edadf0a53995829ed178775ad547b475 | [

"MIT"

] | null | null | null | content/posts/2020-02-13--RSA-Algorithm-With-Example.md | GeminiWind/personal-blog | f58e1686edadf0a53995829ed178775ad547b475 | [

"MIT"

] | 11 | 2021-03-01T20:47:18.000Z | 2022-02-26T17:39:54.000Z | content/posts/2020-02-13--RSA-Algorithm-With-Example.md | GeminiWind/personal-blog | f58e1686edadf0a53995829ed178775ad547b475 | [

"MIT"

] | null | null | null | ---

title: "Encryption: RSA Algorithm with example"

date: "2020-02-13T22:40:32.169Z"

template: "post"

draft: false

slug: "/explain-rsa-algorithm-with-example"

category: "AWS"

tags:

- "RSA"

- "Encryption"

description: "Explain RSA Algorithm in the shortest and easiest way along with example"

---

I bet all of us studied how RSA works at university. At the time, however, we did not truly understand the importance of cryptography or of the RSA algorithm. In this article we'll look back at RSA: what is it, how does it work, and where is it applied?

## Brief introduction

- RSA (Rivest–Shamir–Adleman) is one of the first public-key cryptosystems and is widely used for secure data transmission.

- The acronym RSA is made of the initial letters of the surnames of Ron Rivest, Adi Shamir, and Leonard Adleman, who first publicly described the algorithm in 1977.

- RSA is one of the public-key algorithms used in Transport Layer Security (TLS), the protocol behind HTTPS.

## Operation

1. Choose two different large random prime numbers *__p__* and *__q__*

2. Calculate *__n = p * q__*

- *__n__* is the modulus for both the public and the private key

3. Calculate the totient: *__Φ(n)=(p-1)(q-1)__*

4. Choose an integer *__e__* such that 1 < e < Φ(n) and e is co-prime to Φ(n)

- e is released as the public key exponent

5. Compute d using the following formula: *__d = e^(-1) mod Φ(n)__*, that is, d is the modular inverse of e, so that d * e mod Φ(n) = 1

- d is kept as the private key exponent
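The five steps above can be sketched in a few lines of Python. This is only an illustration: the function name `make_keypair` is made up for this sketch, it does not check that p and q are actually prime, and it relies on the three-argument `pow` with a negative exponent, which needs Python 3.8 or later.

```python
from math import gcd


def make_keypair(p, q, e):
    """Return ((n, e), (n, d)) for primes p, q and public exponent e."""
    n = p * q                  # step 2: modulus shared by both keys
    phi = (p - 1) * (q - 1)    # step 3: totient of n
    # step 4: e must lie strictly between 1 and phi and share no factor with phi
    if not (1 < e < phi) or gcd(e, phi) != 1:
        raise ValueError("e must satisfy 1 < e < phi and gcd(e, phi) == 1")
    d = pow(e, -1, phi)        # step 5: modular inverse of e modulo phi
    return (n, e), (n, d)
```

Real keys use primes that are hundreds of digits long and are generated by a vetted cryptography library, not by hand-written code like this.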

## Encrypting message

Alice gives her public key (n and e) to Bob and keeps her private key secret. Bob wants to send a message m to Alice.

The ciphertext c is computed as follows:

_**c = m^e mod n**_

## Decrypting message

Alice can recover m from c by using her private key d in the following procedure:

_**m = c^d mod n**_

## Example

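1. Choose two distinct primes, p = 61 and q = 53 (kept small here for illustration)

2. n = p * q = 61 * 53 = 3233

3. Φ(n) = (p-1)(q-1) = 60 * 52 = 3120

4. Find e such that 1 < e < 3120 and e is co-prime to 3120 => e = 17

5. Find d such that d * e mod 3120 = 1 => d = 2753 (check: 17 * 2753 = 46801 = 15 * 3120 + 1)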

- Encryption (m = 88): c = m^17 mod 3233 = 88^17 mod 3233 = 1345

- Decryption: m = 1345^2753 mod 3233 = 88
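The arithmetic in this example is easy to check by machine. The snippet below is a minimal sketch that uses the key from the example (n = 3233, e = 17, d = 2753) and Python's built-in three-argument `pow`, which performs modular exponentiation without ever building the huge intermediate power.

```python
n, e, d = 3233, 17, 2753    # key values taken from the example
m = 88                      # plaintext message

c = pow(m, e, n)            # encryption: c = m^e mod n
recovered = pow(c, d, n)    # decryption: m = c^d mod n

assert recovered == m       # decrypting the ciphertext gives back the message
print("ciphertext:", c, "recovered:", recovered)
```

Running it is a quick way to confirm the ciphertext and the round trip for any other message smaller than n.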

| 35.745763 | 272 | 0.702703 | eng_Latn | 0.996744 |

3e71746ee1a772cfac04ac7913dd5d41116e91b6 | 26 | md | Markdown | README.md | aramisic/html-css-js | 697f19f56fc7a1f814be6984ef4ceca808bc2e42 | [

"MIT"

] | null | null | null | README.md | aramisic/html-css-js | 697f19f56fc7a1f814be6984ef4ceca808bc2e42 | [

"MIT"

] | null | null | null | README.md | aramisic/html-css-js | 697f19f56fc7a1f814be6984ef4ceca808bc2e42 | [

"MIT"

] | null | null | null | # html-css-js

Single Page

| 8.666667 | 13 | 0.730769 | kor_Hang | 0.937387 |

3e71ad6de98bad7f2046e7d9d9c87ad7cb7204f3 | 1,136 | md | Markdown | README.md | Ohems/watodo | d6e173468b33c3576696dfdd1416ea7d1c99e02a | [

"MIT"

] | null | null | null | README.md | Ohems/watodo | d6e173468b33c3576696dfdd1416ea7d1c99e02a | [

"MIT"

] | null | null | null | README.md | Ohems/watodo | d6e173468b33c3576696dfdd1416ea7d1c99e02a | [

"MIT"

] | null | null | null | #  [WIP]

Community event and responsibility management tool. Work in progress and currently unusable.

## Getting Started