hexsha

stringlengths 40

40

| size

int64 5

1.04M

| ext

stringclasses 6

values | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 3

344

| max_stars_repo_name

stringlengths 5

125

| max_stars_repo_head_hexsha

stringlengths 40

78

| max_stars_repo_licenses

sequencelengths 1

11

| max_stars_count

int64 1

368k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 3

344

| max_issues_repo_name

stringlengths 5

125

| max_issues_repo_head_hexsha

stringlengths 40

78

| max_issues_repo_licenses

sequencelengths 1

11

| max_issues_count

int64 1

116k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 3

344

| max_forks_repo_name

stringlengths 5

125

| max_forks_repo_head_hexsha

stringlengths 40

78

| max_forks_repo_licenses

sequencelengths 1

11

| max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | content

stringlengths 5

1.04M

| avg_line_length

float64 1.14

851k

| max_line_length

int64 1

1.03M

| alphanum_fraction

float64 0

1

| lid

stringclasses 191

values | lid_prob

float64 0.01

1

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

3ee11897484b8dbc49715217b2c7a18d2b321635 | 2,237 | md | Markdown | _posts/blog/2019-05-10-music.md | derektopper/derektopper.github.io | bc106798a2722ca0e4e0e8cbea4f75dba484905e | [

"Apache-2.0"

] | null | null | null | _posts/blog/2019-05-10-music.md | derektopper/derektopper.github.io | bc106798a2722ca0e4e0e8cbea4f75dba484905e | [

"Apache-2.0"

] | null | null | null | _posts/blog/2019-05-10-music.md | derektopper/derektopper.github.io | bc106798a2722ca0e4e0e8cbea4f75dba484905e | [

"Apache-2.0"

] | null | null | null | ---

layout: blog_post

title: Generative Sonification of Web-Scraped Bike-Sharing Data

category: blog

---

In my final for my music technology class, I developed a generative algorithmic composition that changes based on web-scraped bike sharing data.

This past semester, I took a course on music technology. This was not something I had ever really done before, as I was never particularly musically gifted. I was always the worst person in music class and was always “last chair” when I played the stand-up bass in elementary, middle and high school. However, I’ve always thought music was neat and wanted to see how data science could be applied to it.

In the class, offered by [Berkeley’s CNMAT program](cnmat.berkeley.edu), the professor taught students how to use the visual programming language Max to create music and multimedia projects. Max is a data flow system where patches/programs are created by arranging building blocks within a visual canvas. These objects can receive input, generate output, or do both, and they pass messages to one another to exchange information.

While other people in my class developed instruments or analyzed various sinusoidal parts of music, I chose to build something that the program was not really set up to accommodate. I used Max to web-scrape the [open data for every bike sharing system in the United States.](https://github.com/NABSA/gbfs/blob/master/systems.csv) Having spent the year using the Bay Area’s Ford GoBike, I became interested in the flow of riders and bikes and wanted to sonify this information.

Thus, I used Max to scrape the bike sharing information for each of the 87 systems in the United States. Every time someone docked or removed a bike in a given system, I played a sound related to that system. For example, if someone docked a bike in Miami, I had Max play a sample of Cuban music, while if someone began to ride a bike in Detroit, I had Max play a sample of Motown.
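The project itself was built in Max, so the following is only a rough Python sketch of the same idea, not my actual patch. It assumes you already have two snapshots of a system's station status as `{station_id: num_bikes_available}` dictionaries (that field name comes from the GBFS `station_status` feed); the `SAMPLES` mapping and the file names in it are made up for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping from a bike-share system id to an audio sample file.
SAMPLES = {"miami": "cuban.wav", "detroit": "motown.wav"}


def bike_events(previous: Dict[str, int], current: Dict[str, int]) -> List[Tuple[str, str, int]]:
    """Compare two snapshots of {station_id: num_bikes_available}.

    Returns (station_id, kind, count) tuples, where kind is "docked" when
    the number of available bikes went up and "removed" when it went down.
    """
    events = []
    for station_id, now in current.items():
        # A station missing from the previous snapshot produces no event.
        before = previous.get(station_id, now)
        if now > before:
            events.append((station_id, "docked", now - before))
        elif now < before:
            events.append((station_id, "removed", before - now))
    return events


def samples_to_play(system_id: str, previous: Dict[str, int], current: Dict[str, int]) -> List[str]:
    """Return one sample name per bike docked or removed in this system."""
    sample = SAMPLES.get(system_id)
    if sample is None:
        return []  # no sample assigned to this system
    return [sample for _, _, count in bike_events(previous, current) for _ in range(count)]
```

Polling each system on a timer and feeding consecutive snapshots into `samples_to_play` would give a stream of samples to trigger, which is roughly what the Max patch did with its own objects.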

This created a neat generative sample of music that was kind of interesting.

[The code and images of the project are available here.](https://drive.google.com/open?id=11HyjFIO4MvrjeYIR--Eov1iaOCzkk-A5olokgfU12Do)

| 117.736842 | 477 | 0.798838 | eng_Latn | 0.999811 |

3ee13113be5a0a477721bfb9e8ede121cec12947 | 2,010 | md | Markdown | README.md | ErrorxCode/CloremDB | 2fe565e06e622dc49c5477212019a09c0169f04f | [

"Apache-2.0"

] | 1 | 2022-03-03T02:29:12.000Z | 2022-03-03T02:29:12.000Z | README.md | ErrorxCode/CloremDB | 2fe565e06e622dc49c5477212019a09c0169f04f | [

"Apache-2.0"

] | 3 | 2021-10-17T16:32:13.000Z | 2022-03-30T12:19:28.000Z | README.md | ErrorxCode/CloremDB | 2fe565e06e622dc49c5477212019a09c0169f04f | [

"Apache-2.0"

] | null | null | null |

# CloremDB ~ Key-value pair store

<p align="left">

<a href="#"><img alt="Version" src="https://img.shields.io/badge/Language-Java-1DA1F2?style=flat-square&logo=java"></a>

<a href="#"><img alt="Bot" src="https://img.shields.io/badge/Version-2.8-green"></a>

<a href="https://www.instagram.com/x__coder__x/"><img alt="Instagram - x__coder__" src="https://img.shields.io/badge/Instagram-x____coder____x-lightgrey"></a>

<a href="#"><img alt="GitHub Repo stars" src="https://img.shields.io/github/stars/ErrorxCode/OTP-Verification-Api?style=social"></a>

</p>

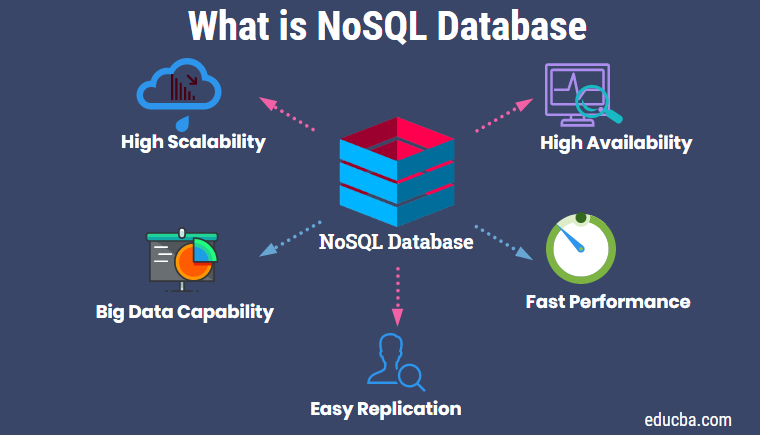

CloremDB is a key-value NoSQL database written in Java for Java programs. The data is stored like a JSON tree with nodes and children. It has a powerful query engine: you can run low-level and high-level queries on the database to sort data. Given a node, you can reach any node nested at any level beneath it and sort those nodes by a property.

## Features

- Easy, lightweight, and fast

- Data sorting using queries

- Direct object deserialization

- Capable of storing almost all primitive datatypes

- Uses a JSON structure for storing data

- Supports List<Integer> & List<String>

## Acknowledgements

- [What is No-Sql](https://en.wikipedia.org/wiki/Key%E2%80%93value_database)

## Documentation

- [Javadocs](https://errorxcode.github.io/docs/clorem/index.html)

- [Guide](https://github.com/ErrorxCode/CloremDB/wiki/Guide)

## Deployment / Installation

In your project build.gradle

```groovy

allprojects {

repositories {

...

maven { url 'https://jitpack.io' }

}

}

```

In your app build.gradle

```groovy

dependencies {

implementation 'com.github.ErrorxCode:CloremDB:v2.8'

}

```

## It's easy

```java

Clorem.getInstance().addMyData().commit();

```

## Powered by ❤

#### [Clorabase](https://clorabase.netlify.app)

> An account-less platform as a service for Android apps. (PaaS)

| 32.419355 | 233 | 0.71791 | eng_Latn | 0.552596 |

3ee1bf49419b6b07c15e6f5f58ab5ff1512bf2c3 | 5,482 | md | Markdown | README.md | Pocc/meraki-client-vpn | bdb33b047b0a71b69c199db399c59ac5bfecd64c | [

"Apache-2.0"

] | 4 | 2018-04-15T07:31:27.000Z | 2020-03-26T04:20:31.000Z | README.md | Pocc/meraki-client-vpn | bdb33b047b0a71b69c199db399c59ac5bfecd64c | [

"Apache-2.0"

] | 60 | 2018-04-15T08:25:49.000Z | 2021-12-13T19:47:10.000Z | README.md | Pocc/meraki-client-vpn | bdb33b047b0a71b69c199db399c59ac5bfecd64c | [

"Apache-2.0"

] | 1 | 2018-04-05T07:16:04.000Z | 2018-04-05T07:16:04.000Z | [](https://travis-ci.org/pocc/merlink)

[](https://ci.appveyor.com/project/pocc/merlink/branch/master)

[](https://bettercodehub.com/)

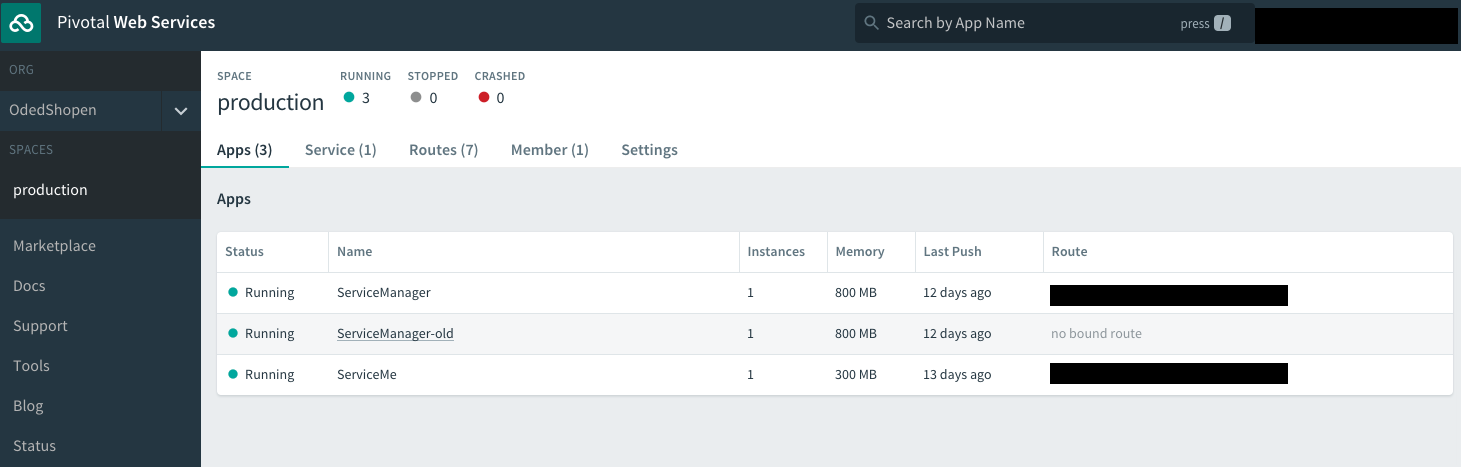

# MerLink

This program will connect desktop devices to Meraki firewalls via an

L2TP/IPSEC connection. This program uses a Meraki dashboard admin's

credentials to pull the data required for a Client VPN connection, create

the connection with OS built-ins, and then connect.

## Current Feature Set (targeting v1.0.0)

* Authentication/Authorization

* Dashboard admins/Guest users supported with Meraki Auth

* TFA prompt supported

* Only networks/organizations that the user has access to are shown

* VPN Connection (Windows-only)

* Split Tunnel

* Remember Credential

* Platforms

* Windows 7/8/10

* macOS 10.7-13

* Linux (requires network-manager)

* CI/CD on tagged commits

* Windows 10

* macOS 10.13

* Ubuntu 14.04

* Ubuntu 16.04

* Troubleshooting tests on connection failure

* Is the MX online?

* Can the client ping the firewall's public IP?

* Is the user behind the firewall?

* Is Client VPN enabled?

* Is authentication type Meraki Auth?

* Are UDP ports 500/4500 port forwarded through firewall?
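
As a rough illustration of how a list of troubleshooting questions like the one above could be driven from code, here is a minimal sketch. It is not MerLink's actual implementation: `run_checks` and the stubbed checks are hypothetical names, and each real check would have to be supplied as a function that returns `True` on success.

```python
from typing import Callable, List, Tuple

# A check is a question plus a zero-argument function that returns True on success.
Check = Tuple[str, Callable[[], bool]]


def run_checks(checks: List[Check]) -> List[str]:
    """Run every check in order and return the questions that failed."""
    failed = []
    for question, check in checks:
        try:
            ok = check()
        except Exception:
            ok = False  # a check that crashes is reported as a failure
        if not ok:
            failed.append(question)
    return failed


if __name__ == "__main__":
    # Stubbed results for illustration only; real checks would query the network.
    example = [
        ("Is the MX online?", lambda: True),
        ("Is Client VPN enabled?", lambda: False),
    ]
    for question in run_checks(example):
        print("FAILED:", question)
```

Returning every failed question, rather than stopping at the first one, lets the user see all likely causes of a connection failure at once.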

The goals for future major versions can be found in the

[Project list](https://github.com/pocc/merlink/projects).

## Installing MerLink

### Executables

Download the executables [here](https://github.com/pocc/merlink/releases).

### Building from Source

**1.** Clone the repository:

```git clone https://github.com/pocc/merlink```

**2.** Download the required libraries with pip3:

```pip3 install -r requirements.txt```

**3.** Execute the file

```python3 merlink.py```

## Contributing

Please read [contributing.md](https://github.com/pocc/merlink/blob/master/docs/contributing.md) for the process for

submitting pull requests.

### Setting up your environment

To set up your Windows environment, please read

[pycharm_setup.md](https://github.com/pocc/merlink/blob/master/docs/pycharm_setup.md)

### Versioning

[SemVer](http://semver.org/) is used for versioning:

* MAJOR version: Incompatible UI from previous version from a user's perspective

* MINOR version: Functionality is added to UI from a user's perspective

* PATCH version: Minor enhancements and bug fixes
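
To make the three bump rules concrete, here is a small sketch of how a version string changes under each one. The `bump` helper is hypothetical and is not part of MerLink; it only handles plain `MAJOR.MINOR.PATCH` strings without pre-release or build suffixes.

```python
def bump(version: str, part: str) -> str:
    """Return the next version after bumping 'major', 'minor', or 'patch'."""
    major, minor, patch = (int(piece) for piece in version.split("."))
    if part == "major":
        # Incompatible UI change: reset minor and patch.
        return f"{major + 1}.0.0"
    if part == "minor":
        # Functionality added to the UI: reset patch.
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        # Minor enhancements and bug fixes.
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown version part: {part!r}")
```

For example, `bump("1.2.3", "minor")` gives `"1.3.0"`, because adding functionality resets the patch number.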

For the versions available, see the [tags on this repository](https://github.com/pocc/merlink/tags).

### Branching

Adapting [Git Branching](http://nvie.com/posts/a-successful-git-branching-model/) for this project:

* **iss#-X.Y**: Branch from dev-X.Y and reintegrate to dev-X.Y. Should be tied to an issue tagged with 'bug', 'feature', or 'enhancement' on the repo.

* **dev-X.Y**: Development branch. When it's ready for a release, branch into a release.

* **rel-X.Y**: Release candidate targeting version X.Y. When it is ready, it should be merged into master tagged with version X.Y.

* **master**: Master branch.

## Addenda

### Reference Material

#### Language and Libraries

* [Python 3](https://www.python.org/) - Base language

* [Qt5](https://doc.qt.io/qt-5/index.html) - Comprehensive Qt reference made by the Qt company. It is made for C++, but will supply the information you need about classes and functions.

* [PyQt5](http://pyqt.sourceforge.net/Docs/PyQt5/) - Documentation for PyQt5. This is a copypaste of the Qt docs applied to Python, and generally contains less useful information

* [Mechanical Soup](https://github.com/MechanicalSoup/MechanicalSoup) - Web scraper for Python 3

#### Environment

* [PyCharm](https://www.jetbrains.com/pycharm/) - IDE used

#### General Documentation

* [Powershell VPN Client docs](https://docs.microsoft.com/en-us/powershell/module/vpnclient/?view=win10-ps) -

Collection of manpages for VPN Client-specific powershell functions.

#### Style Guide

* [Google Python Style Guide (2018)](https://github.com/google/styleguide/blob/gh-pages/pyguide.md)

#### Building

* [PyInstaller](https://pyinstaller.readthedocs.io/en/v3.3.1/) - Python bundler used as part of this project

* Make sure you install the latest PyInstaller directly:

`pip install https://github.com/pyinstaller/pyinstaller/archive/develop.zip`

* [NSIS](http://nsis.sourceforge.net/Docs/) - Windows program installer system

* [NSIS Wizard + IDE](http://hmne.sourceforge.net/) - Will build and debug NSIS scripts

* [NSIS Sample Installers](http://nsis.sourceforge.net/Category:Real_World_Installers) - To learn how to build your own installer by example

* [FPM](https://github.com/jordansissel/fpm) - A way to package to targets deb, rpm, pacman, and osxpkg

### Linting

* coala:

* On Ubuntu, be sure to install these libraries as well:

`sudo apt install libxml2-utils libxml2-dev libxslt-dev libxml2`

### License

This project is licensed under the Apache License 2.0 - see the

[LICENSE.md](LICENSE.md) file for details.

### Authors

* **Ross Jacobs** - *Initial work* - [pocc](https://github.com/pocc)

See also the list of

[contributors](https://github.com/pocc/merlink/contributors) who participated

in this project.

### Acknowledgments

Praise be Stack Overflow! | 39.438849 | 185 | 0.738234 | eng_Latn | 0.787336 |

3ee1d8ca5d76d0327021b7d12787cf37042887d7 | 364 | md | Markdown | docs/V4ReleaseListItemChangelog.md | giantswarm/giantswarm-js-client | 7dc20cc3eae929665d73fa38b5ca9157112d2e14 | [

"Apache-2.0"

] | 6 | 2015-07-09T09:12:03.000Z | 2021-03-30T01:50:10.000Z | docs/V4ReleaseListItemChangelog.md | giantswarm/giantswarm-js-client | 7dc20cc3eae929665d73fa38b5ca9157112d2e14 | [

"Apache-2.0"

] | 69 | 2015-06-15T10:14:13.000Z | 2022-01-27T13:56:21.000Z | docs/V4ReleaseListItemChangelog.md | giantswarm/giantswarm-js-client | 7dc20cc3eae929665d73fa38b5ca9157112d2e14 | [

"Apache-2.0"

] | 2 | 2018-01-22T21:07:52.000Z | 2018-01-22T21:07:58.000Z | # GiantSwarm.V4ReleaseListItemChangelog

## Properties

Name | Type | Description | Notes

------------ | ------------- | ------------- | -------------

**component** | **String** | If the changed item was a component, this attribute is the name of the component. | [optional]

**description** | **String** | Human-friendly description of the change | [optional]

| 36.4 | 125 | 0.598901 | eng_Latn | 0.90755 |

3ee202cf3ae15e96644e2f5bd5640fd66c9c3e54 | 5,493 | md | Markdown | documents/amazon-elasticache-docs/doc_source/memcache/elasticache-use-cases.md | siagholami/aws-documentation | 2d06ee9011f3192b2ff38c09f04e01f1ea9e0191 | [

"CC-BY-4.0"

] | 5 | 2021-08-13T09:20:58.000Z | 2021-12-16T22:13:54.000Z | documents/amazon-elasticache-docs/doc_source/memcache/elasticache-use-cases.md | siagholami/aws-documentation | 2d06ee9011f3192b2ff38c09f04e01f1ea9e0191 | [

"CC-BY-4.0"

] | null | null | null | documents/amazon-elasticache-docs/doc_source/memcache/elasticache-use-cases.md | siagholami/aws-documentation | 2d06ee9011f3192b2ff38c09f04e01f1ea9e0191 | [

"CC-BY-4.0"

] | null | null | null | # Common ElastiCache Use Cases and How ElastiCache Can Help<a name="elasticache-use-cases"></a>

Whether serving the latest news or a product catalog, or selling tickets to an event, speed is the name of the game\. The success of your website and business is greatly affected by the speed at which you deliver content\.

In "[For Impatient Web Users, an Eye Blink Is Just Too Long to Wait](http://www.nytimes.com/2012/03/01/technology/impatient-web-users-flee-slow-loading-sites.html?pagewanted=all&_r=0)," the New York Times noted that users can register a 250\-millisecond \(1/4 second\) difference between competing sites\. Users tend to opt out of the slower site in favor of the faster site\. Tests done at Amazon, cited in [How Webpage Load Time Is Related to Visitor Loss](http://pearanalytics.com/blog/2009/how-webpage-load-time-related-to-visitor-loss/), revealed that for every 100\-ms \(1/10 second\) increase in load time, sales decrease 1 percent\.

If someone wants data, you can deliver that data much faster if it's cached\. That's true whether it's for a webpage or a report that drives business decisions\. Can your business afford to not cache your webpages so as to deliver them with the shortest latency possible?

It might seem intuitively obvious that you want to cache your most heavily requested items\. But why not cache your less frequently requested items? Even the most optimized database query or remote API call is noticeably slower than retrieving a flat key from an in\-memory cache\. *Noticeably slower* tends to send customers elsewhere\.

The following examples illustrate some of the ways using ElastiCache can improve overall performance of your application\.

## In\-Memory Data Store<a name="elasticache-use-cases-data-store"></a>

The primary purpose of an in\-memory key\-value store is to provide ultrafast \(submillisecond latency\) and inexpensive access to copies of data\. Most data stores have areas of data that are frequently accessed but seldom updated\. Additionally, querying a database is always slower and more expensive than locating a key in a key\-value pair cache\. Some database queries are especially expensive to perform\. An example is queries that involve joins across multiple tables or queries with intensive calculations\. By caching such query results, you pay the price of the query only once\. Then you can quickly retrieve the data multiple times without having to re\-execute the query\.

The following image shows ElastiCache caching\.

![\[Image: ElastiCache Caching\]](http://docs.aws.amazon.com/AmazonElastiCache/latest/mem-ug/./images/ElastiCache-Caching.png)
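
As a rough illustration of the pay once idea described above, the following Python sketch shows a cache\-aside lookup\. It is a toy under stated assumptions: a plain dictionary stands in for the in\-memory cache, `load_from_database` in the usage note is a hypothetical loader, and nothing here uses the ElastiCache or Memcached client APIs\.

```python
from typing import Any, Callable, Dict


class CacheAside:
    """Look in the cache first; on a miss, run the expensive loader once."""

    def __init__(self, load: Callable[[str], Any]) -> None:
        self._load = load                  # stand-in for a slow database query
        self._cache: Dict[str, Any] = {}   # stand-in for the in-memory cache
        self.misses = 0                    # how many times the loader was called

    def get(self, key: str) -> Any:
        if key in self._cache:
            return self._cache[key]        # cache hit: no query is executed
        self.misses += 1
        value = self._load(key)            # cache miss: pay for the query once
        self._cache[key] = value
        return value
```

With `cache = CacheAside(load_from_database)`, calling `cache.get("profile:42")` twice runs the loader only on the first call; the second call is served from the dictionary\.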

### What Should I Cache?<a name="elasticache-use-cases-data-store-what-to-cache"></a>

When deciding what data to cache, consider these factors:

**Speed and expense** – It's always slower and more expensive to get data from a database than from a cache\. Some database queries are inherently slower and more expensive than others\. For example, queries that perform joins on multiple tables are much slower and more expensive than simple, single table queries\. If the interesting data requires a slow and expensive query to get, it's a candidate for caching\. If getting the data requires a relatively quick and simple query, it might still be a candidate for caching, depending on other factors\.

**Data and access pattern** – Determining what to cache also involves understanding the data itself and its access patterns\. For example, it doesn't make sense to cache data that changes quickly or is seldom accessed\. For caching to provide a real benefit, the data should be relatively static and frequently accessed\. An example is a personal profile on a social media site\. On the other hand, you don't want to cache data if caching it provides no speed or cost advantage\. For example, it doesn't make sense to cache webpages that return search results because the queries and results are usually unique\.

**Staleness** – By definition, cached data is stale data\. Even if in certain circumstances it isn't stale, it should always be considered and treated as stale\. To tell whether your data is a candidate for caching, determine your application's tolerance for stale data\.

Your application might be able to tolerate stale data in one context, but not another\. For example, suppose that your site serves a publicly traded stock price\. Your customers might accept some staleness with a disclaimer that prices might be *n* minutes delayed\. But if you serve that stock price to a broker making a sale or purchase, you want real\-time data\.
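
One common way to bound staleness is to give each cached entry a time to live\. The sketch below is a minimal illustration of that idea, not ElastiCache's own expiration mechanism: the `TTLCache` class and its injectable `clock` parameter are assumptions made so the example is self\-contained and testable\.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache whose entries are treated as too stale after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._store: Dict[str, Tuple[Any, float]] = {}

    def set(self, key: str, value: Any) -> None:
        # Record the value together with the moment it expires.
        self._store[key] = (value, self._clock() + self._ttl)

    def get(self, key: str, default: Any = None) -> Any:
        entry = self._store.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # too stale: drop it and report a miss
            return default
        return value
```

In the stock price example, a public page might use a time to live of several minutes, while the broker path would skip the cache and read the live price\.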

Consider caching your data if the following is true:

+ Your data is slow or expensive to get when compared to cache retrieval\.

+ Users access your data often\.

+ Your data stays relatively the same, or if it changes quickly staleness is not a large issue\.

For more information, see the following:

+ [Caching Strategies](https://docs.aws.amazon.com/AmazonElastiCache/latest/mem-ug/Strategies.html) in the *ElastiCache for Memcached User Guide*

## ElastiCache Customer Testimonials<a name="elasticache-use-cases-testimonials"></a>

To learn about how businesses like Airbnb, PBS, Esri, and others use Amazon ElastiCache to grow their businesses with improved customer experience, see [How Others Use Amazon ElastiCache](https://aws.amazon.com/elasticache/testimonials/)\.

You can also watch the [ElastiCache Videos](Tutorials.md#tutorial-videos) for additional ElastiCache customer use cases\. | 122.066667 | 687 | 0.788822 | eng_Latn | 0.997163 |

3ee2babb021e5f907a8e0b18b5037726c180cba1 | 90 | md | Markdown | src/Camera agents for multiple cars/README.md | SahilDhull/autonomous | 378fc7d6c5a9c34c4e915f080fb78ed5c11195d6 | [

"MIT"

] | 3 | 2020-02-28T12:04:26.000Z | 2022-02-27T00:42:56.000Z | src/Camera agents for multiple cars/README.md | SahilDhull/autonomous | 378fc7d6c5a9c34c4e915f080fb78ed5c11195d6 | [

"MIT"

] | null | null | null | src/Camera agents for multiple cars/README.md | SahilDhull/autonomous | 378fc7d6c5a9c34c4e915f080fb78ed5c11195d6 | [

"MIT"

] | null | null | null | To add multiple cars in Webots, look at these files.

They are not needed for the current scenario. | 22.5 | 55 | 0.788889 | eng_Latn | 0.999595 |

3ee33d55ed1179c150e8742a606516fb29fd05d6 | 84 | md | Markdown | vault/tn/HEB-x7px.md | mandolyte/uw-obsidian | 39e987c4cdc49d2a68e3af6b4e3fc84d1cda916d | [

"MIT"

] | null | null | null | vault/tn/HEB-x7px.md | mandolyte/uw-obsidian | 39e987c4cdc49d2a68e3af6b4e3fc84d1cda916d | [

"MIT"

] | null | null | null | vault/tn/HEB-x7px.md | mandolyte/uw-obsidian | 39e987c4cdc49d2a68e3af6b4e3fc84d1cda916d | [

"MIT"

] | null | null | null | # Connecting Statement:

This is the first of five urgent warnings the author gives. | 28 | 59 | 0.797619 | eng_Latn | 0.99998 |

3ee4d68ce482391b354ca3ede46f45fec81033c1 | 1,749 | md | Markdown | Search/README.md | LihaoWang1991/LeetCode | 391b3beeefe1d32c8a4935a66175ab94445a1160 | [

"Apache-2.0"

] | null | null | null | Search/README.md | LihaoWang1991/LeetCode | 391b3beeefe1d32c8a4935a66175ab94445a1160 | [

"Apache-2.0"

] | null | null | null | Search/README.md | LihaoWang1991/LeetCode | 391b3beeefe1d32c8a4935a66175ab94445a1160 | [

"Apache-2.0"

] | null | null | null | Search

======

### BFS:

* [Problem 1091: Shortest Path in Binary Matrix](https://leetcode.com/problems/shortest-path-in-binary-matrix/)

* [Problem 279: Perfect Squares](https://leetcode.com/problems/perfect-squares/)

* [Problem 127: Word Ladder](https://leetcode.com/problems/word-ladder/)

### DFS:

* [Problem 695: Max Area of Island](https://leetcode.com/problems/max-area-of-island/)

* [Problem 200: Number of Islands](https://leetcode.com/problems/number-of-islands/)

* [Problem 547: Friend Circles](https://leetcode.com/problems/friend-circles/)

* [Problem 130: Surrounded Regions](https://leetcode.com/problems/surrounded-regions/)

* [Problem 417: Pacific Atlantic Water Flow](https://leetcode.com/problems/pacific-atlantic-water-flow/)

### Backtracking:

* [Problem 17: Letter Combinations of a Phone Number](https://leetcode.com/problems/letter-combinations-of-a-phone-number/)

* [Problem 93: Restore IP Addresses](https://leetcode.com/problems/restore-ip-addresses/)

* [Problem 79: Word Search](https://leetcode.com/problems/word-search/)

* [Problem 257: Binary Tree Paths](https://leetcode.com/problems/binary-tree-paths/)

* [Problem 46: Permutations](https://leetcode.com/problems/permutations/)

* [Problem 47: Permutations II](https://leetcode.com/problems/permutations-ii/)

* [Problem 77: Combinations](https://leetcode.com/problems/combinations/)

* [Problem 39: Combination Sum](https://leetcode.com/problems/combination-sum/)

* [Problem 40: Combination Sum II](https://leetcode.com/problems/combination-sum-ii/)

* [Problem 216: Combination Sum III](https://leetcode.com/problems/combination-sum-iii/)

* [Problem 78: Subsets](https://leetcode.com/problems/subsets/)

* [Problem 90: Subsets II](https://leetcode.com/problems/subsets-ii/)

| 62.464286 | 123 | 0.751858 | yue_Hant | 0.264445 |

3ee75bb935372b7916bd388a382b2864085ef13e | 899 | md | Markdown | content/projects.md | IgorVaryvoda/new-varyvoda.com | df805d439898407498496ad095792a87439fc9c2 | [

"Apache-2.0"

] | null | null | null | content/projects.md | IgorVaryvoda/new-varyvoda.com | df805d439898407498496ad095792a87439fc9c2 | [

"Apache-2.0"

] | null | null | null | content/projects.md | IgorVaryvoda/new-varyvoda.com | df805d439898407498496ad095792a87439fc9c2 | [

"Apache-2.0"

] | null | null | null | ---

template: page

title: Projects by Igor Varyvoda

slug: projects

draft: false

---

## UaHelp

<a href="https://www.uahelp.me" target="_blank"><img src="https://cdn.earthroulette.com/varyvoda/uahelp.png" alt="UaHelp screenshot"></a>

[A curated list of resources to help Ukraine](https://www.uahelp.me)

## Earth Roulette

<a href="https://earthroulette.com" target="_blank"><img src="https://iantiark.sirv.com/varyvoda/er.png" alt="Earth Roulette screenshot"></a>

A random travel destination generator. It's a pretty fun project which I thoroughly enjoyed creating from scratch :) [Visit Earth Roulette](https://earthroulette.com)

## CryptoTracker

<a href="https://cryptotracker.xyz" target="_blank"><img src="https://iantiark.sirv.com/varyvoda/ct.png"></a>

Track cryptocurrency prices. Simple, yet good-looking. Set it as your homepage. [Visit CryptoTracker](https://cryptotracker.xyz)

| 37.458333 | 167 | 0.74416 | eng_Latn | 0.338705 |

3ee8b33b1c9e2acdf44332e8822405e6dc313faf | 146 | md | Markdown | README.md | michelescandola/BayesianCorrelations | b5e4867e501bd221f136816fa4bbb39328065b81 | [

"MIT"

] | null | null | null | README.md | michelescandola/BayesianCorrelations | b5e4867e501bd221f136816fa4bbb39328065b81 | [

"MIT"

] | null | null | null | README.md | michelescandola/BayesianCorrelations | b5e4867e501bd221f136816fa4bbb39328065b81 | [

"MIT"

] | null | null | null | # BayesianCorrelations

Here is a list of Stan scripts to compute Bayesian correlations.

More info at https://michelescandola.netlify.app/

| 24.333333 | 69 | 0.808219 | eng_Latn | 0.549938 |

3ee8cf08ca5b9211197b539ef30cabecc571395a | 11,874 | md | Markdown | applications/ori.md | syntifi/Grants-Program | 879bee4f9bdfa6a022a40f519be8d676bfb79b34 | [

"Apache-2.0"

] | null | null | null | applications/ori.md | syntifi/Grants-Program | 879bee4f9bdfa6a022a40f519be8d676bfb79b34 | [

"Apache-2.0"

] | null | null | null | applications/ori.md | syntifi/Grants-Program | 879bee4f9bdfa6a022a40f519be8d676bfb79b34 | [

"Apache-2.0"

] | null | null | null | # W3F Grant Proposal

- **Project Name:** ORI (Onchain Risk Intelligence)

- **Team Name:** SyntiFi

- **Payment Address:** 0x5E89f8d81C74E311458277EA1Be3d3247c7cd7D1 (USDT)

- **[Level](https://github.com/w3f/Grants-Program/tree/master#level_slider-levels):**

2

## Project Overview :page_facing_up:

The main issue in fighting financial crime is that traditional and

crypto financial institutions manage their own Anti Money Laundering

(AML) processes independently. These same institutions continue to

invest enormous resources in INTERNAL systems to fight financial crime

and fulfill regulatory requirements. Yet, financial crime occurs

across institutions and jurisdictions in both crypto- and fiat

worlds. Closer collaboration between the market players is

fundamental, but privacy regulations and banking secrecy rules prevent

data sharing. The result is an industry on the verge of a revolution

that still relies on regulatory processes of the last century. The

blockchain technology solves some of these problems by providing a

secure and reliable ledger to all stakeholders. SyntiFi is here to

close the gap and provide on-chain tools to investigate and monitor

financial transactions stored across chains.

### Overview

SyntiFi is actively developing ORI, an On-chain Risk Intelligence

tool to fight and prevent financial crime. We do believe that with the

great power of decentralized finance comes the great responsibility of

ensuring that no financial crime, money laundering or terrorist

financing is taking place. For this reason, some crucial capabilities

of our tool are made available as open source. In this grant we ask for

support to add Polkadot to our growing ecosystem of chains and tokens.

### Project Details

In a nutshell this project is composed of two main steps:

1. the implementation of a Job to crawl and index the Polkadot

chain and persist the information according to the ORI data

model (Token, Block, Account and Transaction);

2. and the development of a REST API with resources to query the

indexed chain, trace the coin and rate a given account

according to some AML metrics.
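
To make the two steps more tangible, here is a minimal sketch of what "trace the coin" could look like over indexed transactions. It is written in Python for brevity, although ORI itself is implemented in Java, and it is not ORI's actual code: the `Transaction` fields and the `trace_coin` function are hypothetical names chosen for this illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Transaction:
    """Hypothetical minimal transaction record; field names are assumptions."""
    tx_hash: str
    sender: str
    receiver: str
    amount: float
    block_height: int


def trace_coin(transactions: List[Transaction], start: str, max_hops: int = 3) -> Dict[str, int]:
    """Breadth-first walk over outgoing transfers.

    Returns {account: hops}, the fewest transfers needed to reach each
    account from `start`, limited to `max_hops` transfers.
    """
    outgoing: Dict[str, List[str]] = {}
    for tx in transactions:
        outgoing.setdefault(tx.sender, []).append(tx.receiver)

    hops = {start: 0}
    queue = deque([start])
    while queue:
        account = queue.popleft()
        if hops[account] == max_hops:
            continue  # do not expand beyond the hop limit
        for receiver in outgoing.get(account, []):
            if receiver not in hops:  # first visit is the shortest path
                hops[receiver] = hops[account] + 1
                queue.append(receiver)
    return hops
```

An account rating could then, for instance, look at how many hops separate an account from a flagged address; the actual AML metrics are not specified here.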

The tool is implemented in Java and uses the micro-service framework

Quarkus, as well as Spring Batch jobs for the crawler. Up until now we

were indexing directly on ElasticSearch but we are currently changing

it to use PostgreSQL as the persistence layer together with ElasticSearch

for an efficient search (more precisely we use a hibernate-elastic-search

module to facilitate the sync between the SQL DB and Elastic Search).

Additionally, we could have decided to use any of the already available

indexers for the different chains. The issue is that these indexers are

often storing the whole information of the blocks and Merkle trees, but

we just need a smaller portion of that. For this reason we decided to

create our own, simple crawler, to index just what we need and replicate

that for the different chains. We believe that in doing so we can facilitate

the integration of other chains into the system.
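
The idea of indexing only what is needed can be sketched as a projection from a full block to a handful of fields. This is a Python illustration under assumptions, not the ORI crawler (which is written in Java): the raw field names (`height`, `hash`, `timestamp`, `from`, `to`, `amount`) and the `fetch_block` callback are hypothetical and would differ per chain.

```python
from typing import Callable, Dict, Iterator


def index_block(raw_block: Dict) -> Dict:
    """Keep only the fields the index needs and drop everything else."""
    return {
        "block": {
            "height": raw_block["height"],
            "hash": raw_block["hash"],
            "timestamp": raw_block["timestamp"],
        },
        "transactions": [
            {"hash": tx["hash"], "from": tx["from"], "to": tx["to"], "amount": tx["amount"]}
            for tx in raw_block.get("transactions", [])
        ],
    }


def crawl(fetch_block: Callable[[int], Dict], start_height: int, end_height: int) -> Iterator[Dict]:
    """Yield the reduced record for every block in [start_height, end_height]."""
    for height in range(start_height, end_height + 1):
        yield index_block(fetch_block(height))
```

Supporting another chain would then mostly mean supplying a different `fetch_block` and field mapping, while the stored shape stays the same.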

ORI also comes with a front-end implemented in React. The further

development/improvement of the front end is **NOT** part of this

proposal.

For more details please check our Git repository:

[ORI](https://www.github.com/syntifi/ori)

### Ecosystem Fit

Following compliance rules and ensuring that no financial crime is

taking place is paramount to the success of any DeFi application. In

fact, governments are starting to tighten regulatory requirements for

Crypto related transactions. According to

[Reuters](https://www.reuters.com/technology/eu-tighten-rules-cryptoasset-transfers-2021-07-20/)

, “Companies that transfer BTC or other crypto-assets must collect

details of senders and recipients to help authorities crack down on

dirty money, EU policymakers proposed on Tuesday in the latest efforts

to tighten regulation of the sector. The law, which is one of the

recommendations of the inter-governmental watchdog, the Financial

Action Task Force (FATF), already applies to wire transfers.

Providing anonymous crypto-asset wallets will also be prohibited, just

as anonymous bank accounts are already banned under EU anti-money

laundering rules.”

That being said, we do believe that applications on Polkadot could use

an open source tool such as ORI to facilitate the job of their

compliance team when fulfilling regulatory requirements.

## Team :busts_in_silhouette:

### Team members

- Andre Bertolace

- Remo Stieger

- Alexandre Carvalho

### Contact

- **Contact Name:** Andre Bertolace

- **Contact Email:** [email protected]

- **Website:** www.syntifi.com

### Legal Structure

- **Registered Address:** Baarerstrasse 10, 6300 Zug

- **Registered Legal Entity:** SyntiFi GmbH

### Team's experience

Andre is an entrepreneur with an engineering background and

over 15 years of experience in the financial industry in several

positions and institutions but always in a quantitative analyst,

trader or developer role. He founded a fintech start-up delivering

financial data analytics to Wealth and Asset managers.

Remo has 15+ years of financial markets experience having worked in

investment banking where he held various leading positions at global

financial institutions. Remo has previously co-founded a technology

start-up solving legal and technological challenges of inheriting

digital assets leveraging a blockchain-based ecosystem.

Alexandre is an electrical engineer with more than 15 years working in

IT. His focus has been on enterprise software development, acting as

solution architect, software engineer and developer in both private

and public sector. Alexandre works with a team of diverse specialties

building strategic systems while implementing DevOps practices and

infrastructure. As a self-learner, he is always experimenting with new

and emerging tech.

### Team Code Repos

- https://github.com/syntifi

- https://github.com/syntifi/ori

- https://github.com/syntif/casper-sdk

- https://github.com/syntif/near-java-api

Please also provide the GitHub accounts of all team members. If they

contain no activity, references to projects hosted elsewhere or live

are also fine.

- https://github.com/AB3rt0

- https://github.com/AB3rtz

- https://github.com/oak

### Team LinkedIn Profiles (if available)

- https://www.linkedin.com/in/andre-bertolace-87983426/

- https://www.linkedin.com/in/remostieger/

## Development Status :open_book:

ORI is an active/ongoing project. Please take a look at

[ORI](https://github.com/syntifi/ori) for the latest development. At

the moment, we are in the process of finalizing a front-end and a

dashboard as well as refactoring the back end code.

## Development Roadmap :nut_and_bolt:

### Overview

- **Total Estimated Duration:** 3 months

- **Full-Time Equivalent (FTE):** 1.5 FTE

- **Total Costs:** 49'500 USD

### Milestone 1 — Index the Polkadot chain

- **Estimated duration:** 1 month

- **FTE:** 1.5

- **Costs:** 20'500 USD

| Number | Deliverable | Specification |

| -----: | ----------- | ------------- |

| 0a. | License | Apache 2.0 |

| 0b. | Documentation | We will provide both **low-level/inline documentation** of the code and a basic **tutorial** that explains how a user can crawl the Polkadot Chain and populate the database for later use. |

| 0c. | Testing Guide | Core functions will be fully covered by unit tests to ensure functionality and robustness. In the guide, we will describe how to run these tests. |

| 0d. | Docker | We will provide a Dockerfile(s) that can be used to test all the functionality delivered with this milestone. |

| 1. | Polkadot Crawler Job | We will create a Spring Batch job to crawl the Polkadot chain and populate the transaction/account tables in our indexed database |

| 2. | Polkadot Updater Job | We will create a Spring Batch job to update the transaction/account tables in our indexed database with the latest transactions in the Polkadot network |

### Milestone 2 — REST API to query the Indexed chain

- **Estimated duration:** 1 month

- **FTE:** 1.5

- **Costs:** 12'500 USD

| Number | Deliverable | Specification |

| -----: | ----------- | ------------- |

| 0a. | License | Apache 2.0 |

| 0b. | Documentation | We will provide both **low-level/inline documentation** of the code and a basic **tutorial** that explains how a user can crawl the Polkadot Chain and populate the database for later use. |

| 0c. | Testing Guide | Core functions will be fully covered by unit tests to ensure functionality and robustness. In the guide, we will describe how to run these tests. |

| 0d. | Docker | We will provide a Dockerfile(s) that can be used to test all the functionality delivered with this milestone. |

| 1. | Data model | Block, Account and Transaction POJO models |

| 2. | API endpoint: *account/* | GET and POST method to list accounts and create a new account |

| 3. | API endpoint: *account/hash/{hash}* | GET and DELETE method to retrieve and remove a specific account given the hash |

| 4. | API endpoint: *block/* | GET method to list blocks currently in the system |

| 5. | API endpoint: *block/hash/{hash}* | GET and DELETE method to retrieve and remove a specific block given the hash |

| 6. | API endpoint: *block/parent/{hash}* | POST method to add a new block given the parent block hash |

| 7. | API endpoint: *transaction/* | GET method to list transactions currently in the system |

| 8. | API endpoint: *transaction/account/{account}* | GET method to list the transactions for a given account |

| 9. | API endpoint: *transaction/hash/{hash}* | GET method to retrieve a transaction given the transaction hash |

| 10. | API endpoint: *transaction/hash/{hash}* | DELETE method to remove a transaction given the hash |

| 11. | API endpoint: *transaction/incoming/account/{account}* | GET method to list the incoming transactions to the given account |

| 12. | API endpoint: *transaction/outgoing/account/{account}* | GET method to list the outgoing transactions from the given account |

| 13. | API endpoint: *block/{hash}/from/{from}/to/{to}* | POST method to add a new transaction registered on the given block hash from an account to another account|
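
As a rough illustration of how a client might address the endpoints listed above, the following Python sketch builds request URLs from the path patterns in the table. The base URL, the helper names `endpoint` and `transaction_urls`, and the percent-encoding of path segments are assumptions made for this example; the proposal does not specify where the API is hosted or how clients should call it.

```python
from urllib.parse import quote

# Hypothetical base URL; the proposal does not state where the API is served.
BASE_URL = "http://localhost:8080/api/v1"


def endpoint(*segments: str) -> str:
    """Join path segments onto BASE_URL, percent-encoding each segment."""
    return "/".join([BASE_URL, *(quote(s, safe="") for s in segments)])


def transaction_urls(account: str) -> dict:
    """URLs for the three per-account transaction listings (deliverables 8, 11, 12)."""
    return {
        "all": endpoint("transaction", "account", account),
        "incoming": endpoint("transaction", "incoming", "account", account),
        "outgoing": endpoint("transaction", "outgoing", "account", account),
    }


if __name__ == "__main__":
    for name, url in transaction_urls("abc").items():
        print(name, url)
```

Keeping the path construction in one helper means a change of host or API prefix touches a single constant.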

### Milestone 3 — REST API to trace the coin

- **Estimated duration:** 1 month

- **FTE:** 1.5

- **Costs:** 16'500 USD

| Number | Deliverable | Specification |

| -----: | ----------- | ------------- |

| 0a. | License | Apache 2.0 |

| 0b. | Documentation | We will provide both **low-level/inline documentation** of the code and a basic **tutorial** that explains how a user can crawl the Polkadot Chain and populate the database for later use. |

| 0c. | Testing Guide | Core functions will be fully covered by unit tests to ensure functionality and robustness. In the guide, we will describe how to run these tests. |

| 0d. | Docker | We will provide a Dockerfile(s) that can be used to test all the functionality delivered with this milestone. |

| 0e. | Article | We will publish a **blog entry** highlighting the addition of another Chain to the group of chains covered by ORI. |

| 1. | API endpoint */score/{account}* | GET method to retrieve certain AML scores (structuring over time, unusual outgoing volume, unusual behavior score and flow-through score) for a given account |

| 2. | API endpoint */trace/back/{account}* | GET method to trace back the origin of the coin given a date/time and an account |

| 3. | API endpoint */trace/forward/{account}* | GET method to trace forward the destination of the coin given a date/time and an account |
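
To make the idea of tracing a coin back more concrete, here is a minimal Python sketch of a breadth-first walk over indexed transfers. It is only an illustration under stated assumptions: the `Transfer` record, the `trace_back` function, the integer timestamps and the `max_depth` limit are all hypothetical and are not taken from ORI's code base, which may trace coins quite differently.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    """Hypothetical indexed transfer record (not ORI's actual data model)."""
    sender: str
    receiver: str
    timestamp: int  # illustrative: seconds since epoch


def trace_back(transfers, account, before, max_depth=3):
    """Return accounts that funded `account`, directly or indirectly, before `before`.

    Each hop only follows transfers that happened earlier than the transfer
    that led to the current account, so the walk moves backwards in time.
    """
    seen = {account}
    origins = []
    queue = deque([(account, before, 0)])
    while queue:
        current, cutoff, depth = queue.popleft()
        if depth == max_depth:
            continue
        for t in transfers:
            if t.receiver == current and t.timestamp < cutoff and t.sender not in seen:
                seen.add(t.sender)
                origins.append(t.sender)
                queue.append((t.sender, t.timestamp, depth + 1))
    return origins


if __name__ == "__main__":
    history = [
        Transfer("a", "b", 10),
        Transfer("b", "c", 20),
        Transfer("d", "c", 30),
        Transfer("e", "b", 25),
    ]
    print(trace_back(history, "c", before=40))
```

A forward trace would follow the same pattern with sender and receiver swapped and the time comparison reversed.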

## Future Plans

- Short-term: add the major chains and tokens to ORI (BTC, ETH, ...)

- Mid-term: run the platform as a service for DeFi apps that need

compliance tools

## Additional Information :heavy_plus_sign:

**How did you hear about the Grants Program?** Web3 Foundation Website

| 50.101266 | 213 | 0.756864 | eng_Latn | 0.994625 |

3ee91ffa0aee43ed8228f4a745714d0d1aa4d91e | 302 | md | Markdown | includes/iot-hub-pii-note-naming-device.md | grayknight2/mc-docs.zh-cn | dc705774cac09f2b3eaeec3c0ecc17148604133e | [

"CC-BY-4.0",

"MIT"

] | null | null | null | includes/iot-hub-pii-note-naming-device.md | grayknight2/mc-docs.zh-cn | dc705774cac09f2b3eaeec3c0ecc17148604133e | [

"CC-BY-4.0",

"MIT"

] | null | null | null | includes/iot-hub-pii-note-naming-device.md | grayknight2/mc-docs.zh-cn | dc705774cac09f2b3eaeec3c0ecc17148604133e | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

ms.openlocfilehash: ceca1d1061b80b7d117cfc775bf3f6930c761e48

ms.sourcegitcommit: c1ba5a62f30ac0a3acb337fb77431de6493e6096

ms.translationtype: HT

ms.contentlocale: zh-CN

ms.lasthandoff: 04/17/2020

ms.locfileid: "63823842"

---

> [!IMPORTANT]

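> Device IDs may appear in the logs collected for customer support and troubleshooting, so be sure to avoid including any sensitive information when you name a device.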

> | 27.454545 | 60 | 0.824503 | yue_Hant | 0.634186 |

3ee9e84d9483e2b9fd0af15a75edd50859223069 | 724 | md | Markdown | README.md | nadhirvince/Boston-Housing-Prices | 93546e42fce4736282e33b036cd742f0c7a3bc59 | [

"MIT"

] | null | null | null | README.md | nadhirvince/Boston-Housing-Prices | 93546e42fce4736282e33b036cd742f0c7a3bc59 | [

"MIT"

] | null | null | null | README.md | nadhirvince/Boston-Housing-Prices | 93546e42fce4736282e33b036cd742f0c7a3bc59 | [

"MIT"

] | null | null | null |

# Boston-Housing-Prices

## Statistical Analysis of Housing Prices

### Requirements

* Linux

* Python 3.3 and up

`$ pip install foobar`

## Usage

```python

import foobar

foobar.pluralize('word') # returns 'words'

foobar.pluralize('goose') # returns 'geese'

foobar.singularize('phenomena') # returns 'phenomenon'

```

## Development

```

$ virtualenv foobar

$ . foobar/bin/activate

$ pip install -e .

```

## Contributing

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.

Please make sure to update tests as appropriate.

## License

[MIT](https://choosealicense.com/licenses/mit/)

| 19.052632 | 114 | 0.726519 | eng_Latn | 0.88506 |

3eea3ceeb299fe90f02654932a63aba29e0c6526 | 1,860 | md | Markdown | README.md | erkandem/ogame-model | 2e18f7d0b8d177a6cd55a95fd236861d9fbb8d1e | [

"MIT"

] | null | null | null | README.md | erkandem/ogame-model | 2e18f7d0b8d177a6cd55a95fd236861d9fbb8d1e | [

"MIT"

] | null | null | null | README.md | erkandem/ogame-model | 2e18f7d0b8d177a6cd55a95fd236861d9fbb8d1e | [

"MIT"

] | null | null | null | # ogame-model

Python representation of objects in ogame

* * *

## about

This code base contains objects which model the in game objects of `ogame.org`.

Yes it is a stupid "game". But still quite interesting.

I created it as an experiment to calculate mine production, but coding escalated quickly.

## how do I use it?

It is not ready to use. I created it as an experiment, and it needs your help,

ideas, and guidance to become something meaningful.

## construction sites

#### state

Options include a database (see the `db` subfolder), a simple dictionary (see `config.py`)

which plays nicely with JSON, or something completely different.

#### server specific settings

Object properties are not constant among all universes/servers - the macroscopic unit of a game.

That's why I avoided hard-coding them into the objects.

But something messy like in `properties.py` and `ogame.py` isn't a solution either.

There must be a better way.
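
One possible direction, sketched here under assumptions rather than taken from this code base: keep the per-universe values in a small immutable settings object and pass it to the game objects when a value is needed. The names `UniverseSettings`, `MetalMine` and `economy_speed` are made up for this example, and the production formula (30 × level × 1.1^level, scaled by universe speed) is the commonly cited community formula, so treat it as an assumption too.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UniverseSettings:
    """Hypothetical container for values that differ between universes."""
    economy_speed: int = 1


@dataclass
class MetalMine:
    level: int

    def hourly_production(self, settings: UniverseSettings) -> int:
        # Assumed community formula; the object itself stores no server constants.
        return int(30 * self.level * 1.1 ** self.level * settings.economy_speed)


if __name__ == "__main__":
    slow = UniverseSettings(economy_speed=1)
    fast = UniverseSettings(economy_speed=2)
    mine = MetalMine(level=10)
    print(mine.hourly_production(slow), mine.hourly_production(fast))
```

Because the settings object is frozen and plain data, it could be loaded from JSON or a database row without the game objects knowing which.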

#### tests

They are mostly missing. Really a shame.

#### and many more

Really, it's not ready.

## other projects

A quick search on github reveals that most other projects are written either in JavaScript or PHP.

My weapon of choice is Python.

|project| python version| description|

|----|----|----|

|[alaingilbert/pyogame](https://github.com/alaingilbert/pyogame) | python3 | API to interact with the account |

|[esp1337/ogame-testing](https://github.com/esp1337/ogame-testing) | python2 | undocumented |

|[erkandem/ogame-stats](https://github.com/erkandem/ogame-stats) | python3 | public game statistics API |

|[erkandem/ogame-raid-radar](https://github.com/erkandem/ogame-raid-radar) | python3 | sample application using the statistics API |

Thanks to the work by Alain Gilbert (https://github.com/alaingilbert)

I don't remember from where I took most of the constants used in attributes (e.g. prices).

Credit to the website I've forgotten.

| 37.959184 | 132 | 0.75 | eng_Latn | 0.994842 |

3eea6fc7e1be01816868f2fd822cf868e48c5df6 | 43 | md | Markdown | README.md | bobbyroe/graphql-lambda | ecd641306a6d3d621018f354eaefd63373a93a80 | [

"MIT"

] | null | null | null | README.md | bobbyroe/graphql-lambda | ecd641306a6d3d621018f354eaefd63373a93a80 | [

"MIT"

] | null | null | null | README.md | bobbyroe/graphql-lambda | ecd641306a6d3d621018f354eaefd63373a93a80 | [

"MIT"

] | null | null | null | # graphql-lambda

getting comfy with Lambda

| 14.333333 | 25 | 0.813953 | eng_Latn | 0.976737 |

3eea81c2d593ec271328a9c4b3b7e6347e6bd00b | 2,115 | md | Markdown | _posts/2018-02-14-speyside-03.md | whiskeybobby/whiskeybobby.github.io | 887f7af49a3092de0f6268c90a726c803a281023 | [

"MIT"

] | null | null | null | _posts/2018-02-14-speyside-03.md | whiskeybobby/whiskeybobby.github.io | 887f7af49a3092de0f6268c90a726c803a281023 | [

"MIT"

] | null | null | null | _posts/2018-02-14-speyside-03.md | whiskeybobby/whiskeybobby.github.io | 887f7af49a3092de0f6268c90a726c803a281023 | [

"MIT"

] | null | null | null | ---

layout: post

title: You Spey, I Spey - Part 3

date: 2018-02-14

category: blog

tags: [Scotch, Speyside, Glenlivet 12, Glenfiddich 12]

---

Cracking open two more Speyside 50 mL samplers today - Glenlivet 12 and Glenfiddich 12. I get these confused all the time. I poured 15 mLs of each into Glencairns and promptly forgot which was which. This bodes well for the tasting...

Contrary to my usual taste-first style, I'm going to grab the tasting notes from the respective websites to see if I can figure out which is which.

### Glenlivet 12

From the [official website](https://www.theglenlivet.com/en-us/the-glenlivet-12-year-old/):

* 40% ABV

* Cask: Traditional and American oak

* Flavor: Delicately balanced with strong pineapple notes

* Colour: Bright, vibrant gold

* Nose: Fruity and summery

* Palate: Well balanced and fruity, with strong pineapple notes

* Finish: Creamy, smooth, with marzipan and fresh hazelnuts

* $5.29/50mL -> $1.59/15mL pour

### Glenfiddich 12

From the [official website](https://www.glenfiddich.com/us/collection/product-collection/core-range/12-year-old/):

* 40% ABV

* Fresh pear, subtle oak

* $5.99/50mL -> $1.80/15mL pour

>With a unique freshness from the same Highland spring water we’ve used since 1887, its distinctive fruitiness comes from the high cut point William Grant always insisted upon.

>Carefully matured in the finest American oak and European oak sherry casks for at least 12 years, it is mellowed in oak marrying tuns to create its sweet and subtle oak flavours.

>Creamy with a long, smooth and mellow finish, our 12 Year Old is the perfect example of Glenfiddich’s unique Speyside style and is widely proclaimed the best dram in the valley.

### My take

I'm trying to pick out the "strong pineapple notes" but can't find them. Both are light and fruity. One has a bit more woodiness and kick on the back end. That one might be the Glenfiddich with the "subtle oak" description. Neither one has a terribly long finish. These seem really interchangeable upon first taste. Maybe a couple more samplings will reveal more differences...

Whisky Bob signing off.

| 49.186047 | 377 | 0.768794 | eng_Latn | 0.996207 |

3eeab6df35082052f8738c39ab12edd0e61f7907 | 65 | md | Markdown | README.md | All-the-stuff/stress_test.sh | 1ad4e5a740fc5d5697fde228d10593914607b5ac | [

"MIT"

] | null | null | null | README.md | All-the-stuff/stress_test.sh | 1ad4e5a740fc5d5697fde228d10593914607b5ac | [

"MIT"

] | null | null | null | README.md | All-the-stuff/stress_test.sh | 1ad4e5a740fc5d5697fde228d10593914607b5ac | [

"MIT"

] | null | null | null | # stress_test.sh

Stress test the overclocking on a raspberry pi.

| 21.666667 | 47 | 0.8 | eng_Latn | 0.984224 |

3eec6302147692d771068d48e1d3d56ecf8fda3c | 173 | md | Markdown | README.md | ramesh-kamath/hello-world | 490cd6c5d413087e105a4baf43015007fde6ddc1 | [

"MIT"

] | null | null | null | README.md | ramesh-kamath/hello-world | 490cd6c5d413087e105a4baf43015007fde6ddc1 | [

"MIT"

] | null | null | null | README.md | ramesh-kamath/hello-world | 490cd6c5d413087e105a4baf43015007fde6ddc1 | [

"MIT"

] | null | null | null | # hello-world

This is a repository created to learn how GitHub works

I am a newbie to GitHub and that is the reason I am trying out this Hello World project as suggested.

| 28.833333 | 101 | 0.780347 | eng_Latn | 0.999962 |

3eede6c724a5e009888789b294d8f940e8057953 | 1,171 | md | Markdown | README.md | ito-soft-design/isd-color-palette | f3939494c38369e2c3e6e2050afdcb7ce5dc7073 | [

"MIT"

] | null | null | null | README.md | ito-soft-design/isd-color-palette | f3939494c38369e2c3e6e2050afdcb7ce5dc7073 | [

"MIT"

] | null | null | null | README.md | ito-soft-design/isd-color-palette | f3939494c38369e2c3e6e2050afdcb7ce5dc7073 | [

"MIT"

] | null | null | null | # ISDColorPalette

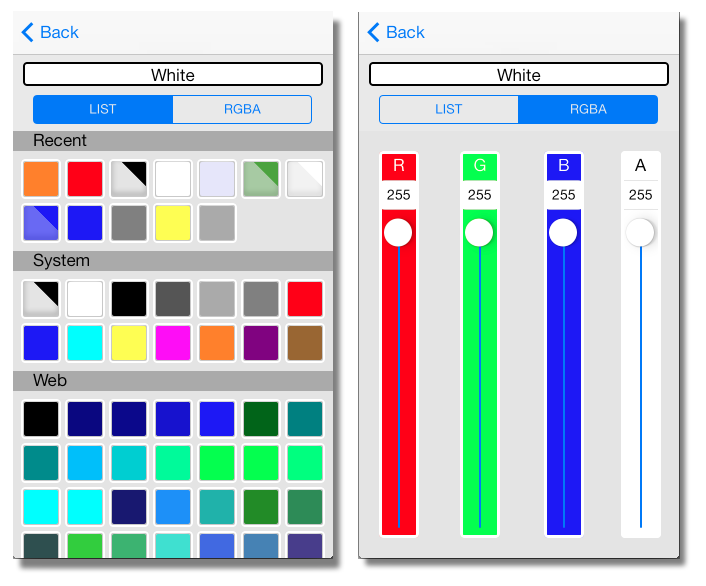

ISDColorPalette is a color selection panel for RubyMotion iOS apps.

## Installation

```ruby

# in Gemfile

gem 'isd-color-palette'

```

ISDColorPalette uses Sugarcube and BubbleWrap.

```ruby

# in Gemfile

gem 'bubble-wrap'

# minimum set

gem 'sugarcube', :require => [

'sugarcube-core',

'sugarcube-localized',

'sugarcube-color',

'sugarcube-uikit',

'sugarcube-nsuserdefaults'

]

```

## Usage

```ruby

# attr_accessor :color

# get a controller.

c = ISDColorPaletteViewController.colorPaletteViewController

# set an initial color.

c.selectedColor = self.color

# set a callback.

c.selected_color_block = Proc.new {|color| did_select_color color }

# push the controller.

self.navigationController.pushViewController c, animated:true

```

If you want to get nil instead of the clear color, set #return_nil to true.

```

c.return_nil = true

```

The selected_color_block is called after a color is selected.

```

def did_select_color color

p color

self.color = color

end

```

## License

MIT License

| 17.742424 | 111 | 0.721605 | eng_Latn | 0.510778 |

3eee75d2df1a8a93ef3e75d108d5ec6ff6f147f0 | 1,555 | md | Markdown | settings.md | shreyas-dev/CueObserve | c573f6f5f2725696b3fceabf9955d9013fe3d77d | [

"Apache-2.0"

] | null | null | null | settings.md | shreyas-dev/CueObserve | c573f6f5f2725696b3fceabf9955d9013fe3d77d | [

"Apache-2.0"

] | null | null | null | settings.md | shreyas-dev/CueObserve | c573f6f5f2725696b3fceabf9955d9013fe3d77d | [

"Apache-2.0"

] | null | null | null | # Settings

## Slack

CueObserve can send two types of Slack alerts:

1. Anomaly alerts are sent when an anomaly is detected

2. App Monitoring alerts are sent when an anomaly detection job fails

To get these alerts, enter your Slack Bot User OAuth Access Token. To create a Slack Bot User OAuth Access Token, follow the steps outlined in [Slack documentation](https://api.slack.com/messaging/webhooks).

1. Create a slack app.

2. Once you create the app, you will be redirected to your app’s `Basic Information` screen. In `Add features and functionality`, click on `Bots`.

3. On the next screen, click on `add a scope` and you will be redirected to the OAuth & Permissions page.

4. On the next screen, go to the Scopes section, click on `Add an OAuth Scope` and add the `files:write` and `chat:write` permissions. Then click on `Install to Workspace` to create the `Bot User OAuth Token`.

5. Copy `Bot User OAuth Token` and paste it in the CueObserve Settings screen.

Next, create two channels in Slack. Add the app to these two channels.

1. To find your Slack channel's ID, right-click the channel in Slack and then click on `Open channel details`. You'll find the channel ID at the bottom. Copy and paste it in the CueObserve Settings screen.

2. Click on the `Save` button.
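
Before saving, it can be useful to check that the token and channel ID work together. The sketch below builds a request for Slack's `chat.postMessage` Web API method using only the Python standard library. It is a hedged illustration, not CueObserve's own code: the helper name `build_slack_request` and the sample token and channel values are assumptions for this example.

```python
import json
import urllib.request

SLACK_POST_MESSAGE_URL = "https://slack.com/api/chat.postMessage"


def build_slack_request(bot_token: str, channel_id: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a chat.postMessage request for the given channel."""
    payload = json.dumps({"channel": channel_id, "text": text}).encode("utf-8")
    return urllib.request.Request(
        SLACK_POST_MESSAGE_URL,
        data=payload,
        headers={
            "Authorization": f"Bearer {bot_token}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values; substitute your own Bot User OAuth Token and channel ID.
    request = build_slack_request("xoxb-your-token", "C0123456789", "Test alert")
    print(request.get_method(), request.full_url)
    # To actually send the message, uncomment the next two lines:
    # with urllib.request.urlopen(request) as response:
    #     print(json.load(response))
```

If the app has not been added to the channel or the `chat:write` scope is missing, Slack reports the problem in the JSON response rather than through the HTTP status code, so inspect the response body when testing.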

## Email

1. Make sure you enabled email alerts during installation.

2. Add the email ID to the `Send Email To` input field. To add more than one email ID, separate them with commas in the input field, as shown below.

| 48.59375 | 207 | 0.75627 | eng_Latn | 0.987012 |

3eeef4a67e2db2da9da343491c2effa65648789c | 5,301 | md | Markdown | articles/sql-database/sql-database-elastic-pool-manage-tsql.md | OpenLocalizationTestOrg/azure-docs-pr15_hu-HU | ac1600ab65c96c83848e8b2445ac60e910561a25 | [

"CC-BY-3.0",

"CC-BY-4.0",

"MIT"

] | null | null | null | articles/sql-database/sql-database-elastic-pool-manage-tsql.md | OpenLocalizationTestOrg/azure-docs-pr15_hu-HU | ac1600ab65c96c83848e8b2445ac60e910561a25 | [

"CC-BY-3.0",

"CC-BY-4.0",

"MIT"

] | null | null | null | articles/sql-database/sql-database-elastic-pool-manage-tsql.md | OpenLocalizationTestOrg/azure-docs-pr15_hu-HU | ac1600ab65c96c83848e8b2445ac60e910561a25 | [

"CC-BY-3.0",

"CC-BY-4.0",

"MIT"

] | null | null | null | <properties

pageTitle="Create or move an Azure SQL database into an elastic pool using T-SQL | Microsoft Azure"

description="Use T-SQL to create an Azure SQL database in an elastic pool, or use T-SQL to move a database into and out of pools."

services="sql-database"

documentationCenter=""

authors="srinia"

manager="jhubbard"

editor=""/>

<tags

ms.service="sql-database"

ms.devlang="NA"

ms.topic="article"

ms.tgt_pltfrm="NA"

ms.workload="data-management"

ms.date="05/27/2016"

ms.author="srinia"/>

# <a name="monitor-and-manage-an-elastic-database-pool-with-transact-sql"></a>Monitor and manage an elastic database pool with Transact-SQL

> [AZURE.SELECTOR]

- [Azure portal](sql-database-elastic-pool-manage-portal.md)

- [PowerShell](sql-database-elastic-pool-manage-powershell.md)

- [C#](sql-database-elastic-pool-manage-csharp.md)

- [T-SQL](sql-database-elastic-pool-manage-tsql.md)

Use the [Create Database (Azure SQL Database)](https://msdn.microsoft.com/library/dn268335.aspx) and [Alter Database (Azure SQL Database)](https://msdn.microsoft.com/library/mt574871.aspx) commands to create databases in elastic pools and to move databases into and out of them. The elastic pool must exist before you can use these commands. These commands affect only databases. New pools cannot be created, and pool properties (such as min and max eDTUs) cannot be set, with T-SQL commands.

## <a name="create-a-new-database-in-an-elastic-pool"></a>Create a new database in an elastic pool

Use the CREATE DATABASE command with the SERVICE_OBJECTIVE option.

    CREATE DATABASE db1 ( SERVICE_OBJECTIVE = ELASTIC_POOL (name = [S3M100] ));
    -- Create a database named db1 in a pool named S3M100.

All databases in an elastic pool inherit the service tier of the elastic pool (Basic, Standard, Premium).

## <a name="move-a-database-between-elastic-pools"></a>Move a database between elastic pools

Use the ALTER DATABASE command with MODIFY and set the SERVICE\_OBJECTIVE option to ELASTIC\_POOL; set the name to the name of the target pool.

    ALTER DATABASE db1 MODIFY ( SERVICE_OBJECTIVE = ELASTIC_POOL (name = [PM125] ));
    -- Move the database named db1 to a pool named PM125.

## <a name="move-a-database-into-an-elastic-pool"></a>Move a database into an elastic pool

Use the ALTER DATABASE command with MODIFY and set the SERVICE\_OBJECTIVE option to ELASTIC\_POOL; set the name to the name of the target pool.

    ALTER DATABASE db1 MODIFY ( SERVICE_OBJECTIVE = ELASTIC_POOL (name = [S3100] ));
    -- Move the database named db1 to a pool named S3100.

## <a name="move-a-database-out-of-an-elastic-pool"></a>Move a database out of an elastic pool

Use the ALTER DATABASE command and set SERVICE_OBJECTIVE to one of the performance levels (S0, S1, and so on).

    ALTER DATABASE db1 MODIFY ( SERVICE_OBJECTIVE = 'S1');
    -- Changes the database into a stand-alone database with the service objective S1.

## <a name="list-databases-in-an-elastic-pool"></a>List databases in an elastic pool

Use the [sys.database\_service\_objectives view](https://msdn.microsoft.com/library/mt712619) to list all the databases in an elastic pool. Log in to the master database to query the view.

    SELECT d.name, slo.*
    FROM sys.databases d
    JOIN sys.database_service_objectives slo
    ON d.database_id = slo.database_id
    WHERE elastic_pool_name = 'MyElasticPool';

## <a name="get-resource-usage-data-for-a-pool"></a>Get resource usage data for a pool

Use the [sys.elastic\_pool\_resource\_stats view](https://msdn.microsoft.com/library/mt280062.aspx) to examine the resource usage statistics of an elastic pool on a logical server. Log in to the master database to query the view.

    SELECT * FROM sys.elastic_pool_resource_stats
    WHERE elastic_pool_name = 'MyElasticPool'
    ORDER BY end_time DESC;

## <a name="get-resource-usage-for-an-elastic-database"></a>Get resource usage for an elastic database

Use the [sys.dm\_db\_resource\_stats view](https://msdn.microsoft.com/library/dn800981.aspx) or the [sys.resource\_stats view](https://msdn.microsoft.com/library/dn269979.aspx) to examine the resource usage statistics of a database in an elastic pool. This process is similar to querying resource usage for any single database.

## <a name="next-steps"></a>Next steps

After creating an elastic database pool, you can manage the elastic databases in the pool by creating elastic jobs. Elastic jobs make it easier to run T-SQL scripts against any number of databases in the pool. For more information, see [Elastic database jobs overview](sql-database-elastic-jobs-overview.md).

See [Scaling out with Azure SQL Database](sql-database-elastic-scale-introduction.md): use elastic database tools to scale out, move data, query, or create transactions.

| 64.646341 | 542 | 0.777966 | hun_Latn | 0.999946 |

3ef00dd5336293e125393eecca1b287c587ee201 | 8,141 | md | Markdown | articles/supply-chain/warehousing/packing-vs-storage-dimensions.md | MicrosoftDocs/Dynamics-365-Operations.pl-pl | fabc82553d43158349e740e44634860e5c927b6d | [

"CC-BY-4.0",

"MIT"

] | 5 | 2020-05-18T17:14:43.000Z | 2022-03-02T03:47:15.000Z | articles/supply-chain/warehousing/packing-vs-storage-dimensions.md | MicrosoftDocs/Dynamics-365-Operations.pl-pl | fabc82553d43158349e740e44634860e5c927b6d | [

"CC-BY-4.0",

"MIT"

] | 8 | 2017-12-12T13:01:05.000Z | 2021-01-17T16:41:42.000Z | articles/supply-chain/warehousing/packing-vs-storage-dimensions.md | MicrosoftDocs/Dynamics-365-Operations.pl-pl | fabc82553d43158349e740e44634860e5c927b6d | [

"CC-BY-4.0",

"MIT"

] | 4 | 2019-10-12T18:17:43.000Z | 2021-01-17T16:37:51.000Z | ---

title: Set different dimensions for packing and storage

description: This topic shows how to specify which process (packing, storage, or nested packing) each specified dimension will be used for.

author: mirzaab

ms.date: 01/28/2021

ms.topic: article

ms.prod: ''

ms.technology: ''

ms.search.form: EcoResPhysicalProductDimensions, WHSPhysDimUOM

audience: Application User

ms.reviewer: kamaybac

ms.search.scope: Core, Operations

ms.search.region: Global

ms.author: mirzaab

ms.search.validFrom: 2021-01-28

ms.dyn365.ops.version: 10.0.17

ms.openlocfilehash: 0e8ce576f21f1f5ea5f3acb7d43bbe68826e6f39

ms.sourcegitcommit: 3b87f042a7e97f72b5aa73bef186c5426b937fec

ms.translationtype: HT

ms.contentlocale: pl-PL

ms.lasthandoff: 09/29/2021

ms.locfileid: "7580079"

---

# <a name="set-different-dimensions-for-packing-and-storage"></a>Set different dimensions for packing and storage

[!include [banner](../../includes/banner.md)]

Some items are packed or stored in such a way that you might need to track their physical dimensions differently for each of several different processes. The *Packaging product dimensions* feature lets you set up one or more types of dimensions for each product. Each dimension type provides a set of physical measurements (weight, width, depth, and height) and establishes the process where those physical measurement values apply. When this feature is enabled, the system supports the following types of dimensions:

- *Storage*: Storage dimensions are used together with location volumetrics to determine how many of each item can be stored in various warehouse locations.

- *Packing*: Packing dimensions are used during containerization and the manual packing process to determine how many of each item will fit in various container types.

- *Nested packing*: Nested packing dimensions are used when the packing process contains multiple levels.

*Storage* dimensions are supported even when the *Packaging product dimensions* feature isn't enabled. You set them up by using the **Physical dimension** page in Supply Chain Management. These dimensions are used by all processes where packing and nested packing dimensions aren't specified.

*Packing* and *Nested packing* dimensions are set up by using the **Physical product dimensions** page, which is added when you turn on the *Packaging product dimensions* feature.

This topic provides a scenario that illustrates how to use this feature.

## <a name="turn-on-the-packaging-product-dimensions-feature"></a>Turn on the Packaging product dimensions feature

Before you can use this feature, it must be turned on in your system. Admins can use the [Feature management](../../fin-ops-core/fin-ops/get-started/feature-management/feature-management-overview.md) workspace to check the status of the feature and turn it on if required. The feature is listed in the following way:

- **Module:** *Warehouse management*

- **Feature name:** *Packaging product dimensions*

## <a name="example-scenario"></a>Example scenario

### <a name="set-up-the-scenario"></a>Set up the scenario

Before you can run the example scenario, you must prepare your system as described in this section.

#### <a name="enable-demo-data"></a>Enable demo data

To work through this scenario using the specific demo records and values specified here, you must use a system where the standard [demo data](../../fin-ops-core/dev-itpro/deployment/deploy-demo-environment.md) is installed. Additionally, you must select the *USMF* legal entity before you begin.

#### <a name="add-a-new-physical-dimension-to-a-product"></a>Add a new physical dimension to a product

Add a new physical dimension for a product by following these steps:

1. Go to **Product information management \> Products \> Released products**.

1. Select the product with the **Item number** *A0001*.

1. On the Action Pane, open the **Manage inventory** tab, and in the **Warehouse** group, select **Physical product dimensions**.

1. The **Physical product dimensions** page opens. On the Action Pane, select **New** to add a new dimension to the grid, using the following settings:

    - **Physical dimension type** - *Packing*

    - **Physical unit** - *pcs*

    - **Weight** - *4*

    - **Weight unit** - *kg*

    - **Depth** - *3*

    - **Height** - *4*

    - **Width** - *3*

    - **Length unit** - *cm*

    - **Volume unit** - *cm3*

    The **Volume** field is calculated automatically based on the **Depth**, **Height**, and **Width** settings.

#### <a name="create-a-new-container-type"></a>Create a new container type

Go to **Warehouse management \> Setup \> Containers \> Container types**, and create a new record with the following settings:

- **Container type code** - *Short Box*

- **Description** - *Short Box*

- **Maximum net weight** - *50*

- **Volume** - *144*

- **Length** - *6*

- **Width** - *6*

- **Height** - *4*

#### <a name="create-a-container-group"></a>Create a container group

Go to **Warehouse management \> Setup \> Containers \> Container groups**, and create a new record with the following settings:

- **Container group ID** - *Short Box*

- **Description** - *Short Box*

Add a new line to the **Details** section. Set the **Container type** to *Short Box*.

#### <a name="set-up-a-container-build-template"></a>Set up a container build template

Go to **Warehouse management \> Setup \> Containers \> Container build templates**, and select **Boxes**. Change the **Container group ID** to *Short Box*.

### <a name="run-the-scenario"></a>Run the scenario

After you've prepared your system as described in the previous section, you can run the scenario described in the following section.

#### <a name="create-a-sales-order-and-create-a-shipment"></a>Create a sales order and create a shipment

In this process, you create a shipment based on the *packing* dimensions of items whose height is less than 3.

1. Go to **Sales and marketing \> Sales orders \> All sales orders**.

1. On the Action Pane, select **New**.

1. In the **Create sales order** dialog box, set the following values:

    - **Customer account:** *US-001*

    - **Warehouse:** *63*

1. Select **OK** to close the dialog box and create the new sales order.

1. The new sales order opens. It should include an empty line in the grid on the **Sales order lines** FastTab. On this new line, set the following values:

    - **Item number:** *A0001*

    - **Quantity:** *5*

1. On the **Sales order lines** FastTab, select **Inventory \> Reservation**.

1. On the **Reservation** page, on the Action Pane, select **Reserve lot** to reserve the inventory.

1. Close the page.

1. On the Action Pane, open the **Warehouse** tab, and select **Release to warehouse** to create work for the warehouse.

1. On the **Sales order lines** FastTab, select **Warehouse \> Shipment details**.

1. On the Action Pane, open the **Transportation** tab, and select **View containers**. Confirm that the item has been containerized into two *Short Box* containers.

#### <a name="place-an-item-into-storage"></a>Place an item into storage

1. Open the mobile device, sign in to warehouse 63, and go to **Inventory \> Adjust in**.

1. Enter **Location** = *SHORT-01*. Create a new license plate with **Item** = *A0001* and **Quantity** = *1 pcs*.

1. Select **OK**. You receive the error "Location SHORT-01 failed, item A0001 does not fit the dimensions specified for the location". This happens because the item's *Storage* type dimensions are larger than the dimensions specified in the location profile.

[!INCLUDE[footer-include](../../includes/footer-banner.md)] | 59.860294 | 517 | 0.763665 | pol_Latn | 0.999873 |

3ef07a70e9ca3f8f6a11a184d7cce6b703e4bcee | 6,271 | md | Markdown | _posts/2016-09-14-java-thread-exclusion-sync.md | jingboli/jingbolee.github.io | 9a6e51806aec8525d2048f06e4e232bbdee8fe40 | [

"MIT"

] | null | null | null | _posts/2016-09-14-java-thread-exclusion-sync.md | jingboli/jingbolee.github.io | 9a6e51806aec8525d2048f06e4e232bbdee8fe40 | [

"MIT"

] | null | null | null | _posts/2016-09-14-java-thread-exclusion-sync.md | jingboli/jingbolee.github.io | 9a6e51806aec8525d2048f06e4e232bbdee8fe40 | [

"MIT"

] | null | null | null | ---

layout: post

title: "Java Multithreading: Thread Safety, Synchronization, and Mutual Exclusion"

subtitle: "Thread safety, synchronization, mutual exclusion"

date: 2016-09-18

author: "Jerome"

header-img: "img/post-bg-06.jpg"

---

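## Java Multithreading

### How thread-safety problems arise

- A single-threaded program has no thread-safety problems. They can appear once several threads are running and need to access the same resource.

- When multiple threads access a critical resource at the same time (also called a shared resource; it can be an object, a field of an object, a file, a database, and so on), a thread-safety problem may occur.

### Solving thread safety: synchronization and mutual exclusion

- Essentially every concurrency model solves thread-safety problems by "serializing access to the critical resource": **at any given moment only one thread may access the critical resource, which is also called synchronized, mutually exclusive access**.

- Typically a lock is acquired before the code that accesses the critical resource and released once the access is finished, so that other threads can then proceed.

- Java provides two ways to get synchronized, mutually exclusive access: **synchronized** and **Lock**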
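The rest of this post only demonstrates `synchronized`. For comparison, here is a minimal sketch of the `Lock` approach using `java.util.concurrent.locks.ReentrantLock`. The class name `LockedInserter` and the helper `runDemo` are made up for illustration and are not part of the original examples.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class LockedInserter {
    private final List<Integer> datas = new ArrayList<>();
    private final Lock lock = new ReentrantLock();

    void insert(int value) {
        lock.lock();              // blocks until the lock is available
        try {
            datas.add(value);     // critical section
        } finally {
            lock.unlock();        // always release, even if add() throws
        }
    }

    int size() {
        lock.lock();
        try {
            return datas.size();
        } finally {
            lock.unlock();
        }
    }

    // Starts `threads` workers that each insert `perThread` values,
    // waits for all of them, and returns the final list size.
    static int runDemo(int threads, int perThread) {
        LockedInserter inserter = new LockedInserter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    inserter.insert(i);
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return inserter.size();
    }

    public static void main(String[] args) {
        System.out.println(runDemo(4, 1000)); // prints 4000
    }
}
```

Unlike `synchronized`, a `Lock` is not released automatically, which is why the `unlock()` call sits in a `finally` block.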

### synchronized

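- Mutex: a mutual-exclusion lock is placed on the critical resource, so while one thread is accessing it, all other threads have to wait.

- In Java, **every object has an associated lock, known as its monitor**. When several threads access an object at the same time, a thread can proceed only after it has acquired that object's lock.

- In Java, the synchronized keyword can mark a method or a code block. When a thread calls a synchronized method of an object or enters a synchronized block, it acquires the lock of that object. Other threads cannot enter the method or block in the meantime. Only when the method or block finishes does the thread release the lock, and only then can another thread execute it.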

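- Method without the synchronized keyword

- InsertData.java

        import java.util.ArrayList;

        public class InsertData {
            private ArrayList<Integer> datas = new ArrayList<>();

            // Not synchronized: two threads can run this loop at the same time,
            // and ArrayList itself is not thread-safe.
            public void insert(Thread thread) {
                for (int i = 0; i < 5; i++) {
                    System.out.println(thread.getName() + " inserts data: " + i);
                    datas.add(i);
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                }
            }
        }

- SynchronizedTest.java

        public class SynchronizedTest {
            public static void main(String[] args) {
                // Both threads share the same InsertData instance.
                final InsertData insertData = new InsertData();
                new Thread("t1") {
                    @Override
                    public void run() {
                        insertData.insert(Thread.currentThread());
                    }
                }.start();
                new Thread("t2") {
                    @Override
                    public void run() {
                        insertData.insert(Thread.currentThread());
                    }
                }.start();
            }
        }

- Sample output (note: both threads insert into the ArrayList at the same time, so the lines interleave, the exact order varies between runs, and the list may end up in an unexpected state):

        t2 inserts data: 0
        t1 inserts data: 0
        t1 inserts data: 1
        t2 inserts data: 1
        t1 inserts data: 2
        t2 inserts data: 2
        t1 inserts data: 3
        t2 inserts data: 3
        t1 inserts data: 4
        t2 inserts data: 4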

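- Method with the synchronized keyword

- InsertData.java

        import java.util.ArrayList;

        public class InsertData {
            private ArrayList<Integer> datas = new ArrayList<>();

            // synchronized: a thread must hold this object's lock to run the method.
            public synchronized void insert(Thread thread) {
                for (int i = 0; i < 5; i++) {
                    System.out.println(thread.getName() + " inserts data: " + i);
                    datas.add(i);
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                }
            }
        }

- Sample output (note: t1 acquired the lock first here; t2 starts inserting only after t1 has finished and released the lock):

        t1 inserts data: 0
        t1 inserts data: 1
        t1 inserts data: 2
        t1 inserts data: 3
        t1 inserts data: 4
        t2 inserts data: 0
        t2 inserts data: 1
        t2 inserts data: 2
        t2 inserts data: 3
        t2 inserts data: 4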

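- Points to note:

1. While one thread is executing a synchronized method of an object, other threads cannot execute any other synchronized method of that object, because **an object has only one lock; once a thread holds it, no other thread can acquire it**.

2. While one thread is executing a synchronized method of an object, other threads can still call its non-synchronized methods, because **calling a non-synchronized method does not require the object's lock**.

3. Synchronized methods called on different objects of the same type do not exclude each other, because the threads are not locking the same object.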
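The first two points can be observed directly. The following is a sketch under assumptions: `SharedMonitorDemo` and its methods are hypothetical names, and `CountDownLatch` is used only to control the timing. One thread stays inside a synchronized method, a second thread calling another synchronized method of the same object ends up in the `BLOCKED` state, and a non-synchronized method can still be called.

```java
import java.util.concurrent.CountDownLatch;

class SharedMonitorDemo {
    private final CountDownLatch entered = new CountDownLatch(1);
    private final CountDownLatch release = new CountDownLatch(1);

    // Holds this object's monitor until `release` is counted down.
    synchronized void holdLock() {
        entered.countDown();
        try {
            release.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // A second synchronized method on the same object: it needs the same monitor.
    synchronized void otherSynchronized() {
    }

    // Not synchronized: callable even while another thread holds the monitor.
    String unsynchronized() {
        return "ok";
    }

    // Returns the state observed for the second thread while the first holds the lock.
    static Thread.State runDemo() {
        SharedMonitorDemo shared = new SharedMonitorDemo();
        Thread holder = new Thread(shared::holdLock);
        Thread waiter = new Thread(shared::otherSynchronized);
        try {
            holder.start();
            shared.entered.await();              // holder is now inside holdLock()
            waiter.start();
            while (waiter.getState() != Thread.State.BLOCKED) {
                Thread.sleep(1);                 // poll until waiter is stuck on the monitor
            }
            Thread.State observed = waiter.getState();
            shared.unsynchronized();             // returns immediately, no lock required
            shared.release.countDown();          // holder leaves and frees the monitor
            holder.join();
            waiter.join();
            return observed;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(runDemo()); // prints BLOCKED
    }
}
```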

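- synchronized block

- Format:

        synchronized (synObject) {
            // code that accesses the critical resource
        }

- When a thread executes this block, it acquires the lock of the object synObject, so other threads cannot execute the block at the same time.

- synObject can be this, which means the lock of the current object is acquired, or a field of the class, which means the lock of the object that field refers to is acquired.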

- InsertData.java (version 1)

        import java.util.ArrayList;

        public class InsertData {
            private ArrayList<Integer> datas = new ArrayList<>();

            public void insert(Thread thread) {
                // Lock on the current object, the same lock a synchronized method uses.
                synchronized (this) {
                    for (int i = 0; i < 5; i++) {
                        System.out.println(thread.getName() + " inserts data: " + i);
                        datas.add(i);
                        try {
                            Thread.sleep(1000);
                        } catch (InterruptedException e) {
                            e.printStackTrace();
                        }
                    }
                }
            }
        }

- InsertData.java (version 2)

        import java.util.ArrayList;

        public class InsertData {
            private ArrayList<Integer> datas = new ArrayList<>();
            // Dedicated lock object, used only for synchronization.
            private Object synObject = new Object();

            public void insert(Thread thread) {
                synchronized (synObject) {
                    for (int i = 0; i < 5; i++) {
                        System.out.println(thread.getName() + " inserts data: " + i);
                        datas.add(i);
                        try {
                            Thread.sleep(1000);
                        } catch (InterruptedException e) {
                            e.printStackTrace();
                        }
                    }
                }
            }
        }

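- A synchronized block is much more flexible than a synchronized method. Often only part of a method needs synchronization; synchronizing the whole method then hurts performance. A synchronized block avoids this by guarding only the statements that actually need it.

- Every class also has a lock, which can be used to control concurrent access to static data members.

- If one thread executes a non-static synchronized method of an object while another thread executes a static synchronized method of that object's class, the two do not exclude each other. The static synchronized method holds the class lock and the non-static synchronized method holds the object lock, so there is no mutual exclusion between them.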

- InsertData.java

        public class InsertData {
            private static int staticNumber = 1;

            // static synchronized: locks on the class (InsertData.class).
            public static synchronized void insert(Thread thread) {
                for (int i = 0; i < 5; i++) {
                    System.out.println(thread.getName() + " data: " + staticNumber);
                    staticNumber++;
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                }
            }

            // Instance synchronized: locks on the object, not on the class.
            public synchronized void insert1() {
                System.out.println("insert1 executed");
            }
        }

- SynchronizedTest.java

        public class SynchronizedTest {
            public static void main(String[] args) {
                final InsertData insertData = new InsertData();
                new Thread("t1") {
                    @Override
                    public void run() {
                        InsertData.insert(Thread.currentThread());
                    }
                }.start();
                new Thread("t2") {
                    @Override
                    public void run() {
                        insertData.insert1();
                    }
                }.start();
            }
        }

- Sample output (the first thread is inside insert(), yet the second thread is not blocked when it calls insert1()):

        t1 data: 1
        insert1 executed
        t1 data: 2
        t1 data: 3
        t1 data: 4
        t1 data: 5
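To make the difference between the class lock and the object lock visible, here is a small sketch that uses `Thread.holdsLock`. The class `ClassLockDemo` and its method names are illustrative only and do not appear in the examples above.

```java
class ClassLockDemo {
    // static synchronized: the lock is the Class object, ClassLockDemo.class.
    static synchronized boolean staticMethodHoldsClassLock() {
        return Thread.holdsLock(ClassLockDemo.class);
    }

    // Instance synchronized: the lock is `this`, not the Class object.
    synchronized boolean instanceMethodHoldsClassLock() {
        return Thread.holdsLock(ClassLockDemo.class);
    }

    synchronized boolean instanceMethodHoldsOwnLock() {
        return Thread.holdsLock(this);
    }

    public static void main(String[] args) {
        ClassLockDemo demo = new ClassLockDemo();
        System.out.println(staticMethodHoldsClassLock());        // true
        System.out.println(demo.instanceMethodHoldsClassLock()); // false
        System.out.println(demo.instanceMethodHoldsOwnLock());   // true
    }
}
```

Because the two kinds of methods lock different objects, a thread inside one never prevents another thread from entering the other.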

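### Note

- For a synchronized method or a synchronized block, when an exception is thrown the JVM automatically releases the lock held by the current thread, so an exception does not leave the lock held forever and cause a deadlock.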
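A minimal sketch of this behaviour, assuming a hypothetical class `ExceptionReleaseDemo`: the first thread throws inside a synchronized method and then stays alive, and the second thread can still enter a synchronized method of the same object.

```java
import java.util.concurrent.CountDownLatch;

class ExceptionReleaseDemo {
    private int completed = 0;

    synchronized void failingWork() {
        throw new IllegalStateException("simulated failure inside a synchronized method");
    }

    synchronized void normalWork() {
        completed++;
    }

    synchronized int completed() {
        return completed;
    }

    // Returns how many normalWork() calls succeeded after the failure (expected: 1).
    static int runDemo() {
        ExceptionReleaseDemo shared = new ExceptionReleaseDemo();
        CountDownLatch failed = new CountDownLatch(1);
        CountDownLatch finish = new CountDownLatch(1);

        Thread t1 = new Thread(() -> {
            try {
                shared.failingWork();
            } catch (IllegalStateException expected) {
                failed.countDown();   // the monitor was released when the exception left the method
            }
            try {
                finish.await();       // stay alive so t2 runs while t1 still exists
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread t2 = new Thread(shared::normalWork);

        try {
            t1.start();
            failed.await();
            t2.start();
            t2.join();                // would hang here if t1 still held the lock
            finish.countDown();
            t1.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return shared.completed();
    }

    public static void main(String[] args) {
        System.out.println(runDemo()); // prints 1
    }
}
```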

| 26.348739 | 178 | 0.559719 | yue_Hant | 0.275875 |

3ef09582cdfbc0e69822320e15c0a110f9c1403d | 75 | md | Markdown | tag/twitter.md | andrewsio/andrewsio-blog.github.io | 008e349a3aeb8ba3e162b5cf72e15093ee63eea2 | [

"MIT"

] | 2 | 2021-10-02T23:32:37.000Z | 2022-01-03T21:57:43.000Z | tag/twitter.md | andrewsio/andrewsio-blog.github.io | 008e349a3aeb8ba3e162b5cf72e15093ee63eea2 | [

"MIT"

] | 1 | 2015-08-03T19:02:29.000Z | 2017-01-25T16:51:24.000Z | tag/twitter.md | andrewsio/andrewsio-blog.github.io | 008e349a3aeb8ba3e162b5cf72e15093ee63eea2 | [

"MIT"

] | 1 | 2020-01-02T19:15:55.000Z | 2020-01-02T19:15:55.000Z | ---

layout: tagpage

title: "Tag: twitter"

tag: twitter

robots: noindex

---

| 10.714286 | 21 | 0.68 | eng_Latn | 0.241862 |

3ef1d712ed1fe67c4eb2d763748007f280417c5b | 5,046 | md | Markdown | list.md | gtg7784/List-Data-Structure-Presentation | 7c62cc0c6acce8ea10c8729ff080381bbf77fd35 | [

"MIT"

] | 2 | 2021-04-23T01:34:12.000Z | 2021-10-14T01:23:52.000Z | list.md | gtg7784/List-Data-Structure-Presentation | 7c62cc0c6acce8ea10c8729ff080381bbf77fd35 | [

"MIT"

] | null | null | null | list.md | gtg7784/List-Data-Structure-Presentation | 7c62cc0c6acce8ea10c8729ff080381bbf77fd35 | [

"MIT"

] | null | null | null | ---

marp: true

style: |

img[alt~="center"] {

display: block;

margin: 0 auto;

}

table {

margin-left: 30px

}

footer: '2021 Sunrin Internet High School Data Structures - Lists'

---

# Lists

### 30406 고태건, 30413 박경민

---

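# Contents

1. What is a list?

2. Arrays

3. Looking up an element in an array

4. Adding to and deleting from an array

5. Linked lists

6. Searching a linked list

7. Adding to and deleting from a linked list

8. Circular linked lists

9. Doubly linked lists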

---

# What is a list?

**A list is a data structure that holds values in linear order**

There are two kinds.

- Array list

- Linked list

---

<style scoped>

table {

margin-top: 80px;

}

</style>

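# Array list

- **A data structure that stores data contiguously**

- Each element is stored at an index, and the index is the element's position relative to the first element

- Lookup by index is fast, but insertion and deletion are slow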

0 | 1 | 2 | 3 | 4 | 5 | 6

:----:|:----:|:-----:|:----:|:----:|:----:|:----:

Banana | Apple | Orange | Coconut | Pizza | Papaya | Melon

---

<style scoped>

table {

margin-top: 20px;

margin-bottom: 20px;

}

</style>

# Looking up an element in an array

- Lookup by index is very fast

- The figure below shows why

0 | 1 | 2 | 3 | 4 | **5** | 6

:----:|:----:|:-----:|:----:|:----:|:----:|:----:

Banana | Apple | Orange | Coconut | Pizza | **Papaya** | Melon

```

What is stored at index 5?

= address of the first element + 5 slots -> Papaya

```
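The lookup above can be sketched in Java as follows. The class name `ArrayLookupDemo` and the helper `at` are illustrative and not part of the slides; the array contents come from the table above.

```java
class ArrayLookupDemo {
    static final String[] ITEMS = {
        "Banana", "Apple", "Orange", "Coconut", "Pizza", "Papaya", "Melon"
    };

    // Index access goes straight to the slot: no scan over earlier elements.
    static String at(int index) {
        return ITEMS[index];
    }

    public static void main(String[] args) {
        System.out.println(at(5)); // prints Papaya
    }
}
```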

---

<style scoped>

table {

margin-top: 20px;

margin-bottom: 20px;

}

</style>

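# Adding to and deleting from an array

- Arrays are a poor fit for workloads with many insertions or deletions.

- Every insertion or deletion has to move all the elements after it to new positions in memory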

0 | 1 | 2 | 3 | **4** | 5 | 6

:----:|:----:|:-----:|:----:|:----:|:----:|:----:

Banana | Apple | Orange | Coconut | **Pizza** | Papaya | Melon

```

Why is there a pizza in the fruit array!

> Let's remove the pizza

```
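A rough sketch of what removing the pizza costs, under the assumption of a plain Java array and a hypothetical helper `removeAt`: every element after the removed index has to move one slot to the left.

```java
import java.util.Arrays;

class ArrayRemoveDemo {
    // Returns a copy of `source` without the element at `index`.
    static String[] removeAt(String[] source, int index) {
        String[] shifted = Arrays.copyOf(source, source.length);
        for (int i = index; i < shifted.length - 1; i++) {
            shifted[i] = shifted[i + 1];   // each later element moves one slot left
        }
        return Arrays.copyOf(shifted, shifted.length - 1); // drop the duplicated last slot
    }

    public static void main(String[] args) {
        String[] items = {"Banana", "Apple", "Orange", "Coconut", "Pizza", "Papaya", "Melon"};
        System.out.println(Arrays.toString(removeAt(items, 4)));
        // prints [Banana, Apple, Orange, Coconut, Papaya, Melon]
    }
}
```

The loop runs once per element after the removed one, so removing near the front of a long array is the most expensive case.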

---

0 | 1 | 2 | 3 | **4** | 5 | 6