| hexsha | size | ext | lang | max_stars_repo_path | max_stars_repo_name | max_stars_repo_head_hexsha | max_stars_repo_licenses | max_stars_count | max_stars_repo_stars_event_min_datetime | max_stars_repo_stars_event_max_datetime | max_issues_repo_path | max_issues_repo_name | max_issues_repo_head_hexsha | max_issues_repo_licenses | max_issues_count | max_issues_repo_issues_event_min_datetime | max_issues_repo_issues_event_max_datetime | max_forks_repo_path | max_forks_repo_name | max_forks_repo_head_hexsha | max_forks_repo_licenses | max_forks_count | max_forks_repo_forks_event_min_datetime | max_forks_repo_forks_event_max_datetime | content | avg_line_length | max_line_length | alphanum_fraction | lid | lid_prob |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

8b15b7848b7a92827669631c3894a5e9c2e96a44 | 6,490 | md | Markdown | includes/azure-stack-edge-iot-troubleshoot-compute.md | changeworld/azure-docs.it- | 34f70ff6964ec4f6f1a08527526e214fdefbe12a | [

"CC-BY-4.0",

"MIT"

] | 1 | 2017-06-06T22:50:05.000Z | 2017-06-06T22:50:05.000Z | includes/azure-stack-edge-iot-troubleshoot-compute.md | changeworld/azure-docs.it- | 34f70ff6964ec4f6f1a08527526e214fdefbe12a | [

"CC-BY-4.0",

"MIT"

] | 41 | 2016-11-21T14:37:50.000Z | 2017-06-14T20:46:01.000Z | includes/azure-stack-edge-iot-troubleshoot-compute.md | changeworld/azure-docs.it- | 34f70ff6964ec4f6f1a08527526e214fdefbe12a | [

"CC-BY-4.0",

"MIT"

] | 7 | 2016-11-16T18:13:16.000Z | 2017-06-26T10:37:55.000Z | ---

author: v-dalc

ms.service: databox

ms.author: alkohli

ms.topic: include

ms.date: 03/23/2021

ms.openlocfilehash: 0d912d0ac3f0fcf4c52116e67909038a1973304b

ms.sourcegitcommit: 32e0fedb80b5a5ed0d2336cea18c3ec3b5015ca1

ms.translationtype: MT

ms.contentlocale: it-IT

ms.lasthandoff: 03/30/2021

ms.locfileid: "105105444"

---

Use the IoT Edge agent runtime responses to troubleshoot compute-related errors. Here is a list of possible responses:

* 200 - OK
* 400 - The deployment configuration is malformed or invalid.
* 417 - The device doesn't have a deployment configuration set.
* 412 - The schema version in the deployment configuration is invalid.
* 406 - The device is offline or not sending status reports.
* 500 - An error occurred in the IoT Edge runtime.

For more information, see [IoT Edge Agent](../articles/iot-edge/iot-edge-runtime.md?preserve-view=true&view=iotedge-2018-06#iot-edge-agent).
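
The response codes listed above can be kept in a small lookup table when you triage deployments from a script. The following is a minimal sketch only: the dictionary and the `describe_response` helper are illustrative names, not part of any IoT Edge SDK.

```python
# Response codes and meanings copied from the list above.
# AGENT_RESPONSES and describe_response are hypothetical names used for illustration.
AGENT_RESPONSES = {
    200: "OK",
    400: "The deployment configuration is malformed or invalid.",
    417: "The device doesn't have a deployment configuration set.",
    412: "The schema version in the deployment configuration is invalid.",
    406: "The device is offline or not sending status reports.",
    500: "An error occurred in the IoT Edge runtime.",
}


def describe_response(code: int) -> str:
    """Return the documented meaning of an IoT Edge agent response code."""
    return AGENT_RESPONSES.get(code, f"Unknown response code: {code}")


if __name__ == "__main__":
    for code in (200, 412, 999):
        print(code, "-", describe_response(code))
```

A code that is not in the list falls through to a generic message rather than raising, which keeps a triage loop running when an unexpected value shows up.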

The following error is related to the IoT Edge service on your Azure Stack Edge Pro device.

### <a name="compute-modules-have-unknown-status-and-cant-be-used"></a>Compute modules have Unknown status and can't be used

#### <a name="error-description"></a>Error description

All modules on the device show Unknown status and can't be used. The Unknown status persists through a reboot.<!--Original Support ticket relates to trying to deploy a container app on a Hub. Based on the work item, I assume the error description should not be that specific, and that the error applies to Azure Stack Edge Devices, which is the focus of this troubleshooting.-->

#### <a name="suggested-solution"></a>Suggested solution

Delete the IoT Edge service, and then redeploy the modules. For more information, see [Remove IoT Edge service](../articles/databox-online/azure-stack-edge-gpu-manage-compute.md#remove-iot-edge-service).

### <a name="modules-show-as-running-but-are-not-working"></a>Modules show as running but are not working

#### <a name="error-description"></a>Error description

The runtime status of the module shows as running, but the expected results are not seen.

This condition can occur when the module route configuration isn't working, or when `edgehub` isn't routing messages as expected. Check the `edgehub` logs. If you see errors such as a failure to connect to the IoT Hub service, the most common cause is a connectivity issue. Connectivity issues can occur because the AMQP port that the IoT Hub service uses by default for communication is blocked, or because the web proxy server is blocking these messages.

#### <a name="suggested-solution"></a>Suggested solution

Take the following steps:

1. To resolve the error, go to the IoT Hub resource for your device, and then select your Edge device.
1. Go to **Set modules > Runtime settings**.
1. Add the `UpstreamProtocol` environment variable and assign it a value of `AMQPWS`. With this setting, messages are sent over WebSocket via port 443.
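
Before changing the upstream protocol, it can help to confirm which ports are reachable from the network the device sits on. The sketch below is an assumption-laden illustration: it only tests whether a TCP connection opens, so a proxy that inspects and drops AMQP traffic can still pass this check. Port 5671 is the standard AMQP-over-TLS port, and the hub hostname is a placeholder.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    # Hypothetical hub name; replace with your own IoT Hub hostname.
    hub = "my-hub.azure-devices.net"
    checks = (("AMQP over TLS", 5671), ("AMQP over WebSocket", 443))
    for name, port in checks:
        state = "reachable" if port_reachable(hub, port) else "blocked or unreachable"
        print(f"{name} (port {port}): {state}")
```

If port 5671 is blocked while 443 is reachable, switching to `AMQPWS` as described in the steps is the likely fix.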

### <a name="modules-show-as-running-but-do-not-have-an-ip-assigned"></a>Modules show as running but do not have an IP assigned

#### <a name="error-description"></a>Error description

The runtime status of the module shows as running, but the containerized app doesn't have an IP address assigned.

This condition occurs because the range of IPs that you provided for the Kubernetes external service IPs is not large enough. Extend this range so that it covers every container and VM that you deploy.

#### <a name="suggested-solution"></a>Suggested solution

In the local web UI of your device, take the following steps:

1. Go to the **Compute** page. Select the port for which you enabled a compute network.
1. Enter a static, contiguous range of IPs for **Kubernetes external service IPs**. You need one IP for the `edgehub` service. You also need one IP for each IoT Edge module and for each VM that you'll deploy.
1. Select **Apply**. The changed IP range should take effect immediately.

For more information, see [Change external service IPs for containers](../articles/databox-online/azure-stack-edge-gpu-manage-compute.md#change-external-service-ips-for-containers).
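
The sizing rule in the steps above (one IP for `edgehub`, plus one per module and one per VM) can be checked before you enter a range in the local web UI. This is a rough sketch; the function names and the sample addresses are made up for illustration.

```python
import ipaddress


def required_ip_count(modules: int, vms: int) -> int:
    """One IP for edgehub, plus one per IoT Edge module and one per VM."""
    return 1 + modules + vms


def range_size(first: str, last: str) -> int:
    """Number of addresses in the inclusive, contiguous range first..last."""
    start = ipaddress.ip_address(first)
    end = ipaddress.ip_address(last)
    if end < start:
        raise ValueError("range end precedes range start")
    return int(end) - int(start) + 1


if __name__ == "__main__":
    # Sample values only: three modules, two VMs, and a five-address range.
    needed = required_ip_count(modules=3, vms=2)
    available = range_size("10.128.44.241", "10.128.44.245")
    print(f"needed={needed} available={available} sufficient={available >= needed}")
```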

### <a name="configure-static-ips-for-iot-edge-modules"></a>Configure static IPs for IoT Edge modules

#### <a name="problem-description"></a>Problem description

Kubernetes assigns dynamic IPs to each IoT Edge module on your Azure Stack Edge Pro GPU device. A method is needed to configure static IPs for the modules.

#### <a name="suggested-solution"></a>Suggested solution

You can specify fixed IP addresses for your IoT Edge modules via the K8s-experimental section, as shown below:

```json
{
  "k8s-experimental": {
    "serviceOptions": {
      "loadBalancerIP": "100.23.201.78",
      "type": "LoadBalancer"
    }
  }
}
```
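
If you manage several modules, you may prefer to generate this fragment instead of editing it by hand. The helper below is a sketch under that assumption; `static_ip_options` is a hypothetical name, and the output simply mirrors the structure shown above.

```python
import ipaddress
import json


def static_ip_options(load_balancer_ip: str) -> str:
    """Return a k8s-experimental JSON fragment that pins a LoadBalancer IP."""
    # Raises ValueError if the address is malformed.
    ipaddress.ip_address(load_balancer_ip)
    options = {
        "k8s-experimental": {
            "serviceOptions": {
                "loadBalancerIP": load_balancer_ip,
                "type": "LoadBalancer",
            }
        }
    }
    return json.dumps(options, indent=2)


if __name__ == "__main__":
    print(static_ip_options("100.23.201.78"))
```

Validating the address first catches typos before the fragment reaches a deployment.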

### <a name="expose-kubernetes-service-as-cluster-ip-service-for-internal-communication"></a>Expose Kubernetes service as cluster IP service for internal communication

#### <a name="problem-description"></a>Problem description

By default, the IoT service type is load balancer, and the service is assigned an externally exposed IP address. Your application may not need an external IP address. You may instead need to expose the pods inside the Kubernetes cluster so that other pods can reach them, rather than exposing them as an external load balancer service.

#### <a name="suggested-solution"></a>Suggested solution

Use the create options via the K8s-experimental section. The following service option should work with port bindings.

```json
{
  "k8s-experimental": {
    "serviceOptions": {
      "type": "ClusterIP"
    }
  }
}

``` | 60.092593 | 585 | 0.782435 | ita_Latn | 0.997419 |

8b16bca99bdb7e395666cff3b2d3710b8e0aaba3 | 181 | md | Markdown | manifest/development/foruns.md | MUHammadUmerHayat/Informatinal-Links | 6f911c2e852b7eb19456a9bab37083fbdfd9e59b | [

"MIT"

] | 1 | 2020-09-05T11:23:10.000Z | 2020-09-05T11:23:10.000Z | manifest/development/foruns.md | MUHammadUmerHayat/Informatinal-Links | 6f911c2e852b7eb19456a9bab37083fbdfd9e59b | [

"MIT"

] | null | null | null | manifest/development/foruns.md | MUHammadUmerHayat/Informatinal-Links | 6f911c2e852b7eb19456a9bab37083fbdfd9e59b | [

"MIT"

] | null | null | null | ## Forums

* [Devnaestrada](https://devnaestrada.com.br/)

* [Guj](https://www.guj.com.br/)

* [Imasters](https://forum.imasters.com.br/)

* [Tableless](http://forum.tableless.com.br/) | 30.166667 | 46 | 0.674033 | yue_Hant | 0.220587 |

8b175366f7dd916d957e0542ecb04e0f9215d231 | 2,606 | md | Markdown | witcher/README.md | daelsepara/zil-experiment | 1e9be391a5fae766dace210a074be5fea172ef31 | [

"MIT"

] | null | null | null | witcher/README.md | daelsepara/zil-experiment | 1e9be391a5fae766dace210a074be5fea172ef31 | [

"MIT"

] | null | null | null | witcher/README.md | daelsepara/zil-experiment | 1e9be391a5fae766dace210a074be5fea172ef31 | [

"MIT"

] | null | null | null | # Witcher ZIL

## Design Goals

With our increasing understanding of ZIL, it is time to create a more sophisticated game. Here, we try to create an interactive fiction game set in the Witcher game universe by CD Projekt Red.

Our goals are to implement these features

- [X] combat! (14 June 2020)

- [X] silver and steel swords that can be enhanced by the application of certain oils (11 June 2020)

- [X] swords with oil should also confer bonuses when combatting specific monsters (14 June 2020)

- [X] witcher medallion that can detect invisible objects (12 June 2020)

- [X] travelling with or without Roach (12 June 2020)

- [X] eating/drinking food and/or potions and decoctions (13 June 2020, eating, 22 June 2020 cat's eye potion)

- [X] monster bounties (19 June 2020)

- [X] mechanisms for generic NPCs (blacksmith, alchemist, merchant) (20 June 2020)

### Phase 2 goals (Starting 22 June 2020)

- [X] random money drops/pickups (23 June 2020)

- [ ] armorer NPC

- [X] non-monster-killing quests (22 June 2020 search quest, 24 June 2020 Recover item(s) quest and connected quests)

- [ ] superior versions of oils

- [ ] non-bounty related NPC dialogs

- [X] other interesting objects (24 June 2020 border posts, allow fast travel between locations)

### Phase 3 (TBD)

- [ ] other types of silver/steel swords

- [ ] overall story or plot arc

## Compiling and running

You need a ZIL compiler or assembler, or something like [ZILF](https://bitbucket.org/jmcgrew/zilf/wiki/Home) installed to convert the .zil file into a format usable by a z-machine interpreter such as [Frotz](https://davidgriffith.gitlab.io/frotz/).

Once installed, you can compile and convert it to a Z-machine file using *zilf* and *zapf*:

```

./zilf witcher.zil

./zapf witcher.zap

```

To play the game, run it with a Z-machine interpreter like *Frotz*:

```

frotz witcher.z5

```

You are then greeted by the following screen:

```

ZIL Witcher

Experiments with ZIL

By SD Separa (2020)

Inspired by the Witcher Games by CD Projekt Red

Release 0 / Serial number 200612 / ZILF 0.9 lib J5

------------------------------------------------------

Once you were many. Now you are few. Hunters. Killers of the world's filth. Witchers. The ultimate killing machines. Among you, a legend, the one they call Geralt of

Rivia, the White Wolf.

That legend is you.

------------------------------------------------------

Campsite

A small campfire is burning underneath a tree. All is quiet except for the crackling sounds of burning wood. The fire keeps the wolves and other would-be predators at

bay.

Roach is here.

>

```

(work in progress)

| 33.410256 | 248 | 0.712203 | eng_Latn | 0.994937 |

8b1798b7c60c70854c997a61a6a9b87ee18399e5 | 766 | md | Markdown | reference/concepts/implicit_self.md | gsilvapt/python | d675468b2437d4c09c358d023ef998a05a781f58 | [

"MIT"

] | 1,177 | 2017-06-21T20:24:06.000Z | 2022-03-29T02:30:55.000Z | reference/concepts/implicit_self.md | gsilvapt/python | d675468b2437d4c09c358d023ef998a05a781f58 | [

"MIT"

] | 1,938 | 2019-12-12T08:07:10.000Z | 2021-01-29T12:56:13.000Z | reference/concepts/implicit_self.md | gsilvapt/python | d675468b2437d4c09c358d023ef998a05a781f58 | [

"MIT"

] | 1,095 | 2017-06-26T23:06:19.000Z | 2022-03-29T03:25:38.000Z | # Implicit self

TODO: ADD MORE

- the example uses the implicit `self` argument for methods and properties linked to a specific instance of the class. [matrix](../exercise-concepts/matrix.md)
- the example uses `self` for methods and properties linked to a specific instance of the class. [robot-simulator](../exercise-concepts/robot-simulator.md)
- the exercise relies on the implied passing of `self` as the first parameter of bound methods. [allergies](../exercise-concepts/allergies.md)
- the student needs to know how to use `self` in a class. [binary-search-tree](../exercise-concepts/binary-search-tree.md)
- within the class definition, methods and properties can be accessed via the `self.` notation. [phone-number](../exercise-concepts/phone-number.md)
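
The notes above can be illustrated with a short example. The `Matrix` class here is a made-up stand-in and is not taken from any of the linked exercises; it shows that calling a method on an instance passes that instance as `self` automatically.

```python
class Matrix:
    def __init__(self, rows):
        # `self` is the instance being initialised.
        self.rows = rows

    def row(self, index):
        # Attributes of this instance are reached through `self.`.
        return self.rows[index - 1]

    @property
    def height(self):
        # Properties receive `self` implicitly as well.
        return len(self.rows)


m = Matrix([[1, 2], [3, 4]])

# Bound method call: Python supplies `m` as `self`.
via_instance = m.row(2)

# Equivalent explicit form: the instance is passed by hand.
via_class = Matrix.row(m, 2)

print(via_instance == via_class, m.height)
```

The two calls are equivalent, which is why `self` must appear as the first parameter in the method definition even though callers never pass it.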

| 76.6 | 158 | 0.774151 | eng_Latn | 0.993035 |

8b1806724b6dbc8c12ffaa82ddb1c95a80235b5b | 177 | md | Markdown | README.md | ajmwagar/oxy | c835355aab6eed3c2c9f73d672c175b8dc30e0fe | [

"MIT"

] | 4 | 2019-08-17T02:14:55.000Z | 2022-03-20T03:09:58.000Z | README.md | ajmwagar/oxy | c835355aab6eed3c2c9f73d672c175b8dc30e0fe | [

"MIT"

] | null | null | null | README.md | ajmwagar/oxy | c835355aab6eed3c2c9f73d672c175b8dc30e0fe | [

"MIT"

] | null | null | null | # oxy

A Wayland window manager...

See this [blog post](https://averywagar.com/posts/2019/06/oxidizing-my-workflow-writing-a-wayland-window-manager-in-rust-part-1-setting-up/).

| 35.4 | 141 | 0.762712 | eng_Latn | 0.420695 |

8b18496843ff6140edb340fee62271115c246bcc | 376 | md | Markdown | README.md | syucream/elastiquic | 9a351840b23fb1426aa2795a55e05ddb3b88f8bf | [

"MIT"

] | 1 | 2017-08-01T14:28:22.000Z | 2017-08-01T14:28:22.000Z | README.md | syucream/elastiquic | 9a351840b23fb1426aa2795a55e05ddb3b88f8bf | [

"MIT"

] | null | null | null | README.md | syucream/elastiquic | 9a351840b23fb1426aa2795a55e05ddb3b88f8bf | [

"MIT"

] | null | null | null | # elastiquic

An elastic QUIC client and benchmark tool written in Go.

## Quickstart

* Prepare goquic

```

$ go get -u -d github.com/devsisters/goquic

$ cd $GOPATH/src/github.com/devsisters/goquic/

$ ./build_libs.sh

```

* Write definitions

```

$ vim definitions.json

```

* Run elastiquic

```

$ go run elastiquic.go

..

Total requests: 2, successed: 2, failed: 0

```

| 12.965517 | 60 | 0.68617 | eng_Latn | 0.420552 |

8b1a433d6b369e5d1c7327f50b705338227d9f6b | 19,454 | md | Markdown | articles/hdinsight/hdinsight-apache-kafka-connect-vpn-gateway.md | DarryStonem/azure-docs.es-es | aa59a5fa09188f4cd2ae772e7818b708e064b1c0 | [

"CC-BY-4.0",

"MIT"

] | 1 | 2017-05-20T17:31:12.000Z | 2017-05-20T17:31:12.000Z | articles/hdinsight/hdinsight-apache-kafka-connect-vpn-gateway.md | DarryStonem/azure-docs.es-es | aa59a5fa09188f4cd2ae772e7818b708e064b1c0 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | articles/hdinsight/hdinsight-apache-kafka-connect-vpn-gateway.md | DarryStonem/azure-docs.es-es | aa59a5fa09188f4cd2ae772e7818b708e064b1c0 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

title: "Connect to Kafka on HDInsight using virtual networks - Azure | Microsoft Docs"

description: "Learn how to remotely connect to Kafka on HDInsight using the kafka-python client. The configuration in this document uses HDInsight inside an Azure Virtual Network. The remote client connects to the virtual network through a point-to-site VPN gateway."

services: hdinsight

documentationCenter:

author: Blackmist

manager: jhubbard

editor: cgronlun

tags: azure-portal

ms.service: hdinsight

ms.devlang:

ms.custom: hdinsightactive

ms.topic: article

ms.tgt_pltfrm: na

ms.workload: big-data

ms.date: 04/18/2017

ms.author: larryfr

translationtype: Human Translation

ms.sourcegitcommit: aaf97d26c982c1592230096588e0b0c3ee516a73

ms.openlocfilehash: 9ddf19c008c35525419a357436b1a969a4b19205

ms.lasthandoff: 04/27/2017

---

# <a name="connect-to-kafka-on-hdinsight-preview-through-an-azure-virtual-network"></a>Connect to Kafka on HDInsight (preview) through an Azure Virtual Network

Learn how to connect to Kafka on HDInsight using Azure Virtual Networks. Kafka clients (producers and consumers) can run directly on HDInsight or on remote systems. Remote clients must connect to Kafka on HDInsight through an Azure Virtual Network. Use the information in this document to understand how remote clients can connect to HDInsight using Azure Virtual Networks.

> [!IMPORTANT]
> Several of the configurations discussed in this document can be used with Windows, macOS, or Linux clients. However, the included point-to-site example only provides a VPN client for Windows.
>
> The example also uses a Python client ([kafka-python](http://kafka-python.readthedocs.io/en/master/)) to verify communication with Kafka on HDInsight.

## <a name="architecture-and-planning"></a>Architecture and planning

HDInsight clusters are secured inside an Azure Virtual Network and only allow incoming SSH and HTTPS traffic. That traffic arrives through a public gateway, which does not route traffic from Kafka clients. To access Kafka from a remote client, you must create an Azure Virtual Network that provides a virtual private network (VPN) gateway. Once you have configured the virtual network and gateway, install HDInsight into the virtual network and connect to it through the VPN gateway.

The following list describes the process of using Kafka on HDInsight with a virtual network:

1. Create a virtual network. For specific information on using HDInsight with Azure Virtual Networks, see the [Extend HDInsight by using Azure Virtual Network](hdinsight-extend-hadoop-virtual-network.md) document.

2. (Optional) Create an Azure Virtual Machine inside the virtual network and install a custom DNS server on it. This DNS server enables name resolution for remote clients in a site-to-site or vnet-to-vnet configuration. For more information, see the [Name resolution for VMs and cloud services](../virtual-network/virtual-networks-name-resolution-for-vms-and-role-instances.md) document.

3. Create a VPN gateway for the virtual network. For more information on VPN gateway configurations, see the [About VPN gateway](../vpn-gateway/vpn-gateway-about-vpngateways.md) document.

4. Create HDInsight inside the virtual network. If you configured a custom DNS server for the network, HDInsight is automatically configured to use it.

5. (Optional) If you did not use a custom DNS server and do not have name resolution between clients and the virtual network, you must configure Kafka for IP advertising. For more information, see the [Configure Kafka for IP advertising](#configure-kafka-for-ip-advertising) section of this document.

## <a name="create-using-powershell"></a>Create: Using PowerShell

The steps in this section create the following configuration using [Azure PowerShell](/powershell/azure/overview):

* Virtual network
* Point-to-site VPN gateway
* Azure Storage account (used by HDInsight)
* Kafka on HDInsight

1. Follow the steps in the [Working with self-signed certificates for Point-to-site connections](../vpn-gateway/vpn-gateway-certificates-point-to-site.md) document to create the certificates needed for the gateway.

2. Open a PowerShell prompt and use the following code to log in to your Azure subscription:

    ```powershell
    Add-AzureRmAccount
    # If you have multiple subscriptions, uncomment to set the subscription
    #Select-AzureRmSubscription -SubscriptionName "name of your subscription"
    ```

3. Use the following code to create variables that contain configuration information:

    ```powershell
    # Prompt for generic information
    $resourceGroupName = Read-Host "What is the resource group name?"
    $baseName = Read-Host "What is the base name? It is used to create names for resources, such as 'net-basename' and 'kafka-basename':"
    $location = Read-Host "What Azure Region do you want to create the resources in?"
    $rootCert = Read-Host "What is the file path to the root certificate? It is used to secure the VPN gateway."

    # Prompt for HDInsight credentials
    $adminCreds = Get-Credential -Message "Enter the HTTPS user name and password for the HDInsight cluster" -UserName "admin"
    $sshCreds = Get-Credential -Message "Enter the SSH user name and password for the HDInsight cluster" -UserName "sshuser"

    # Names for Azure resources
    $networkName = "net-$baseName"
    $clusterName = "kafka-$baseName"
    $storageName = "store$baseName" # Can't use dashes in storage names
    $defaultContainerName = $clusterName
    $defaultSubnetName = "default"
    $gatewaySubnetName = "GatewaySubnet"
    $gatewayPublicIpName = "GatewayIp"
    $gatewayIpConfigName = "GatewayConfig"
    $vpnRootCertName = "rootcert"
    $vpnName = "VPNGateway"

    # Network settings
    $networkAddressPrefix = "10.0.0.0/16"
    $defaultSubnetPrefix = "10.0.0.0/24"
    $gatewaySubnetPrefix = "10.0.1.0/24"
    $vpnClientAddressPool = "172.16.201.0/24"

    # HDInsight settings
    $hdiWorkerNodes = 4
    $hdiVersion = "3.5"
    $hdiType = "Kafka"
    ```

4. Use the following code to create the Azure resource group and virtual network:

    ```powershell
    # Create the resource group that contains everything
    New-AzureRmResourceGroup -Name $resourceGroupName -Location $location

    # Create the subnet configuration
    $defaultSubnetConfig = New-AzureRmVirtualNetworkSubnetConfig -Name $defaultSubnetName `
        -AddressPrefix $defaultSubnetPrefix
    $gatewaySubnetConfig = New-AzureRmVirtualNetworkSubnetConfig -Name $gatewaySubnetName `
        -AddressPrefix $gatewaySubnetPrefix

    # Create the virtual network with both subnets
    New-AzureRmVirtualNetwork -Name $networkName `
        -ResourceGroupName $resourceGroupName `
        -Location $location `
        -AddressPrefix $networkAddressPrefix `
        -Subnet $defaultSubnetConfig, $gatewaySubnetConfig

    # Get the network & subnet that were created
    $network = Get-AzureRmVirtualNetwork -Name $networkName `
        -ResourceGroupName $resourceGroupName
    $gatewaySubnet = Get-AzureRmVirtualNetworkSubnetConfig -Name $gatewaySubnetName `
        -VirtualNetwork $network
    $defaultSubnet = Get-AzureRmVirtualNetworkSubnetConfig -Name $defaultSubnetName `
        -VirtualNetwork $network

    # Set a dynamic public IP address for the gateway subnet
    $gatewayPublicIp = New-AzureRmPublicIpAddress -Name $gatewayPublicIpName `
        -ResourceGroupName $resourceGroupName `
        -Location $location `
        -AllocationMethod Dynamic
    $gatewayIpConfig = New-AzureRmVirtualNetworkGatewayIpConfig -Name $gatewayIpConfigName `
        -Subnet $gatewaySubnet `
        -PublicIpAddress $gatewayPublicIp

    # Get the certificate info
    # Get the full path in case a relative path was passed
    $rootCertFile = Get-ChildItem $rootCert
    $cert = New-Object System.Security.Cryptography.X509Certificates.X509Certificate2($rootCertFile)
    $certBase64 = [System.Convert]::ToBase64String($cert.RawData)
    $p2sRootCert = New-AzureRmVpnClientRootCertificate -Name $vpnRootCertName `
        -PublicCertData $certBase64

    # Create the VPN gateway
    New-AzureRmVirtualNetworkGateway -Name $vpnName `
        -ResourceGroupName $resourceGroupName `
        -Location $location `
        -IpConfigurations $gatewayIpConfig `
        -GatewayType Vpn `
        -VpnType RouteBased `
        -EnableBgp $false `
        -GatewaySku Standard `
        -VpnClientAddressPool $vpnClientAddressPool `
        -VpnClientRootCertificates $p2sRootCert
    ```

    > [!WARNING]
    > It can take several minutes for this process to complete.

5. Use the following code to create the Azure Storage account and blob container:

    ```powershell
    # Create the storage account
    New-AzureRmStorageAccount `
        -ResourceGroupName $resourceGroupName `
        -Name $storageName `
        -Type Standard_GRS `
        -Location $location

    # Get the storage account keys and create a context
    $defaultStorageKey = (Get-AzureRmStorageAccountKey -ResourceGroupName $resourceGroupName `
        -Name $storageName)[0].Value
    $storageContext = New-AzureStorageContext -StorageAccountName $storageName `
        -StorageAccountKey $defaultStorageKey

    # Create the default storage container
    New-AzureStorageContainer -Name $defaultContainerName `
        -Context $storageContext
    ```

6. Use the following code to create the HDInsight cluster:

    ```powershell
    # Create the HDInsight cluster
    New-AzureRmHDInsightCluster `
        -ResourceGroupName $resourceGroupName `
        -ClusterName $clusterName `
        -Location $location `
        -ClusterSizeInNodes $hdiWorkerNodes `
        -ClusterType $hdiType `
        -OSType Linux `
        -Version $hdiVersion `
        -HttpCredential $adminCreds `
        -SshCredential $sshCreds `
        -DefaultStorageAccountName "$storageName.blob.core.windows.net" `
        -DefaultStorageAccountKey $defaultStorageKey `
        -DefaultStorageContainer $defaultContainerName `
        -VirtualNetworkId $network.Id `
        -SubnetName $defaultSubnet.Id
    ```

    > [!WARNING]
    > This process takes around 20 minutes to complete.

7. Use the following cmdlet to retrieve the URL for the Windows VPN client for the virtual network:

    ```powershell
    Get-AzureRmVpnClientPackage -ResourceGroupName $resourceGroupName `
        -VirtualNetworkGatewayName $vpnName `
        -ProcessorArchitecture Amd64
    ```

    To download the Windows VPN client, use the returned URI in your web browser.
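
If you change the address prefixes used in the network settings of step 3, it is worth checking them before running the deployment. The sketch below assumes two constraints: each subnet must sit inside the virtual network prefix, and the VPN client address pool should not overlap the virtual network. The `check_layout` helper is a hypothetical name; the prefixes are the ones used in the script.

```python
import ipaddress

# Prefixes copied from the "Network settings" variables in step 3.
network = ipaddress.ip_network("10.0.0.0/16")
default_subnet = ipaddress.ip_network("10.0.0.0/24")
gateway_subnet = ipaddress.ip_network("10.0.1.0/24")
vpn_client_pool = ipaddress.ip_network("172.16.201.0/24")


def check_layout(vnet, subnets, client_pool):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    for subnet in subnets:
        if not subnet.subnet_of(vnet):
            problems.append(f"{subnet} is not inside {vnet}")
    for i, first in enumerate(subnets):
        for second in subnets[i + 1:]:
            if first.overlaps(second):
                problems.append(f"{first} overlaps {second}")
    if client_pool.overlaps(vnet):
        problems.append(f"VPN client pool {client_pool} overlaps {vnet}")
    return problems


if __name__ == "__main__":
    issues = check_layout(network, [default_subnet, gateway_subnet], vpn_client_pool)
    print("No problems found" if not issues else "\n".join(issues))
```

`subnet_of` requires Python 3.7 or later.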

## <a name="configure-kafka-for-ip-advertising"></a>Configure Kafka for IP advertising

By default, Zookeeper returns the domain name of the Kafka brokers to clients. This configuration does not work for the VPN client, because it cannot use name resolution for entities in the virtual network. Use the following steps to configure Kafka on HDInsight to advertise IP addresses instead of domain names:

1. Using a web browser, go to https://CLUSTERNAME.azurehdinsight.net. Replace __CLUSTERNAME__ with the name of the Kafka on HDInsight cluster.

    When prompted, use the HTTPS user name and password for the cluster. The Ambari Web UI for the cluster is displayed.

2. To view information on Kafka, select __Kafka__ from the list on the left.

3. To view the Kafka configuration, select __Configs__ from the top middle.

4. To find the __kafka-env__ configuration, enter `kafka-env` in the __Filter__ field on the upper right.

5. To configure Kafka to advertise IP addresses, add the following text to the bottom of the __kafka-env-template__ field:

    ```
    # Configure Kafka to advertise IP addresses instead of FQDN
    IP_ADDRESS=$(hostname -i)
    echo advertised.listeners=$IP_ADDRESS
    sed -i.bak -e '/advertised/{/advertised@/!d;}' /usr/hdp/current/kafka-broker/conf/server.properties
    echo "advertised.listeners=PLAINTEXT://$IP_ADDRESS:9092" >> /usr/hdp/current/kafka-broker/conf/server.properties
    ```

6. To configure the interface that Kafka listens on, enter `listeners` in the __Filter__ field on the upper right.

7. To configure Kafka to listen on all network interfaces, change the value in the __listeners__ field to `PLAINTEXT://0.0.0.0:9092`.

8. To save the configuration changes, use the __Save__ button. Enter a text message describing the changes. Select __OK__ once the changes have been saved.

9. To prevent errors when restarting Kafka, use the __Service Actions__ button and select __Turn On Maintenance Mode__. Select __OK__ to complete this operation.

10. To restart Kafka, use the __Restart__ button and select __Restart All Affected__. Confirm the restart, and then use the __OK__ button after the operation has completed.

11. To disable maintenance mode, use the __Service Actions__ button and select __Turn Off Maintenance Mode__. Select **OK** to complete this operation.
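
The listener values in the steps above follow the pattern `PROTOCOL://address:port`. As a small illustration, the helper below formats the `advertised.listeners` line that the `kafka-env` script appends and rejects values that cannot be valid, such as a malformed address or a port outside 1-65535. The function name is hypothetical; port 9092 is the value used in the script.

```python
import ipaddress


def advertised_listener(ip: str, port: int = 9092, protocol: str = "PLAINTEXT") -> str:
    """Format an advertised.listeners line for server.properties."""
    # Raises ValueError if the address is malformed.
    ipaddress.ip_address(ip)
    if not 1 <= port <= 65535:
        raise ValueError(f"port {port} is outside the valid range 1-65535")
    return f"advertised.listeners={protocol}://{ip}:{port}"


if __name__ == "__main__":
    print(advertised_listener("10.0.0.12"))
```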

## <a name="connect-to-the-vpn-gateway"></a>Connect to the VPN gateway

To connect to the VPN gateway from a __Windows client__, use the __Connect to Azure__ section of the [Configure a Point-to-Site connection](../vpn-gateway/vpn-gateway-howto-point-to-site-rm-ps.md#a-nameconnectapart-7---connect-to-azure) document.

## <a name="remote-kafka-client"></a>Cliente Kafka remoto

Para conectarse a Kafka desde el equipo cliente, debe usar la dirección IP de los agentes de Kafka o los nodos de Zookeeper (cualquiera sea la opción que requiere el cliente). Use los pasos siguientes para recuperar la dirección IP de los agentes de Kafka y, luego, úselos desde una aplicación Python

1. Use el script siguiente para recuperar las direcciones IP de los nodos del clúster:

```powershell

# Get the NICs for the HDInsight workernodes (names contain 'workernode').

$nodes = Get-AzureRmNetworkInterface `

-ResourceGroupName $resourceGroupName `

| where-object {$_.Name -like "*workernode*"}

# Loop through each node and get the IP address

foreach($node in $nodes) {

$node.IpConfigurations.PrivateIpAddress

}

```

En este script se supone que `$resourceGroupName` es el nombre del grupo de recursos de Azure que contiene la red virtual. La salida del script es similar al texto siguiente:

10.0.0.12

10.0.0.6

10.0.0.13

10.0.0.5

> [!NOTE]

> Si el cliente Kafka usa nodos de Zookeeper en lugar de agentes de Kafka, reemplace `*workernode*` por `*zookeepernode*` en el script de PowerShell.

> [!WARNING]

> Si escala el clúster o los nodos presentan un error y es necesario reemplazarlos, es posible que las direcciones IP cambien. Actualmente, no hay direcciones IP específicas asignadas previamente para los nodos de un clúster de HDInsight.

2. Use el código siguiente para instalar el cliente [kafka-python](http://kafka-python.readthedocs.io/):

pip install kafka-python

3. Para enviar datos a Kafka, use el código Python siguiente:

```python

from kafka import KafkaProducer

# Replace the `ip_address` entries with the IP address of your worker nodes

producer = KafkaProducer(bootstrap_servers=['ip_address1','ip_address2','ip_adderess3','ip_address4'])

for _ in range(50):

producer.send('testtopic', b'test message')

```

Replace the `'ip_address'` entries with the addresses returned in step 1 of this section.

> [!NOTE]
> This code sends the string `test message` to the topic `testtopic`. The default configuration of Kafka on HDInsight is to create the topic if it does not exist.

4. To retrieve the messages from Kafka, use the following Python code:

```python
from kafka import KafkaConsumer

# Replace the `ip_address` entries with the IP addresses of your worker nodes
# Note: auto_offset_reset='earliest' resets the starting offset to the beginning
# of the topic
consumer = KafkaConsumer(bootstrap_servers=['ip_address1','ip_address2','ip_address3','ip_address4'],auto_offset_reset='earliest')

consumer.subscribe(['testtopic'])

for msg in consumer:
    print(msg)
```

Replace the `'ip_address'` entries with the addresses returned in step 1 of this section. The output contains the test messages that the producer sent in the previous step.
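The IP list printed in step 1 can be turned into the `bootstrap_servers` value used in steps 3 and 4. The following is a minimal sketch and not part of the original walkthrough: the helper name `to_bootstrap_servers` is hypothetical, and port 9092 is an assumption (it is Kafka's conventional broker port), so adjust it if your brokers listen elsewhere.

```python
import ipaddress


def to_bootstrap_servers(script_output, port=9092):
    """Convert text with one IP address per line into 'host:port' strings.

    `port` defaults to 9092, which is an assumption about the cluster.
    """
    servers = []
    for line in script_output.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines in the pasted output
        ipaddress.ip_address(line)  # raises ValueError for a malformed address
        servers.append(f"{line}:{port}")
    return servers


if __name__ == "__main__":
    pasted = """
    10.0.0.12
    10.0.0.6
    """
    print(to_bootstrap_servers(pasted))
```

The resulting list can be passed as `bootstrap_servers` to `KafkaProducer` or `KafkaConsumer`. Because the addresses can change when the cluster is scaled, regenerating the list from fresh script output is safer than hard-coding it.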

## <a name="troubleshooting"></a>Troubleshooting

If you have problems connecting to the virtual network, or connecting to HDInsight through the network, see the document [Troubleshoot Virtual Network gateway and connections](../network-watcher/network-watcher-troubleshoot-manage-powershell.md) for guidance.

## <a name="next-steps"></a>Next steps

For more information on creating an Azure Virtual Network with a point-to-site VPN gateway, see the following documents:

* [Configure a point-to-site connection using the Azure portal](../vpn-gateway/vpn-gateway-howto-point-to-site-resource-manager-portal.md)

* [Configure a point-to-site connection using Azure PowerShell](../vpn-gateway/vpn-gateway-howto-point-to-site-rm-ps.md)

For more information on working with Kafka on HDInsight, see the following documents:

* [Get started with Kafka on HDInsight](hdinsight-apache-kafka-get-started.md)

* [Use mirroring with Kafka on HDInsight](hdinsight-apache-kafka-mirroring.md)

| 54.340782 | 580 | 0.758404 | spa_Latn | 0.876105 |

8b1ae860f41424099ff34f1ab52513eb65ff0ec5 | 921 | md | Markdown | csharp/destinations/snowflake/README.md | raaij/quix-library | d286e6bcad194d40adf261a85ecccec3e32bccd4 | [

"Apache-2.0"

] | null | null | null | csharp/destinations/snowflake/README.md | raaij/quix-library | d286e6bcad194d40adf261a85ecccec3e32bccd4 | [

"Apache-2.0"

] | null | null | null | csharp/destinations/snowflake/README.md | raaij/quix-library | d286e6bcad194d40adf261a85ecccec3e32bccd4 | [

"Apache-2.0"

] | null | null | null | # C# Snowflake Sink

The sample in this folder shows how to stream data from Quix to Snowflake. It handles both parameter and event data.

## Requirements / Prerequisites

- A Snowflake account.

## Environment variables

The code sample uses the following environment variables:

- **Broker__TopicName**: Name of the input topic to read from.

- **Snowflake__ConnectionString**: The Snowflake database connection string.

- e.g. account=xxx.north-europe.azure;user=xxx;password=xxx;db=xxx
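The connection string shown above is a list of `key=value` pairs separated by semicolons. The sample itself is written in C#; purely to illustrate the format, here is a minimal Python sketch that reads the two variables and splits the connection string. The function name `parse_connection_string` is hypothetical, and the sketch assumes that values never contain a semicolon.

```python
import os


def parse_connection_string(value):
    """Split 'key=value;key=value' into a dict with lower-cased keys."""
    parts = {}
    for item in value.split(";"):
        item = item.strip()
        if not item:
            continue  # tolerate a trailing semicolon
        key, sep, val = item.partition("=")
        if not sep:
            raise ValueError(f"missing '=' in connection string item: {item!r}")
        parts[key.strip().lower()] = val.strip()
    return parts


if __name__ == "__main__":
    # Variable names are taken from the list above; defaults are placeholders.
    topic = os.environ.get("Broker__TopicName", "")
    settings = parse_connection_string(os.environ.get("Snowflake__ConnectionString", ""))
    print(topic, sorted(settings))
```

Printing only the sorted keys avoids writing the password to the console.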

## Known limitations

- Binary parameters are not supported in this version

- Stream metadata is not persisted in this version

## Docs

Check out the [SDK docs](https://quix.ai/docs/sdk/introduction.html) for detailed usage guidance.

## How to run

Create an account on [Quix](https://portal.platform.quix.ai/self-sign-up?xlink=github) to edit or deploy this application without a local environment setup.

| 36.84 | 156 | 0.774159 | eng_Latn | 0.984952 |

8b1b73481af267bfd9ce4cac3f0311ef294c1aa4 | 6,808 | md | Markdown | articles/governance/resource-graph/overview.md | Nike1016/azure-docs.hu-hu | eaca0faf37d4e64d5d6222ae8fd9c90222634341 | [

"CC-BY-4.0",

"MIT"

] | 1 | 2019-09-29T16:59:33.000Z | 2019-09-29T16:59:33.000Z | articles/governance/resource-graph/overview.md | Nike1016/azure-docs.hu-hu | eaca0faf37d4e64d5d6222ae8fd9c90222634341 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | articles/governance/resource-graph/overview.md | Nike1016/azure-docs.hu-hu | eaca0faf37d4e64d5d6222ae8fd9c90222634341 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

title: Overview of Azure Resource Graph

description: Understand how the Azure Resource Graph service enables complex querying of resources at scale.

author: DCtheGeek

ms.author: dacoulte

ms.date: 05/06/2019

ms.topic: overview

ms.service: resource-graph

manager: carmonm

ms.openlocfilehash: d78c640f4269c799d3d371e6dd9db477faf96694

ms.sourcegitcommit: 47ce9ac1eb1561810b8e4242c45127f7b4a4aa1a

ms.translationtype: MT

ms.contentlocale: hu-HU

ms.lasthandoff: 07/11/2019

ms.locfileid: "67807414"

---

# <a name="overview-of-the-azure-resource-graph-service"></a>Overview of the Azure Resource Graph service

Azure Resource Graph is a service in Azure that extends Azure Resource Management. It provides efficient, high-performance resource exploration and the ability to query at scale across a given set of subscriptions, so that you can govern your environment effectively. These queries provide the following capabilities:

- Query resources with complex filtering, grouping, and sorting by resource properties.

- Iteratively explore resources based on governance requirements.

- Assess the impact of applying policies in a large cloud environment.

- [Detail changes made to resource properties](./how-to/get-resource-changes.md) (preview).

This documentation covers each capability in detail.

> [!NOTE]
> Azure Resource Graph powers the Azure portal search bar, the new "All resources" browse experience, and the Azure Policy [change history](../policy/how-to/determine-non-compliance.md#change-history-preview) _visual diff_. It is designed to help customers manage large-scale environments.

[!INCLUDE [service-provider-management-toolkit](../../../includes/azure-lighthouse-supported-service.md)]

## <a name="how-does-resource-graph-complement-azure-resource-manager"></a>How does Resource Graph complement Azure Resource Manager

Azure Resource Manager currently supports queries over basic resource fields only: resource name, ID, type, resource group, subscription, and location. Resource Manager can also call individual resource providers to fetch detailed properties, one resource at a time.

With Azure Resource Graph, you can access the properties that the resource providers return without calling each resource provider individually. For a list of supported resource types, look for a **Yes** in the [Resources for complete mode deployments](../../azure-resource-manager/complete-mode-deletion.md) table.

With Azure Resource Graph, you can:

- Access the properties returned by resource providers without making individual calls to each resource provider.

- View the last 14 days of change history for a resource to see which properties changed and when. (preview)

## <a name="how-resource-graph-is-kept-current"></a>How Resource Graph is kept current

When an Azure resource is updated, Resource Manager notifies Resource Graph of the change.

Resource Graph then updates its database. Resource Graph also performs a regular _full scan_. This scan keeps Resource Graph data current when notifications are missed or when a resource is updated outside of Resource Manager.

## <a name="the-query-language"></a>The query language

Now that you have a better understanding of what Azure Resource Graph is, let's look at how to construct queries.

It is important to understand that the Azure Resource Graph query language is based on the [Kusto query language](../../data-explorer/data-explorer-overview.md) used by Azure Data Explorer.

To start, read [Resource Graph query language](./concepts/query-language.md), which describes the operations and functions that can be used with Azure Resource Graph.

Browsing resources is described in [Explore resources](./concepts/explore-resources.md).

## <a name="permissions-in-azure-resource-graph"></a>Permissions in Azure Resource Graph

To use Resource Graph, you must have appropriate rights in [role-based access control](../../role-based-access-control/overview.md) (RBAC), with at least read access to the resources you want to query. Without at least `read` permission on the Azure object or object group, no results are returned.

> [!NOTE]
> Resource Graph uses the subscriptions that are available to a principal at sign-in. To see the resources of a new subscription added during an active session, the principal must refresh the context. This happens automatically when you sign out and sign back in.

## <a name="throttling"></a>Throttling

As a free service, queries to Resource Graph are throttled to provide the best experience and response time for all customers. If your organization wants to use the Resource Graph API for large-scale and frequent queries, use the portal "Feedback" option on the [Resource Graph portal page](https://portal.azure.com/#blade/Microsoft_Azure_Policy/PolicyMenuBlade/ResourceGraph).

Provide your business case and select the "Microsoft can email you about your feedback" checkbox so that the team can contact you.

Resource Graph throttles queries at the user level. The service response contains the following HTTP headers:

- `x-ms-user-quota-remaining` (int): The remaining resource quota for the user. This value maps to the query count.

- `x-ms-user-quota-resets-after` (hh:mm:ss): The time duration until the user's quota consumption is reset.

For more information, see [Guidance for throttled requests](./concepts/guidance-for-throttled-requests.md).
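A client can read the two throttling headers to decide whether to pause before sending the next query. The following is a minimal Python sketch under stated assumptions: the helper names are hypothetical, the headers are assumed to arrive in a plain dict with lower-case keys, and the reset value is assumed to follow the hh:mm:ss form described above.

```python
from datetime import timedelta


def parse_quota_headers(headers):
    """Return (remaining_queries, time_until_reset) from the response headers."""
    remaining = int(headers["x-ms-user-quota-remaining"])
    hours, minutes, seconds = headers["x-ms-user-quota-resets-after"].split(":")
    resets_after = timedelta(
        hours=int(hours), minutes=int(minutes), seconds=float(seconds)
    )
    return remaining, resets_after


def seconds_to_wait(headers):
    """Wait only when the quota is exhausted; otherwise continue immediately."""
    remaining, resets_after = parse_quota_headers(headers)
    return 0.0 if remaining > 0 else resets_after.total_seconds()


if __name__ == "__main__":
    example = {
        "x-ms-user-quota-remaining": "0",
        "x-ms-user-quota-resets-after": "00:00:05",
    }
    print(seconds_to_wait(example))
```

The caller would sleep for the returned number of seconds before retrying. Real HTTP clients often expose headers case-insensitively, so the lower-case lookup may need adapting.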

## <a name="running-your-first-query"></a>Running your first query

Resource Graph supports Azure CLI, Azure PowerShell, and the Azure SDK for .NET. The query is structured the same way in each language. Learn how to enable Resource Graph in [Azure CLI](first-query-azurecli.md#add-the-resource-graph-extension) and [Azure PowerShell](first-query-powershell.md#add-the-resource-graph-module).

## <a name="next-steps"></a>Next steps

- Run your first query with [Azure CLI](first-query-azurecli.md).

- Run your first query with [Azure PowerShell](first-query-powershell.md).

- Start with [starter queries](./samples/starter.md).

- Go deeper with [advanced queries](./samples/advanced.md). | 78.252874 | 413 | 0.821974 | hun_Latn | 1.000009 |

8b1bf1750bb5ef0ee84e75d4783761bcff3d3987 | 3,241 | md | Markdown | exampleSite/content/english/blog/how-to-make-use-of-the-description-meta-tag.md | sanderwollaert/educenter-hugo | 947664b8a98e5703fef71080030a76c29c88aa41 | [

"MIT"

] | null | null | null | exampleSite/content/english/blog/how-to-make-use-of-the-description-meta-tag.md | sanderwollaert/educenter-hugo | 947664b8a98e5703fef71080030a76c29c88aa41 | [

"MIT"

] | null | null | null | exampleSite/content/english/blog/how-to-make-use-of-the-description-meta-tag.md | sanderwollaert/educenter-hugo | 947664b8a98e5703fef71080030a76c29c88aa41 | [

"MIT"

] | null | null | null | ---

title: How to make use of the "description" meta tag

date: 2020-11-15T09:45:17.000+00:00

bg_image: images/backgrounds/page-title.jpg

description: The description tag should accurately summarize the page content and

should be unique

image: images/blog/post-3.jpg

author: Sander Wollaert

categories:

- SEO

tags: []

type: post

---

### Summaries can be defined for each page

A page's description meta tag gives Google and other search engines a summary of what the page is about. Whereas a page's title may be a few words or a phrase, a page's description meta tag might be a sentence or two or a short paragraph. Google Webmaster Tools provides a handy [content analysis section](http://googlewebmastercentral.blogspot.com/2007/12/new-content-analysis-and-sitemap.html) that'll tell you about any description meta tags that are either too short, long, or duplicated too many times (the same information is also shown for `<title>` tags). Like the `<title>` tag, the description meta tag is placed within the `<head>` tag of your HTML document.

### What are the merits of description meta tags?

Description meta tags are important because **Google might use them as snippets for your pages**. Note that we say "might" because Google may choose to use a relevant section of your page's visible text if it does a good job of matching up with a user's query. Alternatively, Google might use your site's description in the Open Directory Project if your site is listed there (learn how to [prevent search engines from displaying ODP data](http://www.google.com/support/webmasters/bin/answer.py?answer=35264)). Adding description meta tags to each of your pages is always a good practice in case Google cannot find a good selection of text to use in the snippet. The Webmaster Central Blog has an informative post on [improving snippets with better description meta tags](http://googlewebmastercentral.blogspot.com/2007/09/improve-snippets-with-meta-description.html).

Words in the snippet are bolded when they appear in the user's query. This gives the user clues about whether the content on the page matches with what he or she is looking for.

### Best Practices

#### Accurately summarize the page's content

Write a description that would both inform and interest users if they saw your description meta tag as a snippet in a search result.

* **Avoid** writing a description meta tag that has no relation to the content on the page

* **Avoid** using generic descriptions like "This is a web page" or "Page about baseball cards"

* **Avoid** filling the description with only keywords

* **Avoid** copying and pasting the entire content of the document into the description meta tag

#### Use unique descriptions for each page

Having a different description meta tag for each page helps both users and Google, especially in searches where users may bring up multiple pages on your domain (e.g. searches using the [site: operator]()). If your site has thousands or even millions of pages, hand-crafting description meta tags probably isn't feasible. In this case, you could automatically generate description meta tags based on each page's content.
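One way to generate descriptions automatically is to take the opening text of each page and trim it at a word boundary. The sketch below is only an illustration of that idea: the function names and the 160-character limit are assumptions, not a rule from this article, and a real site would likely pick a better summary source than the first sentences.

```python
import html
import re


def make_description(page_text, max_len=160):
    """Collapse whitespace and trim the text to at most max_len characters."""
    text = re.sub(r"\s+", " ", page_text).strip()
    if len(text) <= max_len:
        return text
    cut = text[: max_len - 3]  # leave room for the trailing "..."
    head, sep, _ = cut.rpartition(" ")
    if sep:
        cut = head  # drop the last, possibly partial, word
    return cut.rstrip(" ,;:.") + "..."


def meta_tag(description):
    """Render the tag, escaping characters that would break the attribute."""
    escaped = html.escape(description, quote=True)
    return f'<meta name="description" content="{escaped}">'


if __name__ == "__main__":
    print(meta_tag(make_description("Baseball cards:\n buying guides & price lists.")))
```

Generating the text from each page's own content keeps the descriptions distinct from one page to the next.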

* **Avoid** using a single description meta tag across all of your site's pages or a large group of pages | 83.102564 | 869 | 0.784943 | eng_Latn | 0.996722 |

8b1e41b69844a807c1a8922d9c00ff27ced2bc4f | 587 | md | Markdown | _posts/2019-11-19-pyneng-online-jan-apr-2019.md | rubmu/natenka | 2ecd8f4bdc4974ff10e84cdb637a0cefecf9c88e | [

"MIT"

] | 18 | 2017-02-19T15:58:54.000Z | 2022-02-13T22:15:19.000Z | _posts/2019-11-19-pyneng-online-jan-apr-2019.md | rubmu/natenka | 2ecd8f4bdc4974ff10e84cdb637a0cefecf9c88e | [

"MIT"

] | 3 | 2020-02-26T14:42:54.000Z | 2021-09-28T00:32:23.000Z | _posts/2019-11-19-pyneng-online-jan-apr-2019.md | rubmu/natenka | 2ecd8f4bdc4974ff10e84cdb637a0cefecf9c88e | [

"MIT"

] | 27 | 2017-05-03T15:38:41.000Z | 2022-02-08T02:53:38.000Z | ---

title: "PyNEng Online: 11.01.20 - 11.04.20"

date: 2019-11-19

tags:

- pyneng-online

- pyneng

category:

- pyneng

---

## Enrollment is open for the course "Python for Network Engineers"

The course starts on 11.01.20 and runs until 11.04.20.

14 weeks, 20 lectures, 60 hours of theory, 100 homework assignments, Python 3.7.

The course is fairly intensive, even though it runs for 14 weeks.

So be prepared to work hard and do the homework.

More details are on the [course page](https://natenka.github.io/pyneng-online/)

> To sign up for the course, email me at the address listed on the course page.

| 24.458333 | 75 | 0.74276 | rus_Cyrl | 0.850127 |

8b1f6465dfeb29380b5328c91bded4f4cf774f91 | 579 | md | Markdown | README.md | jeti182/big_M_toj_models | f22a99e4f6ef55f4c33a42c8e949da26d5045070 | [

"MIT"

] | null | null | null | README.md | jeti182/big_M_toj_models | f22a99e4f6ef55f4c33a42c8e949da26d5045070 | [

"MIT"

] | null | null | null | README.md | jeti182/big_M_toj_models | f22a99e4f6ef55f4c33a42c8e949da26d5045070 | [

"MIT"

] | null | null | null | # big_M_toj_models

TOJ models and analysis scripts to accompany the Tünnermann & Scharlau (2021) TOJ data set paper.

## Dependencies

* [PyMC3](https://github.com/pymc-devs/pymc3)

* [ArviZ](https://github.com/arviz-devs/arviz)

## Installation

* Install the dependencies listed above, e.g.: `conda install -c conda-forge pymc3 arviz`

* Clone the repository: `git clone https://github.com/jeti182/big_M_toj_models.git`

## Data set

Download the data set at https://osf.io/e4stu/ and copy it into the same folder as the scripts.

## Usage

Run with `python big_M_toj_models`

| 32.166667 | 100 | 0.746114 | eng_Latn | 0.345264 |

8b2289e47d39c3f750fa3fdfce32baed78f2f5c9 | 918 | md | Markdown | _posts/_queue/2011-07-17-tsql-random-rows.md | groundh0g/ososoft.net | 7e2a1baee9cdb7ebdedbd1da6d7ce5869324d665 | [

"MIT"

] | null | null | null | _posts/_queue/2011-07-17-tsql-random-rows.md | groundh0g/ososoft.net | 7e2a1baee9cdb7ebdedbd1da6d7ce5869324d665 | [

"MIT"

] | null | null | null | _posts/_queue/2011-07-17-tsql-random-rows.md | groundh0g/ososoft.net | 7e2a1baee9cdb7ebdedbd1da6d7ce5869324d665 | [

"MIT"

] | null | null | null | ---

layout: post

category : code

tagline:

tags : [ tsql, code, tips, tricks ]

---

{% include JB/setup %}

Sometimes it can be useful to randomly select a value (or row) from a table. One easy way to do this is to sort the table using a column that contains random values, and then select the first row. The following query illustrates this concept.

~~~
-- initialize example data
DECLARE @Data TABLE (Id int IDENTITY(1,1), Caption varchar(25))
INSERT INTO @Data (Caption)
SELECT 'Apple'
UNION SELECT 'Orange'
UNION SELECT 'Banana'
UNION SELECT 'Pear'
UNION SELECT 'Mango'
UNION SELECT 'Kiwi'

-- query
SELECT TOP 1
    Caption
FROM @Data
ORDER BY NEWID()
~~~

Every time you run this query, you’ll be presented with a random fruit from the list.

It’s worth noting that this isn’t the fastest code in the world. While it’s fine for rarely-run data samples, I wouldn’t use it in an oft-called production routine.

-- Joe | 27 | 242 | 0.737473 | eng_Latn | 0.966465 |

8b22c563640d5799f7979b8b88ff23ad39a0d424 | 3,178 | md | Markdown | _posts/2019-04-11-Docker-install-ubuntu.md | mdchao2010/mdchao2010.github.io | a2a7c127b1a243927205fb66454cf48657d9cac4 | [

"MIT"

] | null | null | null | _posts/2019-04-11-Docker-install-ubuntu.md | mdchao2010/mdchao2010.github.io | a2a7c127b1a243927205fb66454cf48657d9cac4 | [

"MIT"

] | null | null | null | _posts/2019-04-11-Docker-install-ubuntu.md | mdchao2010/mdchao2010.github.io | a2a7c127b1a243927205fb66454cf48657d9cac4 | [

"MIT"

] | null | null | null | ---

layout: post

title: "Installing Docker on Ubuntu 16"

date: 2019-04-11

categories: docker

tags: docker ubuntu 16

---

* content

{:toc}

## Preface

Docker requires an Ubuntu kernel version higher than 3.10. Check the kernel version with the following command:

```bash

uname -r

```

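### Uninstall old versions

Older versions of Docker (for example, 1.13) were called docker-engine. Starting with the 17.x releases, the package name changed to docker-ce or docker-ee.

Therefore, if an old version of Docker is installed, it must be removed first.

1. Check whether Docker is installed

```bash
dpkg -l | grep -i docker
```

2. Remove the old Docker version:

```bash
sudo apt-get purge -y docker-engine docker docker.io docker-ce
sudo apt-get autoremove -y --purge docker-engine docker docker.io docker-ce
```

Note that these commands only uninstall Docker itself. They do not delete Docker content such as images, containers, volumes, and networks. These files are stored in the `/var/lib/docker` directory and must be deleted manually.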

```bash

sudo rm -rf /var/lib/docker

sudo rm /etc/apparmor.d/docker

sudo groupdel docker

sudo rm -rf /var/run/docker.sock

```

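### Installation steps

Because installing Docker requires HTTPS, apt must be able to fetch packages over HTTPS.

1. Install HTTPS support.

```bash
sudo apt-get update # refresh the package source information first
sudo apt-get install \
    apt-transport-https \
    ca-certificates \
    curl \
    software-properties-common
```

2. Add the Docker package source and set the apt repository address

Given network conditions in mainland China, using a domestic mirror is strongly recommended. Add the Alibaba Cloud apt repository:

```bash
$ curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
$ sudo add-apt-repository \
    "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu \
    $(lsb_release -cs) \
    stable"
```

**TIPS**: Docker has two build channels. Stable builds are generally released once a quarter; Edge builds are generally released once a month.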

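### Install Docker

1. Run the following commands to update the apt package index

```bash
$ sudo apt-get update
$ sudo apt-get install docker-ce # install the latest version of Docker
```

2. Install the Docker version you want (CE/EE)

```bash
$ apt-cache policy docker-ce # list the Docker versions available for installation
$ sudo apt-get install docker-ce=18.03.0~ce-0~ubuntu # install a specific Docker version
```

3. Verify that the installation is correct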

```bash

docker info

```

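### Configuring http_proxy for Docker

The Docker daemon does not read the system proxy settings, so the proxy must be configured manually.

On systems older than Ubuntu 16, this is done by editing the configuration file /etc/default/docker, which contains comments and examples for proxy settings. On Ubuntu 16, configure it as follows:

1. Create the directory `/etc/systemd/system/docker.service.d`

```bash
sudo mkdir -p /etc/systemd/system/docker.service.d
```

2. Create the file `http-proxy.conf` in that directory and add the following configuration: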

```bash

[Service]

Environment="HTTP_PROXY=http://[proxy-addr]:[proxy-port]/"

Environment="HTTPS_PROXY=http://[proxy-addr]:[proxy-port]/"

```

Or run:

```bash
sudo tee /etc/systemd/system/docker.service.d/http-proxy.conf > /dev/null << 'EOF'
[Service]
Environment="HTTP_PROXY=http://192.168.199.62:808"
Environment="HTTPS_PROXY=http://192.168.199.62:808"
EOF
```

3. Reload the configuration

```
sudo systemctl daemon-reload
```

4. Restart the Docker service

```bash

sudo systemctl restart docker

```

### Pitfalls

When following the official Docker installation guide, running `sudo apt-get install docker-ce` can fail with the following error:

```bash
The following packages have unmet dependencies:
 docker-ce : Depends: libltdl7 (>= 2.4.6) but it is not going to be installed
             Recommends: aufs-tools but it is not going to be installed
             Recommends: cgroupfs-mount but it is not installable or
                         cgroup-lite but it is not going to be installed
E: Unable to correct problems, you have held broken packages.
```

This problem is caused by the installed libltdl7 version being too old. Ubuntu 16.04 does not provide a newer version by default.

Solution:

Find the libltdl7 2.4.6 package and download it:

```
wget http://launchpadlibrarian.net/236916213/libltdl7_2.4.6-0.1_amd64.deb
```

Install `libltdl7` first; otherwise installing `docker-ce` will fail

```
sudo dpkg -i libltdl7_2.4.6-0.1_amd64.deb
```

Then install `docker-ce-17.03.2.ce`, which now installs normally

```bash

sudo apt-get install docker-ce

```

| 20.771242 | 87 | 0.731278 | yue_Hant | 0.500866 |

8b22cedd7d195d4afc47e7ff26b88aff6311ad7a | 1,808 | md | Markdown | articles/api-management/policies/cache-response.md | Nike1016/azure-docs.hu-hu | eaca0faf37d4e64d5d6222ae8fd9c90222634341 | [

"CC-BY-4.0",

"MIT"

] | 1 | 2019-09-29T16:59:33.000Z | 2019-09-29T16:59:33.000Z | articles/api-management/policies/cache-response.md | Nike1016/azure-docs.hu-hu | eaca0faf37d4e64d5d6222ae8fd9c90222634341 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | articles/api-management/policies/cache-response.md | Nike1016/azure-docs.hu-hu | eaca0faf37d4e64d5d6222ae8fd9c90222634341 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

title: Azure API Management policy sample - Add capabilities to a backend service | Microsoft Docs

description: Azure API Management policy sample that demonstrates how to add capabilities to a backend service. For example, a weather forecast API can accept the name of a place instead of latitude and longitude.

services: api-management

documentationcenter: ''

author: vladvino

manager: cfowler

editor: ''

ms.service: api-management

ms.workload: mobile

ms.tgt_pltfrm: na

ms.devlang: na

ms.topic: article

ms.date: 10/13/2017

ms.author: apimpm

ms.openlocfilehash: 7c9edbf4b2d231453cd336521a04ba6b7714b696

ms.sourcegitcommit: d4dfbc34a1f03488e1b7bc5e711a11b72c717ada

ms.translationtype: MT

ms.contentlocale: hu-HU

ms.lasthandoff: 06/13/2019

ms.locfileid: "61062207"

---

# <a name="add-capabilities-to-a-backend-service"></a>Add capabilities to a backend service

This article presents an Azure API Management policy sample that demonstrates how to add capabilities to a backend service. For example, a weather forecast API can accept the name of a place instead of latitude and longitude. To set or edit policy code, follow the steps described in [Set or edit a policy](../set-edit-policies.md). To see more examples, see [policy samples](../policy-samples.md).

## <a name="policy"></a>Policy

Paste the code into the **inbound** block.

[!code-xml[Main](../../../api-management-policy-samples/examples/Call out to an HTTP endpoint and cache the response.policy.xml)]

## <a name="next-steps"></a>Next steps

Learn more about APIM policies:

+ [Transformation policies](../api-management-transformation-policies.md)

+ [Policy samples](../policy-samples.md)

| 45.2 | 489 | 0.795354 | hun_Latn | 0.999872 |

8b2308094b4c0ffefdb962f6552d1633beaab247 | 1,159 | md | Markdown | README.md | syyongx/ii18n | 03d063505fc9f2c037c22a9000b7ff5e7571e2e6 | [

"MIT"

] | 23 | 2018-06-21T06:04:39.000Z | 2022-02-10T08:06:29.000Z | README.md | syyongx/ii18n | 03d063505fc9f2c037c22a9000b7ff5e7571e2e6 | [

"MIT"

] | null | null | null | README.md | syyongx/ii18n | 03d063505fc9f2c037c22a9000b7ff5e7571e2e6 | [

"MIT"

] | 1 | 2019-02-19T06:48:53.000Z | 2019-02-19T06:48:53.000Z | # II18N

[GoDoc](https://godoc.org/github.com/syyongx/ii18n)

[Go Report Card](https://goreportcard.com/report/github.com/syyongx/ii18n)

[![MIT licensed][3]][4]

[3]: https://img.shields.io/badge/license-MIT-blue.svg

[4]: LICENSE

Go i18n library.

## Download & Install

```shell

go get github.com/syyongx/ii18n

```

## Quick Start

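```go
package main

import (
	"fmt"

	"github.com/syyongx/ii18n"
)

func main() {
	// One entry per message category, each backed by JSON translation files.
	config := map[string]ii18n.Config{
		"app": {
			SourceNewFunc: ii18n.NewJSONSource,
			OriginalLang:  "en-US",
			BasePath:      "./testdata",
			FileMap: map[string]string{
				"app":   "app.json",
				"error": "error.json",
			},
		},
	}
	ii18n.NewI18N(config)

	// Translate the "hello" message of the "app" category into zh-CN.
	message := ii18n.T("app", "hello", nil, "zh-CN")
	fmt.Println(message)
}
```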

## Apis

```go

NewI18N(config map[string]Config) *I18N

T(category string, message string, params map[string]string, lang string) string

```

## LICENSE

II18N source code is licensed under the [MIT](https://github.com/syyongx/ii18n/blob/master/LICENSE) Licence.

| 25.195652 | 134 | 0.625539 | yue_Hant | 0.316422 |

8b230b60eeced7e8ff1a9ec3ca1c74040ac60d22 | 109 | md | Markdown | README.md | tys404/edxNodeJs | c8be772194dcbcd3251aeb7ffccc87cf340c3fa5 | [

"MIT"

] | null | null | null | README.md | tys404/edxNodeJs | c8be772194dcbcd3251aeb7ffccc87cf340c3fa5 | [

"MIT"

] | null | null | null | README.md | tys404/edxNodeJs | c8be772194dcbcd3251aeb7ffccc87cf340c3fa5 | [

"MIT"

] | null | null | null | # edxNodeJs

node.js exercises for edx course Microsoft: DEV280x Building Functional Prototypes using Node.js

| 36.333333 | 96 | 0.834862 | eng_Latn | 0.471912 |

8b25b1da69aa6432505b8c1456c833af2156679b | 40 | md | Markdown | README.md | Edilaine100/VMT | b1b652bc01a307f5446a952c1aaf6ef50c433555 | [

"MIT"

] | null | null | null | README.md | Edilaine100/VMT | b1b652bc01a307f5446a952c1aaf6ef50c433555 | [

"MIT"

] | null | null | null | README.md | Edilaine100/VMT | b1b652bc01a307f5446a952c1aaf6ef50c433555 | [

"MIT"

] | null | null | null | # VMT

Second project of the Innovation Team

| 13.333333 | 33 | 0.75 | por_Latn | 1.000009 |

8b2625e0dbaec1387b50c94f19430a6ff4c872af | 25,510 | md | Markdown | README.md | kdoroschak/PyPore | fa4c662ae84aadffca69a0d731b0b1483aef0ea5 | [

"MIT"

] | 24 | 2015-01-28T14:17:53.000Z | 2021-12-21T05:56:01.000Z | README.md | jmschrei/PyPore | fa4c662ae84aadffca69a0d731b0b1483aef0ea5 | [

"MIT"

] | 1 | 2021-01-11T02:47:40.000Z | 2021-01-11T03:57:41.000Z | README.md | kdoroschak/PyPore | fa4c662ae84aadffca69a0d731b0b1483aef0ea5 | [

"MIT"

] | 10 | 2015-01-31T04:43:01.000Z | 2021-01-11T02:13:45.000Z | # PyPore

## _Analysis of Nanopore Data_

The PyPore package is based off of a few core data analysis packages in order to provide a consistent and easy framework for handling nanopore data in the UCSC nanopore lab. The packages it requires are:

* numpy

* scipy

* matplotlib

* sklearn

Packages which are not required, but can be used, are:

* mySQLdb

* cython

* PyQt4

Let's get started!

# DataTypes

There are several core datatypes implemented in order to speed up analysis. These are currently File, Event, and Segment. Each of these is a way to store all or part of an .abf file and perform common tasks.

### Files

* **Attributes**: duration, mean, std, min, max, n *(# events)*, second, current, sample, events, event_parser, filename

* **Instance Methods**: parse( parser ), delete(), to\_meta(), to\_json( filename ), to\_dict(), to\_database( database, host, user, password ), plot( color_events )

* **Class Methods**: from\_json( filename ), from\_database( ... )

Nanopore data files consist primarily of current levels corresponding to ions passing freely through the nanopore ("open channel"), and blockages as something passes through the pore, such as a DNA strand ("events"). Data from nanopore experiments are stored in Axon Binary Files (extension .abf) as a sequence of 32-bit floats, along with supporting information about the hardware. They can be opened and loaded with the following:

```

from PyPore.DataTypes import *

file = File( "My_File.abf" )

```

The File class contains many methods to simplify the analysis of these files. The simplest analysis to do is to pull the events, or blockages of current, from the file, while ignoring open channel. Let's say that we are looking for any blockage of current which causes the current to dip from an open channel of ~120 pA. To be conservative, we set the threshold the current has to dip before being significant to 110 pA. This can be done simply with the file's parse method, which requires a parser class which will perform the parsing. The simplest event detector is the *lambda_event_parser*, which has a keyword *threshold*, indicating the raw current that serves as the threshold.

```

from PyPore.DataTypes import *

file = File( "My_File.abf" )

file.parse( parser=lambda_event_parser( threshold=50 ) )

```
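To make the idea of threshold-based event detection concrete, here is a minimal, self-contained sketch in plain Python. It is not PyPore's implementation of *lambda_event_parser*; the function name `find_events` and the sample values are assumptions chosen for illustration. It marks every contiguous run of samples below the threshold as one event.

```python
def find_events(current, threshold):
    """Return (start, end) index pairs for runs of samples below threshold.

    `end` is exclusive, so current[start:end] is the event.
    """
    events = []
    start = None
    for i, value in enumerate(current):
        if value < threshold and start is None:
            start = i  # the current just dipped below the threshold
        elif value >= threshold and start is not None:
            events.append((start, i))  # the current returned to open channel
            start = None
    if start is not None:
        events.append((start, len(current)))  # the trace ended inside an event
    return events


if __name__ == "__main__":
    # Open channel near 120 pA with two blockades.
    trace = [120, 120, 40, 45, 120, 30, 120]
    print(find_events(trace, threshold=110))
```

The start and end indices produced this way are the kind of split points that could be saved and reused to cut the same recording again later.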

The events are now stored as Event objects in file.events. The only other important file methods involve loading and saving them to a cache, which we'll cover later. Files also have the properties mean, std, and n (number of events). If we wanted to look at what it thought were events, we could use the plot method. By default, this method will plot detected events in a different color.

```

from PyPore.DataTypes import *

file = File( "My_File.abf" )

file.parse( parser=lambda_event_parser( threshold=50 ) )

file.plot()

```

Given that a file is huge, only every 100th point is used in the black regions, and every 5th point is used in events. This may lead to some problems, such as there are two regions which seem like they should be called events, but are colored black and not cyan. This is because in reality, there are spikes below 0 in each of these segments, and the parsing method filtered out any events which went below 0 pA at any point. However, the downsampling removed this spike (because it was less than 100 points long).
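The effect of downsampling on short spikes can be reproduced with a few lines of plain Python. This is a toy sketch with made-up numbers, not PyPore code: a 30-sample spike below 0 pA disappears when only every 100th sample is kept.

```python
def downsample(samples, step):
    """Keep every `step`-th sample, starting with the first."""
    return samples[::step]


# 1000 samples of open channel at 120 pA ...
trace = [120.0] * 1000
# ... with a 30-sample spike below 0 pA in the middle.
for i in range(450, 480):
    trace[i] = -5.0

plotted = downsample(trace, 100)  # indices 0, 100, ..., 900

if __name__ == "__main__":
    print(min(trace), min(plotted))
```

The full trace contains the negative spike, while the downsampled version never touches samples 450 to 479, so a plot built from it shows no dip at all.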

### Events

* **Attributes**: duration, start, end, mean, std, min, max, n, current, sample, segments, state\_parser, filtered, filter\_order, filter\_cutoff

* **Instance Methods**: filter( order, cutoff ), parse( parser ), delete(), apply\_hmm( hmm ), plot( [hmm, kwargs] ), to\_meta(), to\_dict(), to\_json()

* **Class Methods**: from\_json( filename ), from\_database( ... ), from\_segments( segments )

Events are segments of current which correspond to something passing through the nanopore. We hope that it is something which we are interested in, such as DNA or protein. An event is usually made up of a sequence of discrete segments of current, which should correspond to reading some region of whatever is passing through. In the best case, each discrete segment in an event corresponds to a single nucleotide of DNA, or a single amino acid of a protein passing through.

Events are often noisy, and transitions between them are quick, making filtering a good option for trying to see the underlying signal. Currently only [bessel filters](http://en.wikipedia.org/wiki/Bessel_filter) are supported for filtering tasks, as they've been shown to perform very well.

Let's continue with our example, and imagine that now we want to filter each event, and look at it! The filter method has two parameters, order and cutoff, defaulting to order=1 and cutoff=2000. (Note that we now import pyplot as well.)

```

from PyPore.DataTypes import *
from matplotlib import pyplot as plt

file = File( "My_File.abf" )
file.parse( parser=lambda_event_parser( threshold=50 ) )

for event in file.events:
    # Smooth the event with a Bessel filter (these are the default values)
    event.filter( order=1, cutoff=2000 )
    event.plot()
    # Show one event at a time
    plt.show()

```

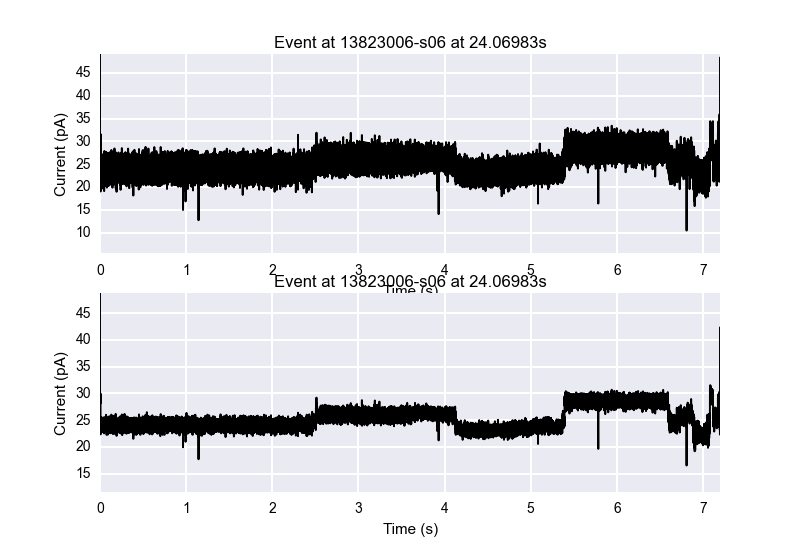

The first event plotted in this loop is shown.

Currently, *lambda_event_parser* and *MemoryParse* are the most commonly used File parsers. MemoryParse takes two lists, one of event starts and one of event ends, and cuts a file into the corresponding events. This is useful if you've done an analysis before and remember where the split points are.
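The idea behind cutting a trace at remembered split points can be sketched in a few lines. This is not MemoryParse itself: the function name `cut_by_boundaries` is hypothetical, and it assumes the starts and ends are integer sample indices, which the text above does not specify.

```python
def cut_by_boundaries(current, starts, ends):
    """Return one slice of `current` per (start, end) index pair.

    Hypothetical helper: assumes starts/ends are integer sample indices.
    """
    if len(starts) != len(ends):
        raise ValueError("starts and ends must have the same length")
    return [current[s:e] for s, e in zip(starts, ends)]


# A toy trace: open-channel current near 50 pA with two blockades.
current = [50, 50, 20, 21, 19, 50, 50, 30, 31, 50]
events = cut_by_boundaries(current, starts=[2, 7], ends=[5, 9])
print(events)
```

Because the boundaries are supplied directly, no threshold or detection logic runs at all, which is why this approach suits re-loading a previous analysis.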

The plot command draws the event on whatever canvas is currently open, allowing you to make subplots with the events or add them into GUIs (such as Abada!). The downside is that you need to call plt.show() after calling the plot command. The plot command wraps pyplot.plot, allowing you to pass in any argument that pyplot.plot accepts, for example:

```

event.plot( alpha=0.5, marker='o' )

```

This plot doesn't look terribly good, and takes longer to draw, but it is possible to do!

Subplot handling is extremely easy. All of the plotting commands plot to whichever canvas is currently open, allowing you to do something like this:

```

from PyPore.DataTypes import *
from matplotlib import pyplot as plt

file = File( "My_File.abf" )
file.parse( parser=lambda_event_parser( threshold=50 ) )

for event in file.events:
    # Top panel: the unfiltered event
    plt.subplot(211)
    event.plot()
    # Bottom panel: the same event after filtering with the defaults
    plt.subplot(212)
    event.filter()
    event.plot()
    plt.show()

```

This plot shows how easy it is to show a comparison between an event which is not filtered versus one which is filtered.

The next step is usually to segment the event into its discrete states. Several segmenters have been written to do this, of which *StatSplit* is currently the best; it was written by Dr. Kevin Karplus and is based on a recursive maximum likelihood algorithm. The algorithm was sped up by rewriting it in Cython, leading to *SpeedyStatSplit*, a Python wrapper for the Cython code. Segmenting an event works the same way as detecting events in a file: call the parse method on the event and pass in a parser.
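To give a feel for what "recursive maximum likelihood" segmentation means, here is a simplified sketch. It is not the StatSplit or SpeedyStatSplit implementation: the function names, the Gaussian model for each segment, the `min_width` parameter, and the definition of gain per sample as log-likelihood gain divided by segment length are all assumptions made for illustration.

```python
import math


def _log_likelihood(samples, min_var=1e-3):
    """Gaussian log-likelihood of samples under their own mean and variance."""
    n = len(samples)
    mean = sum(samples) / n
    ss = sum((x - mean) ** 2 for x in samples)
    var = max(ss / n, min_var)  # floor the variance to avoid log(0)
    return -0.5 * n * math.log(2 * math.pi * var) - ss / (2 * var)


def split_points(samples, min_gain_per_sample=0.5, min_width=3, offset=0):
    """Recursively split where two Gaussians fit better than one.

    Returns sorted split indices relative to the original sequence.
    """
    n = len(samples)
    if n < 2 * min_width:
        return []
    whole = _log_likelihood(samples)
    best_gain, best_i = 0.0, None
    for i in range(min_width, n - min_width + 1):
        gain = (_log_likelihood(samples[:i])
                + _log_likelihood(samples[i:]) - whole)
        if gain > best_gain:
            best_gain, best_i = gain, i
    # Stop when the best split does not improve the fit enough per sample.
    if best_i is None or best_gain / n < min_gain_per_sample:
        return []
    left = split_points(samples[:best_i], min_gain_per_sample,
                        min_width, offset)
    right = split_points(samples[best_i:], min_gain_per_sample,
                         min_width, offset + best_i)
    return left + [offset + best_i] + right


# Two current levels, around 30 pA and 20 pA, six samples each.
samples = [30.0, 30.2, 29.8, 30.1, 29.9, 30.0,
           20.0, 20.1, 19.9, 20.2, 19.8, 20.0]
print(split_points(samples))
```

In this sketch a higher threshold makes the stopping test harder to pass, so fewer splits are accepted. The brute-force search over split positions is quadratic in the segment length, which hints at why a faster Cython version was worth writing.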

Let's say that now we want to segment an event and view it. Using the same plot command for the event, we can color by 'cycle', which colors the segments in a four-color cycle for easy viewing. SpeedyStatSplit takes several parameters, of which *min_gain_per_sample* is the most important. Values from 0.50 to 1.50 usually give an adequate level of parsing, with higher numbers leading to fewer segments.

```

from PyPore.DataTypes import *
from matplotlib import pyplot as plt

file = File( "My_File.abf" )
file.parse( parser=lambda_event_parser( threshold=50 ) )

for event in file.events:
    event.filter( order=1, cutoff=2000 )
    # Segment the filtered event into discrete states
    event.parse( parser=SpeedyStatSplit( min_gain_per_sample=0.50 ) )
    # Color consecutive segments in a repeating four-color cycle
    event.plot( color='cycle' )
    plt.show()

```

The color cycle goes blue-red-green-cyan.

The most reliable segmenter currently is *SpeedyStatSplit*. For more documentation on the parsers, see the Parsers section of this documentation. Both Files and Events inherit from the Segment class, described below. This means that any of the parsers will work on either files or events.

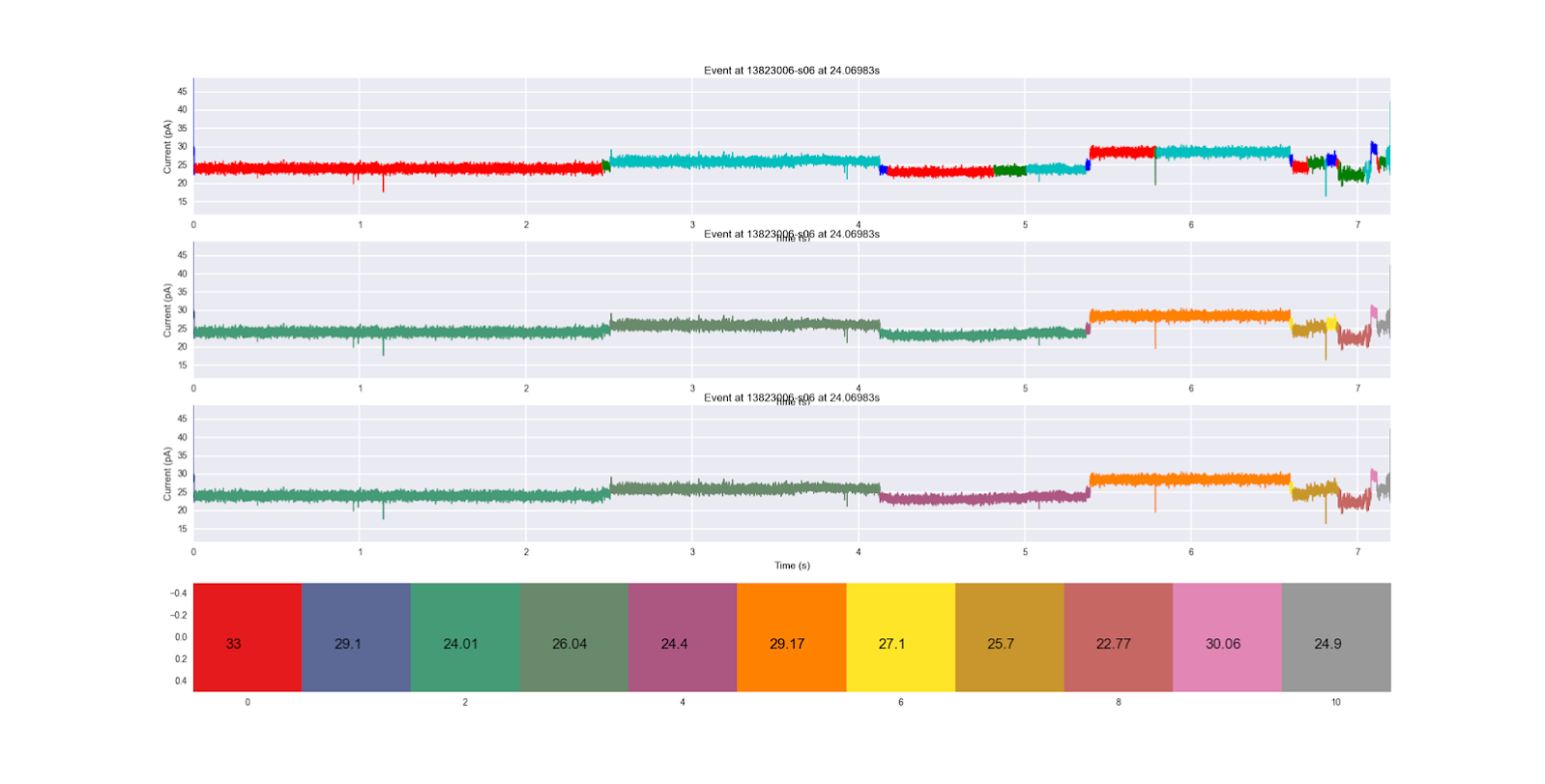

The last core functionality is the ability to apply a hidden Markov model (HMM) to an event and see which segments correspond to which hidden states. HMM functionality is made possible through the yahmm package, which has a Model class and a viterbi method that is called to find the best path through the HMM. Good examples of these HMMs are tRNAbasic and tRNAbasic2, which were both made for this purpose. Let's say we want to compare the two HMMs to see which one we agree with more.

```

from PyPore.DataTypes import *
# tRNAbasic2 is assumed to live alongside tRNAbasic in PyPore.hmm
from PyPore.hmm import tRNAbasic, tRNAbasic2
from matplotlib import pyplot as plt
import numpy as np

file = File( "My_File.abf" )
file.parse( lambda_event_parser( threshold=50 ) )

for i, event in enumerate( file.events ):
    event.filter()
    event.parse( SpeedyStatSplit( min_gain_per_sample=.2 ) )

    # Original segmentation
    plt.subplot( 411 )
    event.plot( color='cycle' )

    # Segments colored by the hidden states of each HMM
    plt.subplot( 412 )
    event.plot( color='hmm', hmm=tRNAbasic() )

    plt.subplot( 413 )
    event.plot( color='hmm', hmm=tRNAbasic2() )

    # Color key: one cell per hidden state, labeled with its mean current
    plt.subplot( 414 )
    plt.imshow( [ np.arange(11) ], interpolation='nearest', cmap="Set1" )
    plt.grid( False )
    means = [ 33, 29.1, 24.01, 26.04, 24.4, 29.17, 27.1, 25.7, 22.77, 30.06, 24.9 ]
    for j, mean in enumerate( means ):
        plt.text( j-0.2, 0.1, str(mean), fontsize=16 )

    plt.show()

```

You'll notice that the title of an image and the xlabel of the image above it will always conflict. This is unfortunate, but an acceptable consequence in my opinion. If you're making more professional-grade images, you may need to go in and manually fix this conflict. We see the original segmentation on the top, the first HMM applied next, and the second HMM below that. The color coding of each HMM hidden state (in sequence), along with the mean ionic current of its emission distribution, is shown at the very bottom. The second HMM seems to progress more sequentially, moving on to the purple state instead of regressing back to the blue-green state in the middle of the trace. It also does not go backwards to the yellow state once it's in the gold state later on in the trace. It seems like a more robust HMM, and this way of comparing them is very easy to do.
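For readers unfamiliar with what finding "the best path through the HMM" involves, the following is a self-contained sketch of Viterbi decoding over segment means. It does not use yahmm or the tRNAbasic models: the function name, the strictly left-to-right topology, the shared standard deviation, and the stay probability are simplifying assumptions, and only the three state means are borrowed from the list in the example above.

```python
import math


def viterbi(observations, means, std, stay_prob):
    """Most likely left-to-right state path for a sequence of segment means.

    Assumes Gaussian emissions with a shared std, a forced start in state 0,
    and only two transitions per state: stay, or advance by one.
    """
    n_states = len(means)
    log_stay = math.log(stay_prob)
    log_move = math.log(1.0 - stay_prob)
    neg_inf = float("-inf")

    def log_emit(state, x):
        z = (x - means[state]) / std
        return -0.5 * z * z - math.log(std * math.sqrt(2 * math.pi))

    # score[s] is the best log-probability of any path ending in state s.
    score = [neg_inf] * n_states
    score[0] = log_emit(0, observations[0])
    backpointers = []

    for x in observations[1:]:
        new_score, pointers = [], []
        for s in range(n_states):
            stay = score[s] + log_stay
            move = score[s - 1] + log_move if s > 0 else neg_inf
            if move > stay:
                best, prev = move, s - 1
            else:
                best, prev = stay, s
            new_score.append(best + log_emit(s, x))
            pointers.append(prev)
        score = new_score
        backpointers.append(pointers)

    # Trace back from the best final state.
    state = max(range(n_states), key=lambda s: score[s])
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return path[::-1]


segment_means = [33.2, 32.8, 29.0, 29.3, 24.1]
path = viterbi(segment_means, means=[33.0, 29.1, 24.01],
               std=1.0, stay_prob=0.5)
print(path)
```

Real models such as the ones compared above can allow backward transitions, which is exactly the behavior the plot comparison is meant to expose; this sketch forbids them to stay short.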

Event objects also have the properties start, end, duration, mean, std, and n (number of segments after segmentation has been performed).

### Segments

* **Attributes**: duration, start, end, mean, std, min, max, current

* **Instance Methods**: to\_json( filename ), to\_dict(), to\_meta(), delete()

* **Class Methods**: from\_json( filename )

A segment stores the series of floats in a given range of ionic current. This abstract notion allows for both Event and File to inherit from it, as both a file and an event are a range of floats. The context in which you will most likely interact with a Segment is in representing a discrete step of the biopolymer through the pore, with points usually coming from the same distribution.

Segments are short sequences of current samples that usually appear to come from the same distribution. They are the core place where data are stored, as an event is usually analyzed using the metadata stored in each state. Segments have the attribute current, which stores the raw current samples, in addition to mean, std, duration, start, end, min, and max.
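As a rough illustration of how these attributes relate to the raw samples, the sketch below computes them from a plain list. The function name `summarize`, the dictionary layout, and the default sample rate of 100,000 samples per second are assumptions for the example; PyPore's Segment class computes its own attributes internally.

```python
import math


def summarize(current, start, sample_rate=100000.0):
    """Summary statistics for one segment of current samples.

    `start` is in seconds; `sample_rate` is an assumed samples-per-second
    value used to convert the sample count into a duration.
    """
    n = len(current)
    mean = sum(current) / n
    # Population standard deviation, matching numpy's default for np.std.
    std = math.sqrt(sum((x - mean) ** 2 for x in current) / n)
    duration = n / sample_rate
    return {
        "mean": mean,
        "std": std,
        "min": min(current),
        "max": max(current),
        "start": start,
        "duration": duration,
        "end": start + duration,
    }


stats = summarize([20.0, 22.0, 24.0], start=0.5)
print(stats)
```

Once these few numbers are computed, the raw samples are no longer needed for most downstream analysis, which is the motivation for the metadata objects described next.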

### Metadata

If storing the raw sequence of current samples is too memory intensive, there are two ways to get rid of lists of floats representing the current, which take up the vast majority of the memory ( >~99% ).

1) Initialize a MetaSegment object instead of a Segment, and feed in whatever statistics you'd like to save. This will prevent the current from ever being saved to a second object. For this example, let's assume you have a list of starts and ends of segments in an event, for instance loaded from a cache.

```

# Build lightweight segments that keep summary statistics only
event = Event( current=[...], start=..., file=... )
event.segments = [ MetaSegment( mean=np.mean( event.current[start:end] ),
                                std=np.std( event.current[start:end] ),
                                # float divisor avoids integer division of indices
                                duration=(end-start)/100000.0 )
                   for start, end in zip( starts, ends ) ]

```

In this example, references to the current are not stored in both the event and the segment, which may save memory if you do not wish to keep the raw current after analyzing a file. The duration is divided by 100,000 because abf files store 100,000 samples per second, and we want to convert the integer sample indices of the start and end into a duration in seconds.

2) If you have the memory to store the references, but don't want to accumulate them past a single event, you can parse a file normally, produce normal segments, and then call to\_meta() to turn them into MetaSegments. This does not require any calculation on the user's part, but does require that the segment contained all current samples at one point.

```

event = Event( current=[...], start=..., file=... )
event.parse( parser=SpeedyStatSplit() )

# Drop the raw current from each segment, keeping only its statistics
for segment in event.segments:
    segment.to_meta()

```

You may have noticed that every datatype implements a to\_meta() method, which simply retypes the object to "Meta..." and removes the current attribute along with all references to that list. Remember that in Python, a list continues to exist as long as any reference to it exists. This means that your file contains the list of ionic current, and all events and segments simply contain pointers to that list, so meta-izing an event or segment by itself probably won't help much in terms of memory. However, you can meta-ize the File, which will meta-ize everything in the file tree: calling to\_meta() on a file causes to\_meta() to be called on each event, which in turn calls to\_meta() on every segment, removing every reference to the list and leaving it for garbage collection.
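The reference behavior described above can be observed directly with a small stand-in for the file tree. The `Samples` and `Node` classes below are hypothetical and exist only for this demonstration; they are not PyPore classes. The sketch also assumes CPython, where an object is freed as soon as its last reference disappears.

```python
import weakref


class Samples(list):
    """A list subclass, used only so a weak reference can watch its lifetime."""


class Node(object):
    """Hypothetical stand-in for a File, Event, or Segment."""

    def __init__(self, current, children=()):
        self.current = current
        self.children = list(children)

    def to_meta(self):
        # Meta-ize the whole subtree, then drop our own reference.
        for child in self.children:
            child.to_meta()
        self.__dict__.pop("current", None)


samples = Samples([40.0, 39.5, 20.1, 19.8, 40.2])
probe = weakref.ref(samples)  # does not keep the list alive

segment = Node(samples)
event = Node(samples, [segment])
file_node = Node(samples, [event])
del samples  # only the three nodes reference the list now

segment.to_meta()
alive_after_segment = probe() is not None  # event and file still hold it

file_node.to_meta()
alive_after_file = probe() is not None  # every reference is gone

print(alive_after_segment, alive_after_file)
```

Meta-izing only the segment leaves the list alive because the event and file still point to it, while meta-izing from the top of the tree releases it.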

# Parsers

Given that both Events and Files inherit from the Segment class, any parser can be used on both Events and Files. However, some were written for the express purpose of event detection or segmentation, and are better suited for that task.