
🥳 What is Lumos?

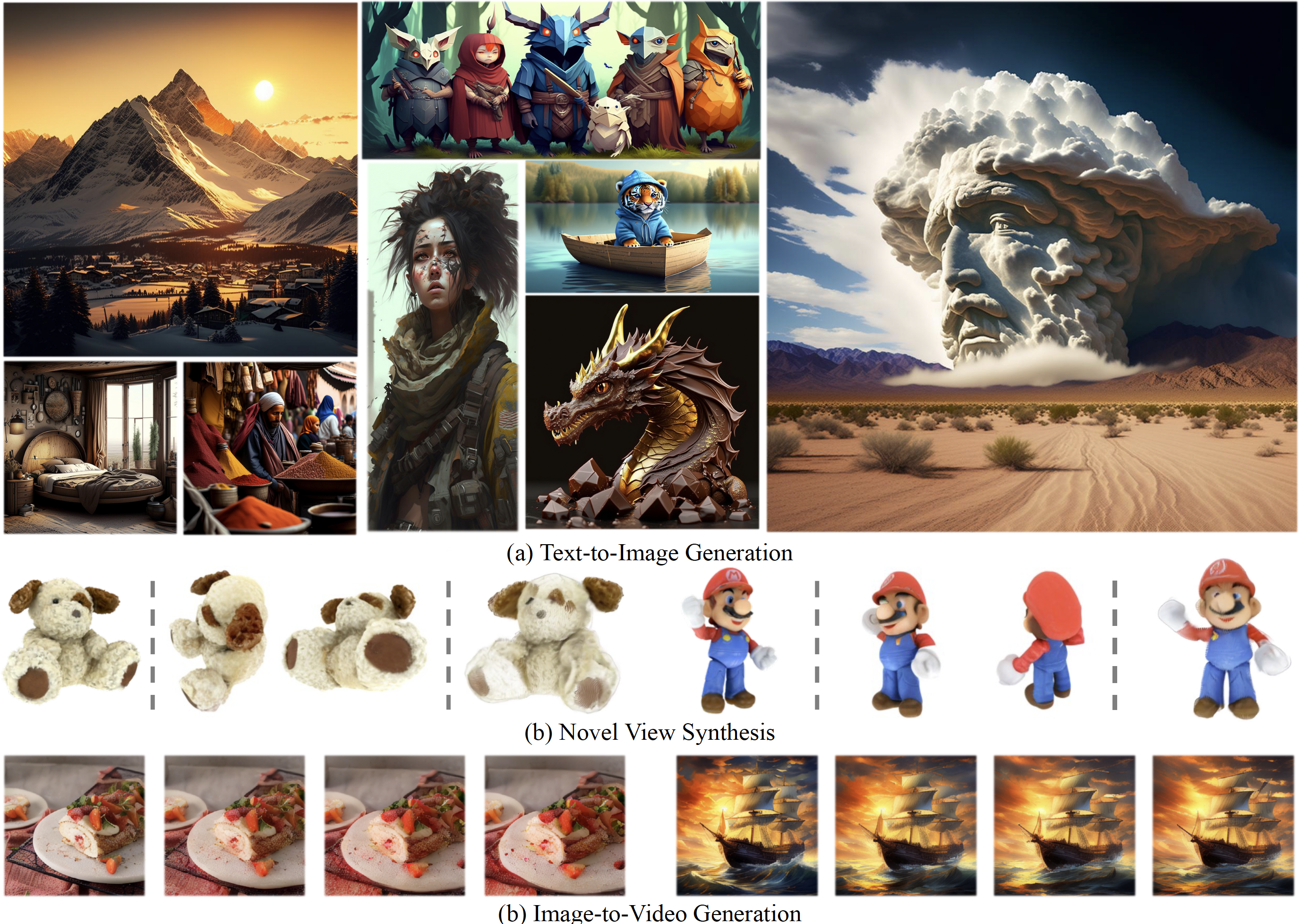

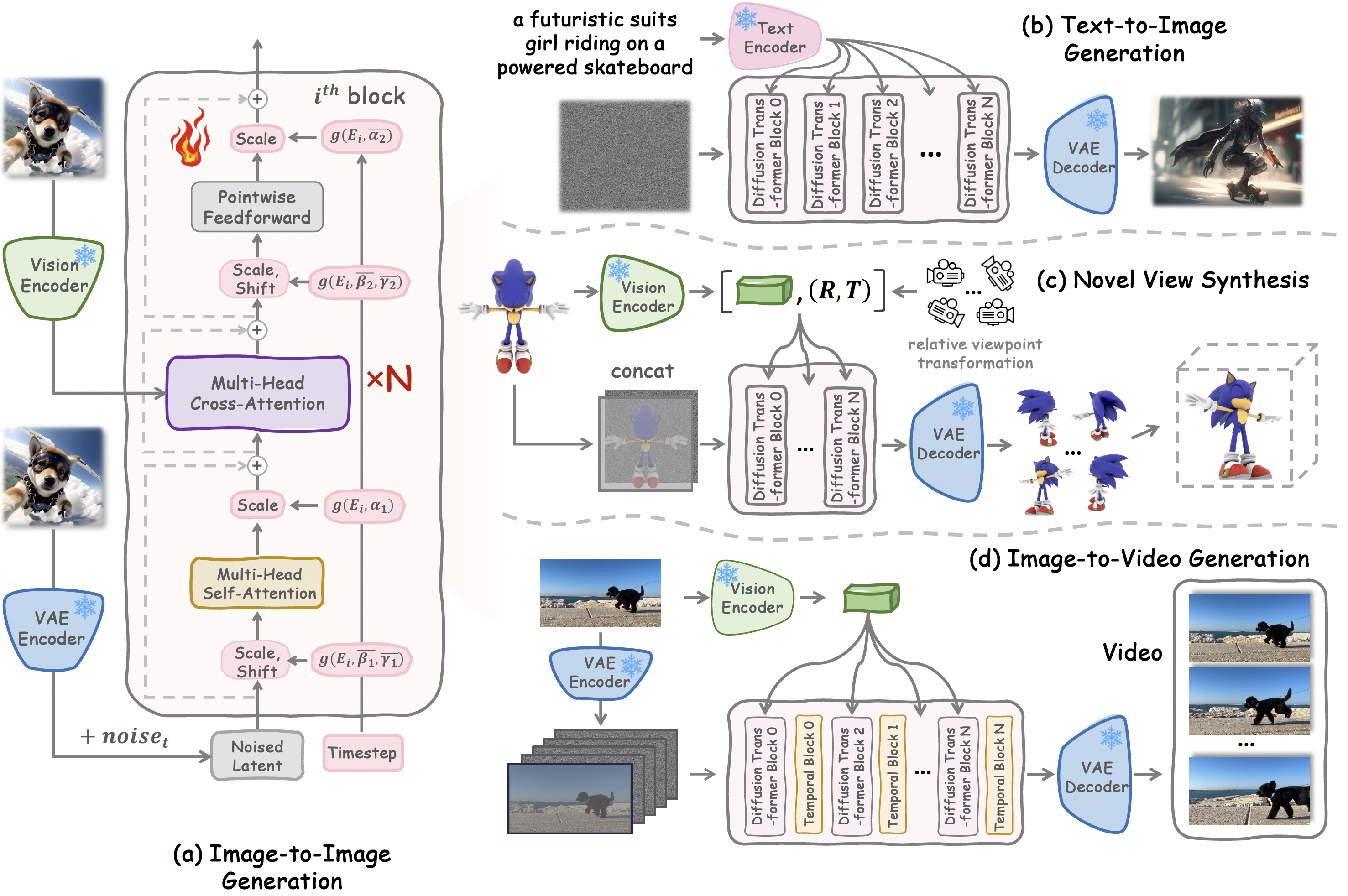

TL;DR: Lumos is a pure vision-based generative framework that confirms the feasibility and scalability of learning visual generative priors. It can be efficiently adapted to visual generative tasks such as text-to-image, image-to-3D, and image-to-video generation.

Full abstract:

Although text-to-image (T2I) models have recently thrived as visual generative priors, their reliance on high-quality text-image pairs makes scaling up expensive. We argue that grasping the cross-modality alignment is not a necessity for a sound visual generative prior, whose focus should be on texture modeling. Such a philosophy inspires us to study image-to-image (I2I) generation, where models can learn from in-the-wild images in a self-supervised manner. We first develop a pure vision-based training framework, Lumos, and confirm the feasibility and the scalability of learning I2I models. We then find that, as an upstream task of T2I, our I2I model serves as a more foundational visual prior and achieves on-par or better performance than existing T2I models using only 1/10 of the text-image pairs for fine-tuning. We further demonstrate the superiority of I2I priors over T2I priors on some text-irrelevant visual generative tasks, like image-to-3D and image-to-video.

🪄✨ Lumos Model Card

🚀 Model Structure

Lumos is built from transformer blocks for latent diffusion and can be applied to various visual generative tasks, such as text-to-image, image-to-3D, and image-to-video generation.

Source code is available at https://github.com/xiaomabufei/lumos.

📋 Model Description

- Developed by: Lumos

- Model type: Diffusion-Transformer-based generative model

- License: CreativeML Open RAIL++-M License

- Model Description: Lumos-I2I generates images from image prompts. It is a Transformer Latent Diffusion Model that uses a fixed, pretrained vision encoder (DINO). Lumos-T2I generates images from text prompts. It is a Transformer Latent Diffusion Model that uses a fixed, pretrained text encoder (T5).

- Resources for more information: Check out our GitHub Repository and the Lumos report on arXiv.
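
The description above pairs each variant with a fixed, pretrained condition encoder (DINO for Lumos-I2I, T5 for Lumos-T2I). One way to picture that split is sketched below in plain Python. This is a toy illustration, not the Lumos API: `FrozenEncoder`, `ToyDenoiser`, `generate`, and both toy encoders are hypothetical names, the "denoising" update is a simple interpolation rather than a real diffusion step, and the idea that both variants share one conditioning interface is an assumption that this card does not state.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Vector = List[float]


@dataclass(frozen=True)
class FrozenEncoder:
    """Hypothetical stand-in for a fixed, pretrained encoder (e.g. DINO or T5)."""
    name: str
    encode_fn: Callable[[object], Vector]

    def encode(self, prompt: object) -> Vector:
        # The encoder is only queried, never updated.
        return self.encode_fn(prompt)


class ToyDenoiser:
    """Hypothetical stand-in for the trainable diffusion transformer."""

    def __init__(self, step_size: float = 0.5) -> None:
        self.step_size = step_size

    def denoise_step(self, latent: Sequence[float], condition: Sequence[float]) -> Vector:
        # Toy update (NOT a real diffusion step): move each latent value
        # a fixed fraction of the way toward the condition vector.
        return [z + self.step_size * (c - z) for z, c in zip(latent, condition)]


def generate(
    encoder: FrozenEncoder,
    denoiser: ToyDenoiser,
    prompt: object,
    init_latent: Sequence[float],
    num_steps: int = 4,
) -> Vector:
    """Encode the prompt once, then refine the latent for num_steps iterations."""
    condition = encoder.encode(prompt)
    latent = list(init_latent)
    for _ in range(num_steps):
        latent = denoiser.denoise_step(latent, condition)
    return latent


# Toy encoders: each maps a prompt to a 2-dimensional condition vector.
image_encoder = FrozenEncoder(
    "toy-image-encoder",
    lambda pixels: [sum(pixels) / len(pixels), float(max(pixels))],
)
text_encoder = FrozenEncoder(
    "toy-text-encoder",
    lambda text: [len(text) / 10.0, float(text.count(" "))],
)

denoiser = ToyDenoiser(step_size=0.5)
# I2I-style call: the prompt is a (toy) image given as a flat list of pixel values.
i2i_latent = generate(image_encoder, denoiser, [0.0, 1.0, 2.0], [0.0, 0.0])
# T2I-style call: the prompt is a string.
t2i_latent = generate(text_encoder, denoiser, "a red fox", [0.0, 0.0])
```

In this sketch only the encoder changes between the two calls. The real model weights and inference scripts are in the GitHub repository linked above.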
