---

license: cc-by-nc-4.0

language:

- en

metrics:

- accuracy

base_model:

- meta-llama/Llama-3.3-70B-Instruct

---

# CoALM-70B: Conversational Agentic Language Model


## Model Description

**CoALM-70B** is our mid-scale **Conversational Agentic Language Model**, designed to integrate **Task-Oriented Dialogue (TOD) capabilities** with **Language Agent (LA) functionalities** at a **larger scale** than its predecessor, CoALM-8B. It is trained on **CoALM-IT**, a multi-task dataset that interleaves **multi-turn ReAct reasoning** with **complex API usage**, and achieves **state-of-the-art performance** across TOD and function-calling benchmarks.

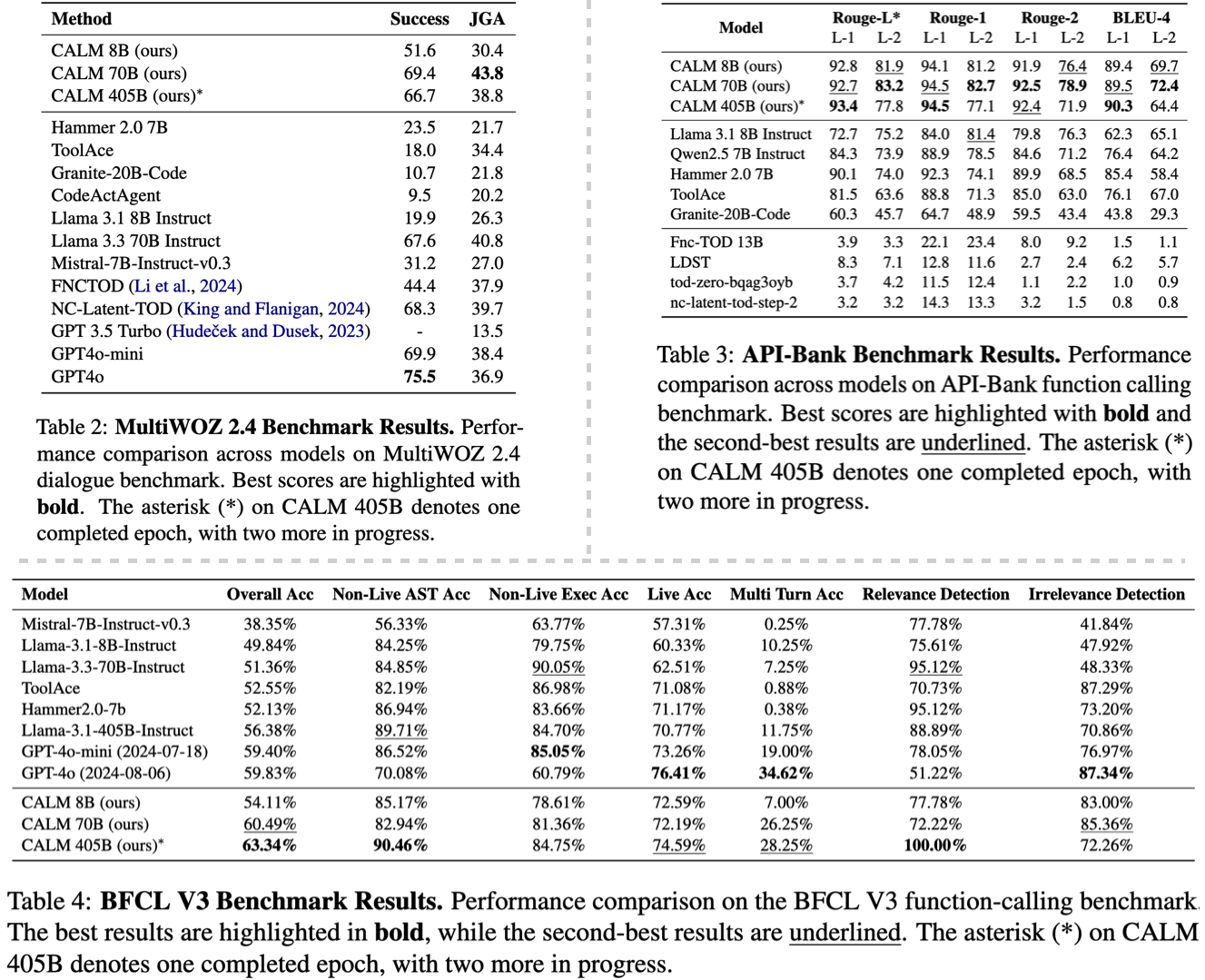

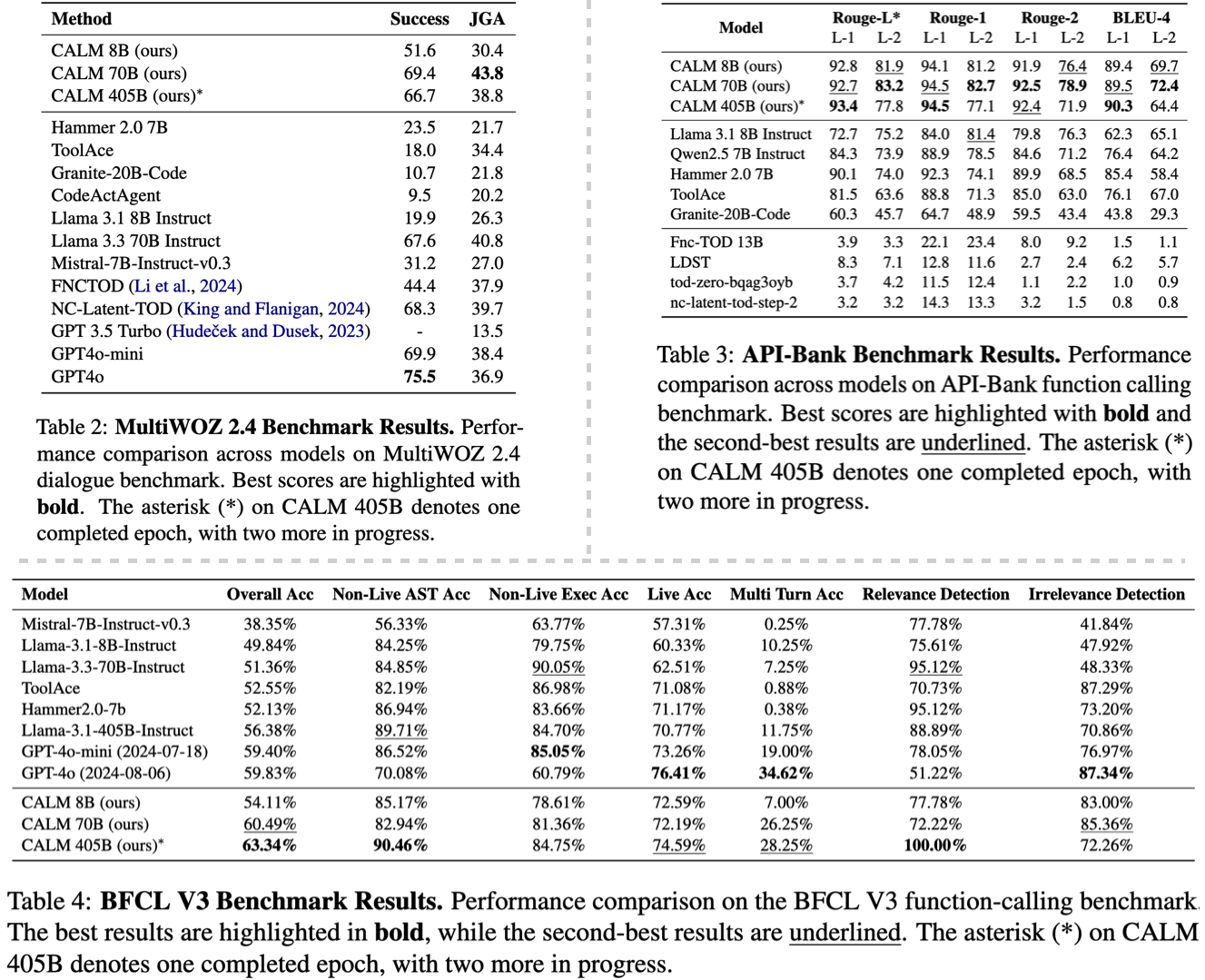

CoALM-70B has been fine-tuned on a **comprehensive multi-task dataset** covering dialogue state tracking, function calling, and multi-turn reasoning. It surpasses even proprietary models such as **GPT-4o** on major conversational evaluation benchmarks: **MultiWOZ 2.4 (TOD), BFCL V3 (LA), and API-Bank (LA).**

## Model Sources

- 📝 **Paper:** https://arxiv.org/abs/2502.08820

- 🌐 **Project Page:** https://emrecanacikgoz.github.io/CoALM/

- 💻 **Repository:** https://github.com/oumi-ai/oumi/tree/main/configs/projects/CALM

- 💎 **Dataset:** https://huggingface.co/datasets/uiuc-convai/CoALM-IT

---

## Model Details

- **Model Name:** CoALM-70B

- **Developed by:** Collaboration between the UIUC Conversational AI Lab and Oumi

- **License:** cc-by-nc-4.0

- **Architecture:** Fine-tuned **Llama 3.3 70B Instruct**

- **Parameter Count:** 70B

- **Training Data:** CoALM-IT

- **Training Type:** Full Fine-tuning (FFT)

- **Fine-tuning Framework:** [Oumi](https://github.com/oumi-ai/oumi)

- **Training Hardware:** 8 NVIDIA H100 GPUs

- **Training Duration:** ~24 hours

- **Evaluation Benchmarks:** MultiWOZ 2.4, BFCL V3, API-Bank

- **Release Date:** February 5, 2025

---

## Capabilities and Features

### 🗣 Conversational Agentic Abilities

- **Multi-turn Dialogue Mastery:** Handles long conversations with accurate state tracking.

- **Advanced Function Calling:** Dynamically selects and executes API calls for task completion.

- **Enhanced ReAct-based Reasoning:** Integrates structured reasoning (User-Thought-Action-Observation-Thought-Response).

- **Zero-Shot Generalization:** Excels in unseen function-calling and TOD tasks.
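
The User-Thought-Action-Observation-Thought-Response pattern listed above can be sketched as a small control loop. This is a minimal illustration, not CoALM's actual prompt or output format: the `Thought:`/`Action:`/`Response:` line labels, the JSON action payload, the `find_restaurant` tool, and the `scripted_model` stand-in for the language model are all hypothetical.

```python
import json
from typing import Callable


# Hypothetical tool; a real deployment would call an actual API here.
def find_restaurant(area: str, food: str) -> dict:
    return {"name": "Example Bistro", "area": area, "food": food}


TOOLS: dict[str, Callable[..., dict]] = {"find_restaurant": find_restaurant}


def scripted_model(transcript: list[str]) -> str:
    """Stand-in for the model: emits an action first, then a final response."""
    if not any(line.startswith("Observation:") for line in transcript):
        call = {"name": "find_restaurant",
                "arguments": {"area": "centre", "food": "italian"}}
        return "Thought: I need to search.\nAction: " + json.dumps(call)
    return ("Thought: I have a result.\n"
            "Response: Example Bistro serves italian food in the centre.")


def react_turn(user_utterance: str,
               model: Callable[[list[str]], str],
               max_steps: int = 4) -> tuple[str, list[str]]:
    """Handle one user turn: loop Thought/Action/Observation until a Response."""
    transcript = [f"User: {user_utterance}"]
    for _ in range(max_steps):
        output = model(transcript)
        transcript.extend(output.splitlines())
        for line in output.splitlines():
            if line.startswith("Response:"):
                return line.removeprefix("Response:").strip(), transcript
            if line.startswith("Action:"):
                # Execute the requested tool and feed the result back in.
                call = json.loads(line.removeprefix("Action:"))
                result = TOOLS[call["name"]](**call["arguments"])
                transcript.append("Observation: " + json.dumps(result))
    raise RuntimeError("no Response produced within max_steps")


reply, trace = react_turn("Find me an Italian place in the centre.", scripted_model)
```

Swapping `scripted_model` for a function that calls a real model keeps the loop unchanged; only the parsing would need to match whatever format that model actually emits.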

### 🚀 Benchmark Performance

- **MultiWOZ 2.4 (TOD):** Strong performance in dialogue state tracking and task success.

- **BFCL V3 (LA):** Superior function-calling abilities compared to other language agents.

- **API-Bank (LA):** High accuracy in API call generation and response synthesis.
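
For readers unfamiliar with how dialogue state tracking is scored, one common metric is joint goal accuracy: a turn counts as correct only if every slot in the predicted state matches the gold state. The sketch below illustrates that idea; this card does not state which exact metric definition its results use, and the slot names and example states are made up for illustration.

```python
def joint_goal_accuracy(predicted: list[dict[str, str]],
                        gold: list[dict[str, str]]) -> float:
    """Fraction of turns whose predicted state equals the gold state exactly."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must cover the same turns")
    if not gold:
        return 0.0
    exact_matches = sum(p == g for p, g in zip(predicted, gold))
    return exact_matches / len(gold)


# Illustrative three-turn dialogue; the state accumulates slots over turns.
gold_states = [
    {"restaurant-area": "centre"},
    {"restaurant-area": "centre", "restaurant-food": "italian"},
    {"restaurant-area": "centre", "restaurant-food": "italian",
     "restaurant-people": "2"},
]
predicted_states = [
    {"restaurant-area": "centre"},
    {"restaurant-area": "centre", "restaurant-food": "chinese"},  # one wrong slot
    {"restaurant-area": "centre", "restaurant-food": "italian",
     "restaurant-people": "2"},
]

score = joint_goal_accuracy(predicted_states, gold_states)
print(f"joint goal accuracy: {score:.3f}")
```

A single wrong slot makes the whole turn count as a miss, which is why this metric is stricter than per-slot accuracy.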

---

## Training Process

### 🔧 Fine-tuning Stages

1. **TOD Fine-tuning:** Optimized for dialogue state tracking (e.g., augmented SNIPS in instruction-tuned format).

2. **Function Calling Fine-tuning:** Trained to generate precise API calls from LA datasets.

3. **ReAct-based Fine-tuning:** Enhances multi-turn conversations with API integrations through structured reasoning.

### 🔍 Training Hyperparameters

- **Base Model:** Llama 3.3 70B Instruct

- **LoRA Config:** Rank = 16, Scaling Factor = 32

- **Batch Size:** 7

- **Learning Rate:** 4e-5

- **Optimizer:** AdamW (betas = 0.9, 0.999, epsilon = 1e-8)

- **Precision:** Mixed precision (bfloat16)

- **Warm-up Steps:** 24

- **Gradient Accumulation Steps:** 1
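
The values listed above can be collected in a small configuration object. This is a sketch only: the class and field names are hypothetical and are not Oumi's configuration schema, the linear warm-up shape followed by a constant rate is an assumption (the card gives only the step count and the learning rate), and `lora_alpha` assumes that "Scaling Factor" refers to LoRA's alpha.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingHyperparameters:
    # Values copied from the list above; field names are illustrative only.
    learning_rate: float = 4e-5
    warmup_steps: int = 24
    batch_size: int = 7
    gradient_accumulation_steps: int = 1
    adam_betas: tuple[float, float] = (0.9, 0.999)
    adam_epsilon: float = 1e-8
    lora_rank: int = 16
    lora_alpha: int = 32  # assumption: "Scaling Factor" means LoRA alpha

    def learning_rate_at(self, step: int) -> float:
        """Assumed schedule: linear warm-up to the listed rate, then constant."""
        if step < self.warmup_steps:
            return self.learning_rate * (step + 1) / self.warmup_steps
        return self.learning_rate

    @property
    def samples_per_update(self) -> int:
        # Samples per optimizer step, at whatever scope (per device or
        # global) the listed batch size refers to.
        return self.batch_size * self.gradient_accumulation_steps

    @property
    def lora_scale(self) -> float:
        # Conventional LoRA update scale: alpha / rank.
        return self.lora_alpha / self.lora_rank


hp = TrainingHyperparameters()
print(hp.learning_rate_at(0), hp.learning_rate_at(100), hp.samples_per_update)
```

With gradient accumulation set to 1, each optimizer step consumes exactly one batch of 7 samples at the scope the batch size is defined for.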

---

## 💡 CoALM-IT Dataset

---

## 📊 Benchmark Performance


## Usage

### 🏗 How to Load the Model using HuggingFace

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Download (or load from the local cache) the tokenizer and weights
# from the Hugging Face Hub.
tokenizer = AutoTokenizer.from_pretrained("uiuc-convai/CoALM-70B")
model = AutoModelForCausalLM.from_pretrained("uiuc-convai/CoALM-70B")
```
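
Before loading, it can help to estimate how much memory the weights alone require. The sketch below uses the nominal 70B parameter count and the bfloat16 precision listed in this card; it ignores activations, the KV cache, and framework overhead, and the helper name `weight_memory_gb` is hypothetical.

```python
# Storage size of one parameter for a few common dtypes.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "bfloat16") -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


bf16_gb = weight_memory_gb(70e9)             # nominal 70B parameters
fp32_gb = weight_memory_gb(70e9, "float32")
print(f"bfloat16: ~{bf16_gb:.0f} GB, float32: ~{fp32_gb:.0f} GB")
```

At roughly 140 GB in bfloat16, the weights do not fit on a single 80 GB GPU, so loading typically means sharding across devices, for example by passing `device_map="auto"` to `from_pretrained` (which requires the `accelerate` package).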

### 🛠 Example Oumi Inference

```bash

pip install oumi

# See oumi_infer.yaml in this model's /oumi/ directory.

oumi infer -i -c ./oumi_infer.yaml

```

### 🛠 Example Oumi Fine-Tuning

```bash

pip install oumi

# See oumi_train.yaml in this model's /oumi/ directory.

oumi train -c ./oumi_train.yaml

```

---

- **Scalability to CoALM-405B:** Next iteration will extend capabilities for even larger-scale conversations.

- **Continuous Open-Source Expansion:** Ongoing release of datasets, model weights, and training artifacts to foster community research.

---

## Acknowledgements

We'd like to thank the [Oumi AI Team](https://github.com/oumi-ai/oumi) for collaborating on training the models using the Oumi platform on [Together AI's](https://www.together.ai/) cloud.

## License

This model is licensed under [Creative Commons Attribution-NonCommercial 4.0 (CC BY-NC 4.0)](https://creativecommons.org/licenses/by-nc/4.0/legalcode).

---

## Citation

If you use **CoALM-70B** in your research, please cite:

```

@misc{acikgoz2025singlemodelmastermultiturn,

title={Can a Single Model Master Both Multi-turn Conversations and Tool Use? CoALM: A Unified Conversational Agentic Language Model},

author={Emre Can Acikgoz and Jeremiah Greer and Akul Datta and Ze Yang and William Zeng and Oussama Elachqar and Emmanouil Koukoumidis and Dilek Hakkani-Tür and Gokhan Tur},

year={2025},

eprint={2502.08820},

archivePrefix={arXiv},

primaryClass={cs.AI},

url={https://arxiv.org/abs/2502.08820},

}

```

For more details, visit the [project repository](https://github.com/oumi-ai/oumi/tree/main/configs/projects/calm) or contact **acikgoz2@illinois.edu**.
