Commit

·

f47b137

1

Parent(s):

ab04c92

Updated duplicate pages interface to include subdocuments and review. Updated relevant user guide. Minor package updates

Browse files- README.md +40 -8

- app.py +166 -107

- pyproject.toml +1 -1

- requirements.txt +1 -1

- src/user_guide.qmd +44 -12

- tools/file_conversion.py +17 -5

- tools/find_duplicate_pages.py +269 -139

- tools/helper_functions.py +8 -0

- tools/redaction_review.py +10 -2

README.md

CHANGED

|

@@ -14,7 +14,7 @@ version: 0.7.0

|

|

| 14 |

|

| 15 |

Redact personally identifiable information (PII) from documents (pdf, images), open text, or tabular data (xlsx/csv/parquet). Please see the [User Guide](#user-guide) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 16 |

|

| 17 |

-

To identify text in documents, the 'local' text/OCR image analysis uses spacy/tesseract, and works

|

| 18 |

|

| 19 |

After redaction, review suggested redactions on the 'Review redactions' tab. The original pdf can be uploaded here alongside a '...redaction_file.csv' to continue a previous redaction/review task. See the 'Redaction settings' tab to choose which pages to redact, the type of information to redact (e.g. people, places), or custom terms to always include/ exclude from redaction.

|

| 20 |

|

|

@@ -181,6 +181,8 @@ If the table is empty, you can add a new entry, you can add a new row by clickin

|

|

| 181 |

|

| 182 |

|

| 183 |

|

|

|

|

|

|

|

| 184 |

### Redacting additional types of personal information

|

| 185 |

|

| 186 |

You may want to redact additional types of information beyond the defaults, or you may not be interested in default suggested entity types. There are dates in the example complaint letter. Say we wanted to redact those dates also?

|

|

@@ -390,21 +392,49 @@ You can find this option at the bottom of the 'Redaction Settings' tab. Upload m

|

|

| 390 |

|

| 391 |

The files for this section are stored [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/duplicate_page_find_in_app/).

|

| 392 |

|

| 393 |

-

Some redaction tasks involve removing duplicate pages of text that may exist across multiple documents. This feature

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 394 |

|

| 395 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 396 |

|

| 397 |

-

|

| 398 |

|

| 399 |

-

|

|

|

|

| 400 |

|

| 401 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 402 |

|

| 403 |

|

| 404 |

|

| 405 |

-

|

| 406 |

|

| 407 |

-

above.

|

| 410 |

|

|

@@ -505,6 +535,8 @@ Again, a lot can potentially go wrong with AWS solutions that are insecure, so b

|

|

| 505 |

|

| 506 |

## Modifying existing redaction review files

|

| 507 |

|

|

|

|

|

|

|

| 508 |

You can find the folder containing the files discussed in this section [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/merge_review_files/).

|

| 509 |

|

| 510 |

As well as serving as inputs to the document redaction app's review function, the 'review_file.csv' output can be modified outside of the app, and also merged with others from multiple redaction attempts on the same file. This gives you the flexibility to change redaction details outside of the app.

|

|

|

|

| 14 |

|

| 15 |

Redact personally identifiable information (PII) from documents (pdf, images), open text, or tabular data (xlsx/csv/parquet). Please see the [User Guide](#user-guide) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 16 |

|

| 17 |

+

To identify text in documents, the 'local' text/OCR image analysis uses spacy/tesseract, and works quite well for documents with typed text. If available, choose 'AWS Textract service' to redact more complex elements e.g. signatures or handwriting. Then, choose a method for PII identification. 'Local' is quick and gives good results if you are primarily looking for a custom list of terms to redact (see Redaction settings). If available, AWS Comprehend gives better results at a small cost.

|

| 18 |

|

| 19 |

After redaction, review suggested redactions on the 'Review redactions' tab. The original pdf can be uploaded here alongside a '...redaction_file.csv' to continue a previous redaction/review task. See the 'Redaction settings' tab to choose which pages to redact, the type of information to redact (e.g. people, places), or custom terms to always include/ exclude from redaction.

|

| 20 |

|

|

|

|

| 181 |

|

| 182 |

|

| 183 |

|

| 184 |

+

**Note:** As of version 0.7.0 you can now apply your whole page redaction list directly to the document file currently under review by clicking the 'Apply whole page redaction list to document currently under review' button that appears here.

|

| 185 |

+

|

| 186 |

### Redacting additional types of personal information

|

| 187 |

|

| 188 |

You may want to redact additional types of information beyond the defaults, or you may not be interested in default suggested entity types. There are dates in the example complaint letter. Say we wanted to redact those dates also?

|

|

|

|

| 392 |

|

| 393 |

The files for this section are stored [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/duplicate_page_find_in_app/).

|

| 394 |

|

| 395 |

+

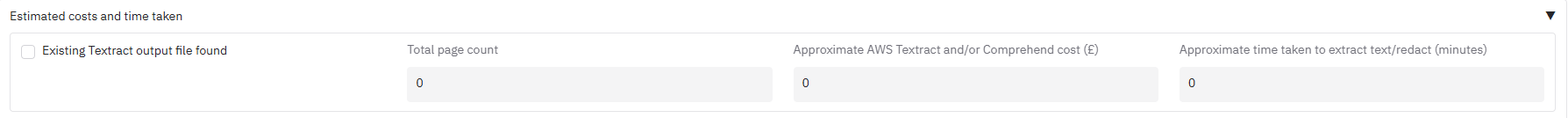

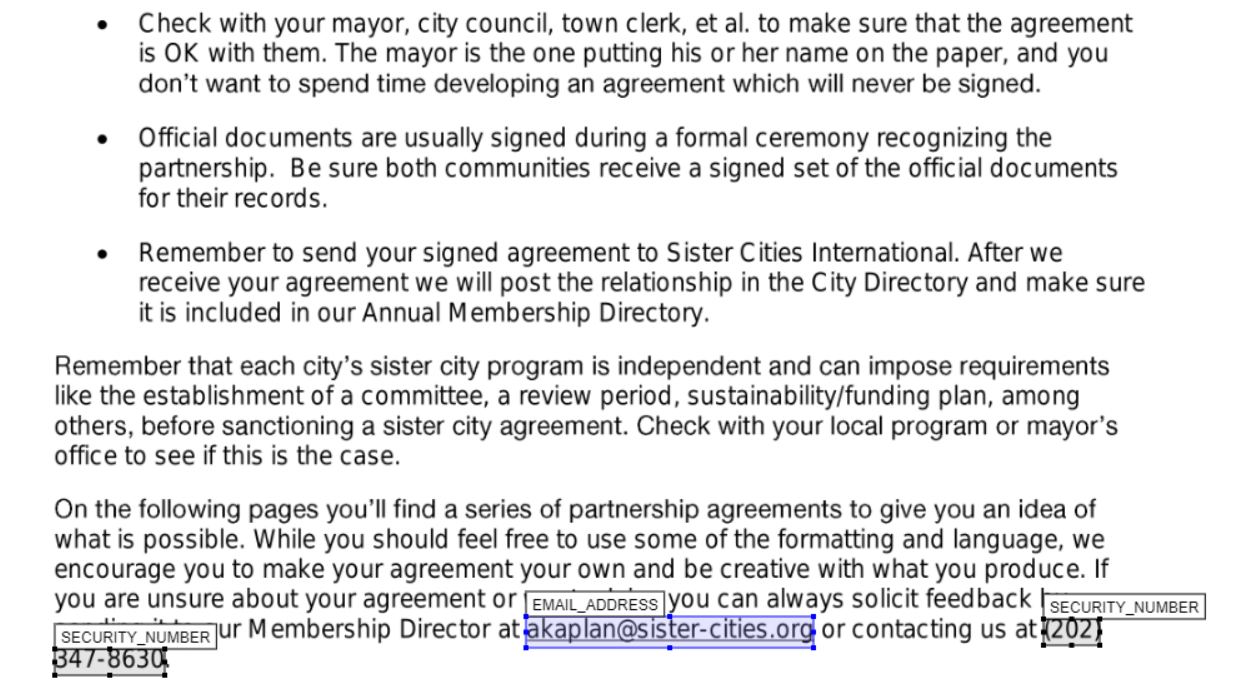

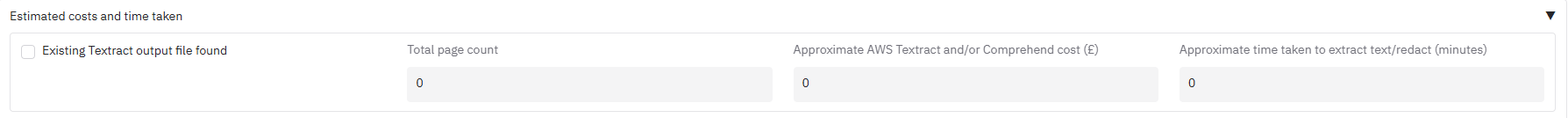

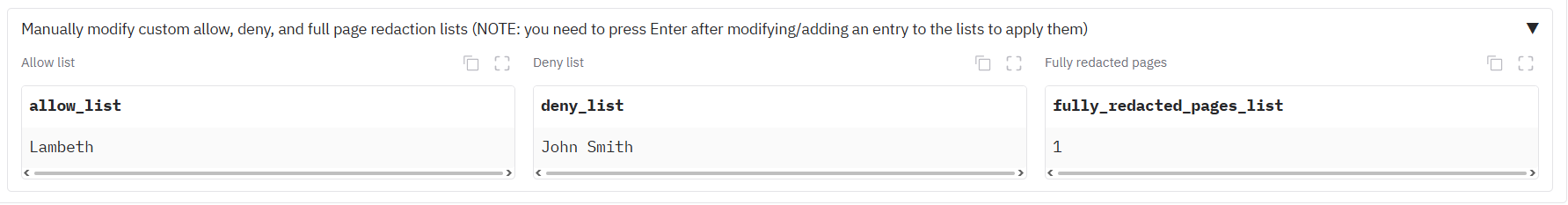

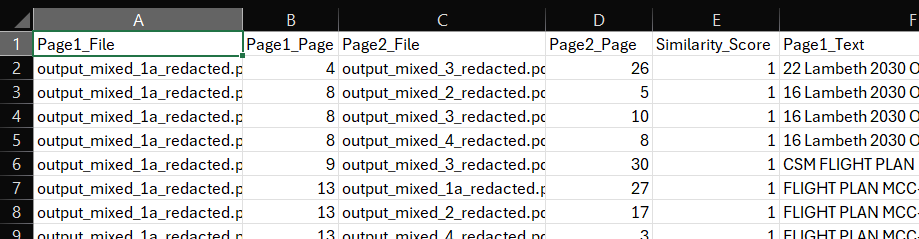

Some redaction tasks involve removing duplicate pages of text that may exist across multiple documents. This feature helps you find and remove duplicate content that may exist in single or multiple documents. It can identify everything from single identical pages to multi-page sections (subdocuments). The process involves three main steps: configuring the analysis, reviewing the results in the interactive interface, and then using the generated files to perform the redactions.

|

| 396 |

+

|

| 397 |

+

|

| 398 |

+

|

| 399 |

+

**Step 1: Upload and Configure the Analysis**

|

| 400 |

+

First, navigate to the "Identify duplicate pages" tab. Upload all the ocr_output.csv files you wish to compare into the file area. These files are generated every time you run a redaction task and contain the text for each page of a document.

|

| 401 |

+

|

| 402 |

+

For our example, you can upload the four 'ocr_output.csv' files provided in the example folder into the file area. Click 'Identify duplicate pages' and you will see a number of files returned. In case you want to see the original PDFs, they are available [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/duplicate_page_find_in_app/input_pdfs/).

|

| 403 |

+

|

| 404 |

+

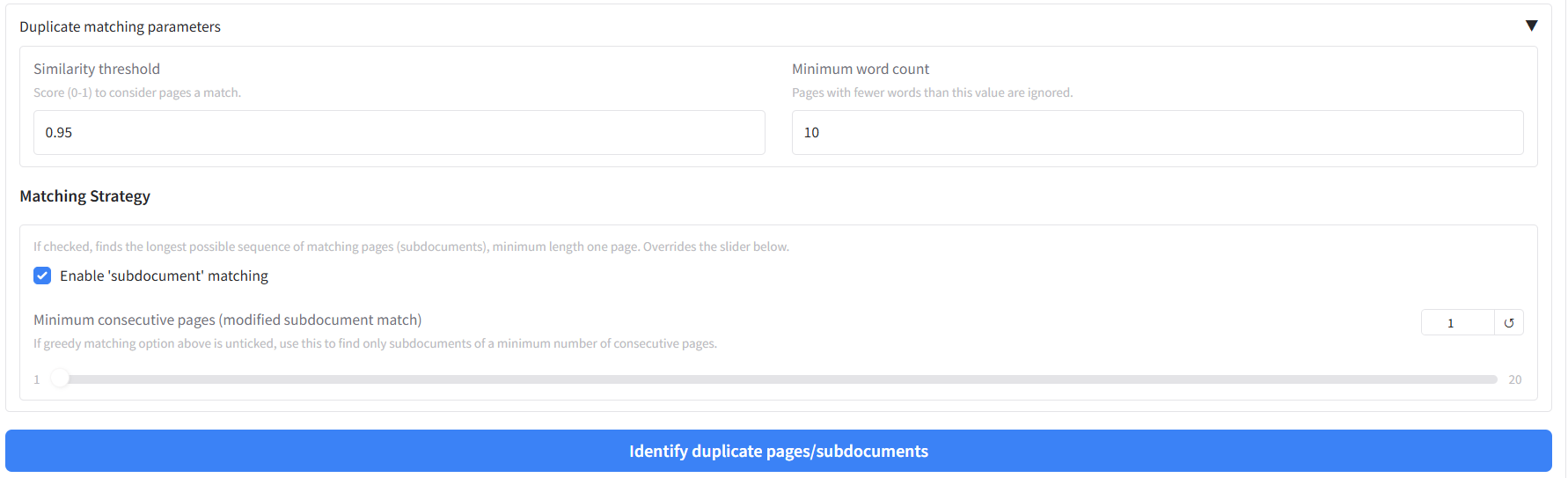

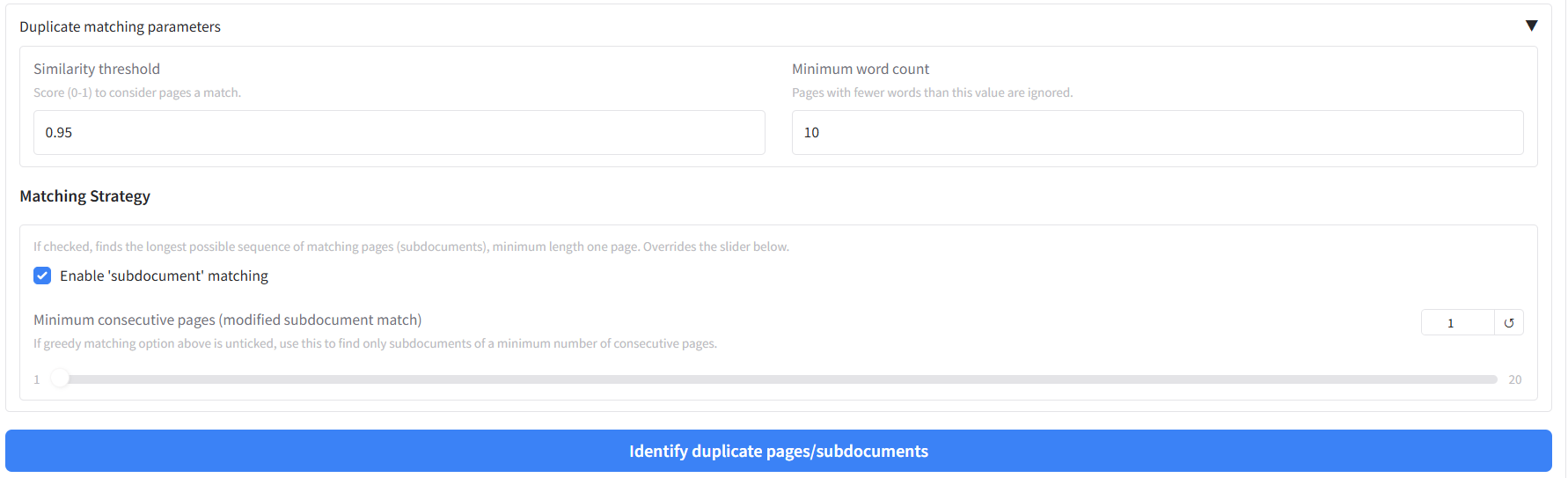

The default options will search for matching subdocuments of any length. Before running the analysis, you can configure these matching parameters to tell the tool what you're looking for:

|

| 405 |

+

|

| 406 |

+

|

| 407 |

|

| 408 |

+

*Matching Parameters*

|

| 409 |

+

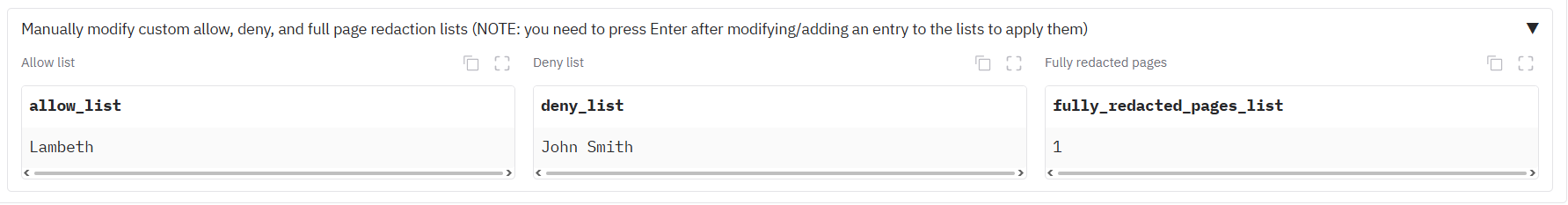

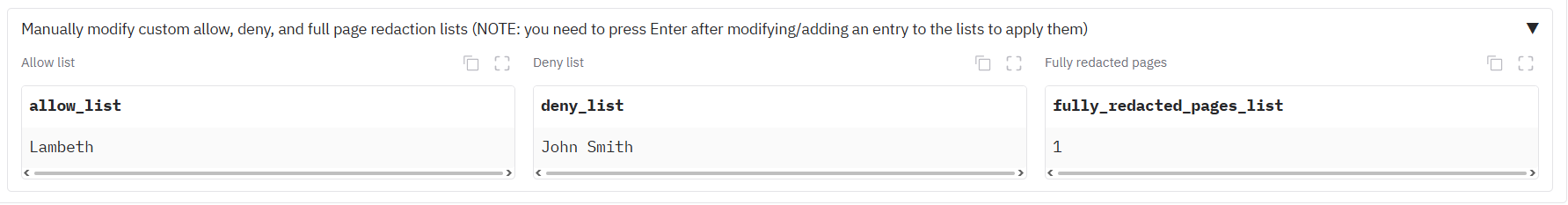

- **Similarity Threshold:** A score from 0 to 1. Pages or sequences of pages with a calculated text similarity above this value will be considered a match. The default of 0.9 (90%) is a good starting point for finding near-identical pages.

|

| 410 |

+

- **Min Word Count:** Pages with fewer words than this value will be completely ignored during the comparison. This is extremely useful for filtering out blank pages, title pages, or boilerplate pages that might otherwise create noise in the results. The default is 10.

|

| 411 |

+

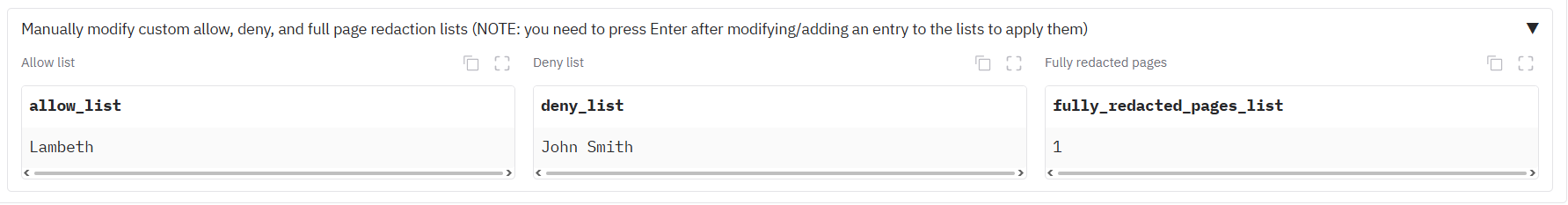

- **Choosing a Matching Strategy:** You have three main options to find duplicate content.

|

| 412 |

+

- *'Subdocument' matching (default):* Use this to find the longest possible sequence of matching pages. The tool will find an initial match and then automatically expand it forward page-by-page until the consecutive match breaks. This is the best method for identifying complete copied chapters or sections of unknown length. This is enabled by default by ticking the "Enable 'subdocument' matching" box. This setting overrides the described below.

|

| 413 |

+

- *Minimum length subdocument matching:* Use this to find sequences of consecutively matching pages with a minimum page lenght. For example, setting the slider to 3 will only return sections that are at least 3 pages long. How to enable: Untick the "Enable 'subdocument' matching" box and set the "Minimum consecutive pages" slider to a value greater than 1.

|

| 414 |

+

- *Single Page Matching:* Use this to find all individual page pairs that are similar to each other. Leave the "Enable 'subdocument' matching" box unchecked and keep the "Minimum consecutive pages" slider at 1.

|

| 415 |

|

| 416 |

+

Once your parameters are set, click the "Identify duplicate pages/subdocuments" button.

|

| 417 |

|

| 418 |

+

**Step 2: Review Results in the Interface**

|

| 419 |

+

After the analysis is complete, the results will be displayed directly in the interface.

|

| 420 |

|

| 421 |

+

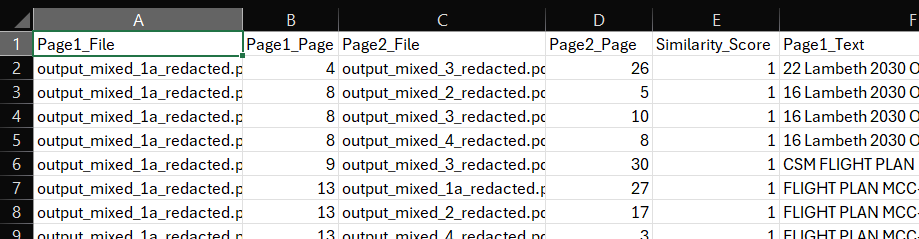

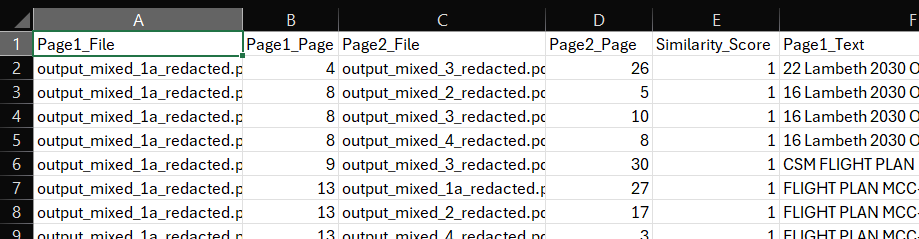

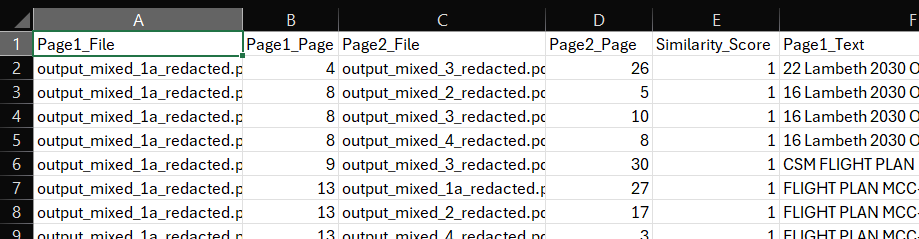

*Analysis Summary:* A table will appear showing a summary of all the matches found. The columns will change depending on the matching strategy you chose. For subdocument matches, it will show the start and end pages of the matched sequence.

|

| 422 |

+

|

| 423 |

+

*Interactive Preview:* This is the most important part of the review process. Click on any row in the summary table. The full text of the matching page(s) will appear side-by-side in the "Full Text Preview" section below, allowing you to instantly verify the accuracy of the match.

|

| 424 |

+

|

| 425 |

+

|

| 426 |

+

|

| 427 |

+

**Step 3: Download and Use the Output Files**

|

| 428 |

+

The analysis also generates a set of downloadable files for your records and for performing redactions.

|

| 429 |

+

|

| 430 |

+

|

| 431 |

+

- page_similarity_results.csv: This is a detailed report of the analysis you just ran. It shows a breakdown of the pages from each file that are most similar to each other above the similarity threshold. You can compare the text in the two columns 'Page_1_Text' and 'Page_2_Text'. For single-page matches, it will list each pair of matching pages. For subdocument matches, it will list the start and end pages of each matched sequence, along with the total length of the match.

|

| 432 |

|

| 433 |

|

| 434 |

|

| 435 |

+

- [Original_Filename]_pages_to_redact.csv: For each input document that was found to contain duplicate content, a separate redaction list is created. This is a simple, one-column CSV file containing a list of all page numbers that should be removed. To use these files, you can either upload the original document (i.e. the PDF) on the 'Review redactions' tab, and then click on the 'Apply relevant duplicate page output to document currently under review' button. You should see the whole pages suggested for redaction on the 'Review redactions' tab. Alternatively, you can reupload the file into the whole page redaction section as described in the ['Full page redaction list example' section](#full-page-redaction-list-example).

|

| 436 |

|

| 437 |

+

|

| 438 |

|

| 439 |

If you want to combine the results from this redaction process with previous redaction tasks for the same PDF, you could merge review file outputs following the steps described in [Merging existing redaction review files](#merging-existing-redaction-review-files) above.

|

| 440 |

|

|

|

|

| 535 |

|

| 536 |

## Modifying existing redaction review files

|

| 537 |

|

| 538 |

+

*Note:* As of version 0.7.0 you can now modify redaction review files directly in the app on the 'Review redactions' tab. Open the accordion 'View and edit review data' under the file input area. You can edit review file data cells here - press Enter to apply changes. You should see the effect on the current page if you click the 'Save changes on current page to file' button to the right.

|

| 539 |

+

|

| 540 |

You can find the folder containing the files discussed in this section [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/merge_review_files/).

|

| 541 |

|

| 542 |

As well as serving as inputs to the document redaction app's review function, the 'review_file.csv' output can be modified outside of the app, and also merged with others from multiple redaction attempts on the same file. This gives you the flexibility to change redaction details outside of the app.

|

app.py

CHANGED

|

@@ -3,37 +3,39 @@ import pandas as pd

|

|

| 3 |

import gradio as gr

|

| 4 |

from gradio_image_annotation import image_annotator

|

| 5 |

from tools.config import OUTPUT_FOLDER, INPUT_FOLDER, RUN_DIRECT_MODE, MAX_QUEUE_SIZE, DEFAULT_CONCURRENCY_LIMIT, MAX_FILE_SIZE, GRADIO_SERVER_PORT, ROOT_PATH, GET_DEFAULT_ALLOW_LIST, ALLOW_LIST_PATH, S3_ALLOW_LIST_PATH, FEEDBACK_LOGS_FOLDER, ACCESS_LOGS_FOLDER, USAGE_LOGS_FOLDER, REDACTION_LANGUAGE, GET_COST_CODES, COST_CODES_PATH, S3_COST_CODES_PATH, ENFORCE_COST_CODES, DISPLAY_FILE_NAMES_IN_LOGS, SHOW_COSTS, RUN_AWS_FUNCTIONS, DOCUMENT_REDACTION_BUCKET, SHOW_WHOLE_DOCUMENT_TEXTRACT_CALL_OPTIONS, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_BUCKET, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_INPUT_SUBFOLDER, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_OUTPUT_SUBFOLDER, SESSION_OUTPUT_FOLDER, LOAD_PREVIOUS_TEXTRACT_JOBS_S3, TEXTRACT_JOBS_S3_LOC, TEXTRACT_JOBS_LOCAL_LOC, HOST_NAME, DEFAULT_COST_CODE, OUTPUT_COST_CODES_PATH, OUTPUT_ALLOW_LIST_PATH, COGNITO_AUTH, SAVE_LOGS_TO_CSV, SAVE_LOGS_TO_DYNAMODB, ACCESS_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_ACCESS_LOG_HEADERS, CSV_ACCESS_LOG_HEADERS, FEEDBACK_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_FEEDBACK_LOG_HEADERS, CSV_FEEDBACK_LOG_HEADERS, USAGE_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_USAGE_LOG_HEADERS, CSV_USAGE_LOG_HEADERS, TEXTRACT_JOBS_S3_INPUT_LOC, TEXTRACT_TEXT_EXTRACT_OPTION, NO_REDACTION_PII_OPTION, TEXT_EXTRACTION_MODELS, PII_DETECTION_MODELS, DEFAULT_TEXT_EXTRACTION_MODEL, DEFAULT_PII_DETECTION_MODEL, LOG_FILE_NAME, CHOSEN_COMPREHEND_ENTITIES, FULL_COMPREHEND_ENTITY_LIST, CHOSEN_REDACT_ENTITIES, FULL_ENTITY_LIST, FILE_INPUT_HEIGHT, TABULAR_PII_DETECTION_MODELS

|

| 6 |

-

from tools.helper_functions import put_columns_in_df, get_connection_params, reveal_feedback_buttons, custom_regex_load, reset_state_vars, load_in_default_allow_list, reset_review_vars, merge_csv_files, load_all_output_files, update_dataframe, check_for_existing_textract_file, load_in_default_cost_codes, enforce_cost_codes, calculate_aws_costs, calculate_time_taken, reset_base_dataframe, reset_ocr_base_dataframe, update_cost_code_dataframe_from_dropdown_select, check_for_existing_local_ocr_file, reset_data_vars, reset_aws_call_vars

|

| 7 |

from tools.aws_functions import download_file_from_s3, upload_log_file_to_s3

|

| 8 |

from tools.file_redaction import choose_and_run_redactor

|

| 9 |

from tools.file_conversion import prepare_image_or_pdf, get_input_file_names

|

| 10 |

-

from tools.redaction_review import apply_redactions_to_review_df_and_files, update_all_page_annotation_object_based_on_previous_page, decrease_page, increase_page, update_annotator_object_and_filter_df, update_entities_df_recogniser_entities, update_entities_df_page, update_entities_df_text, df_select_callback_dataframe_row, convert_df_to_xfdf, convert_xfdf_to_dataframe, reset_dropdowns, exclude_selected_items_from_redaction, undo_last_removal, update_selected_review_df_row_colour, update_all_entity_df_dropdowns, df_select_callback_cost, update_other_annotator_number_from_current, update_annotator_page_from_review_df, df_select_callback_ocr, df_select_callback_textract_api, get_all_rows_with_same_text, increase_bottom_page_count_based_on_top

|

| 11 |

from tools.data_anonymise import anonymise_data_files

|

| 12 |

from tools.auth import authenticate_user

|

| 13 |

from tools.load_spacy_model_custom_recognisers import custom_entities

|

| 14 |

from tools.custom_csvlogger import CSVLogger_custom

|

| 15 |

-

from tools.find_duplicate_pages import

|

| 16 |

from tools.textract_batch_call import analyse_document_with_textract_api, poll_whole_document_textract_analysis_progress_and_download, load_in_textract_job_details, check_for_provided_job_id, check_textract_outputs_exist, replace_existing_pdf_input_for_whole_document_outputs

|

| 17 |

|

| 18 |

# Suppress downcasting warnings

|

| 19 |

pd.set_option('future.no_silent_downcasting', True)

|

| 20 |

|

| 21 |

# Convert string environment variables to string or list

|

| 22 |

-

SAVE_LOGS_TO_CSV =

|

| 23 |

-

|

|

|

|

|

|

|

| 24 |

|

| 25 |

-

if CSV_ACCESS_LOG_HEADERS: CSV_ACCESS_LOG_HEADERS =

|

| 26 |

-

if CSV_FEEDBACK_LOG_HEADERS: CSV_FEEDBACK_LOG_HEADERS =

|

| 27 |

-

if CSV_USAGE_LOG_HEADERS: CSV_USAGE_LOG_HEADERS =

|

| 28 |

|

| 29 |

-

if DYNAMODB_ACCESS_LOG_HEADERS: DYNAMODB_ACCESS_LOG_HEADERS =

|

| 30 |

-

if DYNAMODB_FEEDBACK_LOG_HEADERS: DYNAMODB_FEEDBACK_LOG_HEADERS =

|

| 31 |

-

if DYNAMODB_USAGE_LOG_HEADERS: DYNAMODB_USAGE_LOG_HEADERS =

|

| 32 |

|

| 33 |

-

if CHOSEN_COMPREHEND_ENTITIES: CHOSEN_COMPREHEND_ENTITIES =

|

| 34 |

-

if FULL_COMPREHEND_ENTITY_LIST: FULL_COMPREHEND_ENTITY_LIST =

|

| 35 |

-

if CHOSEN_REDACT_ENTITIES: CHOSEN_REDACT_ENTITIES =

|

| 36 |

-

if FULL_ENTITY_LIST: FULL_ENTITY_LIST =

|

| 37 |

|

| 38 |

# Add custom spacy recognisers to the Comprehend list, so that local Spacy model can be used to pick up e.g. titles, streetnames, UK postcodes that are sometimes missed by comprehend

|

| 39 |

CHOSEN_COMPREHEND_ENTITIES.extend(custom_entities)

|

|

@@ -42,7 +44,7 @@ FULL_COMPREHEND_ENTITY_LIST.extend(custom_entities)

|

|

| 42 |

FILE_INPUT_HEIGHT = int(FILE_INPUT_HEIGHT)

|

| 43 |

|

| 44 |

# Create the gradio interface

|

| 45 |

-

app = gr.Blocks(theme = gr.themes.

|

| 46 |

|

| 47 |

with app:

|

| 48 |

|

|

@@ -55,7 +57,7 @@ with app:

|

|

| 55 |

all_image_annotations_state = gr.State([])

|

| 56 |

|

| 57 |

all_decision_process_table_state = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="all_decision_process_table", visible=False, type="pandas", wrap=True)

|

| 58 |

-

|

| 59 |

|

| 60 |

all_page_line_level_ocr_results = gr.State([])

|

| 61 |

all_page_line_level_ocr_results_with_children = gr.State([])

|

|

@@ -186,7 +188,9 @@ with app:

|

|

| 186 |

# Duplicate page detection

|

| 187 |

in_duplicate_pages_text = gr.Textbox(label="in_duplicate_pages_text", visible=False)

|

| 188 |

duplicate_pages_df = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="duplicate_pages_df", visible=False, type="pandas", wrap=True)

|

| 189 |

-

|

|

|

|

|

|

|

| 190 |

|

| 191 |

# Tracking variables for current page (not visible)

|

| 192 |

current_loop_page_number = gr.Number(value=0,precision=0, interactive=False, label = "Last redacted page in document", visible=False)

|

|

@@ -231,7 +235,7 @@ with app:

|

|

| 231 |

|

| 232 |

Redact personally identifiable information (PII) from documents (PDF, images), open text, or tabular data (XLSX/CSV/Parquet). Please see the [User Guide](https://github.com/seanpedrick-case/doc_redaction/blob/main/README.md) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 233 |

|

| 234 |

-

To identify text in documents, the 'Local' text/OCR image analysis uses

|

| 235 |

|

| 236 |

After redaction, review suggested redactions on the 'Review redactions' tab. The original pdf can be uploaded here alongside a '...review_file.csv' to continue a previous redaction/review task. See the 'Redaction settings' tab to choose which pages to redact, the type of information to redact (e.g. people, places), or custom terms to always include/ exclude from redaction.

|

| 237 |

|

|

@@ -260,9 +264,9 @@ with app:

|

|

| 260 |

local_ocr_output_found_checkbox = gr.Checkbox(value= False, label="Existing local OCR output file found", interactive=False, visible=True)

|

| 261 |

with gr.Column(scale=4):

|

| 262 |

with gr.Row(equal_height=True):

|

| 263 |

-

total_pdf_page_count = gr.Number(label = "Total page count", value=0, visible=True)

|

| 264 |

-

estimated_aws_costs_number = gr.Number(label = "Approximate AWS Textract and/or Comprehend cost (£)", value=0.00, precision=2, visible=True)

|

| 265 |

-

estimated_time_taken_number = gr.Number(label = "Approximate time taken to extract text/redact (minutes)", value=0, visible=True, precision=2)

|

| 266 |

|

| 267 |

if GET_COST_CODES == "True" or ENFORCE_COST_CODES == "True":

|

| 268 |

with gr.Accordion("Assign task to cost code", open = True, visible=True):

|

|

@@ -318,7 +322,10 @@ with app:

|

|

| 318 |

annotate_zoom_in = gr.Button("Zoom in", visible=False)

|

| 319 |

annotate_zoom_out = gr.Button("Zoom out", visible=False)

|

| 320 |

with gr.Row():

|

| 321 |

-

clear_all_redactions_on_page_btn = gr.Button("Clear all redactions on page", visible=False)

|

|

|

|

|

|

|

|

|

|

| 322 |

|

| 323 |

with gr.Row():

|

| 324 |

with gr.Column(scale=2):

|

|

@@ -389,47 +396,67 @@ with app:

|

|

| 389 |

# IDENTIFY DUPLICATE PAGES TAB

|

| 390 |

###

|

| 391 |

with gr.Tab(label="Identify duplicate pages"):

|

| 392 |

-

|

|

|

|

|

|

|

| 393 |

in_duplicate_pages = gr.File(

|

| 394 |

-

label="Upload multiple 'ocr_output.csv' files to

|

| 395 |

file_count="multiple", height=FILE_INPUT_HEIGHT, file_types=['.csv']

|

| 396 |

)

|

| 397 |

|

| 398 |

-

gr.

|

| 399 |

-

|

| 400 |

-

|

| 401 |

-

|

| 402 |

-

|

| 403 |

-

|

| 404 |

-

|

| 405 |

-

|

| 406 |

-

|

| 407 |

-

|

| 408 |

-

|

| 409 |

-

|

| 410 |

-

|

| 411 |

-

|

| 412 |

-

|

| 413 |

-

|

| 414 |

|

| 415 |

-

find_duplicate_pages_btn = gr.Button(value="Identify

|

| 416 |

|

| 417 |

-

with gr.Accordion("Step 2: Review

|

| 418 |

-

gr.Markdown("### Analysis

|

| 419 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 420 |

|

| 421 |

-

gr.Markdown("### Full Text Preview")

|

| 422 |

with gr.Row():

|

| 423 |

-

page1_text_preview = gr.

|

| 424 |

-

page2_text_preview = gr.

|

| 425 |

|

| 426 |

gr.Markdown("### Downloadable Files")

|

| 427 |

-

|

| 428 |

label="Download analysis summary and redaction lists (.csv)",

|

| 429 |

-

file_count="multiple",

|

|

|

|

| 430 |

)

|

| 431 |

|

| 432 |

-

|

|

|

|

|

|

|

|

|

|

| 433 |

|

| 434 |

###

|

| 435 |

# TEXT / TABULAR DATA TAB

|

|

@@ -484,6 +511,13 @@ with app:

|

|

| 484 |

in_allow_list_state = gr.Dataframe(value=pd.DataFrame(), headers=["allow_list"], col_count=(1, "fixed"), row_count = (0, "dynamic"), label="Allow list", visible=True, type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, wrap=True)

|

| 485 |

in_deny_list_state = gr.Dataframe(value=pd.DataFrame(), headers=["deny_list"], col_count=(1, "fixed"), row_count = (0, "dynamic"), label="Deny list", visible=True, type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, wrap=True)

|

| 486 |

in_fully_redacted_list_state = gr.Dataframe(value=pd.DataFrame(), headers=["fully_redacted_pages_list"], col_count=(1, "fixed"), row_count = (0, "dynamic"), label="Fully redacted pages", visible=True, type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, datatype='number', wrap=True)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 487 |

|

| 488 |

with gr.Accordion("Select entity types to redact", open = True):

|

| 489 |

in_redact_entities = gr.Dropdown(value=CHOSEN_REDACT_ENTITIES, choices=FULL_ENTITY_LIST, multiselect=True, label="Local PII identification model (click empty space in box for full list)")

|

|

@@ -553,24 +587,24 @@ with app:

|

|

| 553 |

cost_code_choice_drop.select(update_cost_code_dataframe_from_dropdown_select, inputs=[cost_code_choice_drop, cost_code_dataframe_base], outputs=[cost_code_dataframe])

|

| 554 |

|

| 555 |

in_doc_files.upload(fn=get_input_file_names, inputs=[in_doc_files], outputs=[doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 556 |

-

success(fn = prepare_image_or_pdf, inputs=[in_doc_files, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, first_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool_false, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state,

|

| 557 |

success(fn=check_for_existing_textract_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[textract_output_found_checkbox]).\

|

| 558 |

success(fn=check_for_existing_local_ocr_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[local_ocr_output_found_checkbox])

|

| 559 |

|

| 560 |

# Run redaction function

|

| 561 |

document_redact_btn.click(fn = reset_state_vars, outputs=[all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, textract_metadata_textbox, annotator, output_file_list_state, log_files_output_list_state, recogniser_entity_dataframe, recogniser_entity_dataframe_base, pdf_doc_state, duplication_file_path_outputs_list_state, redaction_output_summary_textbox, is_a_textract_api_call, textract_query_number]).\

|

| 562 |

success(fn= enforce_cost_codes, inputs=[enforce_cost_code_textbox, cost_code_choice_drop, cost_code_dataframe_base]).\

|

| 563 |

-

success(fn= choose_and_run_redactor, inputs=[in_doc_files, prepared_pdf_state, images_pdf_state, in_redact_language, in_redact_entities, in_redact_comprehend_entities, text_extract_method_radio, in_allow_list_state, in_deny_list_state, in_fully_redacted_list_state, latest_file_completed_text, redaction_output_summary_textbox, output_file_list_state, log_files_output_list_state, first_loop_state, page_min, page_max, actual_time_taken_number, handwrite_signature_checkbox, textract_metadata_textbox, all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, pdf_doc_state, current_loop_page_number, page_break_return, pii_identification_method_drop, comprehend_query_number, max_fuzzy_spelling_mistakes_num, match_fuzzy_whole_phrase_bool, aws_access_key_textbox, aws_secret_key_textbox, annotate_max_pages,

|

| 564 |

-

outputs=[redaction_output_summary_textbox, output_file, output_file_list_state, latest_file_completed_text, log_files_output, log_files_output_list_state, actual_time_taken_number, textract_metadata_textbox, pdf_doc_state, all_image_annotations_state, current_loop_page_number, page_break_return, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, output_review_files, annotate_max_pages, annotate_max_pages_bottom, prepared_pdf_state, images_pdf_state,

|

| 565 |

|

| 566 |

# If the app has completed a batch of pages, it will rerun the redaction process until the end of all pages in the document

|

| 567 |

-

current_loop_page_number.change(fn = choose_and_run_redactor, inputs=[in_doc_files, prepared_pdf_state, images_pdf_state, in_redact_language, in_redact_entities, in_redact_comprehend_entities, text_extract_method_radio, in_allow_list_state, in_deny_list_state, in_fully_redacted_list_state, latest_file_completed_text, redaction_output_summary_textbox, output_file_list_state, log_files_output_list_state, second_loop_state, page_min, page_max, actual_time_taken_number, handwrite_signature_checkbox, textract_metadata_textbox, all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, pdf_doc_state, current_loop_page_number, page_break_return, pii_identification_method_drop, comprehend_query_number, max_fuzzy_spelling_mistakes_num, match_fuzzy_whole_phrase_bool, aws_access_key_textbox, aws_secret_key_textbox, annotate_max_pages,

|

| 568 |

-

outputs=[redaction_output_summary_textbox, output_file, output_file_list_state, latest_file_completed_text, log_files_output, log_files_output_list_state, actual_time_taken_number, textract_metadata_textbox, pdf_doc_state, all_image_annotations_state, current_loop_page_number, page_break_return, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, output_review_files, annotate_max_pages, annotate_max_pages_bottom, prepared_pdf_state, images_pdf_state,

|

| 569 |

|

| 570 |

# If a file has been completed, the function will continue onto the next document

|

| 571 |

-

latest_file_completed_text.change(fn = choose_and_run_redactor, inputs=[in_doc_files, prepared_pdf_state, images_pdf_state, in_redact_language, in_redact_entities, in_redact_comprehend_entities, text_extract_method_radio, in_allow_list_state, in_deny_list_state, in_fully_redacted_list_state, latest_file_completed_text, redaction_output_summary_textbox, output_file_list_state, log_files_output_list_state, second_loop_state, page_min, page_max, actual_time_taken_number, handwrite_signature_checkbox, textract_metadata_textbox, all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, pdf_doc_state, current_loop_page_number, page_break_return, pii_identification_method_drop, comprehend_query_number, max_fuzzy_spelling_mistakes_num, match_fuzzy_whole_phrase_bool, aws_access_key_textbox, aws_secret_key_textbox, annotate_max_pages,

|

| 572 |

-

outputs=[redaction_output_summary_textbox, output_file, output_file_list_state, latest_file_completed_text, log_files_output, log_files_output_list_state, actual_time_taken_number, textract_metadata_textbox, pdf_doc_state, all_image_annotations_state, current_loop_page_number, page_break_return, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, output_review_files, annotate_max_pages, annotate_max_pages_bottom, prepared_pdf_state, images_pdf_state,

|

| 573 |

-

success(fn=update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, page_min, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 574 |

success(fn=check_for_existing_textract_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[textract_output_found_checkbox]).\

|

| 575 |

success(fn=check_for_existing_local_ocr_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[local_ocr_output_found_checkbox]).\

|

| 576 |

success(fn=reveal_feedback_buttons, outputs=[pdf_feedback_radio, pdf_further_details_text, pdf_submit_feedback_btn, pdf_feedback_title]).\

|

|

@@ -592,62 +626,67 @@ with app:

|

|

| 592 |

textract_job_detail_df.select(df_select_callback_textract_api, inputs=[textract_output_found_checkbox], outputs=[job_id_textbox, job_type_dropdown, selected_job_id_row])

|

| 593 |

|

| 594 |

convert_textract_outputs_to_ocr_results.click(replace_existing_pdf_input_for_whole_document_outputs, inputs = [s3_whole_document_textract_input_subfolder, doc_file_name_no_extension_textbox, output_folder_textbox, s3_whole_document_textract_default_bucket, in_doc_files, input_folder_textbox], outputs = [in_doc_files, doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 595 |

-

success(fn = prepare_image_or_pdf, inputs=[in_doc_files, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, first_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool_false, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state,

|

| 596 |

success(fn=check_for_existing_textract_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[textract_output_found_checkbox]).\

|

| 597 |

success(fn=check_for_existing_local_ocr_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[local_ocr_output_found_checkbox]).\

|

| 598 |

success(fn= check_textract_outputs_exist, inputs=[textract_output_found_checkbox]).\

|

| 599 |

success(fn = reset_state_vars, outputs=[all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, textract_metadata_textbox, annotator, output_file_list_state, log_files_output_list_state, recogniser_entity_dataframe, recogniser_entity_dataframe_base, pdf_doc_state, duplication_file_path_outputs_list_state, redaction_output_summary_textbox, is_a_textract_api_call, textract_query_number]).\

|

| 600 |

-

success(fn= choose_and_run_redactor, inputs=[in_doc_files, prepared_pdf_state, images_pdf_state, in_redact_language, in_redact_entities, in_redact_comprehend_entities, textract_only_method_drop, in_allow_list_state, in_deny_list_state, in_fully_redacted_list_state, latest_file_completed_text, redaction_output_summary_textbox, output_file_list_state, log_files_output_list_state, first_loop_state, page_min, page_max, actual_time_taken_number, handwrite_signature_checkbox, textract_metadata_textbox, all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, pdf_doc_state, current_loop_page_number, page_break_return, no_redaction_method_drop, comprehend_query_number, max_fuzzy_spelling_mistakes_num, match_fuzzy_whole_phrase_bool, aws_access_key_textbox, aws_secret_key_textbox, annotate_max_pages,

|

| 601 |

-

outputs=[redaction_output_summary_textbox, output_file, output_file_list_state, latest_file_completed_text, log_files_output, log_files_output_list_state, actual_time_taken_number, textract_metadata_textbox, pdf_doc_state, all_image_annotations_state, current_loop_page_number, page_break_return, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, output_review_files, annotate_max_pages, annotate_max_pages_bottom, prepared_pdf_state, images_pdf_state,

|

| 602 |

-

success(fn=update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, page_min, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 603 |

|

| 604 |

###

|

| 605 |

# REVIEW PDF REDACTIONS

|

| 606 |

###

|

|

|

|

| 607 |

|

| 608 |

# Upload previous files for modifying redactions

|

| 609 |

upload_previous_review_file_btn.click(fn=reset_review_vars, inputs=None, outputs=[recogniser_entity_dataframe, recogniser_entity_dataframe_base]).\

|

| 610 |

success(fn=get_input_file_names, inputs=[output_review_files], outputs=[doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 611 |

-

success(fn = prepare_image_or_pdf, inputs=[output_review_files, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, second_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state,

|

| 612 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

|

|

|

|

|

|

|

|

|

|

|

|

| 613 |

|

| 614 |

# Page number controls

|

| 615 |

annotate_current_page.submit(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 616 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 617 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 618 |

|

| 619 |

annotation_last_page_button.click(fn=decrease_page, inputs=[annotate_current_page], outputs=[annotate_current_page, annotate_current_page_bottom]).\

|

| 620 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 621 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 622 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 623 |

|

| 624 |

annotation_next_page_button.click(fn=increase_page, inputs=[annotate_current_page, all_image_annotations_state], outputs=[annotate_current_page, annotate_current_page_bottom]).\

|

| 625 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 626 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 627 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 628 |

|

| 629 |

annotation_last_page_button_bottom.click(fn=decrease_page, inputs=[annotate_current_page], outputs=[annotate_current_page, annotate_current_page_bottom]).\

|

| 630 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 631 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 632 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 633 |

|

| 634 |

annotation_next_page_button_bottom.click(fn=increase_page, inputs=[annotate_current_page, all_image_annotations_state], outputs=[annotate_current_page, annotate_current_page_bottom]).\

|

| 635 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 636 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 637 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 638 |

|

| 639 |

annotate_current_page_bottom.submit(update_other_annotator_number_from_current, inputs=[annotate_current_page_bottom], outputs=[annotate_current_page]).\

|

| 640 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 641 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 642 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 643 |

|

| 644 |

# Apply page redactions

|

| 645 |

-

annotation_button_apply.click(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 646 |

|

| 647 |

# Save current page redactions

|

| 648 |

update_current_page_redactions_btn.click(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_current_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 649 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 650 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 651 |

|

| 652 |

# Review table controls

|

| 653 |

recogniser_entity_dropdown.select(update_entities_df_recogniser_entities, inputs=[recogniser_entity_dropdown, recogniser_entity_dataframe_base, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dataframe, text_entity_dropdown, page_entity_dropdown])

|

|

@@ -656,54 +695,52 @@ with app:

|

|

| 656 |

|

| 657 |

# Clicking on a cell in the recogniser entity dataframe will take you to that page, and also highlight the target redaction box in blue

|

| 658 |

recogniser_entity_dataframe.select(df_select_callback_dataframe_row, inputs=[recogniser_entity_dataframe], outputs=[selected_entity_dataframe_row, selected_entity_dataframe_row_text]).\

|

| 659 |

-

success(update_selected_review_df_row_colour, inputs=[selected_entity_dataframe_row,

|

| 660 |

-

success(update_annotator_page_from_review_df, inputs=[

|

| 661 |

success(increase_bottom_page_count_based_on_top, inputs=[annotate_current_page], outputs=[annotate_current_page_bottom])

|

| 662 |

|

| 663 |

reset_dropdowns_btn.click(reset_dropdowns, inputs=[recogniser_entity_dataframe_base], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown]).\

|

| 664 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 665 |

|

| 666 |

# Exclude current selection from annotator and outputs

|

| 667 |

# Exclude only selected row

|

| 668 |

-

exclude_selected_row_btn.click(exclude_selected_items_from_redaction, inputs=[

|

| 669 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 670 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 671 |

success(update_all_entity_df_dropdowns, inputs=[recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown])

|

| 672 |

|

| 673 |

# Exclude all items with same text as selected row

|

| 674 |

exclude_text_with_same_as_selected_row_btn.click(get_all_rows_with_same_text, inputs=[recogniser_entity_dataframe_base, selected_entity_dataframe_row_text], outputs=[recogniser_entity_dataframe_same_text]).\

|

| 675 |

-

success(exclude_selected_items_from_redaction, inputs=[

|

| 676 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 677 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 678 |

success(update_all_entity_df_dropdowns, inputs=[recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown])

|

| 679 |

|

| 680 |

# Exclude everything visible in table

|

| 681 |

-

exclude_selected_btn.click(exclude_selected_items_from_redaction, inputs=[

|

| 682 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 683 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 684 |

success(update_all_entity_df_dropdowns, inputs=[recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown])

|

| 685 |

|

| 686 |

-

|

| 687 |

-

|

| 688 |

-

|

| 689 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state]).\

|

| 690 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page, review_file_state, output_folder_textbox, do_not_save_pdf_state, page_sizes], outputs=[pdf_doc_state, all_image_annotations_state, output_review_files, log_files_output, review_file_state])

|

| 691 |

|

| 692 |

# Review OCR text button

|

| 693 |

all_line_level_ocr_results_df.select(df_select_callback_ocr, inputs=[all_line_level_ocr_results_df], outputs=[annotate_current_page, selected_ocr_dataframe_row]).\

|

| 694 |

-

success(update_annotator_page_from_review_df, inputs=[

|

| 695 |

success(increase_bottom_page_count_based_on_top, inputs=[annotate_current_page], outputs=[annotate_current_page_bottom])

|

| 696 |

|

| 697 |

reset_all_ocr_results_btn.click(reset_ocr_base_dataframe, inputs=[all_line_level_ocr_results_df_base], outputs=[all_line_level_ocr_results_df])

|

| 698 |

|

| 699 |

# Convert review file to xfdf Adobe format

|

| 700 |

convert_review_file_to_adobe_btn.click(fn=get_input_file_names, inputs=[output_review_files], outputs=[doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 701 |

-

success(fn = prepare_image_or_pdf, inputs=[output_review_files, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, second_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state,

|

| 702 |

success(convert_df_to_xfdf, inputs=[output_review_files, pdf_doc_state, images_pdf_state, output_folder_textbox, document_cropboxes, page_sizes], outputs=[adobe_review_files_out])

|

| 703 |

|

| 704 |

# Convert xfdf Adobe file back to review_file.csv

|

| 705 |

convert_adobe_to_review_file_btn.click(fn=get_input_file_names, inputs=[adobe_review_files_out], outputs=[doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 706 |

-

success(fn = prepare_image_or_pdf, inputs=[adobe_review_files_out, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, second_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state,

|

| 707 |

success(fn=convert_xfdf_to_dataframe, inputs=[adobe_review_files_out, pdf_doc_state, images_pdf_state, output_folder_textbox], outputs=[output_review_files], scroll_to_output=True)

|

| 708 |

|

| 709 |

###

|

|

@@ -716,7 +753,7 @@ with app:

|

|

| 716 |

success(fn=anonymise_data_files, inputs=[in_data_files, in_text, anon_strat, in_colnames, in_redact_language, in_redact_entities, in_allow_list_state, text_tabular_files_done, text_output_summary, text_output_file_list_state, log_files_output_list_state, in_excel_sheets, first_loop_state, output_folder_textbox, in_deny_list_state, max_fuzzy_spelling_mistakes_num, pii_identification_method_drop_tabular, in_redact_comprehend_entities, comprehend_query_number, aws_access_key_textbox, aws_secret_key_textbox, actual_time_taken_number], outputs=[text_output_summary, text_output_file, text_output_file_list_state, text_tabular_files_done, log_files_output, log_files_output_list_state, actual_time_taken_number], api_name="redact_data").\

|

| 717 |

success(fn = reveal_feedback_buttons, outputs=[data_feedback_radio, data_further_details_text, data_submit_feedback_btn, data_feedback_title])

|

| 718 |

|

| 719 |

-

# Currently only supports redacting one data file at a time

|

| 720 |

# If the output file count text box changes, keep going with redacting each data file until done

|

| 721 |

# text_tabular_files_done.change(fn=anonymise_data_files, inputs=[in_data_files, in_text, anon_strat, in_colnames, in_redact_language, in_redact_entities, in_allow_list_state, text_tabular_files_done, text_output_summary, text_output_file_list_state, log_files_output_list_state, in_excel_sheets, second_loop_state, output_folder_textbox, in_deny_list_state, max_fuzzy_spelling_mistakes_num, pii_identification_method_drop_tabular, in_redact_comprehend_entities, comprehend_query_number, aws_access_key_textbox, aws_secret_key_textbox, actual_time_taken_number], outputs=[text_output_summary, text_output_file, text_output_file_list_state, text_tabular_files_done, log_files_output, log_files_output_list_state, actual_time_taken_number]).\

|

| 722 |

# success(fn = reveal_feedback_buttons, outputs=[data_feedback_radio, data_further_details_text, data_submit_feedback_btn, data_feedback_title])

|

|

@@ -725,7 +762,7 @@ with app:

|

|

| 725 |

# IDENTIFY DUPLICATE PAGES

|

| 726 |

###

|

| 727 |

find_duplicate_pages_btn.click(

|

| 728 |

-

fn=

|

| 729 |

inputs=[

|

| 730 |

in_duplicate_pages,

|

| 731 |

duplicate_threshold_input,

|

|

@@ -735,17 +772,32 @@ with app:

|

|

| 735 |

],

|

| 736 |

outputs=[

|

| 737 |

results_df_preview,

|

| 738 |

-

|

| 739 |

-

|

| 740 |

]

|

| 741 |

)

|

| 742 |

|

|

|

|

| 743 |

results_df_preview.select(

|

| 744 |

-

fn=

|

| 745 |

-

inputs=[

|

| 746 |

-

outputs=[page1_text_preview, page2_text_preview]

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 747 |

)

|

| 748 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 749 |

###

|

| 750 |

# SETTINGS PAGE INPUT / OUTPUT

|

| 751 |

###

|

|

@@ -759,6 +811,13 @@ with app:

|

|

| 759 |

in_deny_list_state.input(update_dataframe, inputs=[in_deny_list_state], outputs=[in_deny_list_state])

|

| 760 |

in_fully_redacted_list_state.input(update_dataframe, inputs=[in_fully_redacted_list_state], outputs=[in_fully_redacted_list_state])

|

| 761 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 762 |

# Merge multiple review csv files together

|

| 763 |

merge_multiple_review_files_btn.click(fn=merge_csv_files, inputs=multiple_review_files_in_out, outputs=multiple_review_files_in_out)

|

| 764 |

|

|

|

|

| 3 |

import gradio as gr

|

| 4 |

from gradio_image_annotation import image_annotator

|

| 5 |

from tools.config import OUTPUT_FOLDER, INPUT_FOLDER, RUN_DIRECT_MODE, MAX_QUEUE_SIZE, DEFAULT_CONCURRENCY_LIMIT, MAX_FILE_SIZE, GRADIO_SERVER_PORT, ROOT_PATH, GET_DEFAULT_ALLOW_LIST, ALLOW_LIST_PATH, S3_ALLOW_LIST_PATH, FEEDBACK_LOGS_FOLDER, ACCESS_LOGS_FOLDER, USAGE_LOGS_FOLDER, REDACTION_LANGUAGE, GET_COST_CODES, COST_CODES_PATH, S3_COST_CODES_PATH, ENFORCE_COST_CODES, DISPLAY_FILE_NAMES_IN_LOGS, SHOW_COSTS, RUN_AWS_FUNCTIONS, DOCUMENT_REDACTION_BUCKET, SHOW_WHOLE_DOCUMENT_TEXTRACT_CALL_OPTIONS, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_BUCKET, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_INPUT_SUBFOLDER, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_OUTPUT_SUBFOLDER, SESSION_OUTPUT_FOLDER, LOAD_PREVIOUS_TEXTRACT_JOBS_S3, TEXTRACT_JOBS_S3_LOC, TEXTRACT_JOBS_LOCAL_LOC, HOST_NAME, DEFAULT_COST_CODE, OUTPUT_COST_CODES_PATH, OUTPUT_ALLOW_LIST_PATH, COGNITO_AUTH, SAVE_LOGS_TO_CSV, SAVE_LOGS_TO_DYNAMODB, ACCESS_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_ACCESS_LOG_HEADERS, CSV_ACCESS_LOG_HEADERS, FEEDBACK_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_FEEDBACK_LOG_HEADERS, CSV_FEEDBACK_LOG_HEADERS, USAGE_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_USAGE_LOG_HEADERS, CSV_USAGE_LOG_HEADERS, TEXTRACT_JOBS_S3_INPUT_LOC, TEXTRACT_TEXT_EXTRACT_OPTION, NO_REDACTION_PII_OPTION, TEXT_EXTRACTION_MODELS, PII_DETECTION_MODELS, DEFAULT_TEXT_EXTRACTION_MODEL, DEFAULT_PII_DETECTION_MODEL, LOG_FILE_NAME, CHOSEN_COMPREHEND_ENTITIES, FULL_COMPREHEND_ENTITY_LIST, CHOSEN_REDACT_ENTITIES, FULL_ENTITY_LIST, FILE_INPUT_HEIGHT, TABULAR_PII_DETECTION_MODELS

|

| 6 |

+

from tools.helper_functions import put_columns_in_df, get_connection_params, reveal_feedback_buttons, custom_regex_load, reset_state_vars, load_in_default_allow_list, reset_review_vars, merge_csv_files, load_all_output_files, update_dataframe, check_for_existing_textract_file, load_in_default_cost_codes, enforce_cost_codes, calculate_aws_costs, calculate_time_taken, reset_base_dataframe, reset_ocr_base_dataframe, update_cost_code_dataframe_from_dropdown_select, check_for_existing_local_ocr_file, reset_data_vars, reset_aws_call_vars, _get_env_list

|

| 7 |

from tools.aws_functions import download_file_from_s3, upload_log_file_to_s3

|

| 8 |

from tools.file_redaction import choose_and_run_redactor

|

| 9 |

from tools.file_conversion import prepare_image_or_pdf, get_input_file_names

|

| 10 |

+

from tools.redaction_review import apply_redactions_to_review_df_and_files, update_all_page_annotation_object_based_on_previous_page, decrease_page, increase_page, update_annotator_object_and_filter_df, update_entities_df_recogniser_entities, update_entities_df_page, update_entities_df_text, df_select_callback_dataframe_row, convert_df_to_xfdf, convert_xfdf_to_dataframe, reset_dropdowns, exclude_selected_items_from_redaction, undo_last_removal, update_selected_review_df_row_colour, update_all_entity_df_dropdowns, df_select_callback_cost, update_other_annotator_number_from_current, update_annotator_page_from_review_df, df_select_callback_ocr, df_select_callback_textract_api, get_all_rows_with_same_text, increase_bottom_page_count_based_on_top, store_duplicate_selection

|

| 11 |

from tools.data_anonymise import anonymise_data_files

|

| 12 |

from tools.auth import authenticate_user

|

| 13 |

from tools.load_spacy_model_custom_recognisers import custom_entities

|

| 14 |

from tools.custom_csvlogger import CSVLogger_custom

|

| 15 |

+

from tools.find_duplicate_pages import run_duplicate_analysis, exclude_match, handle_selection_and_preview, apply_whole_page_redactions_from_list

|

| 16 |

from tools.textract_batch_call import analyse_document_with_textract_api, poll_whole_document_textract_analysis_progress_and_download, load_in_textract_job_details, check_for_provided_job_id, check_textract_outputs_exist, replace_existing_pdf_input_for_whole_document_outputs

|

| 17 |

|

| 18 |

# Suppress downcasting warnings

|

| 19 |

pd.set_option('future.no_silent_downcasting', True)

|

| 20 |

|

| 21 |

# Convert string environment variables to string or list

|

| 22 |

+

if SAVE_LOGS_TO_CSV == "True": SAVE_LOGS_TO_CSV = True

|

| 23 |

+

else: SAVE_LOGS_TO_CSV = False

|

| 24 |

+

if SAVE_LOGS_TO_DYNAMODB == "True": SAVE_LOGS_TO_DYNAMODB = True

|

| 25 |

+

else: SAVE_LOGS_TO_DYNAMODB = False

|

| 26 |

|

| 27 |

+

if CSV_ACCESS_LOG_HEADERS: CSV_ACCESS_LOG_HEADERS = _get_env_list(CSV_ACCESS_LOG_HEADERS)

|

| 28 |

+

if CSV_FEEDBACK_LOG_HEADERS: CSV_FEEDBACK_LOG_HEADERS = _get_env_list(CSV_FEEDBACK_LOG_HEADERS)

|

| 29 |

+

if CSV_USAGE_LOG_HEADERS: CSV_USAGE_LOG_HEADERS = _get_env_list(CSV_USAGE_LOG_HEADERS)

|

| 30 |

|

| 31 |

+

if DYNAMODB_ACCESS_LOG_HEADERS: DYNAMODB_ACCESS_LOG_HEADERS = _get_env_list(DYNAMODB_ACCESS_LOG_HEADERS)

|

| 32 |

+

if DYNAMODB_FEEDBACK_LOG_HEADERS: DYNAMODB_FEEDBACK_LOG_HEADERS = _get_env_list(DYNAMODB_FEEDBACK_LOG_HEADERS)

|

| 33 |

+

if DYNAMODB_USAGE_LOG_HEADERS: DYNAMODB_USAGE_LOG_HEADERS = _get_env_list(DYNAMODB_USAGE_LOG_HEADERS)

|

| 34 |

|

| 35 |

+

if CHOSEN_COMPREHEND_ENTITIES: CHOSEN_COMPREHEND_ENTITIES = _get_env_list(CHOSEN_COMPREHEND_ENTITIES)

|

| 36 |

+

if FULL_COMPREHEND_ENTITY_LIST: FULL_COMPREHEND_ENTITY_LIST = _get_env_list(FULL_COMPREHEND_ENTITY_LIST)

|

| 37 |

+

if CHOSEN_REDACT_ENTITIES: CHOSEN_REDACT_ENTITIES = _get_env_list(CHOSEN_REDACT_ENTITIES)

|

| 38 |

+

if FULL_ENTITY_LIST: FULL_ENTITY_LIST = _get_env_list(FULL_ENTITY_LIST)

|

| 39 |

|

| 40 |

# Add custom spacy recognisers to the Comprehend list, so that local Spacy model can be used to pick up e.g. titles, streetnames, UK postcodes that are sometimes missed by comprehend

|

| 41 |

CHOSEN_COMPREHEND_ENTITIES.extend(custom_entities)

|

|

|

|

| 44 |

FILE_INPUT_HEIGHT = int(FILE_INPUT_HEIGHT)

|

| 45 |

|

| 46 |

# Create the gradio interface

|

| 47 |

+

app = gr.Blocks(theme = gr.themes.Default(primary_hue="blue"), fill_width=True) #gr.themes.Base()

|

| 48 |

|

| 49 |

with app:

|

| 50 |

|

|

|

|

| 57 |

all_image_annotations_state = gr.State([])

|

| 58 |

|

| 59 |

all_decision_process_table_state = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="all_decision_process_table", visible=False, type="pandas", wrap=True)

|

| 60 |

+

|

| 61 |

|

| 62 |

all_page_line_level_ocr_results = gr.State([])

|

| 63 |

all_page_line_level_ocr_results_with_children = gr.State([])

|

|

|

|

| 188 |

# Duplicate page detection

|

| 189 |

in_duplicate_pages_text = gr.Textbox(label="in_duplicate_pages_text", visible=False)

|

| 190 |

duplicate_pages_df = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="duplicate_pages_df", visible=False, type="pandas", wrap=True)

|

| 191 |

+

full_duplicated_data_df = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="full_duplicated_data_df", visible=False, type="pandas", wrap=True)

|

| 192 |

+

selected_duplicate_data_row_index = gr.Number(value=None, label="selected_duplicate_data_row_index", visible=False)

|

| 193 |

+

full_duplicate_data_by_file = gr.State() # A dictionary of the full duplicate data indexed by file

|

| 194 |

|

| 195 |

# Tracking variables for current page (not visible)

|

| 196 |

current_loop_page_number = gr.Number(value=0,precision=0, interactive=False, label = "Last redacted page in document", visible=False)

|

|

|

|

| 235 |

|

| 236 |

Redact personally identifiable information (PII) from documents (PDF, images), open text, or tabular data (XLSX/CSV/Parquet). Please see the [User Guide](https://github.com/seanpedrick-case/doc_redaction/blob/main/README.md) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 237 |

|

| 238 |

+

To identify text in documents, the 'Local' text/OCR image analysis uses spaCy/Tesseract, and works well only for documents with typed text. If available, choose 'AWS Textract' to redact more complex elements e.g. signatures or handwriting. Then, choose a method for PII identification. 'Local' is quick and gives good results if you are primarily looking for a custom list of terms to redact (see Redaction settings). If available, AWS Comprehend gives better results at a small cost.

|

| 239 |

|

| 240 |

After redaction, review suggested redactions on the 'Review redactions' tab. The original pdf can be uploaded here alongside a '...review_file.csv' to continue a previous redaction/review task. See the 'Redaction settings' tab to choose which pages to redact, the type of information to redact (e.g. people, places), or custom terms to always include/ exclude from redaction.

|

| 241 |

|

|

|

|

| 264 |

local_ocr_output_found_checkbox = gr.Checkbox(value= False, label="Existing local OCR output file found", interactive=False, visible=True)

|

| 265 |

with gr.Column(scale=4):

|

| 266 |

with gr.Row(equal_height=True):

|

| 267 |

+

total_pdf_page_count = gr.Number(label = "Total page count", value=0, visible=True, interactive=False)

|

| 268 |

+

estimated_aws_costs_number = gr.Number(label = "Approximate AWS Textract and/or Comprehend cost (£)", value=0.00, precision=2, visible=True, interactive=False)

|

| 269 |

+

estimated_time_taken_number = gr.Number(label = "Approximate time taken to extract text/redact (minutes)", value=0, visible=True, precision=2, interactive=False)

|

| 270 |

|

| 271 |

if GET_COST_CODES == "True" or ENFORCE_COST_CODES == "True":

|

| 272 |

with gr.Accordion("Assign task to cost code", open = True, visible=True):

|

|

|

|

| 322 |

annotate_zoom_in = gr.Button("Zoom in", visible=False)

|

| 323 |

annotate_zoom_out = gr.Button("Zoom out", visible=False)

|

| 324 |

with gr.Row():

|

| 325 |

+

clear_all_redactions_on_page_btn = gr.Button("Clear all redactions on page", visible=False)

|

| 326 |

+

|

| 327 |

+

with gr.Accordion(label = "View and edit review file data", open=False):

|

| 328 |

+

review_file_df = gr.Dataframe(value=pd.DataFrame(), headers=['image', 'page', 'label', 'color', 'xmin', 'ymin', 'xmax', 'ymax', 'text', 'id'], row_count = (0, "dynamic"), label="Review file data", visible=True, type="pandas", wrap=True, show_search=True, show_fullscreen_button=True, show_copy_button=True)

|

| 329 |

|

| 330 |

with gr.Row():

|

| 331 |

with gr.Column(scale=2):

|

|

|

|

| 396 |

# IDENTIFY DUPLICATE PAGES TAB

|

| 397 |

###

|

| 398 |

with gr.Tab(label="Identify duplicate pages"):

|

| 399 |

+

gr.Markdown("Search for duplicate pages/subdocuments in your ocr_output files. By default, this function will search for duplicate text across multiple pages, and then join consecutive matching pages together into matched 'subdocuments'. The results can be reviewed below, false positives removed, and then the verified results applied to a document you have loaded in on the 'Review redactions' tab.")

|

| 400 |

+

|

| 401 |

+

with gr.Accordion("Step 1: Configure and run analysis", open = True):

|

| 402 |

in_duplicate_pages = gr.File(

|

| 403 |

+

label="Upload one or multiple 'ocr_output.csv' files to find duplicate pages and subdocuments",

|

| 404 |

file_count="multiple", height=FILE_INPUT_HEIGHT, file_types=['.csv']

|

| 405 |

)

|

| 406 |

|

| 407 |

+

with gr.Accordion("Duplicate matching parameters", open = False):

|

| 408 |

+

with gr.Row():

|

| 409 |

+

duplicate_threshold_input = gr.Number(value=0.95, label="Similarity threshold", info="Score (0-1) to consider pages a match.")

|

| 410 |

+

min_word_count_input = gr.Number(value=10, label="Minimum word count", info="Pages with fewer words than this value are ignored.")

|

| 411 |

+

|

| 412 |

+

gr.Markdown("#### Matching Strategy")

|

| 413 |

+

greedy_match_input = gr.Checkbox(

|

| 414 |

+

label="Enable 'subdocument' matching",

|

| 415 |

+

value=True,

|

| 416 |

+

info="If checked, finds the longest possible sequence of matching pages (subdocuments), minimum length one page. Overrides the slider below."

|

| 417 |

+

)

|

| 418 |

+

min_consecutive_pages_input = gr.Slider(

|

| 419 |

+

minimum=1, maximum=20, value=1, step=1,

|

| 420 |

+

label="Minimum consecutive pages (modified subdocument match)",

|

| 421 |

+

info="If greedy matching option above is unticked, use this to find only subdocuments of a minimum number of consecutive pages."

|

| 422 |

+

)

|

| 423 |

|

| 424 |

+

find_duplicate_pages_btn = gr.Button(value="Identify duplicate pages/subdocuments", variant="primary")

|

| 425 |

|

| 426 |

+

with gr.Accordion("Step 2: Review and refine results", open=True):

|

| 427 |

+

gr.Markdown("### Analysis summary\nClick on a row to select it for preview or exclusion.")

|

| 428 |

+

|

| 429 |

+

with gr.Row():

|

| 430 |

+

results_df_preview = gr.Dataframe(

|

| 431 |

+

label="Similarity Results",

|

| 432 |

+

wrap=True,

|

| 433 |

+

show_fullscreen_button=True,

|

| 434 |

+

show_search=True,

|

| 435 |

+

show_copy_button=True

|

| 436 |

+

)

|

| 437 |

+

with gr.Row():

|

| 438 |

+

exclude_match_btn = gr.Button(

|

| 439 |

+

value="❌ Exclude Selected Match",

|

| 440 |

+

variant="stop"

|

| 441 |

+

)

|

| 442 |

+

gr.Markdown("Click a row in the table, then click this button to remove it from the results and update the downloadable files.")

|

| 443 |

|

| 444 |

+

gr.Markdown("### Full Text Preview of Selected Match")

|

| 445 |

with gr.Row():

|

| 446 |

+

page1_text_preview = gr.Dataframe(label="Match Source (Document 1)", wrap=True, headers=["page", "text"], show_fullscreen_button=True, show_search=True, show_copy_button=True)

|

| 447 |

+

page2_text_preview = gr.Dataframe(label="Match Duplicate (Document 2)", wrap=True, headers=["page", "text"], show_fullscreen_button=True, show_search=True, show_copy_button=True)

|