Merge pull request #54 from seanpedrick-case/dev

Added search text and easy redact feature, multi-language support, added support for PaddleOCR. Various minor fixes and package updates.

- Dockerfile +20 -6

- README.md +266 -9

- app.py +0 -0

- cdk/cdk_config.py +3 -3

- cdk/cdk_stack.py +15 -0

- cdk/post_cdk_build_quickstart.py +2 -2

- cdk/requirements.txt +3 -3

- example_config.env +26 -0

- index.qmd +1 -1

- load_dynamo_logs.py +45 -8

- pyproject.toml +20 -18

- requirements.txt +19 -17

- src/app_settings.qmd +69 -20

- src/user_guide.qmd +79 -6

- tools/aws_textract.py +9 -191

- tools/cli_redact.py +149 -69

- tools/config.py +29 -5

- tools/custom_image_analyser_engine.py +0 -0

- tools/data_anonymise.py +209 -65

- tools/example_cli_calls.txt +24 -0

- tools/file_conversion.py +195 -70

- tools/file_redaction.py +481 -391

- tools/find_duplicate_pages.py +696 -330

- tools/helper_functions.py +67 -21

- tools/load_spacy_model_custom_recognisers.py +350 -83

- tools/redaction_review.py +426 -86

Dockerfile

CHANGED

|

@@ -17,7 +17,7 @@ WORKDIR /src

|

|

| 17 |

|

| 18 |

COPY requirements.txt .

|

| 19 |

|

| 20 |

-

RUN pip install --no-cache-dir --target=/install -r requirements.txt && rm requirements.txt

|

| 21 |

|

| 22 |

# Add lambda entrypoint and script

|

| 23 |

COPY lambda_entrypoint.py .

|

|

@@ -54,7 +54,8 @@ ENV GRADIO_TEMP_DIR=/tmp/gradio_tmp/ \

|

|

| 54 |

ACCESS_LOGS_FOLDER=$APP_HOME/app/logs/ \

|

| 55 |

USAGE_LOGS_FOLDER=$APP_HOME/app/usage/ \

|

| 56 |

CONFIG_FOLDER=$APP_HOME/app/config/ \

|

| 57 |

-

XDG_CACHE_HOME=/tmp/xdg_cache/user_1000

|

|

|

|

| 58 |

|

| 59 |

# Create the base application directory and set its ownership

|

| 60 |

RUN mkdir -p ${APP_HOME}/app && chown user:user ${APP_HOME}/app

|

|

@@ -81,13 +82,22 @@ RUN mkdir -p \

|

|

| 81 |

${APP_HOME}/app/logs \

|

| 82 |

${APP_HOME}/app/usage \

|

| 83 |

${APP_HOME}/app/feedback \

|

| 84 |

-

${APP_HOME}/app/config

|

| 85 |

|

| 86 |

-

# Now handle the /tmp and /var/tmp directories and their subdirectories

|

| 87 |

RUN mkdir -p /tmp/gradio_tmp /tmp/tld /tmp/matplotlib_cache /tmp /var/tmp ${XDG_CACHE_HOME} \

|

| 88 |

&& chown user:user /tmp /var/tmp /tmp/gradio_tmp /tmp/tld /tmp/matplotlib_cache ${XDG_CACHE_HOME} \

|

| 89 |

&& chmod 1777 /tmp /var/tmp /tmp/gradio_tmp /tmp/tld /tmp/matplotlib_cache \

|

| 90 |

-

&& chmod 700 ${XDG_CACHE_HOME}

|

| 91 |

|

| 92 |

# Copy installed packages from builder stage

|

| 93 |

COPY --from=builder /install /usr/local/lib/python3.11/site-packages/

|

|

@@ -115,6 +125,9 @@ VOLUME ["/home/user/app/logs"]

|

|

| 115 |

VOLUME ["/home/user/app/usage"]

|

| 116 |

VOLUME ["/home/user/app/feedback"]

|

| 117 |

VOLUME ["/home/user/app/config"]

|

| 118 |

VOLUME ["/tmp"]

|

| 119 |

VOLUME ["/var/tmp"]

|

| 120 |

|

|

@@ -127,7 +140,8 @@ ENV PATH=$APP_HOME/.local/bin:$PATH \

|

|

| 127 |

GRADIO_NUM_PORTS=1 \

|

| 128 |

GRADIO_SERVER_NAME=0.0.0.0 \

|

| 129 |

GRADIO_SERVER_PORT=7860 \

|

| 130 |

-

GRADIO_ANALYTICS_ENABLED=False

|

|

|

|

| 131 |

|

| 132 |

ENTRYPOINT ["/entrypoint.sh"]

|

| 133 |

|

|

|

|

| 17 |

|

| 18 |

COPY requirements.txt .

|

| 19 |

|

| 20 |

+

RUN pip install --verbose --no-cache-dir --target=/install -r requirements.txt && rm requirements.txt

|

| 21 |

|

| 22 |

# Add lambda entrypoint and script

|

| 23 |

COPY lambda_entrypoint.py .

|

|

|

|

| 54 |

ACCESS_LOGS_FOLDER=$APP_HOME/app/logs/ \

|

| 55 |

USAGE_LOGS_FOLDER=$APP_HOME/app/usage/ \

|

| 56 |

CONFIG_FOLDER=$APP_HOME/app/config/ \

|

| 57 |

+

XDG_CACHE_HOME=/tmp/xdg_cache/user_1000 \

|

| 58 |

+

TESSERACT_DATA_FOLDER=/usr/share/tessdata

|

| 59 |

|

| 60 |

# Create the base application directory and set its ownership

|

| 61 |

RUN mkdir -p ${APP_HOME}/app && chown user:user ${APP_HOME}/app

|

|

|

|

| 82 |

${APP_HOME}/app/logs \

|

| 83 |

${APP_HOME}/app/usage \

|

| 84 |

${APP_HOME}/app/feedback \

|

| 85 |

+

${APP_HOME}/app/config

|

| 86 |

|

| 87 |

+

# Now handle the /tmp and /var/tmp directories and their subdirectories, paddle, spacy, tessdata

|

| 88 |

RUN mkdir -p /tmp/gradio_tmp /tmp/tld /tmp/matplotlib_cache /tmp /var/tmp ${XDG_CACHE_HOME} \

|

| 89 |

&& chown user:user /tmp /var/tmp /tmp/gradio_tmp /tmp/tld /tmp/matplotlib_cache ${XDG_CACHE_HOME} \

|

| 90 |

&& chmod 1777 /tmp /var/tmp /tmp/gradio_tmp /tmp/tld /tmp/matplotlib_cache \

|

| 91 |

+

&& chmod 700 ${XDG_CACHE_HOME} \

|

| 92 |

+

&& mkdir -p ${APP_HOME}/.paddlex \

|

| 93 |

+

&& chown user:user ${APP_HOME}/.paddlex \

|

| 94 |

+

&& chmod 755 ${APP_HOME}/.paddlex \

|

| 95 |

+

&& mkdir -p ${APP_HOME}/.local/share/spacy/data \

|

| 96 |

+

&& chown user:user ${APP_HOME}/.local/share/spacy/data \

|

| 97 |

+

&& chmod 755 ${APP_HOME}/.local/share/spacy/data \

|

| 98 |

+

&& mkdir -p /usr/share/tessdata \

|

| 99 |

+

&& chown user:user /usr/share/tessdata \

|

| 100 |

+

&& chmod 755 /usr/share/tessdata

|

| 101 |

|

| 102 |

# Copy installed packages from builder stage

|

| 103 |

COPY --from=builder /install /usr/local/lib/python3.11/site-packages/

|

|

|

|

| 125 |

VOLUME ["/home/user/app/usage"]

|

| 126 |

VOLUME ["/home/user/app/feedback"]

|

| 127 |

VOLUME ["/home/user/app/config"]

|

| 128 |

+

VOLUME ["/home/user/.paddlex"]

|

| 129 |

+

VOLUME ["/home/user/.local/share/spacy/data"]

|

| 130 |

+

VOLUME ["/usr/share/tessdata"]

|

| 131 |

VOLUME ["/tmp"]

|

| 132 |

VOLUME ["/var/tmp"]

|

| 133 |

|

|

|

|

| 140 |

GRADIO_NUM_PORTS=1 \

|

| 141 |

GRADIO_SERVER_NAME=0.0.0.0 \

|

| 142 |

GRADIO_SERVER_PORT=7860 \

|

| 143 |

+

GRADIO_ANALYTICS_ENABLED=False

|

| 144 |

+

|

| 145 |

|

| 146 |

ENTRYPOINT ["/entrypoint.sh"]

|

| 147 |

|

README.md

CHANGED

|

@@ -10,9 +10,9 @@ license: agpl-3.0

|

|

| 10 |

---

|

| 11 |

# Document redaction

|

| 12 |

|

| 13 |

-

version: 0.

|

| 14 |

|

| 15 |

-

Redact personally identifiable information (PII) from documents (pdf, images),

|

| 16 |

|

| 17 |

To identify text in documents, the 'local' text/OCR image analysis uses spacy/tesseract, and works quite well for documents with typed text. If available, choose 'AWS Textract service' to redact more complex elements e.g. signatures or handwriting. Then, choose a method for PII identification. 'Local' is quick and gives good results if you are primarily looking for a custom list of terms to redact (see Redaction settings). If available, AWS Comprehend gives better results at a small cost.

|

| 18 |

|

|

@@ -20,7 +20,191 @@ After redaction, review suggested redactions on the 'Review redactions' tab. The

|

|

| 20 |

|

| 21 |

NOTE: The app is not 100% accurate, and it will miss some personal information. It is essential that all outputs are reviewed **by a human** before using the final outputs.

|

| 22 |

|

| 23 |

-

|

| 24 |

|

| 25 |

## Table of contents

|

| 26 |

|

|

@@ -35,7 +219,7 @@ NOTE: The app is not 100% accurate, and it will miss some personal information.

|

|

| 35 |

- [Redacting only specific pages](#redacting-only-specific-pages)

|

| 36 |

- [Handwriting and signature redaction](#handwriting-and-signature-redaction)

|

| 37 |

- [Reviewing and modifying suggested redactions](#reviewing-and-modifying-suggested-redactions)

|

| 38 |

-

- [Redacting tabular data files (CSV/XLSX) or copy and pasted text](#redacting-tabular-data-files-xlsxcsv-or-copy-and-pasted-text)

|

| 39 |

|

| 40 |

See the [advanced user guide here](#advanced-user-guide):

|

| 41 |

- [Merging redaction review files](#merging-redaction-review-files)

|

|

@@ -225,9 +409,11 @@ On the 'Review redactions' tab you have a visual interface that allows you to in

|

|

| 225 |

|

| 226 |

### Uploading documents for review

|

| 227 |

|

| 228 |

-

The top area has a file upload area where you can upload

|

| 229 |

|

| 230 |

-

Optionally, you can also upload one of the '..._ocr_output.csv'

|

|

|

|

|

|

|

| 231 |

|

| 232 |

|

| 233 |

|

|

@@ -315,6 +501,77 @@ Once you have filtered the table, or selected a row from the table, you have a f

|

|

| 315 |

|

| 316 |

If you made a mistake, click the 'Undo last element removal' button to restore the Search suggested redactions table to its previous state (can only undo the last action).

|

| 317 |

|

|

| 318 |

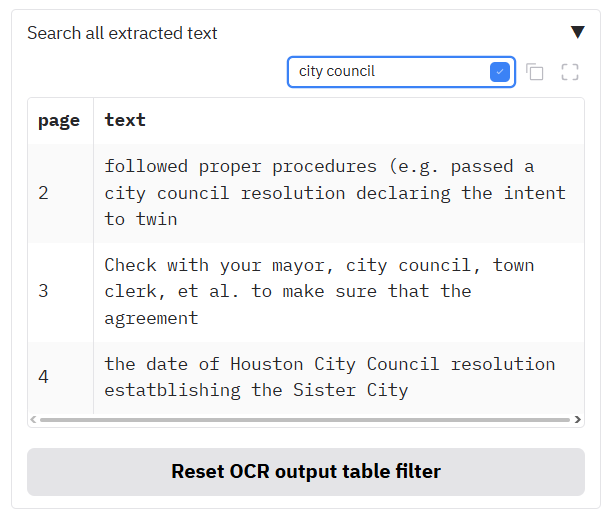

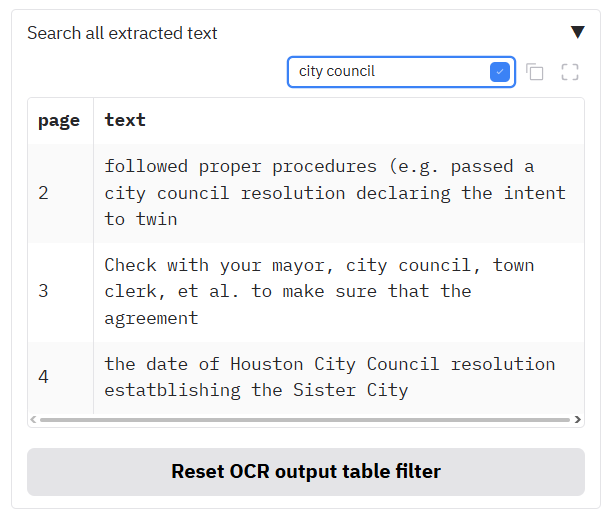

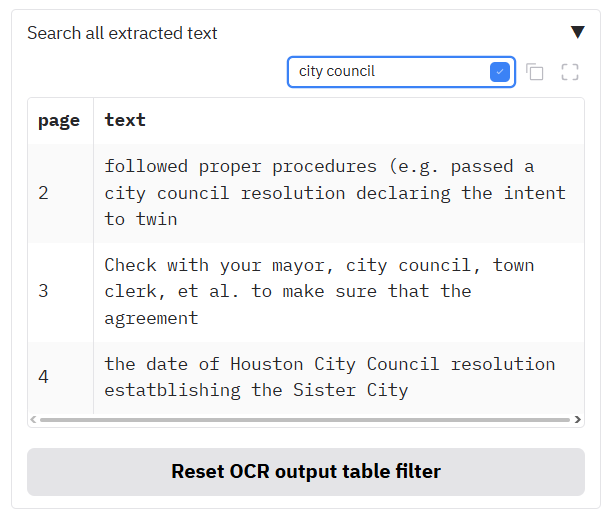

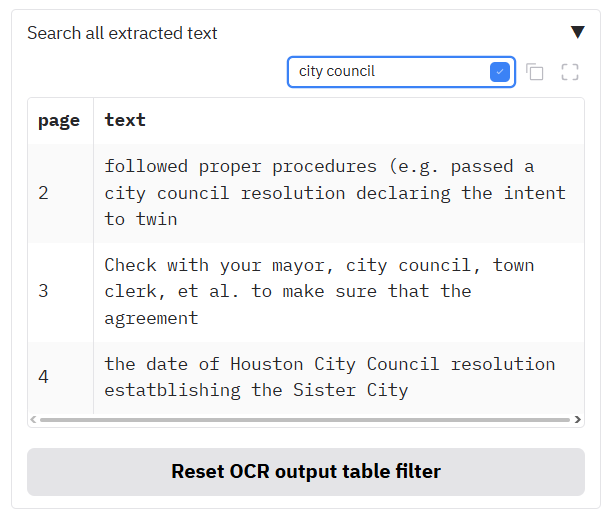

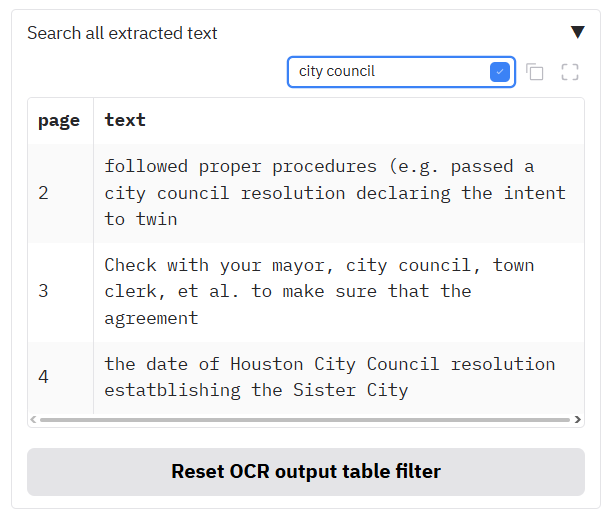

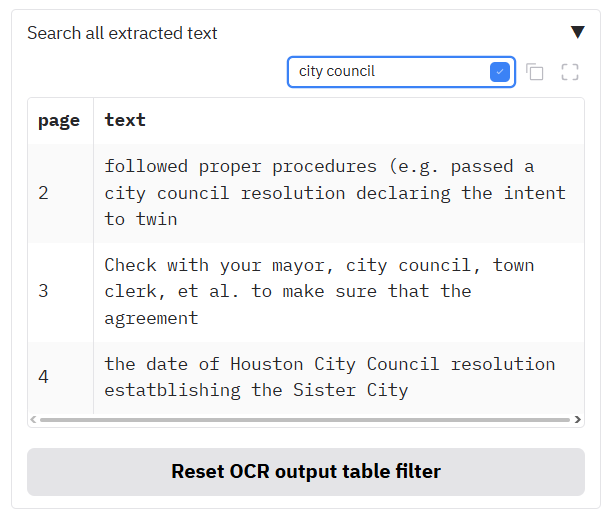

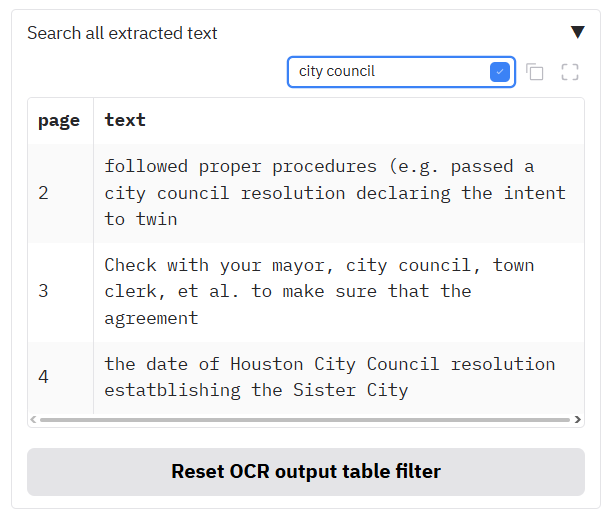

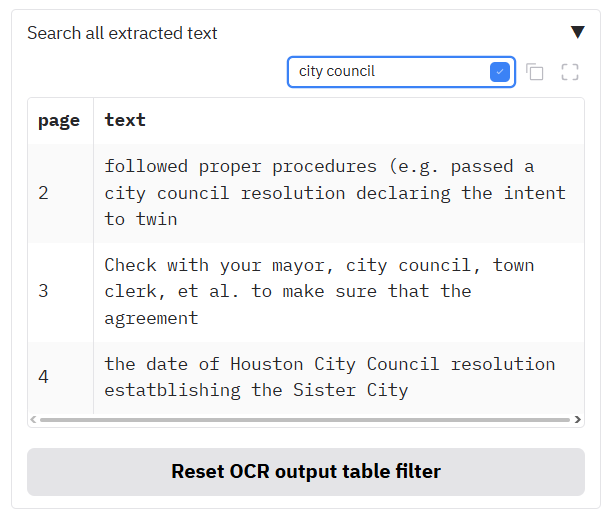

### Navigating through the document using the 'Search all extracted text'

|

| 319 |

|

| 320 |

The 'search all extracted text' table will contain text if you have just redacted a document, or if you have uploaded a '..._ocr_output.csv' file alongside a document file and review file on the Review redactions tab as [described above](#uploading-documents-for-review).

|

|

@@ -327,11 +584,11 @@ You can search through the extracted text by using the search bar just above the

|

|

| 327 |

|

| 328 |

|

| 329 |

|

| 330 |

-

## Redacting tabular data files (XLSX/CSV) or copy and pasted text

|

| 331 |

|

| 332 |

-

###

|

| 333 |

|

| 334 |

-

The app can be used to redact tabular data files such as xlsx or csv files. For this to work properly, your data file needs to be in a simple table format, with a single table starting from the first cell (A1), and no other information in the sheet. Similarly for .xlsx files, each sheet in the file that you want to redact should be in this simple format.

|

| 335 |

|

| 336 |

To demonstrate this, we can use [the example csv file 'combined_case_notes.csv'](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/combined_case_notes.csv), which is a small dataset of dummy social care case notes. Go to the 'Open text or Excel/csv files' tab. Drop the file into the upload area. After the file is loaded, you should see the suggested columns for redaction in the box underneath. You can select and deselect columns to redact as you wish from this list.

|

| 337 |

|

|

|

|

| 10 |

---

|

| 11 |

# Document redaction

|

| 12 |

|

| 13 |

+

version: 1.0.0

|

| 14 |

|

| 15 |

+

Redact personally identifiable information (PII) from documents (pdf, images), Word files (.docx), or tabular data (xlsx/csv/parquet). Please see the [User Guide](#user-guide) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 16 |

|

| 17 |

To identify text in documents, the 'local' text/OCR image analysis uses spacy/tesseract, and works quite well for documents with typed text. If available, choose 'AWS Textract service' to redact more complex elements e.g. signatures or handwriting. Then, choose a method for PII identification. 'Local' is quick and gives good results if you are primarily looking for a custom list of terms to redact (see Redaction settings). If available, AWS Comprehend gives better results at a small cost.

|

| 18 |

|

|

|

|

| 20 |

|

| 21 |

NOTE: The app is not 100% accurate, and it will miss some personal information. It is essential that all outputs are reviewed **by a human** before using the final outputs.

|

| 22 |

|

| 23 |

+

---

|

| 24 |

+

|

| 25 |

+

## 🚀 Quick Start - Installation and first run

|

| 26 |

+

|

| 27 |

+

Follow these instructions to get the document redaction application running on your local machine.

|

| 28 |

+

|

| 29 |

+

### 1. Prerequisites: System Dependencies

|

| 30 |

+

|

| 31 |

+

This application relies on two external tools for OCR (Tesseract) and PDF processing (Poppler). Please install them on your system before proceeding.

|

| 32 |

+

|

| 33 |

+

---

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

#### **On Windows**

|

| 37 |

+

|

| 38 |

+

Installation on Windows requires downloading installers and adding the programs to your system's PATH.

|

| 39 |

+

|

| 40 |

+

1. **Install Tesseract OCR:**

|

| 41 |

+

* Download the installer from the [Tesseract at UB Mannheim page](https://github.com/UB-Mannheim/tesseract/wiki) (e.g., `tesseract-ocr-w64-setup-v5.X.X...exe`).

|

| 42 |

+

* Run the installer.

|

| 43 |

+

* **IMPORTANT:** During installation, ensure you select the option to "Add Tesseract to system PATH for all users" or a similar option. This is crucial for the application to find the Tesseract executable.

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

2. **Install Poppler:**

|

| 47 |

+

* Download the latest Poppler binary for Windows. A common source is the [Poppler for Windows](https://github.com/oschwartz10612/poppler-windows) GitHub releases page. Download the `.zip` file (e.g., `poppler-24.02.0-win.zip`).

|

| 48 |

+

* Extract the contents of the zip file to a permanent location on your computer, for example, `C:\Program Files\poppler\`.

|

| 49 |

+

* You must add the `bin` folder from your Poppler installation to your system's PATH environment variable.

|

| 50 |

+

* Search for "Edit the system environment variables" in the Windows Start Menu and open it.

|

| 51 |

+

* Click the "Environment Variables..." button.

|

| 52 |

+

* In the "System variables" section, find and select the `Path` variable, then click "Edit...".

|

| 53 |

+

* Click "New" and add the full path to the `bin` directory inside your Poppler folder (e.g., `C:\Program Files\poppler\poppler-24.02.0\bin`).

|

| 54 |

+

* Click OK on all windows to save the changes.

|

| 55 |

+

|

| 56 |

+

To verify, open a new Command Prompt and run `tesseract --version` and `pdftoppm -v`. If they both return version information, you have successfully installed the prerequisites.
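
The same check can be scripted. The following is a minimal sketch, assuming only that the two executables are named `tesseract` and `pdftoppm` as in the commands above; the helper name `find_missing_tools` is hypothetical and not part of the app.

```python
import shutil


def find_missing_tools(tools=("tesseract", "pdftoppm")):
    """Return the names of required executables that are not found on PATH."""
    return [name for name in tools if shutil.which(name) is None]


if __name__ == "__main__":
    missing = find_missing_tools()
    if missing:
        # At least one prerequisite could not be located on PATH.
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("Tesseract and Poppler executables found on PATH.")
```

If a tool is reported as missing on Windows, opening a new Command Prompt after editing PATH is usually needed before the change is visible.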

|

| 57 |

+

|

| 58 |

+

---

|

| 59 |

+

|

| 60 |

+

#### **On Linux (Debian/Ubuntu)**

|

| 61 |

+

|

| 62 |

+

Open your terminal and run the following command to install Tesseract and Poppler:

|

| 63 |

+

|

| 64 |

+

```bash

|

| 65 |

+

sudo apt-get update && sudo apt-get install -y tesseract-ocr poppler-utils

|

| 66 |

+

```

|

| 67 |

+

|

| 68 |

+

#### **On Linux (Fedora/CentOS/RHEL)**

|

| 69 |

+

|

| 70 |

+

Open your terminal and use the `dnf` or `yum` package manager:

|

| 71 |

+

|

| 72 |

+

```bash

|

| 73 |

+

sudo dnf install -y tesseract poppler-utils

|

| 74 |

+

```

|

| 75 |

+

---

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

### 2. Installation: Code and Python Packages

|

| 79 |

+

|

| 80 |

+

Once the system prerequisites are installed, you can set up the Python environment.

|

| 81 |

+

|

| 82 |

+

#### Step 1: Clone the Repository

|

| 83 |

+

|

| 84 |

+

Open your terminal or Git Bash and clone this repository:

|

| 85 |

+

```bash

|

| 86 |

+

git clone https://github.com/seanpedrick-case/doc_redaction.git

|

| 87 |

+

cd doc_redaction

|

| 88 |

+

```

|

| 89 |

+

|

| 90 |

+

#### Step 2: Create and Activate a Virtual Environment (Recommended)

|

| 91 |

+

|

| 92 |

+

It is highly recommended to use a virtual environment to isolate project dependencies and avoid conflicts with other Python projects.

|

| 93 |

+

|

| 94 |

+

```bash

|

| 95 |

+

# Create the virtual environment

|

| 96 |

+

python -m venv venv

|

| 97 |

+

|

| 98 |

+

# Activate it

|

| 99 |

+

# On Windows:

|

| 100 |

+

.\venv\Scripts\activate

|

| 101 |

+

|

| 102 |

+

# On macOS/Linux:

|

| 103 |

+

source venv/bin/activate

|

| 104 |

+

```

|

| 105 |

+

|

| 106 |

+

#### Step 3: Install Python Dependencies

|

| 107 |

+

|

| 108 |

+

This project uses `pyproject.toml` to manage dependencies. You can install everything with a single pip command. This process will also download the required Spacy models and other packages directly from their URLs.

|

| 109 |

+

|

| 110 |

+

```bash

|

| 111 |

+

pip install .

|

| 112 |

+

```

|

| 113 |

+

|

| 114 |

+

Alternatively, you can use the `requirements.txt` file:

|

| 115 |

+

```bash

|

| 116 |

+

pip install -r requirements.txt

|

| 117 |

+

```

|

| 118 |

+

|

| 119 |

+

### 3. Run the Application

|

| 120 |

+

|

| 121 |

+

With all dependencies installed, you can now start the Gradio application.

|

| 122 |

+

|

| 123 |

+

```bash

|

| 124 |

+

python app.py

|

| 125 |

+

```

|

| 126 |

+

|

| 127 |

+

After running the command, the application will start, and you will see a local URL in your terminal (usually `http://127.0.0.1:7860`).

|

| 128 |

+

|

| 129 |

+

Open this URL in your web browser to use the document redaction tool.

|

| 130 |

+

|

| 131 |

+

---

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

### 4. ⚙️ Configuration (Optional)

|

| 135 |

+

|

| 136 |

+

You can customise the application's behavior by creating a configuration file. This allows you to change settings without modifying the source code, such as enabling AWS features, changing logging behavior, or pointing to local Tesseract/Poppler installations. A full overview of the settings you can modify in the `app_config.env` file can be seen in `tools/config.py`, with explanations on the [documentation website for the GitHub repo](https://seanpedrick-case.github.io/doc_redaction/).

|

| 137 |

+

|

| 138 |

+

To get started:

|

| 139 |

+

1. Locate the `example_config.env` file in the root of the project.

|

| 140 |

+

2. Create a new file named `app_config.env` inside the `config/` directory (i.e., `config/app_config.env`).

|

| 141 |

+

3. Copy the contents from `example_config.env` into your new `config/app_config.env` file.

|

| 142 |

+

4. Modify the values in `config/app_config.env` to suit your needs. The application will automatically load these settings on startup.

|

| 143 |

+

|

| 144 |

+

If you do not create this file, the application will run with default settings.
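
Steps 1 to 3 above can also be done in a few lines of Python. This is a minimal sketch, assuming it is run against the project root; the function name `create_app_config` and its `overwrite` parameter are hypothetical and not part of the app.

```python
import shutil
from pathlib import Path


def create_app_config(project_root=".", overwrite=False):
    """Copy example_config.env to config/app_config.env and return the target path."""
    root = Path(project_root)
    source = root / "example_config.env"
    target = root / "config" / "app_config.env"
    if target.exists() and not overwrite:
        # Keep an existing config so local edits are not lost.
        return target
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.copyfile(source, target)
    return target
```

Calling `create_app_config()` from the repository root would then leave `config/app_config.env` ready for editing (step 4).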

|

| 145 |

+

|

| 146 |

+

#### Configuration Breakdown

|

| 147 |

+

|

| 148 |

+

Here is an overview of the most important settings, separated by whether they are for local use or require AWS.

|

| 149 |

+

|

| 150 |

+

---

|

| 151 |

+

|

| 152 |

+

#### **Local & General Settings (No AWS Required)**

|

| 153 |

+

|

| 154 |

+

These settings are useful for all users, regardless of whether you are using AWS.

|

| 155 |

+

|

| 156 |

+

* `TESSERACT_FOLDER` / `POPPLER_FOLDER`

|

| 157 |

+

* Use these if you installed Tesseract or Poppler to a custom location on **Windows** and did not add them to the system PATH.

|

| 158 |

+

* Provide the path to the respective installation folders (for Poppler, point to the `bin` sub-directory).

|

| 159 |

+

* **Examples:** `POPPLER_FOLDER=C:/Program Files/poppler-24.02.0/bin/` `TESSERACT_FOLDER=tesseract/`

|

| 160 |

+

|

| 161 |

+

* `SHOW_LANGUAGE_SELECTION=True`

|

| 162 |

+

* Set to `True` to display a language selection dropdown in the UI for OCR processing.

|

| 163 |

+

|

| 164 |

+

* `CHOSEN_LOCAL_OCR_MODEL=tesseract`

|

| 165 |

+

* Choose the backend for local OCR. Options are `tesseract`, `paddle`, or `hybrid`. "Tesseract" is the default, and is recommended. "hybrid" is a combination of the two - first pass through the redactions will be done with Tesseract, and then a second pass will be done with PaddleOCR on words with low confidence. "paddle" will only return whole line text extraction, and so will only work for OCR, not redaction.

|

| 166 |

+

|

| 167 |

+

* `SESSION_OUTPUT_FOLDER=False`

|

| 168 |

+

* If `True`, redacted files will be saved in unique subfolders within the `output/` directory for each session.

|

| 169 |

+

|

| 170 |

+

* `DISPLAY_FILE_NAMES_IN_LOGS=False`

|

| 171 |

+

* For privacy, file names are not recorded in usage logs by default. Set to `True` to include them.
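
The `hybrid` option for `CHOSEN_LOCAL_OCR_MODEL` is described above as a Tesseract first pass followed by a PaddleOCR second pass on low-confidence words. The sketch below illustrates only that control flow. It is not the app's implementation: the `Word` type, the `second_pass` callable, the 0-100 confidence scale, and the threshold of 50 are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Word:
    text: str
    confidence: float  # assumed 0-100 scale


def hybrid_pass(
    first_pass_words: List[Word],
    second_pass: Callable[[Word], Word],
    threshold: float = 50.0,  # hypothetical cut-off, not taken from the app
) -> List[Word]:
    """Re-run only the low-confidence words through a second OCR engine."""
    return [
        second_pass(word) if word.confidence < threshold else word
        for word in first_pass_words
    ]
```

Here `second_pass` stands in for whatever re-reads the word's image region with the second engine; words at or above the threshold are passed through unchanged.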

|

| 172 |

+

|

| 173 |

+

---

|

| 174 |

+

|

| 175 |

+

#### **AWS-Specific Settings**

|

| 176 |

+

|

| 177 |

+

These settings are only relevant if you intend to use AWS services like Textract for OCR and Comprehend for PII detection.

|

| 178 |

+

|

| 179 |

+

* `RUN_AWS_FUNCTIONS=1`

|

| 180 |

+

* **This is the master switch.** You must set this to `1` to enable any AWS functionality. If it is `0`, all other AWS settings will be ignored.

|

| 181 |

+

|

| 182 |

+

* **UI Options:**

|

| 183 |

+

* `SHOW_AWS_TEXT_EXTRACTION_OPTIONS=True`: Adds "AWS Textract" as an option in the text extraction dropdown.

|

| 184 |

+

* `SHOW_AWS_PII_DETECTION_OPTIONS=True`: Adds "AWS Comprehend" as an option in the PII detection dropdown.

|

| 185 |

+

|

| 186 |

+

* **Core AWS Configuration:**

|

| 187 |

+

* `AWS_REGION=example-region`: Set your AWS region (e.g., `us-east-1`).

|

| 188 |

+

* `DOCUMENT_REDACTION_BUCKET=example-bucket`: The name of the S3 bucket the application will use for temporary file storage and processing.

|

| 189 |

+

|

| 190 |

+

* **AWS Logging:**

|

| 191 |

+

* `SAVE_LOGS_TO_DYNAMODB=True`: If enabled, usage and feedback logs will be saved to DynamoDB tables.

|

| 192 |

+

* `ACCESS_LOG_DYNAMODB_TABLE_NAME`, `USAGE_LOG_DYNAMODB_TABLE_NAME`, etc.: Specify the names of your DynamoDB tables for logging.

|

| 193 |

+

|

| 194 |

+

* **Advanced AWS Textract Features:**

|

| 195 |

+

* `SHOW_WHOLE_DOCUMENT_TEXTRACT_CALL_OPTIONS=True`: Enables UI components for large-scale, asynchronous document processing via Textract.

|

| 196 |

+

* `TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_BUCKET=example-bucket-output`: A separate S3 bucket for the final output of asynchronous Textract jobs.

|

| 197 |

+

* `LOAD_PREVIOUS_TEXTRACT_JOBS_S3=True`: If enabled, the app will try to load the status of previously submitted asynchronous jobs from S3.

|

| 198 |

+

|

| 199 |

+

* **Cost Tracking (for internal accounting):**

|

| 200 |

+

* `SHOW_COSTS=True`: Displays an estimated cost for AWS operations. Can be enabled even if AWS functions are off.

|

| 201 |

+

* `GET_COST_CODES=True`: Enables a dropdown for users to select a cost code before running a job.

|

| 202 |

+

* `COST_CODES_PATH=config/cost_codes.csv`: The local path to a CSV file containing your cost codes.

|

| 203 |

+

* `ENFORCE_COST_CODES=True`: Makes selecting a cost code mandatory before starting a redaction.

|

| 204 |

+

|

| 205 |

+

Now you have the app installed, what follows is a guide on how to use it for basic and advanced redaction.

|

| 206 |

+

|

| 207 |

+

# User Guide

|

| 208 |

|

| 209 |

## Table of contents

|

| 210 |

|

|

|

|

| 219 |

- [Redacting only specific pages](#redacting-only-specific-pages)

|

| 220 |

- [Handwriting and signature redaction](#handwriting-and-signature-redaction)

|

| 221 |

- [Reviewing and modifying suggested redactions](#reviewing-and-modifying-suggested-redactions)

|

| 222 |

+

- [Redacting Word, tabular data files (CSV/XLSX) or copy and pasted text](#redacting-word-tabular-data-files-xlsxcsv-or-copy-and-pasted-text)

|

| 223 |

|

| 224 |

See the [advanced user guide here](#advanced-user-guide):

|

| 225 |

- [Merging redaction review files](#merging-redaction-review-files)

|

|

|

|

| 409 |

|

| 410 |

### Uploading documents for review

|

| 411 |

|

| 412 |

+

The top area has a file upload area where you can upload files for review. In the left box, upload the original PDF file. Click '1. Upload original PDF'. In the right box, you can upload the '..._review_file.csv' that is produced by the redaction process.

|

| 413 |

|

| 414 |

+

Optionally, you can upload a '..._ocr_result_with_words' file here, which will allow you to search through the text and easily [add new redactions based on word search](#searching-and-adding-custom-redactions). You can also upload one of the '..._ocr_output.csv' files here that come out of a redaction task, so that you can navigate the extracted text from the document. Click the button '2. Upload Review or OCR csv files' to load in these files.

|

| 415 |

+

|

| 416 |

+

Now you can review and modify the suggested redactions using the interface described below.

|

| 417 |

|

| 418 |

|

| 419 |

|

|

|

|

| 501 |

|

| 502 |

If you made a mistake, click the 'Undo last element removal' button to restore the Search suggested redactions table to its previous state (can only undo the last action).

|

| 503 |

|

| 504 |

+

### Searching and Adding Custom Redactions

|

| 505 |

+

|

| 506 |

+

After a document has been processed, you may need to redact specific terms, names, or phrases that the automatic PII (Personally Identifiable Information) detection might have missed. The **"Search text to make new redactions"** tab gives you the power to find and redact any text within your document manually.

|

| 507 |

+

|

| 508 |

+

#### How to Use the Search and Redact Feature

|

| 509 |

+

|

| 510 |

+

The workflow is designed to be simple: **Search → Select → Redact**.

|

| 511 |

+

|

| 512 |

+

---

|

| 513 |

+

|

| 514 |

+

#### **Step 1: Search for Text**

|

| 515 |

+

|

| 516 |

+

1. Navigate to the **"Search text to make new redactions"** tab.

|

| 517 |

+

2. The main table will initially be populated with all the text extracted from the document, broken down by word.

|

| 518 |

+

3. To narrow this down, use the **"Multi-word text search"** box to type the word or phrase you want to find.

|

| 519 |

+

4. Click the **"Search"** button or press Enter.

|

| 520 |

+

5. The table below will update to show only the rows containing text that matches your search query.

|

| 521 |

+

|

| 522 |

+

> **Tip:** You can also filter the results by page number using the **"Page"** dropdown. To clear all filters and see the full text again, click the **"Reset table to original state"** button.

|

| 523 |

+

|

| 524 |

+

---

|

| 525 |

+

|

| 526 |

+

#### **Step 2: Select and Review a Match**

|

| 527 |

+

|

| 528 |

+

When you click on any row in the search results table:

|

| 529 |

+

|

| 530 |

+

* The document preview on the left will automatically jump to that page, allowing you to see the word in its original context.

|

| 531 |

+

* The details of your selection will appear in the smaller **"Selected row"** table for confirmation.

|

| 532 |

+

|

| 533 |

+

---

|

| 534 |

+

|

| 535 |

+

#### **Step 3: Choose Your Redaction Method**

|

| 536 |

+

|

| 537 |

+

You have several powerful options for redacting the text you've found:

|

| 538 |

+

|

| 539 |

+

* **Redact a Single, Specific Instance:**

|

| 540 |

+

* Click on the exact row in the table you want to redact.

|

| 541 |

+

* Click the **`Redact specific text row`** button.

|

| 542 |

+

* Only that single instance will be redacted.

|

| 543 |

+

|

| 544 |

+

* **Redact All Instances of a Word/Phrase:**

|

| 545 |

+

* Let's say you want to redact the project name "Project Alpha" everywhere it appears.

|

| 546 |

+

* Find and select one instance of "Project Alpha" in the table.

|

| 547 |

+

* Click the **`Redact all words with same text as selected row`** button.

|

| 548 |

+

* The application will find and redact every single occurrence of "Project Alpha" throughout the entire document.

|

| 549 |

+

|

| 550 |

+

* **Redact All Current Search Results:**

|

| 551 |

+

* Perform a search (e.g., for a specific person's name).

|

| 552 |

+

* If you are confident that every result shown in the filtered table should be redacted, click the **`Redact all text in table`** button.

|

| 553 |

+

* This will apply a redaction to all currently visible items in the table in one go.
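
The three options above differ only in which rows they select for redaction. The sketch below shows that difference on a toy table. It is an illustration under assumptions: the row layout (`page`, `text`), the function names, case-insensitive substring search, and exact-text matching are all hypothetical and may not match how the app behaves.

```python
# Toy word table; the real table's columns are not shown in this diff.
rows = [
    {"page": 1, "text": "Alpha"},
    {"page": 1, "text": "budget"},
    {"page": 2, "text": "Alpha"},
    {"page": 3, "text": "alphabet"},
]


def search(all_rows, query):
    """Filter the table to rows whose text contains the query (case-insensitive)."""
    return [r for r in all_rows if query.lower() in r["text"].lower()]


def redact_specific_row(visible_rows, index):
    """Select only the single row the user clicked."""
    return [visible_rows[index]]


def redact_same_text(all_rows, selected_row):
    """Select every row in the whole document with the same text as the selection."""
    return [r for r in all_rows if r["text"] == selected_row["text"]]


def redact_all_in_table(visible_rows):
    """Select everything currently shown in the filtered table."""
    return list(visible_rows)
```

With this toy data, searching for "alpha" shows three rows, while redacting "the same text" as the first of them selects only the two exact "Alpha" rows, which is why reviewing the filtered table before using the bulk option matters.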

|

| 554 |

+

|

| 555 |

+

---

|

| 556 |

+

|

| 557 |

+

#### **Customising Your New Redactions**

|

| 558 |

+

|

| 559 |

+

Before you click one of the redact buttons, you can customize the appearance and label of the new redactions under the **"Search options"** accordion:

|

| 560 |

+

|

| 561 |

+

* **Label for new redactions:** Change the text that appears on the redaction box (default is "Redaction"). You could change this to "CONFIDENTIAL" or "CUSTOM".

|

| 562 |

+

* **Colour for labels:** Set a custom color for the redaction box by providing an RGB value. The format must be three numbers (0-255) in parentheses, for example:

|

| 563 |

+

* ` (255, 0, 0) ` for Red

|

| 564 |

+

* ` (0, 0, 0) ` for Black

|

| 565 |

+

* ` (255, 255, 0) ` for Yellow
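
The colour format described above (three numbers from 0 to 255 in parentheses) can be validated before use. This is a minimal sketch; the function name `parse_rgb` is hypothetical, and tolerating surrounding whitespace is an assumption rather than documented app behaviour.

```python
import re

_RGB_PATTERN = re.compile(r"\s*\(\s*(\d{1,3})\s*,\s*(\d{1,3})\s*,\s*(\d{1,3})\s*\)\s*")


def parse_rgb(value: str):
    """Parse a string like '(255, 0, 0)' into a tuple of three ints in 0-255."""
    match = _RGB_PATTERN.fullmatch(value)
    if match is None:
        raise ValueError(f"Expected a value like '(255, 0, 0)', got {value!r}")
    rgb = tuple(int(group) for group in match.groups())
    if any(channel > 255 for channel in rgb):
        raise ValueError(f"Each colour channel must be between 0 and 255, got {rgb}")
    return rgb
```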

|

| 566 |

+

|

| 567 |

+

#### **Undoing a Mistake**

|

| 568 |

+

|

| 569 |

+

If you make a mistake, you can reverse the last redaction action you performed on this tab.

|

| 570 |

+

|

| 571 |

+

* Click the **`Undo latest redaction`** button. This will revert the last set of redactions you added (whether it was a single row, all of a certain text, or all search results).

|

| 572 |

+

|

| 573 |

+

> **Important:** This undo button only works for the *most recent* action. It maintains a single backup state, so it cannot undo actions that are two or more steps in the past.
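
A single backup state behaves as follows. This is a minimal sketch of the idea only, not the app's code; the class name `SingleUndo` and its methods are hypothetical.

```python
class SingleUndo:
    """Keeps the current list of redactions plus one backup of the previous state."""

    def __init__(self, redactions=()):
        self.redactions = list(redactions)
        self._backup = None  # at most one earlier state is remembered

    def add(self, new_redactions):
        """Apply an action, overwriting any older backup with the current state."""
        self._backup = list(self.redactions)
        self.redactions = self.redactions + list(new_redactions)

    def undo(self):
        """Revert the most recent action; return False if there is nothing to undo."""
        if self._backup is None:
            return False
        self.redactions = self._backup
        self._backup = None
        return True
```

Because each new action overwrites the backup, a second consecutive `undo()` has nothing to restore, which matches the one-step limit described above.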

|

| 574 |

+

|

| 575 |

### Navigating through the document using the 'Search all extracted text'

|

| 576 |

|

| 577 |

The 'search all extracted text' table will contain text if you have just redacted a document, or if you have uploaded a '..._ocr_output.csv' file alongside a document file and review file on the Review redactions tab as [described above](#uploading-documents-for-review).

|

|

|

|

| 584 |

|

| 585 |

|

| 586 |

|

| 587 |

+

## Redacting Word, tabular data files (XLSX/CSV) or copy and pasted text

|

| 588 |

|

| 589 |

+

### Word or tabular data files (XLSX/CSV)

|

| 590 |

|

| 591 |

+

The app can be used to redact Word (.docx) files and tabular data files such as .xlsx or .csv. For this to work properly, a tabular data file needs to be in a simple table format: a single table starting from the first cell (A1), with no other information in the sheet. For .xlsx files, each sheet that you want to redact should follow this simple format.

|

| 592 |

|

| 593 |

To demonstrate this, we can use [the example csv file 'combined_case_notes.csv'](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/combined_case_notes.csv), which is a small dataset of dummy social care case notes. Go to the 'Open text or Excel/csv files' tab. Drop the file into the upload area. After the file is loaded, you should see the suggested columns for redaction in the box underneath. You can select and deselect columns to redact as you wish from this list.

|

| 594 |

|

app.py

CHANGED

|

The diff for this file is too large to render.

|

|

|

cdk/cdk_config.py

CHANGED

|

@@ -213,9 +213,9 @@ SAVE_LOGS_TO_CSV = get_or_create_env_var('SAVE_LOGS_TO_CSV', 'True')

|

|

| 213 |

|

| 214 |

### DYNAMODB logs. Whether to save to DynamoDB, and the headers of the table

|

| 215 |

SAVE_LOGS_TO_DYNAMODB = get_or_create_env_var('SAVE_LOGS_TO_DYNAMODB', 'True')

|

| 216 |

-

ACCESS_LOG_DYNAMODB_TABLE_NAME = get_or_create_env_var('ACCESS_LOG_DYNAMODB_TABLE_NAME', f"{CDK_PREFIX}dynamodb-access-

|

| 217 |

-

FEEDBACK_LOG_DYNAMODB_TABLE_NAME = get_or_create_env_var('FEEDBACK_LOG_DYNAMODB_TABLE_NAME', f"{CDK_PREFIX}dynamodb-feedback".lower())

|

| 218 |

-

USAGE_LOG_DYNAMODB_TABLE_NAME = get_or_create_env_var('USAGE_LOG_DYNAMODB_TABLE_NAME', f"{CDK_PREFIX}dynamodb-usage".lower())

|

| 219 |

|

| 220 |

###

|

| 221 |

# REDACTION OPTIONS

|

|

|

|

| 213 |

|

| 214 |

### DYNAMODB logs. Whether to save to DynamoDB, and the headers of the table

|

| 215 |

SAVE_LOGS_TO_DYNAMODB = get_or_create_env_var('SAVE_LOGS_TO_DYNAMODB', 'True')

|

| 216 |

+

ACCESS_LOG_DYNAMODB_TABLE_NAME = get_or_create_env_var('ACCESS_LOG_DYNAMODB_TABLE_NAME', f"{CDK_PREFIX}dynamodb-access-logs".lower())

|

| 217 |

+

FEEDBACK_LOG_DYNAMODB_TABLE_NAME = get_or_create_env_var('FEEDBACK_LOG_DYNAMODB_TABLE_NAME', f"{CDK_PREFIX}dynamodb-feedback-logs".lower())

|

| 218 |

+

USAGE_LOG_DYNAMODB_TABLE_NAME = get_or_create_env_var('USAGE_LOG_DYNAMODB_TABLE_NAME', f"{CDK_PREFIX}dynamodb-usage-logs".lower())

|

| 219 |

|

| 220 |

###

|

| 221 |

# REDACTION OPTIONS

|

cdk/cdk_stack.py

CHANGED

|

@@ -990,6 +990,21 @@ class CdkStack(Stack):

|

|

| 990 |

"sourceVolume": epheremal_storage_volume_name,

|

| 991 |

"containerPath": "/tmp/gradio_tmp",

|

| 992 |

"readOnly": False

|

| 993 |

}

|

| 994 |

],

|

| 995 |

"readonlyRootFilesystem": read_only_file_system,

|

|

|

|

| 990 |

"sourceVolume": epheremal_storage_volume_name,

|

| 991 |

"containerPath": "/tmp/gradio_tmp",

|

| 992 |

"readOnly": False

|

| 993 |

+

},

|

| 994 |

+

{

|

| 995 |

+

"sourceVolume": epheremal_storage_volume_name,

|

| 996 |

+

"containerPath": "/home/user/.paddlex",

|

| 997 |

+

"readOnly": False

|

| 998 |

+

},

|

| 999 |

+

{

|

| 1000 |

+

"sourceVolume": epheremal_storage_volume_name,

|

| 1001 |

+

"containerPath": "/home/user/.local/share/spacy/data",

|

| 1002 |

+

"readOnly": False

|

| 1003 |

+

},

|

| 1004 |

+

{

|

| 1005 |

+

"sourceVolume": epheremal_storage_volume_name,

|

| 1006 |

+

"containerPath": "/usr/share/tessdata",

|

| 1007 |

+

"readOnly": False

|

| 1008 |

}

|

| 1009 |

],

|

| 1010 |

"readonlyRootFilesystem": read_only_file_system,

|
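The four mount points in this hunk share the same volume and differ only in `containerPath`, so the list could also be generated from the paths. This is a sketch of that idea, not the code used in `cdk_stack.py`: the helper name `build_mount_points` and the example volume name are hypothetical, while the dictionary keys and container paths are copied from the diff above.

```python
def build_mount_points(volume_name, container_paths):
    """Return one writable mount point dict per container path (illustrative helper)."""
    return [
        {
            "sourceVolume": volume_name,
            "containerPath": path,
            "readOnly": False,
        }
        for path in container_paths
    ]


# Paths taken from the diff; the volume name here is a placeholder.
WRITABLE_PATHS = [
    "/tmp/gradio_tmp",
    "/home/user/.paddlex",
    "/home/user/.local/share/spacy/data",
    "/usr/share/tessdata",
]

mount_points = build_mount_points("example-ephemeral-volume", WRITABLE_PATHS)
```

Keeping the paths in one list would make it easier to see which directories stay writable when `readonlyRootFilesystem` is enabled.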

cdk/post_cdk_build_quickstart.py

CHANGED

|

@@ -13,10 +13,10 @@ start_codebuild_build(PROJECT_NAME=CODEBUILD_PROJECT_NAME)

|

|

| 13 |

# Upload config.env file to S3 bucket

|

| 14 |

upload_file_to_s3(local_file_paths="config/config.env", s3_key="", s3_bucket=S3_LOG_CONFIG_BUCKET_NAME)

|

| 15 |

|

| 16 |

-

total_seconds =

|

| 17 |

update_interval = 1 # Update every second

|

| 18 |

|

| 19 |

-

print("Waiting

|

| 20 |

|

| 21 |

# tqdm iterates over a range, and you perform a small sleep in each iteration

|

| 22 |

for i in tqdm(range(total_seconds), desc="Building container"):

|

|

|

|

| 13 |

# Upload config.env file to S3 bucket

|

| 14 |

upload_file_to_s3(local_file_paths="config/config.env", s3_key="", s3_bucket=S3_LOG_CONFIG_BUCKET_NAME)

|

| 15 |

|

| 16 |

+

total_seconds = 660 # 11 minutes

|

| 17 |

update_interval = 1 # Update every second

|

| 18 |

|

| 19 |

+

print("Waiting 11 minutes for the CodeBuild container to build.")

|

| 20 |

|

| 21 |

# tqdm iterates over a range, and you perform a small sleep in each iteration

|

| 22 |

for i in tqdm(range(total_seconds), desc="Building container"):

|

cdk/requirements.txt

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

-

aws-cdk-lib==2.

|

| 2 |

-

boto3==1.

|

| 3 |

-

pandas==2.3.

|

| 4 |

nodejs==0.1.1

|

| 5 |

python-dotenv==1.0.1

|

|

|

|

| 1 |

+

aws-cdk-lib==2.212.0

|

| 2 |

+

boto3==1.40.10

|

| 3 |

+

pandas==2.3.1

|

| 4 |

nodejs==0.1.1

|

| 5 |

python-dotenv==1.0.1

|

example_config.env

ADDED

|

@@ -0,0 +1,26 @@

|

| 1 |

+

TESSERACT_FOLDER=tesseract/

|

| 2 |

+

POPPLER_FOLDER=poppler/poppler-24.02.0/Library/bin/

|

| 3 |

+

SHOW_LANGUAGE_SELECTION=True

|

| 4 |

+

CHOSEN_LOCAL_OCR_MODEL=tesseract

|

| 5 |

+

|

| 6 |

+

SESSION_OUTPUT_FOLDER=False

|

| 7 |

+

DISPLAY_FILE_NAMES_IN_LOGS=False

|

| 8 |

+

|

| 9 |

+

RUN_AWS_FUNCTIONS=1 # Set to 0 if you don't want to run AWS functions

|

| 10 |

+

SAVE_LOGS_TO_DYNAMODB=True

|

| 11 |

+

S3_COST_CODES_PATH=cost_codes.csv

|

| 12 |

+

SHOW_AWS_TEXT_EXTRACTION_OPTIONS=True

|

| 13 |

+

SHOW_AWS_PII_DETECTION_OPTIONS=True

|

| 14 |

+

AWS_REGION=example-region

|

| 15 |

+

DOCUMENT_REDACTION_BUCKET=example-bucket

|

| 16 |

+

SHOW_WHOLE_DOCUMENT_TEXTRACT_CALL_OPTIONS=True

|

| 17 |

+

TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_BUCKET=example-bucket-output

|

| 18 |

+

LOAD_PREVIOUS_TEXTRACT_JOBS_S3=True

|

| 19 |

+

ACCESS_LOG_DYNAMODB_TABLE_NAME=example-dynamodb-access-log

|

| 20 |

+

USAGE_LOG_DYNAMODB_TABLE_NAME=example-dynamodb-usage

|

| 21 |

+

FEEDBACK_LOG_DYNAMODB_TABLE_NAME=example-dynamodb-feedback

|

| 22 |

+

SHOW_COSTS=True

|

| 23 |

+

GET_COST_CODES=True

|

| 24 |

+

COST_CODES_PATH=config/cost_codes.csv

|

| 25 |

+

ENFORCE_COST_CODES=True

|

| 26 |

+

DEFAULT_COST_CODE=example_cost_code
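Settings like these reach the application as environment variables, all of them strings. The sketch below shows one way such values could be read with a fallback default and turned into booleans. It is an assumption for illustration only: `get_env_or_default` and `as_bool` are hypothetical names and do not reproduce the project's own `get_or_create_env_var` helper, whose implementation is not shown in this diff.

```python
import os


def get_env_or_default(name: str, default: str) -> str:
    """Return the environment variable, setting it to the default if it is missing."""
    value = os.environ.get(name)
    if value is None:
        os.environ[name] = default
        value = default
    return value


def as_bool(value: str) -> bool:
    """Interpret common truthy spellings such as 'True' or '1' (assumed convention)."""
    return value.strip().lower() in {"true", "1", "yes"}


# Example: variable names come from example_config.env above.
show_costs = as_bool(get_env_or_default("SHOW_COSTS", "False"))
ocr_model = get_env_or_default("CHOSEN_LOCAL_OCR_MODEL", "tesseract")
```

Note that the example file mixes `True`/`False` with `1`/`0` (as in `RUN_AWS_FUNCTIONS=1`), so any parsing along these lines would need to accept both spellings.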

|

index.qmd

CHANGED

|

@@ -2,7 +2,7 @@

|

|

| 2 |

title: "Home"

|

| 3 |

---

|

| 4 |

|

| 5 |

-

version: 0.

|

| 6 |

|

| 7 |

Welcome to the Document Redaction App documentation. This site provides comprehensive documentation for the Document Redaction App.

|

| 8 |

|

|

|

|

| 2 |

title: "Home"

|

| 3 |

---

|

| 4 |

|

| 5 |

+

version: 1.0.0

|

| 6 |

|

| 7 |

Welcome to the Document Redaction App documentation. This site provides comprehensive documentation for the Document Redaction App.

|

| 8 |

|

load_dynamo_logs.py

CHANGED

|

@@ -1,6 +1,7 @@

|

|

| 1 |

import boto3

|

| 2 |

import csv

|

| 3 |

from decimal import Decimal

|

|

|

|

| 4 |

from boto3.dynamodb.conditions import Key

|

| 5 |

|

| 6 |

from tools.config import AWS_REGION, ACCESS_LOG_DYNAMODB_TABLE_NAME, FEEDBACK_LOG_DYNAMODB_TABLE_NAME, USAGE_LOG_DYNAMODB_TABLE_NAME, OUTPUT_FOLDER

|

|

@@ -16,11 +17,26 @@ table = dynamodb.Table(TABLE_NAME)

|

|

| 16 |

|

| 17 |

# Helper function to convert Decimal to float or int

|

| 18 |

def convert_types(item):

|

|

|

|

| 19 |

for key, value in item.items():

|

|

|

|

| 20 |

if isinstance(value, Decimal):

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

|

| 24 |

|

| 25 |

# Paginated scan

|

| 26 |

def scan_table():

|

|

@@ -35,22 +51,43 @@ def scan_table():

|

|

| 35 |

return items

|

| 36 |

|

| 37 |

# Export to CSV

|

| 38 |

-

|

|

|

|

| 39 |

if not items:

|

| 40 |

print("No items found.")

|

| 41 |

return

|

| 42 |

|

| 43 |

-

|

| 44 |

|

| 45 |

-

with open(output_path, 'w', newline='', encoding='utf-8') as csvfile:

|

| 46 |

-

|

| 47 |

writer.writeheader()

|

| 48 |

|

| 49 |

for item in items:

|

|

|

|

|

|

|

| 50 |

writer.writerow(convert_types(item))

|

| 51 |

|

| 52 |

print(f"Exported {len(items)} items to {output_path}")

|

| 53 |

|

| 54 |

# Run export

|

| 55 |

items = scan_table()

|

| 56 |

-

export_to_csv(items, CSV_OUTPUT)

|

|

|

|

| 1 |

import boto3

|

| 2 |

import csv

|

| 3 |

from decimal import Decimal

|

| 4 |

+

import datetime

|

| 5 |

from boto3.dynamodb.conditions import Key

|

| 6 |

|

| 7 |

from tools.config import AWS_REGION, ACCESS_LOG_DYNAMODB_TABLE_NAME, FEEDBACK_LOG_DYNAMODB_TABLE_NAME, USAGE_LOG_DYNAMODB_TABLE_NAME, OUTPUT_FOLDER

|

|

|

|

| 17 |

|

| 18 |

# Helper function to convert Decimal to float or int

|

| 19 |

def convert_types(item):

|

| 20 |

+

new_item = {}

|

| 21 |

for key, value in item.items():

|

| 22 |

+

# Handle Decimals first

|

| 23 |

if isinstance(value, Decimal):

|

| 24 |

+

new_item[key] = int(value) if value % 1 == 0 else float(value)

|

| 25 |

+

# Handle Strings that might be dates

|

| 26 |

+

elif isinstance(value, str):

|

| 27 |

+

try:

|

| 28 |

+

# Attempt to parse a common ISO 8601 format.

|

| 29 |

+

# The .replace() handles the 'Z' for Zulu/UTC time.

|

| 30 |

+

dt_obj = datetime.datetime.fromisoformat(value.replace('Z', '+00:00'))

|

| 31 |

+

# Now that we have a datetime object, format it as desired

|

| 32 |

+

new_item[key] = dt_obj.strftime('%Y-%m-%d %H:%M:%S.%f')[:-3]

|

| 33 |

+

except (ValueError, TypeError):

|

| 34 |

+

# If it fails to parse, it's just a regular string

|

| 35 |

+

new_item[key] = value

|

| 36 |

+

# Handle all other types

|

| 37 |

+

else:

|

| 38 |

+

new_item[key] = value

|

| 39 |

+

return new_item

|

| 40 |

|

| 41 |

# Paginated scan

|

| 42 |

def scan_table():

|

|

|

|

| 51 |

return items

|

| 52 |

|

| 53 |

# Export to CSV

|

| 54 |

+

# Export to CSV

|

| 55 |

+

def export_to_csv(items, output_path, fields_to_drop: list = None):

|

| 56 |

if not items:

|

| 57 |

print("No items found.")

|

| 58 |

return

|

| 59 |

|

| 60 |

+

# Use a set for efficient lookup

|

| 61 |

+

drop_set = set(fields_to_drop or [])

|

| 62 |

+

|

| 63 |

+

# Get a comprehensive list of all possible headers from all items

|

| 64 |

+

all_keys = set()

|

| 65 |

+

for item in items:

|

| 66 |

+

all_keys.update(item.keys())

|

| 67 |

+

|

| 68 |

+

# Determine the final fieldnames by subtracting the ones to drop

|

| 69 |

+

fieldnames = sorted(list(all_keys - drop_set))

|

| 70 |

+

|

| 71 |

+

print("Final CSV columns will be:", fieldnames)

|

| 72 |

|

| 73 |

+

with open(output_path, 'w', newline='', encoding='utf-8-sig') as csvfile:

|

| 74 |

+

# The key fix is here: extrasaction='ignore'

|

| 75 |

+

# restval='' is also good practice to handle rows that are missing a key

|

| 76 |

+

writer = csv.DictWriter(

|

| 77 |

+

csvfile,

|

| 78 |

+

fieldnames=fieldnames,

|

| 79 |

+

extrasaction='ignore',

|

| 80 |

+

restval=''

|

| 81 |

+

)

|

| 82 |

writer.writeheader()

|

| 83 |

|

| 84 |

for item in items:

|

| 85 |

+

# The convert_types function can now return the full dict,

|

| 86 |

+

# and the writer will simply ignore the extra fields.

|

| 87 |

writer.writerow(convert_types(item))

|

| 88 |

|

| 89 |

print(f"Exported {len(items)} items to {output_path}")

|

| 90 |

|

| 91 |

# Run export

|

| 92 |

items = scan_table()

|

| 93 |

+

export_to_csv(items, CSV_OUTPUT, fields_to_drop=[])
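The effect of `extrasaction='ignore'` and `restval=''` in the new `export_to_csv` can be seen in isolation with a small in-memory example. This is a sketch with made-up rows, writing to a `StringIO` buffer instead of a file; the field names and the `drop_set` contents are hypothetical.

```python
import csv
import io
from decimal import Decimal

# Two rows with different keys, as can happen with DynamoDB items.
items = [
    {"id": Decimal("1"), "score": Decimal("0.5"), "internal_note": "skip me"},
    {"id": Decimal("2")},
]
drop_set = {"internal_note"}

# Collect every key seen in any row, then remove the fields to drop.
all_keys = set()
for item in items:
    all_keys.update(item.keys())
fieldnames = sorted(all_keys - drop_set)

buffer = io.StringIO(newline="")
writer = csv.DictWriter(
    buffer,
    fieldnames=fieldnames,
    extrasaction="ignore",  # keys not in fieldnames are silently skipped
    restval="",             # missing keys are written as empty cells
)
writer.writeheader()
for item in items:
    writer.writerow(item)

csv_text = buffer.getvalue()
```

Without `extrasaction="ignore"`, the first row would raise a `ValueError` because `internal_note` is not in `fieldnames`; with it, the dropped column is simply left out and the second row gets an empty `score` cell.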

|

pyproject.toml

CHANGED

|

@@ -4,38 +4,40 @@ build-backend = "setuptools.build_meta"

|

|

| 4 |

|

| 5 |

[project]

|

| 6 |

name = "doc_redaction"

|

| 7 |

-

version = "0.

|

| 8 |

description = "Redact PDF/image-based documents, or CSV/XLSX files using a Gradio-based GUI interface"

|

| 9 |

readme = "README.md"

|

| 10 |

requires-python = ">=3.10"

|

| 11 |

|

| 12 |

dependencies = [

|

| 13 |

-

"pdfminer.six==

|

| 14 |

"pdf2image==1.17.0",

|

| 15 |

-

"pymupdf==1.26.

|

| 16 |

-

"opencv-python==4.

|

| 17 |

-

"presidio_analyzer==2.2.

|

| 18 |

-

"presidio_anonymizer==2.2.

|

| 19 |

-

"presidio-image-redactor==0.0.

|

| 20 |

-

"pikepdf==9.

|

| 21 |

-

"pandas==2.3.

|

| 22 |

-

"scikit-learn==1.

|

| 23 |

"spacy==3.8.7",

|

| 24 |

# Direct URL dependency for spacy model

|

| 25 |

"en_core_web_lg @ https://github.com/explosion/spacy-models/releases/download/en_core_web_lg-3.8.0/en_core_web_lg-3.8.0.tar.gz",

|

| 26 |

-

"gradio==5.

|

| 27 |

-

"boto3==1.

|

| 28 |

-

"pyarrow==

|

| 29 |

"openpyxl==3.1.5",

|

| 30 |

-

"Faker==

|

| 31 |

-

"python-levenshtein==0.

|

| 32 |

"spaczz==0.6.1",

|

| 33 |

# Direct URL dependency for gradio_image_annotator wheel

|

| 34 |

"gradio_image_annotation @ https://github.com/seanpedrick-case/gradio_image_annotator/releases/download/v0.3.3/gradio_image_annotation-0.3.3-py3-none-any.whl",

|

| 35 |

-

"rapidfuzz==3.

|

| 36 |

"python-dotenv==1.0.1",

|

| 37 |

-

"

|

| 38 |

-

"

|

|

|

|

|

|

|

| 39 |

]

|

| 40 |

|

| 41 |

[project.urls]

|

|

|

|

| 4 |

|

| 5 |

[project]

|

| 6 |

name = "doc_redaction"

|

| 7 |

+

version = "1.0.0"

|

| 8 |

description = "Redact PDF/image-based documents, or CSV/XLSX files using a Gradio-based GUI interface"

|

| 9 |

readme = "README.md"

|

| 10 |

requires-python = ">=3.10"

|

| 11 |

|

| 12 |

dependencies = [

|

| 13 |

+

"pdfminer.six==20250506",

|

| 14 |

"pdf2image==1.17.0",

|

| 15 |

+

"pymupdf==1.26.3",

|

| 16 |

+

"opencv-python==4.12.0.88",

|

| 17 |

+

"presidio_analyzer==2.2.359",

|

| 18 |

+

"presidio_anonymizer==2.2.359",

|

| 19 |

+

"presidio-image-redactor==0.0.57",

|

| 20 |

+

"pikepdf==9.10.2",

|

| 21 |

+

"pandas==2.3.1",

|

| 22 |

+

"scikit-learn==1.7.1",

|

| 23 |

"spacy==3.8.7",

|

| 24 |

# Direct URL dependency for spacy model

|

| 25 |

"en_core_web_lg @ https://github.com/explosion/spacy-models/releases/download/en_core_web_lg-3.8.0/en_core_web_lg-3.8.0.tar.gz",

|

| 26 |

+

"gradio==5.43.1",

|

| 27 |

+

"boto3==1.40.10",

|

| 28 |

+

"pyarrow==21.0.0",

|

| 29 |

"openpyxl==3.1.5",

|

| 30 |

+

"Faker==37.5.3",

|

| 31 |

+

"python-levenshtein==0.27.1",

|

| 32 |

"spaczz==0.6.1",

|

| 33 |

# Direct URL dependency for gradio_image_annotator wheel

|

| 34 |

"gradio_image_annotation @ https://github.com/seanpedrick-case/gradio_image_annotator/releases/download/v0.3.3/gradio_image_annotation-0.3.3-py3-none-any.whl",

|

| 35 |

+

"rapidfuzz==3.13.0",

|

| 36 |

"python-dotenv==1.0.1",

|

| 37 |

+

"awslambdaric==3.1.1",

|

| 38 |

+

"python-docx==1.2.0",

|

| 39 |

+

"paddlepaddle==3.1.0",

|

| 40 |

+

"paddleocr==3.1.1"

|

| 41 |

]

|

| 42 |

|

| 43 |

[project.urls]

|

requirements.txt

CHANGED

|

@@ -1,28 +1,30 @@

|

|

| 1 |

-

pdfminer.six==

|

| 2 |

pdf2image==1.17.0

|

| 3 |

-

pymupdf==1.26.

|

| 4 |

-

opencv-python==4.

|

| 5 |

-

presidio_analyzer==2.2.

|

| 6 |

-

presidio_anonymizer==2.2.

|

| 7 |

-

presidio-image-redactor==0.0.

|

| 8 |

-

pikepdf==9.

|

| 9 |

-

pandas==2.3.

|

| 10 |

-

scikit-learn==1.

|

| 11 |

spacy==3.8.7

|

| 12 |

en_core_web_lg @ https://github.com/explosion/spacy-models/releases/download/en_core_web_lg-3.8.0/en_core_web_lg-3.8.0.tar.gz

|

| 13 |

-

gradio==5.

|

| 14 |

-

boto3==1.

|

| 15 |

-

pyarrow==

|

| 16 |

openpyxl==3.1.5

|

| 17 |

-

Faker==

|

| 18 |

-

python-levenshtein==0.

|

| 19 |

spaczz==0.6.1

|

| 20 |

# The following version

|

| 21 |

https://github.com/seanpedrick-case/gradio_image_annotator/releases/download/v0.3.3/gradio_image_annotation-0.3.3-py3-none-any.whl # This version includes rotation, image zoom, and default labels, as well as the option to include id for annotation boxes

|

| 22 |

-

rapidfuzz==3.

|

| 23 |

python-dotenv==1.0.1

|

| 24 |

-

|

| 25 |

-

|

|

|

|

|

|

|

| 26 |

|

| 27 |

|

| 28 |

|

|

|

|

| 1 |

+

pdfminer.six==20250506

|

| 2 |

pdf2image==1.17.0

|

| 3 |

+

pymupdf==1.26.3

|

| 4 |

+

opencv-python==4.12.0.88

|

| 5 |

+

presidio_analyzer==2.2.359

|

| 6 |

+

presidio_anonymizer==2.2.359

|

| 7 |

+

presidio-image-redactor==0.0.57

|

| 8 |

+

pikepdf==9.10.2

|

| 9 |

+

pandas==2.3.1

|

| 10 |

+

scikit-learn==1.7.1

|

| 11 |

spacy==3.8.7

|

| 12 |

en_core_web_lg @ https://github.com/explosion/spacy-models/releases/download/en_core_web_lg-3.8.0/en_core_web_lg-3.8.0.tar.gz

|

| 13 |

+

gradio==5.43.1

|

| 14 |

+

boto3==1.40.10

|

| 15 |

+

pyarrow==21.0.0

|

| 16 |

openpyxl==3.1.5

|

| 17 |

+

Faker==37.5.3

|

| 18 |

+

python-levenshtein==0.27.1

|

| 19 |

spaczz==0.6.1

|

| 20 |

# The following version

|

| 21 |

https://github.com/seanpedrick-case/gradio_image_annotator/releases/download/v0.3.3/gradio_image_annotation-0.3.3-py3-none-any.whl # This version includes rotation, image zoom, and default labels, as well as the option to include id for annotation boxes

|

| 22 |

+

rapidfuzz==3.13.0

|

| 23 |

python-dotenv==1.0.1

|

| 24 |

+

awslambdaric==3.1.1

|

| 25 |

+

python-docx==1.2.0

|

| 26 |

+

paddlepaddle==3.1.0 # Consider installing the GPU version for faster local OCR inference with PaddleOCR: paddlepaddle-gpu==3.1.0 -i https://www.paddlepaddle.org.cn/packages/stable/cu126/ , compatible with CUDA 12.6. See this for more details: https://www.paddlepaddle.org.cn/documentation/docs/en/install/pip/linux-pip_en.html#span-id-gpu-gpu-version-of-paddlepaddle-span

|

| 27 |

+

paddleocr==3.1.1

|

| 28 |

|

| 29 |

|

| 30 |

|

src/app_settings.qmd

CHANGED

|

@@ -115,6 +115,16 @@ Configuration for input and output file handling.

|

|

| 115 |

* **Default Value:** `'input/'`

|

| 116 |

* **Configuration:** Set as an environment variable directly, or include in `config/app_config.env`.

|

| 117 |

|

| 118 |

## Logging Options

|

| 119 |

|

| 120 |

Settings for configuring application logging, including log formats and storage locations.

|

|

@@ -161,7 +171,7 @@ Settings for configuring application logging, including log formats and storage

|

|

| 161 |

|

| 162 |

* **`CSV_USAGE_LOG_HEADERS`**

|

| 163 |

* **Description:** Defines custom headers for CSV usage logs.

|

| 164 |

-

* **Default Value:** A predefined list of header names. Refer to `

|

| 165 |

* **Configuration:** Set as an environment variable directly, or include in `config/app_config.env`.

|

| 166 |

|

| 167 |

* **`SAVE_LOGS_TO_DYNAMODB`**

|

|

@@ -214,12 +224,17 @@ Settings for configuring application logging, including log formats and storage

|

|

| 214 |

Configurations related to the text redaction process, including PII detection models and external tool paths.

|

| 215 |

|

| 216 |

* **`TESSERACT_FOLDER`**

|

| 217 |

-

* **Description:** Path to the local Tesseract OCR installation folder. Only required if Tesseract is not in

|

| 218 |

* **Default Value:** `""` (empty string)

|

| 219 |

* **Configuration:** Set as an environment variable directly, or include in `config/app_config.env`.

|

| 220 |

|

| 221 |

* **`POPPLER_FOLDER`**

|

| 222 |

-

* **Description:** Path to the local Poppler installation's `bin` folder.

|

| 223 |

* **Default Value:** `""` (empty string)

|

| 224 |

* **Configuration:** Set as an environment variable directly, or include in `config/app_config.env`.

|

| 225 |

|

|

@@ -283,24 +298,34 @@ Configurations related to the text redaction process, including PII detection mo

|

|

| 283 |

* **Default Value:** Value of `AWS_PII_OPTION` if `SHOW_AWS_PII_DETECTION_OPTIONS` is True, else value of `LOCAL_PII_OPTION`.

|

| 284 |

* **Configuration:** Set as an environment variable directly, or include in `config/app_config.env`. Provide one of the PII detection option display names.

|

| 285 |

|

| 286 |

* **`CHOSEN_COMPREHEND_ENTITIES`**

|

| 287 |

* **Description:** A list of AWS Comprehend PII entity types to be redacted when using AWS Comprehend.

|

| 288 |

-

* **Default Value:** A predefined list of entity types. Refer to `

|

| 289 |

* **Configuration:** Set as an environment variable directly, or include in `config/app_config.env`. This should be a string representation of a Python list.

|

| 290 |

|

| 291 |

* **`FULL_COMPREHEND_ENTITY_LIST`**

|

| 292 |

* **Description:** The complete list of PII entity types supported by AWS Comprehend that can be selected for redaction.

|

| 293 |

-

* **Default Value:** A predefined list of entity types. Refer to `

|

| 294 |

* **Configuration:** This is typically an informational variable reflecting the capabilities of AWS Comprehend and is not meant to be changed by users directly affecting redaction behavior (use `CHOSEN_COMPREHEND_ENTITIES` for that). Set as an environment variable directly, or include in `config/app_config.env`.

|

| 295 |

|

| 296 |

* **`CHOSEN_REDACT_ENTITIES`**

|

| 297 |

* **Description:** A list of local PII entity types to be redacted when using the local PII detection model.

|

| 298 |

-

* **Default Value:** A predefined list of entity types. Refer to `

|

| 299 |

* **Configuration:** Set as an environment variable directly, or include in `config/app_config.env`. This should be a string representation of a Python list.

|

| 300 |

|

| 301 |

* **`FULL_ENTITY_LIST`**

|

| 302 |

* **Description:** The complete list of PII entity types supported by the local PII detection model that can be selected for redaction.

|

| 303 |

-

* **Default Value:** A predefined list of entity types. Refer to `

|

| 304 |

* **Configuration:** This is typically an informational variable reflecting the capabilities of the local model and is not meant to be changed by users directly affecting redaction behavior (use `CHOSEN_REDACT_ENTITIES` for that). Set as an environment variable directly, or include in `config/app_config.env`.

|

| 305 |

|

| 306 |

* **`PAGE_BREAK_VALUE`**

|

|

@@ -309,20 +334,15 @@ Configurations related to the text redaction process, including PII detection mo

|

|

| 309 |

* **Configuration:** Set as an environment variable directly, or include in `config/app_config.env`.

|

| 310 |

|

| 311 |

* **`MAX_TIME_VALUE`**

|

| 312 |

-

* **Description:** Specifies

|

| 313 |

* **Default Value:** `'999999'`

|

| 314 |

* **Configuration:** Set as an environment variable directly, or include in `config/app_config.env`.

|

| 315 |

|

| 316 |

* **`CUSTOM_BOX_COLOUR`**

|

| 317 |

-