Merge pull request #49 from seanpedrick-case/dev_new

Browse filesRevamped duplicate page/subdocument removal, CDK code, updated documentation, read-only file system compatability.

- .dockerignore +14 -6

- .gitignore +11 -2

- Dockerfile +75 -40

- README.md +42 -10

- _quarto.yml +28 -0

- app.py +198 -79

- cdk/__init__.py +0 -0

- cdk/app.py +81 -0

- cdk/cdk_config.py +225 -0

- cdk/cdk_functions.py +1293 -0

- cdk/cdk_stack.py +1317 -0

- cdk/check_resources.py +297 -0

- cdk/post_cdk_build_quickstart.py +27 -0

- cdk/requirements.txt +5 -0

- index.qmd +23 -0

- pyproject.toml +4 -4

- requirements.txt +2 -2

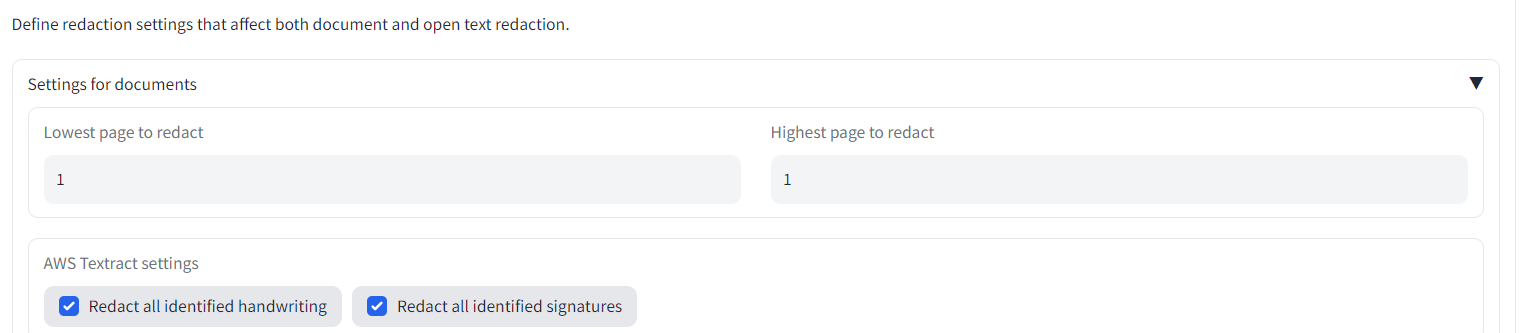

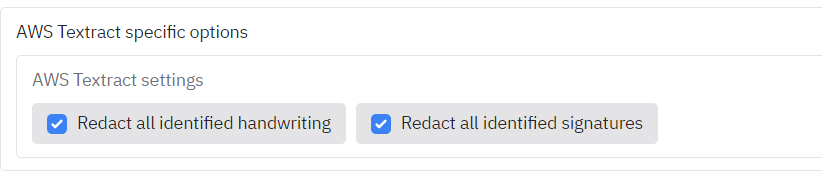

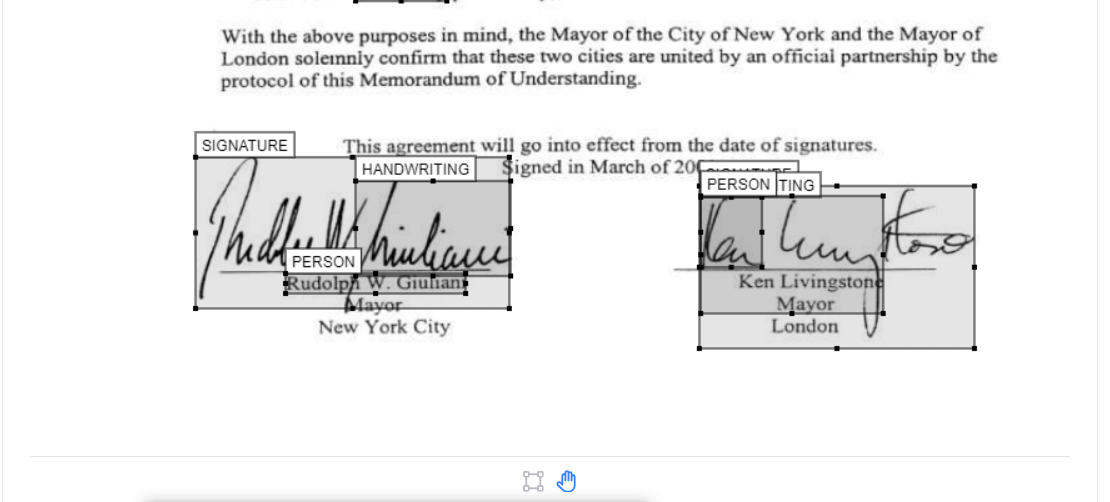

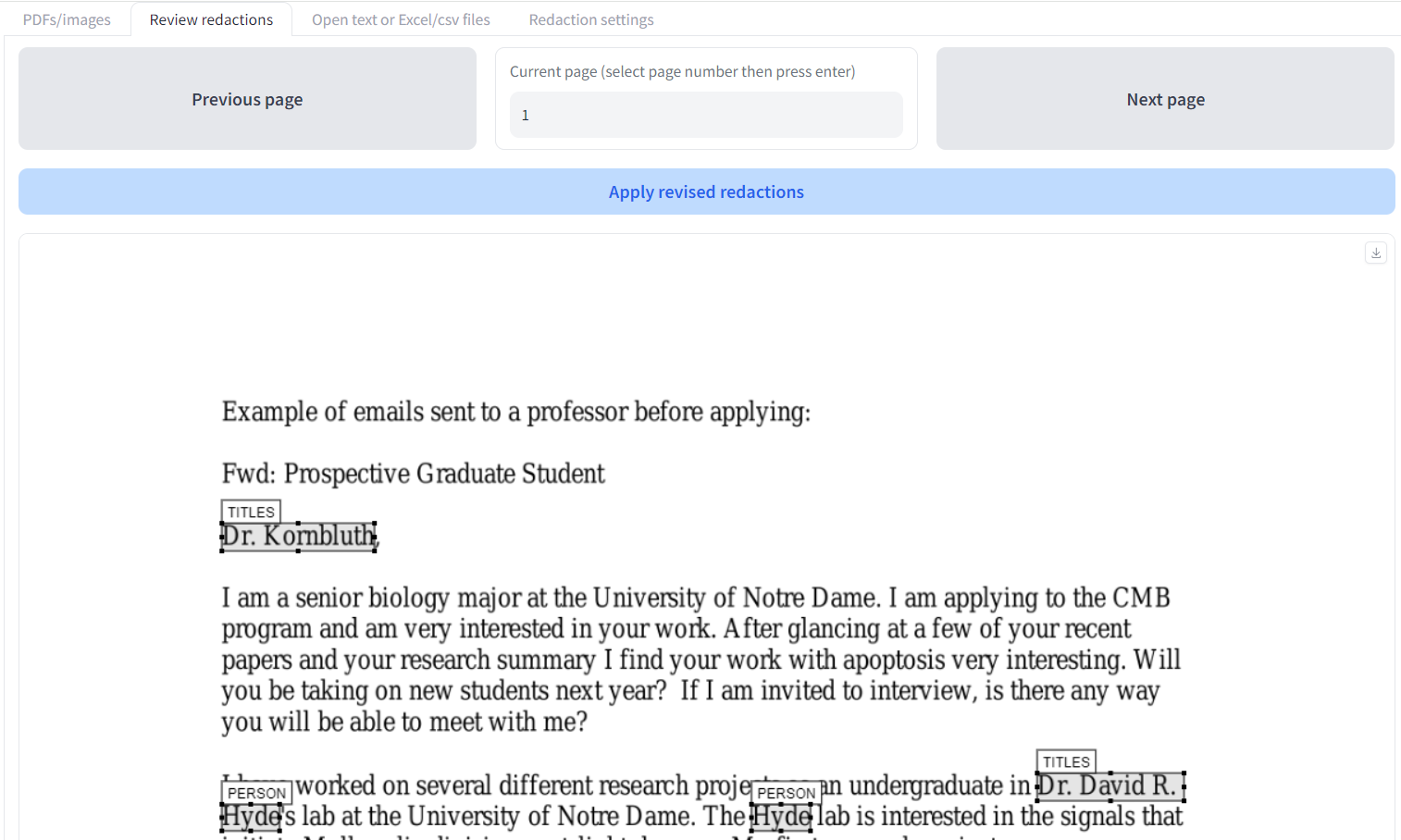

- src/app_settings.qmd +481 -0

- src/faq.qmd +222 -0

- src/installation_guide.qmd +233 -0

- src/management_guide.qmd +226 -0

- src/styles.css +1 -0

- src/user_guide.qmd +543 -0

- tld/.tld_set_snapshot +0 -0

- tools/aws_functions.py +1 -0

- tools/config.py +12 -8

- tools/file_conversion.py +20 -10

- tools/file_redaction.py +42 -22

- tools/find_duplicate_pages.py +470 -125

- tools/helper_functions.py +8 -0

- tools/redaction_review.py +15 -4

.dockerignore

CHANGED

|

@@ -4,10 +4,9 @@

|

|

| 4 |

*.jpg

|

| 5 |

*.png

|

| 6 |

*.ipynb

|

|

|

|

| 7 |

examples/*

|

| 8 |

processing/*

|

| 9 |

-

input/*

|

| 10 |

-

output/*

|

| 11 |

tools/__pycache__/*

|

| 12 |

old_code/*

|

| 13 |

tesseract/*

|

|

@@ -15,9 +14,18 @@ poppler/*

|

|

| 15 |

build/*

|

| 16 |

dist/*

|

| 17 |

build_deps/*

|

| 18 |

-

logs/*

|

| 19 |

-

config/*

|

| 20 |

user_guide/*

|

| 21 |

-

cdk/*

|

| 22 |

cdk/config/*

|

| 23 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 4 |

*.jpg

|

| 5 |

*.png

|

| 6 |

*.ipynb

|

| 7 |

+

*.pyc

|

| 8 |

examples/*

|

| 9 |

processing/*

|

|

|

|

|

|

|

| 10 |

tools/__pycache__/*

|

| 11 |

old_code/*

|

| 12 |

tesseract/*

|

|

|

|

| 14 |

build/*

|

| 15 |

dist/*

|

| 16 |

build_deps/*

|

|

|

|

|

|

|

| 17 |

user_guide/*

|

|

|

|

| 18 |

cdk/config/*

|

| 19 |

+

tld/*

|

| 20 |

+

cdk/config/*

|

| 21 |

+

cdk/cdk.out/*

|

| 22 |

+

cdk/archive/*

|

| 23 |

+

cdk.json

|

| 24 |

+

cdk.context.json

|

| 25 |

+

.quarto/*

|

| 26 |

+

logs/

|

| 27 |

+

output/

|

| 28 |

+

input/

|

| 29 |

+

feedback/

|

| 30 |

+

config/

|

| 31 |

+

usage/

|

.gitignore

CHANGED

|

@@ -4,6 +4,7 @@

|

|

| 4 |

*.jpg

|

| 5 |

*.png

|

| 6 |

*.ipynb

|

|

|

|

| 7 |

examples/*

|

| 8 |

processing/*

|

| 9 |

input/*

|

|

@@ -19,6 +20,14 @@ logs/*

|

|

| 19 |

config/*

|

| 20 |

doc_redaction_amplify_app/*

|

| 21 |

user_guide/*

|

| 22 |

-

cdk/*

|

| 23 |

cdk/config/*

|

| 24 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 4 |

*.jpg

|

| 5 |

*.png

|

| 6 |

*.ipynb

|

| 7 |

+

*.pyc

|

| 8 |

examples/*

|

| 9 |

processing/*

|

| 10 |

input/*

|

|

|

|

| 20 |

config/*

|

| 21 |

doc_redaction_amplify_app/*

|

| 22 |

user_guide/*

|

|

|

|

| 23 |

cdk/config/*

|

| 24 |

+

cdk/cdk.out/*

|

| 25 |

+

cdk/archive/*

|

| 26 |

+

tld/*

|

| 27 |

+

tmp/*

|

| 28 |

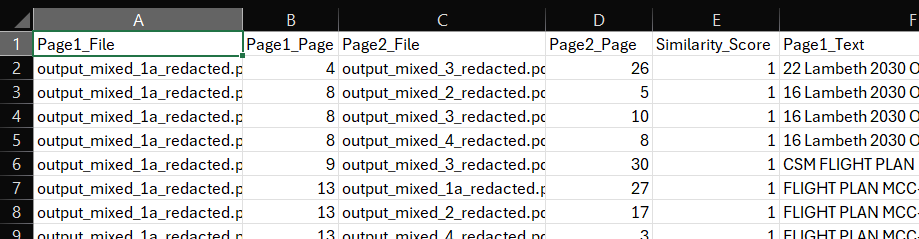

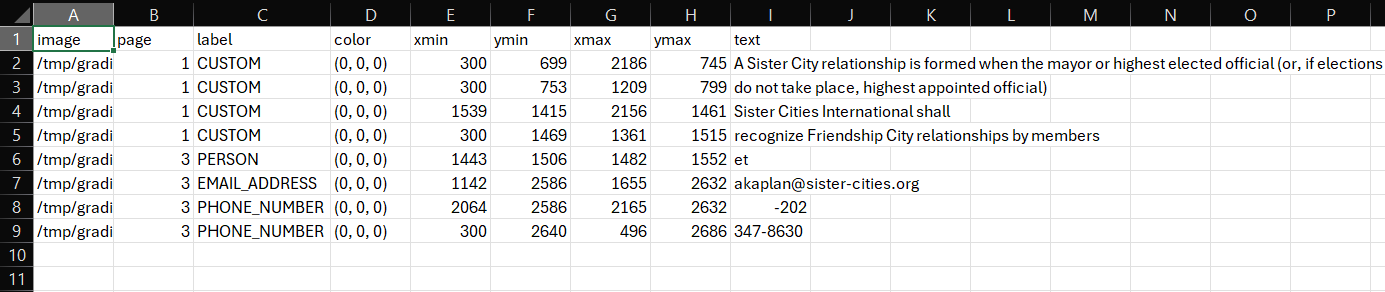

+

cdk.out/*

|

| 29 |

+

cdk.json

|

| 30 |

+

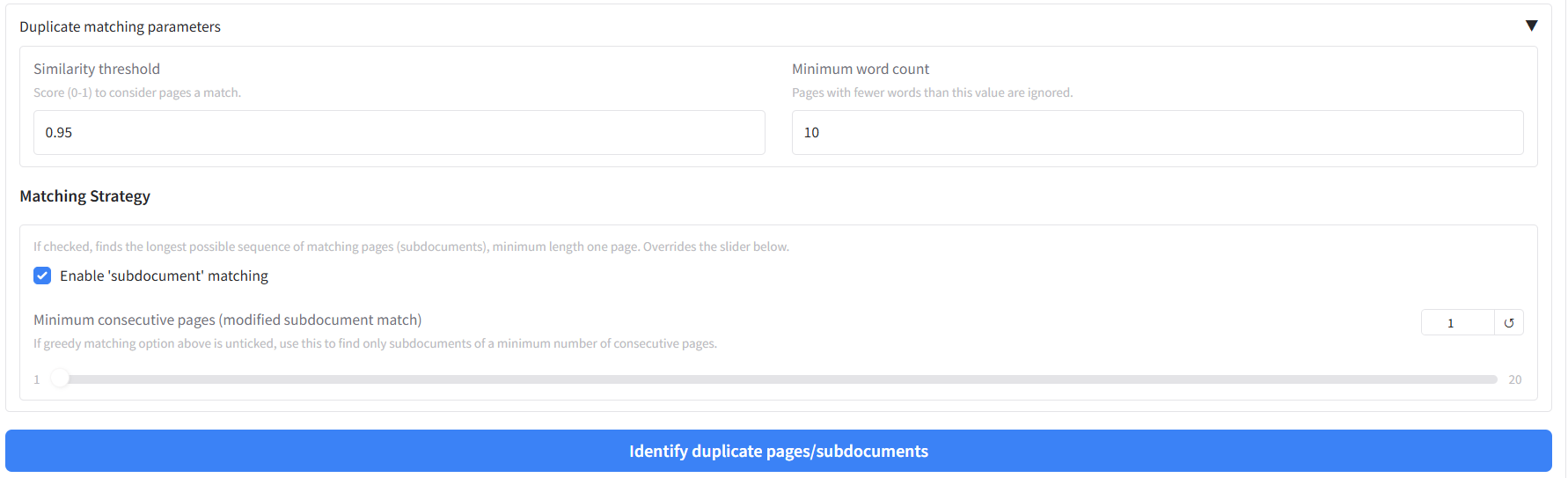

cdk.context.json

|

| 31 |

+

.quarto/*

|

| 32 |

+

/.quarto/

|

| 33 |

+

/_site/

|

Dockerfile

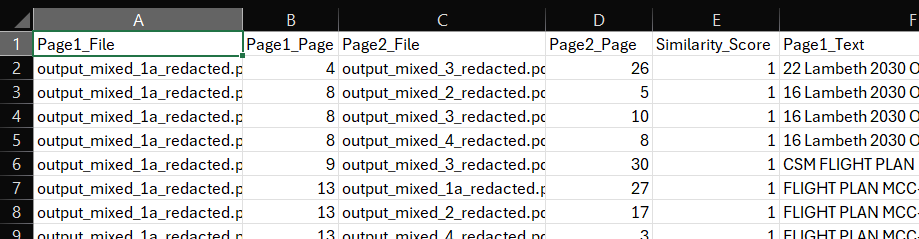

CHANGED

|

@@ -1,14 +1,14 @@

|

|

| 1 |

# Stage 1: Build dependencies and download models

|

| 2 |

FROM public.ecr.aws/docker/library/python:3.11.11-slim-bookworm AS builder

|

| 3 |

|

| 4 |

-

# Install system dependencies

|

| 5 |

RUN apt-get update \

|

| 6 |

&& apt-get install -y \

|

| 7 |

g++ \

|

| 8 |

make \

|

| 9 |

cmake \

|

| 10 |

unzip \

|

| 11 |

-

libcurl4-openssl-dev \

|

| 12 |

git \

|

| 13 |

&& apt-get clean \

|

| 14 |

&& rm -rf /var/lib/apt/lists/*

|

|

@@ -17,28 +17,20 @@ WORKDIR /src

|

|

| 17 |

|

| 18 |

COPY requirements.txt .

|

| 19 |

|

| 20 |

-

RUN pip install --no-cache-dir --target=/install -r requirements.txt

|

| 21 |

-

|

| 22 |

-

RUN rm requirements.txt

|

| 23 |

|

| 24 |

-

# Add

|

| 25 |

COPY lambda_entrypoint.py .

|

| 26 |

-

|

| 27 |

COPY entrypoint.sh .

|

| 28 |

|

| 29 |

# Stage 2: Final runtime image

|

| 30 |

FROM public.ecr.aws/docker/library/python:3.11.11-slim-bookworm

|

| 31 |

|

| 32 |

-

#

|

| 33 |

ARG APP_MODE=gradio

|

| 34 |

-

|

| 35 |

-

# Echo the APP_MODE during the build to confirm its value

|

| 36 |

-

RUN echo "APP_MODE is set to: ${APP_MODE}"

|

| 37 |

-

|

| 38 |

-

# Set APP_MODE as an environment variable for runtime

|

| 39 |

ENV APP_MODE=${APP_MODE}

|

| 40 |

|

| 41 |

-

# Install

|

| 42 |

RUN apt-get update \

|

| 43 |

&& apt-get install -y \

|

| 44 |

tesseract-ocr \

|

|

@@ -48,30 +40,85 @@ RUN apt-get update \

|

|

| 48 |

&& apt-get clean \

|

| 49 |

&& rm -rf /var/lib/apt/lists/*

|

| 50 |

|

| 51 |

-

#

|

| 52 |

RUN useradd -m -u 1000 user

|

|

|

|

| 53 |

|

| 54 |

-

#

|

| 55 |

-

|

| 56 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 57 |

|

| 58 |

# Copy installed packages from builder stage

|

| 59 |

COPY --from=builder /install /usr/local/lib/python3.11/site-packages/

|

| 60 |

|

| 61 |

-

#

|

| 62 |

-

|

| 63 |

|

| 64 |

-

#

|

| 65 |

COPY entrypoint.sh /entrypoint.sh

|

| 66 |

-

|

| 67 |

RUN chmod +x /entrypoint.sh

|

| 68 |

|

| 69 |

-

# Switch to

|

| 70 |

USER user

|

| 71 |

|

| 72 |

-

|

|

|

|

| 73 |

|

| 74 |

-

#

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 75 |

ENV PATH=$APP_HOME/.local/bin:$PATH \

|

| 76 |

PYTHONPATH=$APP_HOME/app \

|

| 77 |

PYTHONUNBUFFERED=1 \

|

|

@@ -80,20 +127,8 @@ ENV PATH=$APP_HOME/.local/bin:$PATH \

|

|

| 80 |

GRADIO_NUM_PORTS=1 \

|

| 81 |

GRADIO_SERVER_NAME=0.0.0.0 \

|

| 82 |

GRADIO_SERVER_PORT=7860 \

|

| 83 |

-

GRADIO_ANALYTICS_ENABLED=False

|

| 84 |

-

TLDEXTRACT_CACHE=$APP_HOME/app/tld/.tld_set_snapshot \

|

| 85 |

-

SYSTEM=spaces

|

| 86 |

-

|

| 87 |

-

# Set the working directory to the user's home directory

|

| 88 |

-

WORKDIR $APP_HOME/app

|

| 89 |

-

|

| 90 |

-

# Copy the app code to the container

|

| 91 |

-

COPY --chown=user . $APP_HOME/app

|

| 92 |

-

|

| 93 |

-

# Ensure permissions are really user:user again after copying

|

| 94 |

-

RUN chown -R user:user $APP_HOME/app && chmod -R u+rwX $APP_HOME/app

|

| 95 |

|

| 96 |

-

ENTRYPOINT [

|

| 97 |

|

| 98 |

-

|

| 99 |

-

CMD [ "lambda_entrypoint.lambda_handler" ]

|

|

|

|

| 1 |

# Stage 1: Build dependencies and download models

|

| 2 |

FROM public.ecr.aws/docker/library/python:3.11.11-slim-bookworm AS builder

|

| 3 |

|

| 4 |

+

# Install system dependencies

|

| 5 |

RUN apt-get update \

|

| 6 |

&& apt-get install -y \

|

| 7 |

g++ \

|

| 8 |

make \

|

| 9 |

cmake \

|

| 10 |

unzip \

|

| 11 |

+

libcurl4-openssl-dev \

|

| 12 |

git \

|

| 13 |

&& apt-get clean \

|

| 14 |

&& rm -rf /var/lib/apt/lists/*

|

|

|

|

| 17 |

|

| 18 |

COPY requirements.txt .

|

| 19 |

|

| 20 |

+

RUN pip install --no-cache-dir --target=/install -r requirements.txt && rm requirements.txt

|

|

|

|

|

|

|

| 21 |

|

| 22 |

+

# Add lambda entrypoint and script

|

| 23 |

COPY lambda_entrypoint.py .

|

|

|

|

| 24 |

COPY entrypoint.sh .

|

| 25 |

|

| 26 |

# Stage 2: Final runtime image

|

| 27 |

FROM public.ecr.aws/docker/library/python:3.11.11-slim-bookworm

|

| 28 |

|

| 29 |

+

# Set build-time and runtime environment variable

|

| 30 |

ARG APP_MODE=gradio

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 31 |

ENV APP_MODE=${APP_MODE}

|

| 32 |

|

| 33 |

+

# Install runtime dependencies

|

| 34 |

RUN apt-get update \

|

| 35 |

&& apt-get install -y \

|

| 36 |

tesseract-ocr \

|

|

|

|

| 40 |

&& apt-get clean \

|

| 41 |

&& rm -rf /var/lib/apt/lists/*

|

| 42 |

|

| 43 |

+

# Create non-root user

|

| 44 |

RUN useradd -m -u 1000 user

|

| 45 |

+

ENV APP_HOME=/home/user

|

| 46 |

|

| 47 |

+

# Set env variables for Gradio & other apps

|

| 48 |

+

ENV GRADIO_TEMP_DIR=/tmp/gradio_tmp/ \

|

| 49 |

+

TLDEXTRACT_CACHE=/tmp/tld/ \

|

| 50 |

+

MPLCONFIGDIR=/tmp/matplotlib_cache/ \

|

| 51 |

+

GRADIO_OUTPUT_FOLDER=$APP_HOME/app/output/ \

|

| 52 |

+

GRADIO_INPUT_FOLDER=$APP_HOME/app/input/ \

|

| 53 |

+

FEEDBACK_LOGS_FOLDER=$APP_HOME/app/feedback/ \

|

| 54 |

+

ACCESS_LOGS_FOLDER=$APP_HOME/app/logs/ \

|

| 55 |

+

USAGE_LOGS_FOLDER=$APP_HOME/app/usage/ \

|

| 56 |

+

CONFIG_FOLDER=$APP_HOME/app/config/ \

|

| 57 |

+

XDG_CACHE_HOME=/tmp/xdg_cache/user_1000

|

| 58 |

+

|

| 59 |

+

# Create the base application directory and set its ownership

|

| 60 |

+

RUN mkdir -p ${APP_HOME}/app && chown user:user ${APP_HOME}/app

|

| 61 |

+

|

| 62 |

+

# Create required sub-folders within the app directory and set their permissions

|

| 63 |

+

# This ensures these specific directories are owned by 'user'

|

| 64 |

+

RUN mkdir -p \

|

| 65 |

+

${APP_HOME}/app/output \

|

| 66 |

+

${APP_HOME}/app/input \

|

| 67 |

+

${APP_HOME}/app/logs \

|

| 68 |

+

${APP_HOME}/app/usage \

|

| 69 |

+

${APP_HOME}/app/feedback \

|

| 70 |

+

${APP_HOME}/app/config \

|

| 71 |

+

&& chown user:user \

|

| 72 |

+

${APP_HOME}/app/output \

|

| 73 |

+

${APP_HOME}/app/input \

|

| 74 |

+

${APP_HOME}/app/logs \

|

| 75 |

+

${APP_HOME}/app/usage \

|

| 76 |

+

${APP_HOME}/app/feedback \

|

| 77 |

+

${APP_HOME}/app/config \

|

| 78 |

+

&& chmod 755 \

|

| 79 |

+

${APP_HOME}/app/output \

|

| 80 |

+

${APP_HOME}/app/input \

|

| 81 |

+

${APP_HOME}/app/logs \

|

| 82 |

+

${APP_HOME}/app/usage \

|

| 83 |

+

${APP_HOME}/app/feedback \

|

| 84 |

+

${APP_HOME}/app/config

|

| 85 |

+

|

| 86 |

+

# Now handle the /tmp and /var/tmp directories and their subdirectories

|

| 87 |

+

RUN mkdir -p /tmp/gradio_tmp /tmp/tld /tmp/matplotlib_cache /tmp /var/tmp ${XDG_CACHE_HOME} \

|

| 88 |

+

&& chown user:user /tmp /var/tmp /tmp/gradio_tmp /tmp/tld /tmp/matplotlib_cache ${XDG_CACHE_HOME} \

|

| 89 |

+

&& chmod 1777 /tmp /var/tmp /tmp/gradio_tmp /tmp/tld /tmp/matplotlib_cache \

|

| 90 |

+

&& chmod 700 ${XDG_CACHE_HOME}

|

| 91 |

|

| 92 |

# Copy installed packages from builder stage

|

| 93 |

COPY --from=builder /install /usr/local/lib/python3.11/site-packages/

|

| 94 |

|

| 95 |

+

# Copy app code and entrypoint with correct ownership

|

| 96 |

+

COPY --chown=user . $APP_HOME/app

|

| 97 |

|

| 98 |

+

# Copy and chmod entrypoint

|

| 99 |

COPY entrypoint.sh /entrypoint.sh

|

|

|

|

| 100 |

RUN chmod +x /entrypoint.sh

|

| 101 |

|

| 102 |

+

# Switch to user

|

| 103 |

USER user

|

| 104 |

|

| 105 |

+

# Declare working directory

|

| 106 |

+

WORKDIR $APP_HOME/app

|

| 107 |

|

| 108 |

+

# Declare volumes (NOTE: runtime mounts will override permissions — handle with care)

|

| 109 |

+

VOLUME ["/tmp/matplotlib_cache"]

|

| 110 |

+

VOLUME ["/tmp/gradio_tmp"]

|

| 111 |

+

VOLUME ["/tmp/tld"]

|

| 112 |

+

VOLUME ["/home/user/app/output"]

|

| 113 |

+

VOLUME ["/home/user/app/input"]

|

| 114 |

+

VOLUME ["/home/user/app/logs"]

|

| 115 |

+

VOLUME ["/home/user/app/usage"]

|

| 116 |

+

VOLUME ["/home/user/app/feedback"]

|

| 117 |

+

VOLUME ["/home/user/app/config"]

|

| 118 |

+

VOLUME ["/tmp"]

|

| 119 |

+

VOLUME ["/var/tmp"]

|

| 120 |

+

|

| 121 |

+

# Set runtime environment

|

| 122 |

ENV PATH=$APP_HOME/.local/bin:$PATH \

|

| 123 |

PYTHONPATH=$APP_HOME/app \

|

| 124 |

PYTHONUNBUFFERED=1 \

|

|

|

|

| 127 |

GRADIO_NUM_PORTS=1 \

|

| 128 |

GRADIO_SERVER_NAME=0.0.0.0 \

|

| 129 |

GRADIO_SERVER_PORT=7860 \

|

| 130 |

+

GRADIO_ANALYTICS_ENABLED=False

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 131 |

|

| 132 |

+

ENTRYPOINT ["/entrypoint.sh"]

|

| 133 |

|

| 134 |

+

CMD ["lambda_entrypoint.lambda_handler"]

|

|

|

README.md

CHANGED

|

@@ -5,16 +5,16 @@ colorFrom: blue

|

|

| 5 |

colorTo: yellow

|

| 6 |

sdk: docker

|

| 7 |

app_file: app.py

|

| 8 |

-

pinned:

|

| 9 |

license: agpl-3.0

|

| 10 |

---

|

| 11 |

# Document redaction

|

| 12 |

|

| 13 |

-

version: 0.

|

| 14 |

|

| 15 |

Redact personally identifiable information (PII) from documents (pdf, images), open text, or tabular data (xlsx/csv/parquet). Please see the [User Guide](#user-guide) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 16 |

|

| 17 |

-

To identify text in documents, the 'local' text/OCR image analysis uses spacy/tesseract, and works

|

| 18 |

|

| 19 |

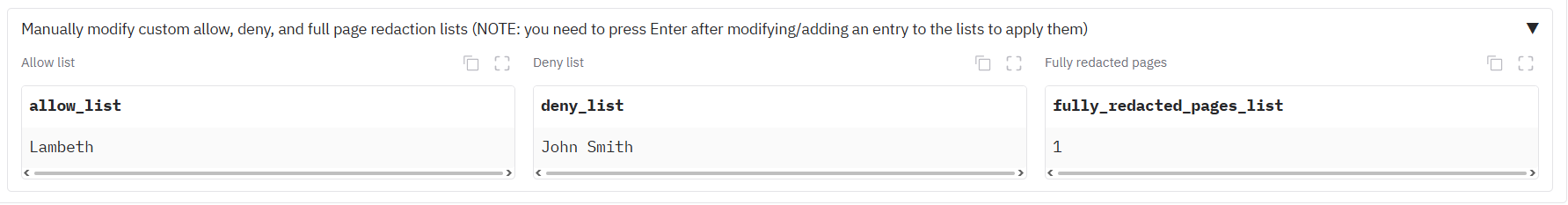

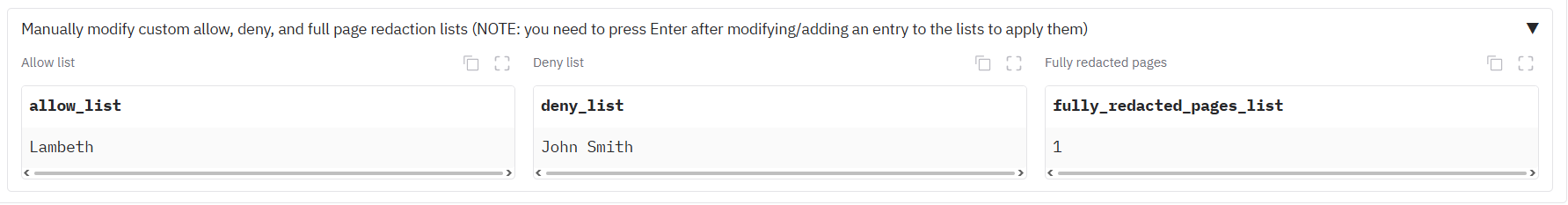

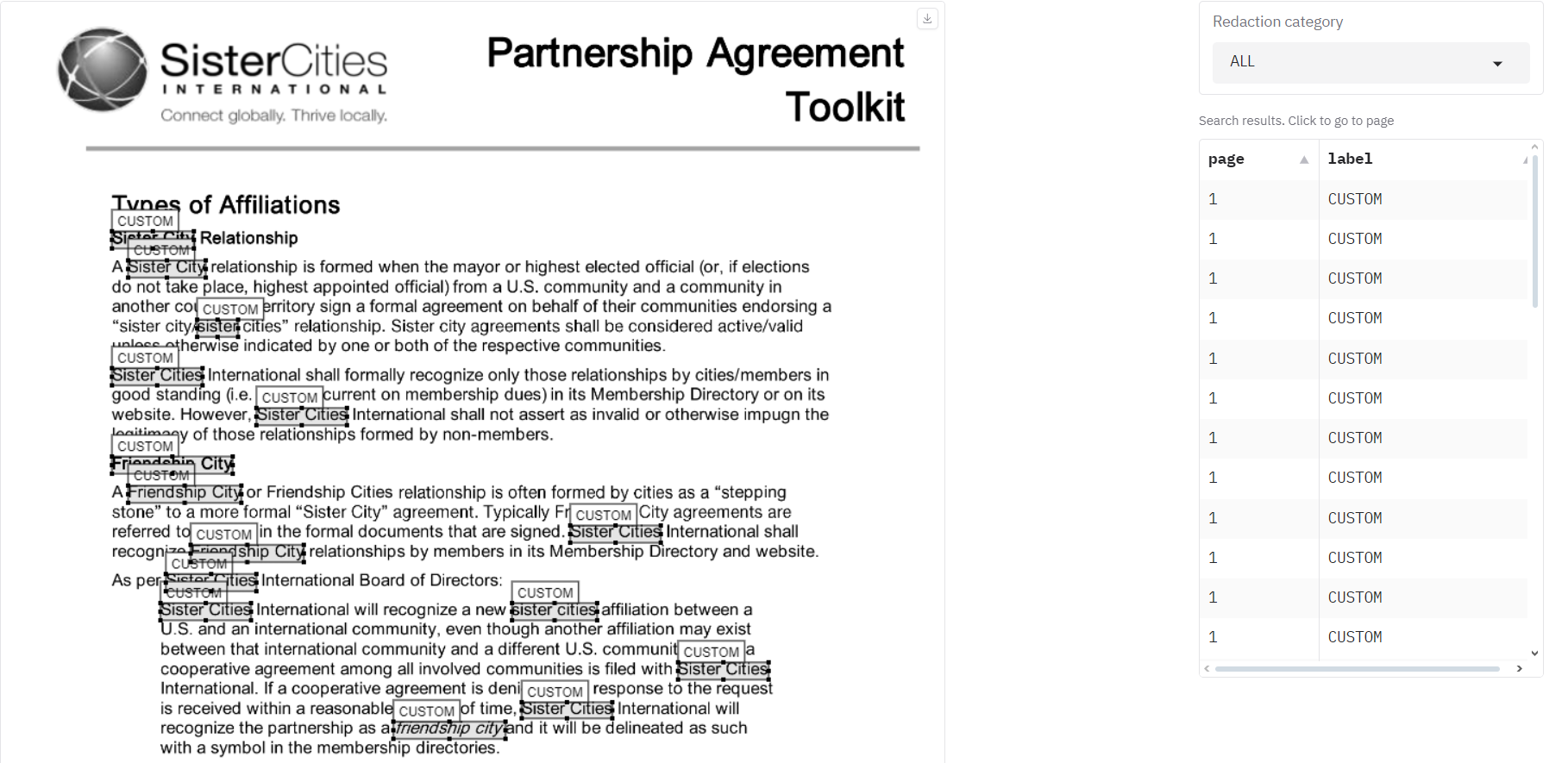

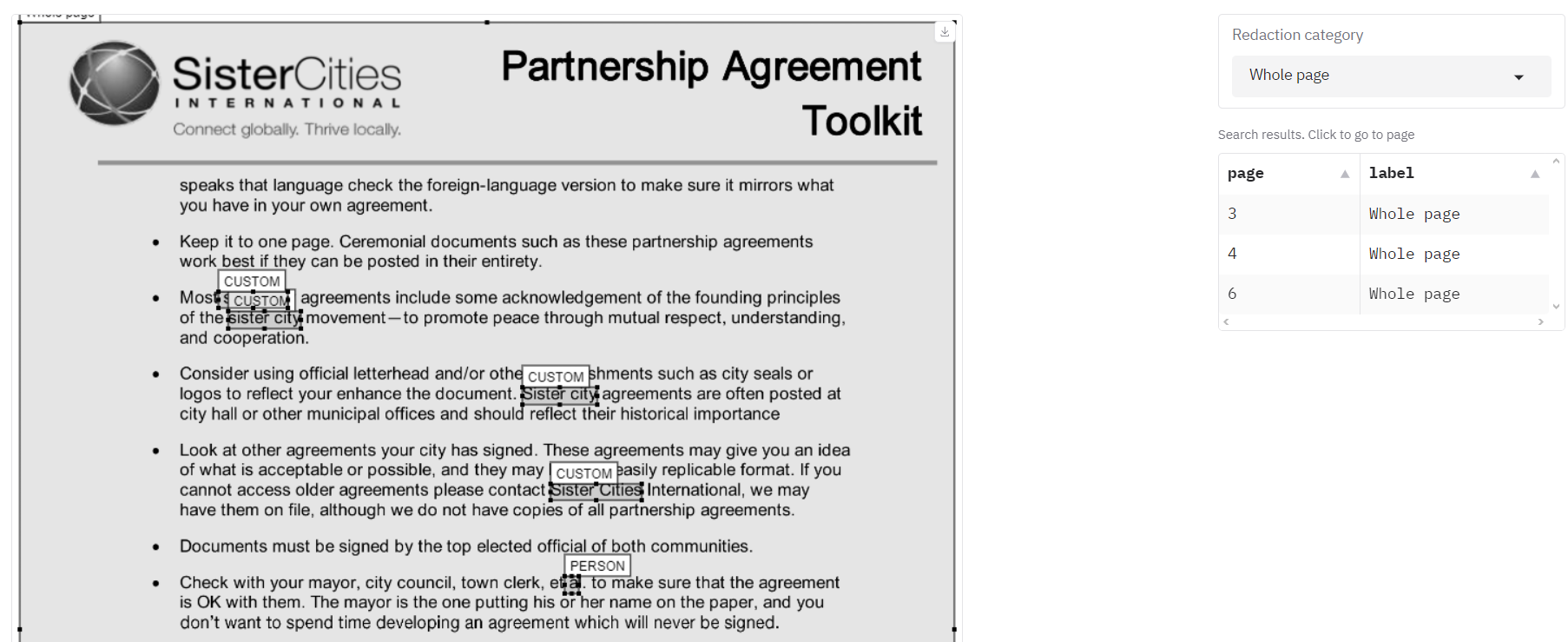

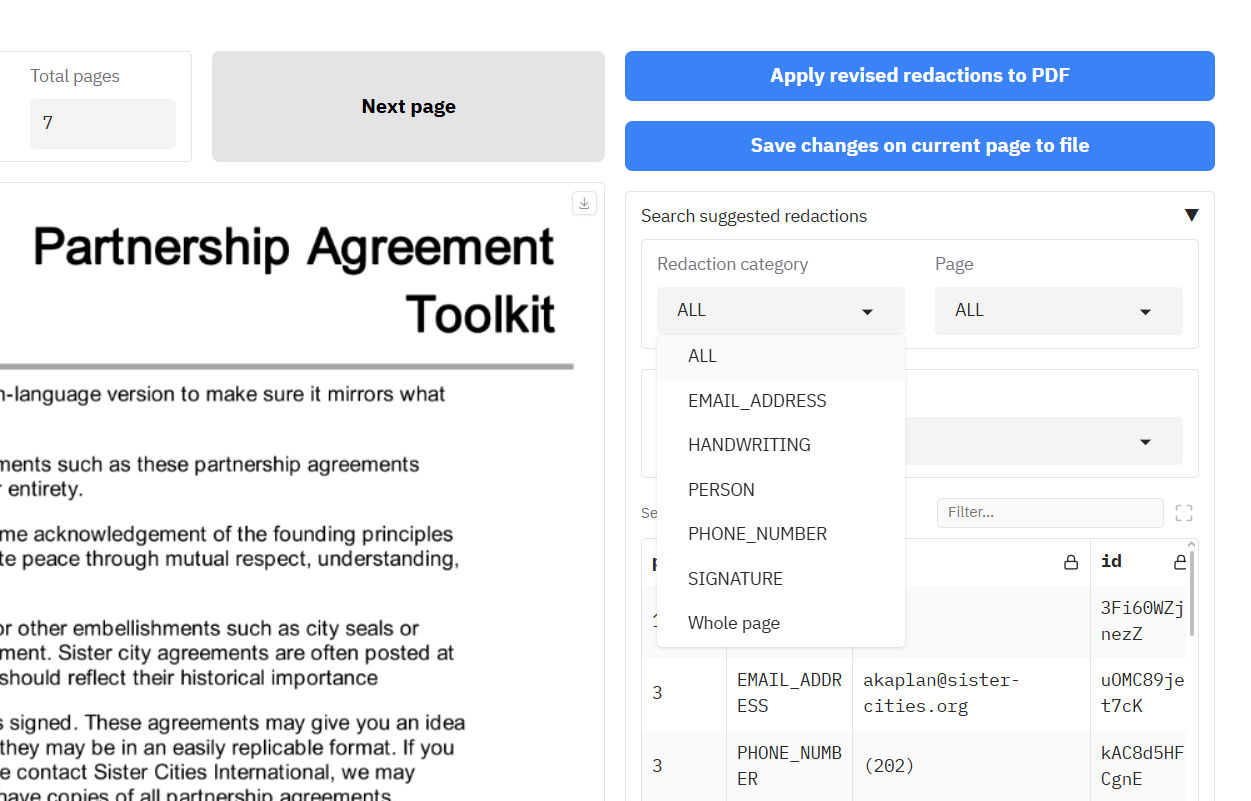

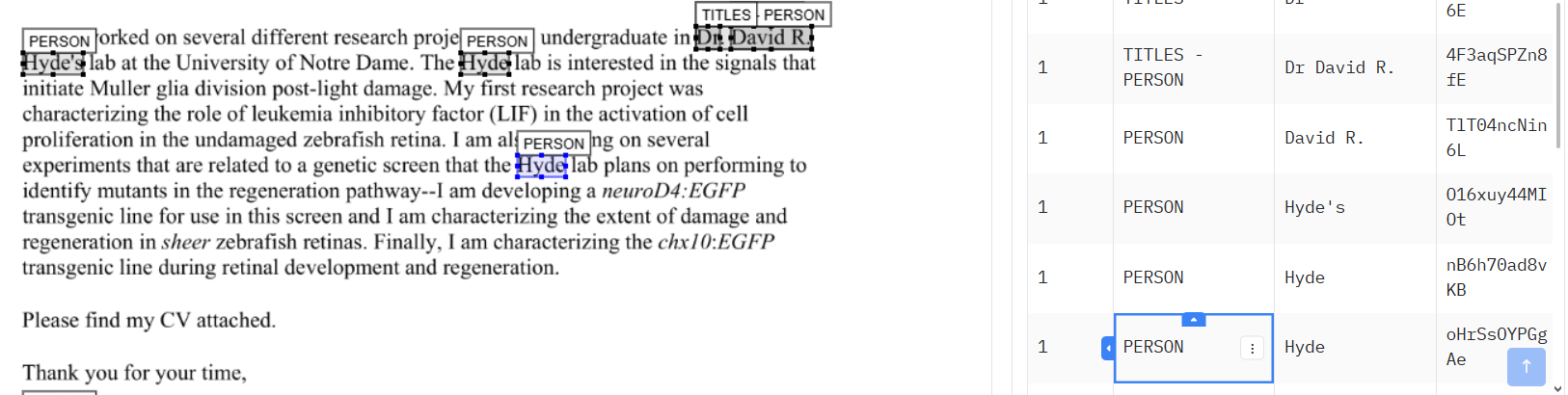

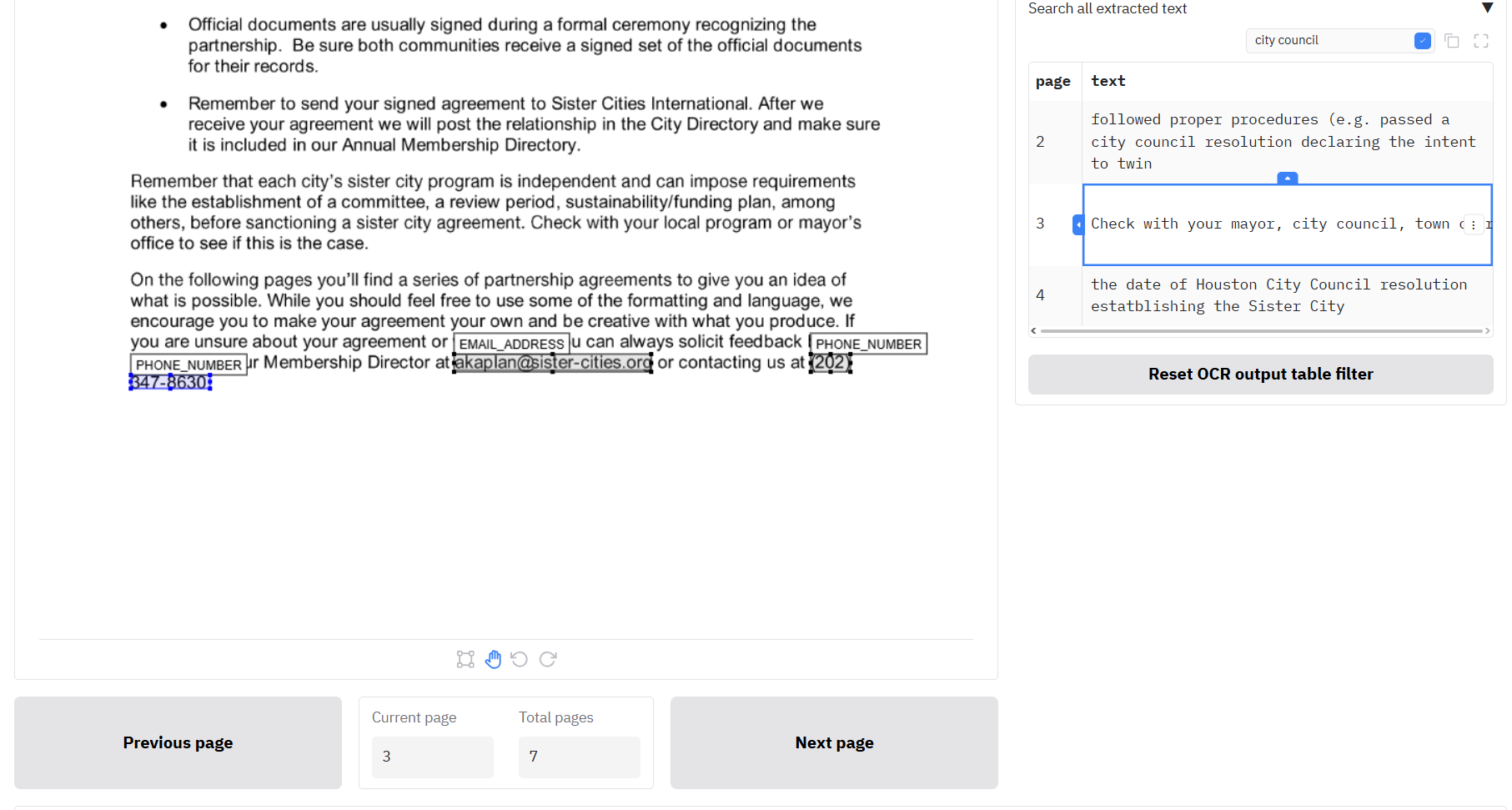

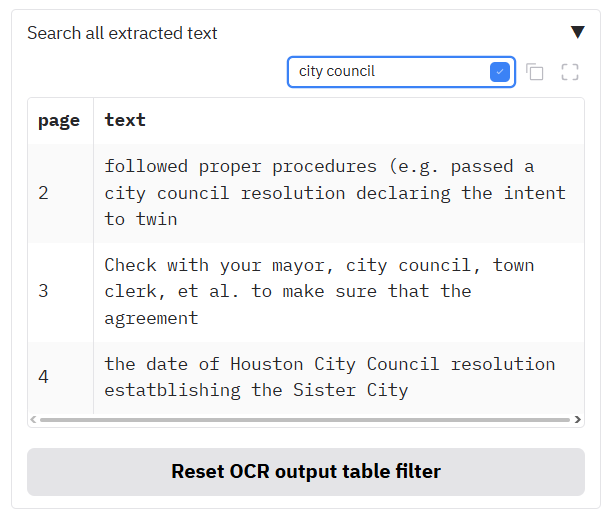

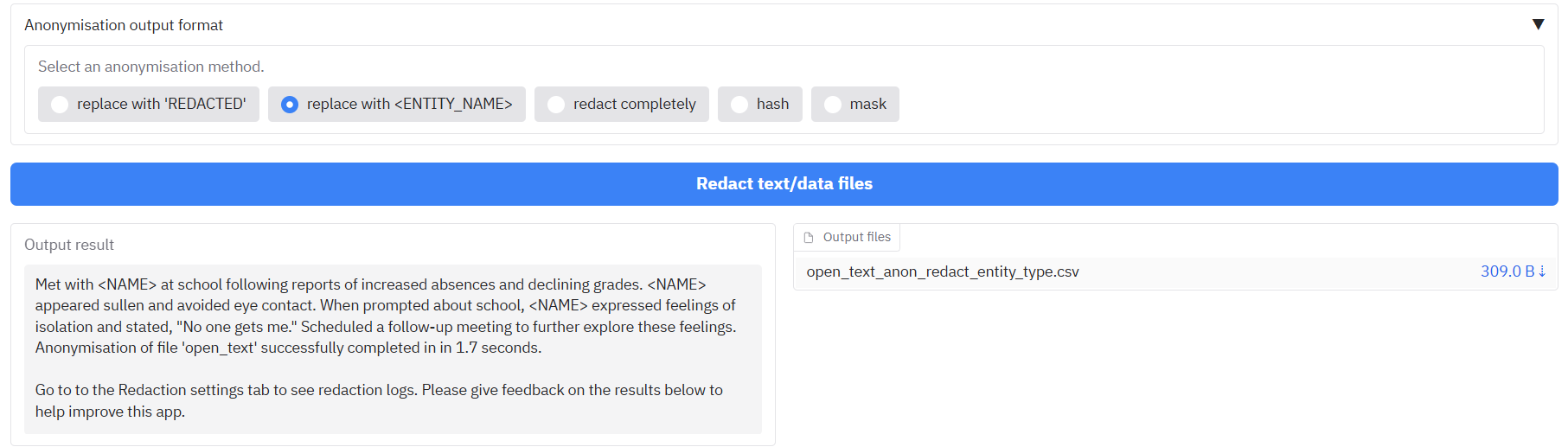

After redaction, review suggested redactions on the 'Review redactions' tab. The original pdf can be uploaded here alongside a '...redaction_file.csv' to continue a previous redaction/review task. See the 'Redaction settings' tab to choose which pages to redact, the type of information to redact (e.g. people, places), or custom terms to always include/ exclude from redaction.

|

| 20 |

|

|

@@ -181,6 +181,8 @@ If the table is empty, you can add a new entry, you can add a new row by clickin

|

|

| 181 |

|

| 182 |

|

| 183 |

|

|

|

|

|

|

|

| 184 |

### Redacting additional types of personal information

|

| 185 |

|

| 186 |

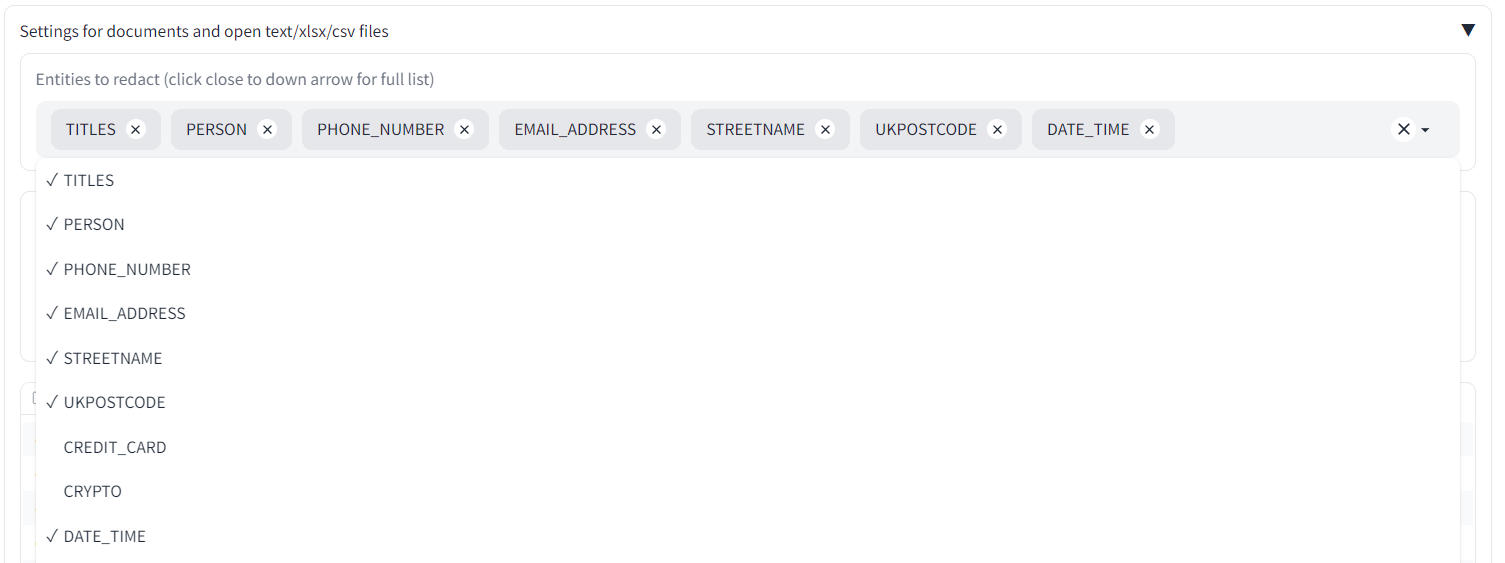

You may want to redact additional types of information beyond the defaults, or you may not be interested in default suggested entity types. There are dates in the example complaint letter. Say we wanted to redact those dates also?

|

|

@@ -390,21 +392,49 @@ You can find this option at the bottom of the 'Redaction Settings' tab. Upload m

|

|

| 390 |

|

| 391 |

The files for this section are stored [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/duplicate_page_find_in_app/).

|

| 392 |

|

| 393 |

-

Some redaction tasks involve removing duplicate pages of text that may exist across multiple documents. This feature

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 394 |

|

| 395 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 396 |

|

| 397 |

-

|

| 398 |

|

| 399 |

-

|

|

|

|

| 400 |

|

| 401 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 402 |

|

| 403 |

|

| 404 |

|

| 405 |

-

|

| 406 |

|

| 407 |

-

above.

|

| 410 |

|

|

@@ -505,6 +535,8 @@ Again, a lot can potentially go wrong with AWS solutions that are insecure, so b

|

|

| 505 |

|

| 506 |

## Modifying existing redaction review files

|

| 507 |

|

|

|

|

|

|

|

| 508 |

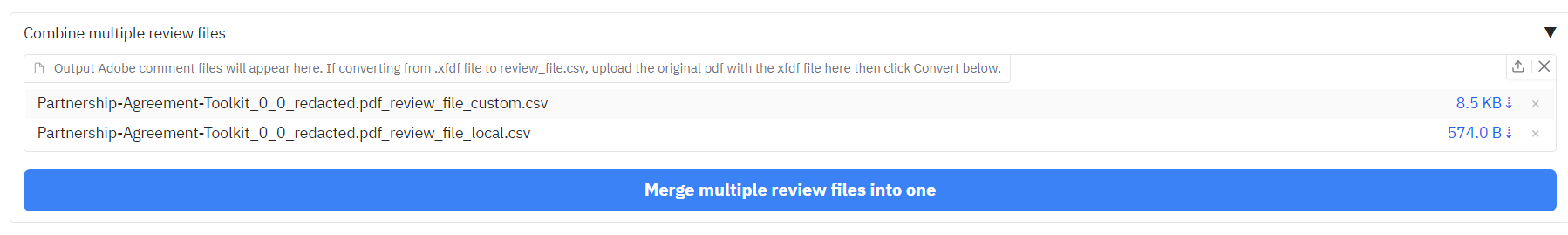

You can find the folder containing the files discussed in this section [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/merge_review_files/).

|

| 509 |

|

| 510 |

As well as serving as inputs to the document redaction app's review function, the 'review_file.csv' output can be modified outside of the app, and also merged with others from multiple redaction attempts on the same file. This gives you the flexibility to change redaction details outside of the app.

|

|

|

|

| 5 |

colorTo: yellow

|

| 6 |

sdk: docker

|

| 7 |

app_file: app.py

|

| 8 |

+

pinned: true

|

| 9 |

license: agpl-3.0

|

| 10 |

---

|

| 11 |

# Document redaction

|

| 12 |

|

| 13 |

+

version: 0.7.0

|

| 14 |

|

| 15 |

Redact personally identifiable information (PII) from documents (pdf, images), open text, or tabular data (xlsx/csv/parquet). Please see the [User Guide](#user-guide) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 16 |

|

| 17 |

+

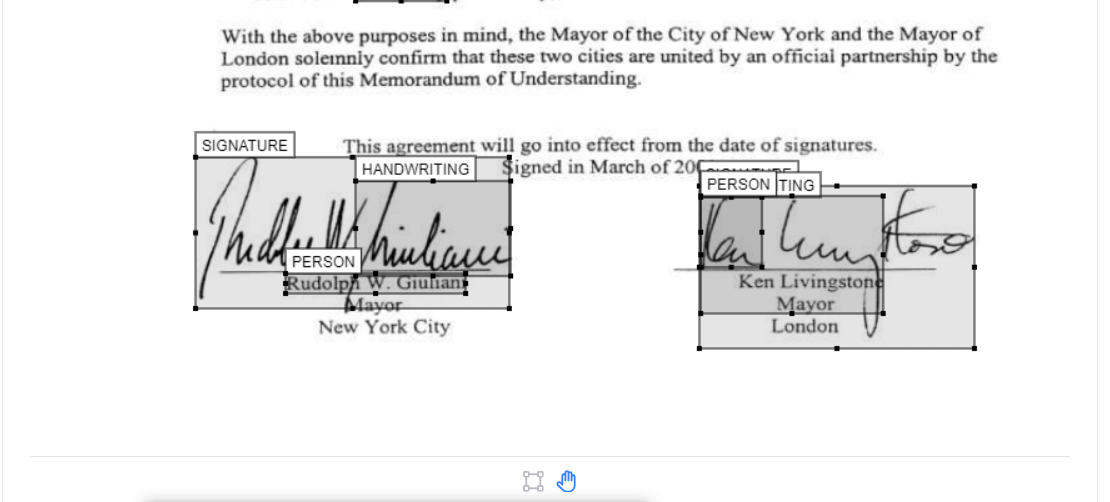

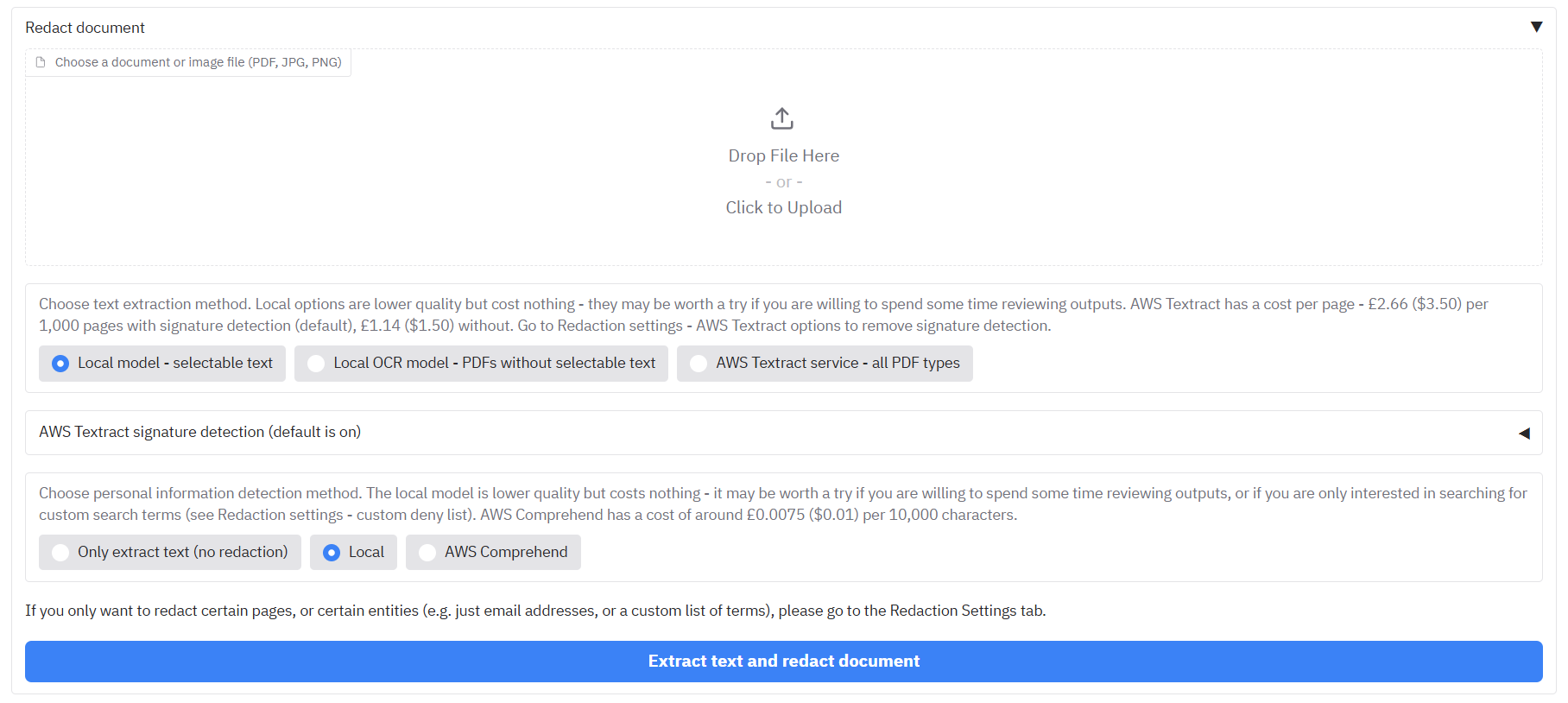

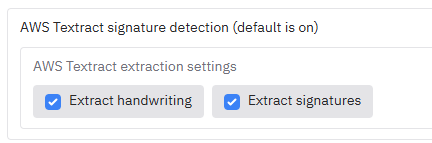

To identify text in documents, the 'local' text/OCR image analysis uses spacy/tesseract, and works quite well for documents with typed text. If available, choose 'AWS Textract service' to redact more complex elements e.g. signatures or handwriting. Then, choose a method for PII identification. 'Local' is quick and gives good results if you are primarily looking for a custom list of terms to redact (see Redaction settings). If available, AWS Comprehend gives better results at a small cost.

|

| 18 |

|

| 19 |

After redaction, review suggested redactions on the 'Review redactions' tab. The original pdf can be uploaded here alongside a '...redaction_file.csv' to continue a previous redaction/review task. See the 'Redaction settings' tab to choose which pages to redact, the type of information to redact (e.g. people, places), or custom terms to always include/ exclude from redaction.

|

| 20 |

|

|

|

|

| 181 |

|

| 182 |

|

| 183 |

|

| 184 |

+

**Note:** As of version 0.7.0 you can now apply your whole page redaction list directly to the document file currently under review by clicking the 'Apply whole page redaction list to document currently under review' button that appears here.

|

| 185 |

+

|

| 186 |

### Redacting additional types of personal information

|

| 187 |

|

| 188 |

You may want to redact additional types of information beyond the defaults, or you may not be interested in default suggested entity types. There are dates in the example complaint letter. Say we wanted to redact those dates also?

|

|

|

|

| 392 |

|

| 393 |

The files for this section are stored [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/duplicate_page_find_in_app/).

|

| 394 |

|

| 395 |

+

Some redaction tasks involve removing duplicate pages of text that may exist across multiple documents. This feature helps you find and remove duplicate content that may exist in single or multiple documents. It can identify everything from single identical pages to multi-page sections (subdocuments). The process involves three main steps: configuring the analysis, reviewing the results in the interactive interface, and then using the generated files to perform the redactions.

|

| 396 |

+

|

| 397 |

+

|

| 398 |

+

|

| 399 |

+

**Step 1: Upload and Configure the Analysis**

|

| 400 |

+

First, navigate to the "Identify duplicate pages" tab. Upload all the ocr_output.csv files you wish to compare into the file area. These files are generated every time you run a redaction task and contain the text for each page of a document.

|

| 401 |

+

|

| 402 |

+

For our example, you can upload the four 'ocr_output.csv' files provided in the example folder into the file area. Click 'Identify duplicate pages' and you will see a number of files returned. In case you want to see the original PDFs, they are available [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/duplicate_page_find_in_app/input_pdfs/).

|

| 403 |

+

|

| 404 |

+

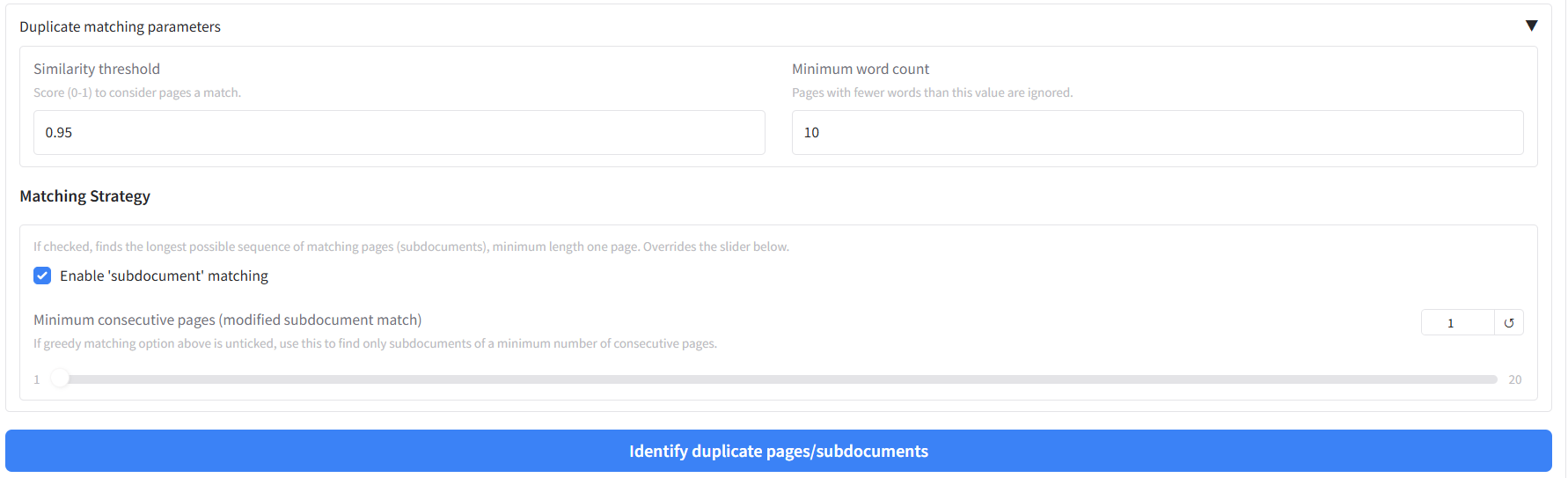

The default options will search for matching subdocuments of any length. Before running the analysis, you can configure these matching parameters to tell the tool what you're looking for:

|

| 405 |

+

|

| 406 |

+

|

| 407 |

|

| 408 |

+

*Matching Parameters*

|

| 409 |

+

- **Similarity Threshold:** A score from 0 to 1. Pages or sequences of pages with a calculated text similarity above this value will be considered a match. The default of 0.9 (90%) is a good starting point for finding near-identical pages.

|

| 410 |

+

- **Min Word Count:** Pages with fewer words than this value will be completely ignored during the comparison. This is extremely useful for filtering out blank pages, title pages, or boilerplate pages that might otherwise create noise in the results. The default is 10.

|

| 411 |

+

- **Choosing a Matching Strategy:** You have three main options to find duplicate content.

|

| 412 |

+

- *'Subdocument' matching (default):* Use this to find the longest possible sequence of matching pages. The tool will find an initial match and then automatically expand it forward page-by-page until the consecutive match breaks. This is the best method for identifying complete copied chapters or sections of unknown length. This is enabled by default by ticking the "Enable 'subdocument' matching" box. This setting overrides the described below.

|

| 413 |

+

- *Minimum length subdocument matching:* Use this to find sequences of consecutively matching pages with a minimum page lenght. For example, setting the slider to 3 will only return sections that are at least 3 pages long. How to enable: Untick the "Enable 'subdocument' matching" box and set the "Minimum consecutive pages" slider to a value greater than 1.

|

| 414 |

+

- *Single Page Matching:* Use this to find all individual page pairs that are similar to each other. Leave the "Enable 'subdocument' matching" box unchecked and keep the "Minimum consecutive pages" slider at 1.

|

| 415 |

|

| 416 |

+

Once your parameters are set, click the "Identify duplicate pages/subdocuments" button.

|

| 417 |

|

| 418 |

+

**Step 2: Review Results in the Interface**

|

| 419 |

+

After the analysis is complete, the results will be displayed directly in the interface.

|

| 420 |

|

| 421 |

+

*Analysis Summary:* A table will appear showing a summary of all the matches found. The columns will change depending on the matching strategy you chose. For subdocument matches, it will show the start and end pages of the matched sequence.

|

| 422 |

+

|

| 423 |

+

*Interactive Preview:* This is the most important part of the review process. Click on any row in the summary table. The full text of the matching page(s) will appear side-by-side in the "Full Text Preview" section below, allowing you to instantly verify the accuracy of the match.

|

| 424 |

+

|

| 425 |

+

|

| 426 |

+

|

| 427 |

+

**Step 3: Download and Use the Output Files**

|

| 428 |

+

The analysis also generates a set of downloadable files for your records and for performing redactions.

|

| 429 |

+

|

| 430 |

+

|

| 431 |

+

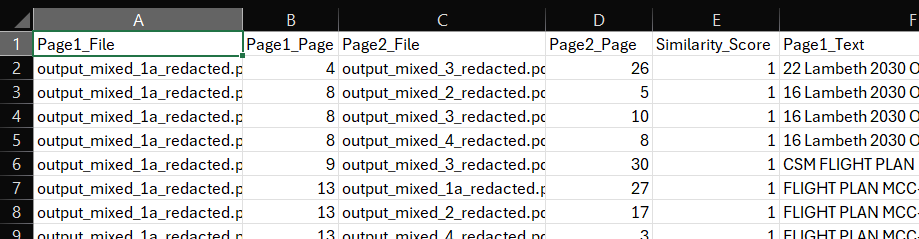

- page_similarity_results.csv: This is a detailed report of the analysis you just ran. It shows a breakdown of the pages from each file that are most similar to each other above the similarity threshold. You can compare the text in the two columns 'Page_1_Text' and 'Page_2_Text'. For single-page matches, it will list each pair of matching pages. For subdocument matches, it will list the start and end pages of each matched sequence, along with the total length of the match.

|

| 432 |

|

| 433 |

|

| 434 |

|

| 435 |

+

- [Original_Filename]_pages_to_redact.csv: For each input document that was found to contain duplicate content, a separate redaction list is created. This is a simple, one-column CSV file containing a list of all page numbers that should be removed. To use these files, you can either upload the original document (i.e. the PDF) on the 'Review redactions' tab, and then click on the 'Apply relevant duplicate page output to document currently under review' button. You should see the whole pages suggested for redaction on the 'Review redactions' tab. Alternatively, you can reupload the file into the whole page redaction section as described in the ['Full page redaction list example' section](#full-page-redaction-list-example).

|

| 436 |

|

| 437 |

+

|

| 438 |

|

| 439 |

If you want to combine the results from this redaction process with previous redaction tasks for the same PDF, you could merge review file outputs following the steps described in [Merging existing redaction review files](#merging-existing-redaction-review-files) above.

|

| 440 |

|

|

|

|

| 535 |

|

| 536 |

## Modifying existing redaction review files

|

| 537 |

|

| 538 |

+

*Note:* As of version 0.7.0 you can now modify redaction review files directly in the app on the 'Review redactions' tab. Open the accordion 'View and edit review data' under the file input area. You can edit review file data cells here - press Enter to apply changes. You should see the effect on the current page if you click the 'Save changes on current page to file' button to the right.

|

| 539 |

+

|

| 540 |

You can find the folder containing the files discussed in this section [here](https://github.com/seanpedrick-case/document_redaction_examples/blob/main/merge_review_files/).

|

| 541 |

|

| 542 |

As well as serving as inputs to the document redaction app's review function, the 'review_file.csv' output can be modified outside of the app, and also merged with others from multiple redaction attempts on the same file. This gives you the flexibility to change redaction details outside of the app.

|

_quarto.yml

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

project:

|

| 2 |

+

type: website

|

| 3 |

+

output-dir: docs # Common for GitHub Pages

|

| 4 |

+

render:

|

| 5 |

+

- "*.qmd"

|

| 6 |

+

|

| 7 |

+

website:

|

| 8 |

+

title: "Document Redaction App"

|

| 9 |

+

page-navigation: true # Often enabled for floating TOC to highlight current section

|

| 10 |

+

back-to-top-navigation: true

|

| 11 |

+

search: true

|

| 12 |

+

navbar:

|

| 13 |

+

left:

|

| 14 |

+

- href: index.qmd

|

| 15 |

+

text: Home

|

| 16 |

+

- href: src/user_guide.qmd

|

| 17 |

+

text: User guide

|

| 18 |

+

- href: src/faq.qmd

|

| 19 |

+

text: User FAQ

|

| 20 |

+

- href: src/installation_guide.qmd

|

| 21 |

+

text: App installation guide (with CDK)

|

| 22 |

+

- href: src/app_settings.qmd

|

| 23 |

+

text: App settings management guide

|

| 24 |

+

|

| 25 |

+

format:

|

| 26 |

+

html:

|

| 27 |

+

theme: cosmo

|

| 28 |

+

css: styles.css

|

app.py

CHANGED

|

@@ -3,37 +3,39 @@ import pandas as pd

|

|

| 3 |

import gradio as gr

|

| 4 |

from gradio_image_annotation import image_annotator

|

| 5 |

from tools.config import OUTPUT_FOLDER, INPUT_FOLDER, RUN_DIRECT_MODE, MAX_QUEUE_SIZE, DEFAULT_CONCURRENCY_LIMIT, MAX_FILE_SIZE, GRADIO_SERVER_PORT, ROOT_PATH, GET_DEFAULT_ALLOW_LIST, ALLOW_LIST_PATH, S3_ALLOW_LIST_PATH, FEEDBACK_LOGS_FOLDER, ACCESS_LOGS_FOLDER, USAGE_LOGS_FOLDER, REDACTION_LANGUAGE, GET_COST_CODES, COST_CODES_PATH, S3_COST_CODES_PATH, ENFORCE_COST_CODES, DISPLAY_FILE_NAMES_IN_LOGS, SHOW_COSTS, RUN_AWS_FUNCTIONS, DOCUMENT_REDACTION_BUCKET, SHOW_WHOLE_DOCUMENT_TEXTRACT_CALL_OPTIONS, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_BUCKET, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_INPUT_SUBFOLDER, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_OUTPUT_SUBFOLDER, SESSION_OUTPUT_FOLDER, LOAD_PREVIOUS_TEXTRACT_JOBS_S3, TEXTRACT_JOBS_S3_LOC, TEXTRACT_JOBS_LOCAL_LOC, HOST_NAME, DEFAULT_COST_CODE, OUTPUT_COST_CODES_PATH, OUTPUT_ALLOW_LIST_PATH, COGNITO_AUTH, SAVE_LOGS_TO_CSV, SAVE_LOGS_TO_DYNAMODB, ACCESS_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_ACCESS_LOG_HEADERS, CSV_ACCESS_LOG_HEADERS, FEEDBACK_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_FEEDBACK_LOG_HEADERS, CSV_FEEDBACK_LOG_HEADERS, USAGE_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_USAGE_LOG_HEADERS, CSV_USAGE_LOG_HEADERS, TEXTRACT_JOBS_S3_INPUT_LOC, TEXTRACT_TEXT_EXTRACT_OPTION, NO_REDACTION_PII_OPTION, TEXT_EXTRACTION_MODELS, PII_DETECTION_MODELS, DEFAULT_TEXT_EXTRACTION_MODEL, DEFAULT_PII_DETECTION_MODEL, LOG_FILE_NAME, CHOSEN_COMPREHEND_ENTITIES, FULL_COMPREHEND_ENTITY_LIST, CHOSEN_REDACT_ENTITIES, FULL_ENTITY_LIST, FILE_INPUT_HEIGHT, TABULAR_PII_DETECTION_MODELS

|

| 6 |

-

from tools.helper_functions import put_columns_in_df, get_connection_params, reveal_feedback_buttons, custom_regex_load, reset_state_vars, load_in_default_allow_list, reset_review_vars, merge_csv_files, load_all_output_files, update_dataframe, check_for_existing_textract_file, load_in_default_cost_codes, enforce_cost_codes, calculate_aws_costs, calculate_time_taken, reset_base_dataframe, reset_ocr_base_dataframe, update_cost_code_dataframe_from_dropdown_select, check_for_existing_local_ocr_file, reset_data_vars, reset_aws_call_vars

|

| 7 |

from tools.aws_functions import download_file_from_s3, upload_log_file_to_s3

|

| 8 |

from tools.file_redaction import choose_and_run_redactor

|

| 9 |

from tools.file_conversion import prepare_image_or_pdf, get_input_file_names

|

| 10 |

-

from tools.redaction_review import apply_redactions_to_review_df_and_files, update_all_page_annotation_object_based_on_previous_page, decrease_page, increase_page, update_annotator_object_and_filter_df, update_entities_df_recogniser_entities, update_entities_df_page, update_entities_df_text, df_select_callback_dataframe_row, convert_df_to_xfdf, convert_xfdf_to_dataframe, reset_dropdowns, exclude_selected_items_from_redaction, undo_last_removal, update_selected_review_df_row_colour, update_all_entity_df_dropdowns, df_select_callback_cost, update_other_annotator_number_from_current, update_annotator_page_from_review_df, df_select_callback_ocr, df_select_callback_textract_api, get_all_rows_with_same_text

|

| 11 |

from tools.data_anonymise import anonymise_data_files

|

| 12 |

from tools.auth import authenticate_user

|

| 13 |

from tools.load_spacy_model_custom_recognisers import custom_entities

|

| 14 |

from tools.custom_csvlogger import CSVLogger_custom

|

| 15 |

-

from tools.find_duplicate_pages import

|

| 16 |

from tools.textract_batch_call import analyse_document_with_textract_api, poll_whole_document_textract_analysis_progress_and_download, load_in_textract_job_details, check_for_provided_job_id, check_textract_outputs_exist, replace_existing_pdf_input_for_whole_document_outputs

|

| 17 |

|

| 18 |

# Suppress downcasting warnings

|

| 19 |

pd.set_option('future.no_silent_downcasting', True)

|

| 20 |

|

| 21 |

# Convert string environment variables to string or list

|

| 22 |

-

SAVE_LOGS_TO_CSV =

|

| 23 |

-

|

|

|

|

|

|

|

| 24 |

|

| 25 |

-

if CSV_ACCESS_LOG_HEADERS: CSV_ACCESS_LOG_HEADERS =

|

| 26 |

-

if CSV_FEEDBACK_LOG_HEADERS: CSV_FEEDBACK_LOG_HEADERS =

|

| 27 |

-

if CSV_USAGE_LOG_HEADERS: CSV_USAGE_LOG_HEADERS =

|

| 28 |

|

| 29 |

-

if DYNAMODB_ACCESS_LOG_HEADERS: DYNAMODB_ACCESS_LOG_HEADERS =

|

| 30 |

-

if DYNAMODB_FEEDBACK_LOG_HEADERS: DYNAMODB_FEEDBACK_LOG_HEADERS =

|

| 31 |

-

if DYNAMODB_USAGE_LOG_HEADERS: DYNAMODB_USAGE_LOG_HEADERS =

|

| 32 |

|

| 33 |

-

if CHOSEN_COMPREHEND_ENTITIES: CHOSEN_COMPREHEND_ENTITIES =

|

| 34 |

-

if FULL_COMPREHEND_ENTITY_LIST: FULL_COMPREHEND_ENTITY_LIST =

|

| 35 |

-

if CHOSEN_REDACT_ENTITIES: CHOSEN_REDACT_ENTITIES =

|

| 36 |

-

if FULL_ENTITY_LIST: FULL_ENTITY_LIST =

|

| 37 |

|

| 38 |

# Add custom spacy recognisers to the Comprehend list, so that local Spacy model can be used to pick up e.g. titles, streetnames, UK postcodes that are sometimes missed by comprehend

|

| 39 |

CHOSEN_COMPREHEND_ENTITIES.extend(custom_entities)

|

|

@@ -42,7 +44,7 @@ FULL_COMPREHEND_ENTITY_LIST.extend(custom_entities)

|

|

| 42 |

FILE_INPUT_HEIGHT = int(FILE_INPUT_HEIGHT)

|

| 43 |

|

| 44 |

# Create the gradio interface

|

| 45 |

-

app = gr.Blocks(theme = gr.themes.

|

| 46 |

|

| 47 |

with app:

|

| 48 |

|

|

@@ -55,7 +57,7 @@ with app:

|

|

| 55 |

all_image_annotations_state = gr.State([])

|

| 56 |

|

| 57 |

all_decision_process_table_state = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="all_decision_process_table", visible=False, type="pandas", wrap=True)

|

| 58 |

-

|

| 59 |

|

| 60 |

all_page_line_level_ocr_results = gr.State([])

|

| 61 |

all_page_line_level_ocr_results_with_children = gr.State([])

|

|

@@ -186,6 +188,9 @@ with app:

|

|

| 186 |

# Duplicate page detection

|

| 187 |

in_duplicate_pages_text = gr.Textbox(label="in_duplicate_pages_text", visible=False)

|

| 188 |

duplicate_pages_df = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="duplicate_pages_df", visible=False, type="pandas", wrap=True)

|

|

|

|

|

|

|

|

|

|

| 189 |

|

| 190 |

# Tracking variables for current page (not visible)

|

| 191 |

current_loop_page_number = gr.Number(value=0,precision=0, interactive=False, label = "Last redacted page in document", visible=False)

|

|

@@ -230,7 +235,7 @@ with app:

|

|

| 230 |

|

| 231 |

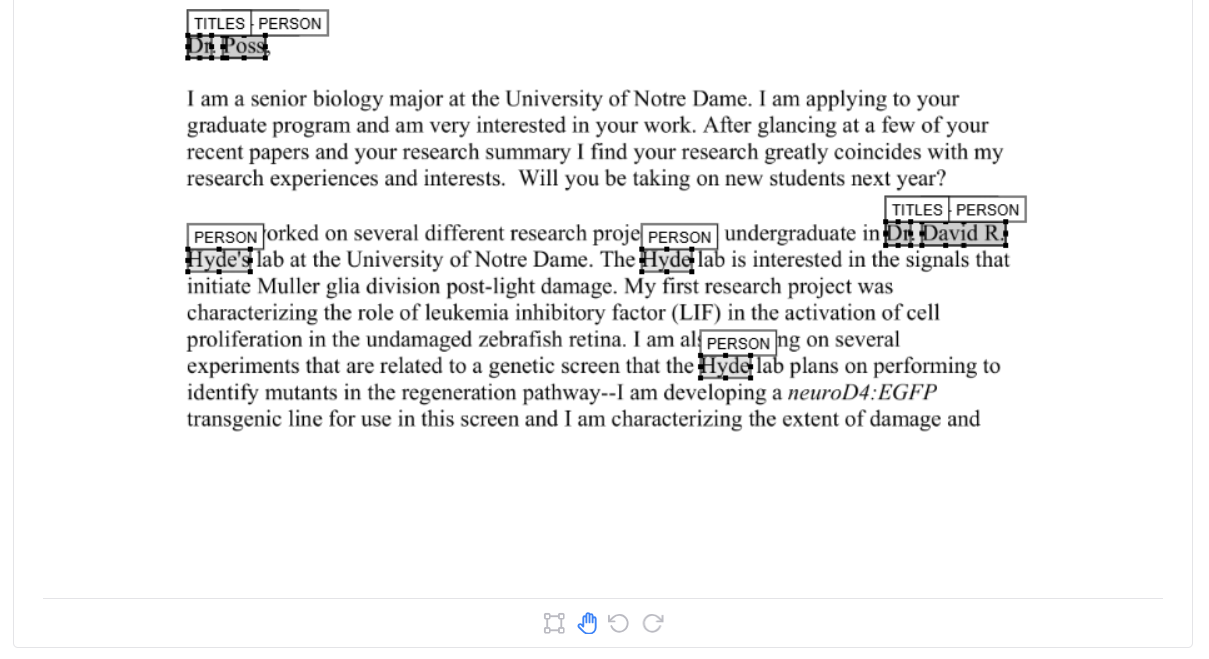

Redact personally identifiable information (PII) from documents (PDF, images), open text, or tabular data (XLSX/CSV/Parquet). Please see the [User Guide](https://github.com/seanpedrick-case/doc_redaction/blob/main/README.md) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 232 |

|

| 233 |

-

To identify text in documents, the 'Local' text/OCR image analysis uses

|

| 234 |

|

| 235 |

After redaction, review suggested redactions on the 'Review redactions' tab. The original pdf can be uploaded here alongside a '...review_file.csv' to continue a previous redaction/review task. See the 'Redaction settings' tab to choose which pages to redact, the type of information to redact (e.g. people, places), or custom terms to always include/ exclude from redaction.

|

| 236 |

|

|

@@ -259,9 +264,9 @@ with app:

|

|

| 259 |

local_ocr_output_found_checkbox = gr.Checkbox(value= False, label="Existing local OCR output file found", interactive=False, visible=True)

|

| 260 |

with gr.Column(scale=4):

|

| 261 |

with gr.Row(equal_height=True):

|

| 262 |

-

total_pdf_page_count = gr.Number(label = "Total page count", value=0, visible=True)

|

| 263 |

-

estimated_aws_costs_number = gr.Number(label = "Approximate AWS Textract and/or Comprehend cost (£)", value=0.00, precision=2, visible=True)

|

| 264 |

-

estimated_time_taken_number = gr.Number(label = "Approximate time taken to extract text/redact (minutes)", value=0, visible=True, precision=2)

|

| 265 |

|

| 266 |

if GET_COST_CODES == "True" or ENFORCE_COST_CODES == "True":

|

| 267 |

with gr.Accordion("Assign task to cost code", open = True, visible=True):

|

|

@@ -317,7 +322,10 @@ with app:

|

|

| 317 |

annotate_zoom_in = gr.Button("Zoom in", visible=False)

|

| 318 |

annotate_zoom_out = gr.Button("Zoom out", visible=False)

|

| 319 |

with gr.Row():

|

| 320 |

-

clear_all_redactions_on_page_btn = gr.Button("Clear all redactions on page", visible=False)

|

|

|

|

|

|

|

|

|

|

| 321 |

|

| 322 |

with gr.Row():

|

| 323 |

with gr.Column(scale=2):

|

|

@@ -376,7 +384,8 @@ with app:

|

|

| 376 |

|

| 377 |

with gr.Accordion("Search all extracted text", open=True):

|

| 378 |

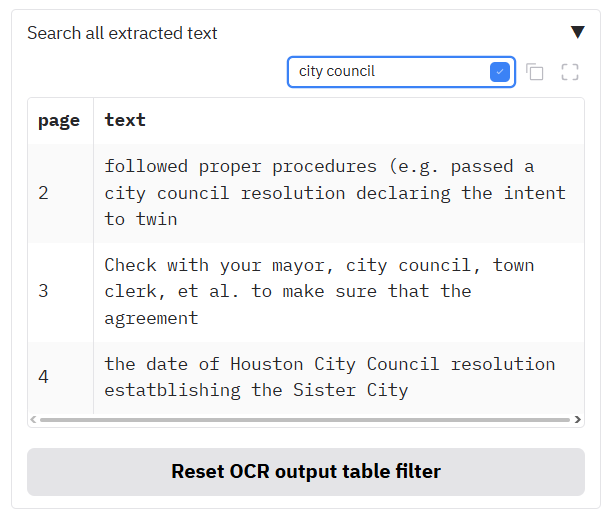

all_line_level_ocr_results_df = gr.Dataframe(value=pd.DataFrame(), headers=["page", "text"], col_count=(2, 'fixed'), row_count = (0, "dynamic"), label="All OCR results", visible=True, type="pandas", wrap=True, show_fullscreen_button=True, show_search='filter', show_label=False, show_copy_button=True, max_height=400)

|

| 379 |

-

reset_all_ocr_results_btn = gr.Button(value="Reset OCR output table filter")

|

|

|

|

| 380 |

|

| 381 |

with gr.Accordion("Convert review files loaded above to Adobe format, or convert from Adobe format to review file", open = False):

|

| 382 |

convert_review_file_to_adobe_btn = gr.Button("Convert review file to Adobe comment format", variant="primary")

|

|

@@ -387,13 +396,67 @@ with app:

|

|

| 387 |

# IDENTIFY DUPLICATE PAGES TAB

|

| 388 |

###

|

| 389 |

with gr.Tab(label="Identify duplicate pages"):

|

| 390 |

-

|

| 391 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 392 |

with gr.Row():

|

| 393 |

-

|

| 394 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

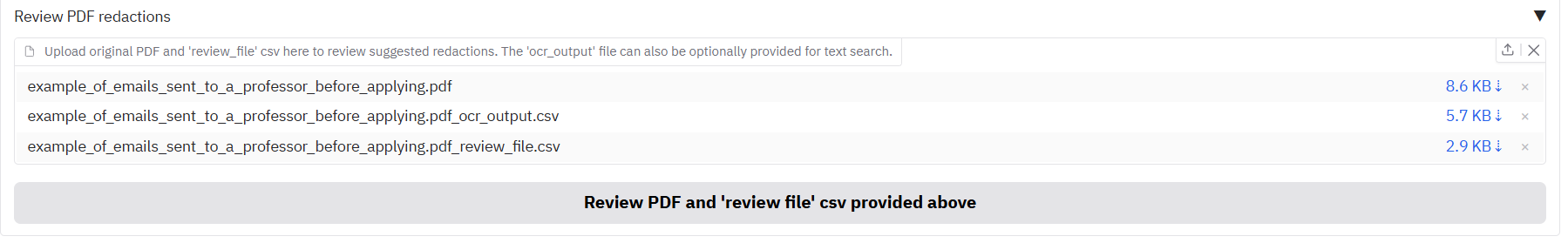

|

|

|

|

|

|

|

| 395 |

|

| 396 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 397 |

|

| 398 |

###

|

| 399 |

# TEXT / TABULAR DATA TAB

|

|

@@ -448,6 +511,13 @@ with app:

|

|

| 448 |

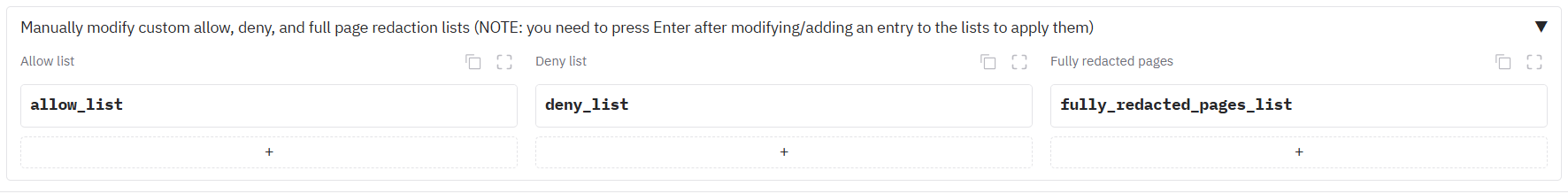

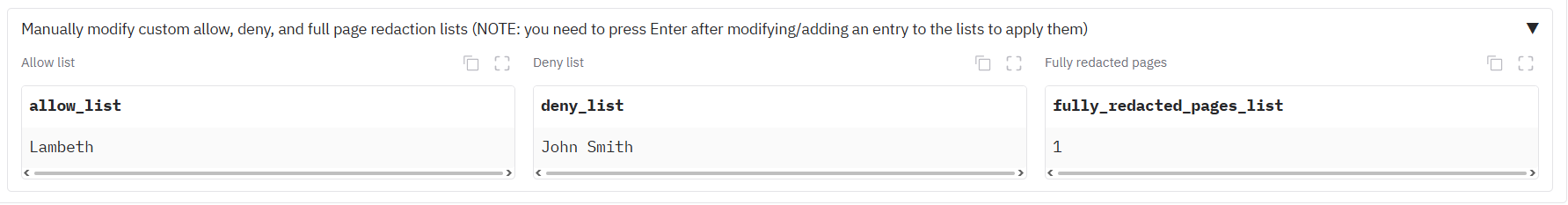

in_allow_list_state = gr.Dataframe(value=pd.DataFrame(), headers=["allow_list"], col_count=(1, "fixed"), row_count = (0, "dynamic"), label="Allow list", visible=True, type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, wrap=True)

|

| 449 |

in_deny_list_state = gr.Dataframe(value=pd.DataFrame(), headers=["deny_list"], col_count=(1, "fixed"), row_count = (0, "dynamic"), label="Deny list", visible=True, type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, wrap=True)

|

| 450 |

in_fully_redacted_list_state = gr.Dataframe(value=pd.DataFrame(), headers=["fully_redacted_pages_list"], col_count=(1, "fixed"), row_count = (0, "dynamic"), label="Fully redacted pages", visible=True, type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, datatype='number', wrap=True)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 451 |

|

| 452 |

with gr.Accordion("Select entity types to redact", open = True):

|

| 453 |

in_redact_entities = gr.Dropdown(value=CHOSEN_REDACT_ENTITIES, choices=FULL_ENTITY_LIST, multiselect=True, label="Local PII identification model (click empty space in box for full list)")

|

|

@@ -517,24 +587,24 @@ with app:

|

|

| 517 |

cost_code_choice_drop.select(update_cost_code_dataframe_from_dropdown_select, inputs=[cost_code_choice_drop, cost_code_dataframe_base], outputs=[cost_code_dataframe])

|

| 518 |

|

| 519 |

in_doc_files.upload(fn=get_input_file_names, inputs=[in_doc_files], outputs=[doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 520 |

-

success(fn = prepare_image_or_pdf, inputs=[in_doc_files, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, first_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool_false, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state,

|

| 521 |

success(fn=check_for_existing_textract_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[textract_output_found_checkbox]).\

|

| 522 |

success(fn=check_for_existing_local_ocr_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[local_ocr_output_found_checkbox])

|

| 523 |

|

| 524 |

# Run redaction function

|

| 525 |

document_redact_btn.click(fn = reset_state_vars, outputs=[all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, textract_metadata_textbox, annotator, output_file_list_state, log_files_output_list_state, recogniser_entity_dataframe, recogniser_entity_dataframe_base, pdf_doc_state, duplication_file_path_outputs_list_state, redaction_output_summary_textbox, is_a_textract_api_call, textract_query_number]).\

|

| 526 |

success(fn= enforce_cost_codes, inputs=[enforce_cost_code_textbox, cost_code_choice_drop, cost_code_dataframe_base]).\

|

| 527 |

-

success(fn= choose_and_run_redactor, inputs=[in_doc_files, prepared_pdf_state, images_pdf_state, in_redact_language, in_redact_entities, in_redact_comprehend_entities, text_extract_method_radio, in_allow_list_state, in_deny_list_state, in_fully_redacted_list_state, latest_file_completed_text, redaction_output_summary_textbox, output_file_list_state, log_files_output_list_state, first_loop_state, page_min, page_max, actual_time_taken_number, handwrite_signature_checkbox, textract_metadata_textbox, all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, pdf_doc_state, current_loop_page_number, page_break_return, pii_identification_method_drop, comprehend_query_number, max_fuzzy_spelling_mistakes_num, match_fuzzy_whole_phrase_bool, aws_access_key_textbox, aws_secret_key_textbox, annotate_max_pages,

|

| 528 |

-

outputs=[redaction_output_summary_textbox, output_file, output_file_list_state, latest_file_completed_text, log_files_output, log_files_output_list_state, actual_time_taken_number, textract_metadata_textbox, pdf_doc_state, all_image_annotations_state, current_loop_page_number, page_break_return, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, output_review_files, annotate_max_pages, annotate_max_pages_bottom, prepared_pdf_state, images_pdf_state,

|

| 529 |

|

| 530 |

# If the app has completed a batch of pages, it will rerun the redaction process until the end of all pages in the document

|

| 531 |

-

current_loop_page_number.change(fn = choose_and_run_redactor, inputs=[in_doc_files, prepared_pdf_state, images_pdf_state, in_redact_language, in_redact_entities, in_redact_comprehend_entities, text_extract_method_radio, in_allow_list_state, in_deny_list_state, in_fully_redacted_list_state, latest_file_completed_text, redaction_output_summary_textbox, output_file_list_state, log_files_output_list_state, second_loop_state, page_min, page_max, actual_time_taken_number, handwrite_signature_checkbox, textract_metadata_textbox, all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, pdf_doc_state, current_loop_page_number, page_break_return, pii_identification_method_drop, comprehend_query_number, max_fuzzy_spelling_mistakes_num, match_fuzzy_whole_phrase_bool, aws_access_key_textbox, aws_secret_key_textbox, annotate_max_pages,

|

| 532 |

-

outputs=[redaction_output_summary_textbox, output_file, output_file_list_state, latest_file_completed_text, log_files_output, log_files_output_list_state, actual_time_taken_number, textract_metadata_textbox, pdf_doc_state, all_image_annotations_state, current_loop_page_number, page_break_return, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, output_review_files, annotate_max_pages, annotate_max_pages_bottom, prepared_pdf_state, images_pdf_state,

|

| 533 |

|

| 534 |

# If a file has been completed, the function will continue onto the next document

|

| 535 |

-

latest_file_completed_text.change(fn = choose_and_run_redactor, inputs=[in_doc_files, prepared_pdf_state, images_pdf_state, in_redact_language, in_redact_entities, in_redact_comprehend_entities, text_extract_method_radio, in_allow_list_state, in_deny_list_state, in_fully_redacted_list_state, latest_file_completed_text, redaction_output_summary_textbox, output_file_list_state, log_files_output_list_state, second_loop_state, page_min, page_max, actual_time_taken_number, handwrite_signature_checkbox, textract_metadata_textbox, all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, pdf_doc_state, current_loop_page_number, page_break_return, pii_identification_method_drop, comprehend_query_number, max_fuzzy_spelling_mistakes_num, match_fuzzy_whole_phrase_bool, aws_access_key_textbox, aws_secret_key_textbox, annotate_max_pages,

|

| 536 |

-

outputs=[redaction_output_summary_textbox, output_file, output_file_list_state, latest_file_completed_text, log_files_output, log_files_output_list_state, actual_time_taken_number, textract_metadata_textbox, pdf_doc_state, all_image_annotations_state, current_loop_page_number, page_break_return, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, output_review_files, annotate_max_pages, annotate_max_pages_bottom, prepared_pdf_state, images_pdf_state,

|

| 537 |

-

success(fn=update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, page_min, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 538 |

success(fn=check_for_existing_textract_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[textract_output_found_checkbox]).\

|

| 539 |

success(fn=check_for_existing_local_ocr_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[local_ocr_output_found_checkbox]).\

|

| 540 |

success(fn=reveal_feedback_buttons, outputs=[pdf_feedback_radio, pdf_further_details_text, pdf_submit_feedback_btn, pdf_feedback_title]).\

|

|

@@ -556,62 +626,67 @@ with app:

|

|

| 556 |

textract_job_detail_df.select(df_select_callback_textract_api, inputs=[textract_output_found_checkbox], outputs=[job_id_textbox, job_type_dropdown, selected_job_id_row])

|

| 557 |

|

| 558 |

convert_textract_outputs_to_ocr_results.click(replace_existing_pdf_input_for_whole_document_outputs, inputs = [s3_whole_document_textract_input_subfolder, doc_file_name_no_extension_textbox, output_folder_textbox, s3_whole_document_textract_default_bucket, in_doc_files, input_folder_textbox], outputs = [in_doc_files, doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 559 |

-

success(fn = prepare_image_or_pdf, inputs=[in_doc_files, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, first_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool_false, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state,

|

| 560 |

success(fn=check_for_existing_textract_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[textract_output_found_checkbox]).\

|

| 561 |

success(fn=check_for_existing_local_ocr_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[local_ocr_output_found_checkbox]).\

|

| 562 |

success(fn= check_textract_outputs_exist, inputs=[textract_output_found_checkbox]).\

|

| 563 |

success(fn = reset_state_vars, outputs=[all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, textract_metadata_textbox, annotator, output_file_list_state, log_files_output_list_state, recogniser_entity_dataframe, recogniser_entity_dataframe_base, pdf_doc_state, duplication_file_path_outputs_list_state, redaction_output_summary_textbox, is_a_textract_api_call, textract_query_number]).\

|

| 564 |

-

success(fn= choose_and_run_redactor, inputs=[in_doc_files, prepared_pdf_state, images_pdf_state, in_redact_language, in_redact_entities, in_redact_comprehend_entities, textract_only_method_drop, in_allow_list_state, in_deny_list_state, in_fully_redacted_list_state, latest_file_completed_text, redaction_output_summary_textbox, output_file_list_state, log_files_output_list_state, first_loop_state, page_min, page_max, actual_time_taken_number, handwrite_signature_checkbox, textract_metadata_textbox, all_image_annotations_state, all_line_level_ocr_results_df_base, all_decision_process_table_state, pdf_doc_state, current_loop_page_number, page_break_return, no_redaction_method_drop, comprehend_query_number, max_fuzzy_spelling_mistakes_num, match_fuzzy_whole_phrase_bool, aws_access_key_textbox, aws_secret_key_textbox, annotate_max_pages,

|

| 565 |

-

outputs=[redaction_output_summary_textbox, output_file, output_file_list_state, latest_file_completed_text, log_files_output, log_files_output_list_state, actual_time_taken_number, textract_metadata_textbox, pdf_doc_state, all_image_annotations_state, current_loop_page_number, page_break_return, all_line_level_ocr_results_df_base, all_decision_process_table_state, comprehend_query_number, output_review_files, annotate_max_pages, annotate_max_pages_bottom, prepared_pdf_state, images_pdf_state,

|

| 566 |

-

success(fn=update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, page_min, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 567 |

|

| 568 |

###

|

| 569 |

# REVIEW PDF REDACTIONS

|

| 570 |

###

|

|

|

|

| 571 |

|

| 572 |

# Upload previous files for modifying redactions

|

| 573 |

upload_previous_review_file_btn.click(fn=reset_review_vars, inputs=None, outputs=[recogniser_entity_dataframe, recogniser_entity_dataframe_base]).\

|

| 574 |

success(fn=get_input_file_names, inputs=[output_review_files], outputs=[doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 575 |

-

success(fn = prepare_image_or_pdf, inputs=[output_review_files, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, second_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state,

|

| 576 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

|

|

|

|

|

|

|

|

|

|

|

|

| 577 |

|

| 578 |

# Page number controls

|

| 579 |

annotate_current_page.submit(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 580 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 581 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 582 |

|

| 583 |

annotation_last_page_button.click(fn=decrease_page, inputs=[annotate_current_page], outputs=[annotate_current_page, annotate_current_page_bottom]).\

|

| 584 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 585 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 586 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 587 |

|

| 588 |

annotation_next_page_button.click(fn=increase_page, inputs=[annotate_current_page, all_image_annotations_state], outputs=[annotate_current_page, annotate_current_page_bottom]).\

|

| 589 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 590 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 591 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 592 |

|

| 593 |

annotation_last_page_button_bottom.click(fn=decrease_page, inputs=[annotate_current_page], outputs=[annotate_current_page, annotate_current_page_bottom]).\

|

| 594 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 595 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 596 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 597 |

|

| 598 |

annotation_next_page_button_bottom.click(fn=increase_page, inputs=[annotate_current_page, all_image_annotations_state], outputs=[annotate_current_page, annotate_current_page_bottom]).\

|

| 599 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 600 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 601 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 602 |

|

| 603 |

annotate_current_page_bottom.submit(update_other_annotator_number_from_current, inputs=[annotate_current_page_bottom], outputs=[annotate_current_page]).\

|

| 604 |

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 605 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 606 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 607 |

|

| 608 |

# Apply page redactions

|

| 609 |

-

annotation_button_apply.click(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 610 |

|

| 611 |

# Save current page redactions

|

| 612 |

update_current_page_redactions_btn.click(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_current_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 613 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 614 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 615 |

|

| 616 |

# Review table controls

|

| 617 |

recogniser_entity_dropdown.select(update_entities_df_recogniser_entities, inputs=[recogniser_entity_dropdown, recogniser_entity_dataframe_base, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dataframe, text_entity_dropdown, page_entity_dropdown])

|

|

@@ -620,50 +695,52 @@ with app:

|

|

| 620 |

|

| 621 |

# Clicking on a cell in the recogniser entity dataframe will take you to that page, and also highlight the target redaction box in blue

|

| 622 |

recogniser_entity_dataframe.select(df_select_callback_dataframe_row, inputs=[recogniser_entity_dataframe], outputs=[selected_entity_dataframe_row, selected_entity_dataframe_row_text]).\

|

| 623 |

-

success(update_selected_review_df_row_colour, inputs=[selected_entity_dataframe_row,

|

| 624 |

-

success(update_annotator_page_from_review_df, inputs=[

|

|

|

|

| 625 |

|

| 626 |

reset_dropdowns_btn.click(reset_dropdowns, inputs=[recogniser_entity_dataframe_base], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown]).\

|

| 627 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 628 |

|

| 629 |

# Exclude current selection from annotator and outputs

|

| 630 |

# Exclude only selected row

|

| 631 |

-

exclude_selected_row_btn.click(exclude_selected_items_from_redaction, inputs=[

|

| 632 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 633 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 634 |

success(update_all_entity_df_dropdowns, inputs=[recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown])

|

| 635 |

|

| 636 |

# Exclude all items with same text as selected row

|

| 637 |

exclude_text_with_same_as_selected_row_btn.click(get_all_rows_with_same_text, inputs=[recogniser_entity_dataframe_base, selected_entity_dataframe_row_text], outputs=[recogniser_entity_dataframe_same_text]).\

|

| 638 |

-

success(exclude_selected_items_from_redaction, inputs=[

|

| 639 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 640 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 641 |

success(update_all_entity_df_dropdowns, inputs=[recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown])

|

| 642 |

|

| 643 |

# Exclude everything visible in table

|

| 644 |

-

exclude_selected_btn.click(exclude_selected_items_from_redaction, inputs=[

|

| 645 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number,

|

| 646 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page,

|

| 647 |

success(update_all_entity_df_dropdowns, inputs=[recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown])

|

| 648 |

|

| 649 |

-

|

| 650 |

-

|

| 651 |

-

|

| 652 |

-

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state]).\

|

| 653 |

-

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page, review_file_state, output_folder_textbox, do_not_save_pdf_state, page_sizes], outputs=[pdf_doc_state, all_image_annotations_state, output_review_files, log_files_output, review_file_state])

|

| 654 |

|

| 655 |

# Review OCR text button

|

| 656 |

-

all_line_level_ocr_results_df.select(df_select_callback_ocr, inputs=[all_line_level_ocr_results_df], outputs=[annotate_current_page,

|

|

|

|

|

|

|

|

|

|

| 657 |

reset_all_ocr_results_btn.click(reset_ocr_base_dataframe, inputs=[all_line_level_ocr_results_df_base], outputs=[all_line_level_ocr_results_df])

|

| 658 |

|

| 659 |

# Convert review file to xfdf Adobe format

|

| 660 |