Upload 7 files

- demo_text.txt +12 -0

- index.md +22 -0

- presidio_streamlit.py +293 -0

- requirements.txt +8 -0

- transformers_rec/__init__.py +5 -0

- transformers_rec/configuration.py +116 -0

- transformers_rec/transformers_recognizer.py +324 -0

demo_text.txt

ADDED

|

@@ -0,0 +1,12 @@

| 1 |

+

Here are a few example sentences we currently support:

|

| 2 |

+

|

| 3 |

+

Hello, my name is David Johnson and I live in Maine.

|

| 4 |

+

My credit card number is 4095-2609-9393-4932 and my crypto wallet id is 16Yeky6GMjeNkAiNcBY7ZhrLoMSgg1BoyZ.

|

| 5 |

+

|

| 6 |

+

On September 18 I visited microsoft.com and sent an email to [email protected], from the IP 192.168.0.1.

|

| 7 |

+

|

| 8 |

+

My passport: 191280342 and my phone number: (212) 555-1234.

|

| 9 |

+

|

| 10 |

+

This is a valid International Bank Account Number: IL150120690000003111111 . Can you please check the status on bank account 954567876544?

|

| 11 |

+

|

| 12 |

+

Kate's social security number is 078-05-1126. Her driver license? it is 1234567A.

|

index.md

ADDED

|

@@ -0,0 +1,22 @@

| 1 |

+

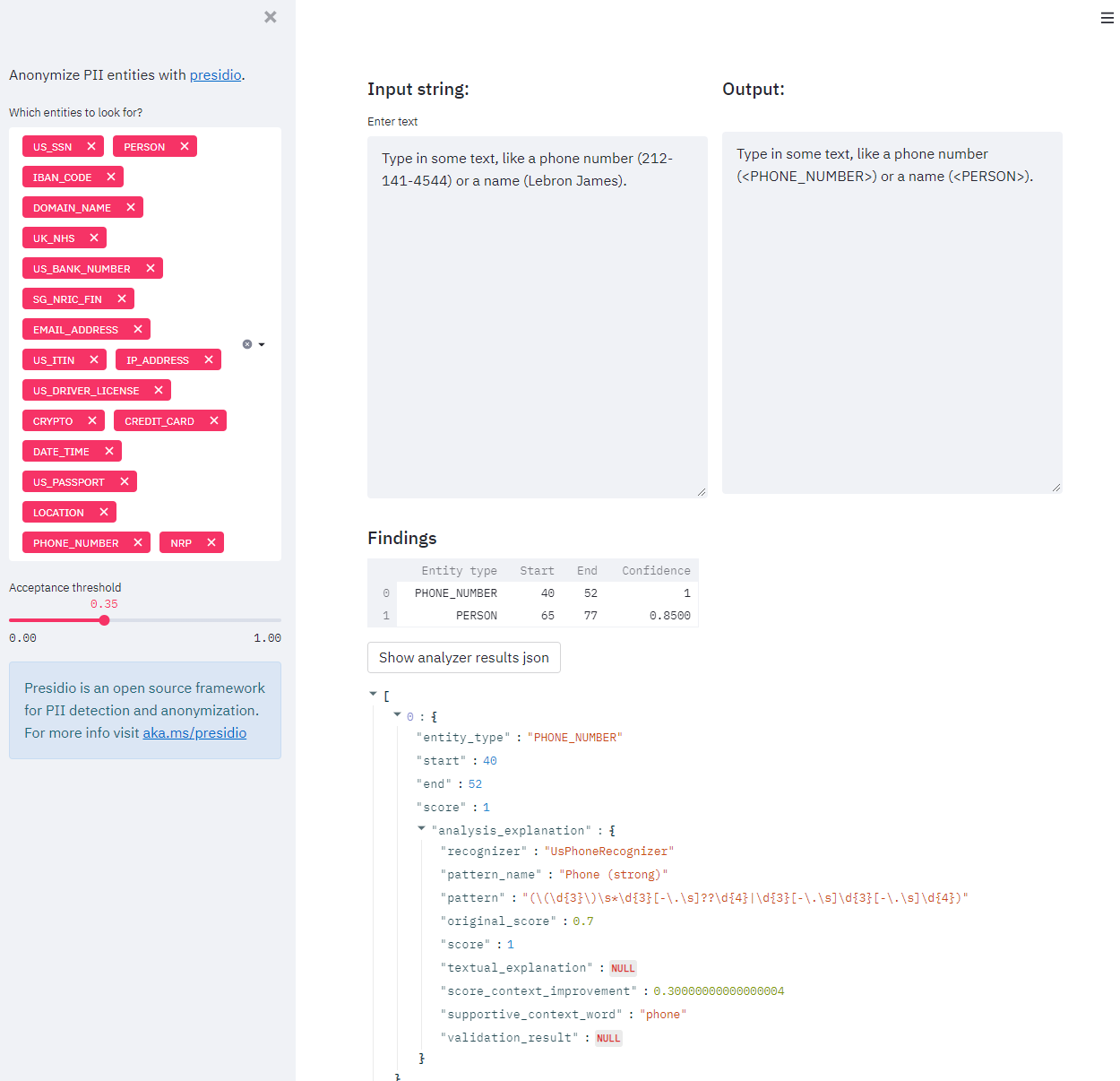

# Simple demo website for Presidio

|

| 2 |

+

Here's a simple app, written in pure Python, to create a demo website for Presidio.

|

| 3 |

+

The app is based on the [streamlit](https://streamlit.io/) package.

|

| 4 |

+

|

| 5 |

+

## Requirements

|

| 6 |

+

1. Install dependencies (preferably in a virtual environment)

|

| 7 |

+

|

| 8 |

+

```sh

|

| 9 |

+

pip install streamlit pandas presidio-analyzer presidio-anonymizer st-annotated-text torch transformers

|

| 10 |

+

```

|

| 11 |

+

|

| 12 |

+

2. Download the [presidio_streamlit.py](presidio_streamlit.py) file.

|

| 13 |

+

3. *Optional*: Update the `analyzer_engine` and `anonymizer_engine` functions for your specific implementation

|

| 14 |

+

4. Start the app:

|

| 15 |

+

|

| 16 |

+

```sh

|

| 17 |

+

streamlit run presidio_streamlit.py

|

| 18 |

+

```

|

| 19 |

+

|

| 20 |

+

## Output

|

| 21 |

+

Output should be similar to this screenshot:

|

| 22 |

+

|
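Before starting the app with `streamlit run`, it can help to confirm that the packages it imports are available. The sketch below is not part of this commit: it uses only the standard library, and the helper name `missing_modules` is made up for illustration. The module names are the top-level imports of `presidio_streamlit.py`.

```python
from importlib.util import find_spec
from typing import Iterable, List


def missing_modules(module_names: Iterable[str]) -> List[str]:
    """Return the top-level modules that cannot be found in this environment."""
    return [name for name in module_names if find_spec(name) is None]


# Top-level modules imported by presidio_streamlit.py in this commit.
APP_MODULES = [
    "pandas",
    "spacy",
    "streamlit",
    "annotated_text",
    "presidio_analyzer",
    "presidio_anonymizer",
]

if __name__ == "__main__":
    missing = missing_modules(APP_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All app dependencies are importable.")
```

Note that `torch` and `transformers` are imported by the `transformers_rec` package rather than by the app file itself, so they would need to be added to the list to cover the transformer-based models.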

presidio_streamlit.py

ADDED

|

@@ -0,0 +1,293 @@

| 1 |

+

"""Streamlit app for Presidio."""

|

| 2 |

+

|

| 3 |

+

from json import JSONEncoder

|

| 4 |

+

from typing import List

|

| 5 |

+

|

| 6 |

+

import pandas as pd

|

| 7 |

+

import spacy

|

| 8 |

+

import streamlit as st

|

| 9 |

+

from annotated_text import annotated_text

|

| 10 |

+

from presidio_analyzer import AnalyzerEngine, RecognizerResult, RecognizerRegistry

|

| 11 |

+

from presidio_analyzer.nlp_engine import NlpEngineProvider

|

| 12 |

+

from presidio_anonymizer import AnonymizerEngine

|

| 13 |

+

from presidio_anonymizer.entities import OperatorConfig

|

| 14 |

+

|

| 15 |

+

from transformers_rec import (

|

| 16 |

+

STANFORD_COFIGURATION,

|

| 17 |

+

TransformersRecognizer,

|

| 18 |

+

BERT_DEID_CONFIGURATION,

|

| 19 |

+

)

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

# Helper methods

|

| 23 |

+

@st.cache_resource

|

| 24 |

+

def analyzer_engine(model_path: str):

|

| 25 |

+

"""Return AnalyzerEngine.

|

| 26 |

+

|

| 27 |

+

:param model_path: Which model to use for NER:

|

| 28 |

+

"StanfordAIMI/stanford-deidentifier-base",

|

| 29 |

+

"obi/deid_roberta_i2b2",

|

| 30 |

+

"en_core_web_lg"

|

| 31 |

+

"""

|

| 32 |

+

|

| 33 |

+

registry = RecognizerRegistry()

|

| 34 |

+

registry.load_predefined_recognizers()

|

| 35 |

+

|

| 36 |

+

# Set up NLP Engine according to the model of choice

|

| 37 |

+

if model_path == "en_core_web_lg":

|

| 38 |

+

if not spacy.util.is_package("en_core_web_lg"):

|

| 39 |

+

spacy.cli.download("en_core_web_lg")

|

| 40 |

+

nlp_configuration = {

|

| 41 |

+

"nlp_engine_name": "spacy",

|

| 42 |

+

"models": [{"lang_code": "en", "model_name": "en_core_web_lg"}],

|

| 43 |

+

}

|

| 44 |

+

else:

|

| 45 |

+

if not spacy.util.is_package("en_core_web_sm"):

|

| 46 |

+

spacy.cli.download("en_core_web_sm")

|

| 47 |

+

# Using a small spaCy model + a HF NER model

|

| 48 |

+

transformers_recognizer = TransformersRecognizer(model_path=model_path)

|

| 49 |

+

|

| 50 |

+

if model_path == "StanfordAIMI/stanford-deidentifier-base":

|

| 51 |

+

transformers_recognizer.load_transformer(**STANFORD_COFIGURATION)

|

| 52 |

+

elif model_path == "obi/deid_roberta_i2b2":

|

| 53 |

+

transformers_recognizer.load_transformer(**BERT_DEID_CONFIGURATION)

|

| 54 |

+

|

| 55 |

+

# Use small spaCy model, no need for both spacy and HF models

|

| 56 |

+

# The transformers model is used here as a recognizer, not as an NlpEngine

|

| 57 |

+

nlp_configuration = {

|

| 58 |

+

"nlp_engine_name": "spacy",

|

| 59 |

+

"models": [{"lang_code": "en", "model_name": "en_core_web_sm"}],

|

| 60 |

+

}

|

| 61 |

+

|

| 62 |

+

registry.add_recognizer(transformers_recognizer)

|

| 63 |

+

|

| 64 |

+

nlp_engine = NlpEngineProvider(nlp_configuration=nlp_configuration).create_engine()

|

| 65 |

+

|

| 66 |

+

analyzer = AnalyzerEngine(nlp_engine=nlp_engine, registry=registry)

|

| 67 |

+

return analyzer

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

@st.cache_resource

|

| 71 |

+

def anonymizer_engine():

|

| 72 |

+

"""Return AnonymizerEngine."""

|

| 73 |

+

return AnonymizerEngine()

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

@st.cache_data

|

| 77 |

+

def get_supported_entities():

|

| 78 |

+

"""Return supported entities from the Analyzer Engine."""

|

| 79 |

+

return analyzer_engine(st_model).get_supported_entities()

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

@st.cache_data

|

| 83 |

+

def analyze(**kwargs):

|

| 84 |

+

"""Analyze input using Analyzer engine and input arguments (kwargs)."""

|

| 85 |

+

if "entities" not in kwargs or "All" in kwargs["entities"]:

|

| 86 |

+

kwargs["entities"] = None

|

| 87 |

+

return analyzer_engine(st_model).analyze(**kwargs)

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

def anonymize(text: str, analyze_results: List[RecognizerResult]):

|

| 91 |

+

"""Anonymize identified input using Presidio Anonymizer.

|

| 92 |

+

|

| 93 |

+

:param text: Full text

|

| 94 |

+

:param analyze_results: list of results from presidio analyzer engine

|

| 95 |

+

"""

|

| 96 |

+

|

| 97 |

+

if st_operator == "mask":

|

| 98 |

+

operator_config = {

|

| 99 |

+

"type": "mask",

|

| 100 |

+

"masking_char": st_mask_char,

|

| 101 |

+

"chars_to_mask": st_number_of_chars,

|

| 102 |

+

"from_end": False,

|

| 103 |

+

}

|

| 104 |

+

|

| 105 |

+

elif st_operator == "encrypt":

|

| 106 |

+

operator_config = {"key": st_encrypt_key}

|

| 107 |

+

elif st_operator == "highlight":

|

| 108 |

+

operator_config = {"lambda": lambda x: x}

|

| 109 |

+

else:

|

| 110 |

+

operator_config = None

|

| 111 |

+

|

| 112 |

+

if st_operator == "highlight":

|

| 113 |

+

operator = "custom"

|

| 114 |

+

else:

|

| 115 |

+

operator = st_operator

|

| 116 |

+

|

| 117 |

+

res = anonymizer_engine().anonymize(

|

| 118 |

+

text,

|

| 119 |

+

analyze_results,

|

| 120 |

+

operators={"DEFAULT": OperatorConfig(operator, operator_config)},

|

| 121 |

+

)

|

| 122 |

+

return res

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

def annotate(text: str, analyze_results: List[RecognizerResult]):

|

| 126 |

+

"""

|

| 127 |

+

Highlights every identified entity on top of the text.

|

| 128 |

+

:param text: full text

|

| 129 |

+

:param analyze_results: list of analyzer results.

|

| 130 |

+

"""

|

| 131 |

+

tokens = []

|

| 132 |

+

|

| 133 |

+

# Use the anonymizer to resolve overlaps

|

| 134 |

+

results = anonymize(text, analyze_results)

|

| 135 |

+

|

| 136 |

+

# sort by start index

|

| 137 |

+

results = sorted(results.items, key=lambda x: x.start)

|

| 138 |

+

for i, res in enumerate(results):

|

| 139 |

+

if i == 0:

|

| 140 |

+

tokens.append(text[: res.start])

|

| 141 |

+

|

| 142 |

+

# append entity text and entity type

|

| 143 |

+

tokens.append((text[res.start: res.end], res.entity_type))

|

| 144 |

+

|

| 145 |

+

# if another entity follows (i.e. this is not the last result), add the text up to the next entity

|

| 146 |

+

if i != len(results) - 1:

|

| 147 |

+

tokens.append(text[res.end: results[i + 1].start])

|

| 148 |

+

# if no more entities coming, add all remaining text

|

| 149 |

+

else:

|

| 150 |

+

tokens.append(text[res.end:])

|

| 151 |

+

return tokens

|

| 152 |

+

|

| 153 |

+

|

| 154 |

+

st.set_page_config(page_title="Presidio demo", layout="wide")

|

| 155 |

+

|

| 156 |

+

# Sidebar

|

| 157 |

+

st.sidebar.header(

|

| 158 |

+

"""

|

| 159 |

+

PII De-Identification with Microsoft Presidio

|

| 160 |

+

"""

|

| 161 |

+

)

|

| 162 |

+

|

| 163 |

+

st.sidebar.info(

|

| 164 |

+

"Presidio is an open source customizable framework for PII detection and de-identification\n"

|

| 165 |

+

"[Code](https://aka.ms/presidio) | "

|

| 166 |

+

"[Tutorial](https://microsoft.github.io/presidio/tutorial/) | "

|

| 167 |

+

"[Installation](https://microsoft.github.io/presidio/installation/) | "

|

| 168 |

+

"[FAQ](https://microsoft.github.io/presidio/faq/)",

|

| 169 |

+

icon="ℹ️",

|

| 170 |

+

)

|

| 171 |

+

|

| 172 |

+

st.sidebar.markdown(

|

| 173 |

+

"[](https://img.shields.io/pypi/dm/presidio-analyzer.svg)"

|

| 174 |

+

"[](http://opensource.org/licenses/MIT)"

|

| 175 |

+

""

|

| 176 |

+

)

|

| 177 |

+

|

| 178 |

+

st_model = st.sidebar.selectbox(

|

| 179 |

+

"NER model",

|

| 180 |

+

[

|

| 181 |

+

"StanfordAIMI/stanford-deidentifier-base",

|

| 182 |

+

"obi/deid_roberta_i2b2",

|

| 183 |

+

"en_core_web_lg",

|

| 184 |

+

],

|

| 185 |

+

index=1,

|

| 186 |

+

)

|

| 187 |

+

st.sidebar.markdown("> Note: Models might take some time to download. ")

|

| 188 |

+

|

| 189 |

+

st_operator = st.sidebar.selectbox(

|

| 190 |

+

"De-identification approach",

|

| 191 |

+

["redact", "replace", "mask", "hash", "encrypt", "highlight"],

|

| 192 |

+

index=1,

|

| 193 |

+

)

|

| 194 |

+

|

| 195 |

+

if st_operator == "mask":

|

| 196 |

+

st_number_of_chars = st.sidebar.number_input(

|

| 197 |

+

"number of chars", value=15, min_value=0, max_value=100

|

| 198 |

+

)

|

| 199 |

+

st_mask_char = st.sidebar.text_input("Mask character", value="*", max_chars=1)

|

| 200 |

+

elif st_operator == "encrypt":

|

| 201 |

+

st_encrypt_key = st.sidebar.text_input("AES key", value="WmZq4t7w!z%C&F)J")

|

| 202 |

+

|

| 203 |

+

st_threshold = st.sidebar.slider(

|

| 204 |

+

label="Acceptance threshold", min_value=0.0, max_value=1.0, value=0.35

|

| 205 |

+

)

|

| 206 |

+

|

| 207 |

+

st_return_decision_process = st.sidebar.checkbox(

|

| 208 |

+

"Add analysis explanations to findings", value=False

|

| 209 |

+

)

|

| 210 |

+

|

| 211 |

+

st_entities = st.sidebar.multiselect(

|

| 212 |

+

label="Which entities to look for?",

|

| 213 |

+

options=get_supported_entities(),

|

| 214 |

+

default=list(get_supported_entities()),

|

| 215 |

+

)

|

| 216 |

+

|

| 217 |

+

# Main panel

|

| 218 |

+

analyzer_load_state = st.info("Starting Presidio analyzer...")

|

| 219 |

+

engine = analyzer_engine(model_path=st_model)

|

| 220 |

+

analyzer_load_state.empty()

|

| 221 |

+

|

| 222 |

+

# Read default text

|

| 223 |

+

with open("demo_text.txt") as f:

|

| 224 |

+

demo_text = f.readlines()

|

| 225 |

+

|

| 226 |

+

# Create two columns for before and after

|

| 227 |

+

col1, col2 = st.columns(2)

|

| 228 |

+

|

| 229 |

+

# Before:

|

| 230 |

+

col1.subheader("Input string:")

|

| 231 |

+

st_text = col1.text_area(

|

| 232 |

+

label="Enter text",

|

| 233 |

+

value="".join(demo_text),

|

| 234 |

+

height=400,

|

| 235 |

+

)

|

| 236 |

+

|

| 237 |

+

st_analyze_results = analyze(

|

| 238 |

+

text=st_text,

|

| 239 |

+

entities=st_entities,

|

| 240 |

+

language="en",

|

| 241 |

+

score_threshold=st_threshold,

|

| 242 |

+

return_decision_process=st_return_decision_process,

|

| 243 |

+

)

|

| 244 |

+

|

| 245 |

+

# After

|

| 246 |

+

if st_operator != "highlight":

|

| 247 |

+

with col2:

|

| 248 |

+

st.subheader("Output")

|

| 249 |

+

st_anonymize_results = anonymize(st_text, st_analyze_results)

|

| 250 |

+

st.text_area(label="De-identified", value=st_anonymize_results.text, height=400)

|

| 251 |

+

else:

|

| 252 |

+

st.subheader("Highlighted")

|

| 253 |

+

annotated_tokens = annotate(st_text, st_analyze_results)

|

| 254 |

+

# annotated_tokens

|

| 255 |

+

annotated_text(*annotated_tokens)

|

| 256 |

+

|

| 257 |

+

|

| 258 |

+

# json result

|

| 259 |

+

class ToDictEncoder(JSONEncoder):

|

| 260 |

+

"""Encode dict to json."""

|

| 261 |

+

|

| 262 |

+

def default(self, o):

|

| 263 |

+

"""Encode to JSON using to_dict."""

|

| 264 |

+

return o.to_dict()

|

| 265 |

+

|

| 266 |

+

|

| 267 |

+

# table result

|

| 268 |

+

st.subheader(

|

| 269 |

+

"Findings" if not st_return_decision_process else "Findings with decision factors"

|

| 270 |

+

)

|

| 271 |

+

if st_analyze_results:

|

| 272 |

+

df = pd.DataFrame.from_records([r.to_dict() for r in st_analyze_results])

|

| 273 |

+

df["text"] = [st_text[res.start: res.end] for res in st_analyze_results]

|

| 274 |

+

|

| 275 |

+

df_subset = df[["entity_type", "text", "start", "end", "score"]].rename(

|

| 276 |

+

{

|

| 277 |

+

"entity_type": "Entity type",

|

| 278 |

+

"text": "Text",

|

| 279 |

+

"start": "Start",

|

| 280 |

+

"end": "End",

|

| 281 |

+

"score": "Confidence",

|

| 282 |

+

},

|

| 283 |

+

axis=1,

|

| 284 |

+

)

|

| 285 |

+

df_subset["Text"] = [st_text[res.start: res.end] for res in st_analyze_results]

|

| 286 |

+

if st_return_decision_process:

|

| 287 |

+

analysis_explanation_df = pd.DataFrame.from_records(

|

| 288 |

+

[r.analysis_explanation.to_dict() for r in st_analyze_results]

|

| 289 |

+

)

|

| 290 |

+

df_subset = pd.concat([df_subset, analysis_explanation_df], axis=1)

|

| 291 |

+

st.dataframe(df_subset.reset_index(drop=True), use_container_width=True)

|

| 292 |

+

else:

|

| 293 |

+

st.text("No findings")

|
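The token-building loop in `annotate` can be tried in isolation, without Streamlit or Presidio. The following is a minimal sketch under stated assumptions: `Span` is a hypothetical stand-in for an analyzer result (only `start`, `end` and `entity_type` are used), `to_annotated_tokens` is an illustrative name, and the spans are assumed not to overlap, since the app relies on the anonymizer to resolve overlaps first.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Token = Union[str, Tuple[str, str]]


@dataclass
class Span:
    """Hypothetical stand-in for an analyzer result: a labelled character range."""

    start: int
    end: int
    entity_type: str


def to_annotated_tokens(text: str, spans: List[Span]) -> List[Token]:
    """Interleave plain text with (entity_text, entity_type) tuples."""
    tokens: List[Token] = []
    cursor = 0  # index of the first character not yet emitted
    for span in sorted(spans, key=lambda s: s.start):
        if span.start > cursor:
            tokens.append(text[cursor:span.start])  # plain text before the entity
        tokens.append((text[span.start:span.end], span.entity_type))
        cursor = span.end
    if cursor < len(text):
        tokens.append(text[cursor:])  # trailing text, or the whole text if no spans
    return tokens


sample = "My name is David and I live in Maine."
spans = [Span(31, 36, "LOCATION"), Span(11, 16, "PERSON")]
print(to_annotated_tokens(sample, spans))
```

One difference from the loop in the diff: when there are no findings, this sketch returns the whole text as a single token, whereas `annotate` returns an empty list and nothing is displayed.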

requirements.txt

ADDED

|

@@ -0,0 +1,8 @@

| 1 |

+

presidio-analyzer

|

| 2 |

+

presidio-anonymizer

|

| 3 |

+

streamlit

|

| 4 |

+

pandas

|

| 5 |

+

st-annotated-text

|

| 6 |

+

faker

|

| 7 |

+

torch

|

| 8 |

+

transformers

|

transformers_rec/__init__.py

ADDED

|

@@ -0,0 +1,5 @@

| 1 |

+

from .configuration import BERT_DEID_CONFIGURATION, STANFORD_COFIGURATION

|

| 2 |

+

from .transformers_recognizer import TransformersRecognizer

|

| 3 |

+

|

| 4 |

+

__all__ = ["BERT_DEID_CONFIGURATION", "STANFORD_COFIGURATION", "TransformersRecognizer"]

|

| 5 |

+

|

transformers_rec/configuration.py

ADDED

|

@@ -0,0 +1,116 @@

| 1 |

+

STANFORD_COFIGURATION = {

|

| 2 |

+

"DEFAULT_MODEL_PATH": "StanfordAIMI/stanford-deidentifier-base",

|

| 3 |

+

"PRESIDIO_SUPPORTED_ENTITIES": [

|

| 4 |

+

"LOCATION",

|

| 5 |

+

"PERSON",

|

| 6 |

+

"ORGANIZATION",

|

| 7 |

+

"AGE",

|

| 8 |

+

"PHONE_NUMBER",

|

| 9 |

+

"EMAIL",

|

| 10 |

+

"DATE_TIME",

|

| 11 |

+

"DEVICE",

|

| 12 |

+

"ZIP",

|

| 13 |

+

"PROFESSION",

|

| 14 |

+

"USERNAME"

|

| 15 |

+

|

| 16 |

+

],

|

| 17 |

+

"LABELS_TO_IGNORE": ["O"],

|

| 18 |

+

"DEFAULT_EXPLANATION": "Identified as {} by the StanfordAIMI/stanford-deidentifier-base NER model",

|

| 19 |

+

"SUB_WORD_AGGREGATION": "simple",

|

| 20 |

+

"DATASET_TO_PRESIDIO_MAPPING": {

|

| 21 |

+

"DATE": "DATE_TIME",

|

| 22 |

+

"DOCTOR": "PERSON",

|

| 23 |

+

"PATIENT": "PERSON",

|

| 24 |

+

"HOSPITAL": "LOCATION",

|

| 25 |

+

"MEDICALRECORD": "O",

|

| 26 |

+

"IDNUM": "O",

|

| 27 |

+

"ORGANIZATION": "ORGANIZATION",

|

| 28 |

+

"ZIP": "ZIP",

|

| 29 |

+

"PHONE": "PHONE_NUMBER",

|

| 30 |

+

"USERNAME": "USERNAME",

|

| 31 |

+

"STREET": "LOCATION",

|

| 32 |

+

"PROFESSION": "PROFESSION",

|

| 33 |

+

"COUNTRY": "LOCATION",

|

| 34 |

+

"LOCATION-OTHER": "LOCATION",

|

| 35 |

+

"FAX": "PHONE_NUMBER",

|

| 36 |

+

"EMAIL": "EMAIL",

|

| 37 |

+

"STATE": "LOCATION",

|

| 38 |

+

"DEVICE": "DEVICE",

|

| 39 |

+

"ORG": "ORGANIZATION",

|

| 40 |

+

"AGE": "AGE",

|

| 41 |

+

},

|

| 42 |

+

"MODEL_TO_PRESIDIO_MAPPING": {

|

| 43 |

+

"PER": "PERSON",

|

| 44 |

+

"PERSON": "PERSON",

|

| 45 |

+

"LOC": "LOCATION",

|

| 46 |

+

"ORG": "ORGANIZATION",

|

| 47 |

+

"AGE": "AGE",

|

| 48 |

+

"PATIENT": "PERSON",

|

| 49 |

+

"HCW": "PERSON",

|

| 50 |

+

"HOSPITAL": "LOCATION",

|

| 51 |

+

"PATORG": "ORGANIZATION",

|

| 52 |

+

"DATE": "DATE_TIME",

|

| 53 |

+

"PHONE": "PHONE_NUMBER",

|

| 54 |

+

"VENDOR": "ORGANIZATION",

|

| 55 |

+

},

|

| 56 |

+

"CHUNK_OVERLAP_SIZE": 40,

|

| 57 |

+

"CHUNK_SIZE": 600,

|

| 58 |

+

}

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

BERT_DEID_CONFIGURATION = {

|

| 62 |

+

"PRESIDIO_SUPPORTED_ENTITIES": [

|

| 63 |

+

"LOCATION",

|

| 64 |

+

"PERSON",

|

| 65 |

+

"ORGANIZATION",

|

| 66 |

+

"AGE",

|

| 67 |

+

"PHONE_NUMBER",

|

| 68 |

+

"EMAIL",

|

| 69 |

+

"DATE_TIME",

|

| 70 |

+

"ZIP",

|

| 71 |

+

"PROFESSION",

|

| 72 |

+

"USERNAME",

|

| 73 |

+

],

|

| 74 |

+

"DEFAULT_MODEL_PATH": "obi/deid_roberta_i2b2",

|

| 75 |

+

"LABELS_TO_IGNORE": ["O"],

|

| 76 |

+

"DEFAULT_EXPLANATION": "Identified as {} by the obi/deid_roberta_i2b2 NER model",

|

| 77 |

+

"SUB_WORD_AGGREGATION": "simple",

|

| 78 |

+

"DATASET_TO_PRESIDIO_MAPPING": {

|

| 79 |

+

"DATE": "DATE_TIME",

|

| 80 |

+

"DOCTOR": "PERSON",

|

| 81 |

+

"PATIENT": "PERSON",

|

| 82 |

+

"HOSPITAL": "ORGANIZATION",

|

| 83 |

+

"MEDICALRECORD": "O",

|

| 84 |

+

"IDNUM": "O",

|

| 85 |

+

"ORGANIZATION": "ORGANIZATION",

|

| 86 |

+

"ZIP": "O",

|

| 87 |

+

"PHONE": "PHONE_NUMBER",

|

| 88 |

+

"USERNAME": "",

|

| 89 |

+

"STREET": "LOCATION",

|

| 90 |

+

"PROFESSION": "PROFESSION",

|

| 91 |

+

"COUNTRY": "LOCATION",

|

| 92 |

+

"LOCATION-OTHER": "LOCATION",

|

| 93 |

+

"FAX": "PHONE_NUMBER",

|

| 94 |

+

"EMAIL": "EMAIL",

|

| 95 |

+

"STATE": "LOCATION",

|

| 96 |

+

"DEVICE": "O",

|

| 97 |

+

"ORG": "ORGANIZATION",

|

| 98 |

+

"AGE": "AGE",

|

| 99 |

+

},

|

| 100 |

+

"MODEL_TO_PRESIDIO_MAPPING": {

|

| 101 |

+

"PER": "PERSON",

|

| 102 |

+

"LOC": "LOCATION",

|

| 103 |

+

"ORG": "ORGANIZATION",

|

| 104 |

+

"AGE": "AGE",

|

| 105 |

+

"ID": "O",

|

| 106 |

+

"EMAIL": "EMAIL",

|

| 107 |

+

"PATIENT": "PERSON",

|

| 108 |

+

"STAFF": "PERSON",

|

| 109 |

+

"HOSP": "ORGANIZATION",

|

| 110 |

+

"PATORG": "ORGANIZATION",

|

| 111 |

+

"DATE": "DATE_TIME",

|

| 112 |

+

"PHONE": "PHONE_NUMBER",

|

| 113 |

+

},

|

| 114 |

+

"CHUNK_OVERLAP_SIZE": 40,

|

| 115 |

+

"CHUNK_SIZE": 600,

|

| 116 |

+

}
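To make `CHUNK_SIZE` and `CHUNK_OVERLAP_SIZE` concrete, here is a rough sketch of how a text could be cut into overlapping character windows. The code that actually consumes these two values is not visible in this part of the diff, so the function name `split_with_overlap` and the exact boundary handling are assumptions rather than the recognizer's behavior.

```python
from typing import List, Tuple


def split_with_overlap(
    text_length: int, chunk_size: int = 600, overlap: int = 40
) -> List[Tuple[int, int]]:
    """Return (start, end) character offsets of consecutive overlapping chunks."""
    if chunk_size <= overlap:
        raise ValueError("chunk_size must be larger than overlap")
    if text_length <= chunk_size:
        return [(0, text_length)]  # short text: a single chunk

    step = chunk_size - overlap  # how far each new chunk advances
    chunks: List[Tuple[int, int]] = []
    start = 0
    while start < text_length:
        end = min(start + chunk_size, text_length)
        chunks.append((start, end))
        if end == text_length:
            break
        start += step
    return chunks


print(split_with_overlap(1500))
```

With the defaults from the configuration (600 and 40), each chunk starts 560 characters after the previous one, so an entity that straddles a chunk boundary has a chance of appearing whole in one of the two neighboring chunks.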

|
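The mapping dictionaries above translate model labels into Presidio entity names, and `LABELS_TO_IGNORE` lists the labels to drop. A minimal sketch of how such a lookup might work is shown below. It copies a subset of `MODEL_TO_PRESIDIO_MAPPING` from `BERT_DEID_CONFIGURATION`; the helper name `to_presidio_entity`, the stripping of `B-`/`I-` prefixes, and the pass-through of unmapped labels are all assumptions made for illustration.

```python
from typing import Dict, List, Optional

# Subset of MODEL_TO_PRESIDIO_MAPPING from BERT_DEID_CONFIGURATION.
MODEL_TO_PRESIDIO_MAPPING: Dict[str, str] = {
    "PER": "PERSON",
    "LOC": "LOCATION",
    "HOSP": "ORGANIZATION",
    "DATE": "DATE_TIME",
    "ID": "O",
}
LABELS_TO_IGNORE: List[str] = ["O"]


def to_presidio_entity(model_label: str) -> Optional[str]:
    """Map a model label to a Presidio entity name, or None if it is ignored."""
    # Assumption: strip a BIO prefix such as "B-" or "I-" if the model emits one.
    if model_label[:2] in ("B-", "I-"):
        model_label = model_label[2:]
    # Assumption: labels without a mapping entry pass through unchanged.
    entity = MODEL_TO_PRESIDIO_MAPPING.get(model_label, model_label)
    if entity in LABELS_TO_IGNORE:
        return None
    return entity


for label in ["B-PER", "HOSP", "ID", "AGE"]:
    print(label, "->", to_presidio_entity(label))
```

Mapping a label to `"O"`, as the configuration does for `ID`, is what causes it to be filtered out by `LABELS_TO_IGNORE` in this sketch.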

transformers_rec/transformers_recognizer.py

ADDED

|

@@ -0,0 +1,324 @@

| 1 |

+

import copy

|

| 2 |

+

import logging

|

| 3 |

+

from typing import Optional, List

|

| 4 |

+

|

| 5 |

+

import torch

|

| 6 |

+

from presidio_analyzer import (

|

| 7 |

+

RecognizerResult,

|

| 8 |

+

EntityRecognizer,

|

| 9 |

+

AnalysisExplanation,

|

| 10 |

+

)

|

| 11 |

+

from presidio_analyzer.nlp_engine import NlpArtifacts

|

| 12 |

+

|

| 13 |

+

from .configuration import BERT_DEID_CONFIGURATION

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

logger = logging.getLogger("presidio-analyzer")

|

| 17 |

+

|

| 18 |

+

try:

|

| 19 |

+

from transformers import (

|

| 20 |

+

AutoTokenizer,

|

| 21 |

+

AutoModelForTokenClassification,

|

| 22 |

+

pipeline,

|

| 23 |

+

TokenClassificationPipeline,

|

| 24 |

+

)

|

| 25 |

+

|

| 26 |

+

except ImportError:

|

| 27 |

+

logger.error("transformers is not installed")

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

class TransformersRecognizer(EntityRecognizer):

|

| 31 |

+

"""

|

| 32 |

+

Wrapper for a transformers model, to be used within Presidio Analyzer.

|

| 33 |

+

The class loads models hosted on HuggingFace - https://huggingface.co/

|

| 34 |

+

and loads the model and tokenizer into a TokenClassification pipeline.

|

| 35 |

+

Samples are split into short text chunks, ideally shorter than max_length input_ids of the individual model,

|

| 36 |

+

to avoid truncation by the Tokenizer and loss of information

|

| 37 |

+

|

| 38 |

+

A configuration object should be maintained for each dataset-model combination and translate

|

| 39 |

+

entity names into a standardized view. A sample of a configuration file is attached in

|

| 40 |

+

the example.

|

| 41 |

+

:param supported_entities: List of entities to run inference on

|

| 42 |

+

:type supported_entities: Optional[List[str]]

|

| 43 |

+

:param pipeline: Instance of a TokenClassificationPipeline including a Tokenizer and a Model, defaults to None

|

| 44 |

+

:type pipeline: Optional[TokenClassificationPipeline], optional

|

| 45 |

+

:param model_path: string referencing a HuggingFace uploaded model to be used for Inference, defaults to None

|

| 46 |

+

:type model_path: Optional[str], optional

|

| 47 |

+

|

| 48 |

+

:example

|

| 49 |

+

>from presidio_analyzer import AnalyzerEngine, RecognizerRegistry

|

| 50 |

+

>model_path = "obi/deid_roberta_i2b2"

|

| 51 |

+

>transformers_recognizer = TransformersRecognizer(model_path=model_path,

|

| 52 |

+

>supported_entities = model_configuration.get("PRESIDIO_SUPPORTED_ENTITIES"))

|

| 53 |

+

>transformers_recognizer.load_transformer(**model_configuration)

|

| 54 |

+

>registry = RecognizerRegistry()

|

| 55 |

+

>registry.add_recognizer(transformers_recognizer)

|

| 56 |

+

>analyzer = AnalyzerEngine(registry=registry)

|

| 57 |

+

>sample = "My name is Christopher and I live in Irbid."

|

| 58 |

+

>results = analyzer.analyze(sample, language="en",return_decision_process=True)

|

| 59 |

+

|

| 60 |

+

>for result in results:

|

| 61 |

+

> print(result,'----', sample[result.start:result.end])

|

| 62 |

+

"""

|

| 63 |

+

|

| 64 |

+

def load(self) -> None:

|

| 65 |

+

pass

|

| 66 |

+

|

| 67 |

+

def __init__(

|

| 68 |

+

self,

|

| 69 |

+

model_path: Optional[str] = None,

|

| 70 |

+

pipeline: Optional[TokenClassificationPipeline] = None,

|

| 71 |

+

supported_entities: Optional[List[str]] = None,

|

| 72 |

+

):

|

| 73 |

+

if not supported_entities:

|

| 74 |

+

supported_entities = BERT_DEID_CONFIGURATION[

|

| 75 |

+

"PRESIDIO_SUPPORTED_ENTITIES"

|

| 76 |

+

]

|

| 77 |

+

super().__init__(

|

| 78 |

+

supported_entities=supported_entities,

|

| 79 |

+

name=f"Transformers model {model_path}",

|

| 80 |

+

)

|

| 81 |

+

|

| 82 |

+

self.model_path = model_path

|

| 83 |

+

self.pipeline = pipeline

|

| 84 |

+

self.is_loaded = False

|

| 85 |

+

|

| 86 |

+

self.aggregation_mechanism = None

|

| 87 |

+

self.ignore_labels = None

|

| 88 |

+

self.model_to_presidio_mapping = None

|

| 89 |

+

self.entity_mapping = None

|

| 90 |

+

self.default_explanation = None

|

| 91 |

+

self.text_overlap_length = None

|

| 92 |

+

self.chunk_length = None

|

| 93 |

+

|

| 94 |

+

def load_transformer(self, **kwargs) -> None:

|

| 95 |

+

"""Load external configuration parameters and set default values.

|

| 96 |

+

|

| 97 |

+

:param kwargs: define default values for class attributes and modify pipeline behavior

|

| 98 |

+

**DATASET_TO_PRESIDIO_MAPPING (dict) - defines mapping entity strings from dataset format to Presidio format

|

| 99 |

+

**MODEL_TO_PRESIDIO_MAPPING (dict) - defines mapping entity strings from chosen model format to Presidio format

|

| 100 |

+

**SUB_WORD_AGGREGATION(str) - define how to aggregate sub-word tokens into full words and spans as defined

|

| 101 |

+

in HuggingFace https://huggingface.co/transformers/v4.8.0/main_classes/pipelines.html#transformers.TokenClassificationPipeline # noqa

|

| 102 |

+

**CHUNK_OVERLAP_SIZE (int) - number of overlapping characters in each text chunk

|

| 103 |

+

when splitting a single text into multiple inferences

|

| 104 |

+

**CHUNK_SIZE (int) - number of characters in each chunk of text

|

| 105 |

+

**LABELS_TO_IGNORE (List[str]) - list of entity labels to skip during evaluation. Defaults to ["O"]

|

| 106 |

+

**DEFAULT_EXPLANATION (str) - string format to use for prediction explanations

|

| 107 |

+

"""

|

| 108 |

+

|

| 109 |

+

self.entity_mapping = kwargs.get("DATASET_TO_PRESIDIO_MAPPING", {})

|

| 110 |

+

self.model_to_presidio_mapping = kwargs.get("MODEL_TO_PRESIDIO_MAPPING", {})

|

| 111 |

+

self.ignore_labels = kwargs.get("LABELS_TO_IGNORE", ["O"])

|

| 112 |

+

self.aggregation_mechanism = kwargs.get("SUB_WORD_AGGREGATION", "simple")

|

| 113 |

+

self.default_explanation = kwargs.get("DEFAULT_EXPLANATION", None)

|

| 114 |

+

self.text_overlap_length = kwargs.get("CHUNK_OVERLAP_SIZE", 40)

|

| 115 |

+

self.chunk_length = kwargs.get("CHUNK_SIZE", 600)

|

| 116 |

+

if not self.pipeline:

|

| 117 |

+

if not self.model_path:

|

| 118 |

+

self.model_path = "obi/deid_roberta_i2b2"

|

| 119 |

+

logger.warning(

|

| 120 |

+

f"Both 'pipeline' and 'model_path' arguments are None. Using default model_path={self.model_path}"

|

| 121 |

+

)

|

| 122 |

+

|

| 123 |

+

self._load_pipeline()

|

| 124 |

+

|

| 125 |

+

def _load_pipeline(self) -> None:

|

| 126 |

+

"""Initialize NER transformers_rec pipeline using the model_path provided"""

|

| 127 |

+

|

| 128 |

+

logger.debug(f"Initializing NER pipeline using {self.model_path} path")

|

| 129 |

+

device = 0 if torch.cuda.is_available() else -1

|

| 130 |

+

self.pipeline = pipeline(

|

| 131 |

+

"ner",

|

| 132 |

+

model=AutoModelForTokenClassification.from_pretrained(self.model_path),

|

| 133 |

+

tokenizer=AutoTokenizer.from_pretrained(self.model_path),

|

| 134 |

+

# Will attempt to group sub-entities to word level

|

| 135 |

+

aggregation_strategy=self.aggregation_mechanism,

|

| 136 |

+

device=device,

|

| 137 |

+

framework="pt",

|

| 138 |

+

ignore_labels=self.ignore_labels,

|

| 139 |

+

)

|

| 140 |

+

|

| 141 |

+

self.is_loaded = True

|

| 142 |

+

|

| 143 |

+

def get_supported_entities(self) -> List[str]:

|

| 144 |

+

"""

|

| 145 |

+

Return supported entities by this model.

|

| 146 |

+

:return: List of the supported entities.

|

| 147 |

+

"""

|

| 148 |

+

return self.supported_entities

|

| 149 |

+

|

| 150 |

+

# Class to use transformers_rec with Presidio as an external recognizer.

|

| 151 |

+

def analyze(

|

| 152 |

+

self, text: str, entities: List[str], nlp_artifacts: NlpArtifacts = None

|

| 153 |

+

) -> List[RecognizerResult]:

|

| 154 |

+

"""

|

| 155 |

+

Analyze text using transformers_rec model to produce NER tagging.

|

| 156 |

+

:param text : The text for analysis.

|

| 157 |

+

:param entities: Not working properly for this recognizer.

|

| 158 |

+

:param nlp_artifacts: Not used by this recognizer.

|

| 159 |

+

:return: The list of Presidio RecognizerResult constructed from the recognized

|

| 160 |

+

transformers_rec detections.

|

| 161 |

+

"""

|

| 162 |

+

|

| 163 |

+

results = list()

|

| 164 |

+

# Run transformer model on the provided text

|

| 165 |

+

ner_results = self._get_ner_results_for_text(text)

|

| 166 |

+

|

| 167 |

+

for res in ner_results:

|

| 168 |

+

entity = self.model_to_presidio_mapping.get(res["entity_group"], None)

|

| 169 |

+

if not entity:

|

| 170 |

+

continue

|

| 171 |

+

|

| 172 |

+

res["entity_group"] = self.__check_label_transformer(res["entity_group"])

|

| 173 |

+

textual_explanation = self.default_explanation.format(res["entity_group"]) if self.default_explanation else None  # DEFAULT_EXPLANATION defaults to None

|

| 174 |

+

explanation = self.build_transformers_explanation(

|

| 175 |

+

float(round(res["score"], 2)), textual_explanation, res["word"]

|

| 176 |

+

)

|

| 177 |

+

transformers_result = self._convert_to_recognizer_result(res, explanation)

|

| 178 |

+

|

| 179 |

+

results.append(transformers_result)

|

| 180 |

+

|

| 181 |

+

return results

|

| 182 |

+

|

| 183 |

+

@staticmethod

|

| 184 |

+

def split_text_to_word_chunks(

|

| 185 |

+

input_length: int, chunk_length: int, overlap_length: int

|

| 186 |

+

) -> List[List]:

|

| 187 |

+

"""The function calculates chunks of text with size chunk_length. Each chunk has overlap_length number of

|

| 188 |

+

positions (characters, when called from _get_ner_results_for_text) to create context and continuity for the model

|

| 189 |

+

|

| 190 |

+

:param input_length: Length of input_ids for a given text

|

| 191 |

+

:type input_length: int

|

| 192 |

+

:param chunk_length: Length of each chunk of input_ids.

|

| 193 |

+

Should match the max input length of the transformer model

|

| 194 |

+

:type chunk_length: int

|

| 195 |

+

:param overlap_length: Number of overlapping positions shared by consecutive chunks

|

| 196 |

+

:type overlap_length: int

|

| 197 |

+

:return: List of start and end positions for individual text chunks

|

| 198 |

+

:rtype: List[List]

|

| 199 |

+

"""

|

| 200 |

+

if input_length < chunk_length:

|

| 201 |

+

return [[0, input_length]]

|

| 202 |

+

if chunk_length <= overlap_length:

|

| 203 |

+

logger.warning(

|

| 204 |

+

"overlap_length should be shorter than chunk_length, setting overlap_length to half of chunk_length"

|

| 205 |

+

)

|

| 206 |

+

overlap_length = chunk_length // 2

|

| 207 |

+

return [

|

| 208 |

+

[i, min([i + chunk_length, input_length])]

|

| 209 |

+

for i in range(

|

| 210 |

+

0, input_length - overlap_length, chunk_length - overlap_length

|

| 211 |

+

)

|

| 212 |

+

]

|

| 213 |

+

|

| 214 |

+

def _get_ner_results_for_text(self, text: str) -> List[dict]:

|

| 215 |

+

"""The function runs model inference on the provided text.

|

| 216 |

+

The text is split into chunks with n overlapping characters.

|

| 217 |

+

The results are then aggregated and duplications are removed.

|

| 218 |

+

|

| 219 |

+

:param text: The text to run inference on

|

| 220 |

+

:type text: str

|

| 221 |

+

:return: List of entity predictions on the word level

|

| 222 |

+

:rtype: List[dict]

|

| 223 |

+

"""

|

| 224 |

+

model_max_length = self.pipeline.tokenizer.model_max_length

|

| 225 |

+

# calculate inputs based on the text

|

| 226 |

+

text_length = len(text)

|

| 227 |

+

# split text into chunks

|

| 228 |

+

logger.info(

|

| 229 |

+

f"splitting the text into chunks, text length {text_length}, model max length {model_max_length}"

|

| 230 |

+

)

|

| 231 |

+

predictions = list()

|

| 232 |

+

chunk_indexes = TransformersRecognizer.split_text_to_word_chunks(

|

| 233 |

+

text_length, self.chunk_length, self.text_overlap_length

|

| 234 |

+

)

|

| 235 |

+

|

| 236 |

+

# iterate over text chunks and run inference

|

| 237 |

+

for chunk_start, chunk_end in chunk_indexes:

|

| 238 |

+

chunk_text = text[chunk_start:chunk_end]

|

| 239 |

+

chunk_preds = self.pipeline(chunk_text)

|

| 240 |

+

|

| 241 |

+

# align indexes to match the original text - add to each position the value of chunk_start

|

| 242 |

+

aligned_predictions = list()

|

| 243 |

+

for prediction in chunk_preds:

|

| 244 |

+

prediction_tmp = copy.deepcopy(prediction)

|

| 245 |

+

prediction_tmp["start"] += chunk_start

|

| 246 |

+

prediction_tmp["end"] += chunk_start

|

| 247 |

+

aligned_predictions.append(prediction_tmp)

|

| 248 |

+

|

| 249 |

+

predictions.extend(aligned_predictions)

|

| 250 |

+

|

| 251 |

+

# remove duplicates

|

| 252 |

+

predictions = [dict(t) for t in {tuple(d.items()) for d in predictions}]

|

| 253 |

+

return predictions

|

| 254 |

+

|

| 255 |

+

@staticmethod

|

| 256 |

+

def _convert_to_recognizer_result(

|

| 257 |

+

prediction_result: dict, explanation: AnalysisExplanation

|

| 258 |

+

) -> RecognizerResult:

|

| 259 |

+

"""The method parses NER model predictions into a RecognizerResult format to enable downstream analysis

|

| 260 |

+

|

| 261 |

+

:param prediction_result: A single example of entity prediction

|

| 262 |

+

:type prediction_result: dict

|

| 263 |

+

:param explanation: Textual representation of model prediction

|

| 264 |

+

:type explanation: AnalysisExplanation

|

| 265 |

+

:return: An instance of RecognizerResult which is used for model evaluation calculations

|

| 266 |

+

:rtype: RecognizerResult

|

| 267 |

+

"""

|

| 268 |

+

|

| 269 |

+

transformers_results = RecognizerResult(

|

| 270 |

+

entity_type=prediction_result["entity_group"],

|

| 271 |

+

start=prediction_result["start"],

|

| 272 |

+

end=prediction_result["end"],

|

| 273 |

+

score=float(round(prediction_result["score"], 2)),

|

| 274 |

+

analysis_explanation=explanation,

|

| 275 |

+

)

|

| 276 |

+

|

| 277 |

+

return transformers_results

|

| 278 |

+

|

| 279 |

+

def build_transformers_explanation(

|

| 280 |

+

self,

|

| 281 |

+

original_score: float,

|

| 282 |

+

explanation: str,

|

| 283 |

+

pattern: str,

|

| 284 |

+

) -> AnalysisExplanation:

|

| 285 |

+

"""

|

| 286 |

+

Create explanation for why this result was detected.

|

| 287 |

+

:param original_score: Score given by this recognizer

|

| 288 |

+

:param explanation: Explanation string

|

| 289 |

+

:param pattern: Text matched by the model (analyze passes the predicted word here, not a regex)

|

| 290 |

+

:return: Structured explanation and scores of a NER model prediction

|

| 291 |

+

:rtype: AnalysisExplanation

|

| 292 |

+

"""

|

| 293 |

+

explanation = AnalysisExplanation(

|

| 294 |

+

recognizer=self.__class__.__name__,

|

| 295 |

+

original_score=float(original_score),

|

| 296 |

+

textual_explanation=explanation,

|

| 297 |

+

pattern=pattern,

|

| 298 |

+

)

|

| 299 |

+

return explanation

|

| 300 |

+

|

| 301 |

+

def __check_label_transformer(self, label: str) -> str:

|

| 302 |

+

"""The function validates the predicted label is identified by Presidio

|

| 303 |

+

and maps the string into a Presidio representation

|

| 304 |

+

:param label: Predicted label by the model

|

| 305 |

+

:type label: str

|

| 306 |

+

:return: Returns the predicted entity if the label is found in model_to_presidio mapping dictionary

|

| 307 |

+

and is supported by Presidio entities

|

| 308 |

+

:rtype: str

|

| 309 |

+

"""

|

| 310 |

+

|

| 311 |

+

if label == "O":

|

| 312 |

+

return label

|

| 313 |

+

|

| 314 |

+

# convert model label to presidio label

|

| 315 |

+

entity = self.model_to_presidio_mapping.get(label, None)

|

| 316 |

+

|

| 317 |

+

if entity is None:

|

| 318 |

+

logger.warning(f"Found unrecognized label {label}, returning entity as 'O'")

|

| 319 |

+

return "O"

|

| 320 |

+

|

| 321 |

+

if entity not in self.supported_entities:

|

| 322 |

+

logger.warning(f"Found entity {entity} which is not supported by Presidio")

|

| 323 |

+

return "O"

|

| 324 |

+

return entity

|
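
The chunking and merging logic in `split_text_to_word_chunks` and `_get_ner_results_for_text` can be tried out without loading a model. The following is a minimal standalone sketch, not part of the uploaded file: the function names `split_into_chunks` and `merge_chunk_predictions` are hypothetical, and the prediction dicts are hand-written stand-ins for pipeline output. Unlike the set-based de-duplication in the file, this version keeps predictions in their original order.

```python
from typing import Dict, List, Tuple


def split_into_chunks(
    input_length: int, chunk_length: int, overlap_length: int
) -> List[List[int]]:
    """Return [start, end] index pairs covering input_length with overlap."""
    if input_length < chunk_length:
        return [[0, input_length]]
    if chunk_length <= overlap_length:
        # Same fallback as the recognizer: use half of the chunk length.
        overlap_length = chunk_length // 2
    step = chunk_length - overlap_length
    return [
        [i, min(i + chunk_length, input_length)]
        for i in range(0, input_length - overlap_length, step)
    ]


def merge_chunk_predictions(
    chunk_results: List[Tuple[int, List[Dict]]]
) -> List[Dict]:
    """Shift chunk-relative offsets to text offsets and drop exact duplicates.

    chunk_results is a list of (chunk_start, predictions) pairs; this input
    shape is an assumption made for the sketch.
    """
    seen = set()
    merged = []
    for chunk_start, predictions in chunk_results:
        for prediction in predictions:
            shifted = dict(
                prediction,
                start=prediction["start"] + chunk_start,
                end=prediction["end"] + chunk_start,
            )
            key = tuple(shifted.items())
            if key not in seen:  # identical dicts come from overlapping chunks
                seen.add(key)
                merged.append(shifted)
    return merged


if __name__ == "__main__":
    print(split_into_chunks(1000, 600, 40))
```

With the defaults used in `load_transformer` (`CHUNK_SIZE=600`, `CHUNK_OVERLAP_SIZE=40`), a 1000-character text yields two chunks that share 40 characters. Note that duplicates are only removed when every field matches, including the score, so an entity seen in two overlapping chunks with slightly different scores would be kept twice.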