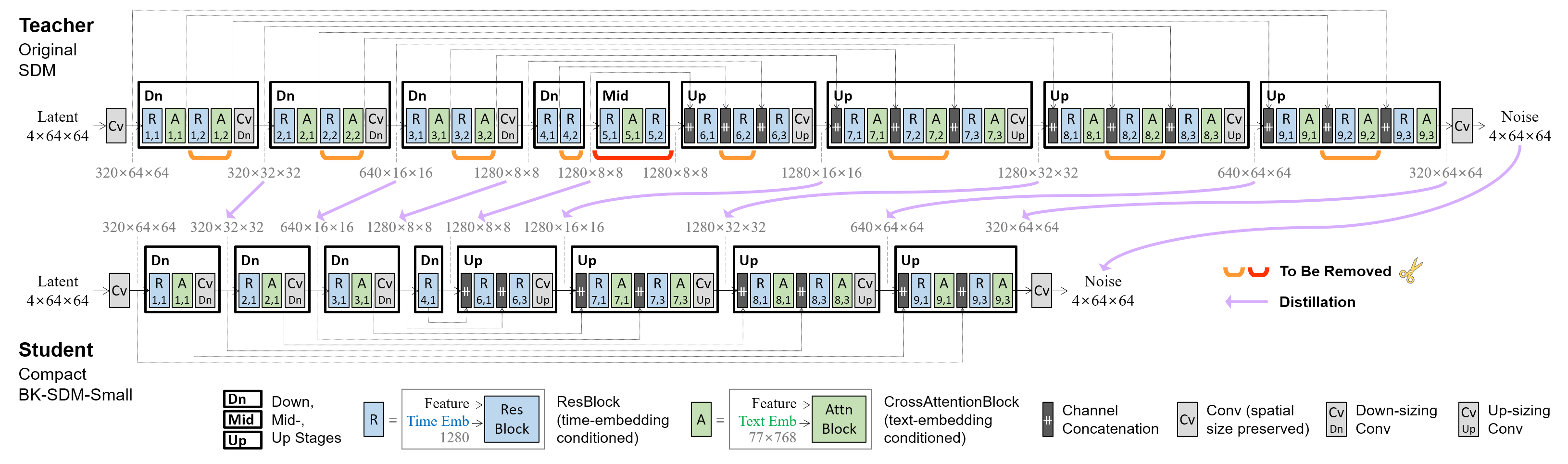

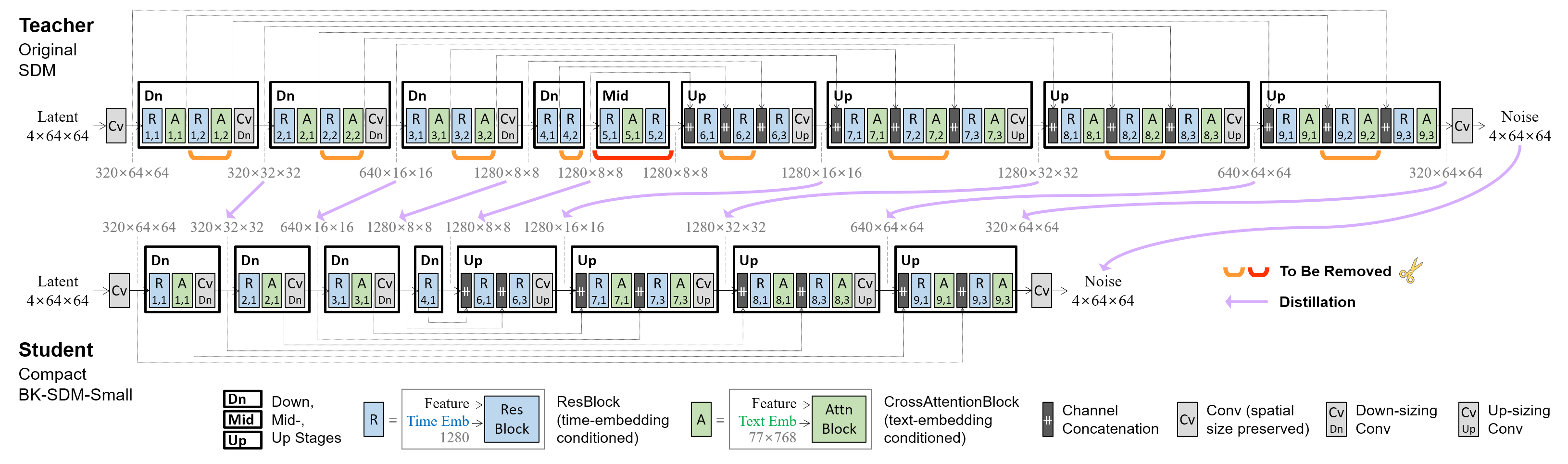

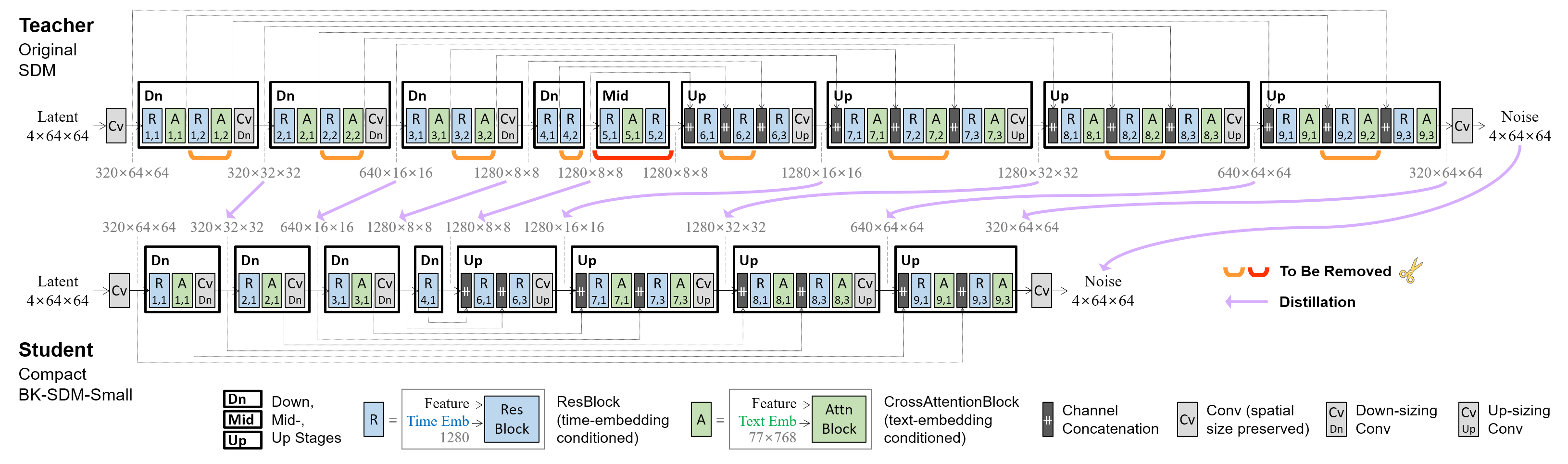

This demo showcases a lightweight Stable Diffusion model (SDM) for general-purpose text-to-image synthesis. Our model [**BK-SDM-Small**](https://huggingface.co/nota-ai/bk-sdm-small) achieves a **36% reduction** in both parameters and latency. The model is built by (i) removing several residual and attention blocks from the U-Net of [SDM-v1.4](https://huggingface.co/CompVis/stable-diffusion-v1-4) and (ii) distillation pretraining on only 0.22M LAION pairs (fewer than 0.1% of the full training set). Despite very limited training resources, our model can imitate the original SDM by benefiting from transferred knowledge.

- **For more information & acknowledgments**, please see the [Paper](https://arxiv.org/abs/2305.15798), [GitHub](https://github.com/Nota-NetsPresso/BK-SDM), and BK-SDM-{[Base](https://huggingface.co/nota-ai/bk-sdm-base), [Small](https://huggingface.co/nota-ai/bk-sdm-small), [Tiny](https://huggingface.co/nota-ai/bk-sdm-tiny)} Model Cards.

- This research was accepted to [**ICCV 2023 Demo Track**](https://iccv2023.thecvf.com/) & [**ICML 2023 Workshop on Efficient Systems for Foundation Models** (ES-FoMo)](https://es-fomo.com/).

- Please be aware that your prompts are logged, _without_ any personally identifiable information.

- To get different images from the same prompt, please change _Random Seed_ in Advanced Settings (the demo reuses the first latent code sampled for each seed, so an unchanged seed yields the same image).
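
To try the model outside this demo, and to see why the seed controls the output, here is a minimal sketch using the `diffusers` library. It is an illustration under stated assumptions, not the demo's actual code: the helper names `sample_latent` and `generate` are hypothetical, and passing a pre-sampled latent to the pipeline is only one way to fix the starting noise per seed.

```python
import torch

MODEL_ID = "nota-ai/bk-sdm-small"  # model card linked above


def sample_latent(seed: int, height: int = 512, width: int = 512) -> torch.Tensor:
    """Draw the initial latent code for a seed (hypothetical helper).

    SD-v1 style latents have 4 channels at 1/8 of the image resolution.
    """
    generator = torch.Generator(device="cpu").manual_seed(seed)
    return torch.randn((1, 4, height // 8, width // 8), generator=generator)


def generate(prompt: str, seed: int = 0, steps: int = 25, device: str = "cuda"):
    """Generate one 512x512 image (hypothetical helper).

    Downloads the model weights on first use.
    """
    # Imported lazily because diffusers is a heavy dependency.
    from diffusers import StableDiffusionPipeline

    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionPipeline.from_pretrained(MODEL_ID, torch_dtype=dtype)
    pipe = pipe.to(device)

    # Same seed -> same starting latent -> same image for a given prompt.
    latents = sample_latent(seed).to(device=device, dtype=dtype)
    return pipe(prompt, num_inference_steps=steps, latents=latents).images[0]
```

For example, `generate("a tropical bird sitting on a branch of a tree", seed=1)` and the same call with `seed=2` should start from different latents and therefore give different images, while repeating a seed reproduces the same starting point.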

**Demo Environment**: [July/27/2023] NVIDIA T4-small (4 vCPU · 15 GB RAM · 16 GB VRAM): the original SDM takes 5~10 sec per inference (for a 512×512 image with 25 denoising steps).

Previous Env Setup:

[June/30/2023] Free CPU-basic (2 vCPU · 16 GB RAM): the original SDM takes 7~10 min per inference.

[May/31/2023] NVIDIA T4-small (4 vCPU · 15 GB RAM · 16 GB VRAM)