+

+  +

+

+

+

+

+

+

+

+

+

+

+

+

+  +

+

+ + Support This Project + + + +

+ + [](https://github.com/Gourieff/ComfyUI-ReActor/commits/main) +  + [](https://github.com/Gourieff/ComfyUI-ReActor/issues?cacheSeconds=0) + [](https://github.com/Gourieff/ComfyUI-ReActor/issues?q=is%3Aissue+state%3Aclosed) +  + + English | [Русский](/README_RU.md) + +# ReActor Nodes for ComfyUI

-=SFW-Friendly=- + +

+

+### The Fast and Simple Face Swap Extension Nodes for ComfyUI, based on [blocked ReActor](https://github.com/Gourieff/comfyui-reactor-node) - now it has a nudity detector to avoid using this software with 18+ content

+

+> By using this Node you accept and assume [responsibility](#disclaimer)

+

+ +

+

+

+

+

+

+

+

+

+

+

+

+

+  +

+ + + Support This Project + + + +

+ + [](https://github.com/Gourieff/ComfyUI-ReActor/commits/main) +  + [](https://github.com/Gourieff/ComfyUI-ReActor/issues?cacheSeconds=0) + [](https://github.com/Gourieff/ComfyUI-ReActor/issues?q=is%3Aissue+state%3Aclosed) +  + + English | [Русский](/README_RU.md) + +# ReActor Nodes for ComfyUI

-=SFW-Friendly=- + +

+

+---

+[**What's new**](#latestupdate) | [**Installation**](#installation) | [**Usage**](#usage) | [**Troubleshooting**](#troubleshooting) | [**Updating**](#updating) | [**Disclaimer**](#disclaimer) | [**Credits**](#credits) | [**Note!**](#note)

+

+---

+

+

+

+

+

+## What's new in the latest update

+

+### 0.6.0 ALPHA1

+

+- New Node `ReActorSetWeight` - you can now set the strength of face swap for `source_image` or `face_model` from 0% to 100% (in 12.5% step)

+

+ +

+ +

+ +

+

+

+ +

+ +

+

+

+You can download ReSwapper models here:

+https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models

+Just put them into the "models/reswapper" directory.

+

+- NSFW-detector to not violate [GitHub rules](https://docs.github.com/en/site-policy/acceptable-use-policies/github-misinformation-and-disinformation#synthetic--manipulated-media-tools)

+- New node "Unload ReActor Models" - is useful for complex WFs when you need to free some VRAM utilized by ReActor

+

+ +

+- Support of ORT CoreML and ROCM EPs, just install onnxruntime version you need

+- Install script improvements to install latest versions of ORT-GPU

+

+

+

+- Support of ORT CoreML and ROCM EPs, just install onnxruntime version you need

+- Install script improvements to install latest versions of ORT-GPU

+

+

+ +

+

+

+- Fixes and improvements

+

+

+### 0.5.1

+

+- Support of GPEN 1024/2048 restoration models (available in the HF dataset https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models)

+- ReActorFaceBoost Node - an attempt to improve the quality of swapped faces. The idea is to restore and scale the swapped face (according to the `face_size` parameter of the restoration model) BEFORE pasting it to the target image (via inswapper algorithms), more information is [here (PR#321)](https://github.com/Gourieff/comfyui-reactor-node/pull/321)

+

+ +

+[Full size demo preview](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/0.5.1-whatsnew-02.png)

+

+- Sorting facemodels alphabetically

+- A lot of fixes and improvements

+

+### [0.5.0 BETA4](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.5.0)

+

+- Spandrel lib support for GFPGAN

+

+### 0.5.0 BETA3

+

+- Fixes: "RAM issue", "No detection" for MaskingHelper

+

+### 0.5.0 BETA2

+

+- You can now build a blended face model from a batch of face models you already have, just add the "Make Face Model Batch" node to your workflow and connect several models via "Load Face Model"

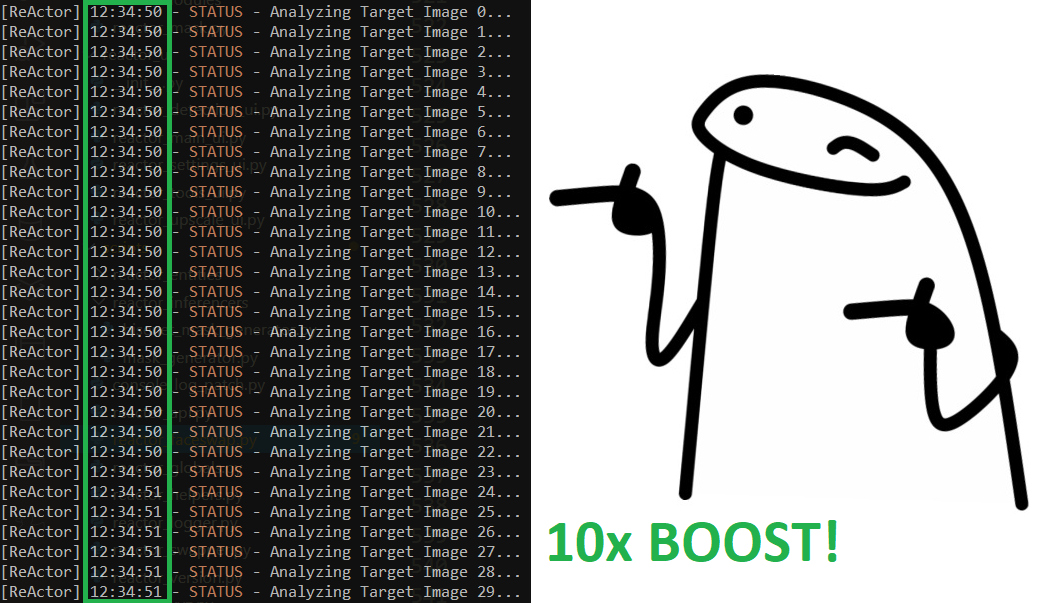

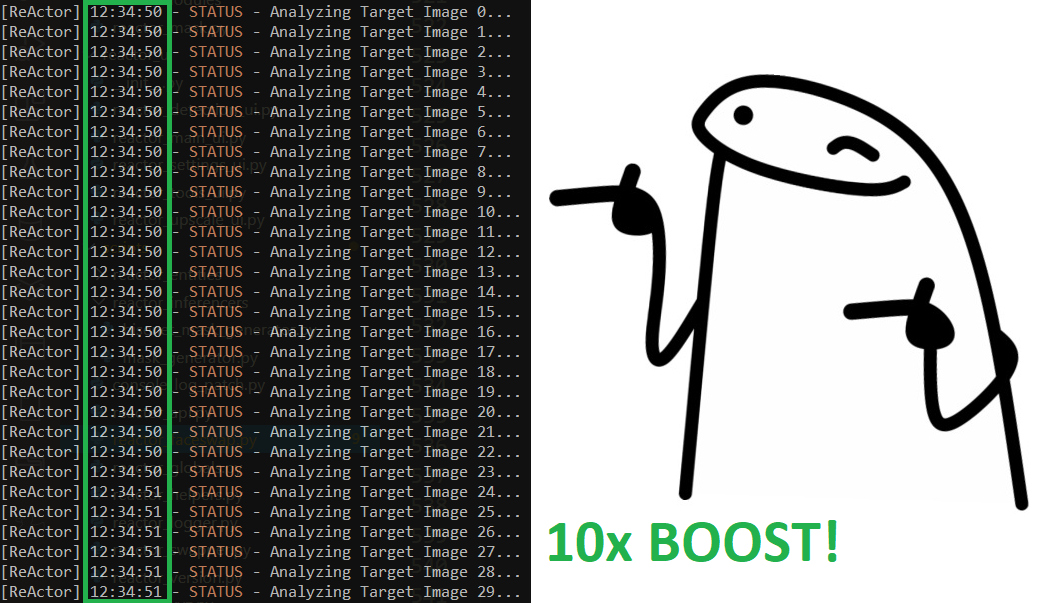

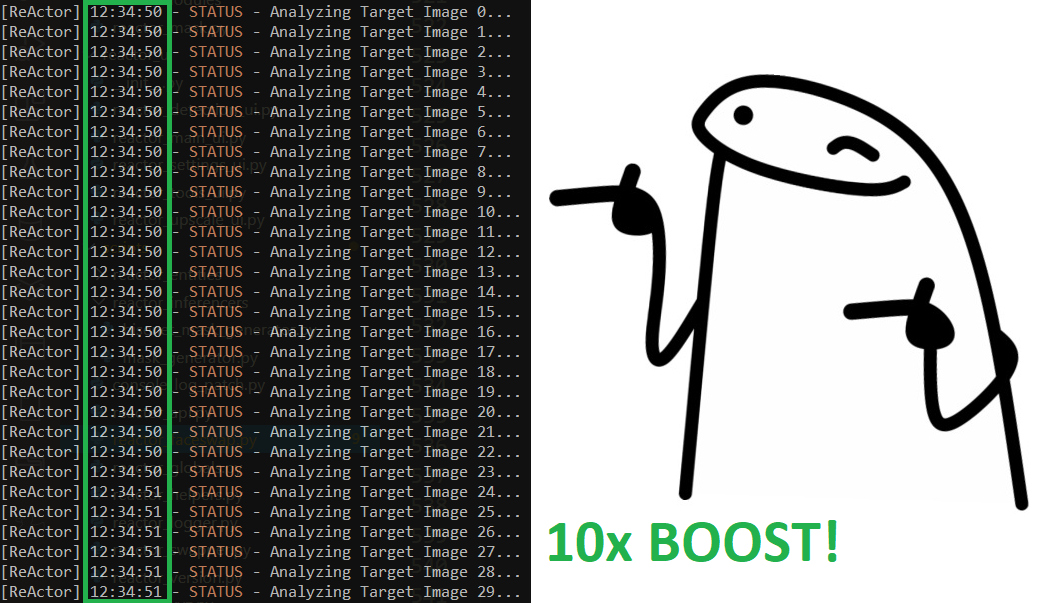

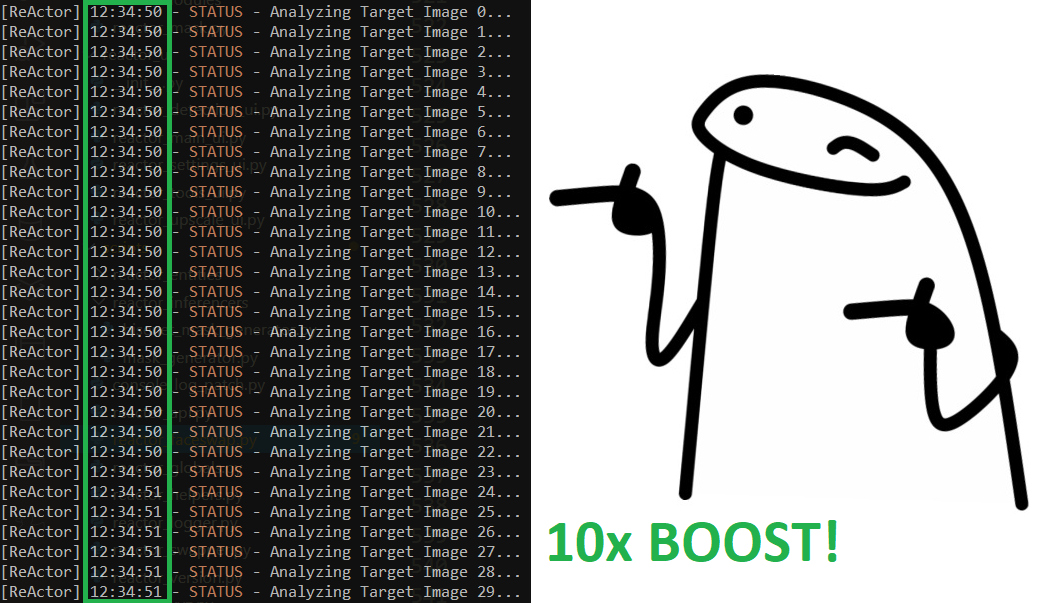

+- Huge performance boost of the image analyzer's module! 10x speed up! Working with videos is now a pleasure!

+

+

+

+[Full size demo preview](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/0.5.1-whatsnew-02.png)

+

+- Sorting facemodels alphabetically

+- A lot of fixes and improvements

+

+### [0.5.0 BETA4](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.5.0)

+

+- Spandrel lib support for GFPGAN

+

+### 0.5.0 BETA3

+

+- Fixes: "RAM issue", "No detection" for MaskingHelper

+

+### 0.5.0 BETA2

+

+- You can now build a blended face model from a batch of face models you already have, just add the "Make Face Model Batch" node to your workflow and connect several models via "Load Face Model"

+- Huge performance boost of the image analyzer's module! 10x speed up! Working with videos is now a pleasure!

+

+ +

+### 0.5.0 BETA1

+

+- SWAPPED_FACE output for the Masking Helper Node

+- FIX: Empty A-channel for Masking Helper IMAGE output (causing errors with some nodes) was removed

+

+### 0.5.0 ALPHA1

+

+- ReActorBuildFaceModel Node got "face_model" output to provide a blended face model directly to the main Node:

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v2.json)

+

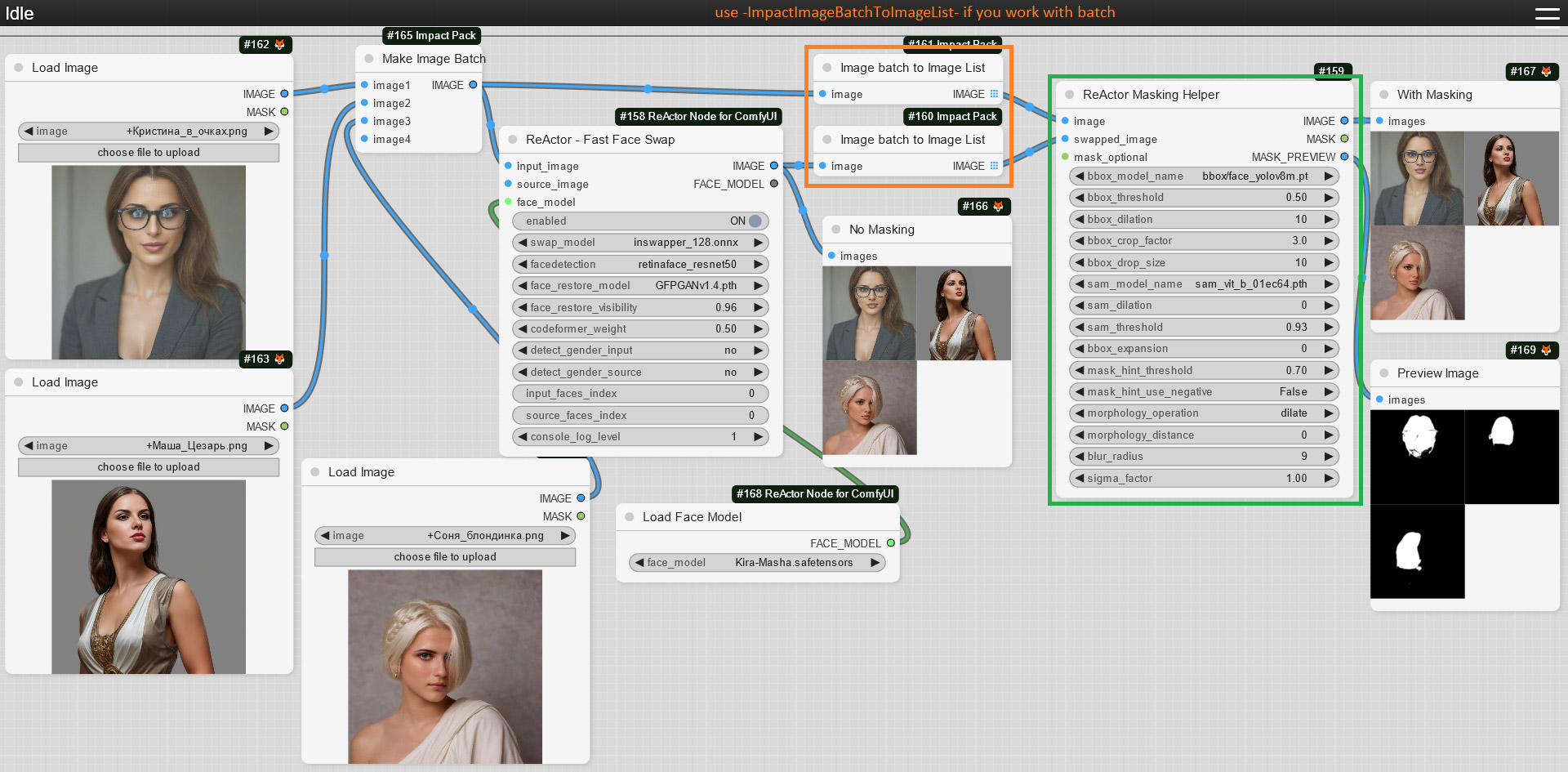

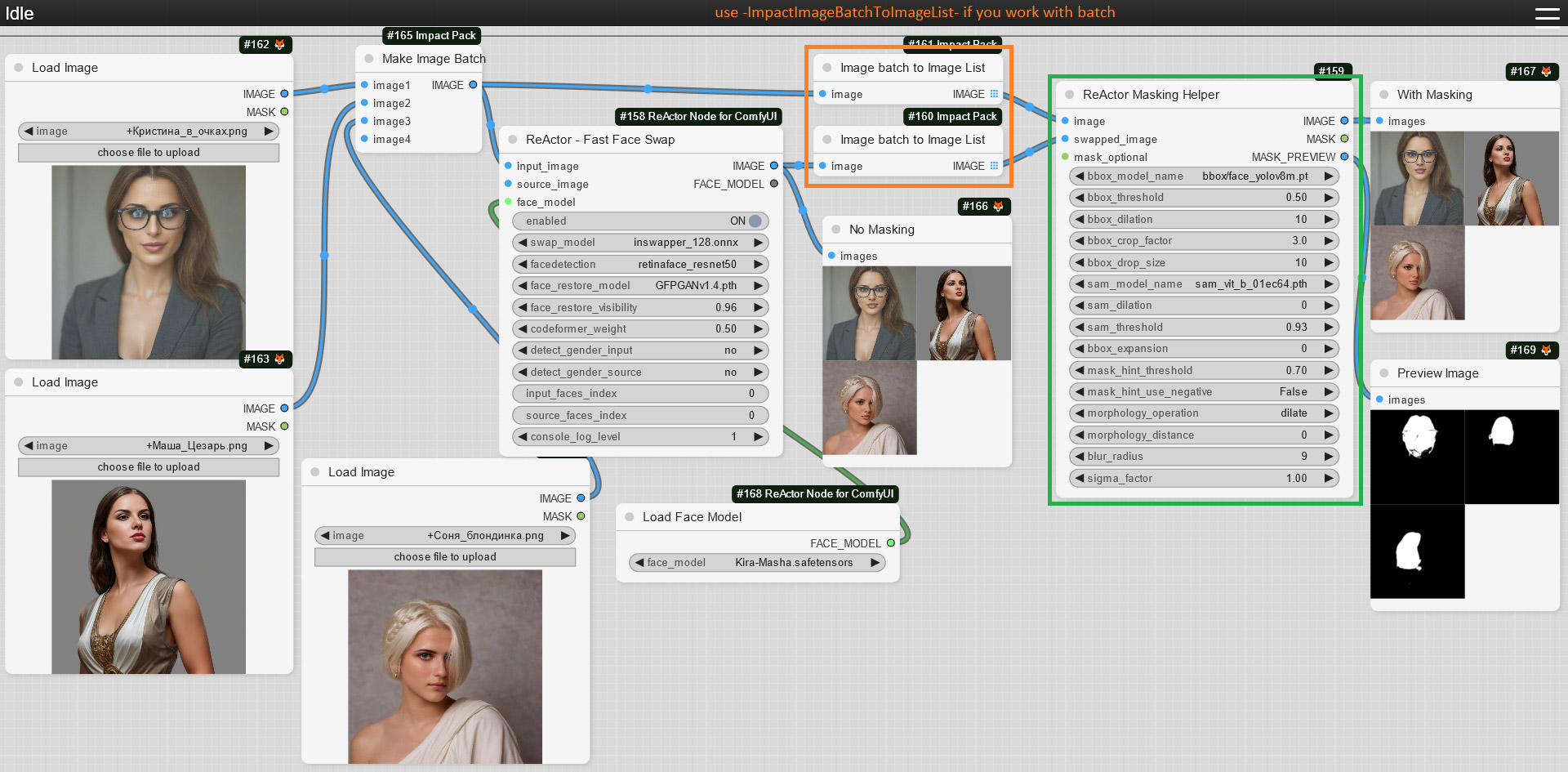

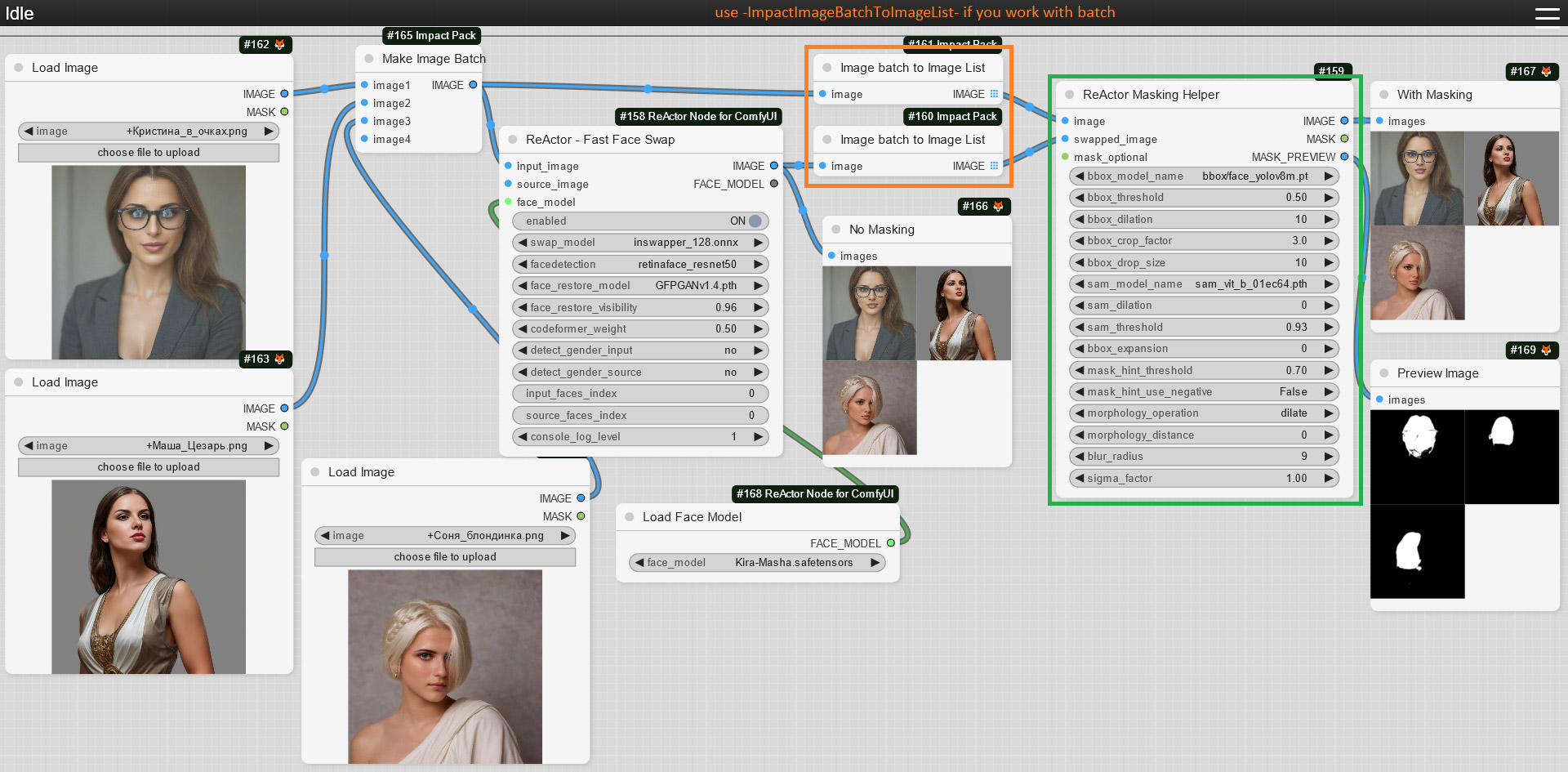

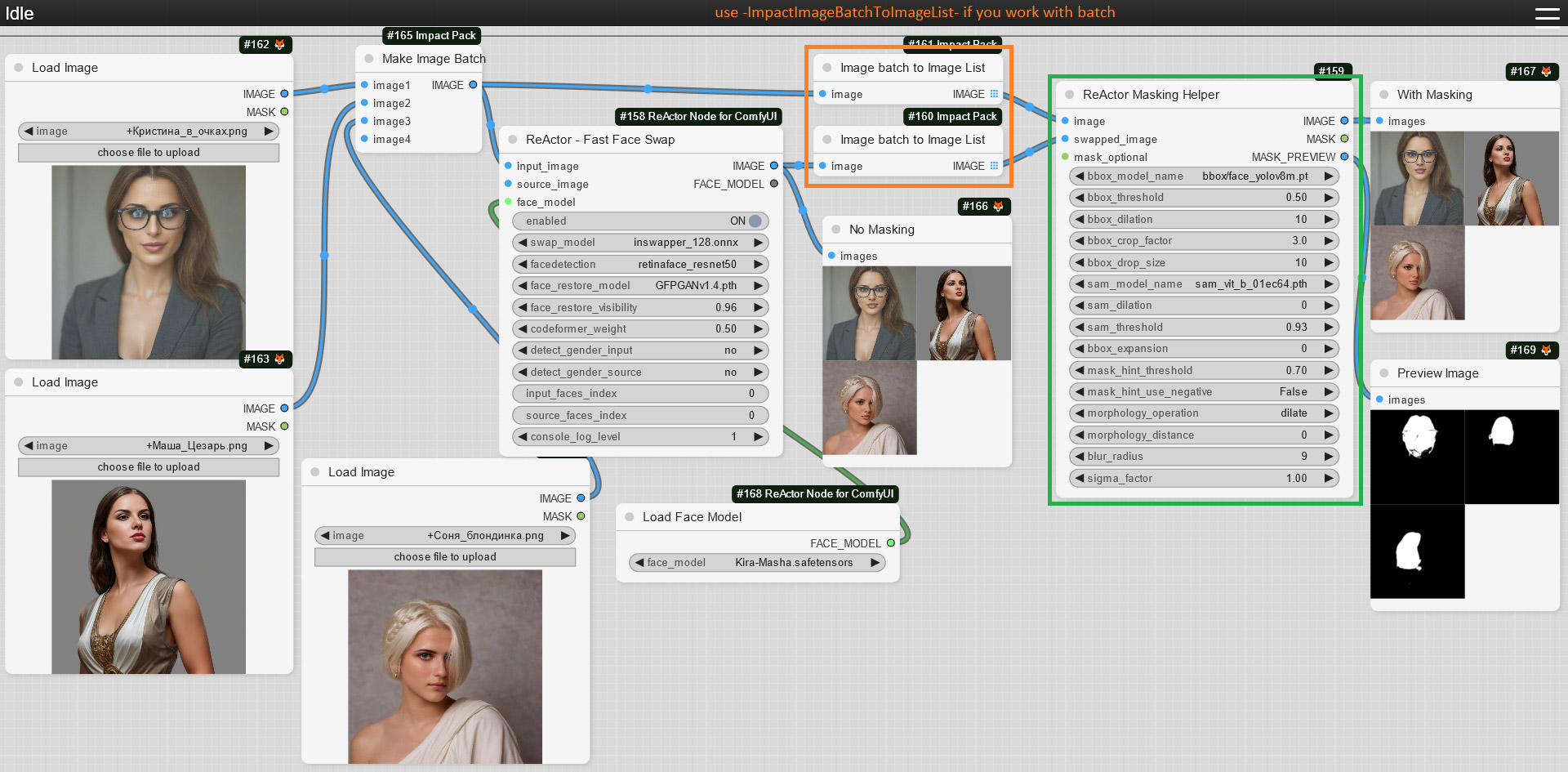

+- Face Masking feature is available now, just add the "ReActorMaskHelper" Node to the workflow and connect it as shown below:

+

+

+

+### 0.5.0 BETA1

+

+- SWAPPED_FACE output for the Masking Helper Node

+- FIX: Empty A-channel for Masking Helper IMAGE output (causing errors with some nodes) was removed

+

+### 0.5.0 ALPHA1

+

+- ReActorBuildFaceModel Node got "face_model" output to provide a blended face model directly to the main Node:

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v2.json)

+

+- Face Masking feature is available now, just add the "ReActorMaskHelper" Node to the workflow and connect it as shown below:

+

+ +

+If you don't have the "face_yolov8m.pt" Ultralytics model - you can download it from the [Assets](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) and put it into the "ComfyUI\models\ultralytics\bbox" directory

+

+

+If you don't have the "face_yolov8m.pt" Ultralytics model - you can download it from the [Assets](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) and put it into the "ComfyUI\models\ultralytics\bbox" directory

+

+As well as ["sam_vit_b_01ec64.pth"](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/sams/sam_vit_b_01ec64.pth) model - download (if you don't have it) and put it into the "ComfyUI\models\sams" directory; + +Use this Node to gain the best results of the face swapping process: + + +

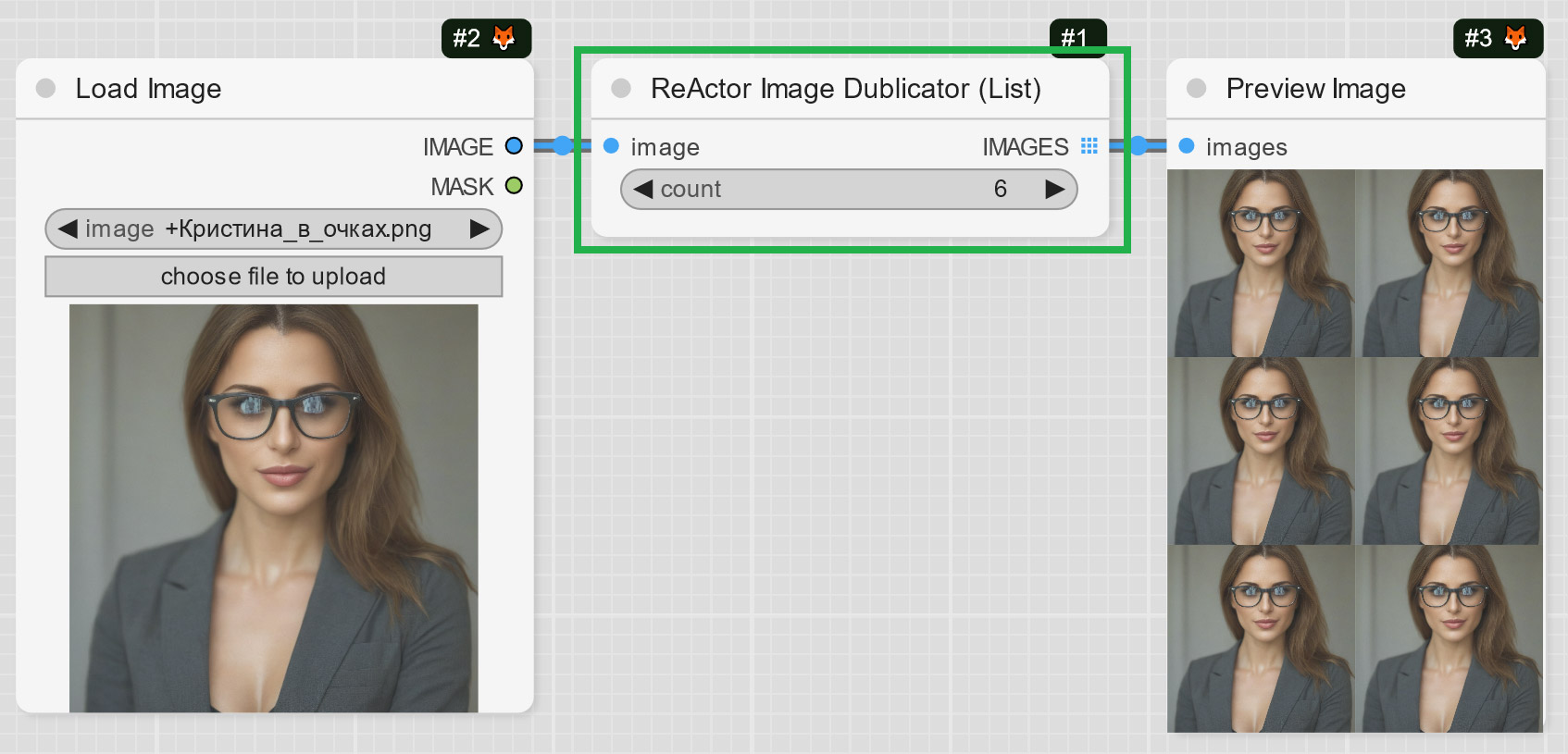

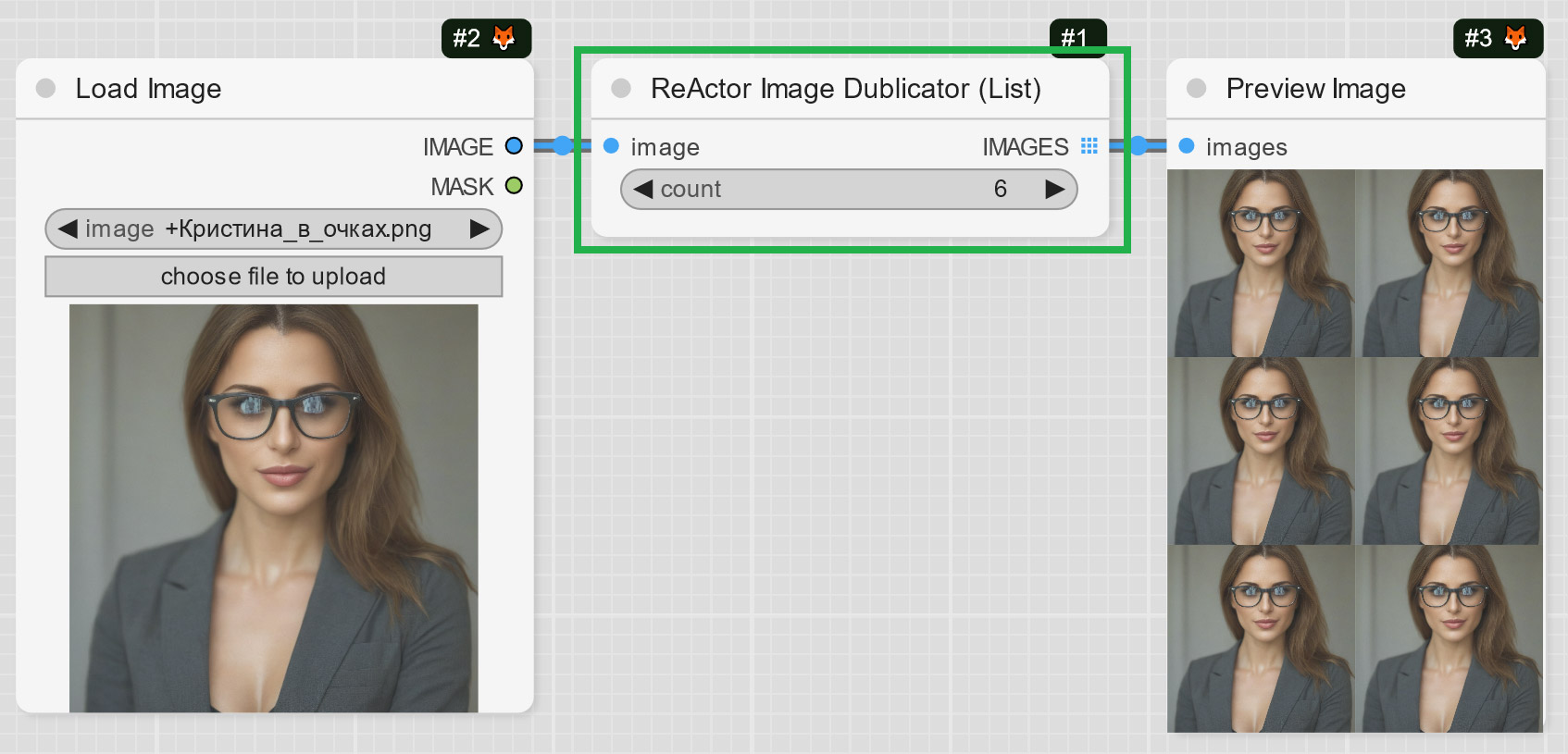

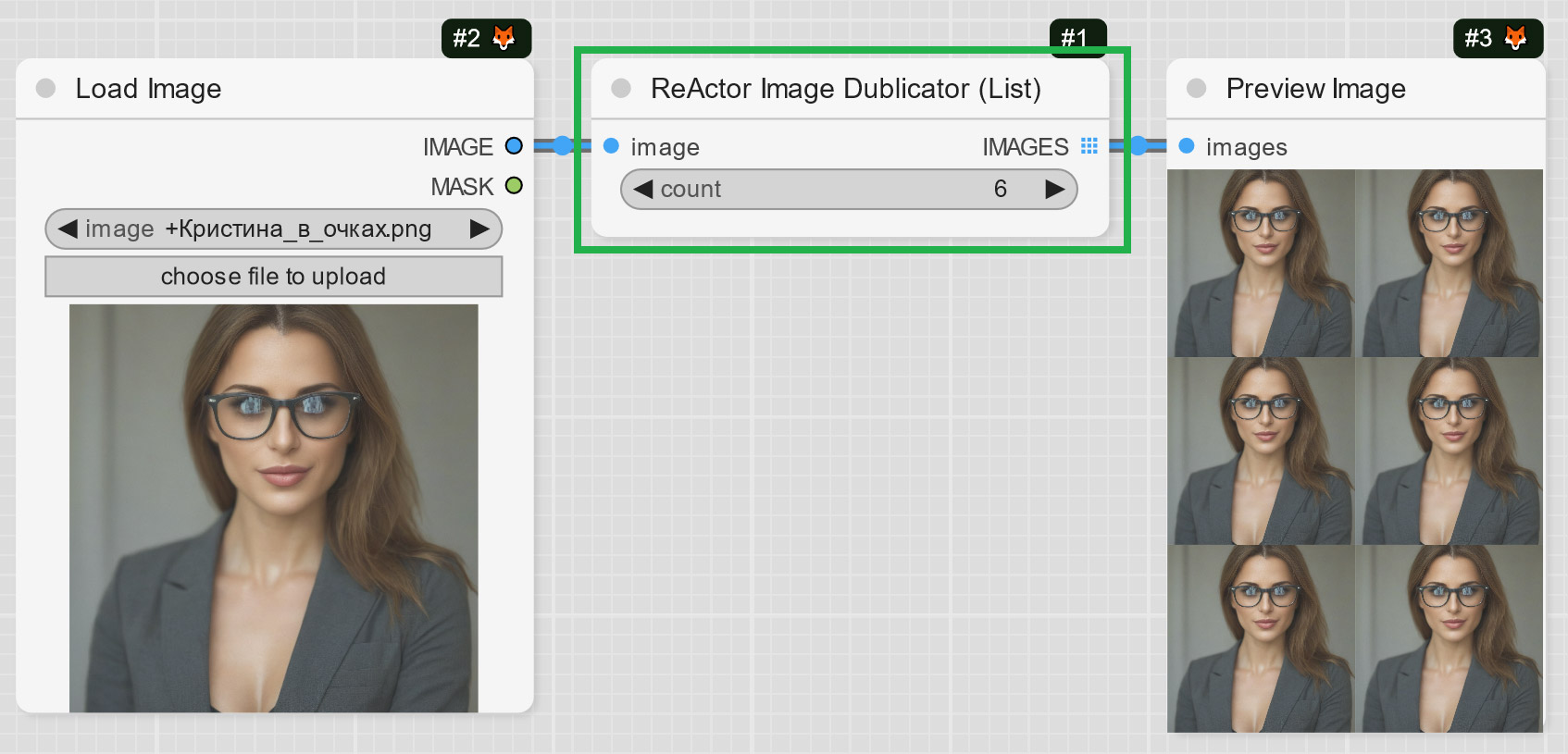

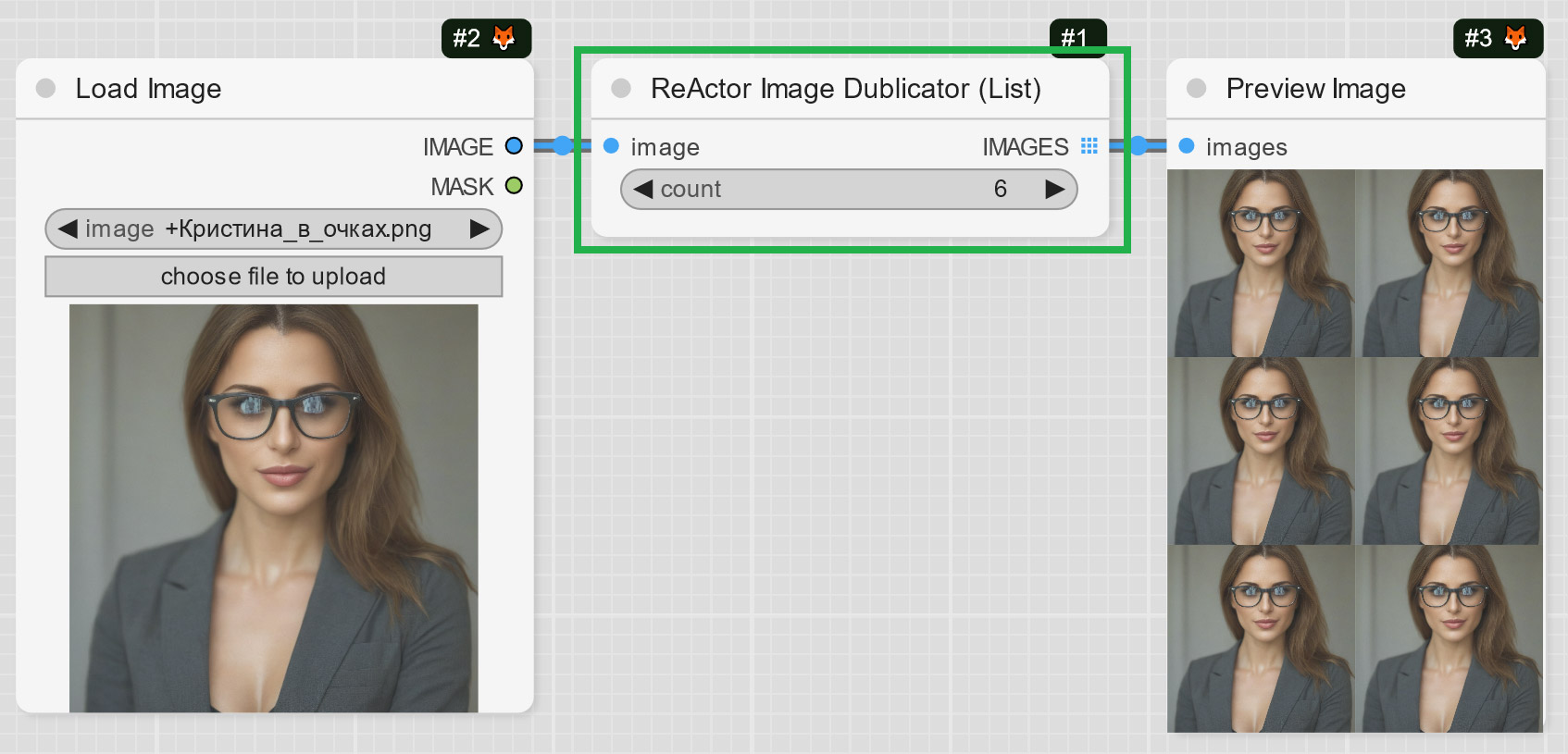

+- ReActorImageDublicator Node - rather useful for those who create videos, it helps to duplicate one image to several frames to use them with VAE Encoder (e.g. live avatars):

+

+

+

+- ReActorImageDublicator Node - rather useful for those who create videos, it helps to duplicate one image to several frames to use them with VAE Encoder (e.g. live avatars):

+

+ +

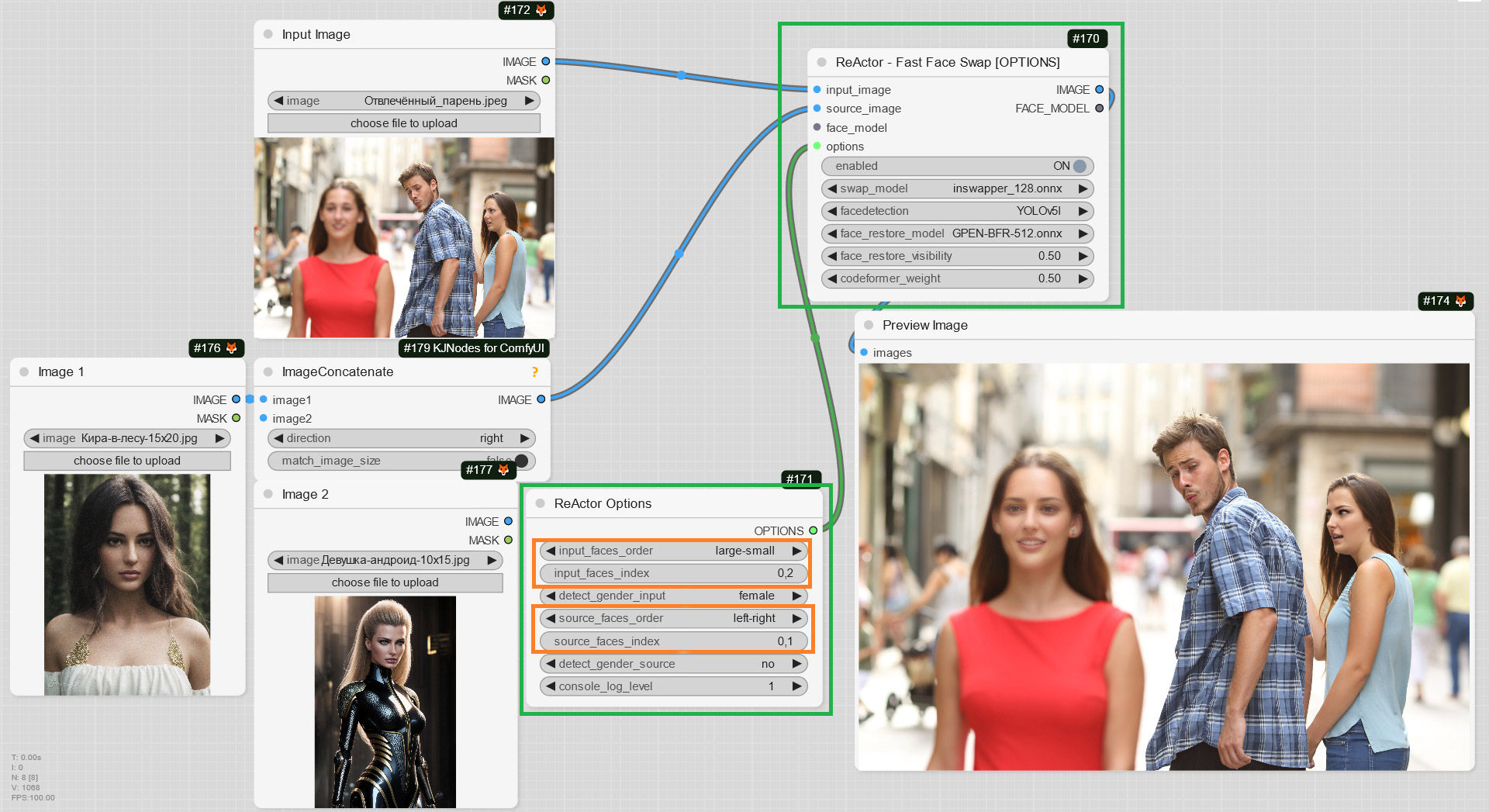

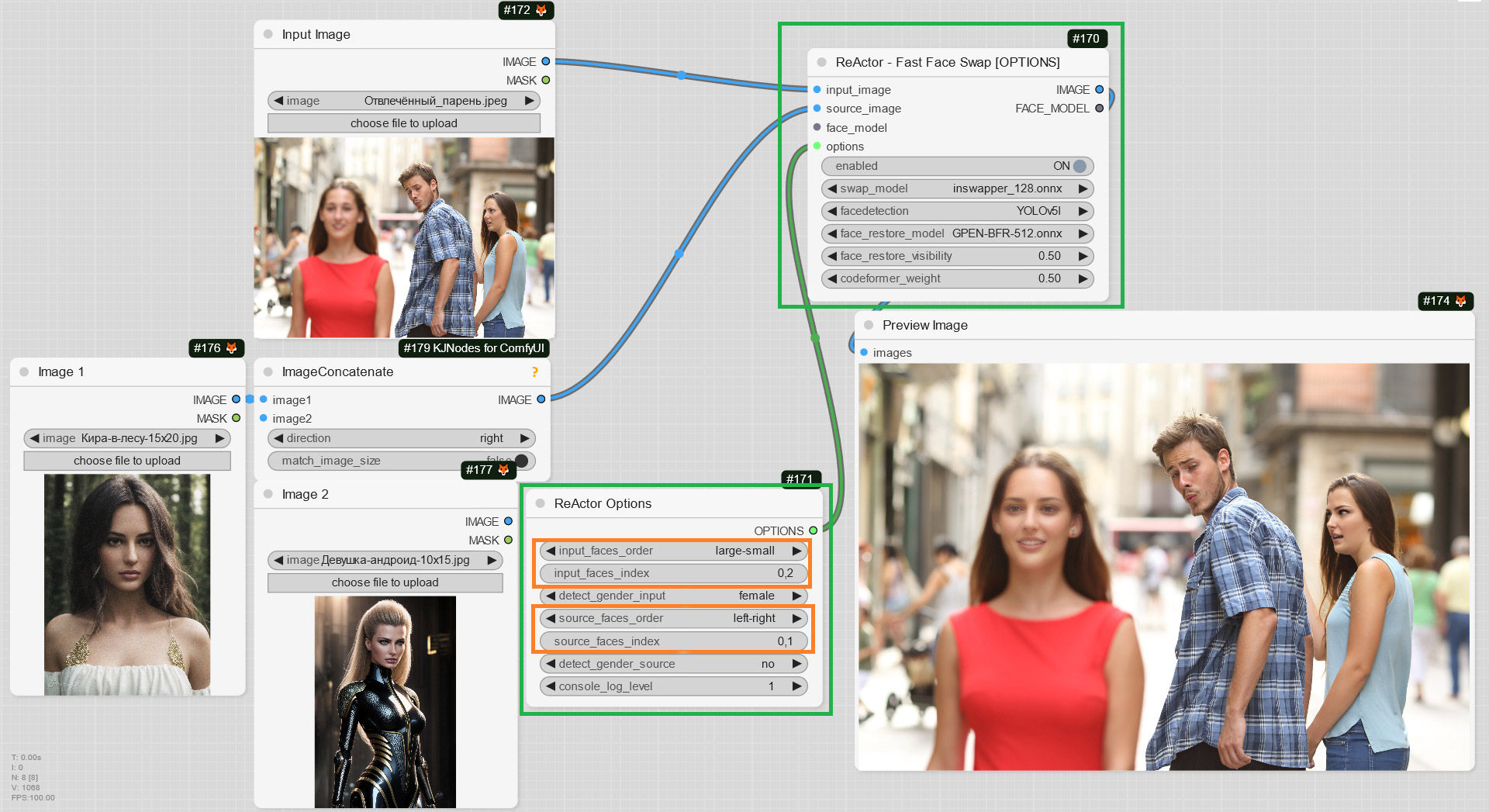

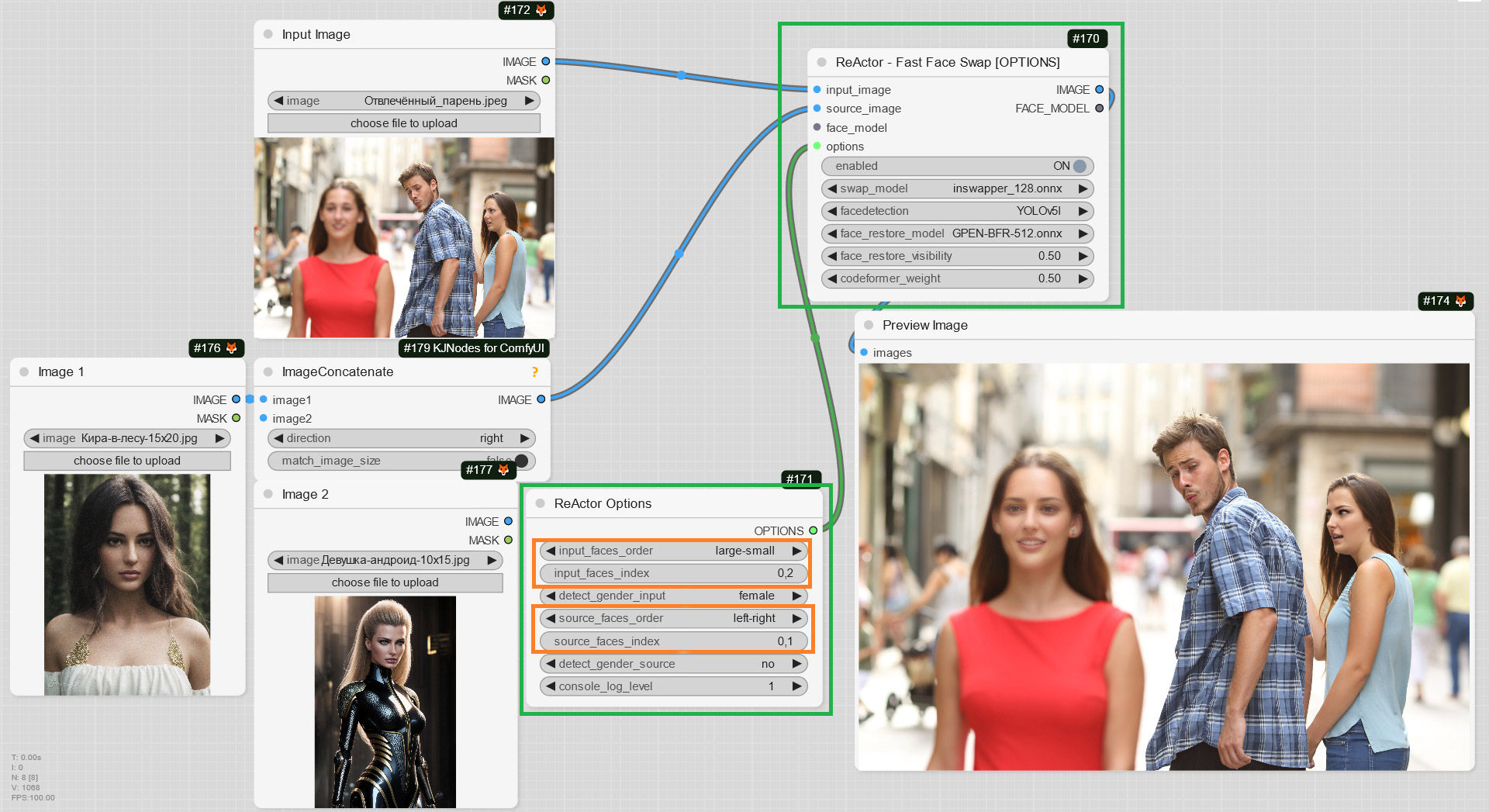

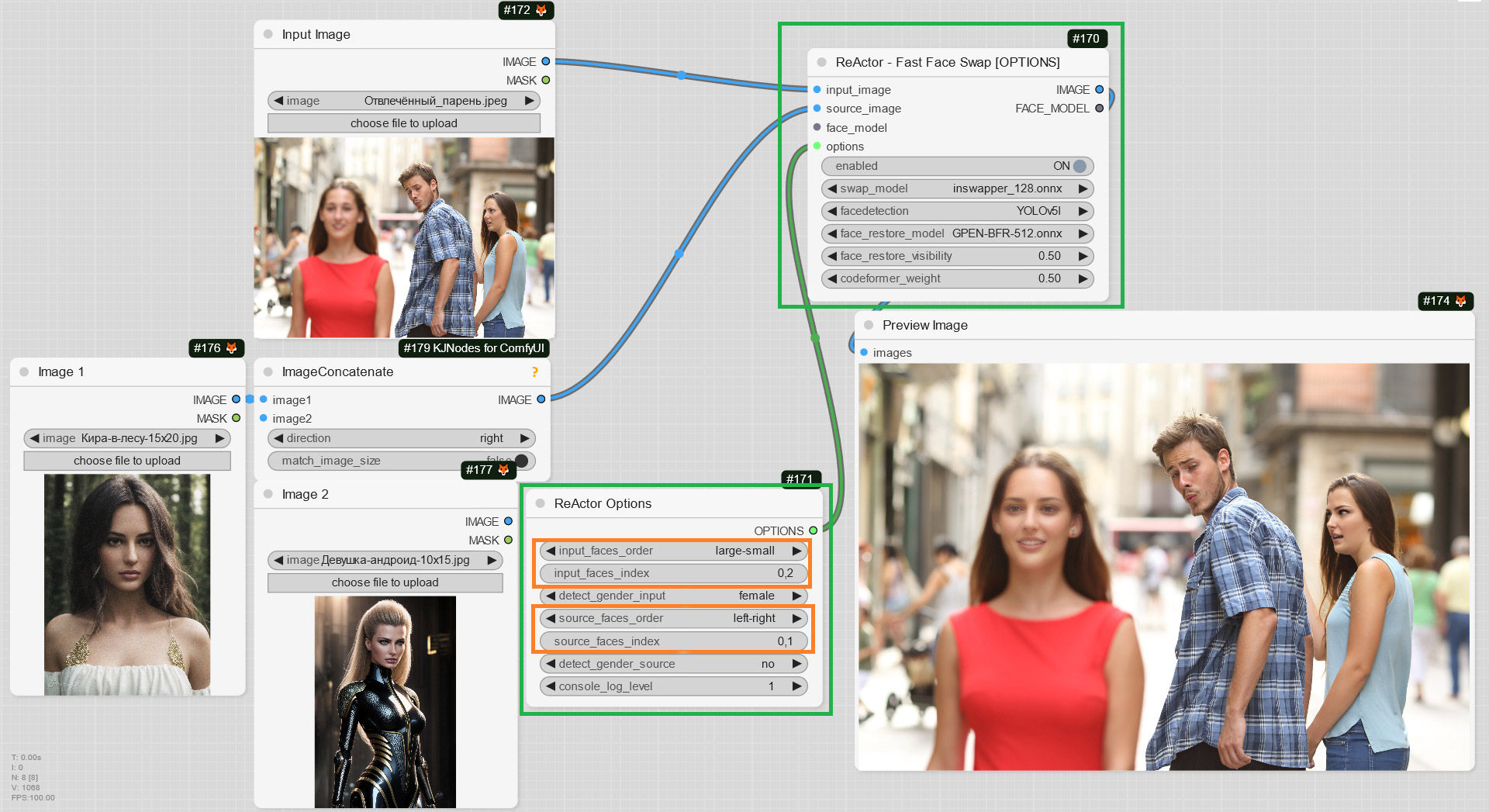

+- ReActorFaceSwapOpt (a simplified version of the Main Node) + ReActorOptions Nodes to set some additional options such as (new) "input/source faces separate order". Yes! You can now set the order of faces in the index in the way you want ("large to small" goes by default)!

+

+

+

+- ReActorFaceSwapOpt (a simplified version of the Main Node) + ReActorOptions Nodes to set some additional options such as (new) "input/source faces separate order". Yes! You can now set the order of faces in the index in the way you want ("large to small" goes by default)!

+

+ +

+- Little speed boost when analyzing target images (unfortunately it is still quite slow in compare to swapping and restoring...)

+

+### [0.4.2](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.2)

+

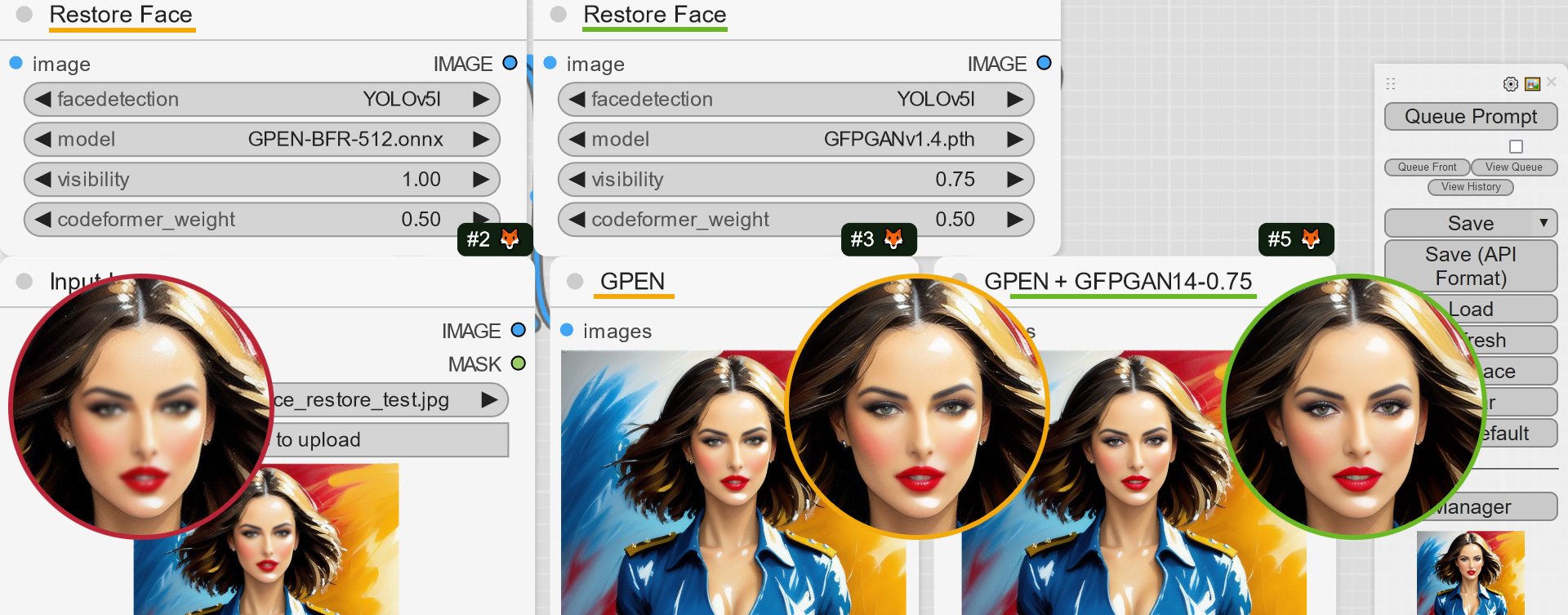

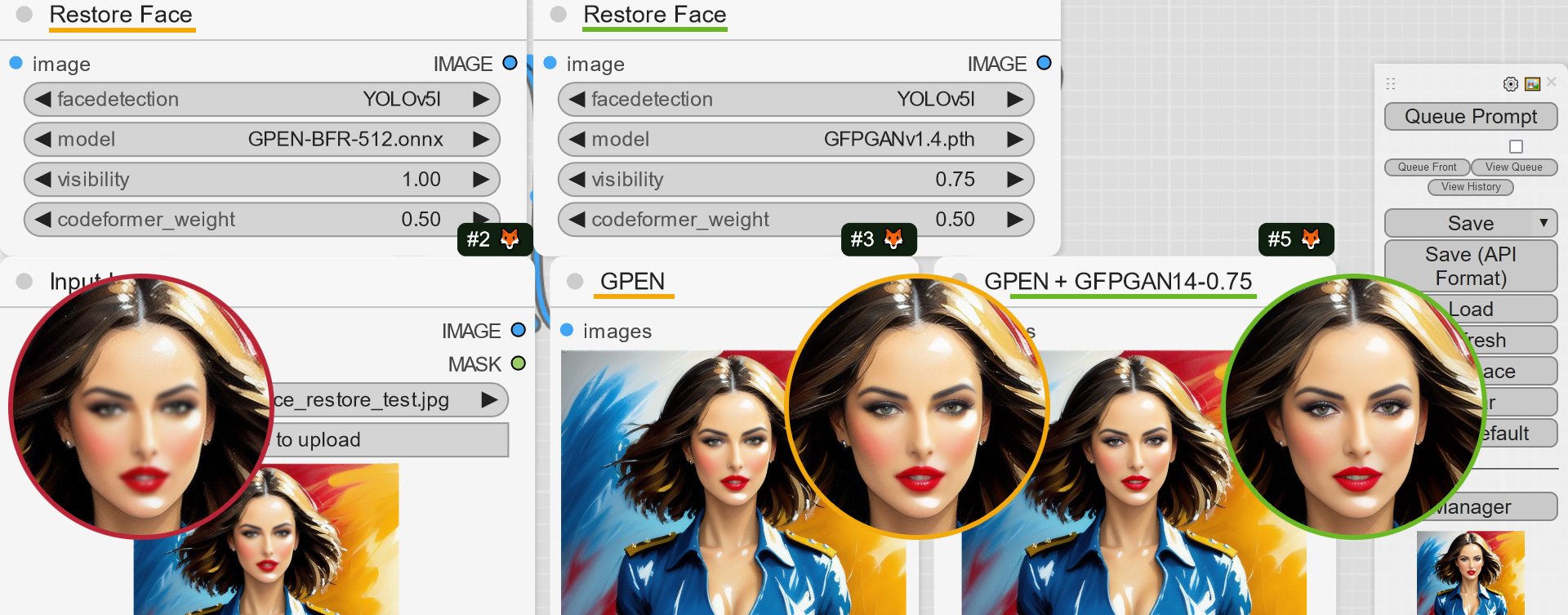

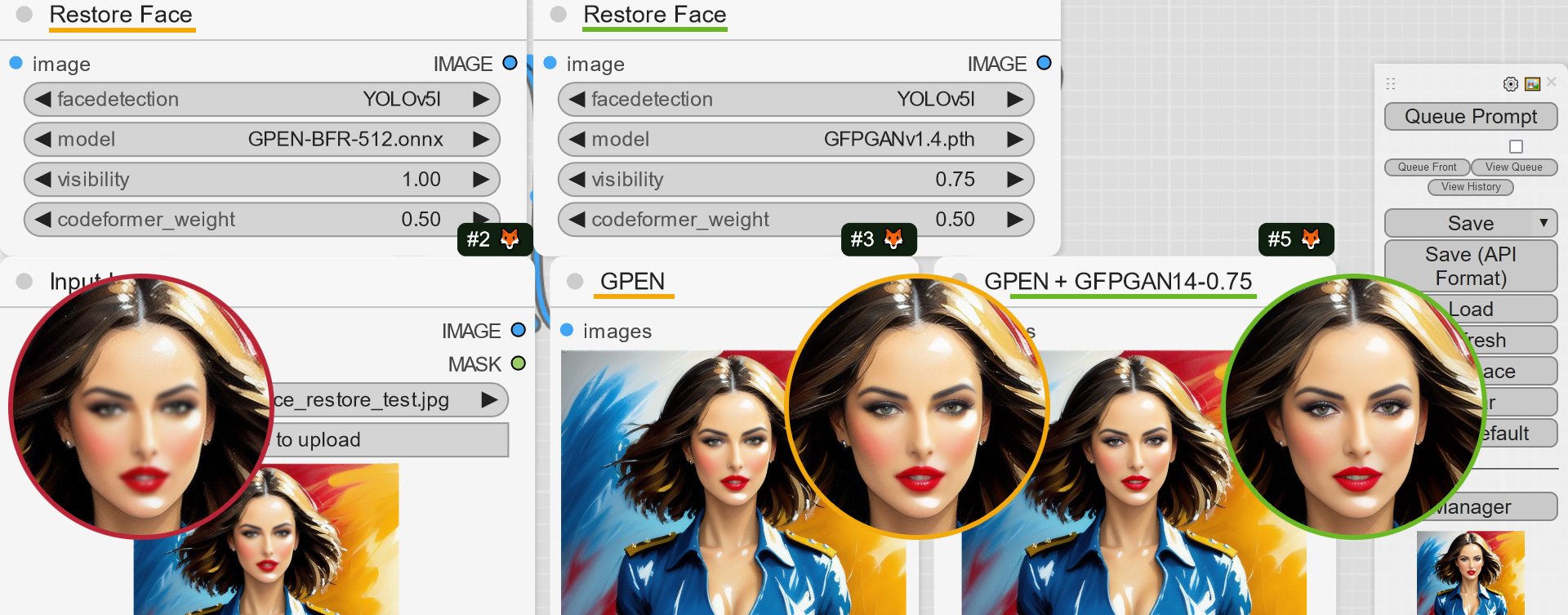

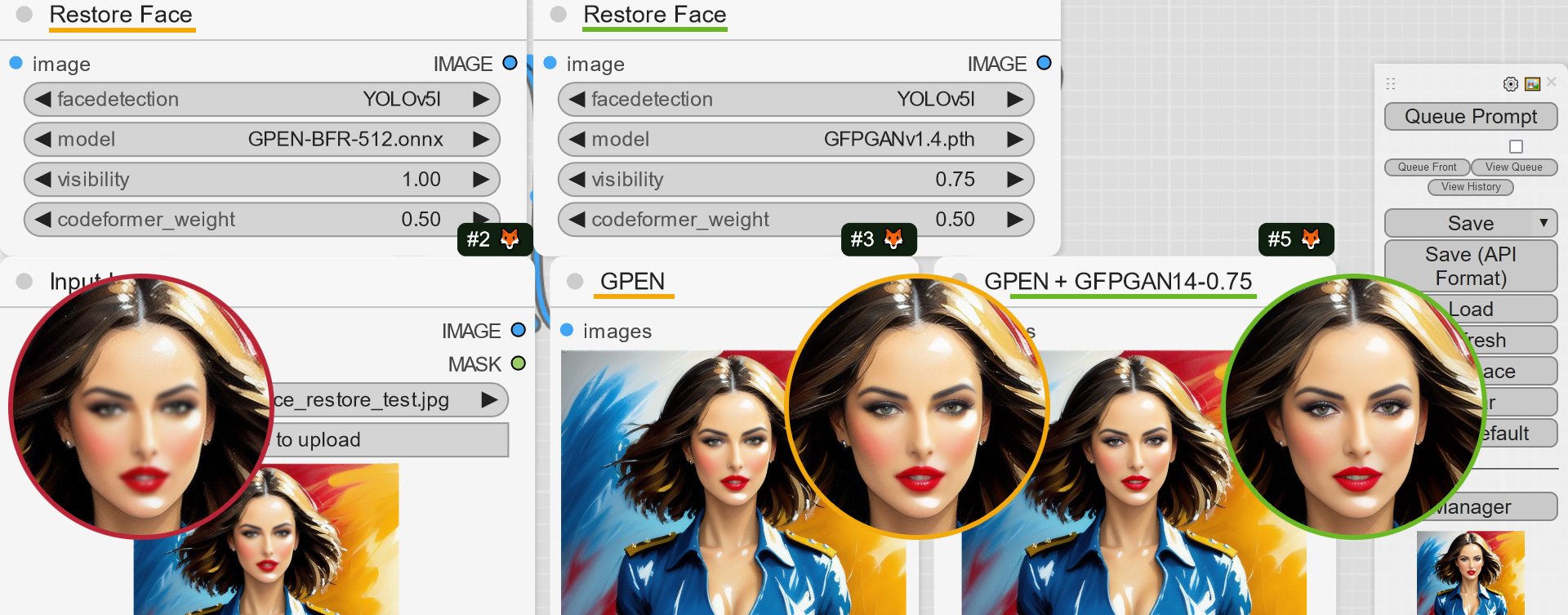

+- GPEN-BFR-512 and RestoreFormer_Plus_Plus face restoration models support

+

+You can download models here: https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models

+

+

+- Little speed boost when analyzing target images (unfortunately it is still quite slow in compare to swapping and restoring...)

+

+### [0.4.2](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.2)

+

+- GPEN-BFR-512 and RestoreFormer_Plus_Plus face restoration models support

+

+You can download models here: https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models

+

Put them into the `ComfyUI\models\facerestore_models` folder + + +

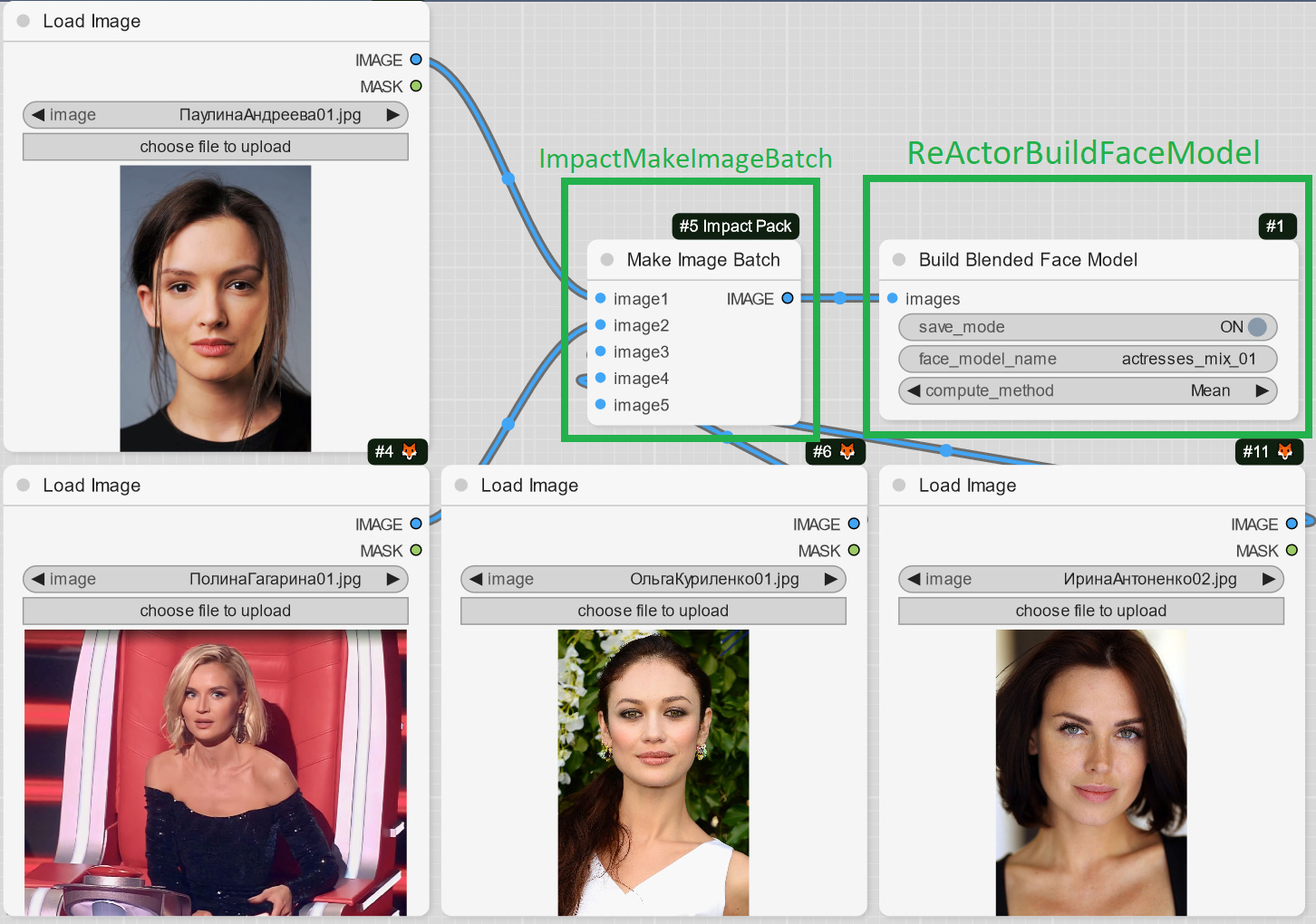

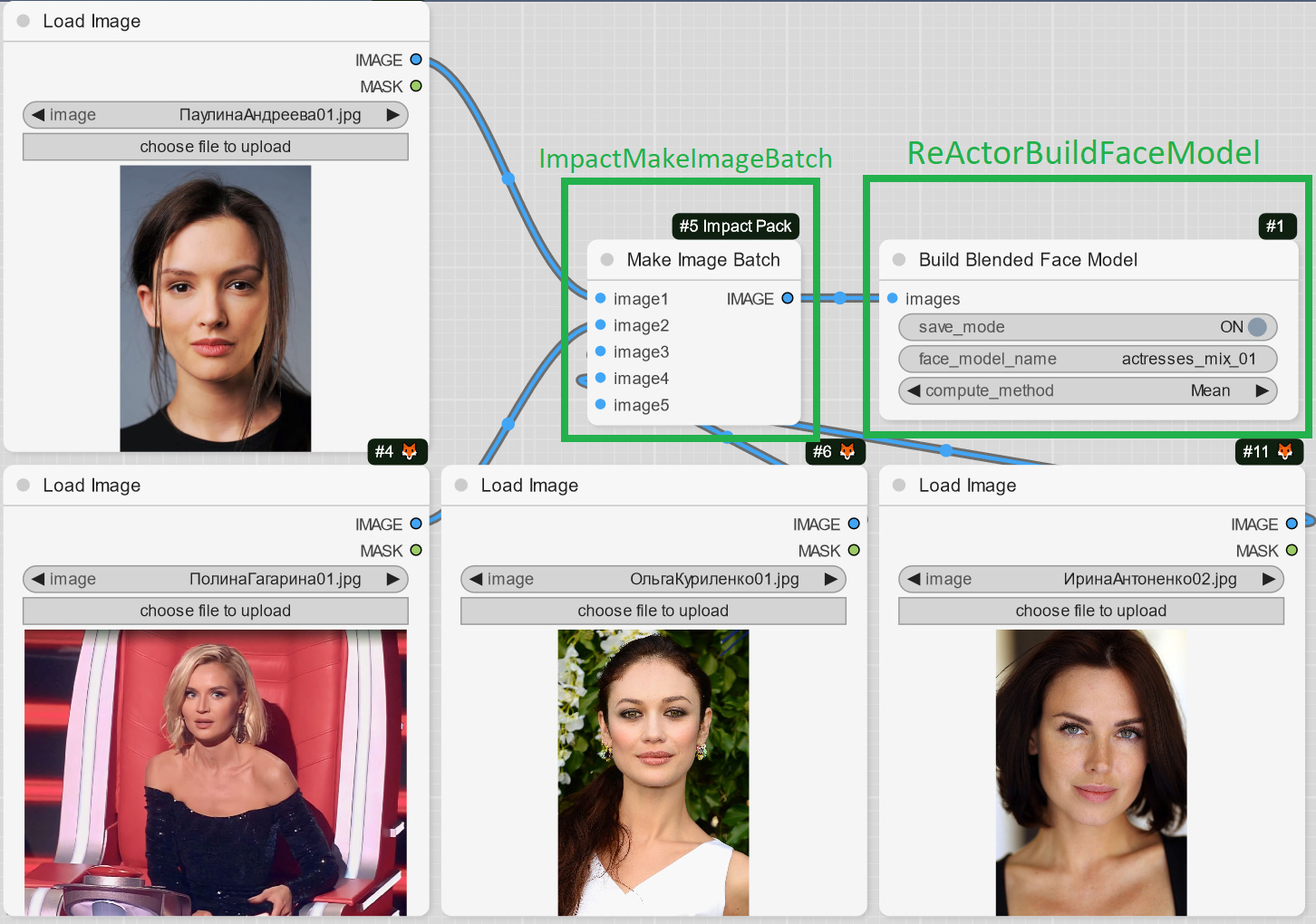

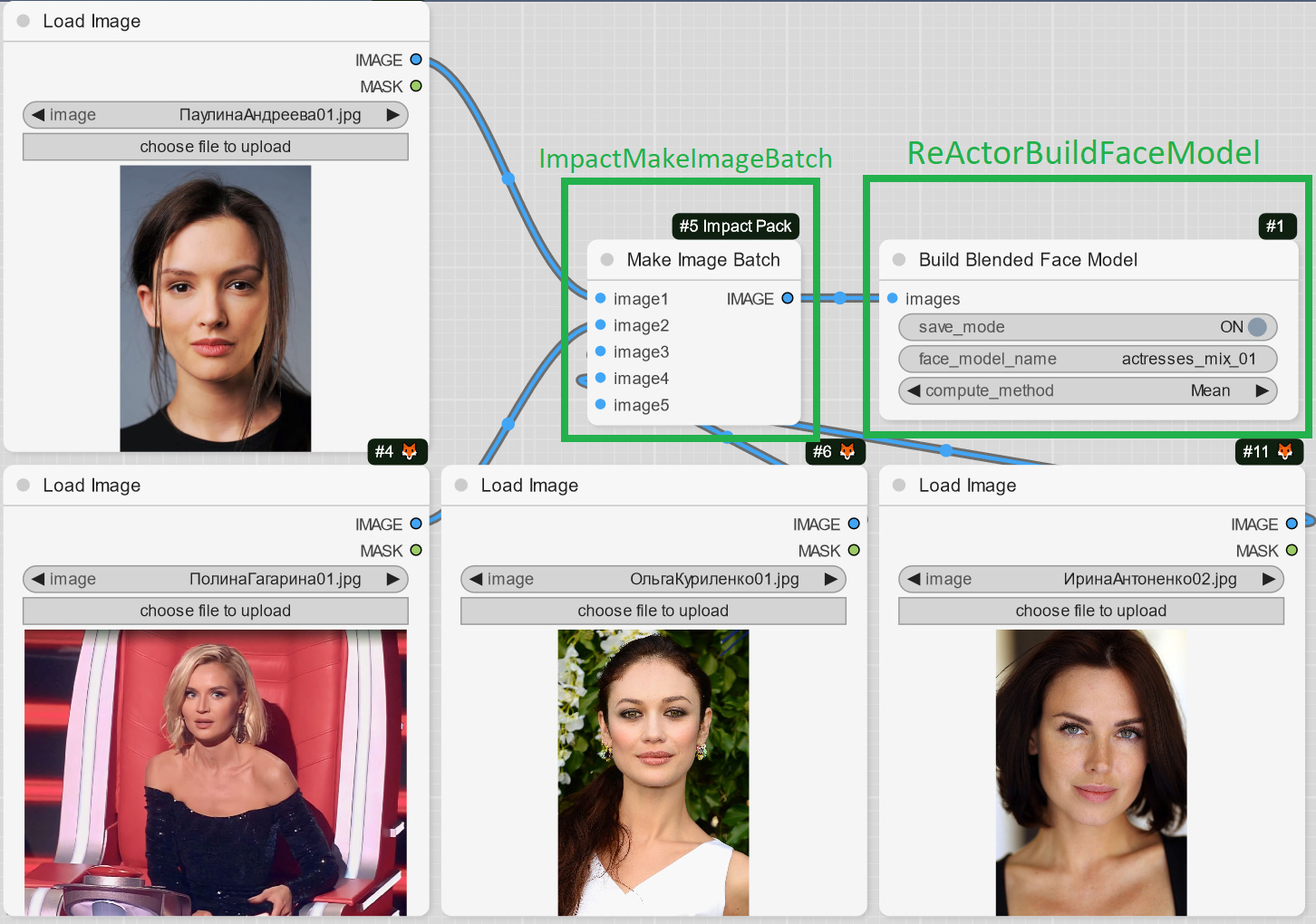

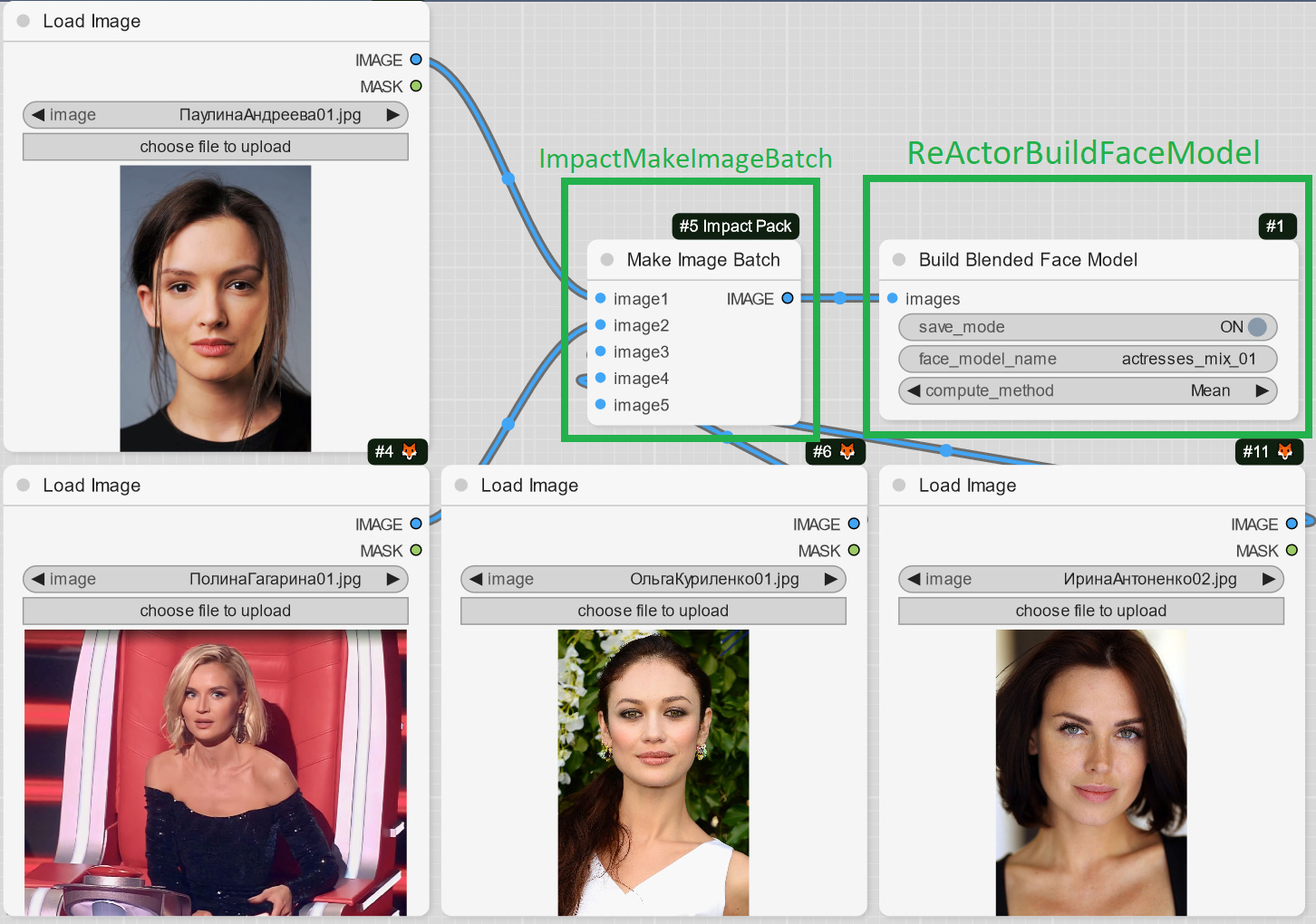

+- Due to popular demand - you can now blend several images with persons into one face model file and use it with "Load Face Model" Node or in SD WebUI as well;

+

+Experiment and create new faces or blend faces of one person to gain better accuracy and likeness!

+

+Just add the ImpactPack's "Make Image Batch" Node as the input to the ReActor's one and load images you want to blend into one model:

+

+

+

+- Due to popular demand - you can now blend several images with persons into one face model file and use it with "Load Face Model" Node or in SD WebUI as well;

+

+Experiment and create new faces or blend faces of one person to gain better accuracy and likeness!

+

+Just add the ImpactPack's "Make Image Batch" Node as the input to the ReActor's one and load images you want to blend into one model:

+

+ +

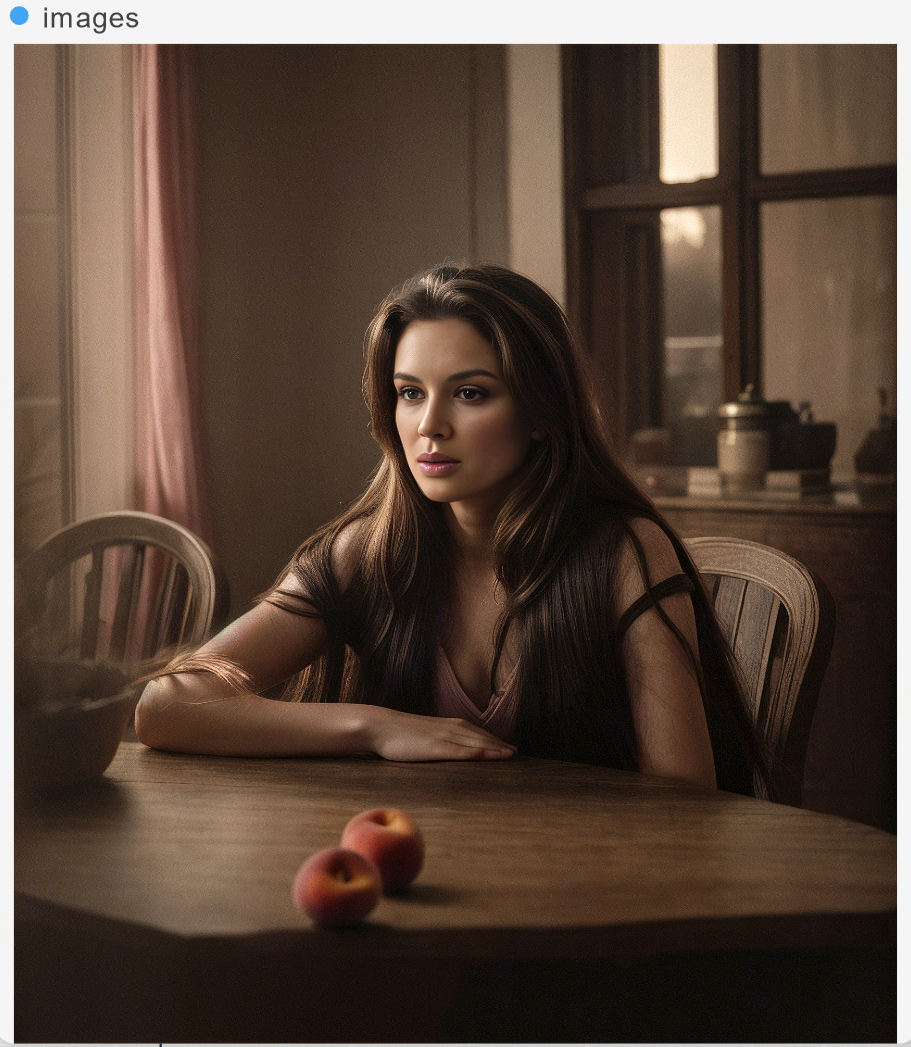

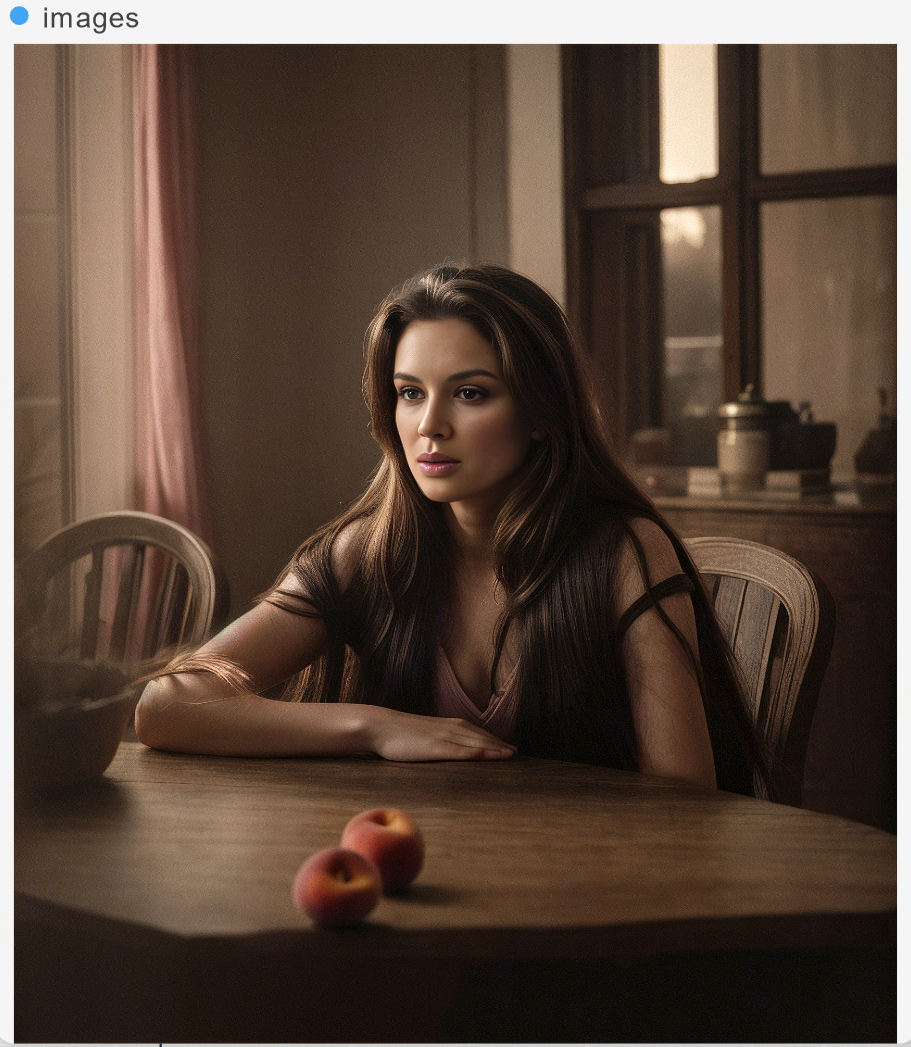

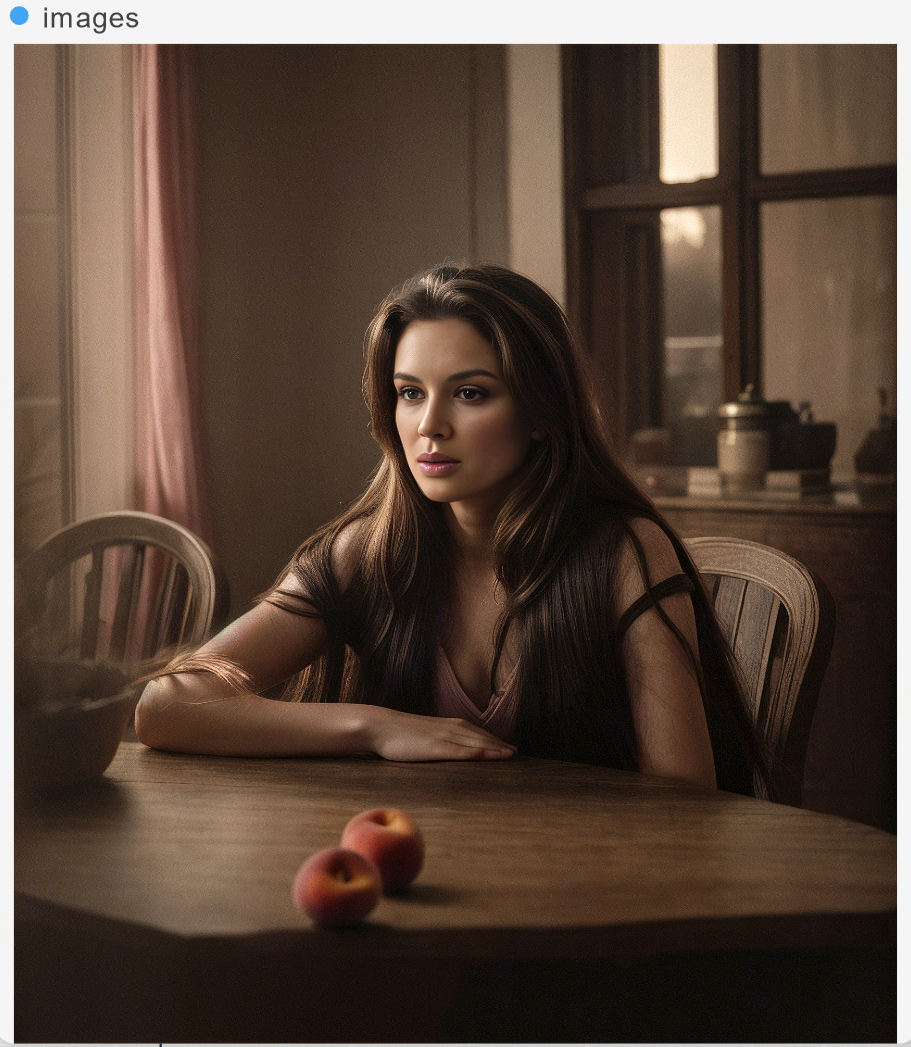

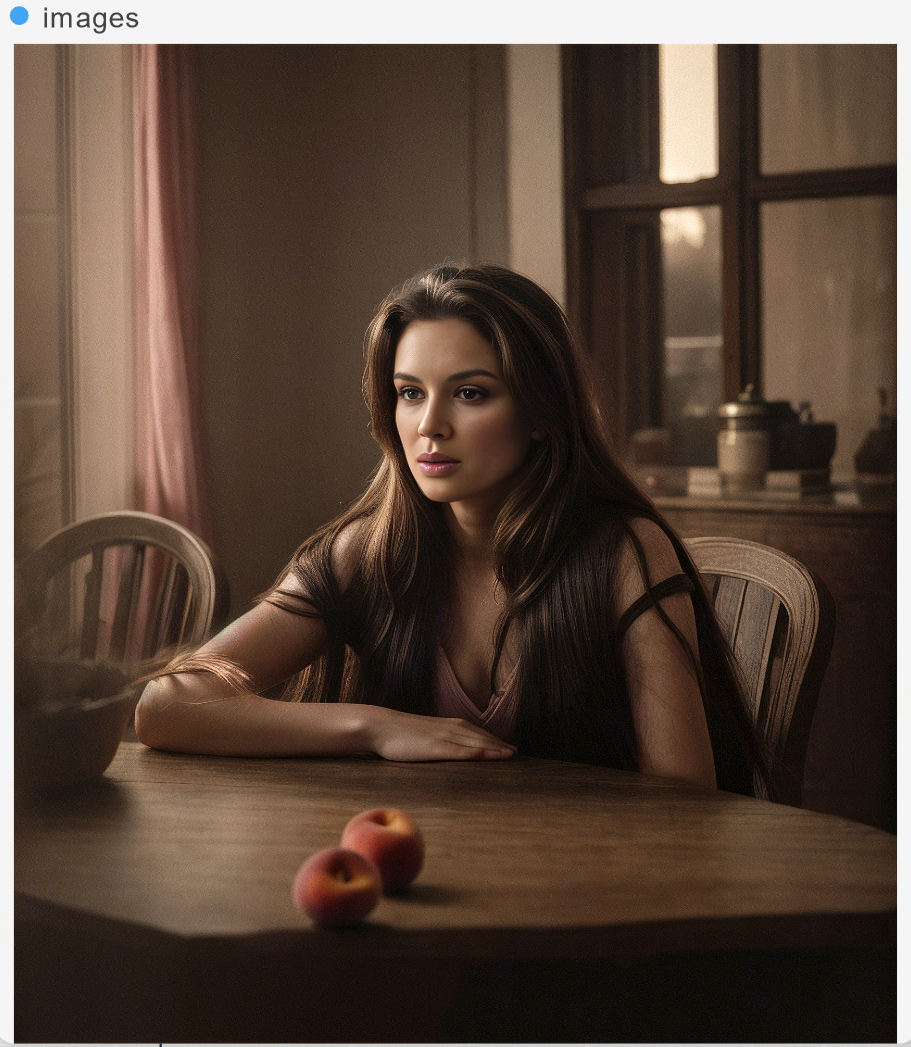

+Result example (the new face was created from 4 faces of different actresses):

+

+

+

+Result example (the new face was created from 4 faces of different actresses):

+

+ +

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v1.json)

+

+### [0.4.1](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.1)

+

+- CUDA 12 Support - don't forget to run (Windows) `install.bat` or (Linux/MacOS) `install.py` for ComfyUI's Python enclosure or try to install ORT-GPU for CU12 manually (https://onnxruntime.ai/docs/install/#install-onnx-runtime-gpu-cuda-12x)

+- Issue https://github.com/Gourieff/comfyui-reactor-node/issues/173 fix

+

+- Separate Node for the Face Restoration postprocessing (FR https://github.com/Gourieff/comfyui-reactor-node/issues/191), can be found inside ReActor's menu (RestoreFace Node)

+- (Windows) Installation can be done for Python from the System's PATH

+- Different fixes and improvements

+

+- Face Restore Visibility and CodeFormer Weight (Fidelity) options are now available! Don't forget to reload the Node in your existing workflow

+

+

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v1.json)

+

+### [0.4.1](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.1)

+

+- CUDA 12 Support - don't forget to run (Windows) `install.bat` or (Linux/MacOS) `install.py` for ComfyUI's Python enclosure or try to install ORT-GPU for CU12 manually (https://onnxruntime.ai/docs/install/#install-onnx-runtime-gpu-cuda-12x)

+- Issue https://github.com/Gourieff/comfyui-reactor-node/issues/173 fix

+

+- Separate Node for the Face Restoration postprocessing (FR https://github.com/Gourieff/comfyui-reactor-node/issues/191), can be found inside ReActor's menu (RestoreFace Node)

+- (Windows) Installation can be done for Python from the System's PATH

+- Different fixes and improvements

+

+- Face Restore Visibility and CodeFormer Weight (Fidelity) options are now available! Don't forget to reload the Node in your existing workflow

+

+ +

+### [0.4.0](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.0)

+

+- Input "input_image" goes first now, it gives a correct bypass and also it is right to have the main input first;

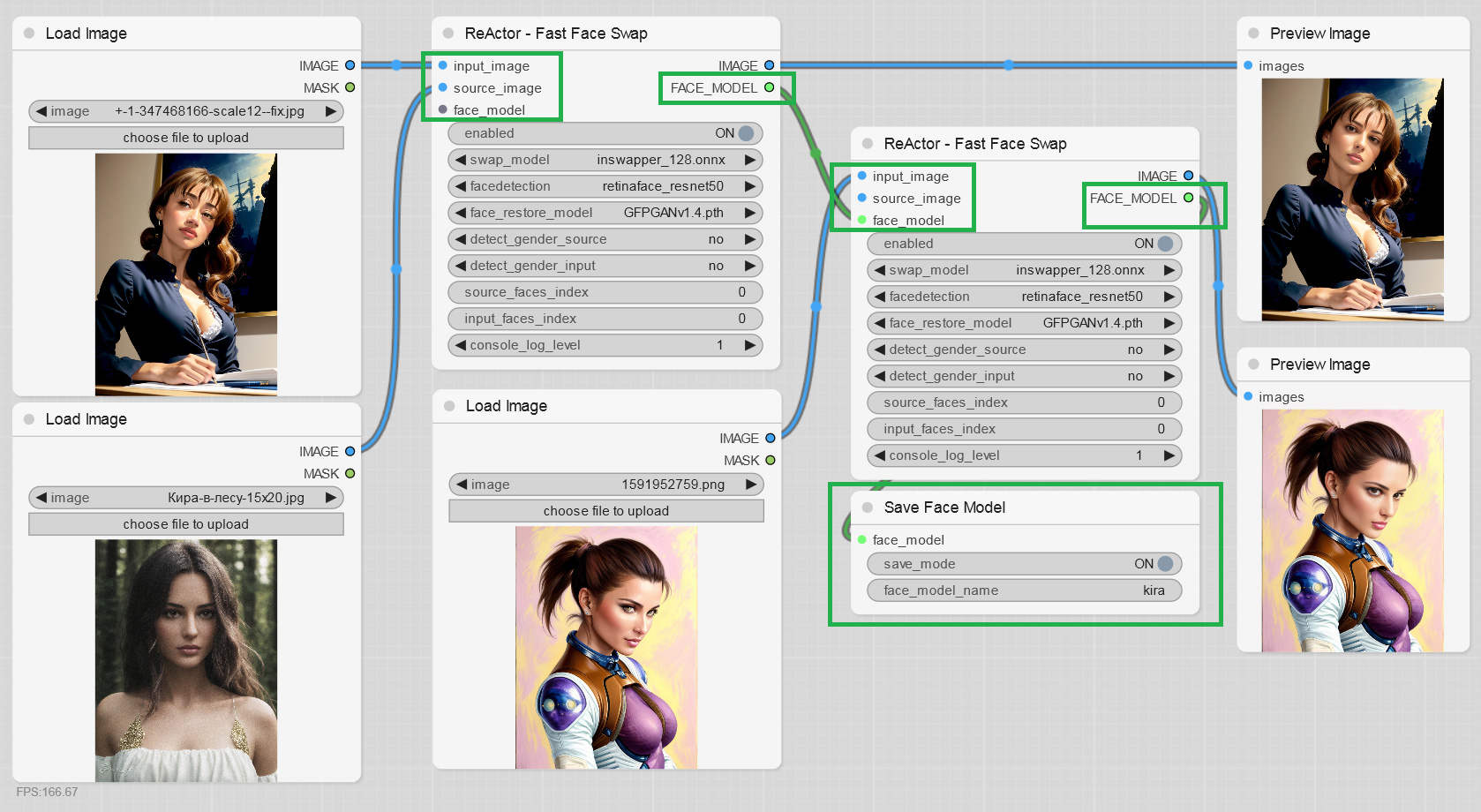

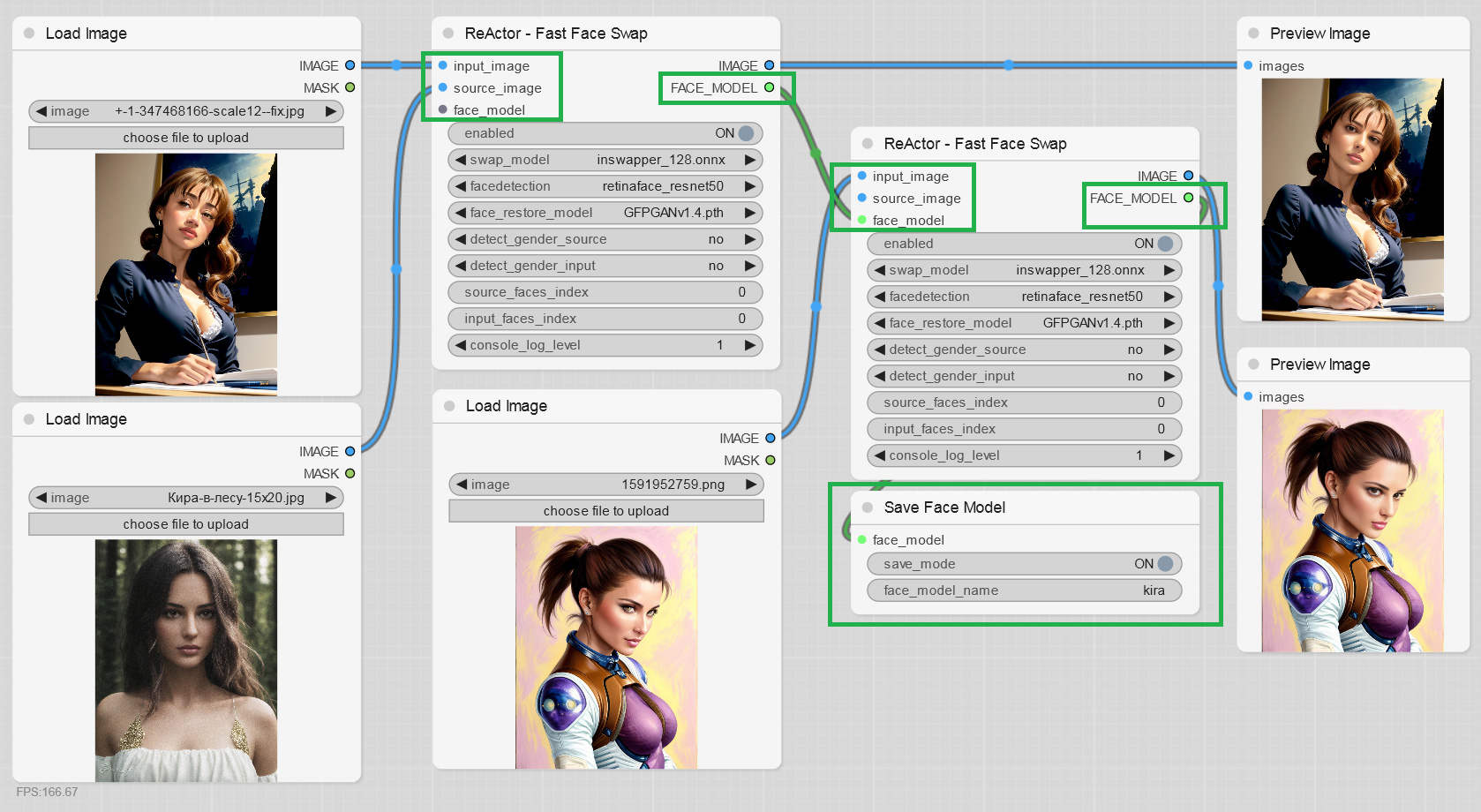

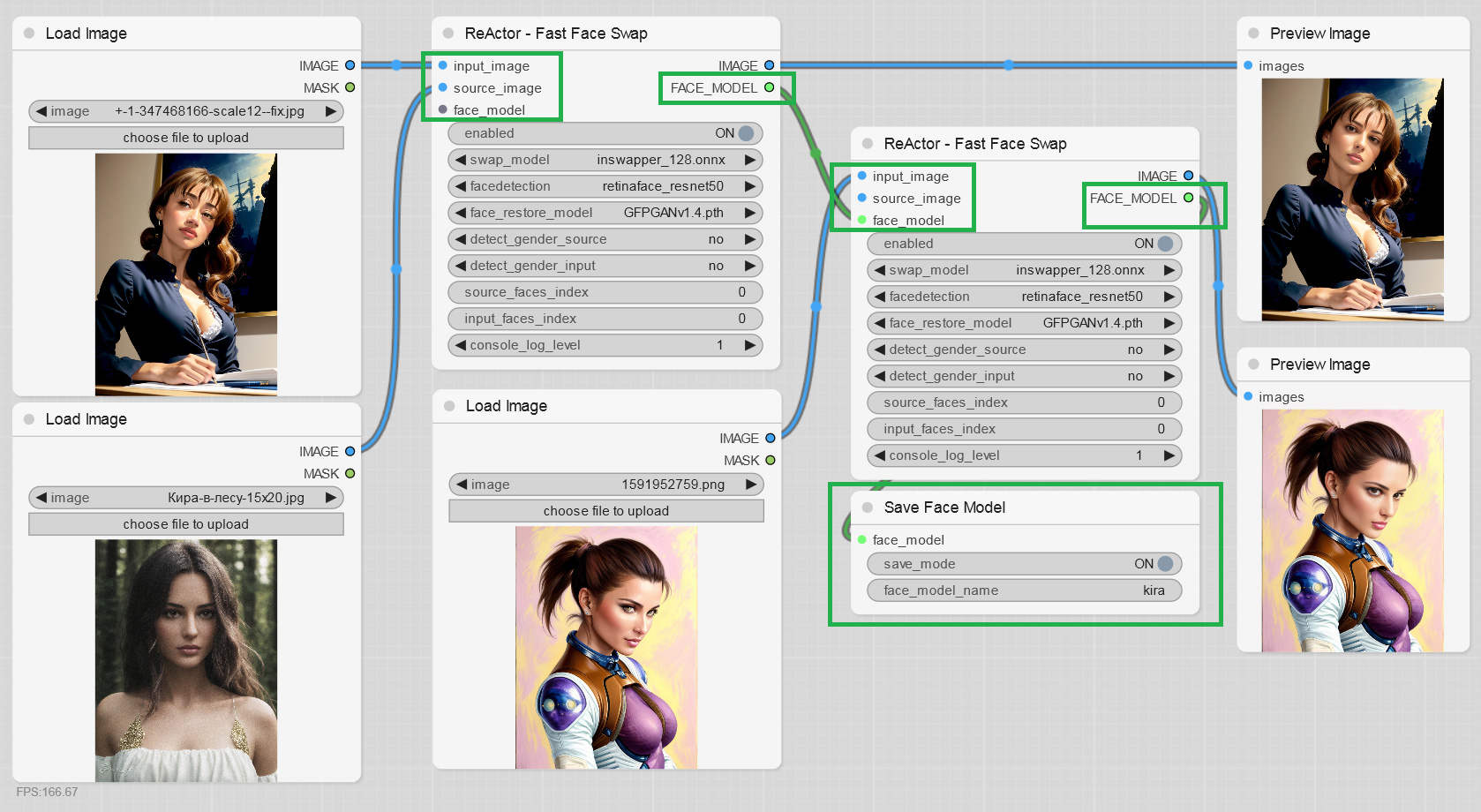

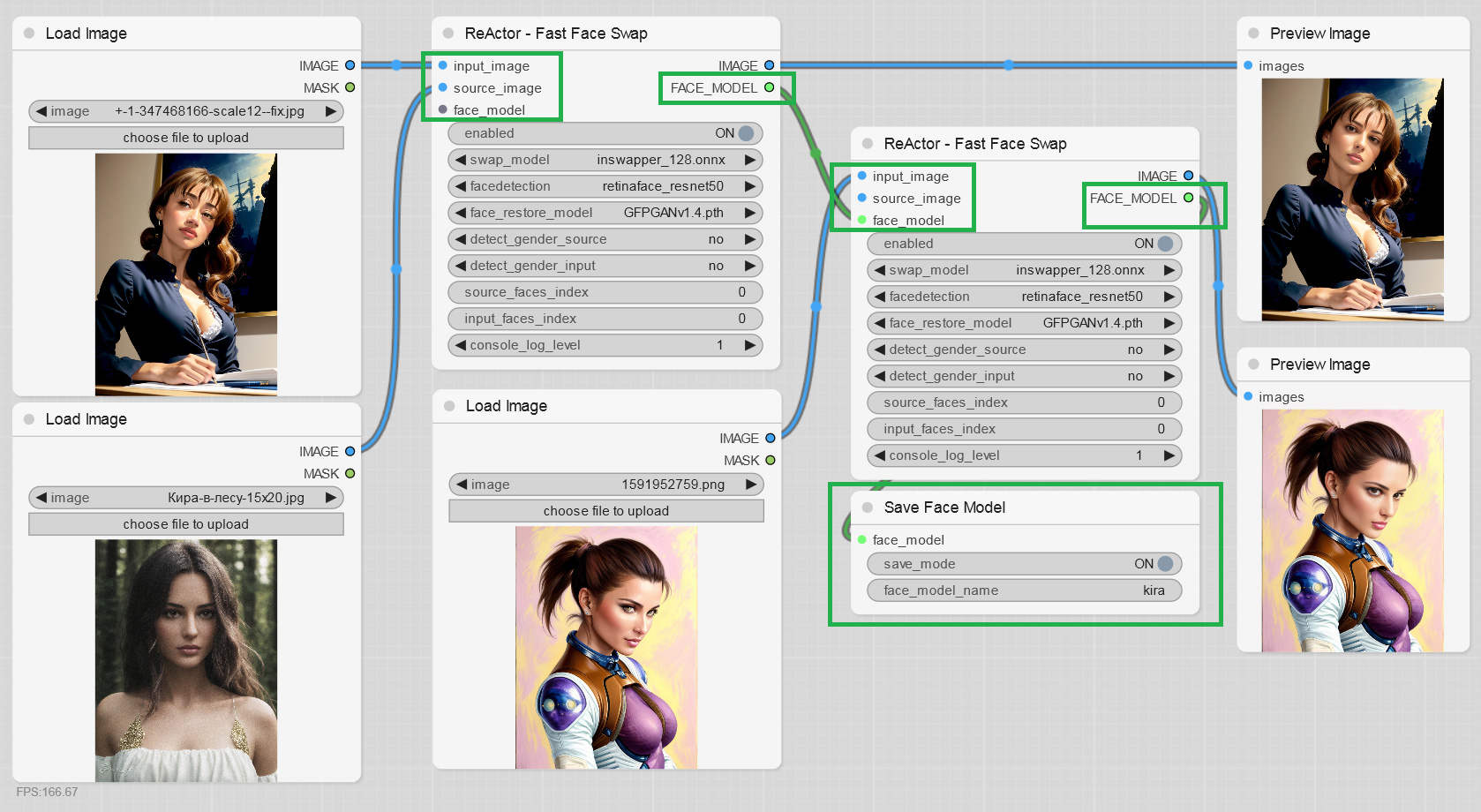

+- You can now save face models as "safetensors" files (`ComfyUI\models\reactor\faces`) and load them into ReActor implementing different scenarios and keeping super lightweight face models of the faces you use:

+

+

+

+### [0.4.0](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.0)

+

+- Input "input_image" goes first now, it gives a correct bypass and also it is right to have the main input first;

+- You can now save face models as "safetensors" files (`ComfyUI\models\reactor\faces`) and load them into ReActor implementing different scenarios and keeping super lightweight face models of the faces you use:

+

+ +

+ +

+- Ability to build and save face models directly from an image:

+

+

+

+- Ability to build and save face models directly from an image:

+

+ +

+- Both the inputs are optional, just connect one of them according to your workflow; if both is connected - `image` has a priority.

+- Different fixes making this extension better.

+

+Thanks to everyone who finds bugs, suggests new features and supports this project!

+

+

+

+- Both the inputs are optional, just connect one of them according to your workflow; if both is connected - `image` has a priority.

+- Different fixes making this extension better.

+

+Thanks to everyone who finds bugs, suggests new features and supports this project!

+

+

+

+## Installation

+

+Previous versions

+ +### 0.5.2 + +- ReSwapper models support. Although Inswapper still has the best similarity, but ReSwapper is evolving - thanks @somanchiu https://github.com/somanchiu/ReSwapper for the ReSwapper models and the ReSwapper project! This is a good step for the Community in the Inswapper's alternative creation! + + +

+ +

+ +

+- Support of ORT CoreML and ROCM EPs, just install onnxruntime version you need

+- Install script improvements to install latest versions of ORT-GPU

+

+

+

+- Support of ORT CoreML and ROCM EPs, just install onnxruntime version you need

+- Install script improvements to install latest versions of ORT-GPU

+

+ +

+ +

+[Full size demo preview](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/0.5.1-whatsnew-02.png)

+

+- Sorting facemodels alphabetically

+- A lot of fixes and improvements

+

+### [0.5.0 BETA4](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.5.0)

+

+- Spandrel lib support for GFPGAN

+

+### 0.5.0 BETA3

+

+- Fixes: "RAM issue", "No detection" for MaskingHelper

+

+### 0.5.0 BETA2

+

+- You can now build a blended face model from a batch of face models you already have, just add the "Make Face Model Batch" node to your workflow and connect several models via "Load Face Model"

+- Huge performance boost of the image analyzer's module! 10x speed up! Working with videos is now a pleasure!

+

+

+

+[Full size demo preview](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/0.5.1-whatsnew-02.png)

+

+- Sorting facemodels alphabetically

+- A lot of fixes and improvements

+

+### [0.5.0 BETA4](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.5.0)

+

+- Spandrel lib support for GFPGAN

+

+### 0.5.0 BETA3

+

+- Fixes: "RAM issue", "No detection" for MaskingHelper

+

+### 0.5.0 BETA2

+

+- You can now build a blended face model from a batch of face models you already have, just add the "Make Face Model Batch" node to your workflow and connect several models via "Load Face Model"

+- Huge performance boost of the image analyzer's module! 10x speed up! Working with videos is now a pleasure!

+

+ +

+### 0.5.0 BETA1

+

+- SWAPPED_FACE output for the Masking Helper Node

+- FIX: Empty A-channel for Masking Helper IMAGE output (causing errors with some nodes) was removed

+

+### 0.5.0 ALPHA1

+

+- ReActorBuildFaceModel Node got "face_model" output to provide a blended face model directly to the main Node:

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v2.json)

+

+- Face Masking feature is available now, just add the "ReActorMaskHelper" Node to the workflow and connect it as shown below:

+

+

+

+### 0.5.0 BETA1

+

+- SWAPPED_FACE output for the Masking Helper Node

+- FIX: Empty A-channel for Masking Helper IMAGE output (causing errors with some nodes) was removed

+

+### 0.5.0 ALPHA1

+

+- ReActorBuildFaceModel Node got "face_model" output to provide a blended face model directly to the main Node:

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v2.json)

+

+- Face Masking feature is available now, just add the "ReActorMaskHelper" Node to the workflow and connect it as shown below:

+

+ +

+If you don't have the "face_yolov8m.pt" Ultralytics model - you can download it from the [Assets](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) and put it into the "ComfyUI\models\ultralytics\bbox" directory

+

+

+If you don't have the "face_yolov8m.pt" Ultralytics model - you can download it from the [Assets](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) and put it into the "ComfyUI\models\ultralytics\bbox" directory

++As well as ["sam_vit_b_01ec64.pth"](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/sams/sam_vit_b_01ec64.pth) model - download (if you don't have it) and put it into the "ComfyUI\models\sams" directory; + +Use this Node to gain the best results of the face swapping process: + +

+

+- ReActorImageDublicator Node - rather useful for those who create videos, it helps to duplicate one image to several frames to use them with VAE Encoder (e.g. live avatars):

+

+

+

+- ReActorImageDublicator Node - rather useful for those who create videos, it helps to duplicate one image to several frames to use them with VAE Encoder (e.g. live avatars):

+

+ +

+- ReActorFaceSwapOpt (a simplified version of the Main Node) + ReActorOptions Nodes to set some additional options such as (new) "input/source faces separate order". Yes! You can now set the order of faces in the index in the way you want ("large to small" goes by default)!

+

+

+

+- ReActorFaceSwapOpt (a simplified version of the Main Node) + ReActorOptions Nodes to set some additional options such as (new) "input/source faces separate order". Yes! You can now set the order of faces in the index in the way you want ("large to small" goes by default)!

+

+ +

+- Little speed boost when analyzing target images (unfortunately it is still quite slow in compare to swapping and restoring...)

+

+### [0.4.2](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.2)

+

+- GPEN-BFR-512 and RestoreFormer_Plus_Plus face restoration models support

+

+You can download models here: https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models

+

+

+- Little speed boost when analyzing target images (unfortunately it is still quite slow in compare to swapping and restoring...)

+

+### [0.4.2](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.2)

+

+- GPEN-BFR-512 and RestoreFormer_Plus_Plus face restoration models support

+

+You can download models here: https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models

+Put them into the `ComfyUI\models\facerestore_models` folder + +

+

+- Due to popular demand - you can now blend several images with persons into one face model file and use it with "Load Face Model" Node or in SD WebUI as well;

+

+Experiment and create new faces or blend faces of one person to gain better accuracy and likeness!

+

+Just add the ImpactPack's "Make Image Batch" Node as the input to the ReActor's one and load images you want to blend into one model:

+

+

+

+- Due to popular demand - you can now blend several images with persons into one face model file and use it with "Load Face Model" Node or in SD WebUI as well;

+

+Experiment and create new faces or blend faces of one person to gain better accuracy and likeness!

+

+Just add the ImpactPack's "Make Image Batch" Node as the input to the ReActor's one and load images you want to blend into one model:

+

+ +

+Result example (the new face was created from 4 faces of different actresses):

+

+

+

+Result example (the new face was created from 4 faces of different actresses):

+

+ +

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v1.json)

+

+### [0.4.1](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.1)

+

+- CUDA 12 Support - don't forget to run (Windows) `install.bat` or (Linux/MacOS) `install.py` for ComfyUI's Python enclosure or try to install ORT-GPU for CU12 manually (https://onnxruntime.ai/docs/install/#install-onnx-runtime-gpu-cuda-12x)

+- Issue https://github.com/Gourieff/comfyui-reactor-node/issues/173 fix

+

+- Separate Node for the Face Restoration postprocessing (FR https://github.com/Gourieff/comfyui-reactor-node/issues/191), can be found inside ReActor's menu (RestoreFace Node)

+- (Windows) Installation can be done for Python from the System's PATH

+- Different fixes and improvements

+

+- Face Restore Visibility and CodeFormer Weight (Fidelity) options are now available! Don't forget to reload the Node in your existing workflow

+

+

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v1.json)

+

+### [0.4.1](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.1)

+

+- CUDA 12 Support - don't forget to run (Windows) `install.bat` or (Linux/MacOS) `install.py` for ComfyUI's Python enclosure or try to install ORT-GPU for CU12 manually (https://onnxruntime.ai/docs/install/#install-onnx-runtime-gpu-cuda-12x)

+- Issue https://github.com/Gourieff/comfyui-reactor-node/issues/173 fix

+

+- Separate Node for the Face Restoration postprocessing (FR https://github.com/Gourieff/comfyui-reactor-node/issues/191), can be found inside ReActor's menu (RestoreFace Node)

+- (Windows) Installation can be done for Python from the System's PATH

+- Different fixes and improvements

+

+- Face Restore Visibility and CodeFormer Weight (Fidelity) options are now available! Don't forget to reload the Node in your existing workflow

+

+ +

+### [0.4.0](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.0)

+

+- Input "input_image" goes first now, it gives a correct bypass and also it is right to have the main input first;

+- You can now save face models as "safetensors" files (`ComfyUI\models\reactor\faces`) and load them into ReActor implementing different scenarios and keeping super lightweight face models of the faces you use:

+

+

+

+### [0.4.0](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.0)

+

+- Input "input_image" goes first now, it gives a correct bypass and also it is right to have the main input first;

+- You can now save face models as "safetensors" files (`ComfyUI\models\reactor\faces`) and load them into ReActor implementing different scenarios and keeping super lightweight face models of the faces you use:

+

+ +

+ +

+- Ability to build and save face models directly from an image:

+

+

+

+- Ability to build and save face models directly from an image:

+

+ +

+- Both the inputs are optional, just connect one of them according to your workflow; if both is connected - `image` has a priority.

+- Different fixes making this extension better.

+

+Thanks to everyone who finds bugs, suggests new features and supports this project!

+

+

+

+- Both the inputs are optional, just connect one of them according to your workflow; if both is connected - `image` has a priority.

+- Different fixes making this extension better.

+

+Thanks to everyone who finds bugs, suggests new features and supports this project!

+

+

+

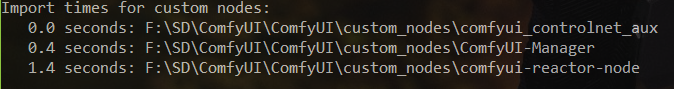

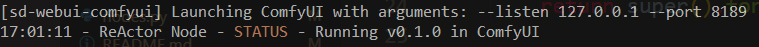

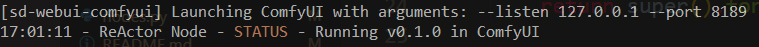

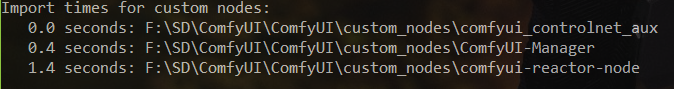

+https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models +11. Run SD WebUI and check console for the message that ReActor Node is running: + +

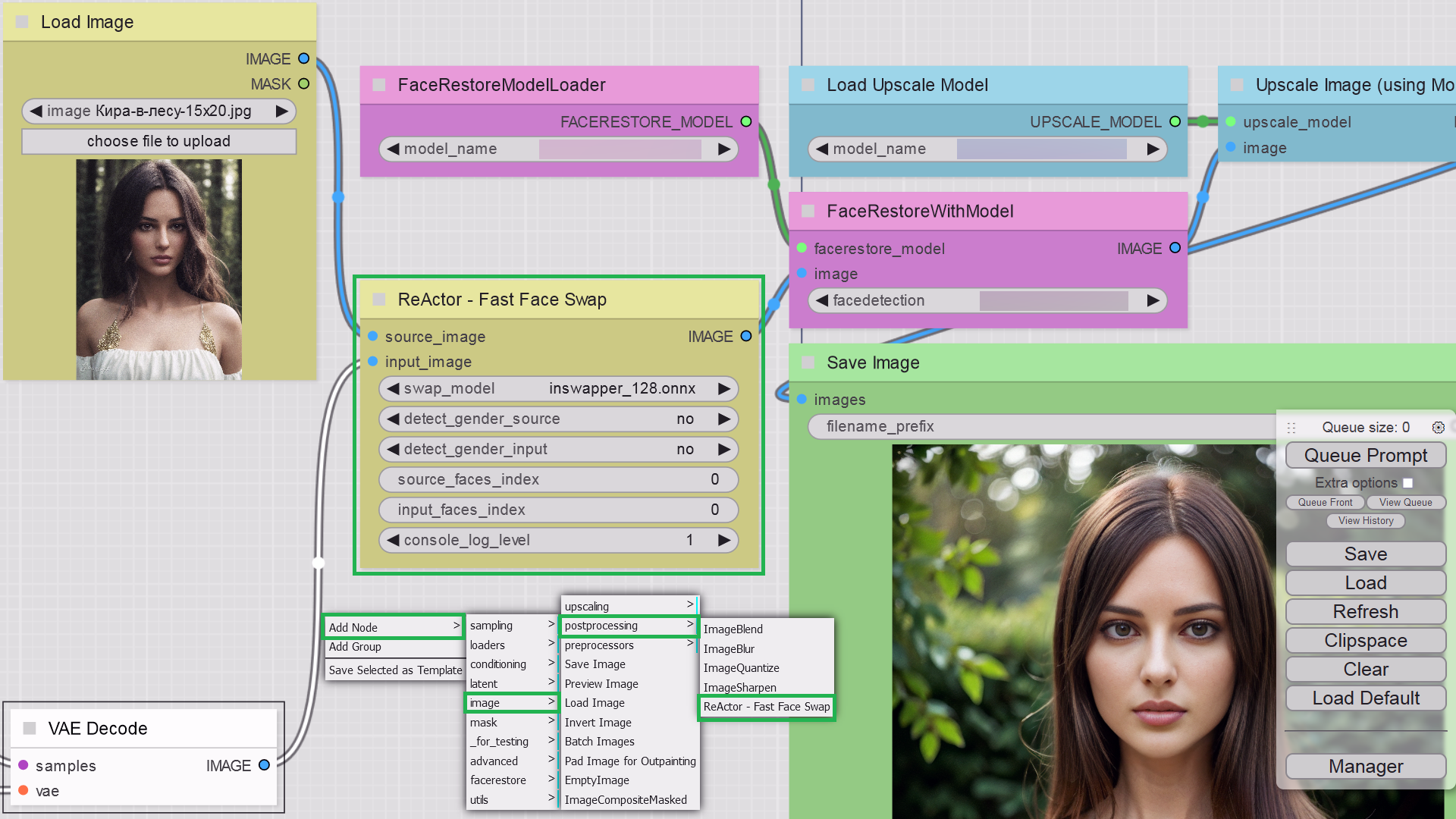

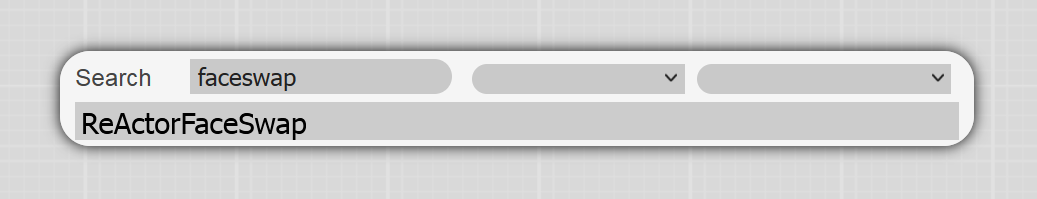

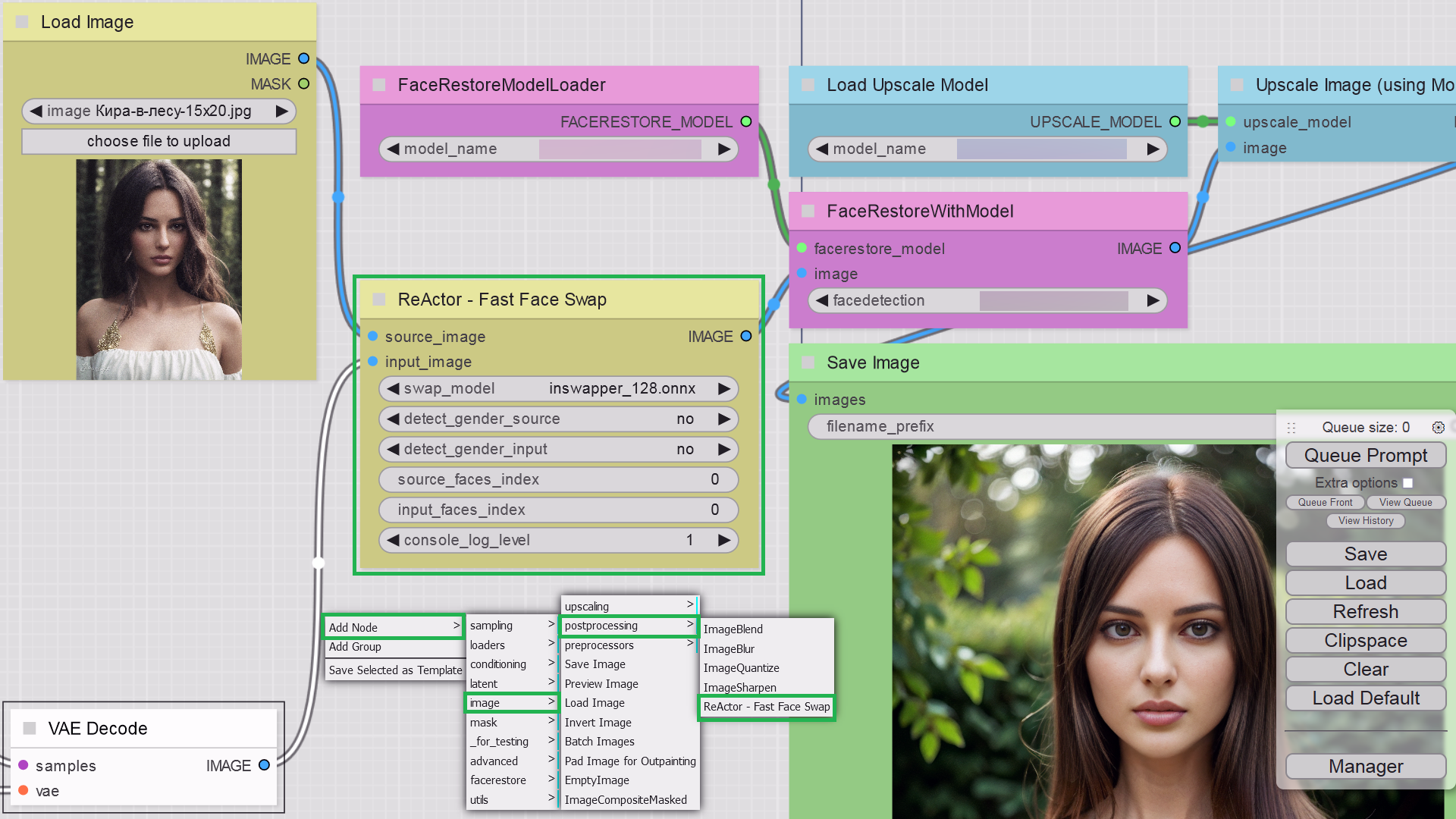

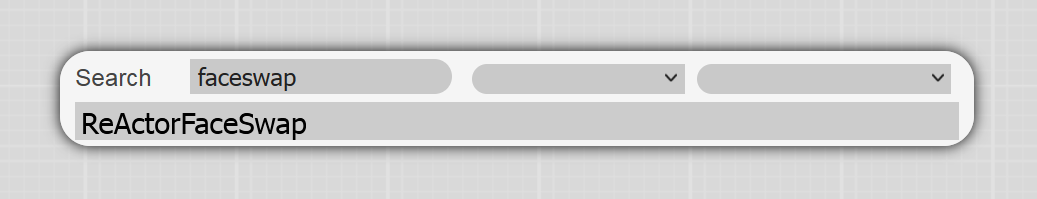

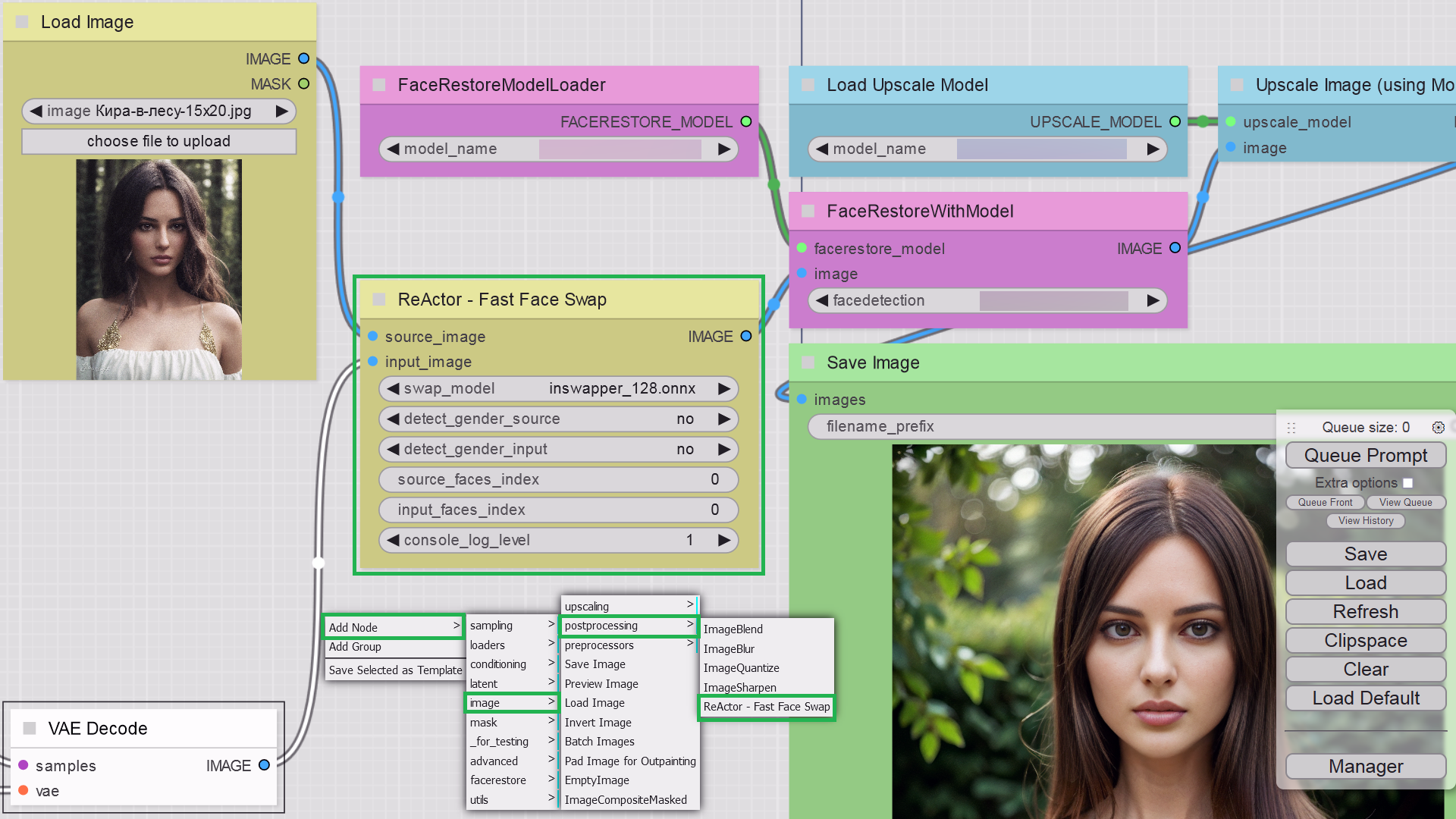

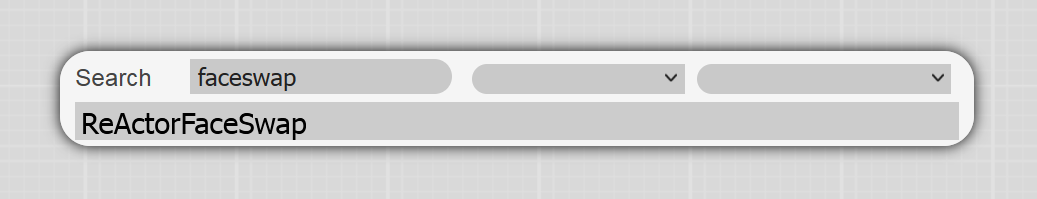

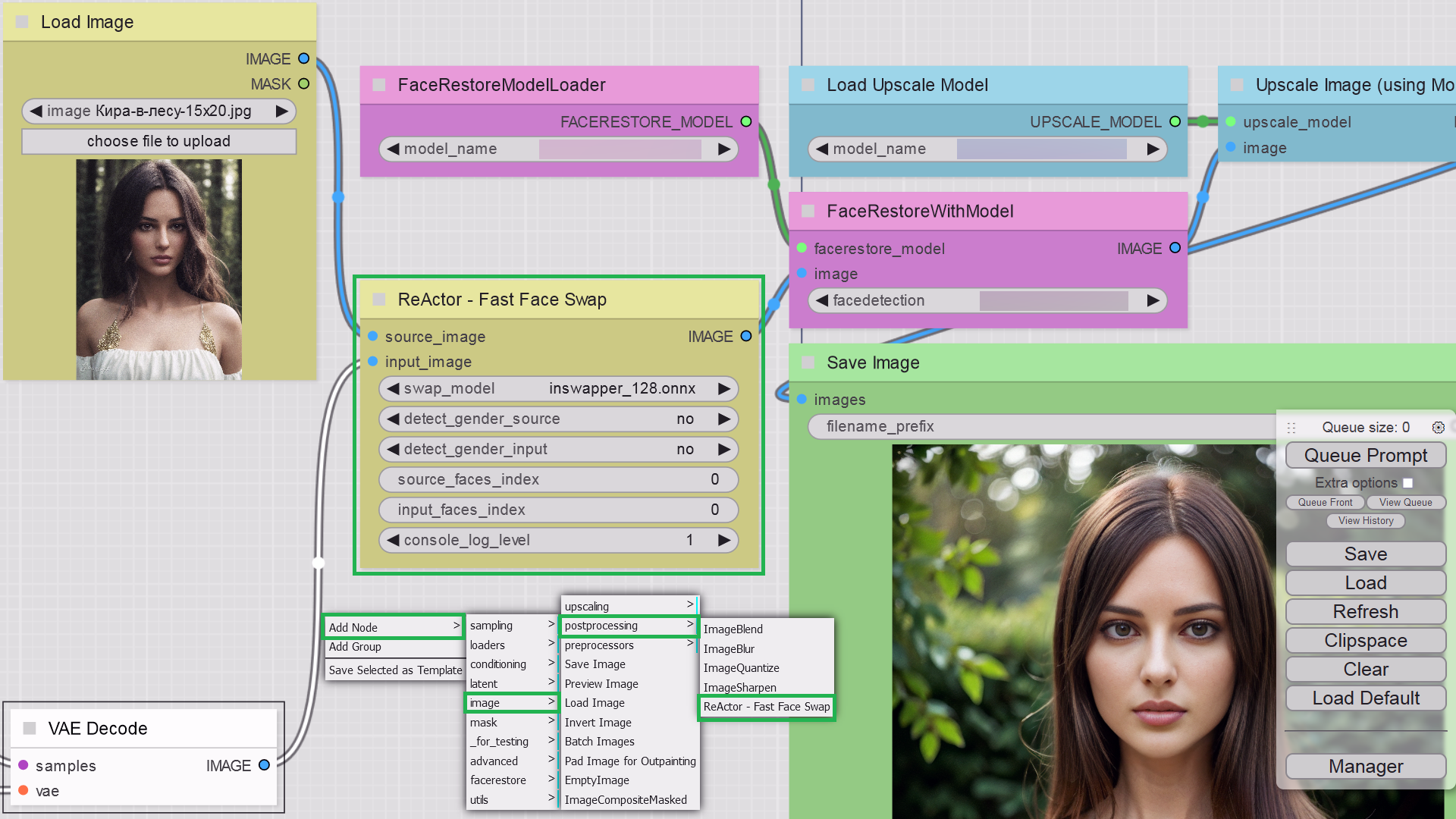

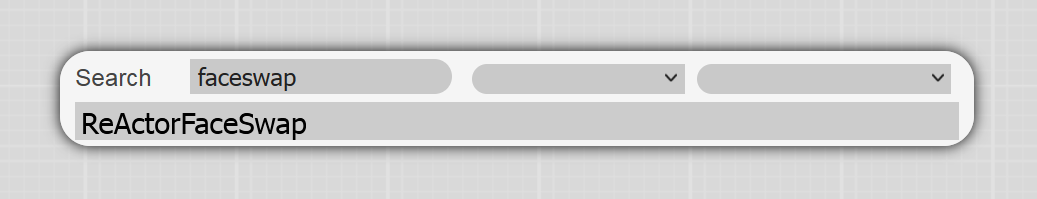

+12. Go to the ComfyUI tab and find there ReActor Node inside the menu `ReActor` or by using a search:

+

+

+12. Go to the ComfyUI tab and find there ReActor Node inside the menu `ReActor` or by using a search:

+ +

+ +

+

+

+

+

+SD WebUI: AUTOMATIC1111 or SD.Next

+ +1. Close (stop) your SD-WebUI/Comfy Server if it's running +2. (For Windows Users): + - Install [Visual Studio 2022](https://visualstudio.microsoft.com/downloads/) (Community version - you need this step to build Insightface) + - OR only [VS C++ Build Tools](https://visualstudio.microsoft.com/visual-cpp-build-tools/) and select "Desktop Development with C++" under "Workloads -> Desktop & Mobile" + - OR if you don't want to install VS or VS C++ BT - follow [this steps (sec. I)](#insightfacebuild) +3. Go to the `extensions\sd-webui-comfyui\ComfyUI\custom_nodes` +4. Open Console or Terminal and run `git clone https://github.com/Gourieff/ComfyUI-ReActor` +5. Go to the SD WebUI root folder, open Console or Terminal and run (Windows users)`.\venv\Scripts\activate` or (Linux/MacOS)`venv/bin/activate` +6. `python -m pip install -U pip` +7. `cd extensions\sd-webui-comfyui\ComfyUI\custom_nodes\ComfyUI-ReActor` +8. `python install.py` +9. Please, wait until the installation process will be finished +10. (From the version 0.3.0) Download additional facerestorers models from the link below and put them into the `extensions\sd-webui-comfyui\ComfyUI\models\facerestore_models` directory:+https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models +11. Run SD WebUI and check console for the message that ReActor Node is running: +

+

+12. Go to the ComfyUI tab and find there ReActor Node inside the menu `ReActor` or by using a search:

+

+

+12. Go to the ComfyUI tab and find there ReActor Node inside the menu `ReActor` or by using a search:

+ +

+ +

+

+

+

+

As well as one or both of "Sams" models from [here](https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/sams) - download (if you don't have them) and put into the "ComfyUI\models\sams" directory +5. Run ComfyUI and find there ReActor Nodes inside the menu `ReActor` or by using a search + +

+

+## Usage

+

+You can find ReActor Nodes inside the menu `ReActor` or by using a search (just type "ReActor" in the search field)

+

+List of Nodes:

+- ••• Main Nodes •••

+ - ReActorFaceSwap (Main Node)

+ - ReActorFaceSwapOpt (Main Node with the additional Options input)

+ - ReActorOptions (Options for ReActorFaceSwapOpt)

+ - ReActorFaceBoost (Face Booster Node)

+ - ReActorMaskHelper (Masking Helper)

+- ••• Operations with Face Models •••

+ - ReActorSaveFaceModel (Save Face Model)

+ - ReActorLoadFaceModel (Load Face Model)

+ - ReActorBuildFaceModel (Build Blended Face Model)

+ - ReActorMakeFaceModelBatch (Make Face Model Batch)

+- ••• Additional Nodes •••

+ - ReActorRestoreFace (Face Restoration)

+ - ReActorImageDublicator (Dublicate one Image to Images List)

+ - ImageRGBA2RGB (Convert RGBA to RGB)

+

+Connect all required slots and run the query.

+

+### Main Node Inputs

+

+- `input_image` - is an image to be processed (target image, analog of "target image" in the SD WebUI extension);

+ - Supported Nodes: "Load Image", "Load Video" or any other nodes providing images as an output;

+- `source_image` - is an image with a face or faces to swap in the `input_image` (source image, analog of "source image" in the SD WebUI extension);

+ - Supported Nodes: "Load Image" or any other nodes providing images as an output;

+- `face_model` - is the input for the "Load Face Model" Node or another ReActor node to provide a face model file (face embedding) you created earlier via the "Save Face Model" Node;

+ - Supported Nodes: "Load Face Model", "Build Blended Face Model";

+

+### Main Node Outputs

+

+- `IMAGE` - is an output with the resulted image;

+ - Supported Nodes: any nodes which have images as an input;

+- `FACE_MODEL` - is an output providing a source face's model being built during the swapping process;

+ - Supported Nodes: "Save Face Model", "ReActor", "Make Face Model Batch";

+

+### Face Restoration

+

+Since version 0.3.0 ReActor Node has a buil-in face restoration.Standalone (Portable) ComfyUI for Windows

+ +1. Do the following: + - Install [Visual Studio 2022](https://visualstudio.microsoft.com/downloads/) (Community version - you need this step to build Insightface) + - OR only [VS C++ Build Tools](https://visualstudio.microsoft.com/visual-cpp-build-tools/) and select "Desktop Development with C++" under "Workloads -> Desktop & Mobile" + - OR if you don't want to install VS or VS C++ BT - follow [this steps (sec. I)](#insightfacebuild) +2. Choose between two options: + - (ComfyUI Manager) Open ComfyUI Manager, click "Install Custom Nodes", type "ReActor" in the "Search" field and then click "Install". After ComfyUI will complete the process - please restart the Server. + - (Manually) Go to `ComfyUI\custom_nodes`, open Console and run `git clone https://github.com/Gourieff/ComfyUI-ReActor` +3. Go to `ComfyUI\custom_nodes\ComfyUI-ReActor` and run `install.bat` +4. If you don't have the "face_yolov8m.pt" Ultralytics model - you can download it from the [Assets](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) and put it into the "ComfyUI\models\ultralytics\bbox" directoryAs well as one or both of "Sams" models from [here](https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/sams) - download (if you don't have them) and put into the "ComfyUI\models\sams" directory +5. Run ComfyUI and find there ReActor Nodes inside the menu `ReActor` or by using a search + +

Just download the models you want (see [Installation](#installation) instruction) and select one of them to restore the resulting face(s) during the faceswap. It will enhance face details and make your result more accurate. + +### Face Indexes + +By default ReActor detects faces in images from "large" to "small".

You can change this option by adding ReActorFaceSwapOpt node with ReActorOptions. + +And if you need to specify faces, you can set indexes for source and input images. + +Index of the first detected face is 0. + +You can set indexes in the order you need.

+E.g.: 0,1,2 (for Source); 1,0,2 (for Input).

This means: the second Input face (index = 1) will be swapped by the first Source face (index = 0) and so on. + +### Genders + +You can specify the gender to detect in images.

+ReActor will swap a face only if it meets the given condition. + +### Face Models + +Since version 0.4.0 you can save face models as "safetensors" files (stored in `ComfyUI\models\reactor\faces`) and load them into ReActor implementing different scenarios and keeping super lightweight face models of the faces you use. + +To make new models appear in the list of the "Load Face Model" Node - just refresh the page of your ComfyUI web application.

+(I recommend you to use ComfyUI Manager - otherwise you workflow can be lost after you refresh the page if you didn't save it before that). + +## Troubleshooting + + + +### **I. (For Windows users) If you still cannot build Insightface for some reasons or just don't want to install Visual Studio or VS C++ Build Tools - do the following:** + +1. (ComfyUI Portable) From the root folder check the version of Python:

run CMD and type `python_embeded\python.exe -V` +2. Download prebuilt Insightface package [for Python 3.10](https://github.com/Gourieff/Assets/raw/main/Insightface/insightface-0.7.3-cp310-cp310-win_amd64.whl) or [for Python 3.11](https://github.com/Gourieff/Assets/raw/main/Insightface/insightface-0.7.3-cp311-cp311-win_amd64.whl) (if in the previous step you see 3.11) or [for Python 3.12](https://github.com/Gourieff/Assets/raw/main/Insightface/insightface-0.7.3-cp312-cp312-win_amd64.whl) (if in the previous step you see 3.12) and put into the stable-diffusion-webui (A1111 or SD.Next) root folder (where you have "webui-user.bat" file) or into ComfyUI root folder if you use ComfyUI Portable +3. From the root folder run: + - (SD WebUI) CMD and `.\venv\Scripts\activate` + - (ComfyUI Portable) run CMD +4. Then update your PIP: + - (SD WebUI) `python -m pip install -U pip` + - (ComfyUI Portable) `python_embeded\python.exe -m pip install -U pip` +5. Then install Insightface: + - (SD WebUI) `pip install insightface-0.7.3-cp310-cp310-win_amd64.whl` (for 3.10) or `pip install insightface-0.7.3-cp311-cp311-win_amd64.whl` (for 3.11) or `pip install insightface-0.7.3-cp312-cp312-win_amd64.whl` (for 3.12) + - (ComfyUI Portable) `python_embeded\python.exe -m pip install insightface-0.7.3-cp310-cp310-win_amd64.whl` (for 3.10) or `python_embeded\python.exe -m pip install insightface-0.7.3-cp311-cp311-win_amd64.whl` (for 3.11) or `python_embeded\python.exe -m pip install insightface-0.7.3-cp312-cp312-win_amd64.whl` (for 3.12) +6. Enjoy! + +### **II. "AttributeError: 'NoneType' object has no attribute 'get'"** + +This error may occur if there's smth wrong with the model file `inswapper_128.onnx` + +Try to download it manually from [here](https://github.com/facefusion/facefusion-assets/releases/download/models/inswapper_128.onnx) +and put it to the `ComfyUI\models\insightface` replacing existing one + +### **III. "reactor.execute() got an unexpected keyword argument 'reference_image'"** + +This means that input points have been changed with the latest update

+Remove the current ReActor Node from your workflow and add it again + +### **IV. ControlNet Aux Node IMPORT failed error when using with ReActor Node** + +1. Close ComfyUI if it runs +2. Go to the ComfyUI root folder, open CMD there and run: + - `python_embeded\python.exe -m pip uninstall -y opencv-python opencv-contrib-python opencv-python-headless` + - `python_embeded\python.exe -m pip install opencv-python==4.7.0.72` +3. That's it! + +

+

+### **V. "ModuleNotFoundError: No module named 'basicsr'" or "subprocess-exited-with-error" during future-0.18.3 installation**

+

+- Download https://github.com/Gourieff/Assets/raw/main/comfyui-reactor-node/future-0.18.3-py3-none-any.whl

+

+### **V. "ModuleNotFoundError: No module named 'basicsr'" or "subprocess-exited-with-error" during future-0.18.3 installation**

+

+- Download https://github.com/Gourieff/Assets/raw/main/comfyui-reactor-node/future-0.18.3-py3-none-any.whl+- Put it to ComfyUI root And run: + + python_embeded\python.exe -m pip install future-0.18.3-py3-none-any.whl + +- Then: + + python_embeded\python.exe -m pip install basicsr + +### **VI. "fatal: fetch-pack: invalid index-pack output" when you try to `git clone` the repository"** + +Try to clone with `--depth=1` (last commit only): + + git clone --depth=1 https://github.com/Gourieff/ComfyUI-ReActor + +Then retrieve the rest (if you need): + + git fetch --unshallow + +## Updating + +Just put .bat or .sh script from this [Repo](https://github.com/Gourieff/sd-webui-extensions-updater) to the `ComfyUI\custom_nodes` directory and run it when you need to check for updates + +### Disclaimer + +This software is meant to be a productive contribution to the rapidly growing AI-generated media industry. It will help artists with tasks such as animating a custom character or using the character as a model for clothing etc. + +The developers of this software are aware of its possible unethical applications and are committed to take preventative measures against them. We will continue to develop this project in the positive direction while adhering to law and ethics. + +Users of this software are expected to use this software responsibly while abiding the local law. If face of a real person is being used, users are suggested to get consent from the concerned person and clearly mention that it is a deepfake when posting content online. **Developers and Contributors of this software are not responsible for actions of end-users.** + +By using this extension you are agree not to create any content that: +- violates any laws; +- causes any harm to a person or persons; +- propagates (spreads) any information (both public or personal) or images (both public or personal) which could be meant for harm; +- spreads misinformation; +- targets vulnerable groups of people. + +This software utilizes the pre-trained models `buffalo_l` and `inswapper_128.onnx`, which are provided by [InsightFace](https://github.com/deepinsight/insightface/). These models are included under the following conditions: + +[From insighface license](https://github.com/deepinsight/insightface/tree/master/python-package): The InsightFace’s pre-trained models are available for non-commercial research purposes only. This includes both auto-downloading models and manually downloaded models. + +Users of this software must strictly adhere to these conditions of use. The developers and maintainers of this software are not responsible for any misuse of InsightFace’s pre-trained models. + +Please note that if you intend to use this software for any commercial purposes, you will need to train your own models or find models that can be used commercially. + +### Models Hashsum + +#### Safe-to-use models have the following hash: + +inswapper_128.onnx +``` +MD5:a3a155b90354160350efd66fed6b3d80 +SHA256:e4a3f08c753cb72d04e10aa0f7dbe3deebbf39567d4ead6dce08e98aa49e16af +``` + +1k3d68.onnx + +``` +MD5:6fb94fcdb0055e3638bf9158e6a108f4 +SHA256:df5c06b8a0c12e422b2ed8947b8869faa4105387f199c477af038aa01f9a45cc +``` + +2d106det.onnx + +``` +MD5:a3613ef9eb3662b4ef88eb90db1fcf26 +SHA256:f001b856447c413801ef5c42091ed0cd516fcd21f2d6b79635b1e733a7109dbf +``` + +det_10g.onnx + +``` +MD5:4c10eef5c9e168357a16fdd580fa8371 +SHA256:5838f7fe053675b1c7a08b633df49e7af5495cee0493c7dcf6697200b85b5b91 +``` + +genderage.onnx + +``` +MD5:81c77ba87ab38163b0dec6b26f8e2af2 +SHA256:4fde69b1c810857b88c64a335084f1c3fe8f01246c9a191b48c7bb756d6652fb +``` + +w600k_r50.onnx + +``` +MD5:80248d427976241cbd1343889ed132b3 +SHA256:4c06341c33c2ca1f86781dab0e829f88ad5b64be9fba56e56bc9ebdefc619e43 +``` + +**Please check hashsums if you download these models from unverified (or untrusted) sources** + + + +## Thanks and Credits + +

+

+ +|file|source|license| +|----|------|-------| +|[buffalo_l.zip](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/buffalo_l.zip) | [DeepInsight](https://github.com/deepinsight/insightface) |  | +| [codeformer-v0.1.0.pth](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/facerestore_models/codeformer-v0.1.0.pth) | [sczhou](https://github.com/sczhou/CodeFormer) |  | +| [GFPGANv1.3.pth](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/facerestore_models/GFPGANv1.3.pth) | [TencentARC](https://github.com/TencentARC/GFPGAN) |  | +| [GFPGANv1.4.pth](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/facerestore_models/GFPGANv1.4.pth) | [TencentARC](https://github.com/TencentARC/GFPGAN) |  | +| [inswapper_128.onnx](https://github.com/facefusion/facefusion-assets/releases/download/models/inswapper_128.onnx) | [DeepInsight](https://github.com/deepinsight/insightface) |  | +| [inswapper_128_fp16.onnx](https://github.com/facefusion/facefusion-assets/releases/download/models/inswapper_128_fp16.onnx) | [Hillobar](https://github.com/Hillobar/Rope) |  | + +[BasicSR](https://github.com/XPixelGroup/BasicSR) - [@XPixelGroup](https://github.com/XPixelGroup)

+[facexlib](https://github.com/xinntao/facexlib) - [@xinntao](https://github.com/xinntao)

+ +[@s0md3v](https://github.com/s0md3v), [@henryruhs](https://github.com/henryruhs) - the original Roop App

+[@ssitu](https://github.com/ssitu) - the first version of [ComfyUI_roop](https://github.com/ssitu/ComfyUI_roop) extension + +

+

+

+

+### Note!

+

+**If you encounter any errors when you use ReActor Node - don't rush to open an issue, first try to remove current ReActor node in your workflow and add it again**

+

+**ReActor Node gets updates from time to time, new functions appear and old node can work with errors or not work at all**

diff --git a/custom_nodes/ComfyUI-ReActor/README_RU.md b/custom_nodes/ComfyUI-ReActor/README_RU.md

new file mode 100644

index 0000000000000000000000000000000000000000..0f9962d14c3720197a0983383c79db9872d651c5

--- /dev/null

+++ b/custom_nodes/ComfyUI-ReActor/README_RU.md

@@ -0,0 +1,497 @@

+Click to expand

+ ++ +|file|source|license| +|----|------|-------| +|[buffalo_l.zip](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/buffalo_l.zip) | [DeepInsight](https://github.com/deepinsight/insightface) |  | +| [codeformer-v0.1.0.pth](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/facerestore_models/codeformer-v0.1.0.pth) | [sczhou](https://github.com/sczhou/CodeFormer) |  | +| [GFPGANv1.3.pth](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/facerestore_models/GFPGANv1.3.pth) | [TencentARC](https://github.com/TencentARC/GFPGAN) |  | +| [GFPGANv1.4.pth](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/facerestore_models/GFPGANv1.4.pth) | [TencentARC](https://github.com/TencentARC/GFPGAN) |  | +| [inswapper_128.onnx](https://github.com/facefusion/facefusion-assets/releases/download/models/inswapper_128.onnx) | [DeepInsight](https://github.com/deepinsight/insightface) |  | +| [inswapper_128_fp16.onnx](https://github.com/facefusion/facefusion-assets/releases/download/models/inswapper_128_fp16.onnx) | [Hillobar](https://github.com/Hillobar/Rope) |  | + +[BasicSR](https://github.com/XPixelGroup/BasicSR) - [@XPixelGroup](https://github.com/XPixelGroup)

+[facexlib](https://github.com/xinntao/facexlib) - [@xinntao](https://github.com/xinntao)

+ +[@s0md3v](https://github.com/s0md3v), [@henryruhs](https://github.com/henryruhs) - the original Roop App

+[@ssitu](https://github.com/ssitu) - the first version of [ComfyUI_roop](https://github.com/ssitu/ComfyUI_roop) extension + +

+

+  +

+

+

+

+

+

+

+

+

+

+

+

+

+  +

+

+ + Поддержать проект + + + +

+ + [](https://github.com/Gourieff/ComfyUI-ReActor/commits/main) +  + [](https://github.com/Gourieff/ComfyUI-ReActor/issues?cacheSeconds=0) + [](https://github.com/Gourieff/ComfyUI-ReActor/issues?q=is%3Aissue+state%3Aclosed) +  + + [English](/README.md) | Русский + +# ReActor Nodes для ComfyUI

-=Безопасно для работы | SFW-Friendly=- + +

+

+### Ноды (nodes) для быстрой и простой замены лиц на любых изображениях для работы с ComfyUI, основан на [ранее заблокированном РеАкторе](https://github.com/Gourieff/comfyui-reactor-node) - теперь имеется встроенный NSFW-детектор, исключающий замену лиц на изображениях с контентом 18+

+

+> Используя данное ПО, вы понимаете и принимаете [ответственность](#disclaimer)

+

+ +

+

+

+

+

+

+

+

+

+

+

+

+

+  +

+ + + Поддержать проект + + + +

+ + [](https://github.com/Gourieff/ComfyUI-ReActor/commits/main) +  + [](https://github.com/Gourieff/ComfyUI-ReActor/issues?cacheSeconds=0) + [](https://github.com/Gourieff/ComfyUI-ReActor/issues?q=is%3Aissue+state%3Aclosed) +  + + [English](/README.md) | Русский + +# ReActor Nodes для ComfyUI

-=Безопасно для работы | SFW-Friendly=- + +

+

+---

+[**Что нового**](#latestupdate) | [**Установка**](#installation) | [**Использование**](#usage) | [**Устранение проблем**](#troubleshooting) | [**Обновление**](#updating) | [**Ответственность**](#disclaimer) | [**Благодарности**](#credits) | [**Заметка**](#note)

+

+---

+

+

+

+

+

+## Что нового в последнем обновлении

+

+### 0.6.0 ALPHA1

+

+- Новый нод `ReActorSetWeight` - теперь можно установить силу замены лица для `source_image` или `face_model` от 0% до 100% (с шагом 12.5%)

+

+ +

+ +

+ +

+

+

+ +

+ +

+

+

+Скачать модели ReSwapper можно отсюда:

+https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models

+Сохраните их в директорию "models/reswapper".

+

+- NSFW-детектор, чтобы не нарушать [правила GitHub](https://docs.github.com/en/site-policy/acceptable-use-policies/github-misinformation-and-disinformation#synthetic--manipulated-media-tools)

+- Новый нод "Unload ReActor Models" - полезен для сложных воркфлоу, когда вам нужно освободить ОЗУ, занятую РеАктором

+

+ +

+- Поддержка ORT CoreML and ROCM EPs, достаточно установить ту версию onnxruntime, которая соответствует вашему GPU

+- Некоторые улучшения скрипта установки для поддержки последней версии ORT-GPU

+

+

+

+- Поддержка ORT CoreML and ROCM EPs, достаточно установить ту версию onnxruntime, которая соответствует вашему GPU

+- Некоторые улучшения скрипта установки для поддержки последней версии ORT-GPU

+

+

+ +

+

+

+- Исправления и улучшения

+

+### 0.5.1

+

+- Поддержка моделей восстановления лиц GPEN 1024/2048 (доступны в датасете на HF https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models)

+- Нод ReActorFaceBoost - попытка улучшить качество заменённых лиц. Идея состоит в том, чтобы восстановить и увеличить заменённое лицо (в соответствии с параметром `face_size` модели реставрации) ДО того, как лицо будет вставлено в целевое изображения (через алгоритмы инсваппера), больше информации [здесь (PR#321)](https://github.com/Gourieff/comfyui-reactor-node/pull/321)

+

+ +

+[Полноразмерное демо-превью](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/0.5.1-whatsnew-02.png)

+

+- Сортировка моделей лиц по алфавиту

+- Множество исправлений и улучшений

+

+### [0.5.0 BETA4](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.5.0)

+

+- Поддержка библиотеки Spandrel при работе с GFPGAN

+

+### 0.5.0 BETA3

+

+- Исправления: "RAM issue", "No detection" для MaskingHelper

+

+### 0.5.0 BETA2

+

+- Появилась возможность строить смешанные модели лиц из пачки уже имеющихся моделей - добавьте для этого нод "Make Face Model Batch" в свой воркфлоу и загрузите несколько моделей через ноды "Load Face Model"

+- Огромный буст производительности модуля анализа изображений! 10-кратный прирост скорости! Работа с видео теперь в удовольствие!

+

+

+

+[Полноразмерное демо-превью](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/0.5.1-whatsnew-02.png)

+

+- Сортировка моделей лиц по алфавиту

+- Множество исправлений и улучшений

+

+### [0.5.0 BETA4](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.5.0)

+

+- Поддержка библиотеки Spandrel при работе с GFPGAN

+

+### 0.5.0 BETA3

+

+- Исправления: "RAM issue", "No detection" для MaskingHelper

+

+### 0.5.0 BETA2

+

+- Появилась возможность строить смешанные модели лиц из пачки уже имеющихся моделей - добавьте для этого нод "Make Face Model Batch" в свой воркфлоу и загрузите несколько моделей через ноды "Load Face Model"

+- Огромный буст производительности модуля анализа изображений! 10-кратный прирост скорости! Работа с видео теперь в удовольствие!

+

+ +

+### 0.5.0 BETA1

+

+- Добавлен выход SWAPPED_FACE для нода Masking Helper

+- FIX: Удалён пустой A-канал на выходе IMAGE нода Masking Helper (вызывавший ошибки с некоторым нодами)

+

+### 0.5.0 ALPHA1

+

+- Нод ReActorBuildFaceModel получил выход "face_model" для отправки совмещенной модели лиц непосредственно в основной Нод:

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v2.json)

+

+- Функции маски лица теперь доступна и в версии для Комфи, просто добавьте нод "ReActorMaskHelper" в воркфлоу и соедините узлы, как показано ниже:

+

+

+

+### 0.5.0 BETA1

+

+- Добавлен выход SWAPPED_FACE для нода Masking Helper

+- FIX: Удалён пустой A-канал на выходе IMAGE нода Masking Helper (вызывавший ошибки с некоторым нодами)

+

+### 0.5.0 ALPHA1

+

+- Нод ReActorBuildFaceModel получил выход "face_model" для отправки совмещенной модели лиц непосредственно в основной Нод:

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v2.json)

+

+- Функции маски лица теперь доступна и в версии для Комфи, просто добавьте нод "ReActorMaskHelper" в воркфлоу и соедините узлы, как показано ниже:

+

+ +

+Если модель "face_yolov8m.pt" у вас отсутствует - можете скачать её [отсюда](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) и положить в папку "ComfyUI\models\ultralytics\bbox"

+

+

+Если модель "face_yolov8m.pt" у вас отсутствует - можете скачать её [отсюда](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) и положить в папку "ComfyUI\models\ultralytics\bbox"

+

+То же самое и с ["sam_vit_b_01ec64.pth"](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/sams/sam_vit_b_01ec64.pth) - скачайте (если отсутствует) и положите в папку "ComfyUI\models\sams"; + +Данный нод поможет вам получить куда более аккуратный результат при замене лиц: + + +

+- Нод ReActorImageDublicator - полезен тем, кто создает видео, помогает продублировать одиночное изображение в несколько копий, чтобы использовать их, к примеру, с VAE энкодером:

+

+

+

+- Нод ReActorImageDublicator - полезен тем, кто создает видео, помогает продублировать одиночное изображение в несколько копий, чтобы использовать их, к примеру, с VAE энкодером:

+

+ +

+- ReActorFaceSwapOpt (упрощенная версия основного нода) + нод ReActorOptions для установки дополнительных опций, как (новые) "отдельный порядок лиц для input/source". Да! Теперь можно установить любой порядок "чтения" индекса лиц на изображении, в т.ч. от большего к меньшему (по умолчанию)!

+

+

+

+- ReActorFaceSwapOpt (упрощенная версия основного нода) + нод ReActorOptions для установки дополнительных опций, как (новые) "отдельный порядок лиц для input/source". Да! Теперь можно установить любой порядок "чтения" индекса лиц на изображении, в т.ч. от большего к меньшему (по умолчанию)!

+

+ +

+- Небольшое улучшение скорости анализа целевых изображений (input)

+

+### [0.4.2](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.2)

+

+- Добавлена поддержка GPEN-BFR-512 и RestoreFormer_Plus_Plus моделей восстановления лиц

+

+Скачать можно здесь: https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models

+

+

+- Небольшое улучшение скорости анализа целевых изображений (input)

+

+### [0.4.2](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.2)

+

+- Добавлена поддержка GPEN-BFR-512 и RestoreFormer_Plus_Plus моделей восстановления лиц

+

+Скачать можно здесь: https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models

+

Добавьте модели в папку `ComfyUI\models\facerestore_models` + + +

+- По многочисленным просьбам появилась возможность строить смешанные модели лиц и в ComfyUI тоже и использовать их с нодом "Load Face Model" Node или в SD WebUI;

+

+Экспериментируйте и создавайте новые лица или совмещайте разные лица нужного вам персонажа, чтобы добиться лучшей точности и схожести с оригиналом!

+

+Достаточно добавить нод "Make Image Batch" (ImpactPack) на вход нового нода РеАктора и загрузить в пачку необходимые вам изображения для построения смешанной модели:

+

+

+

+- По многочисленным просьбам появилась возможность строить смешанные модели лиц и в ComfyUI тоже и использовать их с нодом "Load Face Model" Node или в SD WebUI;

+

+Экспериментируйте и создавайте новые лица или совмещайте разные лица нужного вам персонажа, чтобы добиться лучшей точности и схожести с оригиналом!

+

+Достаточно добавить нод "Make Image Batch" (ImpactPack) на вход нового нода РеАктора и загрузить в пачку необходимые вам изображения для построения смешанной модели:

+

+ +

+Пример результата (на основе лиц 4-х актрис создано новое лицо):

+

+

+

+Пример результата (на основе лиц 4-х актрис создано новое лицо):

+

+ +

+Базовый воркфлоу [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v1.json)

+

+### [0.4.1](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.1)

+

+- Поддержка CUDA 12 - не забудьте запустить (Windows) `install.bat` или (Linux/MacOS) `install.py` для используемого Python окружения или попробуйте установить ORT-GPU для CU12 вручную (https://onnxruntime.ai/docs/install/#install-onnx-runtime-gpu-cuda-12x)

+- Исправление Issue https://github.com/Gourieff/comfyui-reactor-node/issues/173

+

+- Отдельный Нод для восстаноления лиц (FR https://github.com/Gourieff/comfyui-reactor-node/issues/191), располагается внутри меню ReActor (нод RestoreFace)

+- (Windows) Установка зависимостей теперь может быть выполнена в Python из PATH ОС

+- Разные исправления и улучшения

+

+- Face Restore Visibility и CodeFormer Weight (Fidelity) теперь доступны; не забудьте заново добавить Нод в ваших существующих воркфлоу

+

+

+

+Базовый воркфлоу [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v1.json)

+

+### [0.4.1](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.1)

+

+- Поддержка CUDA 12 - не забудьте запустить (Windows) `install.bat` или (Linux/MacOS) `install.py` для используемого Python окружения или попробуйте установить ORT-GPU для CU12 вручную (https://onnxruntime.ai/docs/install/#install-onnx-runtime-gpu-cuda-12x)

+- Исправление Issue https://github.com/Gourieff/comfyui-reactor-node/issues/173

+

+- Отдельный Нод для восстаноления лиц (FR https://github.com/Gourieff/comfyui-reactor-node/issues/191), располагается внутри меню ReActor (нод RestoreFace)

+- (Windows) Установка зависимостей теперь может быть выполнена в Python из PATH ОС

+- Разные исправления и улучшения

+

+- Face Restore Visibility и CodeFormer Weight (Fidelity) теперь доступны; не забудьте заново добавить Нод в ваших существующих воркфлоу

+

+ +

+### [0.4.0](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.0)

+

+- Вход "input_image" теперь идёт первым, это даёт возможность корректного байпаса, а также это правильно с точки зрения расположения входов (главный вход - первый);

+- Теперь можно сохранять модели лиц в качестве файлов "safetensors" (`ComfyUI\models\reactor\faces`) и загружать их в ReActor, реализуя разные сценарии использования, а также храня супер легкие модели лиц, которые вы чаще всего используете:

+

+

+

+### [0.4.0](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.0)

+

+- Вход "input_image" теперь идёт первым, это даёт возможность корректного байпаса, а также это правильно с точки зрения расположения входов (главный вход - первый);

+- Теперь можно сохранять модели лиц в качестве файлов "safetensors" (`ComfyUI\models\reactor\faces`) и загружать их в ReActor, реализуя разные сценарии использования, а также храня супер легкие модели лиц, которые вы чаще всего используете:

+

+ +

+ +

+- Возможность сохранять модели лиц напрямую из изображения:

+

+

+

+- Возможность сохранять модели лиц напрямую из изображения:

+

+ +

+- Оба входа опциональны, присоедините один из них в соответствии с вашим воркфлоу; если присоеденены оба - вход `image` имеет приоритет.

+- Различные исправления, делающие это расширение лучше.

+

+Спасибо всем, кто находит ошибки, предлагает новые функции и поддерживает данный проект!

+

+

+

+- Оба входа опциональны, присоедините один из них в соответствии с вашим воркфлоу; если присоеденены оба - вход `image` имеет приоритет.

+- Различные исправления, делающие это расширение лучше.

+

+Спасибо всем, кто находит ошибки, предлагает новые функции и поддерживает данный проект!

+

+

+

+

+

+## Установка

+

+Предыдущие версии

+ +### 0.5.2 + +- Поддержка моделей ReSwapper. Несмотря на то, что Inswapper по-прежнему даёт лучшее сходство, но ReSwapper развивается - спасибо @somanchiu https://github.com/somanchiu/ReSwapper за эти модели и проект ReSwapper! Это хороший шаг для Сообщества в создании альтернативы Инсваппера! + + +

+ +

+ +

+- Поддержка ORT CoreML and ROCM EPs, достаточно установить ту версию onnxruntime, которая соответствует вашему GPU

+- Некоторые улучшения скрипта установки для поддержки последней версии ORT-GPU

+

+

+

+- Поддержка ORT CoreML and ROCM EPs, достаточно установить ту версию onnxruntime, которая соответствует вашему GPU

+- Некоторые улучшения скрипта установки для поддержки последней версии ORT-GPU

+

+ +

+ +

+[Полноразмерное демо-превью](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/0.5.1-whatsnew-02.png)

+

+- Сортировка моделей лиц по алфавиту

+- Множество исправлений и улучшений

+

+### [0.5.0 BETA4](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.5.0)

+

+- Поддержка библиотеки Spandrel при работе с GFPGAN

+

+### 0.5.0 BETA3

+

+- Исправления: "RAM issue", "No detection" для MaskingHelper

+

+### 0.5.0 BETA2

+

+- Появилась возможность строить смешанные модели лиц из пачки уже имеющихся моделей - добавьте для этого нод "Make Face Model Batch" в свой воркфлоу и загрузите несколько моделей через ноды "Load Face Model"

+- Огромный буст производительности модуля анализа изображений! 10-кратный прирост скорости! Работа с видео теперь в удовольствие!

+

+

+

+[Полноразмерное демо-превью](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/0.5.1-whatsnew-02.png)

+

+- Сортировка моделей лиц по алфавиту

+- Множество исправлений и улучшений

+

+### [0.5.0 BETA4](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.5.0)

+

+- Поддержка библиотеки Spandrel при работе с GFPGAN

+

+### 0.5.0 BETA3

+

+- Исправления: "RAM issue", "No detection" для MaskingHelper

+

+### 0.5.0 BETA2

+

+- Появилась возможность строить смешанные модели лиц из пачки уже имеющихся моделей - добавьте для этого нод "Make Face Model Batch" в свой воркфлоу и загрузите несколько моделей через ноды "Load Face Model"

+- Огромный буст производительности модуля анализа изображений! 10-кратный прирост скорости! Работа с видео теперь в удовольствие!

+

+ +

+### 0.5.0 BETA1

+

+- Добавлен выход SWAPPED_FACE для нода Masking Helper

+- FIX: Удалён пустой A-канал на выходе IMAGE нода Masking Helper (вызывавший ошибки с некоторым нодами)

+

+### 0.5.0 ALPHA1

+

+- Нод ReActorBuildFaceModel получил выход "face_model" для отправки совмещенной модели лиц непосредственно в основной Нод:

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v2.json)

+

+- Функции маски лица теперь доступна и в версии для Комфи, просто добавьте нод "ReActorMaskHelper" в воркфлоу и соедините узлы, как показано ниже:

+

+

+

+### 0.5.0 BETA1

+

+- Добавлен выход SWAPPED_FACE для нода Masking Helper

+- FIX: Удалён пустой A-канал на выходе IMAGE нода Masking Helper (вызывавший ошибки с некоторым нодами)

+

+### 0.5.0 ALPHA1

+

+- Нод ReActorBuildFaceModel получил выход "face_model" для отправки совмещенной модели лиц непосредственно в основной Нод:

+

+Basic workflow [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v2.json)

+

+- Функции маски лица теперь доступна и в версии для Комфи, просто добавьте нод "ReActorMaskHelper" в воркфлоу и соедините узлы, как показано ниже:

+

+ +

+Если модель "face_yolov8m.pt" у вас отсутствует - можете скачать её [отсюда](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) и положить в папку "ComfyUI\models\ultralytics\bbox"

+

+

+Если модель "face_yolov8m.pt" у вас отсутствует - можете скачать её [отсюда](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) и положить в папку "ComfyUI\models\ultralytics\bbox"

++То же самое и с ["sam_vit_b_01ec64.pth"](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/sams/sam_vit_b_01ec64.pth) - скачайте (если отсутствует) и положите в папку "ComfyUI\models\sams"; + +Данный нод поможет вам получить куда более аккуратный результат при замене лиц: + +

+

+- Нод ReActorImageDublicator - полезен тем, кто создает видео, помогает продублировать одиночное изображение в несколько копий, чтобы использовать их, к примеру, с VAE энкодером:

+

+

+

+- Нод ReActorImageDublicator - полезен тем, кто создает видео, помогает продублировать одиночное изображение в несколько копий, чтобы использовать их, к примеру, с VAE энкодером:

+

+ +

+- ReActorFaceSwapOpt (упрощенная версия основного нода) + нод ReActorOptions для установки дополнительных опций, как (новые) "отдельный порядок лиц для input/source". Да! Теперь можно установить любой порядок "чтения" индекса лиц на изображении, в т.ч. от большего к меньшему (по умолчанию)!

+

+

+

+- ReActorFaceSwapOpt (упрощенная версия основного нода) + нод ReActorOptions для установки дополнительных опций, как (новые) "отдельный порядок лиц для input/source". Да! Теперь можно установить любой порядок "чтения" индекса лиц на изображении, в т.ч. от большего к меньшему (по умолчанию)!

+

+ +

+- Небольшое улучшение скорости анализа целевых изображений (input)

+

+### [0.4.2](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.2)

+

+- Добавлена поддержка GPEN-BFR-512 и RestoreFormer_Plus_Plus моделей восстановления лиц

+

+Скачать можно здесь: https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models

+

+

+- Небольшое улучшение скорости анализа целевых изображений (input)

+

+### [0.4.2](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.2)

+

+- Добавлена поддержка GPEN-BFR-512 и RestoreFormer_Plus_Plus моделей восстановления лиц

+

+Скачать можно здесь: https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models

+Добавьте модели в папку `ComfyUI\models\facerestore_models` + +

+

+- По многочисленным просьбам появилась возможность строить смешанные модели лиц и в ComfyUI тоже и использовать их с нодом "Load Face Model" Node или в SD WebUI;

+

+Экспериментируйте и создавайте новые лица или совмещайте разные лица нужного вам персонажа, чтобы добиться лучшей точности и схожести с оригиналом!

+

+Достаточно добавить нод "Make Image Batch" (ImpactPack) на вход нового нода РеАктора и загрузить в пачку необходимые вам изображения для построения смешанной модели:

+

+

+

+- По многочисленным просьбам появилась возможность строить смешанные модели лиц и в ComfyUI тоже и использовать их с нодом "Load Face Model" Node или в SD WebUI;

+

+Экспериментируйте и создавайте новые лица или совмещайте разные лица нужного вам персонажа, чтобы добиться лучшей точности и схожести с оригиналом!

+

+Достаточно добавить нод "Make Image Batch" (ImpactPack) на вход нового нода РеАктора и загрузить в пачку необходимые вам изображения для построения смешанной модели:

+

+ +

+Пример результата (на основе лиц 4-х актрис создано новое лицо):

+

+

+

+Пример результата (на основе лиц 4-х актрис создано новое лицо):

+

+ +

+Базовый воркфлоу [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v1.json)

+

+### [0.4.1](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.1)

+

+- Поддержка CUDA 12 - не забудьте запустить (Windows) `install.bat` или (Linux/MacOS) `install.py` для используемого Python окружения или попробуйте установить ORT-GPU для CU12 вручную (https://onnxruntime.ai/docs/install/#install-onnx-runtime-gpu-cuda-12x)

+- Исправление Issue https://github.com/Gourieff/comfyui-reactor-node/issues/173

+

+- Отдельный Нод для восстаноления лиц (FR https://github.com/Gourieff/comfyui-reactor-node/issues/191), располагается внутри меню ReActor (нод RestoreFace)

+- (Windows) Установка зависимостей теперь может быть выполнена в Python из PATH ОС

+- Разные исправления и улучшения

+

+- Face Restore Visibility и CodeFormer Weight (Fidelity) теперь доступны; не забудьте заново добавить Нод в ваших существующих воркфлоу

+

+

+

+Базовый воркфлоу [💾](https://github.com/Gourieff/Assets/blob/main/comfyui-reactor-node/workflows/ReActor--Build-Blended-Face-Model--v1.json)

+

+### [0.4.1](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.1)

+

+- Поддержка CUDA 12 - не забудьте запустить (Windows) `install.bat` или (Linux/MacOS) `install.py` для используемого Python окружения или попробуйте установить ORT-GPU для CU12 вручную (https://onnxruntime.ai/docs/install/#install-onnx-runtime-gpu-cuda-12x)

+- Исправление Issue https://github.com/Gourieff/comfyui-reactor-node/issues/173

+

+- Отдельный Нод для восстаноления лиц (FR https://github.com/Gourieff/comfyui-reactor-node/issues/191), располагается внутри меню ReActor (нод RestoreFace)

+- (Windows) Установка зависимостей теперь может быть выполнена в Python из PATH ОС

+- Разные исправления и улучшения

+

+- Face Restore Visibility и CodeFormer Weight (Fidelity) теперь доступны; не забудьте заново добавить Нод в ваших существующих воркфлоу

+

+ +

+### [0.4.0](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.0)

+

+- Вход "input_image" теперь идёт первым, это даёт возможность корректного байпаса, а также это правильно с точки зрения расположения входов (главный вход - первый);

+- Теперь можно сохранять модели лиц в качестве файлов "safetensors" (`ComfyUI\models\reactor\faces`) и загружать их в ReActor, реализуя разные сценарии использования, а также храня супер легкие модели лиц, которые вы чаще всего используете:

+

+

+

+### [0.4.0](https://github.com/Gourieff/comfyui-reactor-node/releases/tag/v0.4.0)

+

+- Вход "input_image" теперь идёт первым, это даёт возможность корректного байпаса, а также это правильно с точки зрения расположения входов (главный вход - первый);

+- Теперь можно сохранять модели лиц в качестве файлов "safetensors" (`ComfyUI\models\reactor\faces`) и загружать их в ReActor, реализуя разные сценарии использования, а также храня супер легкие модели лиц, которые вы чаще всего используете:

+

+ +

+ +

+- Возможность сохранять модели лиц напрямую из изображения:

+

+

+

+- Возможность сохранять модели лиц напрямую из изображения:

+

+ +

+- Оба входа опциональны, присоедините один из них в соответствии с вашим воркфлоу; если присоеденены оба - вход `image` имеет приоритет.

+- Различные исправления, делающие это расширение лучше.

+

+Спасибо всем, кто находит ошибки, предлагает новые функции и поддерживает данный проект!

+

+

+

+- Оба входа опциональны, присоедините один из них в соответствии с вашим воркфлоу; если присоеденены оба - вход `image` имеет приоритет.

+- Различные исправления, делающие это расширение лучше.

+

+Спасибо всем, кто находит ошибки, предлагает новые функции и поддерживает данный проект!

+

+

+

+https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models +11. Запустите SD WebUI и проверьте консоль на сообщение, что ReActor Node работает: + +

+12. Перейдите во вкладку ComfyUI и найдите там ReActor Node внутри меню `ReActor` или через поиск:

+

+

+12. Перейдите во вкладку ComfyUI и найдите там ReActor Node внутри меню `ReActor` или через поиск:

+ +

+ +

+

+

+

+

+SD WebUI: AUTOMATIC1111 или SD.Next

+ +1. Закройте (остановите) SD-WebUI Сервер, если запущен +2. (Для пользователей Windows): + - Установите [Visual Studio 2022](https://visualstudio.microsoft.com/downloads/) (Например, версию Community - этот шаг нужен для правильной компиляции библиотеки Insightface) + - ИЛИ только [VS C++ Build Tools](https://visualstudio.microsoft.com/visual-cpp-build-tools/), выберите "Desktop Development with C++" в разделе "Workloads -> Desktop & Mobile" + - ИЛИ если же вы не хотите устанавливать что-либо из вышеуказанного - выполните [данные шаги (раздел. I)](#insightfacebuild) +3. Перейдите в `extensions\sd-webui-comfyui\ComfyUI\custom_nodes` +4. Откройте Консоль или Терминал и выполните `git clone https://github.com/Gourieff/ComfyUI-ReActor` +5. Перейдите в корневую директорию SD WebUI, откройте Консоль или Терминал и выполните (для пользователей Windows)`.\venv\Scripts\activate` или (для пользователей Linux/MacOS)`venv/bin/activate` +6. `python -m pip install -U pip` +7. `cd extensions\sd-webui-comfyui\ComfyUI\custom_nodes\ComfyUI-ReActor` +8. `python install.py` +9. Пожалуйста, дождитесь полного завершения установки +10. (Начиная с версии 0.3.0) Скачайте дополнительные модели восстановления лиц (по ссылке ниже) и сохраните их в папку `extensions\sd-webui-comfyui\ComfyUI\models\facerestore_models`:+https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/facerestore_models +11. Запустите SD WebUI и проверьте консоль на сообщение, что ReActor Node работает: +

+

+12. Перейдите во вкладку ComfyUI и найдите там ReActor Node внутри меню `ReActor` или через поиск:

+

+

+12. Перейдите во вкладку ComfyUI и найдите там ReActor Node внутри меню `ReActor` или через поиск:

+ +

+ +

+

+

+

+

+То же самое и с "Sams" моделями, скачайте одну или обе [отсюда](https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/sams) - и положите в папку "ComfyUI\models\sams" +5. Запустите ComfyUI и найдите ReActor Node внутри меню `ReActor` или через поиск + +

+

+

+

+## Использование

+

+Вы можете найти ноды ReActor внутри меню `ReActor` или через поиск (достаточно ввести "ReActor" в поисковой строке)

+

+Список нодов:

+- ••• Main Nodes •••

+ - ReActorFaceSwap (Основной нод)

+ - ReActorFaceSwapOpt (Основной нод с доп. входом Options)

+ - ReActorOptions (Опции для ReActorFaceSwapOpt)

+ - ReActorFaceBoost (Нод Face Booster)

+ - ReActorMaskHelper (Masking Helper)

+- ••• Operations with Face Models •••

+ - ReActorSaveFaceModel (Save Face Model)

+ - ReActorLoadFaceModel (Load Face Model)

+ - ReActorBuildFaceModel (Build Blended Face Model)

+ - ReActorMakeFaceModelBatch (Make Face Model Batch)

+- ••• Additional Nodes •••

+ - ReActorRestoreFace (Face Restoration)

+ - ReActorImageDublicator (Dublicate one Image to Images List)

+ - ImageRGBA2RGB (Convert RGBA to RGB)

+

+Соедините все необходимые слоты (slots) и запустите очередь (query).

+

+### Входы основного Нода

+

+- `input_image` - это изображение, на котором надо поменять лицо или лица (целевое изображение, аналог "target image" в версии для SD WebUI);

+ - Поддерживаемые ноды: "Load Image", "Load Video" или любые другие ноды предоставляющие изображение в качестве выхода;

+- `source_image` - это изображение с лицом или лицами для замены (изображение-источник, аналог "source image" в версии для SD WebUI);

+ - Поддерживаемые ноды: "Load Image" или любые другие ноды с выходом Image(s);

+- `face_model` - это вход для выхода с нода "Load Face Model" или другого нода ReActor для загрузки модели лица (face model или face embedding), которое вы создали ранее через нод "Save Face Model";

+ - Поддерживаемые ноды: "Load Face Model", "Build Blended Face Model";

+

+### Выходы основного Нода

+

+- `IMAGE` - выход с готовым изображением (результатом);

+ - Поддерживаемые ноды: любые ноды с изображением на входе;

+- `FACE_MODEL` - выход, предоставляющий модель лица, построенную в ходе замены;

+ - Поддерживаемые ноды: "Save Face Model", "ReActor", "Make Face Model Batch";

+

+### Восстановление лиц

+

+Начиная с версии 0.3.0 ReActor Node имеет встроенное восстановление лиц.Портативная версия ComfyUI для Windows

+ +1. Сделайте следующее: + - Установите [Visual Studio 2022](https://visualstudio.microsoft.com/downloads/) (Например, версию Community - этот шаг нужен для правильной компиляции библиотеки Insightface) + - ИЛИ только [VS C++ Build Tools](https://visualstudio.microsoft.com/visual-cpp-build-tools/), выберите "Desktop Development with C++" в разделе "Workloads -> Desktop & Mobile" + - ИЛИ если же вы не хотите устанавливать что-либо из вышеуказанного - выполните [данные шаги (раздел. I)](#insightfacebuild) +2. Выберите из двух вариантов: + - (ComfyUI Manager) Откройте ComfyUI Manager, нажвите "Install Custom Nodes", введите "ReActor" в поле "Search" и далее нажмите "Install". После того, как ComfyUI завершит установку, перезагрузите сервер. + - (Вручную) Перейдите в `ComfyUI\custom_nodes`, откройте Консоль и выполните `git clone https://github.com/Gourieff/ComfyUI-ReActor` +3. Перейдите `ComfyUI\custom_nodes\ComfyUI-ReActor` и запустите `install.bat`, дождитесь окончания установки +4. Если модель "face_yolov8m.pt" у вас отсутствует - можете скачать её [отсюда](https://huggingface.co/datasets/Gourieff/ReActor/blob/main/models/detection/bbox/face_yolov8m.pt) и положить в папку "ComfyUI\models\ultralytics\bbox"+То же самое и с "Sams" моделями, скачайте одну или обе [отсюда](https://huggingface.co/datasets/Gourieff/ReActor/tree/main/models/sams) - и положите в папку "ComfyUI\models\sams" +5. Запустите ComfyUI и найдите ReActor Node внутри меню `ReActor` или через поиск + +

Скачайте нужные вам модели (см. инструкцию по [Установке](#installation)) и выберите одну из них, чтобы улучшить качество финального лица. + +### Индексы Лиц (Face Indexes) + +По умолчанию ReActor определяет лица на изображении в порядке от "большого" к "малому".

Вы можете поменять эту опцию, используя нод ReActorFaceSwapOpt вместе с ReActorOptions. + +Если вам нужно заменить определенное лицо, вы можете указать индекс для исходного (source, с лицом) и входного (input, где будет замена лица) изображений. + +Индекс первого обнаруженного лица - 0. + +Вы можете задать индексы в том порядке, который вам нужен.

+Например: 0,1,2 (для Source); 1,0,2 (для Input).

Это означает, что: второе лицо из Input (индекс = 1) будет заменено первым лицом из Source (индекс = 0) и так далее. + +### Определение Пола + +Вы можете обозначить, какой пол нужно определять на изображении.

+ReActor заменит только то лицо, которое удовлетворяет заданному условию. + +### Модели Лиц +Начиная с версии 0.4.0, вы можете сохранять модели лиц как файлы "safetensors" (хранятся в папке `ComfyUI\models\reactor\faces`) и загружать их в ReActor, реализуя разные сценарии использования, а также храня супер легкие модели лиц, которые вы чаще всего используете. + +Чтобы новые модели появились в списке моделей нода "Load Face Model" - обновите страницу of с ComfyUI.