{

"cells": [

{

"attachments": {},

"cell_type": "markdown",

"id": "190e8e4c-461f-4521-ae7f-3491fa827ab7",

"metadata": {

"tags": []

},

"source": [

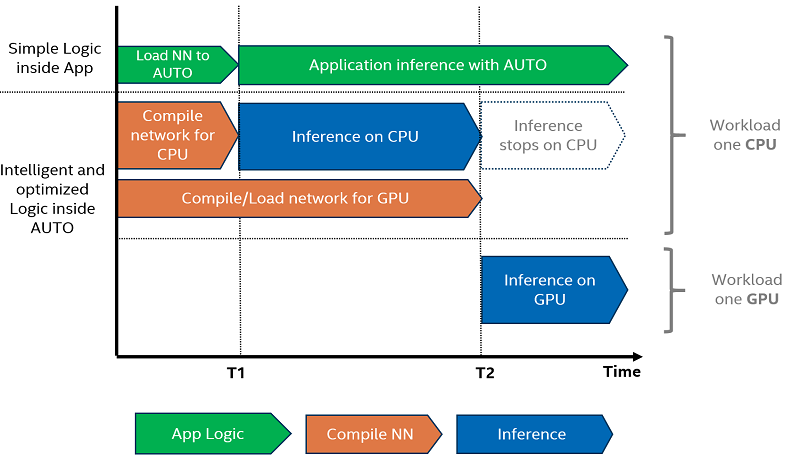

"# Automatic Device Selection with OpenVINO™\n",

"\n",

"The [Auto device](https://docs.openvino.ai/2024/openvino-workflow/running-inference/inference-devices-and-modes/auto-device-selection.html) (or AUTO in short) selects the most suitable device for inference by considering the model precision, power efficiency and processing capability of the available [compute devices](https://docs.openvino.ai/2024/about-openvino/compatibility-and-support/supported-devices.html). The model precision (such as `FP32`, `FP16`, `INT8`, etc.) is the first consideration to filter out the devices that cannot run the network efficiently.\n",

"\n",

"Next, if dedicated accelerators are available, these devices are preferred (for example, integrated and discrete [GPU](https://docs.openvino.ai/2024/openvino-workflow/running-inference/inference-devices-and-modes/gpu-device.html)). [CPU](https://docs.openvino.ai/2024/openvino-workflow/running-inference/inference-devices-and-modes/cpu-device.html) is used as the default \"fallback device\". Keep in mind that AUTO makes this selection only once, during the loading of a model. \n",

"\n",

"When using accelerator devices such as GPUs, loading models to these devices may take a long time. To address this challenge for applications that require fast first inference response, AUTO starts inference immediately on the CPU and then transparently shifts inference to the GPU, once it is ready. This dramatically reduces the time to execute first inference.\n",

"\n",

"\n",

"\n",

"\n",

"\n",

"#### Table of contents:\n",

"\n",

"- [Import modules and create Core](#Import-modules-and-create-Core)\n",

"- [Convert the model to OpenVINO IR format](#Convert-the-model-to-OpenVINO-IR-format)\n",

"- [(1) Simplify selection logic](#(1)-Simplify-selection-logic)\n",

" - [Default behavior of Core::compile_model API without device_name](#Default-behavior-of-Core::compile_model-API-without-device_name)\n",

" - [Explicitly pass AUTO as device_name to Core::compile_model API](#Explicitly-pass-AUTO-as-device_name-to-Core::compile_model-API)\n",

"- [(2) Improve the first inference latency](#(2)-Improve-the-first-inference-latency)\n",

" - [Load an Image](#Load-an-Image)\n",

" - [Load the model to GPU device and perform inference](#Load-the-model-to-GPU-device-and-perform-inference)\n",

" - [Load the model using AUTO device and do inference](#Load-the-model-using-AUTO-device-and-do-inference)\n",

"- [(3) Achieve different performance for different targets](#(3)-Achieve-different-performance-for-different-targets)\n",

" - [Class and callback definition](#Class-and-callback-definition)\n",

" - [Inference with THROUGHPUT hint](#Inference-with-THROUGHPUT-hint)\n",

" - [Inference with LATENCY hint](#Inference-with-LATENCY-hint)\n",

" - [Difference in FPS and latency](#Difference-in-FPS-and-latency)\n",

"\n"

]

},

{

"attachments": {},

"cell_type": "markdown",

"id": "fcfc461c",

"metadata": {},

"source": [

"## Import modules and create Core\n",

"[back to top ⬆️](#Table-of-contents:)\n"

]

},

{

"cell_type": "code",

"execution_count": 1,

"id": "967c128a",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"import platform\n",

"\n",

"# Install required packages\n",

"%pip install -q \"openvino>=2023.1.0\" Pillow torch torchvision tqdm --extra-index-url https://download.pytorch.org/whl/cpu\n",

"\n",

"if platform.system() != \"Windows\":\n",

" %pip install -q \"matplotlib>=3.4\"\n",

"else:\n",

" %pip install -q \"matplotlib>=3.4,<3.7\""

]

},

{

"cell_type": "code",

"execution_count": 2,

"id": "6c7f9f06",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"import time\n",

"import sys\n",

"\n",

"import openvino as ov\n",

"\n",

"from IPython.display import Markdown, display\n",

"\n",

"core = ov.Core()\n",

"\n",

"if not any(\"GPU\" in device for device in core.available_devices):\n",

" display(\n",

" Markdown(\n",

" '

Warning: A GPU device is not available. This notebook requires GPU device to have meaningful results.

'\n",

" )\n",

" )"

]

},

{

"attachments": {},

"cell_type": "markdown",

"id": "ee513ee2",

"metadata": {},

"source": [

"## Convert the model to OpenVINO IR format\n",

"[back to top ⬆️](#Table-of-contents:)\n",

"\n",

"This tutorial uses [resnet50](https://pytorch.org/vision/main/models/generated/torchvision.models.resnet50.html#resnet50) model from [torchvision](https://pytorch.org/vision/main/index.html?highlight=torchvision#module-torchvision) library.\n",

"ResNet 50 is image classification model pre-trained on ImageNet dataset described in paper [\"Deep Residual Learning for Image Recognition\"](https://arxiv.org/abs/1512.03385).\n",

"From OpenVINO 2023.0, we can directly convert a model from the PyTorch format to the OpenVINO IR format using model conversion API. To convert model, we should provide model object instance into `ov.convert_model` function, optionally, we can specify input shape for conversion (by default models from PyTorch converted with dynamic input shapes). `ov.convert_model` returns openvino.runtime.Model object ready to be loaded on a device with `ov.compile_model` or serialized for next usage with `ov.save_model`. \n",

"\n",

"For more information about model conversion API, see this [page](https://docs.openvino.ai/2024/openvino-workflow/model-preparation.html)."

]

},

{

"cell_type": "code",

"execution_count": 3,

"id": "e58e0582-a77f-4621-ba92-429a9c5f5e56",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Read IR model from model/resnet50.xml\n"

]

}

],

"source": [

"import torchvision\n",

"from pathlib import Path\n",

"\n",

"base_model_dir = Path(\"./model\")\n",

"base_model_dir.mkdir(exist_ok=True)\n",

"model_path = base_model_dir / \"resnet50.xml\"\n",

"\n",

"if not model_path.exists():\n",

" pt_model = torchvision.models.resnet50(weights=\"DEFAULT\")\n",

" ov_model = ov.convert_model(pt_model, input=[[1, 3, 224, 224]])\n",

" ov.save_model(ov_model, str(model_path))\n",

" print(\"IR model saved to {}\".format(model_path))\n",

"else:\n",

" print(\"Read IR model from {}\".format(model_path))\n",

" ov_model = core.read_model(model_path)"

]

},

{

"attachments": {},

"cell_type": "markdown",

"id": "740bfdd8",

"metadata": {},

"source": [

"## (1) Simplify selection logic\n",

"[back to top ⬆️](#Table-of-contents:)\n",

"\n",

"### Default behavior of Core::compile_model API without device_name\n",

"[back to top ⬆️](#Table-of-contents:)\n",

"\n",

"\n",

"By default, `compile_model` API will select **AUTO** as `device_name` if no device is specified."

]

},

{

"cell_type": "code",

"execution_count": 4,

"id": "73d7aedb",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Successfully compiled model without a device_name.\n"

]

}

],

"source": [

"# Set LOG_LEVEL to LOG_INFO.\n",

"core.set_property(\"AUTO\", {\"LOG_LEVEL\": \"LOG_INFO\"})\n",

"\n",

"# Load the model onto the target device.\n",

"compiled_model = core.compile_model(ov_model)\n",

"\n",

"if isinstance(compiled_model, ov.CompiledModel):\n",

" print(\"Successfully compiled model without a device_name.\")"

]

},

{

"cell_type": "code",

"execution_count": 5,

"id": "66bfa571-8241-4623-9e9e-01bf2d0f89d4",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Deleted compiled_model\n"

]

}

],

"source": [

"# Deleted model will wait until compiling on the selected device is complete.\n",

"del compiled_model\n",

"print(\"Deleted compiled_model\")"

]

},

{

"attachments": {},

"cell_type": "markdown",

"id": "85b48949",

"metadata": {},

"source": [

"### Explicitly pass AUTO as device_name to Core::compile_model API\n",

"[back to top ⬆️](#Table-of-contents:)\n",

"\n",

"It is optional, but passing AUTO explicitly as `device_name` may improve readability of your code."

]

},

{

"cell_type": "code",

"execution_count": 6,

"id": "0814073d",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Successfully compiled model using AUTO.\n"

]

}

],

"source": [

"# Set LOG_LEVEL to LOG_NONE.\n",

"core.set_property(\"AUTO\", {\"LOG_LEVEL\": \"LOG_NONE\"})\n",

"\n",

"compiled_model = core.compile_model(model=ov_model, device_name=\"AUTO\")\n",

"\n",

"if isinstance(compiled_model, ov.CompiledModel):\n",

" print(\"Successfully compiled model using AUTO.\")"

]

},

{

"cell_type": "code",

"execution_count": 7,

"id": "58e765f5-8e31-4eb4-bed8-9fba30bb29e8",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Deleted compiled_model\n"

]

}

],

"source": [

"# Deleted model will wait until compiling on the selected device is complete.\n",

"del compiled_model\n",

"print(\"Deleted compiled_model\")"

]

},

{

"attachments": {},

"cell_type": "markdown",

"id": "705ce668",

"metadata": {},

"source": [

"## (2) Improve the first inference latency\n",

"[back to top ⬆️](#Table-of-contents:)\n",

"\n",

"One of the benefits of using AUTO device selection is reducing FIL (first inference latency). FIL is the model compilation time combined with the first inference execution time. Using the CPU device explicitly will produce the shortest first inference latency, as the OpenVINO graph representation loads quickly on CPU, using just-in-time (JIT) compilation. The challenge is with GPU devices since OpenCL graph complication to GPU-optimized kernels takes a few seconds to complete. This initialization time may be intolerable for some applications. To avoid this delay, the AUTO uses CPU transparently as the first inference device until GPU is ready.\n",

"\n",

"### Load an Image\n",

"[back to top ⬆️](#Table-of-contents:)\n",

"\n",

"torchvision library provides model specific input transformation function, we will reuse it for preparing input data."

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "384cb81a",

"metadata": {},

"outputs": [],

"source": [

"# Fetch `notebook_utils` module\n",

"import requests\n",

"\n",

"r = requests.get(url=\"https://raw.githubusercontent.com/openvinotoolkit/openvino_notebooks/latest/utils/notebook_utils.py\")\n",

"open(\"notebook_utils.py\", \"w\").write(r.text)\n",

"\n",

"from notebook_utils import download_file"

]

},

{

"cell_type": "code",

"execution_count": 8,

"id": "dc1cfeaf",

"metadata": {

"tags": []

},

"outputs": [

{

"data": {