diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..074dc911d793a167c4836e60db87dfa7a34f3fd6

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,10 @@

+*.swp

+*.swo

+

+__pycache__

+*.pyc

+

+sr_interactive_tmp

+sr_interactive_tmp_output

+

+gradio_cached_examples

diff --git a/KAIR/LICENSE b/KAIR/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..ddd784fef1443dbdf6bbd00495564e93554c7e4c

--- /dev/null

+++ b/KAIR/LICENSE

@@ -0,0 +1,9 @@

+MIT License

+

+Copyright (c) 2019 Kai Zhang

+

+Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

diff --git a/KAIR/README.md b/KAIR/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..8dd33fabc499cf4287c6deaed49f9c6b04709241

--- /dev/null

+++ b/KAIR/README.md

@@ -0,0 +1,343 @@

+## Training and testing codes for USRNet, DnCNN, FFDNet, SRMD, DPSR, MSRResNet, ESRGAN, BSRGAN, SwinIR, VRT

+[](https://github.com/cszn/KAIR/releases)

+

+[Kai Zhang](https://cszn.github.io/)

+

+*[Computer Vision Lab](https://vision.ee.ethz.ch/the-institute.html), ETH Zurich, Switzerland*

+

+_______

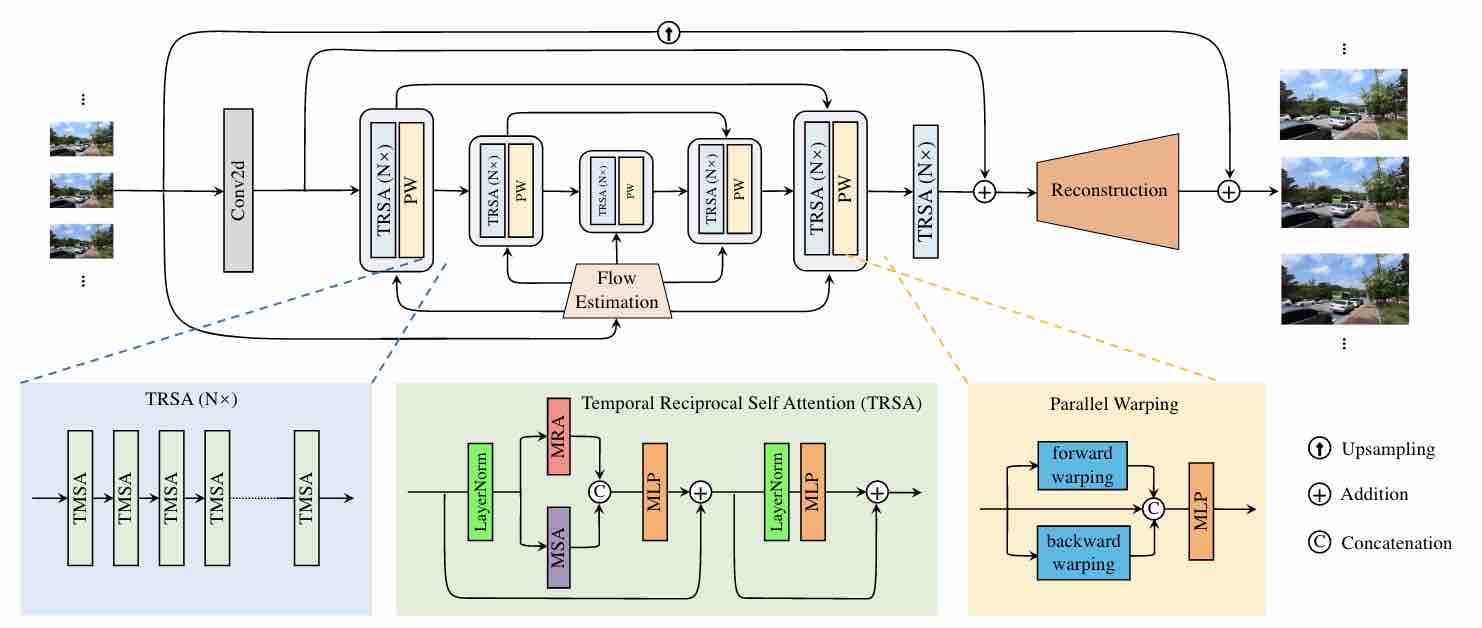

+- **_News (2022-02-15)_**: We release [the training codes](https://github.com/cszn/KAIR/blob/master/docs/README_VRT.md) of [VRT ](https://github.com/JingyunLiang/VRT) for video SR, deblurring and denoising.

+

+

+  +

+  +

+  +

+

+

+

+

+- **_News (2021-12-23)_**: Our techniques are adopted in [https://www.amemori.ai/](https://www.amemori.ai/).

+- **_News (2021-12-23)_**: Our new work for practical image denoising.

+

+-

+- [

+- [ ](https://imgsli.com/ODczMTc)

+[

](https://imgsli.com/ODczMTc)

+[ ](https://imgsli.com/ODczMTY)

+- **_News (2021-09-09)_**: Add [main_download_pretrained_models.py](https://github.com/cszn/KAIR/blob/master/main_download_pretrained_models.py) to download pre-trained models.

+- **_News (2021-09-08)_**: Add [matlab code](https://github.com/cszn/KAIR/tree/master/matlab) to zoom local part of an image for the purpose of comparison between different results.

+- **_News (2021-09-07)_**: We upload [the training code](https://github.com/cszn/KAIR/blob/master/docs/README_SwinIR.md) of [SwinIR ](https://github.com/JingyunLiang/SwinIR) and provide an [interactive online Colob demo for real-world image SR](https://colab.research.google.com/gist/JingyunLiang/a5e3e54bc9ef8d7bf594f6fee8208533/swinir-demo-on-real-world-image-sr.ipynb). Try to super-resolve your own images on Colab!

](https://imgsli.com/ODczMTY)

+- **_News (2021-09-09)_**: Add [main_download_pretrained_models.py](https://github.com/cszn/KAIR/blob/master/main_download_pretrained_models.py) to download pre-trained models.

+- **_News (2021-09-08)_**: Add [matlab code](https://github.com/cszn/KAIR/tree/master/matlab) to zoom local part of an image for the purpose of comparison between different results.

+- **_News (2021-09-07)_**: We upload [the training code](https://github.com/cszn/KAIR/blob/master/docs/README_SwinIR.md) of [SwinIR ](https://github.com/JingyunLiang/SwinIR) and provide an [interactive online Colob demo for real-world image SR](https://colab.research.google.com/gist/JingyunLiang/a5e3e54bc9ef8d7bf594f6fee8208533/swinir-demo-on-real-world-image-sr.ipynb). Try to super-resolve your own images on Colab!  +

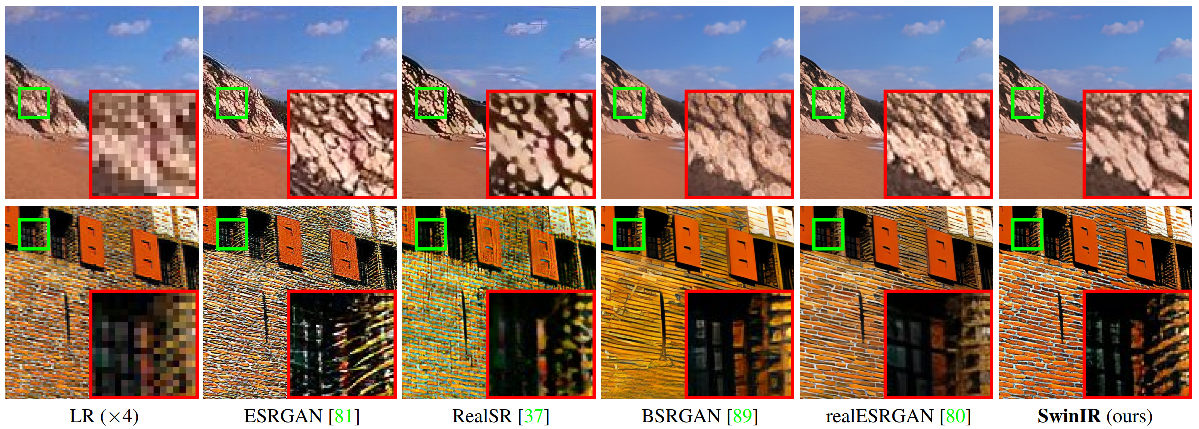

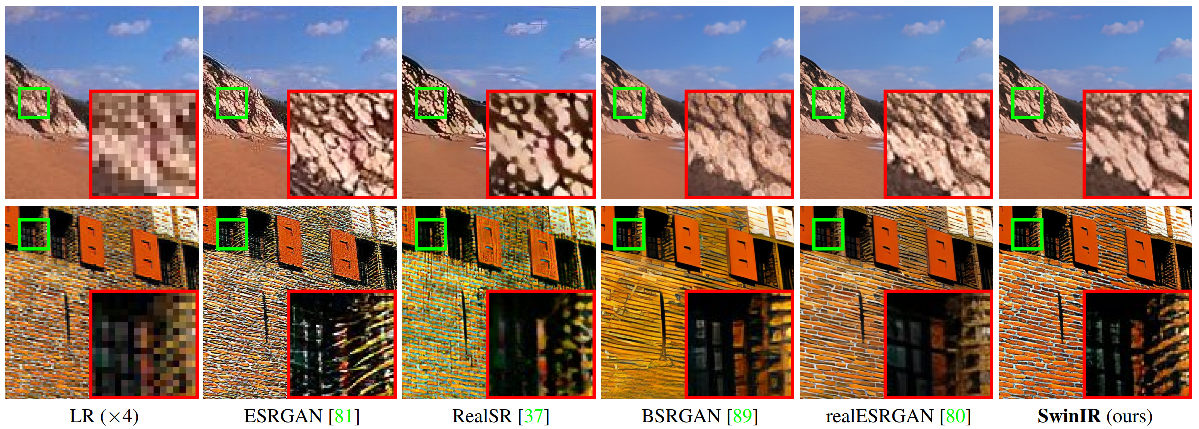

+|Real-World Image (x4)|[BSRGAN, ICCV2021](https://github.com/cszn/BSRGAN)|[Real-ESRGAN](https://github.com/xinntao/Real-ESRGAN)|SwinIR (ours)|

+| :--- | :---: | :-----: | :-----: |

+|

+

+|Real-World Image (x4)|[BSRGAN, ICCV2021](https://github.com/cszn/BSRGAN)|[Real-ESRGAN](https://github.com/xinntao/Real-ESRGAN)|SwinIR (ours)|

+| :--- | :---: | :-----: | :-----: |

+| |

| |

| |

| +|

+| |

| |

| |

| |

+

+- **_News (2021-08-31)_**: We upload the [training code of BSRGAN](https://github.com/cszn/BSRGAN#training).

+- **_News (2021-08-24)_**: We upload the BSRGAN degradation model.

+- **_News (2021-08-22)_**: Support multi-feature-layer VGG perceptual loss and UNet discriminator.

+- **_News (2021-08-18)_**: We upload the extended BSRGAN degradation model. It is slightly different from our published version.

+

+- **_News (2021-06-03)_**: Add testing codes of [GPEN (CVPR21)](https://github.com/yangxy/GPEN) for face image enhancement: [main_test_face_enhancement.py](https://github.com/cszn/KAIR/blob/master/main_test_face_enhancement.py)

+

+

|

+

+- **_News (2021-08-31)_**: We upload the [training code of BSRGAN](https://github.com/cszn/BSRGAN#training).

+- **_News (2021-08-24)_**: We upload the BSRGAN degradation model.

+- **_News (2021-08-22)_**: Support multi-feature-layer VGG perceptual loss and UNet discriminator.

+- **_News (2021-08-18)_**: We upload the extended BSRGAN degradation model. It is slightly different from our published version.

+

+- **_News (2021-06-03)_**: Add testing codes of [GPEN (CVPR21)](https://github.com/yangxy/GPEN) for face image enhancement: [main_test_face_enhancement.py](https://github.com/cszn/KAIR/blob/master/main_test_face_enhancement.py)

+

+ +

+ +

+ +

+ +

+ +

+ +

+

+- **_News (2021-05-13)_**: Add [PatchGAN discriminator](https://github.com/cszn/KAIR/blob/master/models/network_discriminator.py).

+

+- **_News (2021-05-12)_**: Support distributed training, see also [https://github.com/xinntao/BasicSR/blob/master/docs/TrainTest.md](https://github.com/xinntao/BasicSR/blob/master/docs/TrainTest.md).

+

+- **_News (2021-01)_**: [BSRGAN](https://github.com/cszn/BSRGAN) for blind real image super-resolution will be added.

+

+- **_Pull requests are welcome!_**

+

+- **Correction (2020-10)**: If you use multiple GPUs for GAN training, remove or comment [Line 105](https://github.com/cszn/KAIR/blob/e52a6944c6a40ba81b88430ffe38fd6517e0449e/models/model_gan.py#L105) to enable `DataParallel` for fast training

+

+- **News (2020-10)**: Add [utils_receptivefield.py](https://github.com/cszn/KAIR/blob/master/utils/utils_receptivefield.py) to calculate receptive field.

+

+- **News (2020-8)**: A `deep plug-and-play image restoration toolbox` is released at [cszn/DPIR](https://github.com/cszn/DPIR).

+

+- **Tips (2020-8)**: Use [this](https://github.com/cszn/KAIR/blob/9fd17abff001ab82a22070f7e442bb5246d2d844/main_challenge_sr.py#L147) to avoid `out of memory` issue.

+

+- **News (2020-7)**: Add [main_challenge_sr.py](https://github.com/cszn/KAIR/blob/23b0d0f717980e48fad02513ba14045d57264fe1/main_challenge_sr.py#L90) to get `FLOPs`, `#Params`, `Runtime`, `#Activations`, `#Conv`, and `Max Memory Allocated`.

+```python

+from utils.utils_modelsummary import get_model_activation, get_model_flops

+input_dim = (3, 256, 256) # set the input dimension

+activations, num_conv2d = get_model_activation(model, input_dim)

+logger.info('{:>16s} : {:<.4f} [M]'.format('#Activations', activations/10**6))

+logger.info('{:>16s} : {:16s} : {:<.4f} [G]'.format('FLOPs', flops/10**9))

+num_parameters = sum(map(lambda x: x.numel(), model.parameters()))

+logger.info('{:>16s} : {:<.4f} [M]'.format('#Params', num_parameters/10**6))

+```

+

+- **News (2020-6)**: Add [USRNet (CVPR 2020)](https://github.com/cszn/USRNet) for training and testing.

+ - [Network Architecture](https://github.com/cszn/KAIR/blob/3357aa0e54b81b1e26ceb1cee990f39add235e17/models/network_usrnet.py#L309)

+ - [Dataset](https://github.com/cszn/KAIR/blob/6c852636d3715bb281637863822a42c72739122a/data/dataset_usrnet.py#L16)

+

+

+Clone repo

+----------

+```

+git clone https://github.com/cszn/KAIR.git

+```

+```

+pip install -r requirement.txt

+```

+

+

+

+Training

+----------

+

+You should modify the json file from [options](https://github.com/cszn/KAIR/tree/master/options) first, for example,

+setting ["gpu_ids": [0,1,2,3]](https://github.com/cszn/KAIR/blob/ff80d265f64de67dfb3ffa9beff8949773c81a3d/options/train_msrresnet_psnr.json#L4) if 4 GPUs are used,

+setting ["dataroot_H": "trainsets/trainH"](https://github.com/cszn/KAIR/blob/ff80d265f64de67dfb3ffa9beff8949773c81a3d/options/train_msrresnet_psnr.json#L24) if path of the high quality dataset is `trainsets/trainH`.

+

+- Training with `DataParallel` - PSNR

+

+

+```python

+python main_train_psnr.py --opt options/train_msrresnet_psnr.json

+```

+

+- Training with `DataParallel` - GAN

+

+```python

+python main_train_gan.py --opt options/train_msrresnet_gan.json

+```

+

+- Training with `DistributedDataParallel` - PSNR - 4 GPUs

+

+```python

+python -m torch.distributed.launch --nproc_per_node=4 --master_port=1234 main_train_psnr.py --opt options/train_msrresnet_psnr.json --dist True

+```

+

+- Training with `DistributedDataParallel` - PSNR - 8 GPUs

+

+```python

+python -m torch.distributed.launch --nproc_per_node=8 --master_port=1234 main_train_psnr.py --opt options/train_msrresnet_psnr.json --dist True

+```

+

+- Training with `DistributedDataParallel` - GAN - 4 GPUs

+

+```python

+python -m torch.distributed.launch --nproc_per_node=4 --master_port=1234 main_train_gan.py --opt options/train_msrresnet_gan.json --dist True

+```

+

+- Training with `DistributedDataParallel` - GAN - 8 GPUs

+

+```python

+python -m torch.distributed.launch --nproc_per_node=8 --master_port=1234 main_train_gan.py --opt options/train_msrresnet_gan.json --dist True

+```

+

+- Kill distributed training processes of `main_train_gan.py`

+

+```python

+kill $(ps aux | grep main_train_gan.py | grep -v grep | awk '{print $2}')

+```

+

+----------

+| Method | Original Link |

+|---|---|

+| DnCNN |[https://github.com/cszn/DnCNN](https://github.com/cszn/DnCNN)|

+| FDnCNN |[https://github.com/cszn/DnCNN](https://github.com/cszn/DnCNN)|

+| FFDNet | [https://github.com/cszn/FFDNet](https://github.com/cszn/FFDNet)|

+| SRMD | [https://github.com/cszn/SRMD](https://github.com/cszn/SRMD)|

+| DPSR-SRResNet | [https://github.com/cszn/DPSR](https://github.com/cszn/DPSR)|

+| SRResNet | [https://github.com/xinntao/BasicSR](https://github.com/xinntao/BasicSR)|

+| ESRGAN | [https://github.com/xinntao/ESRGAN](https://github.com/xinntao/ESRGAN)|

+| RRDB | [https://github.com/xinntao/ESRGAN](https://github.com/xinntao/ESRGAN)|

+| IMDB | [https://github.com/Zheng222/IMDN](https://github.com/Zheng222/IMDN)|

+| USRNet | [https://github.com/cszn/USRNet](https://github.com/cszn/USRNet)|

+| DRUNet | [https://github.com/cszn/DPIR](https://github.com/cszn/DPIR)|

+| DPIR | [https://github.com/cszn/DPIR](https://github.com/cszn/DPIR)|

+| BSRGAN | [https://github.com/cszn/BSRGAN](https://github.com/cszn/BSRGAN)|

+| SwinIR | [https://github.com/JingyunLiang/SwinIR](https://github.com/JingyunLiang/SwinIR)|

+| VRT | [https://github.com/JingyunLiang/VRT](https://github.com/JingyunLiang/VRT) |

+

+Network architectures

+----------

+* [USRNet](https://github.com/cszn/USRNet)

+

+

+

+

+- **_News (2021-05-13)_**: Add [PatchGAN discriminator](https://github.com/cszn/KAIR/blob/master/models/network_discriminator.py).

+

+- **_News (2021-05-12)_**: Support distributed training, see also [https://github.com/xinntao/BasicSR/blob/master/docs/TrainTest.md](https://github.com/xinntao/BasicSR/blob/master/docs/TrainTest.md).

+

+- **_News (2021-01)_**: [BSRGAN](https://github.com/cszn/BSRGAN) for blind real image super-resolution will be added.

+

+- **_Pull requests are welcome!_**

+

+- **Correction (2020-10)**: If you use multiple GPUs for GAN training, remove or comment [Line 105](https://github.com/cszn/KAIR/blob/e52a6944c6a40ba81b88430ffe38fd6517e0449e/models/model_gan.py#L105) to enable `DataParallel` for fast training

+

+- **News (2020-10)**: Add [utils_receptivefield.py](https://github.com/cszn/KAIR/blob/master/utils/utils_receptivefield.py) to calculate receptive field.

+

+- **News (2020-8)**: A `deep plug-and-play image restoration toolbox` is released at [cszn/DPIR](https://github.com/cszn/DPIR).

+

+- **Tips (2020-8)**: Use [this](https://github.com/cszn/KAIR/blob/9fd17abff001ab82a22070f7e442bb5246d2d844/main_challenge_sr.py#L147) to avoid `out of memory` issue.

+

+- **News (2020-7)**: Add [main_challenge_sr.py](https://github.com/cszn/KAIR/blob/23b0d0f717980e48fad02513ba14045d57264fe1/main_challenge_sr.py#L90) to get `FLOPs`, `#Params`, `Runtime`, `#Activations`, `#Conv`, and `Max Memory Allocated`.

+```python

+from utils.utils_modelsummary import get_model_activation, get_model_flops

+input_dim = (3, 256, 256) # set the input dimension

+activations, num_conv2d = get_model_activation(model, input_dim)

+logger.info('{:>16s} : {:<.4f} [M]'.format('#Activations', activations/10**6))

+logger.info('{:>16s} : {:16s} : {:<.4f} [G]'.format('FLOPs', flops/10**9))

+num_parameters = sum(map(lambda x: x.numel(), model.parameters()))

+logger.info('{:>16s} : {:<.4f} [M]'.format('#Params', num_parameters/10**6))

+```

+

+- **News (2020-6)**: Add [USRNet (CVPR 2020)](https://github.com/cszn/USRNet) for training and testing.

+ - [Network Architecture](https://github.com/cszn/KAIR/blob/3357aa0e54b81b1e26ceb1cee990f39add235e17/models/network_usrnet.py#L309)

+ - [Dataset](https://github.com/cszn/KAIR/blob/6c852636d3715bb281637863822a42c72739122a/data/dataset_usrnet.py#L16)

+

+

+Clone repo

+----------

+```

+git clone https://github.com/cszn/KAIR.git

+```

+```

+pip install -r requirement.txt

+```

+

+

+

+Training

+----------

+

+You should modify the json file from [options](https://github.com/cszn/KAIR/tree/master/options) first, for example,

+setting ["gpu_ids": [0,1,2,3]](https://github.com/cszn/KAIR/blob/ff80d265f64de67dfb3ffa9beff8949773c81a3d/options/train_msrresnet_psnr.json#L4) if 4 GPUs are used,

+setting ["dataroot_H": "trainsets/trainH"](https://github.com/cszn/KAIR/blob/ff80d265f64de67dfb3ffa9beff8949773c81a3d/options/train_msrresnet_psnr.json#L24) if path of the high quality dataset is `trainsets/trainH`.

+

+- Training with `DataParallel` - PSNR

+

+

+```python

+python main_train_psnr.py --opt options/train_msrresnet_psnr.json

+```

+

+- Training with `DataParallel` - GAN

+

+```python

+python main_train_gan.py --opt options/train_msrresnet_gan.json

+```

+

+- Training with `DistributedDataParallel` - PSNR - 4 GPUs

+

+```python

+python -m torch.distributed.launch --nproc_per_node=4 --master_port=1234 main_train_psnr.py --opt options/train_msrresnet_psnr.json --dist True

+```

+

+- Training with `DistributedDataParallel` - PSNR - 8 GPUs

+

+```python

+python -m torch.distributed.launch --nproc_per_node=8 --master_port=1234 main_train_psnr.py --opt options/train_msrresnet_psnr.json --dist True

+```

+

+- Training with `DistributedDataParallel` - GAN - 4 GPUs

+

+```python

+python -m torch.distributed.launch --nproc_per_node=4 --master_port=1234 main_train_gan.py --opt options/train_msrresnet_gan.json --dist True

+```

+

+- Training with `DistributedDataParallel` - GAN - 8 GPUs

+

+```python

+python -m torch.distributed.launch --nproc_per_node=8 --master_port=1234 main_train_gan.py --opt options/train_msrresnet_gan.json --dist True

+```

+

+- Kill distributed training processes of `main_train_gan.py`

+

+```python

+kill $(ps aux | grep main_train_gan.py | grep -v grep | awk '{print $2}')

+```

+

+----------

+| Method | Original Link |

+|---|---|

+| DnCNN |[https://github.com/cszn/DnCNN](https://github.com/cszn/DnCNN)|

+| FDnCNN |[https://github.com/cszn/DnCNN](https://github.com/cszn/DnCNN)|

+| FFDNet | [https://github.com/cszn/FFDNet](https://github.com/cszn/FFDNet)|

+| SRMD | [https://github.com/cszn/SRMD](https://github.com/cszn/SRMD)|

+| DPSR-SRResNet | [https://github.com/cszn/DPSR](https://github.com/cszn/DPSR)|

+| SRResNet | [https://github.com/xinntao/BasicSR](https://github.com/xinntao/BasicSR)|

+| ESRGAN | [https://github.com/xinntao/ESRGAN](https://github.com/xinntao/ESRGAN)|

+| RRDB | [https://github.com/xinntao/ESRGAN](https://github.com/xinntao/ESRGAN)|

+| IMDB | [https://github.com/Zheng222/IMDN](https://github.com/Zheng222/IMDN)|

+| USRNet | [https://github.com/cszn/USRNet](https://github.com/cszn/USRNet)|

+| DRUNet | [https://github.com/cszn/DPIR](https://github.com/cszn/DPIR)|

+| DPIR | [https://github.com/cszn/DPIR](https://github.com/cszn/DPIR)|

+| BSRGAN | [https://github.com/cszn/BSRGAN](https://github.com/cszn/BSRGAN)|

+| SwinIR | [https://github.com/JingyunLiang/SwinIR](https://github.com/JingyunLiang/SwinIR)|

+| VRT | [https://github.com/JingyunLiang/VRT](https://github.com/JingyunLiang/VRT) |

+

+Network architectures

+----------

+* [USRNet](https://github.com/cszn/USRNet)

+

+  +

+* DnCNN

+

+

+

+* DnCNN

+

+  +

+* IRCNN denoiser

+

+

+

+* IRCNN denoiser

+

+  +

+* FFDNet

+

+

+

+* FFDNet

+

+  +

+* SRMD

+

+

+

+* SRMD

+

+  +

+* SRResNet, SRGAN, RRDB, ESRGAN

+

+

+

+* SRResNet, SRGAN, RRDB, ESRGAN

+

+  +

+* IMDN

+

+

+

+* IMDN

+

+  -----

-----  +

+

+

+Testing

+----------

+|Method | [model_zoo](model_zoo)|

+|---|---|

+| [main_test_dncnn.py](main_test_dncnn.py) |```dncnn_15.pth, dncnn_25.pth, dncnn_50.pth, dncnn_gray_blind.pth, dncnn_color_blind.pth, dncnn3.pth```|

+| [main_test_ircnn_denoiser.py](main_test_ircnn_denoiser.py) | ```ircnn_gray.pth, ircnn_color.pth```|

+| [main_test_fdncnn.py](main_test_fdncnn.py) | ```fdncnn_gray.pth, fdncnn_color.pth, fdncnn_gray_clip.pth, fdncnn_color_clip.pth```|

+| [main_test_ffdnet.py](main_test_ffdnet.py) | ```ffdnet_gray.pth, ffdnet_color.pth, ffdnet_gray_clip.pth, ffdnet_color_clip.pth```|

+| [main_test_srmd.py](main_test_srmd.py) | ```srmdnf_x2.pth, srmdnf_x3.pth, srmdnf_x4.pth, srmd_x2.pth, srmd_x3.pth, srmd_x4.pth```|

+| | **The above models are converted from MatConvNet.** |

+| [main_test_dpsr.py](main_test_dpsr.py) | ```dpsr_x2.pth, dpsr_x3.pth, dpsr_x4.pth, dpsr_x4_gan.pth```|

+| [main_test_msrresnet.py](main_test_msrresnet.py) | ```msrresnet_x4_psnr.pth, msrresnet_x4_gan.pth```|

+| [main_test_rrdb.py](main_test_rrdb.py) | ```rrdb_x4_psnr.pth, rrdb_x4_esrgan.pth```|

+| [main_test_imdn.py](main_test_imdn.py) | ```imdn_x4.pth```|

+

+[model_zoo](model_zoo)

+--------

+- download link [https://drive.google.com/drive/folders/13kfr3qny7S2xwG9h7v95F5mkWs0OmU0D](https://drive.google.com/drive/folders/13kfr3qny7S2xwG9h7v95F5mkWs0OmU0D)

+

+[trainsets](trainsets)

+----------

+- [https://github.com/xinntao/BasicSR/blob/master/docs/DatasetPreparation.md](https://github.com/xinntao/BasicSR/blob/master/docs/DatasetPreparation.md)

+- [train400](https://github.com/cszn/DnCNN/tree/master/TrainingCodes/DnCNN_TrainingCodes_v1.0/data)

+- [DIV2K](https://data.vision.ee.ethz.ch/cvl/DIV2K/)

+- [Flickr2K](https://cv.snu.ac.kr/research/EDSR/Flickr2K.tar)

+- optional: use [split_imageset(original_dataroot, taget_dataroot, n_channels=3, p_size=512, p_overlap=96, p_max=800)](https://github.com/cszn/KAIR/blob/3ee0bf3e07b90ec0b7302d97ee2adb780617e637/utils/utils_image.py#L123) to get ```trainsets/trainH``` with small images for fast data loading

+

+[testsets](testsets)

+-----------

+- [https://github.com/xinntao/BasicSR/blob/master/docs/DatasetPreparation.md](https://github.com/xinntao/BasicSR/blob/master/docs/DatasetPreparation.md)

+- [set12](https://github.com/cszn/FFDNet/tree/master/testsets)

+- [bsd68](https://github.com/cszn/FFDNet/tree/master/testsets)

+- [cbsd68](https://github.com/cszn/FFDNet/tree/master/testsets)

+- [kodak24](https://github.com/cszn/FFDNet/tree/master/testsets)

+- [srbsd68](https://github.com/cszn/DPSR/tree/master/testsets/BSD68/GT)

+- set5

+- set14

+- cbsd100

+- urban100

+- manga109

+

+

+References

+----------

+```BibTex

+@article{liang2022vrt,

+title={VRT: A Video Restoration Transformer},

+author={Liang, Jingyun and Cao, Jiezhang and Fan, Yuchen and Zhang, Kai and Ranjan, Rakesh and Li, Yawei and Timofte, Radu and Van Gool, Luc},

+journal={arXiv preprint arXiv:2022.00000},

+year={2022}

+}

+@inproceedings{liang2021swinir,

+title={SwinIR: Image Restoration Using Swin Transformer},

+author={Liang, Jingyun and Cao, Jiezhang and Sun, Guolei and Zhang, Kai and Van Gool, Luc and Timofte, Radu},

+booktitle={IEEE International Conference on Computer Vision Workshops},

+pages={1833--1844},

+year={2021}

+}

+@inproceedings{zhang2021designing,

+title={Designing a Practical Degradation Model for Deep Blind Image Super-Resolution},

+author={Zhang, Kai and Liang, Jingyun and Van Gool, Luc and Timofte, Radu},

+booktitle={IEEE International Conference on Computer Vision},

+pages={4791--4800},

+year={2021}

+}

+@article{zhang2021plug, % DPIR & DRUNet & IRCNN

+ title={Plug-and-Play Image Restoration with Deep Denoiser Prior},

+ author={Zhang, Kai and Li, Yawei and Zuo, Wangmeng and Zhang, Lei and Van Gool, Luc and Timofte, Radu},

+ journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

+ year={2021}

+}

+@inproceedings{zhang2020aim, % efficientSR_challenge

+ title={AIM 2020 Challenge on Efficient Super-Resolution: Methods and Results},

+ author={Kai Zhang and Martin Danelljan and Yawei Li and Radu Timofte and others},

+ booktitle={European Conference on Computer Vision Workshops},

+ year={2020}

+}

+@inproceedings{zhang2020deep, % USRNet

+ title={Deep unfolding network for image super-resolution},

+ author={Zhang, Kai and Van Gool, Luc and Timofte, Radu},

+ booktitle={IEEE Conference on Computer Vision and Pattern Recognition},

+ pages={3217--3226},

+ year={2020}

+}

+@article{zhang2017beyond, % DnCNN

+ title={Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising},

+ author={Zhang, Kai and Zuo, Wangmeng and Chen, Yunjin and Meng, Deyu and Zhang, Lei},

+ journal={IEEE Transactions on Image Processing},

+ volume={26},

+ number={7},

+ pages={3142--3155},

+ year={2017}

+}

+@inproceedings{zhang2017learning, % IRCNN

+title={Learning deep CNN denoiser prior for image restoration},

+author={Zhang, Kai and Zuo, Wangmeng and Gu, Shuhang and Zhang, Lei},

+booktitle={IEEE conference on computer vision and pattern recognition},

+pages={3929--3938},

+year={2017}

+}

+@article{zhang2018ffdnet, % FFDNet, FDnCNN

+ title={FFDNet: Toward a fast and flexible solution for CNN-based image denoising},

+ author={Zhang, Kai and Zuo, Wangmeng and Zhang, Lei},

+ journal={IEEE Transactions on Image Processing},

+ volume={27},

+ number={9},

+ pages={4608--4622},

+ year={2018}

+}

+@inproceedings{zhang2018learning, % SRMD

+ title={Learning a single convolutional super-resolution network for multiple degradations},

+ author={Zhang, Kai and Zuo, Wangmeng and Zhang, Lei},

+ booktitle={IEEE Conference on Computer Vision and Pattern Recognition},

+ pages={3262--3271},

+ year={2018}

+}

+@inproceedings{zhang2019deep, % DPSR

+ title={Deep Plug-and-Play Super-Resolution for Arbitrary Blur Kernels},

+ author={Zhang, Kai and Zuo, Wangmeng and Zhang, Lei},

+ booktitle={IEEE Conference on Computer Vision and Pattern Recognition},

+ pages={1671--1681},

+ year={2019}

+}

+@InProceedings{wang2018esrgan, % ESRGAN, MSRResNet

+ author = {Wang, Xintao and Yu, Ke and Wu, Shixiang and Gu, Jinjin and Liu, Yihao and Dong, Chao and Qiao, Yu and Loy, Chen Change},

+ title = {ESRGAN: Enhanced super-resolution generative adversarial networks},

+ booktitle = {The European Conference on Computer Vision Workshops (ECCVW)},

+ month = {September},

+ year = {2018}

+}

+@inproceedings{hui2019lightweight, % IMDN

+ title={Lightweight Image Super-Resolution with Information Multi-distillation Network},

+ author={Hui, Zheng and Gao, Xinbo and Yang, Yunchu and Wang, Xiumei},

+ booktitle={Proceedings of the 27th ACM International Conference on Multimedia (ACM MM)},

+ pages={2024--2032},

+ year={2019}

+}

+@inproceedings{zhang2019aim, % IMDN

+ title={AIM 2019 Challenge on Constrained Super-Resolution: Methods and Results},

+ author={Kai Zhang and Shuhang Gu and Radu Timofte and others},

+ booktitle={IEEE International Conference on Computer Vision Workshops},

+ year={2019}

+}

+@inproceedings{yang2021gan,

+ title={GAN Prior Embedded Network for Blind Face Restoration in the Wild},

+ author={Tao Yang, Peiran Ren, Xuansong Xie, and Lei Zhang},

+ booktitle={IEEE Conference on Computer Vision and Pattern Recognition},

+ year={2021}

+}

+```

diff --git a/KAIR/data/__init__.py b/KAIR/data/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..8b137891791fe96927ad78e64b0aad7bded08bdc

--- /dev/null

+++ b/KAIR/data/__init__.py

@@ -0,0 +1 @@

+

diff --git a/KAIR/data/dataset_blindsr.py b/KAIR/data/dataset_blindsr.py

new file mode 100644

index 0000000000000000000000000000000000000000..3d16ae3418b45d3550f70c43cd56ac0491fe87b6

--- /dev/null

+++ b/KAIR/data/dataset_blindsr.py

@@ -0,0 +1,92 @@

+import random

+import numpy as np

+import torch.utils.data as data

+import utils.utils_image as util

+import os

+from utils import utils_blindsr as blindsr

+

+

+class DatasetBlindSR(data.Dataset):

+ '''

+ # -----------------------------------------

+ # dataset for BSRGAN

+ # -----------------------------------------

+ '''

+ def __init__(self, opt):

+ super(DatasetBlindSR, self).__init__()

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.sf = opt['scale'] if opt['scale'] else 4

+ self.shuffle_prob = opt['shuffle_prob'] if opt['shuffle_prob'] else 0.1

+ self.use_sharp = opt['use_sharp'] if opt['use_sharp'] else False

+ self.degradation_type = opt['degradation_type'] if opt['degradation_type'] else 'bsrgan'

+ self.lq_patchsize = self.opt['lq_patchsize'] if self.opt['lq_patchsize'] else 64

+ self.patch_size = self.opt['H_size'] if self.opt['H_size'] else self.lq_patchsize*self.sf

+

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+ print(len(self.paths_H))

+

+# for n, v in enumerate(self.paths_H):

+# if 'face' in v:

+# del self.paths_H[n]

+# time.sleep(1)

+ assert self.paths_H, 'Error: H path is empty.'

+

+ def __getitem__(self, index):

+

+ L_path = None

+

+ # ------------------------------------

+ # get H image

+ # ------------------------------------

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, self.n_channels)

+ img_name, ext = os.path.splitext(os.path.basename(H_path))

+ H, W, C = img_H.shape

+

+ if H < self.patch_size or W < self.patch_size:

+ img_H = np.tile(np.random.randint(0, 256, size=[1, 1, self.n_channels], dtype=np.uint8), (self.patch_size, self.patch_size, 1))

+

+ # ------------------------------------

+ # if train, get L/H patch pair

+ # ------------------------------------

+ if self.opt['phase'] == 'train':

+

+ H, W, C = img_H.shape

+

+ rnd_h_H = random.randint(0, max(0, H - self.patch_size))

+ rnd_w_H = random.randint(0, max(0, W - self.patch_size))

+ img_H = img_H[rnd_h_H:rnd_h_H + self.patch_size, rnd_w_H:rnd_w_H + self.patch_size, :]

+

+ if 'face' in img_name:

+ mode = random.choice([0, 4])

+ img_H = util.augment_img(img_H, mode=mode)

+ else:

+ mode = random.randint(0, 7)

+ img_H = util.augment_img(img_H, mode=mode)

+

+ img_H = util.uint2single(img_H)

+ if self.degradation_type == 'bsrgan':

+ img_L, img_H = blindsr.degradation_bsrgan(img_H, self.sf, lq_patchsize=self.lq_patchsize, isp_model=None)

+ elif self.degradation_type == 'bsrgan_plus':

+ img_L, img_H = blindsr.degradation_bsrgan_plus(img_H, self.sf, shuffle_prob=self.shuffle_prob, use_sharp=self.use_sharp, lq_patchsize=self.lq_patchsize)

+

+ else:

+ img_H = util.uint2single(img_H)

+ if self.degradation_type == 'bsrgan':

+ img_L, img_H = blindsr.degradation_bsrgan(img_H, self.sf, lq_patchsize=self.lq_patchsize, isp_model=None)

+ elif self.degradation_type == 'bsrgan_plus':

+ img_L, img_H = blindsr.degradation_bsrgan_plus(img_H, self.sf, shuffle_prob=self.shuffle_prob, use_sharp=self.use_sharp, lq_patchsize=self.lq_patchsize)

+

+ # ------------------------------------

+ # L/H pairs, HWC to CHW, numpy to tensor

+ # ------------------------------------

+ img_H, img_L = util.single2tensor3(img_H), util.single2tensor3(img_L)

+

+ if L_path is None:

+ L_path = H_path

+

+ return {'L': img_L, 'H': img_H, 'L_path': L_path, 'H_path': H_path}

+

+ def __len__(self):

+ return len(self.paths_H)

diff --git a/KAIR/data/dataset_dncnn.py b/KAIR/data/dataset_dncnn.py

new file mode 100644

index 0000000000000000000000000000000000000000..2477e253c3449fd2bf2f133c79700a7fc8be619b

--- /dev/null

+++ b/KAIR/data/dataset_dncnn.py

@@ -0,0 +1,101 @@

+import os.path

+import random

+import numpy as np

+import torch

+import torch.utils.data as data

+import utils.utils_image as util

+

+

+class DatasetDnCNN(data.Dataset):

+ """

+ # -----------------------------------------

+ # Get L/H for denosing on AWGN with fixed sigma.

+ # Only dataroot_H is needed.

+ # -----------------------------------------

+ # e.g., DnCNN

+ # -----------------------------------------

+ """

+

+ def __init__(self, opt):

+ super(DatasetDnCNN, self).__init__()

+ print('Dataset: Denosing on AWGN with fixed sigma. Only dataroot_H is needed.')

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.patch_size = opt['H_size'] if opt['H_size'] else 64

+ self.sigma = opt['sigma'] if opt['sigma'] else 25

+ self.sigma_test = opt['sigma_test'] if opt['sigma_test'] else self.sigma

+

+ # ------------------------------------

+ # get path of H

+ # return None if input is None

+ # ------------------------------------

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+

+ def __getitem__(self, index):

+

+ # ------------------------------------

+ # get H image

+ # ------------------------------------

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, self.n_channels)

+

+ L_path = H_path

+

+ if self.opt['phase'] == 'train':

+ """

+ # --------------------------------

+ # get L/H patch pairs

+ # --------------------------------

+ """

+ H, W, _ = img_H.shape

+

+ # --------------------------------

+ # randomly crop the patch

+ # --------------------------------

+ rnd_h = random.randint(0, max(0, H - self.patch_size))

+ rnd_w = random.randint(0, max(0, W - self.patch_size))

+ patch_H = img_H[rnd_h:rnd_h + self.patch_size, rnd_w:rnd_w + self.patch_size, :]

+

+ # --------------------------------

+ # augmentation - flip, rotate

+ # --------------------------------

+ mode = random.randint(0, 7)

+ patch_H = util.augment_img(patch_H, mode=mode)

+

+ # --------------------------------

+ # HWC to CHW, numpy(uint) to tensor

+ # --------------------------------

+ img_H = util.uint2tensor3(patch_H)

+ img_L = img_H.clone()

+

+ # --------------------------------

+ # add noise

+ # --------------------------------

+ noise = torch.randn(img_L.size()).mul_(self.sigma/255.0)

+ img_L.add_(noise)

+

+ else:

+ """

+ # --------------------------------

+ # get L/H image pairs

+ # --------------------------------

+ """

+ img_H = util.uint2single(img_H)

+ img_L = np.copy(img_H)

+

+ # --------------------------------

+ # add noise

+ # --------------------------------

+ np.random.seed(seed=0)

+ img_L += np.random.normal(0, self.sigma_test/255.0, img_L.shape)

+

+ # --------------------------------

+ # HWC to CHW, numpy to tensor

+ # --------------------------------

+ img_L = util.single2tensor3(img_L)

+ img_H = util.single2tensor3(img_H)

+

+ return {'L': img_L, 'H': img_H, 'H_path': H_path, 'L_path': L_path}

+

+ def __len__(self):

+ return len(self.paths_H)

diff --git a/KAIR/data/dataset_dnpatch.py b/KAIR/data/dataset_dnpatch.py

new file mode 100644

index 0000000000000000000000000000000000000000..289f92e6f454d8246b5128f9e834de9b1678ee73

--- /dev/null

+++ b/KAIR/data/dataset_dnpatch.py

@@ -0,0 +1,133 @@

+import random

+import numpy as np

+import torch

+import torch.utils.data as data

+import utils.utils_image as util

+

+

+class DatasetDnPatch(data.Dataset):

+ """

+ # -----------------------------------------

+ # Get L/H for denosing on AWGN with fixed sigma.

+ # ****Get all H patches first****

+ # Only dataroot_H is needed.

+ # -----------------------------------------

+ # e.g., DnCNN with BSD400

+ # -----------------------------------------

+ """

+

+ def __init__(self, opt):

+ super(DatasetDnPatch, self).__init__()

+ print('Get L/H for denosing on AWGN with fixed sigma. Only dataroot_H is needed.')

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.patch_size = opt['H_size'] if opt['H_size'] else 64

+

+ self.sigma = opt['sigma'] if opt['sigma'] else 25

+ self.sigma_test = opt['sigma_test'] if opt['sigma_test'] else self.sigma

+

+ self.num_patches_per_image = opt['num_patches_per_image'] if opt['num_patches_per_image'] else 40

+ self.num_sampled = opt['num_sampled'] if opt['num_sampled'] else 3000

+

+ # ------------------------------------

+ # get paths of H

+ # ------------------------------------

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+ assert self.paths_H, 'Error: H path is empty.'

+

+ # ------------------------------------

+ # number of sampled H images

+ # ------------------------------------

+ self.num_sampled = min(self.num_sampled, len(self.paths_H))

+

+ # ------------------------------------

+ # reserve space with zeros

+ # ------------------------------------

+ self.total_patches = self.num_sampled * self.num_patches_per_image

+ self.H_data = np.zeros([self.total_patches, self.patch_size, self.patch_size, self.n_channels], dtype=np.uint8)

+

+ # ------------------------------------

+ # update H patches

+ # ------------------------------------

+ self.update_data()

+

+ def update_data(self):

+ """

+ # ------------------------------------

+ # update whole H patches

+ # ------------------------------------

+ """

+ self.index_sampled = random.sample(range(0, len(self.paths_H), 1), self.num_sampled)

+ n_count = 0

+

+ for i in range(len(self.index_sampled)):

+ H_patches = self.get_patches(self.index_sampled[i])

+ for H_patch in H_patches:

+ self.H_data[n_count,:,:,:] = H_patch

+ n_count += 1

+

+ print('Training data updated! Total number of patches is: %5.2f X %5.2f = %5.2f\n' % (len(self.H_data)//128, 128, len(self.H_data)))

+

+ def get_patches(self, index):

+ """

+ # ------------------------------------

+ # get H patches from an H image

+ # ------------------------------------

+ """

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, self.n_channels) # uint format

+

+ H, W = img_H.shape[:2]

+

+ H_patches = []

+

+ num = self.num_patches_per_image

+ for _ in range(num):

+ rnd_h = random.randint(0, max(0, H - self.patch_size))

+ rnd_w = random.randint(0, max(0, W - self.patch_size))

+ H_patch = img_H[rnd_h:rnd_h + self.patch_size, rnd_w:rnd_w + self.patch_size, :]

+ H_patches.append(H_patch)

+

+ return H_patches

+

+ def __getitem__(self, index):

+

+ H_path = 'toy.png'

+ if self.opt['phase'] == 'train':

+

+ patch_H = self.H_data[index]

+

+ # --------------------------------

+ # augmentation - flip and/or rotate

+ # --------------------------------

+ mode = random.randint(0, 7)

+ patch_H = util.augment_img(patch_H, mode=mode)

+

+ patch_H = util.uint2tensor3(patch_H)

+ patch_L = patch_H.clone()

+

+ # ------------------------------------

+ # add noise

+ # ------------------------------------

+ noise = torch.randn(patch_L.size()).mul_(self.sigma/255.0)

+ patch_L.add_(noise)

+

+ else:

+

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, self.n_channels)

+ img_H = util.uint2single(img_H)

+ img_L = np.copy(img_H)

+

+ # ------------------------------------

+ # add noise

+ # ------------------------------------

+ np.random.seed(seed=0)

+ img_L += np.random.normal(0, self.sigma_test/255.0, img_L.shape)

+ patch_L, patch_H = util.single2tensor3(img_L), util.single2tensor3(img_H)

+

+ L_path = H_path

+ return {'L': patch_L, 'H': patch_H, 'L_path': L_path, 'H_path': H_path}

+

+ def __len__(self):

+ return len(self.H_data)

diff --git a/KAIR/data/dataset_dpsr.py b/KAIR/data/dataset_dpsr.py

new file mode 100644

index 0000000000000000000000000000000000000000..012f8283df9aae394c51e904183de1a567cc7d39

--- /dev/null

+++ b/KAIR/data/dataset_dpsr.py

@@ -0,0 +1,131 @@

+import random

+import numpy as np

+import torch

+import torch.utils.data as data

+import utils.utils_image as util

+

+

+class DatasetDPSR(data.Dataset):

+ '''

+ # -----------------------------------------

+ # Get L/H/M for noisy image SR.

+ # Only "paths_H" is needed, sythesize bicubicly downsampled L on-the-fly.

+ # -----------------------------------------

+ # e.g., SRResNet super-resolver prior for DPSR

+ # -----------------------------------------

+ '''

+

+ def __init__(self, opt):

+ super(DatasetDPSR, self).__init__()

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.sf = opt['scale'] if opt['scale'] else 4

+ self.patch_size = self.opt['H_size'] if self.opt['H_size'] else 96

+ self.L_size = self.patch_size // self.sf

+ self.sigma = opt['sigma'] if opt['sigma'] else [0, 50]

+ self.sigma_min, self.sigma_max = self.sigma[0], self.sigma[1]

+ self.sigma_test = opt['sigma_test'] if opt['sigma_test'] else 0

+

+ # ------------------------------------

+ # get paths of L/H

+ # ------------------------------------

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+ self.paths_L = util.get_image_paths(opt['dataroot_L'])

+

+ assert self.paths_H, 'Error: H path is empty.'

+

+ def __getitem__(self, index):

+

+ # ------------------------------------

+ # get H image

+ # ------------------------------------

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, self.n_channels)

+ img_H = util.uint2single(img_H)

+

+ # ------------------------------------

+ # modcrop for SR

+ # ------------------------------------

+ img_H = util.modcrop(img_H, self.sf)

+

+ # ------------------------------------

+ # sythesize L image via matlab's bicubic

+ # ------------------------------------

+ H, W, _ = img_H.shape

+ img_L = util.imresize_np(img_H, 1 / self.sf, True)

+

+ if self.opt['phase'] == 'train':

+ """

+ # --------------------------------

+ # get L/H patch pairs

+ # --------------------------------

+ """

+ H, W, C = img_L.shape

+

+ # --------------------------------

+ # randomly crop L patch

+ # --------------------------------

+ rnd_h = random.randint(0, max(0, H - self.L_size))

+ rnd_w = random.randint(0, max(0, W - self.L_size))

+ img_L = img_L[rnd_h:rnd_h + self.L_size, rnd_w:rnd_w + self.L_size, :]

+

+ # --------------------------------

+ # crop corresponding H patch

+ # --------------------------------

+ rnd_h_H, rnd_w_H = int(rnd_h * self.sf), int(rnd_w * self.sf)

+ img_H = img_H[rnd_h_H:rnd_h_H + self.patch_size, rnd_w_H:rnd_w_H + self.patch_size, :]

+

+ # --------------------------------

+ # augmentation - flip and/or rotate

+ # --------------------------------

+ mode = random.randint(0, 7)

+ img_L, img_H = util.augment_img(img_L, mode=mode), util.augment_img(img_H, mode=mode)

+

+ # --------------------------------

+ # get patch pairs

+ # --------------------------------

+ img_H, img_L = util.single2tensor3(img_H), util.single2tensor3(img_L)

+

+ # --------------------------------

+ # select noise level and get Gaussian noise

+ # --------------------------------

+ if random.random() < 0.1:

+ noise_level = torch.zeros(1).float()

+ else:

+ noise_level = torch.FloatTensor([np.random.uniform(self.sigma_min, self.sigma_max)])/255.0

+ # noise_level = torch.rand(1)*50/255.0

+ # noise_level = torch.min(torch.from_numpy(np.float32([7*np.random.chisquare(2.5)/255.0])),torch.Tensor([50./255.]))

+

+ else:

+

+ img_H, img_L = util.single2tensor3(img_H), util.single2tensor3(img_L)

+

+ noise_level = torch.FloatTensor([self.sigma_test])

+

+ # ------------------------------------

+ # add noise

+ # ------------------------------------

+ noise = torch.randn(img_L.size()).mul_(noise_level).float()

+ img_L.add_(noise)

+

+ # ------------------------------------

+ # get noise level map M

+ # ------------------------------------

+ M_vector = noise_level.unsqueeze(1).unsqueeze(1)

+ M = M_vector.repeat(1, img_L.size()[-2], img_L.size()[-1])

+

+

+ """

+ # -------------------------------------

+ # concat L and noise level map M

+ # -------------------------------------

+ """

+ img_L = torch.cat((img_L, M), 0)

+

+

+ L_path = H_path

+

+ return {'L': img_L, 'H': img_H, 'L_path': L_path, 'H_path': H_path}

+

+ def __len__(self):

+ return len(self.paths_H)

diff --git a/KAIR/data/dataset_fdncnn.py b/KAIR/data/dataset_fdncnn.py

new file mode 100644

index 0000000000000000000000000000000000000000..632bf4783452a06cb290147b808dd48854eaabac

--- /dev/null

+++ b/KAIR/data/dataset_fdncnn.py

@@ -0,0 +1,109 @@

+import random

+import numpy as np

+import torch

+import torch.utils.data as data

+import utils.utils_image as util

+

+

+class DatasetFDnCNN(data.Dataset):

+ """

+ # -----------------------------------------

+ # Get L/H/M for denosing on AWGN with a range of sigma.

+ # Only dataroot_H is needed.

+ # -----------------------------------------

+ # e.g., FDnCNN, H = f(cat(L, M)), M is noise level map

+ # -----------------------------------------

+ """

+

+ def __init__(self, opt):

+ super(DatasetFDnCNN, self).__init__()

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.patch_size = self.opt['H_size'] if opt['H_size'] else 64

+ self.sigma = opt['sigma'] if opt['sigma'] else [0, 75]

+ self.sigma_min, self.sigma_max = self.sigma[0], self.sigma[1]

+ self.sigma_test = opt['sigma_test'] if opt['sigma_test'] else 25

+

+ # -------------------------------------

+ # get the path of H, return None if input is None

+ # -------------------------------------

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+

+ def __getitem__(self, index):

+ # -------------------------------------

+ # get H image

+ # -------------------------------------

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, self.n_channels)

+

+ L_path = H_path

+

+ if self.opt['phase'] == 'train':

+ """

+ # --------------------------------

+ # get L/H/M patch pairs

+ # --------------------------------

+ """

+ H, W = img_H.shape[:2]

+

+ # ---------------------------------

+ # randomly crop the patch

+ # ---------------------------------

+ rnd_h = random.randint(0, max(0, H - self.patch_size))

+ rnd_w = random.randint(0, max(0, W - self.patch_size))

+ patch_H = img_H[rnd_h:rnd_h + self.patch_size, rnd_w:rnd_w + self.patch_size, :]

+

+ # ---------------------------------

+ # augmentation - flip, rotate

+ # ---------------------------------

+ mode = random.randint(0, 7)

+ patch_H = util.augment_img(patch_H, mode=mode)

+

+ # ---------------------------------

+ # HWC to CHW, numpy(uint) to tensor

+ # ---------------------------------

+ img_H = util.uint2tensor3(patch_H)

+ img_L = img_H.clone()

+

+ # ---------------------------------

+ # get noise level

+ # ---------------------------------

+ # noise_level = torch.FloatTensor([np.random.randint(self.sigma_min, self.sigma_max)])/255.0

+ noise_level = torch.FloatTensor([np.random.uniform(self.sigma_min, self.sigma_max)])/255.0

+

+ noise_level_map = torch.ones((1, img_L.size(1), img_L.size(2))).mul_(noise_level).float() # torch.full((1, img_L.size(1), img_L.size(2)), noise_level)

+

+ # ---------------------------------

+ # add noise

+ # ---------------------------------

+ noise = torch.randn(img_L.size()).mul_(noise_level).float()

+ img_L.add_(noise)

+

+ else:

+ """

+ # --------------------------------

+ # get L/H/M image pairs

+ # --------------------------------

+ """

+ img_H = util.uint2single(img_H)

+ img_L = np.copy(img_H)

+ np.random.seed(seed=0)

+ img_L += np.random.normal(0, self.sigma_test/255.0, img_L.shape)

+ noise_level_map = torch.ones((1, img_L.shape[0], img_L.shape[1])).mul_(self.sigma_test/255.0).float() # torch.full((1, img_L.size(1), img_L.size(2)), noise_level)

+

+ # ---------------------------------

+ # L/H image pairs

+ # ---------------------------------

+ img_H, img_L = util.single2tensor3(img_H), util.single2tensor3(img_L)

+

+ """

+ # -------------------------------------

+ # concat L and noise level map M

+ # -------------------------------------

+ """

+ img_L = torch.cat((img_L, noise_level_map), 0)

+

+ return {'L': img_L, 'H': img_H, 'L_path': L_path, 'H_path': H_path}

+

+ def __len__(self):

+ return len(self.paths_H)

diff --git a/KAIR/data/dataset_ffdnet.py b/KAIR/data/dataset_ffdnet.py

new file mode 100644

index 0000000000000000000000000000000000000000..b3fd53aee5b52362bd5f80b48cc808346d7dcc80

--- /dev/null

+++ b/KAIR/data/dataset_ffdnet.py

@@ -0,0 +1,103 @@

+import random

+import numpy as np

+import torch

+import torch.utils.data as data

+import utils.utils_image as util

+

+

+class DatasetFFDNet(data.Dataset):

+ """

+ # -----------------------------------------

+ # Get L/H/M for denosing on AWGN with a range of sigma.

+ # Only dataroot_H is needed.

+ # -----------------------------------------

+ # e.g., FFDNet, H = f(L, sigma), sigma is noise level

+ # -----------------------------------------

+ """

+

+ def __init__(self, opt):

+ super(DatasetFFDNet, self).__init__()

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.patch_size = self.opt['H_size'] if opt['H_size'] else 64

+ self.sigma = opt['sigma'] if opt['sigma'] else [0, 75]

+ self.sigma_min, self.sigma_max = self.sigma[0], self.sigma[1]

+ self.sigma_test = opt['sigma_test'] if opt['sigma_test'] else 25

+

+ # -------------------------------------

+ # get the path of H, return None if input is None

+ # -------------------------------------

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+

+ def __getitem__(self, index):

+ # -------------------------------------

+ # get H image

+ # -------------------------------------

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, self.n_channels)

+

+ L_path = H_path

+

+ if self.opt['phase'] == 'train':

+ """

+ # --------------------------------

+ # get L/H/M patch pairs

+ # --------------------------------

+ """

+ H, W = img_H.shape[:2]

+

+ # ---------------------------------

+ # randomly crop the patch

+ # ---------------------------------

+ rnd_h = random.randint(0, max(0, H - self.patch_size))

+ rnd_w = random.randint(0, max(0, W - self.patch_size))

+ patch_H = img_H[rnd_h:rnd_h + self.patch_size, rnd_w:rnd_w + self.patch_size, :]

+

+ # ---------------------------------

+ # augmentation - flip, rotate

+ # ---------------------------------

+ mode = random.randint(0, 7)

+ patch_H = util.augment_img(patch_H, mode=mode)

+

+ # ---------------------------------

+ # HWC to CHW, numpy(uint) to tensor

+ # ---------------------------------

+ img_H = util.uint2tensor3(patch_H)

+ img_L = img_H.clone()

+

+ # ---------------------------------

+ # get noise level

+ # ---------------------------------

+ # noise_level = torch.FloatTensor([np.random.randint(self.sigma_min, self.sigma_max)])/255.0

+ noise_level = torch.FloatTensor([np.random.uniform(self.sigma_min, self.sigma_max)])/255.0

+

+ # ---------------------------------

+ # add noise

+ # ---------------------------------

+ noise = torch.randn(img_L.size()).mul_(noise_level).float()

+ img_L.add_(noise)

+

+ else:

+ """

+ # --------------------------------

+ # get L/H/sigma image pairs

+ # --------------------------------

+ """

+ img_H = util.uint2single(img_H)

+ img_L = np.copy(img_H)

+ np.random.seed(seed=0)

+ img_L += np.random.normal(0, self.sigma_test/255.0, img_L.shape)

+ noise_level = torch.FloatTensor([self.sigma_test/255.0])

+

+ # ---------------------------------

+ # L/H image pairs

+ # ---------------------------------

+ img_H, img_L = util.single2tensor3(img_H), util.single2tensor3(img_L)

+

+ noise_level = noise_level.unsqueeze(1).unsqueeze(1)

+

+

+ return {'L': img_L, 'H': img_H, 'C': noise_level, 'L_path': L_path, 'H_path': H_path}

+

+ def __len__(self):

+ return len(self.paths_H)

diff --git a/KAIR/data/dataset_jpeg.py b/KAIR/data/dataset_jpeg.py

new file mode 100644

index 0000000000000000000000000000000000000000..a847f0d47e8ad86f6349459b2d244075e9f27a92

--- /dev/null

+++ b/KAIR/data/dataset_jpeg.py

@@ -0,0 +1,118 @@

+import random

+import torch.utils.data as data

+import utils.utils_image as util

+import cv2

+

+

+class DatasetJPEG(data.Dataset):

+ def __init__(self, opt):

+ super(DatasetJPEG, self).__init__()

+ print('Dataset: JPEG compression artifact reduction (deblocking) with quality factor. Only dataroot_H is needed.')

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.patch_size = self.opt['H_size'] if opt['H_size'] else 128

+

+ self.quality_factor = opt['quality_factor'] if opt['quality_factor'] else 40

+ self.quality_factor_test = opt['quality_factor_test'] if opt['quality_factor_test'] else 40

+ self.is_color = opt['is_color'] if opt['is_color'] else False

+

+ # -------------------------------------

+ # get the path of H, return None if input is None

+ # -------------------------------------

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+

+ def __getitem__(self, index):

+

+ if self.opt['phase'] == 'train':

+ # -------------------------------------

+ # get H image

+ # -------------------------------------

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, 3)

+ L_path = H_path

+

+ H, W = img_H.shape[:2]

+ self.patch_size_plus = self.patch_size + 8

+

+ # ---------------------------------

+ # randomly crop a large patch

+ # ---------------------------------

+ rnd_h = random.randint(0, max(0, H - self.patch_size_plus))

+ rnd_w = random.randint(0, max(0, W - self.patch_size_plus))

+ patch_H = img_H[rnd_h:rnd_h + self.patch_size_plus, rnd_w:rnd_w + self.patch_size_plus, ...]

+

+ # ---------------------------------

+ # augmentation - flip, rotate

+ # ---------------------------------

+ mode = random.randint(0, 7)

+ patch_H = util.augment_img(patch_H, mode=mode)

+

+ # ---------------------------------

+ # HWC to CHW, numpy(uint) to tensor

+ # ---------------------------------

+ img_L = patch_H.copy()

+

+ # ---------------------------------

+ # set quality factor

+ # ---------------------------------

+ quality_factor = self.quality_factor

+

+ if self.is_color: # color image

+ img_H = img_L.copy()

+ img_L = cv2.cvtColor(img_L, cv2.COLOR_RGB2BGR)

+ result, encimg = cv2.imencode('.jpg', img_L, [int(cv2.IMWRITE_JPEG_QUALITY), quality_factor])

+ img_L = cv2.imdecode(encimg, 1)

+ img_L = cv2.cvtColor(img_L, cv2.COLOR_BGR2RGB)

+ else:

+ if random.random() > 0.5:

+ img_L = util.rgb2ycbcr(img_L)

+ else:

+ img_L = cv2.cvtColor(img_L, cv2.COLOR_RGB2GRAY)

+ img_H = img_L.copy()

+ result, encimg = cv2.imencode('.jpg', img_L, [int(cv2.IMWRITE_JPEG_QUALITY), quality_factor])

+ img_L = cv2.imdecode(encimg, 0)

+

+ # ---------------------------------

+ # randomly crop a patch

+ # ---------------------------------

+ H, W = img_H.shape[:2]

+ if random.random() > 0.5:

+ rnd_h = random.randint(0, max(0, H - self.patch_size))

+ rnd_w = random.randint(0, max(0, W - self.patch_size))

+ else:

+ rnd_h = 0

+ rnd_w = 0

+ img_H = img_H[rnd_h:rnd_h + self.patch_size, rnd_w:rnd_w + self.patch_size]

+ img_L = img_L[rnd_h:rnd_h + self.patch_size, rnd_w:rnd_w + self.patch_size]

+ else:

+

+ H_path = self.paths_H[index]

+ L_path = H_path

+ # ---------------------------------

+ # set quality factor

+ # ---------------------------------

+ quality_factor = self.quality_factor_test

+

+ if self.is_color: # color JPEG image deblocking

+ img_H = util.imread_uint(H_path, 3)

+ img_L = img_H.copy()

+ img_L = cv2.cvtColor(img_L, cv2.COLOR_RGB2BGR)

+ result, encimg = cv2.imencode('.jpg', img_L, [int(cv2.IMWRITE_JPEG_QUALITY), quality_factor])

+ img_L = cv2.imdecode(encimg, 1)

+ img_L = cv2.cvtColor(img_L, cv2.COLOR_BGR2RGB)

+ else:

+ img_H = cv2.imread(H_path, cv2.IMREAD_UNCHANGED)

+ is_to_ycbcr = True if img_L.ndim == 3 else False

+ if is_to_ycbcr:

+ img_H = cv2.cvtColor(img_H, cv2.COLOR_BGR2RGB)

+ img_H = util.rgb2ycbcr(img_H)

+

+ result, encimg = cv2.imencode('.jpg', img_H, [int(cv2.IMWRITE_JPEG_QUALITY), quality_factor])

+ img_L = cv2.imdecode(encimg, 0)

+

+ img_L, img_H = util.uint2tensor3(img_L), util.uint2tensor3(img_H)

+

+ return {'L': img_L, 'H': img_H, 'L_path': L_path, 'H_path': H_path}

+

+ def __len__(self):

+ return len(self.paths_H)

diff --git a/KAIR/data/dataset_l.py b/KAIR/data/dataset_l.py

new file mode 100644

index 0000000000000000000000000000000000000000..9216311b1ca526d704e1f7211ece90453b7e7cea

--- /dev/null

+++ b/KAIR/data/dataset_l.py

@@ -0,0 +1,43 @@

+import torch.utils.data as data

+import utils.utils_image as util

+

+

+class DatasetL(data.Dataset):

+ '''

+ # -----------------------------------------

+ # Get L in testing.

+ # Only "dataroot_L" is needed.

+ # -----------------------------------------

+ # -----------------------------------------

+ '''

+

+ def __init__(self, opt):

+ super(DatasetL, self).__init__()

+ print('Read L in testing. Only "dataroot_L" is needed.')

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+

+ # ------------------------------------

+ # get the path of L

+ # ------------------------------------

+ self.paths_L = util.get_image_paths(opt['dataroot_L'])

+ assert self.paths_L, 'Error: L paths are empty.'

+

+ def __getitem__(self, index):

+ L_path = None

+

+ # ------------------------------------

+ # get L image

+ # ------------------------------------

+ L_path = self.paths_L[index]

+ img_L = util.imread_uint(L_path, self.n_channels)

+

+ # ------------------------------------

+ # HWC to CHW, numpy to tensor

+ # ------------------------------------

+ img_L = util.uint2tensor3(img_L)

+

+ return {'L': img_L, 'L_path': L_path}

+

+ def __len__(self):

+ return len(self.paths_L)

diff --git a/KAIR/data/dataset_plain.py b/KAIR/data/dataset_plain.py

new file mode 100644

index 0000000000000000000000000000000000000000..605a4e8166425f1b79f5f1985b0ef0e08cc58b00

--- /dev/null

+++ b/KAIR/data/dataset_plain.py

@@ -0,0 +1,85 @@

+import random

+import numpy as np

+import torch.utils.data as data

+import utils.utils_image as util

+

+

+class DatasetPlain(data.Dataset):

+ '''

+ # -----------------------------------------

+ # Get L/H for image-to-image mapping.

+ # Both "paths_L" and "paths_H" are needed.

+ # -----------------------------------------

+ # e.g., train denoiser with L and H

+ # -----------------------------------------

+ '''

+

+ def __init__(self, opt):

+ super(DatasetPlain, self).__init__()

+ print('Get L/H for image-to-image mapping. Both "paths_L" and "paths_H" are needed.')

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.patch_size = self.opt['H_size'] if self.opt['H_size'] else 64

+

+ # ------------------------------------

+ # get the path of L/H

+ # ------------------------------------

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+ self.paths_L = util.get_image_paths(opt['dataroot_L'])

+

+ assert self.paths_H, 'Error: H path is empty.'

+ assert self.paths_L, 'Error: L path is empty. Plain dataset assumes both L and H are given!'

+ if self.paths_L and self.paths_H:

+ assert len(self.paths_L) == len(self.paths_H), 'L/H mismatch - {}, {}.'.format(len(self.paths_L), len(self.paths_H))

+

+ def __getitem__(self, index):

+

+ # ------------------------------------

+ # get H image

+ # ------------------------------------

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, self.n_channels)

+

+ # ------------------------------------

+ # get L image

+ # ------------------------------------

+ L_path = self.paths_L[index]

+ img_L = util.imread_uint(L_path, self.n_channels)

+

+ # ------------------------------------

+ # if train, get L/H patch pair

+ # ------------------------------------

+ if self.opt['phase'] == 'train':

+

+ H, W, _ = img_H.shape

+

+ # --------------------------------

+ # randomly crop the patch

+ # --------------------------------

+ rnd_h = random.randint(0, max(0, H - self.patch_size))

+ rnd_w = random.randint(0, max(0, W - self.patch_size))

+ patch_L = img_L[rnd_h:rnd_h + self.patch_size, rnd_w:rnd_w + self.patch_size, :]

+ patch_H = img_H[rnd_h:rnd_h + self.patch_size, rnd_w:rnd_w + self.patch_size, :]

+

+ # --------------------------------

+ # augmentation - flip and/or rotate

+ # --------------------------------

+ mode = random.randint(0, 7)

+ patch_L, patch_H = util.augment_img(patch_L, mode=mode), util.augment_img(patch_H, mode=mode)

+

+ # --------------------------------

+ # HWC to CHW, numpy(uint) to tensor

+ # --------------------------------

+ img_L, img_H = util.uint2tensor3(patch_L), util.uint2tensor3(patch_H)

+

+ else:

+

+ # --------------------------------

+ # HWC to CHW, numpy(uint) to tensor

+ # --------------------------------

+ img_L, img_H = util.uint2tensor3(img_L), util.uint2tensor3(img_H)

+

+ return {'L': img_L, 'H': img_H, 'L_path': L_path, 'H_path': H_path}

+

+ def __len__(self):

+ return len(self.paths_H)

diff --git a/KAIR/data/dataset_plainpatch.py b/KAIR/data/dataset_plainpatch.py

new file mode 100644

index 0000000000000000000000000000000000000000..2278bf00aca7f77514fe5b3a5e70b7b562baa13d

--- /dev/null

+++ b/KAIR/data/dataset_plainpatch.py

@@ -0,0 +1,131 @@

+import os.path

+import random

+import numpy as np

+import torch.utils.data as data

+import utils.utils_image as util

+

+

+

+class DatasetPlainPatch(data.Dataset):

+ '''

+ # -----------------------------------------

+ # Get L/H for image-to-image mapping.

+ # Both "paths_L" and "paths_H" are needed.

+ # -----------------------------------------

+ # e.g., train denoiser with L and H patches

+ # create a large patch dataset first

+ # -----------------------------------------

+ '''

+

+ def __init__(self, opt):

+ super(DatasetPlainPatch, self).__init__()

+ print('Get L/H for image-to-image mapping. Both "paths_L" and "paths_H" are needed.')

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.patch_size = self.opt['H_size'] if self.opt['H_size'] else 64

+

+ self.num_patches_per_image = opt['num_patches_per_image'] if opt['num_patches_per_image'] else 40

+ self.num_sampled = opt['num_sampled'] if opt['num_sampled'] else 3000

+

+ # -------------------

+ # get the path of L/H

+ # -------------------

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+ self.paths_L = util.get_image_paths(opt['dataroot_L'])

+

+ assert self.paths_H, 'Error: H path is empty.'

+ assert self.paths_L, 'Error: L path is empty. This dataset uses L path, you can use dataset_dnpatchh'

+ if self.paths_L and self.paths_H:

+ assert len(self.paths_L) == len(self.paths_H), 'H and L datasets have different number of images - {}, {}.'.format(len(self.paths_L), len(self.paths_H))

+

+ # ------------------------------------

+ # number of sampled images

+ # ------------------------------------

+ self.num_sampled = min(self.num_sampled, len(self.paths_H))

+

+ # ------------------------------------

+ # reserve space with zeros

+ # ------------------------------------

+ self.total_patches = self.num_sampled * self.num_patches_per_image

+ self.H_data = np.zeros([self.total_patches, self.path_size, self.path_size, self.n_channels], dtype=np.uint8)

+ self.L_data = np.zeros([self.total_patches, self.path_size, self.path_size, self.n_channels], dtype=np.uint8)

+

+ # ------------------------------------

+ # update H patches

+ # ------------------------------------

+ self.update_data()

+

+

+ def update_data(self):

+ """

+ # ------------------------------------

+ # update whole L/H patches

+ # ------------------------------------

+ """

+ self.index_sampled = random.sample(range(0, len(self.paths_H), 1), self.num_sampled)

+ n_count = 0

+

+ for i in range(len(self.index_sampled)):

+ L_patches, H_patches = self.get_patches(self.index_sampled[i])

+ for (L_patch, H_patch) in zip(L_patches, H_patches):

+ self.L_data[n_count,:,:,:] = L_patch

+ self.H_data[n_count,:,:,:] = H_patch

+ n_count += 1

+

+ print('Training data updated! Total number of patches is: %5.2f X %5.2f = %5.2f\n' % (len(self.H_data)//128, 128, len(self.H_data)))

+

+ def get_patches(self, index):

+ """

+ # ------------------------------------

+ # get L/H patches from L/H images

+ # ------------------------------------

+ """

+ L_path = self.paths_L[index]

+ H_path = self.paths_H[index]

+ img_L = util.imread_uint(L_path, self.n_channels) # uint format

+ img_H = util.imread_uint(H_path, self.n_channels) # uint format

+

+ H, W = img_H.shape[:2]

+

+ L_patches, H_patches = [], []

+

+ num = self.num_patches_per_image

+ for _ in range(num):

+ rnd_h = random.randint(0, max(0, H - self.path_size))

+ rnd_w = random.randint(0, max(0, W - self.path_size))

+ L_patch = img_L[rnd_h:rnd_h + self.path_size, rnd_w:rnd_w + self.path_size, :]

+ H_patch = img_H[rnd_h:rnd_h + self.path_size, rnd_w:rnd_w + self.path_size, :]

+ L_patches.append(L_patch)

+ H_patches.append(H_patch)

+

+ return L_patches, H_patches

+

+ def __getitem__(self, index):

+

+ if self.opt['phase'] == 'train':

+

+ patch_L, patch_H = self.L_data[index], self.H_data[index]

+

+ # --------------------------------

+ # augmentation - flip and/or rotate

+ # --------------------------------

+ mode = random.randint(0, 7)

+ patch_L = util.augment_img(patch_L, mode=mode)

+ patch_H = util.augment_img(patch_H, mode=mode)

+

+ patch_L, patch_H = util.uint2tensor3(patch_L), util.uint2tensor3(patch_H)

+

+ else:

+

+ L_path, H_path = self.paths_L[index], self.paths_H[index]

+ patch_L = util.imread_uint(L_path, self.n_channels)

+ patch_H = util.imread_uint(H_path, self.n_channels)

+

+ patch_L, patch_H = util.uint2tensor3(patch_L), util.uint2tensor3(patch_H)

+

+ return {'L': patch_L, 'H': patch_H}

+

+

+ def __len__(self):

+

+ return self.total_patches

diff --git a/KAIR/data/dataset_sr.py b/KAIR/data/dataset_sr.py

new file mode 100644

index 0000000000000000000000000000000000000000..8e1c11c7bfbd7e4aecd9a9e5b44f73ad4e81bc3e

--- /dev/null

+++ b/KAIR/data/dataset_sr.py

@@ -0,0 +1,197 @@

+import math

+import numpy as np

+import random

+import torch

+import torch.utils.data as data

+import utils.utils_image as util

+from basicsr.data.degradations import circular_lowpass_kernel, random_mixed_kernels

+from basicsr.utils import DiffJPEG, USMSharp

+from numpy.typing import NDArray

+from PIL import Image

+from utils.utils_video import img2tensor

+from torch import Tensor

+

+from data.degradations import apply_real_esrgan_degradations

+

+class DatasetSR(data.Dataset):

+ '''

+ # -----------------------------------------

+ # Get L/H for SISR.

+ # If only "paths_H" is provided, sythesize bicubicly downsampled L on-the-fly.

+ # -----------------------------------------

+ # e.g., SRResNet

+ # -----------------------------------------

+ '''

+

+ def __init__(self, opt):

+ super(DatasetSR, self).__init__()

+ self.opt = opt

+ self.n_channels = opt['n_channels'] if opt['n_channels'] else 3

+ self.sf = opt['scale'] if opt['scale'] else 4

+ self.patch_size = self.opt['H_size'] if self.opt['H_size'] else 96

+ self.L_size = self.patch_size // self.sf

+

+ # ------------------------------------

+ # get paths of L/H

+ # ------------------------------------

+ self.paths_H = util.get_image_paths(opt['dataroot_H'])

+ self.paths_L = util.get_image_paths(opt['dataroot_L'])

+

+ assert self.paths_H, 'Error: H path is empty.'

+ if self.paths_L and self.paths_H:

+ assert len(self.paths_L) == len(self.paths_H), 'L/H mismatch - {}, {}.'.format(len(self.paths_L), len(self.paths_H))

+

+ self.jpeg_simulator = DiffJPEG()

+ self.usm_sharpener = USMSharp()

+

+ blur_kernel_list1 = ['iso', 'aniso', 'generalized_iso',

+ 'generalized_aniso', 'plateau_iso', 'plateau_aniso']

+ blur_kernel_list2 = ['iso', 'aniso', 'generalized_iso',

+ 'generalized_aniso', 'plateau_iso', 'plateau_aniso']

+ blur_kernel_prob1 = [0.45, 0.25, 0.12, 0.03, 0.12, 0.03]

+ blur_kernel_prob2 = [0.45, 0.25, 0.12, 0.03, 0.12, 0.03]

+ kernel_size = 21

+ blur_sigma1 = [0.05, 0.2]

+ blur_sigma2 = [0.05, 0.1]

+ betag_range1 = [0.7, 1.3]

+ betag_range2 = [0.7, 1.3]

+ betap_range1 = [0.7, 1.3]

+ betap_range2 = [0.7, 1.3]

+

+ def _decide_kernels(self) -> NDArray:

+ blur_kernel1 = random_mixed_kernels(

+ self.blur_kernel_list1,

+ self.blur_kernel_prob1,

+ self.kernel_size,

+ self.blur_sigma1,

+ self.blur_sigma1, [-math.pi, math.pi],

+ self.betag_range1,

+ self.betap_range1,

+ noise_range=None

+ )

+ blur_kernel2 = random_mixed_kernels(

+ self.blur_kernel_list2,

+ self.blur_kernel_prob2,

+ self.kernel_size,

+ self.blur_sigma2,

+ self.blur_sigma2, [-math.pi, math.pi],

+ self.betag_range2,

+ self.betap_range2,

+ noise_range=None

+ )

+ if self.kernel_size < 13:

+ omega_c = np.random.uniform(np.pi / 3, np.pi)

+ else:

+ omega_c = np.random.uniform(np.pi / 5, np.pi)

+ sinc_kernel = circular_lowpass_kernel(omega_c, self.kernel_size, pad_to=21)

+ return (blur_kernel1, blur_kernel2, sinc_kernel)

+

+ def __getitem__(self, index):

+

+ L_path = None

+ # ------------------------------------

+ # get H image

+ # ------------------------------------

+ H_path = self.paths_H[index]

+ img_H = util.imread_uint(H_path, self.n_channels)

+ img_H = util.uint2single(img_H)

+

+ # ------------------------------------

+ # modcrop

+ # ------------------------------------

+ img_H = util.modcrop(img_H, self.sf)

+

+ # ------------------------------------

+ # get L image

+ # ------------------------------------

+ if self.paths_L:

+ # --------------------------------

+ # directly load L image

+ # --------------------------------

+ L_path = self.paths_L[index]

+ img_L = util.imread_uint(L_path, self.n_channels)

+ img_L = util.uint2single(img_L)

+

+ else:

+ # --------------------------------

+ # sythesize L image via matlab's bicubic

+ # --------------------------------

+ H, W = img_H.shape[:2]

+ img_L = util.imresize_np(img_H, 1 / self.sf, True)

+

+ src_tensor = img2tensor(img_L.copy(), bgr2rgb=False,

+ float32=True).unsqueeze(0)

+

+ blur_kernel1, blur_kernel2, sinc_kernel = self._decide_kernels()

+ (img_L_2, sharp_img_L, degraded_img_L) = apply_real_esrgan_degradations(

+ src_tensor,

+ blur_kernel1=Tensor(blur_kernel1).unsqueeze(0),

+ blur_kernel2=Tensor(blur_kernel2).unsqueeze(0),

+ second_blur_prob=0.2,

+ sinc_kernel=Tensor(sinc_kernel).unsqueeze(0),

+ resize_prob1=[0.2, 0.7, 0.1],

+ resize_prob2=[0.3, 0.4, 0.3],

+ resize_range1=[0.9, 1.1],

+ resize_range2=[0.9, 1.1],

+ gray_noise_prob1=0.2,

+ gray_noise_prob2=0.2,

+ gaussian_noise_prob1=0.2,

+ gaussian_noise_prob2=0.2,

+ noise_range=[0.01, 0.2],

+ poisson_scale_range=[0.05, 0.45],

+ jpeg_compression_range1=[85, 100],

+ jpeg_compression_range2=[85, 100],

+ jpeg_simulator=self.jpeg_simulator,

+ random_crop_gt_size=256,

+ sr_upsample_scale=1,

+ usm_sharpener=self.usm_sharpener

+ )

+ # Image.fromarray((degraded_img_L[0] * 255).permute(

+ # 1, 2, 0).cpu().numpy().astype(np.uint8)).save(

+ # "/home/cll/Desktop/degraded_L.png")

+ # Image.fromarray((img_L * 255).astype(np.uint8)).save(

+ # "/home/cll/Desktop/img_L.png")

+ # Image.fromarray((img_L_2[0] * 255).permute(

+ # 1, 2, 0).cpu().numpy().astype(np.uint8)).save(

+ # "/home/cll/Desktop/img_L_2.png")

+ # exit()

+

+ # ------------------------------------

+ # if train, get L/H patch pair

+ # ------------------------------------

+ if self.opt['phase'] == 'train':

+

+ H, W, C = img_L.shape

+

+ # --------------------------------

+ # randomly crop the L patch

+ # --------------------------------

+ rnd_h = random.randint(0, max(0, H - self.L_size))

+ rnd_w = random.randint(0, max(0, W - self.L_size))

+ img_L = img_L[rnd_h:rnd_h + self.L_size, rnd_w:rnd_w + self.L_size, :]

+

+ # --------------------------------

+ # crop corresponding H patch

+ # --------------------------------

+ rnd_h_H, rnd_w_H = int(rnd_h * self.sf), int(rnd_w * self.sf)

+ img_H = img_H[rnd_h_H:rnd_h_H + self.patch_size, rnd_w_H:rnd_w_H + self.patch_size, :]

+

+ # --------------------------------

+ # augmentation - flip and/or rotate + RealESRGAN modified degradations

+ # --------------------------------

+ mode = random.randint(0, 7)

+ img_L, img_H = util.augment_img(img_L, mode=mode), util.augment_img(img_H, mode=mode)

+

+

+ # ------------------------------------

+ # L/H pairs, HWC to CHW, numpy to tensor