machineuser committed on

Commit · 9358240

1 Parent(s): 405a395

Sync widgets demo

packages/tasks/src/image-to-image/about.md

CHANGED

@@ -2,22 +2,22 @@

### Style transfer

-
One of the most popular use cases of image

## Task Variants

### Image inpainting

-
Image inpainting is widely used during photography editing to remove unwanted objects, such as poles, wires or sensor dust.

### Image colorization

-
Old

### Super Resolution

-
Super

## Inference

@@ -55,20 +55,21 @@ await inference.imageToImage({

## ControlNet

-
Controlling outputs of diffusion models only with a text prompt is a challenging problem. ControlNet is a neural network

Many ControlNet models were trained during our community event, the JAX Diffusers sprint. You can see the full list of available ControlNet models [here](https://huggingface.co/spaces/jax-diffusers-event/leaderboard).

## Most Used Model for the Task

-
Pix2Pix is a popular model used for image

-

## Useful Resources

- [Train your ControlNet with diffusers 🧨](https://huggingface.co/blog/train-your-controlnet)
- [Ultra fast ControlNet with 🧨 Diffusers](https://huggingface.co/blog/controlnet)

### Style transfer

+
One of the most popular use cases of image-to-image is style transfer. Style transfer models can convert a normal photograph into a painting in the style of a famous painter.
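
As a rough, non-neural illustration of "style" as image statistics, the TypeScript sketch below shifts and scales each RGB channel of a content image so that its mean and standard deviation match those of a style image. This is a toy under stated assumptions, not how the style transfer models above work: pixels are plain `[r, g, b]` arrays, and `transferColorStats` and `channelStats` are hypothetical names.

```typescript
// A pixel is an [r, g, b] triple with channel values in 0..255.
type RGB = [number, number, number];

interface Stats {
  mean: number;
  std: number;
}

// Mean and population standard deviation of channel `c` over all pixels.
function channelStats(pixels: RGB[], c: number): Stats {
  const mean = pixels.reduce((sum, p) => sum + p[c], 0) / pixels.length;
  const variance = pixels.reduce((sum, p) => sum + (p[c] - mean) ** 2, 0) / pixels.length;
  return { mean, std: Math.sqrt(variance) };
}

// Toy color-statistics transfer (hypothetical helper, NOT a learned model):
// re-normalize every channel of `content` to the mean/std of `style`.
function transferColorStats(content: RGB[], style: RGB[]): RGB[] {
  const stats = [0, 1, 2].map((c) => ({ src: channelStats(content, c), dst: channelStats(style, c) }));
  return content.map((pixel): RGB => {
    const out = pixel.map((value, c) => {
      const { src, dst } = stats[c];
      // A constant channel has std 0; map it straight to the style mean.
      const z = src.std === 0 ? 0 : (value - src.mean) / src.std;
      const v = z * dst.std + dst.mean;
      // Clamp to the valid 8-bit range and round to an integer.
      return Math.min(255, Math.max(0, Math.round(v)));
    });
    return [out[0], out[1], out[2]];
  });
}

const content: RGB[] = [[0, 0, 0], [100, 100, 100]];
const style: RGB[] = [[100, 100, 100], [200, 200, 200]];
console.log(JSON.stringify(transferColorStats(content, style))); // [[100,100,100],[200,200,200]]
```

Neural methods such as AdaIN apply the same mean/std matching, but to deep CNN feature maps rather than raw pixels, which is what lets them transfer brush strokes and textures instead of only colors.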

## Task Variants

### Image inpainting

+
Image inpainting is widely used in photo editing to remove unwanted objects, such as poles, wires, or sensor dust.
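
Learned inpainting models predict plausible content for the masked region. The sketch below swaps in a much simpler classical technique, diffusion-style filling, where each masked pixel is repeatedly replaced by the average of its neighbors so that the surrounding content gradually flows into the hole. The grayscale `number[][]` image, the boolean mask, and the `inpaint` function are assumptions for illustration only.

```typescript
// Grayscale image stored as rows of intensities.
type Gray = number[][];

// Offsets of the four direct neighbors (up, down, left, right).
const NEIGHBORS: Array<[number, number]> = [[-1, 0], [1, 0], [0, -1], [0, 1]];

// Toy diffusion-style inpainting (hypothetical helper): on every iteration,
// each masked pixel (mask[y][x] === true) becomes the mean of its in-bounds
// neighbors. Unmasked pixels are never changed.
function inpaint(image: Gray, mask: boolean[][], iterations = 200): Gray {
  const h = image.length;
  const w = image[0].length;
  let current = image.map((row) => [...row]);
  for (let it = 0; it < iterations; it++) {
    const next = current.map((row) => [...row]);
    for (let y = 0; y < h; y++) {
      for (let x = 0; x < w; x++) {
        if (!mask[y][x]) continue;
        let sum = 0;
        let count = 0;
        for (const [dy, dx] of NEIGHBORS) {
          const ny = y + dy;
          const nx = x + dx;
          if (ny >= 0 && ny < h && nx >= 0 && nx < w) {
            sum += current[ny][nx];
            count++;
          }
        }
        if (count > 0) next[y][x] = sum / count;
      }
    }
    current = next;
  }
  return current;
}

// A 1x3 strip with a "dust speck" in the middle: the hole is filled with 20.
console.log(JSON.stringify(inpaint([[10, 0, 30]], [[false, true, false]]))); // [[10,20,30]]
```

This only produces smooth fills; reconstructing textures or objects behind the removed region is what the learned models add.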

### Image colorization

+
Old or black-and-white images can be brought back to life using an image colorization model.
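
Colorization models are commonly trained on pairs made by converting color photos to grayscale, so that the model learns the reverse mapping. A minimal sketch of that data-preparation step, assuming plain `[r, g, b]` pixel arrays and the widely used ITU-R BT.601 luma weights; `makeColorizationPairs` is a hypothetical helper, not part of any library:

```typescript
type RGB = [number, number, number];

// ITU-R BT.601 luma weights, a common choice for RGB -> grayscale conversion.
function toGrayscale(pixels: RGB[]): number[] {
  return pixels.map(([r, g, b]) => Math.round(0.299 * r + 0.587 * g + 0.114 * b));
}

// Hypothetical training pair: grayscale model input, original color target.
interface ColorizationPair {
  input: number[];
  target: RGB[];
}

function makeColorizationPairs(images: RGB[][]): ColorizationPair[] {
  return images.map((img) => ({ input: toGrayscale(img), target: img }));
}

const pairs = makeColorizationPairs([[[255, 255, 255], [0, 0, 0], [255, 0, 0]]]);
console.log(JSON.stringify(pairs[0].input)); // [255,0,76]
```

Because many different colors share the same gray value (pure red maps to 76 here), colorization is ambiguous, which is why learned models rely on scene context rather than a per-pixel formula.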

### Super Resolution

+
Super-resolution models increase the resolution of an image, allowing for higher-quality viewing and printing.
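
Super-resolution models are typically compared against simple interpolation baselines and scored with metrics such as PSNR (peak signal-to-noise ratio) against a high-resolution reference. The sketch below shows both pieces under simplifying assumptions: grayscale images as `number[][]`, integer scale factors, and the hypothetical function names `upscaleNearest` and `psnr`.

```typescript
type Gray = number[][];

// Nearest-neighbor upscaling by an integer factor: every source pixel becomes
// a factor x factor block. No new detail is created, which is the gap learned
// super-resolution models try to fill.
function upscaleNearest(image: Gray, factor: number): Gray {
  if (factor < 1 || Math.floor(factor) !== factor) {
    throw new Error("factor must be a positive integer");
  }
  const out: Gray = [];
  for (let y = 0; y < image.length * factor; y++) {
    const srcRow = image[Math.floor(y / factor)];
    const row: number[] = [];
    for (let x = 0; x < srcRow.length * factor; x++) {
      row.push(srcRow[Math.floor(x / factor)]);
    }
    out.push(row);
  }
  return out;
}

// PSNR in dB between two same-sized images; higher means closer to the reference.
function psnr(a: Gray, b: Gray, maxValue = 255): number {
  let squaredError = 0;
  let n = 0;
  for (let y = 0; y < a.length; y++) {
    for (let x = 0; x < a[y].length; x++) {
      squaredError += (a[y][x] - b[y][x]) ** 2;
      n++;
    }
  }
  const mse = squaredError / n;
  // log10(v) computed as ln(v) / ln(10).
  return mse === 0 ? Infinity : (10 * Math.log((maxValue * maxValue) / mse)) / Math.LN10;
}

console.log(JSON.stringify(upscaleNearest([[1, 2], [3, 4]], 2)));
// [[1,1,2,2],[1,1,2,2],[3,3,4,4],[3,3,4,4]]
```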

## Inference

## ControlNet

+
Controlling the outputs of diffusion models with a text prompt alone is a challenging problem. ControlNet is a neural network model that provides image-based control to diffusion models. Control images can be edges or other landmarks extracted from a source image.
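
Edge maps are one of the most common ControlNet control images, usually produced with Canny edge detection. The sketch below substitutes a simpler Sobel gradient threshold to show how a binary edge map can be derived from a source image. The grayscale `number[][]` input, the threshold value, and `sobelEdgeMap` are assumptions; a real pipeline would typically use an image-processing library.

```typescript
type Gray = number[][];

// 3x3 Sobel kernels for horizontal (KX) and vertical (KY) gradients.
const KX: number[][] = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]];
const KY: number[][] = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]];

// Binary edge map (255 = edge, 0 = background) from the Sobel gradient
// magnitude. Border pixels are left at 0 to keep the sketch short.
function sobelEdgeMap(image: Gray, threshold: number): Gray {
  const h = image.length;
  const w = image[0].length;
  const out: Gray = image.map((row) => row.map(() => 0));
  for (let y = 1; y < h - 1; y++) {
    for (let x = 1; x < w - 1; x++) {
      let gx = 0;
      let gy = 0;
      for (let dy = -1; dy <= 1; dy++) {
        for (let dx = -1; dx <= 1; dx++) {
          const v = image[y + dy][x + dx];
          gx += KX[dy + 1][dx + 1] * v;
          gy += KY[dy + 1][dx + 1] * v;
        }
      }
      out[y][x] = Math.sqrt(gx * gx + gy * gy) > threshold ? 255 : 0;
    }
  }
  return out;
}

// A dark-to-bright vertical boundary: edges appear in the two middle columns.
const step: Gray = [
  [0, 0, 0, 100, 100, 100],
  [0, 0, 0, 100, 100, 100],
  [0, 0, 0, 100, 100, 100],
];
console.log(JSON.stringify(sobelEdgeMap(step, 100)[1])); // [0,0,255,255,0,0]
```

The resulting map keeps only the structure of the source image, which is what lets ControlNet fix the layout while the text prompt decides colors and content.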

Many ControlNet models were trained during our community event, the JAX Diffusers sprint. You can see the full list of available ControlNet models [here](https://huggingface.co/spaces/jax-diffusers-event/leaderboard).

|

| 61 |

|

| 62 |

## Most Used Model for the Task

|

| 63 |

|

| 64 |

+

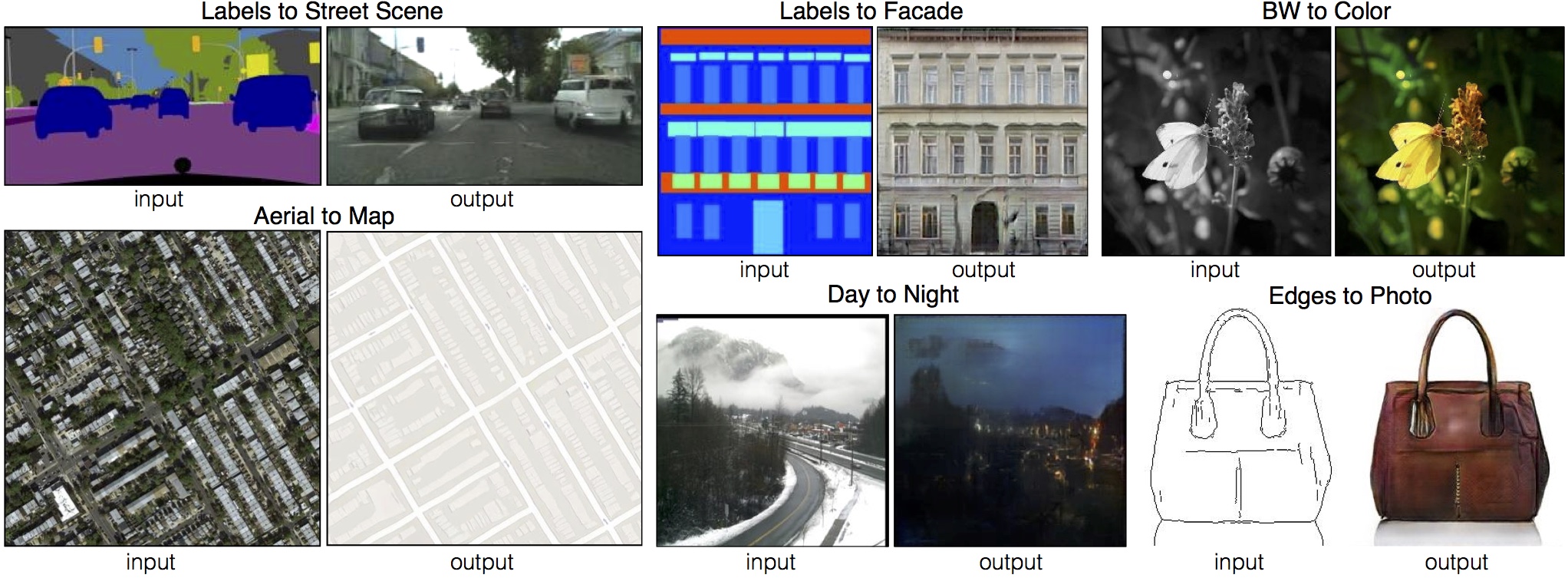

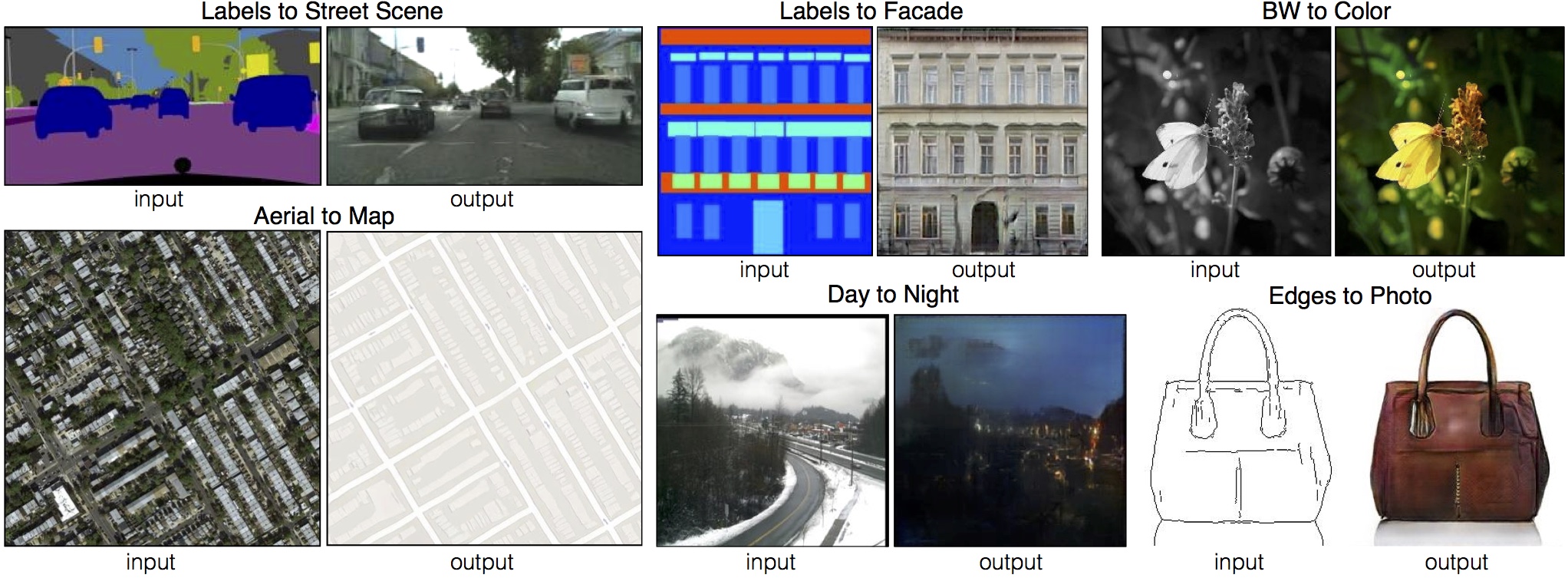

Pix2Pix is a popular model used for image-to-image translation tasks. It is based on a conditional-GAN (generative adversarial network) where instead of a noise vector a 2D image is given as input. More information about Pix2Pix can be retrieved from this [link](https://phillipi.github.io/pix2pix/) where the associated paper and the GitHub repository can be found.
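
The Pix2Pix paper trains the generator with the conditional-GAN objective plus an L1 reconstruction term weighted by λ (the paper uses λ = 100). The TypeScript sketch below computes those losses from already-computed discriminator probabilities and flattened pixel arrays; it does not implement the networks themselves. The function names are hypothetical, and the non-saturating `-log D(x, G(x))` generator term is a common practical choice rather than something stated on this page.

```typescript
// Small constant so log() stays finite when a probability is exactly 0 or 1.
const EPS = 1e-12;

// Mean absolute (L1) difference between two flattened images.
function meanAbsError(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("images must have the same size");
  return a.reduce((sum, v, i) => sum + Math.abs(v - b[i]), 0) / a.length;
}

// Generator loss: -log D(x, G(x)) averaged over the discriminator outputs
// (e.g. one per PatchGAN patch), plus lambda times the L1 distance between
// the generated image G(x) and the target image y.
function generatorLoss(dFake: number[], fake: number[], target: number[], lambda = 100): number {
  const adversarial = dFake.reduce((sum, p) => sum - Math.log(Math.max(p, EPS)), 0) / dFake.length;
  return adversarial + lambda * meanAbsError(fake, target);
}

// Discriminator loss: binary cross-entropy labeling real pairs (x, y) as 1
// and generated pairs (x, G(x)) as 0.
function discriminatorLoss(dReal: number[], dFake: number[]): number {
  const real = dReal.reduce((sum, p) => sum - Math.log(Math.max(p, EPS)), 0) / dReal.length;
  const fakeTerm = dFake.reduce((sum, p) => sum - Math.log(Math.max(1 - p, EPS)), 0) / dFake.length;
  return real + fakeTerm;
}

// A perfect reconstruction that fully fools D gives zero generator loss.
console.log(generatorLoss([1], [0.5, 0.2], [0.5, 0.2])); // 0
```

The L1 term pushes outputs toward the ground truth at a coarse level, while the adversarial term penalizes the blurry results that an L1 loss alone tends to produce.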

+
The images below show some examples extracted from the Pix2Pix paper. This model can be applied to various use cases. It can handle relatively simple tasks, such as converting a grayscale image to its colored version. More importantly, it can generate realistic pictures from rough sketches (as in the purse example) or from painting-like images (as in the street and facade examples below).

## Useful Resources

+
- [Image-to-image guide with diffusers](https://huggingface.co/docs/diffusers/using-diffusers/img2img)
- [Train your ControlNet with diffusers 🧨](https://huggingface.co/blog/train-your-controlnet)
- [Ultra fast ControlNet with 🧨 Diffusers](https://huggingface.co/blog/controlnet)