Spaces:

Runtime error

Runtime error

Upload 265 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +7 -0

- .github/workflows/bot-autolint.yaml +50 -0

- .github/workflows/ci.yaml +54 -0

- .gitignore +184 -0

- .pre-commit-config.yaml +62 -0

- CITATION.bib +9 -0

- CIs/add_license_all.sh +2 -0

- Dockerfile +26 -0

- LICENSE +201 -0

- README.md +401 -12

- app.py +441 -93

- app/app_sana.py +502 -0

- app/app_sana_4bit.py +409 -0

- app/app_sana_4bit_compare_bf16.py +313 -0

- app/app_sana_controlnet_hed.py +306 -0

- app/app_sana_multithread.py +565 -0

- app/safety_check.py +72 -0

- app/sana_controlnet_pipeline.py +353 -0

- app/sana_pipeline.py +304 -0

- asset/Sana.jpg +3 -0

- asset/app_styles/controlnet_app_style.css +28 -0

- asset/controlnet/ref_images/A transparent sculpture of a duck made out of glass. The sculpture is in front of a painting of a la.jpg +3 -0

- asset/controlnet/ref_images/a house.png +3 -0

- asset/controlnet/ref_images/a living room.png +3 -0

- asset/controlnet/ref_images/nvidia.png +0 -0

- asset/controlnet/samples_controlnet.json +26 -0

- asset/docs/4bit_sana.md +68 -0

- asset/docs/8bit_sana.md +109 -0

- asset/docs/ComfyUI/Sana_CogVideoX.json +1142 -0

- asset/docs/ComfyUI/Sana_FlowEuler.json +508 -0

- asset/docs/ComfyUI/Sana_FlowEuler_2K.json +508 -0

- asset/docs/ComfyUI/Sana_FlowEuler_4K.json +508 -0

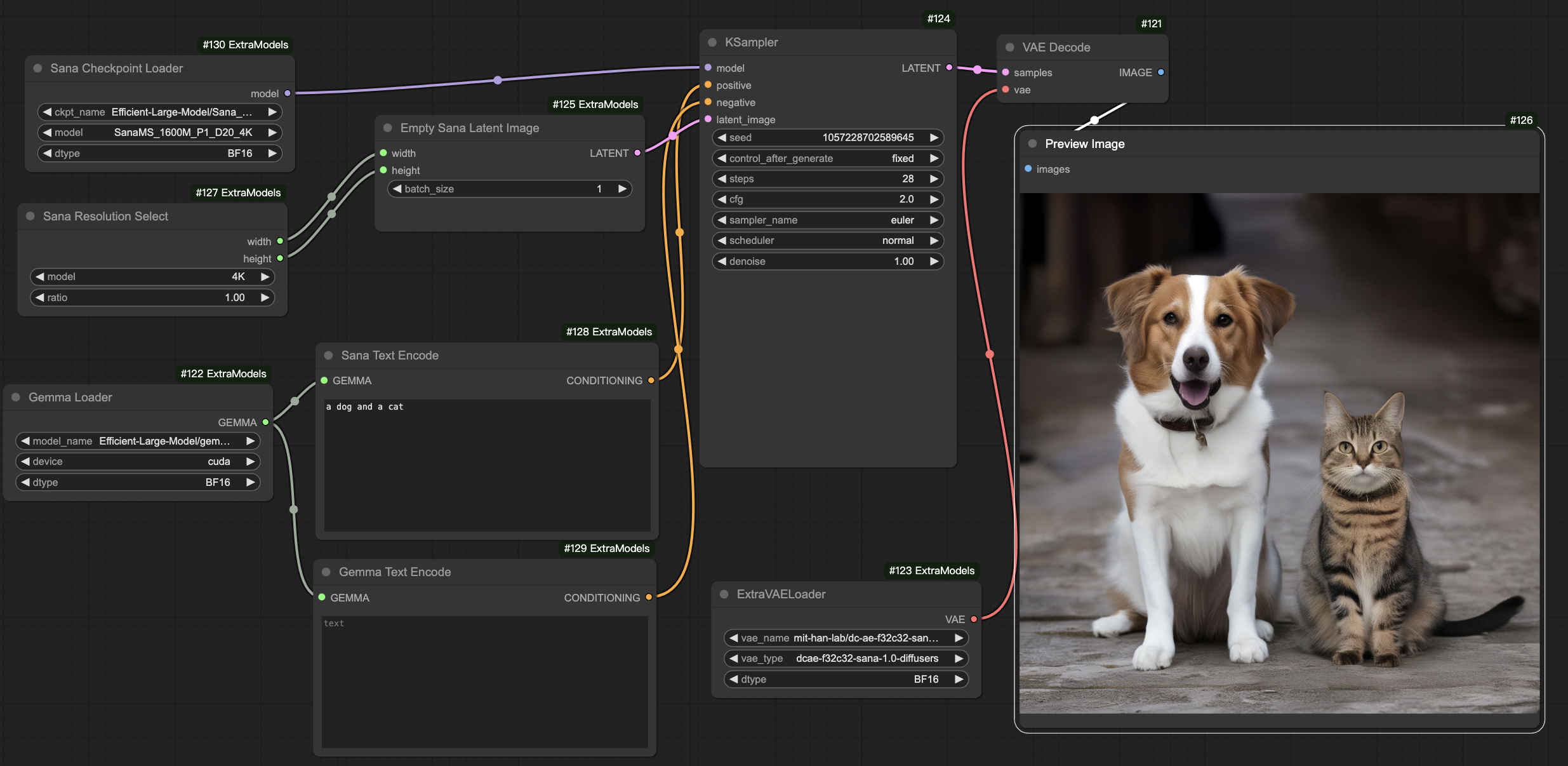

- asset/docs/ComfyUI/comfyui.md +40 -0

- asset/docs/metrics_toolkit.md +118 -0

- asset/docs/model_zoo.md +157 -0

- asset/docs/sana_controlnet.md +75 -0

- asset/docs/sana_lora_dreambooth.md +144 -0

- asset/example_data/00000000.jpg +3 -0

- asset/example_data/00000000.png +3 -0

- asset/example_data/00000000.txt +1 -0

- asset/example_data/00000000_InternVL2-26B.json +5 -0

- asset/example_data/00000000_InternVL2-26B_clip_score.json +5 -0

- asset/example_data/00000000_VILA1-5-13B.json +5 -0

- asset/example_data/00000000_VILA1-5-13B_clip_score.json +5 -0

- asset/example_data/00000000_prompt_clip_score.json +5 -0

- asset/example_data/meta_data.json +7 -0

- asset/examples.py +69 -0

- asset/logo.png +0 -0

- asset/model-incremental.jpg +3 -0

- asset/model_paths.txt +2 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,10 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

asset/controlnet/ref_images/a[[:space:]]house.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

asset/controlnet/ref_images/a[[:space:]]living[[:space:]]room.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

asset/controlnet/ref_images/A[[:space:]]transparent[[:space:]]sculpture[[:space:]]of[[:space:]]a[[:space:]]duck[[:space:]]made[[:space:]]out[[:space:]]of[[:space:]]glass.[[:space:]]The[[:space:]]sculpture[[:space:]]is[[:space:]]in[[:space:]]front[[:space:]]of[[:space:]]a[[:space:]]painting[[:space:]]of[[:space:]]a[[:space:]]la.jpg filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

asset/example_data/00000000.jpg filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

asset/example_data/00000000.png filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

asset/model-incremental.jpg filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

asset/Sana.jpg filter=lfs diff=lfs merge=lfs -text

|

.github/workflows/bot-autolint.yaml

ADDED

|

@@ -0,0 +1,50 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: Auto Lint (triggered by "auto lint" label)

|

| 2 |

+

on:

|

| 3 |

+

pull_request:

|

| 4 |

+

types:

|

| 5 |

+

- opened

|

| 6 |

+

- edited

|

| 7 |

+

- closed

|

| 8 |

+

- reopened

|

| 9 |

+

- synchronize

|

| 10 |

+

- labeled

|

| 11 |

+

- unlabeled

|

| 12 |

+

# run only one unit test for a branch / tag.

|

| 13 |

+

concurrency:

|

| 14 |

+

group: ci-lint-${{ github.ref }}

|

| 15 |

+

cancel-in-progress: true

|

| 16 |

+

jobs:

|

| 17 |

+

lint-by-label:

|

| 18 |

+

if: contains(github.event.pull_request.labels.*.name, 'lint wanted')

|

| 19 |

+

runs-on: ubuntu-latest

|

| 20 |

+

steps:

|

| 21 |

+

- name: Check out Git repository

|

| 22 |

+

uses: actions/checkout@v4

|

| 23 |

+

with:

|

| 24 |

+

token: ${{ secrets.PAT }}

|

| 25 |

+

ref: ${{ github.event.pull_request.head.ref }}

|

| 26 |

+

- name: Set up Python

|

| 27 |

+

uses: actions/setup-python@v5

|

| 28 |

+

with:

|

| 29 |

+

python-version: '3.10'

|

| 30 |

+

- name: Test pre-commit hooks

|

| 31 |

+

continue-on-error: true

|

| 32 |

+

uses: pre-commit/[email protected] # sync with https://github.com/Efficient-Large-Model/VILA-Internal/blob/main/.github/workflows/pre-commit.yaml

|

| 33 |

+

with:

|

| 34 |

+

extra_args: --all-files

|

| 35 |

+

- name: Check if there are any changes

|

| 36 |

+

id: verify_diff

|

| 37 |

+

run: |

|

| 38 |

+

git diff --quiet . || echo "changed=true" >> $GITHUB_OUTPUT

|

| 39 |

+

- name: Commit files

|

| 40 |

+

if: steps.verify_diff.outputs.changed == 'true'

|

| 41 |

+

run: |

|

| 42 |

+

git config --local user.email "[email protected]"

|

| 43 |

+

git config --local user.name "GitHub Action"

|

| 44 |

+

git add .

|

| 45 |

+

git commit -m "[CI-Lint] Fix code style issues with pre-commit ${{ github.sha }}" -a

|

| 46 |

+

git push

|

| 47 |

+

- name: Remove label(s) after lint

|

| 48 |

+

uses: actions-ecosystem/action-remove-labels@v1

|

| 49 |

+

with:

|

| 50 |

+

labels: lint wanted

|

.github/workflows/ci.yaml

ADDED

|

@@ -0,0 +1,54 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: ci

|

| 2 |

+

on:

|

| 3 |

+

pull_request:

|

| 4 |

+

push:

|

| 5 |

+

branches: [main, feat/Sana-public, feat/Sana-public-for-NVLab]

|

| 6 |

+

concurrency:

|

| 7 |

+

group: ci-${{ github.workflow }}-${{ github.ref }}

|

| 8 |

+

cancel-in-progress: true

|

| 9 |

+

# if: ${{ github.repository == 'Efficient-Large-Model/Sana' }}

|

| 10 |

+

jobs:

|

| 11 |

+

pre-commit:

|

| 12 |

+

runs-on: ubuntu-latest

|

| 13 |

+

steps:

|

| 14 |

+

- name: Check out Git repository

|

| 15 |

+

uses: actions/checkout@v4

|

| 16 |

+

- name: Set up Python

|

| 17 |

+

uses: actions/setup-python@v5

|

| 18 |

+

with:

|

| 19 |

+

python-version: 3.10.10

|

| 20 |

+

- name: Test pre-commit hooks

|

| 21 |

+

uses: pre-commit/[email protected]

|

| 22 |

+

tests-bash:

|

| 23 |

+

# needs: pre-commit

|

| 24 |

+

runs-on: self-hosted

|

| 25 |

+

steps:

|

| 26 |

+

- name: Check out Git repository

|

| 27 |

+

uses: actions/checkout@v4

|

| 28 |

+

- name: Set up Python

|

| 29 |

+

uses: actions/setup-python@v5

|

| 30 |

+

with:

|

| 31 |

+

python-version: 3.10.10

|

| 32 |

+

- name: Set up the environment

|

| 33 |

+

run: |

|

| 34 |

+

bash environment_setup.sh

|

| 35 |

+

- name: Run tests with Slurm

|

| 36 |

+

run: |

|

| 37 |

+

sana-run --pty -m ci -J tests-bash bash tests/bash/entry.sh

|

| 38 |

+

|

| 39 |

+

# tests-python:

|

| 40 |

+

# needs: pre-commit

|

| 41 |

+

# runs-on: self-hosted

|

| 42 |

+

# steps:

|

| 43 |

+

# - name: Check out Git repository

|

| 44 |

+

# uses: actions/checkout@v4

|

| 45 |

+

# - name: Set up Python

|

| 46 |

+

# uses: actions/setup-python@v5

|

| 47 |

+

# with:

|

| 48 |

+

# python-version: 3.10.10

|

| 49 |

+

# - name: Set up the environment

|

| 50 |

+

# run: |

|

| 51 |

+

# ./environment_setup.sh

|

| 52 |

+

# - name: Run tests with Slurm

|

| 53 |

+

# run: |

|

| 54 |

+

# sana-run --pty -m ci -J tests-python pytest tests/python

|

.gitignore

ADDED

|

@@ -0,0 +1,184 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Sana related files

|

| 2 |

+

*_dev.py

|

| 3 |

+

*_dev.sh

|

| 4 |

+

.count.db

|

| 5 |

+

.gradio/

|

| 6 |

+

.idea/

|

| 7 |

+

*.png

|

| 8 |

+

tmp*

|

| 9 |

+

output*

|

| 10 |

+

output/

|

| 11 |

+

outputs/

|

| 12 |

+

wandb/

|

| 13 |

+

.vscode/

|

| 14 |

+

private/

|

| 15 |

+

ldm_ae*

|

| 16 |

+

data/*

|

| 17 |

+

*.pth

|

| 18 |

+

.gradio/

|

| 19 |

+

*.bin

|

| 20 |

+

*.safetensors

|

| 21 |

+

*.pkl

|

| 22 |

+

|

| 23 |

+

# Byte-compiled / optimized / DLL files

|

| 24 |

+

__pycache__/

|

| 25 |

+

*.py[cod]

|

| 26 |

+

*$py.class

|

| 27 |

+

|

| 28 |

+

# C extensions

|

| 29 |

+

*.so

|

| 30 |

+

|

| 31 |

+

# Distribution / packaging

|

| 32 |

+

.Python

|

| 33 |

+

build/

|

| 34 |

+

develop-eggs/

|

| 35 |

+

dist/

|

| 36 |

+

downloads/

|

| 37 |

+

eggs/

|

| 38 |

+

.eggs/

|

| 39 |

+

lib/

|

| 40 |

+

lib64/

|

| 41 |

+

parts/

|

| 42 |

+

sdist/

|

| 43 |

+

var/

|

| 44 |

+

wheels/

|

| 45 |

+

share/python-wheels/

|

| 46 |

+

*.egg-info/

|

| 47 |

+

.installed.cfg

|

| 48 |

+

*.egg

|

| 49 |

+

MANIFEST

|

| 50 |

+

|

| 51 |

+

# PyInstaller

|

| 52 |

+

# Usually these files are written by a python script from a template

|

| 53 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 54 |

+

*.manifest

|

| 55 |

+

*.spec

|

| 56 |

+

|

| 57 |

+

# Installer logs

|

| 58 |

+

pip-log.txt

|

| 59 |

+

pip-delete-this-directory.txt

|

| 60 |

+

|

| 61 |

+

# Unit test / coverage reports

|

| 62 |

+

htmlcov/

|

| 63 |

+

.tox/

|

| 64 |

+

.nox/

|

| 65 |

+

.coverage

|

| 66 |

+

.coverage.*

|

| 67 |

+

.cache

|

| 68 |

+

nosetests.xml

|

| 69 |

+

coverage.xml

|

| 70 |

+

*.cover

|

| 71 |

+

*.py,cover

|

| 72 |

+

.hypothesis/

|

| 73 |

+

.pytest_cache/

|

| 74 |

+

cover/

|

| 75 |

+

|

| 76 |

+

# Translations

|

| 77 |

+

*.mo

|

| 78 |

+

*.pot

|

| 79 |

+

|

| 80 |

+

# Django stuff:

|

| 81 |

+

*.log

|

| 82 |

+

local_settings.py

|

| 83 |

+

db.sqlite3

|

| 84 |

+

db.sqlite3-journal

|

| 85 |

+

|

| 86 |

+

# Flask stuff:

|

| 87 |

+

instance/

|

| 88 |

+

.webassets-cache

|

| 89 |

+

|

| 90 |

+

# Scrapy stuff:

|

| 91 |

+

.scrapy

|

| 92 |

+

|

| 93 |

+

# Sphinx documentation

|

| 94 |

+

docs/_build/

|

| 95 |

+

|

| 96 |

+

# PyBuilder

|

| 97 |

+

.pybuilder/

|

| 98 |

+

target/

|

| 99 |

+

|

| 100 |

+

# Jupyter Notebook

|

| 101 |

+

.ipynb_checkpoints

|

| 102 |

+

|

| 103 |

+

# IPython

|

| 104 |

+

profile_default/

|

| 105 |

+

ipython_config.py

|

| 106 |

+

|

| 107 |

+

# pyenv

|

| 108 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 109 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 110 |

+

# .python-version

|

| 111 |

+

|

| 112 |

+

# pipenv

|

| 113 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 114 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 115 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 116 |

+

# install all needed dependencies.

|

| 117 |

+

#Pipfile.lock

|

| 118 |

+

|

| 119 |

+

# poetry

|

| 120 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 121 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 122 |

+

# commonly ignored for libraries.

|

| 123 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 124 |

+

#poetry.lock

|

| 125 |

+

|

| 126 |

+

# pdm

|

| 127 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 128 |

+

#pdm.lock

|

| 129 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 130 |

+

# in version control.

|

| 131 |

+

# https://pdm.fming.dev/latest/usage/project/#working-with-version-control

|

| 132 |

+

.pdm.toml

|

| 133 |

+

.pdm-python

|

| 134 |

+

.pdm-build/

|

| 135 |

+

|

| 136 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 137 |

+

__pypackages__/

|

| 138 |

+

|

| 139 |

+

# Celery stuff

|

| 140 |

+

celerybeat-schedule

|

| 141 |

+

celerybeat.pid

|

| 142 |

+

|

| 143 |

+

# SageMath parsed files

|

| 144 |

+

*.sage.py

|

| 145 |

+

|

| 146 |

+

# Environments

|

| 147 |

+

.env

|

| 148 |

+

.venv

|

| 149 |

+

env/

|

| 150 |

+

venv/

|

| 151 |

+

ENV/

|

| 152 |

+

env.bak/

|

| 153 |

+

venv.bak/

|

| 154 |

+

|

| 155 |

+

# Spyder project settings

|

| 156 |

+

.spyderproject

|

| 157 |

+

.spyproject

|

| 158 |

+

|

| 159 |

+

# Rope project settings

|

| 160 |

+

.ropeproject

|

| 161 |

+

|

| 162 |

+

# mkdocs documentation

|

| 163 |

+

/site

|

| 164 |

+

|

| 165 |

+

# mypy

|

| 166 |

+

.mypy_cache/

|

| 167 |

+

.dmypy.json

|

| 168 |

+

dmypy.json

|

| 169 |

+

|

| 170 |

+

# Pyre type checker

|

| 171 |

+

.pyre/

|

| 172 |

+

|

| 173 |

+

# pytype static type analyzer

|

| 174 |

+

.pytype/

|

| 175 |

+

|

| 176 |

+

# Cython debug symbols

|

| 177 |

+

cython_debug/

|

| 178 |

+

|

| 179 |

+

# PyCharm

|

| 180 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 181 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 182 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 183 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 184 |

+

#.idea/

|

.pre-commit-config.yaml

ADDED

|

@@ -0,0 +1,62 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

repos:

|

| 2 |

+

- repo: https://github.com/pre-commit/pre-commit-hooks

|

| 3 |

+

rev: v5.0.0

|

| 4 |

+

hooks:

|

| 5 |

+

- id: trailing-whitespace

|

| 6 |

+

name: (Common) Remove trailing whitespaces

|

| 7 |

+

- id: mixed-line-ending

|

| 8 |

+

name: (Common) Fix mixed line ending

|

| 9 |

+

args: [--fix=lf]

|

| 10 |

+

- id: end-of-file-fixer

|

| 11 |

+

name: (Common) Remove extra EOF newlines

|

| 12 |

+

- id: check-merge-conflict

|

| 13 |

+

name: (Common) Check for merge conflicts

|

| 14 |

+

- id: requirements-txt-fixer

|

| 15 |

+

name: (Common) Sort "requirements.txt"

|

| 16 |

+

- id: fix-encoding-pragma

|

| 17 |

+

name: (Python) Remove encoding pragmas

|

| 18 |

+

args: [--remove]

|

| 19 |

+

# - id: debug-statements

|

| 20 |

+

# name: (Python) Check for debugger imports

|

| 21 |

+

- id: check-json

|

| 22 |

+

name: (JSON) Check syntax

|

| 23 |

+

- id: check-yaml

|

| 24 |

+

name: (YAML) Check syntax

|

| 25 |

+

- id: check-toml

|

| 26 |

+

name: (TOML) Check syntax

|

| 27 |

+

# - repo: https://github.com/shellcheck-py/shellcheck-py

|

| 28 |

+

# rev: v0.10.0.1

|

| 29 |

+

# hooks:

|

| 30 |

+

# - id: shellcheck

|

| 31 |

+

- repo: https://github.com/google/yamlfmt

|

| 32 |

+

rev: v0.13.0

|

| 33 |

+

hooks:

|

| 34 |

+

- id: yamlfmt

|

| 35 |

+

- repo: https://github.com/executablebooks/mdformat

|

| 36 |

+

rev: 0.7.16

|

| 37 |

+

hooks:

|

| 38 |

+

- id: mdformat

|

| 39 |

+

name: (Markdown) Format docs with mdformat

|

| 40 |

+

- repo: https://github.com/asottile/pyupgrade

|

| 41 |

+

rev: v3.2.2

|

| 42 |

+

hooks:

|

| 43 |

+

- id: pyupgrade

|

| 44 |

+

name: (Python) Update syntax for newer versions

|

| 45 |

+

args: [--py37-plus]

|

| 46 |

+

- repo: https://github.com/psf/black

|

| 47 |

+

rev: 22.10.0

|

| 48 |

+

hooks:

|

| 49 |

+

- id: black

|

| 50 |

+

name: (Python) Format code with black

|

| 51 |

+

- repo: https://github.com/pycqa/isort

|

| 52 |

+

rev: 5.12.0

|

| 53 |

+

hooks:

|

| 54 |

+

- id: isort

|

| 55 |

+

name: (Python) Sort imports with isort

|

| 56 |

+

- repo: https://github.com/pre-commit/mirrors-clang-format

|

| 57 |

+

rev: v15.0.4

|

| 58 |

+

hooks:

|

| 59 |

+

- id: clang-format

|

| 60 |

+

name: (C/C++/CUDA) Format code with clang-format

|

| 61 |

+

args: [-style=google, -i]

|

| 62 |

+

types_or: [c, c++, cuda]

|

CITATION.bib

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

@misc{xie2024sana,

|

| 2 |

+

title={Sana: Efficient High-Resolution Image Synthesis with Linear Diffusion Transformer},

|

| 3 |

+

author={Enze Xie and Junsong Chen and Junyu Chen and Han Cai and Haotian Tang and Yujun Lin and Zhekai Zhang and Muyang Li and Ligeng Zhu and Yao Lu and Song Han},

|

| 4 |

+

year={2024},

|

| 5 |

+

eprint={2410.10629},

|

| 6 |

+

archivePrefix={arXiv},

|

| 7 |

+

primaryClass={cs.CV},

|

| 8 |

+

url={https://arxiv.org/abs/2410.10629},

|

| 9 |

+

}

|

CIs/add_license_all.sh

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#/bin/bash

|

| 2 |

+

addlicense -s -c 'NVIDIA CORPORATION & AFFILIATES' -ignore "**/*__init__.py" **/*.py

|

Dockerfile

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM nvcr.io/nvidia/pytorch:24.06-py3

|

| 2 |

+

|

| 3 |

+

ENV PATH=/opt/conda/bin:$PATH

|

| 4 |

+

|

| 5 |

+

RUN apt-get update && apt-get install -y \

|

| 6 |

+

libgl1-mesa-glx \

|

| 7 |

+

libglib2.0-0 \

|

| 8 |

+

&& rm -rf /var/lib/apt/lists/*

|

| 9 |

+

|

| 10 |

+

WORKDIR /app

|

| 11 |

+

|

| 12 |

+

RUN curl https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -o ~/miniconda.sh \

|

| 13 |

+

&& sh ~/miniconda.sh -b -p /opt/conda \

|

| 14 |

+

&& rm ~/miniconda.sh

|

| 15 |

+

|

| 16 |

+

COPY pyproject.toml pyproject.toml

|

| 17 |

+

COPY diffusion diffusion

|

| 18 |

+

COPY configs configs

|

| 19 |

+

COPY sana sana

|

| 20 |

+

COPY app app

|

| 21 |

+

COPY tools tools

|

| 22 |

+

|

| 23 |

+

COPY environment_setup.sh environment_setup.sh

|

| 24 |

+

RUN ./environment_setup.sh

|

| 25 |

+

|

| 26 |

+

CMD ["python", "-u", "-W", "ignore", "app/app_sana.py", "--share", "--config=configs/sana_config/1024ms/Sana_1600M_img1024.yaml", "--model_path=hf://Efficient-Large-Model/Sana_1600M_1024px/checkpoints/Sana_1600M_1024px.pth"]

|

LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright 2024 Nvidia

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

README.md

CHANGED

|

@@ -1,12 +1,401 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

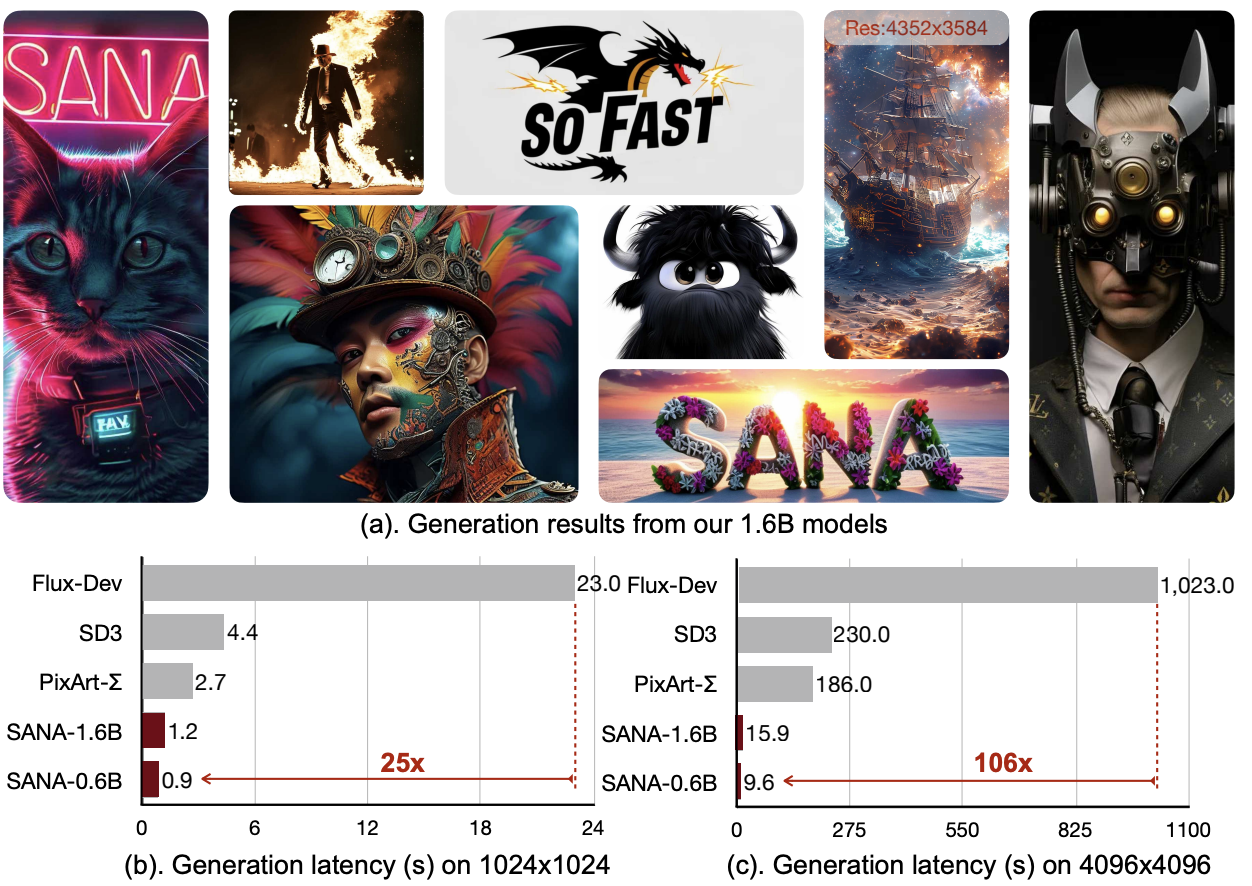

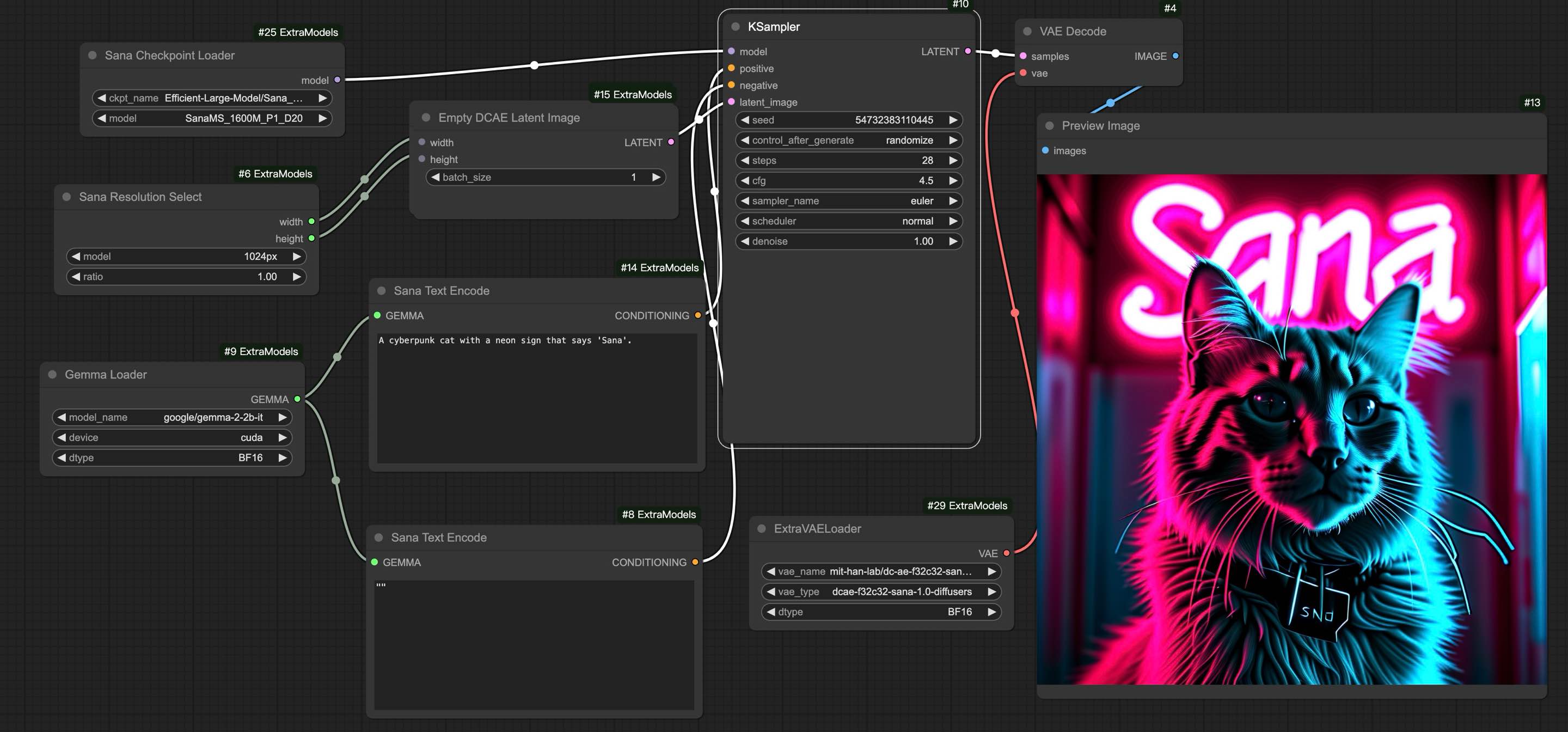

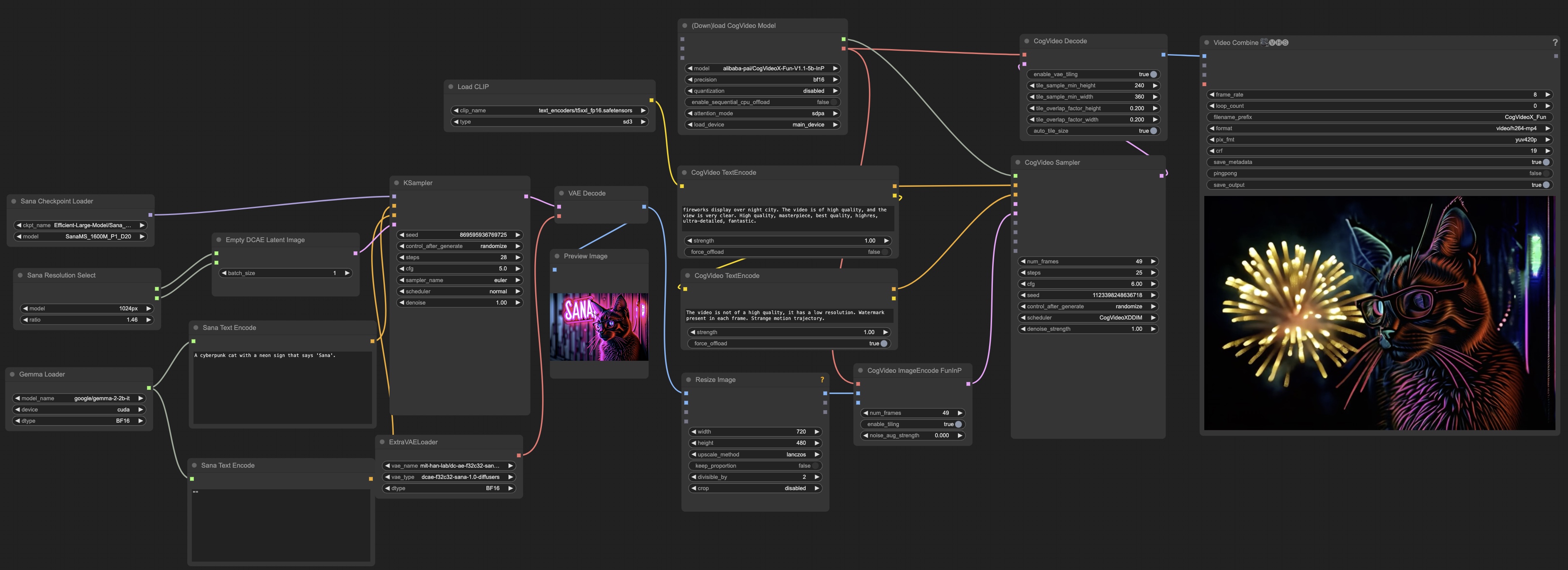

| 1 |

+

<p align="center" style="border-radius: 10px">

|

| 2 |

+

<img src="asset/logo.png" width="35%" alt="logo"/>

|

| 3 |

+

</p>

|

| 4 |

+

|

| 5 |

+

# ⚡️Sana: Efficient High-Resolution Image Synthesis with Linear Diffusion Transformer

|

| 6 |

+

|

| 7 |

+

### <div align="center"> ICLR 2025 Oral Presentation <div>

|

| 8 |

+

|

| 9 |

+

<div align="center">

|

| 10 |

+

<a href="https://nvlabs.github.io/Sana/"><img src="https://img.shields.io/static/v1?label=Project&message=Github&color=blue&logo=github-pages"></a>

|

| 11 |

+

<a href="https://hanlab.mit.edu/projects/sana/"><img src="https://img.shields.io/static/v1?label=Page&message=MIT&color=darkred&logo=github-pages"></a>

|

| 12 |

+

<a href="https://arxiv.org/abs/2410.10629"><img src="https://img.shields.io/static/v1?label=Arxiv&message=Sana&color=red&logo=arxiv"></a>

|

| 13 |

+

<a href="https://nv-sana.mit.edu/"><img src="https://img.shields.io/static/v1?label=Demo:6x3090&message=MIT&color=yellow"></a>

|

| 14 |

+

<a href="https://nv-sana.mit.edu/4bit/"><img src="https://img.shields.io/static/v1?label=Demo:1x3090&message=4bit&color=yellow"></a>

|

| 15 |

+

<a href="https://nv-sana.mit.edu/ctrlnet/"><img src="https://img.shields.io/static/v1?label=Demo:1x3090&message=ControlNet&color=yellow"></a>

|

| 16 |

+

<a href="https://replicate.com/chenxwh/sana"><img src="https://img.shields.io/static/v1?label=API:H100&message=Replicate&color=pink"></a>

|

| 17 |

+

<a href="https://discord.gg/rde6eaE5Ta"><img src="https://img.shields.io/static/v1?label=Discuss&message=Discord&color=purple&logo=discord"></a>

|

| 18 |

+

</div>

|

| 19 |

+

|

| 20 |

+

<p align="center" border-radius="10px">

|

| 21 |

+

<img src="asset/Sana.jpg" width="90%" alt="teaser_page1"/>

|

| 22 |

+

</p>

|

| 23 |

+

|

| 24 |

+

## 💡 Introduction

|

| 25 |

+

|

| 26 |

+

We introduce Sana, a text-to-image framework that can efficiently generate images up to 4096 × 4096 resolution.

|

| 27 |

+

Sana can synthesize high-resolution, high-quality images with strong text-image alignment at a remarkably fast speed, deployable on laptop GPU.

|

| 28 |

+

Core designs include:

|

| 29 |

+

|

| 30 |

+

(1) [**DC-AE**](https://hanlab.mit.edu/projects/dc-ae): unlike traditional AEs, which compress images only 8×, we trained an AE that can compress images 32×, effectively reducing the number of latent tokens. \

|

| 31 |

+

(2) **Linear DiT**: we replace all vanilla attention in DiT with linear attention, which is more efficient at high resolutions without sacrificing quality. \

|

| 32 |

+

(3) **Decoder-only text encoder**: we replaced T5 with a modern decoder-only small LLM as the text encoder and designed complex human instruction with in-context learning to enhance the image-text alignment. \

|

| 33 |

+

(4) **Efficient training and sampling**: we propose **Flow-DPM-Solver** to reduce sampling steps, with efficient caption labeling and selection to accelerate convergence.

|

| 34 |

+

|

| 35 |

+

As a result, Sana-0.6B is very competitive with modern giant diffusion models (e.g. Flux-12B), being 20 times smaller and 100+ times faster in measured throughput. Moreover, Sana-0.6B can be deployed on a 16GB laptop GPU, taking less than 1 second to generate a 1024 × 1024 resolution image. Sana enables content creation at low cost.

|

| 36 |

+

|

| 37 |

+

<p align="center" border-raduis="10px">

|

| 38 |

+

<img src="asset/model-incremental.jpg" width="90%" alt="teaser_page2"/>

|

| 39 |

+

</p>

|

| 40 |

+

|

| 41 |

+

## 🔥🔥 News

|

| 42 |

+

|

| 43 |

+

- (🔥 New) \[2025/2/10\] 🚀Sana + ControlNet is released. [\[Guidance\]](asset/docs/sana_controlnet.md) | [\[Model\]](asset/docs/model_zoo.md) | [\[Demo\]](https://nv-sana.mit.edu/ctrlnet/)

|

| 44 |

+

- (🔥 New) \[2025/1/30\] Release CAME-8bit optimizer code. Saving more GPU memory during training. [\[How to config\]](https://github.com/NVlabs/Sana/blob/main/configs/sana_config/1024ms/Sana_1600M_img1024_CAME8bit.yaml#L86)

|

| 45 |

+

- (🔥 New) \[2025/1/29\] 🎉 🎉 🎉**SANA 1.5 is out! Figure out how to do efficient training & inference scaling!** 🚀[\[Tech Report\]](https://arxiv.org/abs/2501.18427)

|

| 46 |

+

- (🔥 New) \[2025/1/24\] 4bit-Sana is released, powered by [SVDQuant and Nunchaku](https://github.com/mit-han-lab/nunchaku) inference engine. Now run your Sana within **8GB** GPU VRAM [\[Guidance\]](asset/docs/4bit_sana.md) [\[Demo\]](https://svdquant.mit.edu/) [\[Model\]](asset/docs/model_zoo.md)

|

| 47 |

+

- (🔥 New) \[2025/1/24\] DCAE-1.1 is released, better reconstruction quality. [\[Model\]](https://huggingface.co/mit-han-lab/dc-ae-f32c32-sana-1.1) [\[diffusers\]](https://huggingface.co/mit-han-lab/dc-ae-f32c32-sana-1.1-diffusers)

|

| 48 |

+

- (🔥 New) \[2025/1/23\] **Sana is accepted as Oral by ICLR-2025.** 🎉🎉🎉

|

| 49 |

+

|

| 50 |

+

______________________________________________________________________

|

| 51 |

+

|

| 52 |

+

- (🔥 New) \[2025/1/12\] DC-AE tiling makes Sana-4K inferences 4096x4096px images within 22GB GPU memory. With model offload and 8bit/4bit quantize. The 4K Sana run within **8GB** GPU VRAM. [\[Guidance\]](asset/docs/model_zoo.md#-3-4k-models)

|

| 53 |

+

- (🔥 New) \[2025/1/11\] Sana code-base license changed to Apache 2.0.

|

| 54 |

+

- (🔥 New) \[2025/1/10\] Inference Sana with 8bit quantization.[\[Guidance\]](asset/docs/8bit_sana.md#quantization)

|

| 55 |

+

- (🔥 New) \[2025/1/8\] 4K resolution [Sana models](asset/docs/model_zoo.md) is supported in [Sana-ComfyUI](https://github.com/Efficient-Large-Model/ComfyUI_ExtraModels) and [work flow](asset/docs/ComfyUI/Sana_FlowEuler_4K.json) is also prepared. [\[4K guidance\]](asset/docs/ComfyUI/comfyui.md)

|

| 56 |

+