
---

title: README

emoji: 📉

colorFrom: yellow

colorTo: pink

sdk: static

pinned: false

---

<h1 align="center"> Learning Adaptive Reasoning Search in Language </h1>

<p align="center">

<a href="https://www.jiayipan.com/" style="text-decoration: none;">Jiayi Pan</a><sup>*</sup>,

<a href="https://xiuyuli.com/" style="text-decoration: none;">Xiuyu Li</a><sup>*</sup>,

<a href="https://tonylian.com/" style="text-decoration: none;">Long Lian</a><sup>*</sup>,

<a href="https://sea-snell.github.io/" style="text-decoration: none;">Charlie Victor Snell</a>,

<a href="https://yifeizhou02.github.io/" style="text-decoration: none;">Yifei Zhou</a>,<br>

<a href="https://www.adamyala.org/" style="text-decoration: none;">Adam Yala</a>,

<a href="https://people.eecs.berkeley.edu/~trevor/" style="text-decoration: none;">Trevor Darrell</a>,

<a href="https://people.eecs.berkeley.edu/~keutzer/" style="text-decoration: none;">Kurt Keutzer</a>,

<a href="https://www.alanesuhr.com/" style="text-decoration: none;">Alane Suhr</a>

</p>

<p align="center">

UC Berkeley and UCSF <sup>*</sup> Equal Contribution

</p>

<p align="center">

<a href="TODO">📃 Paper</a>

•

<a href="https://github.com/Parallel-Reasoning/APR" >💻 Code</a>

•

<a href="https://huggingface.co/Parallel-Reasoning" >🤗 Data & Models</a>

</p>

**TL;DR**:

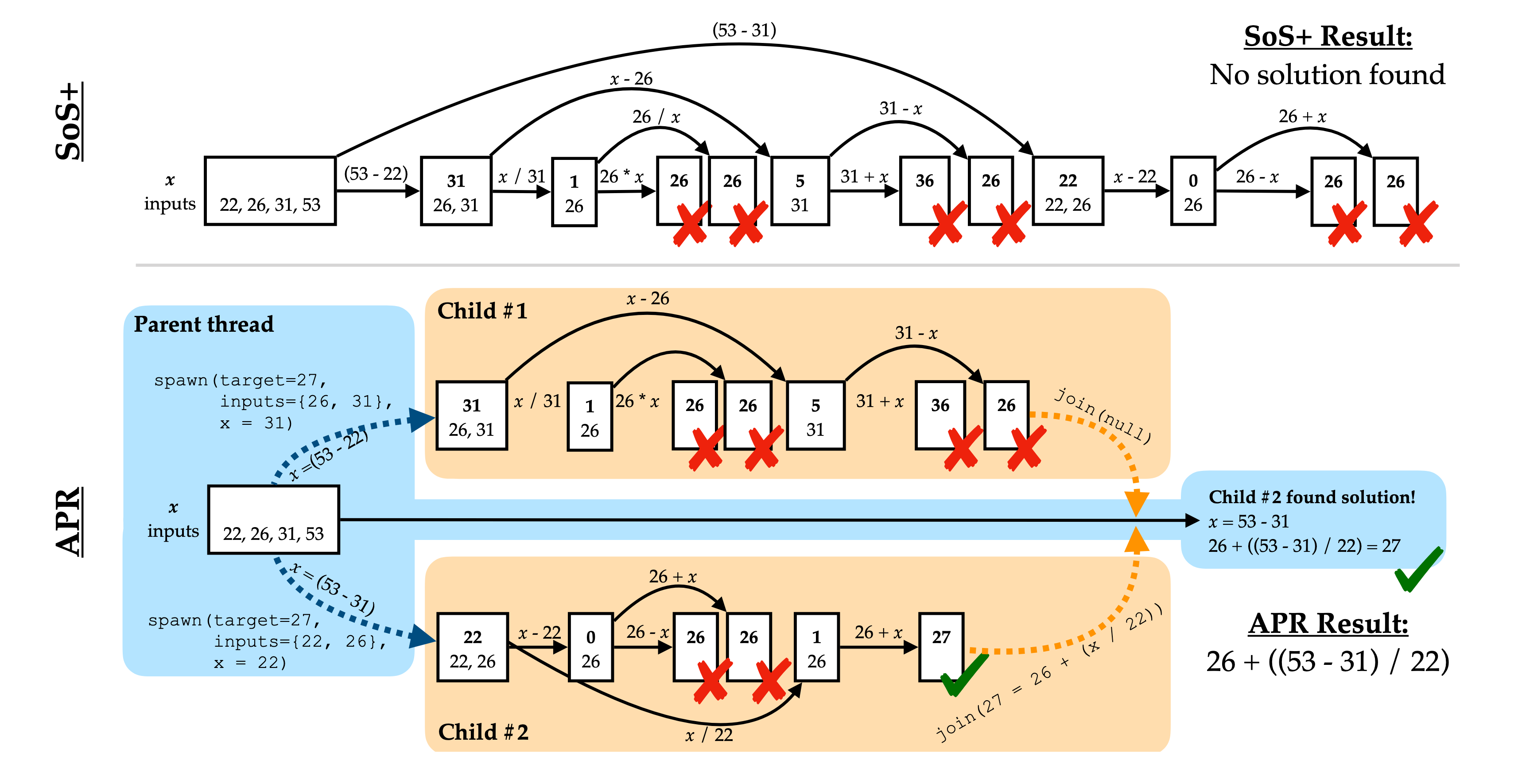

We present Adaptive Parallel Reasoning (APR), a novel framework that enables language models to orchestrate both serial and parallel computation. APR trains language models to use `spawn()` and `join()` operations through end-to-end supervised training and reinforcement learning, so that the model itself decides how to structure its computational workflow.

APR efficiently distributes compute, reduces latency, overcomes context window limits, and achieves state‑of‑the‑art performance on complex reasoning tasks (e.g., 83.4% vs. 60.0% accuracy at 4K context on Countdown).
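
To make the `spawn()` / `join()` pattern concrete, here is a minimal toy sketch in plain Python. It is not the APR implementation: in APR the language model itself learns when to issue these operations, whereas here a hand-written exhaustive search stands in for each child's reasoning and Python threads stand in for parallel inference (so this shows the control flow, not a real speedup). It assumes a Countdown-style puzzle in which every number must be used exactly once, and the helper names `candidates`, `expand`, `solve`, and `parent` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import combinations


def candidates(a, b):
    """Return (value, step) pairs for combining two numbers with + * - /."""
    hi, lo = max(a, b), min(a, b)
    out = [(hi + lo, f"{hi}+{lo}={hi + lo}"), (hi * lo, f"{hi}*{lo}={hi * lo}")]
    if hi > lo:
        out.append((hi - lo, f"{hi}-{lo}={hi - lo}"))
    if lo != 0 and hi % lo == 0:
        out.append((hi // lo, f"{hi}/{lo}={hi // lo}"))
    return out


def expand(nums):
    """List every (next_state, step) reachable by combining one pair of numbers."""
    children = []
    for i, j in combinations(range(len(nums)), 2):
        rest = [n for k, n in enumerate(nums) if k not in (i, j)]
        for value, step in candidates(nums[i], nums[j]):
            children.append((rest + [value], step))
    return children


def solve(nums, target):
    """Serial depth-first search (one child's work); returns steps or None."""
    if len(nums) == 1:
        return [] if nums[0] == target else None
    for state, step in expand(nums):
        found = solve(state, target)
        if found is not None:
            return [step] + found
    return None


def spawn(pool, states, target):
    """Hypothetical spawn(): start one child search per sub-state."""
    return [pool.submit(solve, state, target) for state in states]


def join(futures):
    """Hypothetical join(): wait for all children and collect their results."""
    return [f.result() for f in futures]


def parent(nums, target):
    """Parent: split on the first step, delegate the rest, merge at join."""
    children = expand(nums)
    if not children:  # nothing to split on; fall back to the serial search
        return solve(nums, target)
    with ThreadPoolExecutor(max_workers=len(children)) as pool:
        results = join(spawn(pool, [state for state, _ in children], target))
    for (_, step), result in zip(children, results):
        if result is not None:
            return [step] + result
    return None
```

Calling `parent([1, 2, 3, 4], 24)` returns a list of three arithmetic steps that ends at 24, and `parent([1, 1], 5)` returns `None`. The parent only ever sees each child's final result rather than its full search trace, which is the property that lets this style of delegation keep the parent's context short.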