Upload 31 files

- .dockerignore +16 -0

- .gitattributes +37 -36

- Dockerfile +53 -0

- Dockerfile.cuda12.4 +53 -0

- LICENSE +7 -0

- README.md +11 -11

- advanced.png +0 -0

- app-launch.sh +5 -0

- app.py +1119 -0

- docker-compose.yml +28 -0

- flags.png +0 -0

- icon.png +0 -0

- install.js +96 -0

- models.yaml +27 -0

- models/.gitkeep +0 -0

- models/clip/.gitkeep +0 -0

- models/unet/.gitkeep +0 -0

- models/vae/.gitkeep +0 -0

- outputs/.gitkeep +0 -0

- pinokio.js +95 -0

- pinokio_meta.json +39 -0

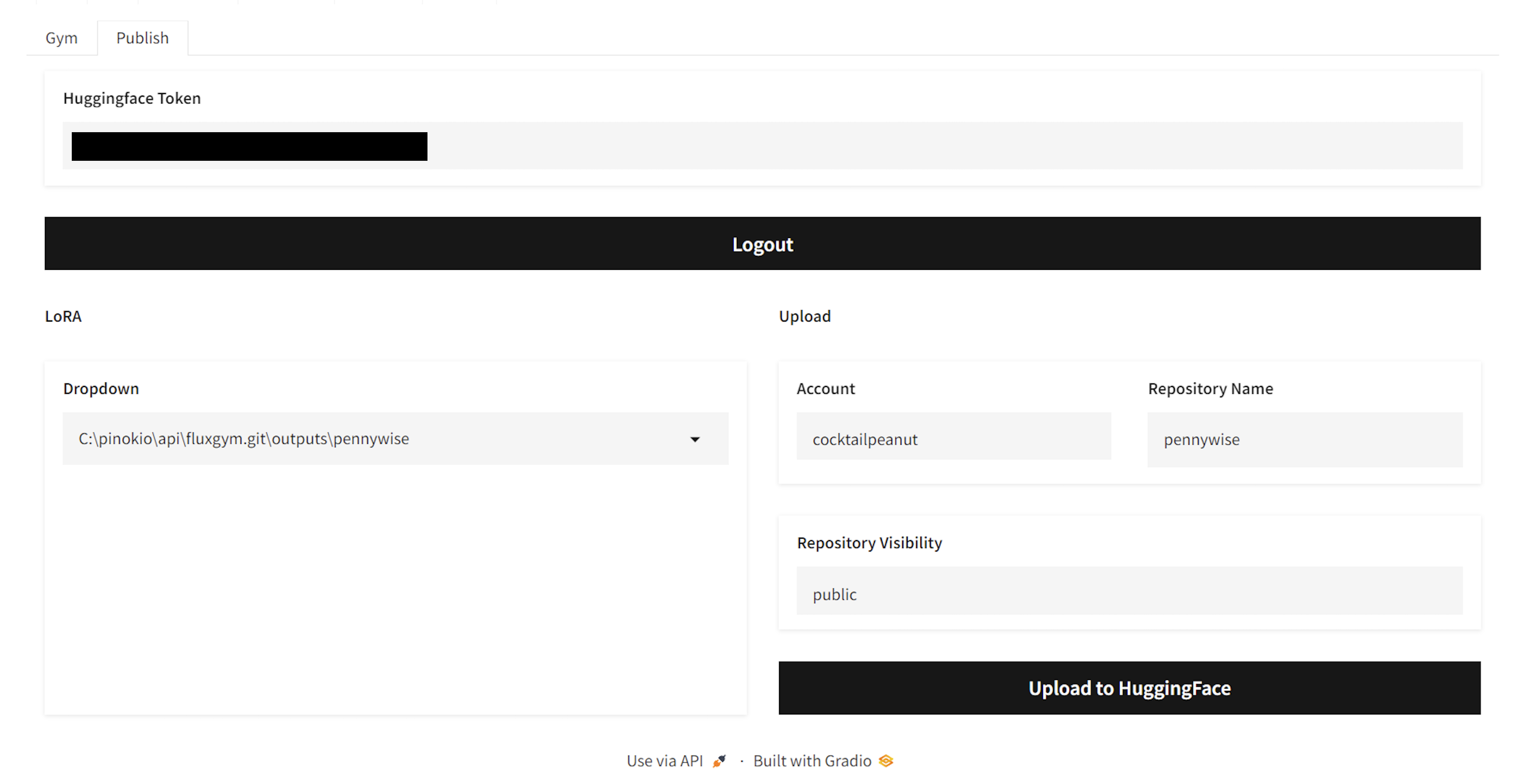

- publish_to_hf.png +0 -0

- requirements.txt +35 -0

- reset.js +13 -0

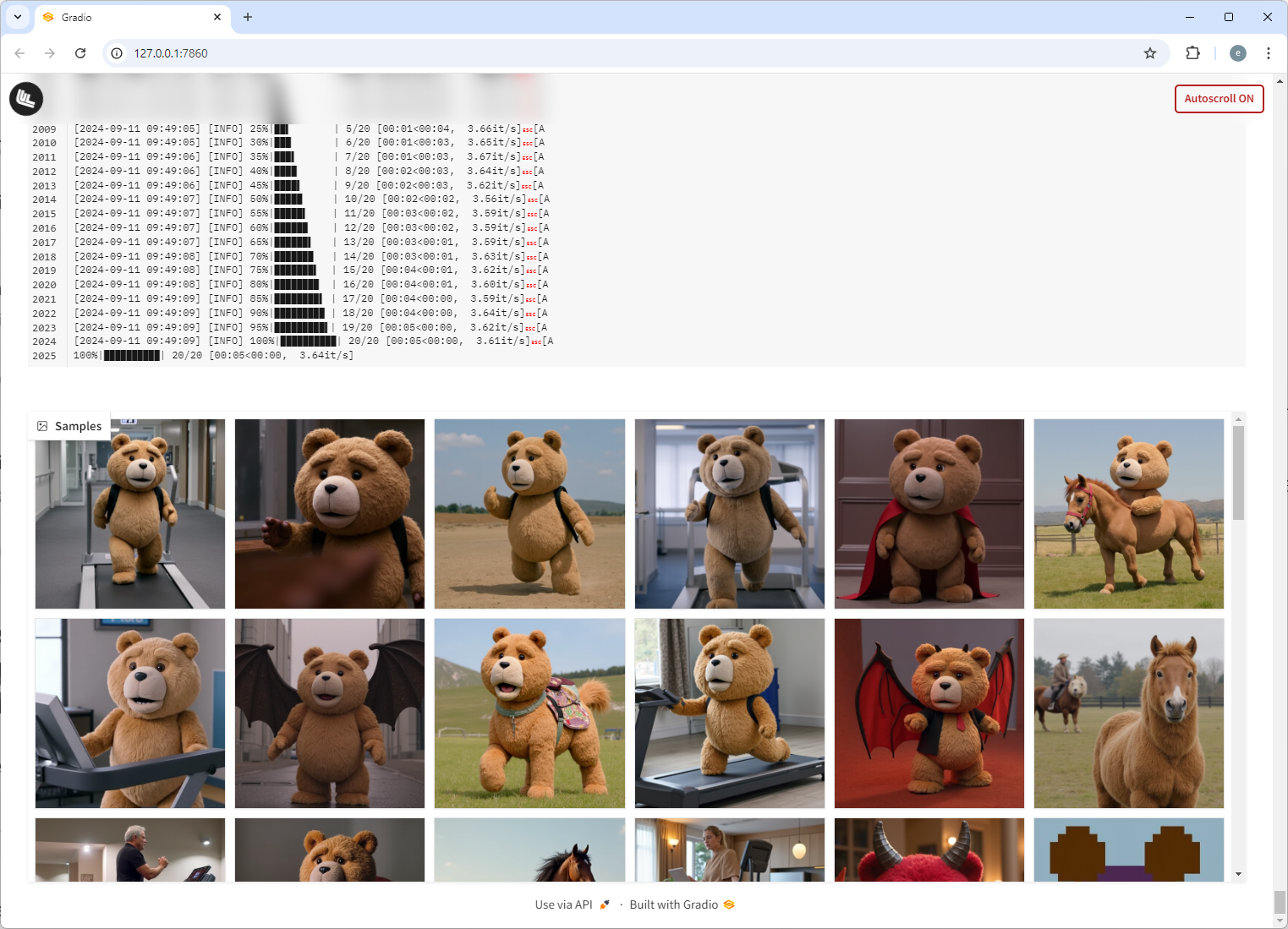

- sample.png +3 -0

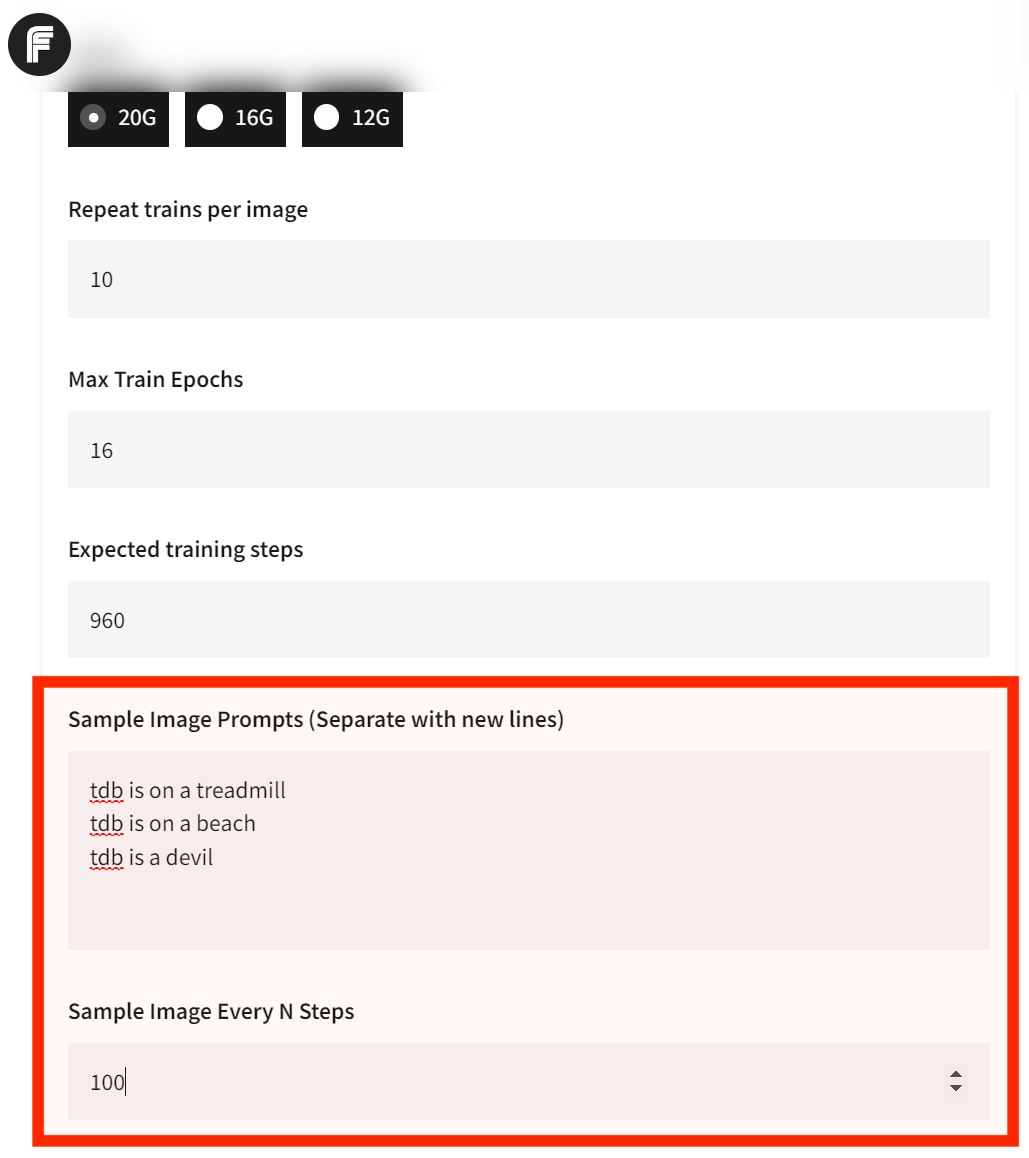

- sample_fields.png +0 -0

- screenshot.png +0 -0

- seed.gif +3 -0

- start.js +37 -0

- torch.js +75 -0

- update.js +46 -0

.dockerignore

ADDED

@@ -0,0 +1,16 @@
+.cache/
+cudnn_windows/
+bitsandbytes_windows/
+bitsandbytes_windows_deprecated/
+dataset/
+__pycache__/
+venv/
+**/.hadolint.yml
+**/*.log
+**/.git
+**/.gitignore
+**/.env
+**/.github
+**/.vscode
+**/*.ps1
+sd-scripts/

.gitattributes

CHANGED

@@ -1,36 +1,37 @@
-*.7z filter=lfs diff=lfs merge=lfs -text
-*.arrow filter=lfs diff=lfs merge=lfs -text
-*.bin filter=lfs diff=lfs merge=lfs -text
-*.bz2 filter=lfs diff=lfs merge=lfs -text
-*.ckpt filter=lfs diff=lfs merge=lfs -text
-*.ftz filter=lfs diff=lfs merge=lfs -text
-*.gz filter=lfs diff=lfs merge=lfs -text
-*.h5 filter=lfs diff=lfs merge=lfs -text
-*.joblib filter=lfs diff=lfs merge=lfs -text
-*.lfs.* filter=lfs diff=lfs merge=lfs -text
-*.mlmodel filter=lfs diff=lfs merge=lfs -text
-*.model filter=lfs diff=lfs merge=lfs -text
-*.msgpack filter=lfs diff=lfs merge=lfs -text
-*.npy filter=lfs diff=lfs merge=lfs -text
-*.npz filter=lfs diff=lfs merge=lfs -text
-*.onnx filter=lfs diff=lfs merge=lfs -text
-*.ot filter=lfs diff=lfs merge=lfs -text
-*.parquet filter=lfs diff=lfs merge=lfs -text
-*.pb filter=lfs diff=lfs merge=lfs -text
-*.pickle filter=lfs diff=lfs merge=lfs -text
-*.pkl filter=lfs diff=lfs merge=lfs -text
-*.pt filter=lfs diff=lfs merge=lfs -text
-*.pth filter=lfs diff=lfs merge=lfs -text
-*.rar filter=lfs diff=lfs merge=lfs -text
-*.safetensors filter=lfs diff=lfs merge=lfs -text
-saved_model/**/* filter=lfs diff=lfs merge=lfs -text
-*.tar.* filter=lfs diff=lfs merge=lfs -text
-*.tar filter=lfs diff=lfs merge=lfs -text
-*.tflite filter=lfs diff=lfs merge=lfs -text
-*.tgz filter=lfs diff=lfs merge=lfs -text
-*.wasm filter=lfs diff=lfs merge=lfs -text
-*.xz filter=lfs diff=lfs merge=lfs -text
-*.zip filter=lfs diff=lfs merge=lfs -text
-*.zst filter=lfs diff=lfs merge=lfs -text
-*tfevents* filter=lfs diff=lfs merge=lfs -text
-
+*.7z filter=lfs diff=lfs merge=lfs -text
+*.arrow filter=lfs diff=lfs merge=lfs -text
+*.bin filter=lfs diff=lfs merge=lfs -text
+*.bz2 filter=lfs diff=lfs merge=lfs -text
+*.ckpt filter=lfs diff=lfs merge=lfs -text
+*.ftz filter=lfs diff=lfs merge=lfs -text
+*.gz filter=lfs diff=lfs merge=lfs -text
+*.h5 filter=lfs diff=lfs merge=lfs -text
+*.joblib filter=lfs diff=lfs merge=lfs -text
+*.lfs.* filter=lfs diff=lfs merge=lfs -text
+*.mlmodel filter=lfs diff=lfs merge=lfs -text
+*.model filter=lfs diff=lfs merge=lfs -text
+*.msgpack filter=lfs diff=lfs merge=lfs -text
+*.npy filter=lfs diff=lfs merge=lfs -text
+*.npz filter=lfs diff=lfs merge=lfs -text
+*.onnx filter=lfs diff=lfs merge=lfs -text
+*.ot filter=lfs diff=lfs merge=lfs -text
+*.parquet filter=lfs diff=lfs merge=lfs -text
+*.pb filter=lfs diff=lfs merge=lfs -text
+*.pickle filter=lfs diff=lfs merge=lfs -text
+*.pkl filter=lfs diff=lfs merge=lfs -text
+*.pt filter=lfs diff=lfs merge=lfs -text
+*.pth filter=lfs diff=lfs merge=lfs -text
+*.rar filter=lfs diff=lfs merge=lfs -text
+*.safetensors filter=lfs diff=lfs merge=lfs -text
+saved_model/**/* filter=lfs diff=lfs merge=lfs -text
+*.tar.* filter=lfs diff=lfs merge=lfs -text
+*.tar filter=lfs diff=lfs merge=lfs -text
+*.tflite filter=lfs diff=lfs merge=lfs -text
+*.tgz filter=lfs diff=lfs merge=lfs -text
+*.wasm filter=lfs diff=lfs merge=lfs -text
+*.xz filter=lfs diff=lfs merge=lfs -text
+*.zip filter=lfs diff=lfs merge=lfs -text
+*.zst filter=lfs diff=lfs merge=lfs -text
+*tfevents* filter=lfs diff=lfs merge=lfs -text
+sample.png filter=lfs diff=lfs merge=lfs -text
+seed.gif filter=lfs diff=lfs merge=lfs -text

Dockerfile

ADDED

@@ -0,0 +1,53 @@
+# Base image with CUDA 12.2
+FROM nvidia/cuda:12.2.2-base-ubuntu22.04
+
+# Install pip if not already installed
+RUN apt-get update -y && apt-get install -y \
+    python3-pip \
+    python3-dev \
+    git \
+    build-essential # Install dependencies for building extensions
+
+# Define environment variables for UID and GID and local timezone
+ENV PUID=${PUID:-1000}
+ENV PGID=${PGID:-1000}
+
+# Create a group with the specified GID
+RUN groupadd -g "${PGID}" appuser
+# Create a user with the specified UID and GID
+RUN useradd -m -s /bin/sh -u "${PUID}" -g "${PGID}" appuser
+
+WORKDIR /app
+
+# Get sd-scripts from kohya-ss and install them
+RUN git clone -b sd3 https://github.com/kohya-ss/sd-scripts && \
+    cd sd-scripts && \
+    pip install --no-cache-dir -r ./requirements.txt
+
+# Install main application dependencies
+COPY ./requirements.txt ./requirements.txt
+RUN pip install --no-cache-dir -r ./requirements.txt
+
+# Install Torch, Torchvision, and Torchaudio for CUDA 12.2
+RUN pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu122/torch_stable.html
+
+RUN chown -R appuser:appuser /app
+
+# delete redundant requirements.txt and sd-scripts directory within the container
+RUN rm -r ./sd-scripts
+RUN rm ./requirements.txt
+
+#Run application as non-root
+USER appuser
+
+# Copy fluxgym application code
+COPY . ./fluxgym
+
+EXPOSE 7860
+
+ENV GRADIO_SERVER_NAME="0.0.0.0"
+
+WORKDIR /app/fluxgym
+
+# Run fluxgym Python application
+CMD ["python3", "./app.py"]

Dockerfile.cuda12.4

ADDED

@@ -0,0 +1,53 @@
+# Base image with CUDA 12.4
+FROM nvidia/cuda:12.4.1-base-ubuntu22.04
+
+# Install pip if not already installed
+RUN apt-get update -y && apt-get install -y \
+    python3-pip \
+    python3-dev \
+    git \
+    build-essential # Install dependencies for building extensions
+
+# Define environment variables for UID and GID and local timezone
+ENV PUID=${PUID:-1000}
+ENV PGID=${PGID:-1000}
+
+# Create a group with the specified GID
+RUN groupadd -g "${PGID}" appuser
+# Create a user with the specified UID and GID
+RUN useradd -m -s /bin/sh -u "${PUID}" -g "${PGID}" appuser
+
+WORKDIR /app
+
+# Get sd-scripts from kohya-ss and install them
+RUN git clone -b sd3 https://github.com/kohya-ss/sd-scripts && \
+    cd sd-scripts && \
+    pip install --no-cache-dir -r ./requirements.txt
+
+# Install main application dependencies
+COPY ./requirements.txt ./requirements.txt
+RUN pip install --no-cache-dir -r ./requirements.txt
+
+# Install Torch, Torchvision, and Torchaudio for CUDA 12.4
+RUN pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124
+
+RUN chown -R appuser:appuser /app
+
+# delete redundant requirements.txt and sd-scripts directory within the container
+RUN rm -r ./sd-scripts
+RUN rm ./requirements.txt
+
+#Run application as non-root
+USER appuser
+
+# Copy fluxgym application code
+COPY . ./fluxgym
+
+EXPOSE 7860
+
+ENV GRADIO_SERVER_NAME="0.0.0.0"
+
+WORKDIR /app/fluxgym
+
+# Run fluxgym Python application
+CMD ["python3", "./app.py"]

LICENSE

ADDED

@@ -0,0 +1,7 @@
+Copyright 2024 cocktailpeanut
+
+Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

README.md

CHANGED

@@ -1,11 +1,11 @@
----
-title: FLuxGym
-emoji: 🏋️
-colorFrom: green
-colorTo: blue
-sdk: docker
-app_port: 7860
-pinned: True
----
-
-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
+---
+title: FLuxGym
+emoji: 🏋️
+colorFrom: green
+colorTo: blue
+sdk: docker
+app_port: 7860
+pinned: True
+---
+
+Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

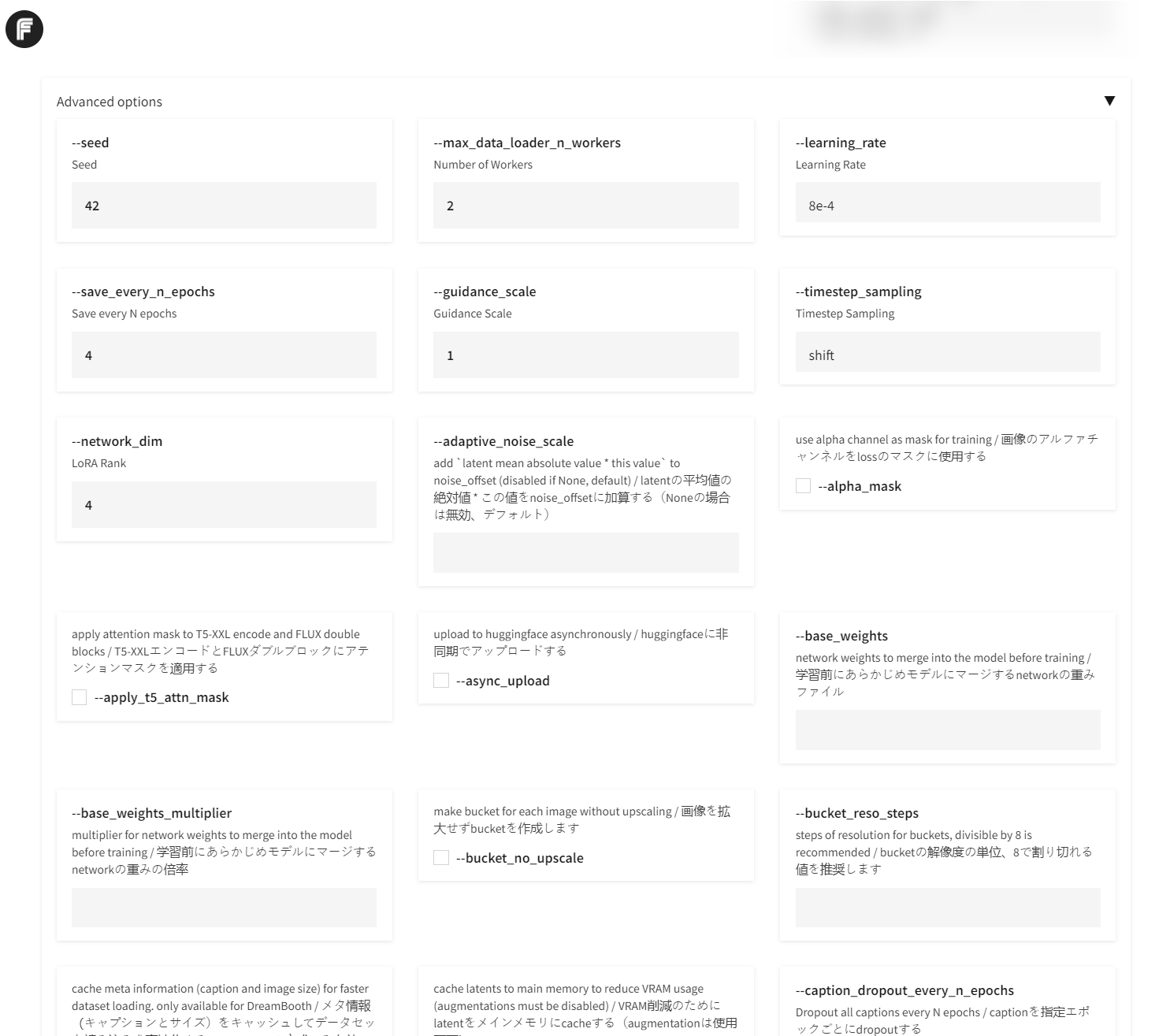

advanced.png

ADDED

app-launch.sh

ADDED

@@ -0,0 +1,5 @@
+#!/usr/bin/env bash
+
+cd "`dirname "$0"`" || exit 1
+. env/bin/activate
+python app.py

app.py

ADDED

@@ -0,0 +1,1119 @@
+import os
+import sys
+os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"
+os.environ['GRADIO_ANALYTICS_ENABLED'] = '0'
+sys.path.insert(0, os.getcwd())
+sys.path.append(os.path.join(os.path.dirname(__file__), 'sd-scripts'))
+import subprocess
+import gradio as gr
+from PIL import Image
+import torch
+import uuid
+import shutil
+import json
+import yaml
+from slugify import slugify
+from transformers import AutoProcessor, AutoModelForCausalLM
+from gradio_logsview import LogsView, LogsViewRunner
+from huggingface_hub import hf_hub_download, HfApi
+from library import flux_train_utils, huggingface_util
+from argparse import Namespace
+import train_network
+import toml
+import re
+MAX_IMAGES = 150
+
+with open('models.yaml', 'r') as file:
+    models = yaml.safe_load(file)
+

+def readme(base_model, lora_name, instance_prompt, sample_prompts):
+
+    # model license
+    model_config = models[base_model]
+    model_file = model_config["file"]
+    base_model_name = model_config["base"]
+    license = None
+    license_name = None
+    license_link = None
+    license_items = []
+    if "license" in model_config:
+        license = model_config["license"]
+        license_items.append(f"license: {license}")
+    if "license_name" in model_config:
+        license_name = model_config["license_name"]
+        license_items.append(f"license_name: {license_name}")
+    if "license_link" in model_config:
+        license_link = model_config["license_link"]
+        license_items.append(f"license_link: {license_link}")
+    license_str = "\n".join(license_items)
+    print(f"license_items={license_items}")
+    print(f"license_str = {license_str}")
+
+    # tags
+    tags = [ "text-to-image", "flux", "lora", "diffusers", "template:sd-lora", "fluxgym" ]
+
+    # widgets
+    widgets = []
+    sample_image_paths = []
+    output_name = slugify(lora_name)
+    samples_dir = resolve_path_without_quotes(f"outputs/{output_name}/sample")
+    try:
+        for filename in os.listdir(samples_dir):
+            # Filename Schema: [name]_[steps]_[index]_[timestamp].png
+            match = re.search(r"_(\d+)_(\d+)_(\d+)\.png$", filename)
+            if match:
+                steps, index, timestamp = int(match.group(1)), int(match.group(2)), int(match.group(3))
+                sample_image_paths.append((steps, index, f"sample/{filename}"))
+
+        # Sort by numeric index
+        sample_image_paths.sort(key=lambda x: x[0], reverse=True)
+
+        final_sample_image_paths = sample_image_paths[:len(sample_prompts)]
+        final_sample_image_paths.sort(key=lambda x: x[1])
+        for i, prompt in enumerate(sample_prompts):
+            _, _, image_path = final_sample_image_paths[i]
+            widgets.append(
+                {
+                    "text": prompt,
+                    "output": {
+                        "url": image_path
+                    },
+                }
+            )
+    except:
+        print(f"no samples")
+    dtype = "torch.bfloat16"
+    # Construct the README content
+    readme_content = f"""---
+tags:
+{yaml.dump(tags, indent=4).strip()}
+{"widget:" if os.path.isdir(samples_dir) else ""}
+{yaml.dump(widgets, indent=4).strip() if widgets else ""}
+base_model: {base_model_name}
+{"instance_prompt: " + instance_prompt if instance_prompt else ""}
+{license_str}
+---
+
+# {lora_name}
+
+A Flux LoRA trained on a local computer with [Fluxgym](https://github.com/cocktailpeanut/fluxgym)
+
+<Gallery />
+
+## Trigger words
+
+{"You should use `" + instance_prompt + "` to trigger the image generation." if instance_prompt else "No trigger words defined."}
+
+## Download model and use it with ComfyUI, AUTOMATIC1111, SD.Next, Invoke AI, Forge, etc.
+
+Weights for this model are available in Safetensors format.
+
+"""
+    return readme_content

|
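Note on the sample selection in `readme()`: the comment in the diff says "Sort by numeric index", but the first sort is by step count in descending order; only the second sort orders by prompt index. The sketch below isolates that selection step so it can be read on its own. It is not part of the commit: the helper name `pick_latest_samples` and the example filenames are hypothetical, and only the regular expression and the two-stage sort are taken from the diff.

```python
import re

# Same pattern as in readme(): [name]_[steps]_[index]_[timestamp].png
SAMPLE_RE = re.compile(r"_(\d+)_(\d+)_(\d+)\.png$")


def pick_latest_samples(filenames, n_prompts):
    """Return the n_prompts samples with the highest step counts,
    ordered by prompt index (hypothetical helper, not in app.py)."""
    parsed = []
    for name in filenames:
        m = SAMPLE_RE.search(name)
        if m:
            steps, index = int(m.group(1)), int(m.group(2))
            parsed.append((steps, index, f"sample/{name}"))
    # Highest step count first, i.e. the most recent training checkpoint.
    parsed.sort(key=lambda x: x[0], reverse=True)
    latest = parsed[:n_prompts]
    # Then restore prompt order within the selected samples.
    latest.sort(key=lambda x: x[1])
    return [path for _, _, path in latest]
```

Unlike the loop in the diff, this version returns fewer paths when fewer samples exist than prompts. In `readme()` the same situation raises an `IndexError` inside the `try` block, which the bare `except:` reports as "no samples".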

+
+def account_hf():
+    try:
+        with open("HF_TOKEN", "r") as file:
+            token = file.read()
+            api = HfApi(token=token)
+            try:
+                account = api.whoami()
+                return { "token": token, "account": account['name'] }
+            except:
+                return None
+    except:
+        return None
+
+"""
+hf_logout.click(fn=logout_hf, outputs=[hf_token, hf_login, hf_logout, repo_owner])
+"""
+def logout_hf():
+    os.remove("HF_TOKEN")
+    global current_account
+    current_account = account_hf()
+    print(f"current_account={current_account}")
+    return gr.update(value=""), gr.update(visible=True), gr.update(visible=False), gr.update(value="", visible=False)
+
+
+"""
+hf_login.click(fn=login_hf, inputs=[hf_token], outputs=[hf_token, hf_login, hf_logout, repo_owner])
+"""
+def login_hf(hf_token):
+    api = HfApi(token=hf_token)
+    try:
+        account = api.whoami()
+        if account != None:
+            if "name" in account:
+                with open("HF_TOKEN", "w") as file:
+                    file.write(hf_token)
+                global current_account
+                current_account = account_hf()
+                return gr.update(visible=True), gr.update(visible=False), gr.update(visible=True), gr.update(value=current_account["account"], visible=True)
+        return gr.update(), gr.update(), gr.update(), gr.update()
+    except:
+        print(f"incorrect hf_token")
+        return gr.update(), gr.update(), gr.update(), gr.update()

|
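Note on the `HF_TOKEN` file: `login_hf()` writes the token to a file in the working directory and `account_hf()` reads it back. The sketch below shows that round trip only, with no network call. It is not part of the commit: `save_token` and `load_token` are hypothetical names, and stripping whitespace and catching only `FileNotFoundError` are assumptions about what a stricter version might do, whereas the diff writes the token as given and uses bare `except:` clauses.

```python
def save_token(path, token):
    """Write the token and close the file before anything reads it back
    (hypothetical helper, not in app.py)."""
    with open(path, "w") as f:
        f.write(token.strip())


def load_token(path):
    """Return the stored token, or None if the file is missing or empty
    (hypothetical helper, not in app.py)."""
    try:
        with open(path, "r") as f:
            token = f.read().strip()
    except FileNotFoundError:
        return None
    return token or None
```

Closing the file before reading matters because the written text may still sit in a buffer while the `with` block is open.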

| 156 |

+

|

| 157 |

+

def upload_hf(base_model, lora_rows, repo_owner, repo_name, repo_visibility, hf_token):

|

| 158 |

+

src = lora_rows

|

| 159 |

+

repo_id = f"{repo_owner}/{repo_name}"

|

| 160 |

+

gr.Info(f"Uploading to Huggingface. Please Stand by...", duration=None)

|

| 161 |

+

args = Namespace(

|

| 162 |

+

huggingface_repo_id=repo_id,

|

| 163 |

+

huggingface_repo_type="model",

|

| 164 |

+

huggingface_repo_visibility=repo_visibility,

|

| 165 |

+

huggingface_path_in_repo="",

|

| 166 |

+

huggingface_token=hf_token,

|

| 167 |

+

async_upload=False

|

| 168 |

+

)

|

| 169 |

+

print(f"upload_hf args={args}")

|

| 170 |

+

huggingface_util.upload(args=args, src=src)

|

| 171 |

+

gr.Info(f"[Upload Complete] https://huggingface.co/{repo_id}", duration=None)

|

| 172 |

+

|

| 173 |

+

def load_captioning(uploaded_files, concept_sentence):

|

| 174 |

+

uploaded_images = [file for file in uploaded_files if not file.endswith('.txt')]

|

| 175 |

+

txt_files = [file for file in uploaded_files if file.endswith('.txt')]

|

| 176 |

+

txt_files_dict = {os.path.splitext(os.path.basename(txt_file))[0]: txt_file for txt_file in txt_files}

|

| 177 |

+

updates = []

|

| 178 |

+

if len(uploaded_images) <= 1:

|

| 179 |

+

raise gr.Error(

|

| 180 |

+

"Please upload at least 2 images to train your model (the ideal number with default settings is between 4-30)"

|

| 181 |

+

)

|

| 182 |

+

elif len(uploaded_images) > MAX_IMAGES:

|

| 183 |

+

raise gr.Error(f"For now, only {MAX_IMAGES} or fewer images are allowed for training")

|

| 184 |

+

# Update for the captioning_area

|

| 185 |

+

# for _ in range(3):

|

| 186 |

+

updates.append(gr.update(visible=True))

|

| 187 |

+

# Update visibility and image for each captioning row and image

|

| 188 |

+

for i in range(1, MAX_IMAGES + 1):

|

| 189 |

+

# Determine if the current row and image should be visible

|

| 190 |

+

visible = i <= len(uploaded_images)

|

| 191 |

+

|

| 192 |

+

# Update visibility of the captioning row

|

| 193 |

+

updates.append(gr.update(visible=visible))

|

| 194 |

+

|

| 195 |

+

# Update for image component - display image if available, otherwise hide

|

| 196 |

+

image_value = uploaded_images[i - 1] if visible else None

|

| 197 |

+

updates.append(gr.update(value=image_value, visible=visible))

|

| 198 |

+

|

| 199 |

+

corresponding_caption = False

|

| 200 |

+

if image_value:

|

| 201 |

+

base_name = os.path.splitext(os.path.basename(image_value))[0]

|

| 202 |

+

if base_name in txt_files_dict:

|

| 203 |

+

with open(txt_files_dict[base_name], 'r') as file:

|

| 204 |

+

corresponding_caption = file.read()

|

| 205 |

+

|

| 206 |

+

# Update value of captioning area

|

| 207 |

+

text_value = corresponding_caption if visible and corresponding_caption else concept_sentence if visible and concept_sentence else None

|

| 208 |

+

updates.append(gr.update(value=text_value, visible=visible))

|

| 209 |

+

|

| 210 |

+

# Update for the sample caption area

|

| 211 |

+

updates.append(gr.update(visible=True))

|

| 212 |

+

updates.append(gr.update(visible=True))

|

| 213 |

+

|

| 214 |

+

return updates

|

| 215 |

+
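The caption lookup in `load_captioning` pairs each uploaded image with a `.txt` file that shares its base name. A minimal sketch of that pairing step on its own, assuming plain string paths; the helper name `pair_captions` and the example paths are hypothetical:

```python
import os

def pair_captions(paths):
    """Map each image path to the .txt path with the same base name, or None."""
    images = [p for p in paths if not p.endswith(".txt")]
    captions = {
        os.path.splitext(os.path.basename(p))[0]: p
        for p in paths
        if p.endswith(".txt")
    }
    return {
        img: captions.get(os.path.splitext(os.path.basename(img))[0])
        for img in images
    }

pairs = pair_captions(["/tmp/a.png", "/tmp/a.txt", "/tmp/b.jpg"])
print(pairs)
```

Matching is by base name only, so two images that differ only in extension (for example `a.png` and `a.jpg`) would resolve to the same caption file.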

|

| 216 |

+

def hide_captioning():

|

| 217 |

+

return gr.update(visible=False), gr.update(visible=False)

|

| 218 |

+

|

| 219 |

+

def resize_image(image_path, output_path, size):

|

| 220 |

+

with Image.open(image_path) as img:

|

| 221 |

+

width, height = img.size

|

| 222 |

+

if width < height:

|

| 223 |

+

new_width = size

|

| 224 |

+

new_height = int((size/width) * height)

|

| 225 |

+

else:

|

| 226 |

+

new_height = size

|

| 227 |

+

new_width = int((size/height) * width)

|

| 228 |

+

print(f"resize {image_path} : {new_width}x{new_height}")

|

| 229 |

+

img_resized = img.resize((new_width, new_height), Image.Resampling.LANCZOS)

|

| 230 |

+

img_resized.save(output_path)

|

| 231 |

+
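The size arithmetic in `resize_image` scales the shorter side to `size` and derives the longer side from the aspect ratio. The sketch below isolates that calculation so it can be checked without Pillow; the function name `scaled_dimensions` is hypothetical.

```python
def scaled_dimensions(width, height, size):
    """Return (new_width, new_height) with the shorter side equal to `size`."""
    if width < height:
        # Portrait: width is the shorter side.
        return size, int((size / width) * height)
    # Landscape or square: height is treated as the shorter side.
    return int((size / height) * width), size

print(scaled_dimensions(1024, 2048, 512))
print(scaled_dimensions(2048, 1024, 512))
```

Note that `int()` truncates rather than rounds, so the derived side can come out one pixel short when the ratio does not divide evenly.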

|

| 232 |

+

def create_dataset(destination_folder, size, *inputs):

|

| 233 |

+

print("Creating dataset")

|

| 234 |

+

images = inputs[0]

|

| 235 |

+

if not os.path.exists(destination_folder):

|

| 236 |

+

os.makedirs(destination_folder)

|

| 237 |

+

|

| 238 |

+

for index, image in enumerate(images):

|

| 239 |

+

# copy the images to the datasets folder

|

| 240 |

+

new_image_path = shutil.copy(image, destination_folder)

|

| 241 |

+

|

| 242 |

+

# if it's a caption text file skip the next bit

|

| 243 |

+

ext = os.path.splitext(new_image_path)[-1].lower()

|

| 244 |

+

if ext == '.txt':

|

| 245 |

+

continue

|

| 246 |

+

|

| 247 |

+

# resize the images

|

| 248 |

+

resize_image(new_image_path, new_image_path, size)

|

| 249 |

+

|

| 250 |

+

# copy the captions

|

| 251 |

+

|

| 252 |

+

original_caption = inputs[index + 1]

|

| 253 |

+

|

| 254 |

+

image_file_name = os.path.basename(new_image_path)

|

| 255 |

+

caption_file_name = os.path.splitext(image_file_name)[0] + ".txt"

|

| 256 |

+

caption_path = resolve_path_without_quotes(os.path.join(destination_folder, caption_file_name))

|

| 257 |

+

print(f"image_path={new_image_path}, caption_path = {caption_path}, original_caption={original_caption}")

|

| 258 |

+

# if caption_path exists, do not write

|

| 259 |

+

if os.path.exists(caption_path):

|

| 260 |

+

print(f"{caption_path} already exists. use the existing .txt file")

|

| 261 |

+

else:

|

| 262 |

+

print(f"{caption_path} create a .txt caption file")

|

| 263 |

+

with open(caption_path, 'w') as file:

|

| 264 |

+

file.write(original_caption)

|

| 265 |

+

|

| 266 |

+

print(f"destination_folder {destination_folder}")

|

| 267 |

+

return destination_folder

|

| 268 |

+
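`create_dataset` writes a caption file next to each image only when one does not already exist, so an uploaded `.txt` takes precedence over the text box value. A minimal sketch of that rule, assuming the image already sits in the destination folder; the helper name and the file names are hypothetical:

```python
import os
import tempfile

def write_caption_if_missing(image_path, caption):
    """Write <image base name>.txt beside the image unless it already exists.

    Returns (caption_path, written) where `written` says whether a file was created.
    """
    caption_path = os.path.splitext(image_path)[0] + ".txt"
    if os.path.exists(caption_path):
        return caption_path, False
    with open(caption_path, "w") as f:
        f.write(caption)
    return caption_path, True

with tempfile.TemporaryDirectory() as folder:
    image_path = os.path.join(folder, "cat.png")
    first = write_caption_if_missing(image_path, "a cat")
    second = write_caption_if_missing(image_path, "a different caption")
    with open(first[0]) as f:
        kept = f.read()

print(first[1], second[1], kept)
```

The second call leaves the first caption untouched, which mirrors the "already exists" branch in the function above.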

|

| 269 |

+

|

| 270 |

+

def run_captioning(images, concept_sentence, *captions):

|

| 271 |

+

print(f"run_captioning")

|

| 272 |

+

print(f"concept sentence {concept_sentence}")

|

| 273 |

+

print(f"captions {captions}")

|

| 274 |

+

# Load the captioning model inside this function so it does not hold resources during training

|

| 275 |

+

device = "cuda" if torch.cuda.is_available() else "cpu"

|

| 276 |

+

print(f"device={device}")

|

| 277 |

+

torch_dtype = torch.float16

|

| 278 |

+

model = AutoModelForCausalLM.from_pretrained(

|

| 279 |

+

"multimodalart/Florence-2-large-no-flash-attn", torch_dtype=torch_dtype, trust_remote_code=True

|

| 280 |

+

).to(device)

|

| 281 |

+

processor = AutoProcessor.from_pretrained("multimodalart/Florence-2-large-no-flash-attn", trust_remote_code=True)

|

| 282 |

+

|

| 283 |

+

captions = list(captions)

|

| 284 |

+

for i, image_path in enumerate(images):

|

| 285 |

+

print(captions[i])

|

| 286 |

+

if isinstance(image_path, str): # If image is a file path

|

| 287 |

+

image = Image.open(image_path).convert("RGB")

|

| 288 |

+

|

| 289 |

+

prompt = "<DETAILED_CAPTION>"

|

| 290 |

+

inputs = processor(text=prompt, images=image, return_tensors="pt").to(device, torch_dtype)

|

| 291 |

+

print(f"inputs {inputs}")

|

| 292 |

+

|

| 293 |

+

generated_ids = model.generate(

|

| 294 |

+

input_ids=inputs["input_ids"], pixel_values=inputs["pixel_values"], max_new_tokens=1024, num_beams=3

|

| 295 |

+

)

|

| 296 |

+

print(f"generated_ids {generated_ids}")

|

| 297 |

+

|

| 298 |

+

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

|

| 299 |

+

print(f"generated_text: {generated_text}")

|

| 300 |

+

parsed_answer = processor.post_process_generation(

|

| 301 |

+

generated_text, task=prompt, image_size=(image.width, image.height)

|

| 302 |

+

)

|

| 303 |

+

print(f"parsed_answer = {parsed_answer}")

|

| 304 |

+

caption_text = parsed_answer["<DETAILED_CAPTION>"].replace("The image shows ", "")

|

| 305 |

+

print(f"caption_text = {caption_text}, concept_sentence={concept_sentence}")

|

| 306 |

+

if concept_sentence:

|

| 307 |

+

caption_text = f"{concept_sentence} {caption_text}"

|

| 308 |

+

captions[i] = caption_text

|

| 309 |

+

|

| 310 |

+

yield captions

|

| 311 |

+

model.to("cpu")

|

| 312 |

+

del model

|

| 313 |

+

del processor

|

| 314 |

+

if torch.cuda.is_available():

|

| 315 |

+

torch.cuda.empty_cache()

|

| 316 |

+
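The text handling at the end of the captioning loop can be read in isolation: the leading "The image shows " is removed and the concept sentence, if any, is prepended. A small sketch of just that step; the function name `build_caption` and the sample strings are hypothetical.

```python
def build_caption(raw_caption, concept_sentence=""):
    """Strip the boilerplate lead-in and prepend the optional concept sentence."""
    text = raw_caption.replace("The image shows ", "")
    if concept_sentence:
        text = f"{concept_sentence} {text}"
    return text

print(build_caption("The image shows a dog on a beach.", "p3r5on"))
print(build_caption("The image shows a dog on a beach."))
```

As in the original, `str.replace` removes every occurrence of the phrase, not only one at the start of the caption.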

|

| 317 |

+

def recursive_update(d, u):

|

| 318 |

+

for k, v in u.items():

|

| 319 |

+

if isinstance(v, dict) and v:

|

| 320 |

+

d[k] = recursive_update(d.get(k, {}), v)

|

| 321 |

+

else:

|

| 322 |

+

d[k] = v

|

| 323 |

+

return d

|

| 324 |

+
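`recursive_update` is a deep merge: nested dictionaries are merged key by key, while any other value (including an empty dictionary) overwrites the target. The example below repeats the function as shown above with indentation restored and runs it on made-up configuration values.

```python
def recursive_update(d, u):
    for k, v in u.items():
        if isinstance(v, dict) and v:
            # Non-empty dict: merge into the existing sub-dict (or a new one).
            d[k] = recursive_update(d.get(k, {}), v)
        else:
            # Scalars, lists, and empty dicts replace the old value outright.
            d[k] = v
    return d

# Hypothetical values, for illustration only.
base = {"optimizer": {"type": "adamw8bit", "lr": 1e-4}, "seed": 42}
override = {"optimizer": {"lr": 8e-4}, "seed": 7}
merged = recursive_update(base, override)
print(merged)
```

The merge mutates and returns `d`, so `merged` and `base` are the same object here; pass a copy if the original must be preserved.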

|

| 325 |

+

def download(base_model):

|

| 326 |

+

model = models[base_model]

|

| 327 |

+

model_file = model["file"]

|

| 328 |

+

repo = model["repo"]

|

| 329 |

+

|

| 330 |

+

# download unet

|

| 331 |

+

if base_model in ("flux-dev", "flux-schnell"):

|

| 332 |

+

unet_folder = "models/unet"

|

| 333 |

+

else:

|

| 334 |

+

unet_folder = f"models/unet/{repo}"

|

| 335 |

+

unet_path = os.path.join(unet_folder, model_file)

|

| 336 |

+

if not os.path.exists(unet_path):

|

| 337 |

+

os.makedirs(unet_folder, exist_ok=True)

|

| 338 |

+

gr.Info(f"Downloading base model: {base_model}. Please wait. (You can check the terminal for the download progress)", duration=None)

|

| 339 |

+

print(f"download {base_model}")

|

| 340 |

+

hf_hub_download(repo_id=repo, local_dir=unet_folder, filename=model_file)

|

| 341 |

+

|

| 342 |

+

# download vae

|

| 343 |

+

vae_folder = "models/vae"

|

| 344 |

+

vae_path = os.path.join(vae_folder, "ae.sft")

|

| 345 |

+

if not os.path.exists(vae_path):

|

| 346 |

+

os.makedirs(vae_folder, exist_ok=True)

|

| 347 |

+

gr.Info(f"Downloading vae")

|

| 348 |

+

print(f"downloading ae.sft...")

|

| 349 |

+

hf_hub_download(repo_id="cocktailpeanut/xulf-dev", local_dir=vae_folder, filename="ae.sft")

|

| 350 |

+

|

| 351 |

+

# download clip

|

| 352 |

+

clip_folder = "models/clip"

|

| 353 |

+

clip_l_path = os.path.join(clip_folder, "clip_l.safetensors")

|

| 354 |

+

if not os.path.exists(clip_l_path):

|

| 355 |

+

os.makedirs(clip_folder, exist_ok=True)

|

| 356 |

+

gr.Info(f"Downloading clip...")

|

| 357 |

+

print(f"download clip_l.safetensors")

|

| 358 |

+

hf_hub_download(repo_id="comfyanonymous/flux_text_encoders", local_dir=clip_folder, filename="clip_l.safetensors")

|

| 359 |

+

|

| 360 |

+

# download t5xxl

|

| 361 |

+

t5xxl_path = os.path.join(clip_folder, "t5xxl_fp16.safetensors")

|

| 362 |

+

if not os.path.exists(t5xxl_path):

|

| 363 |

+

print(f"download t5xxl_fp16.safetensors")

|

| 364 |

+

gr.Info(f"Downloading t5xxl...")

|

| 365 |

+

hf_hub_download(repo_id="comfyanonymous/flux_text_encoders", local_dir=clip_folder, filename="t5xxl_fp16.safetensors")

|

| 366 |

+
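`download` repeats one pattern four times: build the target path, and fetch the file only if it is not already on disk. A sketch of that pattern as a helper, with the network call replaced by a stub so it runs offline; `ensure_file` and `fake_fetch` are hypothetical names, and in the app the fetch step is `hf_hub_download`.

```python
import os
import tempfile

def ensure_file(folder, filename, fetch):
    """Call fetch(folder, filename) only when folder/filename is missing."""
    path = os.path.join(folder, filename)
    if not os.path.exists(path):
        os.makedirs(folder, exist_ok=True)
        fetch(folder, filename)
    return path

calls = []

def fake_fetch(folder, filename):
    # Stand-in for a real download: records the call and writes a stub file.
    calls.append(filename)
    with open(os.path.join(folder, filename), "w") as f:
        f.write("stub")

with tempfile.TemporaryDirectory() as root:
    vae_folder = os.path.join(root, "models", "vae")
    ensure_file(vae_folder, "ae.sft", fake_fetch)
    ensure_file(vae_folder, "ae.sft", fake_fetch)  # second call is a no-op

print(calls)
```

The existence check only looks at the file name, so a partially downloaded file would be treated as complete; that limitation applies to the check in `download` as well.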

|

| 367 |

+

|

| 368 |

+

def resolve_path(p):

|

| 369 |

+

current_dir = os.path.dirname(os.path.abspath(__file__))

|

| 370 |

+

norm_path = os.path.normpath(os.path.join(current_dir, p))

|

| 371 |

+

return f"\"{norm_path}\""

|

| 372 |

+

def resolve_path_without_quotes(p):

|

| 373 |

+

current_dir = os.path.dirname(os.path.abspath(__file__))

|

| 374 |

+

norm_path = os.path.normpath(os.path.join(current_dir, p))

|

| 375 |

+

return norm_path

|

| 376 |

+
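The two resolvers differ only in the surrounding double quotes: `resolve_path` returns a string meant to be pasted into a generated shell or batch command, where a path containing spaces must stay one argument, while `resolve_path_without_quotes` is for direct file-system calls. A sketch of the quoted variant with the base directory passed in explicitly; `quoted_path` is a hypothetical name, and `posixpath` is used instead of `os.path` only to keep the output identical on every platform.

```python
import posixpath

def quoted_path(base_dir, relative):
    """Join, normalize, and wrap the path in double quotes for use in a command line."""
    norm_path = posixpath.normpath(posixpath.join(base_dir, relative))
    return f'"{norm_path}"'

print(quoted_path("/opt/my app", "outputs/demo/sample_prompts.txt"))
print(quoted_path("/opt/my app", "outputs/tmp/../demo"))
```

A quoted result should not be handed to `open()` or `os.path.exists()`, since the quote characters would become part of the file name; that is why the unquoted variant exists.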

|

| 377 |

+

def gen_sh(

|

| 378 |

+

base_model,

|

| 379 |

+

output_name,

|

| 380 |

+

resolution,

|

| 381 |

+

seed,

|

| 382 |

+

workers,

|

| 383 |

+

learning_rate,

|

| 384 |

+

network_dim,

|

| 385 |

+

max_train_epochs,

|

| 386 |

+

save_every_n_epochs,

|

| 387 |

+

timestep_sampling,

|

| 388 |

+

guidance_scale,

|

| 389 |

+

vram,

|

| 390 |

+

sample_prompts,

|

| 391 |

+

sample_every_n_steps,

|

| 392 |

+

*advanced_components

|

| 393 |

+

):

|

| 394 |

+

|

| 395 |

+

print(f"gen_sh: network_dim:{network_dim}, max_train_epochs={max_train_epochs}, save_every_n_epochs={save_every_n_epochs}, timestep_sampling={timestep_sampling}, guidance_scale={guidance_scale}, vram={vram}, sample_prompts={sample_prompts}, sample_every_n_steps={sample_every_n_steps}")

|

| 396 |

+

|

| 397 |

+

output_dir = resolve_path(f"outputs/{output_name}")

|

| 398 |

+

sample_prompts_path = resolve_path(f"outputs/{output_name}/sample_prompts.txt")

|

| 399 |

+

|

| 400 |

+

line_break = "\\"

|

| 401 |

+

file_type = "sh"

|

| 402 |

+

if sys.platform == "win32":

|

| 403 |

+

line_break = "^"

|

| 404 |

+

file_type = "bat"

|

| 405 |

+

|

| 406 |

+

############# Sample args ########################

|

| 407 |

+

sample = ""

|

| 408 |

+

if len(sample_prompts) > 0 and sample_every_n_steps > 0:

|

| 409 |

+