Upload 18 files

- .gitattributes +1 -0

- app.py +108 -0

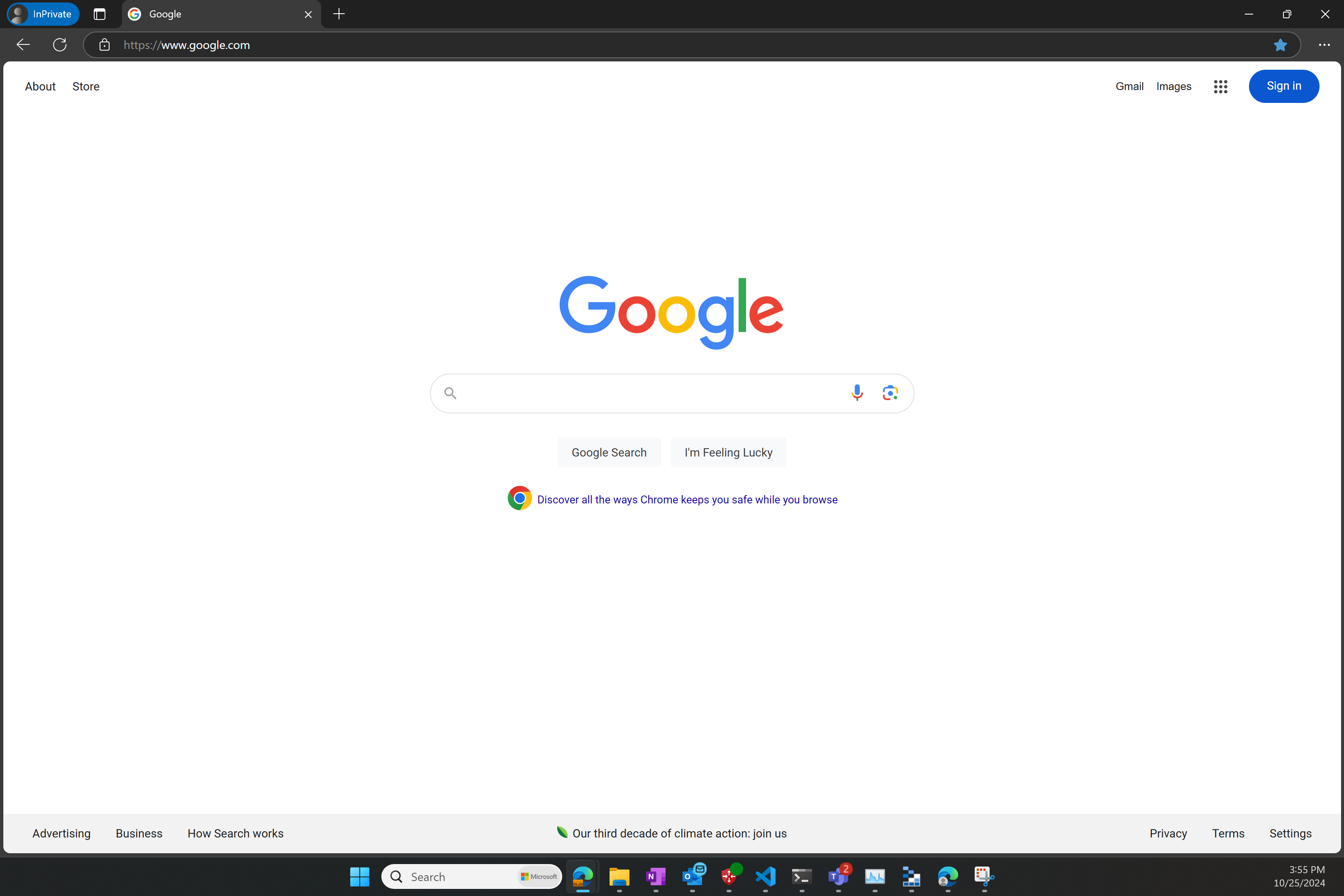

- imgs/google_page.png +0 -0

- imgs/logo.png +0 -0

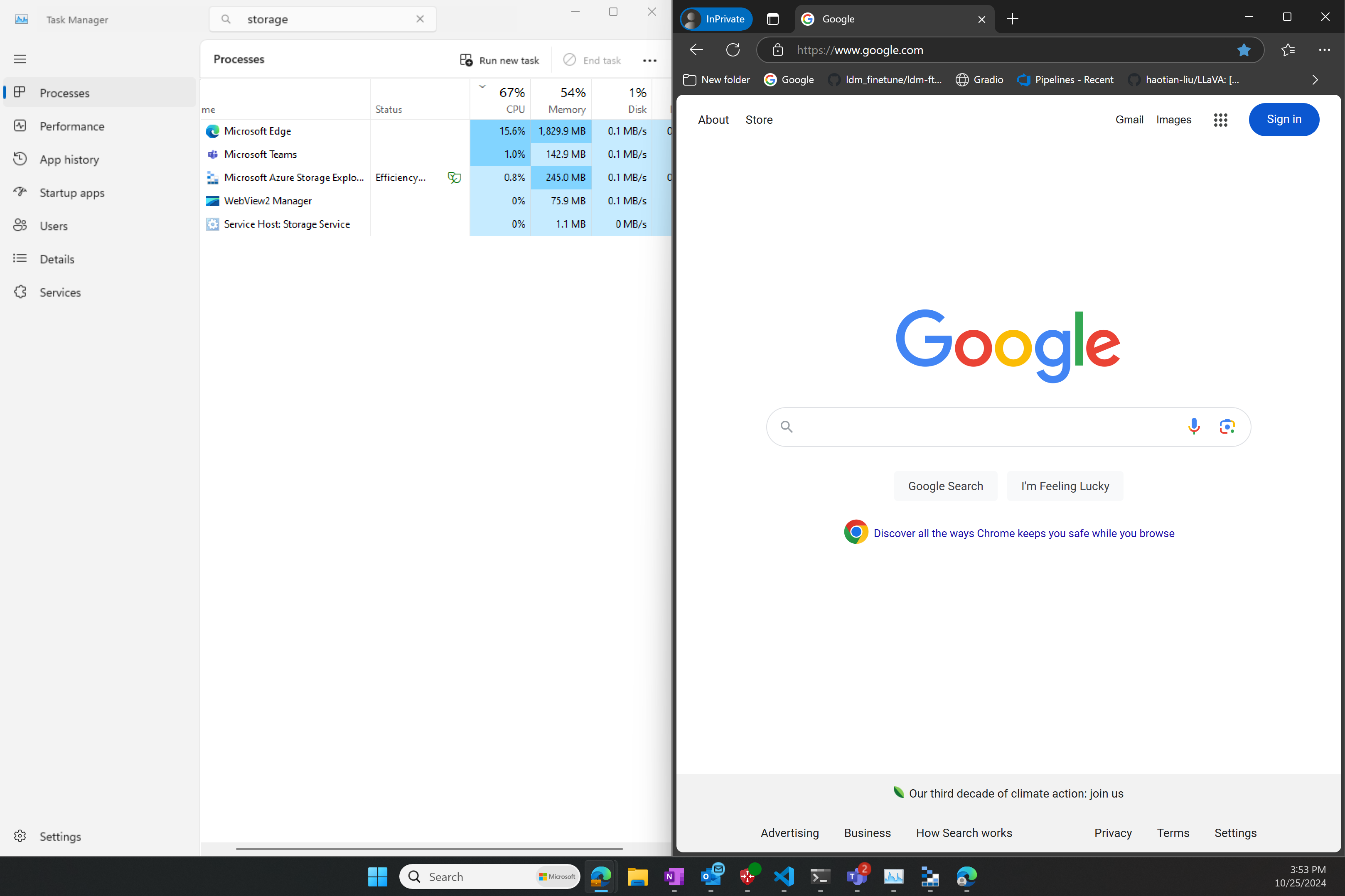

- imgs/saved_image_demo.png +0 -0

- imgs/windows_home.png +3 -0

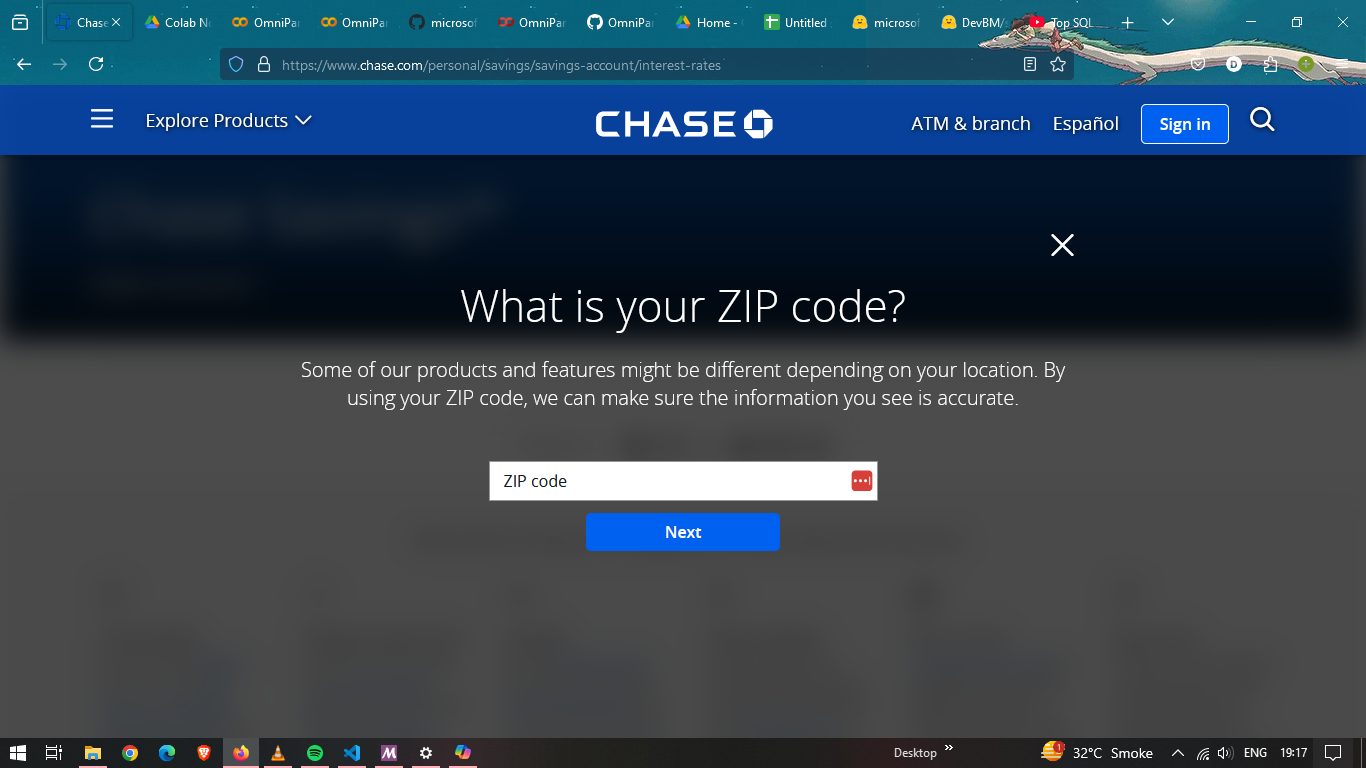

- imgs/windows_multitab.png +0 -0

- omniparser.py +60 -0

- requirements.txt +16 -0

- util/__init__.py +0 -0

- util/__pycache__/__init__.cpython-312.pyc +0 -0

- util/__pycache__/__init__.cpython-39.pyc +0 -0

- util/__pycache__/action_matching.cpython-39.pyc +0 -0

- util/__pycache__/box_annotator.cpython-312.pyc +0 -0

- util/__pycache__/box_annotator.cpython-39.pyc +0 -0

- util/action_matching.py +425 -0

- util/action_type.py +45 -0

- util/box_annotator.py +262 -0

- utils.py +402 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

imgs/windows_home.png filter=lfs diff=lfs merge=lfs -text

|

app.py

ADDED

|

@@ -0,0 +1,108 @@

| 1 |

+

from typing import Optional

|

| 2 |

+

|

| 3 |

+

import gradio as gr

|

| 4 |

+

import numpy as np

|

| 5 |

+

import torch

|

| 6 |

+

from PIL import Image

|

| 7 |

+

import io

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

import base64, os

|

| 11 |

+

from utils import check_ocr_box, get_yolo_model, get_caption_model_processor, get_som_labeled_img

|

| 12 |

+

import torch

|

| 13 |

+

from PIL import Image

|

| 14 |

+

|

| 15 |

+

yolo_model = get_yolo_model(model_path='weights/icon_detect/best.pt')

|

| 16 |

+

caption_model_processor = get_caption_model_processor(model_name="florence2", model_name_or_path="weights/icon_caption_florence")

|

| 17 |

+

platform = 'pc'

|

| 18 |

+

if platform == 'pc':

|

| 19 |

+

draw_bbox_config = {

|

| 20 |

+

'text_scale': 0.8,

|

| 21 |

+

'text_thickness': 2,

|

| 22 |

+

'text_padding': 2,

|

| 23 |

+

'thickness': 2,

|

| 24 |

+

}

|

| 25 |

+

elif platform == 'web':

|

| 26 |

+

draw_bbox_config = {

|

| 27 |

+

'text_scale': 0.8,

|

| 28 |

+

'text_thickness': 2,

|

| 29 |

+

'text_padding': 3,

|

| 30 |

+

'thickness': 3,

|

| 31 |

+

}

|

| 32 |

+

elif platform == 'mobile':

|

| 33 |

+

draw_bbox_config = {

|

| 34 |

+

'text_scale': 0.8,

|

| 35 |

+

'text_thickness': 2,

|

| 36 |

+

'text_padding': 3,

|

| 37 |

+

'thickness': 3,

|

| 38 |

+

}

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

MARKDOWN = """

|

| 43 |

+

# OmniParser for Pure Vision Based General GUI Agent 🔥

|

| 44 |

+

<div>

|

| 45 |

+

<a href="https://arxiv.org/pdf/2408.00203">

|

| 46 |

+

<img src="https://img.shields.io/badge/arXiv-2408.00203-b31b1b.svg" alt="Arxiv" style="display:inline-block;">

|

| 47 |

+

</a>

|

| 48 |

+

</div>

|

| 49 |

+

|

| 50 |

+

OmniParser is a screen parsing tool that converts general GUI screens into structured elements.

|

| 51 |

+

"""

|

| 52 |

+

|

| 53 |

+

DEVICE = torch.device('cpu')

|

| 54 |

+

|

| 55 |

+

# @spaces.GPU

|

| 56 |

+

# @torch.inference_mode()

|

| 57 |

+

# @torch.autocast(device_type="cuda", dtype=torch.bfloat16)

|

| 58 |

+

def process(

|

| 59 |

+

image_input,

|

| 60 |

+

box_threshold,

|

| 61 |

+

iou_threshold

|

| 62 |

+

) -> tuple[Image.Image, str]:  # returns the annotated image and the parsed element text

|

| 63 |

+

|

| 64 |

+

image_save_path = 'imgs/saved_image_demo.png'

|

| 65 |

+

image_input.save(image_save_path)

|

| 66 |

+

# import pdb; pdb.set_trace()

|

| 67 |

+

|

| 68 |

+

ocr_bbox_rslt, is_goal_filtered = check_ocr_box(image_save_path, display_img = False, output_bb_format='xyxy', goal_filtering=None, easyocr_args={'paragraph': False, 'text_threshold':0.9})

|

| 69 |

+

text, ocr_bbox = ocr_bbox_rslt

|

| 70 |

+

# print('prompt:', prompt)

|

| 71 |

+

dino_labled_img, label_coordinates, parsed_content_list = get_som_labeled_img(image_save_path, yolo_model, BOX_TRESHOLD = box_threshold, output_coord_in_ratio=True, ocr_bbox=ocr_bbox,draw_bbox_config=draw_bbox_config, caption_model_processor=caption_model_processor, ocr_text=text,iou_threshold=iou_threshold)

|

| 72 |

+

image = Image.open(io.BytesIO(base64.b64decode(dino_labled_img)))

|

| 73 |

+

print('finish processing')

|

| 74 |

+

parsed_content_list = '\n'.join(parsed_content_list)

|

| 75 |

+

return image, str(parsed_content_list)

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

with gr.Blocks() as demo:

|

| 80 |

+

gr.Markdown(MARKDOWN)

|

| 81 |

+

with gr.Row():

|

| 82 |

+

with gr.Column():

|

| 83 |

+

image_input_component = gr.Image(

|

| 84 |

+

type='pil', label='Upload image')

|

| 85 |

+

# set the threshold for removing the bounding boxes with low confidence, default is 0.05

|

| 86 |

+

box_threshold_component = gr.Slider(

|

| 87 |

+

label='Box Threshold', minimum=0.01, maximum=1.0, step=0.01, value=0.05)

|

| 88 |

+

# set the threshold for removing the bounding boxes with large overlap, default is 0.1

|

| 89 |

+

iou_threshold_component = gr.Slider(

|

| 90 |

+

label='IOU Threshold', minimum=0.01, maximum=1.0, step=0.01, value=0.1)

|

| 91 |

+

submit_button_component = gr.Button(

|

| 92 |

+

value='Submit', variant='primary')

|

| 93 |

+

with gr.Column():

|

| 94 |

+

image_output_component = gr.Image(type='pil', label='Image Output')

|

| 95 |

+

text_output_component = gr.Textbox(label='Parsed screen elements', placeholder='Text Output')

|

| 96 |

+

|

| 97 |

+

submit_button_component.click(

|

| 98 |

+

fn=process,

|

| 99 |

+

inputs=[

|

| 100 |

+

image_input_component,

|

| 101 |

+

box_threshold_component,

|

| 102 |

+

iou_threshold_component

|

| 103 |

+

],

|

| 104 |

+

outputs=[image_output_component, text_output_component]

|

| 105 |

+

)

|

| 106 |

+

|

| 107 |

+

# demo.launch(debug=False, show_error=True, share=True)

|

| 108 |

+

demo.launch(share=True, server_port=7861, server_name='0.0.0.0')

|
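
The two sliders in `app.py` pass `box_threshold` and `iou_threshold` through to `get_som_labeled_img`, whose implementation lives in `utils.py` and is not shown in this part of the diff. As a rough illustration of what such thresholds commonly mean in a detection pipeline (a confidence cut followed by greedy overlap suppression), here is a minimal pure-Python sketch. The names `iou` and `filter_boxes` and the sample boxes are hypothetical; this is not the repository's implementation.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), i.e. the 'xyxy' layout


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two xyxy boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_boxes(boxes: Sequence[Box], scores: Sequence[float],
                 box_threshold: float = 0.05,
                 iou_threshold: float = 0.1) -> List[Box]:
    """Hypothetical helper: confidence filter, then greedy overlap removal."""
    # Step 1: drop detections whose confidence is below box_threshold.
    candidates = [(s, b) for s, b in zip(scores, boxes) if s >= box_threshold]
    # Step 2: visit the survivors from most to least confident.
    candidates.sort(key=lambda pair: pair[0], reverse=True)
    kept: List[Box] = []
    for _, box in candidates:
        # Keep a box only if it does not overlap an already kept box too much.
        if all(iou(box, other) <= iou_threshold for other in kept):
            kept.append(box)
    return kept
```

Under this reading, lowering the box threshold admits more low-confidence detections, while lowering the IOU threshold removes more overlapping boxes.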

imgs/google_page.png

ADDED

|

imgs/logo.png

ADDED

|

imgs/saved_image_demo.png

ADDED

|

imgs/windows_home.png

ADDED

|

|

imgs/windows_multitab.png

ADDED

|

omniparser.py

ADDED

|

@@ -0,0 +1,60 @@

| 1 |

+

from utils import get_som_labeled_img, check_ocr_box, get_caption_model_processor, get_dino_model, get_yolo_model

|

| 2 |

+

import torch

|

| 3 |

+

from ultralytics import YOLO

|

| 4 |

+

from PIL import Image

|

| 5 |

+

from typing import Dict, Tuple, List

|

| 6 |

+

import io

|

| 7 |

+

import base64

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

config = {

|

| 11 |

+

'som_model_path': 'finetuned_icon_detect.pt',

|

| 12 |

+

'device': 'cpu',

|

| 13 |

+

'caption_model_path': 'Salesforce/blip2-opt-2.7b',

|

| 14 |

+

'draw_bbox_config': {

|

| 15 |

+

'text_scale': 0.8,

|

| 16 |

+

'text_thickness': 2,

|

| 17 |

+

'text_padding': 3,

|

| 18 |

+

'thickness': 3,

|

| 19 |

+

},

|

| 20 |

+

'BOX_TRESHOLD': 0.05

|

| 21 |

+

}

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

class Omniparser(object):

|

| 25 |

+

def __init__(self, config: Dict):

|

| 26 |

+

self.config = config

|

| 27 |

+

|

| 28 |

+

self.som_model = get_yolo_model(model_path=config['som_model_path'])

|

| 29 |

+

# self.caption_model_processor = get_caption_model_processor(config['caption_model_path'], device=config['device'])

|

| 30 |

+

# self.caption_model_processor['model'].to(torch.float32)

|

| 31 |

+

|

| 32 |

+

def parse(self, image_path: str):

|

| 33 |

+

print('Parsing image:', image_path)

|

| 34 |

+

ocr_bbox_rslt, is_goal_filtered = check_ocr_box(image_path, display_img = False, output_bb_format='xyxy', goal_filtering=None, easyocr_args={'paragraph': False, 'text_threshold':0.9})

|

| 35 |

+

text, ocr_bbox = ocr_bbox_rslt

|

| 36 |

+

|

| 37 |

+

draw_bbox_config = self.config['draw_bbox_config']

|

| 38 |

+

BOX_TRESHOLD = self.config['BOX_TRESHOLD']

|

| 39 |

+

dino_labled_img, label_coordinates, parsed_content_list = get_som_labeled_img(image_path, self.som_model, BOX_TRESHOLD = BOX_TRESHOLD, output_coord_in_ratio=False, ocr_bbox=ocr_bbox,draw_bbox_config=draw_bbox_config, caption_model_processor=None, ocr_text=text,use_local_semantics=False)

|

| 40 |

+

|

| 41 |

+

image = Image.open(io.BytesIO(base64.b64decode(dino_labled_img)))

|

| 42 |

+

# formatting output

|

| 43 |

+

return_list = [{'from': 'omniparser', 'shape': {'x':coord[0], 'y':coord[1], 'width':coord[2], 'height':coord[3]},

|

| 44 |

+

'text': parsed_content_list[i].split(': ')[1], 'type':'text'} for i, (k, coord) in enumerate(label_coordinates.items()) if i < len(parsed_content_list)]

|

| 45 |

+

return_list.extend(

|

| 46 |

+

[{'from': 'omniparser', 'shape': {'x':coord[0], 'y':coord[1], 'width':coord[2], 'height':coord[3]},

|

| 47 |

+

'text': 'None', 'type':'icon'} for i, (k, coord) in enumerate(label_coordinates.items()) if i >= len(parsed_content_list)]

|

| 48 |

+

)

|

| 49 |

+

|

| 50 |

+

return [image, return_list]

|

| 51 |

+

|

| 52 |

+

parser = Omniparser(config)

|

| 53 |

+

image_path = 'examples/pc_1.png'

|

| 54 |

+

|

| 55 |

+

# time the parser

|

| 56 |

+

import time

|

| 57 |

+

s = time.time()

|

| 58 |

+

image, parsed_content_list = parser.parse(image_path)

|

| 59 |

+

device = config['device']

|

| 60 |

+

print(f'Time taken for Omniparser on {device}:', time.time() - s)

|
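
In `Omniparser.parse`, the text of each element is recovered with `parsed_content_list[i].split(': ')[1]`. That expression keeps only the part between the first and second `': '`, and raises `IndexError` when an entry has no separator. The sketch below shows one possible, more tolerant way to build the same list of records. It assumes entries look like `'Text Box ID 0: some text'` (the exact format is produced in `utils.py`, which is not shown here), and `format_elements` is a hypothetical helper, not part of the commit.

```python
from typing import Dict, List, Sequence


def format_elements(label_coordinates: Dict[str, Sequence[float]],
                    parsed_content_list: List[str]) -> List[dict]:
    """Hypothetical helper mirroring the record layout built in parse()."""
    elements = []
    for i, coord in enumerate(label_coordinates.values()):
        if i < len(parsed_content_list):
            entry = parsed_content_list[i]
            # partition() splits on the first ': ' only, so text that itself
            # contains ': ' (for example a clock reading) stays intact.
            _, sep, text = entry.partition(': ')
            if not sep:
                # No separator found: fall back to the whole entry.
                text = entry
            kind = 'text'
        else:
            # Boxes without a parsed entry are reported as icons, as in parse().
            text, kind = 'None', 'icon'
        elements.append({
            'from': 'omniparser',
            'shape': {'x': coord[0], 'y': coord[1],
                      'width': coord[2], 'height': coord[3]},
            'text': text,
            'type': kind,
        })
    return elements
```

A single pass keeps the original ordering (text entries first, then icons), because the index test `i < len(parsed_content_list)` is true for a prefix of the boxes only.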

requirements.txt

ADDED

|

@@ -0,0 +1,16 @@

| 1 |

+

torch

|

| 2 |

+

easyocr

|

| 3 |

+

torchvision

|

| 4 |

+

supervision==0.18.0

|

| 5 |

+

openai==1.3.5

|

| 6 |

+

transformers

|

| 7 |

+

ultralytics==8.1.24

|

| 8 |

+

azure-identity

|

| 9 |

+

numpy

|

| 10 |

+

opencv-python

|

| 11 |

+

opencv-python-headless

|

| 12 |

+

gradio

|

| 13 |

+

dill

|

| 14 |

+

accelerate

|

| 15 |

+

timm

|

| 16 |

+

einops==0.8.0

|
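
pip expects `==` for an exact version pin in a requirements file; a single `=` is not a valid version specifier and makes the install fail. As a small sanity check, the sketch below flags lines that contain a lone `=`. The function name `find_bad_pins` and the sample lines are hypothetical, and the regular expression is a heuristic: it would also flag option lines or URLs that legitimately contain `=`.

```python
import re
from typing import List

# A lone '=' that is not part of ==, ===, >=, <=, != or ~=.
_SINGLE_EQUALS = re.compile(r'(?<![=<>!~])=(?!=)')


def find_bad_pins(lines: List[str]) -> List[str]:
    """Return requirement lines that appear to use '=' where '==' was meant."""
    bad = []
    for raw in lines:
        # Ignore trailing comments and surrounding whitespace.
        line = raw.split('#', 1)[0].strip()
        if line and _SINGLE_EQUALS.search(line):
            bad.append(line)
    return bad
```

For example, `find_bad_pins(open('requirements.txt').read().splitlines())` could be run before committing.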

util/__init__.py

ADDED

|

File without changes

|

util/__pycache__/__init__.cpython-312.pyc

ADDED

|

Binary file (139 Bytes). View file

|

|

|

util/__pycache__/__init__.cpython-39.pyc

ADDED

|

Binary file (141 Bytes). View file

|

|

|

util/__pycache__/action_matching.cpython-39.pyc

ADDED

|

Binary file (8.49 kB). View file

|

|

|

util/__pycache__/box_annotator.cpython-312.pyc

ADDED

|

Binary file (9.79 kB). View file

|

|

|

util/__pycache__/box_annotator.cpython-39.pyc

ADDED

|

Binary file (6.57 kB). View file

|

|

|

util/action_matching.py

ADDED

|

@@ -0,0 +1,425 @@

| 1 |

+

'''

|

| 2 |

+

Adapted from https://github.com/google-research/google-research/tree/master/android_in_the_wild

|

| 3 |

+

'''

|

| 4 |

+

|

| 5 |

+

import jax

|

| 6 |

+

import jax.numpy as jnp

|

| 7 |

+

import numpy as np

|

| 8 |

+

|

| 9 |

+

# import action_type as action_type_lib

|

| 10 |

+

import enum

|

| 11 |

+

|

| 12 |

+

class ActionType(enum.IntEnum):

|

| 13 |

+

# Placeholders for unused enum values

|

| 14 |

+

UNUSED_0 = 0

|

| 15 |

+

UNUSED_1 = 1

|

| 16 |

+

UNUSED_2 = 2

|

| 17 |

+

UNUSED_8 = 8

|

| 18 |

+

UNUSED_9 = 9

|

| 19 |

+

|

| 20 |

+

########### Agent actions ###########

|

| 21 |

+

|

| 22 |

+

# A type action that sends text to the emulator. Note that this simply sends

|

| 23 |

+

# text and does not perform any clicks for element focus or enter presses for

|

| 24 |

+

# submitting text.

|

| 25 |

+

TYPE = 3

|

| 26 |

+

|

| 27 |

+

# The dual point action used to represent all gestures.

|

| 28 |

+

DUAL_POINT = 4

|

| 29 |

+

|

| 30 |

+

# These actions differentiate pressing the home and back button from touches.

|

| 31 |

+

# They represent explicit presses of back and home performed using ADB.

|

| 32 |

+

PRESS_BACK = 5

|

| 33 |

+

PRESS_HOME = 6

|

| 34 |

+

|

| 35 |

+

# An action representing that ADB command for hitting enter was performed.

|

| 36 |

+

PRESS_ENTER = 7

|

| 37 |

+

|

| 38 |

+

########### Episode status actions ###########

|

| 39 |

+

|

| 40 |

+

# An action used to indicate the desired task has been completed and resets

|

| 41 |

+

# the environment. This action should also be used in the case that the task

|

| 42 |

+

# has already been completed and there is nothing to do.

|

| 43 |

+

# e.g. The task is to turn on the Wi-Fi when it is already on

|

| 44 |

+

STATUS_TASK_COMPLETE = 10

|

| 45 |

+

|

| 46 |

+

# An action used to indicate that desired task is impossible to complete and

|

| 47 |

+

# resets the environment. This can be a result of many different things

|

| 48 |

+

# including UI changes, Android version differences, etc.

|

| 49 |

+

STATUS_TASK_IMPOSSIBLE = 11

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

_TAP_DISTANCE_THRESHOLD = 0.14 # Fraction of the screen

|

| 53 |

+

ANNOTATION_WIDTH_AUGMENT_FRACTION = 1.4

|

| 54 |

+

ANNOTATION_HEIGHT_AUGMENT_FRACTION = 1.4

|

| 55 |

+

|

| 56 |

+

# Interval determining if an action is a tap or a swipe.

|

| 57 |

+

_SWIPE_DISTANCE_THRESHOLD = 0.04

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

def _yx_in_bounding_boxes(

|

| 61 |

+

yx, bounding_boxes

|

| 62 |

+

):

|

| 63 |

+

"""Check if the (y,x) point is contained in each bounding box.

|

| 64 |

+

|

| 65 |

+

Args:

|

| 66 |

+

yx: The (y, x) coordinate in pixels of the point.

|

| 67 |

+

bounding_boxes: A 2D int array of shape (num_bboxes, 4), where each row

|

| 68 |

+

represents a bounding box: (y_top_left, x_top_left, box_height,

|

| 69 |

+

box_width). Note: containment is inclusive of the bounding box edges.

|

| 70 |

+

|

| 71 |

+

Returns:

|

| 72 |

+

is_inside: A 1D bool array where each element specifies if the point is

|

| 73 |

+

contained within the respective box.

|

| 74 |

+

"""

|

| 75 |

+

y, x = yx

|

| 76 |

+

|

| 77 |

+

# `bounding_boxes` has shape (n_elements, 4); we extract each array along the

|

| 78 |

+

# last axis into shape (n_elements, 1), then squeeze unneeded dimension.

|

| 79 |

+

top, left, height, width = [

|

| 80 |

+

jnp.squeeze(v, axis=-1) for v in jnp.split(bounding_boxes, 4, axis=-1)

|

| 81 |

+

]

|

| 82 |

+

|

| 83 |

+

# The y-axis is inverted for AndroidEnv, so bottom = top + height.

|

| 84 |

+

bottom, right = top + height, left + width

|

| 85 |

+

|

| 86 |

+

return jnp.logical_and(y >= top, y <= bottom) & jnp.logical_and(

|

| 87 |

+

x >= left, x <= right)

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

def _resize_annotation_bounding_boxes(

|

| 91 |

+

annotation_positions, annotation_width_augment_fraction,

|

| 92 |

+

annotation_height_augment_fraction):

|

| 93 |

+

"""Resize the bounding boxes by the given fractions.

|

| 94 |

+

|

| 95 |

+

Args:

|

| 96 |

+

annotation_positions: Array of shape (N, 4), where each row represents the

|

| 97 |

+

(y, x, height, width) of the bounding boxes.

|

| 98 |

+

annotation_width_augment_fraction: The fraction to augment the box widths,

|

| 99 |

+

E.g., 1.4 grows the width to 240% of its original size.

|

| 100 |

+

annotation_height_augment_fraction: Same as described for width, but for box

|

| 101 |

+

height.

|

| 102 |

+

|

| 103 |

+

Returns:

|

| 104 |

+

Resized bounding box.

|

| 105 |

+

|

| 106 |

+

"""

|

| 107 |

+

height_change = (

|

| 108 |

+

annotation_height_augment_fraction * annotation_positions[:, 2])

|

| 109 |

+

width_change = (

|

| 110 |

+

annotation_width_augment_fraction * annotation_positions[:, 3])

|

| 111 |

+

|

| 112 |

+

# Limit bounding box positions to the screen.

|

| 113 |

+

resized_annotations = jnp.stack([

|

| 114 |

+

jnp.maximum(0, annotation_positions[:, 0] - (height_change / 2)),

|

| 115 |

+

jnp.maximum(0, annotation_positions[:, 1] - (width_change / 2)),

|

| 116 |

+

jnp.minimum(1, annotation_positions[:, 2] + height_change),

|

| 117 |

+

jnp.minimum(1, annotation_positions[:, 3] + width_change),

|

| 118 |

+

],

|

| 119 |

+

axis=1)

|

| 120 |

+

return resized_annotations

|

| 121 |

+

|

| 122 |

+

|

| 123 |

+

def is_tap_action(normalized_start_yx,

|

| 124 |

+

normalized_end_yx):

|

| 125 |

+

distance = jnp.linalg.norm(

|

| 126 |

+

jnp.array(normalized_start_yx) - jnp.array(normalized_end_yx))

|

| 127 |

+

return distance <= _SWIPE_DISTANCE_THRESHOLD

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

def _is_non_dual_point_action(action_type):

|

| 131 |

+

return jnp.not_equal(action_type, ActionType.DUAL_POINT)

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

def _check_tap_actions_match(

|

| 135 |

+

tap_1_yx,

|

| 136 |

+

tap_2_yx,

|

| 137 |

+

annotation_positions,

|

| 138 |

+

matching_tap_distance_threshold_screen_percentage,

|

| 139 |

+

annotation_width_augment_fraction,

|

| 140 |

+

annotation_height_augment_fraction,

|

| 141 |

+

):

|

| 142 |

+

"""Determines if two tap actions are the same."""

|

| 143 |

+

resized_annotation_positions = _resize_annotation_bounding_boxes(

|

| 144 |

+

annotation_positions,

|

| 145 |

+

annotation_width_augment_fraction,

|

| 146 |

+

annotation_height_augment_fraction,

|

| 147 |

+

)

|

| 148 |

+

|

| 149 |

+

# Check if the ground truth tap action falls in an annotation's bounding box.

|

| 150 |

+

tap1_in_box = _yx_in_bounding_boxes(tap_1_yx, resized_annotation_positions)

|

| 151 |

+

tap2_in_box = _yx_in_bounding_boxes(tap_2_yx, resized_annotation_positions)

|

| 152 |

+

both_in_box = jnp.max(tap1_in_box & tap2_in_box)

|

| 153 |

+

|

| 154 |

+

# If the ground-truth tap action falls outside any of the annotation

|

| 155 |

+

# bounding boxes or one of the actions is inside a bounding box and the other

|

| 156 |

+

# is outside bounding box or vice versa, compare the points using Euclidean

|

| 157 |

+

# distance.

|

| 158 |

+

within_threshold = (

|

| 159 |

+

jnp.linalg.norm(jnp.array(tap_1_yx) - jnp.array(tap_2_yx))

|

| 160 |

+

<= matching_tap_distance_threshold_screen_percentage

|

| 161 |

+

)

|

| 162 |

+

return jnp.logical_or(both_in_box, within_threshold)

|

| 163 |

+

|

| 164 |

+

|

| 165 |

+

def _check_drag_actions_match(

|

| 166 |

+

drag_1_touch_yx,

|

| 167 |

+

drag_1_lift_yx,

|

| 168 |

+

drag_2_touch_yx,

|

| 169 |

+

drag_2_lift_yx,

|

| 170 |

+

):

|

| 171 |

+

"""Determines if two drag actions are the same."""

|

| 172 |

+

# Store drag deltas (the change in the y and x coordinates from touch to

|

| 173 |

+

# lift), magnitudes, and the index of the main axis, which is the axis with

|

| 174 |

+

# the greatest change in coordinate value (e.g. a drag starting at (0, 0) and

|

| 175 |

+

# ending at (0.3, 0.5) has a main axis index of 1).

|

| 176 |

+

drag_1_deltas = drag_1_lift_yx - drag_1_touch_yx

|

| 177 |

+

drag_1_magnitudes = jnp.abs(drag_1_deltas)

|

| 178 |

+

drag_1_main_axis = np.argmax(drag_1_magnitudes)

|

| 179 |

+

drag_2_deltas = drag_2_lift_yx - drag_2_touch_yx

|

| 180 |

+

drag_2_magnitudes = jnp.abs(drag_2_deltas)

|

| 181 |

+

drag_2_main_axis = np.argmax(drag_2_magnitudes)

|

| 182 |

+

|

| 183 |

+

return jnp.equal(drag_1_main_axis, drag_2_main_axis)

|

| 184 |

+

|

| 185 |

+

|

| 186 |

+

def check_actions_match(

|

| 187 |

+

action_1_touch_yx,

|

| 188 |

+

action_1_lift_yx,

|

| 189 |

+

action_1_action_type,

|

| 190 |

+

action_2_touch_yx,

|

| 191 |

+

action_2_lift_yx,

|

| 192 |

+

action_2_action_type,

|

| 193 |

+

annotation_positions,

|

| 194 |

+

tap_distance_threshold = _TAP_DISTANCE_THRESHOLD,

|

| 195 |

+

annotation_width_augment_fraction = ANNOTATION_WIDTH_AUGMENT_FRACTION,

|

| 196 |

+

annotation_height_augment_fraction = ANNOTATION_HEIGHT_AUGMENT_FRACTION,

|

| 197 |

+

):

|

| 198 |

+

"""Determines if two actions are considered to be the same.

|

| 199 |

+

|

| 200 |

+

Two actions being "the same" is defined here as two actions that would result

|

| 201 |

+

in a similar screen state.

|

| 202 |

+

|

| 203 |

+

Args:

|

| 204 |

+

action_1_touch_yx: The (y, x) coordinates of the first action's touch.

|

| 205 |

+

action_1_lift_yx: The (y, x) coordinates of the first action's lift.

|

| 206 |

+

action_1_action_type: The action type of the first action.

|

| 207 |

+

action_2_touch_yx: The (y, x) coordinates of the second action's touch.

|

| 208 |

+

action_2_lift_yx: The (y, x) coordinates of the second action's lift.

|

| 209 |

+

action_2_action_type: The action type of the second action.

|

| 210 |

+

annotation_positions: The positions of the UI annotations for the screen. It

|

| 211 |

+

is a 2D int array of shape (num_bboxes, 4), where each row represents a

|

| 212 |

+

bounding box: (y_top_left, x_top_left, box_height, box_width). Note that

|

| 213 |

+

containment is inclusive of the bounding box edges.

|

| 214 |

+

tap_distance_threshold: The threshold that determines if two taps result in

|

| 215 |

+

a matching screen state if they don't fall within the same bounding box.

|

| 216 |

+

annotation_width_augment_fraction: The fraction to increase the width of the

|

| 217 |

+

bounding box by.

|

| 218 |

+

annotation_height_augment_fraction: The fraction to increase the height of

|

| 219 |

+

the bounding box by.

|

| 220 |

+

|

| 221 |

+

Returns:

|

| 222 |

+

A boolean representing whether the two given actions are the same or not.

|

| 223 |

+

"""

|

| 224 |

+

action_1_touch_yx = jnp.asarray(action_1_touch_yx)

|

| 225 |

+

action_1_lift_yx = jnp.asarray(action_1_lift_yx)

|

| 226 |

+

action_2_touch_yx = jnp.asarray(action_2_touch_yx)

|

| 227 |

+

action_2_lift_yx = jnp.asarray(action_2_lift_yx)

|

| 228 |

+

|

| 229 |

+

# Checks if at least one of the actions is global (i.e. not DUAL_POINT),

|

| 230 |

+

# because if that is the case, only the actions' types need to be compared.

|

| 231 |

+

has_non_dual_point_action = jnp.logical_or(

|

| 232 |

+

_is_non_dual_point_action(action_1_action_type),

|

| 233 |

+

_is_non_dual_point_action(action_2_action_type),

|

| 234 |

+

)

|

| 235 |

+

#print("non dual point: "+str(has_non_dual_point_action))

|

| 236 |

+

|

| 237 |

+

different_dual_point_types = jnp.logical_xor(

|

| 238 |

+

is_tap_action(action_1_touch_yx, action_1_lift_yx),

|

| 239 |

+

is_tap_action(action_2_touch_yx, action_2_lift_yx),

|

| 240 |

+

)

|

| 241 |

+

#print("different dual type: "+str(different_dual_point_types))

|

| 242 |

+

|

| 243 |

+

is_tap = jnp.logical_and(

|

| 244 |

+

is_tap_action(action_1_touch_yx, action_1_lift_yx),

|

| 245 |

+

is_tap_action(action_2_touch_yx, action_2_lift_yx),

|

| 246 |

+

)

|

| 247 |

+

#print("is tap: "+str(is_tap))

|

| 248 |

+

|

| 249 |

+

taps_match = _check_tap_actions_match(

|

| 250 |

+

action_1_touch_yx,

|

| 251 |

+

action_2_touch_yx,

|

| 252 |

+

annotation_positions,

|

| 253 |

+

tap_distance_threshold,

|

| 254 |

+

annotation_width_augment_fraction,

|

| 255 |

+

annotation_height_augment_fraction,

|

| 256 |

+

)

|

| 257 |

+

#print("tap match: "+str(taps_match))

|

| 258 |

+

|

| 259 |

+

taps_match = jnp.logical_and(is_tap, taps_match)

|

| 260 |

+

#print("tap match: "+str(taps_match))

|

| 261 |

+

|

| 262 |

+

drags_match = _check_drag_actions_match(

|

| 263 |

+

action_1_touch_yx, action_1_lift_yx, action_2_touch_yx, action_2_lift_yx

|

| 264 |

+

)

|

| 265 |

+

drags_match = jnp.where(is_tap, False, drags_match)

|

| 266 |

+

#print("drag match: "+str(drags_match))

|

| 267 |

+

|

| 268 |

+

return jnp.where(

|

| 269 |

+

has_non_dual_point_action,

|

| 270 |

+

jnp.equal(action_1_action_type, action_2_action_type),

|

| 271 |

+

jnp.where(

|

| 272 |

+

different_dual_point_types,

|

| 273 |

+

False,

|

| 274 |

+

jnp.logical_or(taps_match, drags_match),

|

| 275 |

+

),

|

| 276 |

+

)

|

| 277 |

+

|

| 278 |

+

|

| 279 |

+

def action_2_format(step_data):

|

| 280 |

+

# Convert the action format used in the test dataset into the format used to compute the matching score.

|

| 281 |

+

action_type = step_data["action_type_id"]

|

| 282 |

+

|

| 283 |

+

if action_type == 4:

|

| 284 |

+

if step_data["action_type_text"] == 'click':  # click

|

| 285 |

+

touch_point = step_data["touch"]

|

| 286 |

+

lift_point = step_data["lift"]

|

| 287 |

+

else:  # scroll up, down, left, or right

|

| 288 |

+

if step_data["action_type_text"] == 'scroll down':

|

| 289 |

+

touch_point = [0.5, 0.8]

|

| 290 |

+

lift_point = [0.5, 0.2]

|

| 291 |

+

elif step_data["action_type_text"] == 'scroll up':

|

| 292 |

+

touch_point = [0.5, 0.2]

|

| 293 |

+

lift_point = [0.5, 0.8]

|

| 294 |

+

elif step_data["action_type_text"] == 'scroll left':

|

| 295 |

+

touch_point = [0.2, 0.5]

|

| 296 |

+

lift_point = [0.8, 0.5]

|

| 297 |

+

elif step_data["action_type_text"] == 'scroll right':

|

| 298 |

+

touch_point = [0.8, 0.5]

|

| 299 |

+

lift_point = [0.2, 0.5]

|

| 300 |

+

else:

|

| 301 |

+

touch_point = [-1.0, -1.0]

|

| 302 |

+

lift_point = [-1.0, -1.0]

|

| 303 |

+

|

| 304 |

+

if action_type == 3:

|

| 305 |

+

typed_text = step_data["type_text"]

|

| 306 |

+

else:

|

| 307 |

+

typed_text = ""

|

| 308 |

+

|

| 309 |

+

action = {"action_type": action_type, "touch_point": touch_point, "lift_point": lift_point,

|

| 310 |

+

"typed_text": typed_text}

|

| 311 |

+

|

| 312 |

+

action["touch_point"] = [action["touch_point"][1], action["touch_point"][0]]

|

| 313 |

+

action["lift_point"] = [action["lift_point"][1], action["lift_point"][0]]

|

| 314 |

+

action["typed_text"] = action["typed_text"].lower()

|

| 315 |

+

|

| 316 |

+

return action

|

| 317 |

+

|

| 318 |

+

|

| 319 |

+

def pred_2_format(step_data):

|

| 320 |

+

# Convert the model output into the format used for action matching.

|

| 321 |

+

action_type = step_data["action_type"]

|

| 322 |

+

|

| 323 |

+

if action_type == 4:  # click

|

| 324 |

+

action_type_new = 4

|

| 325 |

+

touch_point = step_data["click_point"]

|

| 326 |

+

lift_point = step_data["click_point"]

|

| 327 |

+

typed_text = ""

|

| 328 |

+

elif action_type == 0:

|

| 329 |

+

action_type_new = 4

|

| 330 |

+

touch_point = [0.5, 0.8]

|

| 331 |

+

lift_point = [0.5, 0.2]

|

| 332 |

+

typed_text = ""

|

| 333 |

+

elif action_type == 1:

|

| 334 |

+

action_type_new = 4

|

| 335 |

+

touch_point = [0.5, 0.2]

|

| 336 |

+

lift_point = [0.5, 0.8]

|

| 337 |

+

typed_text = ""

|

| 338 |

+

elif action_type == 8:

|

| 339 |

+

action_type_new = 4

|

| 340 |

+

touch_point = [0.2, 0.5]

|

| 341 |

+

lift_point = [0.8, 0.5]

|

| 342 |

+

typed_text = ""

|

| 343 |

+

elif action_type == 9:

|

| 344 |

+

action_type_new = 4

|

| 345 |

+

touch_point = [0.8, 0.5]

|

| 346 |

+

lift_point = [0.2, 0.5]

|

| 347 |

+

typed_text = ""

|

| 348 |

+

else:

|

| 349 |

+

action_type_new = action_type

|

| 350 |

+

touch_point = [-1.0, -1.0]

|

| 351 |

+

lift_point = [-1.0, -1.0]

|

| 352 |

+

typed_text = ""

|

| 353 |

+

if action_type_new == 3:

|

| 354 |

+

typed_text = step_data["typed_text"]

|

| 355 |

+

|

| 356 |

+

action = {"action_type": action_type_new, "touch_point": touch_point, "lift_point": lift_point,

|

| 357 |

+

"typed_text": typed_text}

|

| 358 |

+

|

| 359 |

+

action["touch_point"] = [action["touch_point"][1], action["touch_point"][0]]

|

| 360 |

+

action["lift_point"] = [action["lift_point"][1], action["lift_point"][0]]

|

| 361 |

+

action["typed_text"] = action["typed_text"].lower()

|

| 362 |

+

|

| 363 |

+

return action

|

| 364 |

+

|

| 365 |

+

|

| 366 |

+

def pred_2_format_simplified(step_data):

|

| 367 |

+

# Convert the model output into the format used to compute action_matching

|

| 368 |

+

action_type = step_data["action_type"]

|

| 369 |

+

|

| 370 |

+

if action_type == 'click': # click

|

| 371 |

+

action_type_new = 4

|

| 372 |

+

touch_point = step_data["click_point"]

|

| 373 |

+

lift_point = step_data["click_point"]

|

| 374 |

+

typed_text = ""

|

| 375 |

+

elif action_type == 'scroll' and step_data["direction"] == 'down':

|

| 376 |

+

action_type_new = 4

|

| 377 |

+

touch_point = [0.5, 0.8]

|

| 378 |

+

lift_point = [0.5, 0.2]

|

| 379 |

+

typed_text = ""

|

| 380 |

+

elif action_type == 'scroll' and step_data["direction"] == 'up':

|

| 381 |

+

action_type_new = 4

|

| 382 |

+

touch_point = [0.5, 0.2]

|

| 383 |

+

lift_point = [0.5, 0.8]

|

| 384 |

+

typed_text = ""

|

| 385 |

+

elif action_type == 'scroll' and step_data["direction"] == 'left':

|

| 386 |

+

action_type_new = 4

|

| 387 |

+

touch_point = [0.2, 0.5]

|

| 388 |

+

lift_point = [0.8, 0.5]

|

| 389 |

+

typed_text = ""

|

| 390 |

+

elif action_type == 'scroll' and step_data["direction"] == 'right':

|

| 391 |

+

action_type_new = 4

|

| 392 |

+

touch_point = [0.8, 0.5]

|

| 393 |

+

lift_point = [0.2, 0.5]

|

| 394 |

+

typed_text = ""

|

| 395 |

+

elif action_type == 'type':

|

| 396 |

+

action_type_new = 3

|

| 397 |

+

touch_point = [-1.0, -1.0]

|

| 398 |

+

lift_point = [-1.0, -1.0]

|

| 399 |

+

typed_text = step_data["text"]

|

| 400 |

+

elif action_type == 'navigate_back':

|

| 401 |

+

action_type_new = 5

|

| 402 |

+

touch_point = [-1.0, -1.0]

|

| 403 |

+

lift_point = [-1.0, -1.0]

|

| 404 |

+

typed_text = ""

|

| 405 |

+

elif action_type == 'navigate_home':

|

| 406 |

+

action_type_new = 6

|

| 407 |

+

touch_point = [-1.0, -1.0]

|

| 408 |

+

lift_point = [-1.0, -1.0]

|

| 409 |

+

typed_text = ""

|

| 410 |

+

else:

|

| 411 |

+

action_type_new = action_type

|

| 412 |

+

touch_point = [-1.0, -1.0]

|

| 413 |

+

lift_point = [-1.0, -1.0]

|

| 414 |

+

typed_text = ""

|

| 415 |

+

# if action_type_new == 'type':

|

| 416 |

+

# typed_text = step_data["text"]

|

| 417 |

+

|

| 418 |

+

action = {"action_type": action_type_new, "touch_point": touch_point, "lift_point": lift_point,

|

| 419 |

+

"typed_text": typed_text}

|

| 420 |

+

|

| 421 |

+

action["touch_point"] = [action["touch_point"][1], action["touch_point"][0]]

|

| 422 |

+

action["lift_point"] = [action["lift_point"][1], action["lift_point"][0]]

|

| 423 |

+

action["typed_text"] = action["typed_text"].lower()

|

| 424 |

+

|

| 425 |

+

return action

|
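The converters above repeat the same direction-to-gesture mapping in each branch. As a rough sketch only, that mapping could be expressed as a lookup table. The names `SCROLL_GESTURES`, `NO_POINT`, and `scroll_to_dual_point` are hypothetical and do not appear in the uploaded file; the coordinates and the final (x, y) to (y, x) swap are copied from the code above.

```python
# Hypothetical helper, not part of util/action_matching.py.
# Maps a scroll direction to the (touch, lift) pair used by the converters above.
SCROLL_GESTURES = {
    "down": ([0.5, 0.8], [0.5, 0.2]),
    "up": ([0.5, 0.2], [0.5, 0.8]),
    "left": ([0.2, 0.5], [0.8, 0.5]),
    "right": ([0.8, 0.5], [0.2, 0.5]),
}
NO_POINT = [-1.0, -1.0]  # placeholder the converters use when no point applies


def scroll_to_dual_point(direction):
    """Build a dual-point action (action_type 4) for a scroll direction."""
    touch, lift = SCROLL_GESTURES.get(direction, (NO_POINT, NO_POINT))
    return {
        "action_type": 4,
        # Swap the two coordinates, as the converters do before returning.
        "touch_point": [touch[1], touch[0]],
        "lift_point": [lift[1], lift[0]],
        "typed_text": "",
    }


action = scroll_to_dual_point("down")
```

An unknown direction falls back to the `[-1.0, -1.0]` placeholder while keeping action type 4, which matches the ground-truth converter but not the `else` branches of the two prediction converters.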

util/action_type.py

ADDED

|

@@ -0,0 +1,45 @@

|

| 1 |

+

'''

|

| 2 |

+

Adapted from https://github.com/google-research/google-research/tree/master/android_in_the_wild

|

| 3 |

+

'''

|

| 4 |

+

|

| 5 |

+

import enum

|

| 6 |

+

|

| 7 |

+

class ActionType(enum.IntEnum):

|

| 8 |

+

|

| 9 |

+

# Placeholders for unused enum values

|

| 10 |

+

UNUSED_0 = 0

|

| 11 |

+

UNUSED_1 = 1

|

| 12 |

+

UNUSED_2 = 2

|

| 13 |

+

UNUSED_8 = 8

|

| 14 |

+

UNUSED_9 = 9

|

| 15 |

+

|

| 16 |

+

########### Agent actions ###########

|

| 17 |

+

|

| 18 |

+

# A type action that sends text to the emulator. Note that this simply sends

|

| 19 |

+

# text and does not perform any clicks for element focus or enter presses for

|

| 20 |

+

# submitting text.

|

| 21 |

+

TYPE = 3

|

| 22 |

+

|

| 23 |

+

# The dual point action used to represent all gestures.

|

| 24 |

+

DUAL_POINT = 4

|

| 25 |

+

|

| 26 |

+

# These actions differentiate pressing the home and back button from touches.

|

| 27 |

+

# They represent explicit presses of back and home performed using ADB.

|

| 28 |

+

PRESS_BACK = 5

|

| 29 |

+

PRESS_HOME = 6

|

| 30 |

+

|

| 31 |

+

# An action representing that the ADB command for hitting enter was performed.

|

| 32 |

+

PRESS_ENTER = 7

|

| 33 |

+

|

| 34 |

+

########### Episode status actions ###########

|

| 35 |

+

|

| 36 |

+

# An action used to indicate the desired task has been completed and resets

|

| 37 |

+

# the environment. This action should also be used in the case that the task

|

| 38 |

+

# has already been completed and there is nothing to do.

|

| 39 |

+

# e.g. The task is to turn on the Wi-Fi when it is already on

|

| 40 |

+

STATUS_TASK_COMPLETE = 10

|

| 41 |

+

|

| 42 |

+

# An action used to indicate that the desired task is impossible to complete and

|

| 43 |

+

# resets the environment. This can be a result of many different things

|

| 44 |

+

# including UI changes, Android version differences, etc.

|

| 45 |

+

STATUS_TASK_IMPOSSIBLE = 11

|
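To illustrate how the integer codes produced by the converters in `util/action_matching.py` line up with this enum, here is a minimal sketch. It re-declares a trimmed copy of `ActionType` so it runs on its own; the `describe` function is hypothetical, and treating identical touch and lift points as a tap is a simplifying assumption made for this example, not something the uploaded code states.

```python
import enum


class ActionType(enum.IntEnum):
    # Trimmed copy of the enum above, repeated so the sketch is self-contained.
    TYPE = 3
    DUAL_POINT = 4
    PRESS_BACK = 5
    PRESS_HOME = 6
    PRESS_ENTER = 7
    STATUS_TASK_COMPLETE = 10
    STATUS_TASK_IMPOSSIBLE = 11


def describe(action):
    """Return a short label for an action dict (hypothetical helper)."""
    kind = ActionType(action["action_type"])  # raises ValueError for unknown codes
    if kind is ActionType.DUAL_POINT:
        # Assumption: identical touch and lift points mean a tap.
        is_tap = action["touch_point"] == action["lift_point"]
        return "tap" if is_tap else "swipe"
    if kind is ActionType.TYPE:
        return f"type {action['typed_text']!r}"
    return kind.name.lower()


swipe = {"action_type": 4, "touch_point": [0.8, 0.5],
         "lift_point": [0.2, 0.5], "typed_text": ""}
label = describe(swipe)
```

Because `ActionType` is an `IntEnum`, its members compare equal to the plain integers the converters return, so `ActionType.DUAL_POINT == 4` holds.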

util/box_annotator.py

ADDED

|

@@ -0,0 +1,262 @@

|

| 1 |

+

from typing import List, Optional, Union, Tuple

|

| 2 |

+

|

| 3 |

+

import cv2

|

| 4 |

+

import numpy as np

|

| 5 |

+

|

| 6 |

+

from supervision.detection.core import Detections

|

| 7 |

+

from supervision.draw.color import Color, ColorPalette

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

class BoxAnnotator:

|

| 11 |

+

"""

|

| 12 |

+

A class for drawing bounding boxes on an image using detections provided.

|

| 13 |

+

|

| 14 |

+

Attributes:

|

| 15 |

+

color (Union[Color, ColorPalette]): The color to draw the bounding box,

|

| 16 |

+

can be a single color or a color palette

|

| 17 |

+

thickness (int): The thickness of the bounding box lines, default is 3

|

| 18 |

+

text_color (Color): The color of the text on the bounding box, default is black

|

| 19 |

+

text_scale (float): The scale of the text on the bounding box, default is 0.5

|

| 20 |

+

text_thickness (int): The thickness of the text on the bounding box,

|

| 21 |

+

default is 2

|

| 22 |

+

text_padding (int): The padding around the text on the bounding box,

|

| 23 |

+

default is 10

|

| 24 |

+

|

| 25 |

+

"""

|

| 26 |

+

|

| 27 |

+

def __init__(

|

| 28 |

+

self,

|

| 29 |

+

color: Union[Color, ColorPalette] = ColorPalette.DEFAULT,

|

| 30 |

+

thickness: int = 3, # 1 for seeclick 2 for mind2web and 3 for demo

|

| 31 |

+

text_color: Color = Color.BLACK,

|

| 32 |

+

text_scale: float = 0.5, # 0.8 for mobile/web, 0.3 for desktop # 0.4 for mind2web

|

| 33 |

+

text_thickness: int = 2, #1, # 2 for demo

|

| 34 |

+

text_padding: int = 10,

|

| 35 |

+

avoid_overlap: bool = True,

|

| 36 |

+

):

|

| 37 |

+

self.color: Union[Color, ColorPalette] = color

|

| 38 |

+

self.thickness: int = thickness

|

| 39 |

+

self.text_color: Color = text_color

|

| 40 |

+

self.text_scale: float = text_scale

|

| 41 |

+

self.text_thickness: int = text_thickness

|

| 42 |

+

self.text_padding: int = text_padding

|

| 43 |

+

self.avoid_overlap: bool = avoid_overlap

|

| 44 |

+

|

| 45 |

+

def annotate(

|

| 46 |

+

self,

|

| 47 |

+

scene: np.ndarray,

|

| 48 |

+

detections: Detections,

|

| 49 |

+

labels: Optional[List[str]] = None,

|

| 50 |

+

skip_label: bool = False,

|

| 51 |

+

image_size: Optional[Tuple[int, int]] = None,

|

| 52 |

+

) -> np.ndarray:

|

| 53 |

+

"""

|

| 54 |

+

Draws bounding boxes on the frame using the detections provided.

|

| 55 |

+

|

| 56 |

+

Args:

|

| 57 |

+

scene (np.ndarray): The image on which the bounding boxes will be drawn

|

| 58 |

+

detections (Detections): The detections for which the

|

| 59 |

+

bounding boxes will be drawn

|

| 60 |

+

labels (Optional[List[str]]): An optional list of labels

|

| 61 |

+

corresponding to each detection. If `labels` are not provided,

|

| 62 |

+

corresponding `class_id` will be used as label.

|

| 63 |

+

skip_label (bool): If set to `True`, skips bounding box label annotation.

|

| 64 |

+

Returns:

|

| 65 |

+

np.ndarray: The image with the bounding boxes drawn on it

|

| 66 |

+

|

| 67 |

+

Example:

|

| 68 |

+

```python

|

| 69 |

+

import supervision as sv

|

| 70 |

+

|

| 71 |

+

classes = ['person', ...]

|

| 72 |

+

image = ...

|

| 73 |

+

detections = sv.Detections(...)

|

| 74 |

+

|

| 75 |

+

box_annotator = sv.BoxAnnotator()

|

| 76 |

+

labels = [

|

| 77 |

+

f"{classes[class_id]} {confidence:0.2f}"

|

| 78 |

+

for _, _, confidence, class_id, _ in detections

|

| 79 |

+

]

|

| 80 |

+

annotated_frame = box_annotator.annotate(

|

| 81 |

+

scene=image.copy(),

|

| 82 |

+

detections=detections,

|

| 83 |

+

labels=labels

|

| 84 |

+

)

|

| 85 |

+

```

|

| 86 |

+

"""

|

| 87 |

+

font = cv2.FONT_HERSHEY_SIMPLEX

|

| 88 |

+

for i in range(len(detections)):

|

| 89 |

+

x1, y1, x2, y2 = detections.xyxy[i].astype(int)

|

| 90 |

+

class_id = (

|

| 91 |

+

detections.class_id[i] if detections.class_id is not None else None

|

| 92 |

+

)

|

| 93 |

+

idx = class_id if class_id is not None else i

|

| 94 |

+

color = (

|

| 95 |

+

self.color.by_idx(idx)

|

| 96 |

+

if isinstance(self.color, ColorPalette)

|

| 97 |

+

else self.color

|

| 98 |

+

)

|

| 99 |

+

cv2.rectangle(

|

| 100 |

+

img=scene,

|

| 101 |

+

pt1=(x1, y1),

|

| 102 |

+

pt2=(x2, y2),

|

| 103 |

+

color=color.as_bgr(),

|

| 104 |

+

thickness=self.thickness,

|

| 105 |

+

)

|

| 106 |

+

if skip_label:

|

| 107 |

+

continue

|

| 108 |

+

|

| 109 |

+

text = (

|

| 110 |

+

f"{class_id}"

|

| 111 |

+

if (labels is None or len(detections) != len(labels))

|

| 112 |

+

else labels[i]

|

| 113 |

+

)

|

| 114 |

+

|

| 115 |

+

text_width, text_height = cv2.getTextSize(

|