Commit 6d36e93

Parent(s): 7feb246

Update README

- README.md +107 -0
- static/query-expansion-model.jpg +0 -0

README.md CHANGED


---
license: apache-2.0
tags:
- unsloth
- query-expansion
datasets:
- s-emanuilov/query-expansion
base_model:
- Qwen/Qwen2.5-3B-Instruct
---

|

| 11 |

+

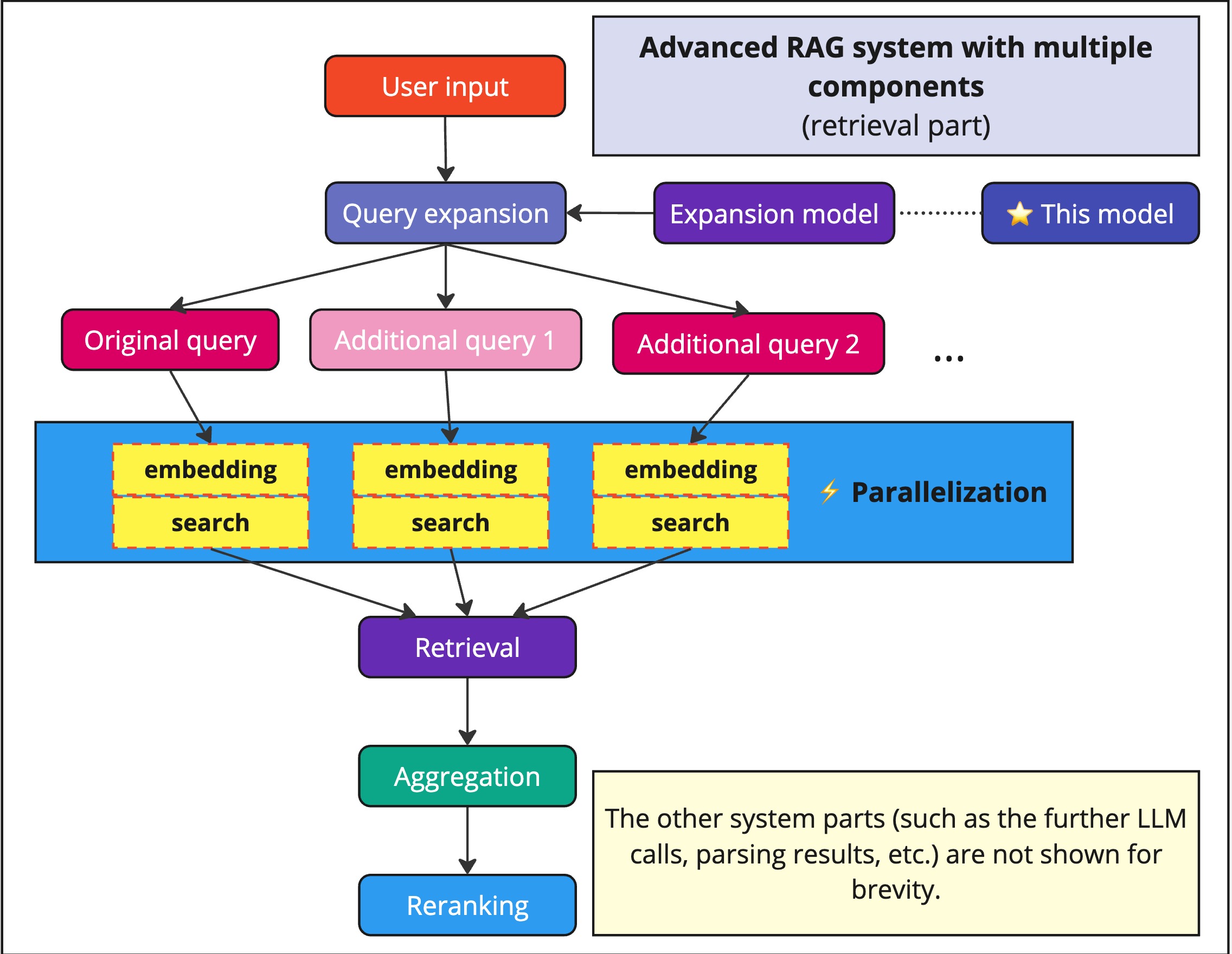

# Query Expansion Dataset - based on Qwen2.5-7B

|

| 12 |

+

|

| 13 |

+

Fine-tuned Qwen2.5-7B model for generating search query expansions.

|

| 14 |

+

Part of a collection of query expansion models available in different architectures and sizes.

|

| 15 |

+

## Overview

**Task:** Search query expansion
**Base model:** [Qwen2.5-7B](https://huggingface.co/Qwen/Qwen2.5-7B)
**Training data:** [Query Expansion Dataset](https://huggingface.co/datasets/s-emanuilov/query-expansion)

<img src="static/query-expansion-model.jpg" alt="Query Expansion Model" width="600px" />

## Variants

### LoRA adapters

- [Qwen2.5-3B](https://huggingface.co/s-emanuilov/query-expansion-Qwen2.5-3B)
- [Llama-3.2-3B](https://huggingface.co/s-emanuilov/query-expansion-Llama-3.2-3B)

### GGUF variants

- [Qwen2.5-3B-GGUF](https://huggingface.co/s-emanuilov/query-expansion-Qwen2.5-3B-GGUF)
- [Qwen2.5-7B-GGUF](https://huggingface.co/s-emanuilov/query-expansion-Qwen2.5-7B-GGUF)
- [Llama-3.2-3B-GGUF](https://huggingface.co/s-emanuilov/query-expansion-Llama-3.2-3B-GGUF)

Each GGUF model is available in several quantization formats: F16, Q8_0, Q5_K_M, Q4_K_M, and Q3_K_M.

## Details

This model is designed to enhance search and retrieval systems by generating semantically relevant query expansions.

It can be useful for:
- Advanced RAG systems
- Search enhancement
- Query preprocessing
- Low-latency query expansion
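For the RAG and search use cases listed above, one common pattern is to run the original query and each expansion as separate searches, then merge the ranked result lists. The sketch below uses reciprocal rank fusion (RRF), a general rank-merging technique that is not part of this model; the `k=60` constant, the function name, and the document IDs are illustrative assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists, k=60):
    """Fuse ranked lists of document IDs with reciprocal rank fusion (RRF).

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with 1-based ranks. k=60 is a commonly used default, assumed here.
    """
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical retrieval results: one ranked list for the original query
# and one for each model-generated expansion.
results = [
    ["doc_aapl_quote", "doc_fruit_farming", "doc_aapl_news"],  # "apple stock"
    ["doc_aapl_quote", "doc_aapl_chart"],  # "apple stock price"
    ["doc_aapl_analysis", "doc_aapl_news", "doc_aapl_quote"],  # "apple stock analysis"
]
print(reciprocal_rank_fusion(results))
# → ['doc_aapl_quote', 'doc_aapl_news', 'doc_aapl_analysis', 'doc_aapl_chart', 'doc_fruit_farming']
```

Documents retrieved by several query variants rise to the top, while a result matched by only one reading of the query (here the hypothetical fruit-farming page) drops toward the bottom.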

## Usage

```python
# Import unsloth before transformers so its patches are applied
from unsloth import FastLanguageModel
import torch
from transformers import TextStreamer

# Model configuration
MODEL_NAME = "s-emanuilov/query-expansion-Qwen2.5-7B"
MAX_SEQ_LENGTH = 2048
DTYPE = torch.float16  # a torch dtype, or None for auto-detection
LOAD_IN_4BIT = True

# Load model and tokenizer
model, tokenizer = FastLanguageModel.from_pretrained(
    model_name=MODEL_NAME,
    max_seq_length=MAX_SEQ_LENGTH,
    dtype=DTYPE,
    load_in_4bit=LOAD_IN_4BIT,
)

# Enable Unsloth's faster inference mode
FastLanguageModel.for_inference(model)

# Define prompt template
PROMPT_TEMPLATE = """Below is a search query. Generate relevant expansions and related terms that would help broaden and enhance the search results.

### Query:
{query}

### Expansions:
{output}"""

# Prepare input; output is left empty so the model completes it
query = "apple stock"
inputs = tokenizer(
    [PROMPT_TEMPLATE.format(query=query, output="")],
    return_tensors="pt",
).to("cuda")

# Generate, streaming tokens to stdout as they are produced
streamer = TextStreamer(tokenizer)
output = model.generate(
    **inputs,
    streamer=streamer,
    max_new_tokens=128,
)
```
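The streaming call above prints tokens but does not return the expansions as a list. One way to get them programmatically is to decode the generated IDs, e.g. `tokenizer.decode(output[0], skip_special_tokens=True)`, and parse the text after the `### Expansions:` marker. The helper below is a sketch that assumes one expansion per line, optionally prefixed with a list marker and wrapped in double quotes, as in the example that follows; `extract_expansions` is a hypothetical name, and the exact raw output format is not documented here, so adjust the parsing to what the model actually produces.

```python
import re

EXPANSIONS_MARKER = "### Expansions:"


def extract_expansions(generated_text, query=None):
    """Parse expansions from decoded model output (format assumed, see lead-in)."""
    # The decoded text includes the prompt, so keep only what follows the last marker
    _, sep, tail = generated_text.rpartition(EXPANSIONS_MARKER)
    if not sep:
        return []
    expansions, seen = [], set()
    for raw in tail.splitlines():
        stripped = raw.strip()
        if stripped.startswith("###"):
            break  # the model started a new prompt section; stop here
        # Drop list markers such as "- ", "* ", "1. " or "1) ", then surrounding quotes
        item = re.sub(r"^(?:[-*]|\d+[.)])\s+", "", stripped).strip().strip('"').strip()
        if not item:
            continue
        key = item.lower()
        # Skip case-insensitive duplicates and an echo of the original query
        if key in seen or (query is not None and key == query.strip().lower()):
            continue
        seen.add(key)
        expansions.append(item)
    return expansions


sample = '### Query:\napple stock\n\n### Expansions:\n- "apple stock price"\n- "apple stock analysis"\n'
print(extract_expansions(sample, query="apple stock"))
# → ['apple stock price', 'apple stock analysis']
```

With the Usage code above, this would be called as `extract_expansions(tokenizer.decode(output[0], skip_special_tokens=True), query=query)`.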

## Example

**Input:** "apple stock"
**Expansions:**
- "apple stock price"
- "how to invest in apple stocks"
- "apple stock analysis"
- "what is the future of apple stocks?"
- "understanding apple's stock market performance"
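For keyword search engines that accept Lucene-style query syntax (for example Elasticsearch's `query_string` query or Apache Solr's standard query parser), the example expansions above could be combined with the original query into a single `OR` query of quoted phrases. This is a minimal sketch: `build_or_query` is a hypothetical helper, and escaping only backslashes and double quotes inside each phrase is an assumption that may need adjusting for a given engine or analyzer.

```python
def build_or_query(query, expansions):
    """Combine a query and its expansions into one Lucene-style OR query.

    Each term becomes a quoted phrase; backslashes and double quotes inside
    a phrase are escaped. Case-insensitive duplicates are dropped.
    """
    phrases, seen = [], set()
    for term in [query, *expansions]:
        term = term.strip()
        if not term or term.lower() in seen:
            continue
        seen.add(term.lower())
        escaped = term.replace("\\", "\\\\").replace('"', '\\"')
        phrases.append(f'"{escaped}"')
    return " OR ".join(phrases)


# Expansions from the example above
expansions = [
    "apple stock price",
    "how to invest in apple stocks",
    "apple stock analysis",
    "what is the future of apple stocks?",
    "understanding apple's stock market performance",
]
print(build_or_query("apple stock", expansions))
```

Quoting each expansion as a phrase keeps multi-word expansions together instead of letting the engine match their words independently.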

## Citation

If you find my work helpful, feel free to give me a citation.

```
```

static/query-expansion-model.jpg ADDED