Update README.md

Browse files

README.md

CHANGED

|

@@ -1,12 +1,17 @@

|

|

| 1 |

---

|

| 2 |

library_name: transformers

|

| 3 |

tags:

|

| 4 |

-

-

|

|

|

|

|

|

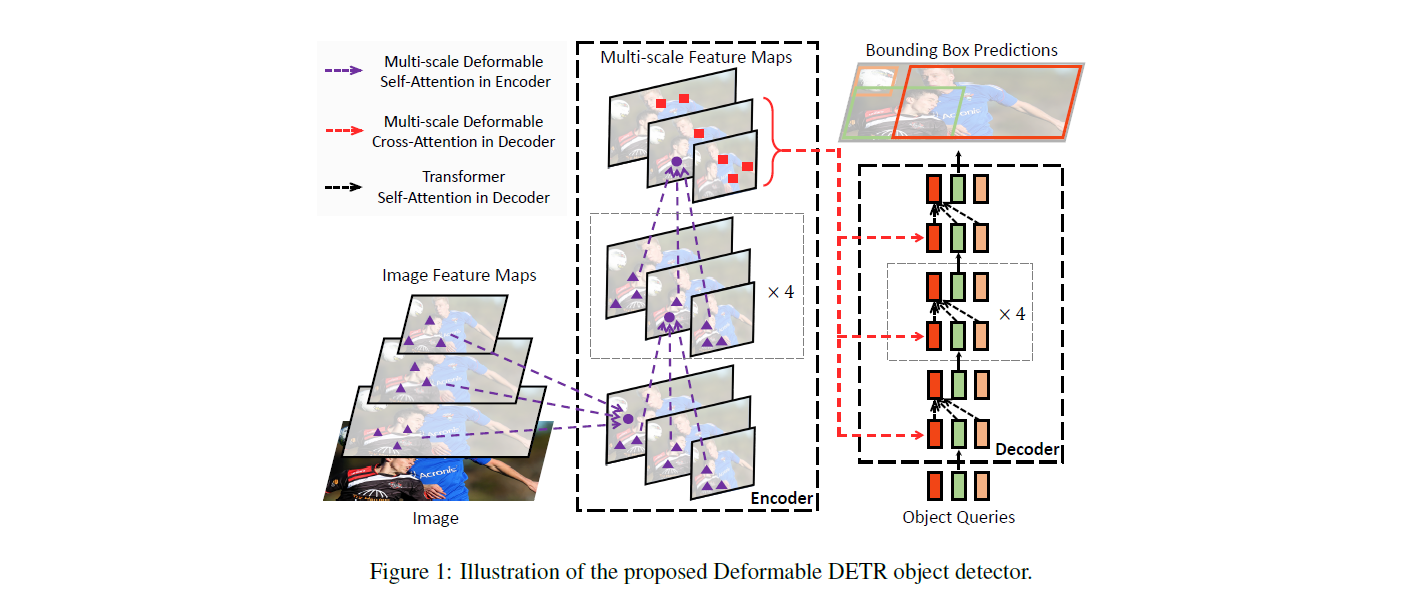

|

|

|

|

|

|

|

|

|

|

| 5 |

datasets:

|

| 6 |

-

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

---

|

| 11 |

|

| 12 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

|

@@ -14,32 +19,99 @@ should probably proofread and complete it, then remove this comment. -->

|

|

| 14 |

|

| 15 |

# Deformable-DETR-Document-Layout-Analyzer

|

| 16 |

|

| 17 |

-

This model was

|

| 18 |

|

| 19 |

## Model description

|

| 20 |

|

| 21 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

| 22 |

|

| 23 |

## Intended uses & limitations

|

| 24 |

|

| 25 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 26 |

|

| 27 |

-

|

|

|

|

| 28 |

|

| 29 |

-

|

|

|

|

| 30 |

|

| 31 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 32 |

|

| 33 |

### Training hyperparameters

|

| 34 |

|

| 35 |

The following hyperparameters were used during training:

|

| 36 |

- learning_rate: 5e-05

|

| 37 |

-

-

|

| 38 |

-

-

|

| 39 |

- seed: 42

|

| 40 |

- optimizer: Use adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

|

| 41 |

- lr_scheduler_type: cosine

|

| 42 |

-

- num_epochs:

|

| 43 |

|

| 44 |

### Framework versions

|

| 45 |

|

|

@@ -47,3 +119,33 @@ The following hyperparameters were used during training:

|

|

| 47 |

- Pytorch 2.6.0+cu124

|

| 48 |

- Datasets 2.21.0

|

| 49 |

- Tokenizers 0.21.0

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

---
library_name: transformers
tags:
- object-detection
- Document
- Layout
- Analysis
- DocLayNet
- mAP
datasets:
- ds4sd/DocLayNet
license: apache-2.0
base_model:
- SenseTime/deformable-detr
---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You
should probably proofread and complete it, then remove this comment. -->

# Deformable-DETR-Document-Layout-Analyzer

This model is a fine-tuned version of `SenseTime/deformable-detr` for Document Layout Analysis, trained on the full-sized public DocLayNet dataset (`ds4sd/DocLayNet`).

## Model description

Deformable DETR is an encoder-decoder transformer with a convolutional backbone. Unlike the original DETR, it replaces dense attention over image features with multi-scale deformable attention, in which each query attends to a small set of sampling points around a reference point across several feature-map resolutions. Two heads are added on top of the decoder outputs to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image; each object query looks for a particular object. The original DETR uses 100 object queries for COCO, while Deformable DETR uses 300 (the value is stored as `num_queries` in the model config).

The model is trained with a "bipartite matching loss": the predicted classes and bounding boxes of each of the N object queries are compared with the ground-truth annotations, padded up to the same length N (so if an image contains only 4 objects, the remaining N - 4 annotations get "no object" as class and no bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between the N queries and the N annotations. Next, a classification loss and a linear combination of the L1 and generalized IoU (GIoU) losses (for the bounding boxes) are used to optimize the parameters of the model. The original DETR uses standard cross-entropy for the classification term; Deformable DETR uses a sigmoid focal loss instead.
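
The matching step can be illustrated with a small, self-contained sketch. It is not the `transformers` implementation: it brute-forces the optimal one-to-one assignment (the problem the Hungarian algorithm solves efficiently) over a toy number of queries, and it uses the negative class probability as the classification cost, as in the original DETR matcher. As a simplification, both the L1 and GIoU terms use normalized `(x0, y0, x1, y1)` corner boxes (the library computes the L1 term on center-format boxes). The weights (1 for class, 5 for L1, 2 for GIoU) mirror the DETR paper's defaults but are assumptions here, and all function names are hypothetical.

```python
from itertools import permutations


def box_area(box):
    """Area of a box given as (x0, y0, x1, y1)."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def generalized_iou(a, b):
    """GIoU = IoU - (area(C) - area(A u B)) / area(C), C = smallest box enclosing A and B.
    Assumes non-degenerate boxes (positive width and height)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    enclosing = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return inter / union - (enclosing - union) / enclosing


def match(pred_probs, pred_boxes, gt_labels, gt_boxes, w_class=1.0, w_l1=5.0, w_giou=2.0):
    """Return (prediction_index, ground_truth_index) pairs minimizing the total cost.
    Predictions left unmatched are the ones trained towards "no object"."""
    num_preds, num_gt = len(pred_boxes), len(gt_boxes)

    def cost(i, j):
        class_cost = -pred_probs[i][gt_labels[j]]
        l1_cost = sum(abs(p - g) for p, g in zip(pred_boxes[i], gt_boxes[j]))
        giou_cost = -generalized_iou(pred_boxes[i], gt_boxes[j])
        return w_class * class_cost + w_l1 * l1_cost + w_giou * giou_cost

    # Brute force over every injective assignment of ground truths to predictions
    # (only feasible for toy sizes; the real matcher uses the Hungarian algorithm).
    best = min(
        permutations(range(num_preds), num_gt),
        key=lambda chosen: sum(cost(chosen[j], j) for j in range(num_gt)),
    )
    return [(best[j], j) for j in range(num_gt)]


# Toy example: 3 queries, 2 ground-truth objects, 2 classes
pred_probs = [[0.8, 0.1], [0.1, 0.7], [0.3, 0.3]]
pred_boxes = [(0.1, 0.1, 0.3, 0.3), (0.6, 0.6, 0.9, 0.9), (0.0, 0.5, 0.2, 0.9)]
gt_labels = [1, 0]
gt_boxes = [(0.6, 0.6, 0.9, 0.9), (0.1, 0.1, 0.3, 0.3)]
pairs = match(pred_probs, pred_boxes, gt_labels, gt_boxes)
print(pairs)  # [(1, 0), (0, 1)]; query 2 is left as "no object"
```

Query 1 takes the first ground-truth box and query 0 the second, because their boxes coincide exactly (L1 cost 0, GIoU 1); query 2 would then be pushed towards the "no object" class during training.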

## Intended uses & limitations

You can use the model to predict bounding boxes for the 11 document-layout classes of DocLayNet (Caption, Footnote, Formula, List-item, Page-footer, Page-header, Picture, Section-header, Table, Text and Title).

### How to use

```python
from transformers import AutoImageProcessor, DeformableDetrForObjectDetection
import torch
from PIL import Image
import requests

# Replace with the URL of a document page image
url = "string-url-of-a-Document_page"
image = Image.open(requests.get(url, stream=True).raw).convert("RGB")

processor = AutoImageProcessor.from_pretrained("pascalrai/Deformable-DETR-Document-Layout-Analyzer")
model = DeformableDetrForObjectDetection.from_pretrained("pascalrai/Deformable-DETR-Document-Layout-Analyzer")

inputs = processor(images=image, return_tensors="pt")
with torch.no_grad():  # inference only, no gradients needed
    outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to absolute
# (x_min, y_min, x_max, y_max) boxes; target_sizes expects (height, width),
# whereas PIL's image.size is (width, height), hence the [::-1]
target_sizes = torch.tensor([image.size[::-1]])
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.5)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```
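
To make the post-processing step less of a black box, here is a plain-Python sketch of what, as far as we understand, `post_process_object_detection` does for Deformable DETR: it applies a sigmoid to every (query, class) logit, keeps the `top_k` highest-scoring pairs (assumed here to default to 100), converts the selected normalized `(center_x, center_y, width, height)` boxes to corner format, scales them by the image width and height, and drops scores at or below `threshold`. It is an illustration, not the library code; the function names are hypothetical, and `target_size` is `(height, width)`.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def center_to_corners(cx, cy, w, h):
    """(center_x, center_y, width, height) -> (x_min, y_min, x_max, y_max)."""
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def post_process(logits, pred_boxes, target_size, threshold=0.5, top_k=100):
    """logits: one row of raw class logits per query; pred_boxes: one normalized
    (cx, cy, w, h) box per query; target_size: (height, width) in pixels."""
    # Score every (query, class) pair independently; no softmax across classes
    scored = [
        (sigmoid(logit), query, label)
        for query, row in enumerate(logits)
        for label, logit in enumerate(row)
    ]
    # Keep the top_k pairs over all queries and classes, highest score first
    scored.sort(key=lambda item: item[0], reverse=True)
    img_h, img_w = target_size
    detections = []
    for score, query, label in scored[:top_k]:
        if score <= threshold:
            break  # sorted descending, so every remaining score is lower too
        x0, y0, x1, y1 = center_to_corners(*pred_boxes[query])
        # Scale normalized coordinates to absolute pixel coordinates
        box = [x0 * img_w, y0 * img_h, x1 * img_w, y1 * img_h]
        detections.append({"score": score, "label": label, "box": box})
    return detections


# Toy output: 2 queries, 2 classes, on a page 1000 px high and 800 px wide
logits = [[3.0, -3.0], [-4.0, -5.0]]
pred_boxes = [(0.5, 0.5, 0.2, 0.4), (0.1, 0.1, 0.1, 0.1)]
detections = post_process(logits, pred_boxes, target_size=(1000, 800))
for det in detections:
    print(det["label"], round(det["score"], 3), [round(v, 1) for v in det["box"]])
```

Because each class gets its own sigmoid score, a single query can in principle yield more than one detection (one per class) if several of its class scores pass the threshold.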

## Evaluation on DocLayNet

Evaluation of the trained model on the DocLayNet test set after 3 epochs:

```
{'map': 0.6086,
 'map_50': 0.836,
 'map_75': 0.6662,
 'map_small': 0.3269,
 'map_medium': 0.501,
 'map_large': 0.6712,
 'mar_1': 0.3336,
 'mar_10': 0.7113,
 'mar_100': 0.7596,
 'mar_small': 0.4667,
 'mar_medium': 0.6717,
 'mar_large': 0.8436,
 'map_0': 0.5709,
 'mar_100_0': 0.7639,
 'map_1': 0.4685,
 'mar_100_1': 0.7468,
 'map_2': 0.5776,
 'mar_100_2': 0.7163,
 'map_3': 0.7143,
 'mar_100_3': 0.8251,
 'map_4': 0.4056,
 'mar_100_4': 0.533,
 'map_5': 0.5095,
 'mar_100_5': 0.6686,
 'map_6': 0.6826,
 'mar_100_6': 0.8387,
 'map_7': 0.5859,
 'mar_100_7': 0.7308,
 'map_8': 0.7871,
 'mar_100_8': 0.8852,
 'map_9': 0.7898,
 'mar_100_9': 0.8617,
 'map_10': 0.6034,
 'mar_100_10': 0.7854}
```
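
The overall `map` and `mar_100` values appear to be the unweighted means of the eleven per-class values (`map_0` through `map_10` and `mar_100_0` through `mar_100_10`), which is how COCO-style mAP averages over categories. The short check below recomputes both from the numbers reported above and ranks the classes by AP. It assumes the numeric suffix is the model's label id; to get class names, look each id up in `model.config.id2label`.

```python
# Per-class values copied from the evaluation output above (suffix = class id)
map_per_class = [0.5709, 0.4685, 0.5776, 0.7143, 0.4056, 0.5095,
                 0.6826, 0.5859, 0.7871, 0.7898, 0.6034]
mar_100_per_class = [0.7639, 0.7468, 0.7163, 0.8251, 0.533, 0.6686,
                     0.8387, 0.7308, 0.8852, 0.8617, 0.7854]

# Macro average: every class counts equally, regardless of how many boxes it has
macro_map = sum(map_per_class) / len(map_per_class)
macro_mar_100 = sum(mar_100_per_class) / len(mar_100_per_class)
print(f"mean per-class AP: {macro_map:.4f} (reported map: 0.6086)")
print(f"mean per-class AR@100: {macro_mar_100:.4f} (reported mar_100: 0.7596)")

# Rank class ids from weakest to strongest AP
ranked = sorted(range(len(map_per_class)), key=lambda k: map_per_class[k])
print("weakest class ids:", ranked[:3])
print("strongest class ids:", ranked[-3:])
```

The recomputed means (about 0.6087 and 0.7596) agree with the reported values up to the rounding of the per-class numbers.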

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05
- eff_train_batch_size: 12
- eff_eval_batch_size: 12
- seed: 42
- optimizer: adamw_torch (AdamW) with betas=(0.9,0.999), epsilon=1e-08 and no additional optimizer arguments
- lr_scheduler_type: cosine
- num_epochs: 10
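
As we understand it, `lr_scheduler_type: cosine` in the Hugging Face `Trainer` decays the learning rate from `learning_rate` to 0 along a half cosine over all training steps, after an optional linear warmup. No warmup is listed above, so the sketch below assumes `warmup_steps=0`. `NUM_TRAIN_IMAGES` is a hypothetical placeholder; together with the effective batch size of 12 and 10 epochs it only sets the total step count.

```python
import math

BASE_LR = 5e-05          # learning_rate from the list above
EFF_BATCH_SIZE = 12      # eff_train_batch_size
NUM_EPOCHS = 10          # num_epochs
NUM_TRAIN_IMAGES = 1200  # hypothetical placeholder, not the real DocLayNet split size


def cosine_lr(step, total_steps, base_lr=BASE_LR, warmup_steps=0):
    """Linear warmup, then half-cosine decay from base_lr to 0 at total_steps."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


steps_per_epoch = math.ceil(NUM_TRAIN_IMAGES / EFF_BATCH_SIZE)
total_steps = steps_per_epoch * NUM_EPOCHS
for epoch in (0, 3, 5, 10):
    lr = cosine_lr(epoch * steps_per_epoch, total_steps)
    print(f"after {epoch} epochs: lr = {lr:.2e}")
```

Because the schedule depends only on the fraction of training completed, the learning rate after 3 of 10 epochs is about 79% of the base rate (roughly 3.97e-05), whatever the dataset size.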

### Framework versions

- Pytorch 2.6.0+cu124
- Datasets 2.21.0
- Tokenizers 0.21.0

### BibTeX entry and citation info

```bibtex
@misc{https://doi.org/10.48550/arxiv.2010.04159,
  doi = {10.48550/ARXIV.2010.04159},
  url = {https://arxiv.org/abs/2010.04159},
  author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},
  keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences, FOS: Computer and information sciences},
  title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},
  publisher = {arXiv},
  year = {2020},
  copyright = {arXiv.org perpetual, non-exclusive license}
}

@inproceedings{doclaynet2022,
  title = {DocLayNet: A Large Human-Annotated Dataset for Document-Layout Segmentation},
  doi = {10.1145/3534678.3539043},
  url = {https://doi.org/10.1145/3534678.3539043},
  author = {Pfitzmann, Birgit and Auer, Christoph and Dolfi, Michele and Nassar, Ahmed S and Staar, Peter W J},
  year = {2022},
  isbn = {9781450393850},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  booktitle = {Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining},
  pages = {3743--3751},
  numpages = {9},
  location = {Washington DC, USA},
  series = {KDD '22}
}
```