
# Global Context Vision Transformer (GC ViT)

This repository contains the official PyTorch implementation of **Global Context Vision Transformers** (ICML 2023).

[Global Context Vision Transformers](https://arxiv.org/pdf/2206.09959.pdf) \
[Ali Hatamizadeh](https://research.nvidia.com/person/ali-hatamizadeh),
[Hongxu (Danny) Yin](https://scholar.princeton.edu/hongxu),
[Greg Heinrich](https://developer.nvidia.com/blog/author/gheinrich/),
[Jan Kautz](https://jankautz.com/),
and [Pavlo Molchanov](https://www.pmolchanov.com/).

GC ViT achieves state-of-the-art results across image classification, object detection and semantic segmentation tasks. On the ImageNet-1K dataset for classification, GC ViT variants with `51M`, `90M` and `201M` parameters achieve `84.3`, `85.0` and `85.7` Top-1 accuracy, respectively, surpassing comparably-sized prior art such as the CNN-based ConvNeXt and the ViT-based Swin Transformer.

<p align="center">
<img src="https://github.com/NVlabs/GCVit/assets/26806394/d1820d6d-3aef-470e-a1d3-af370f1c1f77" width=63% height=63% class="center">
</p>

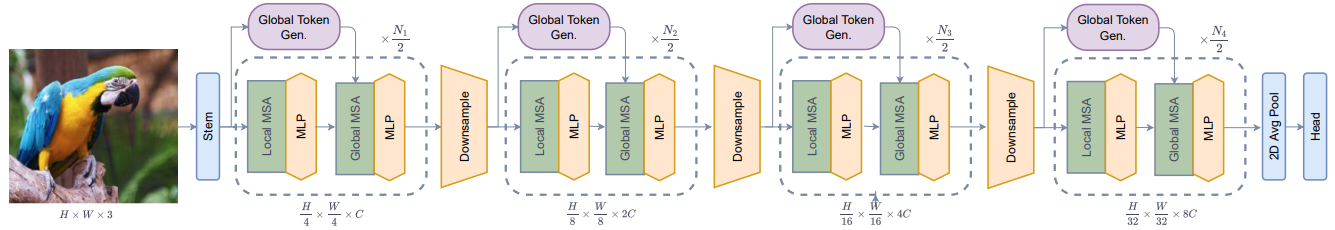

The architecture of GC ViT is demonstrated in the following:

## Introduction

**GC ViT** leverages global context self-attention modules, in conjunction with local self-attention, to model both long- and short-range spatial interactions effectively and efficiently, without the need for expensive operations such as computing attention masks or shifting local windows.

<p align="center">
<img src="https://github.com/NVlabs/GCVit/assets/26806394/da64f22a-e7af-4577-8884-b08ba4e24e49" width=72% height=72% class="center">
</p>
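To make the difference between local and global context self-attention concrete, here is a minimal, dependency-free Python sketch. It is not the official GC ViT code and all function names are hypothetical: it drops the learned Q/K/V projections, multiple heads and any positional bias, and it replaces the model's global token generator with plain average pooling (an assumption made for brevity). Local attention takes queries, keys and values from each window, while global context attention reuses one set of image-wide query tokens for every window and attends to that window's keys and values; no attention masks or window shifts are needed.

```python
import math
from typing import List

# Illustrative sketch only; names are hypothetical, not the GCViT API.
Vector = List[float]
Grid = List[List[Vector]]  # H x W grid of d-dimensional token vectors


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: List[Vector], keys: List[Vector], values: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention without learned projections."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out


def partition_windows(grid: Grid, w: int) -> List[List[Vector]]:
    """Split the grid into non-overlapping w x w windows, each flattened row-major."""
    H, W = len(grid), len(grid[0])
    return [
        [grid[r][c] for r in range(r0, r0 + w) for c in range(c0, c0 + w)]
        for r0 in range(0, H, w)
        for c0 in range(0, W, w)
    ]


def global_query_tokens(grid: Grid, w: int) -> List[Vector]:
    """Assumed stand-in for the global token generator: average-pool the whole
    grid down to w x w tokens so that every window sees image-wide context."""
    H, W, d = len(grid), len(grid[0]), len(grid[0][0])
    sh, sw = H // w, W // w
    tokens = []
    for i in range(w):
        for j in range(w):
            cells = [grid[r][c] for r in range(i * sh, (i + 1) * sh) for c in range(j * sw, (j + 1) * sw)]
            tokens.append([sum(v[k] for v in cells) / len(cells) for k in range(d)])
    return tokens


def local_and_global_attention(grid: Grid, w: int):
    """Per-window outputs of local attention (Q, K, V from the window) and
    global context attention (shared global Q; K, V from the window)."""
    assert len(grid) % w == 0 and len(grid[0]) % w == 0, "grid must be divisible by window size"
    q_global = global_query_tokens(grid, w)  # computed once, shared by all windows
    local_out, global_out = [], []
    for window in partition_windows(grid, w):
        local_out.append(attention(window, window, window))
        global_out.append(attention(q_global, window, window))
    return local_out, global_out


# Usage: a 4 x 4 grid of 2-d tokens with 2 x 2 windows gives 4 windows of 4 tokens each.
grid = [[[float(r), float(c)] for c in range(4)] for r in range(4)]
local_out, global_out = local_and_global_attention(grid, w=2)
print(len(local_out), len(global_out[0]))  # prints: 4 4
```

Because the global queries are computed once from the whole feature map, each window's output can carry information from outside that window without shifting windows between blocks.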

## ImageNet Benchmarks

**ImageNet-1K Pretrained Models**

<table>
  <tr>
    <th>Model Variant</th>
    <th>Acc@1</th>
    <th>#Params(M)</th>
    <th>FLOPs(G)</th>
    <th>Download</th>
  </tr>
  <tr>
    <td>GC ViT-XXT</td>
    <th>79.9</th>
    <td>12</td>
    <td>2.1</td>
    <td><a href="https://drive.google.com/uc?export=download&id=1apSIWQCa5VhWLJws8ugMTuyKzyayw4Eh">model</a></td>
  </tr>
  <tr>
    <td>GC ViT-XT</td>
    <th>82.0</th>
    <td>20</td>
    <td>2.6</td>
    <td><a href="https://drive.google.com/uc?export=download&id=1OgSbX73AXmE0beStoJf2Jtda1yin9t9m">model</a></td>
  </tr>
  <tr>
    <td>GC ViT-T</td>
    <th>83.5</th>
    <td>28</td>
    <td>4.7</td>
    <td><a href="https://drive.google.com/uc?export=download&id=11M6AsxKLhfOpD12Nm_c7lOvIIAn9cljy">model</a></td>
  </tr>
  <tr>
    <td>GC ViT-T2</td>
    <th>83.7</th>
    <td>34</td>
    <td>5.5</td>
    <td><a href="https://drive.google.com/uc?export=download&id=1cTD8VemWFiwAx0FB9cRMT-P4vRuylvmQ">model</a></td>
  </tr>
  <tr>
    <td>GC ViT-S</td>
    <th>84.3</th>
    <td>51</td>
    <td>8.5</td>
    <td><a href="https://drive.google.com/uc?export=download&id=1Nn6ABKmYjylyWC0I41Q3oExrn4fTzO9Y">model</a></td>
  </tr>
  <tr>
    <td>GC ViT-S2</td>
    <th>84.8</th>
    <td>68</td>
    <td>10.7</td>
    <td><a href="https://drive.google.com/uc?export=download&id=1E5TtYpTqILznjBLLBTlO5CGq343RbEan">model</a></td>
  </tr>
  <tr>
    <td>GC ViT-B</td>
    <th>85.0</th>
    <td>90</td>
    <td>14.8</td>
    <td><a href="https://drive.google.com/uc?export=download&id=1PF7qfxKLcv_ASOMetDP75n8lC50gaqyH">model</a></td>
  </tr>
  <tr>
    <td>GC ViT-L</td>
    <th>85.7</th>
    <td>201</td>
    <td>32.6</td>
    <td><a href="https://drive.google.com/uc?export=download&id=1Lkz1nWKTwCCUR7yQJM6zu_xwN1TR0mxS">model</a></td>
  </tr>
</table>
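A small helper can make the accuracy/compute trade-off in this table concrete. The sketch below is not part of the GC ViT codebase: the `Variant` record and `best_under_budget` function are hypothetical names, and the numbers are transcribed from the ImageNet-1K table above. It returns the most accurate variant whose FLOPs fit a given budget.

```python
from typing import List, NamedTuple, Optional


# Hypothetical record type; fields mirror the table columns.
class Variant(NamedTuple):
    name: str
    top1: float    # ImageNet-1K Acc@1 (%)
    params_m: int  # parameters (millions)
    gflops: float  # FLOPs (G)


# Values transcribed from the ImageNet-1K table above.
IMAGENET_1K: List[Variant] = [
    Variant("GC ViT-XXT", 79.9, 12, 2.1),
    Variant("GC ViT-XT", 82.0, 20, 2.6),
    Variant("GC ViT-T", 83.5, 28, 4.7),
    Variant("GC ViT-T2", 83.7, 34, 5.5),
    Variant("GC ViT-S", 84.3, 51, 8.5),
    Variant("GC ViT-S2", 84.8, 68, 10.7),
    Variant("GC ViT-B", 85.0, 90, 14.8),
    Variant("GC ViT-L", 85.7, 201, 32.6),
]


def best_under_budget(variants: List[Variant], max_gflops: float) -> Optional[Variant]:
    """Most accurate variant whose FLOPs fit the budget, or None if none fit."""
    fitting = [v for v in variants if v.gflops <= max_gflops]
    return max(fitting, key=lambda v: v.top1, default=None)


print(best_under_budget(IMAGENET_1K, 5.0).name)  # prints: GC ViT-T
```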

**ImageNet-21K Pretrained Models**

<table>
  <tr>
    <th>Model Variant</th>
    <th>Resolution</th>
    <th>Acc@1</th>
    <th>#Params(M)</th>
    <th>FLOPs(G)</th>
    <th>Download</th>
  </tr>
  <tr>
    <td>GC ViT-L</td>
    <td>224 x 224</td>
    <th>86.6</th>
    <td>201</td>
    <td>32.6</td>
    <td><a href="https://drive.google.com/uc?export=download&id=1maGDr6mJkLyRTUkspMzCgSlhDzNRFGEf">model</a></td>
  </tr>
  <tr>
    <td>GC ViT-L</td>
    <td>384 x 384</td>
    <th>87.4</th>
    <td>201</td>
    <td>120.4</td>
    <td><a href="https://drive.google.com/uc?export=download&id=1P-IEhvQbJ3FjnunVkM1Z9dEpKw-tsuWv">model</a></td>
  </tr>
</table>

## Citation

Please consider citing the GC ViT paper if it is useful for your work:

```
@inproceedings{hatamizadeh2023global,
  title={Global context vision transformers},
  author={Hatamizadeh, Ali and Yin, Hongxu and Heinrich, Greg and Kautz, Jan and Molchanov, Pavlo},
  booktitle={International Conference on Machine Learning},
  pages={12633--12646},
  year={2023},
  organization={PMLR}
}
```

## Licenses

Copyright © 2023, NVIDIA Corporation. All rights reserved.

This work is made available under the NVIDIA Source Code License-NC. Click [here](LICENSE) to view a copy of this license.

The pre-trained models are shared under [CC-BY-NC-SA-4.0](https://creativecommons.org/licenses/by-nc-sa/4.0/). If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

For license information regarding timm, please refer to its [repository](https://github.com/rwightman/pytorch-image-models).

For license information regarding the ImageNet dataset, please refer to the ImageNet [official website](https://www.image-net.org/).