Upload folder using huggingface_hub

- .gitattributes +3 -0

- README.md +143 -0

- aya-expanse-32B.png +3 -0

- config.json +27 -0

- generation_config.json +7 -0

- model-00001-of-00014.safetensors +3 -0

- model-00002-of-00014.safetensors +3 -0

- model-00003-of-00014.safetensors +3 -0

- model-00004-of-00014.safetensors +3 -0

- model-00005-of-00014.safetensors +3 -0

- model-00006-of-00014.safetensors +3 -0

- model-00007-of-00014.safetensors +3 -0

- model-00008-of-00014.safetensors +3 -0

- model-00009-of-00014.safetensors +3 -0

- model-00010-of-00014.safetensors +3 -0

- model-00011-of-00014.safetensors +3 -0

- model-00012-of-00014.safetensors +3 -0

- model-00013-of-00014.safetensors +3 -0

- model-00014-of-00014.safetensors +3 -0

- model.safetensors.index.json +329 -0

- special_tokens_map.json +23 -0

- tokenizer.json +3 -0

- tokenizer_config.json +322 -0

- winrates_dolly_32b.png +0 -0

- winrates_marenahard.png +0 -0

- winrates_marenahard_complete.png +3 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

aya-expanse-32B.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

winrates_marenahard_complete.png filter=lfs diff=lfs merge=lfs -text
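As a rough illustration of what the three added rules do, the sketch below checks a few file names from this commit against the LFS patterns visible in this diff. It uses Python's `fnmatch.fnmatchcase` as an approximation of gitattributes matching (an assumption: Git's own matcher has extra rules for directories and `**`), and the helper name `is_lfs_tracked` is hypothetical. Rules outside the displayed hunk are not modelled.

```python
from fnmatch import fnmatchcase

# LFS patterns visible in this hunk (lines 33-38 of .gitattributes).
# Rules earlier in the file are not shown in the diff and are omitted here.
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "tokenizer.json",
    "aya-expanse-32B.png",
    "winrates_marenahard_complete.png",
]


def is_lfs_tracked(filename: str) -> bool:
    """Return True if a bare file name matches any of the listed patterns."""
    return any(fnmatchcase(filename, pattern) for pattern in LFS_PATTERNS)


for name in ["tokenizer.json", "config.json", "winrates_dolly_32b.png"]:
    print(name, is_lfs_tracked(name))
```

Under these assumptions only `tokenizer.json` matches; `config.json` and `winrates_dolly_32b.png` are stored as regular Git blobs as far as these six rules are concerned.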

|

README.md

ADDED

|

@@ -0,0 +1,143 @@

|


| 1 |

+

---

|

| 2 |

+

inference: false

|

| 3 |

+

library_name: transformers

|

| 4 |

+

language:

|

| 5 |

+

- en

|

| 6 |

+

- fr

|

| 7 |

+

- de

|

| 8 |

+

- es

|

| 9 |

+

- it

|

| 10 |

+

- pt

|

| 11 |

+

- ja

|

| 12 |

+

- ko

|

| 13 |

+

- zh

|

| 14 |

+

- ar

|

| 15 |

+

- el

|

| 16 |

+

- fa

|

| 17 |

+

- pl

|

| 18 |

+

- id

|

| 19 |

+

- cs

|

| 20 |

+

- he

|

| 21 |

+

- hi

|

| 22 |

+

- nl

|

| 23 |

+

- ro

|

| 24 |

+

- ru

|

| 25 |

+

- tr

|

| 26 |

+

- uk

|

| 27 |

+

- vi

|

| 28 |

+

license: cc-by-nc-4.0

|

| 29 |

+

extra_gated_prompt: "By submitting this form, you agree to the [License Agreement](https://cohere.com/c4ai-cc-by-nc-license) and acknowledge that the information you provide will be collected, used, and shared in accordance with Cohere’s [Privacy Policy](https://cohere.com/privacy). You’ll receive email updates about C4AI and Cohere research, events, products and services. You can unsubscribe at any time."

|

| 30 |

+

extra_gated_fields:

|

| 31 |

+

Name: text

|

| 32 |

+

Affiliation: text

|

| 33 |

+

Country: country

|

| 34 |

+

I agree to use this model for non-commercial use ONLY: checkbox

|

| 35 |

+

---

|

| 36 |

+

|

| 37 |

+

# Model Card for Aya-Expanse-32B

|

| 38 |

+

|

| 39 |

+

<img src="aya-expanse-32B.png" width="650" style="margin-left:auto; margin-right:auto; display:block"/>

|

| 40 |

+

|

| 41 |

+

**Aya Expanse 32B** is an open-weight research release of a model with highly advanced multilingual capabilities. It focuses on pairing a highly performant pre-trained [Command family](https://huggingface.co/CohereForAI/c4ai-command-r-plus) of models with the result of a year’s dedicated research from [Cohere For AI](https://cohere.for.ai/), including [data arbitrage](https://arxiv.org/pdf/2408.14960), [multilingual preference training](https://arxiv.org/abs/2407.02552), [safety tuning](https://arxiv.org/abs/2406.18682), and [model merging](https://arxiv.org/abs/2410.10801). The result is a powerful multilingual large language model serving 23 languages.

|

| 42 |

+

|

| 43 |

+

This model card corresponds to the 32-billion-parameter version of the Aya Expanse model. We also released an 8-billion-parameter version, which you can find [here](https://huggingface.co/CohereForAI/aya-expanse-8B).

|

| 44 |

+

|

| 45 |

+

- Developed by: [Cohere For AI](https://cohere.for.ai/)

|

| 46 |

+

- Point of Contact: Cohere For AI: [cohere.for.ai](https://cohere.for.ai/)

|

| 47 |

+

- License: [CC-BY-NC](https://cohere.com/c4ai-cc-by-nc-license); use also requires adhering to [C4AI's Acceptable Use Policy](https://docs.cohere.com/docs/c4ai-acceptable-use-policy)

|

| 48 |

+

- Model: Aya Expanse 32B

|

| 49 |

+

- Model Size: 32 billion parameters

|

| 50 |

+

|

| 51 |

+

### Supported Languages

|

| 52 |

+

|

| 53 |

+

We cover 23 languages: Arabic, Chinese (simplified & traditional), Czech, Dutch, English, French, German, Greek, Hebrew, Hindi, Indonesian, Italian, Japanese, Korean, Persian, Polish, Portuguese, Romanian, Russian, Spanish, Turkish, Ukrainian, and Vietnamese.

|

| 54 |

+

|

| 55 |

+

### Try it: Aya Expanse in Action

|

| 56 |

+

|

| 57 |

+

Use the [Cohere playground](https://dashboard.cohere.com/playground/chat) or our [Hugging Face Space](https://huggingface.co/spaces/CohereForAI/aya_expanse) for interactive exploration.

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

### How to Use Aya Expanse

|

| 61 |

+

|

| 62 |

+

Install the transformers library and load Aya Expanse 32B as follows:

|

| 63 |

+

|

| 64 |

+

```python

|

| 65 |

+

from transformers import AutoTokenizer, AutoModelForCausalLM

|

| 66 |

+

|

| 67 |

+

model_id = "CohereForAI/aya-expanse-32b"

|

| 68 |

+

tokenizer = AutoTokenizer.from_pretrained(model_id)

|

| 69 |

+

model = AutoModelForCausalLM.from_pretrained(model_id)

|

| 70 |

+

|

| 71 |

+

# Format message with the chat template

|

| 72 |

+

messages = [{"role": "user", "content": "Anneme onu ne kadar sevdiğimi anlatan bir mektup yaz"}]

|

| 73 |

+

input_ids = tokenizer.apply_chat_template(messages, tokenize=True, add_generation_prompt=True, return_tensors="pt")

|

| 74 |

+

## <BOS_TOKEN><|START_OF_TURN_TOKEN|><|USER_TOKEN|>Anneme onu ne kadar sevdiğimi anlatan bir mektup yaz<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>

|

| 75 |

+

|

| 76 |

+

gen_tokens = model.generate(

|

| 77 |

+

    input_ids,

|

| 78 |

+

    max_new_tokens=100,

|

| 79 |

+

    do_sample=True,

|

| 80 |

+

    temperature=0.3,

|

| 81 |

+

)

|

| 82 |

+

|

| 83 |

+

gen_text = tokenizer.decode(gen_tokens[0])

|

| 84 |

+

print(gen_text)

|

| 85 |

+

```
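To make the prompt layout in the comment above concrete, here is a minimal sketch that rebuilds the same string by hand. It is not the tokenizer's actual template, which ships with the tokenizer files and may handle system prompts or whitespace differently. The helper `render_prompt` is hypothetical, and mapping the `assistant` role to `<|CHATBOT_TOKEN|>` is an assumption based on the generation prompt shown above.

```python
# Special-token strings copied from the rendered prompt in the comment above.
BOS = "<BOS_TOKEN>"
START = "<|START_OF_TURN_TOKEN|>"
END = "<|END_OF_TURN_TOKEN|>"
ROLE_TOKENS = {
    "user": "<|USER_TOKEN|>",
    # Assumption: assistant turns are marked with the chatbot token.
    "assistant": "<|CHATBOT_TOKEN|>",
}


def render_prompt(messages, add_generation_prompt=True):
    """Concatenate chat turns into a single prompt string (illustrative only)."""
    parts = [BOS]
    for message in messages:
        role_token = ROLE_TOKENS[message["role"]]
        parts.append(f"{START}{role_token}{message['content']}{END}")
    if add_generation_prompt:
        # Open a new chatbot turn so the model continues from here.
        parts.append(f"{START}{ROLE_TOKENS['assistant']}")
    return "".join(parts)


messages = [{"role": "user", "content": "Anneme onu ne kadar sevdiğimi anlatan bir mektup yaz"}]
print(render_prompt(messages))
```

In practice `tokenizer.apply_chat_template` should remain the source of truth; a hand-rolled renderer like this is mainly useful for inspecting or debugging prompts.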

|

| 86 |

+

|

| 87 |

+

### Example Notebooks

|

| 88 |

+

|

| 89 |

+

**Fine-Tuning:**

|

| 90 |

+

- [Detailed Fine-Tuning Notebook](https://colab.research.google.com/drive/1ryPYXzqb7oIn2fchMLdCNSIH5KfyEtv4).

|

| 91 |

+

|

| 92 |

+

**Community-Contributed Use Cases:**

|

| 93 |

+

|

| 94 |

+

The following notebooks contributed by *Cohere For AI Community* members show how Aya Expanse can be used for different use cases:

|

| 95 |

+

- [Multilingual Writing Assistant](https://colab.research.google.com/drive/1SRLWQ0HdYN_NbRMVVUHTDXb-LSMZWF60)

|

| 96 |

+

- [AyaMCooking](https://colab.research.google.com/drive/1-cnn4LXYoZ4ARBpnsjQM3sU7egOL_fLB?usp=sharing)

|

| 97 |

+

- [Multilingual Question-Answering System](https://colab.research.google.com/drive/1bbB8hzyzCJbfMVjsZPeh4yNEALJFGNQy?usp=sharing)

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

## Model Details

|

| 101 |

+

|

| 102 |

+

**Input**: Models input text only.

|

| 103 |

+

|

| 104 |

+

**Output**: Models generate text only.

|

| 105 |

+

|

| 106 |

+

**Model Architecture**: Aya Expanse 32B is an auto-regressive language model that uses an optimized transformer architecture. Post-training includes supervised finetuning, preference training, and model merging.

|

| 107 |

+

|

| 108 |

+

**Languages covered**: The model is particularly optimized for multilinguality and supports the following languages: Arabic, Chinese (simplified & traditional), Czech, Dutch, English, French, German, Greek, Hebrew, Hindi, Indonesian, Italian, Japanese, Korean, Persian, Polish, Portuguese, Romanian, Russian, Spanish, Turkish, Ukrainian, and Vietnamese.

|

| 109 |

+

|

| 110 |

+

**Context length**: 128K

|

| 111 |

+

|

| 112 |

+

### Evaluation

|

| 113 |

+

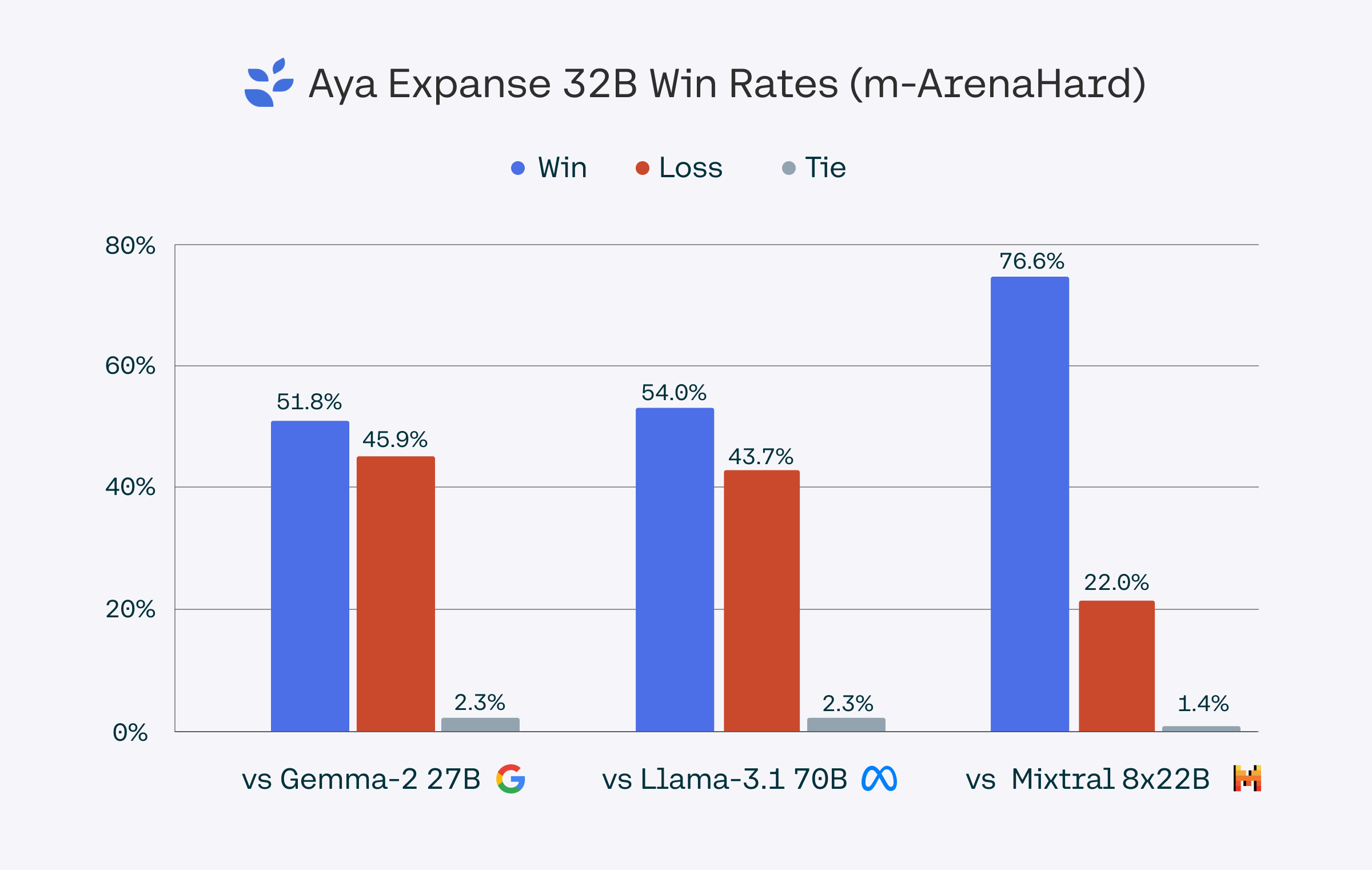

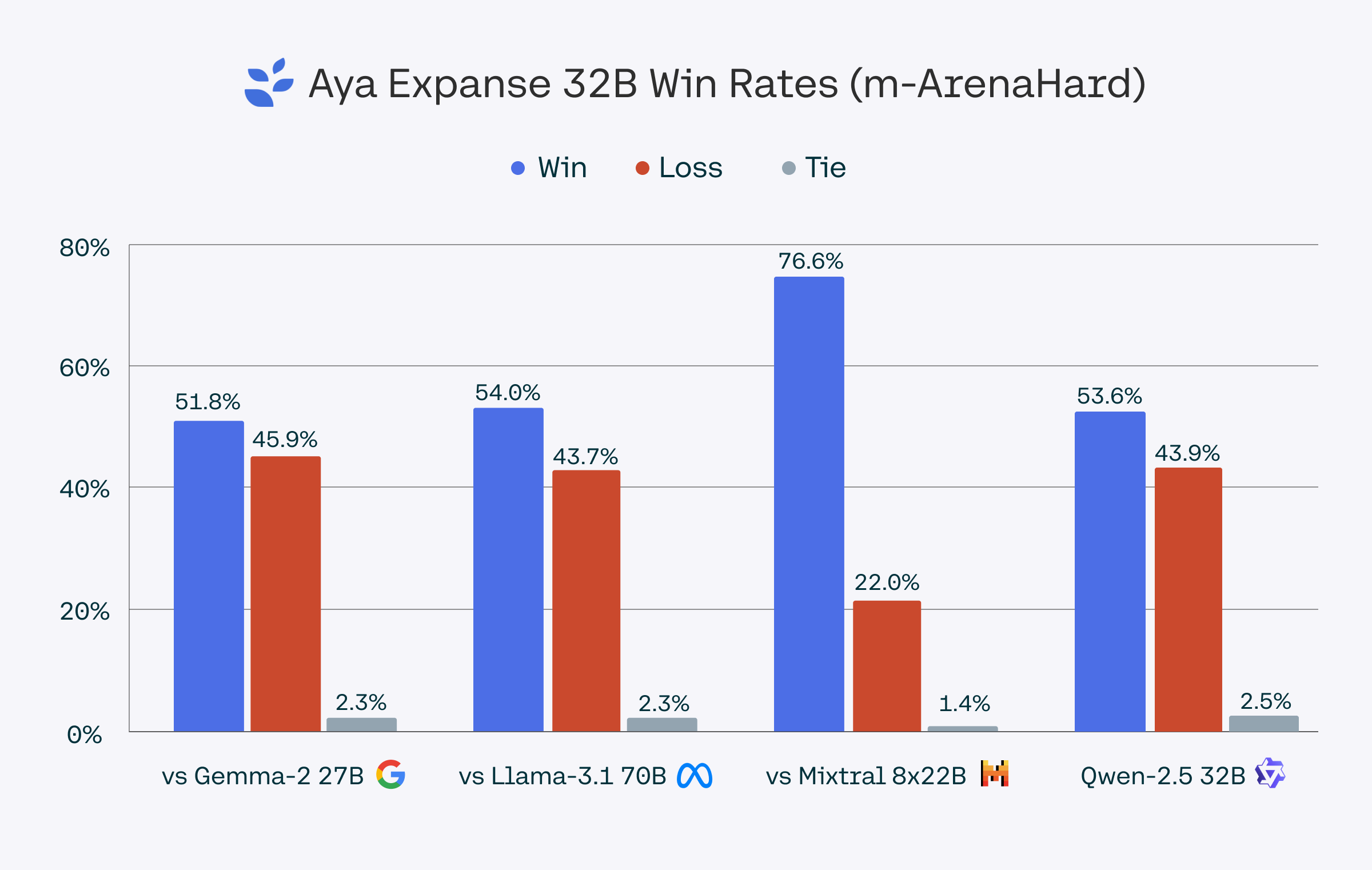

We evaluated Aya Expanse 32B against Gemma 2 27B, Llama 3.1 70B, Mixtral 8x22B, and Qwen 2.5 32B using the `dolly_human_edited` subset of the [Aya Evaluation Suite dataset](https://huggingface.co/datasets/CohereForAI/aya_evaluation_suite) and m-ArenaHard, a dataset based on the [Arena-Hard-Auto dataset](https://huggingface.co/datasets/lmarena-ai/arena-hard-auto-v0.1) and translated into the 23 languages we support in Aya Expanse. Win rates were determined using gpt-4o-2024-08-06 as a judge. We report this judge's results as the more conservative benchmark; scores from gpt-4o-mini showed even stronger performance.

|

| 114 |

+

|

| 115 |

+

The m-ArenaHard dataset, used to evaluate Aya Expanse’s capabilities, is publicly available [here](https://huggingface.co/datasets/CohereForAI/m-ArenaHard).

|

| 116 |

+

|

| 117 |

+

<img src="winrates_marenahard_complete.png" width="650" style="margin-left:auto; margin-right:auto; display:block"/>

|

| 118 |

+

<img src="winrates_dolly_32b.png" width="650" style="margin-left:auto; margin-right:auto; display:block"/>

|

| 119 |

+

|

| 120 |

+

|

| 121 |

+

### Model Card Contact

|

| 122 |

+

|

| 123 |

+

For errors or additional questions about details in this model card, contact [email protected].

|

| 124 |

+

|

| 125 |

+

### Terms of Use

|

| 126 |

+

|

| 127 |

+

We hope that the release of this model will make community-based research efforts more accessible, by releasing the weights of a highly performant multilingual model to researchers all over the world. This model is governed by a [CC-BY-NC](https://cohere.com/c4ai-cc-by-nc-license) License with an acceptable use addendum, and also requires adhering to [C4AI's Acceptable Use Policy](https://docs.cohere.com/docs/c4ai-acceptable-use-policy).

|

| 128 |

+

|

| 129 |

+

### Cite

|

| 130 |

+

You can cite Aya Expanse using:

|

| 131 |

+

|

| 132 |

+

```

|

| 133 |

+

@misc{dang2024ayaexpansecombiningresearch,

|

| 134 |

+

title={Aya Expanse: Combining Research Breakthroughs for a New Multilingual Frontier},

|

| 135 |

+

author={John Dang and Shivalika Singh and Daniel D'souza and Arash Ahmadian and Alejandro Salamanca and Madeline Smith and Aidan Peppin and Sungjin Hong and Manoj Govindassamy and Terrence Zhao and Sandra Kublik and Meor Amer and Viraat Aryabumi and Jon Ander Campos and Yi-Chern Tan and Tom Kocmi and Florian Strub and Nathan Grinsztajn and Yannis Flet-Berliac and Acyr Locatelli and Hangyu Lin and Dwarak Talupuru and Bharat Venkitesh and David Cairuz and Bowen Yang and Tim Chung and Wei-Yin Ko and Sylvie Shang Shi and Amir Shukayev and Sammie Bae and Aleksandra Piktus and Roman Castagné and Felipe Cruz-Salinas and Eddie Kim and Lucas Crawhall-Stein and Adrien Morisot and Sudip Roy and Phil Blunsom and Ivan Zhang and Aidan Gomez and Nick Frosst and Marzieh Fadaee and Beyza Ermis and Ahmet Üstün and Sara Hooker},

|

| 136 |

+

year={2024},

|

| 137 |

+

eprint={2412.04261},

|

| 138 |

+

archivePrefix={arXiv},

|

| 139 |

+

primaryClass={cs.CL},

|

| 140 |

+

url={https://arxiv.org/abs/2412.04261},

|

| 141 |

+

}

|

| 142 |

+

```

|

| 143 |

+

|

aya-expanse-32B.png

ADDED

|

Git LFS Details

|

config.json

ADDED

|

@@ -0,0 +1,27 @@

|


| 1 |

+

{

|

| 2 |

+

"architectures": [

|

| 3 |

+

"CohereForCausalLM"

|

| 4 |

+

],

|

| 5 |

+

"attention_bias": false,

|

| 6 |

+

"attention_dropout": 0.0,

|

| 7 |

+

"bos_token_id": 5,

|

| 8 |

+

"eos_token_id": 255001,

|

| 9 |

+

"hidden_act": "silu",

|

| 10 |

+

"hidden_size": 8192,

|

| 11 |

+

"initializer_range": 0.02,

|

| 12 |

+

"intermediate_size": 24576,

|

| 13 |

+

"layer_norm_eps": 1e-05,

|

| 14 |

+

"logit_scale": 0.0625,

|

| 15 |

+

"max_position_embeddings": 8192,

|

| 16 |

+

"model_type": "cohere",

|

| 17 |

+

"num_attention_heads": 64,

|

| 18 |

+

"num_hidden_layers": 40,

|

| 19 |

+

"num_key_value_heads": 8,

|

| 20 |

+

"pad_token_id": 0,

|

| 21 |

+

"rope_theta": 4000000,

|

| 22 |

+

"torch_dtype": "float16",

|

| 23 |

+

"transformers_version": "4.44.0",

|

| 24 |

+

"use_cache": true,

|

| 25 |

+

"use_qk_norm": false,

|

| 26 |

+

"vocab_size": 256000

|

| 27 |

+

}
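As a sanity check on the "32 billion parameters" figure, the sketch below derives a parameter count from the values in this `config.json`. It rests on several assumptions that the diff only partly confirms: the output head is tied to the input embedding, each layer has a single bias-free `input_layernorm` (consistent with the tensor names in `model.safetensors.index.json`), there is one final norm of size `hidden_size`, and the head dimension is `hidden_size / num_attention_heads`. The function name `count_parameters` is hypothetical.

```python
# Values copied from config.json in this commit.
config = {
    "hidden_size": 8192,
    "intermediate_size": 24576,
    "num_attention_heads": 64,
    "num_hidden_layers": 40,
    "num_key_value_heads": 8,
    "vocab_size": 256000,
}


def count_parameters(cfg):
    """Estimate the parameter count under the assumptions stated above."""
    h = cfg["hidden_size"]
    head_dim = h // cfg["num_attention_heads"]
    kv_dim = head_dim * cfg["num_key_value_heads"]  # grouped-query K/V width

    attention = 2 * h * h + 2 * h * kv_dim    # q_proj, o_proj + k_proj, v_proj
    mlp = 3 * h * cfg["intermediate_size"]    # gate_proj, up_proj, down_proj
    layer_norm = h                            # input_layernorm weight only
    per_layer = attention + mlp + layer_norm

    embedding = cfg["vocab_size"] * h         # assumed shared with the output head
    final_norm = h                            # assumed single final norm
    return embedding + cfg["num_hidden_layers"] * per_layer + final_norm


params = count_parameters(config)
print(f"parameters: {params:,}")
print(f"bytes at float16: {params * 2:,}")
```

Under these assumptions the estimate is 32,296,476,672 parameters, and at two bytes per float16 weight that gives 64,592,953,344 bytes, the same value as `total_size` in `model.safetensors.index.json` from this commit.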

|

generation_config.json

ADDED

|

@@ -0,0 +1,7 @@

|


| 1 |

+

{

|

| 2 |

+

"_from_model_config": true,

|

| 3 |

+

"bos_token_id": 5,

|

| 4 |

+

"eos_token_id": 255001,

|

| 5 |

+

"pad_token_id": 0,

|

| 6 |

+

"transformers_version": "4.44.0"

|

| 7 |

+

}

|

model-00001-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:6f949230e7b55297fc58a24f481ffa388954a6436f0c5e1c419a94c8548487c9

|

| 3 |

+

size 4898947792

|

model-00002-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f207bc12c590241be199649d983d51a17f34963635d9c06c991c4b88472f01ff

|

| 3 |

+

size 4932553528

|

model-00003-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e183a8aad7d527986df34f2c40489ce9cd9027d515b63dcdb3779b9b2032e886

|

| 3 |

+

size 4932570024

|

model-00004-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:30667d1d885ce5660588594e2e41428ad07427b8e618fc20795b783a32e9ed78

|

| 3 |

+

size 4831890592

|

model-00005-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:4c6876f75e168b62a95a7035c39d7732b442f5b1fe52ef364bc8633396a8bcfe

|

| 3 |

+

size 4932553560

|

model-00006-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:7b4466898c4d526d3b539780d4d611ca6636321690f3007c09b04f7e40f88e1f

|

| 3 |

+

size 4932553552

|

model-00007-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:8ea8556aaef9b0abe1ca1fea7f163c62ffa6f7f3183aded4d2c01ad84b91c18b

|

| 3 |

+

size 4932570048

|

model-00008-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f189e4478009795ee63b56d1c03653f9734d802a14ea44acc46ea7670d6fc693

|

| 3 |

+

size 4831890616

|

model-00009-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:ffac21f772dc3153b27b583ff92ecc715c8c076f25075667ceace2fcfd2dd8fc

|

| 3 |

+

size 4932553560

|

model-00010-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:3649b6d1df6f45e747e6e3d1ccfd861133741b7adf9bb43ba60094186c57f83a

|

| 3 |

+

size 4932553552

|

model-00011-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:bf7672e7b6457c80e298e1f6275c1e351d87f95b2cc19c58d8bf5e5e5af8830d

|

| 3 |

+

size 4932570048

|

model-00012-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f46d73cd4242a6fb55373a960580a0cfbb1585124a6367d414711fb9ed23f70c

|

| 3 |

+

size 4831890616

|

model-00013-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:8d08950d61bb5b9ca5f0a21dd0f710e647b12dc50649981173dadd6f05633e4b

|

| 3 |

+

size 4932553560

|

model-00014-of-00014.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:5c93d2b482022cd44b532e0b66f90e7a68a9b44d9f9d32ed631029f3ce48f570

|

| 3 |

+

size 805339584
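Each shard above is committed as a three-line Git LFS pointer (`version`, `oid`, `size`) rather than the tensor data itself. The sketch below parses one such pointer and sums the fourteen `size` values listed in this diff. The helper `parse_lfs_pointer` is hypothetical, and `INDEX_TOTAL_SIZE` is copied from `model.safetensors.index.json` in this same commit.

```python
def parse_lfs_pointer(text):
    """Parse a Git LFS pointer file into its oid string and integer size."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    return {"oid": fields["oid"], "size": int(fields["size"])}


# Pointer for the last shard, copied from the diff above.
pointer = parse_lfs_pointer(
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:5c93d2b482022cd44b532e0b66f90e7a68a9b44d9f9d32ed631029f3ce48f570\n"
    "size 805339584\n"
)
print(pointer["size"])

# "size" fields of model-00001 ... model-00014, in order.
SHARD_SIZES = [
    4898947792, 4932553528, 4932570024, 4831890592, 4932553560,
    4932553552, 4932570048, 4831890616, 4932553560, 4932553552,
    4932570048, 4831890616, 4932553560, 805339584,
]
INDEX_TOTAL_SIZE = 64592953344  # metadata.total_size from the index file

on_disk = sum(SHARD_SIZES)
print(f"shards on disk: {on_disk:,} bytes")
print(f"non-tensor overhead: {on_disk - INDEX_TOTAL_SIZE:,} bytes")
```

The shards add up to slightly more than `total_size`; a plausible explanation, not confirmed by the diff, is that each safetensors file carries a small header in addition to the raw tensor bytes.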

|

model.safetensors.index.json

ADDED

|

@@ -0,0 +1,329 @@

|


| 1 |

+

{

|

| 2 |

+

"metadata": {

|

| 3 |

+

"total_size": 64592953344

|

| 4 |

+

},

|

| 5 |

+

"weight_map": {

|

| 6 |

+

"model.embed_tokens.weight": "model-00001-of-00014.safetensors",

|

| 7 |

+

"model.layers.0.input_layernorm.weight": "model-00002-of-00014.safetensors",

|

| 8 |

+

"model.layers.0.mlp.down_proj.weight": "model-00002-of-00014.safetensors",

|

| 9 |

+

"model.layers.0.mlp.gate_proj.weight": "model-00001-of-00014.safetensors",

|

| 10 |

+

"model.layers.0.mlp.up_proj.weight": "model-00002-of-00014.safetensors",

|

| 11 |

+

"model.layers.0.self_attn.k_proj.weight": "model-00001-of-00014.safetensors",

|

| 12 |

+

"model.layers.0.self_attn.o_proj.weight": "model-00001-of-00014.safetensors",

|

| 13 |

+

"model.layers.0.self_attn.q_proj.weight": "model-00001-of-00014.safetensors",

|

| 14 |

+

"model.layers.0.self_attn.v_proj.weight": "model-00001-of-00014.safetensors",

|

| 15 |

+

"model.layers.1.input_layernorm.weight": "model-00002-of-00014.safetensors",

|

| 16 |

+

"model.layers.1.mlp.down_proj.weight": "model-00002-of-00014.safetensors",

|

| 17 |

+

"model.layers.1.mlp.gate_proj.weight": "model-00002-of-00014.safetensors",

|

| 18 |

+

"model.layers.1.mlp.up_proj.weight": "model-00002-of-00014.safetensors",

|

| 19 |

+

"model.layers.1.self_attn.k_proj.weight": "model-00002-of-00014.safetensors",

|

| 20 |

+

"model.layers.1.self_attn.o_proj.weight": "model-00002-of-00014.safetensors",

|

| 21 |

+

"model.layers.1.self_attn.q_proj.weight": "model-00002-of-00014.safetensors",

|

| 22 |

+

"model.layers.1.self_attn.v_proj.weight": "model-00002-of-00014.safetensors",

|

| 23 |

+

"model.layers.10.input_layernorm.weight": "model-00005-of-00014.safetensors",

|

| 24 |

+

"model.layers.10.mlp.down_proj.weight": "model-00005-of-00014.safetensors",

|

| 25 |

+

"model.layers.10.mlp.gate_proj.weight": "model-00005-of-00014.safetensors",

|

| 26 |

+

"model.layers.10.mlp.up_proj.weight": "model-00005-of-00014.safetensors",

|

| 27 |

+

"model.layers.10.self_attn.k_proj.weight": "model-00004-of-00014.safetensors",

|

| 28 |

+

"model.layers.10.self_attn.o_proj.weight": "model-00004-of-00014.safetensors",

|

| 29 |

+

"model.layers.10.self_attn.q_proj.weight": "model-00004-of-00014.safetensors",

|

| 30 |

+

"model.layers.10.self_attn.v_proj.weight": "model-00004-of-00014.safetensors",

|

| 31 |

+

"model.layers.11.input_layernorm.weight": "model-00005-of-00014.safetensors",

|

| 32 |

+

"model.layers.11.mlp.down_proj.weight": "model-00005-of-00014.safetensors",

|

| 33 |

+

"model.layers.11.mlp.gate_proj.weight": "model-00005-of-00014.safetensors",

|

| 34 |

+

"model.layers.11.mlp.up_proj.weight": "model-00005-of-00014.safetensors",

|

| 35 |

+

"model.layers.11.self_attn.k_proj.weight": "model-00005-of-00014.safetensors",

|

| 36 |

+

"model.layers.11.self_attn.o_proj.weight": "model-00005-of-00014.safetensors",

|

| 37 |

+

"model.layers.11.self_attn.q_proj.weight": "model-00005-of-00014.safetensors",

|

| 38 |

+

"model.layers.11.self_attn.v_proj.weight": "model-00005-of-00014.safetensors",

|

| 39 |

+

"model.layers.12.input_layernorm.weight": "model-00005-of-00014.safetensors",

|

| 40 |

+

"model.layers.12.mlp.down_proj.weight": "model-00005-of-00014.safetensors",

|

| 41 |

+

"model.layers.12.mlp.gate_proj.weight": "model-00005-of-00014.safetensors",

|

| 42 |

+

"model.layers.12.mlp.up_proj.weight": "model-00005-of-00014.safetensors",

|

| 43 |

+

"model.layers.12.self_attn.k_proj.weight": "model-00005-of-00014.safetensors",

|

| 44 |

+

"model.layers.12.self_attn.o_proj.weight": "model-00005-of-00014.safetensors",

|

| 45 |

+

"model.layers.12.self_attn.q_proj.weight": "model-00005-of-00014.safetensors",

|

| 46 |

+

"model.layers.12.self_attn.v_proj.weight": "model-00005-of-00014.safetensors",

|

| 47 |

+

"model.layers.13.input_layernorm.weight": "model-00006-of-00014.safetensors",

|

| 48 |

+

"model.layers.13.mlp.down_proj.weight": "model-00006-of-00014.safetensors",

|

| 49 |

+

"model.layers.13.mlp.gate_proj.weight": "model-00005-of-00014.safetensors",

|

| 50 |

+

"model.layers.13.mlp.up_proj.weight": "model-00006-of-00014.safetensors",

|

| 51 |

+

"model.layers.13.self_attn.k_proj.weight": "model-00005-of-00014.safetensors",

|

| 52 |

+

"model.layers.13.self_attn.o_proj.weight": "model-00005-of-00014.safetensors",

|

| 53 |

+

"model.layers.13.self_attn.q_proj.weight": "model-00005-of-00014.safetensors",

|

| 54 |

+

"model.layers.13.self_attn.v_proj.weight": "model-00005-of-00014.safetensors",

|

| 55 |

+

"model.layers.14.input_layernorm.weight": "model-00006-of-00014.safetensors",

|

| 56 |

+

"model.layers.14.mlp.down_proj.weight": "model-00006-of-00014.safetensors",

|

| 57 |

+

"model.layers.14.mlp.gate_proj.weight": "model-00006-of-00014.safetensors",

|

| 58 |

+

"model.layers.14.mlp.up_proj.weight": "model-00006-of-00014.safetensors",

|

| 59 |

+

"model.layers.14.self_attn.k_proj.weight": "model-00006-of-00014.safetensors",

|

| 60 |

+

"model.layers.14.self_attn.o_proj.weight": "model-00006-of-00014.safetensors",

|

| 61 |

+

"model.layers.14.self_attn.q_proj.weight": "model-00006-of-00014.safetensors",

|

| 62 |

+

"model.layers.14.self_attn.v_proj.weight": "model-00006-of-00014.safetensors",

|

| 63 |

+

"model.layers.15.input_layernorm.weight": "model-00006-of-00014.safetensors",

|

| 64 |

+

"model.layers.15.mlp.down_proj.weight": "model-00006-of-00014.safetensors",

|

| 65 |

+

"model.layers.15.mlp.gate_proj.weight": "model-00006-of-00014.safetensors",

|

| 66 |

+

"model.layers.15.mlp.up_proj.weight": "model-00006-of-00014.safetensors",

|

| 67 |

+

"model.layers.15.self_attn.k_proj.weight": "model-00006-of-00014.safetensors",

|

| 68 |

+

"model.layers.15.self_attn.o_proj.weight": "model-00006-of-00014.safetensors",

|

| 69 |

+

"model.layers.15.self_attn.q_proj.weight": "model-00006-of-00014.safetensors",

|

| 70 |

+

"model.layers.15.self_attn.v_proj.weight": "model-00006-of-00014.safetensors",

|

| 71 |

+

"model.layers.16.input_layernorm.weight": "model-00007-of-00014.safetensors",

|

| 72 |

+

"model.layers.16.mlp.down_proj.weight": "model-00007-of-00014.safetensors",

|

| 73 |

+

"model.layers.16.mlp.gate_proj.weight": "model-00006-of-00014.safetensors",

|

| 74 |

+

"model.layers.16.mlp.up_proj.weight": "model-00006-of-00014.safetensors",

|

| 75 |

+

"model.layers.16.self_attn.k_proj.weight": "model-00006-of-00014.safetensors",

|

| 76 |

+

"model.layers.16.self_attn.o_proj.weight": "model-00006-of-00014.safetensors",

|

| 77 |

+

"model.layers.16.self_attn.q_proj.weight": "model-00006-of-00014.safetensors",

|

| 78 |

+

"model.layers.16.self_attn.v_proj.weight": "model-00006-of-00014.safetensors",

|

| 79 |

+

"model.layers.17.input_layernorm.weight": "model-00007-of-00014.safetensors",

|

| 80 |

+

"model.layers.17.mlp.down_proj.weight": "model-00007-of-00014.safetensors",

|

| 81 |

+

"model.layers.17.mlp.gate_proj.weight": "model-00007-of-00014.safetensors",

|

| 82 |

+

"model.layers.17.mlp.up_proj.weight": "model-00007-of-00014.safetensors",

|

| 83 |

+

"model.layers.17.self_attn.k_proj.weight": "model-00007-of-00014.safetensors",

|

| 84 |

+

"model.layers.17.self_attn.o_proj.weight": "model-00007-of-00014.safetensors",

|

| 85 |

+

"model.layers.17.self_attn.q_proj.weight": "model-00007-of-00014.safetensors",

|

| 86 |

+

"model.layers.17.self_attn.v_proj.weight": "model-00007-of-00014.safetensors",

|

| 87 |

+

"model.layers.18.input_layernorm.weight": "model-00007-of-00014.safetensors",

|

| 88 |

+

"model.layers.18.mlp.down_proj.weight": "model-00007-of-00014.safetensors",

|

| 89 |

+

"model.layers.18.mlp.gate_proj.weight": "model-00007-of-00014.safetensors",

|

| 90 |

+

"model.layers.18.mlp.up_proj.weight": "model-00007-of-00014.safetensors",

|

| 91 |

+

"model.layers.18.self_attn.k_proj.weight": "model-00007-of-00014.safetensors",

|

| 92 |

+

"model.layers.18.self_attn.o_proj.weight": "model-00007-of-00014.safetensors",

|

| 93 |

+

"model.layers.18.self_attn.q_proj.weight": "model-00007-of-00014.safetensors",

|

| 94 |

+

"model.layers.18.self_attn.v_proj.weight": "model-00007-of-00014.safetensors",

|

| 95 |

+

"model.layers.19.input_layernorm.weight": "model-00007-of-00014.safetensors",

|

| 96 |

+

"model.layers.19.mlp.down_proj.weight": "model-00007-of-00014.safetensors",

|

| 97 |

+

"model.layers.19.mlp.gate_proj.weight": "model-00007-of-00014.safetensors",

|

| 98 |

+

"model.layers.19.mlp.up_proj.weight": "model-00007-of-00014.safetensors",

|

| 99 |

+

"model.layers.19.self_attn.k_proj.weight": "model-00007-of-00014.safetensors",

|

| 100 |

+

"model.layers.19.self_attn.o_proj.weight": "model-00007-of-00014.safetensors",

|

| 101 |

+

"model.layers.19.self_attn.q_proj.weight": "model-00007-of-00014.safetensors",

|

| 102 |

+

"model.layers.19.self_attn.v_proj.weight": "model-00007-of-00014.safetensors",

|

| 103 |

+

"model.layers.2.input_layernorm.weight": "model-00002-of-00014.safetensors",

|

| 104 |

+

"model.layers.2.mlp.down_proj.weight": "model-00002-of-00014.safetensors",

|

| 105 |

+

"model.layers.2.mlp.gate_proj.weight": "model-00002-of-00014.safetensors",

|

| 106 |

+

"model.layers.2.mlp.up_proj.weight": "model-00002-of-00014.safetensors",

|

| 107 |

+

"model.layers.2.self_attn.k_proj.weight": "model-00002-of-00014.safetensors",

|

| 108 |

+

"model.layers.2.self_attn.o_proj.weight": "model-00002-of-00014.safetensors",

|

| 109 |

+

"model.layers.2.self_attn.q_proj.weight": "model-00002-of-00014.safetensors",

|

| 110 |

+

"model.layers.2.self_attn.v_proj.weight": "model-00002-of-00014.safetensors",

|

| 111 |

+

"model.layers.20.input_layernorm.weight": "model-00008-of-00014.safetensors",

|

| 112 |

+

"model.layers.20.mlp.down_proj.weight": "model-00008-of-00014.safetensors",

|

| 113 |

+

"model.layers.20.mlp.gate_proj.weight": "model-00008-of-00014.safetensors",

|

| 114 |

+

"model.layers.20.mlp.up_proj.weight": "model-00008-of-00014.safetensors",

|

| 115 |

+

"model.layers.20.self_attn.k_proj.weight": "model-00008-of-00014.safetensors",

|

| 116 |

+

"model.layers.20.self_attn.o_proj.weight": "model-00008-of-00014.safetensors",

|

| 117 |

+

"model.layers.20.self_attn.q_proj.weight": "model-00008-of-00014.safetensors",

|

| 118 |

+

"model.layers.20.self_attn.v_proj.weight": "model-00008-of-00014.safetensors",

|

| 119 |

+

"model.layers.21.input_layernorm.weight": "model-00008-of-00014.safetensors",

|

| 120 |

+

"model.layers.21.mlp.down_proj.weight": "model-00008-of-00014.safetensors",

|

| 121 |

+

"model.layers.21.mlp.gate_proj.weight": "model-00008-of-00014.safetensors",

|

| 122 |

+

"model.layers.21.mlp.up_proj.weight": "model-00008-of-00014.safetensors",

|

| 123 |

+

"model.layers.21.self_attn.k_proj.weight": "model-00008-of-00014.safetensors",

|

| 124 |

+

"model.layers.21.self_attn.o_proj.weight": "model-00008-of-00014.safetensors",

|

| 125 |

+

"model.layers.21.self_attn.q_proj.weight": "model-00008-of-00014.safetensors",

|

| 126 |

+

"model.layers.21.self_attn.v_proj.weight": "model-00008-of-00014.safetensors",

|

| 127 |

+

"model.layers.22.input_layernorm.weight": "model-00008-of-00014.safetensors",

|

| 128 |

+

"model.layers.22.mlp.down_proj.weight": "model-00008-of-00014.safetensors",

|

| 129 |

+

"model.layers.22.mlp.gate_proj.weight": "model-00008-of-00014.safetensors",

|

| 130 |

+

"model.layers.22.mlp.up_proj.weight": "model-00008-of-00014.safetensors",

|

| 131 |

+

"model.layers.22.self_attn.k_proj.weight": "model-00008-of-00014.safetensors",

|

| 132 |

+

"model.layers.22.self_attn.o_proj.weight": "model-00008-of-00014.safetensors",

|

| 133 |

+

"model.layers.22.self_attn.q_proj.weight": "model-00008-of-00014.safetensors",

|

| 134 |

+

"model.layers.22.self_attn.v_proj.weight": "model-00008-of-00014.safetensors",

|

| 135 |

+

"model.layers.23.input_layernorm.weight": "model-00009-of-00014.safetensors",

|

| 136 |

+

"model.layers.23.mlp.down_proj.weight": "model-00009-of-00014.safetensors",

|

| 137 |

+

"model.layers.23.mlp.gate_proj.weight": "model-00009-of-00014.safetensors",

|

| 138 |

+

"model.layers.23.mlp.up_proj.weight": "model-00009-of-00014.safetensors",

|

| 139 |

+

"model.layers.23.self_attn.k_proj.weight": "model-00008-of-00014.safetensors",

|

| 140 |

+

"model.layers.23.self_attn.o_proj.weight": "model-00008-of-00014.safetensors",

|

| 141 |

+

"model.layers.23.self_attn.q_proj.weight": "model-00008-of-00014.safetensors",

|

| 142 |

+

"model.layers.23.self_attn.v_proj.weight": "model-00008-of-00014.safetensors",

|

| 143 |

+

"model.layers.24.input_layernorm.weight": "model-00009-of-00014.safetensors",

|

| 144 |

+

"model.layers.24.mlp.down_proj.weight": "model-00009-of-00014.safetensors",

|

| 145 |

+

"model.layers.24.mlp.gate_proj.weight": "model-00009-of-00014.safetensors",

|

| 146 |

+

"model.layers.24.mlp.up_proj.weight": "model-00009-of-00014.safetensors",

|

| 147 |

+

"model.layers.24.self_attn.k_proj.weight": "model-00009-of-00014.safetensors",

|

| 148 |

+

"model.layers.24.self_attn.o_proj.weight": "model-00009-of-00014.safetensors",

|

| 149 |

+

"model.layers.24.self_attn.q_proj.weight": "model-00009-of-00014.safetensors",

|

| 150 |

+

"model.layers.24.self_attn.v_proj.weight": "model-00009-of-00014.safetensors",

|

| 151 |

+

"model.layers.25.input_layernorm.weight": "model-00009-of-00014.safetensors",

|

| 152 |

+

"model.layers.25.mlp.down_proj.weight": "model-00009-of-00014.safetensors",

|

| 153 |

+

"model.layers.25.mlp.gate_proj.weight": "model-00009-of-00014.safetensors",

|

| 154 |

+

"model.layers.25.mlp.up_proj.weight": "model-00009-of-00014.safetensors",

|

| 155 |

+

"model.layers.25.self_attn.k_proj.weight": "model-00009-of-00014.safetensors",

|

| 156 |

+

"model.layers.25.self_attn.o_proj.weight": "model-00009-of-00014.safetensors",

|

| 157 |

+

"model.layers.25.self_attn.q_proj.weight": "model-00009-of-00014.safetensors",

|

| 158 |

+

"model.layers.25.self_attn.v_proj.weight": "model-00009-of-00014.safetensors",

|

| 159 |

+

"model.layers.26.input_layernorm.weight": "model-00010-of-00014.safetensors",

|

| 160 |

+

"model.layers.26.mlp.down_proj.weight": "model-00010-of-00014.safetensors",

|

| 161 |

+

"model.layers.26.mlp.gate_proj.weight": "model-00009-of-00014.safetensors",

|

| 162 |

+

"model.layers.26.mlp.up_proj.weight": "model-00010-of-00014.safetensors",

|

| 163 |

+

"model.layers.26.self_attn.k_proj.weight": "model-00009-of-00014.safetensors",

|

| 164 |

+

"model.layers.26.self_attn.o_proj.weight": "model-00009-of-00014.safetensors",

|

| 165 |

+

"model.layers.26.self_attn.q_proj.weight": "model-00009-of-00014.safetensors",

|

| 166 |

+

"model.layers.26.self_attn.v_proj.weight": "model-00009-of-00014.safetensors",

|

| 167 |

+

"model.layers.27.input_layernorm.weight": "model-00010-of-00014.safetensors",

|

| 168 |

+

"model.layers.27.mlp.down_proj.weight": "model-00010-of-00014.safetensors",

|

| 169 |

+

"model.layers.27.mlp.gate_proj.weight": "model-00010-of-00014.safetensors",

|

| 170 |

+

"model.layers.27.mlp.up_proj.weight": "model-00010-of-00014.safetensors",

|

| 171 |

+

"model.layers.27.self_attn.k_proj.weight": "model-00010-of-00014.safetensors",

|

| 172 |

+

"model.layers.27.self_attn.o_proj.weight": "model-00010-of-00014.safetensors",

|

| 173 |

+

"model.layers.27.self_attn.q_proj.weight": "model-00010-of-00014.safetensors",

|

| 174 |

+

"model.layers.27.self_attn.v_proj.weight": "model-00010-of-00014.safetensors",

|

| 175 |

+

"model.layers.28.input_layernorm.weight": "model-00010-of-00014.safetensors",

|

| 176 |

+

"model.layers.28.mlp.down_proj.weight": "model-00010-of-00014.safetensors",

|

| 177 |

+

"model.layers.28.mlp.gate_proj.weight": "model-00010-of-00014.safetensors",

|

| 178 |

+

"model.layers.28.mlp.up_proj.weight": "model-00010-of-00014.safetensors",

|

| 179 |

+

"model.layers.28.self_attn.k_proj.weight": "model-00010-of-00014.safetensors",

|

| 180 |

+

"model.layers.28.self_attn.o_proj.weight": "model-00010-of-00014.safetensors",

|

| 181 |

+

"model.layers.28.self_attn.q_proj.weight": "model-00010-of-00014.safetensors",

|

| 182 |

+

"model.layers.28.self_attn.v_proj.weight": "model-00010-of-00014.safetensors",

|

| 183 |

+

"model.layers.29.input_layernorm.weight": "model-00011-of-00014.safetensors",

|

| 184 |

+

"model.layers.29.mlp.down_proj.weight": "model-00011-of-00014.safetensors",

|

| 185 |

+

"model.layers.29.mlp.gate_proj.weight": "model-00010-of-00014.safetensors",

|

| 186 |

+

"model.layers.29.mlp.up_proj.weight": "model-00010-of-00014.safetensors",

|

| 187 |

+

"model.layers.29.self_attn.k_proj.weight": "model-00010-of-00014.safetensors",

|

| 188 |

+

"model.layers.29.self_attn.o_proj.weight": "model-00010-of-00014.safetensors",

|

| 189 |

+

"model.layers.29.self_attn.q_proj.weight": "model-00010-of-00014.safetensors",

|

| 190 |

+

"model.layers.29.self_attn.v_proj.weight": "model-00010-of-00014.safetensors",

|

| 191 |

+

"model.layers.3.input_layernorm.weight": "model-00003-of-00014.safetensors",

|

| 192 |

+

"model.layers.3.mlp.down_proj.weight": "model-00003-of-00014.safetensors",

|

| 193 |

+

"model.layers.3.mlp.gate_proj.weight": "model-00002-of-00014.safetensors",

|

| 194 |

+

"model.layers.3.mlp.up_proj.weight": "model-00002-of-00014.safetensors",

|

| 195 |

+

"model.layers.3.self_attn.k_proj.weight": "model-00002-of-00014.safetensors",

|

| 196 |

+

"model.layers.3.self_attn.o_proj.weight": "model-00002-of-00014.safetensors",

|

| 197 |

+

"model.layers.3.self_attn.q_proj.weight": "model-00002-of-00014.safetensors",

|

| 198 |

+

"model.layers.3.self_attn.v_proj.weight": "model-00002-of-00014.safetensors",

|

| 199 |

+

"model.layers.30.input_layernorm.weight": "model-00011-of-00014.safetensors",

|

| 200 |

+

"model.layers.30.mlp.down_proj.weight": "model-00011-of-00014.safetensors",

|

| 201 |

+

"model.layers.30.mlp.gate_proj.weight": "model-00011-of-00014.safetensors",

|

| 202 |

+

"model.layers.30.mlp.up_proj.weight": "model-00011-of-00014.safetensors",

|

| 203 |

+

"model.layers.30.self_attn.k_proj.weight": "model-00011-of-00014.safetensors",

|

| 204 |

+

"model.layers.30.self_attn.o_proj.weight": "model-00011-of-00014.safetensors",

|

| 205 |

+

"model.layers.30.self_attn.q_proj.weight": "model-00011-of-00014.safetensors",

|

| 206 |

+

"model.layers.30.self_attn.v_proj.weight": "model-00011-of-00014.safetensors",

|

| 207 |

+

"model.layers.31.input_layernorm.weight": "model-00011-of-00014.safetensors",

|

| 208 |

+

"model.layers.31.mlp.down_proj.weight": "model-00011-of-00014.safetensors",

|

| 209 |

+

"model.layers.31.mlp.gate_proj.weight": "model-00011-of-00014.safetensors",

|

| 210 |

+

"model.layers.31.mlp.up_proj.weight": "model-00011-of-00014.safetensors",

|

| 211 |

+

"model.layers.31.self_attn.k_proj.weight": "model-00011-of-00014.safetensors",

|

| 212 |

+

"model.layers.31.self_attn.o_proj.weight": "model-00011-of-00014.safetensors",

|

| 213 |

+

"model.layers.31.self_attn.q_proj.weight": "model-00011-of-00014.safetensors",

|

| 214 |

+

"model.layers.31.self_attn.v_proj.weight": "model-00011-of-00014.safetensors",

|

| 215 |

+

"model.layers.32.input_layernorm.weight": "model-00011-of-00014.safetensors",

|

| 216 |

+

"model.layers.32.mlp.down_proj.weight": "model-00011-of-00014.safetensors",

|

| 217 |

+

"model.layers.32.mlp.gate_proj.weight": "model-00011-of-00014.safetensors",

|

| 218 |

+

"model.layers.32.mlp.up_proj.weight": "model-00011-of-00014.safetensors",

|

| 219 |

+

"model.layers.32.self_attn.k_proj.weight": "model-00011-of-00014.safetensors",

|

| 220 |

+

"model.layers.32.self_attn.o_proj.weight": "model-00011-of-00014.safetensors",

|

| 221 |

+

"model.layers.32.self_attn.q_proj.weight": "model-00011-of-00014.safetensors",

|

| 222 |

+

"model.layers.32.self_attn.v_proj.weight": "model-00011-of-00014.safetensors",

|

| 223 |

+

"model.layers.33.input_layernorm.weight": "model-00012-of-00014.safetensors",

|

| 224 |

+

"model.layers.33.mlp.down_proj.weight": "model-00012-of-00014.safetensors",

|

| 225 |

+

"model.layers.33.mlp.gate_proj.weight": "model-00012-of-00014.safetensors",

|

| 226 |

+

"model.layers.33.mlp.up_proj.weight": "model-00012-of-00014.safetensors",

|

| 227 |

+

"model.layers.33.self_attn.k_proj.weight": "model-00012-of-00014.safetensors",

|

| 228 |

+

"model.layers.33.self_attn.o_proj.weight": "model-00012-of-00014.safetensors",

|

| 229 |

+

"model.layers.33.self_attn.q_proj.weight": "model-00012-of-00014.safetensors",

|

| 230 |

+

"model.layers.33.self_attn.v_proj.weight": "model-00012-of-00014.safetensors",

|

| 231 |

+

"model.layers.34.input_layernorm.weight": "model-00012-of-00014.safetensors",

|

| 232 |

+

"model.layers.34.mlp.down_proj.weight": "model-00012-of-00014.safetensors",

|

| 233 |

+

"model.layers.34.mlp.gate_proj.weight": "model-00012-of-00014.safetensors",

|

| 234 |

+

"model.layers.34.mlp.up_proj.weight": "model-00012-of-00014.safetensors",

|

| 235 |

+

"model.layers.34.self_attn.k_proj.weight": "model-00012-of-00014.safetensors",

|

| 236 |

+

"model.layers.34.self_attn.o_proj.weight": "model-00012-of-00014.safetensors",

|

| 237 |

+

"model.layers.34.self_attn.q_proj.weight": "model-00012-of-00014.safetensors",

|

| 238 |

+

"model.layers.34.self_attn.v_proj.weight": "model-00012-of-00014.safetensors",

|

| 239 |

+

"model.layers.35.input_layernorm.weight": "model-00012-of-00014.safetensors",

|

| 240 |

+

"model.layers.35.mlp.down_proj.weight": "model-00012-of-00014.safetensors",

|

| 241 |

+

"model.layers.35.mlp.gate_proj.weight": "model-00012-of-00014.safetensors",

|

| 242 |

+

"model.layers.35.mlp.up_proj.weight": "model-00012-of-00014.safetensors",

|

| 243 |

+

"model.layers.35.self_attn.k_proj.weight": "model-00012-of-00014.safetensors",

|

| 244 |

+

"model.layers.35.self_attn.o_proj.weight": "model-00012-of-00014.safetensors",

|

| 245 |

+

"model.layers.35.self_attn.q_proj.weight": "model-00012-of-00014.safetensors",

|

| 246 |

+

"model.layers.35.self_attn.v_proj.weight": "model-00012-of-00014.safetensors",

|

| 247 |

+

"model.layers.36.input_layernorm.weight": "model-00013-of-00014.safetensors",

|

| 248 |

+

"model.layers.36.mlp.down_proj.weight": "model-00013-of-00014.safetensors",

|

| 249 |

+

"model.layers.36.mlp.gate_proj.weight": "model-00013-of-00014.safetensors",

|

| 250 |

+

"model.layers.36.mlp.up_proj.weight": "model-00013-of-00014.safetensors",

|

| 251 |

+

"model.layers.36.self_attn.k_proj.weight": "model-00012-of-00014.safetensors",

|

| 252 |

+

"model.layers.36.self_attn.o_proj.weight": "model-00012-of-00014.safetensors",

|

| 253 |

+

"model.layers.36.self_attn.q_proj.weight": "model-00012-of-00014.safetensors",

|

| 254 |

+

"model.layers.36.self_attn.v_proj.weight": "model-00012-of-00014.safetensors",

|

| 255 |

+

"model.layers.37.input_layernorm.weight": "model-00013-of-00014.safetensors",

|

| 256 |

+

"model.layers.37.mlp.down_proj.weight": "model-00013-of-00014.safetensors",

|

| 257 |

+

"model.layers.37.mlp.gate_proj.weight": "model-00013-of-00014.safetensors",

|

| 258 |

+

"model.layers.37.mlp.up_proj.weight": "model-00013-of-00014.safetensors",

|

| 259 |

+

"model.layers.37.self_attn.k_proj.weight": "model-00013-of-00014.safetensors",

|

| 260 |

+

"model.layers.37.self_attn.o_proj.weight": "model-00013-of-00014.safetensors",

|

| 261 |

+

"model.layers.37.self_attn.q_proj.weight": "model-00013-of-00014.safetensors",

|

| 262 |

+

"model.layers.37.self_attn.v_proj.weight": "model-00013-of-00014.safetensors",

|

| 263 |

+

"model.layers.38.input_layernorm.weight": "model-00013-of-00014.safetensors",

|

| 264 |

+

"model.layers.38.mlp.down_proj.weight": "model-00013-of-00014.safetensors",

|

| 265 |

+

"model.layers.38.mlp.gate_proj.weight": "model-00013-of-00014.safetensors",

|

| 266 |

+

"model.layers.38.mlp.up_proj.weight": "model-00013-of-00014.safetensors",

|

| 267 |

+

"model.layers.38.self_attn.k_proj.weight": "model-00013-of-00014.safetensors",

|

| 268 |

+

"model.layers.38.self_attn.o_proj.weight": "model-00013-of-00014.safetensors",

|

| 269 |

+

"model.layers.38.self_attn.q_proj.weight": "model-00013-of-00014.safetensors",

|

| 270 |

+

"model.layers.38.self_attn.v_proj.weight": "model-00013-of-00014.safetensors",

|

| 271 |

+

"model.layers.39.input_layernorm.weight": "model-00014-of-00014.safetensors",

|

| 272 |

+

"model.layers.39.mlp.down_proj.weight": "model-00014-of-00014.safetensors",

|

| 273 |

+

"model.layers.39.mlp.gate_proj.weight": "model-00013-of-00014.safetensors",

|

| 274 |

+

"model.layers.39.mlp.up_proj.weight": "model-00014-of-00014.safetensors",

|

| 275 |

+

"model.layers.39.self_attn.k_proj.weight": "model-00013-of-00014.safetensors",

|

| 276 |

+

"model.layers.39.self_attn.o_proj.weight": "model-00013-of-00014.safetensors",

|

| 277 |

+

"model.layers.39.self_attn.q_proj.weight": "model-00013-of-00014.safetensors",

|

| 278 |

+

"model.layers.39.self_attn.v_proj.weight": "model-00013-of-00014.safetensors",

|

| 279 |

+

"model.layers.4.input_layernorm.weight": "model-00003-of-00014.safetensors",

|

| 280 |

+

"model.layers.4.mlp.down_proj.weight": "model-00003-of-00014.safetensors",

|

| 281 |

+

"model.layers.4.mlp.gate_proj.weight": "model-00003-of-00014.safetensors",

|

| 282 |

+

"model.layers.4.mlp.up_proj.weight": "model-00003-of-00014.safetensors",

|

| 283 |

+

"model.layers.4.self_attn.k_proj.weight": "model-00003-of-00014.safetensors",

|

| 284 |

+

"model.layers.4.self_attn.o_proj.weight": "model-00003-of-00014.safetensors",

|

| 285 |

+

"model.layers.4.self_attn.q_proj.weight": "model-00003-of-00014.safetensors",

|

| 286 |

+

"model.layers.4.self_attn.v_proj.weight": "model-00003-of-00014.safetensors",

|

| 287 |

+

"model.layers.5.input_layernorm.weight": "model-00003-of-00014.safetensors",

|

| 288 |

+

"model.layers.5.mlp.down_proj.weight": "model-00003-of-00014.safetensors",

|

| 289 |

+

"model.layers.5.mlp.gate_proj.weight": "model-00003-of-00014.safetensors",

|

| 290 |

+

"model.layers.5.mlp.up_proj.weight": "model-00003-of-00014.safetensors",

|

| 291 |

+

"model.layers.5.self_attn.k_proj.weight": "model-00003-of-00014.safetensors",

|

| 292 |

+

"model.layers.5.self_attn.o_proj.weight": "model-00003-of-00014.safetensors",

|

| 293 |

+

"model.layers.5.self_attn.q_proj.weight": "model-00003-of-00014.safetensors",

|

| 294 |

+

"model.layers.5.self_attn.v_proj.weight": "model-00003-of-00014.safetensors",

|

| 295 |

+

"model.layers.6.input_layernorm.weight": "model-00003-of-00014.safetensors",

|

| 296 |

+

"model.layers.6.mlp.down_proj.weight": "model-00003-of-00014.safetensors",

|

| 297 |

+

"model.layers.6.mlp.gate_proj.weight": "model-00003-of-00014.safetensors",

|

| 298 |

+

"model.layers.6.mlp.up_proj.weight": "model-00003-of-00014.safetensors",

|

| 299 |

+

"model.layers.6.self_attn.k_proj.weight": "model-00003-of-00014.safetensors",

|

| 300 |

+

"model.layers.6.self_attn.o_proj.weight": "model-00003-of-00014.safetensors",

|

| 301 |

+

"model.layers.6.self_attn.q_proj.weight": "model-00003-of-00014.safetensors",

|

| 302 |

+

"model.layers.6.self_attn.v_proj.weight": "model-00003-of-00014.safetensors",

|

| 303 |

+

"model.layers.7.input_layernorm.weight": "model-00004-of-00014.safetensors",

|

| 304 |

+

"model.layers.7.mlp.down_proj.weight": "model-00004-of-00014.safetensors",

|

| 305 |

+

"model.layers.7.mlp.gate_proj.weight": "model-00004-of-00014.safetensors",

|

| 306 |

+

"model.layers.7.mlp.up_proj.weight": "model-00004-of-00014.safetensors",

|

| 307 |

+

"model.layers.7.self_attn.k_proj.weight": "model-00004-of-00014.safetensors",

|

| 308 |

+

"model.layers.7.self_attn.o_proj.weight": "model-00004-of-00014.safetensors",

|

| 309 |

+

"model.layers.7.self_attn.q_proj.weight": "model-00004-of-00014.safetensors",

|

| 310 |

+

"model.layers.7.self_attn.v_proj.weight": "model-00004-of-00014.safetensors",

|

| 311 |

+

"model.layers.8.input_layernorm.weight": "model-00004-of-00014.safetensors",

|

| 312 |

+

"model.layers.8.mlp.down_proj.weight": "model-00004-of-00014.safetensors",

|

| 313 |

+

"model.layers.8.mlp.gate_proj.weight": "model-00004-of-00014.safetensors",

|

| 314 |

+

"model.layers.8.mlp.up_proj.weight": "model-00004-of-00014.safetensors",

|

| 315 |

+

"model.layers.8.self_attn.k_proj.weight": "model-00004-of-00014.safetensors",

|

| 316 |

+

"model.layers.8.self_attn.o_proj.weight": "model-00004-of-00014.safetensors",

|

| 317 |

+

"model.layers.8.self_attn.q_proj.weight": "model-00004-of-00014.safetensors",

|

| 318 |

+

"model.layers.8.self_attn.v_proj.weight": "model-00004-of-00014.safetensors",

|

| 319 |

+

"model.layers.9.input_layernorm.weight": "model-00004-of-00014.safetensors",

|

| 320 |

+

"model.layers.9.mlp.down_proj.weight": "model-00004-of-00014.safetensors",

|

| 321 |

+

"model.layers.9.mlp.gate_proj.weight": "model-00004-of-00014.safetensors",

|

| 322 |

+

"model.layers.9.mlp.up_proj.weight": "model-00004-of-00014.safetensors",

|

| 323 |

+

"model.layers.9.self_attn.k_proj.weight": "model-00004-of-00014.safetensors",

|

| 324 |

+

"model.layers.9.self_attn.o_proj.weight": "model-00004-of-00014.safetensors",

|

| 325 |

+

"model.layers.9.self_attn.q_proj.weight": "model-00004-of-00014.safetensors",

|

| 326 |

+

"model.layers.9.self_attn.v_proj.weight": "model-00004-of-00014.safetensors",

|

| 327 |

+

"model.norm.weight": "model-00014-of-00014.safetensors"

|

| 328 |

+

}

|

| 329 |

+

}
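Note: the mapping above assigns each tensor name to one shard file, and a single layer can straddle two shards (layer 23 does). A minimal sketch of inverting that mapping to see what each shard holds follows. It assumes the entries sit under a top-level `weight_map` key, as in the usual sharded-safetensors index layout; that key is not visible in this part of the diff. `group_by_shard` is a hypothetical helper name, and the sample is a small excerpt, not the full file.

```python
import json
from collections import defaultdict


def group_by_shard(weight_map):
    """Invert tensor-name -> shard-file into shard-file -> sorted tensor names."""
    shards = defaultdict(list)
    for tensor_name, shard_file in weight_map.items():
        shards[shard_file].append(tensor_name)
    return {shard: sorted(names) for shard, names in sorted(shards.items())}


# Excerpt copied from the entries above; the "weight_map" wrapper key is an assumption.
index_text = """
{
  "weight_map": {
    "model.layers.23.input_layernorm.weight": "model-00009-of-00014.safetensors",
    "model.layers.23.mlp.down_proj.weight": "model-00009-of-00014.safetensors",
    "model.layers.23.self_attn.k_proj.weight": "model-00008-of-00014.safetensors",
    "model.layers.23.self_attn.q_proj.weight": "model-00008-of-00014.safetensors",
    "model.norm.weight": "model-00014-of-00014.safetensors"
  }
}
"""

index = json.loads(index_text)
by_shard = group_by_shard(index["weight_map"])

# Print how many of the sampled tensors live in each shard file.
for shard, names in by_shard.items():
    print(shard, len(names))
```

To inspect the real file, the same helper could be applied to the parsed contents of `model.safetensors.index.json` instead of the inline excerpt.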

|

special_tokens_map.json

ADDED

|

@@ -0,0 +1,23 @@

|

| 1 |

+

{

|

| 2 |

+

"bos_token": {

|

| 3 |

+

"content": "<BOS_TOKEN>",

|

| 4 |

+

"lstrip": false,

|

| 5 |

+

"normalized": false,

|

| 6 |

+

"rstrip": false,

|

| 7 |

+

"single_word": false

|

| 8 |

+

},

|

| 9 |

+

"eos_token": {

|

| 10 |

+

"content": "<|END_OF_TURN_TOKEN|>",

|

| 11 |

+

"lstrip": false,

|

| 12 |

+

"normalized": false,

|

| 13 |

+

"rstrip": false,

|

| 14 |

+

"single_word": false

|

| 15 |

+

},

|

| 16 |

+

"pad_token": {

|

| 17 |

+

"content": "<PAD>",

|

| 18 |

+

"lstrip": false,

|

| 19 |

+

"normalized": false,

|

| 20 |

+

"rstrip": false,

|

| 21 |

+

"single_word": false

|

| 22 |

+

}

|

| 23 |

+

}
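Note: each entry in the file above wraps the token string in an object with `content`, `lstrip`, `normalized`, `rstrip` and `single_word` fields. As a rough sketch (not how any particular tokenizer library loads the file), the role-to-string mapping can be pulled out with the standard `json` module. The inline text reproduces the added file; `token_strings` is a hypothetical helper name.

```python
import json


def token_strings(special_tokens_map):
    """Map each special-token role (e.g. "bos_token") to its literal string."""
    return {role: spec["content"] for role, spec in special_tokens_map.items()}


# Contents of special_tokens_map.json as added in this commit.
special_tokens_text = """
{
  "bos_token": {"content": "<BOS_TOKEN>", "lstrip": false, "normalized": false,
                "rstrip": false, "single_word": false},
  "eos_token": {"content": "<|END_OF_TURN_TOKEN|>", "lstrip": false, "normalized": false,
                "rstrip": false, "single_word": false},
  "pad_token": {"content": "<PAD>", "lstrip": false, "normalized": false,
                "rstrip": false, "single_word": false}
}
"""

tokens = token_strings(json.loads(special_tokens_text))
for role, text in tokens.items():
    print(role, text)
```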

|

tokenizer.json

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:c69a7ea6c0927dfac8c349186ebcf0466a4723c21cbdb2e850cf559f0bee92b8

|

| 3 |

+

size 12777433
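Note: the three lines above are a Git LFS pointer, not the tokenizer itself; the actual `tokenizer.json` (12,777,433 bytes) is stored in LFS and identified by the SHA-256 digest. A minimal sketch of parsing such a pointer follows, assuming the plain `key value` line layout shown in the diff. `parse_lfs_pointer` is a hypothetical helper, not part of any Git LFS tooling.

```python
def parse_lfs_pointer(text):
    """Split a Git LFS pointer file into version, hash algorithm, digest and size."""
    fields = {}
    for line in text.strip().splitlines():
        # Each pointer line is "<key> <value>", separated by the first space.
        key, _, value = line.partition(" ")
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


# Pointer text copied from the tokenizer.json entry above.
pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:c69a7ea6c0927dfac8c349186ebcf0466a4723c21cbdb2e850cf559f0bee92b8
size 12777433
"""

info = parse_lfs_pointer(pointer)
print(info["hash_algo"], info["size"])
```

After downloading the real file, its size and SHA-256 digest could be compared against `info["size"]` and `info["digest"]` to confirm the download is intact.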

|

tokenizer_config.json

ADDED

|

@@ -0,0 +1,322 @@

|
