init

- README.md +31 -1

- data/stats.data_size.csv +5 -0

- data/stats.entity_distribution.png +3 -0

- data/stats.predicate_distribution.png +3 -0

- data/t_rex.filter.test.jsonl +2 -2

- data/t_rex.filter.train.jsonl +2 -2

- data/t_rex.filter.validation.jsonl +2 -2

- filtering.py +30 -5

- stats.py +132 -0

README.md

CHANGED

|

@@ -14,8 +14,38 @@ pretty_name: t_rex

|

|

| 14 |

- **Paper:** [https://aclanthology.org/L18-1544/](https://aclanthology.org/L18-1544/)

|

| 15 |

- **Dataset:** T-REX

|

| 16 |

|

| 17 |

-

|

| 18 |

This is the T-REX dataset proposed in [https://aclanthology.org/L18-1544/](https://aclanthology.org/L18-1544/).


| 19 |

|

| 20 |

|

| 21 |

## Dataset Structure

|

|

|

|

| 14 |

- **Paper:** [https://aclanthology.org/L18-1544/](https://aclanthology.org/L18-1544/)

|

| 15 |

- **Dataset:** T-REX

|

| 16 |

|

| 17 |

+

## Dataset Summary

|

| 18 |

This is the T-REX dataset proposed in [https://aclanthology.org/L18-1544/](https://aclanthology.org/L18-1544/).

|

| 19 |

+

We split the raw T-REX dataset into train/validation/test sets with a 70/15/15 ratio.
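The script that produced the split is not part of this commit, so the following is only a minimal sketch of how a 70/15/15 split could be made, assuming a seeded random shuffle. The function name `split_dataset`, the integer-percentage interface, and the seed are hypothetical.

```python
import random


def split_dataset(records, percents=(70, 15, 15), seed=0):
    """Shuffle records and cut them into train/validation/test lists.

    `percents` are integer percentages; the test split takes the remainder.
    This is an illustrative assumption, not the procedure used for T-REX.
    """
    assert sum(percents) == 100
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * percents[0] // 100
    n_valid = len(shuffled) * percents[1] // 100
    return {
        "train": shuffled[:n_train],
        "validation": shuffled[n_train:n_train + n_valid],
        "test": shuffled[n_train + n_valid:],
    }


splits = split_dataset(range(100))
print({name: len(part) for name, part in splits.items()})
```

Integer arithmetic is used for the cut points so that the split sizes do not depend on floating-point rounding.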

|

| 20 |

+

|

| 21 |

+

### Filtering to Remove Noise

|

| 22 |

+

|

| 23 |

+

We filter the data to keep only triples whose subject and object are both alphanumeric and in which at least one of the subject or object is a named entity.

|

| 24 |

+

|

| 25 |

+

- Number of unique entities in subject and object.

|

| 26 |

+

|

| 27 |

+

| Dataset | `train` | `validation` | `test` | `all` |

|

| 28 |

+

| ------- | ------- | ------------ | ------- | ------- |

|

| 29 |

+

| raw | 659,163 | 141,248 | 141,249 | 941,660 |

|

| 30 |

+

| filter | 463,521 | 99,550 | 99,408 | 662,479 |

|

| 31 |

+

|

| 32 |

+

- Number of unique predicates.

|

| 33 |

+

|

| 34 |

+

| Dataset | `train` | `validation` | `test` | `all` |

|

| 35 |

+

| ------- | ------- | ------------ | ------- | ----- |

|

| 36 |

+

| raw | 894 | 717 | 700 | 2,311 |

|

| 37 |

+

| filter | 780 | 614 | 616 | 2,010 |

|

| 38 |

+

|

| 39 |

+

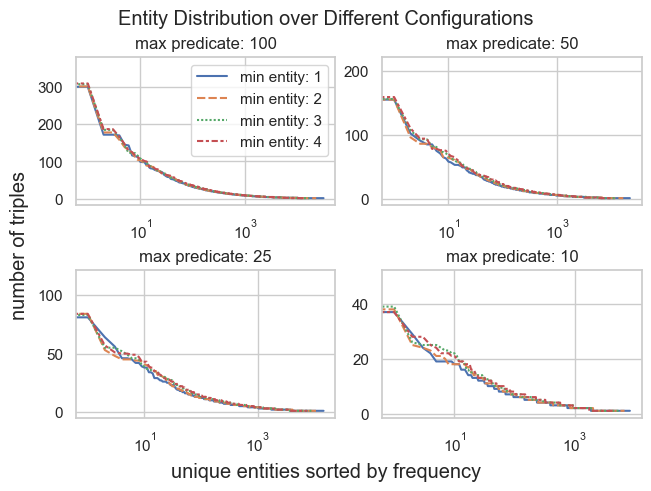

### Filtering to Purify the Dataset

|

| 40 |

+

|

| 41 |

+

| min entity/max predicate | 10 | 25 | 50 | 100 |

|

| 42 |

+

|-------------------------:|-----:|------:|------:|------:|

|

| 43 |

+

| 1 | 6,052 | 12,295 | 20,602 | 33,206 |

|

| 44 |

+

| 2 | 5,489 | 11,153 | 18,595 | 29,618 |

|

| 45 |

+

| 3 | 4,986 | 10,093 | 16,599 | 26,151 |

|

| 46 |

+

| 4 | 4,640 | 9,384 | 15,335 | 24,075 |
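The table reports how many triples remain for each combination of a minimum entity frequency (rows) and a maximum number of triples per predicate (columns). As a rough illustration of the first criterion only, the sketch below drops triples whose capitalized subject or object occurs fewer than `min_freq` times in that role. The toy triples and the helper name `entity_frequency_filter` are made up for this example; the actual logic lives in `stats.py`.

```python
from collections import Counter


def is_entity(token):
    # Same heuristic as stats.py: any uppercase character marks an entity.
    return any(ch.isupper() for ch in token)


def entity_frequency_filter(triples, min_freq):
    """Keep triples whose entity-like subject and object are frequent enough."""
    subject_count = Counter(t["subject"] for t in triples)
    object_count = Counter(t["object"] for t in triples)

    def frequent(token, counts):
        # Non-entities always pass; entities must reach min_freq in this role.
        return not is_entity(token) or counts[token] >= min_freq

    return [
        t for t in triples
        if frequent(t["subject"], subject_count) and frequent(t["object"], object_count)
    ]


# Hypothetical toy data, not taken from T-REX.
toy = [
    {"subject": "Paris", "predicate": "capital of", "object": "France"},
    {"subject": "Paris", "predicate": "located in", "object": "France"},
    {"subject": "Lyon", "predicate": "located in", "object": "France"},
    {"subject": "river", "predicate": "flows through", "object": "Lyon"},
]
kept = entity_frequency_filter(toy, min_freq=2)
print(len(kept), "of", len(toy), "triples kept")
```

Raising `min_freq` can only shrink the result, which matches the way the counts in each column of the table decrease from row 1 to row 4.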

|

| 47 |

+

|

| 48 |

+

[comment]: <> (<img src="https://raw.githubusercontent.com/asahi417/relbert/test/assets/relbert_logo.png" alt="" width="150" style="margin-left:'auto' margin-right:'auto' display:'block'"/>)

|

| 49 |

|

| 50 |

|

| 51 |

## Dataset Structure

|

data/stats.data_size.csv

ADDED

|

@@ -0,0 +1,5 @@


| 1 |

+

min entity / max predicate,10,25,50,100

|

| 2 |

+

1,6052,12295,20602,33206

|

| 3 |

+

2,5489,11153,18595,29618

|

| 4 |

+

3,4986,10093,16599,26151

|

| 5 |

+

4,4640,9384,15335,24075
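To show how this file can be consumed, here is a small sketch that parses the CSV content with the standard library and expresses each configuration relative to the largest one. The CSV text is embedded inline so the snippet is self-contained; in the repository one would open `data/stats.data_size.csv` instead.

```python
import csv
import io

CSV_TEXT = """min entity / max predicate,10,25,50,100
1,6052,12295,20602,33206
2,5489,11153,18595,29618
3,4986,10093,16599,26151
4,4640,9384,15335,24075
"""

rows = list(csv.reader(io.StringIO(CSV_TEXT)))
header, body = rows[0], rows[1:]
max_predicates = [int(c) for c in header[1:]]

# Map (min entity frequency, max triples per predicate) -> number of triples.
sizes = {
    (int(row[0]), max_pred): int(value)
    for row in body
    for max_pred, value in zip(max_predicates, row[1:])
}

largest = max(sizes.values())
for (min_entity, max_pred), n in sorted(sizes.items()):
    print(f"min entity {min_entity}, max predicate {max_pred}: {n} ({n / largest:.1%})")
```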

|

data/stats.entity_distribution.png

ADDED

|

Git LFS Details

|

data/stats.predicate_distribution.png

ADDED

|

Git LFS Details

|

data/t_rex.filter.test.jsonl

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

-

size

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f0cb4f880cdd24589aad630ba79e042a4dcce3d6471f490cd472886366a68d50

|

| 3 |

+

size 90649913

|

data/t_rex.filter.train.jsonl

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

-

size

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:800dc2faef4a84c10be28a8560c5970a797e1ab852238367d0cec96f8db86021

|

| 3 |

+

size 422593571

|

data/t_rex.filter.validation.jsonl

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

-

size

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:b690964fd198d372882d889cc6169184be5373335315fc31e5e34975fb4d4c89

|

| 3 |

+

size 90752371
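The three `.jsonl` entries above are Git LFS pointer files rather than the data itself: each one records the spec version, the SHA-256 object id, and the size in bytes. A minimal sketch for reading such a pointer, using a hypothetical helper `parse_lfs_pointer` and the validation-split values from this commit:

```python
def parse_lfs_pointer(text):
    """Parse the 'key value' lines of a Git LFS pointer file into a dict."""
    fields = dict(line.split(" ", 1) for line in text.splitlines() if line.strip())
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "oid": digest,
        "size": int(fields["size"]),  # size of the real file in bytes
    }


POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:b690964fd198d372882d889cc6169184be5373335315fc31e5e34975fb4d4c89
size 90752371
"""

info = parse_lfs_pointer(POINTER)
print(info["hash_algo"], info["size"])
```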

|

filtering.py

CHANGED

|

@@ -1,23 +1,48 @@

|

|

| 1 |

import json

|

|

|

|

|

|

|

| 2 |

|

| 3 |

stopwords = ["he", "she", "they", "it"]

|

|

|

|

| 4 |

|

| 5 |

|

| 6 |

def filtering(entry):

|

| 7 |

-


| 8 |

return False

|

|

|

|

| 9 |

if entry['object'].islower() and entry['subject'].islower():

|

| 10 |

return False

|

|

|

|

| 11 |

return True

|

| 12 |

|

| 13 |

|

| 14 |

-

full_data = {}

|

| 15 |

for s in ['train', 'validation', 'test']:

|

| 16 |

with open(f"data/t_rex.raw.{s}.jsonl") as f:

|

| 17 |

data = [json.loads(i) for i in f.read().split('\n') if len(i) > 0]

|

|

|

|

| 18 |

data = [i for i in data if filtering(i)]

|

| 19 |

-

|

| 20 |

with open(f"data/t_rex.filter.{s}.jsonl", 'w') as f:

|

| 21 |

f.write('\n'.join([json.dumps(i) for i in data]))

|

| 22 |

-

|

| 23 |

-

# full_data[s] = [i for i in data if filtering(i)]

|

|

|

|

| 1 |

import json

|

| 2 |

+

import string

|

| 3 |

+

import re

|

| 4 |

|

| 5 |

stopwords = ["he", "she", "they", "it"]

|

| 6 |

+

list_alnum = string.ascii_lowercase + '0123456789 '

|

| 7 |

|

| 8 |

|

| 9 |

def filtering(entry):

|

| 10 |

+

|

| 11 |

+

def _subfilter(token):

|

| 12 |

+

if len(re.findall(rf'[^{list_alnum}]+', token)) != 0:

|

| 13 |

+

return False

|

| 14 |

+

if token in stopwords:

|

| 15 |

+

return False

|

| 16 |

+

if token.startswith("www"):

|

| 17 |

+

return False

|

| 18 |

+

if token.startswith("."):

|

| 19 |

+

return False

|

| 20 |

+

if token.startswith(","):

|

| 21 |

+

return False

|

| 22 |

+

if token.startswith("$"):

|

| 23 |

+

return False

|

| 24 |

+

if token.startswith("+"):

|

| 25 |

+

return False

|

| 26 |

+

if token.startswith("#"):

|

| 27 |

+

return False

|

| 28 |

+

return True

|

| 29 |

+

|

| 30 |

+

if not _subfilter(entry["object"].lower()):

|

| 31 |

+

return False

|

| 32 |

+

if not _subfilter(entry["subject"].lower()):

|

| 33 |

return False

|

| 34 |

+

|

| 35 |

if entry['object'].islower() and entry['subject'].islower():

|

| 36 |

return False

|

| 37 |

+

|

| 38 |

return True

|

| 39 |

|

| 40 |

|

|

|

|

| 41 |

for s in ['train', 'validation', 'test']:

|

| 42 |

with open(f"data/t_rex.raw.{s}.jsonl") as f:

|

| 43 |

data = [json.loads(i) for i in f.read().split('\n') if len(i) > 0]

|

| 44 |

+

print(f"[{s}] (before): {len(data)}")

|

| 45 |

data = [i for i in data if filtering(i)]

|

| 46 |

+

print(f"[{s}] (after) : {len(data)}")

|

| 47 |

with open(f"data/t_rex.filter.{s}.jsonl", 'w') as f:

|

| 48 |

f.write('\n'.join([json.dumps(i) for i in data]))
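As an illustration of what the updated filter accepts, the sketch below restates its rules in compact form and applies them to a few made-up entries. It is a paraphrase for readability, not the committed code: `token_ok`, `keep_triple`, and the example entries are hypothetical. The punctuation-prefix checks are left out because the alphanumeric check already rejects any string containing those characters.

```python
import re
import string

STOPWORDS = {"he", "she", "they", "it"}
ALNUM = string.ascii_lowercase + "0123456789 "
NON_ALNUM = re.compile(rf"[^{ALNUM}]")


def token_ok(token):
    """Check one lowercased subject or object string."""
    if NON_ALNUM.search(token):   # any character outside a-z, 0-9, space
        return False
    if token in STOPWORDS:        # bare pronouns
        return False
    if token.startswith("www"):   # URL-like strings
        return False
    return True


def keep_triple(entry):
    if not token_ok(entry["object"].lower()) or not token_ok(entry["subject"].lower()):
        return False
    # Drop triples where both sides are entirely lowercase (no named entity).
    return not (entry["object"].islower() and entry["subject"].islower())


# Invented examples, not taken from the dataset.
examples = [
    {"subject": "Barack Obama", "object": "Hawaii"},   # kept
    {"subject": "he", "object": "Hawaii"},             # pronoun subject
    {"subject": "apple", "object": "fruit"},           # both lowercase
    {"subject": "São Paulo", "object": "Brazil"},      # non-ASCII character
]
results = [keep_triple(e) for e in examples]
print(results)
```

Because the character class only covers ASCII letters, digits, and spaces, names with accents, hyphens, or apostrophes are removed as well, which is worth keeping in mind when interpreting the filtered counts.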

|

|

|

|

|

|

stats.py

ADDED

|

@@ -0,0 +1,132 @@


| 1 |

+

import json

|

| 2 |

+

from itertools import product

|

| 3 |

+

|

| 4 |

+

import numpy as np

|

| 5 |

+

import pandas as pd

|

| 6 |

+

import seaborn as sns

|

| 7 |

+

from matplotlib import pyplot as plt

|

| 8 |

+

|

| 9 |

+

from datasets import Dataset

|

| 10 |

+

|

| 11 |

+

sns.set_theme(style="whitegrid")

|

| 12 |

+

|

| 13 |

+

# load filtered data

|

| 14 |

+

tmp = []

|

| 15 |

+

for s in ['train', 'validation', 'test']:

|

| 16 |

+

with open(f"data/t_rex.filter.{s}.jsonl") as f:

|

| 17 |

+

tmp += [json.loads(i) for i in f.read().split('\n') if len(i) > 0]

|

| 18 |

+

data = Dataset.from_list(tmp)

|

| 19 |

+

df_main = data.to_pandas()

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

def is_entity(token):

|

| 23 |

+

return any(i.isupper() for i in token)

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def filtering(row, min_freq: int = 3, target: str = "subject"):

|

| 27 |

+

if not row['is_entity']:

|

| 28 |

+

return True

|

| 29 |

+

return row[target] >= min_freq

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

def main(min_entity_freq, max_pairs_predicate, min_pairs_predicate: int = 1,

|

| 33 |

+

return_stats: bool = True, random_sampling: bool = True):

|

| 34 |

+

|

| 35 |

+

df = df_main.copy()

|

| 36 |

+

|

| 37 |

+

# entity frequency filter

|

| 38 |

+

c_sub = df.groupby("subject")['title'].count()

|

| 39 |

+

c_obj = df.groupby("object")['title'].count()

|

| 40 |

+

key = set(list(c_sub.index) + list(c_obj.index))

|

| 41 |

+

count = pd.DataFrame([{'entity': k, "subject": c_sub[k] if k in c_sub else 0, "object": c_obj[k] if k in c_obj else 0} for k in key])

|

| 42 |

+

count.index = count.pop('entity')

|

| 43 |

+

count['is_entity'] = [is_entity(i) for i in count.index]

|

| 44 |

+

count['sum'] = count['subject'] + count['object']

|

| 45 |

+

count_filter_sub = count[count.apply(lambda x: filtering(x, min_freq=min_entity_freq, target='subject'), axis=1)]['subject']

|

| 46 |

+

count_filter_obj = count[count.apply(lambda x: filtering(x, min_freq=min_entity_freq, target='object'), axis=1)]['object']

|

| 47 |

+

vocab_sub = set(count_filter_sub.index)

|

| 48 |

+

vocab_obj = set(count_filter_obj.index)

|

| 49 |

+

df['flag_subject'] = [i in vocab_sub for i in df['subject']]

|

| 50 |

+

df['flag_object'] = [i in vocab_obj for i in df['object']]

|

| 51 |

+

df['flag'] = df['flag_subject'] & df['flag_object']

|

| 52 |

+

df_filter = df[df['flag']]

|

| 53 |

+

df_filter.pop("flag")

|

| 54 |

+

df_filter.pop("flag_subject")

|

| 55 |

+

df_filter.pop("flag_object")

|

| 56 |

+

df_filter['count_subject'] = [count_filter_sub.loc[i] for i in df_filter['subject']]

|

| 57 |

+

df_filter['count_object'] = [count_filter_obj.loc[i] for i in df_filter['object']]

|

| 58 |

+

df_filter['count_sum'] = df_filter['count_subject'] + df_filter['count_object']

|

| 59 |

+

|

| 60 |

+

# predicate frequency filter

|

| 61 |

+

if random_sampling:

|

| 62 |

+

df_balanced = pd.concat(

|

| 63 |

+

[g if len(g) <= max_pairs_predicate else g.sample(max_pairs_predicate, random_state=0) for _, g in

|

| 64 |

+

df_filter.groupby("predicate") if len(g) >= min_pairs_predicate])

|

| 65 |

+

else:

|

| 66 |

+

df_balanced = pd.concat(

|

| 67 |

+

[g if len(g) <= max_pairs_predicate else g.sort_values(by='count_sum', ascending=False).head(max_pairs_predicate) for _, g in

|

| 68 |

+

df_filter.groupby("predicate") if len(g) >= min_pairs_predicate])

|

| 69 |

+

|

| 70 |

+

if not return_stats:

|

| 71 |

+

df_balanced.pop("count_subject")

|

| 72 |

+

df_balanced.pop("count_object")

|

| 73 |

+

df_balanced.pop("count_sum")

|

| 74 |

+

return [i.to_dict() for _, i in df_balanced.iterrows()]

|

| 75 |

+

|

| 76 |

+

# return distribution

|

| 77 |

+

predicate_dist = df_balanced.groupby("predicate")['text'].count().sort_values(ascending=False).to_dict()

|

| 78 |

+

entity, count = np.unique(df_balanced['object'].tolist() + df_balanced['subject'].tolist(), return_counts=True)

|

| 79 |

+

entity_dist = dict(list(zip(entity.tolist(), count.tolist())))

|

| 80 |

+

return predicate_dist, entity_dist, len(df_balanced)

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

if __name__ == '__main__':

|

| 84 |

+

p_dist_full = []

|

| 85 |

+

e_dist_full = []

|

| 86 |

+

data_size_full = []

|

| 87 |

+

config = []

|

| 88 |

+

candidates = list(product([1, 2, 3, 4], [100, 50, 25, 10]))

|

| 89 |

+

|

| 90 |

+

# run filtering with different configs

|

| 91 |

+

for min_e_freq, max_p_freq in candidates:

|

| 92 |

+

p_dist, e_dist, data_size = main(min_entity_freq=min_e_freq, max_pairs_predicate=max_p_freq)

|

| 93 |

+

p_dist_full.append(p_dist)

|

| 94 |

+

e_dist_full.append(e_dist)

|

| 95 |

+

data_size_full.append(data_size)

|

| 96 |

+

config.append([min_e_freq, max_p_freq])

|

| 97 |

+

|

| 98 |

+

# check statistics

|

| 99 |

+

print("- Data Size")

|

| 100 |

+

df_size = pd.DataFrame([{"min entity": mef, "max predicate": mpf, "freq": x} for x, (mef, mpf) in zip(data_size_full, candidates)])

|

| 101 |

+

df_size = df_size.pivot(index="min entity", columns="max predicate", values="freq")

|

| 102 |

+

df_size.index.name = "min entity / max predicate"

|

| 103 |

+

df_size.to_csv("data/stats.data_size.csv")

|

| 104 |

+

print(df_size.to_markdown())

|

| 105 |

+

|

| 106 |

+

# plot predicate distribution

|

| 107 |

+

df_p = pd.DataFrame([dict(enumerate(sorted(p.values(), reverse=True))) for p in p_dist_full]).T

|

| 108 |

+

df_p.columns = [f"min entity: {mef}, max predicate: {mpf}" for mef, mpf in candidates]

|

| 109 |

+

_df_p = df_p[[f"min entity: {mef}, max predicate: 100" for mef in [1, 2, 3, 4]]]

|

| 110 |

+

_df_p.columns = [f"min entity: {mef}" for mef in [1, 2, 3, 4]]

|

| 111 |

+

ax = sns.lineplot(data=_df_p, linewidth=2.5)

|

| 112 |

+

ax.set(xlabel='unique predicates sorted by frequency', ylabel='number of triples', title='Predicate Distribution (max predicate: 100)')

|

| 113 |

+

ax.get_figure().savefig("data/stats.predicate_distribution.png", bbox_inches='tight')

|

| 114 |

+

ax.get_figure().clf()

|

| 115 |

+

|

| 116 |

+

# plot entity distribution

|

| 117 |

+

df_e = pd.DataFrame([dict(enumerate(sorted(e.values(), reverse=True))) for e in e_dist_full]).T

|

| 118 |

+

df_e.columns = [f"min entity: {mef}, max predicate: {mpf}" for mef, mpf in candidates]

|

| 119 |

+

|

| 120 |

+

fig, axes = plt.subplots(2, 2, constrained_layout=True)

|

| 121 |

+

fig.suptitle('Entity Distribution over Different Configurations')

|

| 122 |

+

for (x, y), mpf in zip([(0, 0), (0, 1), (1, 0), (1, 1)], [100, 50, 25, 10]):

|

| 123 |

+

_df = df_e[[f"min entity: {mef}, max predicate: {mpf}" for mef in [1, 2, 3, 4]]]

|

| 124 |

+

_df.columns = [f"min entity: {mef}" for mef in [1, 2, 3, 4]]

|

| 125 |

+

ax = sns.lineplot(ax=axes[x, y], data=_df, linewidth=1.5)

|

| 126 |

+

ax.set(xscale='log')

|

| 127 |

+

if mpf != 100:

|

| 128 |

+

ax.legend_.remove()

|

| 129 |

+

axes[x, y].set_title(f'max predicate: {mpf}')

|

| 130 |

+

fig.supxlabel('unique entities sorted by frequency')

|

| 131 |

+

fig.supylabel('number of triples')

|

| 132 |

+

fig.savefig("data/stats.entity_distribution.png", bbox_inches='tight')
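The predicate-level step in `main` caps each predicate at `max_pairs_predicate` triples, either by seeded random sampling or by keeping the triples with the highest `count_sum`. A compact sketch of the random-sampling branch on a toy frame follows; the data and the helper name `cap_per_predicate` are invented for illustration.

```python
import pandas as pd


def cap_per_predicate(df, max_pairs, min_pairs=1, seed=0):
    """Keep at most max_pairs rows per predicate.

    Predicates with fewer than min_pairs rows are dropped entirely.
    """
    groups = [
        g if len(g) <= max_pairs else g.sample(max_pairs, random_state=seed)
        for _, g in df.groupby("predicate")
        if len(g) >= min_pairs
    ]
    return pd.concat(groups)


# Hypothetical toy data: one frequent predicate and one rare predicate.
toy = pd.DataFrame({
    "predicate": ["born in"] * 5 + ["capital of"] * 2,
    "subject": [f"Person {i}" for i in range(5)] + ["Paris", "Rome"],
    "object": ["City"] * 5 + ["France", "Italy"],
})

balanced = cap_per_predicate(toy, max_pairs=3)
print(balanced["predicate"].value_counts().to_dict())
```

Capping flattens the head of the predicate distribution while leaving rare predicates untouched, which is why the data size in `data/stats.data_size.csv` grows with the cap.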

|