| problem_id (string, length 18-22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, length 13-58) | prompt (string, length 1.71k-18.9k) | golden_diff (string, length 145-5.13k) | verification_info (string, length 465-23.6k) | num_tokens_prompt (int64, 556-4.1k) | num_tokens_diff (int64, 47-1.02k) |
|---|---|---|---|---|---|---|---|---|

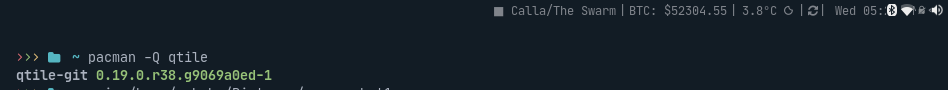

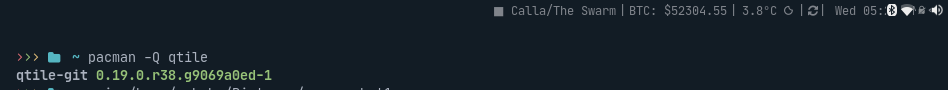

gh_patches_debug_15542 | rasdani/github-patches | git_diff | replicate__cog-553 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Dear friend,please tell me why I can't run it from cog example.

I am a newbie.

I run the code from cog examples.

I can run "cog run python",but I can't run following command.

input:

sudo cog predict -i @input.jpg

resluts:

</issue>

<code>

[start of python/cog/json.py]

1 from enum import Enum

2 import io

3 from typing import Any

4

5 from pydantic import BaseModel

6

7 from .types import Path

8

9 try:

10 import numpy as np # type: ignore

11

12 has_numpy = True

13 except ImportError:

14 has_numpy = False

15

16

17 def encode_json(obj: Any, upload_file) -> Any:

18 """

19 Returns a JSON-compatible version of the object. It will encode any Pydantic models and custom types.

20

21 When a file is encountered, it will be passed to upload_file. Any paths will be opened and converted to files.

22

23 Somewhat based on FastAPI's jsonable_encoder().

24 """

25 if isinstance(obj, BaseModel):

26 return encode_json(obj.dict(exclude_unset=True), upload_file)

27 if isinstance(obj, dict):

28 return {key: encode_json(value, upload_file) for key, value in obj.items()}

29 if isinstance(obj, list):

30 return [encode_json(value, upload_file) for value in obj]

31 if isinstance(obj, Enum):

32 return obj.value

33 if isinstance(obj, Path):

34 with obj.open("rb") as f:

35 return upload_file(f)

36 if isinstance(obj, io.IOBase):

37 return upload_file(obj)

38 if has_numpy:

39 if isinstance(obj, np.integer):

40 return int(obj)

41 if isinstance(obj, np.floating):

42 return float(obj)

43 if isinstance(obj, np.ndarray):

44 return obj.tolist()

45 return obj

46

[end of python/cog/json.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/python/cog/json.py b/python/cog/json.py

--- a/python/cog/json.py

+++ b/python/cog/json.py

@@ -1,5 +1,6 @@

from enum import Enum

import io

+from types import GeneratorType

from typing import Any

from pydantic import BaseModel

@@ -26,7 +27,7 @@

return encode_json(obj.dict(exclude_unset=True), upload_file)

if isinstance(obj, dict):

return {key: encode_json(value, upload_file) for key, value in obj.items()}

- if isinstance(obj, list):

+ if isinstance(obj, (list, set, frozenset, GeneratorType, tuple)):

return [encode_json(value, upload_file) for value in obj]

if isinstance(obj, Enum):

return obj.value

| {"golden_diff": "diff --git a/python/cog/json.py b/python/cog/json.py\n--- a/python/cog/json.py\n+++ b/python/cog/json.py\n@@ -1,5 +1,6 @@\n from enum import Enum\n import io\n+from types import GeneratorType\n from typing import Any\n \n from pydantic import BaseModel\n@@ -26,7 +27,7 @@\n return encode_json(obj.dict(exclude_unset=True), upload_file)\n if isinstance(obj, dict):\n return {key: encode_json(value, upload_file) for key, value in obj.items()}\n- if isinstance(obj, list):\n+ if isinstance(obj, (list, set, frozenset, GeneratorType, tuple)):\n return [encode_json(value, upload_file) for value in obj]\n if isinstance(obj, Enum):\n return obj.value\n", "issue": "Dear friend,please tell me why I can't run it from cog example.\nI am a newbie.\r\nI run the code from cog examples.\r\nI can run \"cog run python\",but I can't run following command.\r\ninput:\r\nsudo cog predict -i @input.jpg\r\nresluts:\r\n\r\n\n", "before_files": [{"content": "from enum import Enum\nimport io\nfrom typing import Any\n\nfrom pydantic import BaseModel\n\nfrom .types import Path\n\ntry:\n import numpy as np # type: ignore\n\n has_numpy = True\nexcept ImportError:\n has_numpy = False\n\n\ndef encode_json(obj: Any, upload_file) -> Any:\n \"\"\"\n Returns a JSON-compatible version of the object. It will encode any Pydantic models and custom types.\n\n When a file is encountered, it will be passed to upload_file. 
Any paths will be opened and converted to files.\n\n Somewhat based on FastAPI's jsonable_encoder().\n \"\"\"\n if isinstance(obj, BaseModel):\n return encode_json(obj.dict(exclude_unset=True), upload_file)\n if isinstance(obj, dict):\n return {key: encode_json(value, upload_file) for key, value in obj.items()}\n if isinstance(obj, list):\n return [encode_json(value, upload_file) for value in obj]\n if isinstance(obj, Enum):\n return obj.value\n if isinstance(obj, Path):\n with obj.open(\"rb\") as f:\n return upload_file(f)\n if isinstance(obj, io.IOBase):\n return upload_file(obj)\n if has_numpy:\n if isinstance(obj, np.integer):\n return int(obj)\n if isinstance(obj, np.floating):\n return float(obj)\n if isinstance(obj, np.ndarray):\n return obj.tolist()\n return obj\n", "path": "python/cog/json.py"}]} | 1,051 | 177 |
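
The golden diff for this record widens the sequence check in `encode_json` so that sets, frozensets, generators, and tuples are encoded the same way as lists. A minimal, stdlib-only sketch of that idea follows. It is not the Cog implementation: `encode_json_sketch`, `Color`, and `numbers` are hypothetical names, and the Pydantic, file-upload, and NumPy branches are left out.

```python
from enum import Enum
from types import GeneratorType
from typing import Any


def encode_json_sketch(obj: Any) -> Any:
    """Hypothetical, simplified stand-in for cog's encode_json."""
    if isinstance(obj, dict):
        return {key: encode_json_sketch(value) for key, value in obj.items()}
    # The widened check from the golden diff: all of these become JSON lists.
    if isinstance(obj, (list, set, frozenset, GeneratorType, tuple)):
        return [encode_json_sketch(value) for value in obj]
    if isinstance(obj, Enum):
        return obj.value
    return obj


class Color(Enum):
    # Hypothetical enum used only to exercise the Enum branch.
    RED = "red"


def numbers():
    # Hypothetical generator used only to exercise the GeneratorType branch.
    yield 1
    yield 2
```

With the original `isinstance(obj, list)` check, a tuple or generator would fall through to the final `return obj` and reach the JSON serializer unconverted; in this sketch it is turned into a list first.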

gh_patches_debug_12833 | rasdani/github-patches | git_diff | mindee__doctr-219 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Demo app error when analyzing my first document

## 🐛 Bug

I tried to analyze a PNG and a PDF, got the same error. I try to change the model, didn't change anything.

## To Reproduce

Steps to reproduce the behavior:

1. Upload a PNG

2. Click on analyze document

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

```

KeyError: 0

Traceback:

File "/Users/thibautmorla/opt/anaconda3/lib/python3.8/site-packages/streamlit/script_runner.py", line 337, in _run_script

exec(code, module.__dict__)

File "/Users/thibautmorla/Downloads/doctr/demo/app.py", line 93, in <module>

main()

File "/Users/thibautmorla/Downloads/doctr/demo/app.py", line 77, in main

seg_map = predictor.det_predictor.model(processed_batches[0])[0]

```

## Additional context

First image upload

</issue>

<code>

[start of demo/app.py]

1 # Copyright (C) 2021, Mindee.

2

3 # This program is licensed under the Apache License version 2.

4 # See LICENSE or go to <https://www.apache.org/licenses/LICENSE-2.0.txt> for full license details.

5

6 import os

7 import streamlit as st

8 import matplotlib.pyplot as plt

9

10 os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

11

12 import tensorflow as tf

13 import cv2

14

15 gpu_devices = tf.config.experimental.list_physical_devices('GPU')

16 if any(gpu_devices):

17 tf.config.experimental.set_memory_growth(gpu_devices[0], True)

18

19 from doctr.documents import DocumentFile

20 from doctr.models import ocr_predictor

21 from doctr.utils.visualization import visualize_page

22

23 DET_ARCHS = ["db_resnet50"]

24 RECO_ARCHS = ["crnn_vgg16_bn", "crnn_resnet31", "sar_vgg16_bn", "sar_resnet31"]

25

26

27 def main():

28

29 # Wide mode

30 st.set_page_config(layout="wide")

31

32 # Designing the interface

33 st.title("DocTR: Document Text Recognition")

34 # For newline

35 st.write('\n')

36 # Set the columns

37 cols = st.beta_columns((1, 1, 1))

38 cols[0].header("Input document")

39 cols[1].header("Text segmentation")

40 cols[-1].header("OCR output")

41

42 # Sidebar

43 # File selection

44 st.sidebar.title("Document selection")

45 # Disabling warning

46 st.set_option('deprecation.showfileUploaderEncoding', False)

47 # Choose your own image

48 uploaded_file = st.sidebar.file_uploader("Upload files", type=['pdf', 'png', 'jpeg', 'jpg'])

49 if uploaded_file is not None:

50 if uploaded_file.name.endswith('.pdf'):

51 doc = DocumentFile.from_pdf(uploaded_file.read())

52 else:

53 doc = DocumentFile.from_images(uploaded_file.read())

54 cols[0].image(doc[0], "First page", use_column_width=True)

55

56 # Model selection

57 st.sidebar.title("Model selection")

58 det_arch = st.sidebar.selectbox("Text detection model", DET_ARCHS)

59 reco_arch = st.sidebar.selectbox("Text recognition model", RECO_ARCHS)

60

61 # For newline

62 st.sidebar.write('\n')

63

64 if st.sidebar.button("Analyze document"):

65

66 if uploaded_file is None:

67 st.sidebar.write("Please upload a document")

68

69 else:

70 with st.spinner('Loading model...'):

71 predictor = ocr_predictor(det_arch, reco_arch, pretrained=True)

72

73 with st.spinner('Analyzing...'):

74

75 # Forward the image to the model

76 processed_batches = predictor.det_predictor.pre_processor(doc)

77 seg_map = predictor.det_predictor.model(processed_batches[0])[0]

78 seg_map = cv2.resize(seg_map.numpy(), (doc[0].shape[1], doc[0].shape[0]),

79 interpolation=cv2.INTER_LINEAR)

80 # Plot the raw heatmap

81 fig, ax = plt.subplots()

82 ax.imshow(seg_map)

83 ax.axis('off')

84 cols[1].pyplot(fig)

85

86 # OCR

87 out = predictor(doc)

88 fig = visualize_page(out.pages[0].export(), doc[0], interactive=False)

89 cols[-1].pyplot(fig)

90

91

92 if __name__ == '__main__':

93 main()

94

[end of demo/app.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/demo/app.py b/demo/app.py

--- a/demo/app.py

+++ b/demo/app.py

@@ -74,7 +74,8 @@

# Forward the image to the model

processed_batches = predictor.det_predictor.pre_processor(doc)

- seg_map = predictor.det_predictor.model(processed_batches[0])[0]

+ seg_map = predictor.det_predictor.model(processed_batches[0])["proba_map"]

+ seg_map = tf.squeeze(seg_map, axis=[0, 3])

seg_map = cv2.resize(seg_map.numpy(), (doc[0].shape[1], doc[0].shape[0]),

interpolation=cv2.INTER_LINEAR)

# Plot the raw heatmap

| {"golden_diff": "diff --git a/demo/app.py b/demo/app.py\n--- a/demo/app.py\n+++ b/demo/app.py\n@@ -74,7 +74,8 @@\n \n # Forward the image to the model\n processed_batches = predictor.det_predictor.pre_processor(doc)\n- seg_map = predictor.det_predictor.model(processed_batches[0])[0]\n+ seg_map = predictor.det_predictor.model(processed_batches[0])[\"proba_map\"]\n+ seg_map = tf.squeeze(seg_map, axis=[0, 3])\n seg_map = cv2.resize(seg_map.numpy(), (doc[0].shape[1], doc[0].shape[0]),\n interpolation=cv2.INTER_LINEAR)\n # Plot the raw heatmap\n", "issue": "Demo app error when analyzing my first document\n## \ud83d\udc1b Bug\r\n\r\nI tried to analyze a PNG and a PDF, got the same error. I try to change the model, didn't change anything.\r\n\r\n## To Reproduce\r\n\r\nSteps to reproduce the behavior:\r\n\r\n1. Upload a PNG\r\n2. Click on analyze document\r\n\r\n\r\n<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->\r\n```\r\nKeyError: 0\r\nTraceback:\r\nFile \"/Users/thibautmorla/opt/anaconda3/lib/python3.8/site-packages/streamlit/script_runner.py\", line 337, in _run_script\r\n exec(code, module.__dict__)\r\nFile \"/Users/thibautmorla/Downloads/doctr/demo/app.py\", line 93, in <module>\r\n main()\r\nFile \"/Users/thibautmorla/Downloads/doctr/demo/app.py\", line 77, in main\r\n seg_map = predictor.det_predictor.model(processed_batches[0])[0]\r\n```\r\n\r\n\r\n## Additional context\r\n\r\nFirst image upload\n", "before_files": [{"content": "# Copyright (C) 2021, Mindee.\n\n# This program is licensed under the Apache License version 2.\n# See LICENSE or go to <https://www.apache.org/licenses/LICENSE-2.0.txt> for full license details.\n\nimport os\nimport streamlit as st\nimport matplotlib.pyplot as plt\n\nos.environ[\"TF_CPP_MIN_LOG_LEVEL\"] = \"2\"\n\nimport tensorflow as tf\nimport cv2\n\ngpu_devices = tf.config.experimental.list_physical_devices('GPU')\nif any(gpu_devices):\n 
tf.config.experimental.set_memory_growth(gpu_devices[0], True)\n\nfrom doctr.documents import DocumentFile\nfrom doctr.models import ocr_predictor\nfrom doctr.utils.visualization import visualize_page\n\nDET_ARCHS = [\"db_resnet50\"]\nRECO_ARCHS = [\"crnn_vgg16_bn\", \"crnn_resnet31\", \"sar_vgg16_bn\", \"sar_resnet31\"]\n\n\ndef main():\n\n # Wide mode\n st.set_page_config(layout=\"wide\")\n\n # Designing the interface\n st.title(\"DocTR: Document Text Recognition\")\n # For newline\n st.write('\\n')\n # Set the columns\n cols = st.beta_columns((1, 1, 1))\n cols[0].header(\"Input document\")\n cols[1].header(\"Text segmentation\")\n cols[-1].header(\"OCR output\")\n\n # Sidebar\n # File selection\n st.sidebar.title(\"Document selection\")\n # Disabling warning\n st.set_option('deprecation.showfileUploaderEncoding', False)\n # Choose your own image\n uploaded_file = st.sidebar.file_uploader(\"Upload files\", type=['pdf', 'png', 'jpeg', 'jpg'])\n if uploaded_file is not None:\n if uploaded_file.name.endswith('.pdf'):\n doc = DocumentFile.from_pdf(uploaded_file.read())\n else:\n doc = DocumentFile.from_images(uploaded_file.read())\n cols[0].image(doc[0], \"First page\", use_column_width=True)\n\n # Model selection\n st.sidebar.title(\"Model selection\")\n det_arch = st.sidebar.selectbox(\"Text detection model\", DET_ARCHS)\n reco_arch = st.sidebar.selectbox(\"Text recognition model\", RECO_ARCHS)\n\n # For newline\n st.sidebar.write('\\n')\n\n if st.sidebar.button(\"Analyze document\"):\n\n if uploaded_file is None:\n st.sidebar.write(\"Please upload a document\")\n\n else:\n with st.spinner('Loading model...'):\n predictor = ocr_predictor(det_arch, reco_arch, pretrained=True)\n\n with st.spinner('Analyzing...'):\n\n # Forward the image to the model\n processed_batches = predictor.det_predictor.pre_processor(doc)\n seg_map = predictor.det_predictor.model(processed_batches[0])[0]\n seg_map = cv2.resize(seg_map.numpy(), (doc[0].shape[1], doc[0].shape[0]),\n 
interpolation=cv2.INTER_LINEAR)\n # Plot the raw heatmap\n fig, ax = plt.subplots()\n ax.imshow(seg_map)\n ax.axis('off')\n cols[1].pyplot(fig)\n\n # OCR\n out = predictor(doc)\n fig = visualize_page(out.pages[0].export(), doc[0], interactive=False)\n cols[-1].pyplot(fig)\n\n\nif __name__ == '__main__':\n main()\n", "path": "demo/app.py"}]} | 1,661 | 156 |
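
The `KeyError: 0` in this record is consistent with the detection model returning a mapping rather than a sequence, which is what the `["proba_map"]` lookup in the golden diff implies. A minimal sketch in plain Python follows. It uses a hypothetical `fake_model` and nested lists in place of tensors, so none of it is docTR or TensorFlow API; `squeeze_batch_and_channel` only mimics the effect of squeezing axes 0 and 3.

```python
def fake_model(batch):
    """Hypothetical stand-in: returns a dict keyed by output name."""
    # One 2x2 map with batch and channel axes of size 1: shape (1, 2, 2, 1).
    return {"proba_map": [[[[0.1], [0.9]], [[0.4], [0.6]]]]}


def squeeze_batch_and_channel(x):
    """Drop the size-1 first and last axes: (1, H, W, 1) -> (H, W)."""
    (image,) = x  # fails loudly if the batch axis is not size 1
    # Unpacking (value,) fails loudly if the channel axis is not size 1.
    return [[value for (value,) in row] for row in image]


out = fake_model(batch=None)

error_key = None
try:
    out[0]  # indexing a dict with 0 looks up the key 0, not a position
except KeyError as err:
    error_key = err.args[0]

seg_map = squeeze_batch_and_channel(out["proba_map"])
```

The squeeze step matters because the resize call that follows in the demo is given a 2-D map in the fixed version, while the raw output still carries the batch and channel axes.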

gh_patches_debug_4637 | rasdani/github-patches | git_diff | conda__conda-4585 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

ERROR conda.core.link:_execute_actions(319): An error occurred while installing package 'defaults::qt-5.6.2-vc9_3'

```

Current conda install:

platform : win-64

conda version : 4.3.9

conda is private : False

conda-env version : 4.3.9

conda-build version : 2.1.3

python version : 2.7.13.final.0

requests version : 2.12.4

root environment : C:\Users\joelkim\Anaconda2 (writable)

default environment : C:\Users\joelkim\Anaconda2

envs directories : C:\Users\joelkim\Anaconda2\envs

package cache : C:\Users\joelkim\Anaconda2\pkgs

channel URLs : https://repo.continuum.io/pkgs/free/win-64

https://repo.continuum.io/pkgs/free/noarch

https://repo.continuum.io/pkgs/r/win-64

https://repo.continuum.io/pkgs/r/noarch

https://repo.continuum.io/pkgs/pro/win-64

https://repo.continuum.io/pkgs/pro/noarch

https://repo.continuum.io/pkgs/msys2/win-64

https://repo.continuum.io/pkgs/msys2/noarch

config file : None

offline mode : False

user-agent : conda/4.3.9 requests/2.12.4 CPython/2.7.13 Windows/10 Windows/10.0.14393

```

I got this error when I tried to install qt:

```

> conda create -n test qt

Fetching package metadata ...........

Solving package specifications: .

Package plan for installation in environment C:\Users\joelkim\Anaconda2\envs\test:

The following NEW packages will be INSTALLED:

icu: 57.1-vc9_0 [vc9]

jpeg: 9b-vc9_0 [vc9]

libpng: 1.6.27-vc9_0 [vc9]

openssl: 1.0.2k-vc9_0 [vc9]

pip: 9.0.1-py27_1

python: 2.7.13-0

qt: 5.6.2-vc9_3 [vc9]

setuptools: 27.2.0-py27_1

vs2008_runtime: 9.00.30729.5054-0

wheel: 0.29.0-py27_0

zlib: 1.2.8-vc9_3 [vc9]

Proceed ([y]/n)?

ERROR conda.core.link:_execute_actions(319): An error occurred while installing package 'defaults::qt-5.6.2-vc9_3'.

```

</issue>

<code>

[start of conda/common/compat.py]

1 # -*- coding: utf-8 -*-

2 # Try to keep compat small because it's imported by everything

3 # What is compat, and what isn't?

4 # If a piece of code is "general" and used in multiple modules, it goes here.

5 # If it's only used in one module, keep it in that module, preferably near the top.

6 from __future__ import absolute_import, division, print_function, unicode_literals

7

8 from itertools import chain

9 from operator import methodcaller

10 from os import chmod, lstat

11 from os.path import islink

12 import sys

13

14 on_win = bool(sys.platform == "win32")

15

16 PY2 = sys.version_info[0] == 2

17 PY3 = sys.version_info[0] == 3

18

19

20 # #############################

21 # equivalent commands

22 # #############################

23

24 if PY3: # pragma: py2 no cover

25 string_types = str,

26 integer_types = int,

27 class_types = type,

28 text_type = str

29 binary_type = bytes

30 input = input

31 range = range

32

33 elif PY2: # pragma: py3 no cover

34 from types import ClassType

35 string_types = basestring,

36 integer_types = (int, long)

37 class_types = (type, ClassType)

38 text_type = unicode

39 binary_type = str

40 input = raw_input

41 range = xrange

42

43

44 # #############################

45 # equivalent imports

46 # #############################

47

48 if PY3: # pragma: py2 no cover

49 from io import StringIO

50 from itertools import zip_longest

51 elif PY2: # pragma: py3 no cover

52 from cStringIO import StringIO

53 from itertools import izip as zip, izip_longest as zip_longest

54

55 StringIO = StringIO

56 zip = zip

57 zip_longest = zip_longest

58

59

60 # #############################

61 # equivalent functions

62 # #############################

63

64 if PY3: # pragma: py2 no cover

65 def iterkeys(d, **kw):

66 return iter(d.keys(**kw))

67

68 def itervalues(d, **kw):

69 return iter(d.values(**kw))

70

71 def iteritems(d, **kw):

72 return iter(d.items(**kw))

73

74 viewkeys = methodcaller("keys")

75 viewvalues = methodcaller("values")

76 viewitems = methodcaller("items")

77

78 def lchmod(path, mode):

79 try:

80 chmod(path, mode, follow_symlinks=False)

81 except (TypeError, NotImplementedError, SystemError):

82 # On systems that don't allow permissions on symbolic links, skip

83 # links entirely.

84 if not islink(path):

85 chmod(path, mode)

86

87

88 from collections import Iterable

89 def isiterable(obj):

90 return not isinstance(obj, string_types) and isinstance(obj, Iterable)

91

92 elif PY2: # pragma: py3 no cover

93 def iterkeys(d, **kw):

94 return d.iterkeys(**kw)

95

96 def itervalues(d, **kw):

97 return d.itervalues(**kw)

98

99 def iteritems(d, **kw):

100 return d.iteritems(**kw)

101

102 viewkeys = methodcaller("viewkeys")

103 viewvalues = methodcaller("viewvalues")

104 viewitems = methodcaller("viewitems")

105

106 try:

107 from os import lchmod as os_lchmod

108 lchmod = os_lchmod

109 except ImportError:

110 def lchmod(path, mode):

111 # On systems that don't allow permissions on symbolic links, skip

112 # links entirely.

113 if not islink(path):

114 chmod(path, mode)

115

116 def isiterable(obj):

117 return (hasattr(obj, '__iter__')

118 and not isinstance(obj, string_types)

119 and type(obj) is not type)

120

121

122 # #############################

123 # other

124 # #############################

125

126 def with_metaclass(Type, skip_attrs=set(('__dict__', '__weakref__'))):

127 """Class decorator to set metaclass.

128

129 Works with both Python 2 and Python 3 and it does not add

130 an extra class in the lookup order like ``six.with_metaclass`` does

131 (that is -- it copies the original class instead of using inheritance).

132

133 """

134

135 def _clone_with_metaclass(Class):

136 attrs = dict((key, value) for key, value in iteritems(vars(Class))

137 if key not in skip_attrs)

138 return Type(Class.__name__, Class.__bases__, attrs)

139

140 return _clone_with_metaclass

141

142

143 from collections import OrderedDict as odict

144 odict = odict

145

146 NoneType = type(None)

147 primitive_types = tuple(chain(string_types, integer_types, (float, complex, bool, NoneType)))

148

149

150 def ensure_binary(value):

151 return value.encode('utf-8') if hasattr(value, 'encode') else value

152

153

154 def ensure_text_type(value):

155 return value.decode('utf-8') if hasattr(value, 'decode') else value

156

157

158 def ensure_unicode(value):

159 return value.decode('unicode_escape') if hasattr(value, 'decode') else value

160

161

162 # TODO: move this somewhere else

163 # work-around for python bug on Windows prior to python 3.2

164 # https://bugs.python.org/issue10027

165 # Adapted from the ntfsutils package, Copyright (c) 2012, the Mozilla Foundation

166 class CrossPlatformStLink(object):

167 _st_nlink = None

168

169 def __call__(self, path):

170 return self.st_nlink(path)

171

172 @classmethod

173 def st_nlink(cls, path):

174 if cls._st_nlink is None:

175 cls._initialize()

176 return cls._st_nlink(path)

177

178 @classmethod

179 def _standard_st_nlink(cls, path):

180 return lstat(path).st_nlink

181

182 @classmethod

183 def _windows_st_nlink(cls, path):

184 st_nlink = cls._standard_st_nlink(path)

185 if st_nlink != 0:

186 return st_nlink

187 else:

188 # cannot trust python on Windows when st_nlink == 0

189 # get value using windows libraries to be sure of its true value

190 # Adapted from the ntfsutils package, Copyright (c) 2012, the Mozilla Foundation

191 GENERIC_READ = 0x80000000

192 FILE_SHARE_READ = 0x00000001

193 OPEN_EXISTING = 3

194 hfile = cls.CreateFile(path, GENERIC_READ, FILE_SHARE_READ, None,

195 OPEN_EXISTING, 0, None)

196 if hfile is None:

197 from ctypes import WinError

198 raise WinError()

199 info = cls.BY_HANDLE_FILE_INFORMATION()

200 rv = cls.GetFileInformationByHandle(hfile, info)

201 cls.CloseHandle(hfile)

202 if rv == 0:

203 from ctypes import WinError

204 raise WinError()

205 return info.nNumberOfLinks

206

207 @classmethod

208 def _initialize(cls):

209 if not on_win:

210 cls._st_nlink = cls._standard_st_nlink

211 else:

212 # http://msdn.microsoft.com/en-us/library/windows/desktop/aa363858

213 import ctypes

214 from ctypes import POINTER

215 from ctypes.wintypes import DWORD, HANDLE, BOOL

216

217 cls.CreateFile = ctypes.windll.kernel32.CreateFileW

218 cls.CreateFile.argtypes = [ctypes.c_wchar_p, DWORD, DWORD, ctypes.c_void_p,

219 DWORD, DWORD, HANDLE]

220 cls.CreateFile.restype = HANDLE

221

222 # http://msdn.microsoft.com/en-us/library/windows/desktop/ms724211

223 cls.CloseHandle = ctypes.windll.kernel32.CloseHandle

224 cls.CloseHandle.argtypes = [HANDLE]

225 cls.CloseHandle.restype = BOOL

226

227 class FILETIME(ctypes.Structure):

228 _fields_ = [("dwLowDateTime", DWORD),

229 ("dwHighDateTime", DWORD)]

230

231 class BY_HANDLE_FILE_INFORMATION(ctypes.Structure):

232 _fields_ = [("dwFileAttributes", DWORD),

233 ("ftCreationTime", FILETIME),

234 ("ftLastAccessTime", FILETIME),

235 ("ftLastWriteTime", FILETIME),

236 ("dwVolumeSerialNumber", DWORD),

237 ("nFileSizeHigh", DWORD),

238 ("nFileSizeLow", DWORD),

239 ("nNumberOfLinks", DWORD),

240 ("nFileIndexHigh", DWORD),

241 ("nFileIndexLow", DWORD)]

242 cls.BY_HANDLE_FILE_INFORMATION = BY_HANDLE_FILE_INFORMATION

243

244 # http://msdn.microsoft.com/en-us/library/windows/desktop/aa364952

245 cls.GetFileInformationByHandle = ctypes.windll.kernel32.GetFileInformationByHandle

246 cls.GetFileInformationByHandle.argtypes = [HANDLE, POINTER(BY_HANDLE_FILE_INFORMATION)]

247 cls.GetFileInformationByHandle.restype = BOOL

248

249 cls._st_nlink = cls._windows_st_nlink

250

[end of conda/common/compat.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/conda/common/compat.py b/conda/common/compat.py

--- a/conda/common/compat.py

+++ b/conda/common/compat.py

@@ -152,7 +152,15 @@

def ensure_text_type(value):

- return value.decode('utf-8') if hasattr(value, 'decode') else value

+ if hasattr(value, 'decode'):

+ try:

+ return value.decode('utf-8')

+ except UnicodeDecodeError:

+ from requests.packages.chardet import detect

+ encoding = detect(value).get('encoding') or 'utf-8'

+ return value.decode(encoding)

+ else:

+ return value

def ensure_unicode(value):

| {"golden_diff": "diff --git a/conda/common/compat.py b/conda/common/compat.py\n--- a/conda/common/compat.py\n+++ b/conda/common/compat.py\n@@ -152,7 +152,15 @@\n \n \n def ensure_text_type(value):\n- return value.decode('utf-8') if hasattr(value, 'decode') else value\n+ if hasattr(value, 'decode'):\n+ try:\n+ return value.decode('utf-8')\n+ except UnicodeDecodeError:\n+ from requests.packages.chardet import detect\n+ encoding = detect(value).get('encoding') or 'utf-8'\n+ return value.decode(encoding)\n+ else:\n+ return value\n \n \n def ensure_unicode(value):\n", "issue": "ERROR conda.core.link:_execute_actions(319): An error occurred while installing package 'defaults::qt-5.6.2-vc9_3'\n```\r\nCurrent conda install:\r\n\r\n platform : win-64\r\n conda version : 4.3.9\r\n conda is private : False\r\n conda-env version : 4.3.9\r\n conda-build version : 2.1.3\r\n python version : 2.7.13.final.0\r\n requests version : 2.12.4\r\n root environment : C:\\Users\\joelkim\\Anaconda2 (writable)\r\n default environment : C:\\Users\\joelkim\\Anaconda2\r\n envs directories : C:\\Users\\joelkim\\Anaconda2\\envs\r\n package cache : C:\\Users\\joelkim\\Anaconda2\\pkgs\r\n channel URLs : https://repo.continuum.io/pkgs/free/win-64\r\n https://repo.continuum.io/pkgs/free/noarch\r\n https://repo.continuum.io/pkgs/r/win-64\r\n https://repo.continuum.io/pkgs/r/noarch\r\n https://repo.continuum.io/pkgs/pro/win-64\r\n https://repo.continuum.io/pkgs/pro/noarch\r\n https://repo.continuum.io/pkgs/msys2/win-64\r\n https://repo.continuum.io/pkgs/msys2/noarch\r\n config file : None\r\n offline mode : False\r\n user-agent : conda/4.3.9 requests/2.12.4 CPython/2.7.13 Windows/10 Windows/10.0.14393\r\n```\r\n\r\nI got this error when I tried to install qt:\r\n```\r\n> conda create -n test qt\r\nFetching package metadata ...........\r\nSolving package specifications: .\r\n\r\nPackage plan for installation in environment C:\\Users\\joelkim\\Anaconda2\\envs\\test:\r\n\r\nThe following NEW packages 
will be INSTALLED:\r\n\r\n icu: 57.1-vc9_0 [vc9]\r\n jpeg: 9b-vc9_0 [vc9]\r\n libpng: 1.6.27-vc9_0 [vc9]\r\n openssl: 1.0.2k-vc9_0 [vc9]\r\n pip: 9.0.1-py27_1\r\n python: 2.7.13-0\r\n qt: 5.6.2-vc9_3 [vc9]\r\n setuptools: 27.2.0-py27_1\r\n vs2008_runtime: 9.00.30729.5054-0\r\n wheel: 0.29.0-py27_0\r\n zlib: 1.2.8-vc9_3 [vc9]\r\n\r\nProceed ([y]/n)?\r\n\r\nERROR conda.core.link:_execute_actions(319): An error occurred while installing package 'defaults::qt-5.6.2-vc9_3'.\r\n```\r\n \n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n# Try to keep compat small because it's imported by everything\n# What is compat, and what isn't?\n# If a piece of code is \"general\" and used in multiple modules, it goes here.\n# If it's only used in one module, keep it in that module, preferably near the top.\nfrom __future__ import absolute_import, division, print_function, unicode_literals\n\nfrom itertools import chain\nfrom operator import methodcaller\nfrom os import chmod, lstat\nfrom os.path import islink\nimport sys\n\non_win = bool(sys.platform == \"win32\")\n\nPY2 = sys.version_info[0] == 2\nPY3 = sys.version_info[0] == 3\n\n\n# #############################\n# equivalent commands\n# #############################\n\nif PY3: # pragma: py2 no cover\n string_types = str,\n integer_types = int,\n class_types = type,\n text_type = str\n binary_type = bytes\n input = input\n range = range\n\nelif PY2: # pragma: py3 no cover\n from types import ClassType\n string_types = basestring,\n integer_types = (int, long)\n class_types = (type, ClassType)\n text_type = unicode\n binary_type = str\n input = raw_input\n range = xrange\n\n\n# #############################\n# equivalent imports\n# #############################\n\nif PY3: # pragma: py2 no cover\n from io import StringIO\n from itertools import zip_longest\nelif PY2: # pragma: py3 no cover\n from cStringIO import StringIO\n from itertools import izip as zip, izip_longest as zip_longest\n\nStringIO = StringIO\nzip = 
zip\nzip_longest = zip_longest\n\n\n# #############################\n# equivalent functions\n# #############################\n\nif PY3: # pragma: py2 no cover\n def iterkeys(d, **kw):\n return iter(d.keys(**kw))\n\n def itervalues(d, **kw):\n return iter(d.values(**kw))\n\n def iteritems(d, **kw):\n return iter(d.items(**kw))\n\n viewkeys = methodcaller(\"keys\")\n viewvalues = methodcaller(\"values\")\n viewitems = methodcaller(\"items\")\n\n def lchmod(path, mode):\n try:\n chmod(path, mode, follow_symlinks=False)\n except (TypeError, NotImplementedError, SystemError):\n # On systems that don't allow permissions on symbolic links, skip\n # links entirely.\n if not islink(path):\n chmod(path, mode)\n\n\n from collections import Iterable\n def isiterable(obj):\n return not isinstance(obj, string_types) and isinstance(obj, Iterable)\n\nelif PY2: # pragma: py3 no cover\n def iterkeys(d, **kw):\n return d.iterkeys(**kw)\n\n def itervalues(d, **kw):\n return d.itervalues(**kw)\n\n def iteritems(d, **kw):\n return d.iteritems(**kw)\n\n viewkeys = methodcaller(\"viewkeys\")\n viewvalues = methodcaller(\"viewvalues\")\n viewitems = methodcaller(\"viewitems\")\n\n try:\n from os import lchmod as os_lchmod\n lchmod = os_lchmod\n except ImportError:\n def lchmod(path, mode):\n # On systems that don't allow permissions on symbolic links, skip\n # links entirely.\n if not islink(path):\n chmod(path, mode)\n\n def isiterable(obj):\n return (hasattr(obj, '__iter__')\n and not isinstance(obj, string_types)\n and type(obj) is not type)\n\n\n# #############################\n# other\n# #############################\n\ndef with_metaclass(Type, skip_attrs=set(('__dict__', '__weakref__'))):\n \"\"\"Class decorator to set metaclass.\n\n Works with both Python 2 and Python 3 and it does not add\n an extra class in the lookup order like ``six.with_metaclass`` does\n (that is -- it copies the original class instead of using inheritance).\n\n \"\"\"\n\n def _clone_with_metaclass(Class):\n 
attrs = dict((key, value) for key, value in iteritems(vars(Class))\n if key not in skip_attrs)\n return Type(Class.__name__, Class.__bases__, attrs)\n\n return _clone_with_metaclass\n\n\nfrom collections import OrderedDict as odict\nodict = odict\n\nNoneType = type(None)\nprimitive_types = tuple(chain(string_types, integer_types, (float, complex, bool, NoneType)))\n\n\ndef ensure_binary(value):\n return value.encode('utf-8') if hasattr(value, 'encode') else value\n\n\ndef ensure_text_type(value):\n return value.decode('utf-8') if hasattr(value, 'decode') else value\n\n\ndef ensure_unicode(value):\n return value.decode('unicode_escape') if hasattr(value, 'decode') else value\n\n\n# TODO: move this somewhere else\n# work-around for python bug on Windows prior to python 3.2\n# https://bugs.python.org/issue10027\n# Adapted from the ntfsutils package, Copyright (c) 2012, the Mozilla Foundation\nclass CrossPlatformStLink(object):\n _st_nlink = None\n\n def __call__(self, path):\n return self.st_nlink(path)\n\n @classmethod\n def st_nlink(cls, path):\n if cls._st_nlink is None:\n cls._initialize()\n return cls._st_nlink(path)\n\n @classmethod\n def _standard_st_nlink(cls, path):\n return lstat(path).st_nlink\n\n @classmethod\n def _windows_st_nlink(cls, path):\n st_nlink = cls._standard_st_nlink(path)\n if st_nlink != 0:\n return st_nlink\n else:\n # cannot trust python on Windows when st_nlink == 0\n # get value using windows libraries to be sure of its true value\n # Adapted from the ntfsutils package, Copyright (c) 2012, the Mozilla Foundation\n GENERIC_READ = 0x80000000\n FILE_SHARE_READ = 0x00000001\n OPEN_EXISTING = 3\n hfile = cls.CreateFile(path, GENERIC_READ, FILE_SHARE_READ, None,\n OPEN_EXISTING, 0, None)\n if hfile is None:\n from ctypes import WinError\n raise WinError()\n info = cls.BY_HANDLE_FILE_INFORMATION()\n rv = cls.GetFileInformationByHandle(hfile, info)\n cls.CloseHandle(hfile)\n if rv == 0:\n from ctypes import WinError\n raise WinError()\n return 
info.nNumberOfLinks\n\n @classmethod\n def _initialize(cls):\n if not on_win:\n cls._st_nlink = cls._standard_st_nlink\n else:\n # http://msdn.microsoft.com/en-us/library/windows/desktop/aa363858\n import ctypes\n from ctypes import POINTER\n from ctypes.wintypes import DWORD, HANDLE, BOOL\n\n cls.CreateFile = ctypes.windll.kernel32.CreateFileW\n cls.CreateFile.argtypes = [ctypes.c_wchar_p, DWORD, DWORD, ctypes.c_void_p,\n DWORD, DWORD, HANDLE]\n cls.CreateFile.restype = HANDLE\n\n # http://msdn.microsoft.com/en-us/library/windows/desktop/ms724211\n cls.CloseHandle = ctypes.windll.kernel32.CloseHandle\n cls.CloseHandle.argtypes = [HANDLE]\n cls.CloseHandle.restype = BOOL\n\n class FILETIME(ctypes.Structure):\n _fields_ = [(\"dwLowDateTime\", DWORD),\n (\"dwHighDateTime\", DWORD)]\n\n class BY_HANDLE_FILE_INFORMATION(ctypes.Structure):\n _fields_ = [(\"dwFileAttributes\", DWORD),\n (\"ftCreationTime\", FILETIME),\n (\"ftLastAccessTime\", FILETIME),\n (\"ftLastWriteTime\", FILETIME),\n (\"dwVolumeSerialNumber\", DWORD),\n (\"nFileSizeHigh\", DWORD),\n (\"nFileSizeLow\", DWORD),\n (\"nNumberOfLinks\", DWORD),\n (\"nFileIndexHigh\", DWORD),\n (\"nFileIndexLow\", DWORD)]\n cls.BY_HANDLE_FILE_INFORMATION = BY_HANDLE_FILE_INFORMATION\n\n # http://msdn.microsoft.com/en-us/library/windows/desktop/aa364952\n cls.GetFileInformationByHandle = ctypes.windll.kernel32.GetFileInformationByHandle\n cls.GetFileInformationByHandle.argtypes = [HANDLE, POINTER(BY_HANDLE_FILE_INFORMATION)]\n cls.GetFileInformationByHandle.restype = BOOL\n\n cls._st_nlink = cls._windows_st_nlink\n", "path": "conda/common/compat.py"}]} | 3,814 | 159 |

gh_patches_debug_3507 | rasdani/github-patches | git_diff | jazzband__pip-tools-1039 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

setup.py install_requires should have `"click>=7"` not `"click>=6"`

Thank you for all the work on this tool, it's very useful.

Issue:

As of 4.4.0 pip-tools now depends on version 7.0 of click, not 6.0.

The argument `show_envvar` is now being passed to `click.option()`

https://github.com/jazzband/pip-tools/compare/4.3.0...4.4.0#diff-c8673e93c598354ab4a9aa8dd090e913R183

That argument was added in click 7.0

https://click.palletsprojects.com/en/7.x/api/#click.Option

compared to

https://click.palletsprojects.com/en/6.x/api/#click.Option

Fix: setup.py install_requires should have `"click>=7"` not `"click>=6"`

</issue>

<code>

[start of setup.py]

1 """

2 pip-tools keeps your pinned dependencies fresh.

3 """

4 from os.path import abspath, dirname, join

5

6 from setuptools import find_packages, setup

7

8

9 def read_file(filename):

10 """Read the contents of a file located relative to setup.py"""

11 with open(join(abspath(dirname(__file__)), filename)) as thefile:

12 return thefile.read()

13

14

15 setup(

16 name="pip-tools",

17 use_scm_version=True,

18 url="https://github.com/jazzband/pip-tools/",

19 license="BSD",

20 author="Vincent Driessen",

21 author_email="[email protected]",

22 description=__doc__.strip(),

23 long_description=read_file("README.rst"),

24 long_description_content_type="text/x-rst",

25 packages=find_packages(exclude=["tests"]),

26 package_data={},

27 python_requires=">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*",

28 setup_requires=["setuptools_scm"],

29 install_requires=["click>=6", "six"],

30 zip_safe=False,

31 entry_points={

32 "console_scripts": [

33 "pip-compile = piptools.scripts.compile:cli",

34 "pip-sync = piptools.scripts.sync:cli",

35 ]

36 },

37 platforms="any",

38 classifiers=[

39 "Development Status :: 5 - Production/Stable",

40 "Intended Audience :: Developers",

41 "Intended Audience :: System Administrators",

42 "License :: OSI Approved :: BSD License",

43 "Operating System :: OS Independent",

44 "Programming Language :: Python",

45 "Programming Language :: Python :: 2",

46 "Programming Language :: Python :: 2.7",

47 "Programming Language :: Python :: 3",

48 "Programming Language :: Python :: 3.5",

49 "Programming Language :: Python :: 3.6",

50 "Programming Language :: Python :: 3.7",

51 "Programming Language :: Python :: 3.8",

52 "Programming Language :: Python :: Implementation :: CPython",

53 "Programming Language :: Python :: Implementation :: PyPy",

54 "Topic :: System :: Systems Administration",

55 ],

56 )

57

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -26,7 +26,7 @@

package_data={},

python_requires=">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*",

setup_requires=["setuptools_scm"],

- install_requires=["click>=6", "six"],

+ install_requires=["click>=7", "six"],

zip_safe=False,

entry_points={

"console_scripts": [

| {"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -26,7 +26,7 @@\n package_data={},\n python_requires=\">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*\",\n setup_requires=[\"setuptools_scm\"],\n- install_requires=[\"click>=6\", \"six\"],\n+ install_requires=[\"click>=7\", \"six\"],\n zip_safe=False,\n entry_points={\n \"console_scripts\": [\n", "issue": "setup.py install_requires should have `\"click>=7\"` not `\"click>=6\"`\nThank you for all the work on this tool, it's very useful.\r\n\r\nIssue:\r\nAs of 4.4.0 pip-tools now depends on version 7.0 of click, not 6.0.\r\n\r\nThe argument `show_envvar` is now being passed to `click.option()`\r\nhttps://github.com/jazzband/pip-tools/compare/4.3.0...4.4.0#diff-c8673e93c598354ab4a9aa8dd090e913R183\r\n\r\nThat argument was added in click 7.0\r\nhttps://click.palletsprojects.com/en/7.x/api/#click.Option\r\ncompared to \r\nhttps://click.palletsprojects.com/en/6.x/api/#click.Option\r\n\r\nFix: setup.py install_requires should have `\"click>=7\"` not `\"click>=6\"`\n", "before_files": [{"content": "\"\"\"\npip-tools keeps your pinned dependencies fresh.\n\"\"\"\nfrom os.path import abspath, dirname, join\n\nfrom setuptools import find_packages, setup\n\n\ndef read_file(filename):\n \"\"\"Read the contents of a file located relative to setup.py\"\"\"\n with open(join(abspath(dirname(__file__)), filename)) as thefile:\n return thefile.read()\n\n\nsetup(\n name=\"pip-tools\",\n use_scm_version=True,\n url=\"https://github.com/jazzband/pip-tools/\",\n license=\"BSD\",\n author=\"Vincent Driessen\",\n author_email=\"[email protected]\",\n description=__doc__.strip(),\n long_description=read_file(\"README.rst\"),\n long_description_content_type=\"text/x-rst\",\n packages=find_packages(exclude=[\"tests\"]),\n package_data={},\n python_requires=\">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*\",\n setup_requires=[\"setuptools_scm\"],\n install_requires=[\"click>=6\", \"six\"],\n 
zip_safe=False,\n entry_points={\n \"console_scripts\": [\n \"pip-compile = piptools.scripts.compile:cli\",\n \"pip-sync = piptools.scripts.sync:cli\",\n ]\n },\n platforms=\"any\",\n classifiers=[\n \"Development Status :: 5 - Production/Stable\",\n \"Intended Audience :: Developers\",\n \"Intended Audience :: System Administrators\",\n \"License :: OSI Approved :: BSD License\",\n \"Operating System :: OS Independent\",\n \"Programming Language :: Python\",\n \"Programming Language :: Python :: 2\",\n \"Programming Language :: Python :: 2.7\",\n \"Programming Language :: Python :: 3\",\n \"Programming Language :: Python :: 3.5\",\n \"Programming Language :: Python :: 3.6\",\n \"Programming Language :: Python :: 3.7\",\n \"Programming Language :: Python :: 3.8\",\n \"Programming Language :: Python :: Implementation :: CPython\",\n \"Programming Language :: Python :: Implementation :: PyPy\",\n \"Topic :: System :: Systems Administration\",\n ],\n)\n", "path": "setup.py"}]} | 1,293 | 120 |
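
As a rough illustration of the row above, here is a minimal sketch of why the `click>=6` lower bound was too loose. It is a hand-rolled comparison on dotted version strings, not the resolver that pip or setuptools actually uses. The helper names `parse_version` and `satisfies_lower_bound` are hypothetical and exist only for this example.

```python
def parse_version(text):
    """Turn a dotted version string such as "6.7" into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def satisfies_lower_bound(installed, minimum):
    """Return True if `installed` is at least `minimum` (simplified check)."""
    return parse_version(installed) >= parse_version(minimum)


# A click 6.x install passes the old bound, although the issue reports that
# the `show_envvar` argument was only added in click 7.0.
old_bound_accepts_6_7 = satisfies_lower_bound("6.7", "6")
new_bound_accepts_6_7 = satisfies_lower_bound("6.7", "7")
new_bound_accepts_7_0 = satisfies_lower_bound("7.0", "7")
```

Under this simplified model the old bound admits a 6.x release while the new bound rejects it, which matches the one-line change in the golden diff.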

gh_patches_debug_5040 | rasdani/github-patches | git_diff | pymodbus-dev__pymodbus-1355 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

pymodbus.simulator fails with no running event loop

### Versions

* Python: 3.9.2

* OS: Debian Bullseye

* Pymodbus: 3.1.3 latest dev branch

* Modbus Hardware (if used):

### Pymodbus Specific

* Server: tcp - sync/async

* Client: tcp - sync/async

### Description

Executing pymodbus.simulator from the commandline results in the following error:

```

$ pymodbus.simulator

10:39:28 INFO logging:74 Start simulator

Traceback (most recent call last):

File "/usr/local/bin/pymodbus.simulator", line 33, in <module>

sys.exit(load_entry_point('pymodbus===3.1.x', 'console_scripts', 'pymodbus.simulator')())

File "/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/main.py", line 112, in main

task = ModbusSimulatorServer(**cmd_args)

File "/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/http_server.py", line 134, in __init__

server["loop"] = asyncio.get_running_loop()

RuntimeError: no running event loop

```

NOTE: I am running this from the pymodbus/server/simulator/ folder, so it picks up the example [setup.json](https://github.com/pymodbus-dev/pymodbus/blob/dev/pymodbus/server/simulator/setup.json) file.

Manually specifying available options from the commandline results in the same error as well:

```

$ pymodbus.simulator \

--http_host 0.0.0.0 \

--http_port 8080 \

--modbus_server server \

--modbus_device device \

--json_file ~/git/pymodbus/pymodbus/server/simulator/setup.json

11:24:07 INFO logging:74 Start simulator

Traceback (most recent call last):

File "/usr/local/bin/pymodbus.simulator", line 33, in <module>

sys.exit(load_entry_point('pymodbus===3.1.x', 'console_scripts', 'pymodbus.simulator')())

File "/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/main.py", line 112, in main

task = ModbusSimulatorServer(**cmd_args)

File "/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/http_server.py", line 134, in __init__

server["loop"] = asyncio.get_running_loop()

RuntimeError: no running event loop

```

</issue>

<code>

[start of pymodbus/server/simulator/main.py]

1 #!/usr/bin/env python3

2 """HTTP server for modbus simulator.

3

4 The modbus simulator contain 3 distint parts:

5

6 - Datastore simulator, to define registers and their behaviour including actions: (simulator)(../../datastore/simulator.py)

7 - Modbus server: (server)(./http_server.py)

8 - HTTP server with REST API and web pages providing an online console in your browser

9

10 Multiple setups for different server types and/or devices are prepared in a (json file)(./setup.json), the detailed configuration is explained in (doc)(README.md)

11

12 The command line parameters are kept to a minimum:

13

14 usage: main.py [-h] [--modbus_server MODBUS_SERVER]

15 [--modbus_device MODBUS_DEVICE] [--http_host HTTP_HOST]

16 [--http_port HTTP_PORT]

17 [--log {critical,error,warning,info,debug}]

18 [--json_file JSON_FILE]

19 [--custom_actions_module CUSTOM_ACTIONS_MODULE]

20

21 Modbus server with REST-API and web server

22

23 options:

24 -h, --help show this help message and exit

25 --modbus_server MODBUS_SERVER

26 use <modbus_server> from server_list in json file

27 --modbus_device MODBUS_DEVICE

28 use <modbus_device> from device_list in json file

29 --http_host HTTP_HOST

30 use <http_host> as host to bind http listen

31 --http_port HTTP_PORT

32 use <http_port> as port to bind http listen

33 --log {critical,error,warning,info,debug}

34 set log level, default is info

35 --log_file LOG_FILE

36 name of server log file, default is "server.log"

37 --json_file JSON_FILE

38 name of json_file, default is "setup.json"

39 --custom_actions_module CUSTOM_ACTIONS_MODULE

40 python file with custom actions, default is none

41 """

42 import argparse

43 import asyncio

44

45 from pymodbus import pymodbus_apply_logging_config

46 from pymodbus.logging import Log

47 from pymodbus.server.simulator.http_server import ModbusSimulatorServer

48

49

50 def get_commandline():

51 """Get command line arguments."""

52 parser = argparse.ArgumentParser(

53 description="Modbus server with REST-API and web server"

54 )

55 parser.add_argument(

56 "--modbus_server",

57 help="use <modbus_server> from server_list in json file",

58 type=str,

59 )

60 parser.add_argument(

61 "--modbus_device",

62 help="use <modbus_device> from device_list in json file",

63 type=str,

64 )

65 parser.add_argument(

66 "--http_host",

67 help="use <http_host> as host to bind http listen",

68 type=str,

69 )

70 parser.add_argument(

71 "--http_port",

72 help="use <http_port> as port to bind http listen",

73 type=str,

74 )

75 parser.add_argument(

76 "--log",

77 choices=["critical", "error", "warning", "info", "debug"],

78 help="set log level, default is info",

79 default="info",

80 type=str,

81 )

82 parser.add_argument(

83 "--json_file",

84 help='name of json file, default is "setup.json"',

85 type=str,

86 )

87 parser.add_argument(

88 "--log_file",

89 help='name of server log file, default is "server.log"',

90 type=str,

91 )

92 parser.add_argument(

93 "--custom_actions_module",

94 help="python file with custom actions, default is none",

95 type=str,

96 )

97 args = parser.parse_args()

98 pymodbus_apply_logging_config(args.log.upper())

99 Log.info("Start simulator")

100 cmd_args = {}

101 for argument in args.__dict__:

102 if argument == "log":

103 continue

104 if args.__dict__[argument] is not None:

105 cmd_args[argument] = args.__dict__[argument]

106 return cmd_args

107

108

109 def main():

110 """Run server."""

111 cmd_args = get_commandline()

112 task = ModbusSimulatorServer(**cmd_args)

113 asyncio.run(task.run_forever(), debug=True)

114

115

116 if __name__ == "__main__":

117 main()

118

[end of pymodbus/server/simulator/main.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/pymodbus/server/simulator/main.py b/pymodbus/server/simulator/main.py

--- a/pymodbus/server/simulator/main.py

+++ b/pymodbus/server/simulator/main.py

@@ -106,11 +106,16 @@

return cmd_args

-def main():

- """Run server."""

+async def run_main():

+ """Run server async."""

cmd_args = get_commandline()

task = ModbusSimulatorServer(**cmd_args)

- asyncio.run(task.run_forever(), debug=True)

+ await task.run_forever()

+

+

+def main():

+ """Run server."""

+ asyncio.run(run_main(), debug=True)

if __name__ == "__main__":

| {"golden_diff": "diff --git a/pymodbus/server/simulator/main.py b/pymodbus/server/simulator/main.py\n--- a/pymodbus/server/simulator/main.py\n+++ b/pymodbus/server/simulator/main.py\n@@ -106,11 +106,16 @@\n return cmd_args\n \n \n-def main():\n- \"\"\"Run server.\"\"\"\n+async def run_main():\n+ \"\"\"Run server async.\"\"\"\n cmd_args = get_commandline()\n task = ModbusSimulatorServer(**cmd_args)\n- asyncio.run(task.run_forever(), debug=True)\n+ await task.run_forever()\n+\n+\n+def main():\n+ \"\"\"Run server.\"\"\"\n+ asyncio.run(run_main(), debug=True)\n \n \n if __name__ == \"__main__\":\n", "issue": "pymodbus.simulator fails with no running event loop\n### Versions\r\n\r\n* Python: 3.9.2\r\n* OS: Debian Bullseye\r\n* Pymodbus: 3.1.3 latest dev branch\r\n* Modbus Hardware (if used):\r\n\r\n### Pymodbus Specific\r\n* Server: tcp - sync/async\r\n* Client: tcp - sync/async\r\n\r\n### Description\r\n\r\nExecuting pymodbus.simulator from the commandline results in the following error:\r\n\r\n```\r\n$ pymodbus.simulator\r\n10:39:28 INFO logging:74 Start simulator\r\nTraceback (most recent call last):\r\n File \"/usr/local/bin/pymodbus.simulator\", line 33, in <module>\r\n sys.exit(load_entry_point('pymodbus===3.1.x', 'console_scripts', 'pymodbus.simulator')())\r\n File \"/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/main.py\", line 112, in main\r\n task = ModbusSimulatorServer(**cmd_args)\r\n File \"/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/http_server.py\", line 134, in __init__\r\n server[\"loop\"] = asyncio.get_running_loop()\r\nRuntimeError: no running event loop\r\n```\r\nNOTE: I am running this from the pymodbus/server/simulator/ folder, so it picks up the example [setup.json](https://github.com/pymodbus-dev/pymodbus/blob/dev/pymodbus/server/simulator/setup.json) file.\r\n\r\nManually specifying available options from the commandline results in the same error as well:\r\n```\r\n$ pymodbus.simulator \\\r\n --http_host 
0.0.0.0 \\\r\n --http_port 8080 \\\r\n --modbus_server server \\\r\n --modbus_device device \\\r\n --json_file ~/git/pymodbus/pymodbus/server/simulator/setup.json\r\n\r\n11:24:07 INFO logging:74 Start simulator\r\nTraceback (most recent call last):\r\n File \"/usr/local/bin/pymodbus.simulator\", line 33, in <module>\r\n sys.exit(load_entry_point('pymodbus===3.1.x', 'console_scripts', 'pymodbus.simulator')())\r\n File \"/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/main.py\", line 112, in main\r\n task = ModbusSimulatorServer(**cmd_args)\r\n File \"/usr/local/lib/python3.9/dist-packages/pymodbus/server/simulator/http_server.py\", line 134, in __init__\r\n server[\"loop\"] = asyncio.get_running_loop()\r\nRuntimeError: no running event loop\r\n```\r\n\n", "before_files": [{"content": "#!/usr/bin/env python3\n\"\"\"HTTP server for modbus simulator.\n\nThe modbus simulator contain 3 distint parts:\n\n- Datastore simulator, to define registers and their behaviour including actions: (simulator)(../../datastore/simulator.py)\n- Modbus server: (server)(./http_server.py)\n- HTTP server with REST API and web pages providing an online console in your browser\n\nMultiple setups for different server types and/or devices are prepared in a (json file)(./setup.json), the detailed configuration is explained in (doc)(README.md)\n\nThe command line parameters are kept to a minimum:\n\nusage: main.py [-h] [--modbus_server MODBUS_SERVER]\n [--modbus_device MODBUS_DEVICE] [--http_host HTTP_HOST]\n [--http_port HTTP_PORT]\n [--log {critical,error,warning,info,debug}]\n [--json_file JSON_FILE]\n [--custom_actions_module CUSTOM_ACTIONS_MODULE]\n\nModbus server with REST-API and web server\n\noptions:\n -h, --help show this help message and exit\n --modbus_server MODBUS_SERVER\n use <modbus_server> from server_list in json file\n --modbus_device MODBUS_DEVICE\n use <modbus_device> from device_list in json file\n --http_host HTTP_HOST\n use <http_host> as host to bind http 
listen\n --http_port HTTP_PORT\n use <http_port> as port to bind http listen\n --log {critical,error,warning,info,debug}\n set log level, default is info\n --log_file LOG_FILE\n name of server log file, default is \"server.log\"\n --json_file JSON_FILE\n name of json_file, default is \"setup.json\"\n --custom_actions_module CUSTOM_ACTIONS_MODULE\n python file with custom actions, default is none\n\"\"\"\nimport argparse\nimport asyncio\n\nfrom pymodbus import pymodbus_apply_logging_config\nfrom pymodbus.logging import Log\nfrom pymodbus.server.simulator.http_server import ModbusSimulatorServer\n\n\ndef get_commandline():\n \"\"\"Get command line arguments.\"\"\"\n parser = argparse.ArgumentParser(\n description=\"Modbus server with REST-API and web server\"\n )\n parser.add_argument(\n \"--modbus_server\",\n help=\"use <modbus_server> from server_list in json file\",\n type=str,\n )\n parser.add_argument(\n \"--modbus_device\",\n help=\"use <modbus_device> from device_list in json file\",\n type=str,\n )\n parser.add_argument(\n \"--http_host\",\n help=\"use <http_host> as host to bind http listen\",\n type=str,\n )\n parser.add_argument(\n \"--http_port\",\n help=\"use <http_port> as port to bind http listen\",\n type=str,\n )\n parser.add_argument(\n \"--log\",\n choices=[\"critical\", \"error\", \"warning\", \"info\", \"debug\"],\n help=\"set log level, default is info\",\n default=\"info\",\n type=str,\n )\n parser.add_argument(\n \"--json_file\",\n help='name of json file, default is \"setup.json\"',\n type=str,\n )\n parser.add_argument(\n \"--log_file\",\n help='name of server log file, default is \"server.log\"',\n type=str,\n )\n parser.add_argument(\n \"--custom_actions_module\",\n help=\"python file with custom actions, default is none\",\n type=str,\n )\n args = parser.parse_args()\n pymodbus_apply_logging_config(args.log.upper())\n Log.info(\"Start simulator\")\n cmd_args = {}\n for argument in args.__dict__:\n if argument == \"log\":\n continue\n if 
args.__dict__[argument] is not None:\n cmd_args[argument] = args.__dict__[argument]\n return cmd_args\n\n\ndef main():\n \"\"\"Run server.\"\"\"\n cmd_args = get_commandline()\n task = ModbusSimulatorServer(**cmd_args)\n asyncio.run(task.run_forever(), debug=True)\n\n\nif __name__ == \"__main__\":\n main()\n", "path": "pymodbus/server/simulator/main.py"}]} | 2,253 | 163 |
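
The failure in the row above can be reproduced in isolation. The sketch below assumes only what the traceback shows: a constructor that calls `asyncio.get_running_loop()`. The class `LoopBoundServer` is a hypothetical stand-in, not the real `ModbusSimulatorServer`, and the function names mirror the golden diff only loosely.

```python
import asyncio


class LoopBoundServer:
    """Hypothetical stand-in for a class that captures the loop in __init__."""

    def __init__(self):
        # Raises RuntimeError when called outside a running event loop.
        self.loop = asyncio.get_running_loop()

    async def run_forever(self):
        # Placeholder for real work; yields once to the loop and returns.
        await asyncio.sleep(0)
        return "ran"


def broken_main():
    # The object is built before asyncio.run() has started a loop.
    server = LoopBoundServer()
    return asyncio.run(server.run_forever())


async def run_main():
    # The object is built inside a coroutine, so a loop is already running.
    server = LoopBoundServer()
    return await server.run_forever()


def fixed_main():
    return asyncio.run(run_main())
```

Moving construction into a coroutine, as the golden diff does, seems the least invasive option here because it leaves the constructor itself unchanged.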

gh_patches_debug_16515 | rasdani/github-patches | git_diff | ansible__ansible-lint-2666 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Docs: Add details for using profiles

##### Summary

As content moves through the automation content creation pipeline, various linting rules that are developed against may or may not apply depending on where the content is being executed.

For example, in development, as a content creator, there should be the ability to create some rules and quickly validate them utilising ansible-lint locally. Then in the first test run, an ansible-lint profile should allow the executor from ignoring the rules to ensure that the content itself runs as desired.

Update documentation to provide context for using profiles in a more progressive (step-by-step) way with additional context that addresses motivation.

##### Issue Type

- Bug Report (Docs)

##### Ansible and Ansible Lint details

N/A

##### OS / ENVIRONMENT

Fedora 36

##### STEPS TO REPRODUCE

N/A

##### Desired Behavior

N/A

##### Actual Behavior

N/A

</issue>

<code>

[start of src/ansiblelint/generate_docs.py]

1 """Utils to generate rules documentation."""

2 import logging

3 from pathlib import Path

4 from typing import Iterable

5

6 from rich import box

7

8 # Remove this compatibility try-catch block once we drop support for rich < 10.7.0

9 try:

10 from rich.console import group

11 except ImportError:

12 from rich.console import render_group as group # type: ignore

13

14 from rich.markdown import Markdown

15 from rich.table import Table

16

17 from ansiblelint.config import PROFILES

18 from ansiblelint.constants import RULE_DOC_URL

19 from ansiblelint.rules import RulesCollection

20

21 DOC_HEADER = """

22 # Default Rules

23

24 (lint_default_rules)=

25

26 Below you can see the list of default rules Ansible Lint use to evaluate playbooks and roles:

27

28 """

29

30 _logger = logging.getLogger(__name__)

31

32

33 def rules_as_docs(rules: RulesCollection) -> str:

34 """Dump documentation files for all rules, returns only confirmation message.

35

36 That is internally used for building documentation and the API can change

37 at any time.

38 """

39 result = ""

40 dump_path = Path(".") / "docs" / "rules"

41 if not dump_path.exists():

42 raise RuntimeError(f"Failed to find {dump_path} folder for dumping rules.")

43

44 with open(dump_path / ".." / "profiles.md", "w", encoding="utf-8") as f:

45 f.write(profiles_as_md(header=True, docs_url="rules/"))

46

47 for rule in rules.alphabetical():

48 result = ""

49 with open(dump_path / f"{rule.id}.md", "w", encoding="utf-8") as f:

50 # because title == rule.id we get the desired labels for free

51 # and we do not have to insert `(target_header)=`

52 title = f"{rule.id}"

53

54 if rule.help:

55 if not rule.help.startswith(f"# {rule.id}"):

56 raise RuntimeError(

57 f"Rule {rule.__class__} markdown help does not start with `# {rule.id}` header.\n{rule.help}"

58 )

59 result = result[1:]

60 result += f"{rule.help}"

61 else:

62 description = rule.description

63 if rule.link:

64 description += f" [more]({rule.link})"

65

66 result += f"# {title}\n\n**{rule.shortdesc}**\n\n{description}"

67 f.write(result)

68

69 return "All markdown files for rules were dumped!"

70

71

72 def rules_as_str(rules: RulesCollection) -> str:

73 """Return rules as string."""

74 return "\n".join([str(rule) for rule in rules.alphabetical()])

75

76

77 def rules_as_md(rules: RulesCollection) -> str:

78 """Return md documentation for a list of rules."""

79 result = DOC_HEADER

80

81 for rule in rules.alphabetical():

82

83 # because title == rule.id we get the desired labels for free

84 # and we do not have to insert `(target_header)=`

85 title = f"{rule.id}"

86

87 if rule.help:

88 if not rule.help.startswith(f"# {rule.id}"):

89 raise RuntimeError(

90 f"Rule {rule.__class__} markdown help does not start with `# {rule.id}` header.\n{rule.help}"

91 )

92 result += f"\n\n{rule.help}"

93 else:

94 description = rule.description

95 if rule.link:

96 description += f" [more]({rule.link})"

97

98 result += f"\n\n## {title}\n\n**{rule.shortdesc}**\n\n{description}"

99

100 return result

101

102

103 @group()

104 def rules_as_rich(rules: RulesCollection) -> Iterable[Table]:

105 """Print documentation for a list of rules, returns empty string."""

106 width = max(16, *[len(rule.id) for rule in rules])

107 for rule in rules.alphabetical():

108 table = Table(show_header=True, header_style="title", box=box.MINIMAL)

109 table.add_column(rule.id, style="dim", width=width)

110 table.add_column(Markdown(rule.shortdesc))

111

112 description = rule.help or rule.description

113 if rule.link:

114 description += f" [(more)]({rule.link})"

115 table.add_row("description", Markdown(description))

116 if rule.version_added:

117 table.add_row("version_added", rule.version_added)

118 if rule.tags:

119 table.add_row("tags", ", ".join(rule.tags))

120 if rule.severity:

121 table.add_row("severity", rule.severity)

122 yield table

123

124

125 def profiles_as_md(header: bool = False, docs_url: str = RULE_DOC_URL) -> str:

126 """Return markdown representation of supported profiles."""

127 result = ""

128

129 if header:

130 result += """<!---

131 Do not manually edit, generated from generate_docs.py

132 -->

133 # Profiles

134

135 One of the best ways to run `ansible-lint` is by specifying which rule profile

136 you want to use. These profiles stack on top of each other, allowing you to

137 gradually raise the quality bar.

138

139 To run it with the most strict profile just type `ansible-lint --profile=production`.

140

141 If you want to consult the list of rules from each profile, type

142 `ansible-lint -P`. For your convenience, we also list the same output below.

143

144 The rules that have a `*` suffix, are not implemented yet but we documented

145 them with links to their issues.

146

147 ```{note}

148 Special rule tags such `opt-in` and `experimental` are automatically removed

149 when a rule is included in a profile, directly or indirectly. This means that

150 they will always execute once included.

151 ```

152

153 """

154

155 for name, profile in PROFILES.items():

156 extends = ""

157 if profile.get("extends", None):

158 extends = (

159 f" It extends [{profile['extends']}](#{profile['extends']}) profile."

160 )

161 result += f"## {name}\n\n{profile['description']}{extends}\n"

162 for rule, rule_data in profile["rules"].items():

163 if "[" in rule:

164 url = f"{docs_url}{rule.split('[')[0]}/"

165 else:

166 url = f"{docs_url}{rule}/"

167 if not rule_data:

168 result += f"- [{rule}]({url})\n"

169 else:

170 result += f"- [{rule}]({rule_data['url']})\n"

171

172 result += "\n"

173 return result

174

175

176 def profiles_as_rich() -> Markdown:

177 """Return rich representation of supported profiles."""

178 return Markdown(profiles_as_md())

179

[end of src/ansiblelint/generate_docs.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/src/ansiblelint/generate_docs.py b/src/ansiblelint/generate_docs.py

--- a/src/ansiblelint/generate_docs.py

+++ b/src/ansiblelint/generate_docs.py

@@ -132,22 +132,10 @@

-->

# Profiles

-One of the best ways to run `ansible-lint` is by specifying which rule profile

-you want to use. These profiles stack on top of each other, allowing you to

-gradually raise the quality bar.

-

-To run it with the most strict profile just type `ansible-lint --profile=production`.

-

-If you want to consult the list of rules from each profile, type

-`ansible-lint -P`. For your convenience, we also list the same output below.

-

-The rules that have a `*` suffix, are not implemented yet but we documented

-them with links to their issues.

+Ansible-lint profiles gradually increase the strictness of rules as your Ansible content lifecycle.

```{note}

-Special rule tags such `opt-in` and `experimental` are automatically removed

-when a rule is included in a profile, directly or indirectly. This means that

-they will always execute once included.

+Rules with `*` in the suffix are not yet implemented but are documented with linked GitHub issues.

```

"""